Monitoring the Recovery after 2016 Hurricane Matthew in Haiti via Markovian Multitemporal Region-Based Modeling

Abstract

1. Introduction

2. Previous Work on Land Cover Change Detection

3. Materials and Methods

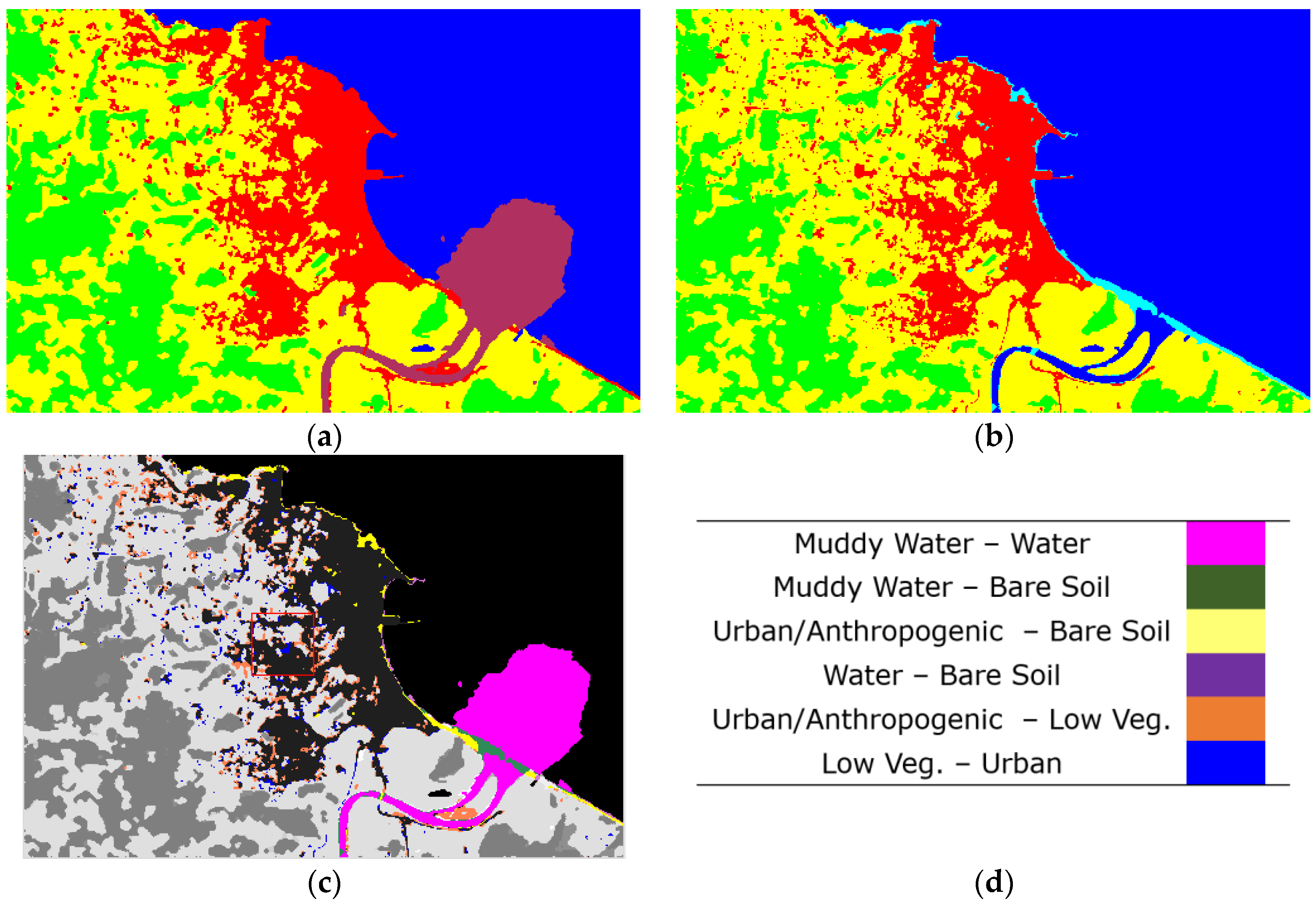

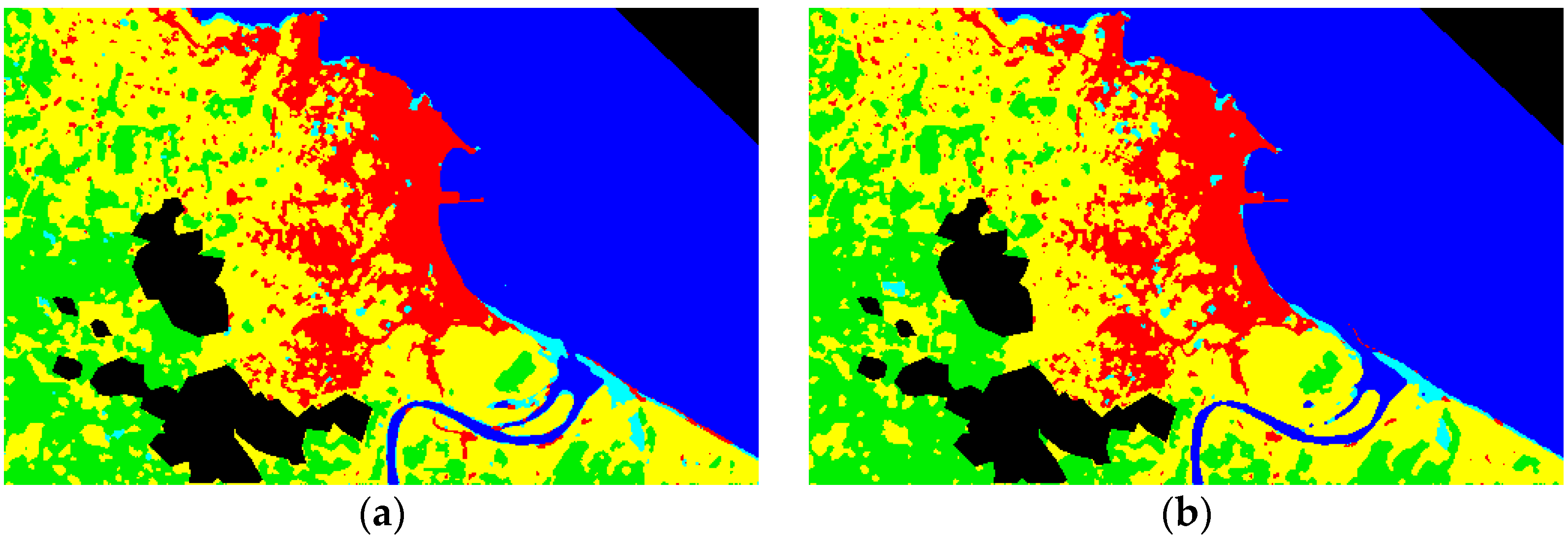

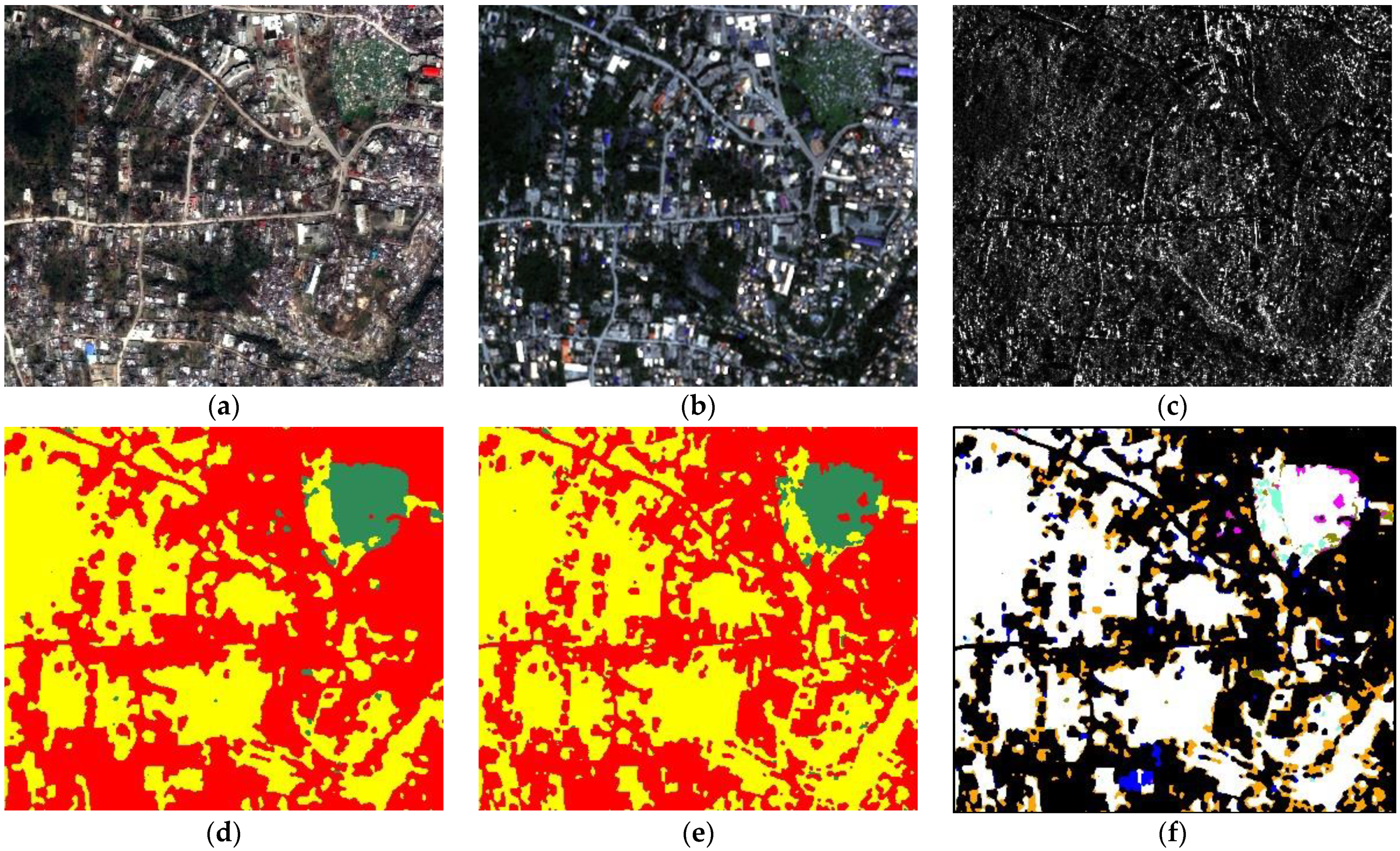

3.1. Case Study

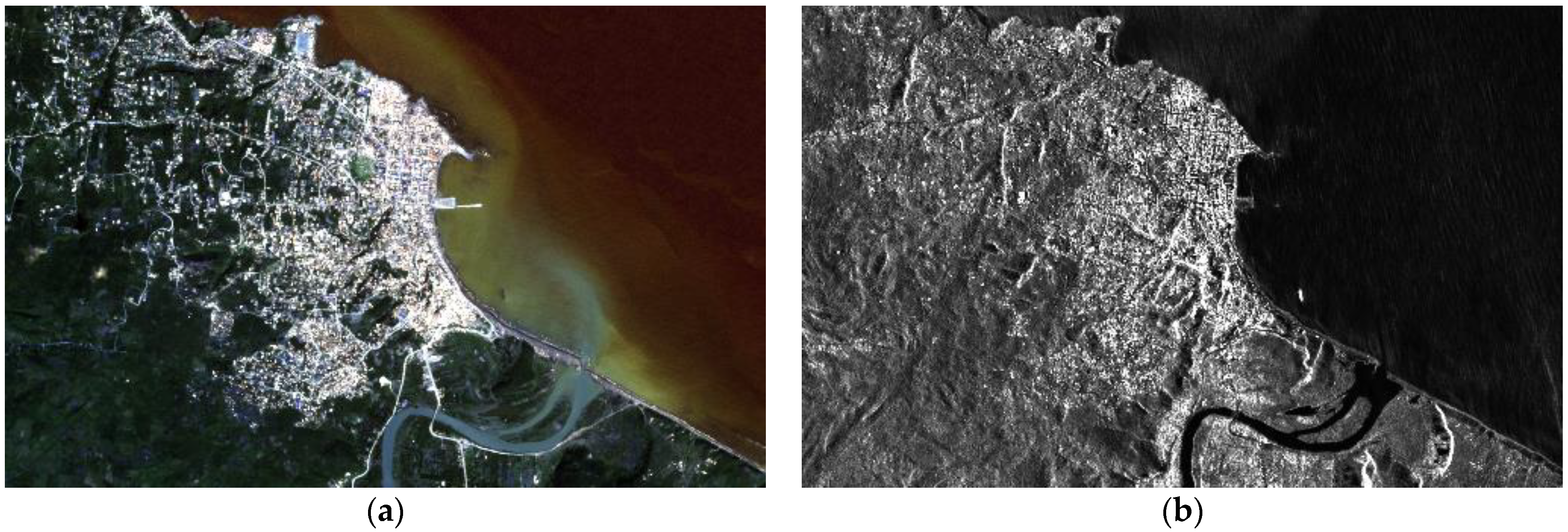

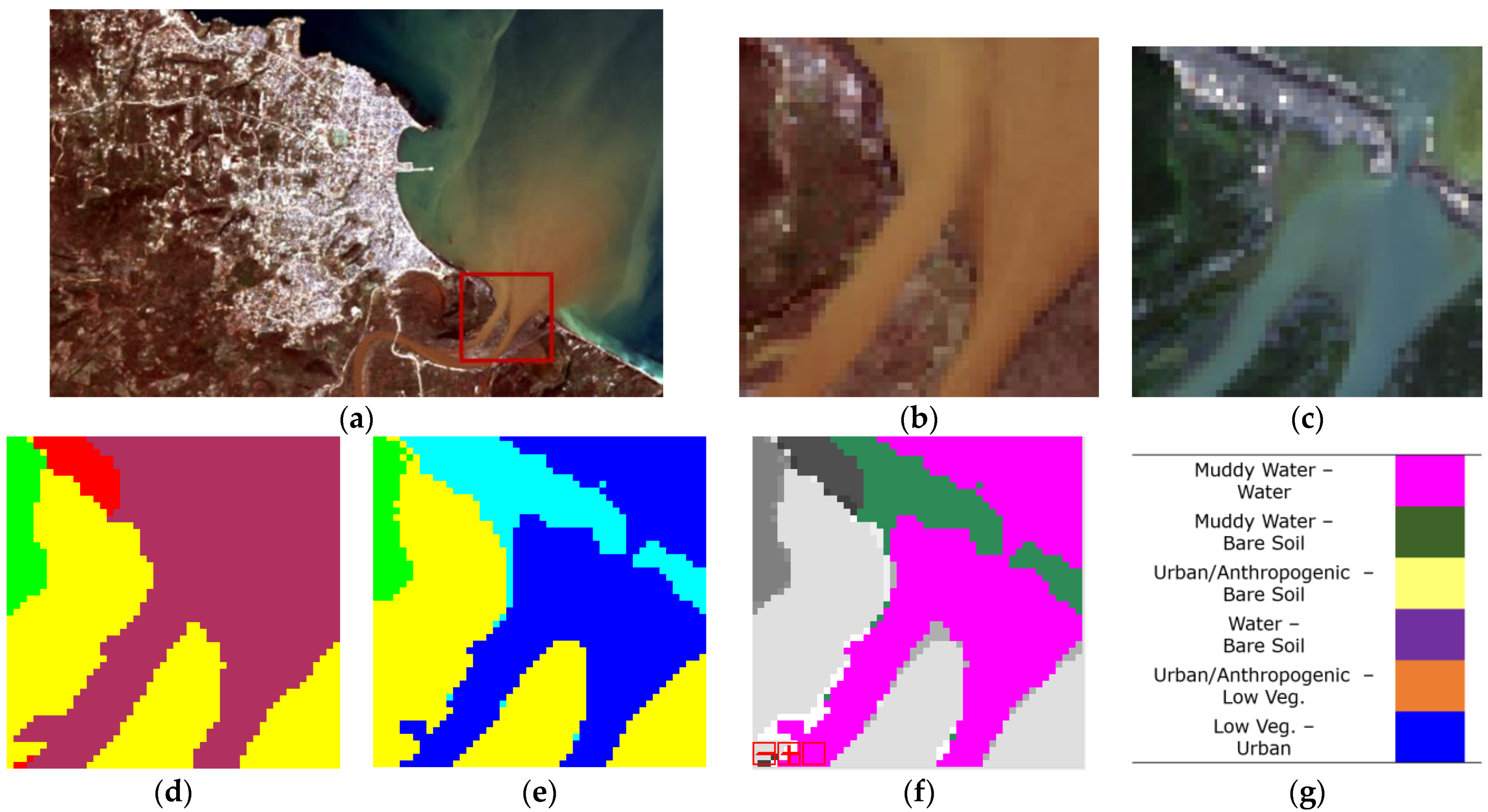

- “Jérémie 2016”: Pansharpened Pléiades multispectral image (Figure 1) collected on 7 October 2016, i.e., a few days after Hurricane Matthew hit the imaged area. The image is composed of 4 channels and has a pixel spacing of 0.5 m; the native resolution is 2 m for the multispectral channels and 0.5 m for the panchromatic channel.

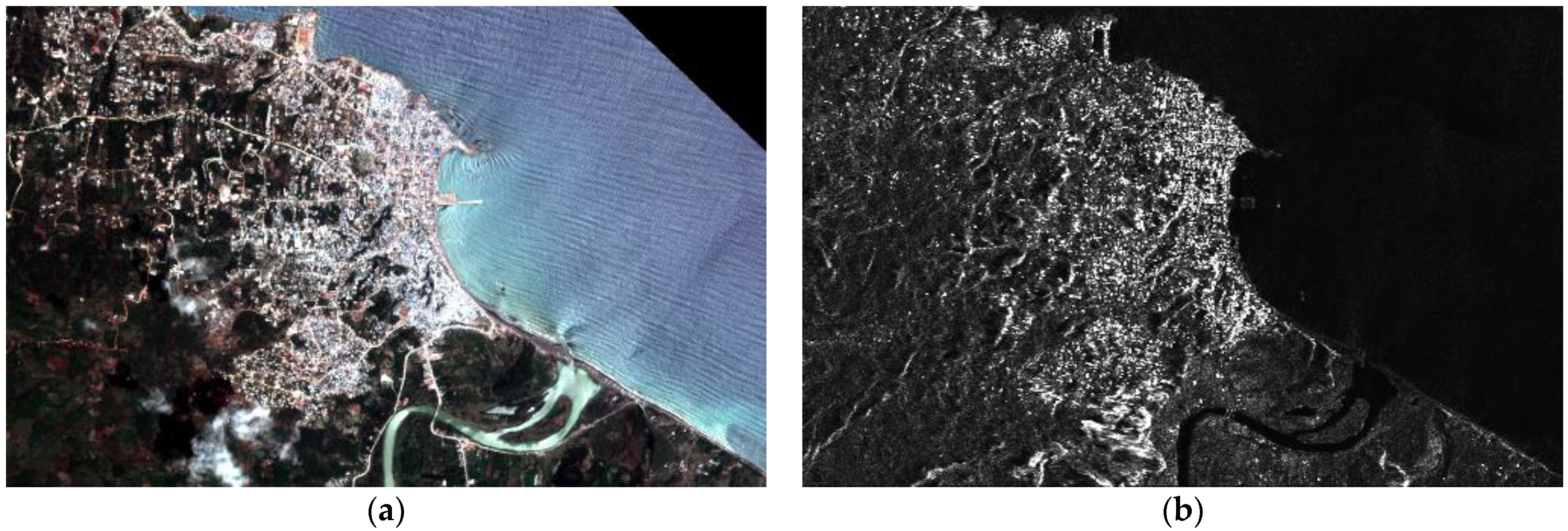

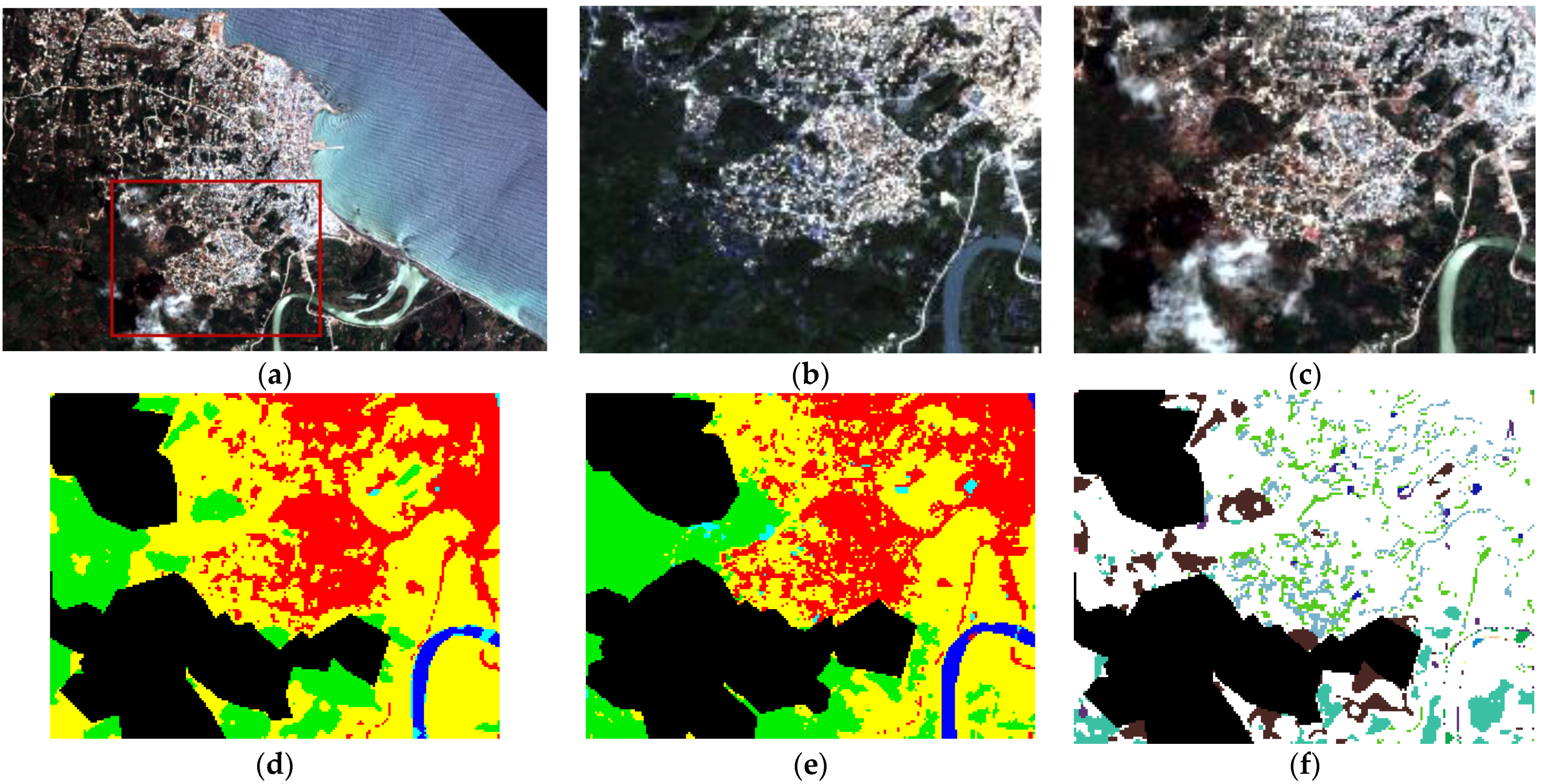

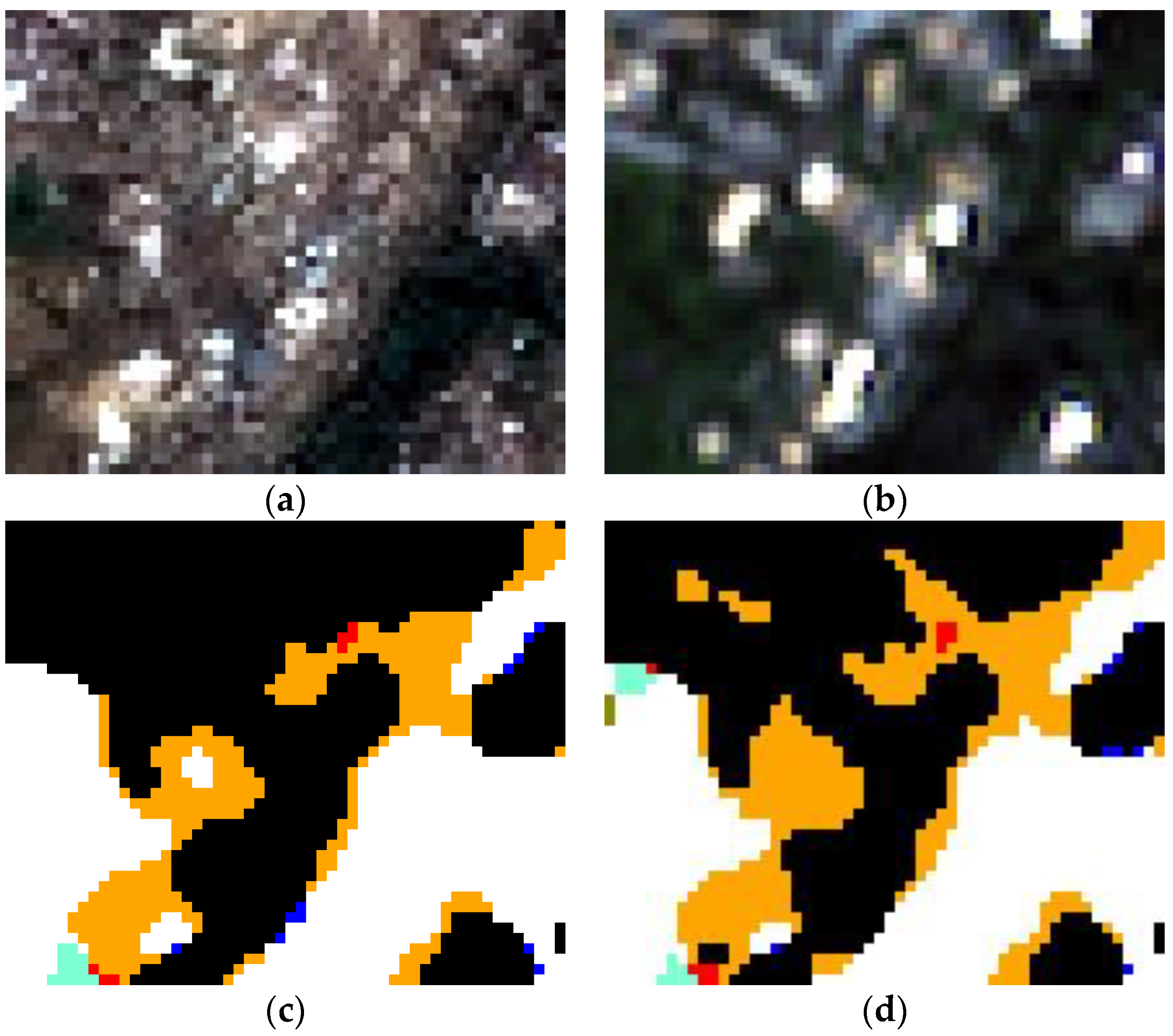

- “Jérémie 2017”: Pléiades multispectral image (Figure 2a) collected on 18 October 2017 and composed of 4 channels with a native resolution of 2 m; COSMO-SkyMed Enhanced Spotlight right-looking image (Figure 2b) collected on 2 December 2016, with a pixel spacing of 0.5 m, an approximate spatial resolution of 1 m, and HH polarization.

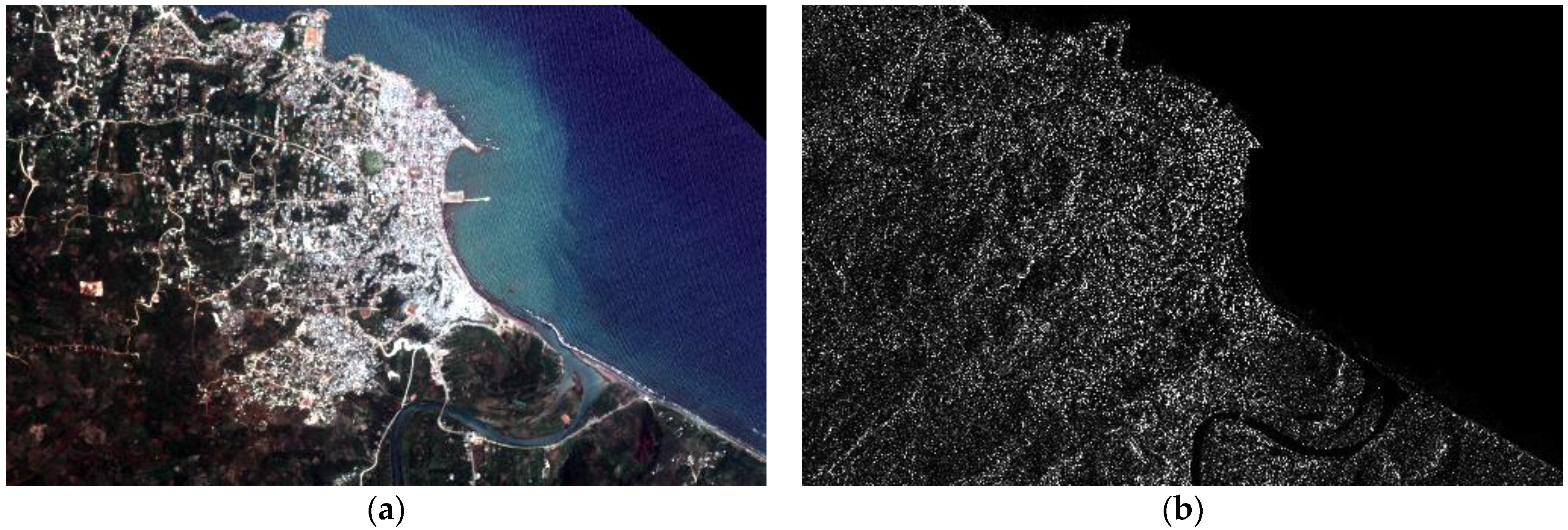

- “Jérémie 2018”: Pléiades multispectral image (Figure 3a) collected on 24 April 2018 and composed of 4 channels with a native resolution of 2 m; COSMO-SkyMed StripMap HIMAGE right-looking image (Figure 3b) collected on 12 May 2018, with HH polarization and an approximate spatial resolution of 3 m.

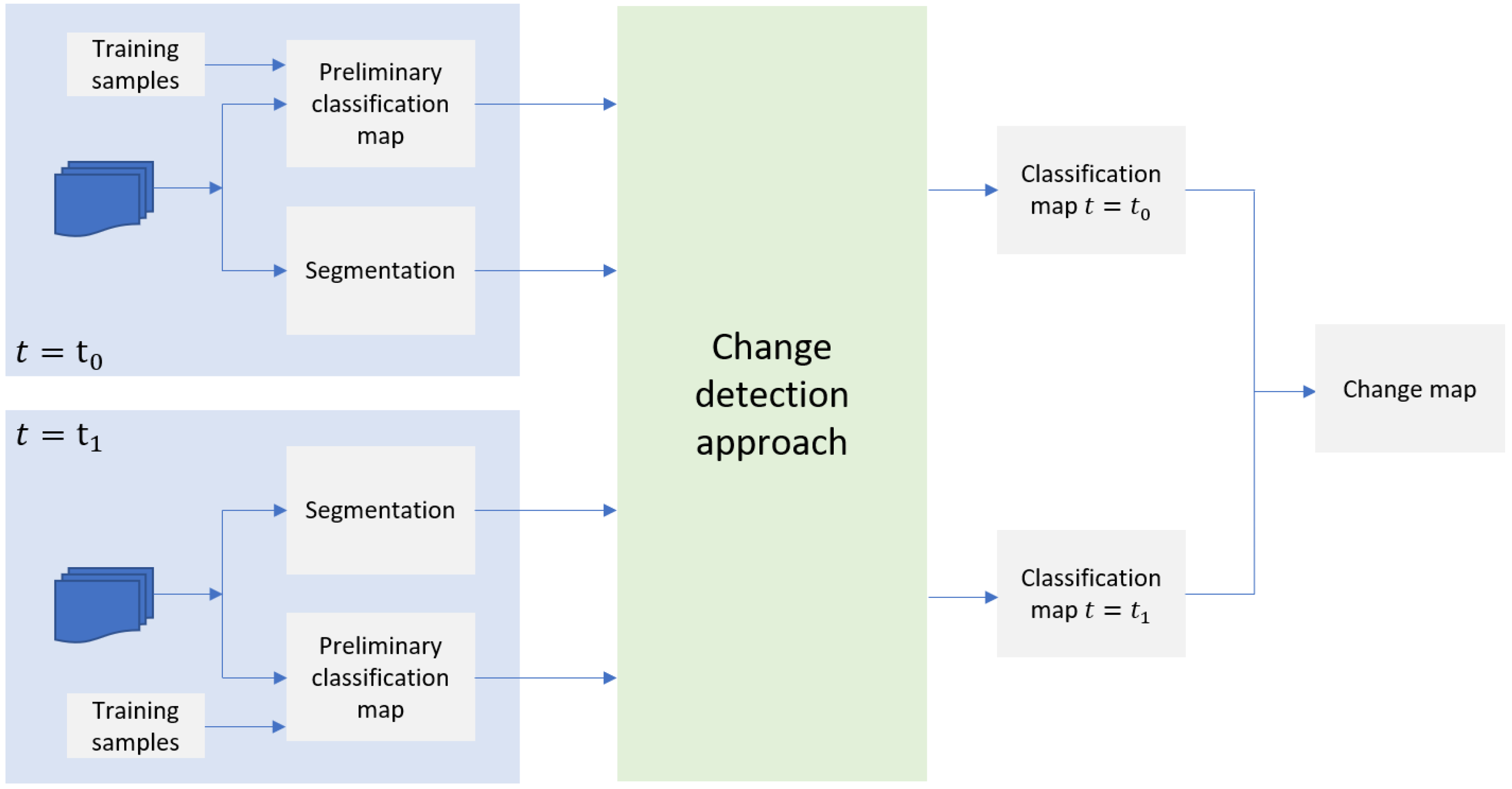

3.2. Overview of the Proposed Method

3.3. Energy Function of the Proposed Markov Model

- The contextual classification method proposed in [76], which consists of a support vector machine (SVM) whose kernel function follows a region-based approach and incorporates the spatial information conveyed by an input segmentation map. The segmentation map associated with the finest of the aforementioned scales is used.

- The framework proposed in [77] and extended in [78], which provides a rigorous methodological integration of the SVM and MRF approaches. It is based on a Hilbert space formulation, and its kernel combines the rationale of SVMs with a predefined spatial MRF model; here, the well-known Potts model plays this role. The extensions in [78] also integrate global or near-global energy minimization algorithms based on graph cuts or belief propagation.

- The random forest (RF) classifier, rooted in ensemble learning theory. RF combines multiple decision trees, each trained on a random resampling of the training data (bagging) and using, at each decision node, a random subset of the full set of features.
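
The idea behind the region-based kernel of the first method, namely letting the segmentation map influence the similarity between pixels, can be illustrated with a minimal sketch. The exact kernel of [76] is not reproduced here. As an assumption made only for illustration, the sketch combines an RBF kernel on the pixel feature vectors with an RBF kernel on the mean feature vector of the segment that contains each pixel. A convex combination of two valid kernels is itself a valid kernel. The function names, the weight `mu`, and the width `gamma` are hypothetical.

```python
import math


def region_means(features, segments):
    """Mean feature vector of each segment id in the segmentation map."""
    sums, counts = {}, {}
    for x, r in zip(features, segments):
        acc = sums.setdefault(r, [0.0] * len(x))
        for k, v in enumerate(x):
            acc[k] += v
        counts[r] = counts.get(r, 0) + 1
    return {r: [s / counts[r] for s in acc] for r, acc in sums.items()}


def rbf(a, b, gamma):
    """Gaussian RBF kernel exp(-gamma * ||a - b||^2)."""
    return math.exp(-gamma * sum((u - v) ** 2 for u, v in zip(a, b)))


def region_based_gram(features, segments, mu=0.5, gamma=1.0):
    """Gram matrix K[i][j] = mu*k(x_i, x_j) + (1 - mu)*k(m_r(i), m_r(j)).

    x_i is the feature vector of pixel i and m_r(i) is the mean feature
    vector of the segment containing pixel i (hypothetical composite kernel).
    """
    means = region_means(features, segments)
    n = len(features)
    gram = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            k_pix = rbf(features[i], features[j], gamma)
            k_reg = rbf(means[segments[i]], means[segments[j]], gamma)
            gram[i][j] = gram[j][i] = mu * k_pix + (1.0 - mu) * k_reg
    return gram
```

Pixels in the same segment share the region term, which equals 1. Their similarity therefore moves toward 1 as `mu` decreases. A Gram matrix built this way could be passed to any SVM solver that accepts precomputed kernels, for example scikit-learn's `SVC(kernel="precomputed")`.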
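
The Potts model mentioned in the second item adds a constant penalty β for every pair of neighboring pixels that receive different labels. The resulting energy is U(y) = Σ_i D_i(y_i) + β Σ_{i~j} [y_i ≠ y_j]. Here D_i(k) is a hypothetical pixelwise data cost of assigning class k to pixel i, and i~j runs over 4-connected neighbor pairs. The sketch below evaluates this energy on a small grid. To stay short, it lowers the energy with iterated conditional modes (ICM). ICM is a simple local minimizer swapped in here for illustration and is not the graph-cut or belief-propagation minimization used in [78]. All names and cost values are assumptions.

```python
def neighbors(r, c, rows, cols):
    """Yield the 4-connected neighbors of pixel (r, c) that lie inside the grid."""
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr, cc = r + dr, c + dc
        if 0 <= rr < rows and 0 <= cc < cols:
            yield rr, cc


def potts_energy(labels, data_cost, beta):
    """Sum of data costs plus beta times the number of discordant neighbor pairs."""
    rows, cols = len(labels), len(labels[0])
    unary = sum(data_cost[r][c][labels[r][c]] for r in range(rows) for c in range(cols))
    discordant = 0
    for r in range(rows):
        for c in range(cols):
            # Look only right and down so that every pair is counted once.
            if c + 1 < cols and labels[r][c] != labels[r][c + 1]:
                discordant += 1
            if r + 1 < rows and labels[r][c] != labels[r + 1][c]:
                discordant += 1
    return unary + beta * discordant


def icm(data_cost, beta, n_labels, max_sweeps=10):
    """Iterated conditional modes: a local minimizer of potts_energy (not graph cuts)."""
    rows, cols = len(data_cost), len(data_cost[0])
    # Start from the labels that minimize the data cost alone.
    labels = [[min(range(n_labels), key=lambda k: data_cost[r][c][k]) for c in range(cols)]
              for r in range(rows)]
    for _ in range(max_sweeps):
        changed = False
        for r in range(rows):
            for c in range(cols):
                def local_energy(k):
                    clash = sum(labels[rr][cc] != k for rr, cc in neighbors(r, c, rows, cols))
                    return data_cost[r][c][k] + beta * clash
                best = min(range(n_labels), key=local_energy)
                if best != labels[r][c]:
                    labels[r][c] = best
                    changed = True
        if not changed:  # a full sweep without changes: local minimum reached
            break
    return labels
```

Consider a 3 × 3 grid whose data costs favor class 0 everywhere except for a center pixel that weakly favors class 1 (costs 0.6 vs. 0.0). With β = 1, ICM relabels the isolated center pixel as class 0, lowering the energy from 4 to 0.6. This is the smoothing effect the Potts prior is meant to induce.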
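
The random forest described above has two sources of randomness: a bootstrap resample of the training set for each tree, and a random subset of features at each decision node. Both appear in the following minimal pure-Python sketch. It is a didactic toy, not the RF implementation used in the experiments. The Gini split criterion, the tree depth, the number of trees, the subset size of about √d features, and all function names are assumptions.

```python
import math
import random
from collections import Counter, namedtuple

# Internal tree node; leaves are plain class labels.
Node = namedtuple("Node", "feature threshold left right")


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((k / n) ** 2 for k in Counter(labels).values())


def majority(labels):
    """Most frequent label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]


def build_tree(X, y, depth, n_sub, rng):
    """Grow a tree in which every node only looks at n_sub random features."""
    if depth == 0 or len(set(y)) == 1:
        return majority(y)
    best = None
    for f in rng.sample(range(len(X[0])), n_sub):  # random feature subset at this node
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:  # no valid split among the sampled features
        return majority(y)
    _, f, t = best
    li = [i for i, row in enumerate(X) if row[f] <= t]
    ri = [i for i, row in enumerate(X) if row[f] > t]
    return Node(f, t,
                build_tree([X[i] for i in li], [y[i] for i in li], depth - 1, n_sub, rng),
                build_tree([X[i] for i in ri], [y[i] for i in ri], depth - 1, n_sub, rng))


def predict_tree(node, x):
    """Descend from the root to a leaf and return its label."""
    while isinstance(node, Node):
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node


def fit_forest(X, y, n_trees=25, depth=3, seed=0):
    """Bagging: each tree is grown on a bootstrap resample of the training set."""
    rng = random.Random(seed)
    n_sub = max(1, int(math.sqrt(len(X[0]))))
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in X]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], depth, n_sub, rng))
    return forest


def predict_forest(forest, x):
    """Majority vote of the individual trees."""
    return majority([predict_tree(tree, x) for tree in forest])
```

`fit_forest(X, y)` takes a list of per-pixel feature vectors `X` and class labels `y` and returns 25 randomized trees. `predict_forest` then labels a new pixel by majority vote. Each tree sees a different resample and different feature subsets, so individual trees disagree, and the vote averages out much of their variance.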

3.4. Optimization of the Parameters and Energy Minimization

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. The Recovery Observatory in Haiti in Short. Recovery Observatory Haiti by CEOS. Available online: https://www.recovery-observatory.org/drupal/en (accessed on 28 June 2021).

2. Haiti RO for Hurricane Matthew Recovery | CEOS | Committee on Earth Observation Satellites. Available online: https://ceos.org/ourwork/workinggroups/disasters/recovery-observatory/haiti-ro-for-hurricane-matthew-recovery/ (accessed on 14 May 2021).

3. Bovolo, F.; Bruzzone, L. The Time Variable in Data Fusion: A Change Detection Perspective. IEEE Geosci. Remote Sens. Mag. 2015, 3, 8–26.

4. Solberg, A.; Taxt, T.; Jain, A. A Markov random field model for classification of multisource satellite imagery. IEEE Trans. Geosci. Remote Sens. 1996, 34, 100–113.

5. Li, S.Z. Markov Random Field Modeling in Image Analysis; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2009.

6. Geman, S.; Geman, D. Stochastic Relaxation, Gibbs Distributions, and the Bayesian Restoration of Images. IEEE Trans. Pattern Anal. Mach. Intell. 1984, PAMI-6, 721–741.

7. Moser, G.; Serpico, S.B.; Benediktsson, J.A. Land-Cover Mapping by Markov Modeling of Spatial–Contextual Information in Very-High-Resolution Remote Sensing Images. Proc. IEEE 2012, 101, 631–651.

8. Moser, G.; Serpico, S.B. Multitemporal region-based classification of high-resolution images by Markov random fields and multiscale segmentation. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 25–29 June 2011; pp. 102–105.

9. Kato, Z. Markov Random Fields in Image Segmentation. Found. Trends Signal Process. 2011, 5, 1–155.

10. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

11. De Giorgi, A.; Moser, G.; Boni, G.; Pisani, A.R.; Tapete, D.; Zoffoli, S.; Serpico, S.B. Recovery Monitoring in Haiti After Hurricane Matthew Through Markov Random Fields and a Region-Based Approach. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 9357–9360.

12. Singh, A. Digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

13. Coppin, P.; Jonckheere, I.; Nackaerts, K.; Muys, B.; Lambin, E. Digital change detection methods in ecosystem monitoring: A review. Int. J. Remote Sens. 2004, 25, 1565–1596.

14. Lu, D.; Mausel, P.; Brondízio, E.; Moran, E. Change Detection Techniques. Int. J. Remote Sens. 2004, 25, 2365–2401.

15. Radke, R.J.; Andra, S.; Al-Kofahi, O.; Roysam, B. Image change detection algorithms: A systematic survey. IEEE Trans. Image Process. 2005, 14, 294–307.

16. Johnson, R.D.; Kasischke, E.S. Change vector analysis: A technique for the multispectral monitoring of land cover and condition. Int. J. Remote Sens. 1998, 19, 411–426.

17. Sziranyi, T.; Shadaydeh, M. Segmentation of Remote Sensing Images Using Similarity-Measure-Based Fusion-MRF Model. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1544–1548.

18. Bazi, Y.; Bruzzone, L.; Melgani, F. An unsupervised approach based on the generalized Gaussian model to automatic change detection in multitemporal SAR images. IEEE Trans. Geosci. Remote Sens. 2005, 43, 874–887.

19. Hedhli, I.; Moser, G.; Zerubia, J.; Serpico, S.B. New cascade model for hierarchical joint classification of multitemporal, multiresolution and multisensor remote sensing data. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 5247–5251.

20. Singh, P.; Kato, Z.; Zerubia, J. A Multilayer Markovian Model for Change Detection in Aerial Image Pairs with Large Time Differences. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 924–929.

21. Malila, W.A. Change Vector Analysis: An approach for detecting forest changes with Landsat. In Proceedings of the 6th Annual Symposium on Machine Processing of Remotely Sensed Data, West Lafayette, IN, USA, 3–6 June 1980; pp. 326–335.

22. Melgani, F.; Serpico, S. A Markov random field approach to spatio-temporal contextual image classification. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2478–2487.

23. Gamba, P.; Dell’Acqua, F.; Lisini, G. Change Detection of Multitemporal SAR Data in Urban Areas Combining Feature-Based and Pixel-Based Techniques. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2820–2827.

24. Benedek, C.; Shadaydeh, M.; Kato, Z.; Szirányi, T.; Zerubia, J. Multilayer Markov Random Field models for change detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2015, 107, 22–37.

25. Petitjean, F.; Ketterlin, A.; Gançarski, P. A global averaging method for dynamic time warping, with applications to clustering. Pattern Recognit. 2011, 44, 678–693.

26. Rignot, E.; Van Zyl, J. Change detection techniques for ERS-1 SAR data. IEEE Trans. Geosci. Remote Sens. 1993, 31, 896–906.

27. Oliver, C.; Quegan, S. Understanding Synthetic Aperture Radar Images; SciTech Publishing: Raleigh, NC, USA, 2004.

28. Pat, J.C.; Mackinnon, D. Automatic detection of vegetation changes in the southwestern United States using remotely sensed images. Photogramm. Eng. Remote Sens. 1994, 60, 571–582.

29. Muchoney, D.; Haack, B. Change detection for monitoring forest defoliation. Photogramm. Eng. Remote Sens. 1994, 60, 1243–1252.

30. Bruzzone, L.; Prieto, D. Automatic analysis of the difference image for unsupervised change detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1171–1182.

31. Moser, G.; Melgani, F.; Serpico, S.B. Unsupervised change-detection methods for remote-sensing images. Opt. Eng. 2002, 41, 3288–3297.

32. Deng, J.S.; Wang, K.; Deng, Y.H.; Qi, G.J. PCA-based land-use change detection and analysis using multitemporal and multisensor satellite data. Int. J. Remote Sens. 2008, 29, 4823–4838.

33. Jha, C.S.; Unni, N.V.M. Digital change detection of forest conversion of a dry tropical Indian forest region. Int. J. Remote Sens. 1994, 15, 2543–2552.

34. Inglada, J.; Mercier, G. A New Statistical Similarity Measure for Change Detection in Multitemporal SAR Images and Its Extension to Multiscale Change Analysis. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1432–1445.

35. Häme, T.; Heiler, I.; Miguel-Ayanz, J.S. An unsupervised change detection and recognition system for forestry. Int. J. Remote Sens. 1998, 19, 1079–1099.

36. Romero, N.A.; Cigna, F.; Tapete, D. ERS-1/2 and Sentinel-1 SAR Data Mining for Flood Hazard and Risk Assessment in Lima, Peru. Appl. Sci. 2020, 10, 6598.

37. Chini, M.; Pelich, R.-M.; Pulvirenti, L.; Pierdicca, N.; Hostache, R.; Matgen, P. Sentinel-1 InSAR Coherence to Detect Floodwater in Urban Areas: Houston and Hurricane Harvey as A Test Case. Remote Sens. 2019, 11, 107.

38. Serra, P.; Pons, X.; Sauri, D. Post-classification change detection with data from different sensors: Some accuracy considerations. Int. J. Remote Sens. 2003, 24, 3311–3340.

39. Manandhar, R.; Odeh, I.O.A.; Ancev, T. Improving the Accuracy of Land Use and Land Cover Classification of Landsat Data Using Post-Classification Enhancement. Remote Sens. 2009, 1, 330–344.

40. Pacifici, F.; Del Frate, F.; Solimini, C.; Emery, W.J. An Innovative Neural-Net Method to Detect Temporal Changes in High-Resolution Optical Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2007, 45, 2940–2952.

41. Ling, F.; Li, W.; Du, Y.; Li, X. Land Cover Change Mapping at the Subpixel Scale with Different Spatial-Resolution Remotely Sensed Imagery. IEEE Geosci. Remote Sens. Lett. 2010, 8, 182–186.

42. Volpi, M.; Tuia, D.; Bovolo, F.; Kanevski, M.; Bruzzone, L. Supervised change detection in VHR images using contextual information and support vector machines. Int. J. Appl. Earth Obs. Geoinf. 2013, 20, 77–85.

43. Hedhli, I.; Moser, G.; Zerubia, J.; Serpico, S.B. A New Cascade Model for the Hierarchical Joint Classification of Multitemporal and Multiresolution Remote Sensing Data. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6333–6348.

44. Richards, J.A.; Jia, X. Remote Sensing Digital Image Analysis; Springer: Berlin/Heidelberg, Germany, 1999.

45. Benediktsson, J.A.; Pesaresi, M.; Arnason, K. Classification and feature extraction for remote sensing images from urban areas based on morphological transformations. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1940–1949.

46. Mallat, S. A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 674–693.

47. Pratt, W.K. Digital Image Processing; Wiley Interscience: Hoboken, NJ, USA, 2007.

48. Dellepiane, S.; Fontana, F.; Vernazza, G. Nonlinear image labeling for multivalued segmentation. IEEE Trans. Image Process. 1996, 5, 429–446.

49. Troglio, G.; Le Moigne, J.; Benediktsson, J.A.; Moser, G.; Serpico, S.B. Automatic Extraction of Ellipsoidal Features for Planetary Image Registration. IEEE Geosci. Remote Sens. Lett. 2011, 9, 95–99.

50. Bouman, C.A.; Shapiro, M. A multiscale random field model for Bayesian image segmentation. IEEE Trans. Image Process. 1994, 3, 162–177.

51. Vakalopoulou, M.; Karantzalos, K.; Komodakis, N.; Paragios, N. Graph-Based Registration, Change Detection, and Classification in Very High Resolution Multitemporal Remote Sensing Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2940–2951.

52. Yu, H.; Yang, W.; Hua, G.; Ru, H.; Huang, P. Change Detection Using High Resolution Remote Sensing Images Based on Active Learning and Markov Random Fields. Remote Sens. 2017, 9, 1233.

53. Danilla, C.; Persello, C.; Tolpekin, V.; Bergado, J.R. Classification of multitemporal SAR images using convolutional neural networks and Markov random fields. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 2231–2234.

54. Raha, S.; Saha, K.; Sil, S.; Halder, A. Supervised Change Detection Technique on Remote Sensing Images Using F-Distribution and MRF Model. In Proceedings of the International Conference on Frontiers in Computing and Systems, COMSYS 2020, Jalpaiguri, India, 13–15 January 2020; pp. 249–256.

55. Sutton, C.; McCallum, A. An introduction to conditional random fields. Found. Trends Mach. Learn. 2011, 4, 267–373.

56. Bendjebbour, A.; Delignon, Y.; Fouque, L.; Samson, V.; Pieczynski, W. Multisensor image segmentation using Dempster-Shafer fusion in Markov fields context. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1789–1798.

57. Storvik, G.; Fjortoft, R.; Solberg, A. A Bayesian approach to classification of multiresolution remote sensing data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 539–547.

58. Moser, G.; Serpico, S.B. Unsupervised Change Detection from Multichannel SAR Data by Markovian Data Fusion. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2114–2128.

59. Solarna, D.; Moser, G.; Serpico, S.B. A Markovian Approach to Unsupervised Change Detection with Multiresolution and Multimodality SAR Data. Remote Sens. 2018, 10, 1671.

60. Solarna, D.; Moser, G.; Serpico, S.B. Multiresolution and Multimodality SAR Data Fusion Based on Markov and Conditional Random Fields for Unsupervised Change Detection. In Proceedings of the IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 29–32.

61. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2017.

62. Liu, T.; Yang, L.; Lunga, D. Change detection using deep learning approach with object-based image analysis. Remote Sens. Environ. 2021, 256, 112308.

63. Yu, X.; Fan, J.; Chen, J.; Zhang, P.; Zhou, Y.; Han, L. NestNet: A multiscale convolutional neural network for remote sensing image change detection. Int. J. Remote Sens. 2021, 42, 4898–4921.

64. Huang, F.; Yu, Y.; Feng, T. Hyperspectral remote sensing image change detection based on tensor and deep learning. J. Vis. Commun. Image Represent. 2018, 58, 233–244.

65. Ito, R.; Iino, S.; Hikosaka, S. Change detection of land use from pairs of satellite images via convolutional neural network. In Proceedings of the Asian Conference on Remote Sensing, Kuala Lumpur, Malaysia, 15–19 October 2018; Volume 2, pp. 1170–1176.

66. Post Disaster Needs Assessment and Recovery Framework: Overview—PDNA—International Recovery Platform. Available online: https://www.recoveryplatform.org/pdna/about_pdna (accessed on 28 June 2021).

67. Cigna, F.; Tapete, D.; Danzeglocke, J.; Bally, P.; Cuccu, R.; Papadopoulou, T.; Caumont, H.; Collet, A.; de Boissezon, H.; Eddy, A.; et al. Supporting Recovery after 2016 Hurricane Matthew in Haiti With Big SAR Data Processing in the Geohazards Exploitation Platform (GEP). In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 6867–6870.

68. Eastman, R.D.; Le Moigne, J.; Netanyahu, N.S. Research issues in image registration for remote sensing. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

69. Solarna, D.; Gotelli, A.; Le Moigne, J.; Moser, G.; Serpico, S.B. Crater Detection and Registration of Planetary Images Through Marked Point Processes, Multiscale Decomposition, and Region-Based Analysis. IEEE Trans. Geosci. Remote Sens. 2020, 1–20.

70. Solarna, D.; Moser, G.; Le Moigne, J.; Serpico, S.B. Planetary crater detection and registration using marked point processes, multiple birth and death algorithms, and region-based analysis. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 2337–2340.

71. Pinel-Puysségur, B.; Maggiolo, L.; Roux, M.; Gasnier, N.; Solarna, D.; Moser, G.; Serpico, S.; Tupin, F. Experimental Comparison of Registration Methods for Multisensor SAR-Optical Data. In Proceedings of the IGARSS 2021—International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 11–16 July 2021.

72. Le Moigne, J.; Eastman, R.D. Multisensor Registration for Earth Remotely Sensed Imagery. In Multi-Sensor Image Fusion and Its Applications; CRC Press: Boca Raton, FL, USA, 2018; pp. 323–346.

73. Maggiolo, L.; Solarna, D.; Moser, G.; Serpico, S.B. Automatic Area-Based Registration of Optical and SAR Images through Generative Adversarial Networks and a Correlation-Type Metric. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 2089–2092.

74. Costa, H.; Foody, G.; Boyd, D. Supervised methods of image segmentation accuracy assessment in land cover mapping. Remote Sens. Environ. 2018, 205, 338–351.

75. Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient Graph-Based Image Segmentation. Int. J. Comput. Vis. 2004, 59, 167–181.

76. De Giorgi, A.; Moser, G.; Poggi, G.; Scarpa, G.; Serpico, S.B. Very High Resolution Optical Image Classification Using Watershed Segmentation and a Region-Based Kernel. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 1312–1315.

77. Moser, G.; Serpico, S.B. Combining Support Vector Machines and Markov Random Fields in an Integrated Framework for Contextual Image Classification. IEEE Trans. Geosci. Remote Sens. 2012, 51, 2734–2752.

78. Ghamisi, P.; Maggiori, E.; Li, S.; Souza, R.; Tarabalka, Y.; Moser, G.; De Giorgi, A.; Fang, L.; Chen, Y.; Chi, M.; et al. New Frontiers in Spectral-Spatial Hyperspectral Image Classification: The Latest Advances Based on Mathematical Morphology, Markov Random Fields, Segmentation, Sparse Representation, and Deep Learning. IEEE Geosci. Remote Sens. Mag. 2018, 6, 10–43.

79. Moon, T.K. The expectation-maximization algorithm. IEEE Signal Process. Mag. 1996, 13, 47–60.

80. De Giorgi, A.; Moser, G.; Serpico, S.B. Parameter optimization for Markov random field models for remote sensing image classification through sequential minimal optimization. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 2346–2349.

81. Boykov, Y.; Kolmogorov, V. An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1124–1137.

82. Boykov, Y.; Veksler, O.; Zabih, R. Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1222–1239.

83. Greig, D.M.; Porteous, B.T.; Seheult, A.H. Exact Maximum a Posteriori Estimation for Binary Images. J. R. Stat. Soc. Ser. B Stat. Methodol. 1989, 51, 271–279.

84. Besag, J. Spatial Interaction and the Statistical Analysis of Lattice Systems. J. R. Stat. Soc. Ser. B Stat. Methodol. 1974, 36, 192–225.

85. Shen, H.; Li, X.; Cheng, Q.; Zeng, C.; Yang, G.; Li, H.; Zhang, L. Missing Information Reconstruction of Remote Sensing Data: A Technical Review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 61–85.

86. Melgani, F. Contextual reconstruction of cloud-contaminated multitemporal multispectral images. IEEE Trans. Geosci. Remote Sens. 2006, 44, 442–455.

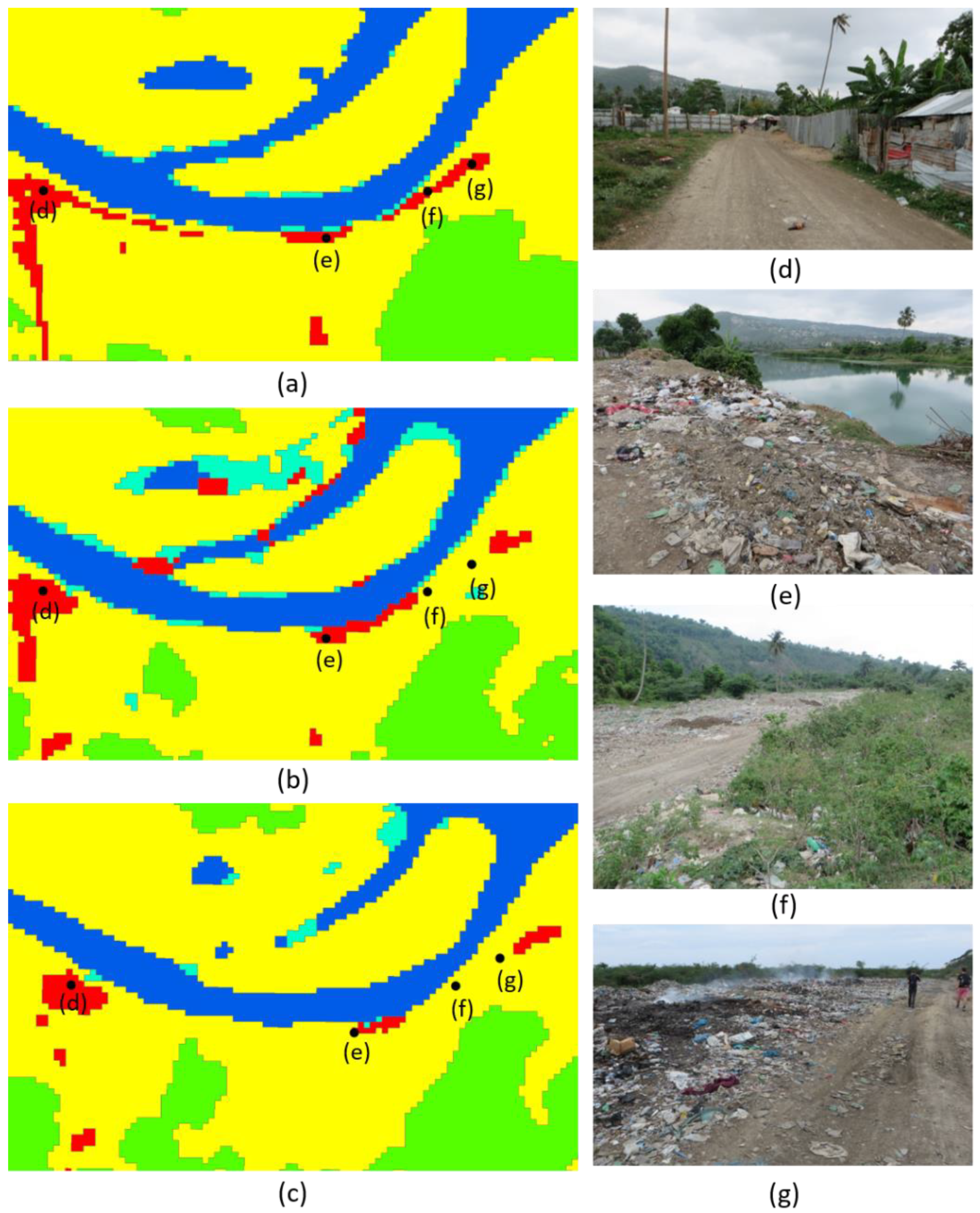

87. Recovery Observatory Haiti by CEOS. 2019: 3th Users Workshop in Haiti, Port au Prince and Jérémie. 27 May 2019. Available online: https://www.recovery-observatory.org/drupal/en/groups/events/2019-3th-users-workshop-haiti-port-au-prince-and-j%C3%A9r%C3%A9mie (accessed on 29 June 2021).

88. Dell’Acqua, F.; Gamba, P. Texture-based characterization of urban environments on satellite SAR images. IEEE Trans. Geosci. Remote Sens. 2003, 41, 153–159.

89. Brás, A.; Berdier, C.; Emmanuel, E.; Zimmerman, M. Problems and current practices of solid waste management in Port-au-Prince (Haiti). Waste Manag. 2009, 29, 2907–2909.

90. ReliefWeb. The Waste Management Practices of Aid Organisations—Case Study: Haiti (Executive Summary)—Haiti. Available online: https://reliefweb.int/report/haiti/waste-management-practices-aid-organisations-case-study-haiti-executive-summary (accessed on 29 June 2021).

91. Serpico, S.B.; Dellepiane, S.; Boni, G.; Moser, G.; Angiati, E.; Rudari, R. Information Extraction from Remote Sensing Images for Flood Monitoring and Damage Evaluation. Proc. IEEE 2012, 100, 2946–2970.

92. Gómez-Chova, L.; Tuia, D.; Moser, G.; Camps-Valls, G. Multimodal Classification of Remote Sensing Images: A Review and Future Directions. Proc. IEEE 2015, 103, 1560–1584.

93. Pastorino, M.; Moser, G.; Serpico, S.; Zerubia, J. Semantic segmentation of remote sensing images combining hierarchical probabilistic graphical models and deep convolutional neural networks. In Proceedings of the IGARSS 2021—2021 IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 11–16 July 2021.

94. Maggiolo, L.; Marcos, D.; Moser, G.; Tuia, D. Improving Maps from CNNs Trained with Sparse, Scribbled Ground Truths Using Fully Connected CRFs. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2099–2102.

| Class (Jérémie 2016) | PA | UA | Class (Jérémie 2017) | PA | UA |
|---|---|---|---|---|---|
| Water | 100% | 100% | Water | 97.3% | 100% |
| Urban/Anthropogenic | 100% | 100% | Urban/Anthropogenic | 100% | 97.4% |
| Tall Veg. | 99.2% | 95.3% | Tall Veg. | 100% | 100% |
| Low Veg. | 95.1% | 99.2% | Low Veg. | 97.0% | 100% |
| Muddy Water | 100% | 100% | Bare Soil | 100% | 89.1% |
| OA | 98.9% | | OA | 98.9% | |
| AA | 98.9% | | AA | 98.9% | |
| kappa | 98.6% | | kappa | 98.8% | |

PA: producer's accuracy; UA: user's accuracy; OA: overall accuracy; AA: average accuracy; kappa: Cohen's kappa coefficient.
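
The accuracy measures reported in these tables can all be derived from a confusion matrix of the test samples. The sketch below assumes the common convention that rows are true classes and columns are assigned classes. Under that convention:

- PA of a class is its diagonal entry divided by its row total.
- UA of a class is its diagonal entry divided by its column total.
- OA is the trace divided by the grand total.
- AA is the mean of the class-wise PA values.
- kappa is Cohen's coefficient (p_o − p_e)/(1 − p_e), where p_o = OA and p_e is the chance agreement computed from the row and column totals.

The function name and the toy matrix are illustrative assumptions, not data from this study. As a consistency check on the Jérémie 2016 column, the mean of its five PA values is (100 + 100 + 99.2 + 95.1 + 100)/5 = 98.86%, which rounds to the reported AA of 98.9%.

```python
def accuracy_report(confusion):
    """Return per-class PA and UA, plus OA, AA and Cohen's kappa.

    confusion[i][j] = number of test samples of true class i labeled as class j.
    """
    n_cls = len(confusion)
    total = sum(sum(row) for row in confusion)
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n_cls)) for j in range(n_cls)]
    diag = [confusion[i][i] for i in range(n_cls)]
    pa = [d / r if r else 0.0 for d, r in zip(diag, row_sums)]  # producer's accuracy
    ua = [d / c if c else 0.0 for d, c in zip(diag, col_sums)]  # user's accuracy
    oa = sum(diag) / total
    aa = sum(pa) / n_cls
    # Expected agreement by chance, from the marginal totals.
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - p_e) / (1.0 - p_e)
    return {"PA": pa, "UA": ua, "OA": oa, "AA": aa, "kappa": kappa}
```

For the toy matrix `[[50, 0], [5, 45]]`, the function gives PA = [1.0, 0.9], OA = 0.95, and kappa = 0.9. Kappa is lower than OA because part of the agreement is expected by chance (p_e = 0.5).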

| Class | Jérémie 2018 PA | Jérémie 2018 UA | Jérémie 2019 PA | Jérémie 2019 UA |
|---|---|---|---|---|
| Water | 100% | 100% | 100% | 100% |
| Urban/Anthropogenic | 98.4% | 100% | 98.4% | 100% |
| Tall Veg. | 99.4% | 100% | 100% | 100% |
| Low Veg. | 100% | 98.0% | 100% | 98.5% |
| Bare Soil | 100% | 100% | 100% | 100% |
| OA | 99.5% | | 99.6% | |
| AA | 99.6% | | 99.7% | |
| kappa | 99.3% | | 99.5% | |

| Class (Zoom Jérémie 2016) | PA | UA | Class (Zoom Jérémie 2017) | PA | UA |
|---|---|---|---|---|---|
| Urban/Anthropogenic | 100% | 100% | Urban/Anthropogenic | 98.6% | 100% |
| Shrubs and bush | 100% | 100% | Shrubs and bush | 100% | 97.5% |
| Grass | 100% | 100% | Grass | 95.6% | 100% |
| OA | 100% | | OA | 98.9% | |
| AA | 100% | | AA | 98.1% | |
| kappa | 100% | | kappa | 98.1% | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


De Giorgi, A.; Solarna, D.; Moser, G.; Tapete, D.; Cigna, F.; Boni, G.; Rudari, R.; Serpico, S.B.; Pisani, A.R.; Montuori, A.; et al. Monitoring the Recovery after 2016 Hurricane Matthew in Haiti via Markovian Multitemporal Region-Based Modeling. Remote Sens. 2021, 13, 3509. https://doi.org/10.3390/rs13173509


Chicago/Turabian Style: De Giorgi, Andrea, David Solarna, Gabriele Moser, Deodato Tapete, Francesca Cigna, Giorgio Boni, Roberto Rudari, Sebastiano Bruno Serpico, Anna Rita Pisani, Antonio Montuori, et al. 2021. "Monitoring the Recovery after 2016 Hurricane Matthew in Haiti via Markovian Multitemporal Region-Based Modeling" Remote Sensing 13, no. 17: 3509. https://doi.org/10.3390/rs13173509

APA Style: De Giorgi, A., Solarna, D., Moser, G., Tapete, D., Cigna, F., Boni, G., Rudari, R., Serpico, S. B., Pisani, A. R., Montuori, A., & Zoffoli, S. (2021). Monitoring the Recovery after 2016 Hurricane Matthew in Haiti via Markovian Multitemporal Region-Based Modeling. Remote Sensing, 13(17), 3509. https://doi.org/10.3390/rs13173509