Airborne LiDAR Point Cloud Processing for Archaeology. Pipeline and QGIS Toolbox

Abstract

1. Introduction

2. Materials and Methods

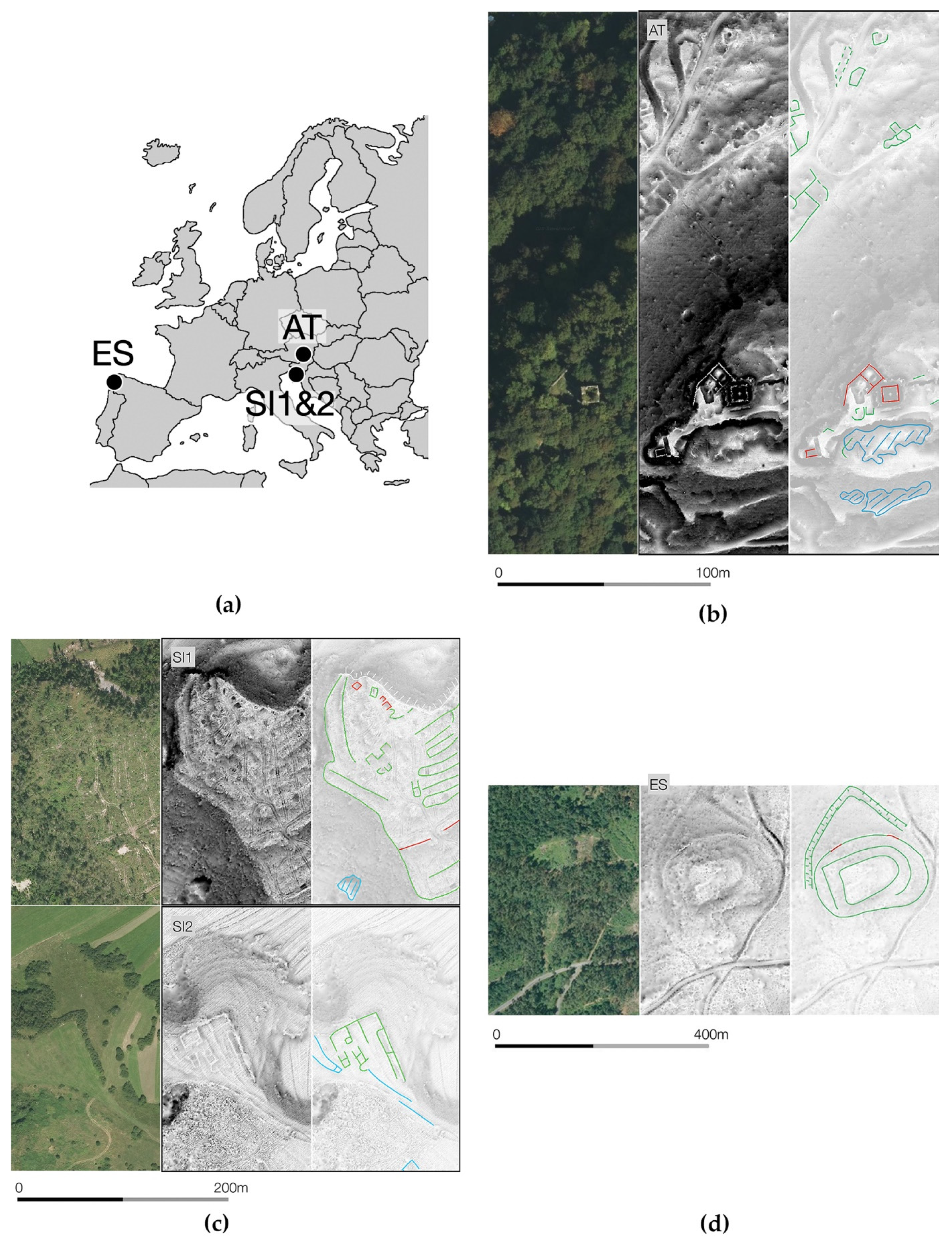

2.1. Test Data

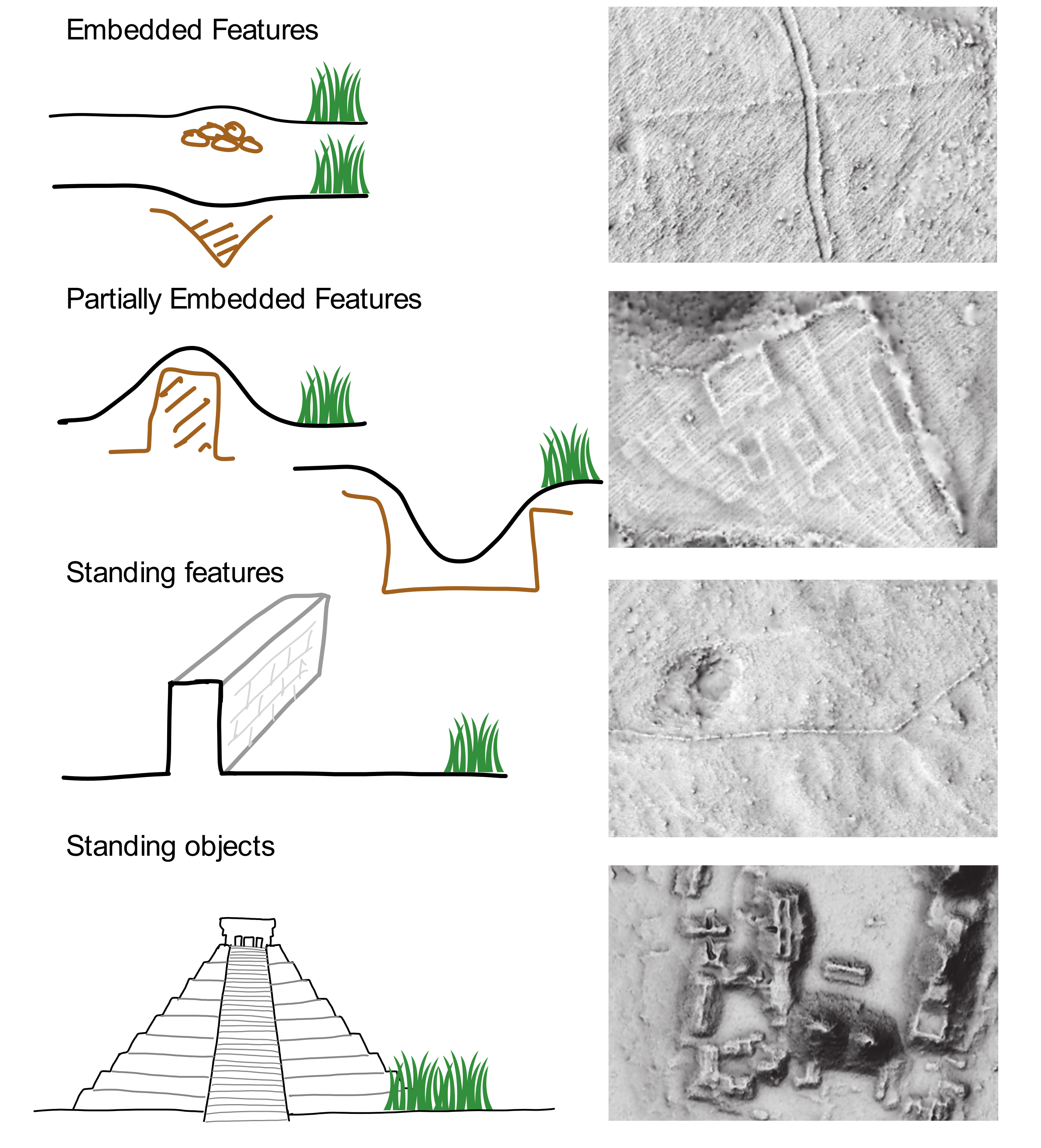

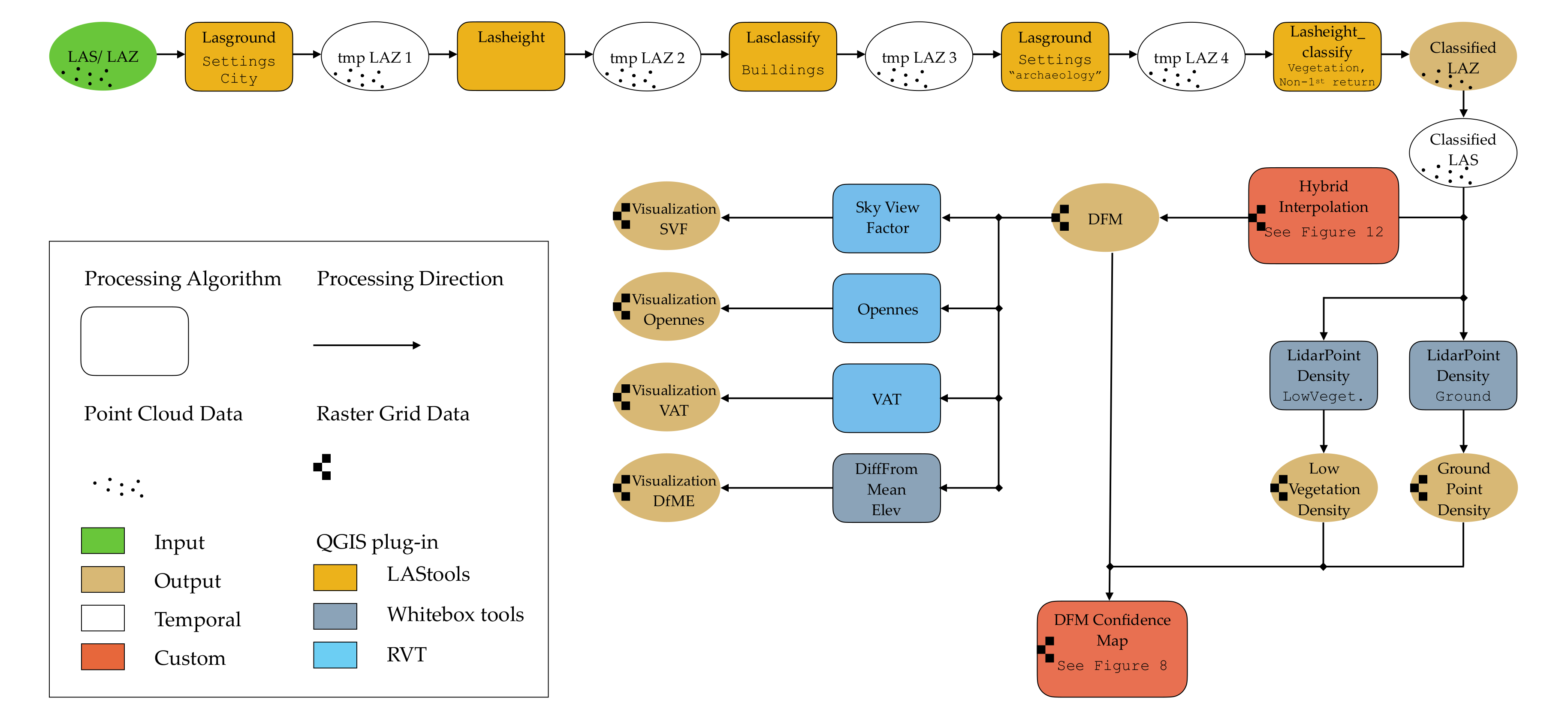

2.2. Automatic Ground Point and Object-Type Classification

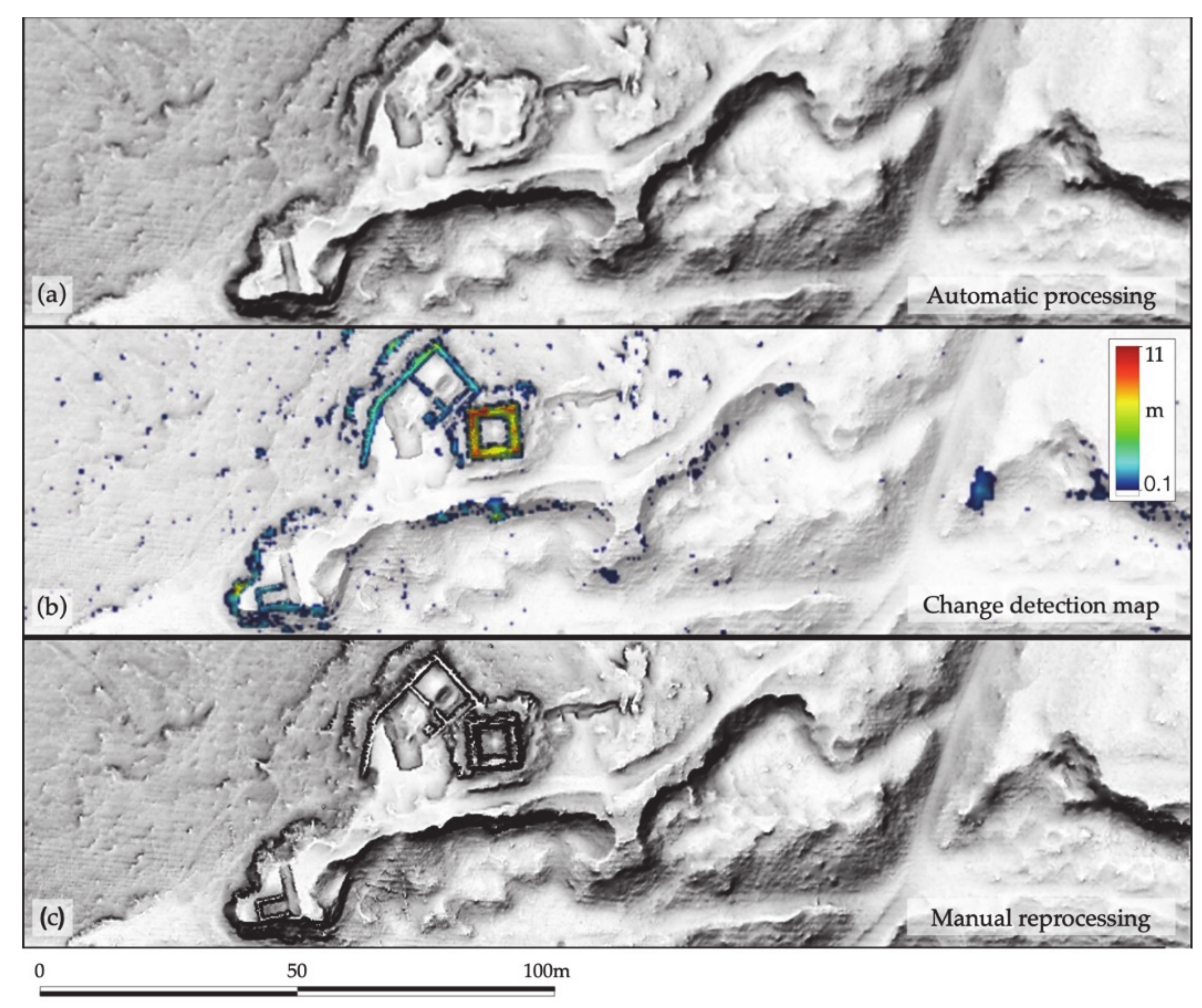

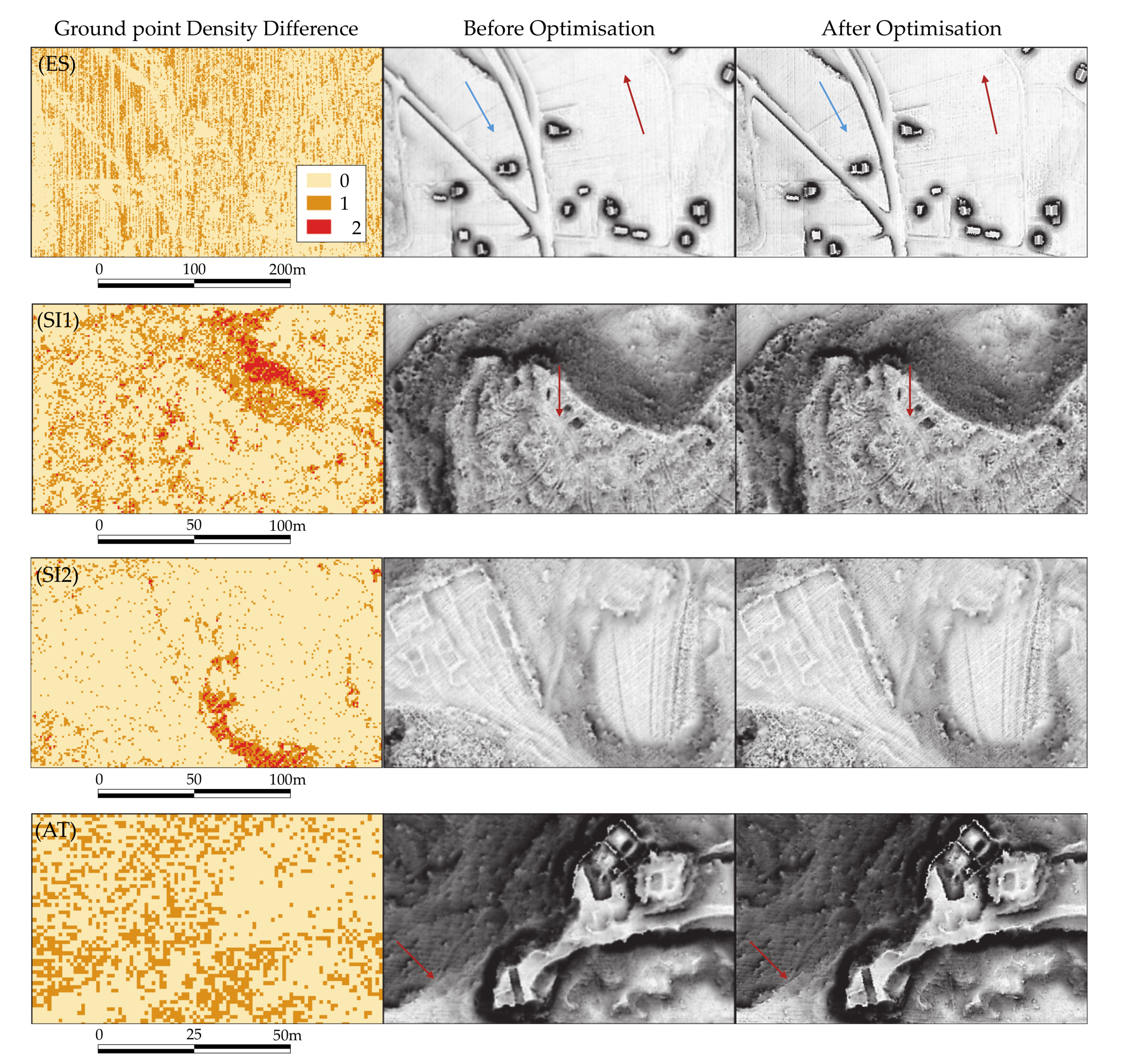

2.3. Manual Reclassification

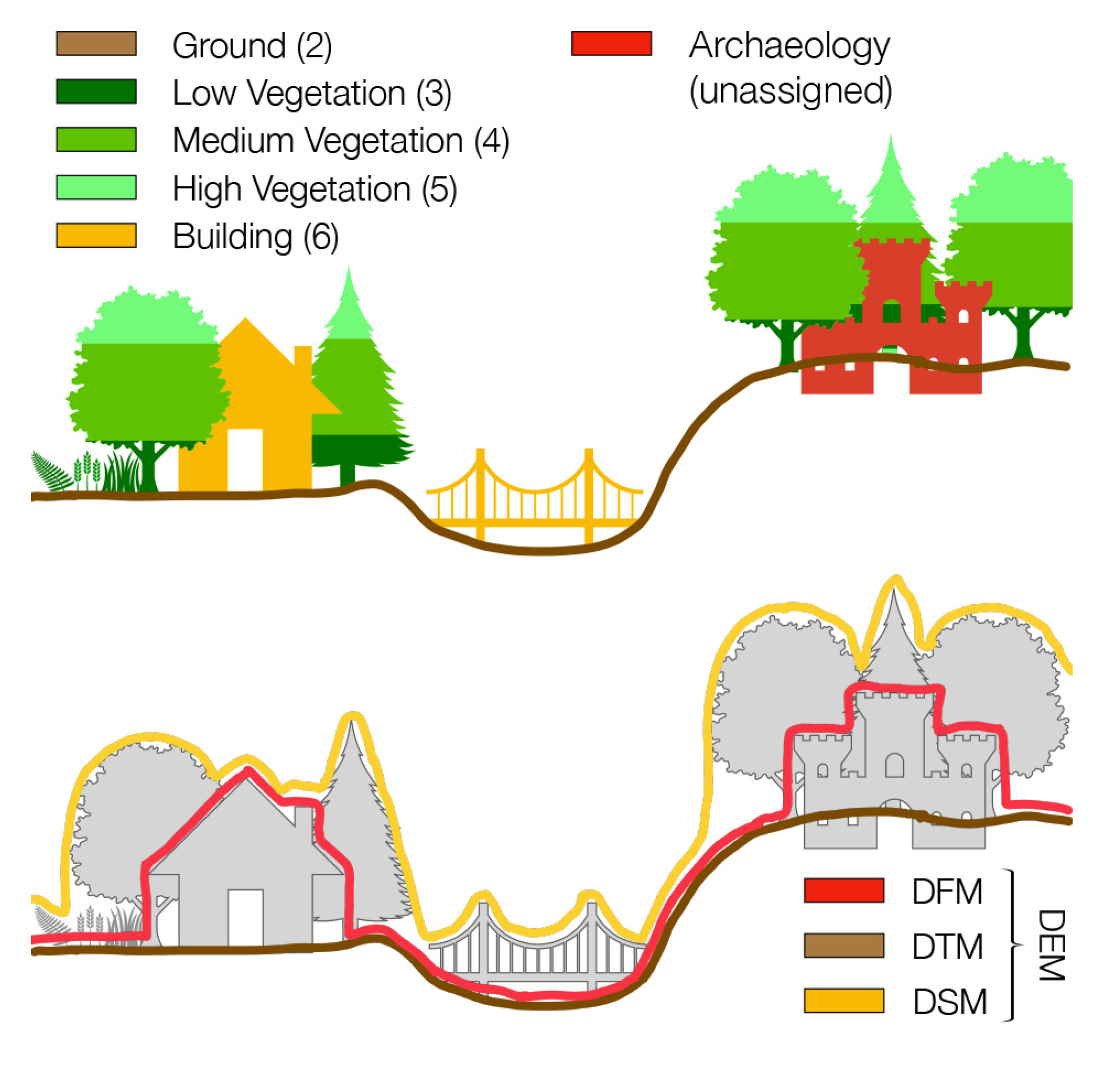

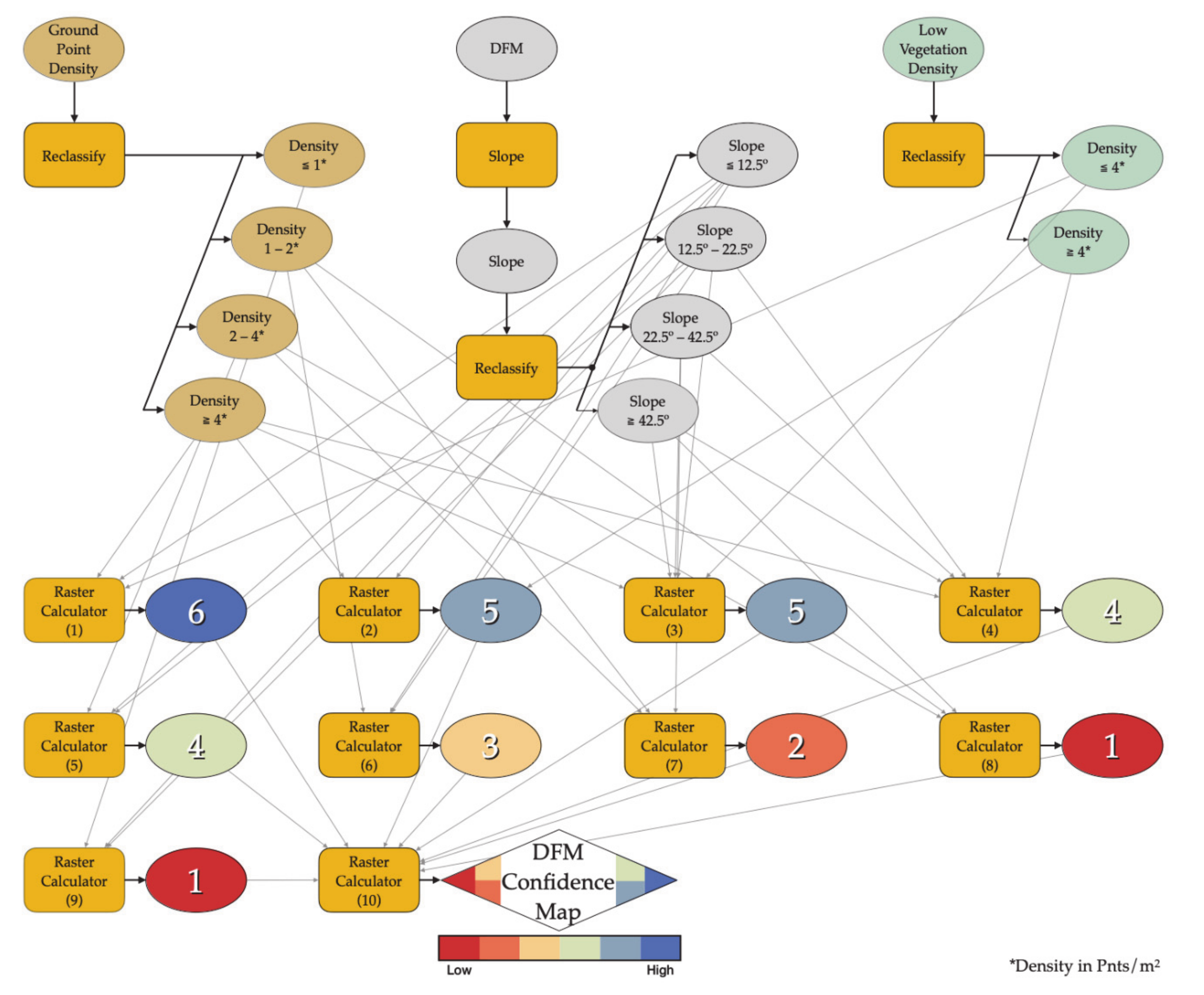

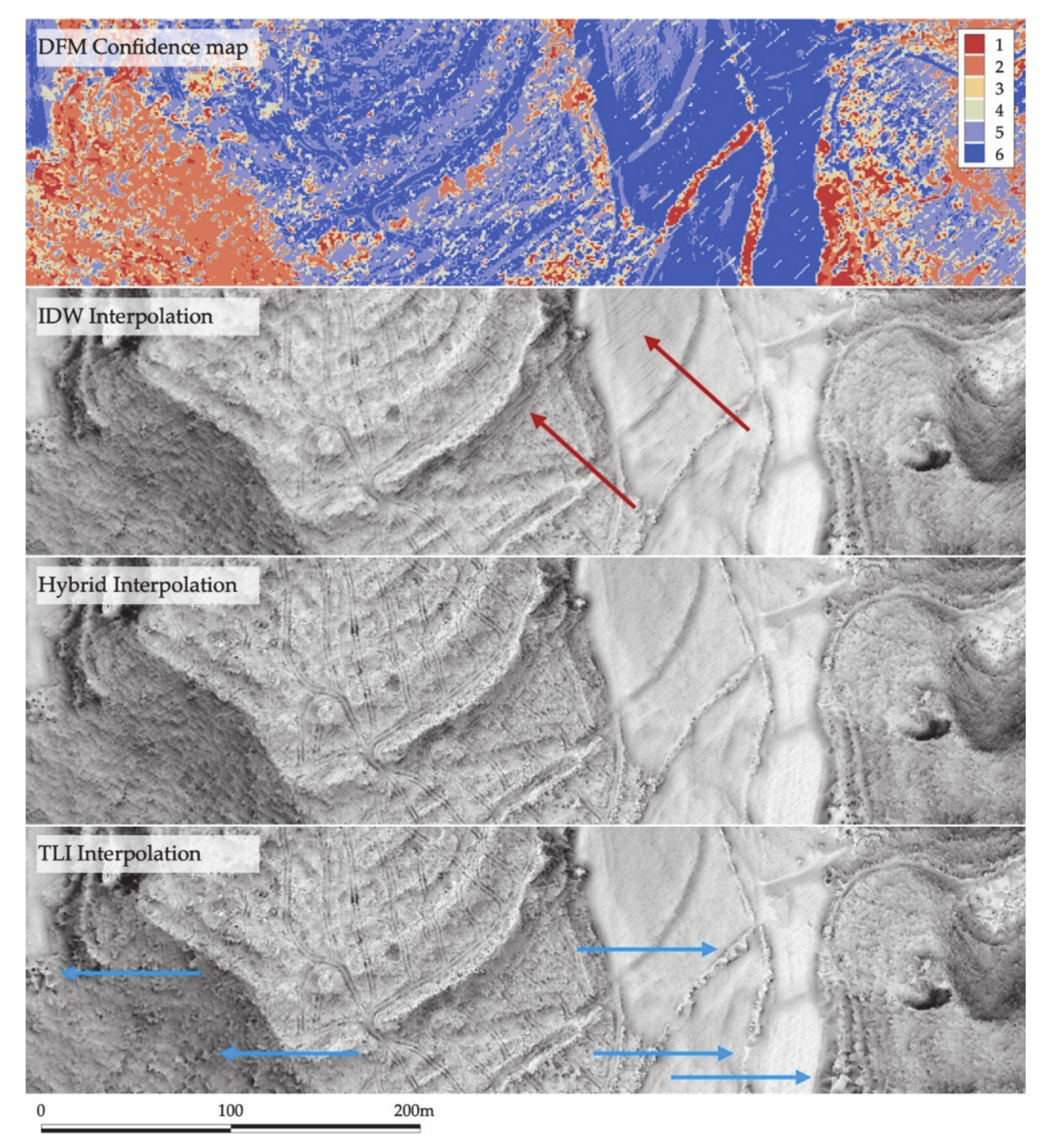

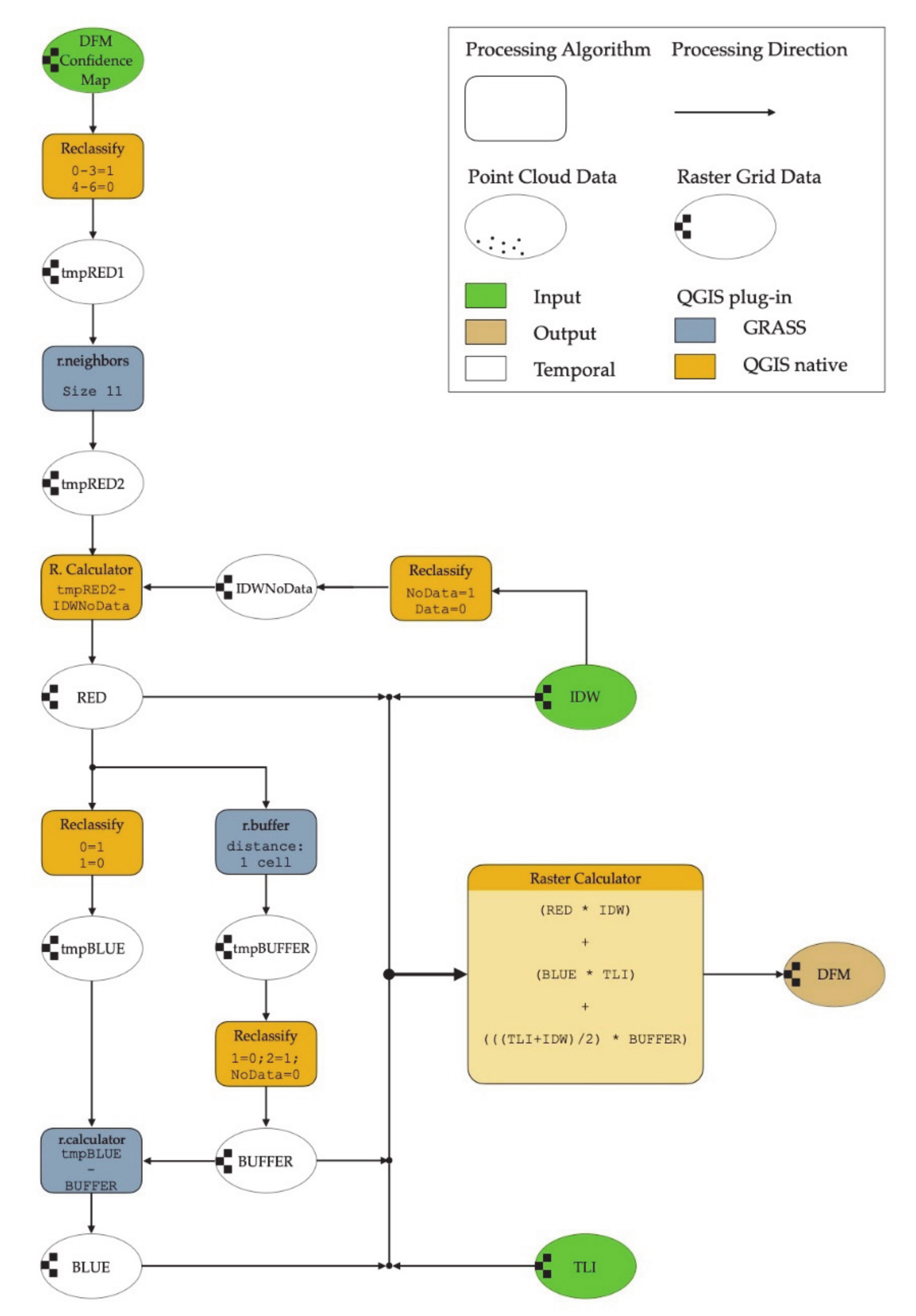

2.4. DFM Interpolation

2.5. Enhanced Visualization

2.6. Pipeline and Toolbox

3. Results

3.1. Automatic Ground Point and Object-Type Classification

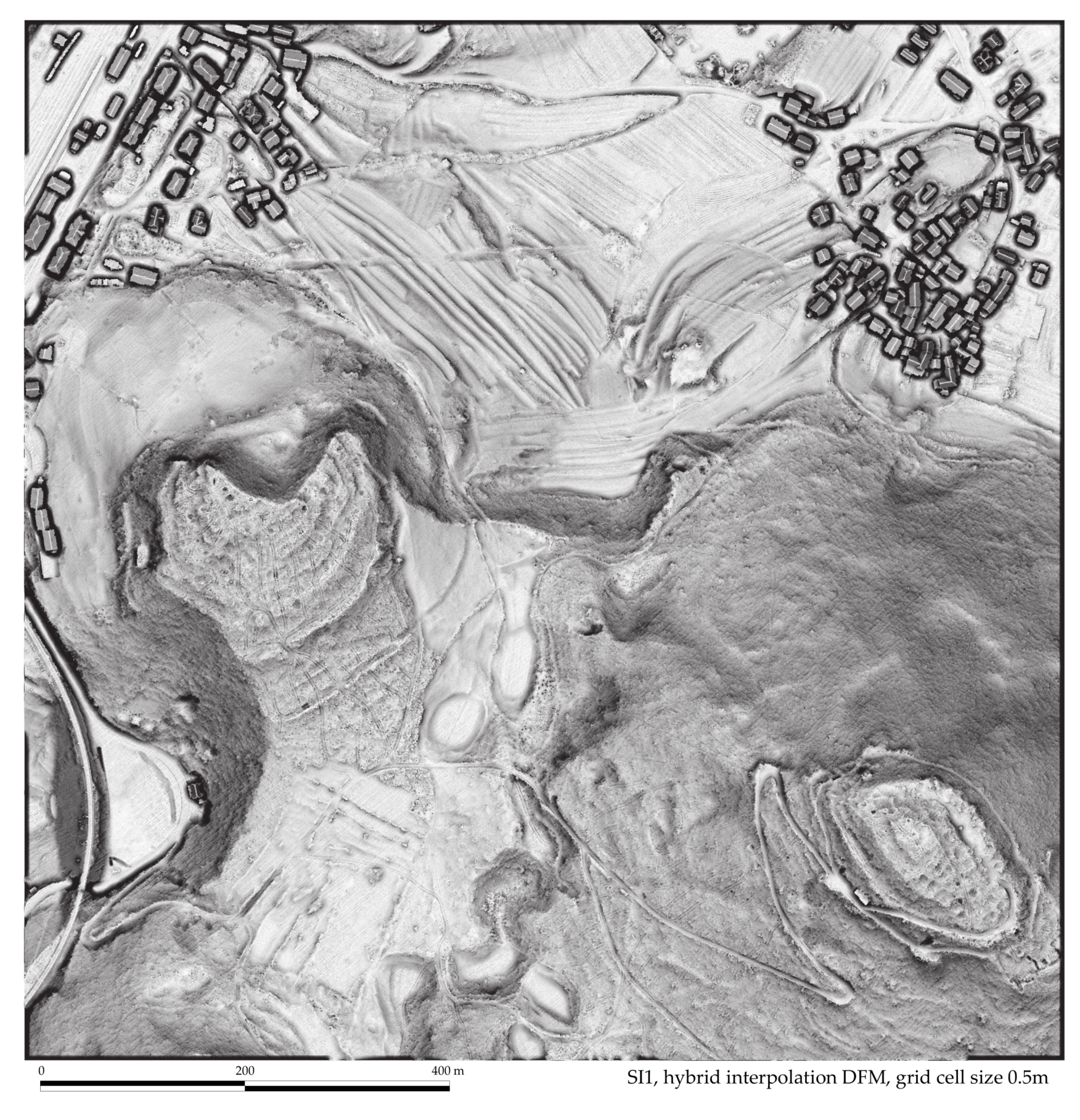

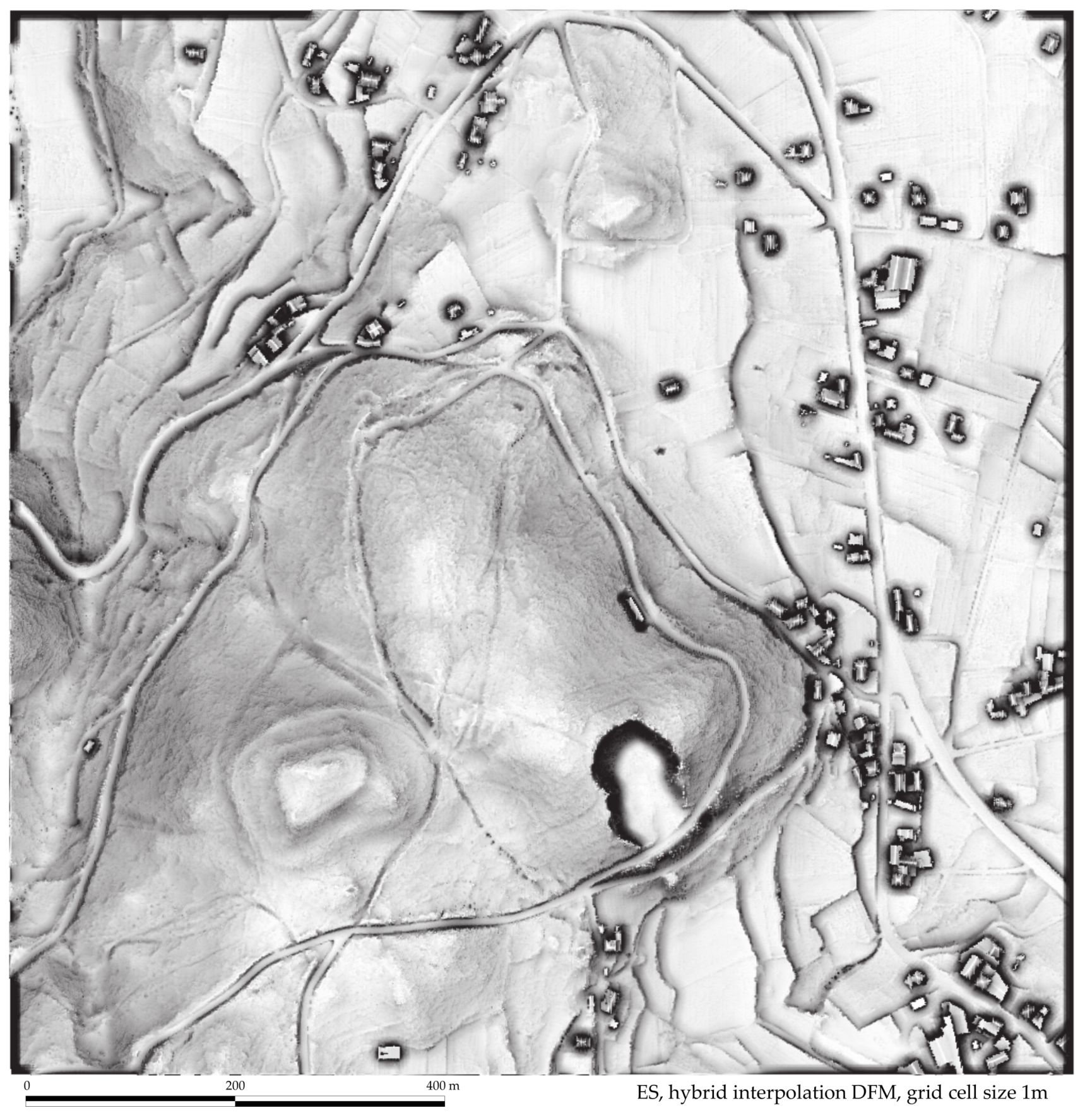

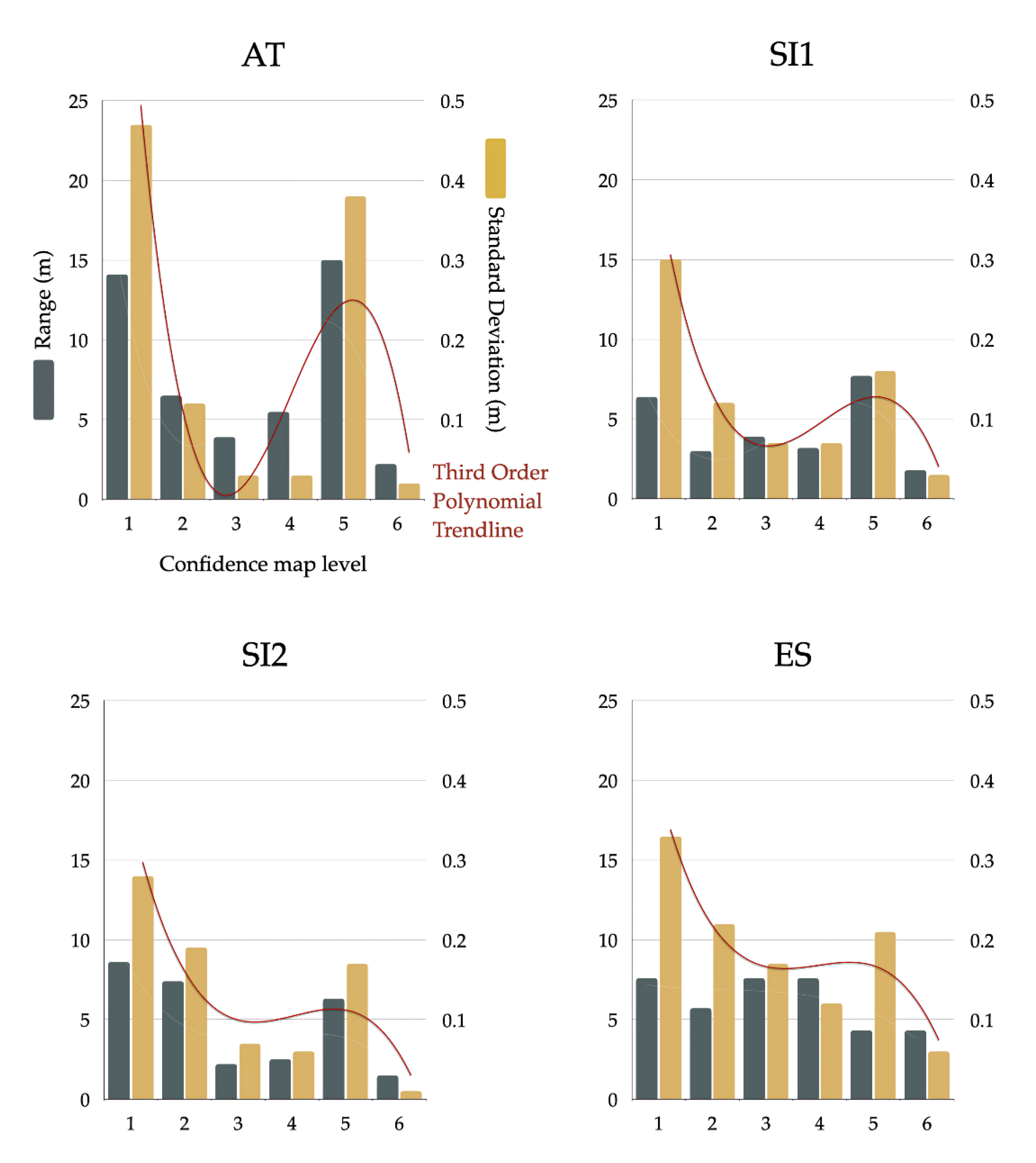

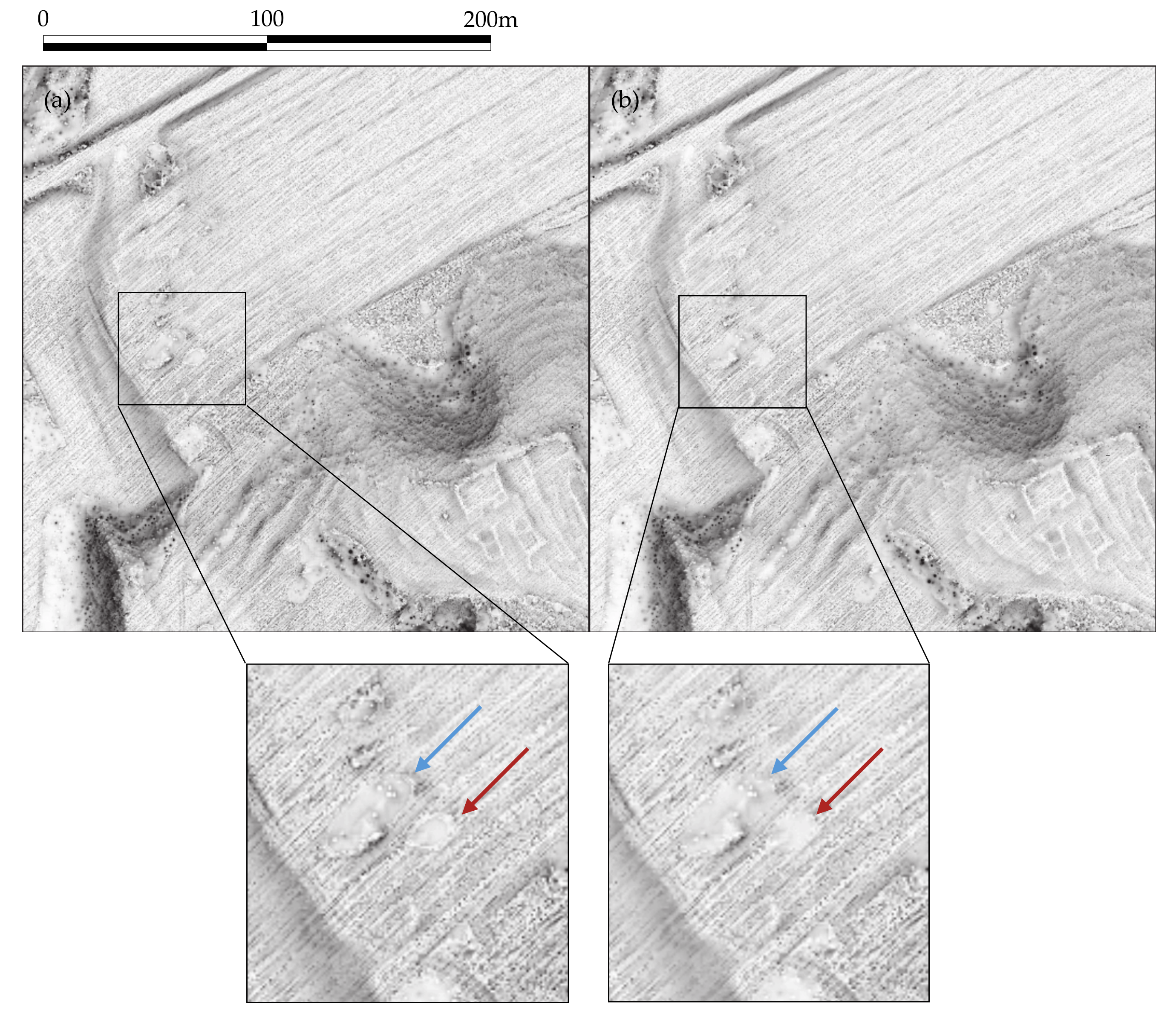

3.2. DFM Interpolation

3.3. Enhanced Visualization

3.4. Documenting the Process (Metadata and Paradata)

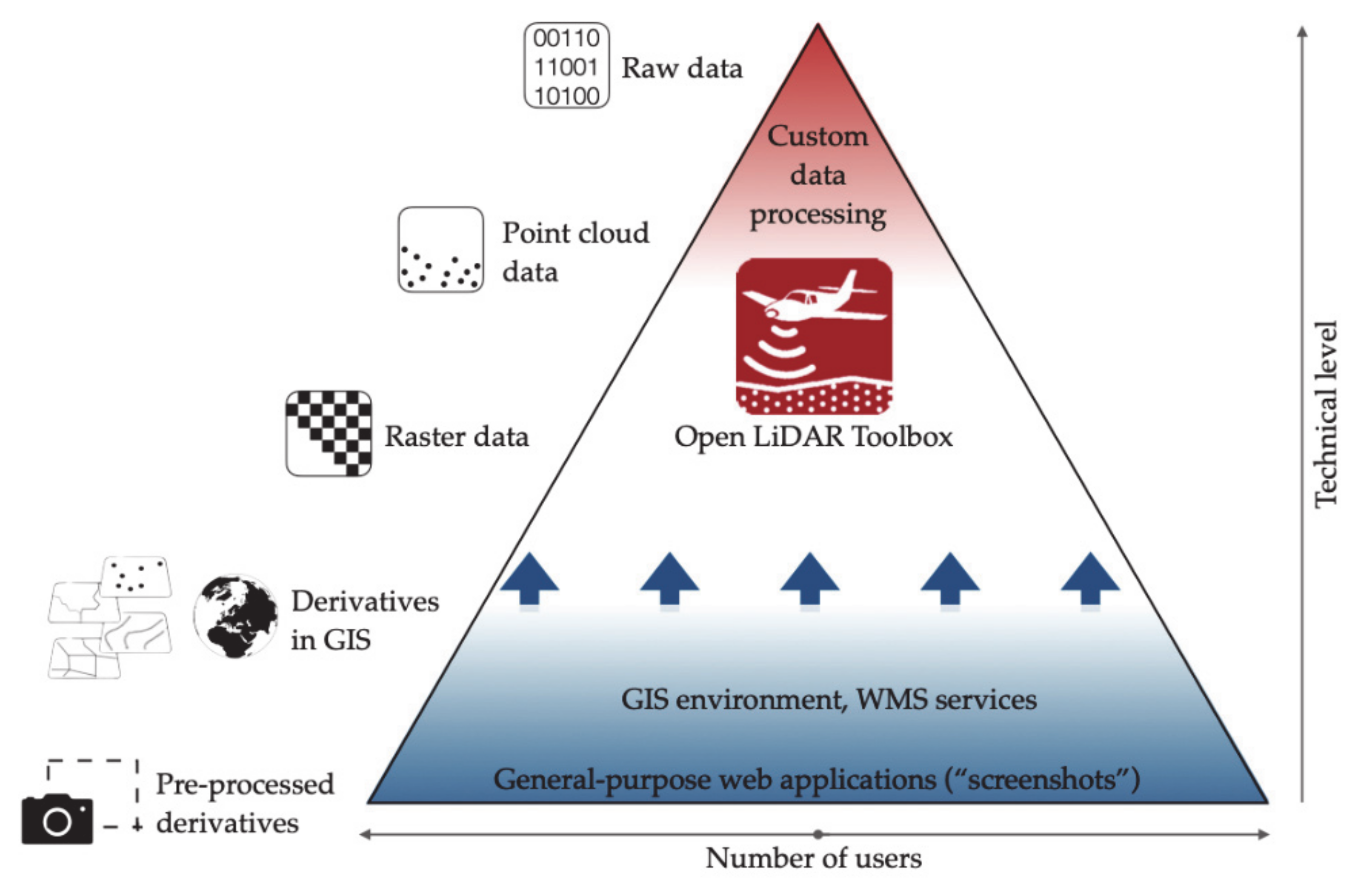

3.5. Open LiDAR Toolbox

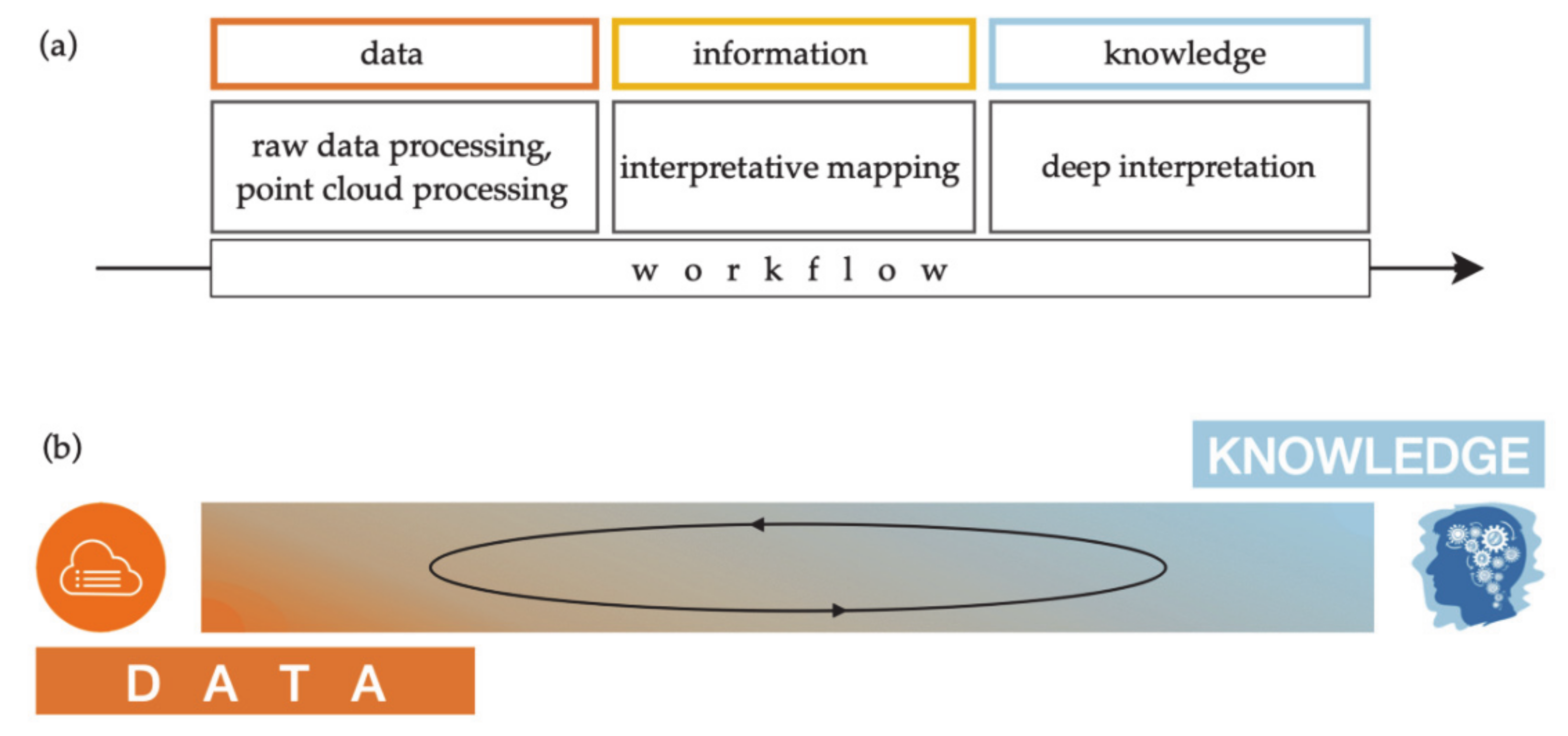

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

- Cohen, A.; Klassen, S.; Evans, D. Ethics in Archaeological Lidar. J. Comput. Appl. Archaeol. 2020, 3, 76–91.

- Chase, A.S.Z.; Chase, D.; Chase, A. Ethics, New Colonialism, and Lidar Data: A Decade of Lidar in Maya Archaeology. J. Comput. Appl. Archaeol. 2020, 3, 51–62.

- Crutchley, S. Light Detection and Ranging (Lidar) in the Witham Valley, Lincolnshire: An Assessment of New Remote Sensing Techniques. Archaeol. Prospect. 2006, 13, 251–257.

- Challis, K.; Carey, C.; Kincey, M.; Howard, A.J. Assessing the Preservation Potential of Temperate, Lowland Alluvial Sediments Using Airborne Lidar Intensity. J. Archaeol. Sci. 2011, 38, 301–311.

- Lozić, E.; Štular, B. Documentation of Archaeology-Specific Workflow for Airborne LiDAR Data Processing. Geosciences 2021, 11, 26.

- Chase, A.F.; Chase, D.Z.; Weishampel, J.F.; Drake, J.B.; Shrestha, R.L.; Slatton, K.C.; Awe, J.J.; Carter, W.E. Airborne LiDAR, Archaeology, and the Ancient Maya Landscape at Caracol, Belize. J. Archaeol. Sci. 2011, 38, 387–398.

- Evans, D.; Fletcher, R.J.; Pottier, C.; Chevance, J.-B.; Soutif, D.; Tan, B.S.; Im, S.; Ea, D.; Tin, T.; Kim, S.; et al. Uncovering Archaeological Landscapes at Angkor Using Lidar. Proc. Natl. Acad. Sci. USA 2013, 110, 12595–12600.

- Chase, A.F.; Chase, D.Z.; Fisher, C.T.; Leisz, S.J.; Weishampel, J.F. Geospatial Revolution and Remote Sensing LiDAR in Mesoamerican Archaeology. Proc. Natl. Acad. Sci. USA 2012, 109, 12916–12921.

- Devereux, B.J.; Amable, G.; Crow, P.; Cliff, A. The Potential of Airborne Lidar for Detection of Archaeological Features under Woodland Canopies. Antiquity 2005, 79, 648–660.

- Bewley, R.; Crutchley, S.; Shell, C. New Light on an Ancient Landscape: Lidar Survey in the Stonehenge World Heritage Site. Antiquity 2005, 79, 636–647.

- Rowlands, A.; Sarris, A. Detection of Exposed and Subsurface Archaeological Remains Using Multi-Sensor Remote Sensing. J. Archaeol. Sci. 2007, 34, 795–803.

- Canuto, M.A.; Estrada-Belli, F.; Garrison, T.G.; Houston, S.D.; Acuña, M.J.; Kováč, M.; Marken, D.; Nondédéo, P.; Auld-Thomas, L.; Castanet, C.; et al. Ancient Lowland Maya Complexity as Revealed by Airborne Laser Scanning of Northern Guatemala. Science 2018, 361, eaau0137.

- Rosenswig, R.M.; López-Torrijos, R. Lidar Reveals the Entire Kingdom of Izapa during the First Millennium BC. Antiquity 2018, 92, 1292–1309.

- Johnson, K.M.; Ouimet, W.B. Rediscovering the Lost Archaeological Landscape of Southern New England Using Airborne Light Detection and Ranging (LiDAR). J. Archaeol. Sci. 2014, 43, 9–20.

- Štular, B. The Use of Lidar-Derived Relief Models in Archaeological Topography The Kobarid Region (Slovenia) Case Study. Arheol. Vestn. 2011, 62, 393–432.

- Crutchley, S.; Crow, P. The Light Fantastic: Using Airborne Lidar in Archaeological Survey; English Heritage: Swindon, UK, 2010.

- Doneus, M.; Briese, C. Airborne Laser Scanning in forested areas—Potential and limitations of an archaeological prospection technique. In Remote Sensing for Archaeological Heritage Management; Cowley, D.C., Ed.; Europae Archaeologia Consilium (EAC): Brussels, Belgium, 2011; pp. 59–76. ISBN 978-963-9911-20-8.

- Opitz, R.S. Interpreting Archaeological Topography. Airborne Laser Scanning, 3D Data and Ground Observation; Opitz, R.S., Cowley, D.C., Eds.; Oxbow Books: Oxford, UK, 2013; pp. 13–31. ISBN 978-1-84217-516-3.

- Doneus, M.; Mandlburger, G.; Doneus, N. Archaeological Ground Point Filtering of Airborne Laser Scan Derived Point-Clouds in a Difficult Mediterranean Environment. J. Comput. Appl. Archaeol. 2020, 3, 92–108.

- Štular, B.; Lozić, E. Comparison of Filters for Archaeology-Specific Ground Extraction from Airborne LiDAR Point Clouds. Remote Sens. 2020, 12, 3025.

- Dallas, C. Curating Archaeological Knowledge in the Digital Continuum: From Practice to Infrastructure. Open Archaeol. 2015, 1, 176–207.

- Clarke, D.L. Analytical Archaeology; Routledge: Abingdon-on-Thames, UK, 1978; pp. 19–23. ISBN 0-416-85450-8.

- Shanks, M.; Tilley, C. Facts and Values in Archaeology. In Re-Constructing Archaeology: Theory and Practice; Routledge: Abingdon-on-Thames, UK, 1992; pp. 46–67. ISBN 978-0-415-08870-1.

- Latour, B. Pandora’s Hope: Essays on the Reality of Science Studies; Harvard University Press: Cambridge, MA, USA, 1999; ISBN 0-674-65335-1.

- Dennis, L.M. Digital Archaeological Ethics: Successes and Failures in Disciplinary Attention. J. Comput. Appl. Archaeol. 2020, 3, 210–218.

- Briese, C.; Pfennigbauer, M.; Ullrich, A.; Doneus, M. Multi-Wavelength Airborne Laser Scanning for Archaeological Prospection. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2013, XL-5/W2, 119–124.

- Doneus, M.; Kühteiber, T. Airborne laser scanning and archaeological interpretation—Bringing back the people. In Interpreting Archaeological Topography: Airborne Laser Scanning, 3D Data and Ground Observation; Opitz, R.S., Cowley, D.C., Eds.; Occasional Publication of the Aerial Archaeology Research Group; Oxbow Books: Oxford, UK, 2013; pp. 32–50. ISBN 978-1-84217-516-3.

- Opitz, R.S.; Cowley, D.C. (Eds.) Interpreting archaeological topography: Lasers, 3D data, observation, visualisation and applications. In Interpreting Archaeological Topography: Airborne Laser Scanning, 3D Data and Ground Observation; Occasional Publication of the Aerial Archaeology Research Group; Oxbow Books: Oxford, UK, 2013; pp. 1–12. ISBN 978-1-84217-516-3.

- Hodder, I.; Hutson, S. Reading the Past. Current Approaches to Interpretation in Archaeology; Cambridge University Press: Cambridge, UK, 2003; ISBN 0-521-82132-0.

- Garstki, K. Introduction: Disruptive Technologies and Challenging Futures. In Critical Archaeology in the Digital Age; Garstki, K., Ed.; Cotsen Institute of Archaeology Press: Los Angeles, CA, USA, In press.

- Štular, B.; Lozić, E.; Eichert, S. Interpolation of Airborne LiDAR Data for Archaeology. HAL Preprints. 2021. Available online: https://hal.archives-ouvertes.fr/hal-03196185 (accessed on 21 June 2021).

- Štular, B.; Lozić, E.; Eichert, S. Airborne LiDAR-Derived Digital Elevation Model for Archaeology. Remote Sens. 2021, 13, 1855.

- Kokalj, Ž.; Hesse, R. Airborne Laser Scanning Raster Data Visualization: A Guide to Good Practice; Založba ZRC: Ljubljana, Slovenia, 2017; ISBN 978-961-254-984-8.

- Hobič, J. Arheologija Slovenije/Archaeology of Slovenia. Blog. 2020. Available online: https://arheologijaslovenija.blogspot.com/2016/05/ (accessed on 21 June 2021).

- Štular, B.; Lozić, E. Digitalni podatki. In GIS v Sloveniji; Ciglič, R., Geršič, M., Perko, D., Zorn, M., Eds.; Geografski inštitut Antona Melika ZRC SAZU: Ljubljana, Slovenia, 2016; Volume 13, pp. 157–166. ISBN 978-961-254-929-9.

- Vosselman, G. Slope Based Filtering of Laser Altimetry Data. Int. Arch. Photogramm. Remote Sens. 2000, 33, 935–942.

- Meng, X.; Currit, N.; Zhao, K. Ground Filtering Algorithms for Airborne LiDAR Data: A Review of Critical Issues. Remote Sens. 2010, 2, 833–860.

- Meng, X.; Wang, L.; Silván-Cárdenas, J.L.; Currit, N. A Multi-Directional Ground Filtering Algorithm for Airborne LIDAR. ISPRS J. Photogramm. Remote Sens. 2009, 64, 117–124.

- Mongus, D.; Žalik, B. Computationally Efficient Method for the Generation of a Digital Terrain Model From Airborne LiDAR Data Using Connected Operators. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 340–351.

- Tóvári, D.; Pfeifer, N. Segmentation Based Robust Interpolation-a New Approach to Laser Data Filtering. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2005, 36, 79–84.

- Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An Easy-to-Use Airborne LiDAR Data Filtering Method Based on Cloth Simulation. Remote Sens. 2016, 8, 501.

- Buján, S.; Cordero, M.; Miranda, D. Hybrid Overlap Filter for LiDAR Point Clouds Using Free Software. Remote Sens. 2020, 12, 1051.

- Axelsson, P. DEM Generation from Laser Scanner Data Using Adaptive Tin Models. Int. Arch. Photogramm. Remote Sens. 2000, 33, 110–117.

- Pingel, T.J.; Clarke, K.C.; McBride, W.A. An Improved Simple Morphological Filter for the Terrain Classification of Airborne LIDAR Data. ISPRS J. Photogramm. Remote Sens. 2013, 77, 21–30.

- Pfeifer, N.; Mandlburger, G. LiDAR Data Filtering and Digital Terrain Model Generation. In Topographic laser ranging and scanning: Principles and processing; Shan, J., Toth, C.K., Eds.; CRC Press, Taylor & Francis Group: Boca Raton, FL, USA, 2018; pp. 349–378. ISBN 978-1-4987-7227-3.

- Cosenza, D.N.; Pereira, L.G.; Guerra-Hernández, J.; Pascual, A.; Soares, P.; Tomé, M. Impact of Calibrating Filtering Algorithms on the Quality of LiDAR-Derived DTM and on Forest Attribute Estimation through Area-Based Approach. Remote Sens. 2020, 12, 918.

- Sithole, G.; Vosselman, G. Experimental Comparison of Filter Algorithms for Bare-Earth Extraction from Airborne Laser Scanning Point Clouds. ISPRS J. Photogramm. Remote Sens. 2004, 59, 85–101.

- ASPRS. LAS Specification Version 1.4-R13; The American Society for Photogrammetry & Remote Sensing: Bethesda, MD, USA, 2013.

- Hightower, J.; Butterfield, A.; Weishampel, J. Quantifying Ancient Maya Land Use Legacy Effects on Contemporary Rainforest Canopy Structure. Remote Sens. 2014, 6, 10716–10732.

- Simpson, J.; Smith, T.; Wooster, M. Assessment of Errors Caused by Forest Vegetation Structure in Airborne LiDAR-Derived DTMs. Remote Sens. 2017, 9, 1101.

- Laharnar, B. The Roman Stronghold at Nadleški Hrib, Notranjska Region. Arheol. Vestn. 2013, 64, 123–147.

- Dong, P.; Chen, Q. Basics of LiDAR Data Processing. In LiDAR Remote Sensing and Applications; CRC Press, Taylor & Francis Group: Boca Raton, FL, USA, 2018; pp. 41–62. ISBN 978-1-4822-4301-7.

- Moore, I.D.; Grayson, R.B.; Ladson, A.R. Digital Terrain Modelling: A Review of Hydrological, Geomorphological, and Biological Applications. Hydrol. Process. 1991, 5, 3–30.

- Shepard, D. A Two-Dimensional Interpolation Function for Irregularly-Spaced Data. In ACM ’68, Proceedings of the 1968 23rd ACM National Conference, USA, January 1968; ACM Press: New York, NY, USA, 1968; pp. 517–524.

- Chen, Z.; Gao, B.; Devereux, B. State-of-the-Art: DTM Generation Using Airborne LIDAR Data. Sensors 2017, 17, 150.

- Mesa-Mingorance, J.L.; Ariza-López, F.J. Accuracy Assessment of Digital Elevation Models (DEMs): A Critical Review of Practices of the Past Three Decades. Remote Sens. 2020, 12, 2630.

- Guo, Q.; Li, W.; Yu, H.; Alvarez, O. Effects of Topographic Variability and Lidar Sampling Density on Several DEM Interpolation Methods. Photogramm. Eng. Remote Sens. 2010, 76, 701–712.

- De Boer, A.G.; Laan, W.N.; Waldus, W.; Van Zijverden, W.K. LiDAR-based surface height measurements: Applications in archaeology. In Beyond Illustration: 2D and 3D Digital Technologies as Tools for Discovery in Archaeology; Frischer, B., Dakouri-Hild, A., Eds.; Archaeopress: Oxford, UK, 2008; pp. 76–84, 154–156. ISBN 978-1-4073-0292-8.

- Fernandez-Diaz, J.; Carter, W.; Shrestha, R.; Glennie, C. Now You See It. Now You Don’t: Understanding Airborne Mapping LiDAR Collection and Data Product Generation for Archaeological Research in Mesoamerica. Remote Sens. 2014, 6, 9951–10001.

- Riley, M.A.; Tiffany, J.A. Using LiDAR Data to Locate a Middle Woodland Enclosure and Associated Mounds, Louisa County, Iowa. J. Archaeol. Sci. 2014, 52, 143–151.

- Rochelo, M.J.; Davenport, C.; Selch, D. Revealing Pre-Historic Native American Belle Glade Earthworks in the Northern Everglades Utilizing Airborne LiDAR. J. Archaeol. Sci. Rep. 2015, 2, 624–643.

- Bater, C.W.; Coops, N.C. Evaluating Error Associated with Lidar-Derived DEM Interpolation. Comput. Geosci. 2009, 35, 289–300.

- Montealegre, A.; Lamelas, M.; Riva, J. Interpolation Routines Assessment in ALS-Derived Digital Elevation Models for Forestry Applications. Remote Sens. 2015, 7, 8631–8654.

- Yoëli, P. Analytical Hill Shading. Surv. Mapp. 1965, 25, 573–579.

- Kokalj, Ž.; Zakšek, K.; Oštir, K. Application of Sky-View Factor for the Visualisation of Historic Landscape Features in Lidar-Derived Relief Models. Antiquity 2011, 85, 263–273.

- Doneus, M. Openness as Visualization Technique for Interpretative Mapping of Airborne Lidar Derived Digital Terrain Models. Remote Sens. 2013, 5, 6427–6442.

- Guyot, A.; Hubert-Moy, L.; Lorho, T. Detecting Neolithic Burial Mounds from LiDAR-Derived Elevation Data Using a Multi-Scale Approach and Machine Learning Techniques. Remote Sens. 2018, 10, 225.

- Devereux, B.J.; Amable, G.S.; Crow, P. Visualisation of LiDAR Terrain Models for Archaeological Feature Detection. Antiquity 2008, 82, 470–479.

- Hesse, R. LiDAR-Derived Local Relief Models—A New Tool for Archaeological Prospection. Archaeol. Prospect. 2010, 17, 67–72.

- Challis, K.; Forlin, P.; Kincey, M. A Generic Toolkit for the Visualization of Archaeological Features on Airborne LiDAR Elevation Data. Archaeol. Prospect. 2011, 18, 279–289.

- Guyot, A.; Lennon, M.; Hubert-Moy, L. Objective Comparison of Relief Visualization Techniques with Deep CNN for Archaeology. J. Archaeol. Sci. Rep. 2021, 38, 103027.

- Štular, B.; Kokalj, Ž.; Oštir, K.; Nuninger, L. Visualization of Lidar-Derived Relief Models for Detection of Archaeological Features. J. Archaeol. Sci. 2012, 39, 3354–3360.

- Bennett, R.; Welham, K.; Hill, R.A.; Ford, A. A Comparison of Visualization Techniques for Models Created from Airborne Laser Scanned Data. Archaeol. Prospect. 2012, 19, 41–48.

- Kokalj, Ž.; Somrak, M. Why Not a Single Image? Combining Visualizations to Facilitate Fieldwork and On-Screen Mapping. Remote Sens. 2019, 11, 747.

- Tu, T.-M.; Su, S.-C.; Shyu, H.-C.; Huang, P.S. A New Look at IHS-like Image Fusion Methods. Inf. Fusion 2001, 2, 177–186.

- Wang, Z.; Ziou, D.; Armenakis, C.; Li, D.; Li, Q. A Comparative Analysis of Image Fusion Methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1391–1402.

- Zhang, X.; Huang, W.; Wang, Q.; Li, X. SSR-NET: Spatial-Spectral Reconstruction Network for Hyperspectral and Multispectral Image Fusion. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5953–5965.

- Zhang, H.; Huang, B. A New Look at Image Fusion Methods from a Bayesian Perspective. Remote Sens. 2015, 7, 6828–6861.

- Lindsay, J.B.; Cockburn, J.M.H.; Russell, H.A.J. An Integral Image Approach to Performing Multi-Scale Topographic Position Analysis. Geomorphology 2015, 245, 51–61.

- Verhoeven, G.J. Mesh Is More-Using All Geometric Dimensions for the Archaeological Analysis and Interpretative Mapping of 3D Surfaces. J. Archaeol. Method Theory 2017, 24, 999–1033.

- Verhoeven, G.; Doneus, M.; Doneus, N.; Štuhec, S. From Pixel to Mesh—Accurate and Straightforward 3D Documentation of Cultural Heritage from the Cres/Lošinj Archipelago. Izd. Hrvat. Arheol. Društva 2015, 30, 165–176.

- QGIS Project. QGIS Desktop 3.16 User Guide. 2021. Available online: https://docs.qgis.org/3.16/en/docs/user_manual/index.html (accessed on 21 June 2021).

- Neteler, M.; Bowman, M.H.; Landa, M.; Metz, M. GRASS GIS: A Multi-Purpose Open Source GIS. Environ. Model. Softw. 2012, 31, 124–130.

- GDAL/OGR Contributors. GDAL/OGR Geospatial Data Abstraction software Library. 2021. Available online: https://gdal.org (accessed on 21 June 2021).

- Isenburg, M. Efficient LiDAR Processing Software (Version 170322). Rapidlasso. 2021. Available online: https://rapidlasso.com/lastools/ (accessed on 21 June 2021).

- Lindsay, J.B. Whitebox Tools. 2020. Available online: https://www.whiteboxgeo.com (accessed on 21 June 2021).

- Henry, E.R.; Wright, A.P.; Sherwood, S.C.; Carmody, S.B.; Barrier, C.R.; Van de Ven, C. Beyond Never-Never Land: Integrating LiDAR and Geophysical Surveys at the Johnston Site, Pinson Mounds State Archaeological Park, Tennessee, USA. Remote Sens. 2020, 12, 2364.

- Evans, D. Airborne Laser Scanning as a Method for Exploring Long-Term Socio-Ecological Dynamics in Cambodia. J. Archaeol. Sci. 2016, 74, 164–175.

- Carter, A.; Heng, P.; Stark, M.; Chhay, R.; Evans, D. Urbanism and Residential Patterning in Angkor. J. Field Archaeol. 2018, 43, 492–506.

- Ringle, W.M.; Negrón, T.G.; Ciau, R.M.; Seligson, K.E.; Fernandez-Diaz, J.C.; Zapata, D.O. Lidar Survey of Ancient Maya Settlement in the Puuc Region of Yucatan, Mexico. PLoS ONE 2021, 16, e0249314.

- Šprajc, I.; Dunning, N.P.; Štajdohar, J.; Gómez, Q.H.; López, I.C.; Marsetič, A.; Ball, J.W.; Góngora, S.D.; Olguín, O.Q.E.; Esquivel, A.F.; et al. Ancient Maya Water Management, Agriculture, and Society in the Area of Chactun, Campeche, Mexico. J. Anthropol. Archaeol. 2021, 61, 101261.

- Mattivi, P.; Franci, F.; Lambertini, A.; Bitelli, G. TWI Computation: A Comparison of Different Open Source GISs. Open Geospat. Data Softw. Stand. 2019, 4, 6.

- Eichert, S.; Štular, B.; Lozić, E. Open LiDAR Tools. 2021. Available online: https://github.com/stefaneichert/OpenLidarToolbox (accessed on 21 June 2021).

- Ackoff, R.L. From Data to Wisdom. J. Appl. Syst. Anal. 1989, 16, 3–9.

- Liew, A. Understanding Data, Information, Knowledge and Their Inter-Relationships. J. Knowl. Manag. Pract. 2007, 8, 1–16.

- Chen, M.; Ebert, D.; Hagen, H.; Laramee, R.S.; Van Liere, R.; Ma, K.-L.; Ribarsky, W.; Scheuermann, G.; Silver, D. Data, Information, and Knowledge in Visualization. IEEE Comput. Graph. Appl. 2008, 29, 12–19.

- Darvill, T. Pathways to a Panoramic Past: A Brief History of Landscape Archaeology in Europe. In Handbook of Landscape Archaeology; David, B., Thomas, J., Eds.; Left Coast Press, Inc.: Walnut Creek, CA, USA, 2008; pp. 60–76. ISBN 978-1-59874-294-7.

- Latour, B. Ethnography of a “High-Tech” Case. About Aramis. In Technological Choices: Transformation in Material Cultures Since the Neolithic; Lemonnier, P., Ed.; Routledge: London, UK; New York, NY, USA, 1993; pp. 372–398. ISBN 0-415-07331-6.

- Petrie, G.; Toth, C.K. Airborne and Spaceborne Laser Profilers and Scanners. In Topographic Laser Ranging and Scanning: Principles and Processing; Shan, J., Toth, C.K., Eds.; CRC Press, Taylor & Francis Group: Boca Raton, FL, USA; London, UK; New York, NY, USA, 2018; pp. 89–157. ISBN 978-1-4987-7227-3.

- Mlekuž, D. Airborne Laser Scanning and Landscape Archaeology. Opvscula Archaeol. 2018, 39, 85–95.

- Huggett, J. A Manifesto for an Introspective Digital Archaeology. Open Archaeol. 2015, 1, 86–95.

- Morgan, C.; Wright, H. Pencils and Pixels: Drawing and Digital Media in Archaeological Field Recording. J. Field Archaeol. 2018, 43, 136–151.

- Dular, J. Gradivo Za Topografijo Dolenjske, Posavja in Bele Krajine v Železni Dobi; Založba ZRC: Ljubljana, Slovenia, 2021; ISBN 978-961-05-0510-5.

- Dular, J. Nova Spoznanja o Poselitvi Dolenjske v Starejši Železni Dobi. Arheol. Vestn. 2020, 71, 395–420.

- Črešnar, M.; Mušič, B.; Horn, B.; Vinazza, M.; Leskovar, T.; Harris, S.E.; Batt, C.M.; Dolinar, N. Interdisciplinary Research of the Early Iron Age Iron Production Centre Cvinger near Dolenjske Toplice (Slovenia) = Interdisciplinarne Raziskave Železarskega Središča Cvinger Pri Dolenjskih Toplicah Iz Starejše Železne Dobe. Arheol. Vestn. 2020, 71, 529–554.

- Mele, M.; Mušič, B.; Horn, B. Poselitev Doline Reke Solbe v Pozni Bronasti in Starejši Železni Dobi—Nove Raziskave Graškega Joanneuma = Settlements in the Sulm River Valley during the Late Bronze Age and Early Iron Age—New Research of the Universalmuseum Joanneum. Graz. Arheol. Vestn. 2019, 70, 353–380.

- Hoskins, W.G. The Making of the English Landscape; Hodder and Stoughton: London, UK, 1954; ISBN 978-0-14-015410-8.

- Johnson, M. Landscape studies: The future of the field. In Landscape Archaeology between Art and Science. From a Multi- to an Interdisciplinary Approach; Kluiving, S.J., Guttmann-Bond, E.B., Eds.; Amsterdam University Press: Amsterdam, The Netherlands, 2012; pp. 515–525. ISBN 978 90 4851 607 0.

- Ingold, T. Culture on the ground: The world perceived through the feet. In Being Alive: Essays on Movement, Knowledge and Description; Routledge: New York, NY, USA, 2011; pp. 33–50. ISBN 978-0-415-57683-3.

- Ingold, T. Tools, minds and machines: An excursion in the philosophy of technology. In The Perception of the Environment. Essays on Livelihood, Dwelling and Skill; Routledge: New York, NY, USA, 2000; pp. 294–311. ISBN 0-203-46602-0.

- Ingold, T. Making: Anthropology, Archaeology, Art and Architecture; Routledge: New York, NY, USA, 2013; ISBN 978-0-415-56722-0.

- Cowley, D.; Verhoeven, G.; Traviglia, A. Editorial for Special Issue: “Archaeological Remote Sensing in the 21st Century: (Re)Defining Practice and Theory”. Remote Sens. 2021, 13, 1431.

- De Saussure, F. Immutability and Mutability of the Sign. In Course in General Linguistics; Meisel, P., Haun, S., Eds.; Columbia University Press: New York, NY, USA, 2011; pp. 71–79. ISBN 978-0-231-52795-8.

- Pfeifer, N.; Köstli, A.; Kraus, K. Interpolation and Filtering of Laser Scanner Data—Implementation and First Results. Int. Arch. Photogramm. Remote Sens. 1998, 32, 153–159.

- Evans, J.S.; Hudak, A.T. A Multiscale Curvature Algorithm for Classifying Discrete Return LiDAR in Forested Environments. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1029–1038.

- Yang, Z.; Jiang, W.; Xu, B.; Zhu, Q.; Jiang, S.; Huang, W. A Convolutional Neural Network-Based 3D Semantic Labeling Method for ALS Point Clouds. Remote Sens. 2017, 9, 936.

| Phase | Step | Workflow Step | Arch. Engagement |
|---|---|---|---|
| 1. Raw data acquisition & Processing | 1.1 | Project planning | + |
| | 1.2 | System calibration | o |
| | 1.3 | Data acquisition | o |
| | 1.4 | Registering | + |
| | 1.5 | Strip adjustment | o |
| 2. Point cloud processing & Derivation of products | 2.1 | Automatic ground point classification | ++ |
| | 2.2 | Object-type classification | ++ |
| | 2.3 | Manual reclassification | +++ |
| | 2.4 | DFM interpolation | + |
| | 2.5 | Enhanced visualization | +++ |
| 3. Archaeological interpretation | 3.1 | Data integration | ++ |
| | 3.2 | Interpretative mapping | +++ |
| | 3.3 | Ground assessment | +++ |
| | 3.4 | ‘Deep’ interpretation | +++ |
| | 3.5 | Automated mapping | ++ |
| 4. Dissemination & Archiving | 4.1 | Data management | + |
| | 4.2 | Dissemination | + |
| | 4.3 | Archiving | + |

| Abbr. | Visualization | Arch. features: e. | Arch. features: p. e. | Arch. features: s. f. | Arch. features: s. o. | Relief | Isotropic | Saturation |
|---|---|---|---|---|---|---|---|---|
| HSD | Hillshading | 1 | 1 | 2 | 3 | 2 | N | B/W |
| SVF | Sky view factor | 1 | 2 | 3 | 3 | 3 | Y | N |
| OPP | Openness (positive) | 1 | 3 | 3 | 3 | 1 | Y | B |
| SIL | Sky illumination | 1 | 1 | 2 | 3 | 3 | Y | W |
| SLP | Slope | 2 | 2 | 2 | 3 | 3 | Y | W |
| LRM | Local relief model | 3 | 2 | 2 | 2 | 2 | Y | N |
| LDO | Local dominance | 3 | 2 | 2 | 2 | 2 | Y | N |
| DME | Difference f/mean elev. | 3 | 2 | 2 | 2 | 2 | Y | N |
| VAT | Vis. f/arch. topography | 2 | 3 | 3 | 3 | 3 | Y | N |
| MTP | Multiscale topo. position | 3 | 1 | 2 | 2 | 1–3 ¹ | Y | N/A |
| EMS | Enhanced multiscale topographic position | 3 | 3 | 2 | 3 | 1–3 ¹ | Y | N/A |

| Step | Type | Paradata |
|---|---|---|
| (2.1) Automatic ground point classification | Software | LAStools 1.4 Rapidlasso GmbH |
| | Filter | lasground_new |
| | Settings | st: 5; g:/; off: 0.05; s+: 1.0; s−: 1.0; b: no; terrain type: wilderness; pre-processing: ultra fine |
| (2.2) Object-type classification | Software | LAStools 1.4 Rapidlasso GmbH |
| | Filter | lasheight |
| | Settings | classify between: 0.5 and 2 as 3; classify between: 2 and 5 as 4; classify above: 5 as 5 |
| | Software | LAStools 1.4 Rapidlasso GmbH |
| | Filter | lasclassify |
| | Settings | building planarity: 0.1; forest ruggedness: 0.4; ground offset: 1.8 |
| (2.3) Manual reclassification | Yes | See ŠtuLoz2020 |
| (2.4) DFM interpolation | Software | Surfer 19.2.x, Golden Software |
| | Filter | Kriging |
| | Settings | Kriging type: point; Drift: none; No. sectors: 4; Max. all sectors: 64; Max. each sector: 16; Min. all sectors: 8; Radius 1: 20; Radius 2: 20; cell size: 0.25 m. |
| (2.5) Enhanced visualization | Software | RVT 2.2.1, ZRC SAZU |
| | Filter | Sky view factor |
| | Settings | No. search directions: 32; Search radius: 10; Remove noise: no; scale: linear stretch between 0.6 and 1.0. |
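
The lasheight settings in the table above amount to binning points by their height above ground. A minimal sketch of that binning follows. It assumes heights in metres, half-open bands (lower bound inclusive, upper bound exclusive), and that points outside every band keep their current class. The names `HEIGHT_BANDS` and `classify_by_height` are hypothetical, and this is an illustration of the thresholds, not the LAStools implementation.

```python
# Height-above-ground bands copied from the lasheight settings row:
# (lower bound, upper bound or None for open-ended, class code).
# In the LAS specification, codes 3, 4 and 5 denote low, medium and
# high vegetation.
HEIGHT_BANDS = [
    (0.5, 2.0, 3),
    (2.0, 5.0, 4),
    (5.0, None, 5),
]


def classify_by_height(height_m, current_class, bands=HEIGHT_BANDS):
    """Return the class code for a point at `height_m` above ground.

    Assumption: bands are half-open [lower, upper); a point that falls
    in no band keeps `current_class` unchanged.
    """
    for lower, upper, code in bands:
        if height_m >= lower and (upper is None or height_m < upper):
            return code
    return current_class


# Example: four points given as (height above ground, current class).
points = [(0.1, 2), (1.0, 1), (3.2, 1), (7.5, 1)]
new_classes = [classify_by_height(h, c) for h, c in points]
```

Keeping the bands in a plain list makes it easy to swap in a different set of thresholds without touching the function.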

| Abbr. | Visualization Technique | Primary Use |
|---|---|---|
| SVF | Sky view factor | Standing objects, relief |
| OPP | Openness (positive) | Standing f., partially embedded f.; steep slopes |
| DME | Difference f/mean elev. | Embedded features; flats |
| VAT | Vis. f/arch. topography | Overall performance; dissemination |

| Step | Type | Paradata |
|---|---|---|
| (2.1) Automatic ground point classification | Software | LAStools 1.4 Rapidlasso GmbH |
| | Filter | lasground_new |
| | Settings | St: 5; g:/; Off: 0.05; s+: 1.0; s−: 1.0; b: no; Terrain type: city; Pre-processing: fine |
| | Software | LAStools 1.4 Rapidlasso GmbH |
| | Filter | lasground_new |
| | Settings | St: 5; g:/; Off: 0.05; s+: 1.0; s−: 1.0; b: no; Terrain type: wilderness; Pre-processing: ultra fine |
| (2.2) Object-type classification | Software | LAStools 1.4 Rapidlasso GmbH |
| | Filter | lasheight |
| | Settings | Classify between: −0.2 and 0.2 as 2; Classify between: 0.5 and 2 as 3; Classify above: 2 as 5 |
| | Software | LAStools 1.4 Rapidlasso GmbH |
| | Filter | lasclassify |
| | Settings | Building planarity: 0.1; Forest ruggedness: 0.4; Ground offset: 1.8 |
| (2.3) Manual reclassification | Yes | See Figure 7 |
| (2.4) DFM interpolation | Software | Open LiDAR Toolbox |
| | Filter | Hybrid |
| | Settings | Cell size: 0.5 m |
| (2.5) Enhanced visualization | Software | Relief Visualization Toolbox 0.6.x (QGIS plug-in) |
| | Filter | Sky view factor |
| | Settings | No. search directions: 32; Search radius: 10; Remove noise: no; Scale: linear stretch between 0.6 and 1.0. |
| | Software | Relief Visualization Toolbox 0.6.x (QGIS plug-in) |
| | Filter | Openness–positive |
| | Settings | No. search directions: 32; Search radius: 10; Remove noise: no; Scale: linear stretch between 0.6 and 1.0. |
| | Software | Relief Visualization Toolbox 0.6.x (QGIS plug-in) |
| | Filter | Blender |
| | Settings | Combination: Archaeological (VAT); Terrain type: general |
| | Software | Whitebox tools 1.2 (QGIS plug-in) |
| | Filter | DiffFromMeanElev |
| | Settings | Filter X dimension: 10; Filter Y dimension: 10 |
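
The last row of the table above applies a difference-from-mean-elevation filter with a 10 × 10 filter dimension. A minimal sketch of the underlying idea follows. It assumes a DEM held as a list of rows, a square window that includes the centre cell, and a window clipped at the raster edge. The function name `diff_from_mean_elev` and the `half_window` parameter are hypothetical, and this is not the Whitebox tools implementation.

```python
def diff_from_mean_elev(dem, half_window=1):
    """Return each cell's elevation minus the mean of its neighbourhood.

    Assumptions: `dem` is a non-empty list of equal-length rows; the
    neighbourhood is a (2 * half_window + 1)-cell square that includes
    the centre cell and is clipped where it crosses the raster edge.
    """
    rows, cols = len(dem), len(dem[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            r0, r1 = max(0, r - half_window), min(rows, r + half_window + 1)
            c0, c1 = max(0, c - half_window), min(cols, c + half_window + 1)
            window = [dem[i][j] for i in range(r0, r1) for j in range(c0, c1)]
            out[r][c] = dem[r][c] - sum(window) / len(window)
    return out


# Example: a single raised cell on flat ground stands out as positive,
# while its neighbours become slightly negative.
dem = [
    [0.0, 0.0, 0.0],
    [0.0, 9.0, 0.0],
    [0.0, 0.0, 0.0],
]
dme = diff_from_mean_elev(dem, half_window=1)
```

Because `half_window` always yields an odd window size, a value of 5 (an 11 × 11 window) would be the nearest match to the 10 × 10 setting under this sketch's assumptions. The brute-force loop is meant for readability only; it would be slow on a full-size raster.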

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Štular, B.; Eichert, S.; Lozić, E. Airborne LiDAR Point Cloud Processing for Archaeology. Pipeline and QGIS Toolbox. Remote Sens. 2021, 13, 3225. https://doi.org/10.3390/rs13163225