Point Cloud Classification Algorithm Based on the Fusion of the Local Binary Pattern Features and Structural Features of Voxels

Abstract

1. Introduction

- (1)

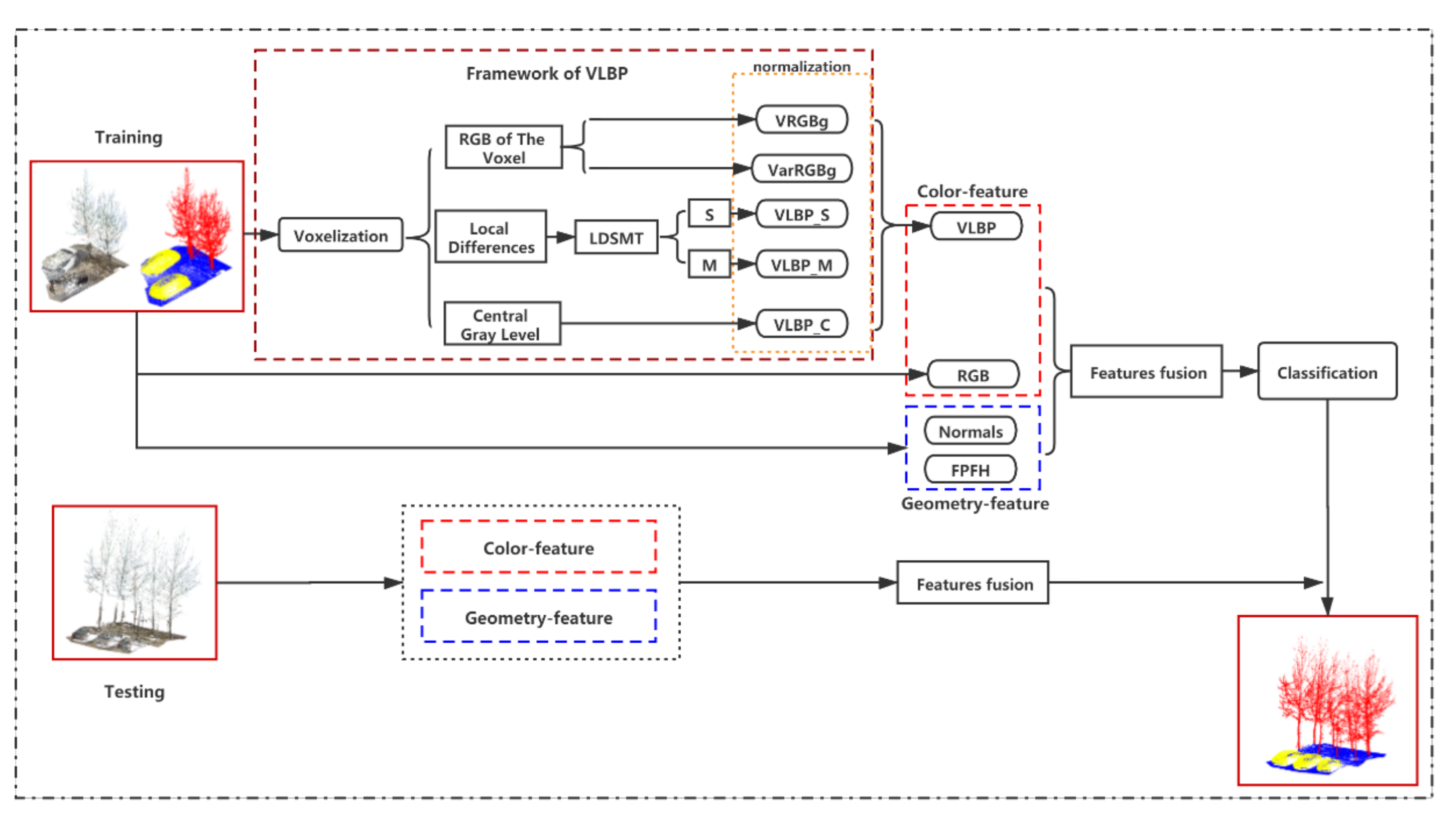

- A point cloud color feature descriptor is proposed, that is, a local binary pattern feature based on voxels (VLBP). This feature describes the local color texture information of the point cloud and has the characteristic that the grayscale does not change with any single transformation. The expression of this grayscale texture information can effectively improve the classification effect of the point cloud.

- (2) A point cloud classification algorithm based on the fusion of point cloud color and geometric structure is proposed. The algorithm uses the RGB values and the proposed VLBP feature as the color features of the point cloud, merges them with the geometric structure features to construct a more discriminative and robust fused feature, and then classifies the point cloud with a random forest classifier.
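The invariance claimed in (1) comes from the comparison step of a local binary pattern: each neighbor is thresholded against the center value, so any strictly increasing grayscale transformation leaves the resulting code unchanged. The sketch below assumes the classic LBP thresholding of Ojala et al. applied to a plain list of neighbor gray values; it is not the exact VLBP construction of Section 3.2, and the function names and sample values are made up for illustration.

```python
"""Classic local binary pattern (LBP) code for a center value and its neighbors.

Assumption: generic LBP thresholding (Ojala et al.), not the exact VLBP
construction; neighbor order is simply the order of the input list.
"""

from typing import Callable, Sequence


def lbp_code(center: float, neighbors: Sequence[float]) -> int:
    """Set bit k when neighbors[k] >= center and return the packed integer."""
    code = 0
    for k, g in enumerate(neighbors):
        if g >= center:
            code |= 1 << k
    return code


def lbp_after_transform(center: float, neighbors: Sequence[float],
                        f: Callable[[float], float]) -> int:
    """LBP code after applying the grayscale transform f to every value."""
    return lbp_code(f(center), [f(g) for g in neighbors])


if __name__ == "__main__":
    center = 120.0
    neighbors = [100.0, 130.0, 120.0, 90.0, 200.0, 119.0, 121.0, 60.0]
    print(lbp_code(center, neighbors))  # 86
    # Strictly increasing transforms preserve every >= comparison.
    print(lbp_after_transform(center, neighbors, lambda g: 1.5 * g + 10))  # 86
    print(lbp_after_transform(center, neighbors, lambda g: (g / 255) ** 0.5))  # 86
```

Only the ordering of gray values matters here; a non-monotonic transform such as g → (g − 128)² can flip comparisons and change the code.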

2. Related Work

3. Voxel-Based Shading Point Cloud Feature Descriptor (VLBP)

3.1. Voxelization

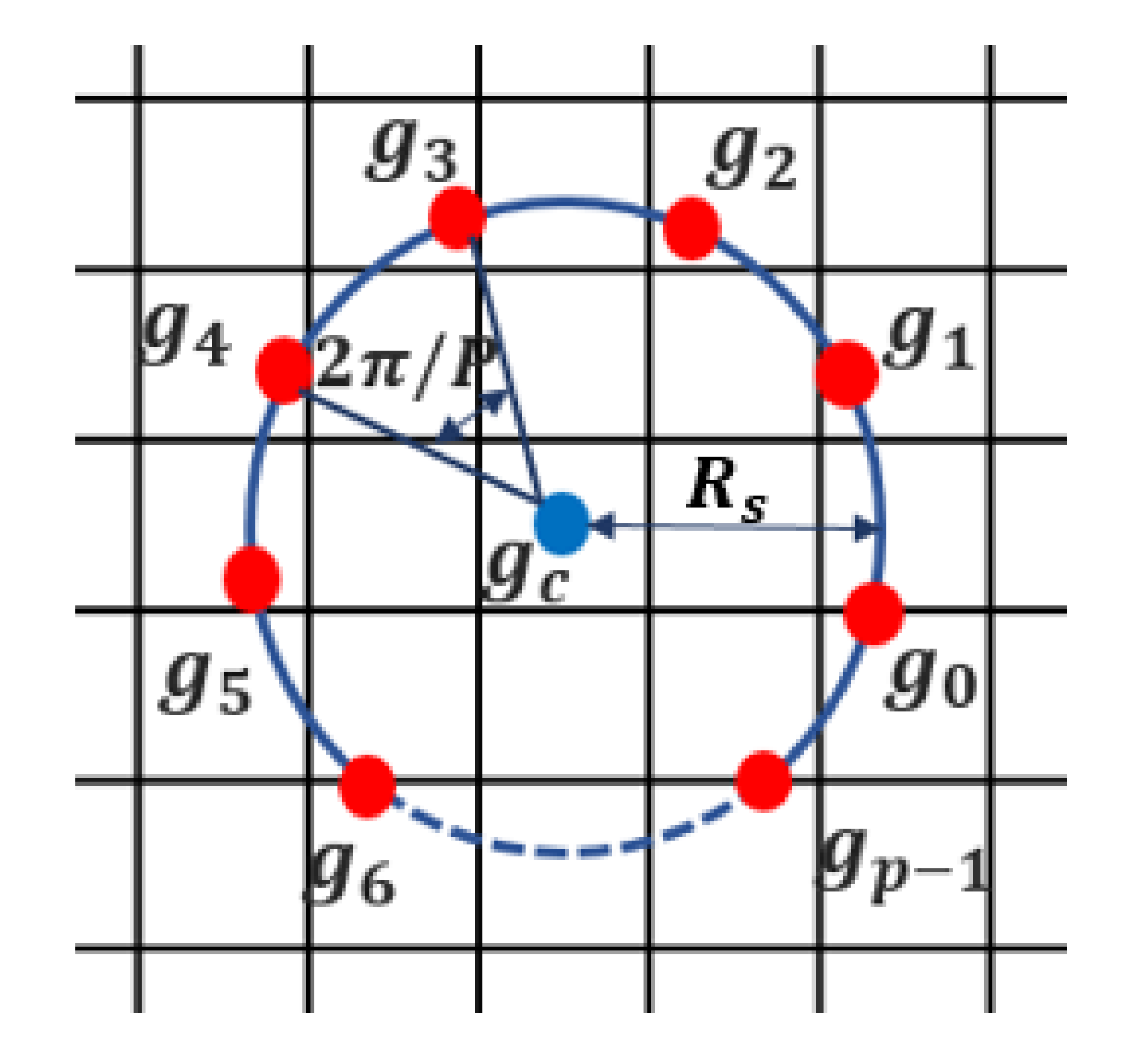

3.2. VLBP Descriptor Construction

3.2.1. Scale Invariance

3.2.2. Rotation Invariance

3.3. FVLBP Feature Vector of Voxel Histogram

- Given a search radius r2, take point p as the search center and use a k-d tree to perform a second radius nearest-neighbor search that finds all points within radius r2 of p.

- Find the VLBP_S and VLBP_M values of all points returned by this radius search, divide the value range into detection intervals, and determine the interval into which each point's VLBP_S and VLBP_M values fall. Here, a threshold divides the feature-value range into the intervals used for the histogram statistics.

- After traversing all neighboring points, record the number of values falling into each interval and create two new histogram features, VLBP_S(r) and VLBP_M(r), each with one dimension per interval. These two new features replace VLBP_S and VLBP_M.

- In the final VLBP feature of each voxel, VRGBg is the mean of the grayscale values computed from the R, G, and B values of the N points in voxel V, and VarRGBg is the variance of those grayscale values.

- Traverse all points in the point cloud to generate a VLBP feature for each point; then change the radius r of the first radius nearest-neighbor search, generate another set of VLBP features, and append it to the existing features. Repeat this step until the radius set for scale invariance is reached.
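The histogram steps above can be sketched in Python as follows. This is a minimal illustration under several assumptions that are not taken from the paper: the per-point VLBP_S and VLBP_M values are already available, the detection intervals are `n_bins` equal-width bins over a hypothetical range `[lo, hi]`, grayscale values use the ITU-R BT.601 luma weights, and a brute-force radius search stands in for the k-d tree (a real implementation might use `scipy.spatial.cKDTree.query_ball_point`). The callback `compute_vlbp` and all function names are hypothetical.

```python
"""FVLBP histogram steps sketched under stated assumptions (not the paper's code).

Assumptions: per-point VLBP_S / VLBP_M values are given; detection intervals are
n_bins equal-width bins over [lo, hi]; gray = BT.601 luma; brute-force radius
search replaces the k-d tree.
"""

import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]
RGB = Tuple[float, float, float]


def gray(rgb: RGB) -> float:
    """Hypothetical RGB -> gray conversion using ITU-R BT.601 luma weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def radius_neighbors(points: Sequence[Point], center: Point, r: float) -> List[int]:
    """Indices of all points within distance r of center (brute force)."""
    return [i for i, p in enumerate(points) if math.dist(p, center) <= r]


def interval_histogram(values: Sequence[float], lo: float, hi: float,
                       n_bins: int) -> List[int]:
    """Count values per equal-width interval of [lo, hi]; edge values are clamped."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for v in values:
        k = min(max(int((v - lo) / width), 0), n_bins - 1)
        counts[k] += 1
    return counts


def voxel_gray_stats(colors: Sequence[RGB]) -> Tuple[float, float]:
    """Mean (VRGBg-like) and population variance (VarRGBg-like) of voxel gray values."""
    values = [gray(c) for c in colors]
    mean = sum(values) / len(values)
    var = sum((x - mean) ** 2 for x in values) / len(values)
    return mean, var


def fvlbp_point(points: Sequence[Point], vlbp_s: Sequence[float],
                vlbp_m: Sequence[float], idx: int, r2: float,
                lo: float, hi: float, n_bins: int) -> List[int]:
    """Histograms of VLBP_S and VLBP_M over the r2-neighborhood of points[idx]."""
    nbrs = radius_neighbors(points, points[idx], r2)
    hist_s = interval_histogram([vlbp_s[i] for i in nbrs], lo, hi, n_bins)
    hist_m = interval_histogram([vlbp_m[i] for i in nbrs], lo, hi, n_bins)
    return hist_s + hist_m


def multiscale_fvlbp(points: Sequence[Point],
                     compute_vlbp: Callable[[float], Tuple[Sequence[float], Sequence[float]]],
                     radii: Sequence[float], r2: float,
                     lo: float, hi: float, n_bins: int) -> List[List[int]]:
    """Repeat the histogram step for each first-search radius r and append results.

    compute_vlbp(r) is a hypothetical callback returning per-point (VLBP_S, VLBP_M).
    """
    features: List[List[int]] = [[] for _ in points]
    for r in radii:
        vlbp_s, vlbp_m = compute_vlbp(r)
        for i in range(len(points)):
            features[i].extend(fvlbp_point(points, vlbp_s, vlbp_m, i, r2, lo, hi, n_bins))
    return features
```

Each radius in `radii` contributes `2 * n_bins` values per point, so the feature length grows linearly with the number of scales.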

4. Point Cloud Classification Based on Multifeature Fusion and Random Forest

4.1. Multifeature Fusion

4.2. Point Cloud Classification

5. Analysis of Experimental Results

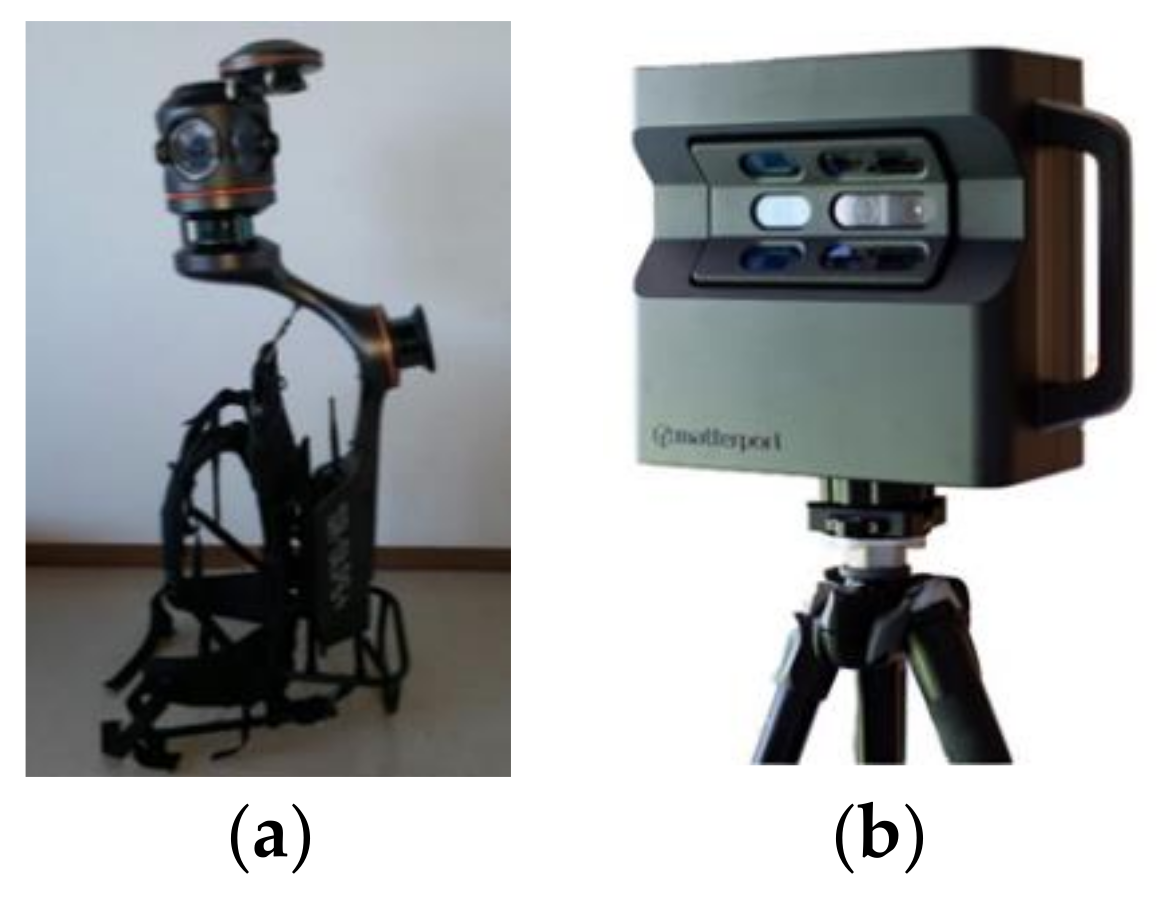

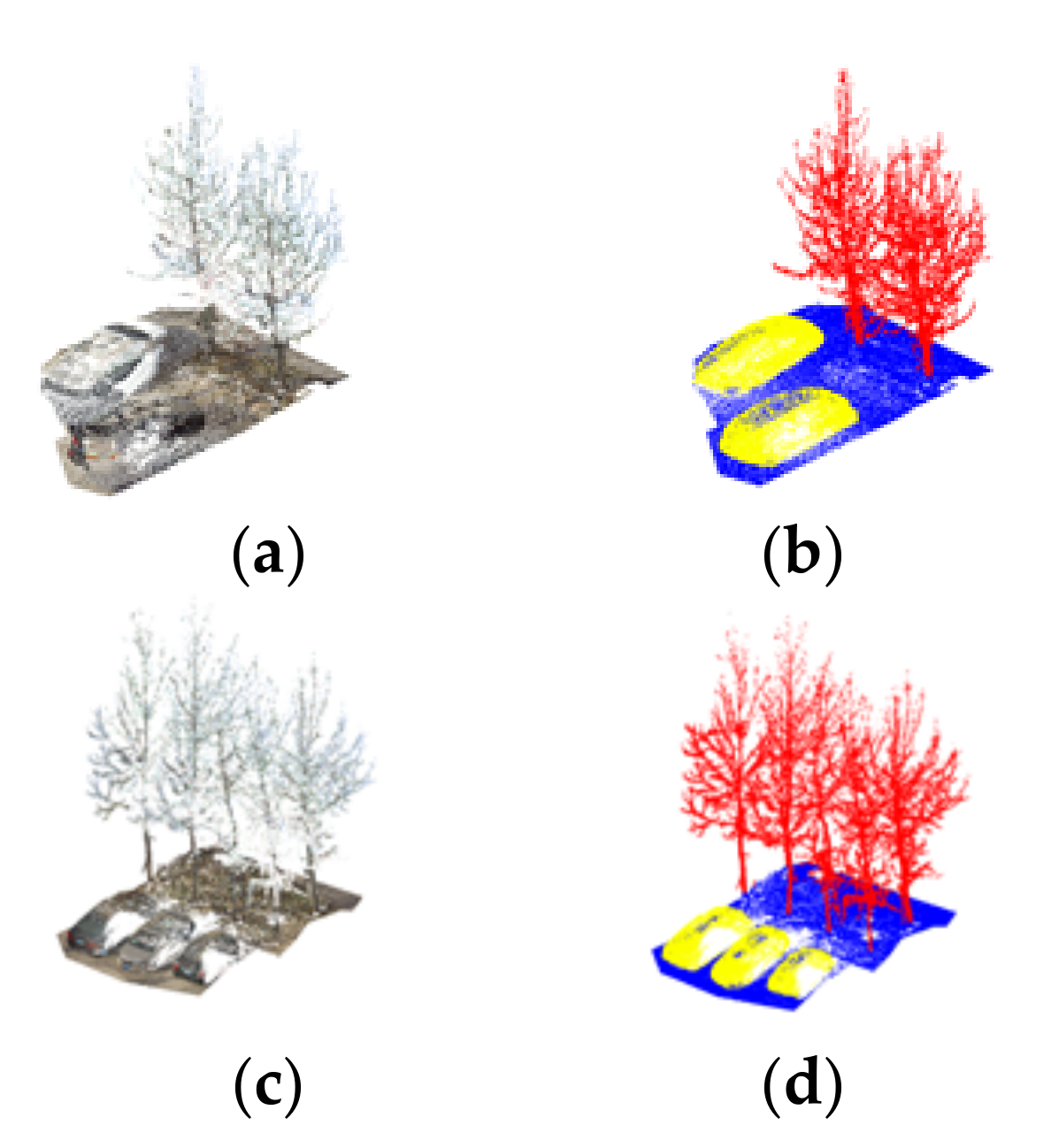

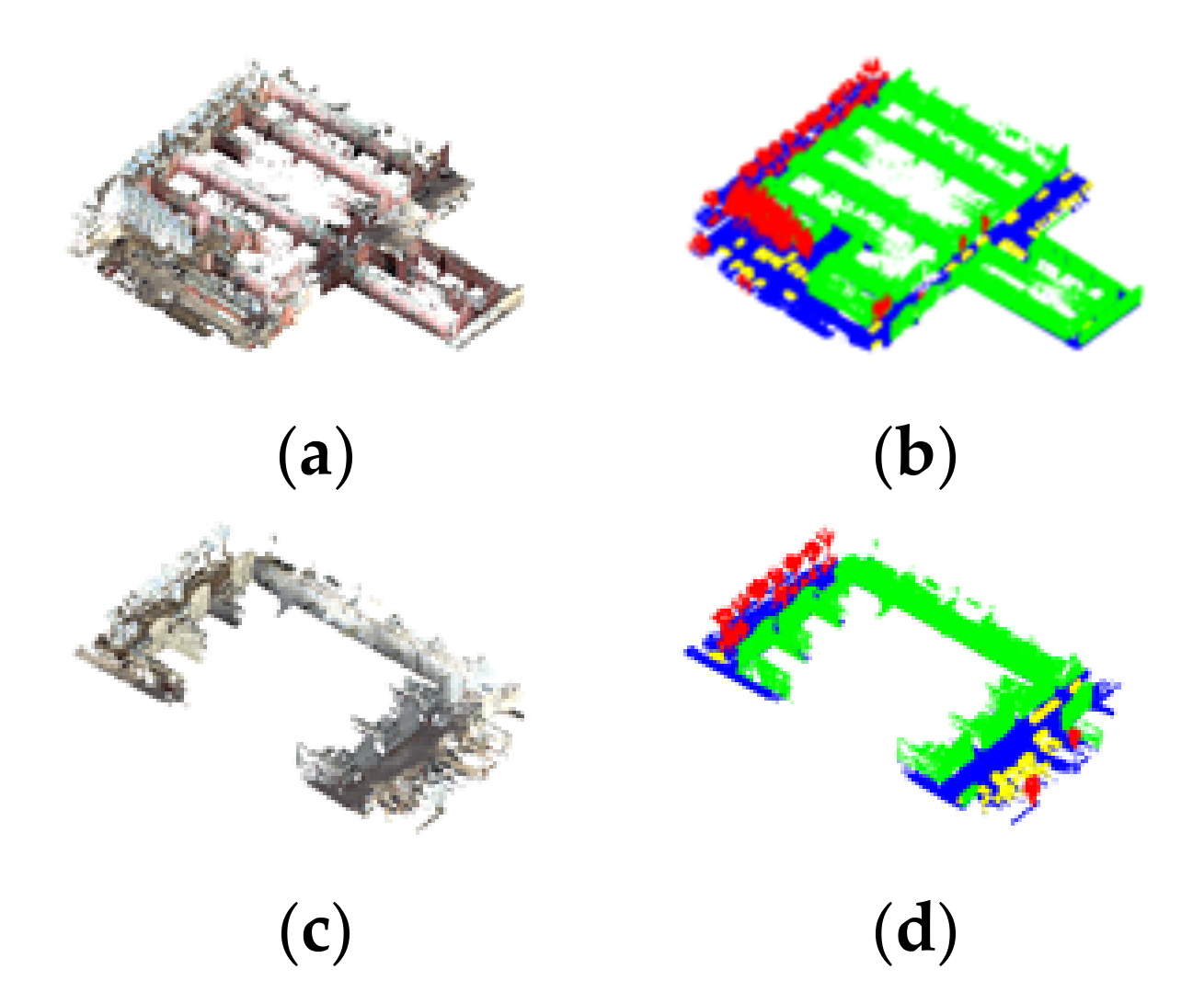

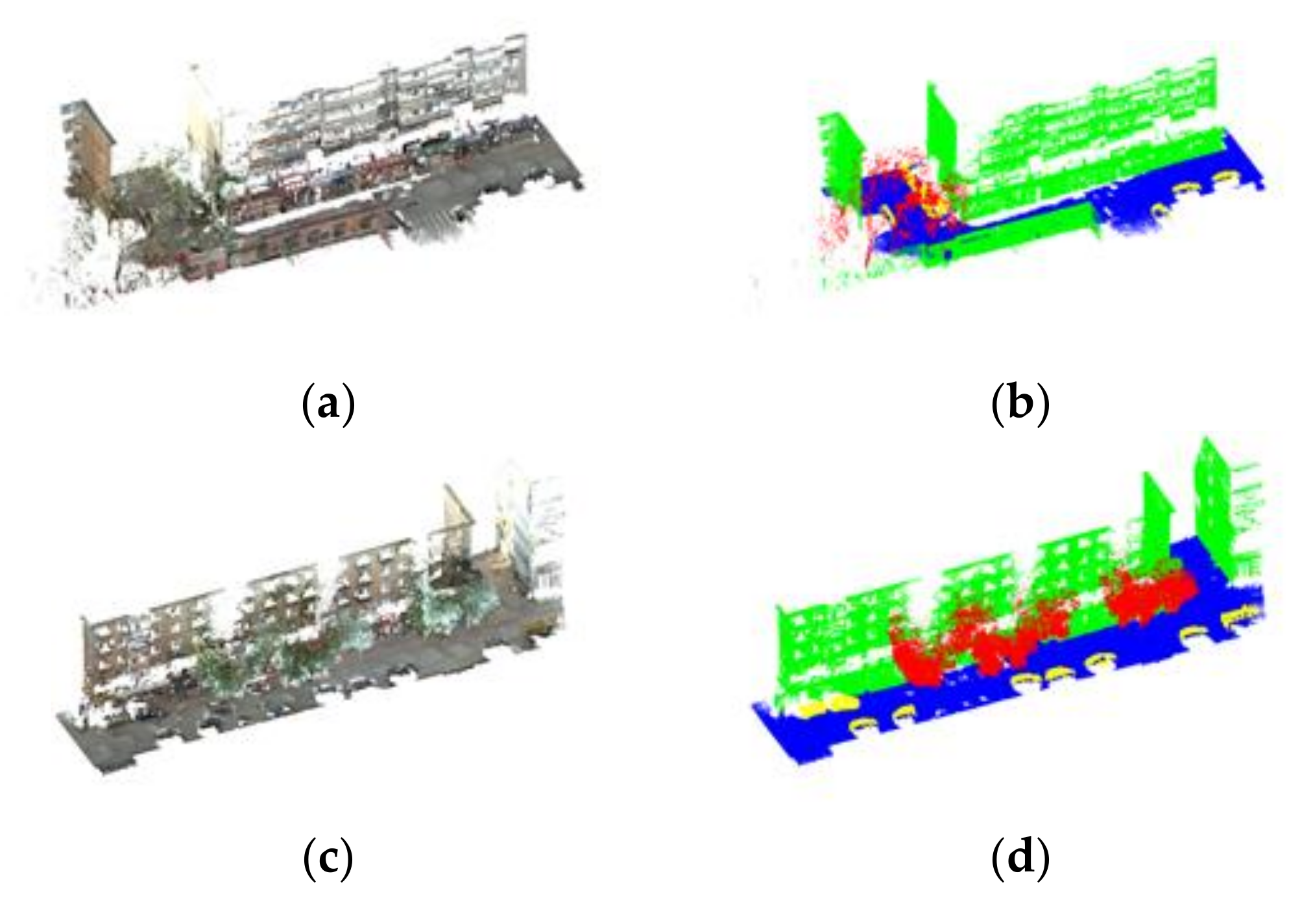

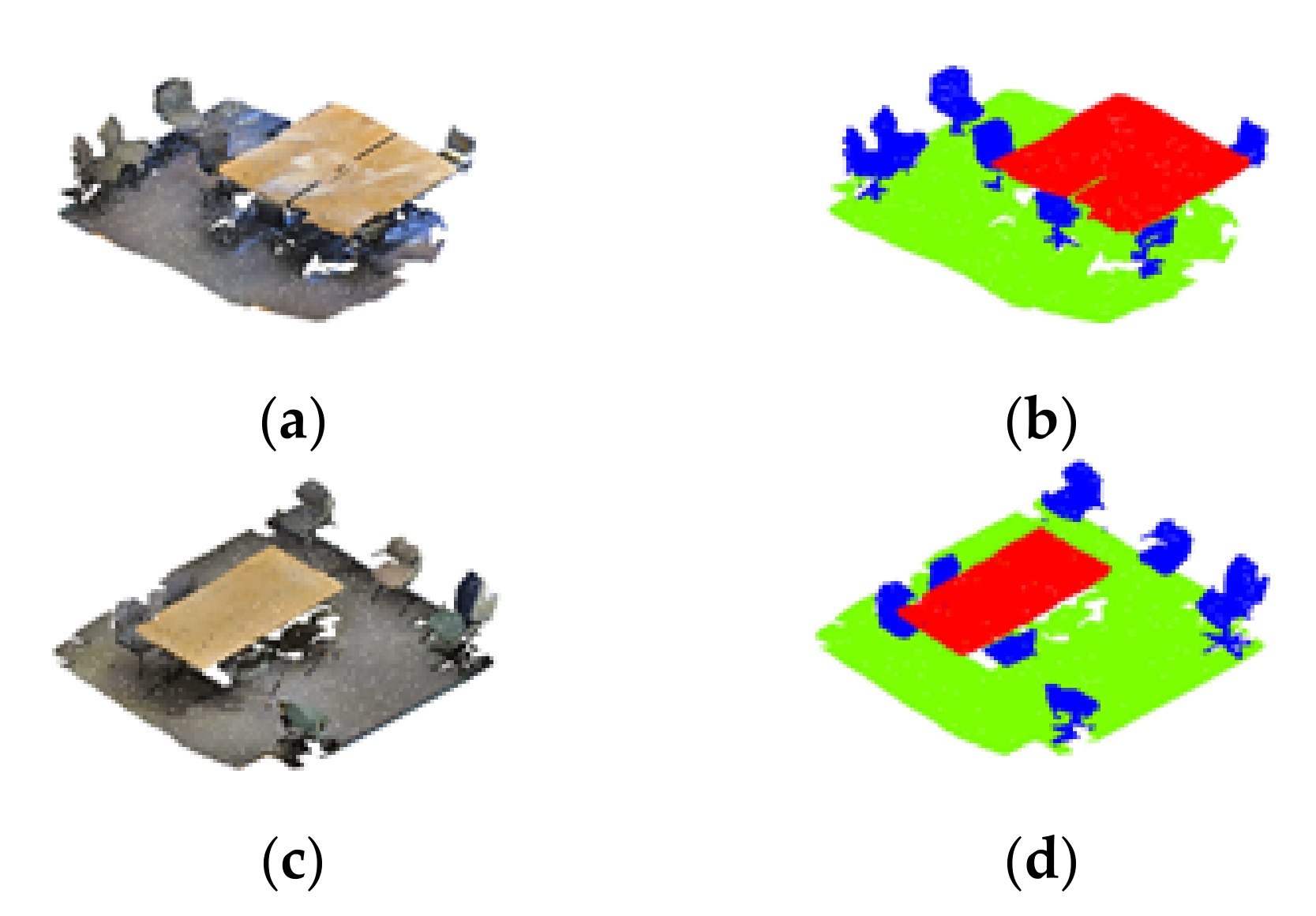

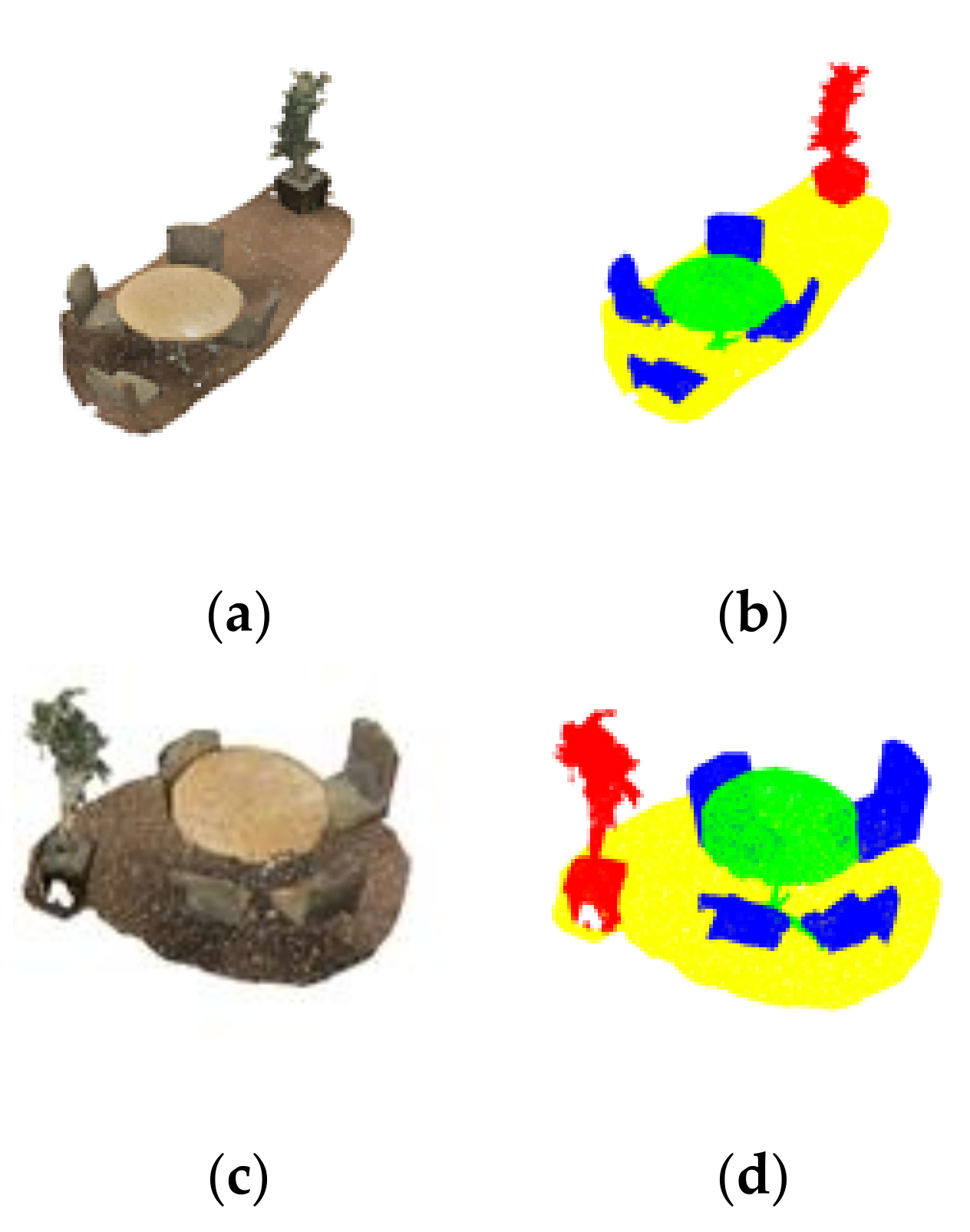

5.1. Experimental Data

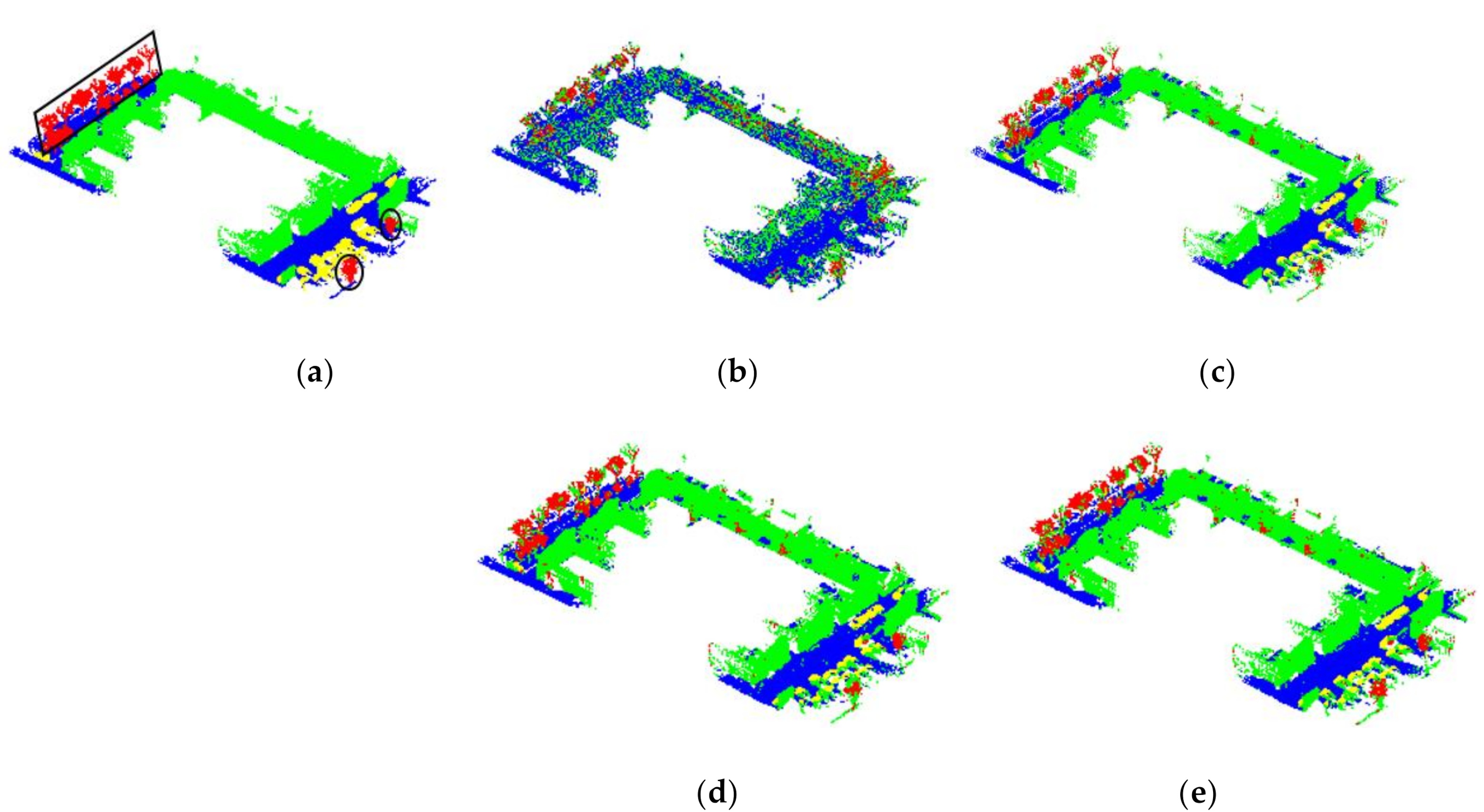

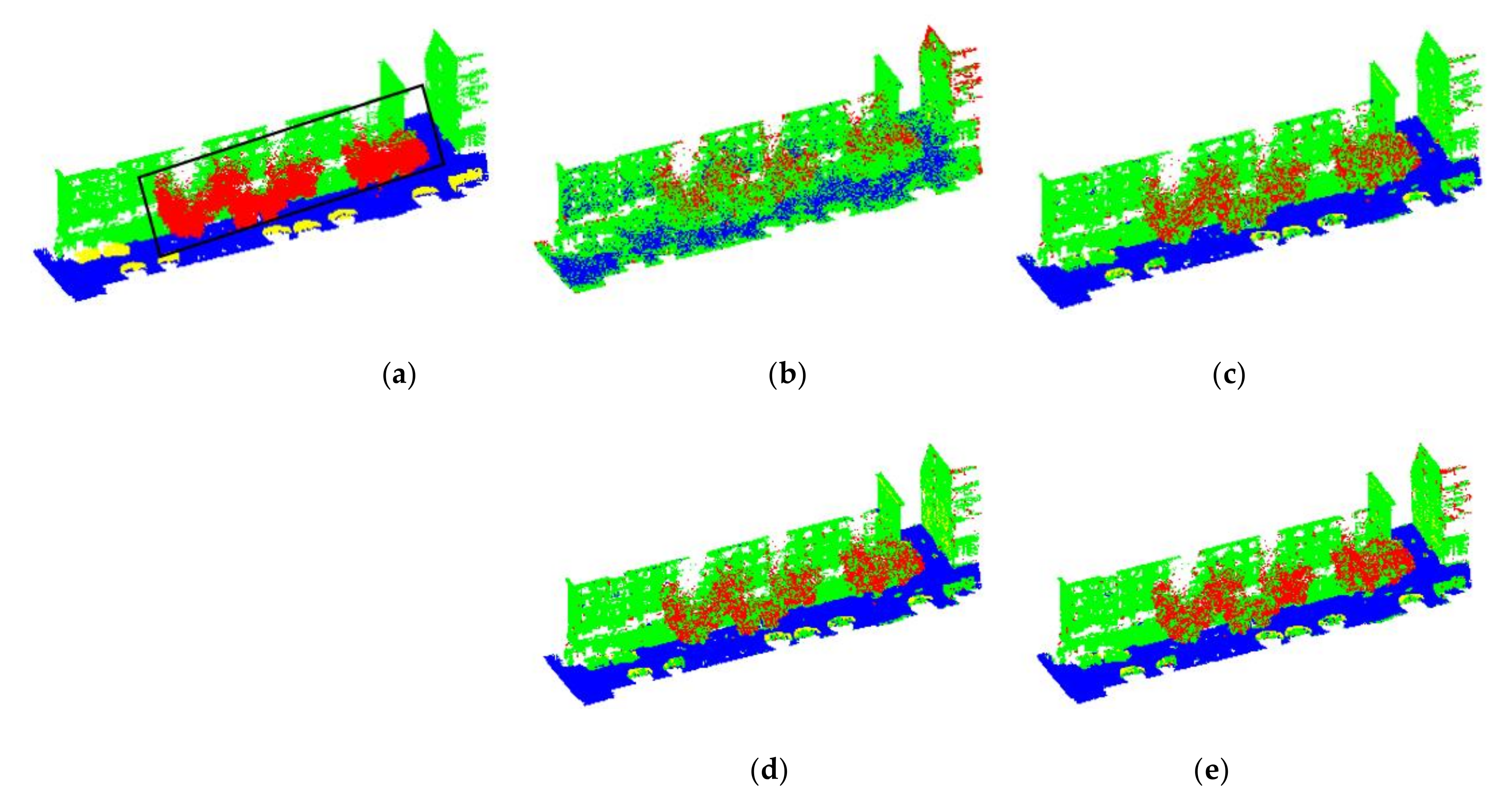

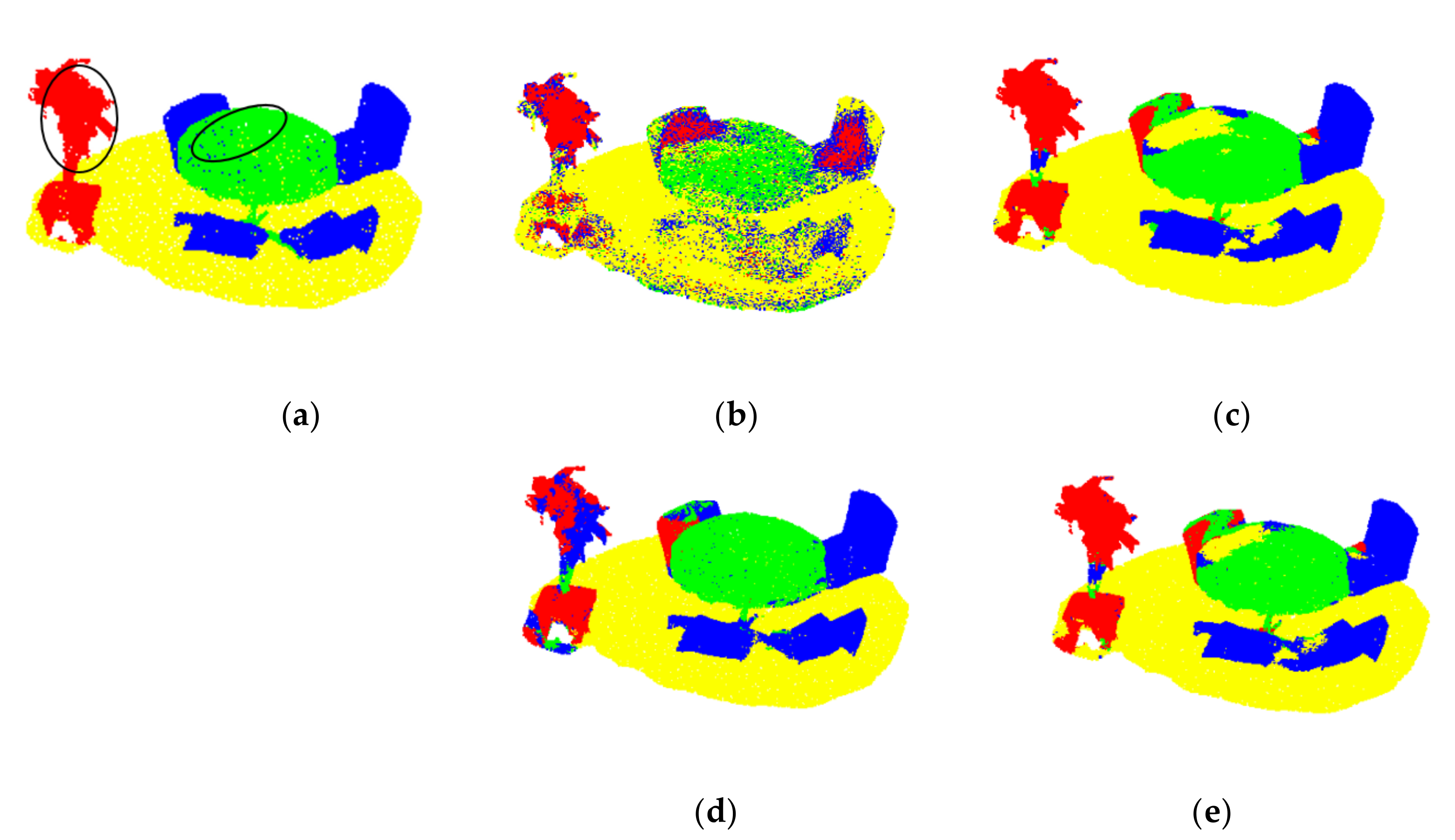

5.2. Point Cloud Classification Effect

- The table shows that the classification accuracy (Kappa/OA) of the proposed algorithm varies across scenes, but it is almost always the highest among all feature-fusion configurations, and across the five scenes each classifier shows a consistent trend over the different feature sets.

- Comparing the classification results of the five scenes obtained with different classifiers on the same features shows that the features used in the proposed method achieve the best results with the random forest classifier; that is, the classification algorithm designed in this article outperforms those based on the other classifiers.

- Comparing the results of the same classifier on different types of features shows that the VLBP features alone cannot achieve good classification results, because the proposed descriptor represents only the color information of the point cloud and lacks its structural information. Fusing RGB color information with the geometric features generally improves point cloud classification, and further integrating the color-based VLBP features improves the classification accuracy again in most cases.


- Comparing the accuracy gains across scenes shows that adding the RGB and VLBP color features to the geometric structure features of the point cloud improves the classification accuracy of the indoor Scenes 4 and 5 more markedly than that of the outdoor Scenes 1–3. This is because the color of a point cloud depends not only on the acquisition equipment but also, to a certain extent, on illumination, so point clouds collected indoors are colored more uniformly than those collected outdoors. Thus, the color features benefit classification more in indoor scenes than in outdoor scenes.

- (1) Across the evaluation metrics, the proposed classification algorithm achieved Kappa/OA values of 86.8/92.1%, 79.4/87.9%, 73.2/83.1%, 94.4/97.1%, and 84.6/89.5% in the five scenes. Considering all evaluation indicators, the proposed algorithm has advantages over both the algorithm without color features (Method 2) and the algorithm with RGB features (Method 3).

- (2) A comparison of the four algorithms shows that Method 3, which adds RGB to the geometric structure features of the point cloud, improves the OA of each scene over Method 2 by 0.7, 0.1, 0.4, 5.0, and 0.1 percentage points. The proposed algorithm adds both RGB and VLBP features to the geometric structure features, and the fused features increase the OA of each scene over Method 2 by 1.4, 0.3, 0.2, 6.2, and 1.5 percentage points, respectively. This shows that fusing the four feature types yields a more discriminative point cloud representation, which improves classification. The proposed algorithm therefore achieves better classification on point cloud data from different scenes, and the proposed VLBP feature improves point cloud classification.

- (3) The comparison in Table 3 shows that Method 3 improves most indicators compared with Method 1 and Method 2, especially in Scenes 4 and 5, where the classification results improve significantly. This shows that point cloud color features benefit point cloud classification. Comparing Method 3 with the proposed algorithm shows that in the outdoor Scenes 1–3 the proposed algorithm outperforms Method 3 in most cases, while in the indoor Scenes 4 and 5 it matches or improves on every indicator of Method 3. This also shows that when the point cloud colors contain less noise, the proposed VLBP feature descriptor can significantly improve point cloud classification.

- (4) Comparing Scene 4 with the other four scenes shows that the proposed method performs best on the scene that contains only man-made objects. Irregular objects such as plants increase the complexity of a scene and thereby reduce classification performance. The precision/recall/F1-scores in Table 6 show that the proposed method improves the results for most object classes, and the improvement is more significant for the indoor point clouds. This is because the colors of the indoor point clouds, as captured by the colored point cloud acquisition device, are more accurate than those of the outdoor point clouds.
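The percentage-point gains quoted in (2) can be checked directly from the OA values of the RF classifier. The short sketch below uses 97.1% for the proposed method in Scene 4, as in the per-class table and in (1); the classifier comparison table lists 97.0% for that cell. The variable and function names are illustrative only.

```python
"""Check the per-scene OA gains (percentage points) reported in (2)."""

from typing import List, Sequence

# RF overall accuracy (%) for Scenes 1-5, copied from the result tables.
OA_METHOD2 = [90.7, 87.6, 82.9, 90.9, 88.0]  # FNormal + FFPFH
OA_METHOD3 = [91.4, 87.7, 83.3, 95.9, 88.1]  # + FRGB
OA_OURS = [92.1, 87.9, 83.1, 97.1, 89.5]     # + FRGB + FVLBP


def gains(base: Sequence[float], new: Sequence[float]) -> List[float]:
    """Per-scene difference new - base, rounded to one decimal place."""
    return [round(n - b, 1) for b, n in zip(base, new)]


if __name__ == "__main__":
    print(gains(OA_METHOD2, OA_METHOD3))  # [0.7, 0.1, 0.4, 5.0, 0.1]
    print(gains(OA_METHOD2, OA_OURS))     # [1.4, 0.3, 0.2, 6.2, 1.5]
```

Rounding to one decimal removes floating-point noise such as 0.7000000000000028 from the raw subtraction.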

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Scene | Train: Floor | Train: Building | Train: Car | Train: Tree | Test: Floor | Test: Building | Test: Car | Test: Tree |

|---|---|---|---|---|---|---|---|---|

| Scene 1 | 54.327 | 29.523 | 46.068 | 25.038 | 63.174 | 93.852 | ||

| Scene 2 | 71.587 | 123.521 | 10.545 | 28.754 | 29.080 | 46.854 | 5918 | 7248 |

| Scene 3 | 119.255 | 180.919 | 15.394 | 13.504 | 155.931 | 201.930 | 17.492 | 87.601 |

| | Train: Chair | Train: Table | Train: Floor | Train: Flower | Test: Chair | Test: Table | Test: Floor | Test: Flower |

| Scene 4 | 24.025 | 17.671 | 77.880 | 24.713 | 29.467 | 74.692 | ||

| Scene 5 | 30.801 | 16.148 | 62.596 | 18.394 | 28.748 | 14.611 | 47.662 | 19.278 |

| Ground Truth / Predicted | Positive | Negative |
|---|---|---|
| Positive | True Positive (Tp) | False Negative (Fn) |
| Negative | False Positive (Fp) | True Negative (Tn) |
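The evaluation metrics built on this confusion matrix can be computed as below. The sketch assumes the standard definitions (OA as the fraction of correctly classified points, Cohen's kappa, and per-class precision, recall, and F1), since the paper's exact formulas are not reproduced in this excerpt; as in the table, rows are ground-truth classes and columns are predicted classes. Function names are illustrative.

```python
"""Classification metrics from a confusion matrix (standard definitions).

matrix[i][j] = number of points whose ground-truth class is i and whose
predicted class is j.
"""

from typing import List, Sequence, Tuple


def overall_accuracy(matrix: Sequence[Sequence[int]]) -> float:
    """Fraction of points on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def cohen_kappa(matrix: Sequence[Sequence[int]]) -> float:
    """Cohen's kappa: agreement corrected for chance (undefined if p_e == 1)."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(matrix[i]) for i in range(n)]
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    p_o = overall_accuracy(matrix)  # observed agreement
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2  # chance agreement
    return (p_o - p_e) / (1 - p_e)


def per_class_prf(matrix: Sequence[Sequence[int]]) -> List[Tuple[float, float, float]]:
    """Return (precision, recall, F1) for each class; 0.0 when undefined."""
    n = len(matrix)
    results = []
    for k in range(n):
        tp = matrix[k][k]
        fp = sum(matrix[i][k] for i in range(n)) - tp  # predicted k, truly another class
        fn = sum(matrix[k]) - tp                       # truly k, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results
```

For example, the two-class matrix `[[50, 10], [5, 35]]` gives OA = 0.85 and kappa = 0.34 / 0.49 ≈ 0.694; the tables report these values multiplied by 100.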

| Classifier | Features | Scene 1 | Scene 2 | Scene 3 | Scene 4 | Scene 5 |
|---|---|---|---|---|---|---|
| RF | FVLBP | 51.9/73.8 | 32.0/53.6 | 33.8/55.7 | 43.3/71.0 | 45.7/65.8 |
| | FNormal + FFPFH | 84.0/90.7 | 79.1/87.6 | 73.0/82.9 | 81.9/90.9 | 82.5/88.0 |
| | FNormal + FFPFH + FRGB | 85.7/91.4 | 79.1/87.7 | 73.6/83.3 | 91.4/95.9 | 84.4/88.1 |
| | FNormal + FFPFH + FRGB + FVLBP | 86.8/92.1 | 79.4/87.9 | 73.2/83.1 | 94.4/97.0 | 84.6/89.5 |
| MLP | FVLBP | 56.7/75.7 | 32.1/52.5 | 36.7/55.5 | 42.4/69.5 | 36.8/51.2 |
| | FNormal + FFPFH | 79.0/86.4 | 60.8/73.5 | 57.4/73.3 | 72.9/82.8 | 78.2/84.5 |
| | FNormal + FFPFH + FRGB | 84.9/90.5 | 51.4/69.5 | 60.8/71.8 | 74.0/84.9 | 78.0/85.1 |
| | FNormal + FFPFH + FRGB + FVLBP | 85.8/91.4 | 63.5/78.4 | 57.7/73.7 | 88.1/94.0 | 81.1/86.0 |
| SVM | FVLBP | 57.0/75.6 | 34.1/52.4 | 32.8/43.8 | 35.6/70.2 | 36.8/52.8 |
| | FNormal + FFPFH | 80.0/88.0 | 53.9/73.3 | 52.0/70.0 | 74.5/85.7 | 79.2/85.7 |
| | FNormal + FFPFH + FRGB | 84.5/91.1 | 54.2/73.4 | 53.1/70.5 | 77.1/84.9 | 79.5/87.3 |
| | FNormal + FFPFH + FRGB + FVLBP | 85.0/90.7 | 55.6/74.2 | 56.5/73.5 | 90.8/95.3 | 83.5/88.6 |
| PointNet | Deep feature | 62.1/76.3 | 54.2/61.4 | 57.7/74.9 | 58.7/82.9 | 50.8/61.2 |

| Feature | Average Time (min) |

|---|---|

| FVLBP | 2.86 |

| FRGB | 0.58 |

| FNormal | 1.73 |

| FFPFH | 1.47 |

| Classifier | Average Time (min) |

|---|---|

| RF | 3.80 |

| MLP | 5.03 |

| SVM | 16.3 |

| PointNet | 145.86 |

| Method | Feature | Dimension |

|---|---|---|

| Method 1 | FVLBP | 10 |

| Method 2 | FNormal + FFPFH | 36 |

| Method 3 | FNormal + FFPFH + FRGB | 39 |

| Our Method | FNormal + FFPFH + FRGB + FVLBP | 49 |
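The dimensions in this table add up exactly (36 + 3 = 39, 39 + 10 = 49), which is consistent with fusing the features by simple concatenation. The sketch below assumes that reading. Splitting the 36 dimensions of FNormal + FFPFH into 3 + 33 is an assumption based on the common 33-bin FPFH histogram, and any normalization applied before the random forest is not shown; `fuse`, `FEATURE_DIMS`, and `METHODS` are illustrative names.

```python
"""Feature fusion by concatenation (sketch; dimensions per the table above)."""

from typing import Dict, List, Sequence

# FRGB (3) and FVLBP (10) follow the table; splitting FNormal + FFPFH (36)
# into 3 + 33 is an assumption based on the usual 33-bin FPFH histogram.
FEATURE_DIMS: Dict[str, int] = {"FNormal": 3, "FFPFH": 33, "FRGB": 3, "FVLBP": 10}

METHODS: Dict[str, List[str]] = {
    "Method 1": ["FVLBP"],
    "Method 2": ["FNormal", "FFPFH"],
    "Method 3": ["FNormal", "FFPFH", "FRGB"],
    "Our Method": ["FNormal", "FFPFH", "FRGB", "FVLBP"],
}


def fuse(features: Dict[str, Sequence[float]], names: Sequence[str]) -> List[float]:
    """Concatenate the named per-point feature vectors in the given order."""
    fused: List[float] = []
    for name in names:
        vec = features[name]
        if len(vec) != FEATURE_DIMS[name]:
            raise ValueError(f"{name}: got {len(vec)} dims, expected {FEATURE_DIMS[name]}")
        fused.extend(vec)
    return fused


if __name__ == "__main__":
    point = {name: [0.0] * dim for name, dim in FEATURE_DIMS.items()}
    for method, names in METHODS.items():
        # Prints 10, 36, 39 and 49 for the four rows of the table.
        print(method, len(fuse(point, names)))
```

The fused vector of each point would then form one input row for the random forest classifier; for example, scikit-learn's `RandomForestClassifier.fit` accepts a 2-D array with one such row per sample.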

| Method | Floor | Car | Tree | Building | Kappa (%) | OA (%) | |

|---|---|---|---|---|---|---|---|

| Scene 1 | Method 1 | 0.71/0.71/0.71 | 0.27/0.12/0.17 | 0.80/0.92/0.86 | 51.9 | 73.8 | |

| Method 2 | 0.96/0.81/0.88 | 0.68/0.84/0.75 | 0.95/0.99/0.97 | 84.0 | 90.7 | ||

| Method 3 | 0.96/0.82/0.88 | 0.69/0.85/0.76 | 0.96/0.99/0.98 | 85.7 | 91.4 | ||

| Our | 0.96/0.83/0.89 | 0.70/0.87/0.77 | 0.97/1.00/0.98 | 86.8 | 92.1 | ||

| Scene 2 | Method 1 | 0.47/0.82/0.60 | 0.22/0.06/0.09 | 0.39/0.31/0.35 | 0.68/0.46/0.60 | 32.0 | 53.6 |

| Method 2 | 0.91/0.88/0.90 | 0.87/0.55/0.68 | 0.79/0.73/0.76 | 0.87/0.94/0.90 | 79.1 | 87.6 | |

| Method 3 | 0.91/0.88/0.90 | 0.89/0.55/0.68 | 0.80/0.74/0.77 | 0.87/0.94/0.90 | 79.1 | 87.7 | |

| Our | 0.91/0.89/0.90 | 0.89/0.57/0.69 | 0.82/0.75/0.77 | 0.87/0.94/0.90 | 79.4 | 87.9 | |

| Scene 3 | Method 1 | 0.71/0.51/0.59 | 0.11/0.02/0.03 | 0.60/0.06/0.12 | 0.51/0.86/0.64 | 33.8 | 55.7 |

| Method 2 | 0.99/0.91/0.95 | 0.80/0.30/0.43 | 0.92/0.45/0.60 | 0.73/0.98/0.84 | 73.0 | 82.9 | |

| Method 3 | 0.99/0.91/0.95 | 0.45/0.28/0.34 | 0.93/0.53/0.68 | 0.74/0.95/0.83 | 73.6 | 83.3 | |

| Our | 0.99/0.91/0.95 | 0.58/0.29/0.38 | 0.92/0.48/0.63 | 0.73/0.96/0.83 | 73.2 | 83.1 | |

| Method | Chair | Table | Floor | Flower | Kappa (%) | OA (%) | |

| Scene 4 | Method 1 | 0.30/0.20/0.24 | 0.72/0.37/0.49 | 0.78/0.95/0.86 | 43.3 | 71.0 | |

| Method 2 | 0.89/0.99/0.94 | 0.69/0.80/0.74 | 0.98/0.91/0.94 | 81.9 | 90.9 | ||

| Method 3 | 0.90/0.99/0.94 | 0.90/0.89/0.89 | 0.99/0.97/0.98 | 91.4 | 95.9 | ||

| Our | 0.90/0.99/0.94 | 0.97/0.90/0.93 | 0.99/0.98/0.99 | 94.4 | 97.1 | ||

| Scene 5 | Method 1 | 0.53/0.33/0.41 | 0.67/0.55/0.60 | 0.69/0.87/0.77 | 0.66/0.71/0.68 | 45.7 | 65.8 |

| Method 2 | 0.89/0.77/0.83 | 0.79/0.75/0.77 | 0.92/0.97/0.94 | 0.83/0.92/0.88 | 82.5 | 88.0 | |

| Method 3 | 0.90/0.78/0.83 | 0.82/0.80/0.82 | 0.94/0.98/0.96 | 0.82/0.92/0.88 | 84.4 | 88.1 | |

| Our | 0.90/0.78/0.83 | 0.83/0.80/0.82 | 0.94/0.98/0.96 | 0.84/0.93/0.88 | 84.6 | 89.5 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Luo, Y.; Gu, X.; Chen, D.; Gao, F.; Shuang, F. Point Cloud Classification Algorithm Based on the Fusion of the Local Binary Pattern Features and Structural Features of Voxels. Remote Sens. 2021, 13, 3156. https://doi.org/10.3390/rs13163156


Chicago/Turabian Style: Li, Yong, Yinzheng Luo, Xia Gu, Dong Chen, Fang Gao, and Feng Shuang. 2021. "Point Cloud Classification Algorithm Based on the Fusion of the Local Binary Pattern Features and Structural Features of Voxels." Remote Sensing 13, no. 16: 3156. https://doi.org/10.3390/rs13163156
