Nearshore Benthic Mapping in the Great Lakes: A Multi-Agency Data Integration Approach in Southwest Lake Michigan

Abstract

1. Introduction

- Evaluate the integration and role of high-fidelity data (airborne imagery and lidar, satellite imagery, in situ observations, etc.) in identifying nearshore benthic substrate and associated habitat with machine learning (Support Vector Machine), and better understand the implications for the hierarchy of the Coastal and Marine Ecological Classification Standard (CMECS);

- Develop a semi-automated, repeatable approach that could be applied in other shallow coastal environments using a case study in southwest Lake Michigan, USA.

2. Materials and Methods

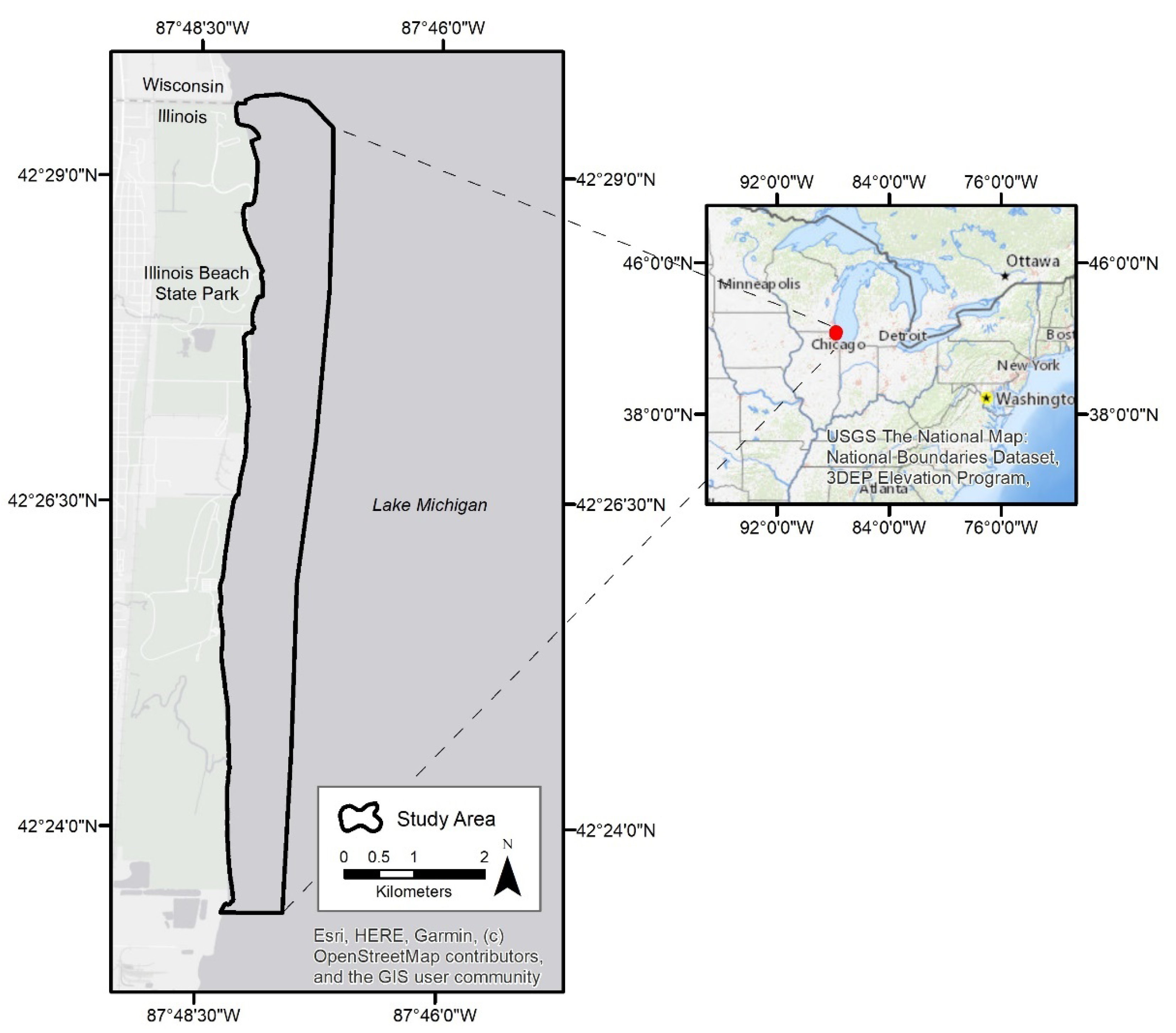

2.1. Study Area

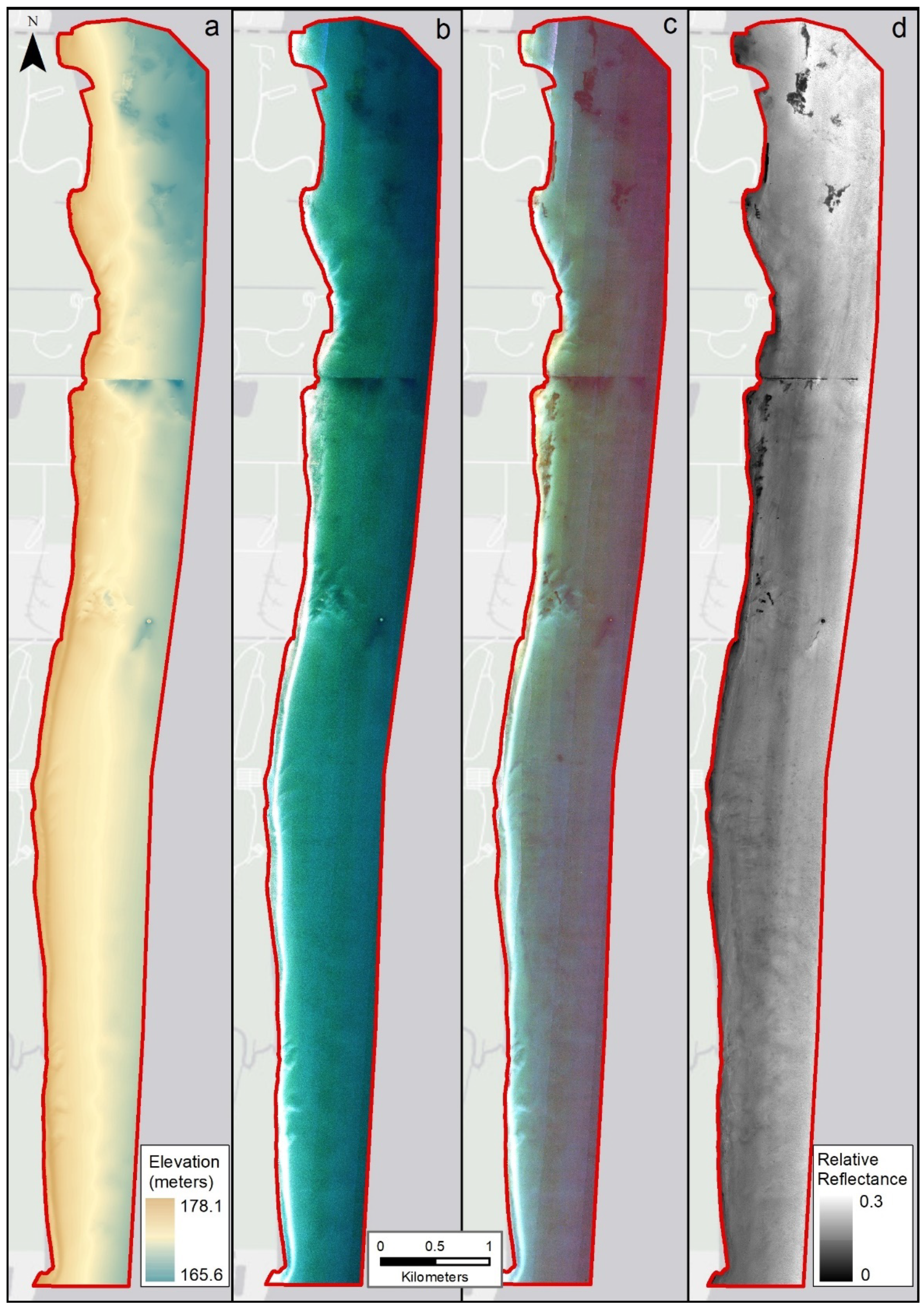

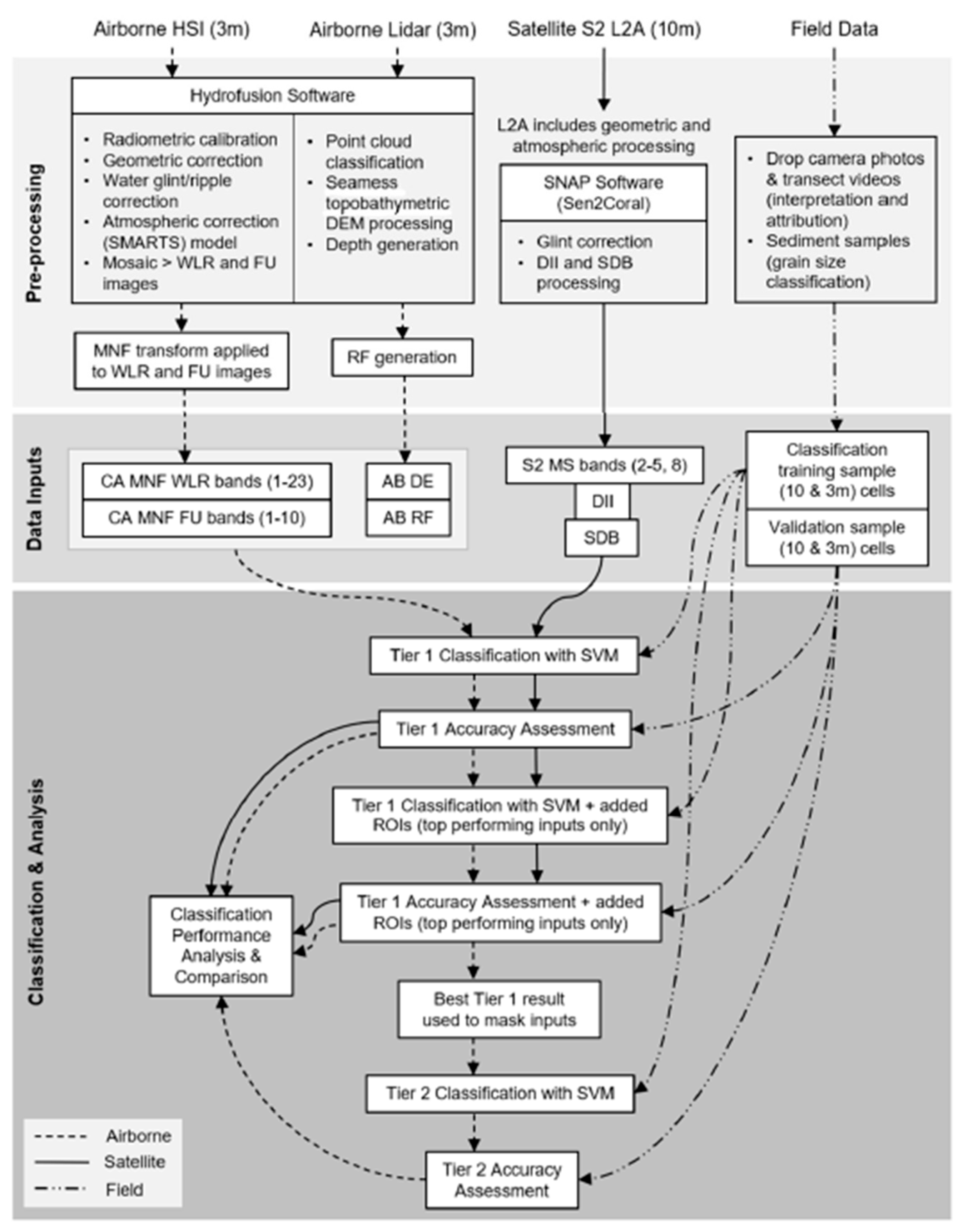

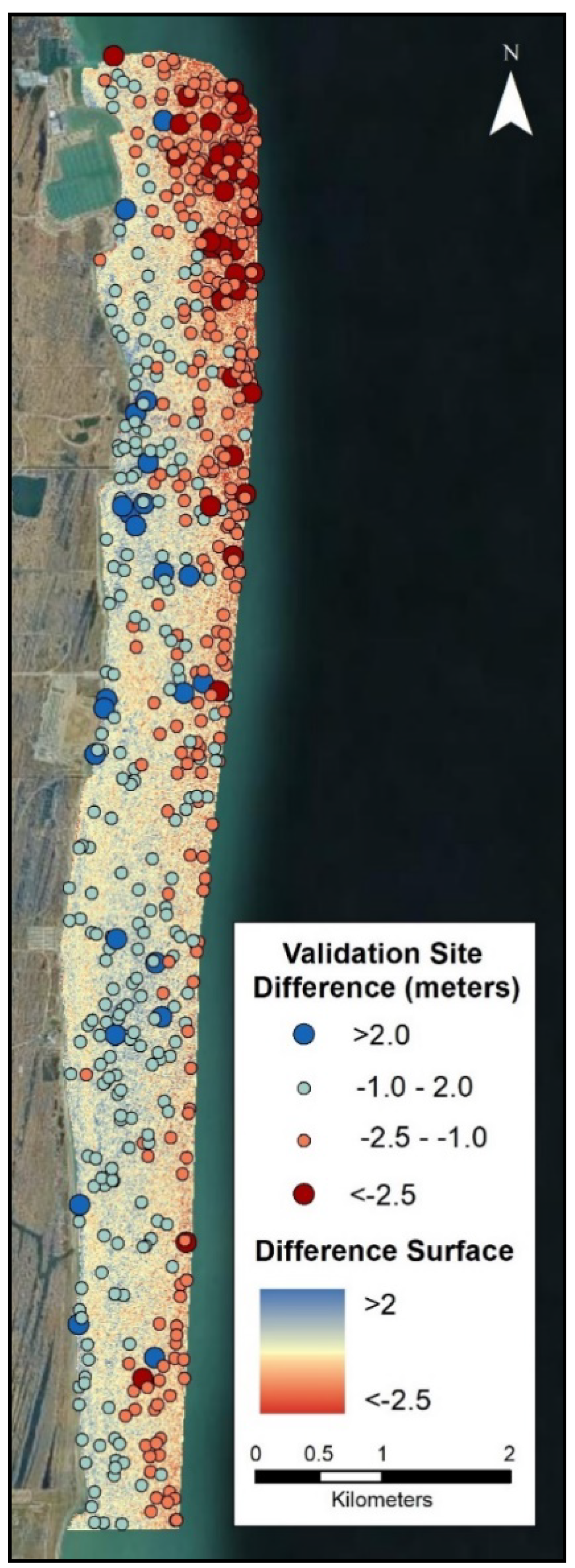

2.2. Airborne Data Pre-Processing

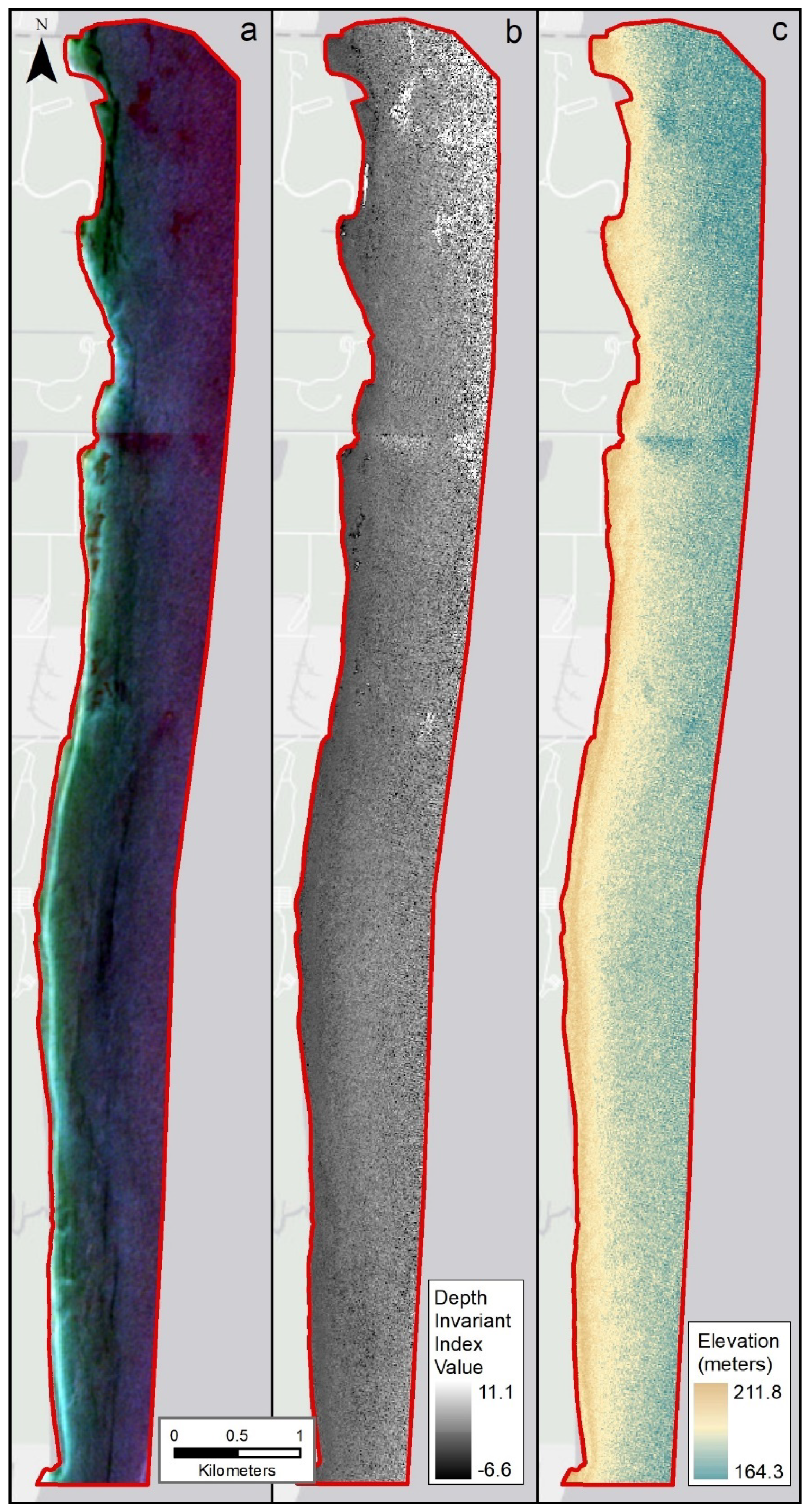

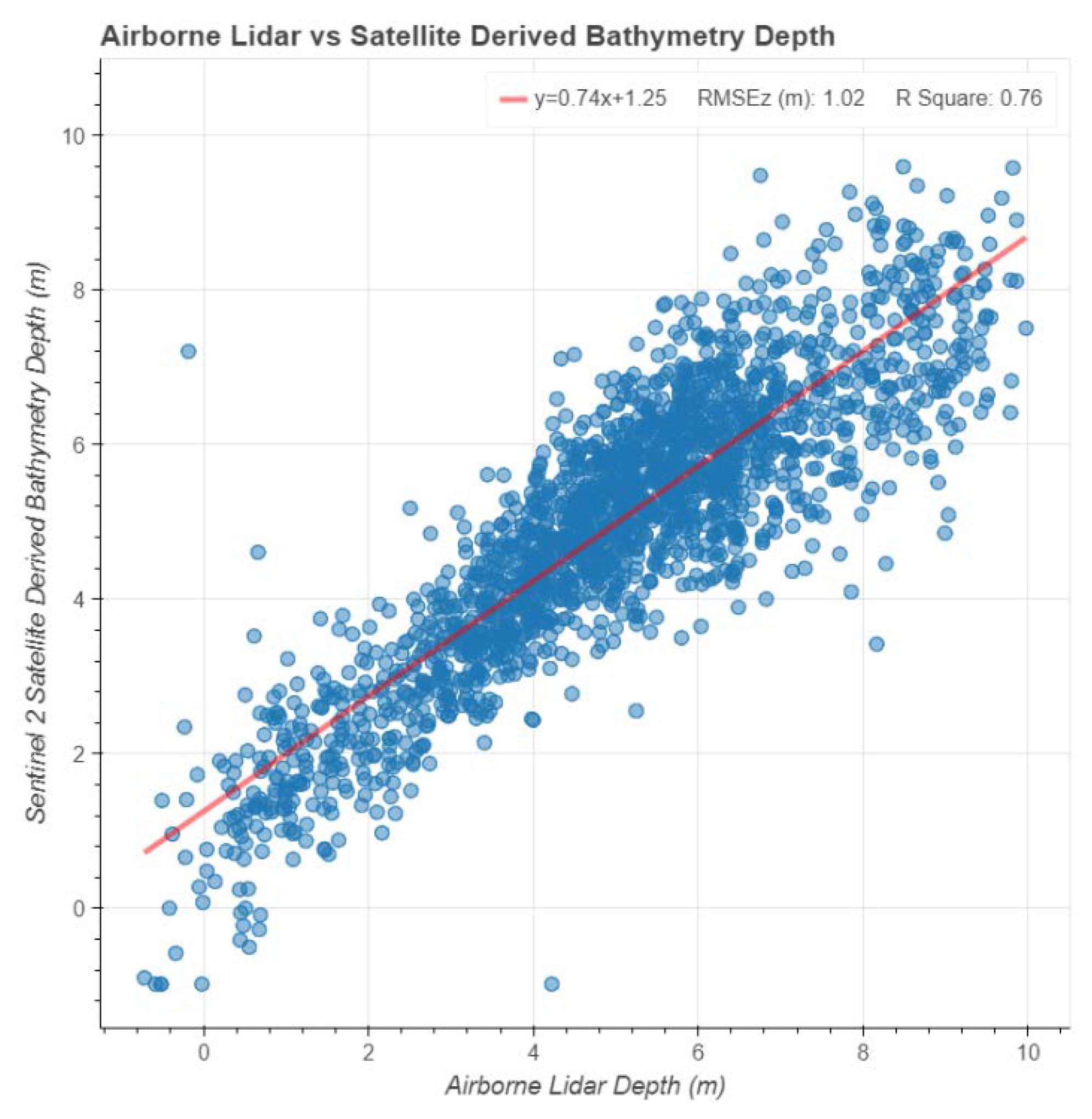

2.3. Satellite Data Pre-Processing

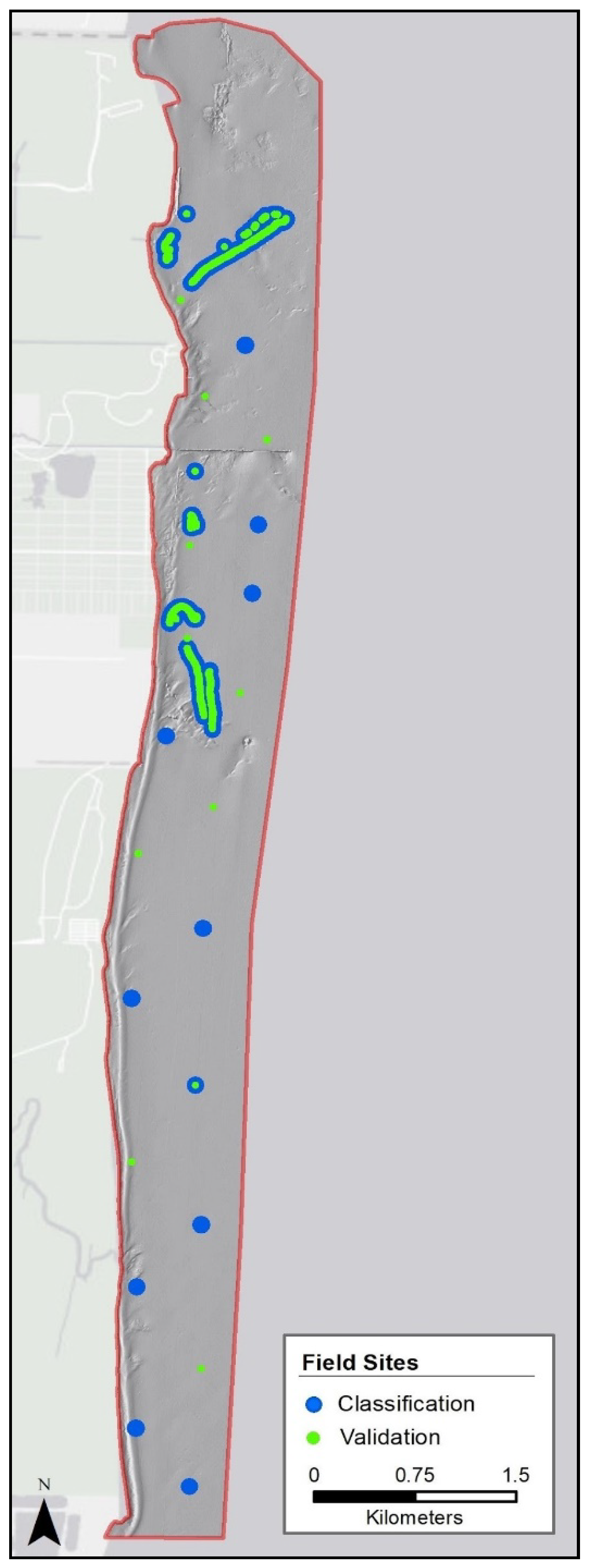

2.4. Field Data Collection

2.5. Data Classification and Analysis

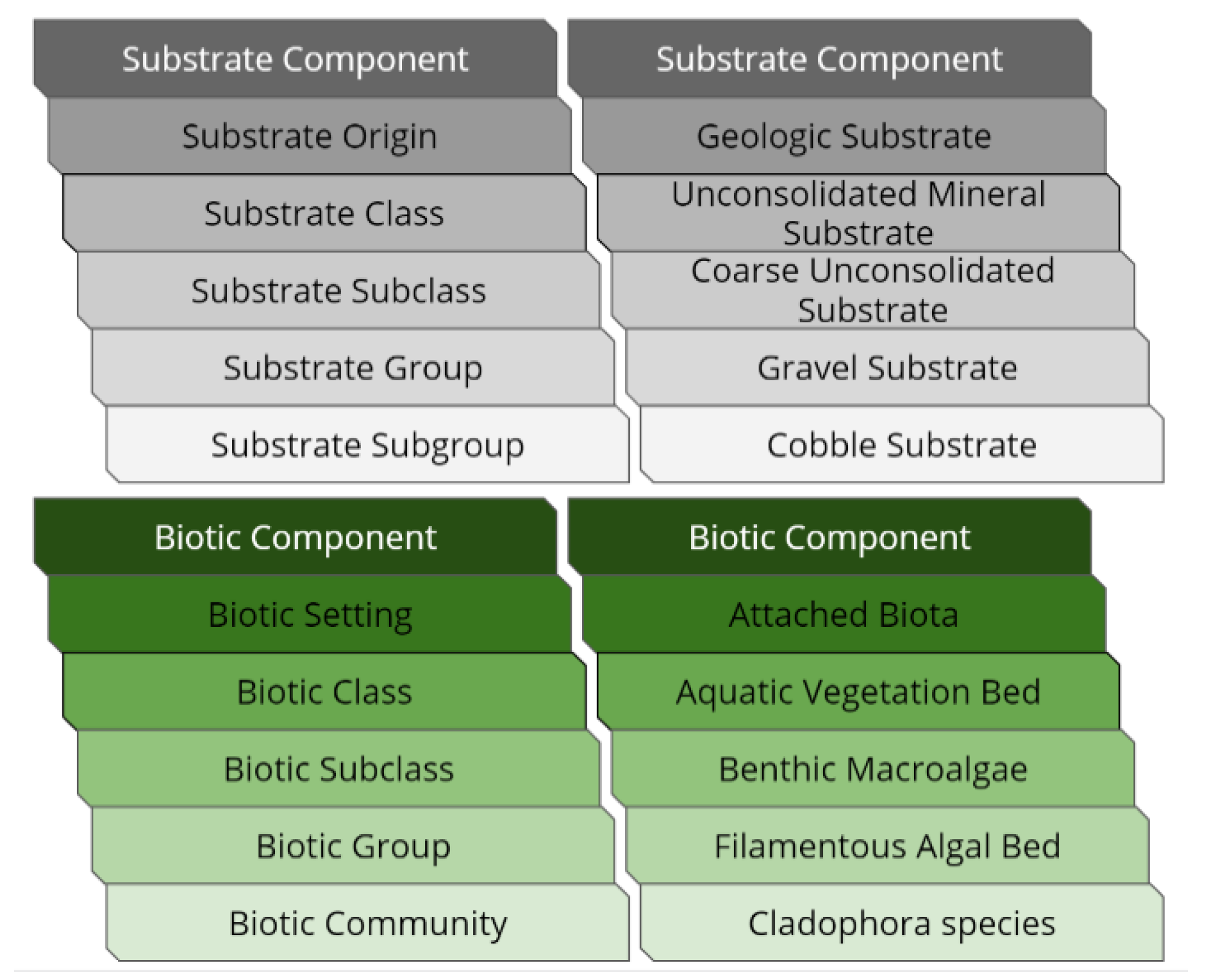

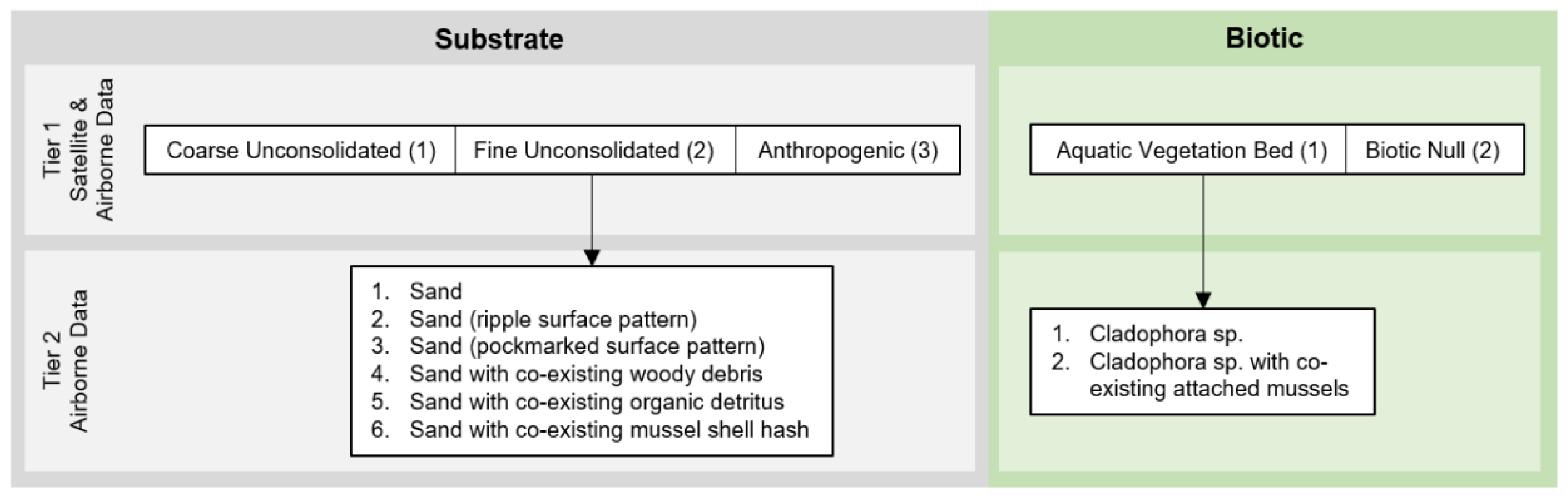

2.5.1. Classification System

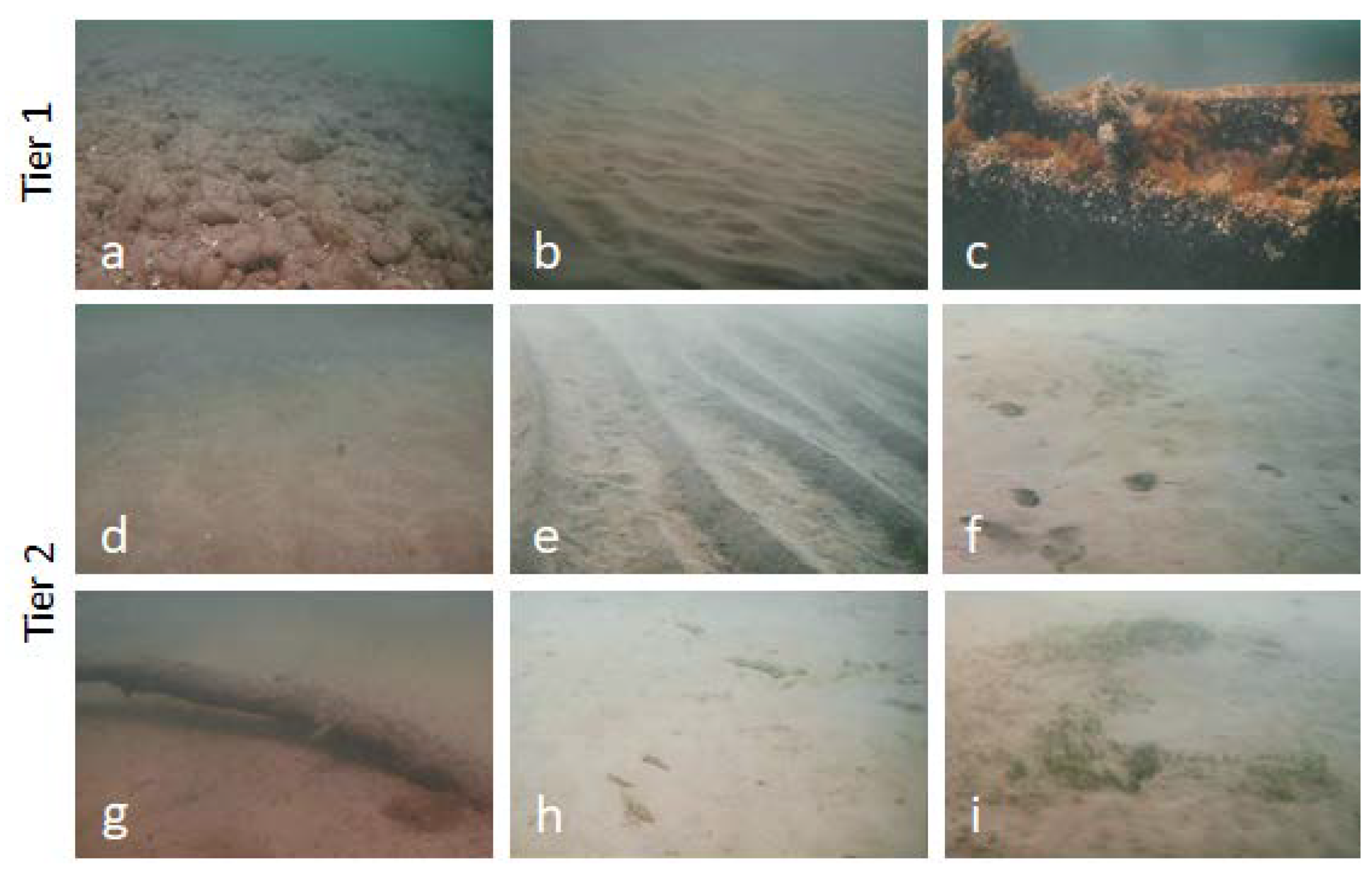

2.5.2. Field Data Classification

2.5.3. Remote Sensing Classification

3. Results

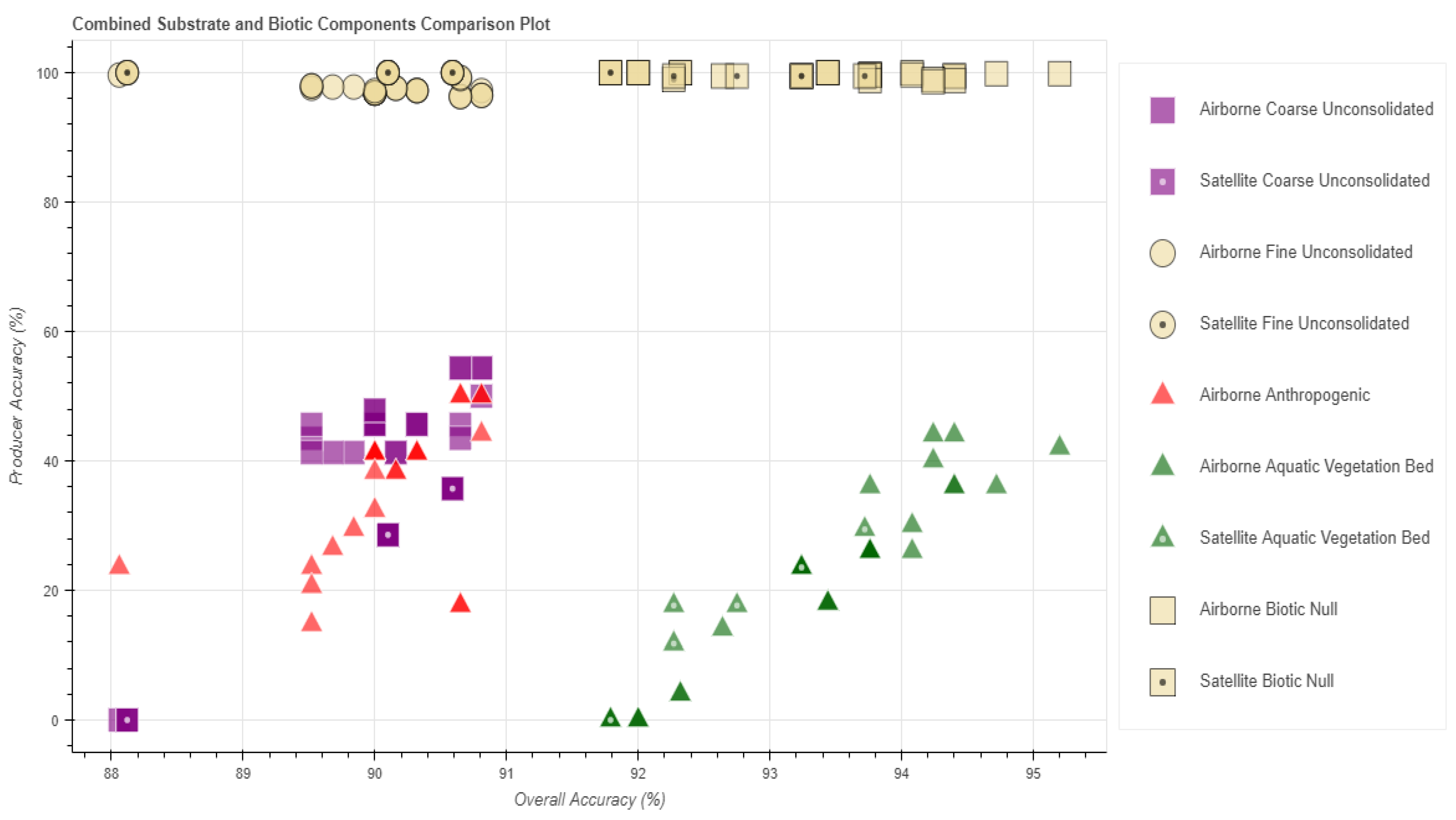

3.1. Classification Performance Analysis

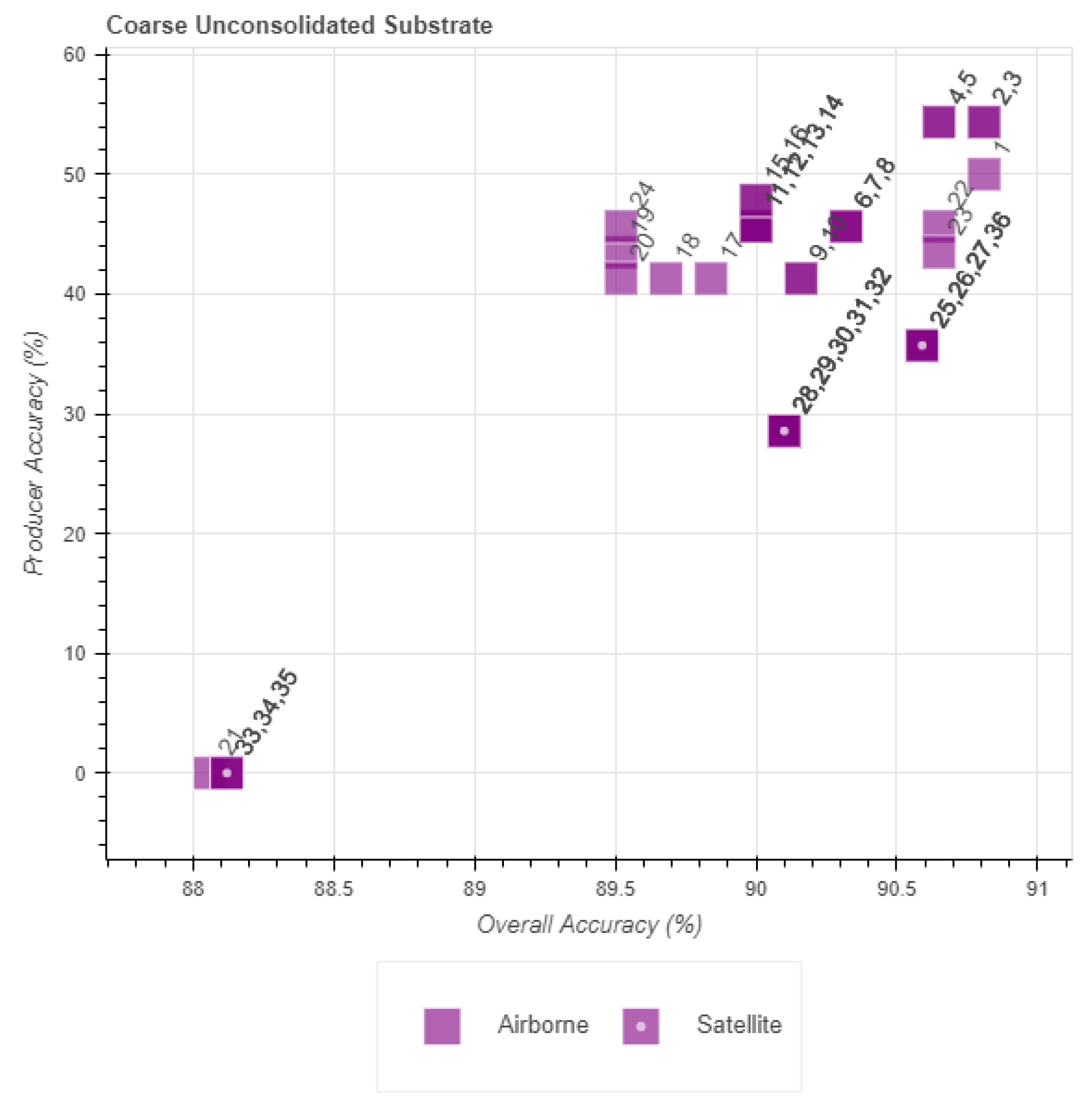

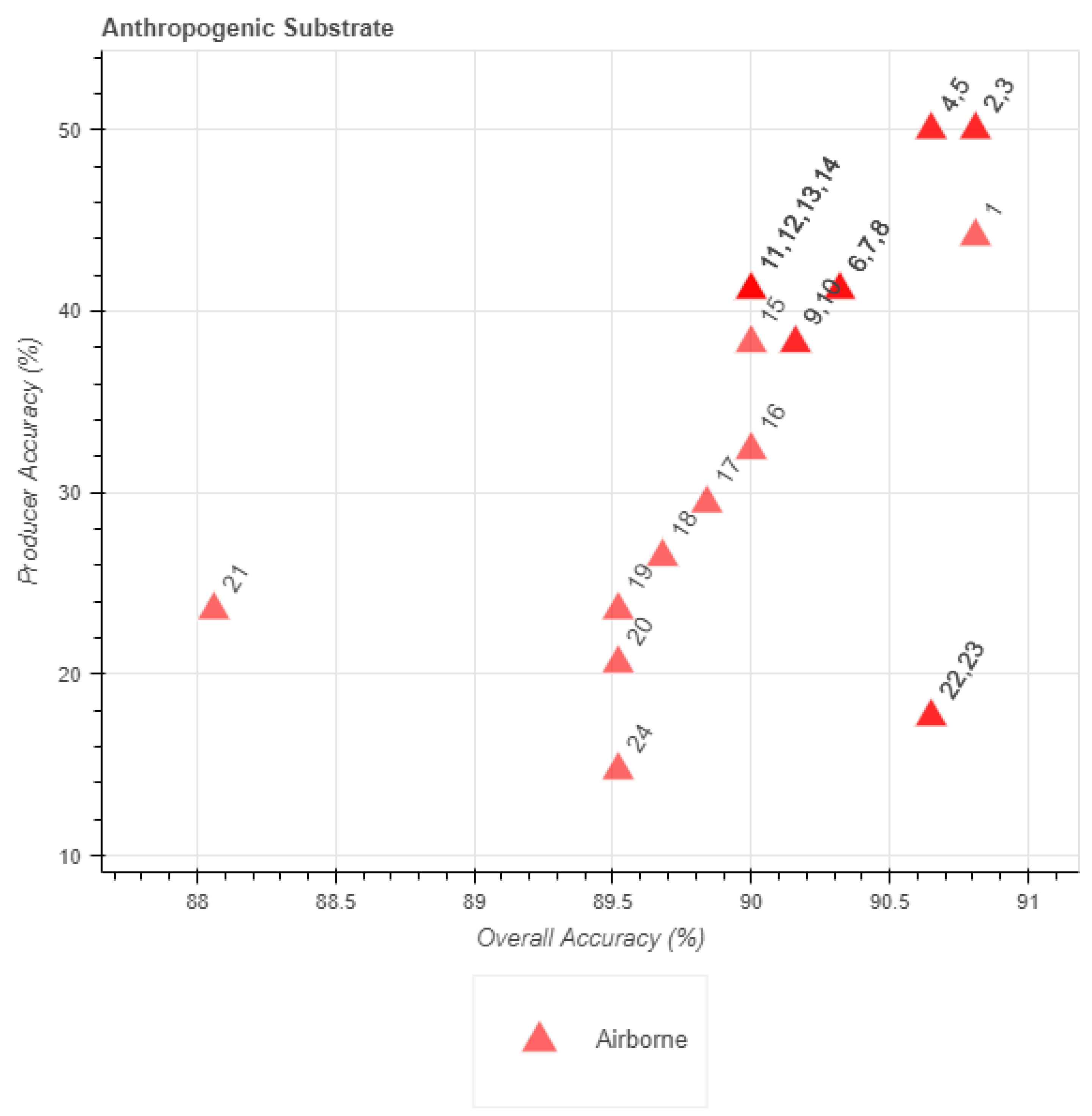

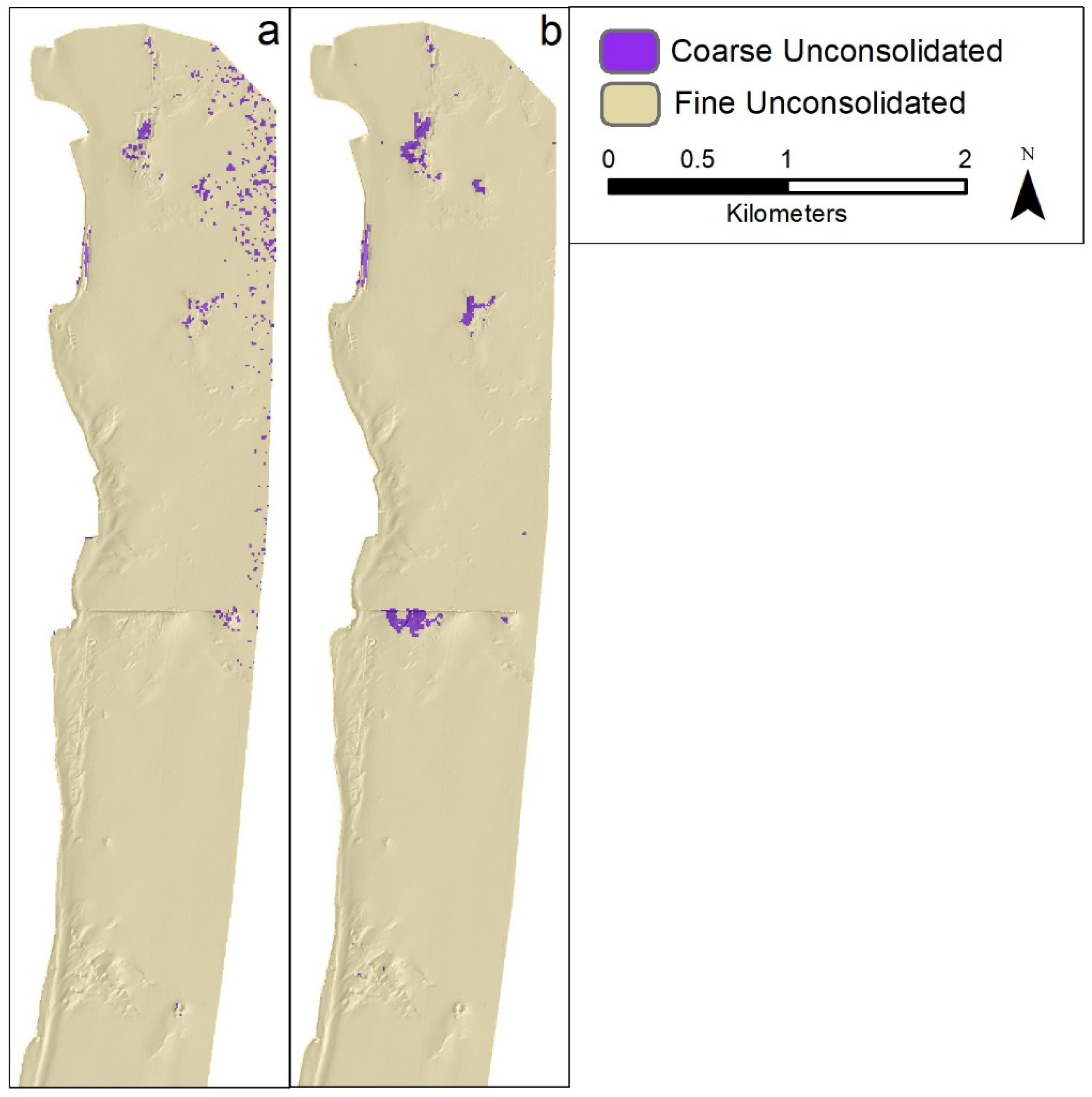

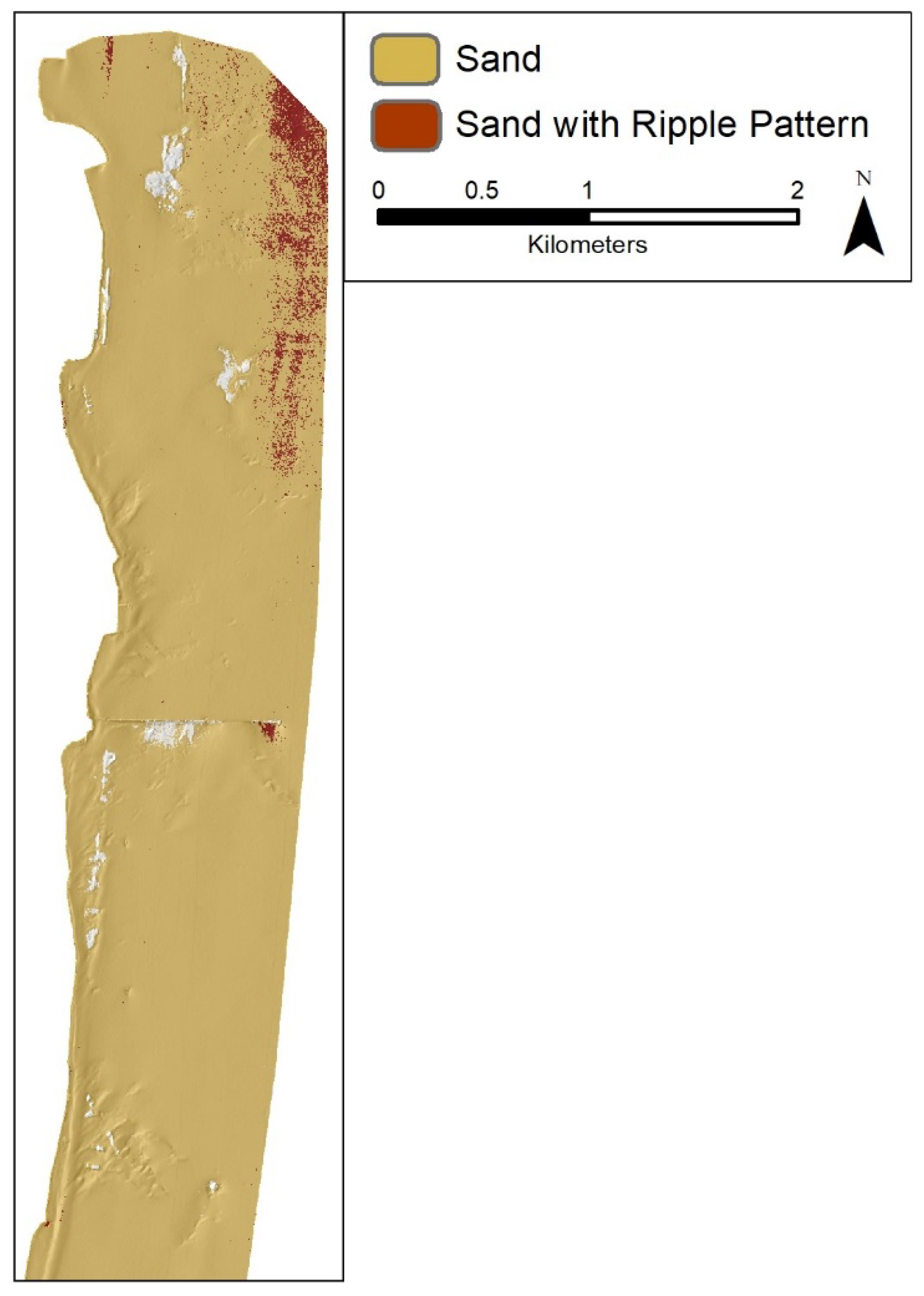

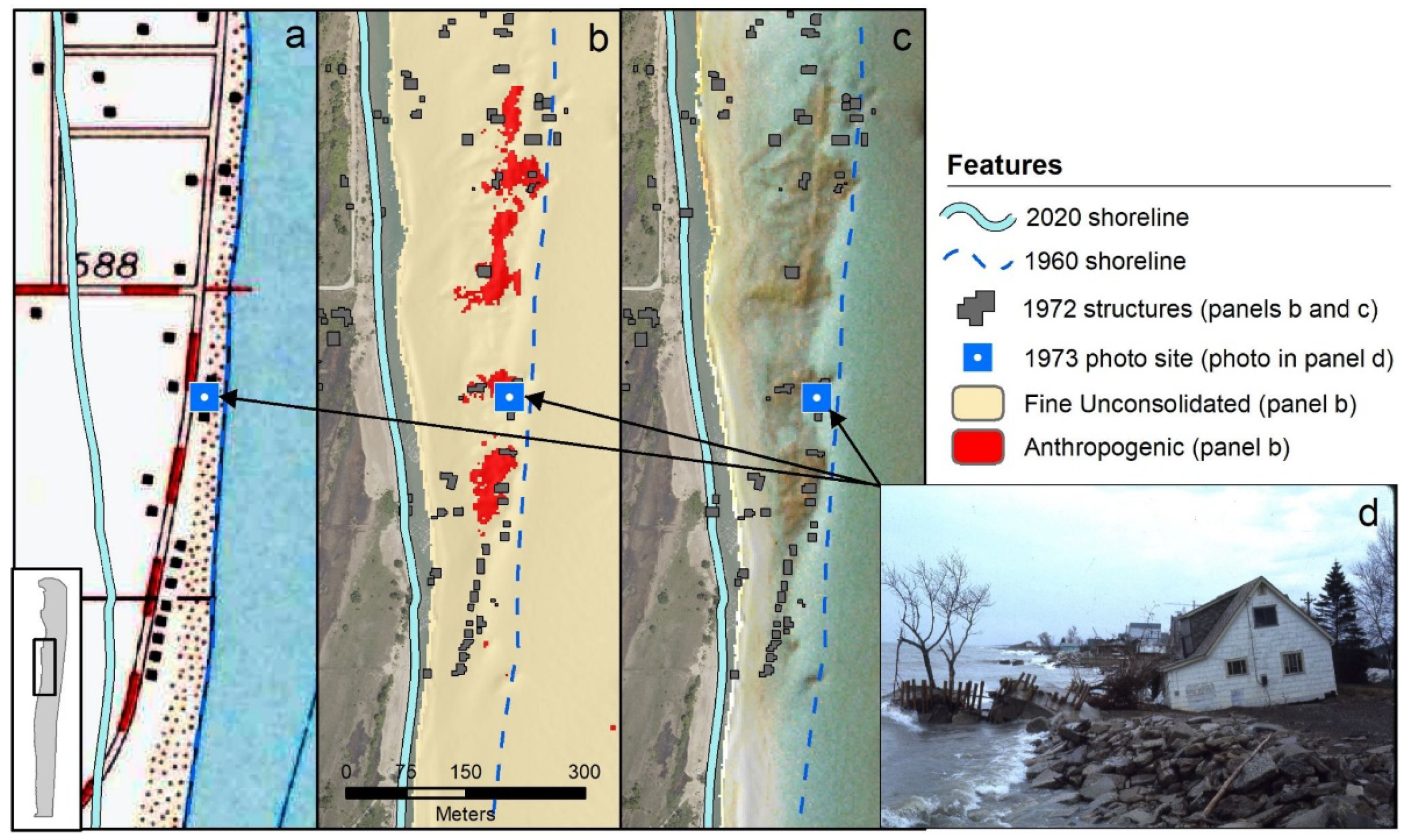

3.1.1. Substrate Component Classification Results

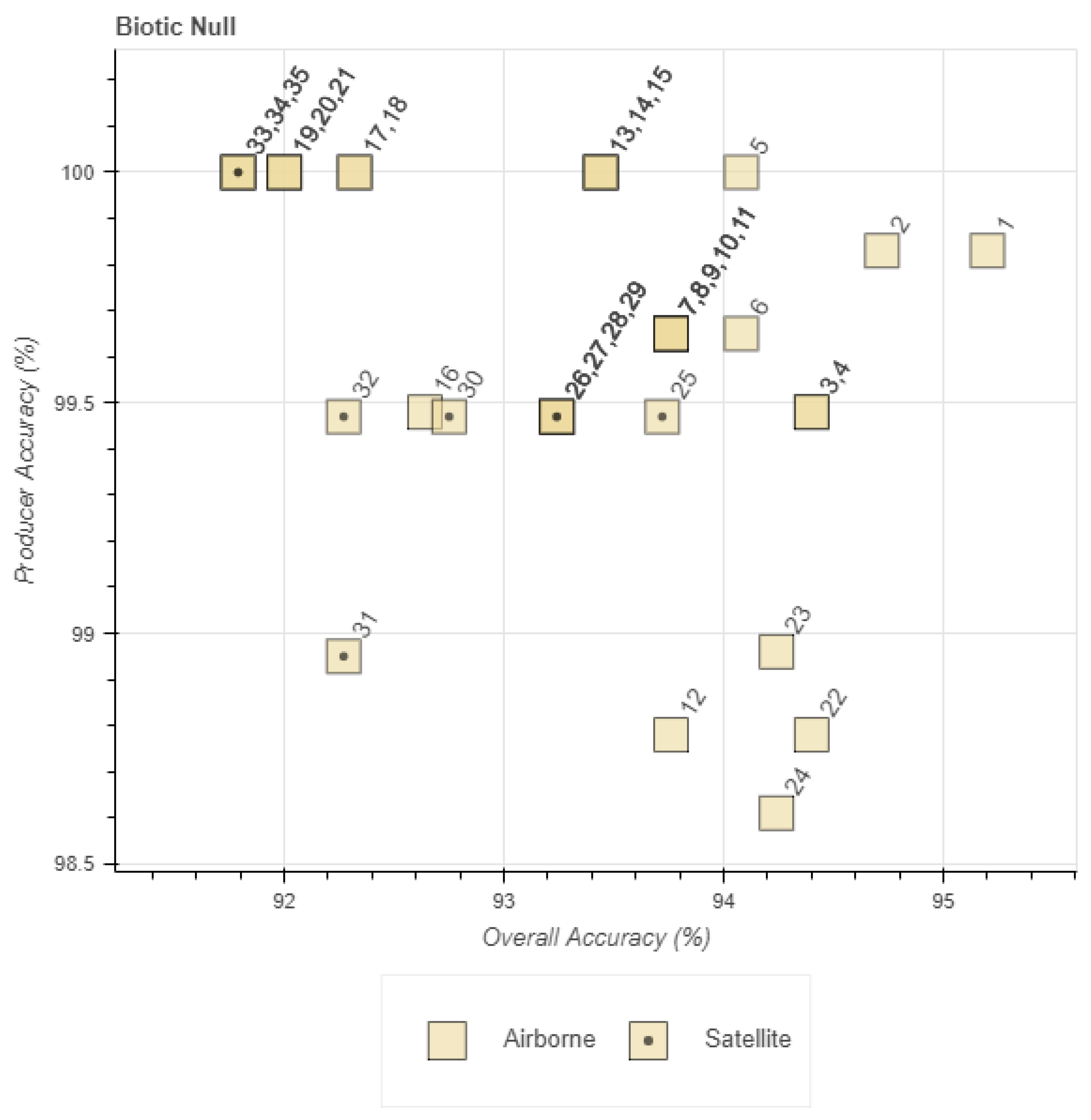

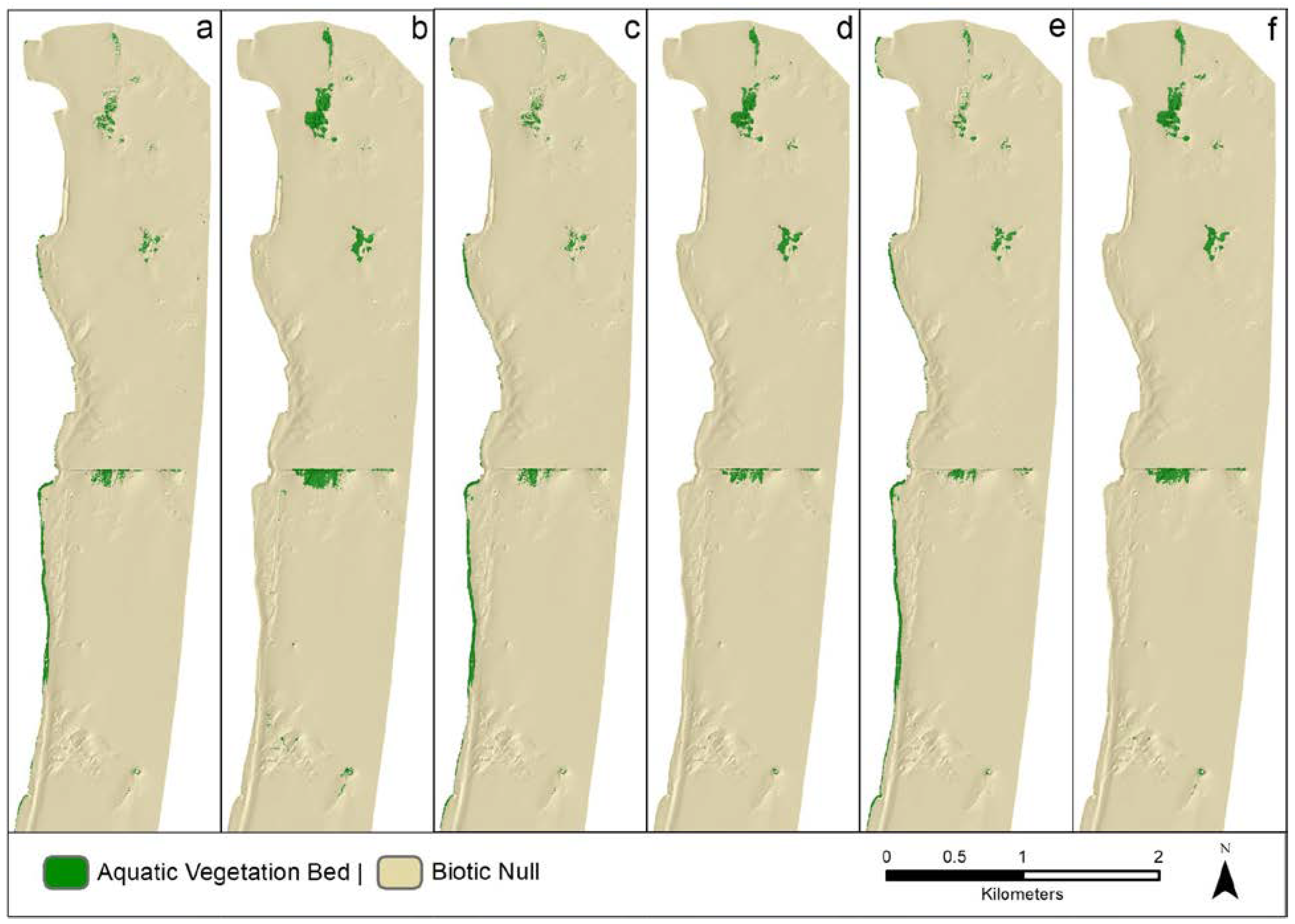

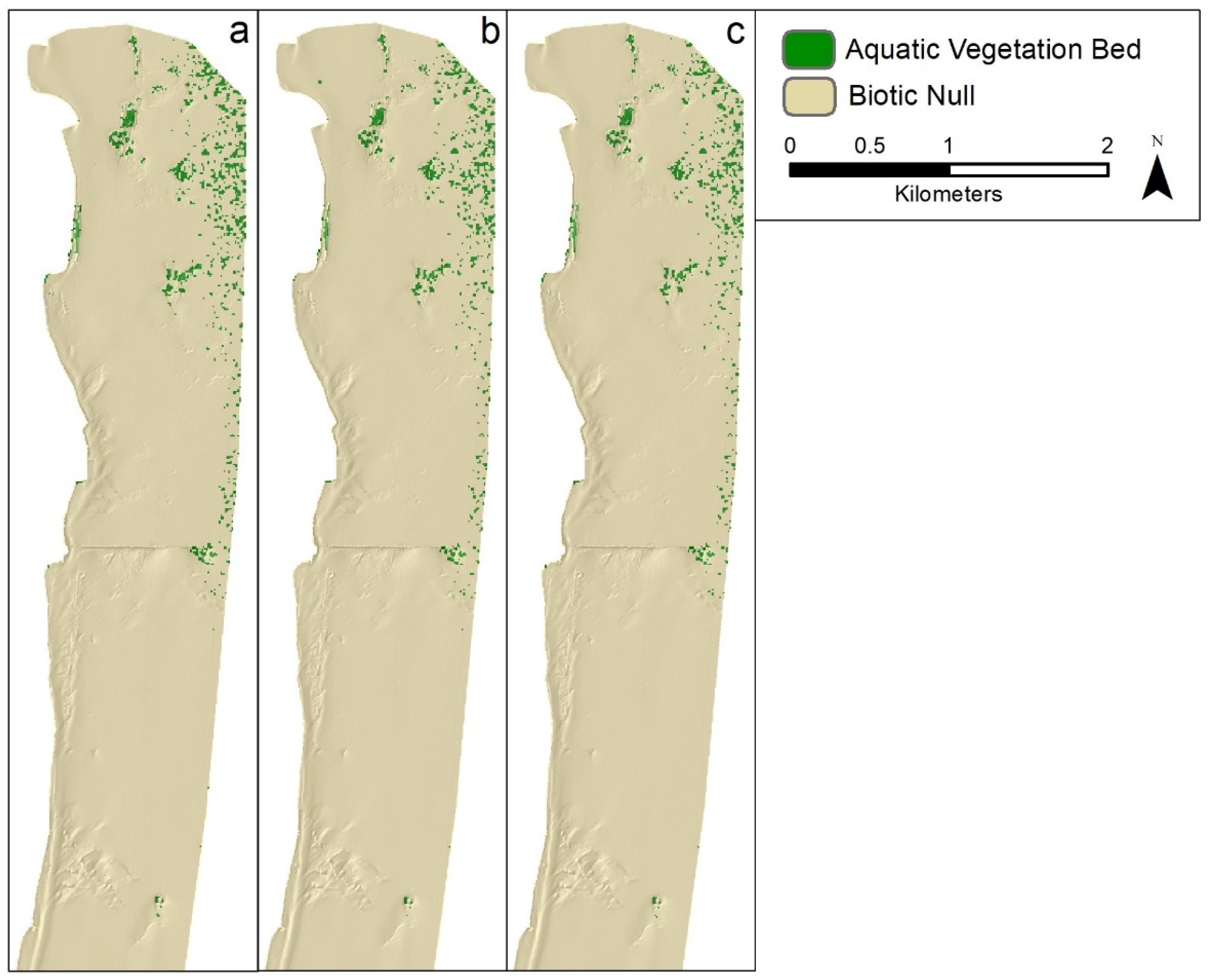

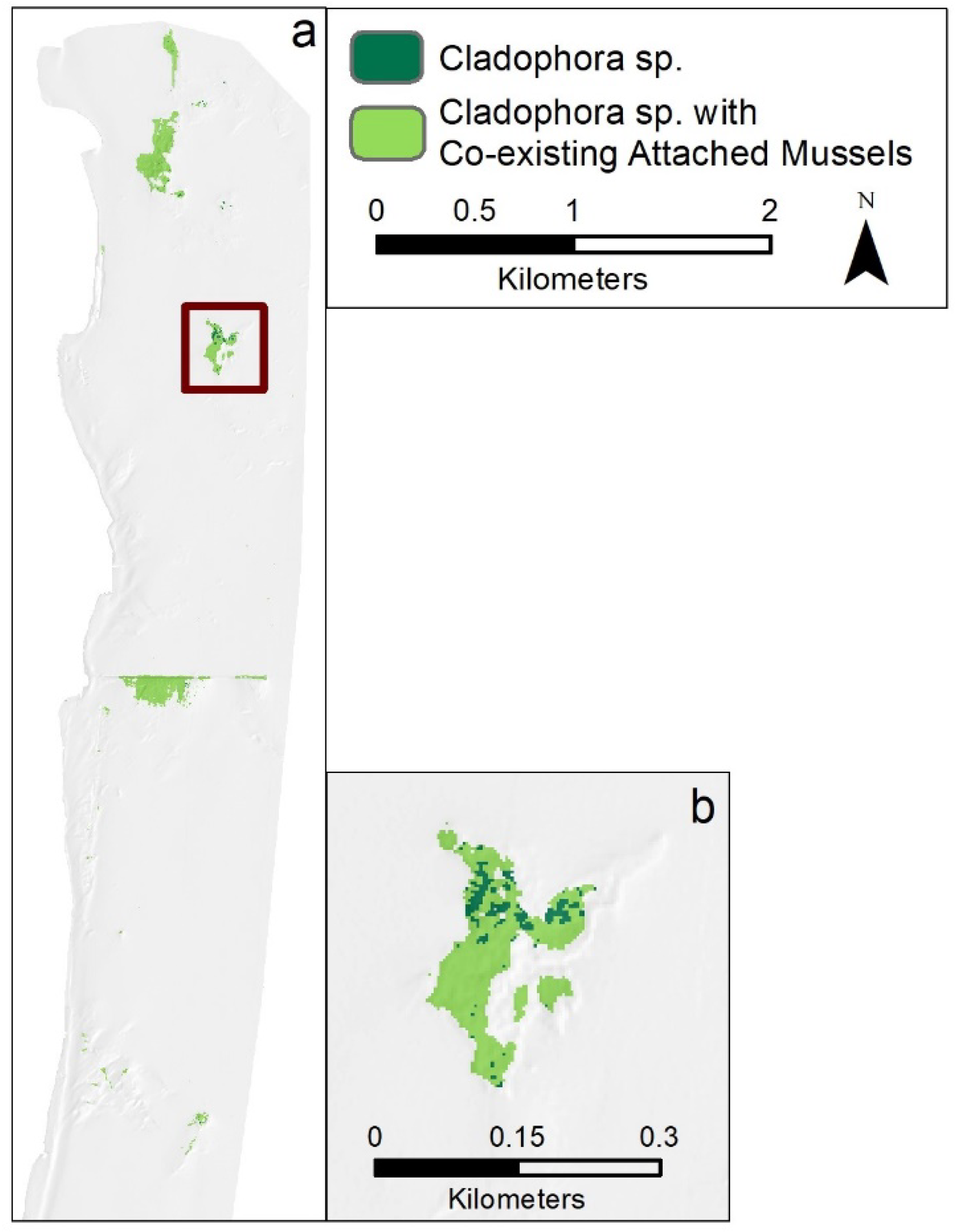

3.1.2. Biotic Component Classification Results

3.2. Component Classification Comparison

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Tier 1 Substrate and Biotic Component Classification Accuracy

| | | | | Coarse Unconsolidated | | | Fine Unconsolidated | | | Anthropogenic | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Index | Data Type | OA% | KC | Area% | PA% | UA% | Area% | PA% | UA% | Area% | PA% | UA% |

| Airborne Data Combinations | ||||||||||||

| 1 | CA MNF FU | 90.81 | 0.54 | 5.32 | 50 | 69.7 | 91.45 | 97.22 | 92.59 | 3.23 | 44.12 | 75 |

| 2 | CA MNF FU + CA MNF WLR | 90.81 | 0.56 | 5.65 | 54.35 | 71.43 | 90.16 | 96.48 | 93.2 | 4.19 | 50 | 65.38 |

| 3 | CA MNF WLR + CA MNF FU | 90.81 | 0.56 | 5.65 | 54.35 | 71.43 | 90.16 | 96.48 | 93.2 | 4.19 | 50 | 65.38 |

| 4 | CA MNF WLR + CA MNF FU + AB DE | 90.65 | 0.55 | 5.65 | 54.35 | 71.43 | 90 | 96.3 | 93.19 | 4.35 | 50 | 62.96 |

| 5 | AB DE + CA MNF FU + CA MNF WLR | 90.65 | 0.55 | 5.65 | 54.35 | 71.43 | 90 | 96.3 | 93.19 | 4.35 | 50 | 62.96 |

| 6 | CA MNF FU + CA MNF WLR + AB RF | 90.32 | 0.50 | 4.52 | 45.65 | 75 | 91.94 | 97.22 | 92.11 | 3.55 | 41.18 | 63.64 |

| 7 | CA MNF WLR + CA MNF FU + AB RF | 90.32 | 0.50 | 4.52 | 45.65 | 75 | 91.94 | 97.22 | 92.11 | 3.55 | 41.18 | 63.64 |

| 8 | CA MNF FU + AB RF + CA MNF WLR | 90.32 | 0.50 | 4.52 | 45.65 | 75 | 91.94 | 97.22 | 92.11 | 3.55 | 41.18 | 63.64 |

| 9 | AB RF + CA MNF FU + CA MNF WLR | 90.16 | 0.48 | 4.19 | 41.3 | 73.08 | 92.74 | 97.59 | 91.65 | 3.06 | 38.24 | 68.42 |

| 10 | AB RF + AB DE + CA MNF FU + CA MNF WLR | 90.16 | 0.48 | 4.19 | 41.3 | 73.08 | 92.74 | 97.59 | 91.65 | 3.06 | 38.24 | 68.42 |

| 11 | CA MNF FU + CA MNF WLR + AB DE + AB RF | 90.00 | 0.49 | 4.68 | 45.65 | 72.41 | 91.61 | 96.85 | 92.08 | 3.71 | 41.18 | 60.87 |

| 12 | CA MNF WLR + CA MNF FU + AB DE + AB RF | 90.00 | 0.49 | 4.68 | 45.65 | 72.41 | 91.61 | 96.85 | 92.08 | 3.71 | 41.18 | 60.87 |

| 13 | AB DE + AB RF + CA MNF FU + CA MNF WLR | 90.00 | 0.49 | 4.68 | 45.65 | 72.41 | 91.61 | 96.85 | 92.08 | 3.71 | 41.18 | 60.87 |

| 14 | CA MNF FU + AB RF + AB DE + CA MNF WLR | 90.00 | 0.49 | 4.68 | 45.65 | 72.41 | 91.61 | 96.85 | 92.08 | 3.71 | 41.18 | 60.87 |

| 15 | CA MNF FU + AB DE + AB RF | 90.00 | 0.49 | 4.84 | 47.83 | 73.33 | 91.61 | 96.85 | 92.08 | 3.55 | 38.24 | 59.09 |

| 16 | CA MNF FU + AB RF | 90.00 | 0.48 | 5 | 47.83 | 70.97 | 92.26 | 97.22 | 91.78 | 2.74 | 32.35 | 64.71 |

| 17 | AB RF + AB DE + CA MNF FU | 89.84 | 0.44 | 4.19 | 41.3 | 73.08 | 93.39 | 97.78 | 91.19 | 2.42 | 29.41 | 66.67 |

| 18 | AB RF + CA MNF FU | 89.68 | 0.43 | 4.19 | 41.3 | 73.08 | 93.55 | 97.78 | 91.03 | 2.23 | 26.47 | 64.29 |

| 19 | AB DE + AB RF | 89.52 | 0.42 | 4.52 | 43.48 | 71.43 | 93.39 | 97.59 | 91.02 | 2.1 | 23.53 | 61.54 |

| 20 | AB RF + AB DE | 89.52 | 0.41 | 3.87 | 41.3 | 79.17 | 94.03 | 97.96 | 90.74 | 2.1 | 20.59 | 53.85 |

| 21 | CA MNF WLR | 88.06 | 0.16 | 0 | 0 | 0 | 98.39 | 99.63 | 88.2 | 1.61 | 23.53 | 80 |

| Airborne Data Combinations with Additional ROIs | ||||||||||||

| 22 | CA MNF FU | 90.65 | 0.45 | 4.19 | 45.65 | 80.77 | 94.84 | 99.07 | 90.99 | 0.97 | 17.65 | 100 |

| 23 | CA MNF WLR + CA MNF FU + AB DE * | 90.65 | 0.44 | 3.87 | 43.48 | 83.33 | 95.16 | 99.26 | 90.85 | 0.97 | 17.65 | 100 |

| 24 | CA MNF FU + CA MNF WLR | 89.52 | 0.41 | 4.52 | 45.65 | 75 | 94.03 | 97.96 | 90.74 | 1.45 | 14.71 | 55.56 |

| Satellite Data Combinations | ||||||||||||

| 25 | S2 MS + S2 DII + S2 SDB | 90.59 | 0.32 | 2.48 | 35.71 | 100 | 97.52 | 100 | 90.36 | n/a | n/a | n/a |

| 26 | S2 MS + S2 SDB + S2 DII | 90.59 | 0.32 | 2.48 | 35.71 | 100 | 97.52 | 100 | 90.36 | n/a | n/a | n/a |

| 27 | S2 DII + S2 SDB + S2 MS | 90.59 | 0.32 | 2.48 | 35.71 | 100 | 97.52 | 100 | 90.36 | n/a | n/a | n/a |

| 28 | S2 MS + S2 DII | 90.10 | 0.27 | 1.98 | 28.57 | 100 | 98.02 | 100 | 89.9 | n/a | n/a | n/a |

| 29 | S2 DII + S2 MS | 90.10 | 0.27 | 1.98 | 28.57 | 100 | 98.02 | 100 | 89.9 | n/a | n/a | n/a |

| 30 | S2 SDB + S2 DII + S2 MS | 90.10 | 0.27 | 1.98 | 28.57 | 100 | 98.02 | 100 | 89.9 | n/a | n/a | n/a |

| 31 | S2 DII + S2 SDB | 90.10 | 0.27 | 1.98 | 28.57 | 100 | 98.02 | 100 | 89.9 | n/a | n/a | n/a |

| 32 | S2 SDB + S2 DII | 90.10 | 0.27 | 1.98 | 28.57 | 100 | 98.02 | 100 | 89.9 | n/a | n/a | n/a |

| 33 | S2 MS | 88.12 | 0 | 0 | 0 | 0 | 100 | 100 | 88.12 | n/a | n/a | n/a |

| 34 | S2 MS + S2 SDB | 88.12 | 0 | 0 | 0 | 0 | 100 | 100 | 88.12 | n/a | n/a | n/a |

| 35 | S2 SDB + S2 MS | 88.12 | 0 | 0 | 0 | 0 | 100 | 100 | 88.12 | n/a | n/a | n/a |

| Satellite Data Combinations with Additional ROIs | ||||||||||||

| 36 | S2 MS + S2 DII + S2 SDB | 90.59 | 0.32 | 2.48 | 35.71 | 100 | 97.52 | 100 | 90.36 | n/a | n/a | n/a |

| | | | | Aquatic Vegetation Bed | | | Biotic Null | | |

|---|---|---|---|---|---|---|---|---|---|

| Index | Data Type | OA% | KC | Area% | PA% | UA% | Area% | PA% | UA% |

| Airborne Data Combinations | |||||||||

| 1 | AB DE + CA MNF FU + CA MNF WLR | 95.2 | 0.56 | 3.52 | 42 | 95.45 | 96.48 | 99.83 | 95.19 |

| 2 | CA MNF FU + CA MNF WLR | 94.72 | 0.50 | 3.04 | 36 | 94.72 | 96.96 | 99.83 | 94.72 |

| 3 | AB RF + AB DE + CA MNF FU + CA MNF WLR | 94.4 | 0.48 | 3.36 | 36 | 85.71 | 96.64 | 99.48 | 94.7 |

| 4 | AB RF + CA MNF FU + CA MNF WLR | 94.4 | 0.48 | 3.36 | 36 | 85.74 | 96.64 | 99.48 | 94.7 |

| 5 | CA MNF WLR + CA MNF FU | 94.08 | 0.39 | 2.08 | 26 | 100 | 97.92 | 100 | 93.95 |

| 6 | CA MNF WLR + CA MNF FU + AB DE | 94.08 | 0.42 | 2.72 | 30 | 88.24 | 97.28 | 99.65 | 94.24 |

| 7 | CA MNF WLR + CA MNF FU + AB DE + AB RF | 93.76 | 0.38 | 2.4 | 26 | 86.67 | 97.6 | 99.65 | 93.93 |

| 8 | CA MNF FU + CA MNF WLR + AB DE + AB RF | 93.76 | 0.38 | 2.4 | 26 | 86.67 | 97.6 | 99.65 | 93.93 |

| 9 | AB DE + AB RF + CA MNF FU + CA MNF WLR | 93.76 | 0.38 | 2.4 | 26 | 86.67 | 97.6 | 99.65 | 93.93 |

| 10 | CA MNF FU + AB RF + AB DE + CA MNF WLR | 93.76 | 0.38 | 2.4 | 26 | 86.67 | 97.6 | 99.65 | 93.93 |

| 11 | CA MNF FU + AB DE + AB RF | 93.76 | 0.38 | 2.4 | 26 | 86.67 | 97.6 | 99.65 | 93.93 |

| 12 | AB DE + AB RF | 93.76 | 0.45 | 4 | 36 | 72 | 96 | 98.78 | 94.67 |

| 13 | CA MNF WLR + CA MNF FU + AB RF | 93.44 | 0.29 | 1.44 | 18 | 100 | 98.56 | 100 | 93.34 |

| 14 | CA MNF FU + CA MNF WLR + AB RF | 93.44 | 0.29 | 1.44 | 18 | 100 | 98.56 | 100 | 93.34 |

| 15 | CA MNF FU + AB RF + CA MNF WLR | 93.44 | 0.29 | 1.44 | 18 | 100 | 98.56 | 100 | 93.34 |

| 16 | CA MNF FU | 92.64 | 0.21 | 1.6 | 14 | 70 | 98.4 | 99.48 | 93.01 |

| 17 | AB RF + AB DE + CA MNF FU | 92.32 | 0.07 | 0.32 | 4 | 100 | 99.68 | 100 | 92.3 |

| 18 | AB RF + AB DE | 92.32 | 0.07 | 0.32 | 4 | 100 | 99.68 | 100 | 92.3 |

| 19 | CA MNF WLR | 92 | 0.00 | 0 | 0 | 0 | 100 | 100 | 92 |

| 20 | CA MNF FU + AB RF | 92 | 0.00 | 0 | 0 | 0 | 100 | 100 | 92 |

| 21 | AB RF + CA MNF FU | 92 | 0.00 | 0 | 0 | 0 | 100 | 100 | 92 |

| Airborne Data Combinations with Additional ROIs | |||||||||

| 22 | AB DE + CA MNF FU + CA MNF WLR * | 94.4 | 0.53 | 4.64 | 44 | 75.86 | 95.36 | 98.78 | 95.3 |

| 23 | AB RF + AB DE + CA MNF FU + CA MNF WLR | 94.24 | 0.50 | 4.16 | 40 | 76.92 | 95.84 | 98.96 | 94.99 |

| 24 | CA MNF FU + CA MNF WLR | 94.24 | 0.52 | 4.8 | 44 | 73.33 | 95.2 | 98.61 | 95.29 |

| Satellite Data Combinations | |||||||||

| 25 | S2 MS + S2 DII + S2 SDB | 93.72 | 0.41 | 2.9 | 29.41 | 83.33 | 97.1 | 99.47 | 94.03 |

| 26 | S2 MS + S2 DII | 93.24 | 0.34 | 2.42 | 23.53 | 80 | 97.58 | 99.47 | 93.56 |

| 27 | S2 DII + S2 MS | 93.24 | 0.34 | 2.42 | 23.53 | 80 | 97.58 | 99.47 | 93.56 |

| 28 | S2 SDB + S2 DII + S2 MS | 93.24 | 0.34 | 2.42 | 23.53 | 80 | 97.58 | 99.47 | 93.56 |

| 29 | S2 DII + S2 SDB + S2 MS | 93.24 | 0.34 | 2.42 | 23.53 | 80 | 97.58 | 99.47 | 93.56 |

| 30 | S2 DII + S2 SDB | 92.75 | 0.26 | 1.93 | 17.65 | 75 | 98.07 | 99.47 | 93.1 |

| 31 | S2 MS + S2 SDB + S2 DII | 92.27 | 0.24 | 2.42 | 17.65 | 60 | 97.58 | 98.95 | 93.07 |

| 32 | S2 SDB + S2 DII | 92.27 | 0.18 | 1.45 | 11.76 | 66.67 | 98.55 | 99.47 | 92.65 |

| 33 | S2 MS | 91.79 | 0 | 0 | 0 | 0 | 100 | 100 | 91.79 |

| 34 | S2 MS + S2 SDB | 91.79 | 0 | 0 | 0 | 0 | 100 | 100 | 91.79 |

| 35 | S2 SDB + S2 MS | 91.79 | 0 | 0 | 0 | 0 | 100 | 100 | 91.79 |
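The accuracy columns above can be reproduced from a confusion matrix. The sketch below assumes that OA% is overall accuracy, KC is Cohen's kappa coefficient, PA% and UA% are producer's and user's accuracy, and Area% is the share of validation samples assigned to each class; these expansions are not spelled out in the table itself. The 2 × 2 matrix is hypothetical: it was inferred from the percentages reported for biotic row 1 (AB DE + CA MNF FU + CA MNF WLR) and the validation sample counts listed for the 3 m data (50 Aquatic Vegetation Bed, 575 Biotic Null), not taken from the authors. The function name `accuracy_metrics` is likewise illustrative.

```python
def accuracy_metrics(matrix):
    """Compute accuracy statistics from a square confusion matrix.

    matrix[i][j] is the number of validation samples whose reference
    class is i and whose mapped (classified) class is j.
    """
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]                      # reference totals
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]  # mapped totals
    diagonal = [matrix[i][i] for i in range(k)]

    observed = sum(diagonal) / n                                   # overall agreement
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    kappa = (observed - expected) / (1 - expected) if expected < 1 else 0.0

    return {
        "OA%": 100 * observed,
        "KC": kappa,
        # Producer's accuracy: correct samples / reference samples per class.
        "PA%": [100 * d / r if r else 0.0 for d, r in zip(diagonal, row_totals)],
        # User's accuracy: correct samples / mapped samples per class.
        "UA%": [100 * d / c if c else 0.0 for d, c in zip(diagonal, col_totals)],
        "Area%": [100 * c / n for c in col_totals],
    }


# Hypothetical matrix inferred from biotic row 1 (an assumption, not source data).
# Rows: reference Aquatic Vegetation Bed, reference Biotic Null.
# Columns: mapped Aquatic Vegetation Bed, mapped Biotic Null.
matrix = [
    [21, 29],
    [1, 574],
]

metrics = accuracy_metrics(matrix)
for name, value in metrics.items():
    print(name, value)
```

Under these assumptions the rounded output agrees with the reported row (OA 95.2, KC 0.56, PA 42 and 99.83, UA 95.45 and 95.19), which suggests the table follows the standard confusion-matrix definitions.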

| CMECS Hierarchy | CMECS Code | CMECS Description | Class Tier 1 Index # | Class Tier 2 Index # | Field Observation |

|---|---|---|---|---|---|

| Substrate Component | |||||

| Substrate Origin | S1 | Geologic Substrate | --- | --- | --- |

| Substrate Class | S1.2 | Geologic Substrate|Unconsolidated Mineral Substrate | 1 | X | Yes |

| Substrate Subclass | S1.2.1 | Geologic Substrate|Unconsolidated Mineral Substrate|Coarse Unconsolidated Substrate | --- | --- | --- |

| Substrate Group | S1.2.1.1 | Geologic Substrate|Unconsolidated Mineral Substrate|Coarse Unconsolidated Substrate|Gravel Substrate | 1 | X | Yes |

| Substrate Group | S1.2.1.1/S2.5.3.3 | Geologic Substrate|Unconsolidated Mineral Substrate|Coarse Unconsolidated Substrate|Gravel Substrate (COE: Mussel Shell Hash) | 1 | X | Yes |

| Substrate Subgroup | S1.2.1.1.1 | Geologic Substrate|Unconsolidated Mineral Substrate|Coarse Unconsolidated Substrate|Gravel Substrate|Boulder Substrate | 1 | X | Yes |

| Substrate Subgroup | S1.2.1.1.2 | Geologic Substrate|Unconsolidated Mineral Substrate|Coarse Unconsolidated Substrate|Gravel Substrate|Cobble Substrate | 1 | X | Yes |

| Substrate Subgroup | S1.2.1.1.2/S2.5.3.3 | Geologic Substrate|Unconsolidated Mineral Substrate|Coarse Unconsolidated Substrate|Gravel Substrate|Cobble Substrate (COE: Mussel Shell Hash) | 1 | X | Yes |

| Substrate Group | S1.2.1.2 | Geologic Substrate|Unconsolidated Mineral Substrate|Coarse Unconsolidated Substrate|Gravel Mixes Substrate | 1 | X | Yes |

| Substrate Subgroup | S1.2.1.3.2 | Geologic Substrate|Unconsolidated Mineral Substrate|Coarse Unconsolidated Substrate|Gravelly Substrate|Gravelly Muddy Sand | 1 | X | Yes |

| Substrate Subgroup | S1.2.1.3.2/S2.5.3.3 | Geologic Substrate|Unconsolidated Mineral Substrate|Coarse Unconsolidated Substrate|Gravelly Substrate|Gravelly Muddy Sand (COE: Mussel Shell Hash) | 1 | X | Yes |

| Substrate Subclass | S1.2.2 | Geologic Substrate|Unconsolidated Mineral Substrate|Fine Unconsolidated Substrate | --- | --- | --- |

| Substrate Group | S1.2.2.1 | Geologic Substrate|Unconsolidated Mineral Substrate|Fine Unconsolidated Substrate|Slightly Gravelly Substrate | --- | --- | --- |

| Substrate Subgroup | S1.2.2.1.1 | Geologic Substrate|Unconsolidated Mineral Substrate|Fine Unconsolidated Substrate|Slightly Gravelly Substrate|Slightly Gravelly Sand | 2 | 1 | Yes |

| Substrate Subgroup | S1.2.2.1.1(SP04) | Geologic Substrate|Unconsolidated Mineral Substrate|Fine Unconsolidated Substrate|Slightly Gravelly Substrate|Slightly Gravelly Sand (Surf Pattern: Ripple Surface) | 2 | 2 | Yes |

| Substrate Subgroup | S1.2.2.1.1(SP07)[P] | Geologic Substrate|Unconsolidated Mineral Substrate|Fine Unconsolidated Substrate|Slightly Gravelly Substrate|Slightly Gravelly Sand (Surf Pattern: Pockmarked Surface Pattern) | 2 | 3 | Yes |

| Substrate Subgroup | S1.2.2.1.1/S2.3.1.2 | Geologic Substrate|Unconsolidated Mineral Substrate|Fine Unconsolidated Substrate|Slightly Gravelly Substrate|Slightly Gravelly Sand (COE: Woody Debris) | 2 | 4 | Yes |

| Substrate Subgroup | S1.2.2.1.1/S2.3.2 | Geologic Substrate|Unconsolidated Mineral Substrate|Fine Unconsolidated Substrate|Slightly Gravelly Substrate|Slightly Gravelly Sand (COE: Organic Detritus; Possible INT: Spotted Surface Color) | 2 | 5 | Yes |

| Substrate Subgroup | S1.2.2.1.1/S2.5.3.3 | Geologic Substrate|Unconsolidated Mineral Substrate|Fine Unconsolidated Substrate|Slightly Gravelly Substrate|Slightly Gravelly Sand (COE: Mussel Shell Hash) | 2 | 6 | Yes |

| Substrate Origin | S3 | Anthropogenic Substrate | --- | --- | --- |

| Substrate Class | S3.1 | Anthropogenic Substrate|Anthropogenic Rock Substrate | --- | --- | --- |

| Substrate Subclass | S3.1.2 | Anthropogenic Substrate|Anthropogenic Rock Substrate|Anthropogenic Rock Rubble Substrate | 3 | X | Yes |

| Substrate Subclass | S3.1.2/S2.3.1.2 | Anthropogenic Substrate|Anthropogenic Rock Substrate|Anthropogenic Rock Rubble Substrate (COE: Woody Debris) | 3 | X | Yes |

| Substrate Subclass | S3.2.1 | Anthropogenic Substrate|Anthropogenic Wood Substrate|Anthropogenic Wood Reef Substrate (INT: Shipwreck SC-419) | 3 | X | Yes |

| Substrate Subclass | S3.2.1/S3.4.2 | Anthropogenic Substrate|Anthropogenic Wood Substrate|Anthropogenic Wood Reef Substrate (COE: Sparse Metal Substrate; INT: Shipwreck Solon H. Johnson) | 3 | X | Yes |

| Substrate Subclass | S3.4.1 | Anthropogenic Substrate|Anthropogenic Metal Substrate|Anthropogenic Metal Reef Substrate | 3 | X | Yes |

| Substrate Subclass | S3.4.1/S3.2.2 | Anthropogenic Substrate|Anthropogenic Metal Substrate|Anthropogenic Metal Reef Substrate (COE: Wood Rubble Substrate) | 3 | X | Yes |

| Biotic Component | |||||

| Biotic Setting | B2 | Benthic/Attached Biota | --- | --- | --- |

| Biotic Class | B2.5 | Benthic/Attached Biota|Aquatic Veg Bed | --- | --- | --- |

| Biotic Subclass | B2.5.1 | Benthic/Attached Biota|Aquatic Veg Bed|Benthic Macroalgae | --- | --- | --- |

| Biotic Group | B2.5.1.4 | Benthic/Attached Biota|Aquatic Veg Bed|Benthic Macroalgae|Filamentous Algal Bed | --- | --- | --- |

| Biotic Community | B2.5.1.4.4 | Benthic/Attached Biota|Aquatic Veg Bed|Benthic Macroalgae|Filamentous Algal Bed|Cladophora sp. | 1 | 1 | Yes |

| Biotic Community | B2.5.1.4.4/B2.2.2.21.3[P] | Benthic/Attached Biota|Aquatic Veg Bed|Benthic Macroalgae|Filamentous Algal Bed|Cladophora sp. (COE: Attached Mussels; PCT cover 9–11) | 1 | 2 | Yes |

| Biotic Null | --- | No Biota Observed | 2 | X | Yes |

| Short Name (Index #) | # of Classification Samples (3 m) | # of Validation Samples (3 m) | # of Classification Samples (10 m) | # of Validation Samples (10 m) |

|---|---|---|---|---|

| Substrate Component | ||||

| Coarse Unconsolidated (1) | 52 | 46 | 19 | 14 |

| Fine Unconsolidated (2) | 540 | 540 | 182 | 178 |

| Anthropogenic (3) | 38 | 34 | 13 | 10 |

| TOTAL | 630 | 620 | 214 | 202 |

| Biotic Component | ||||

| Aquatic Vegetation Bed (1) | 50 | 50 | 19 | 17 |

| Biotic Null (2) | 575 | 575 | 190 | 190 |

| TOTAL | 625 | 625 | 209 | 207 |
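The validation counts above are strongly imbalanced, which matters when reading overall accuracy. As a rough illustration, the sketch below computes the overall accuracy a trivial classifier would reach by assigning every validation sample to the most common class. The counts are copied from the table; the dictionary layout and the function name `majority_baseline` are assumptions made for this example.

```python
# Validation sample counts copied from the table above,
# keyed by (component, pixel size).
validation_counts = {
    ("substrate", "3 m"): {
        "Coarse Unconsolidated": 46,
        "Fine Unconsolidated": 540,
        "Anthropogenic": 34,
    },
    ("substrate", "10 m"): {
        "Coarse Unconsolidated": 14,
        "Fine Unconsolidated": 178,
        "Anthropogenic": 10,
    },
    ("biotic", "3 m"): {"Aquatic Vegetation Bed": 50, "Biotic Null": 575},
    ("biotic", "10 m"): {"Aquatic Vegetation Bed": 17, "Biotic Null": 190},
}


def majority_baseline(counts):
    """Return (majority class, OA% when every sample is assigned to it)."""
    label = max(counts, key=counts.get)
    total = sum(counts.values())
    return label, 100 * counts[label] / total


baselines = {key: majority_baseline(c) for key, c in validation_counts.items()}

for (component, size), (label, oa) in baselines.items():
    print(f"{component:9s} {size:4s} {label:20s} {oa:.2f}")
```

The 10 m substrate, 3 m biotic, and 10 m biotic baselines (88.12, 92.00, and 91.79) coincide with the Appendix A rows that report a KC of 0, so an overall accuracy near 90% is best read alongside the kappa and per-class accuracies.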

| Short Name (Index #) | # of Classification Samples (3 m) | # of Validation Samples (3 m) |

|---|---|---|

| Fine Unconsolidated (Substrate Component) | ||

| Slightly Gravelly Sand (1) | 501 | 502 |

| Slightly Gravelly Sand/Surf Pattern: Ripple Surface (2) | 3 | 4 |

| Slightly Gravelly Sand/Surf Pattern: Pockmarked Surface Pattern (3) | 17 | 16 |

| Slightly Gravelly Sand/COE: Woody Debris (4) | 1 | 0 |

| Slightly Gravelly Sand/COE: Organic Detritus; Possible INT: Spotted Surface Color (5) | 10 | 12 |

| Slightly Gravelly Sand/COE: Mussel Shell Hash (6) | 2 | 2 |

| TOTAL | 534 | 536 |

| Aquatic Vegetation Bed (Biotic Component) | ||

| Cladophora sp. (1) | 3 | 5 |

| Cladophora sp./COE: Attached Mussels (2) | 15 | 17 |

| TOTAL | 18 | 22 |

| Classification Inputs | Collection Date | Spatial Resolution (m) |

|---|---|---|

| Satellite (S2) | ||

| S2 Multispectral (MS) [Bands 2–5, 8] | 10 July 2018 | 10 |

| Depth Invariant Index (DII) | 10 July 2018 | 10 |

| Satellite-Derived Bathymetry (SDB) | 10 July 2018 | 10 |

| Airborne (AB) | ||

| CASI (CA) Minimum Noise Fraction (MNF) Fusion (FU) [Bands 1–10] | 17 July 2018 | 3 |

| CA MNF Water-Leaving Reflectance (WLR) [Bands 1–23] | 17 July 2018 | 3 |

| AB Lidar Bathymetry [Depth] (DE) | 17 July 2018 | 3 |

| AB Lidar Relative Bathymetric Reflectance (RF) | 17 July 2018 | 3 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Reif, M.K.; Krumwiede, B.S.; Brown, S.E.; Theuerkauf, E.J.; Harwood, J.H. Nearshore Benthic Mapping in the Great Lakes: A Multi-Agency Data Integration Approach in Southwest Lake Michigan. Remote Sens. 2021, 13, 3026. https://doi.org/10.3390/rs13153026
