Abstract

Sparse imaging relies on sparse representations of the target scenes to be imaged. Predefined dictionaries have long been used to transform radar target scenes into sparse domains, but their performance is limited because artificially designed or existing transforms, e.g., the Fourier transform and the wavelet transform, are not optimal for the target scenes to be sparsified. The dictionary learning (DL) technique has been exploited to obtain sparse transforms optimized jointly with the radar imaging problem. Nevertheless, DL is usually implemented patch by patch, which ignores the relationship between patches and causes some feature information to be lost when the sparse transforms are learned. To capture the feature information of the target scenes more accurately, we adopt the image patch group (IPG) instead of the individual patch as the unit of DL. Each IPG is constructed from patches with similar structures. DL is performed with respect to each IPG, which is termed group dictionary learning (GDL). The group-oriented sparse representation (GOSR) and the target image reconstruction are then jointly optimized by solving a norm minimization problem that exploits the GOSR, during which a generalized Gaussian distribution hypothesis on the radar image reconstruction error is introduced to make the imaging problem tractable. Imaging results on real ISAR data show that the GDL-based imaging method outperforms the original DL-based imaging method in both imaging quality and computational speed.

1. Introduction

Inverse synthetic aperture radar (ISAR) can obtain high-resolution images of moving targets in all weather conditions, day and night. It is an important tool for target surveillance and recognition in non-cooperative scenarios [1]. Traditionally, ISAR imaging uses range-Doppler (RD) type methods. Under the assumption of a small rotational angle, cross-range imaging is achieved by the fast Fourier transform (FFT). If the targets undergo complex motion, the imaging time needs to be selected or the high-order motion needs to be compensated. The imaging results of this type of method usually suffer from sidelobe interference.

Sparsity-driven radar imaging methods have shown that incorporating sparsity as prior information in the radar image formation process can overcome the shortcomings of RD-type methods. These sparsity-driven imaging methods [2,3,4,5,6,7,8,9] assume that the target scene admits sparsity in a particular domain. In particular, regularization-based image formation models focus on enhancing point-based and region-based [2,3,4,5,6] image features by imposing sparsity on features of the target scene, whereas sparse transformation-based image formation models [7,8,9] represent the reflectivity fields sparsely with dictionaries by imposing sparsity on the representation coefficients over the dictionaries. Both models have been shown to offer better image reconstruction quality than traditional RD imaging methods. However, these ways of sparsifying the target scene only depict predefined image features and are not adaptive to the unknown target scenes; their performance is, therefore, limited.

In contrast to the dictionaries constructed from fixed image transformations in sparse transformation-based image formation models, the dictionaries obtained by the dictionary learning (DL) technique [10,11,12,13] are generated with prior information of the unknown target image. Thus, the learned dictionaries are adaptive to the target images to be reconstructed and can find better-adapted sparse representation coefficients [14,15]. Nevertheless, processing each target scene patch independently during the DL and sparse coding stages neglects the important feature information between patches, such as the self-similarity information, which has been shown to be very effective for preserving image details [16,17,18,19,20] during the image formation process. Moreover, both the DL and sparse coding stages rely on relatively expensive nonlinear estimations, e.g., orthogonal matching pursuit (OMP). These two deficiencies limit the improvement of the reconstruction quality and of the efficiency of DL-based ISAR sparse imaging, respectively.

In order to exploit the self-similarity information between patches and recover more details of the target image, we adopt the image patch group (IPG) instead of the independent patch as the unit in the DL and sparse coding stages. Each IPG is constructed from patches with a similar structure. A singular value decomposition (SVD) based DL method is performed with respect to each IPG, which is termed group dictionary learning (GDL). The group-oriented sparse representation (GOSR) and the target image reconstruction are then jointly optimized by solving a norm minimization problem that exploits the GOSR, during which a generalized Gaussian distribution hypothesis [21] on the radar image reconstruction error is employed to make the imaging problem tractable. The initial idea of our work for ISAR imaging using GDL was presented in the conference paper [22].

Compared with the existing ISAR sparse imaging methods, the innovations of the proposed imaging method are as follows: (1) The IPGs, instead of independent patches, are used as the units in the DL and sparse coding stages. The GOSRs characterize the local sparsity of the target image and the self-similarity information between patches simultaneously. (2) A GDL method with low complexity is designed. The GDL is performed with respect to each IPG rather than the whole target image, using a simple SVD. (3) An iterative algorithm combined with a soft thresholding function is developed to solve the GOSR-based norm minimization problem for sparse imaging of the target.

Real ISAR data are used to demonstrate the performance of the proposed GDL-based sparse imaging method. Comparisons are made with the greedy Kalman filtering (GKF) based sparse imaging method [9] and with the on-line DL and off-line DL based sparse imaging methods [15].

The rest of this paper is organized as follows: Section 2 briefly presents the ISAR measurement model and the sparse imaging model. Section 3 presents the DL-based ISAR sparse imaging methods. Section 4 elaborates the GDL-based ISAR sparse imaging method in detail. Section 5 shows the real ISAR data imaging results and the performance analyses of our imaging method. Section 6 draws the conclusions.

2. Imaging Model

2.1. Model of ISAR Measurements

We consider an ISAR imaging geometry, including a moving radar platform and a target with both translational motion and rotational motion, in an image projection plane (IPP). The radar first transmits a linear frequency modulated (LFM) pulsed waveform . Here, t represents the fast time, represents the pulse width, is the frequency modulation rate, is the carrier frequency of the transmitted waveform and is the rectangular function. The received signal from the target scene is then mixed with a reference chirp. After performing demodulation, range compression and higher-order motion compensation [23] on the de-chirped signal, the ISAR image formation can be formulated as a 2D inverse Fourier transform (FT) [9] as follows:

where is the range frequency with B denoting the bandwidth of the transmitted waveform, and , denotes the effective rotational vector of the target [24], denotes the local coordinate system centered at on the target, the is the spectrum of the amplitude modulation due to the azimuth antenna beam pattern, and is the reflectivity distribution of the ISAR target scene to be reconstructed. The is the ISAR measurement after motion compensation and range compression.

2.2. Sparse Imaging Model

Let be the vector of the reflectivity function and be the vector of ISAR measurements for discrete samples of fast-time and slow-time domain. The relationship between the ISAR measurements and the reflectivity function to be reconstructed can be modeled in terms of a linear system of equations in a matrix form [9] as follows:

where is the observation matrix of ISAR imaging. Specifically, the is a Fourier matrix formed by , where denotes the 1D Fourier transform matrix applied to the column dimension of , and denotes the 1D Fourier transform matrix applied to the row dimension of . is the noise vector embedded in the ISAR measurements. We assume that the number of samples in the range and cross-range dimensions are and , respectively. and are both vectors with the dimension of , and is a square matrix.
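As an illustration of the matrix model above, the following is a minimal sketch, assuming that the observation matrix is the Kronecker product of two unnormalized 1D DFT matrices and that the scene is vectorized column by column. The names `dft_matrix`, `observation_matrix`, `scene` and `big_f`, and the small 4 x 6 scene size, are hypothetical choices made for this example and are not the paper's notation.

```python
import numpy as np

def dft_matrix(n):
    """Unnormalized n-by-n DFT matrix (equals np.fft.fft applied to the identity)."""
    return np.fft.fft(np.eye(n))

def observation_matrix(n_range, n_cross):
    """Assumed form of the full 2D Fourier observation matrix for a column-major vectorized scene."""
    f_col = dft_matrix(n_range)   # transforms along the column dimension (axis 0)
    f_row = dft_matrix(n_cross)   # transforms along the row dimension (axis 1)
    return np.kron(f_row, f_col)  # vec(F_col X F_row) = (F_row kron F_col) vec(X)

rng = np.random.default_rng(0)
scene = rng.standard_normal((4, 6)) + 1j * rng.standard_normal((4, 6))
big_f = observation_matrix(4, 6)

# Noise-free measurements from the matrix model and from a direct 2D FFT.
y_vec = big_f @ scene.flatten(order="F")
y_ref = np.fft.fft2(scene).flatten(order="F")
max_dev = np.max(np.abs(y_vec - y_ref))
```

Under these assumptions `max_dev` is at the level of floating-point round-off, which confirms that the square matrix and the 2D FFT describe the same linear operator. In practice the matrix is never formed explicitly for realistic image sizes; the FFT is applied instead.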

The is naturally sparse, considering that the background of an ISAR image usually has relatively low reflectivity and the target to be imaged is composed of a number of relatively strong scatterers. The target image can be reconstructed with a number of measurements smaller than in the theoretical framework of compressed sensing (CS), based on the following under-determined linear system of equations:

where is a randomly under-sampled measurement vector, with is the measurement matrix, which is a partial Fourier matrix obtained by , where denotes the sensing matrix, is the noise vector corresponding to the under-sampled measurements .
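The under-sampled model can be sketched in the same way. The example below is only an illustration: it assumes that the sensing matrix selects individual rows of the full 2D Fourier matrix at random, which is one possible reading of the random under-sampling described here. The helper name `partial_fourier` and its `ratio` and `seed` parameters are hypothetical.

```python
import numpy as np

def partial_fourier(n_range, n_cross, ratio, seed=0):
    """Keep a random subset of rows of the full 2D Fourier matrix (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    n_total = n_range * n_cross
    n_keep = int(round(ratio * n_total))
    # Row indices retained by the assumed row-selection sensing matrix.
    keep = np.sort(rng.choice(n_total, size=n_keep, replace=False))
    full = np.kron(np.fft.fft(np.eye(n_cross)), np.fft.fft(np.eye(n_range)))
    return full[keep, :], keep

a_mat, kept_rows = partial_fourier(8, 8, ratio=0.25)

# A scene with a single scatterer; the noise-free under-sampled data are a subset of the full data.
scene = np.zeros((8, 8))
scene[2, 5] = 1.0
y_sub = a_mat @ scene.flatten(order="F")
y_full = np.fft.fft2(scene).flatten(order="F")
```

Here `a_mat` has 16 rows and 64 columns, so the system is under-determined and a sparsity prior is needed to recover the scene.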

The imaging problem in Equation (3) can be formulated as a spatial-domain sparsity-constrained norm minimization model as follows:

The sparse representations in the transform domains capture certain features (point-based or region-based image features) of the target of interest [7,9], thereby enhancing the imaging quality of the target scene . Let be a dictionary, which sparsely represents the as follows:

where the vector is the sparse representation of in the domain spanned by . Thus, the image reconstruction in Equation (4) is performed by first obtaining the sparse representation as follows:

and then forming the target image by the following:

However, these dictionaries were artificially designed using the fixed image transformations and cannot be adaptive to the unknown target scene to find optimal sparse representations.

3. DL-Based Sparse Imaging

The main idea of DL-based ISAR sparse imaging is to utilize an adaptive dictionary to sparsely represent the unknown target scenes [15]. The adaptive dictionary can be learned off-line from the previously available ISAR data or on-line from the current data to be processed; the atoms in the adaptive dictionary are generated with prior information of the unknown target scene rather than the fixed image transformations.

3.1. Off-Line DL Based Sparse Imaging

A block processing strategy is adopted for reducing the size of training images to improve the efficiency of DL. Extracting the patches from a training image can be simply expressed as follows:

where denotes vectorized training image, denotes the vectorized patch extracted from , denotes the operator of patch extraction and is the index of the patch.
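The patch extraction operator can be sketched as follows. This is a minimal illustration, assuming square patches taken on a regular grid with a fixed stride; the function names and the `size` and `stride` parameters are hypothetical and do not come from the paper.

```python
import numpy as np

def extract_patch(image, top, left, size):
    """Return the size-by-size patch with top-left corner (top, left) as a vector."""
    return image[top:top + size, left:left + size].reshape(-1)

def extract_all_patches(image, size, stride):
    """Collect overlapping patches as the columns of a (size*size, count) matrix."""
    rows, cols = image.shape
    patches = [extract_patch(image, r, c, size)
               for r in range(0, rows - size + 1, stride)
               for c in range(0, cols - size + 1, stride)]
    return np.stack(patches, axis=1)

image = np.arange(36, dtype=float).reshape(6, 6)
patch_matrix = extract_all_patches(image, size=4, stride=2)
```

For the 6 x 6 test image, a patch size of 4 and a stride of 2 give four overlapping patches of 16 pixels each, stored column by column.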

Given a set of patches , the patch-based DL can be modeled as a norm minimization problem [15] as follows:

where is the patch based dictionary to be learned, is the sparse representation of over the , and is the required sparsity level for each patch.

The K-SVD algorithm is used to optimize and alternately, leading to the optimal . Then, the , containing the prior information of the unknown target image, is applied to the following joint optimization problem for reconstructing the target image:

where is the patch to be reconstructed, is the sparse representation of over , and is the regularization parameter that balances the measurement fidelity and the sparse representation.

An iterative strategy is utilized to minimize Equation (10). In each iteration, the is obtained with OMP and the is reconstructed by ; the target image is estimated by applying the conjugate gradient algorithm to the set .
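The sparse coding step relies on OMP. The following is a minimal textbook sketch of OMP for a single patch, not the authors' implementation; the function name `omp` and the orthonormal test dictionary are assumptions chosen so that exact recovery is guaranteed in the example.

```python
import numpy as np

def omp(dictionary, signal, sparsity):
    """Greedy OMP: select the atom most correlated with the residual, then refit by least squares."""
    residual = signal.copy()
    support = []
    solution = np.zeros(0, dtype=dictionary.dtype)
    coeffs = np.zeros(dictionary.shape[1], dtype=dictionary.dtype)
    for _ in range(sparsity):
        correlations = np.abs(dictionary.conj().T @ residual)
        correlations[support] = 0.0            # never reselect an atom
        support.append(int(np.argmax(correlations)))
        sub = dictionary[:, support]
        # Least-squares fit of the signal on all atoms selected so far.
        solution, *_ = np.linalg.lstsq(sub, signal, rcond=None)
        residual = signal - sub @ solution
    coeffs[support] = solution
    return coeffs

rng = np.random.default_rng(3)
basis, _ = np.linalg.qr(rng.standard_normal((16, 16)))  # orthonormal test dictionary
truth = np.zeros(16)
truth[[2, 7, 11]] = [1.5, -2.0, 0.7]
recovered = omp(basis, basis @ truth, sparsity=3)
```

Each OMP iteration requires a correlation step and a least-squares solve, and this is repeated for every patch, which is the source of the computational cost of patch-based sparse coding.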

3.2. On-Line DL-Based Sparse Imaging

On-line DL-based sparse imaging models the DL, sparse coding and image reconstruction as a joint optimization problem [15] as follows:

An alternating iteration procedure is adopted to solve Equation (11). and are alternately solved with K-SVD, and is also reconstructed by implementing the conjugate gradient algorithm on set during each iteration.

The dictionaries offered by both the off-line DL method and the on-line DL method are able to find better sparse representations of the target image than the fixed image transformations [15]. However, the K-SVD used for the DL inevitably has high computational complexity. In addition, Equations (9)–(11) show that each patch is considered independently in the process of DL and sparse coding, which neglects the important feature information between similar patches, such as the self-similarity information.

4. GDL-Based Sparse Imaging

In order to rectify the above problems of DL-based sparse imaging, we adopt the IPG instead of an independent patch as the unit for DL and sparse coding, with the aim of exploiting the local sparsity of the target image and the self-similarity information between patches simultaneously. Each IPG is composed of patches with similar structures and is represented in matrix form. An efficient SVD-based DL method is performed with respect to each IPG to obtain the corresponding dictionary.

4.1. Construction of Image Patch Group

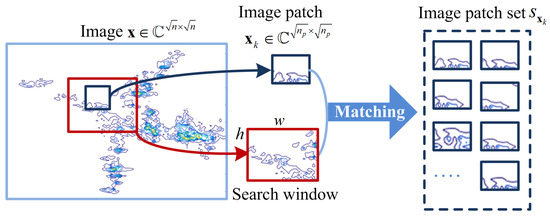

Given a vectorized image , the size of equals that of , i.e., . To explain the construction of the IPG clearly, the vectorized form of the needs to be converted to the matrix form with the size of as shown in Figure 1.

Figure 1.

Extract each patch (as shown by the dark blue square) from image and for each , search its l most similar patches in the search window (as shown by the red square) to compose the image patch set .

The image is divided into N overlapped patches . For each patch , denoted by the dark blue square in Figure 1, we search its l best matched patches in the search window (red square) to compose the image patch set . Here, the similarity between patches is measured using a chosen similarity criterion.

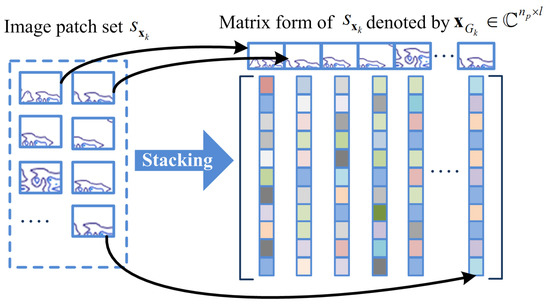

Next, all the patches in are stacked into a matrix of size , represented by , which contains every patch in as its columns, as shown in Figure 2. The matrix , including all patches with similar structures, is named an IPG. For simplicity, we define the construction of the IPG as follows:

where denotes the operator that extracts the IPG from , and its transpose, denoted by , can put the IPG back into its original position in the reconstructed image, padded with zeros elsewhere.

Figure 2.

Reshape each patch in to a vector, and stack all vectors in matrix form to construct the image patch group .
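The construction of one IPG can be sketched as follows. This is an illustrative block-matching example, assuming normalized cross-correlation as the similarity criterion (the criterion the paper selects in Section 4.2) and an exhaustive search inside a square window. The function name `build_group` and the parameters `size`, `window` and `n_similar` are hypothetical.

```python
import numpy as np

def build_group(image, top, left, size, window, n_similar):
    """Stack the n_similar patches most similar to the reference patch as matrix columns."""
    ref = image[top:top + size, left:left + size].reshape(-1)
    rows, cols = image.shape
    candidates, scores, positions = [], [], []
    # Exhaustive search over all patch positions inside the search window.
    for r in range(max(0, top - window), min(rows - size, top + window) + 1):
        for c in range(max(0, left - window), min(cols - size, left + window) + 1):
            patch = image[r:r + size, c:c + size].reshape(-1)
            denom = np.linalg.norm(ref) * np.linalg.norm(patch) + 1e-12
            scores.append(np.abs(np.vdot(ref, patch)) / denom)  # normalized cross-correlation
            candidates.append(patch)
            positions.append((r, c))
    order = np.argsort(scores)[::-1][:n_similar]               # highest similarity first
    group = np.stack([candidates[i] for i in order], axis=1)
    return group, [positions[i] for i in order]

rng = np.random.default_rng(4)
image = rng.standard_normal((12, 12))
group, locations = build_group(image, top=4, left=4, size=3, window=3, n_similar=5)
```

The returned matrix has one column per matched patch, and the reference patch itself is always the first column because its correlation with itself is maximal. The stored positions are what the transpose of the extraction operator needs to put the group back into the image.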

By averaging all the IPGs, the reconstruction of the whole image from set becomes the following:

where denotes the element-wise division and is a matrix of size with all the elements being 1.
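The averaging step can be sketched as follows: every patch of every IPG is added back at its original position, a second array counts how many patches cover each pixel, and the two are divided element by element. The function name `aggregate` and its argument layout are assumptions made for this example.

```python
import numpy as np

def aggregate(groups, positions, size, shape):
    """Put every patch of every group back in place and average the overlaps."""
    accum = np.zeros(shape, dtype=complex)
    counts = np.zeros(shape)
    for group, locs in zip(groups, positions):
        for column, (r, c) in zip(group.T, locs):
            accum[r:r + size, c:c + size] += column.reshape(size, size)
            counts[r:r + size, c:c + size] += 1.0   # same as adding an all-ones patch
    counts[counts == 0] = 1.0                       # pixels never covered stay zero
    return accum / counts                           # element-wise division

# Sanity check: groups that each hold one exact patch reproduce the image.
image = np.arange(36, dtype=float).reshape(6, 6)
locs = [(r, c) for r in range(4) for c in range(4)]
groups = [image[r:r + 3, c:c + 3].reshape(-1, 1) for r, c in locs]
restored = aggregate(groups, [[p] for p in locs], size=3, shape=image.shape)
```

When the groups contain unmodified patches, the average returns the original image, so any change in the output comes only from the processing applied to the groups.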

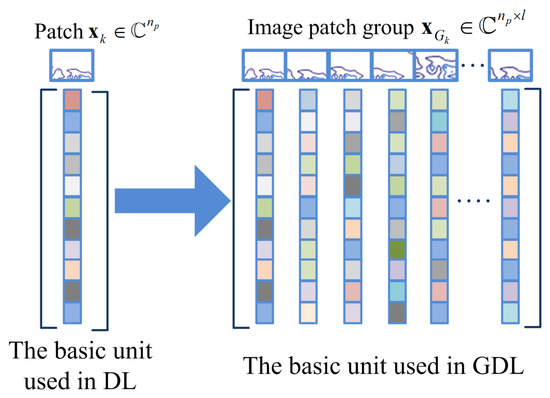

Note that in our work, each patch is represented as a vector, and each IPG is represented as a matrix as shown in Figure 3. According to the above definition, each patch corresponds to an IPG . The construction of also explicitly exploits the self-similarity information between patches.

Figure 3.

The comparison between image patch and image patch group .

4.2. ISAR Image Patch Group Based Imaging Model

Let be an initial ISAR target image obtained by directly implementing the 2D FFT on the measurements , represented by the following:

The quality of the initial target image is very poor as expected since the pulses cannot be coherently integrated. The purpose of GDL-based imaging is to completely reconstruct the high quality target image from .
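A minimal sketch of how such an initial image might be formed is given below. It assumes that the missing samples are zero-filled and that the inverse 2D FFT is used to match a forward model written with `np.fft.fft2`; the direction of the transform is only a convention. The function name `initial_image` and the single-scatterer test scene are hypothetical.

```python
import numpy as np

def initial_image(y_sub, keep, shape):
    """Zero-fill the missing samples and apply the inverse 2D FFT."""
    spectrum = np.zeros(shape[0] * shape[1], dtype=complex)
    spectrum[keep] = y_sub
    return np.fft.ifft2(spectrum.reshape(shape, order="F"))

rng = np.random.default_rng(1)
scene = np.zeros((16, 16), dtype=complex)
scene[4, 7] = 1.0                                    # one strong scatterer
full = np.fft.fft2(scene).flatten(order="F")
keep = np.sort(rng.choice(full.size, size=full.size // 2, replace=False))

x0 = initial_image(full[keep], keep, scene.shape)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(np.abs(x0)), x0.shape))
peak_value = float(np.abs(x0[4, 7]))
background = float(np.abs(x0).sum() - peak_value)    # energy leaked away from the scatterer
```

With half of the samples kept, the scatterer still appears at the correct pixel, but its amplitude drops to the sampling ratio and the remaining pixels are no longer zero, which is the kind of degradation the GDL-based reconstruction is meant to remove.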

According to the method provided in Section 4.1, we construct the ISAR IPG set using the , and the size of each is . During the construction of each IPG, the cross-correlation is selected as the criterion to measure the similarity between patches. Thus, reconstructing the from can be modeled as follows:

4.3. Group Dictionary Learning Based Sparse Imaging

To enforce the local sparsity and the self-similarity of target image simultaneously in a unified framework, we suppose that the can be sparsely represented over a group dictionary . Here, is assumed to be known. Note that each atom is a matrix of the same size as the IPG , and m is the number of atoms in . Different from the dictionary in patch-based DL, here, is of size , that is, . How to learn with high efficiency is given in detail in the next subsection.

Similar to the notation of the sparse coding process in patch-based DL, the sparse coding process of each IPG over is to seek a sparse representation such that ; we refer to the as the GOSR. Thus, the target image reconstruction model in Equation (15) can be rewritten as follows:

Only measurement and IPG set are available. However, we need to obtain the optimal group dictionaries and corresponding GOSRs . Similar to the joint optimization model in on-line DL-based sparse imaging described in Section 3, we reform the reconstruction model in Equation (16) as a joint optimization model as follows:

where is the sparsity level of each group. The weight in our formulation is a positive constant that balances the measurement fidelity and the GOSRs. The first term in Equation (17) captures the quality of the sparse approximations of with respect to the group dictionaries , and the second term measures the measurement fidelity.

Our formulation is, thus, capable of designing an adaptive group dictionary for each IPG, and also using the group dictionary to reconstruct the current IPG. In addition, the model in Equation (17) can typically avoid artifacts seen in the initial image obtained in Section 4.2. All of the above are done, using only the under-sampled measurements and set .

In our work, we adopt an alternating iteration strategy to minimize the joint optimization problem in Equation (17) and solve for the , the and the . Each iteration includes N cycles, and each cycle involves two steps: learning as well as jointly optimizing the and . In the first step, is obtained by GDL, while the corresponding and are fixed. In the second step, the learned is fixed, and are estimated by solving a norm minimization problem. The details of these two steps are given in the following subsections.

4.4. Group Dictionary Learning

In this subsection, we show how to learn the group dictionary for each IPG . Note that, on one hand, we hope that each can be represented by the corresponding faithfully. On the other hand, we hope that the sparse representation coefficient of over the is as sparse as possible. According to the patch-based DL method presented in Section 3, the GDL can be intuitively modeled as follows:

Note that the form of the group dictionary is very complex. Learning it with an iterative method such as K-SVD would be time-consuming. Therefore, we do not directly utilize Equation (18) to learn the group dictionary for each IPG.

In order to obtain the group dictionary with high efficiency, in this paper, the SVD-based GDL method is directly performed on each . Thus, the can be decomposed into the sum of a series of weighted rank-one matrices as follows:

where is the singular value set, denotes the singular value vector with the values in set as its elements. The left singular vector and the right singular vector are the columns of unitary matrices and , respectively. H represents the Hermitian transpose operation.

Each atom in for is defined as follows:

where the .

Therefore, the ultimate adaptively learned group dictionary for is defined as follows:

Based on the definitions above, we can obtain . From Equations (19)–(21), we can see that the SVD-based GDL method guarantees that all the patches in an IPG use the same group dictionary and share the same dictionary atoms. In addition, the proposed GDL is self-adaptive to each IPG and is quite efficient, requiring only one SVD for each IPG.
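The SVD-based construction can be checked numerically. The sketch below assumes, following the description above, that each atom is the rank-one outer product of a left singular vector and the Hermitian transpose of the matching right singular vector, and that the singular values act as the representation coefficients. The name `group_dictionary` and the random test group are hypothetical.

```python
import numpy as np

def group_dictionary(group):
    """Return the rank-one atoms u_i v_i^H and the singular values of one IPG."""
    u, s, vh = np.linalg.svd(group, full_matrices=False)
    # Rows of vh are already the Hermitian-transposed right singular vectors.
    atoms = [np.outer(u[:, i], vh[i, :]) for i in range(s.size)]
    return atoms, s

rng = np.random.default_rng(2)
group = rng.standard_normal((16, 6))       # 16-pixel patches, 6 patches in the group
atoms, sigma = group_dictionary(group)

# The IPG is the singular-value-weighted sum of its atoms.
rebuilt = sum(weight * atom for weight, atom in zip(sigma, atoms))
```

Each atom has the same size as the IPG, the number of atoms equals the smaller dimension of the IPG, and a single SVD yields both the dictionary and the coefficients, with no iterative dictionary update.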

4.5. Group Sparse Representation and Target Image Reconstruction

According to the second term in Equation (17), the joint optimization problem of GOSRs and the target image can be formulated as follows:

By multiplying the for and , Equation (22) becomes the following:

where denotes the target image to be reconstructed and is the initial image of defined in Section 4.2.

Equation (23) can be rewritten in a regularized form by introducing a regularization parameter :

where the parameter controls the trade-off between the first and second terms in Equation (24).

Equation (24) can be minimized iteratively by greedy pursuit or convex optimization. In each iteration, the is used to reconstruct the , and the reconstructed is regarded as a new in the next iteration. Since the form of the is too complicated, exactly reconstructing from in this iterative manner is very hard.

In order to reduce the difficulty of minimizing Equation (24), we perform some experiments to investigate the statistics of the error between the initial images and corresponding reconstruction results in each iteration, where t is the index of iteration. Since the initial images and the exact reconstruction results are not available, we use the poor-quality images obtained by the RD method with different under-sampled measurements to approximate the initial images and reconstructed results. Concretely, the images reconstructed with 25%, 30% and 35% measurements are regarded as the approximated initial images in the 1st, 2nd and 3rd iterations, and the images obtained with 30%, 35% and 40% measurements are regarded as the reconstruction results in the 1st, 2nd and 3rd iterations.

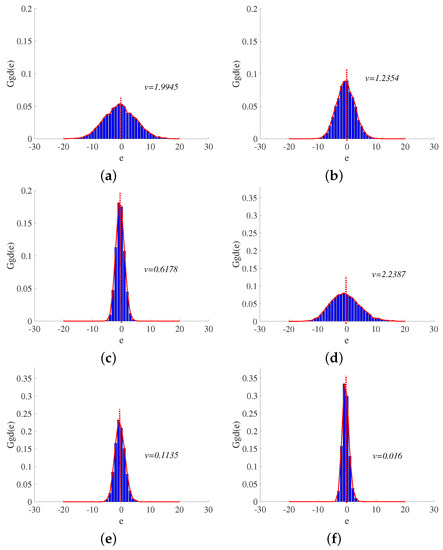

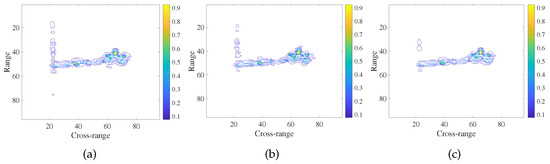

We use the real plane data and ship data as examples. By applying the approximation described above to the motion-compensated real plane data, we can calculate the reconstruction errors in the first three iterations, i.e., . Then, we can draw the probability density histograms for , and , as shown in Figure 4a–c, respectively. In Figure 4a, the horizontal axis denotes the range of the pixel values in the error matrix , and the vertical axis denotes the ratio of the number of pixel values in each range to the total number of pixels. From Figure 4a, we can observe that the probability density histogram of can be well characterized by a generalized Gaussian distribution (the probability density function of the generalized Gaussian distribution is given in https://sccn.ucsd.edu/wiki/Generalized_Gaussian_Probability_Density_Function, accessed on 22 November 2020) where the mean is zero and the variance is . The is estimated by the following:

where n is the number of total pixels.
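For reference, the zero-mean generalized Gaussian density and a variance estimate can be sketched as follows. The density uses the standard parameterization with a scale and a shape parameter; the shape value 1.3 is the one reported for Figure 4. The estimator is assumed here to be the mean of the squared error pixels, which is one natural reading of the text, and the function names are hypothetical.

```python
import math
import numpy as np

def ggd_pdf(x, sigma, shape):
    """Zero-mean generalized Gaussian density with standard deviation sigma."""
    # Scale chosen so that the variance equals sigma**2.
    alpha = sigma * math.sqrt(math.gamma(1.0 / shape) / math.gamma(3.0 / shape))
    norm = shape / (2.0 * alpha * math.gamma(1.0 / shape))
    return norm * np.exp(-(np.abs(x) / alpha) ** shape)

def estimate_sigma(error):
    """Assumed estimator: root of the mean squared error pixel (zero mean)."""
    return float(np.sqrt(np.mean(np.abs(error) ** 2)))

# Numerical check that the density integrates to one and has the requested variance.
grid = np.linspace(-40.0, 40.0, 400001)
step = grid[1] - grid[0]
density = ggd_pdf(grid, sigma=2.0, shape=1.3)
total_mass = float(np.sum(density) * step)
second_moment = float(np.sum(grid ** 2 * density) * step)
```

A shape parameter of 2 gives the ordinary Gaussian and a shape parameter of 1 gives the Laplacian, so the value 1.3 lies between the two.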

Figure 4.

The probability density histograms of the errors of the plane imaging in (a) t = 1, (b) t = 2 and (c) t = 3 iterations and of the ship imaging in (d) t = 1, (e) t = 2 and (f) t = 3 iterations. The shape parameter in the generalized Gaussian probability density function is kept constant at 1.3 for these instances. The horizontal axis denotes the range of pixel values in and the vertical axis denotes the ratio of the number of pixel values in each range to the total number of pixels.

Similar to observation in Figure 4a, the probability density histograms of and shown in Figure 4b,c can also be approximated as the generalized Gaussian distributions.

We also perform the approximation operation mentioned above for the motion compensated ship data. The probability density histograms of , and of ship data are shown in Figure 4d–f, which have distributions similar to those of the plane data.

Based on the statistics of the probability density histograms of the reconstruction errors in the iteration process, we make a reasonable assumption to make the minimization of Equation (24) tractable. We suppose that each element in is independently distributed with zero mean and variance . Under this assumption, for , we can obtain the following conclusion:

where denotes the IPG to be reconstructed and is a probability function. In the probability coefficient , is the size of the patch, l is the number of patches in an IPG, and N is the number of IPGs extracted from the initial image. The detailed proof of Equation (26) is given in Appendix A.

According to the approximation in Equation (26), we have the following equation with probability nearly at 1:

Note that Equation (28) can be efficiently minimized by solving N joint optimization problems, each of which is expressed as follows:

From the definitions of , we can know and , is the singular value vector defined in Section 4.4. Due to the construction of in Equation (21) and the unitary property of and , we can obtain the following relationship:

The detailed proof of Equation (30) is provided in Appendix B.

According to Lemma 1 in [25], the closed-form solution of is as follows:

where denotes the soft thresholding function and “·” denotes the element-wise product.

Then, the IPG in Equation (29) can be reconstructed by the following:

where is the group dictionary obtained in Equation (21).
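The closed-form update of one IPG can be sketched as follows: the singular values of the current IPG are soft-thresholded and the IPG is resynthesized from the shrunk values with the same singular vectors. The threshold `tau` is a hypothetical parameter standing in for the threshold derived in the paper, and the function names are assumptions made for this example.

```python
import numpy as np

def soft_threshold(values, tau):
    """Shrink magnitudes toward zero by tau and zero anything below tau."""
    return np.sign(values) * np.maximum(np.abs(values) - tau, 0.0)

def reconstruct_group(noisy_group, tau):
    """One SVD, soft thresholding of the singular values, then resynthesis of the group."""
    u, s, vh = np.linalg.svd(noisy_group, full_matrices=False)
    shrunk = soft_threshold(s, tau)
    return (u * shrunk) @ vh, shrunk        # scales column i of u by shrunk[i]

# A rank-one group (identical structure in all patches) with a small perturbation.
rng = np.random.default_rng(5)
a = rng.standard_normal(16)
a /= np.linalg.norm(a)
b = rng.standard_normal(6)
b /= np.linalg.norm(b)
noisy = 5.0 * np.outer(a, b) + 0.01 * rng.standard_normal((16, 6))
clean, kept = reconstruct_group(noisy, tau=0.5)
```

In this example only the dominant singular value survives the thresholding, so the reconstructed group keeps the structure shared by all patches and discards the small perturbation. The same operation applied to every IPG, followed by the averaging of Equation (13), gives one update of the target image.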

According to the strategy of alternately solving Equations (21) and (29), all IPGs can be sequentially recovered, and the target image is reconstructed through Equation (13).

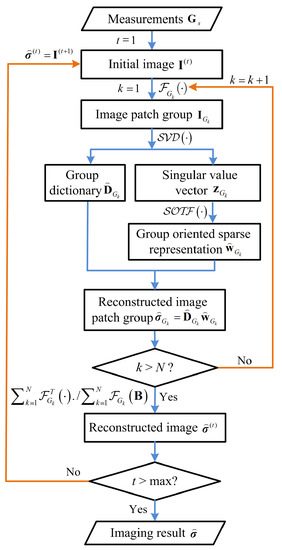

So far, all issues in the process from under-sampled measurements to the target image reconstruction have been solved. In light of all derivations above, a detailed flow chart of the proposed algorithm for ISAR imaging using GDL is shown in Figure 5.

Figure 5.

The flow chart of the group dictionary learning based ISAR imaging algorithm.

5. Experimental Results

In this section, we use real plane data and ship data sets to demonstrate the performance of the proposed GDL based ISAR imaging method. In order to evaluate the feasibility and the chief advantages of our method faithfully, the GDL ISAR imaging method is compared with the greedy Kalman filtering (GKF) imaging method [9], ISAR image patch based online dictionary learning (ONDL) imaging method and offline dictionary learning (OFDL) imaging method [15], which deal with the ISAR data in a spatial domain and transform domain adaptive to ISAR data, respectively.

5.1. Imaging Data and Parameters

The plane data were collected by a ground-based ISAR operating at C band; the bandwidth of the transmitted waveform is 400 MHz. A de-chirp processing was used for the range compression of the plane data. The ship data were collected by a shore-based X-band radar, and the bandwidth of corresponding transmitted waveform is 80 MHz.

All data sets were motion compensated by the minimum entropy based global range alignment algorithm [26] as well as the improved phase gradient algorithm (PGA) [27]. The details of the size of the raw data (), under-sampling ratios () as well as the sparsity are listed in Table 1. Note that the sparsity is estimated, using the approach in [28].

Table 1.

The parameters of the real ISAR data sets used for verifying the performance of the GDL-based ISAR imaging method.

All data sets used for verifying the reconstruction performance of the proposed imaging method were obtained by performing a random under-sampling operation on both the range domain and cross-range domain of the corresponding motion-compensated raw data. For the plane data, we consider two under-sampling ratios, 25% and 50%. For the ship data, we set the under-sampling ratio to 50%, i.e., 4608 measurements, as listed in Table 1.

We set the parameters of the GDL-based imaging method as listed in Table 2. The detailed settings of all the parameters are discussed in Section 5.5. All the experiments are performed in MATLAB R2015b on a computer with an Intel(R) Core(TM) i7-7700 CPU @ 3.60 GHz, 8 GB of memory, and a Windows 7 operating system.

Table 2.

The parameter settings of the GDL imaging method for the different ISAR data sets.

5.2. Image Quality Evaluation

To provide a quantitative evaluation of the images reconstructed with the proposed imaging method, we use two types of performance evaluation indices [29]. One is the “true-value” based indices and the other is the conventional image quality indices. The “true-value” based indices assess the accuracy of the positions of the reconstructed scatterers. The conventional indices mainly assess the visual quality of the reconstructed images.

The “true-value” based evaluation compares the original or reference image (which represents the “true-value”) with the reconstructed image. Since we do not have ground-truth images of non-cooperative targets, a high-quality image reconstructed by the conventional RD method using full data is used as the reference image in our work. Thus, the metrics evaluate the performance of the proposed GDL-based imaging method relative to the RD method. The “true-value” based evaluation uses the following indices: False Alarm (FA) and Missed Detection (MD). FA is used for assessing the scatterers that are incorrectly reconstructed. MD is used for assessing the missed scatterers.

The conventional image quality evaluation includes the target-to-clutter ratio (TCR), image entropy (ENT) and image contrast (IC). The TCR that we use in our work is defined as follows:

where represents the pixel index, denotes the reconstructed value at pixel in the reconstructed image and , denote the target region and clutter region in , respectively. We determine and by performing a binarization processing on the RD image. The pixels whose values are greater than a specified threshold are classified into and otherwise into .
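The conventional indices can be sketched as follows. All three definitions are assumptions based on forms commonly used in the radar imaging literature: the TCR is taken as the ratio of target-region energy to clutter-region energy in decibels, the entropy is computed from the normalized pixel power, and the contrast is the standard deviation of the pixel power divided by its mean. The exact definitions used in the paper may differ, and all function names are hypothetical.

```python
import numpy as np

def target_clutter_masks(reference, threshold):
    """Binarize the reference (RD) image: pixels above the threshold form the target region."""
    target = np.abs(reference) > threshold
    return target, ~target

def tcr_db(image, target, clutter):
    """Assumed TCR: target energy over clutter energy, in dB."""
    power = np.abs(image) ** 2
    return float(10.0 * np.log10(power[target].sum() / power[clutter].sum()))

def image_entropy(image):
    """Shannon entropy of the normalized pixel power (lower means better focused)."""
    power = np.abs(image) ** 2
    p = power / power.sum()
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())

def image_contrast(image):
    """Standard deviation of the pixel power divided by its mean (higher means sharper)."""
    power = np.abs(image) ** 2
    return float(power.std() / power.mean())

reference = np.array([[10.0, 1.0], [1.0, 1.0]])
target, clutter = target_clutter_masks(reference, threshold=5.0)
tcr = tcr_db(reference, target, clutter)
flat = np.ones((2, 2))
```

For the small example, the single target pixel carries 100 units of energy against 3 units of clutter, and a perfectly flat image has the maximum entropy and zero contrast.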

5.3. Imaging Results of Real Data

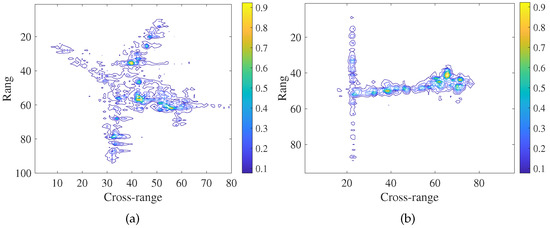

Figure 6a,b presents the full data imaging results of the plane data and ship data, using the RD method, respectively.

Figure 6.

The (a) plane image and (b) ship image obtained by RD method using full data.

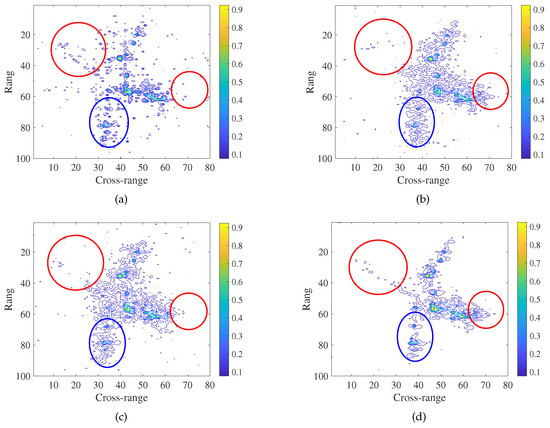

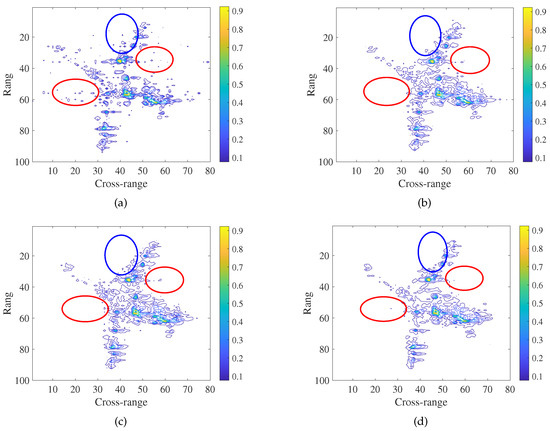

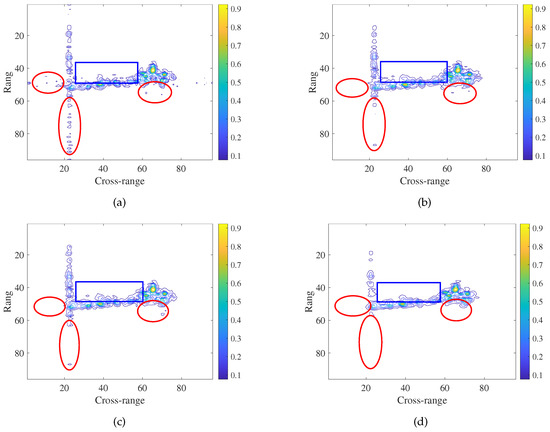

Figure 7 shows the imaging results for 25% measurements of the plane data, using the GKF, ONDL, and OFDL methods, as well as our GDL imaging method. Figure 8 shows the imaging results for 50% measurements of the plane data. Figure 9 shows the imaging results obtained from the ship data, using the same imaging methods. Note that all imaging results are displayed with the same contour level.

Figure 7.

The imaging results of the plane data obtained by (a) GKF imaging method, (b) ONDL imaging method, (c) OFDL imaging method and (d) the GDL imaging method, using 25% measurements.

Figure 8.

The imaging results of plane data obtained by (a) GKF imaging method, (b) ONDL imaging method, (c) OFDL imaging method and (d) the GDL imaging method, using 50% measurements.

Figure 9.

The target images of ship data yielded by (a) GKF imaging method, (b) ONDL imaging method, (c) OFDL imaging method as well as (d) the GDL imaging method using 50% measurements.

Comparing Figure 7a–d, we see that many artifacts appear in the results of the GKF, ONDL, and OFDL methods. These three imaging methods cannot provide well-reconstructed images, while our GDL method reconstructs the nose, tail, and wings of the plane well, as indicated by the red and blue circles in Figure 7. This verifies the superiority of the proposed GDL-based imaging method in target shape reconstruction. The GOSR obtained with the group dictionary can account for the self-similarity information between image patches, and therefore retains the information about the plane shape better than the other methods considered here.

For the 50% measurements of the plane data shown in Figure 8, the GDL-based imaging method again provides the best results. The fewest artifacts appear in the reconstructed image of GDL, as shown in the regions indicated by the red and blue circles in Figure 8.

Figure 9 shows that the ship target is reconstructed successfully by all four methods (GKF, ONDL, OFDL, and GDL) using 50% measurements. A closer comparison of the regions indicated by the red circles and blue rectangles shows that the result in Figure 9d has the fewest artifacts and interferences.

We also see that there are some errors in the results of the GDL method, for example, the poor reconstruction of the nose of the plane in Figure 7. The target region is reconstructed from GOSRs calculated with Equation (32), so the quality of the GOSRs depends on the singular value vector of the IPG and on the soft thresholding. The poor reconstruction may therefore arise because the singular value vector of the IPG or the soft thresholding is not accurate enough.
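To make the dependence on the singular values and the soft thresholding concrete, the following is a minimal sketch of a non-iterative group coding step: one SVD of a group matrix followed by soft thresholding of its singular values. It is an illustration under stated assumptions and not the authors' implementation of Equation (32). The function names, the toy group, and the threshold `tau` are hypothetical.

```python
import numpy as np

def soft_threshold(values, tau):
    """Shrink magnitudes toward zero by tau; entries with magnitude <= tau become zero."""
    return np.sign(values) * np.maximum(np.abs(values) - tau, 0.0)

def group_sparse_code(group, tau):
    """One SVD of the group, then soft thresholding of the singular values.

    group: (patch_size, n_patches) array, one vectorized patch per column.
    Returns the shrunk singular values and the reconstructed group.
    """
    u, s, vh = np.linalg.svd(group, full_matrices=False)
    s_shrunk = soft_threshold(s, tau)
    recon = (u * s_shrunk) @ vh  # scale the columns of u, then recombine
    return s_shrunk, recon

# Toy group (assumption): ten similar patches sharing one structure, plus weak noise.
rng = np.random.default_rng(0)
base = rng.standard_normal((16, 1))
scales = rng.uniform(0.8, 1.2, size=(1, 10))
group = base @ scales + 0.05 * rng.standard_normal((16, 10))

s_shrunk, recon = group_sparse_code(group, tau=1.0)
print(np.count_nonzero(s_shrunk), "nonzero singular values remain")
```

In this toy case the shared structure is carried by one dominant singular value, while the noise is spread over small ones that the threshold removes. A threshold that is too large would also shrink the dominant value and distort the shared structure, which mirrors the sensitivity discussed above.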

5.4. Quantitative Evaluation of Image Quality

In addition to the visual comparisons of the imaging results, we evaluate the image quality using the metrics introduced in Section 5.2. The evaluation results are listed in Table 3.

Table 3.

Evaluation of real ISAR data image quality.

From the second and third columns of Table 3, we see that our method yields the smallest FA and MD, which means that it reconstructs the positions of the target scatterers most accurately and suppresses the artifacts and sidelobes in the background well. This is consistent with the imaging results shown in Figure 7, Figure 8 and Figure 9.

As indicated in the fourth, fifth and sixth columns of Table 3, the GDL-based imaging method also achieves the best TCR, ENT and IC values. This is again consistent with the visual comparison of Figure 7, Figure 8 and Figure 9.
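As a rough illustration of how such indices can be computed, the sketch below implements commonly used forms of image entropy, target-to-clutter ratio, and false-alarm and missed-detection counts. These are assumed definitions for illustration only and may differ from those given in Section 5.2. The function names, the reference mask, and the detection threshold are hypothetical.

```python
import numpy as np

def image_entropy(image):
    """Shannon entropy of the normalized pixel power; lower values mean a better focused image."""
    power = np.abs(image) ** 2
    p = power / power.sum()
    p = p[p > 0]  # zero-power pixels contribute nothing to the sum
    return float(-(p * np.log(p)).sum())

def target_to_clutter_ratio_db(image, target_mask):
    """Total power inside the target region over total power outside it, in dB."""
    power = np.abs(image) ** 2
    return float(10.0 * np.log10(power[target_mask].sum() / power[~target_mask].sum()))

def false_alarms_and_missed_detections(image, reference_mask, threshold):
    """FA: detected pixels outside the reference support. MD: reference pixels not detected."""
    detected = np.abs(image) > threshold
    fa = int(np.count_nonzero(detected & ~reference_mask))
    md = int(np.count_nonzero(~detected & reference_mask))
    return fa, md

# Toy image (assumption): a 2x2 target, a weak background, and one artifact.
image = np.full((8, 8), 0.01)
mask = np.zeros((8, 8), dtype=bool)
mask[3:5, 3:5] = True
image[mask] = 1.0
image[0, 0] = 0.5  # artifact outside the target region

fa, md = false_alarms_and_missed_detections(image, mask, threshold=0.1)
tcr = target_to_clutter_ratio_db(image, mask)
ent = image_entropy(image)
print(fa, md, round(tcr, 2), round(ent, 3))
```

Under these assumed definitions, smaller FA, MD and ENT and larger TCR indicate a better result, which matches the direction of the comparison reported for Table 3.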

The last column of Table 3 lists the computing time of each imaging method. The proposed GDL-based imaging method is the fastest among the methods considered. This is because the GDL process relies on a non-iterative SVD, in contrast to the iterative processing of the other methods.

5.5. Discussion on the Parameter Setting

In our experiments, all parameters of the GDL-based imaging method are listed in Table 2. They comprise the size of the vectorized image patch, the moving step of the search window on the initial image during the IPG extraction process, the initial size of the search window, the number l of similar image patches in an IPG, and two regularization parameters. The parameters are set empirically as shown in Table 2 and are kept unchanged for all three data sets, except for one regularization parameter, which is adjustable.

From Equations (31) and (A1), we know that the adjustable regularization parameter balances the suppression of the artifacts against the preservation of the target details. If it is too small, the artifacts cannot be fully suppressed, whereas if it is too large, the target details may be lost. Thus, we consider the visual results and the quantitative indices of the imaging results simultaneously to find the optimal value of this parameter for each type of imaging data.
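The trade-off can be illustrated with a toy example of soft thresholding. The sketch assumes that the adjustable regularization parameter acts through a soft threshold `tau` on the sparse coefficients; the exact relationship is given by Equation (31), which is not reproduced here. The coefficient values are invented for illustration.

```python
import numpy as np

def soft_threshold(values, tau):
    """Shrink magnitudes toward zero by tau; entries with magnitude <= tau become zero."""
    return np.sign(values) * np.maximum(np.abs(values) - tau, 0.0)

# Toy coefficients (assumption): three strong target entries, one weak target
# detail (0.03), and four small artifact entries.
coeffs = np.array([1.0, 0.8, 0.6, 0.03, 0.015, -0.012, 0.008, -0.005])

kept_counts = []
for tau in (0.01, 0.02, 0.04):
    kept = int(np.count_nonzero(soft_threshold(coeffs, tau)))
    kept_counts.append(kept)
    print(f"tau={tau}: {kept} nonzero coefficients")
```

With the smallest threshold, two artifact entries survive. With the intermediate threshold, all artifacts are removed and the weak detail is kept. With the largest threshold, the weak detail is lost as well.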

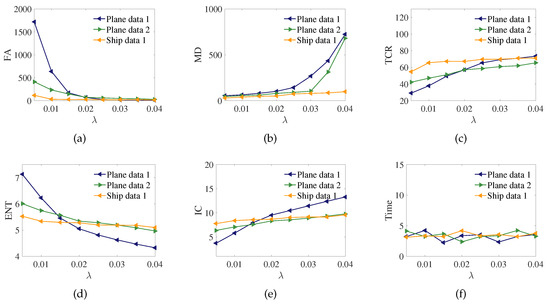

Figure 10 shows how FA, MD, TCR, ENT, IC and the computing time vary with the value of the adjustable regularization parameter, with the other parameters kept constant for the three data sets. As the parameter increases, the values of FA and ENT decrease, while the values of MD, TCR and IC increase. All metrics except MD thus tend toward their optimal values as the parameter increases. A higher MD indicates a sparser result in which structural details of the target may be missing, which leads to a relatively poor appearance.

Figure 10.

Variation of the quantitative indices (a) FA, (b) MD, (c) TCR, (d) ENT, (e) IC and (f) computing time with the value of the adjustable regularization parameter for the three data sets.

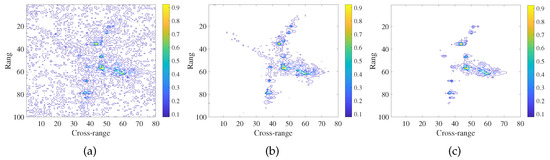

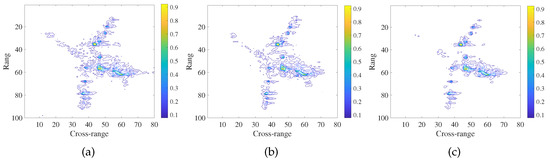

The target images of the three data sets for different values of the adjustable regularization parameter are shown in Figure 11, Figure 12 and Figure 13, respectively. From Figure 11b, Figure 12b and Figure 13b, we can see that the three data sets have the best image quality when the parameter equals 0.02, 0.03 and 0.035, respectively. These target images have the fewest artifacts and the best target shape. Furthermore, the quantitative indices of these three results are better than those obtained by the other imaging methods, as shown in Table 3. Thus, the optimal parameter values for imaging plane data 1, plane data 2 and ship data 1 can be set to 0.02, 0.03 and 0.035, respectively.

Figure 11.

The imaging results of 25% measurements of plane data reconstructed by the GDL method with the adjustable regularization parameter set to (a) 0.01, (b) 0.02, and (c) 0.03.

Figure 12.

The target images of 50% measurements of plane data obtained by the GDL method with the adjustable regularization parameter set to (a) 0.02, (b) 0.03, and (c) 0.04.

Figure 13.

The imaging results of 50% measurements of ship data yielded by the GDL method with the adjustable regularization parameter set to (a) 0.02, (b) 0.035, and (c) 0.04.

6. Conclusions

In this paper, we extended the DL-based ISAR sparse imaging method and presented a GDL-based ISAR sparse imaging method. Because GDL avoids a time-consuming iterative process, it is highly efficient. The sparse representation extracted from each IPG contains both the self-similarity information between the image patches and the local sparse prior information of the target image. The self-similarity information helps preserve the target shape and contour during the imaging process. On the real ISAR data considered, the GDL-based method outperforms the ISAR sparse imaging methods compared in this paper in both imaging quality and computation speed.

Author Contributions

Conceptualization, C.H. and L.W.; methodology, C.H. and L.W.; software, C.H.; validation, C.H. and L.W.; formal analysis, C.H.; writing—original draft preparation, C.H.; writing—review and editing, L.W., D.Z. and O.L.; visualization, C.H.; funding acquisition, L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key Research and Development Program of China under Grant 2017YFB0502700, the National Natural Science Foundation of China under Grant 61871217, the Aviation Science Foundation under Grant 20182052011, the Postgraduate Research and Practice Innovation Program of Jiangsu Province under Grant KYCX18_0291, and the Fundamental Research Funds for Central Universities under Grants NZ2020007 and NG2020001.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Theorem A1.

Let , , , , be the error between σ and denoted by , where each element in is represented by . Assume that is independent and satisfies a distribution with . Then, for , the relationship between and satisfies the following property:

Proof.

Based on the assumption that each is independent, we know that each is also independent. Since mean and variance , the mean of can be expressed as follows:

By invoking the Convergence in Probability of Law of Large Numbers, for , it leads to the following:

i.e.,

Further, let and denote the concatenations of all and , respectively. The error between and is represented by where each element in denoted by and . Due to the assumption that each is independent and satisfies a distribution with , the same manipulation with Equation (A3) applied to yields the following:

which can be rewritten as follows:

From Equation (A4), we know the following:

From Equation (A6) we know . Therefore, when , we have and . Thus, the Equation (A7) can be scaled to the following:

i.e.,

Therefore, Equation (A1) is proved. □
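The law-of-large-numbers step used in the proof can be checked numerically. The sketch below is only an illustration of that single step: it assumes independent, zero-mean errors and draws them from a Laplace distribution, which is one member of the generalized Gaussian family. The scale value and sample sizes are arbitrary choices, and the quantities of Theorem A1 itself are not reproduced.

```python
import numpy as np

rng = np.random.default_rng(0)
scale = 0.5                    # Laplace scale b (assumed value)
variance = 2.0 * scale ** 2    # variance of a zero-mean Laplace distribution

gaps = []
for n in (100, 10_000, 1_000_000):
    e = rng.laplace(loc=0.0, scale=scale, size=n)
    gap = abs(np.mean(e ** 2) - variance)  # sample mean of e^2 versus the variance
    gaps.append(gap)
    print(f"n={n}: |mean(e^2) - variance| = {gap:.4f}")
```

For large sample sizes the mean of the squared errors concentrates around the variance, which is the convergence in probability invoked above.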

Appendix B

Theorem A2.

Proof.

According to the definitions of , and , we have the following:

Then,

where denotes the diagonal matrix.

Since the squared Frobenius norm of a matrix equals the trace of the product of its conjugate transpose with the matrix itself, Equation (A12) can be expanded as follows:

where is the operator for calculating the trace of a matrix. □
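The norm identity used in this proof is easy to verify numerically. The following sketch checks, for an arbitrary complex test matrix (an assumption made for illustration), that the squared Frobenius norm equals both the trace of the Gram matrix and the sum of the squared singular values.

```python
import numpy as np

rng = np.random.default_rng(0)
a = rng.standard_normal((6, 4)) + 1j * rng.standard_normal((6, 4))

fro_sq = np.linalg.norm(a, "fro") ** 2                      # squared Frobenius norm
trace_form = np.trace(a.conj().T @ a).real                  # trace of A^H A
sv_form = np.sum(np.linalg.svd(a, compute_uv=False) ** 2)   # sum of squared singular values

print(np.allclose(fro_sq, trace_form), np.allclose(fro_sq, sv_form))
```

The second equality explains why an error measured on a group matrix can be expressed through its singular values alone.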

References

- Chen, V.C.; Martorella, M. Principles of Inverse Synthetic Aperture Radar SAR Imaging; Scitech: Raleigh, NC, USA, 2014.

- Cetin, M.; Karl, W.C. Feature-enhanced synthetic aperture radar image formation based on nonquadratic regularization. IEEE Trans. Image Process. 2001, 10, 623–631.

- Cetin, M.; Karl, W.C.; Castanon, D.A. Feature enhancement and ATR performance using nonquadratic optimization-based SAR imaging. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 1375–1395.

- Zhang, X.; Bai, T.; Meng, H.; Chen, J. Compressive Sensing-Based ISAR Imaging via the Combination of the Sparsity and Nonlocal Total Variation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 990–994.

- Xu, Z.; Wu, Y.; Zhang, B.; Wang, Y. Sparse radar imaging based on L1/2 regularization theory. Chin. Sci. Bull. 2018, 63, 1306–1319.

- Wang, M.; Yang, S.; Liu, Z.; Li, Z. Collaborative Compressive Radar Imaging With Saliency Priors. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1245–1255.

- Samadi, S.; Cetin, M.; Masnadi-Shirazi, M.A. Sparse representation-based synthetic aperture radar imaging. IET Radar Sonar Navig. 2011, 5, 182–193.

- Raj, R.G.; Lipps, R.; Bottoms, A.M. Sparsity-based image reconstruction techniques for ISAR imaging. In Proceedings of the 2014 IEEE Radar Conference, Cincinnati, OH, USA, 19–23 May 2014; pp. 0974–0979.

- Wang, L.; Loffeld, O.; Ma, K.; Qian, Y. Sparse ISAR imaging using a greedy Kalman filtering. Signal Process. 2017, 138, 1.

- Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An Algorithm for Designing Overcomplete Dictionaries for Sparse Representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

- Rubinstein, R.; Peleg, T.; Elad, M. Analysis K-SVD: A Dictionary-Learning Algorithm for the Analysis Sparse Model. IEEE Trans. Signal Process. 2013, 61, 661–677.

- Ojha, C.; Fusco, A.; Pinto, I.M. Interferometric SAR Phase Denoising Using Proximity-Based K-SVD Technique. Sensors 2019, 19, 2684.

- Chen, S.S.; Donoho, D.L.; Saunders, M.A. Atomic decomposition by basis pursuit. SIAM Review 2001, 43, 129–159.

- Soğanlui, A.; Cetin, M. Dictionary learning for sparsity-driven SAR image reconstruction. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 1693–1697.

- Hu, C.; Wang, L.; Loffeld, O. Inverse synthetic aperture radar imaging exploiting dictionary learning. In Proceedings of the 2018 IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 1084–1088.

- Kindermann, S.; Osher, S.; Jones, P.W. Deblurring and Denoising of Images by Nonlocal Functionals. Multiscale Model. Simul. 2005, 4, 1091–1115.

- Elmoataz, A.; Lezoray, O.; Bougleux, S. Nonlocal Discrete Regularization on Weighted Graphs: A Framework for Image and Manifold Processing. IEEE Trans. Image Process. 2008, 17, 1047–1060.

- Peyré, G. Image Processing with Nonlocal Spectral Bases. Multiscale Model. Simul. 2008, 7, 703–730.

- Jung, M.; Bresson, X.; Chan, T.F.; Vese, L.A. Nonlocal Mumford-Shah Regularizers for Color Image Restoration. IEEE Trans. Image Process. 2011, 20, 1583–1598.

- Zhang, J.; Zhao, D.; Jiang, F.; Gao, W. Structural Group Sparse Representation for Image Compressive Sensing Recovery. In Proceedings of the 2013 Data Compression Conference, Snowbird, UT, USA, 20–22 March 2013; pp. 331–340.

- Varanasi, M.K.; Aazhang, B. Parametric generalized Gaussian density estimation. J. Acoust. Soc. Am. 1989, 86, 1404–1415.

- Hu, C.; Wang, L.; Sun, L.; Loffeld, O. Inverse Synthetic Aperture Radar Imaging Using Group Based Dictionary Learning. In Proceedings of the 2018 China International SAR Symposium (CISS), Shanghai, China, 10–12 October 2018; pp. 1–5.

- Lazarov, A.; Minchev, C. ISAR geometry, signal model, and image processing algorithms. IET Radar Sonar Navig. 2017, 11, 1425–1434.

- Tran, H.T.; Giusti, E.; Martorella, M.; Salvetti, F.; Ng, B.W.H.; Phan, A. Estimation of the total rotational velocity of a non-cooperative target using a 3D InISAR system. In Proceedings of the 2015 IEEE Radar Conference (RadarCon), Arlington, VA, USA, 10–15 May 2015; pp. 0937–0941.

- Zhang, J.; Zhao, D.; Zhao, C.; Xiong, R.; Ma, S.; Gao, W. Image Compressive Sensing Recovery via Collaborative Sparsity. IEEE J. Emerg. Sel. Top. Circuits Syst. 2012, 2, 380–391.

- Zhu, D.; Wang, L.; Yu, Y.; Tao, Q.; Zhu, Z. Robust ISAR Range Alignment via Minimizing the Entropy of the Average Range Profile. IEEE Geosci. Remote Sens. Lett. 2009, 6, 204–208.

- Ling, W.; Dai, Y.Z.; Zhao, D.Z. Study on Ship Imaging Using SAR Real Data. J. Electron. Inf. Technol. 2007, 29, 401.

- Wang, L.; Loffeld, O. ISAR imaging using a null space l1 minimizing Kalman filter approach. In Proceedings of the 2016 4th International Workshop on Compressed Sensing Theory and its Applications to Radar, Sonar and Remote Sensing (CoSeRa), Aachen, Germany, 19–22 September 2016; pp. 232–236.

- Bacci, A.; Giusti, E.; Cataldo, D.; Tomei, S.; Martorella, M. ISAR resolution enhancement via compressive sensing: A comparison with state of the art SR techniques. In Proceedings of the 2016 4th International Workshop on Compressed Sensing Theory and Its Applications to Radar, Sonar and Remote Sensing (CoSeRa), Aachen, Germany, 19–22 September 2016; pp. 227–231.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).