Comparative Analysis of Different Mobile LiDAR Mapping Systems for Ditch Line Characterization

Abstract

1. Introduction

2. Related Work

2.1. Mobile LiDAR for Transportation Applications

2.2. Drainage Network Extraction

3. Data Acquisition Systems and Dataset Description

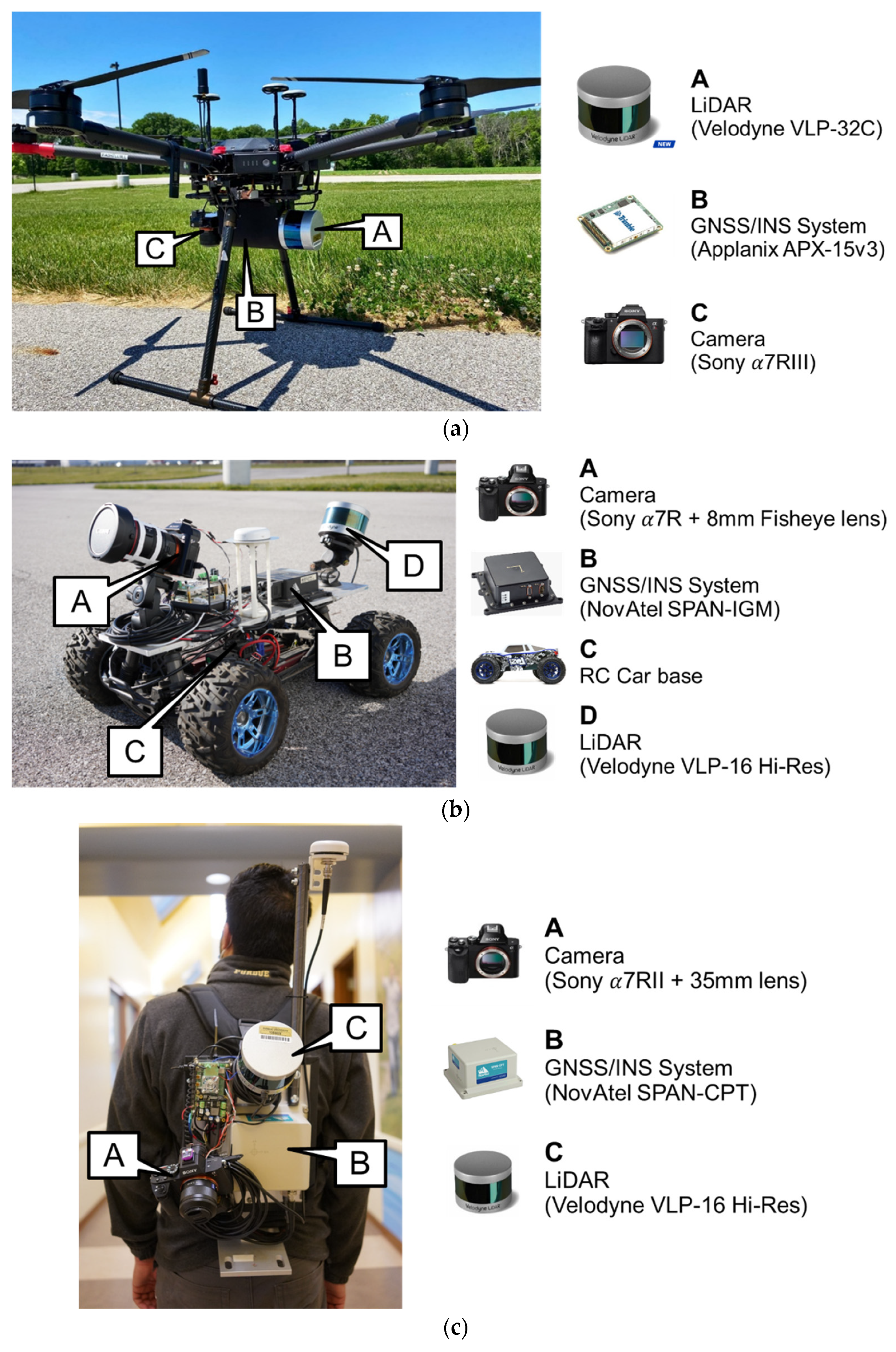

3.1. Specifications of Different MLMS Units

3.2. System Calibration of Different MLMS Units

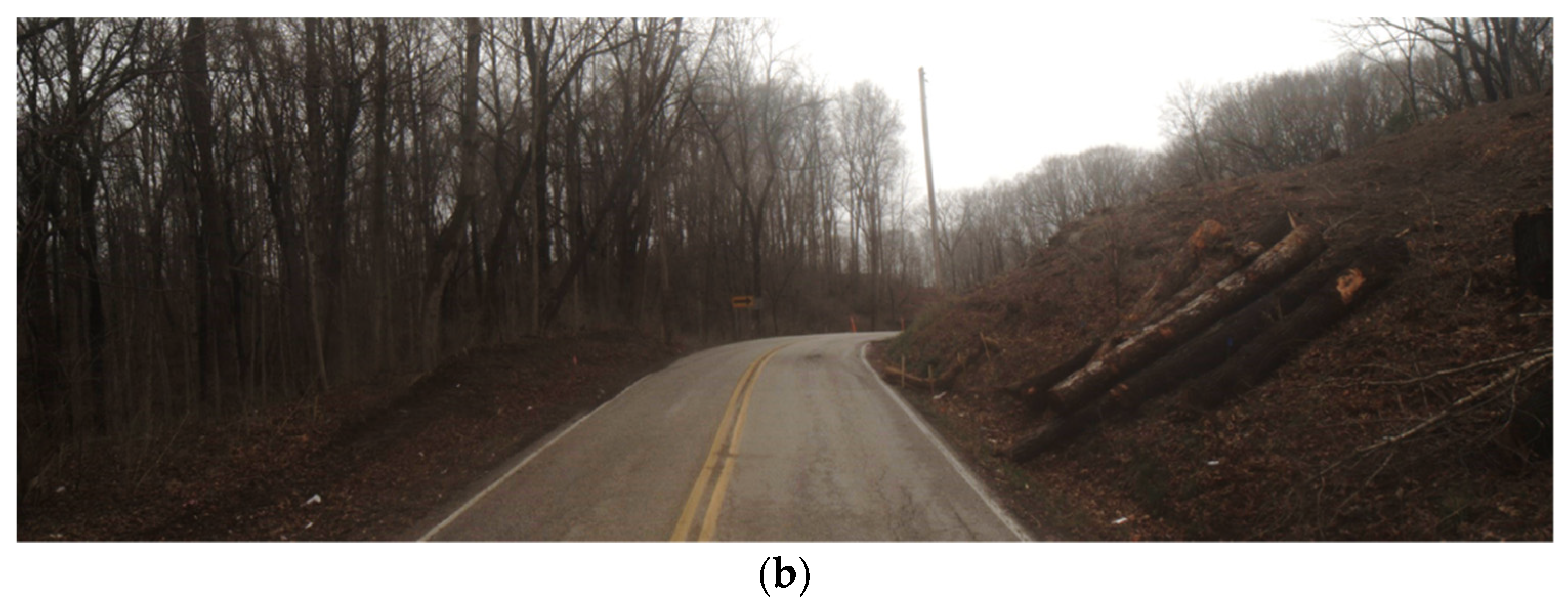

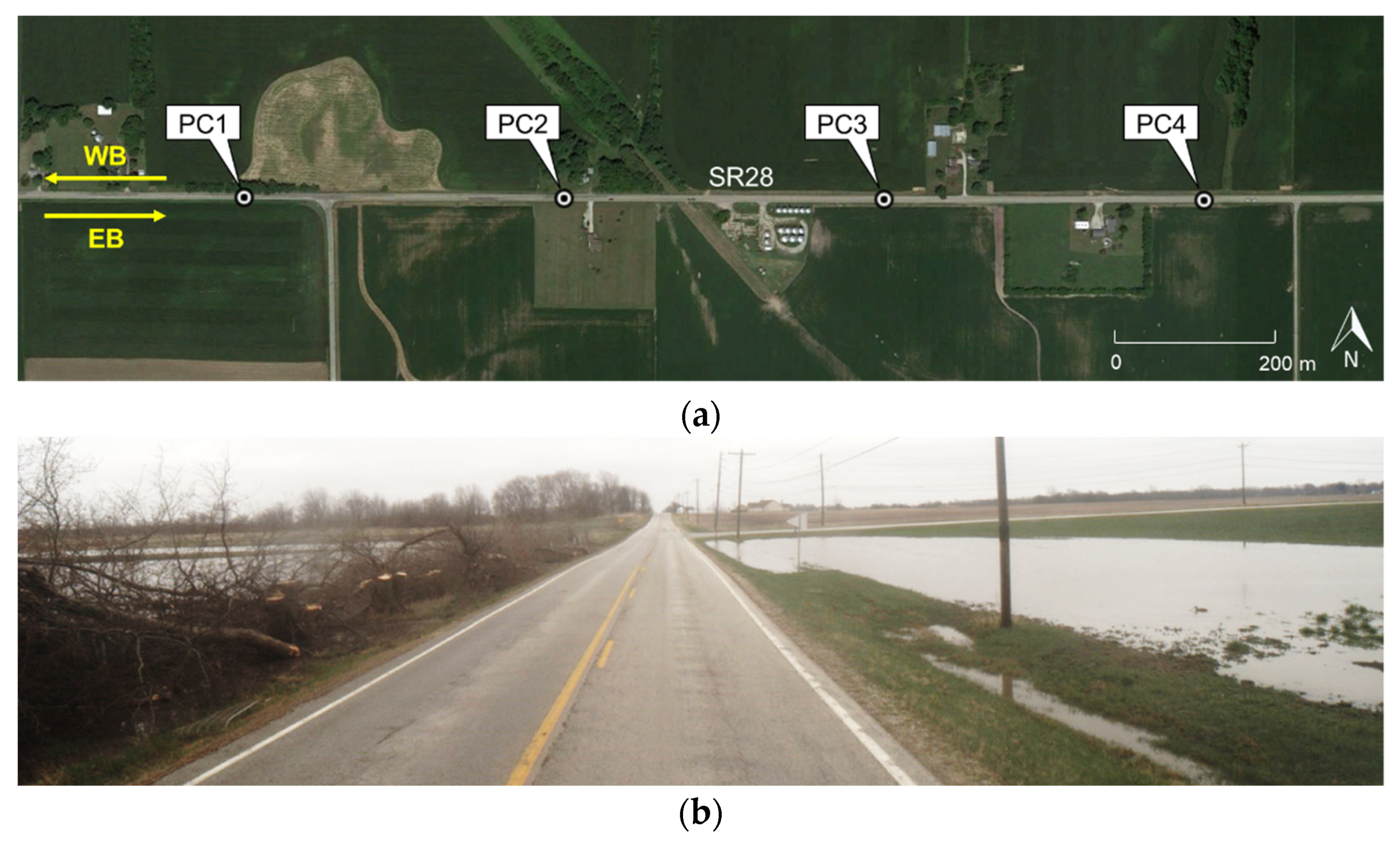

3.3. Dataset Description

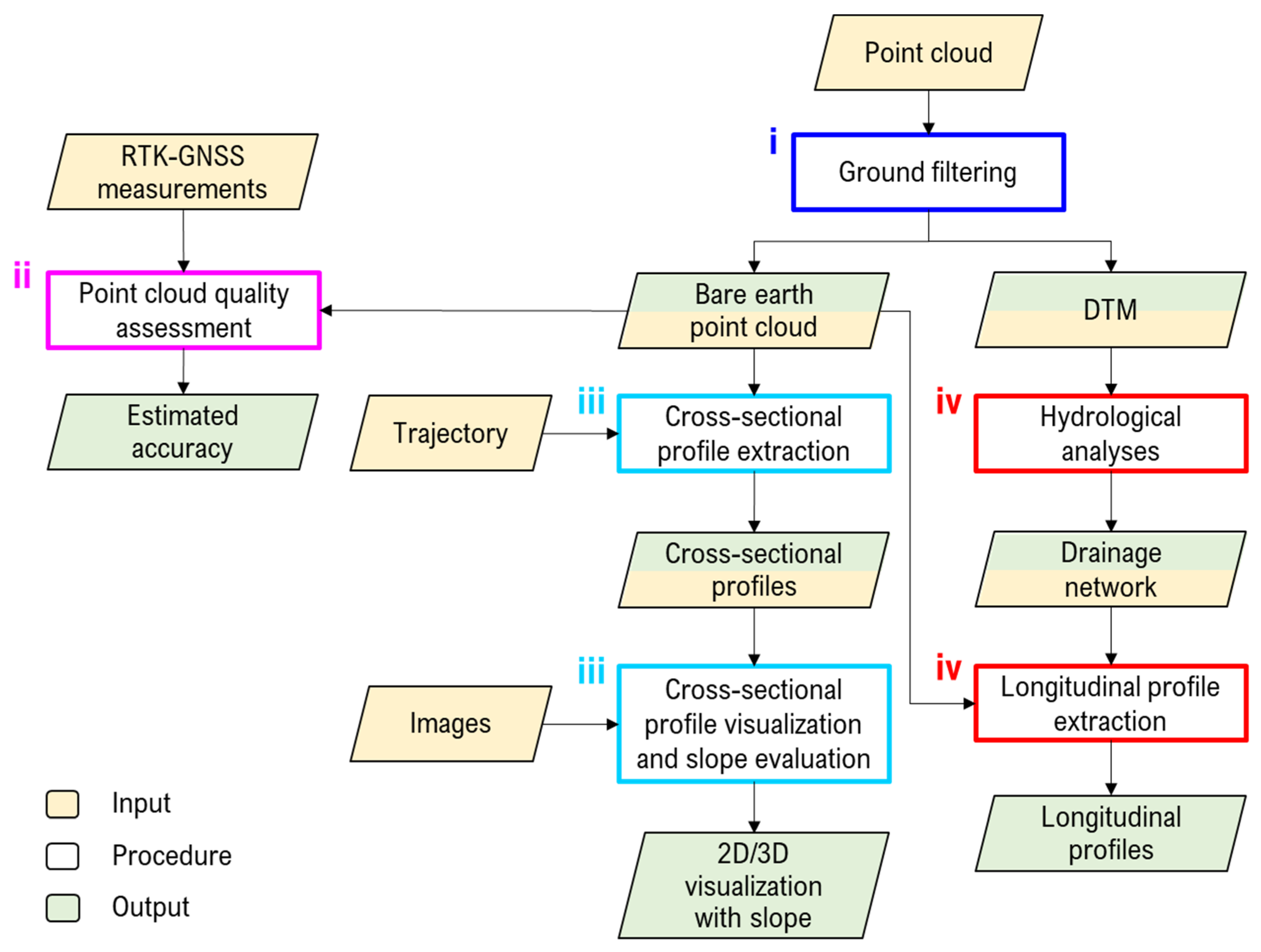

4. Methodology for Ditch Mapping and Characterization

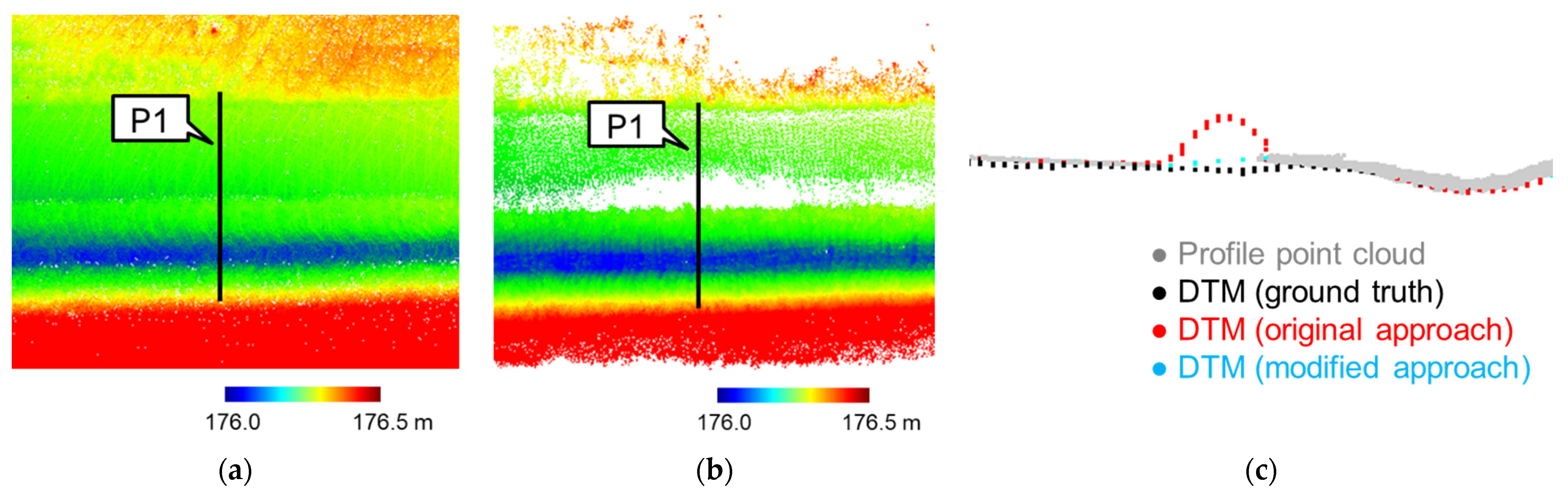

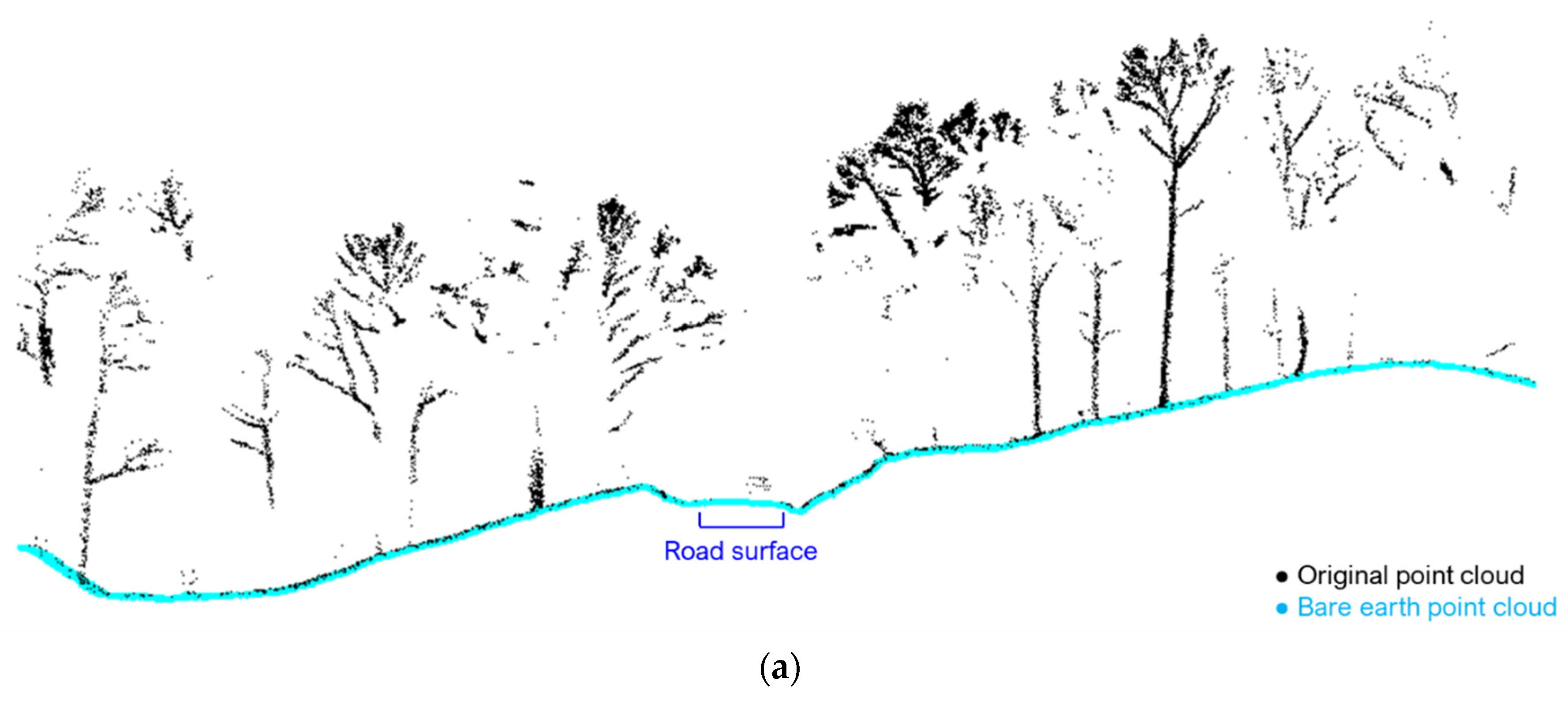

4.1. Ground Filtering

4.2. Point Cloud Quality Assessment

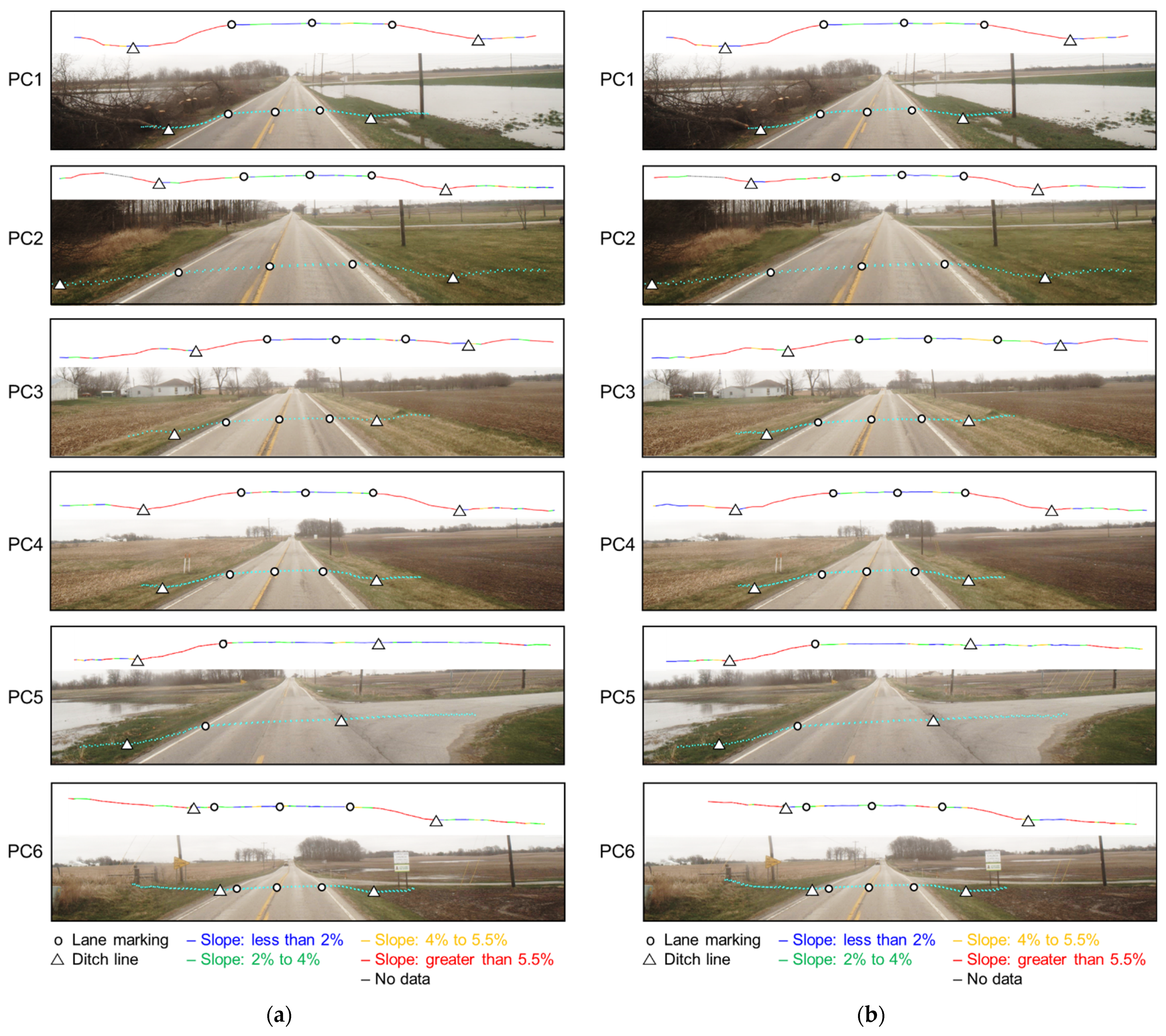

4.3. Cross-Sectional Profile Extraction, Visualization, and Slope Evaluation

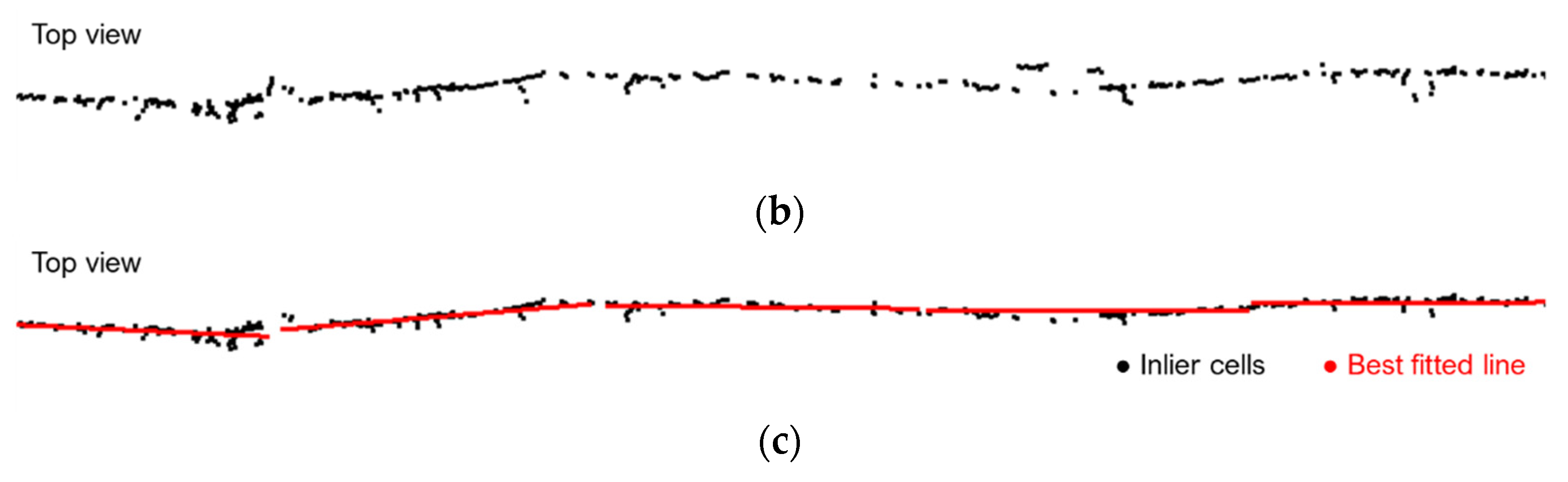

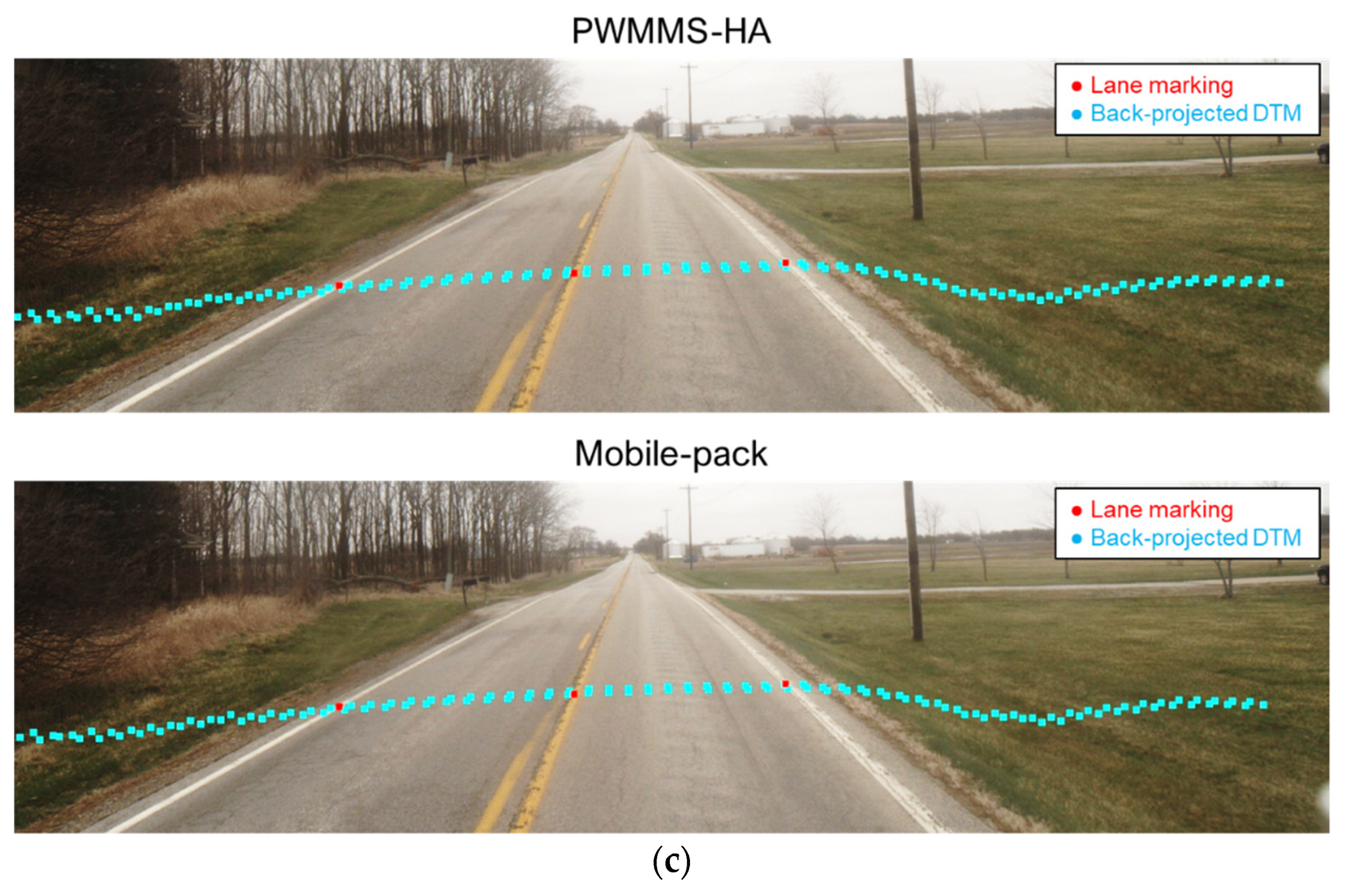

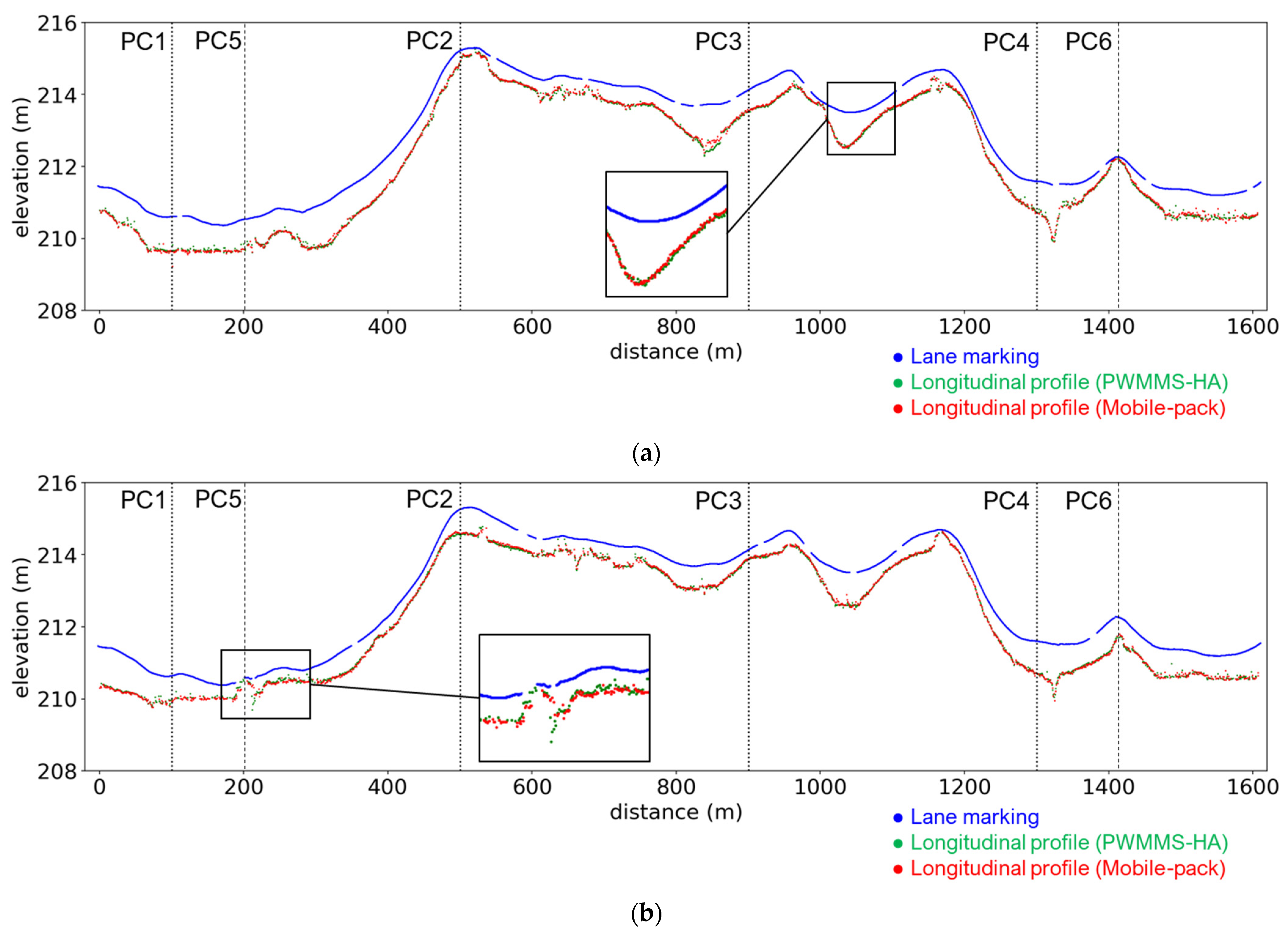

4.4. Drainage Network and Longitudinal Profile Extraction

5. Experimental Results

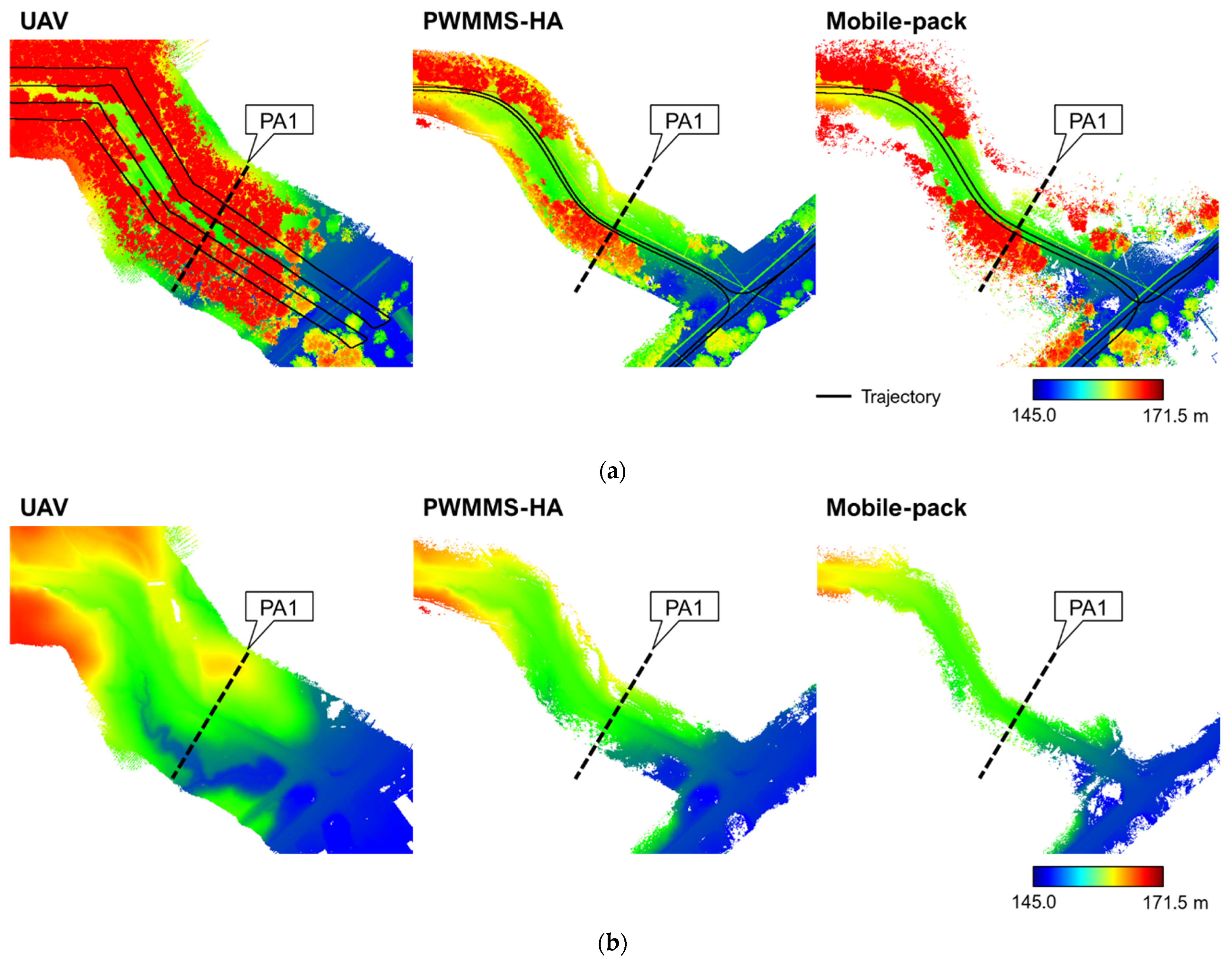

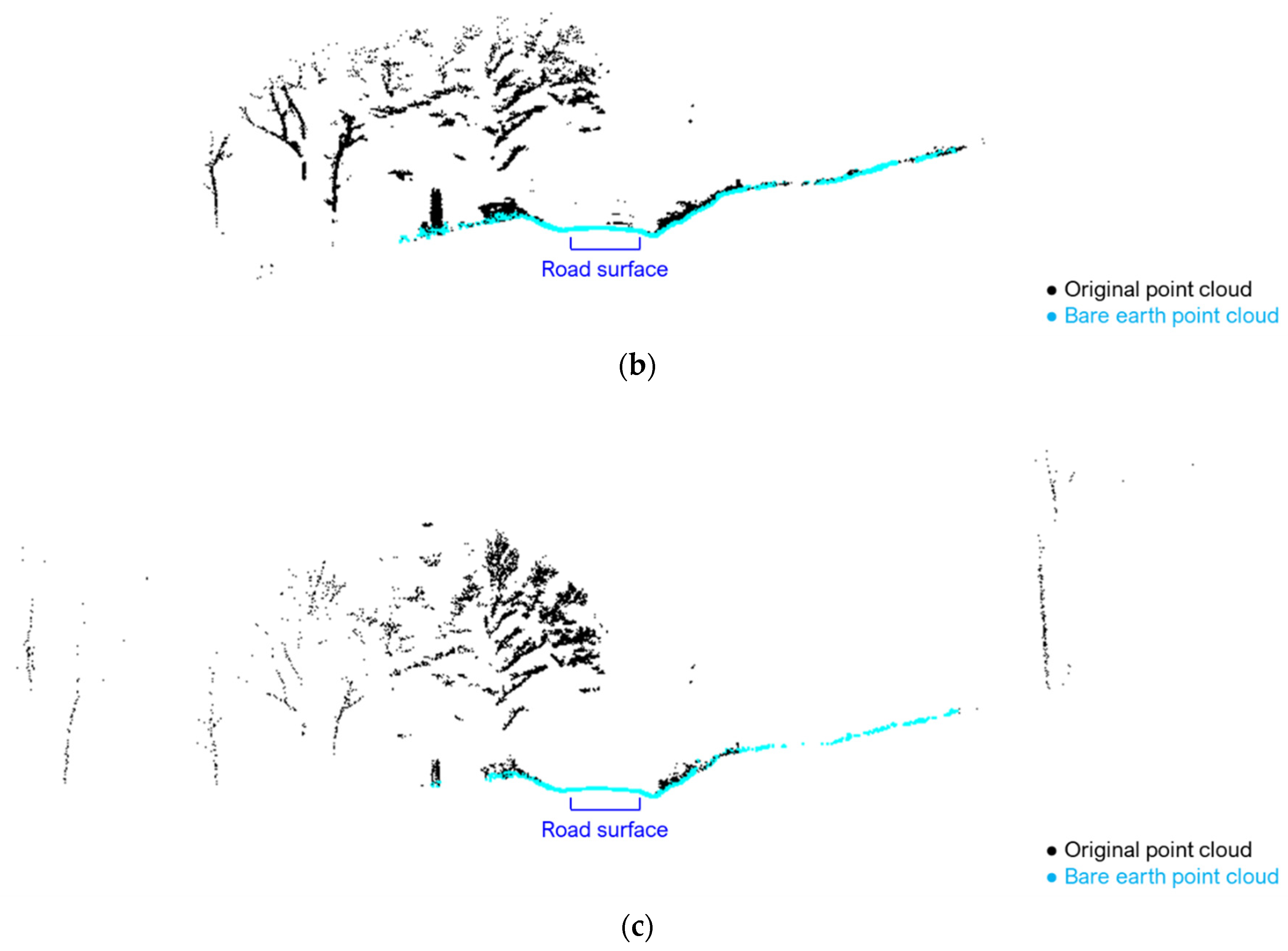

5.1. Comparison between Ground and UAV Systems for Mapping Roadside Ditches

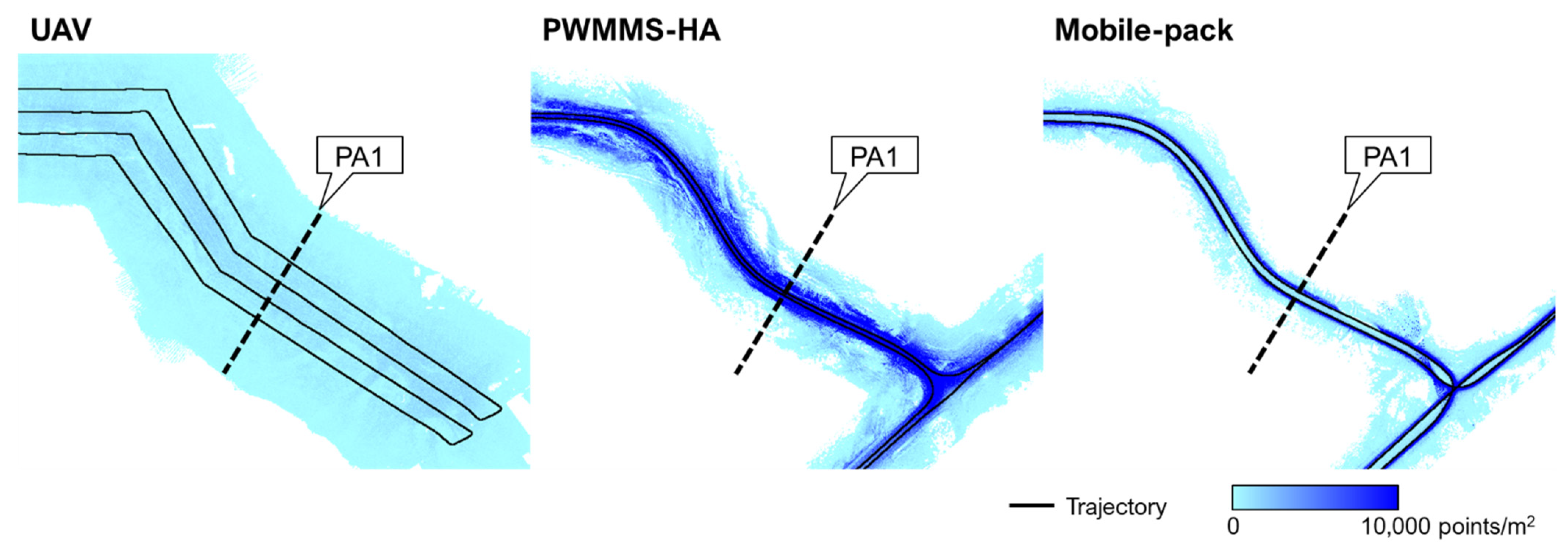

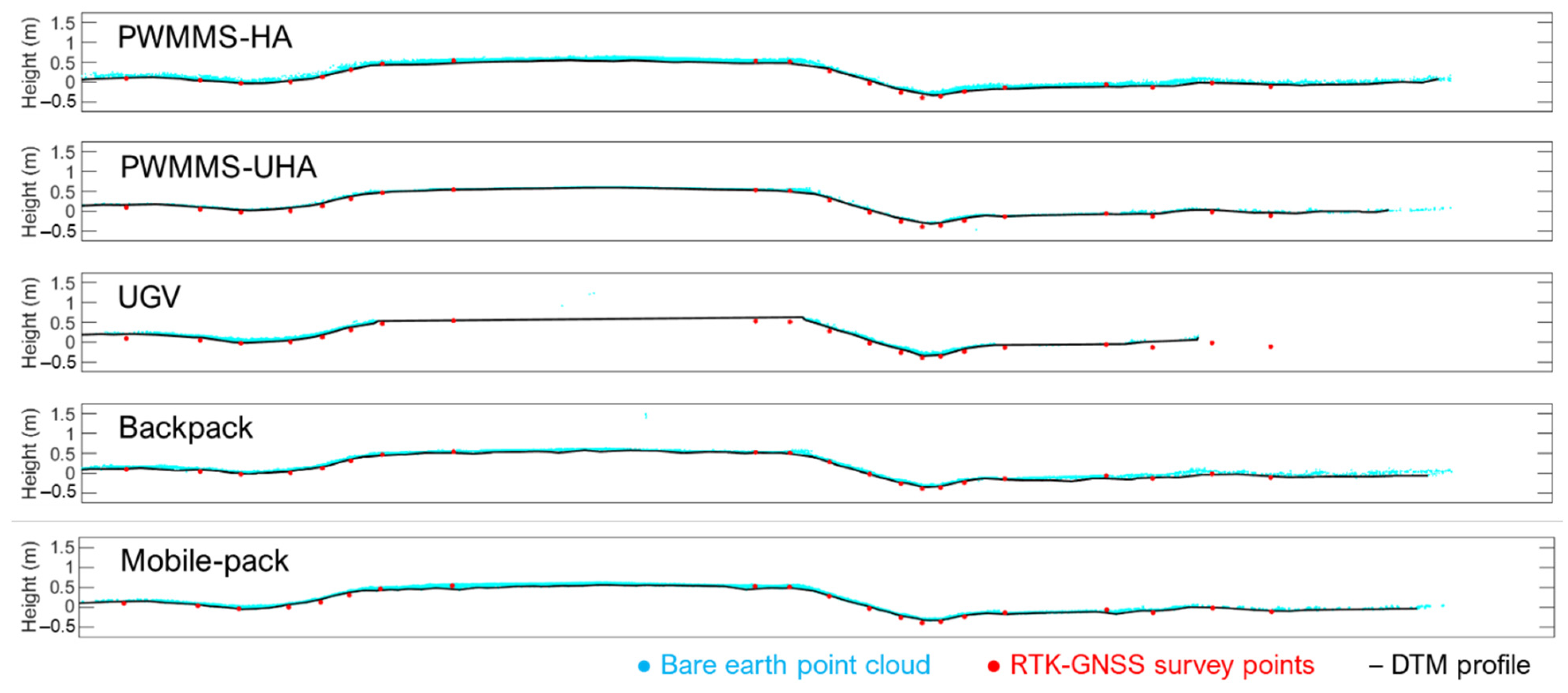

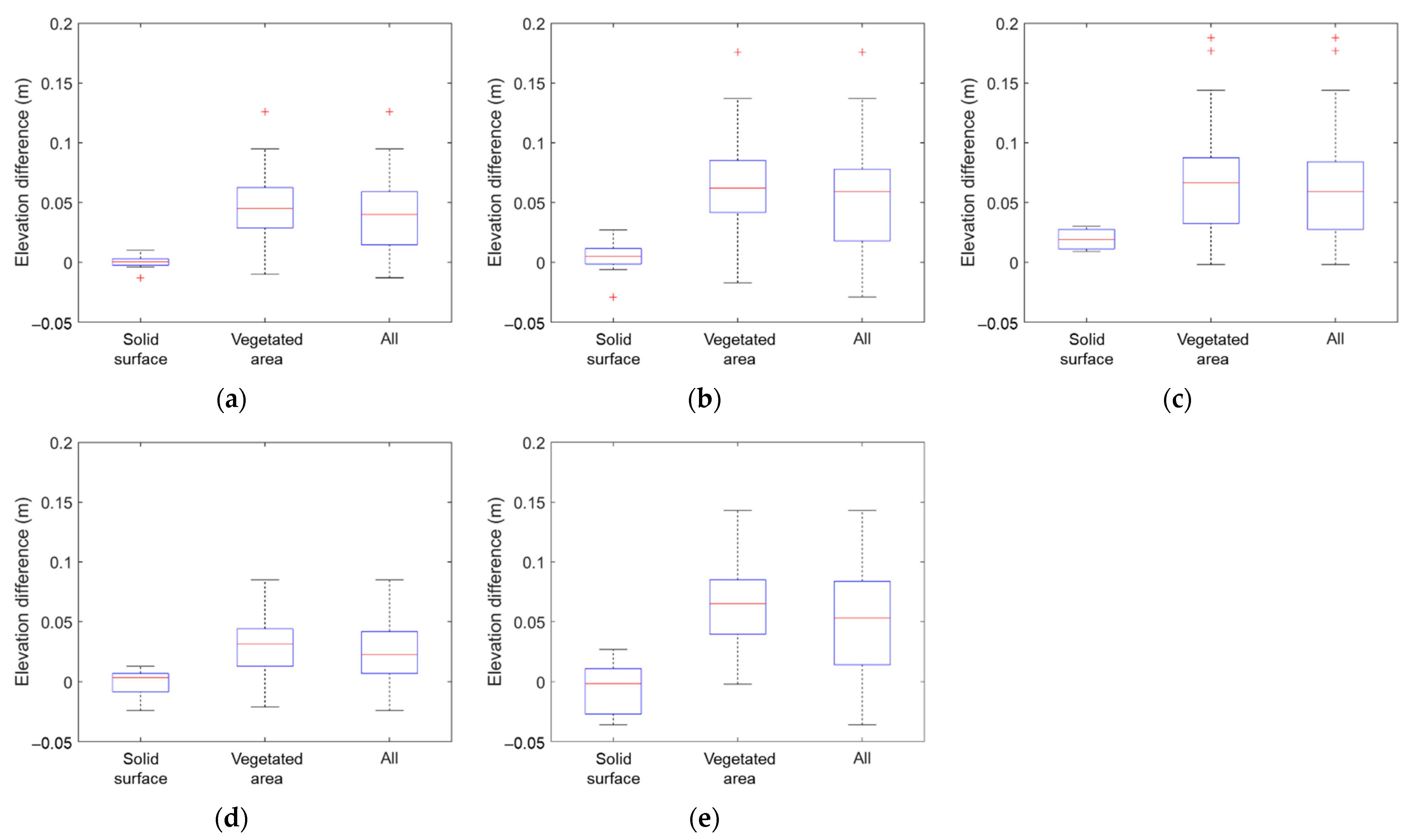

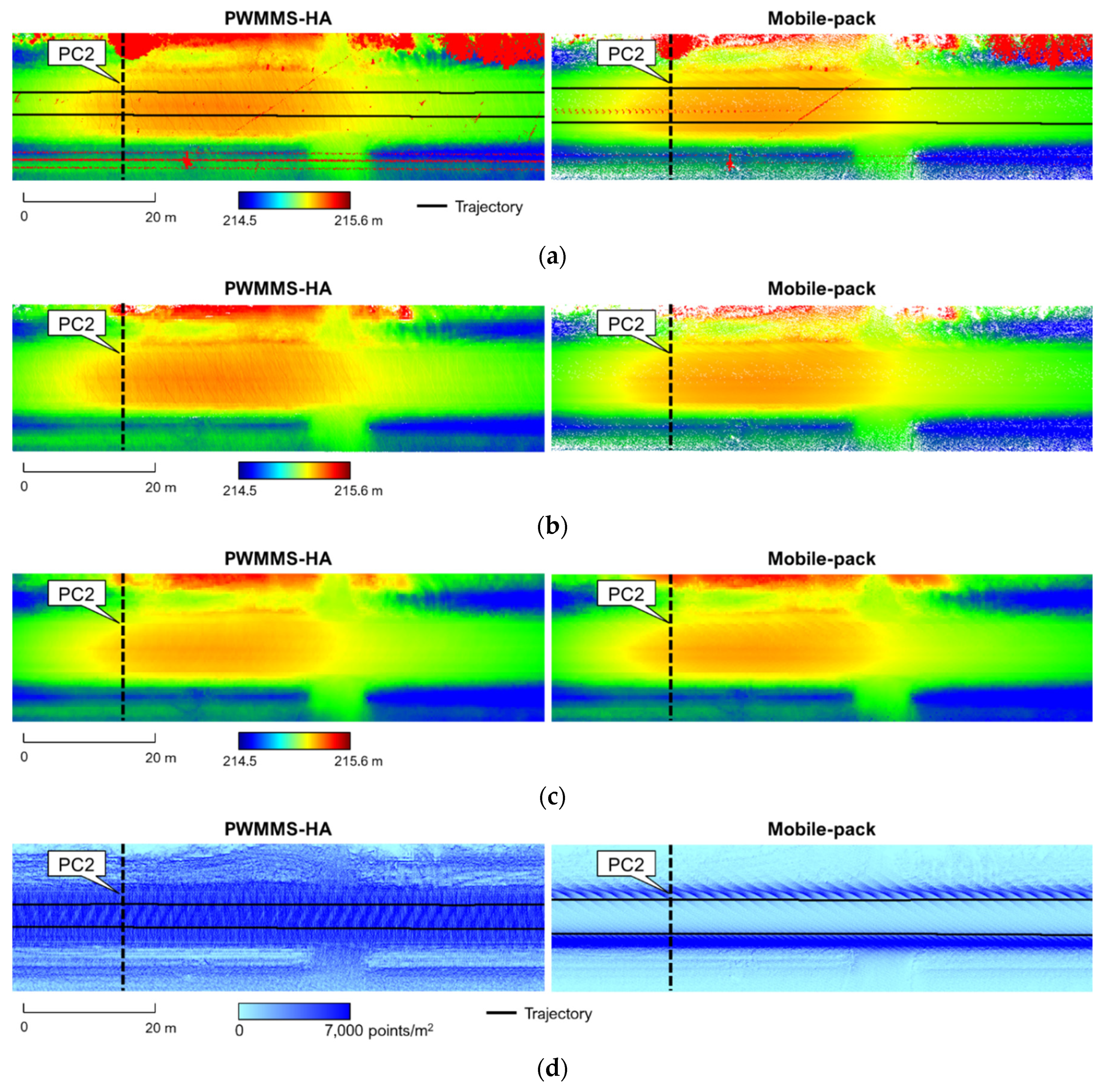

5.2. Comparative Performance of Different Ground MLMS Units

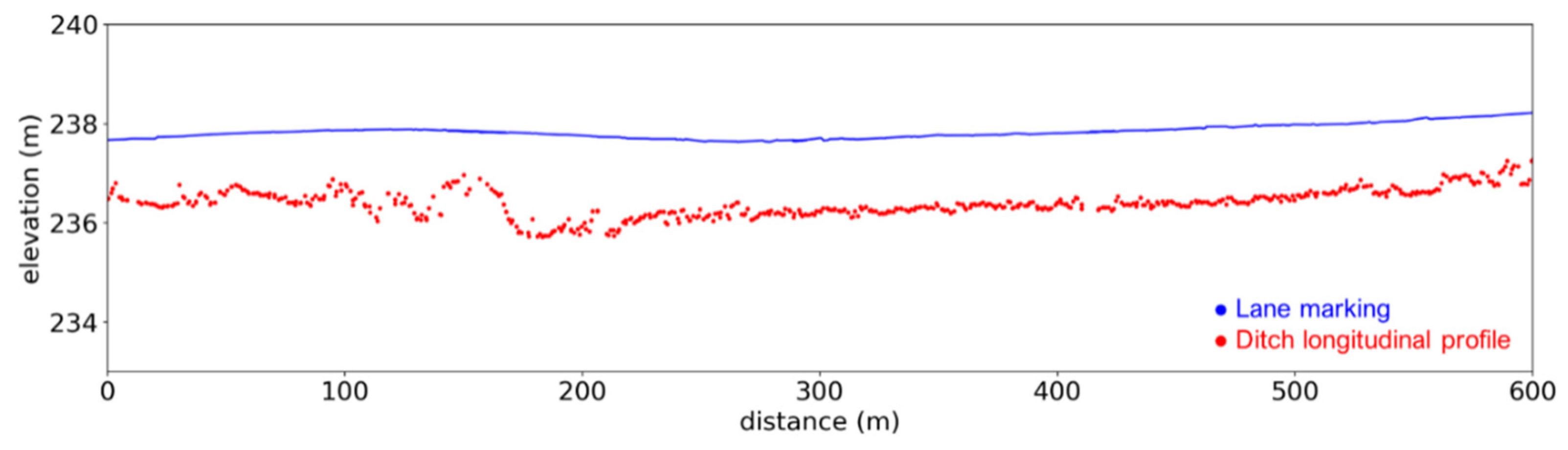

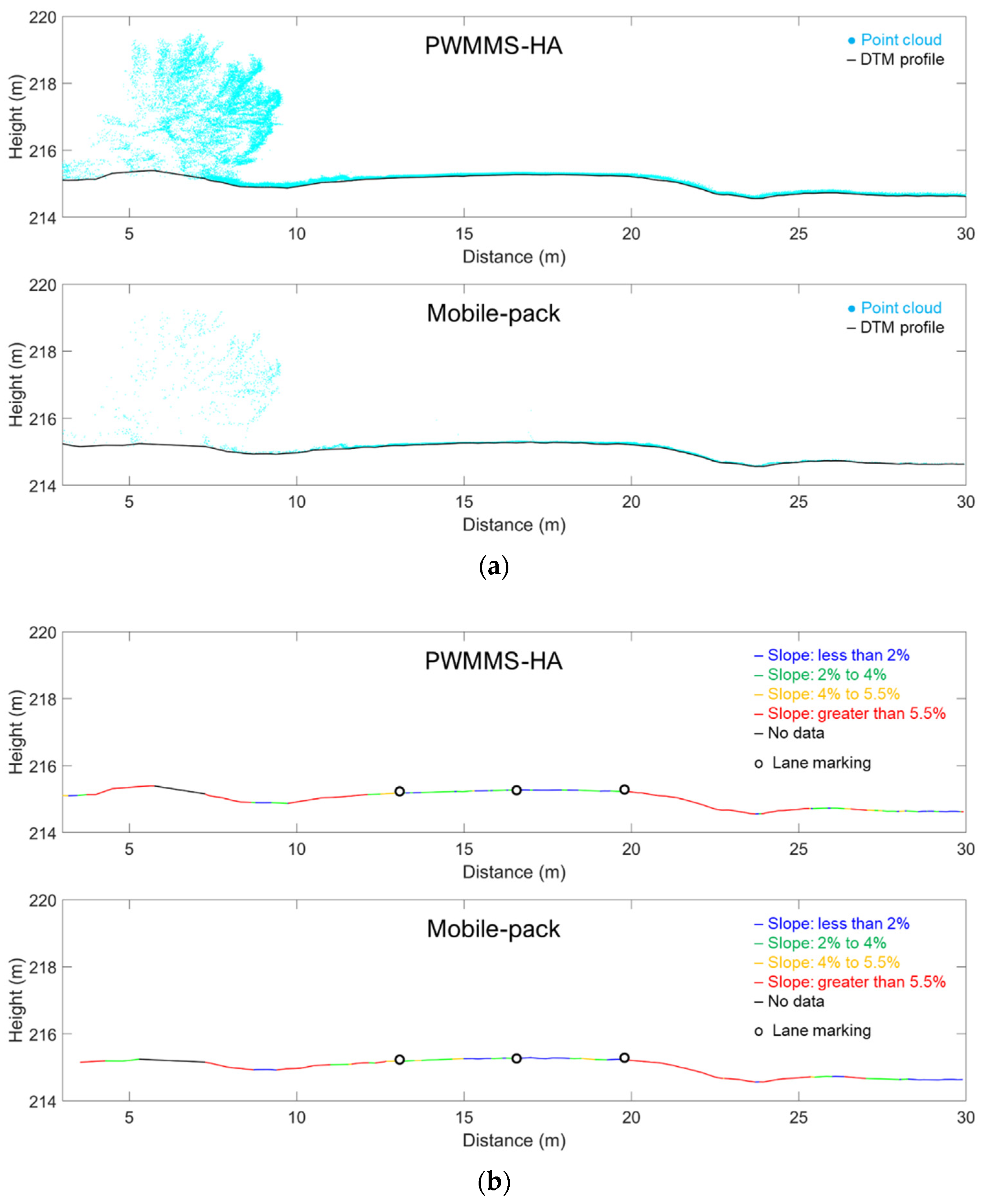

5.3. Ditch Line Characterization Using LiDAR Data

- bare earth point cloud and corresponding DTM;

- cross-sectional profiles in 3D and 2D, together with the slope evaluation results; and

- drainage network and longitudinal profiles.
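As a rough illustration of the slope evaluation listed above, the following sketch estimates the two side slopes of a ditch from a single 2D cross-sectional profile. It is not the authors' procedure: splitting the profile at its lowest point, fitting a straight line to each side, and the names `side_slope_ratio` and `evaluate_profile` are all assumptions made for this example.

```python
import numpy as np

def side_slope_ratio(offsets, elevations):
    """Least-squares slope of one ditch side as a horizontal:vertical ratio."""
    grade = np.polyfit(offsets, elevations, 1)[0]  # rise over run
    return float("inf") if grade == 0 else abs(1.0 / grade)

def evaluate_profile(offsets, elevations):
    """Split a profile at its lowest point and evaluate both side slopes.

    offsets: across-ditch distances in metres.
    elevations: bare-earth elevations in metres at those offsets.
    Returns (invert_offset, left_ratio, right_ratio).
    """
    offsets = np.asarray(offsets, dtype=float)
    elevations = np.asarray(elevations, dtype=float)
    order = np.argsort(offsets)
    offsets, elevations = offsets[order], elevations[order]
    i = int(np.argmin(elevations))  # assumed ditch invert: the lowest profile point
    if i == 0 or i == len(offsets) - 1:
        raise ValueError("lowest point lies at the profile edge; no two-sided ditch found")
    left = side_slope_ratio(offsets[: i + 1], elevations[: i + 1])
    right = side_slope_ratio(offsets[i:], elevations[i:])
    return offsets[i], left, right

# Synthetic profile (hypothetical values, not data from the study).
demo_offsets = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
demo_elevations = [1.5, 1.0, 0.5, 0.0, 0.25, 0.5, 0.75]
invert, left, right = evaluate_profile(demo_offsets, demo_elevations)
print(f"invert at {invert:.1f} m, side slopes {left:.1f}:1 and {right:.1f}:1")
```

A line fit per side is only one option; noisy or vegetated profiles would likely need outlier handling before any slope threshold is applied.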

6. Discussion

6.1. Comparative Performance of Different MLMS Units

6.2. Potential of Mobile LiDAR Data for Flooded Region Detection and Flood Risk Assessment

7. Conclusions and Recommendations for Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | UAV | UGV | Backpack/Mobile-pack | PWMMS-HA | PWMMS-HA | PWMMS-UHA | PWMMS-UHA |
|---|---|---|---|---|---|---|---|
| GNSS/INS Sensors | Applanix APX15v3 | NovAtel SPAN-IGM-S1 | NovAtel SPAN-CPT | Applanix POS LV 220 | | NovAtel ProPak6; IMU-ISA-100C | |
| GNSS/INS Sensor Weight | 0.06 kg | 0.54 kg | 2.28 kg | 2.40 + 2.50 kg | | 1.79 + 5.00 kg | |
| Positional Accuracy | 2–5 cm | 2–3 cm | 1–2 cm | 2–5 cm | | 1–2 cm | |
| Attitude Accuracy (Roll/Pitch) | 0.025° | 0.006° | 0.015° | 0.015° | | 0.003° | |
| Attitude Accuracy (Heading) | 0.08° | 0.02° | 0.03° | 0.025° | | 0.004° | |
| LiDAR Sensors | Velodyne VLP-32C | Velodyne VLP-16 High-Res | Velodyne VLP-16 High-Res | Velodyne VLP-16 High-Res | Velodyne HDL-32E | Riegl VUX 1HA | Z+F Profiler 9012 |
| LiDAR Sensor Weight | 0.925 kg | 0.830 kg | 0.830 kg | 0.830 kg | 1.0 kg | 3.5 kg | 13.5 kg |
| No. of Channels | 32 | 16 | 16 | 16 | 32 | 1 | 1 |
| Pulse Repetition Rate | 600,000 points/s (single return) | ~300,000 points/s (single return) | ~300,000 points/s (single return) | ~300,000 points/s (single return) | ~695,000 points/s (single return) | Up to 1,000,000 points/s | Up to 1,000,000 points/s |
| Maximum Range | 200 m | 100 m | 100 m | 100 m | 100 m | 135 m | 119 m |
| Range Accuracy | 3 cm | 3 cm | 3 cm | 3 cm | cm | 5 mm | 2 mm |
| MLMS Cost (USD) | ~$60,000 | ~$37,000 | ~$36,000 | ~$190,000 | | ~$320,000 | |

| Sensor | Parameter | UAV | UGV | Backpack/Mobile-pack | PWMMS-HA | PWMMS-UHA |
|---|---|---|---|---|---|---|
| LiDAR units | Lever Arm | ±1.2–1.5 cm | ±1.0–1.3 cm | ±0.5–0.8 cm | ±0.8–1.8 cm | ±0.5–0.6 cm |
| LiDAR units | Boresight | ±0.02–0.04° | ±0.02–0.08° | ±0.02–0.03° | ±0.02–0.05° | ±0.01–0.02° |
| Camera units | Lever Arm | ±2.7–5.4 cm | ±3.7–6.5 cm | ±3.0–4.9 cm | ±3.8–6.6 cm | ±3.1–6.0 cm |
| Camera units | Boresight | ±0.03–0.04° | ±0.12–0.14° | ±0.08–0.12° | ±0.07–0.14° | ±0.06–0.11° |

| | UAV | UGV | Backpack/Mobile-pack | PWMMS-HA | PWMMS-UHA |
|---|---|---|---|---|---|
| Suggested sensor-to-object distance | 50 m | 5 m | 5 m | 30 m | 30 m |
| Corresponding accuracy | ±5–6 cm | ±2–4 cm | ±2–3 cm | ±2–3 cm | ±1–2 cm |
| Accuracy at 50 m | ±5–6 cm | ±3–7 cm | ±3–4 cm | ±3–6 cm | ±2–3 cm |

| ID | Location | Data Collection Date | System | Number of Tracks | Average Speed (mph) | Data Acquisition Time (min) | Length (miles) |
|---|---|---|---|---|---|---|---|
| A-1 | CR500N | 13 March 2021 | UAV | 4 | 8 | 12 | 0.4 |
| A-2 | CR500N | 26 March 2021 | PWMMS-HA | 2 | 29 | 4 | 0.5 |
| A-3 | CR500N | 26 March 2021 | Mobile-pack | 2 | 20 | 4 | 0.5 |
| B-1 | McCormick Rd. and Cherry Ln. | 22 December 2020 | PWMMS-HA | 2 | 20 | 10 | 1.6 |
| B-2 | McCormick Rd. and Cherry Ln. | 22 December 2020 | PWMMS-UHA | 2 | 20 | 10 | 1.6 |
| B-3 | McCormick Rd. and Cherry Ln. | 22 December 2020 | UGV | 4 | 4 | 30 | 0.5 |
| B-4 | McCormick Rd. and Cherry Ln. | 22 December 2020 | Backpack | 4 | 3 | 32 | 0.5 |
| B-5 | McCormick Rd. and Cherry Ln. | 26 March 2021 | Mobile-pack | 2 | 26 | 4 | 1.1 |
| C-1 | SR28 | 26 March 2021 | PWMMS-HA | 2 | 47 | 37 | 13.2 |
| C-2 | SR28 | 26 March 2021 | Mobile-pack | 2 | 50 (WB)/30 (EB) | 35 | 13.2 |

| Dataset | Point Density, 25th Percentile (points/m²) | Point Density, Median (points/m²) | Point Density, 75th Percentile (points/m²) |
|---|---|---|---|
| A-1 (UAV) | 200 | 500 | 1000 |
| A-2 (PWMMS-HA) | 500 | 1800 | 6100 |
| A-3 (Mobile-pack) | 400 | 1200 | 3800 |
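A percentile summary like the one in the table above can be produced by gridding the point cloud in the horizontal plane and counting points per cell. The sketch below is one minimal way to do this and is not taken from the paper: the 1 m cell size, the choice to include only occupied cells, and the function name `density_percentiles` are assumptions.

```python
import numpy as np

def density_percentiles(xy, cell=1.0, percentiles=(25, 50, 75)):
    """Percentiles of point density (points/m^2) over occupied grid cells.

    xy: (N, 2) array of planimetric coordinates in metres.
    cell: grid cell size in metres (assumed value, not from the paper).
    """
    xy = np.asarray(xy, dtype=float)
    # Integer cell index of every point, measured from the minimum corner.
    idx = np.floor((xy - xy.min(axis=0)) / cell).astype(np.int64)
    # Number of points falling in each occupied cell.
    _, counts = np.unique(idx, axis=0, return_counts=True)
    density = counts / (cell * cell)
    return np.percentile(density, percentiles)

# Synthetic cloud (hypothetical): 40,000 points spread over a 20 m x 20 m patch.
rng = np.random.default_rng(0)
points = rng.uniform(0.0, 20.0, size=(40_000, 2))
p25, p50, p75 = density_percentiles(points)
print(f"25th: {p25:.0f}, median: {p50:.0f}, 75th: {p75:.0f} points/m^2")
```

Empty cells are ignored here, so the percentiles describe the surveyed surface rather than the bounding box; including empty cells would lower all three values.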

| Reference | Source | Number of Observations | | Parameter | Std. Dev. |
|---|---|---|---|---|---|
| UAV | PWMMS-HA | 111,973 | 0.083 | 0.028 | 2.615 |
| UAV | Mobile-pack | 55,742 | 0.064 | −0.008 | 2.864 |
| PWMMS-HA | Mobile-pack | 67,133 | 0.043 | −0.029 | 1.671 |

| Reference | Source | Number of Observations | M3C2 Distance Mean (m) | M3C2 Distance Std. Dev. (m) | M3C2 Distance RMSE (m) | M3C2 Distance Median (m) |
|---|---|---|---|---|---|---|
| UAV | PWMMS-HA | 93,124 | 0.034 | 0.068 | 0.076 | 0.030 |
| UAV | Mobile-pack | 50,123 | 0.001 | 0.074 | 0.074 | −0.004 |
| PWMMS-HA | Mobile-pack | 63,408 | −0.028 | 0.062 | 0.068 | −0.027 |
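The mean, standard deviation, and RMSE columns above are linked by the identity RMSE² = mean² + std², which holds exactly for the population standard deviation and almost exactly for sample sizes this large. The short check below recomputes the RMSE from the other two columns. It is an illustrative sanity check rather than part of the original analysis, and the helper name `rmse_from_moments` is made up for this example.

```python
import math

# (reference, source): (mean, std_dev, reported_rmse), all in metres,
# copied from the M3C2 distance table above.
ROWS = {
    ("UAV", "PWMMS-HA"): (0.034, 0.068, 0.076),
    ("UAV", "Mobile-pack"): (0.001, 0.074, 0.074),
    ("PWMMS-HA", "Mobile-pack"): (-0.028, 0.062, 0.068),
}

def rmse_from_moments(mean, std_dev):
    """RMSE implied by a mean offset and a standard deviation."""
    return math.hypot(mean, std_dev)

for (ref, src), (mean, std_dev, reported) in ROWS.items():
    derived = rmse_from_moments(mean, std_dev)
    print(f"{ref} vs {src}: derived {derived:.3f} m, reported {reported:.3f} m")
```

The decomposition shows how much of each RMSE comes from a systematic offset (the mean) and how much from scatter (the standard deviation).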

| Reference | Source | Number of Observations | | Parameter | Std. Dev. |
|---|---|---|---|---|---|
| PWMMS-HA | PWMMS-UHA | 13,610 | 0.010 | −0.013 | 8.711 |
| PWMMS-HA | UGV | 4737 | 0.021 | 0.007 | 3.385 |
| PWMMS-HA | Backpack | 12,480 | 0.012 | −0.027 | 1.137 |
| PWMMS-HA | Mobile-pack | 11,539 | 0.018 | −0.019 | 1.750 |

| Reference | Source | Number of Observations | M3C2 Distance Mean (m) | M3C2 Distance Std. Dev. (m) | M3C2 Distance RMSE (m) | M3C2 Distance Median (m) |
|---|---|---|---|---|---|---|
| PWMMS-HA | PWMMS-UHA | 11,279 | −0.012 | 0.013 | 0.018 | −0.013 |
| PWMMS-HA | UGV | 4018 | 0.012 | 0.028 | 0.031 | 0.008 |
| PWMMS-HA | Backpack | 10,272 | −0.029 | 0.017 | 0.033 | −0.029 |
| PWMMS-HA | Mobile-pack | 10,261 | −0.021 | 0.022 | 0.031 | −0.022 |

| System | Platform | Pros | Cons |
|---|---|---|---|
| UAV | Aerial | | |
| UGV | Wheel-based | | |
| Backpack | Portable | | |
| Mobile-pack | Wheel-based | | |
| PWMMS-HA | Wheel-based | | |
| PWMMS-UHA | Wheel-based | | |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, Y.-C.; Manish, R.; Bullock, D.; Habib, A. Comparative Analysis of Different Mobile LiDAR Mapping Systems for Ditch Line Characterization. Remote Sens. 2021, 13, 2485. https://doi.org/10.3390/rs13132485
