A Scalable, Supervised Classification of Seabed Sediment Waves Using an Object-Based Image Analysis Approach

Abstract

1. Introduction

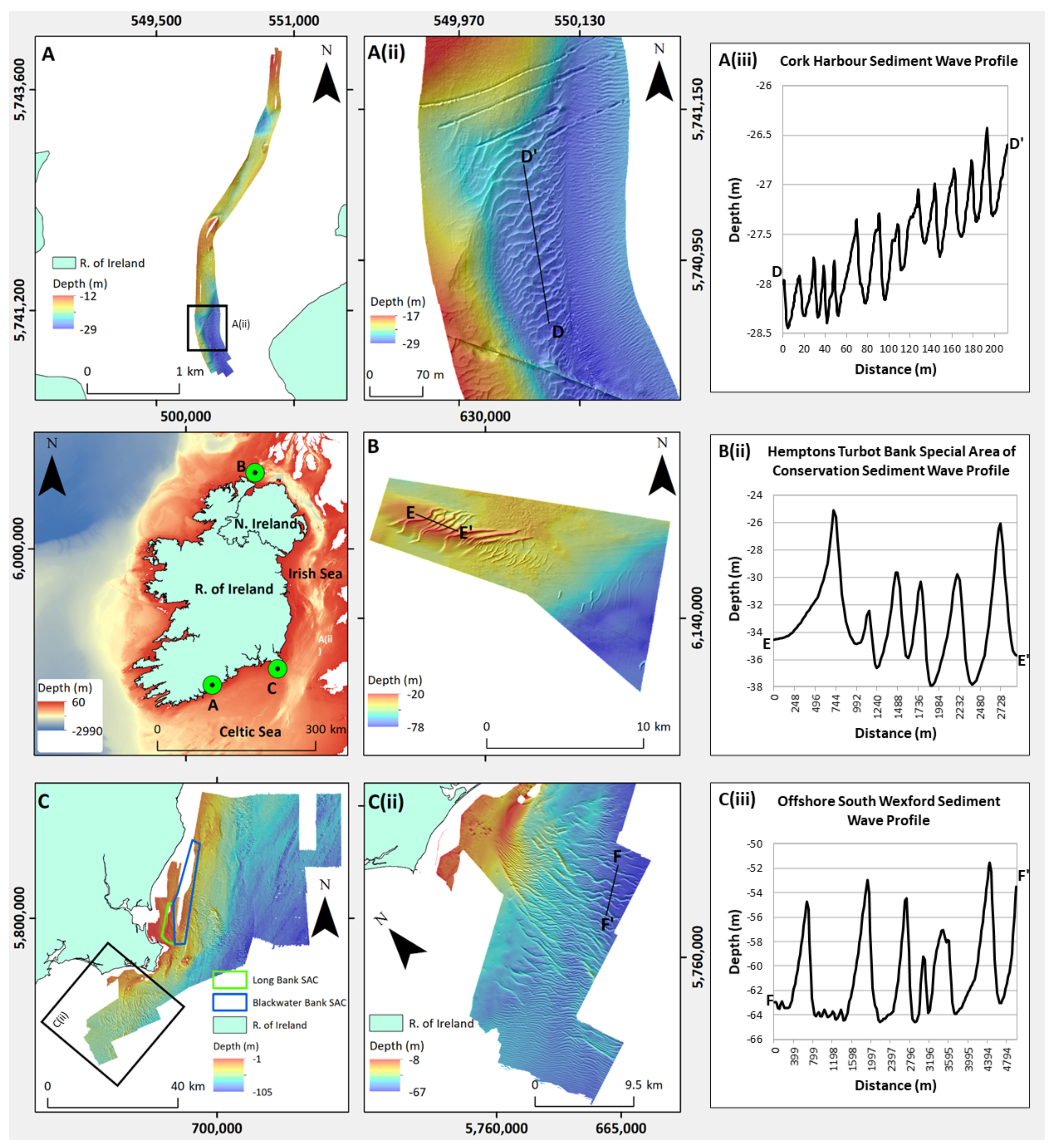

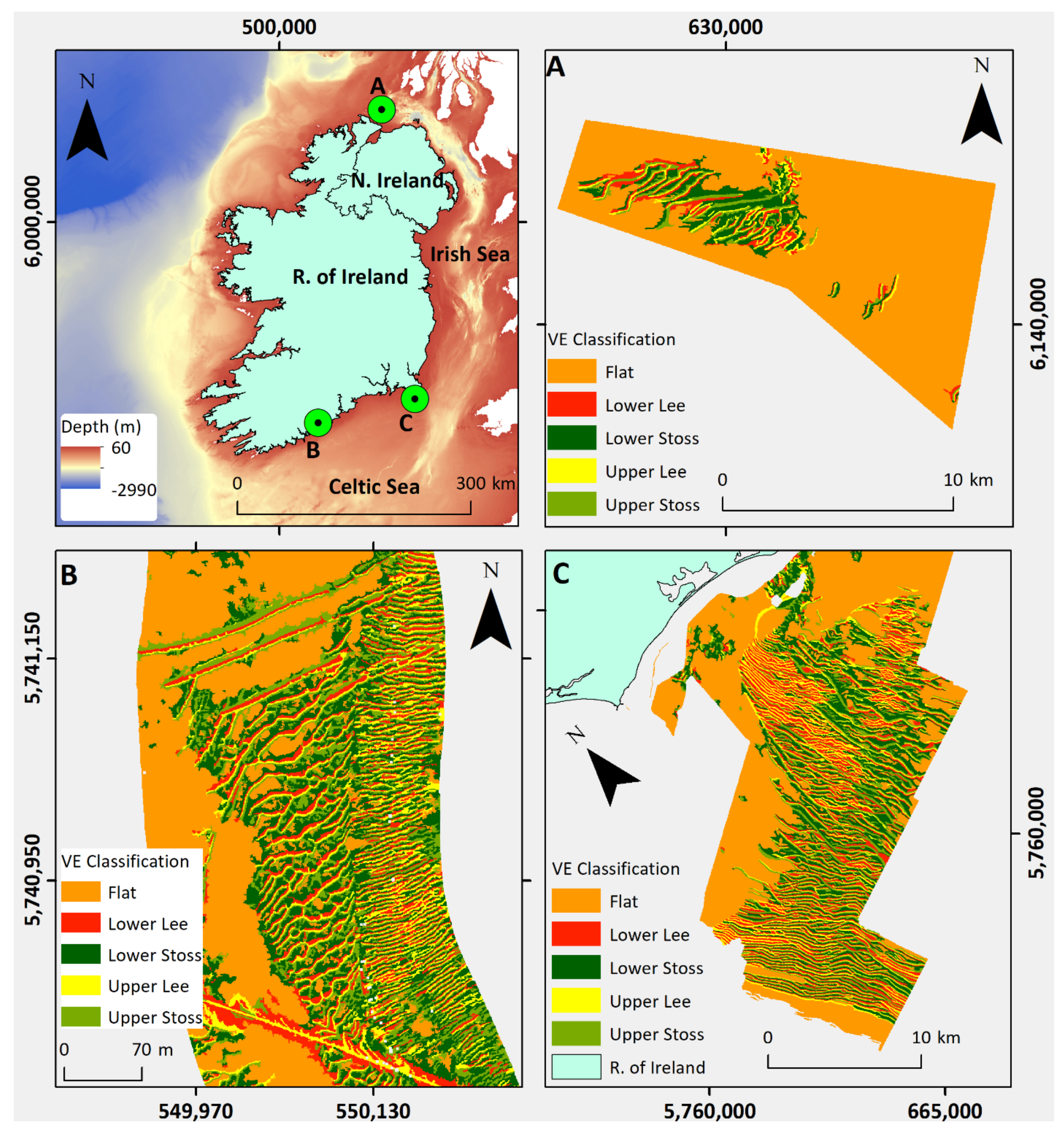

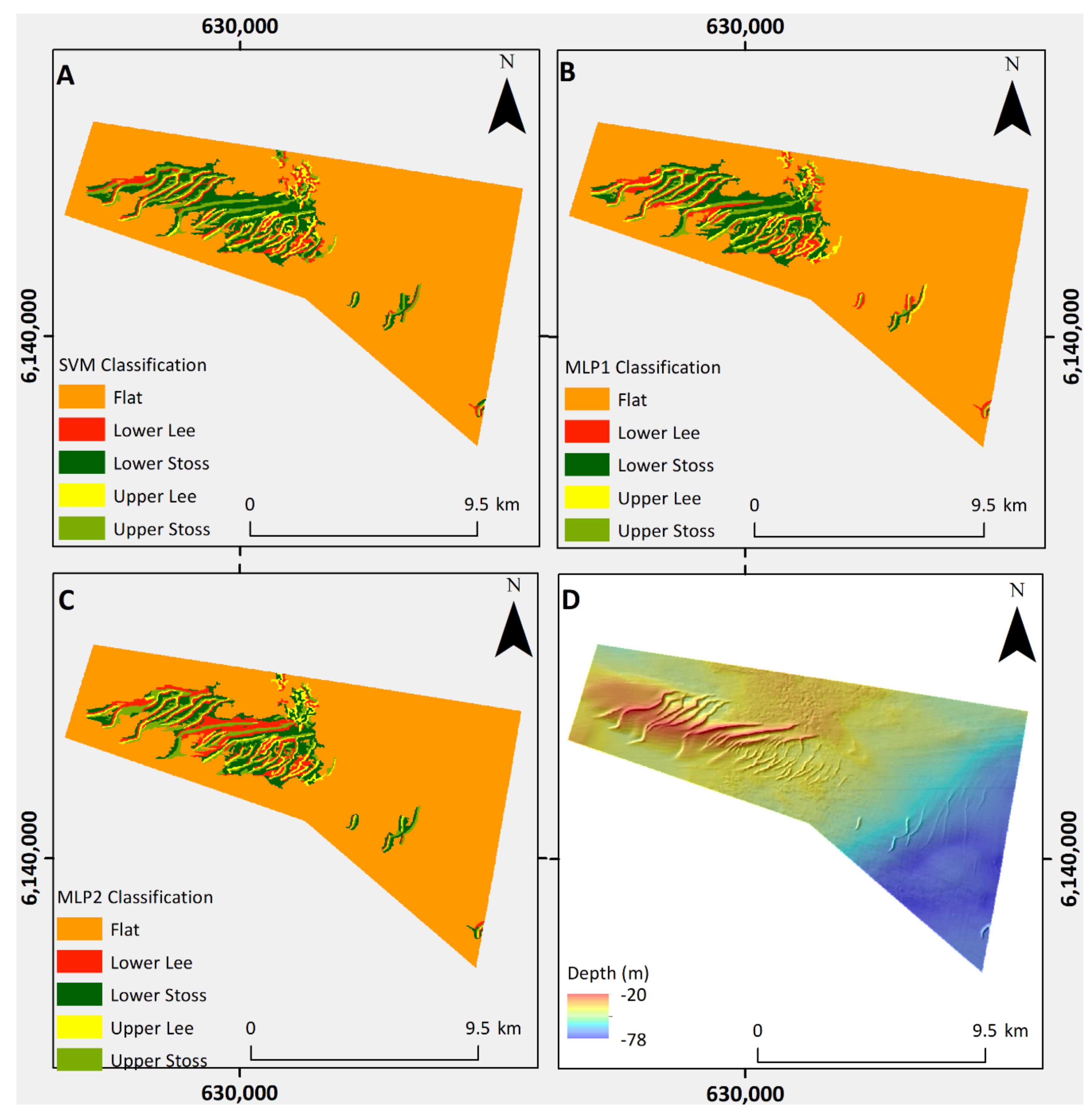

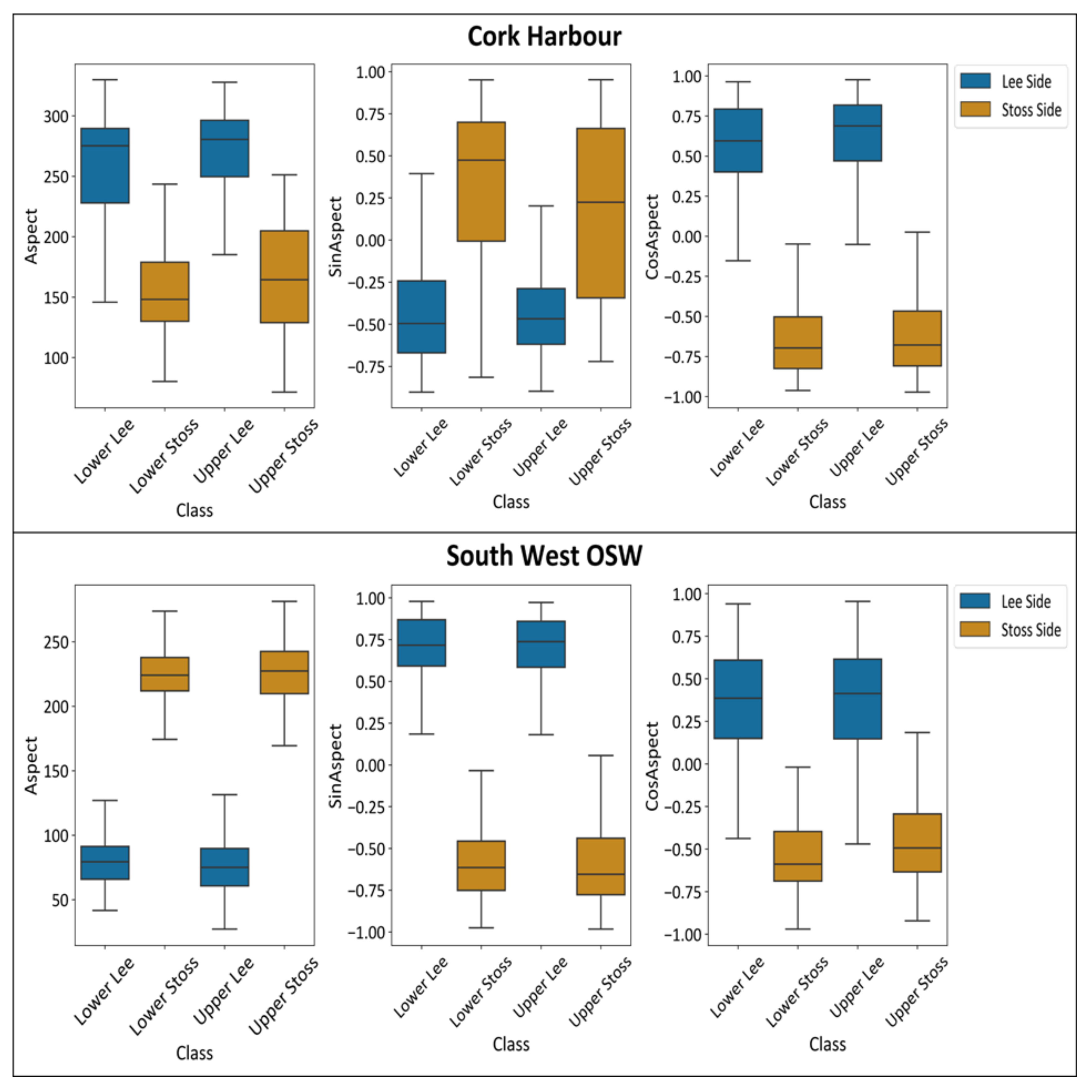

Study Sites

2. Materials and Methods

2.1. Multibeam Echosounder Data Acquisition and Processing

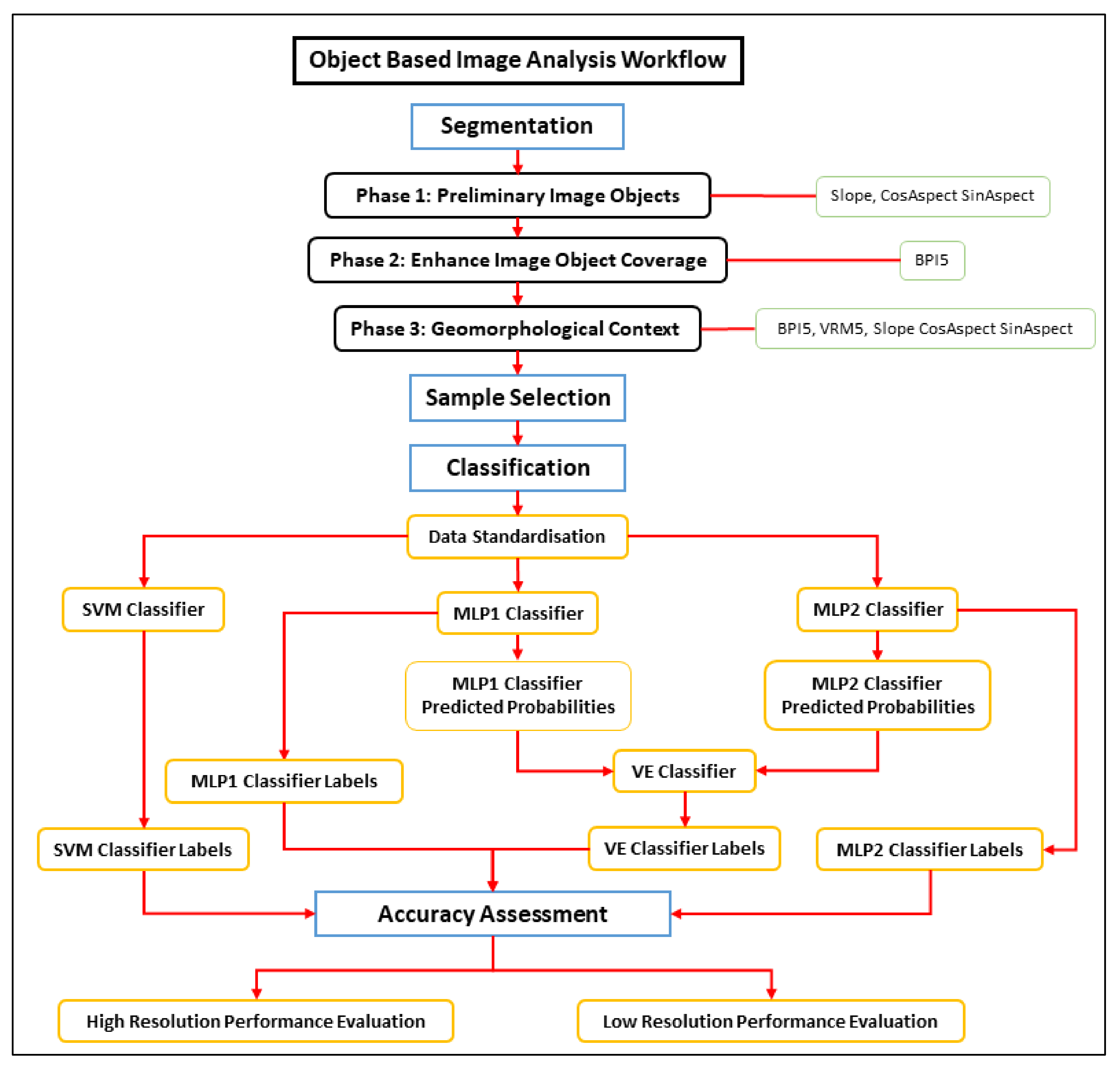

2.2. Object-Based Image Analysis

2.2.1. Segmentation

2.2.2. Sample Selection

2.2.3. Classification

Classifiers

2.2.4. Accuracy Assessment

K-Fold Cross-Validation

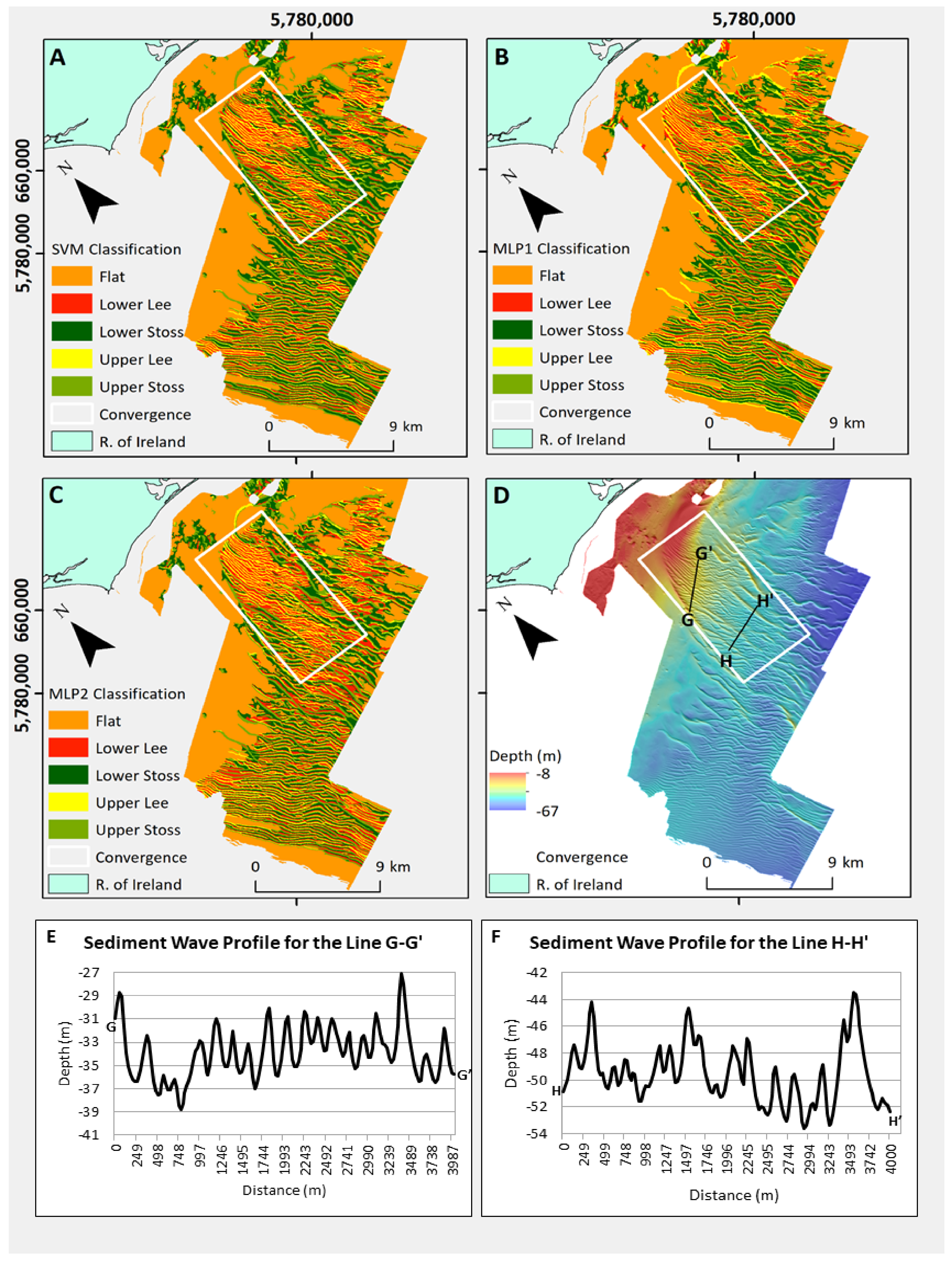

Hemptons Turbot Bank SAC and Offshore South Wexford Test Data

3. Results

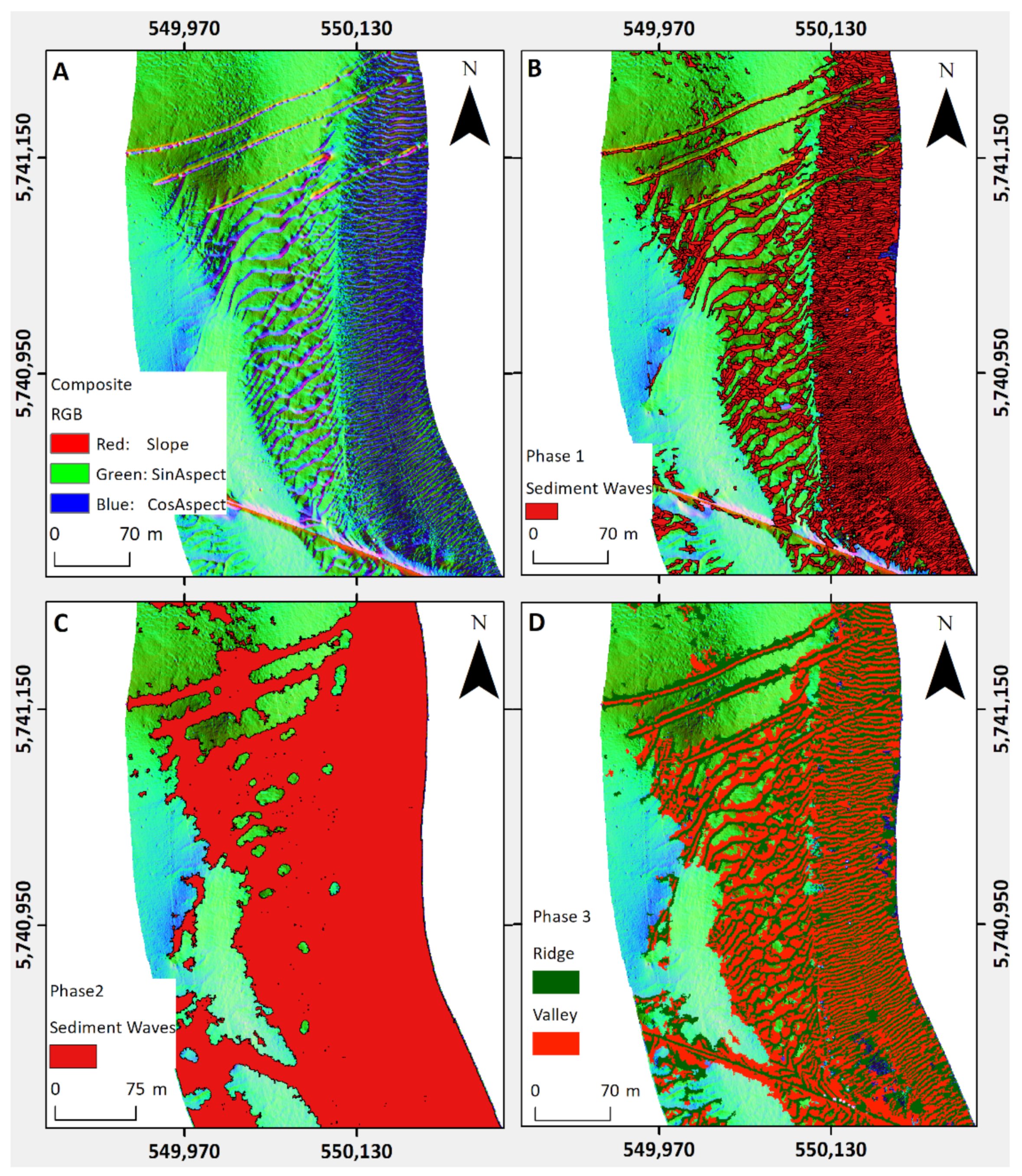

3.1. Segmentation

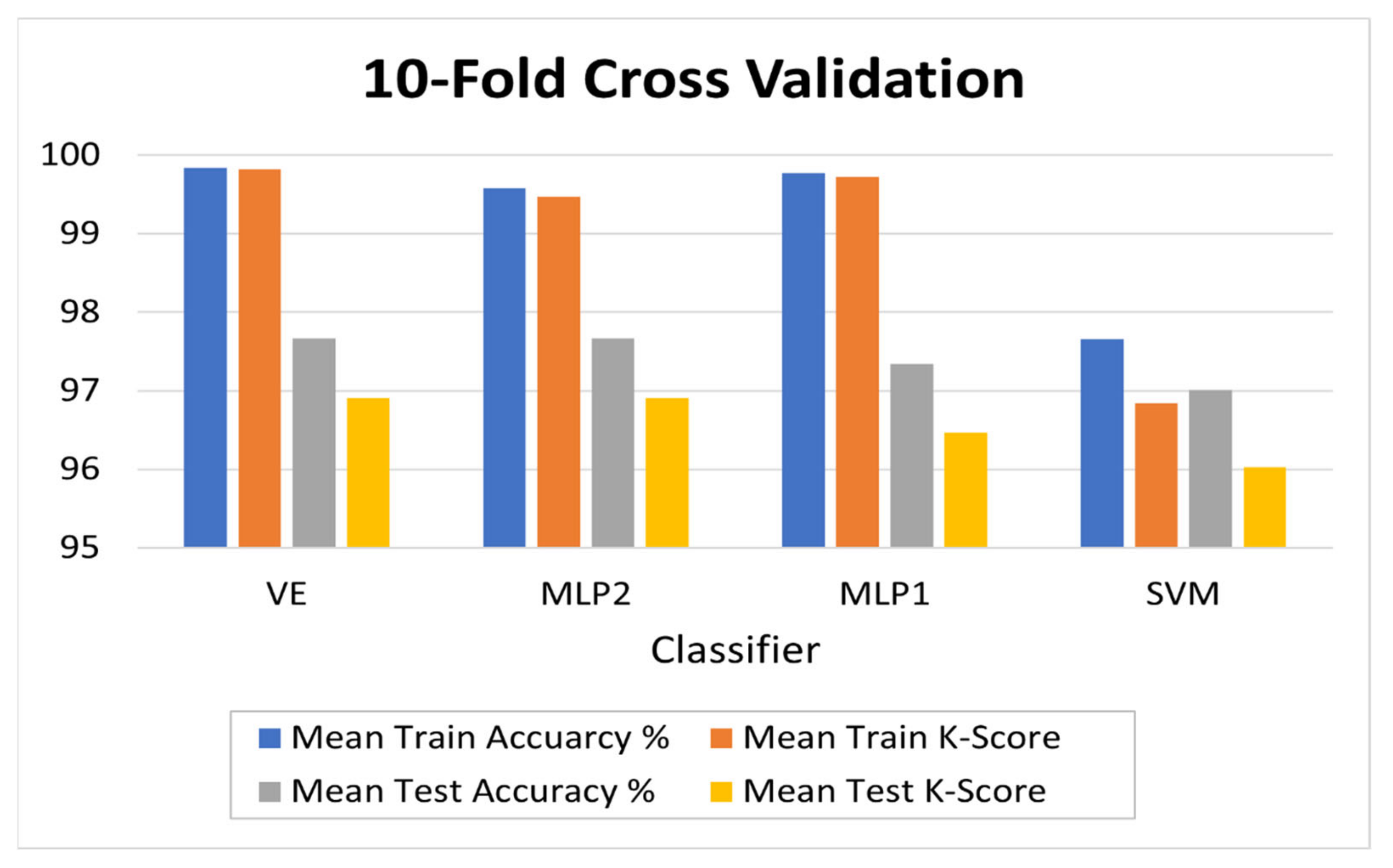

3.2. High-Resolution Performance Evaluation

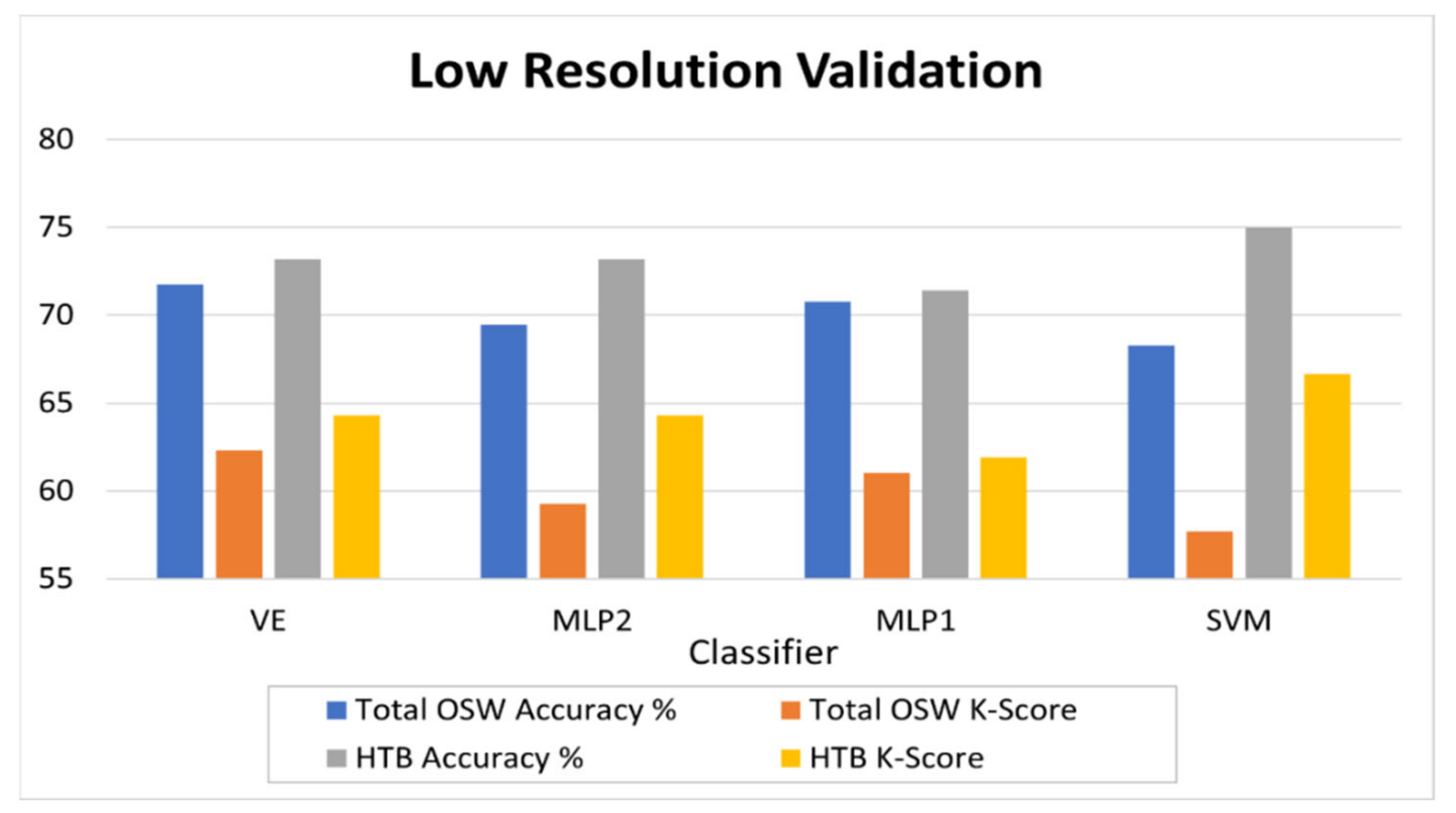

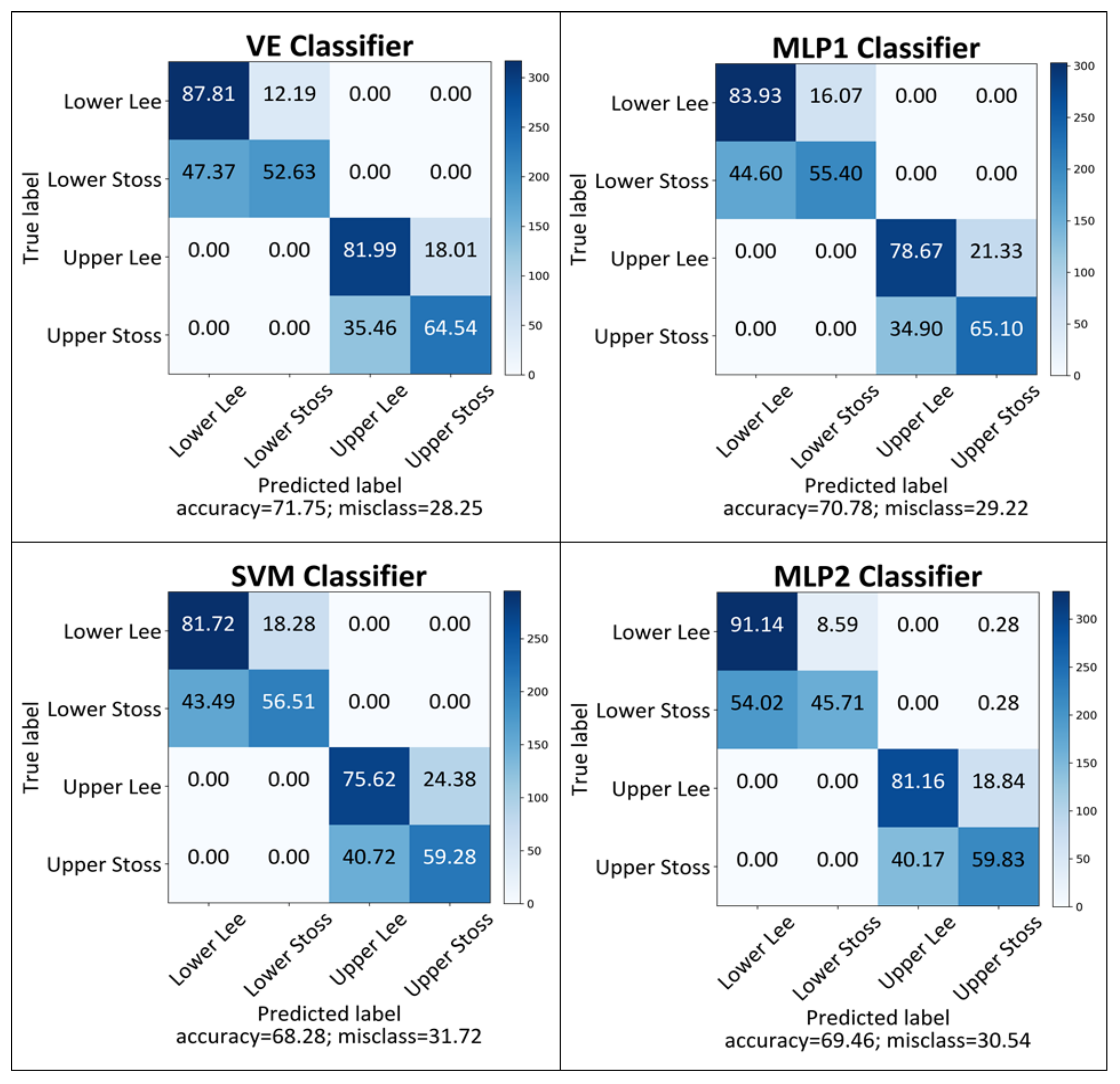

3.3. Low-Resolution Performance Evaluation

4. Discussion

4.1. Accuracy of Each Classifier on High-Spatial-Resolution Data

4.2. Accuracy of Each Classifier on Low-Spatial-Resolution Data

4.3. Implications for Suitability for a Scale-Standardised Object-Based Technique

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| EMODnet Dataset Name | Corresponding Region | Resolution (m) |
|---|---|---|
| CV16 CelticSeaIreland 14 m | South–West Offshore South Wexford (OSW) | 25 |
| HRDTM IrishSeaWexford 14 m | North–East OSW | 25 |
| 366_NorthAtlanticOceanDonegal | Hemptons Turbot Bank Special Area of Conservation (HTB SAC) | 25 |

| Bathymetric Derivatives | Scale (Pixel) |
|---|---|
| Aspect | 3 |
| Bathymetric Position Index (BPI) * | 3, 5 *, 9, 25 |
| CosAspect (Northness) * | 3 |
| Curvature | 3 |
| Planiform Curvature | 3 |
| Profile Curvature | 3 |
| Relative Topographic Position (RTP) | 3 |
| SinAspect (Eastness) * | 3 |
| Slope * | 3 |
| Vector Terrain Ruggedness (VRM) * | 3, 5 *, 9, 25 |
| Zeromean | 3, 9 |
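
Two of the derivatives in the table above lend themselves to a short illustration. The sketch below is not the workflow used in this study: it assumes aspect is given in degrees clockwise from north, and it uses a simplified square-window BPI (cell value minus the mean of its neighbours) rather than the annulus-based form found in dedicated terrain tools. The function names `northness_eastness` and `bpi`, and the toy `depths` grid, are hypothetical.

```python
import math


def northness_eastness(aspect_deg):
    """Return (northness, eastness) = (cos, sin) of an aspect in degrees."""
    a = math.radians(aspect_deg)
    return math.cos(a), math.sin(a)


def bpi(grid, row, col, window):
    """Simplified BPI: cell value minus the mean of its square neighbourhood.

    `window` is an odd width in pixels, 3 or larger (e.g. 3, 5, 9, 25).
    Neighbours that fall outside the grid are skipped, so edge cells use a
    truncated neighbourhood. This is an assumption of the sketch.
    """
    half = window // 2
    neighbours = []
    for r in range(row - half, row + half + 1):
        for c in range(col - half, col + half + 1):
            if (r, c) == (row, col):
                continue  # the focal cell is not part of its own neighbourhood
            if 0 <= r < len(grid) and 0 <= c < len(grid[0]):
                neighbours.append(grid[r][c])
    return grid[row][col] - sum(neighbours) / len(neighbours)


# Toy 3 x 3 depth grid (metres, negative down) with a 2 m high crest in the centre.
depths = [
    [-30.0, -30.0, -30.0],
    [-30.0, -28.0, -30.0],
    [-30.0, -30.0, -30.0],
]
crest_bpi = bpi(depths, 1, 1, window=3)        # positive: cell sits above its surroundings
north, east = northness_eastness(90.0)         # an east-facing slope
```

With depths stored as negative values, a positive BPI marks a cell that is shallower than its surroundings (a crest) and a negative BPI marks a trough. Larger windows respond to broader features, which is one reason a derivative may be computed at several pixel scales.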

| Model | Statistic | Train Acc Score | Test Acc Score | Acc Score Diff | Train K-Score | Test K-Score | K-Score Diff |
|---|---|---|---|---|---|---|---|
| SVM | Mean | 97.66 | 97.01 | 1.57 | 96.84 | 96.03 | 2.10 |
| SVM | St Dev | 0.25 | 2.18 | 1.59 | 0.31 | 2.92 | 2.15 |
| MLP2 | Mean | 99.58 | 97.67 | 2.23 | 99.47 | 96.91 | 2.98 |
| MLP2 | St Dev | 0.23 | 1.60 | 1.34 | 0.33 | 2.14 | 1.78 |
| VE | Mean | 99.84 | 97.67 | 2.27 | 99.82 | 96.91 | 3.00 |
| VE | St Dev | 0.21 | 1.60 | 1.50 | 0.24 | 2.14 | 2.03 |
| MLP1 | Mean | 99.77 | 97.34 | 2.48 | 99.72 | 96.47 | 3.29 |
| MLP1 | St Dev | 0.34 | 1.39 | 1.37 | 0.43 | 1.87 | 1.84 |
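
The table above reports the mean and standard deviation of accuracy and K-score across cross-validation folds. As a rough illustration of how such statistics can be derived, the sketch below assumes the K-score denotes Cohen's kappa and that folds are contiguous and near-equal in size. The helper names, the class labels `"wave"` and `"flat"`, and the per-fold accuracies are all hypothetical and are not taken from the study.

```python
from collections import Counter
from statistics import mean, stdev


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the reference label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def cohen_kappa(y_true, y_pred):
    """Cohen's kappa: agreement corrected for chance, (p_o - p_e) / (1 - p_e).

    Undefined (division by zero) when chance agreement p_e equals 1.
    """
    n = len(y_true)
    p_o = accuracy(y_true, y_pred)
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    # Chance agreement from the marginal class frequencies of both label sets.
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    return (p_o - p_e) / (1 - p_e)


def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) for k contiguous, near-equal folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size


# Hypothetical labels for one fold.
y_true = ["wave", "wave", "flat", "flat"]
y_pred = ["wave", "flat", "flat", "flat"]
fold_kappa = cohen_kappa(y_true, y_pred)

# Hypothetical per-fold test accuracies, summarised as mean and sample st. dev.
fold_accuracies = [0.95, 0.97, 0.99]
summary = (mean(fold_accuracies), stdev(fold_accuracies))
```

In practice the samples would normally be shuffled (and often stratified by class) before splitting; the contiguous split here only keeps the sketch short.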

| Study Site | North OSW | South–East OSW | South–West OSW |
|---|---|---|---|
| VE Accuracy (%) | 66.48 | 67.06 | 76.43 |
| VE K-score | 0.55 | 0.56 | 0.69 |
| MLP1 Accuracy (%) | 65.66 | 67.99 | 74.52 |
| MLP1 K-score | 0.54 | 0.57 | 0.66 |
| MLP2 Accuracy (%) | 65.11 | 62.62 | 75.48 |
| MLP2 K-score | 0.53 | 0.50 | 0.67 |
| SVM Accuracy (%) | 62.36 | 65.89 | 73.25 |
| SVM K-score | 0.50 | 0.55 | 0.64 |
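
The VE rows sit alongside the SVM, MLP1 and MLP2 rows in the tables above. Assuming VE stands for a voting ensemble that combines the other three classifiers, one common way to combine label predictions is hard (majority) voting, sketched below. Whether the study used hard or soft voting is not stated in this excerpt, so this is an illustration of the general technique only; `hard_vote` and the example labels are hypothetical.

```python
from collections import Counter


def hard_vote(predictions):
    """Majority vote across classifiers.

    `predictions` is a list of per-classifier label lists of equal length.
    Ties are resolved in favour of the label seen first, i.e. the earliest
    classifier in the list that voted for one of the tied labels.
    """
    voted = []
    for labels in zip(*predictions):
        voted.append(Counter(labels).most_common(1)[0][0])
    return voted


# Hypothetical per-object predictions from three classifiers.
svm_pred = ["wave", "flat", "wave"]
mlp1_pred = ["wave", "wave", "flat"]
mlp2_pred = ["flat", "wave", "flat"]

ensemble_pred = hard_vote([svm_pred, mlp1_pred, mlp2_pred])
```

With an odd number of voters and two classes a tie cannot occur, so the tie-breaking rule only matters when more classes or an even number of classifiers are involved.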

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Summers, G.; Lim, A.; Wheeler, A.J. A Scalable, Supervised Classification of Seabed Sediment Waves Using an Object-Based Image Analysis Approach. Remote Sens. 2021, 13, 2317. https://doi.org/10.3390/rs13122317