3.1. Experimental Overview

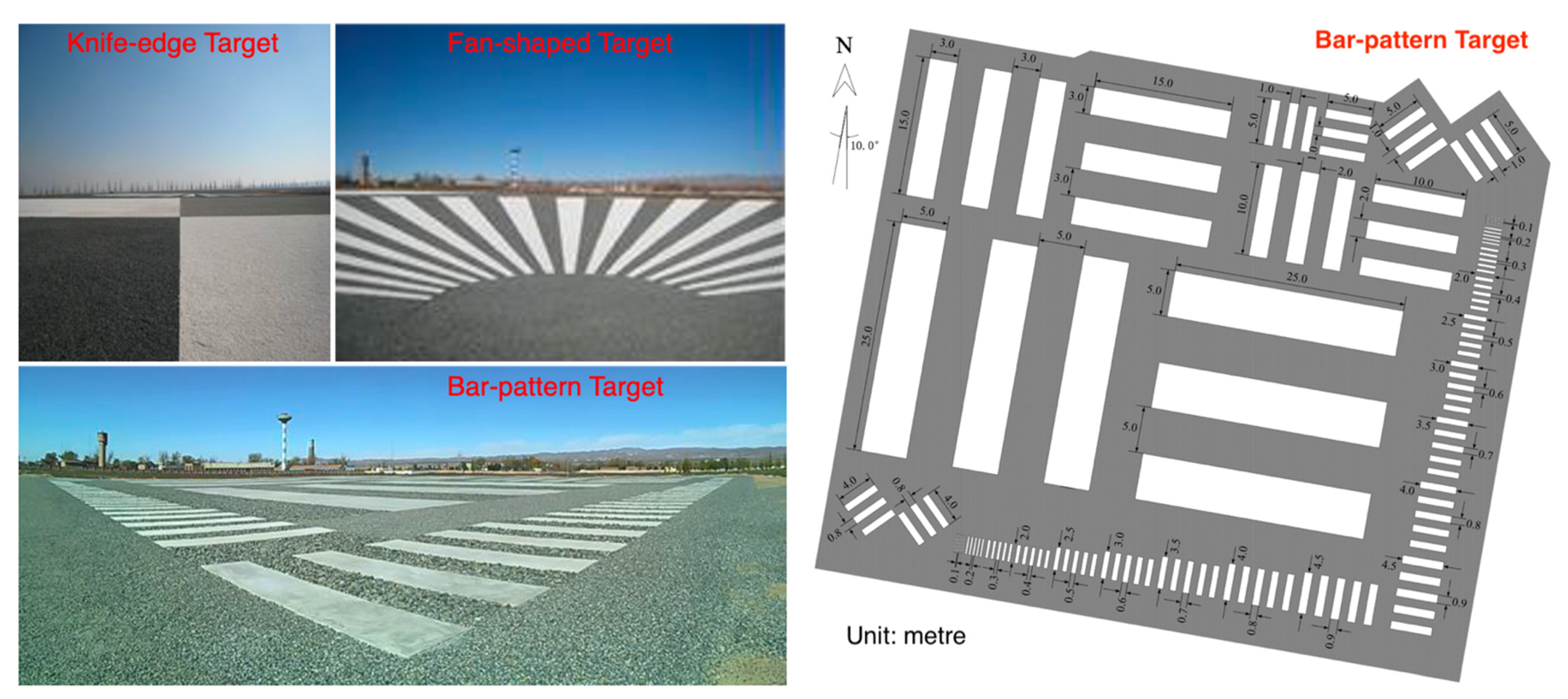

The Baotou Geocal site (50 km from Baotou city), located at 40°51′06.00″N, 109°37′44.14″E, Inner Mongolia, China, was used as the test site in this study so that our assessments can be compared against other published ones. The permanent artificial optical targets (see Figure 5) at the Baotou Geocal site provide broad dynamic range, good uniformity, high stability and multi-function capabilities. Since 2013, the artificial optical targets have been successfully used for payload radiometric calibration and on-orbit performance assessment for a variety of international and domestic satellites. The artificial optical targets were set up on a flat area of approximately 300 km², with an average altitude of 1270 m. The Baotou Geocal site features a cold semi-arid climate with an average of ~300 clear-sky days every year, which makes it an ideal site for geo-calibration and validation work. Some illustrative photos (courtesy of [48]) of the artificial optical targets, including a knife-edge target, a fan-shaped target and a bar-pattern target, at the Baotou Geocal site, as well as the fully measured reference truth (provided by L. Ma [48] and Y. Zhou [49] in private correspondence) for the bar-pattern target, can be found in Figure 5.

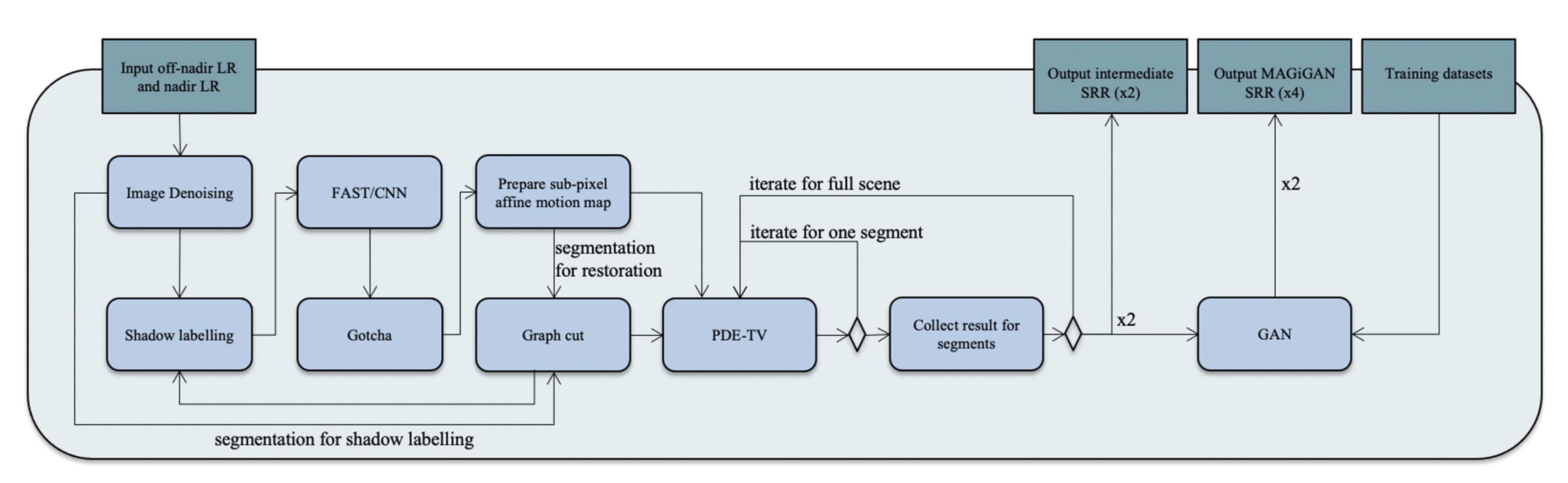

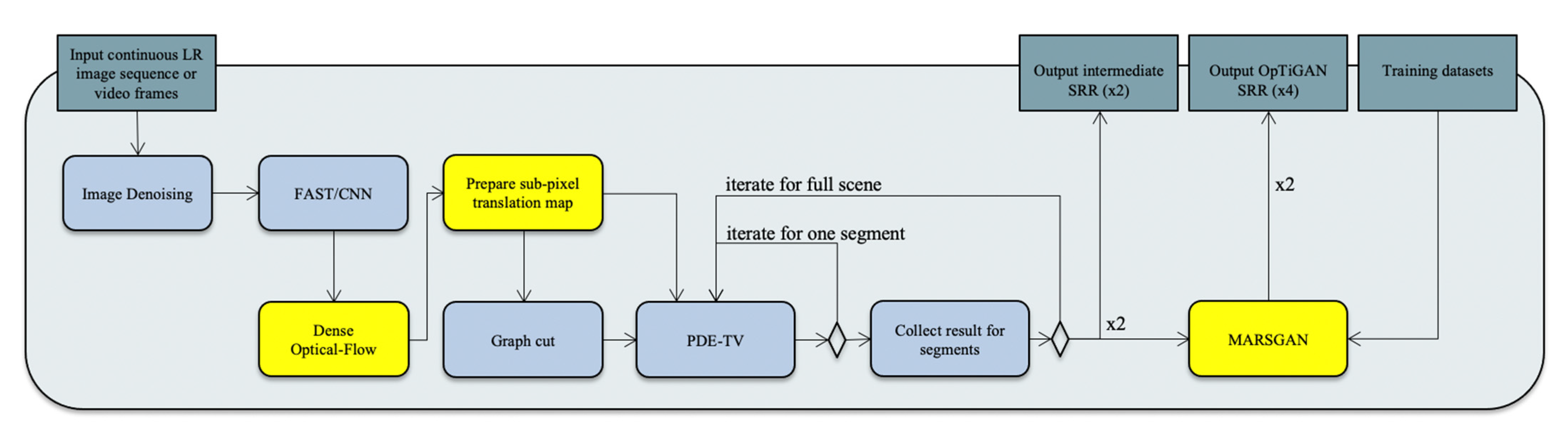

We tested the proposed OpTiGAN SRR system with three ultra-high-resolution satellite datasets, i.e., the 31 cm/pixel WorldView-3 PAN images, the 75 cm/pixel Deimos-2 PAN images and the 70 cm/pixel SkySat HD video frames. Table 1 shows the input image IDs used in this work. Note that the image in the first row was used as the reference image in the case of multi-image SRR processing and as the sole input in the case of single-image SRR processing.

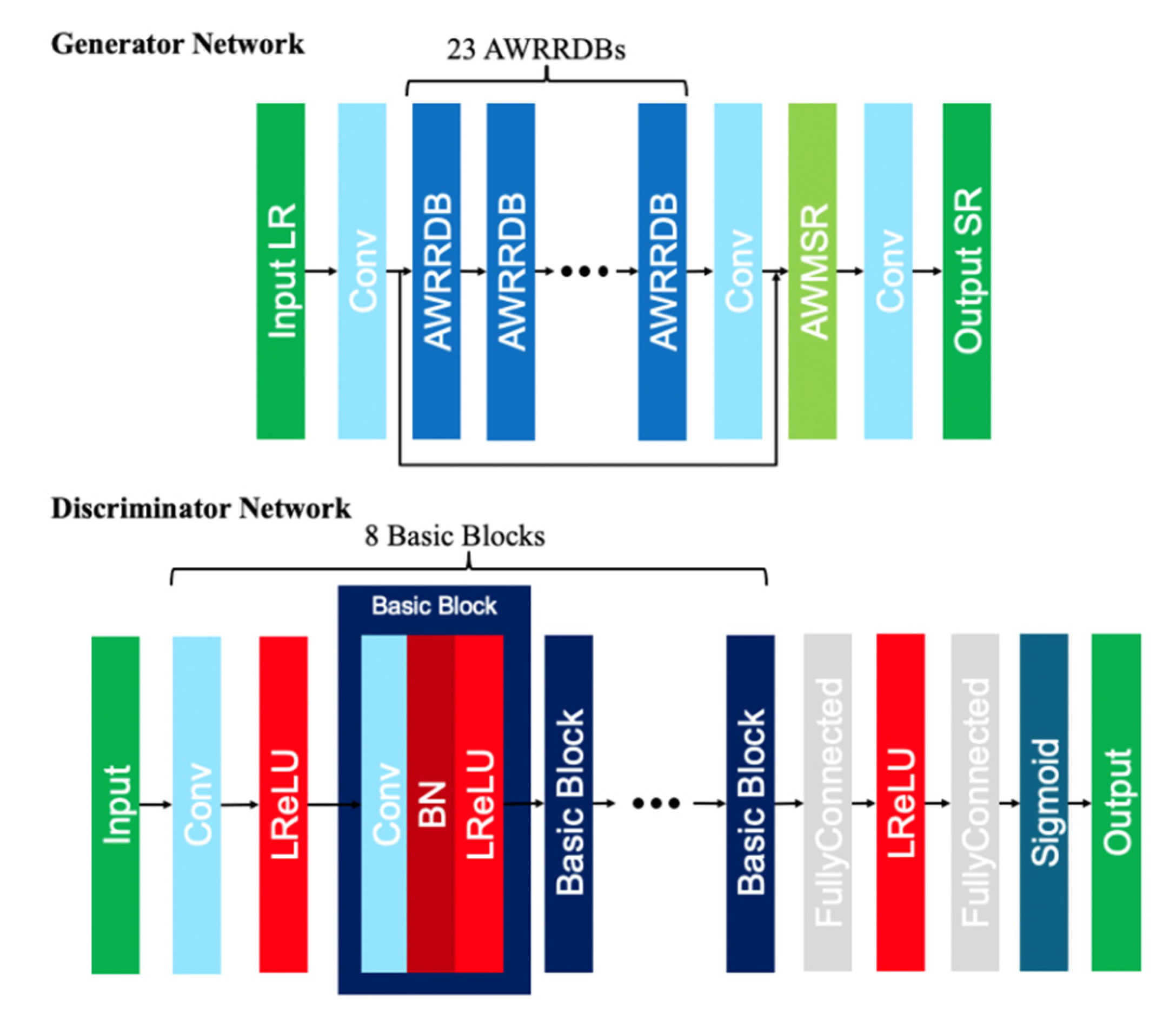

Since MAGiGAN and OpTiGAN require different inputs, i.e., LR images with and without viewing angle differences, respectively, we are not able to provide a performance comparison between these two SRR systems. However, in this work, we provide intercomparisons against four different SRR techniques: (1) an optimised version of the SRGAN [4] single-image SRR network that was used in MAGiGAN [1] (hereafter referred to as SRGAN, in all text, figures and tables); (2) the ESRGAN [32] single-image SRR network; (3) the MARSGAN [43] single-image SRR network, which was also used as the second stage processing of OpTiGAN; (4) optical-flow PDE-TV multi-image SRR (hereafter referred to as OFTV, in all text, figures and tables), which was also used as the first stage processing of OpTiGAN. It should be noted that all deep network-based SRR techniques, i.e., SRGAN, ESRGAN, MARSGAN and OpTiGAN, were trained with the same training datasets, as described in Section 2.3.

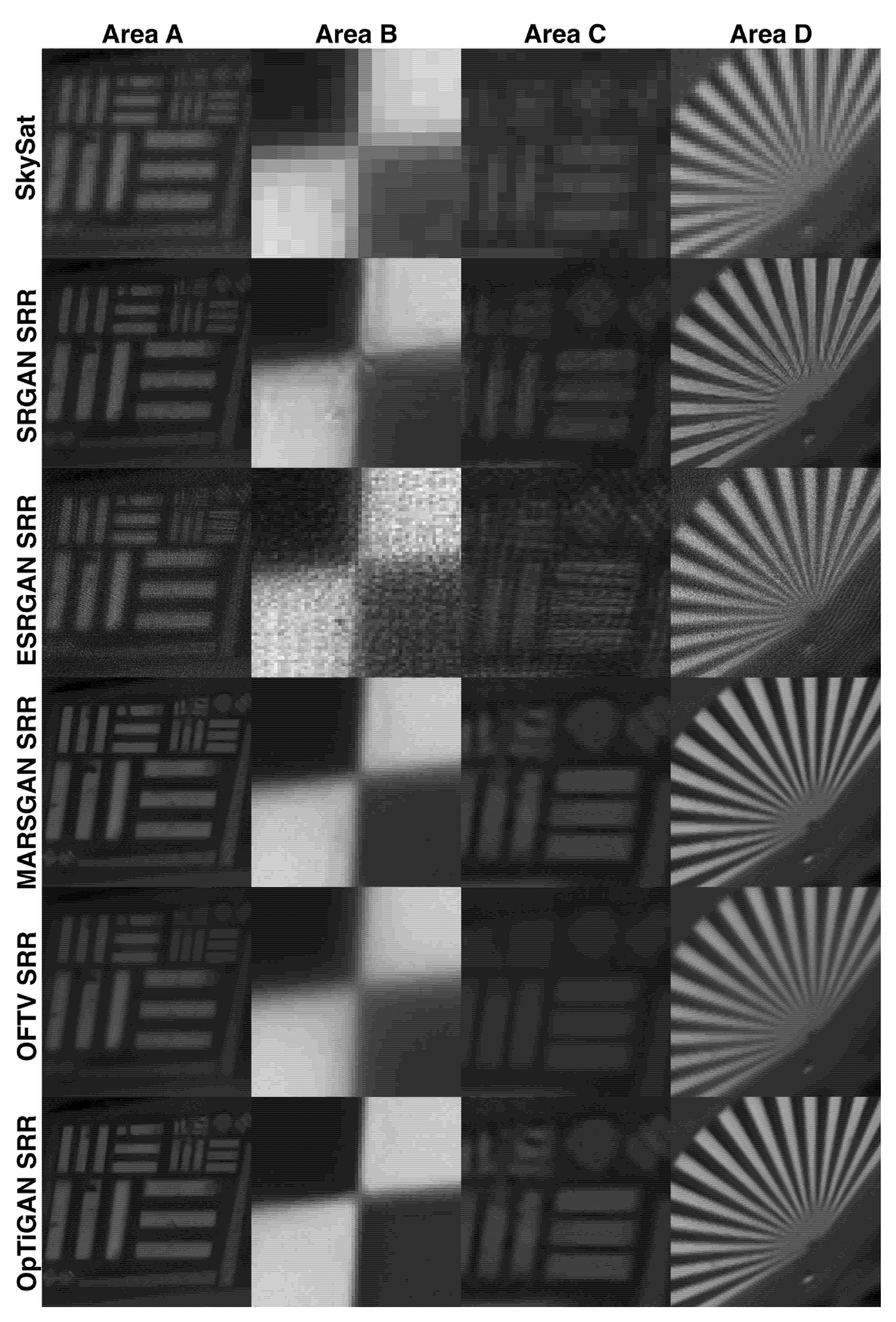

3.2. Demonstration and Assessments of WorldView-3 Results

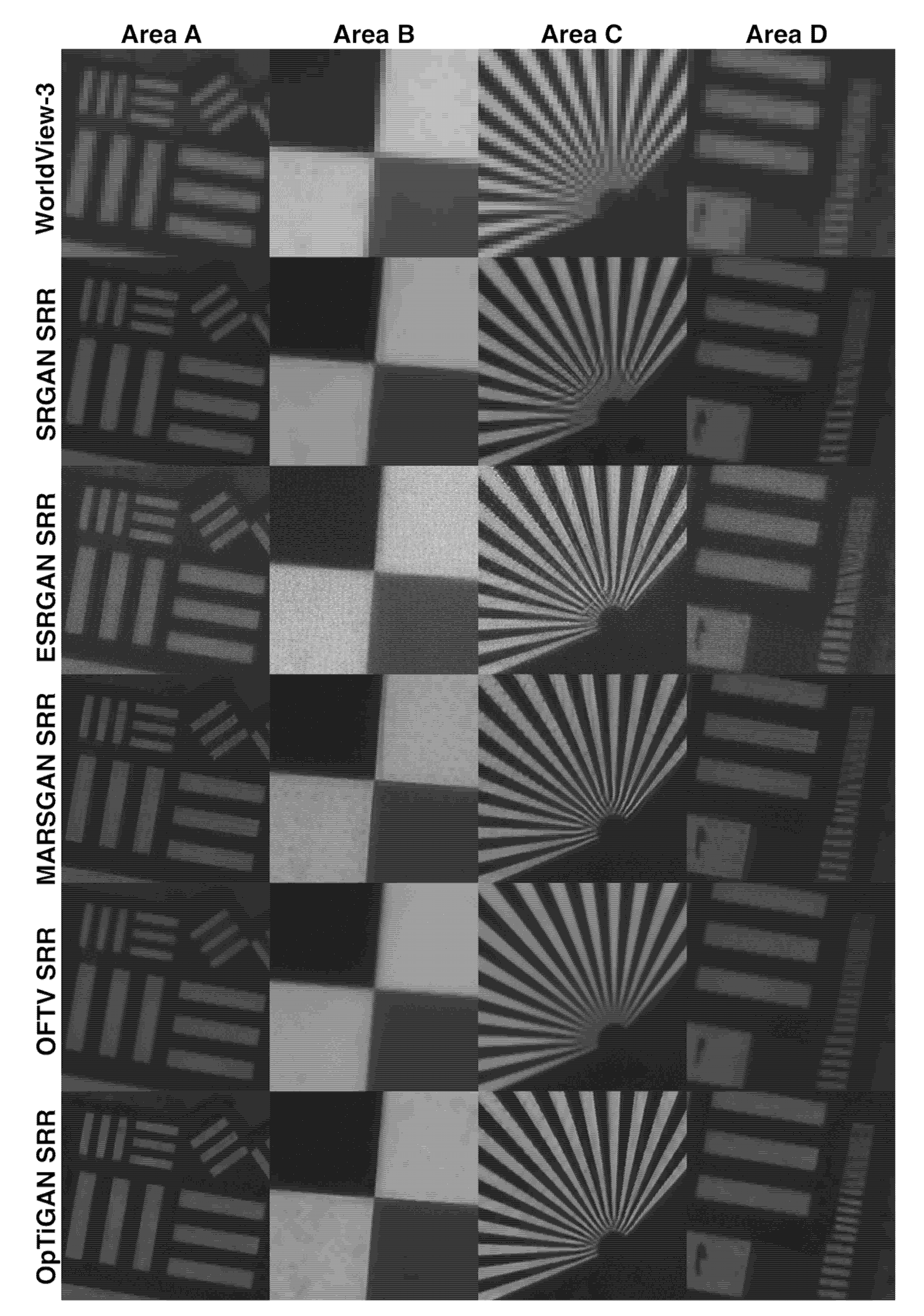

For the WorldView-3 experiments, we used a single input image for the SRGAN, ESRGAN and MARSGAN SRR processing and five overlapping input images for the OFTV and OpTiGAN SRR processing. Four cropped areas (A–D) covering the different artificial targets at the Baotou Geocal site are shown in Figure 6 for comparison of the SRR results with the original input WorldView-3 image.

Area A showed the 10 m × 2 m and 5 m × 1 m bar-pattern targets. We can observe that all five SRR results showed good restoration of these larger sized bars. The SRGAN result of Area A showed some rounded corners of the 5 m × 1 m bars, whereas the ESRGAN result showed sharper corners, but with some high-frequency noise. The MARSGAN result showed the best overall quality among the three single-image deep learning-based SRR techniques. In comparison to the deep learning-based techniques, OFTV showed smoother edges/outlines, but with no observable artefact. The OpTiGAN result, which was based on OFTV and MARSGAN, showed the best overall quality, i.e., similar sharpness on edges/outlines/corners, but with no artefact or high-frequency noise.

Area B showed the central area of the knife-edge target. All three deep learning-based techniques showed sharp edges of the target; however, the SRGAN result showed some artefacts at the centre corner and at the edges, ESRGAN showed some high-frequency noise, whilst MARSGAN had some artefacts at the edges. OFTV showed a blurrier edge compared to SRGAN, ESRGAN and MARSGAN, but it showed the fewest artefacts. OpTiGAN showed sharp edges that were similar to ESRGAN and MARSGAN, but with much less noise and fewer artefacts.

Area C showed a fan-shaped target. We can observe that all five SRR results showed good restoration of the target at mid-range radius. The MARSGAN and OpTiGAN results showed reasonable restoration at the centre radius, i.e., by the end of the target, with only a few artefacts at the centre and in between each strip pattern. ESRGAN also showed some reasonable restoration at small radius, but the result was much noisier compared to MARSGAN and OpTiGAN. SRGAN showed obvious artefacts at small radius. OFTV did not show as much detail as the other techniques, but it showed no observable noise or artefact. In terms of sharpness, ESRGAN, MARSGAN and OpTiGAN had the best performance. However, MARSGAN and OpTiGAN had less noise compared to ESRGAN, with OpTiGAN showing the fewest artefacts among the three.

Area D showed a zoom-in view of the larger 10 m × 2 m bar-pattern targets along with multiple smaller bar-pattern targets with sizes of 2.5 m × 0.5 m, 2 m × 0.4 m, 2 m × 0.3 m, 2 m × 0.2 m and 2 m × 0.1 m, from bottom-right to top-right (see Figure 5). We can observe that all five SRR techniques were able to restore the 2.5 m × 0.5 m bars reasonably well, despite the SRGAN results showing some tilting. The tilting artefact became more severe for the 2 m × 0.4 m bars for SRGAN and the bars were not clearly visible at the 2 m × 0.4 m scale and above. ESRGAN showed clear individual bars at 2 m × 0.4 m, but all of them had the same tilting artefact. In the MARSGAN result, we could observe smaller bars up to 2 m × 0.3 m, but still with artefacts, i.e., tilting and wrong shapes. OFTV showed smoother edges of the bars, but with very few artefacts. The shapes and angles of the small bar strips, at the right side of the crop, were more correct (refer to Figure 5) with OFTV and the smallest resolvable bars were in between 2 m × 0.4 m and 2 m × 0.3 m. Finally, the OpTiGAN result showed the best restoration of the 2 m × 0.4 m bars compared to the other four SRR results. The smallest recognisable bars were between 2 m × 0.3 m and 2 m × 0.2 m for OpTiGAN. However, OpTiGAN still had some artefacts for bars at the 2 m × 0.3 m scale that were similar to those in the MARSGAN result.

In Table 2, we show the BRISQUE and PIQE image quality scores (0–100, lower scores representing better image quality) that were measured from the full image (see Supplementary Materials for the full image) at the Baotou Geocal site. We can observe improvements in terms of image quality from all five SRR techniques. MARSGAN achieved the best image quality score from BRISQUE, whereas ESRGAN achieved the best image quality score from PIQE. The image quality scores for OpTiGAN were close to those of ESRGAN and MARSGAN. The lower image quality scores reflected better overall image sharpness and contrast. However, since this measurement did not account for incorrect high-frequency texture, incorrect reconstruction of small sized targets and synthetic artefacts, the better scores do not necessarily reflect better absolute quality of the SRR results. More quantitative assessments are given in Table 3 and Table 4.

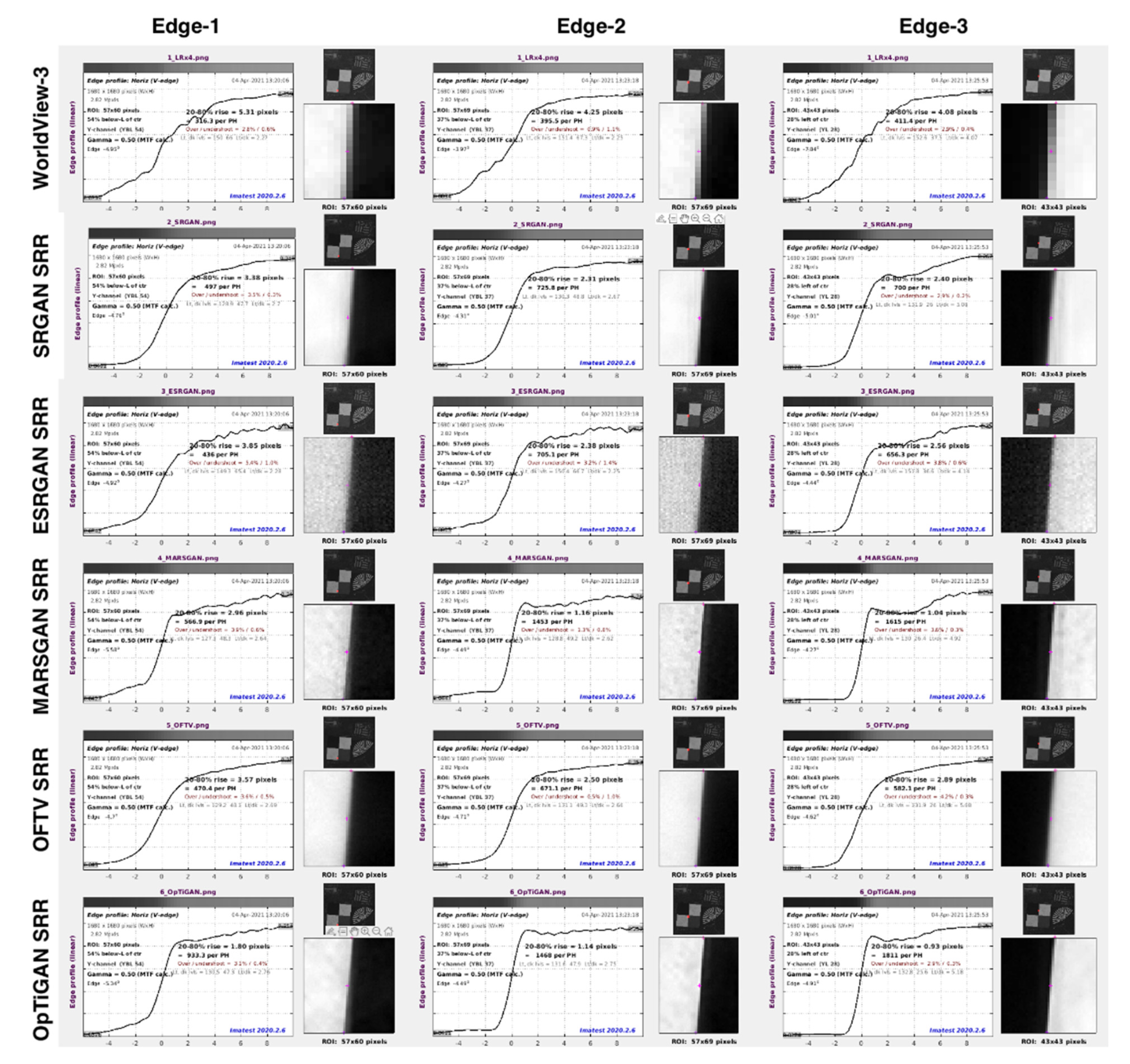

In the field of photo-realistic SRR, many SRR techniques present “fancy” images with upscaling factors of four or even eight, but their effective resolution never reaches the upscaling factor. In this paper, we present a more quantitative assessment using the Imatest® slanted-edge measurement for the knife-edge target at the Baotou Geocal site. In Figure 7, we show three automatically detected edges and their associated 20–80% profile rise analysis for each of the input WorldView-3 image, SRGAN SRR result, ESRGAN SRR result, MARSGAN SRR result, OFTV SRR result and OpTiGAN SRR result. The total pixel counts for the 20–80% profile rise of each slanted edge are summarised in Table 3. We divided the total pixel counts of the input WorldView-3 image (upscaled by a factor of 4 for comparison) by the total pixel counts of the SRR results to get the effective resolution enhancement factor for each of the measured edges. Note that the edge sharpness measurements are generally similar but may differ from area to area, even in the same image; we therefore averaged the three measurements to get the final effective resolution enhancement factor. The average effective resolution enhancement factors are shown in the last row of Table 3.
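The measurement described above can be illustrated with a minimal sketch. It is not the Imatest® implementation: the function names, the sub-pixel linear interpolation, and the choice to average the per-edge factors (rather than, say, taking a ratio of summed widths) are assumptions made for illustration, and the numbers in the usage lines are hypothetical, not values from Table 3. Both sets of rise widths are assumed to be expressed on the same (4× upscaled) pixel grid.

```python
import numpy as np


def crossing_position(norm, level):
    """Sub-pixel position where a rising, normalised profile first reaches `level`."""
    idx = int(np.argmax(norm >= level))    # first sample at or above the level
    if idx == 0:
        return 0.0
    y0, y1 = norm[idx - 1], norm[idx]      # y0 < level <= y1, so y1 > y0
    return (idx - 1) + (level - y0) / (y1 - y0)


def rise_distance_20_80(profile):
    """20-80% rise width (in pixels) of a 1-D dark-to-bright edge profile."""
    p = np.asarray(profile, dtype=float)
    span = p.max() - p.min()
    if span == 0:
        raise ValueError("profile is flat; no edge to measure")
    norm = (p - p.min()) / span            # normalise intensities to [0, 1]
    return crossing_position(norm, 0.8) - crossing_position(norm, 0.2)


def enhancement_factor(input_rises_px, srr_rises_px):
    """Per-edge and mean ratio of input rise width to SRR rise width.

    Assumes both widths are measured on the same pixel grid, i.e. the
    input image has already been upscaled to the SRR grid.
    """
    per_edge = np.asarray(input_rises_px, float) / np.asarray(srr_rises_px, float)
    return per_edge, float(per_edge.mean())


# Hypothetical rise widths for three edges (illustrative only).
per_edge, mean_factor = enhancement_factor([12.0, 10.0, 8.0], [4.0, 4.0, 4.0])
print(per_edge, mean_factor)
```

A narrower 20–80% rise in the SRR result than in the upscaled input gives a factor above 1; a factor close to the nominal upscaling factor would indicate that the added pixels carry real edge information.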

Another quantitative assessment of image effective resolution was achieved by visually checking the smallest resolvable bar targets for each of the SRR results. This is summarised in Table 4. We checked the smallest resolvable bar targets in terms of “recognisable” and “with good visual quality”, where “recognisable” means visible and identifiable, without accounting for noise or artefacts, and “with good visual quality” means clearly visible with little or no artefacts. We can see from Table 4 that, with a 31 cm/pixel resolution WorldView-3 image, we can resolve 60 cm/80 cm bar targets and, with a 9 cm/pixel OpTiGAN SRR, we can resolve 20 cm/30 cm bar targets. Table 4 also shows the effective resolution calculated from Table 3, along with input and processing information. Note that the proposed OpTiGAN system has significantly shortened the required processing time, from a few hours to around 10 min for five 420 × 420-pixel input images, in comparison to MAGiGAN [1], on which the aforementioned modifications were based.

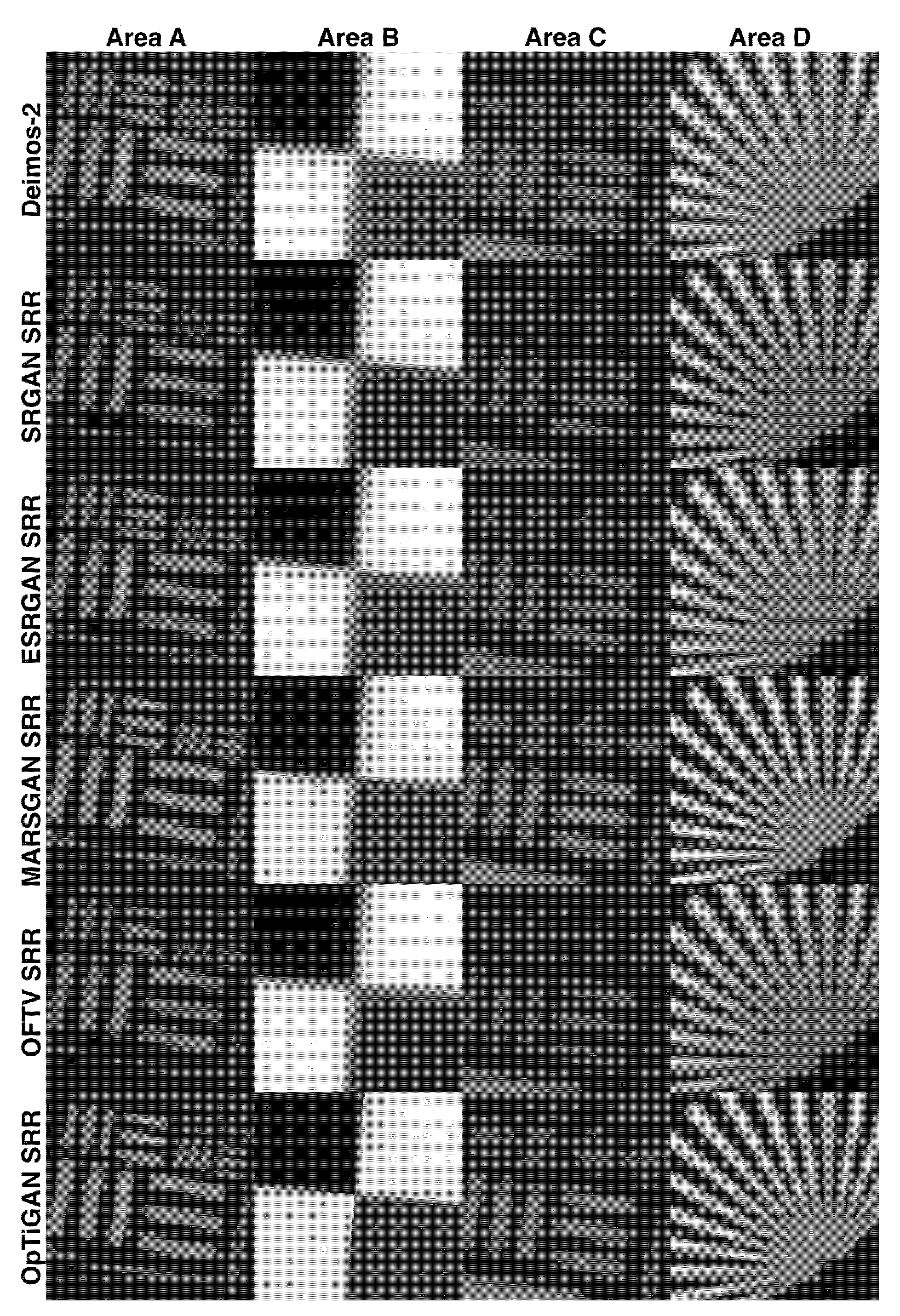

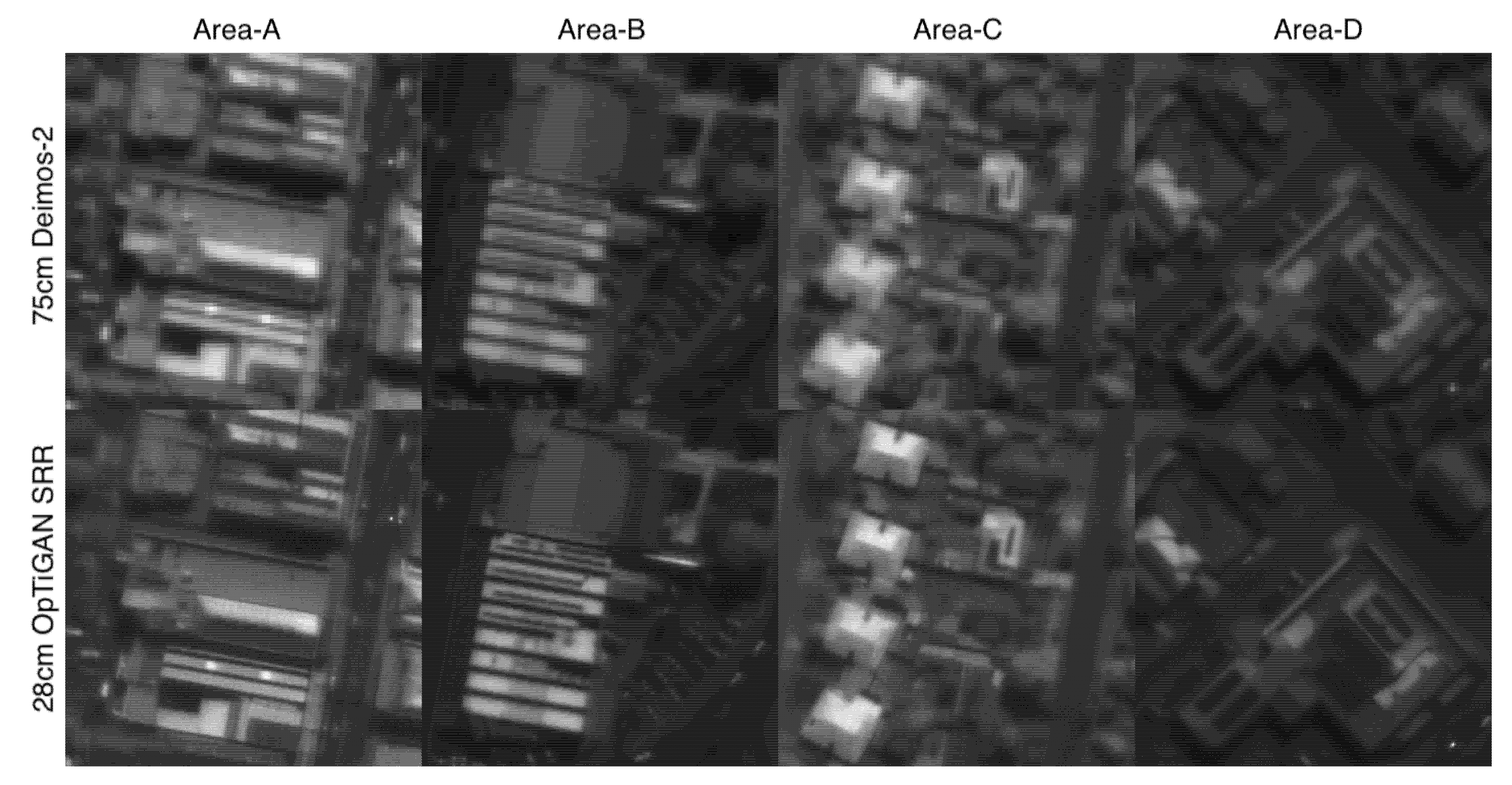

3.3. Demonstration and Assessments of Deimos-2 Results

For the Deimos-2 experiments, we used one input for the SRGAN, ESRGAN and MARSGAN SRR processing and three repeat-pass inputs for the OFTV and OpTiGAN SRR processing. Four cropped areas (A–D) covering the different artificial targets at the Baotou Geocal site are shown in Figure 8 for comparison of the SRR results with the original input Deimos-2 image.

Area A showed the overall area of the bar-pattern targets that ranged from 25 m × 5 m to 2 m × 0.1 m. We can observe that all five SRR techniques were able to bring out the correct outlines for the 10 m × 2 m bars; however, the ESRGAN results displayed some artefacts (incorrect shape) and the OFTV results were slightly smoother than the others. For the smaller 5 m × 1 m bars, only ESRGAN, MARSGAN and OpTiGAN results showed some reasonable restoration, but with added noise. Some details could be seen for the 4.5 m × 0.9 m bars with MARSGAN and OpTiGAN, but with too much noise.

Area B showed the centre of the knife-edge target. We can observe that the SRGAN, ESRGAN and OFTV results were quite blurry at the centre and edges. In addition, ESRGAN tended to produce some artificial textures that made the edges even more blurry. MARSGAN showed sharper edges in comparison to SRGAN, ESRGAN and OFTV. OpTiGAN showed further improvement on top of MARSGAN.

Area C showed a zoom-in view of the smaller 10 m × 2 m and 5 m × 1 m bar-pattern targets. We could clearly observe the noise in SRGAN and ESRGAN for the 10 m × 2 m bars. The OFTV result was blurry, but without any artefact. MARSGAN and OpTiGAN had the best (and similar) restoration for the 10 m × 2 m bars. For the 5 m × 1 m bars, only OpTiGAN produced reasonable restoration.

Area D showed a fan-shaped target. We can observe that all five SRR results showed good restoration of the target at mid-to-long radius. At the centre of the fan-shaped target, the SRGAN and OFTV results were blurry and the ESRGAN, MARSGAN and OpTiGAN results showed different levels of artefact.

In Table 5, we show the BRISQUE and PIQE image quality scores (0–100, lower scores representing better quality) that were measured from the full image (see Supplementary Materials for the full image) at the Baotou Geocal site. We can observe improvements in terms of image quality from all five SRR techniques. MARSGAN and OpTiGAN received the best overall scores for the Deimos-2 results.

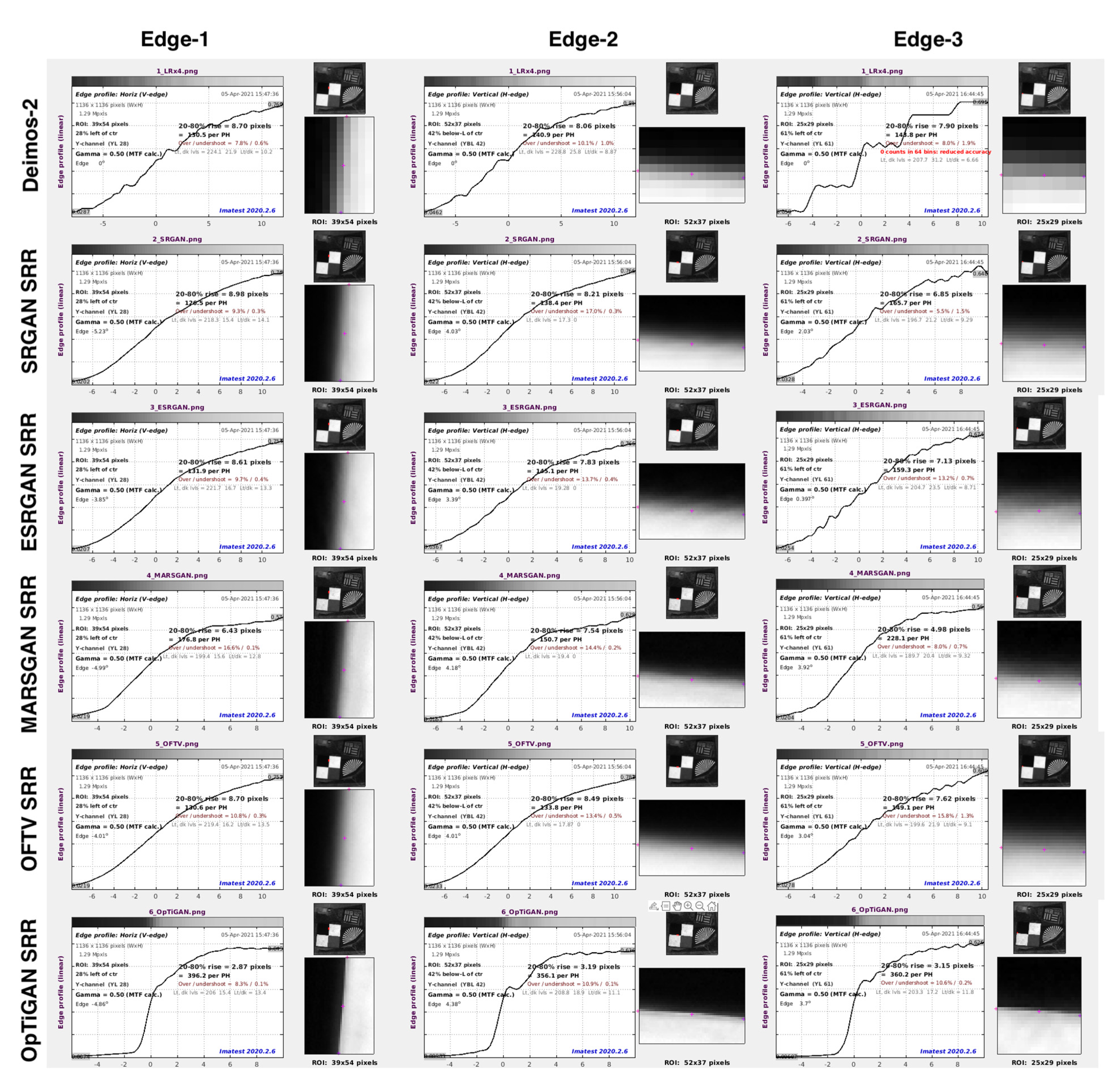

In Figure 9, we present a quantitative assessment achieved via the Imatest® slanted-edge measurements for the knife-edge target at the Baotou Geocal site. Three automatically detected edges and their associated 20–80% profile rise analysis are shown for each of the input Deimos-2 image, SRGAN SRR result, ESRGAN SRR result, MARSGAN SRR result, OFTV SRR result and OpTiGAN SRR result. The total pixel counts for the 20–80% profile rise of each slanted edge are summarised in Table 6. We divided the total pixel counts of the input Deimos-2 image (upscaled by a factor of 4 for comparison) by the total pixel counts of the SRR results to get the effective resolution enhancement factor for each of the measured edges. The three measurements were then averaged to get the final effective resolution enhancement factor, as shown in the last row of Table 6. For the Deimos-2 experiments, we can observe that the effective resolution enhancements were generally not good with SRGAN, ESRGAN and OFTV; however, with OpTiGAN, we still obtained a factor of 2.96 times improvement.

The other quantitative assessment of image effective resolution was achieved by visually checking the smallest resolvable bar targets for each of the SRR results. The results are summarised in Table 7. We can observe, from Table 7, that even though the effective resolutions for edges were generally not good for SRGAN, ESRGAN and OFTV (as shown in Table 6), the results still showed reasonable restorations for the bar targets. We can see, from Table 7, that, with a 75 cm/pixel resolution Deimos-2 image, we can resolve 2 m/3 m bar targets and, with a 28 cm/pixel OpTiGAN SRR, we can resolve 1 m/2 m bar targets.

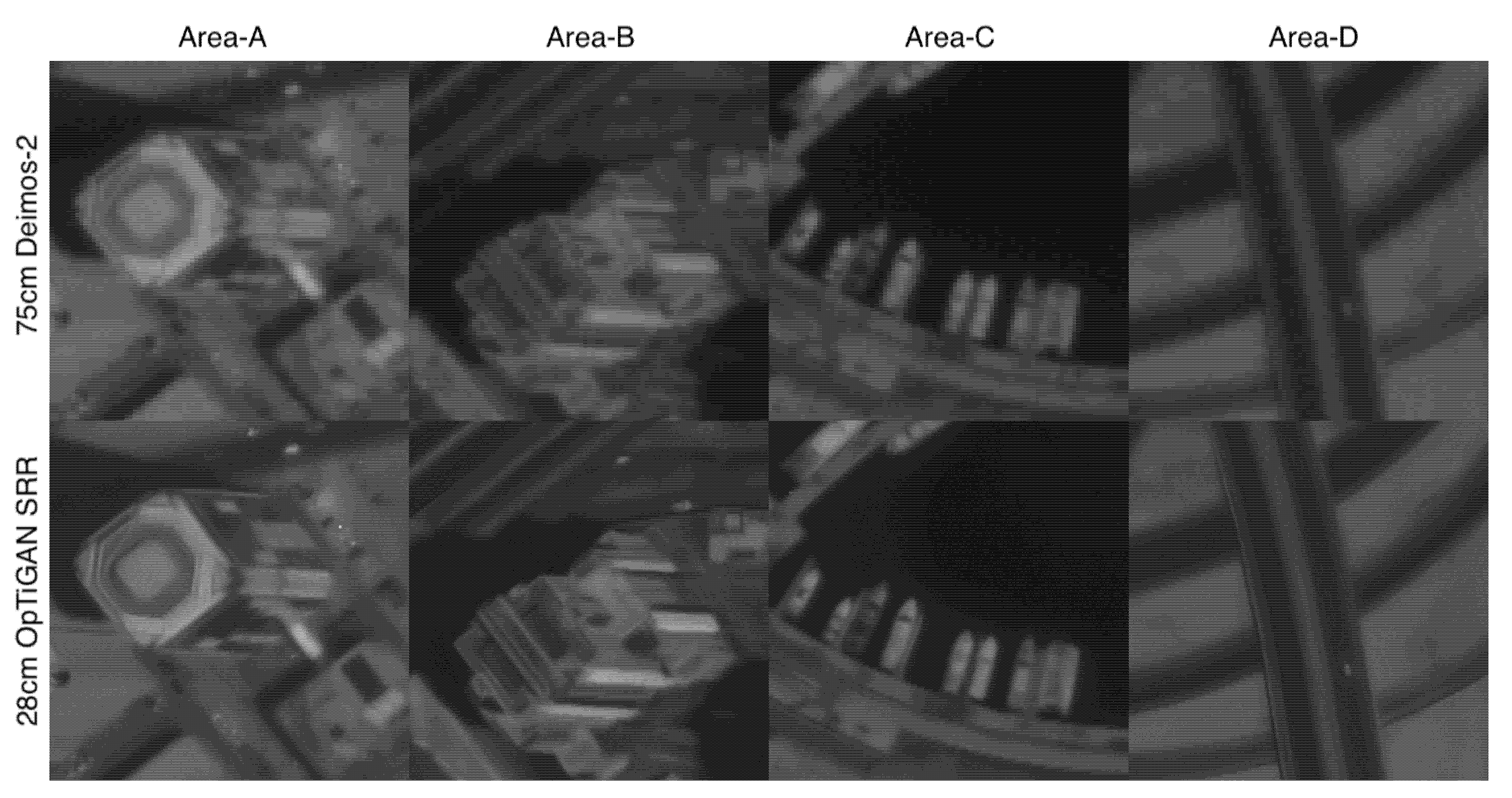

3.4. Demonstration and Assessments of SkySat Results

For the SkySat experiments, we used one input for the SRGAN, ESRGAN and MARSGAN SRR processing and five continuous video frames for the OFTV and OpTiGAN SRR processing. Four cropped areas (A–D) covering the different artificial targets available at the Baotou Geocal site are shown in Figure 10 for comparison of the SRR results with the original input SkySat video frames.

Area A showed the overall area of the bar-pattern targets that ranged from 25 m × 5 m to 2 m × 0.1 m. We can observe that SRGAN, MARSGAN, OFTV and OpTiGAN were able to bring out the correct outlines of the 10 m × 2 m bars. The ESRGAN result showed some high-frequency textures and also showed some reconstruction of the 5 m × 1 m bars; however, its result was very noisy. OFTV was blurry, but with the least artefact. The MARSGAN and OpTiGAN results showed the best restoration with little noise and artefact, for the bar-pattern targets, and, especially, for bringing out the 5 m × 1 m bars. A few textures have been revealed for the 4.5 m × 0.9 m bars from OpTiGAN, but no individual 4.5 m × 0.9 m bar has been resolved from any SRR result.

Area B showed the centre of the knife-edge target. We can observe artefacts from SRGAN, noise from ESRGAN, and blurring effects from MARSGAN and OFTV. In terms of edge sharpness for this area, the SRGAN and OpTiGAN results were the best, but OpTiGAN had significantly fewer artefacts and less noise compared to SRGAN.

Area C showed a zoom-in view of the smaller 10 m × 2 m and 5 m × 1 m bar-pattern targets. For the smaller 5 m × 1 m bars, the MARSGAN and OpTiGAN results showed some good restoration, but both with artefacts and noise.

Area D showed the fan-shaped target. The ESRGAN result showed the best restoration at the centre, but it was also the noisiest. SRGAN displayed artefacts towards the centre. MARSGAN and OpTiGAN showed the best restoration for mid-to-long radii.

Finally, we give BRISQUE and PIQE image quality scores (0–100, lower scores representing better quality) in Table 8. We can observe significant improvements in terms of image quality from all five SRR results. ESRGAN, MARSGAN and OpTiGAN demonstrated the best overall scores for the SkySat experiments.

In Figure 11, we present the Imatest® slanted-edge measurements for the knife-edge target. Three automatically detected edges and their associated 20–80% profile rise analysis are shown for each of the input SkySat video frames, SRGAN, ESRGAN, MARSGAN, OFTV and OpTiGAN SRR results. The total pixel counts for the 20–80% profile rise of each slanted edge are summarised in Table 9. We divided the total pixel counts of the input SkySat video frame (upscaled by a factor of 4 for comparison) by the total pixel counts of the SRR results to get an indicative effective resolution enhancement factor for each of the measured edges. The three measurements were then averaged to get the final effective resolution enhancement factor, as shown in the last row of Table 9. For the SkySat experiments, we can only observe marginal effective resolution enhancements for SRGAN, ESRGAN, MARSGAN and OFTV; however, with OpTiGAN, the effective resolution enhancement factor was much higher, at 3.94 times.

The smallest resolvable bar targets from the original SkySat video frame and its SRR results are summarised in Table 10. With a 70 cm/pixel resolution SkySat video frame, we can resolve 2 m/5 m bar targets. SRGAN brought the 3 m bars to visually good quality. ESRGAN did not improve the quality of the 5 m and 3 m bars, but made the 1 m bars resolvable. MARSGAN improved the quality of the 5 m and 3 m bars and also made the 1 m bars resolvable. OFTV did not make the 1 m bars resolvable, but improved the visual quality for the 5 m, 3 m and 2 m bars. Finally, with the 18 cm/pixel OpTiGAN SRR, the 1 m bars were resolvable and the quality of the 5 m, 3 m and 2 m bars was improved.
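As a worked check of how an enhancement factor relates to a quoted effective resolution, the sketch below assumes that the effective resolution is simply the nominal pixel size divided by the averaged enhancement factor. This relation and the function name are our assumptions for illustration; the tables may rest on additional rounding or on different nominal values for other sensors.

```python
def effective_resolution_cm(nominal_gsd_cm, enhancement_factor):
    """Effective resolution (cm/pixel), assuming nominal GSD / enhancement factor."""
    if enhancement_factor <= 0:
        raise ValueError("enhancement factor must be positive")
    return nominal_gsd_cm / enhancement_factor


# SkySat figures quoted in the text: 70 cm/pixel input, factor of 3.94 for OpTiGAN.
skysat_optigan = effective_resolution_cm(70.0, 3.94)
print(f"{skysat_optigan:.1f} cm/pixel")
```

Under this assumption, the SkySat value comes out at roughly 17.8 cm/pixel, which is consistent with the 18 cm/pixel quoted above for the OpTiGAN result.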