Road Extraction in Mountainous Regions from High-Resolution Images Based on DSDNet and Terrain Optimization

Abstract

1. Introduction

2. Related Work

2.1. Non-Deep Learning Methods

2.2. Deep Learning Methods

3. Materials and Methods

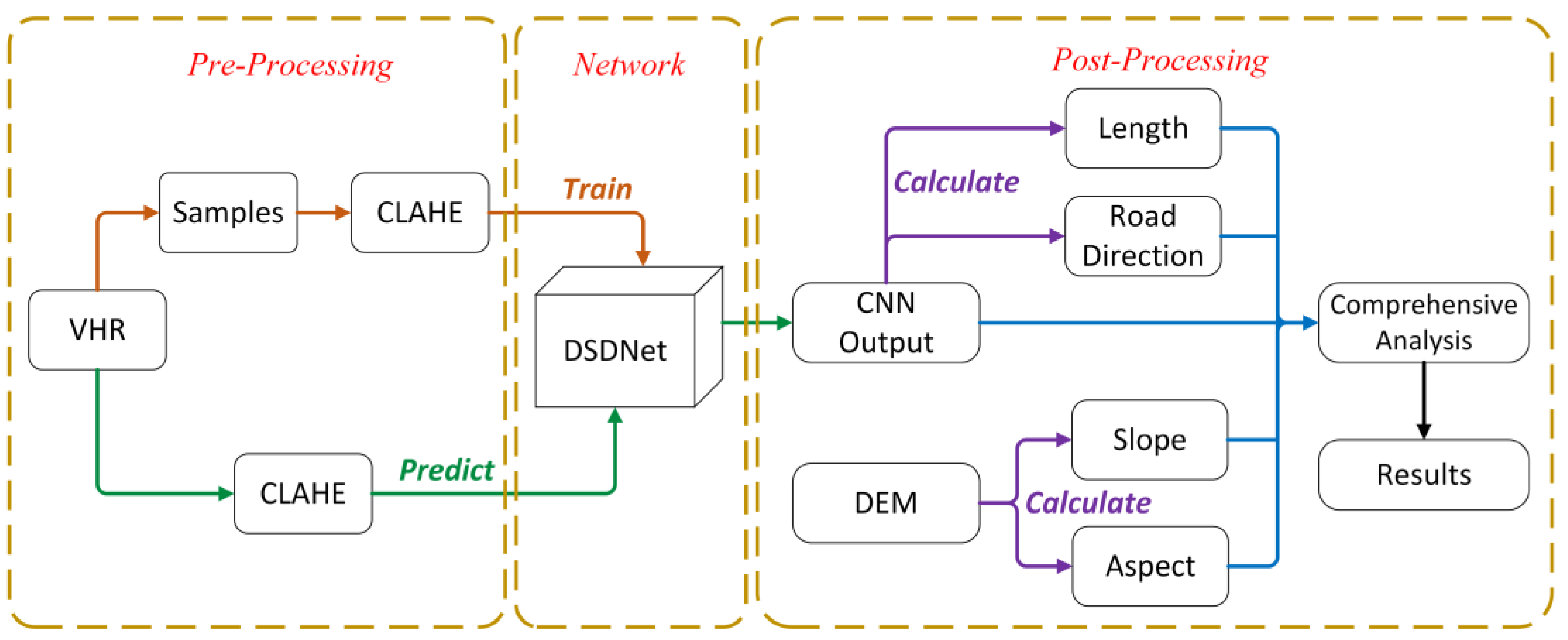

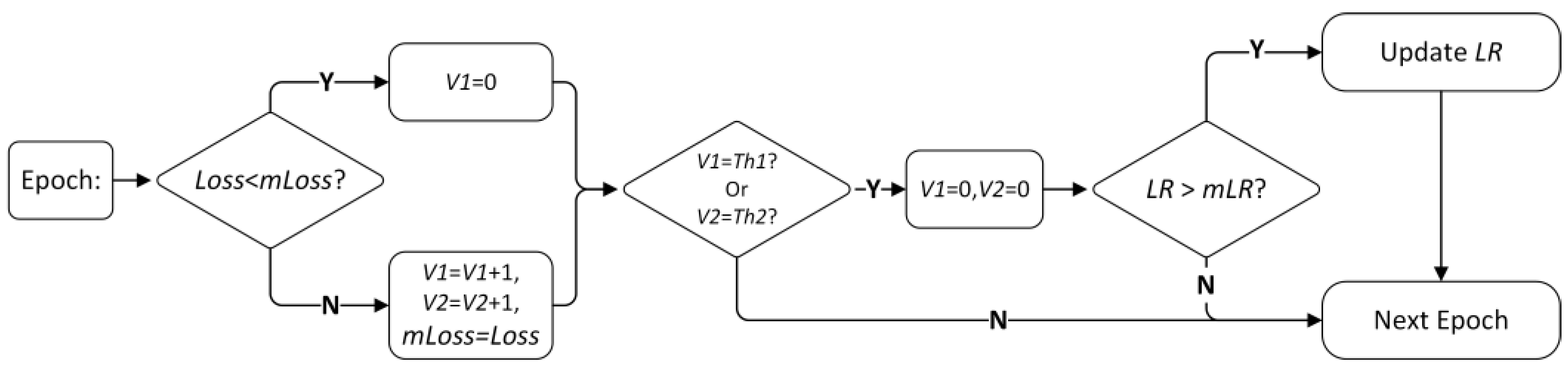

3.1. Overall Process of Road Extraction

- Preprocessing: We applied the CLAHE algorithm to enhance image contrast before mountain road extraction.

- Network: We proposed DSDNet, an optimized version of an existing network model.

- Postprocessing: We calculated several terrain indicators and used them to constrain the extraction results according to the characteristics of mountain terrain.

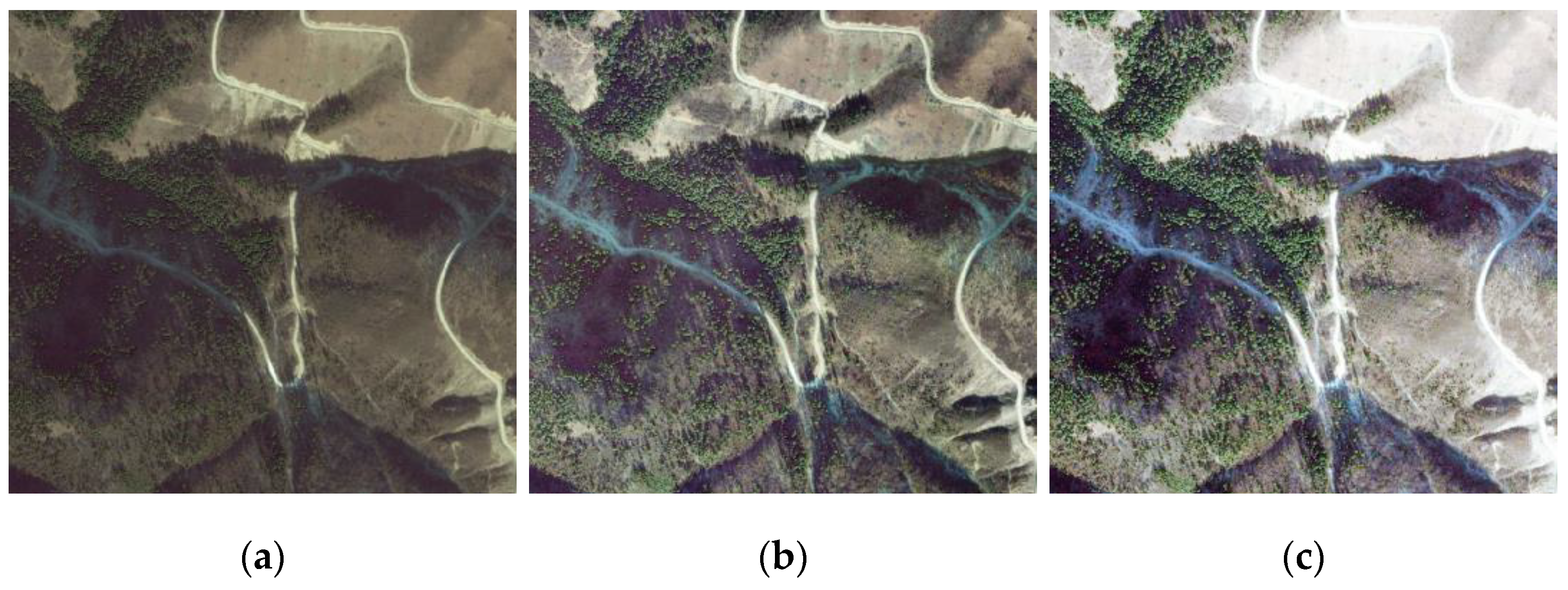

3.2. CLAHE Algorithm

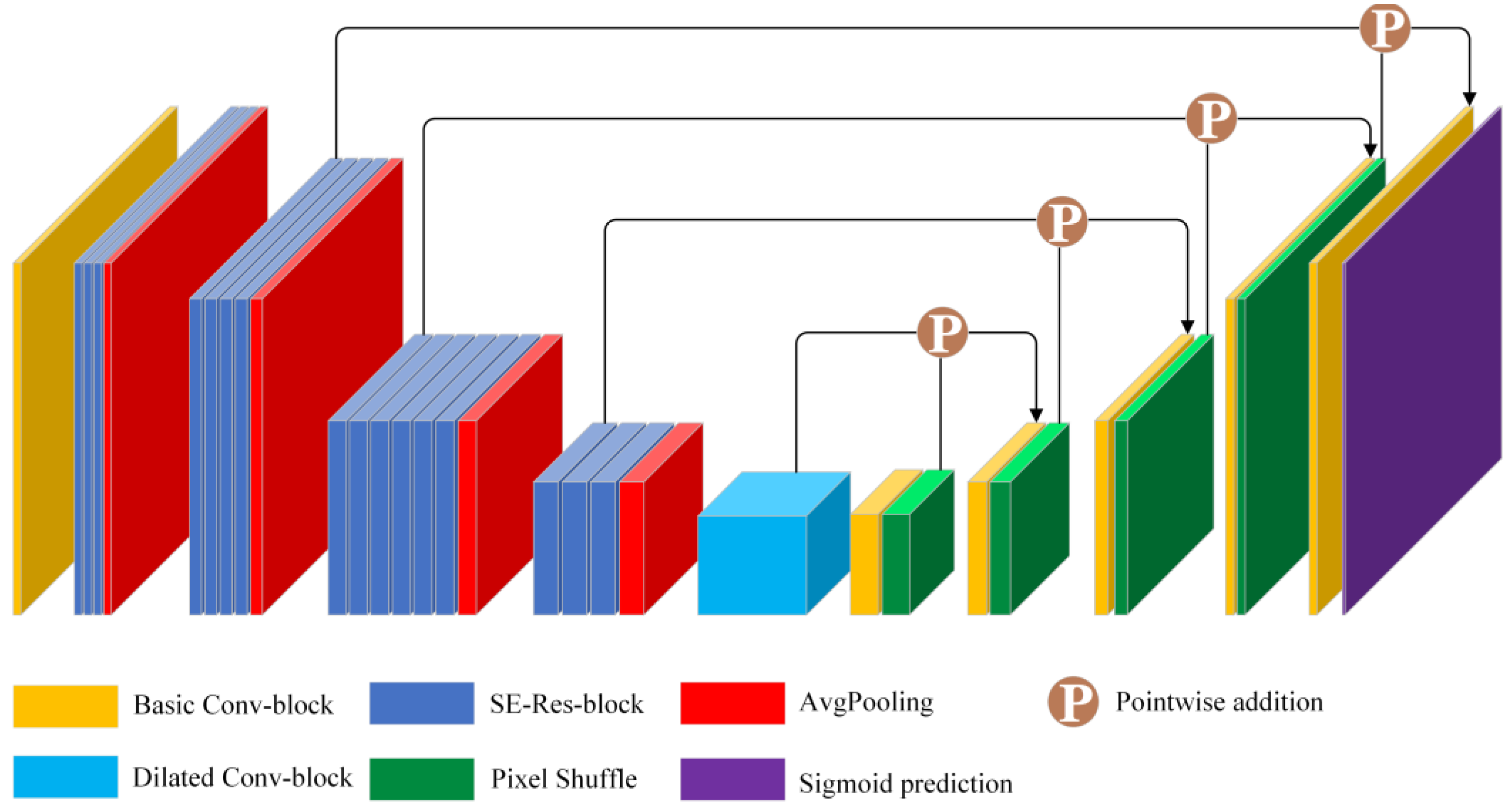

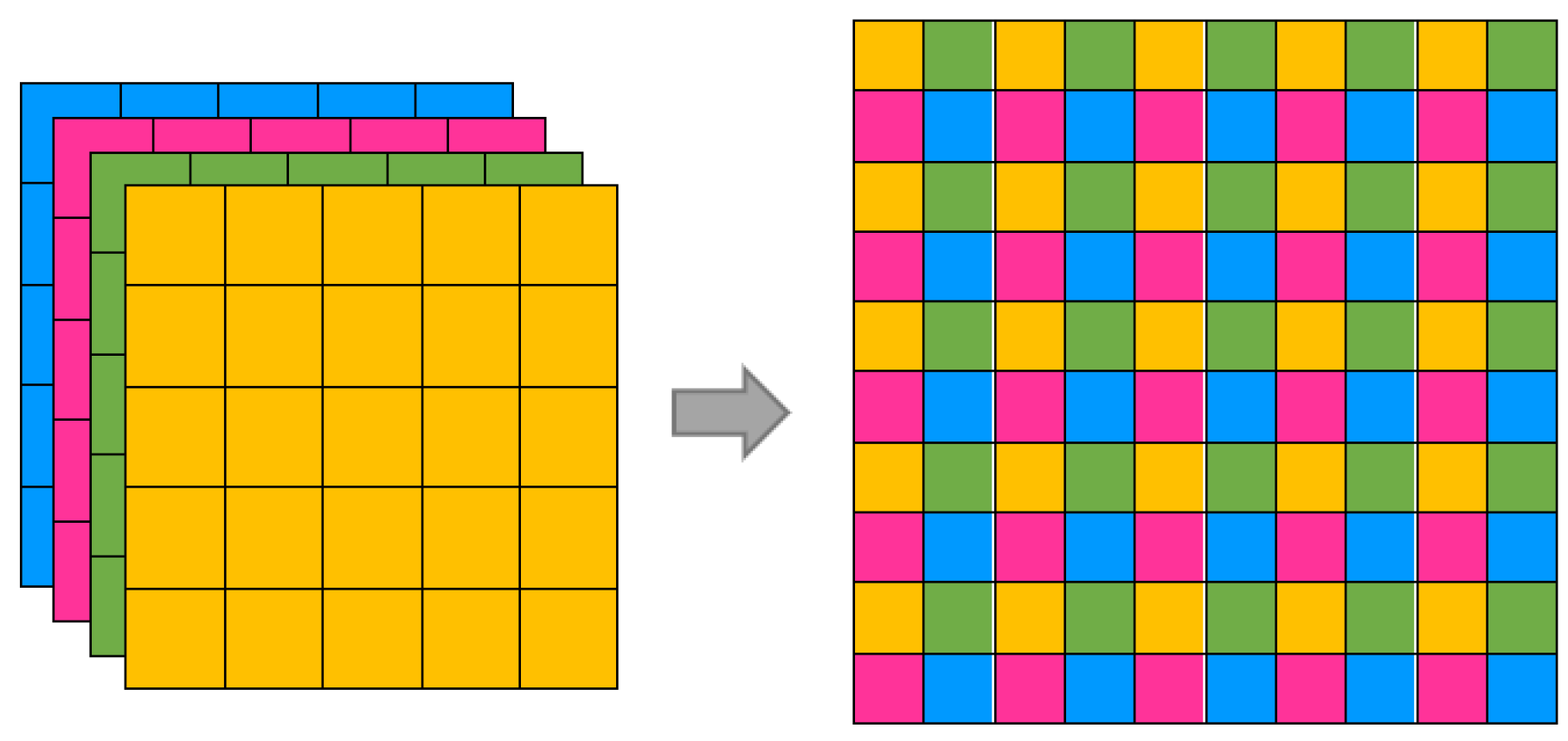
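The step that separates CLAHE from plain AHE is the contrast limit: each tile's histogram is clipped at a threshold and the clipped excess is redistributed before the equalization mapping is built. The following is a minimal pure-Python sketch of that step for a single tile, not the implementation used in this work. The function names, the even redistribution rule, and the small number of grey levels in the usage example are illustrative assumptions, and the bilinear interpolation between neighbouring tiles that full CLAHE performs is omitted.

```python
def clip_histogram(hist, clip_limit):
    """Clip each bin at clip_limit and spread the excess evenly over all bins."""
    excess = sum(max(count - clip_limit, 0) for count in hist)
    clipped = [min(count, clip_limit) for count in hist]
    share, remainder = divmod(excess, len(hist))
    # Every bin receives the same share; the first `remainder` bins get one
    # extra count so that the total number of pixels is preserved.
    # A single pass can push some bins above the limit again; practical
    # implementations often tolerate this or repeat the pass.
    return [c + share + (1 if i < remainder else 0) for i, c in enumerate(clipped)]


def equalization_lut(hist, levels=256):
    """Build a lookup table from the normalised cumulative histogram."""
    total = sum(hist)
    lut, running = [], 0
    for count in hist:
        running += count
        lut.append(round((levels - 1) * running / total))
    return lut


def equalize_tile(tile, clip_limit, levels=256):
    """Contrast-limited equalization of one tile given as rows of integers."""
    hist = [0] * levels
    for row in tile:
        for value in row:
            hist[value] += 1
    lut = equalization_lut(clip_histogram(hist, clip_limit), levels)
    return [[lut[value] for value in row] for row in tile]


if __name__ == "__main__":
    # Toy 2 x 2 tile with 4 grey levels (illustrative values only).
    print(equalize_tile([[0, 0], [0, 3]], clip_limit=2, levels=4))
```

A lower `clip_limit` flattens the histogram more strongly before equalization, which limits how far contrast (and noise) in near-uniform regions can be amplified.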

3.3. Network Structure

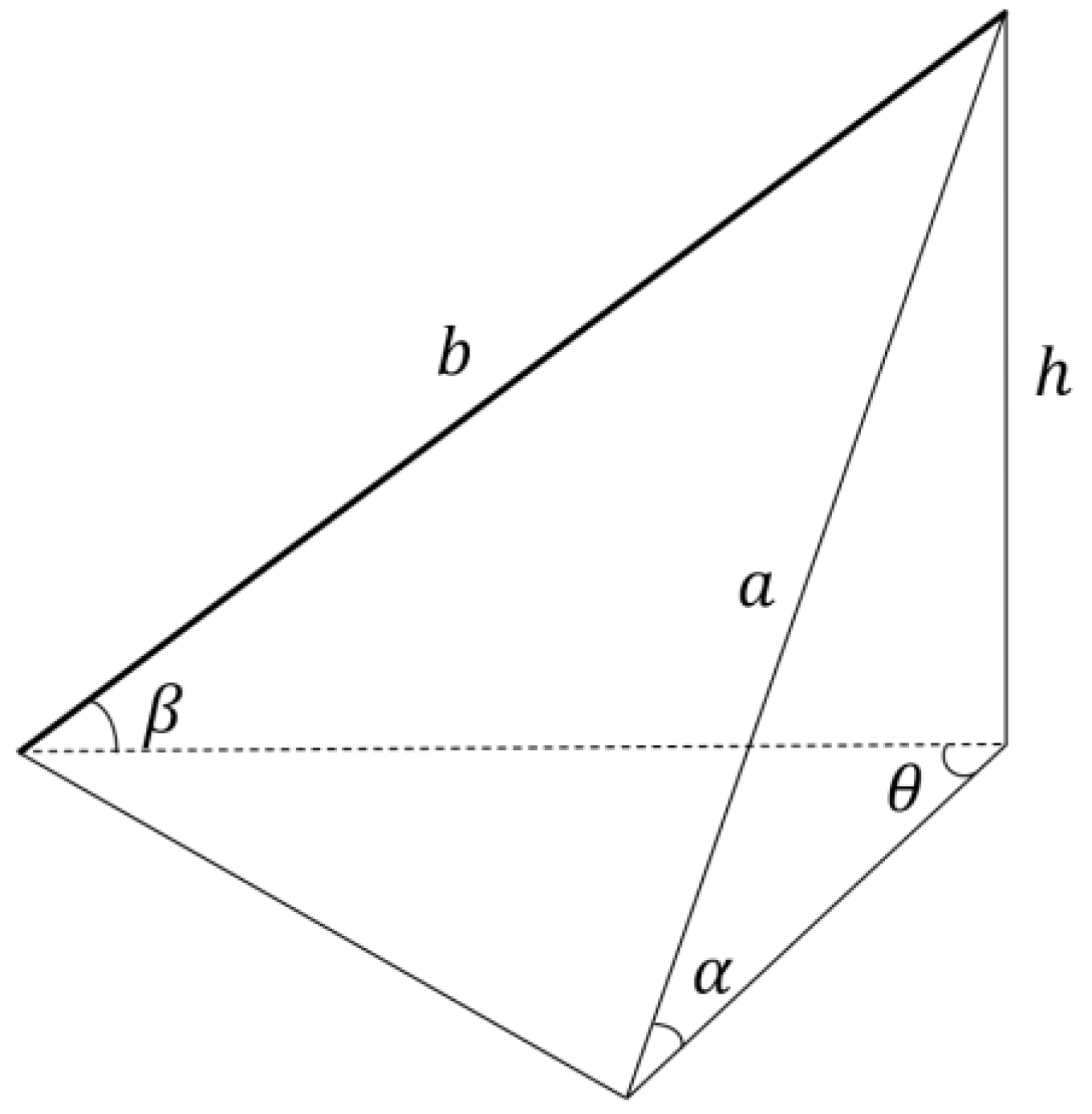

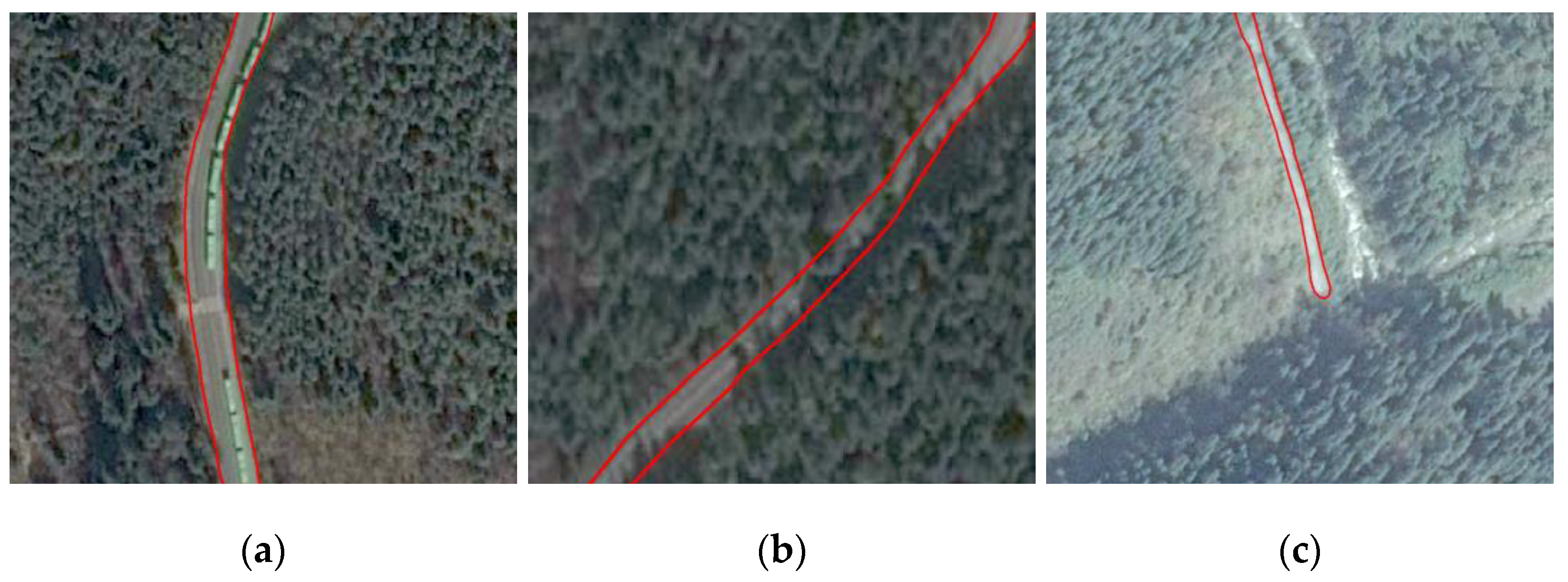

3.4. Terrain Constraints Processing

- Bare riverbeds and valley lines are usually very short.

- The direction of a bare riverbed is sometimes similar to the slope gradient, whereas the direction of a road is not. We proposed the road-gradient angle to represent the angle between the road direction and the steepest direction.

- The slope along the road direction is usually small, whereas the slope along bare riverbeds and valley lines is not. We used the road-direction slope to represent the slope measured along the road direction.
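The two angular indicators above can be illustrated with a short sketch. It assumes the DEM gradient (`dz_dx`, `dz_dy`, in metres of rise per metre of horizontal distance) and the local road direction (`road_dx`, `road_dy`) are already available at a point; all function and parameter names are hypothetical. Treating the road as undirected (angles folded into 0 to 90 degrees) and requiring a segment to pass all three checks are assumptions made for illustration, and the thresholds are left as required arguments because no values are stated here.

```python
import math


def road_gradient_angle(road_dx, road_dy, dz_dx, dz_dy):
    """Angle in degrees (0..90) between the road direction and the steepest direction."""
    road_len = math.hypot(road_dx, road_dy)
    grad_len = math.hypot(dz_dx, dz_dy)
    if road_len == 0 or grad_len == 0:
        return None  # undefined on flat terrain or for a zero-length segment
    # abs() folds the angle into 0..90 because a road has no preferred heading.
    cos_angle = abs(road_dx * dz_dx + road_dy * dz_dy) / (road_len * grad_len)
    return math.degrees(math.acos(min(1.0, cos_angle)))


def road_direction_slope(road_dx, road_dy, dz_dx, dz_dy):
    """Terrain slope in degrees measured along the road direction."""
    road_len = math.hypot(road_dx, road_dy)
    if road_len == 0:
        return None
    # Directional derivative of elevation along the unit road direction.
    rise_per_metre = abs(road_dx * dz_dx + road_dy * dz_dy) / road_len
    return math.degrees(math.atan(rise_per_metre))


def keep_segment(length, angle_deg, slope_deg, min_length, min_angle_deg, max_slope_deg):
    """Return True when a segment passes the length, angle, and slope checks."""
    if length < min_length:
        return False  # short segments resemble bare riverbeds or valley lines
    if angle_deg is not None and angle_deg < min_angle_deg:
        return False  # runs nearly straight down the slope
    if slope_deg is not None and slope_deg > max_slope_deg:
        return False  # too steep along its own direction
    return True


if __name__ == "__main__":
    # A segment that follows the contour: perpendicular to the gradient.
    print(road_gradient_angle(1.0, 0.0, 0.0, 0.5))
    print(road_direction_slope(1.0, 0.0, 0.0, 0.5))
```

A segment that follows a contour line yields a road-gradient angle near 90 degrees and a road-direction slope near 0, whereas a segment that runs straight down the slope yields an angle near 0 and a slope equal to the terrain slope.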

4. Research Region and Experimental Environment

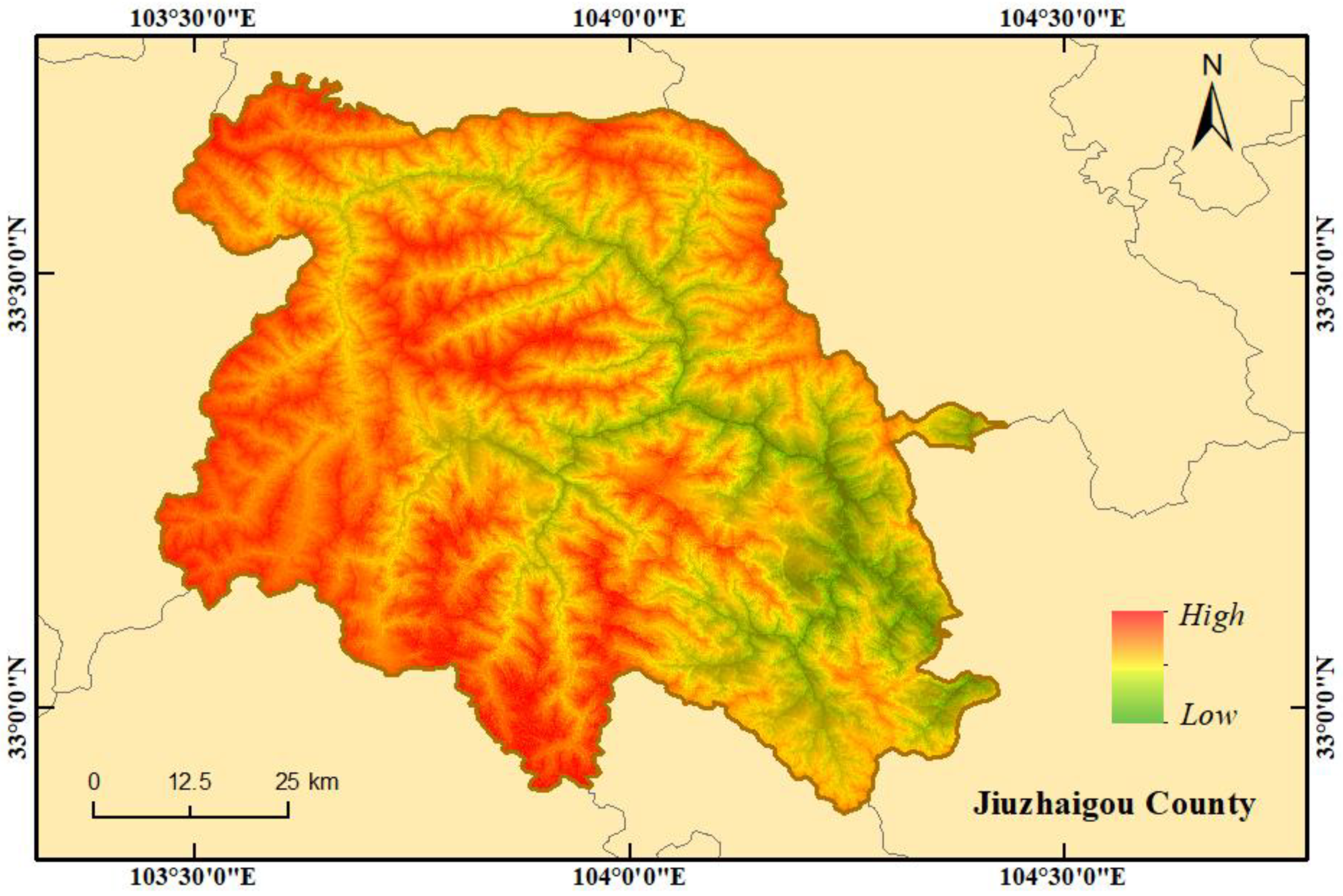

4.1. Research Region

4.2. Experimental Environment

4.3. Experiment Using Sample Locations

4.4. Experiment over a Complete Region

5. Accuracy Evaluation Scheme

5.1. Basic Accuracy Evaluation Indicators

5.2. Specific Evaluation Method

5.2.1. Cross Validation Based on Raster Data

5.2.2. Large-Scale Validation on Point Data

5.2.3. Validation Using OSM Data

6. Results and Discussion

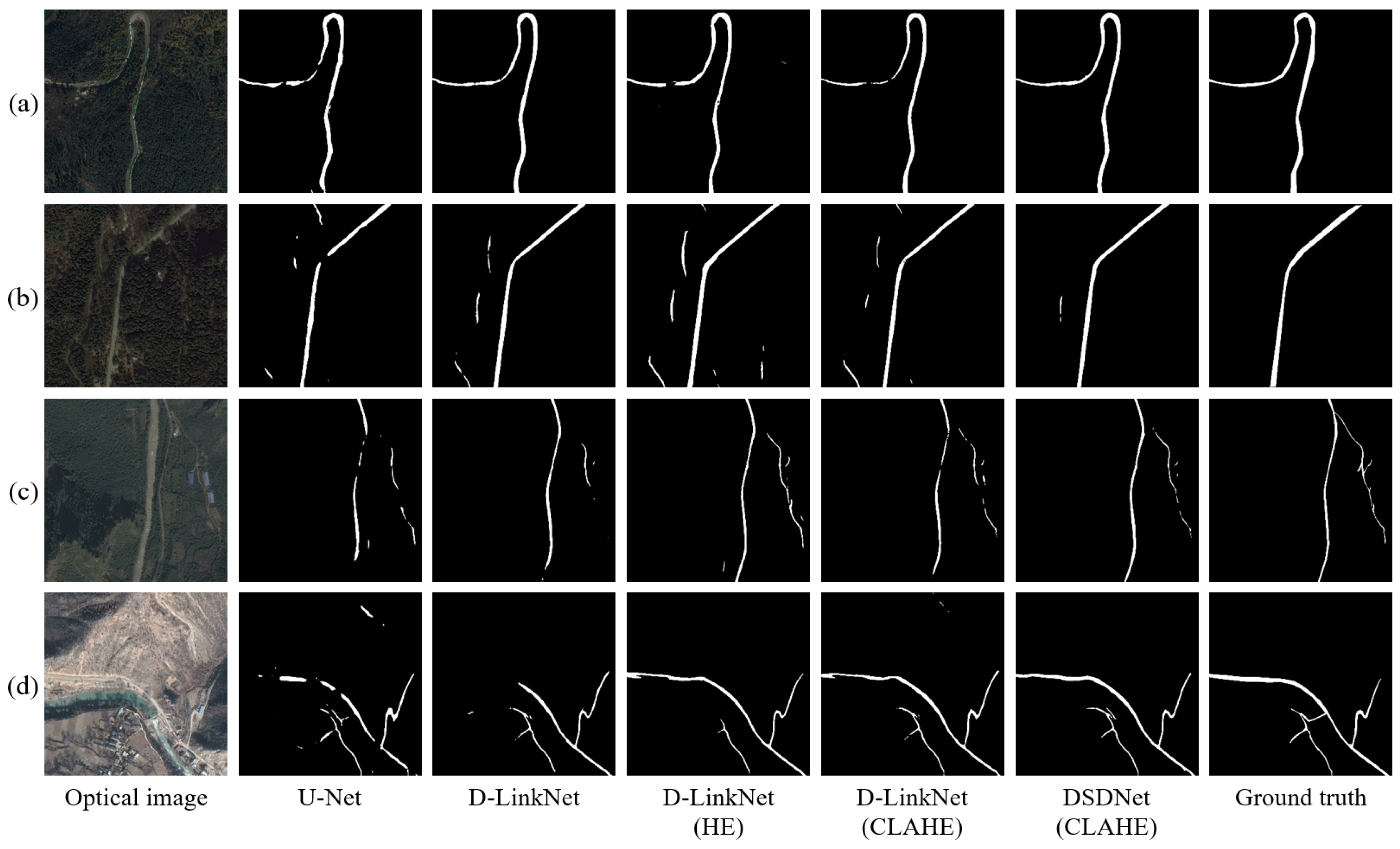

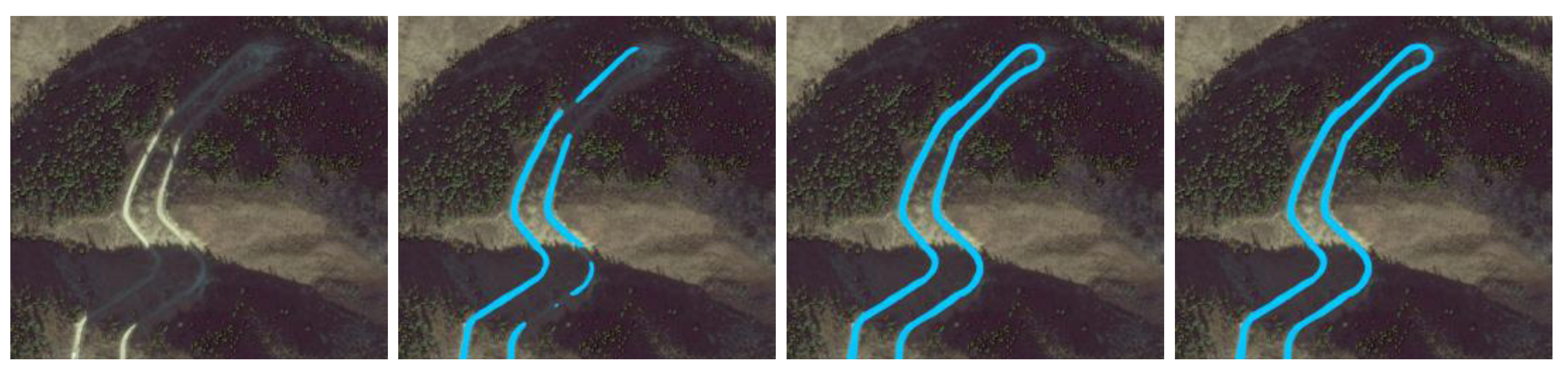

6.1. Results of the Raster Samples

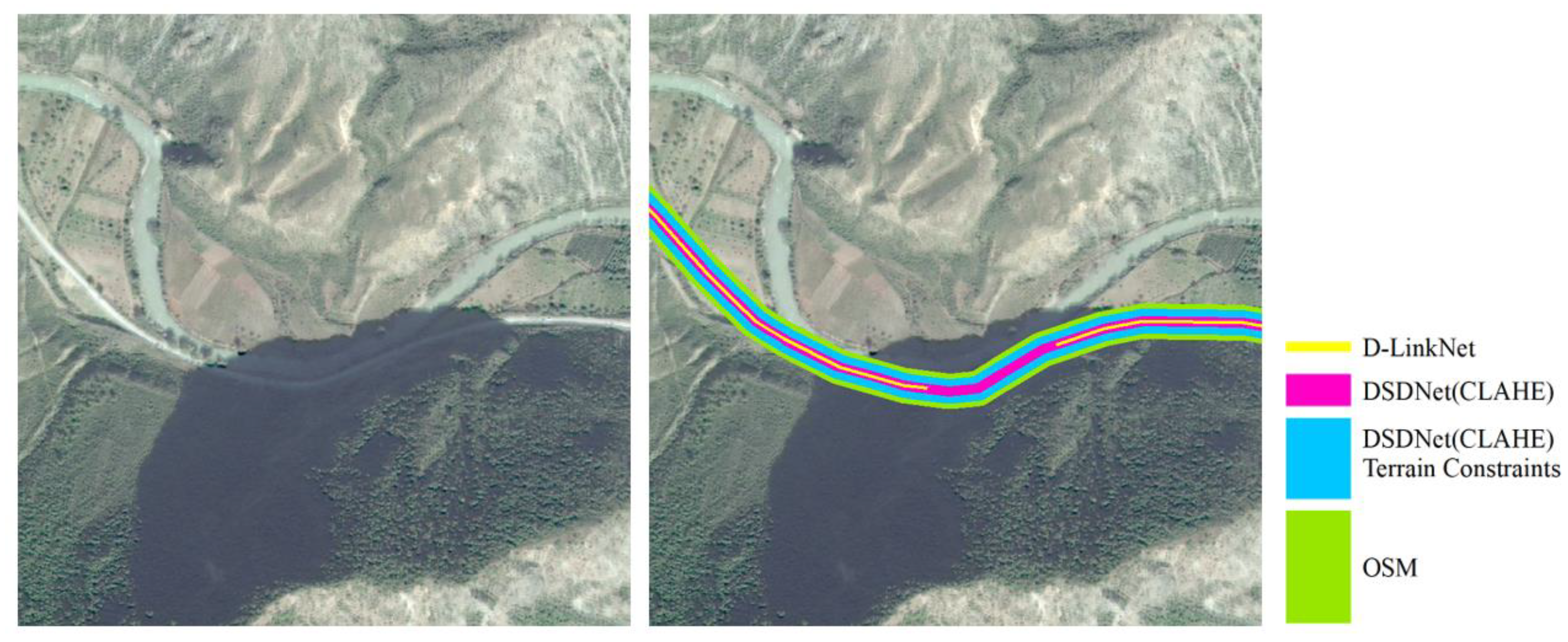

6.2. Results for the Complete Region

7. Conclusions and Future Lines of Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VHR | Very High Resolution image |
| OSM | OpenStreetMap |
| HE | Histogram Equalization |
| AHE | Adaptive Histogram Equalization |
| CLAHE | Contrast Limited Adaptive Histogram Equalization |
| SVM | Support Vector Machine |
| DEM | Digital Elevation Model |


| Pretreatment | Network | Group | Precision | Recall | F1 | mF1 |
|---|---|---|---|---|---|---|
| - | U-Net | 1 | 0.8501 | 0.8354 | 0.8427 | 0.8145 |
| - | U-Net | 2 | 0.7879 | 0.7845 | 0.7862 | |
| - | D-LinkNet | 1 | 0.8966 | 0.8305 | 0.8622 | 0.8529 |
| - | D-LinkNet | 2 | 0.8453 | 0.8418 | 0.8436 | |
| HE | D-LinkNet | 1 | 0.8559 | 0.8604 | 0.8582 | 0.8413 |
| HE | D-LinkNet | 2 | 0.8079 | 0.8416 | 0.8244 | |
| CLAHE | D-LinkNet | 1 | 0.8969 | 0.8338 | 0.8642 | 0.8579 |
| CLAHE | D-LinkNet | 2 | 0.8536 | 0.8494 | 0.8515 | |
| CLAHE | DSDNet | 1 | 0.8979 | 0.8463 | 0.8713 | 0.8631 |
| CLAHE | DSDNet | 2 | 0.8567 | 0.8531 | 0.8549 | |
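The F1 values in the table above are consistent with the harmonic mean of precision and recall, and the mF1 values are consistent with the arithmetic mean of the two groups' F1 scores. The short check below recomputes both for the CLAHE + DSDNet rows; the function names are illustrative. Because the tabulated precision and recall are rounded to four decimals, recomputed values for some rows can differ from the table in the last digit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def mean_f1(f1_values):
    """Arithmetic mean of the per-group F1 scores."""
    return sum(f1_values) / len(f1_values)


# (precision, recall) for groups 1 and 2 of the CLAHE + DSDNet rows.
dsdnet_groups = [(0.8979, 0.8463), (0.8567, 0.8531)]
dsdnet_f1 = [f1_score(p, r) for p, r in dsdnet_groups]

if __name__ == "__main__":
    for group, value in enumerate(dsdnet_f1, start=1):
        print(f"group {group}: F1 = {value:.4f}")
    print(f"mF1 = {mean_f1(dsdnet_f1):.4f}")
```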

| Method | Post-Processing | Precision | Recall | F1 |
|---|---|---|---|---|
| D-LinkNet | - | 0.7981 | 0.8218 | 0.8098 |
| DSDNet (CLAHE) | - | 0.7647 | 0.9010 | 0.8273 |
| DSDNet (CLAHE) | Terrain Constraints | 0.8318 | 0.8812 | 0.8558 |

| Method | Post-Processing | Recall |
|---|---|---|
| D-LinkNet | - | 0.8673 |
| DSDNet (CLAHE) | - | 0.8854 |
| DSDNet (CLAHE) | Terrain Constraints | 0.8801 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, Z.; Shen, Z.; Li, Y.; Xia, L.; Wang, H.; Li, S.; Jiao, S.; Lei, Y. Road Extraction in Mountainous Regions from High-Resolution Images Based on DSDNet and Terrain Optimization. Remote Sens. 2021, 13, 90. https://doi.org/10.3390/rs13010090
