Spatially Enhanced Spectral Unmixing Through Data Fusion of Spectral and Visible Images from Different Sensors

Abstract

1. Introduction

- We propose a computationally light strategy for the fusion of data from different sensors without the need for full spatial, spectral, and temporal overlapping.

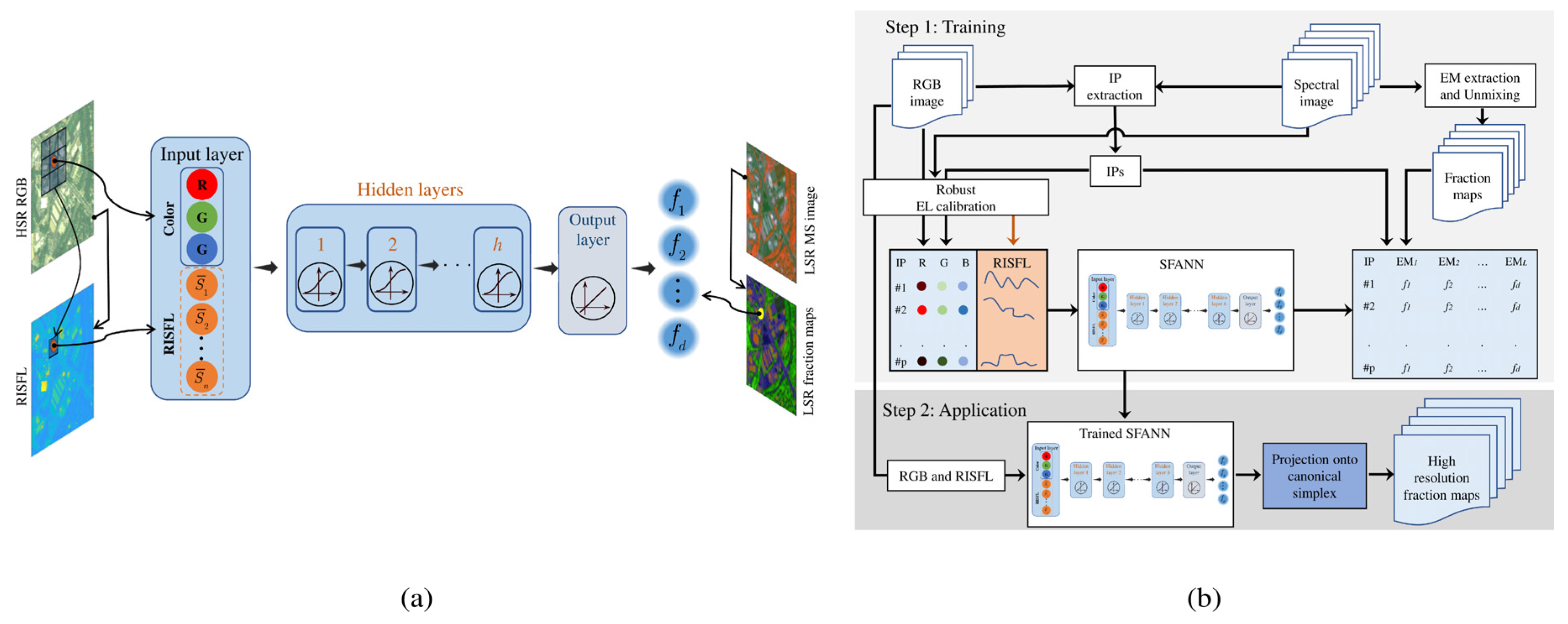

- We introduce a new rotation-invariant spatial feature for learning (RISFL) that allows for the incorporation of spatial information within the machine learning process without the need for CNNs.

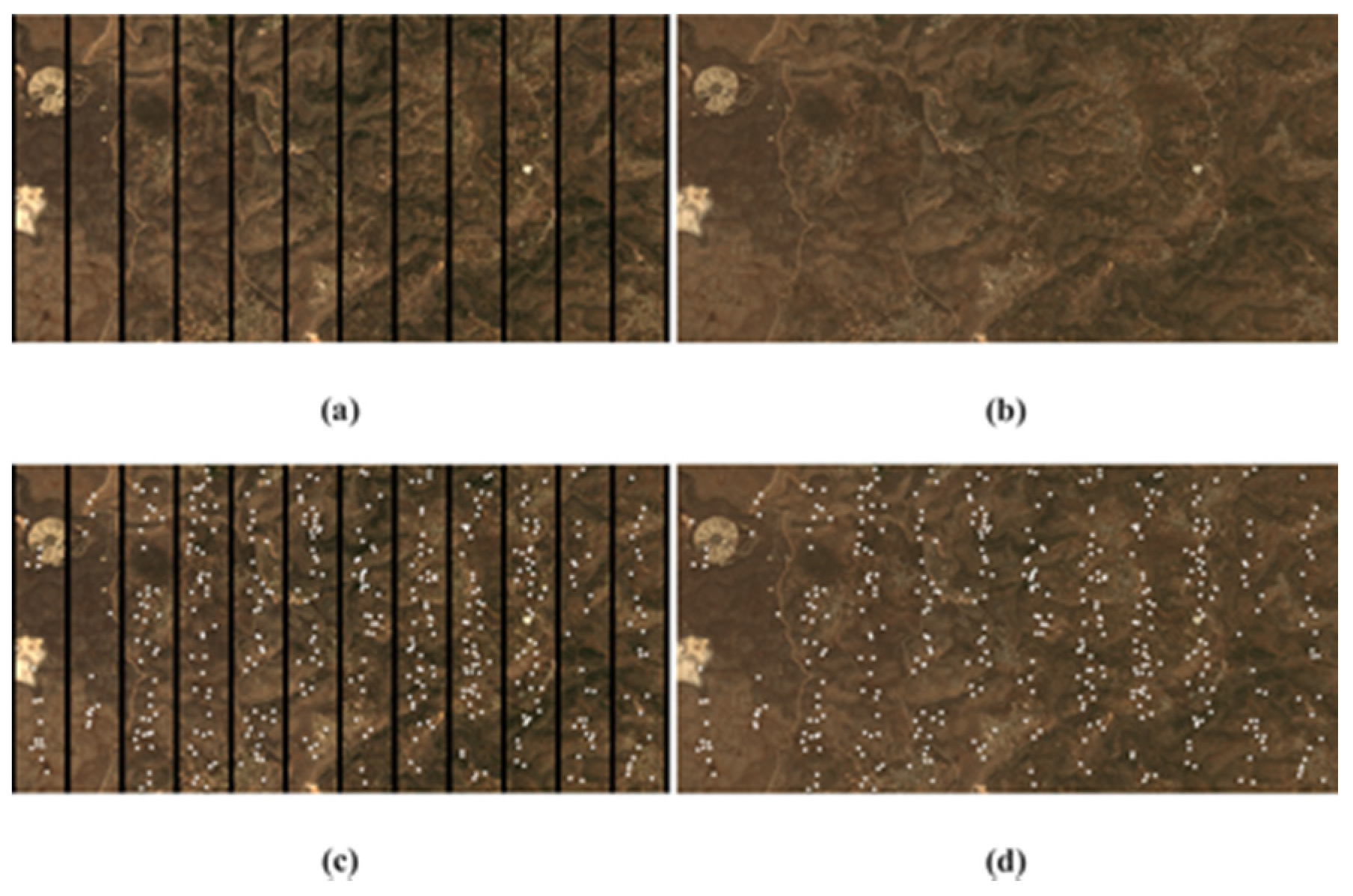

- Using IPs, as proposed in the paper, we suggest a new strategy for data fusion that allows for the use of sources with missing-data pixels, e.g., images provided by Landsat 7 with data gaps due to scan line corrector failure [36].

2. Materials and Methods

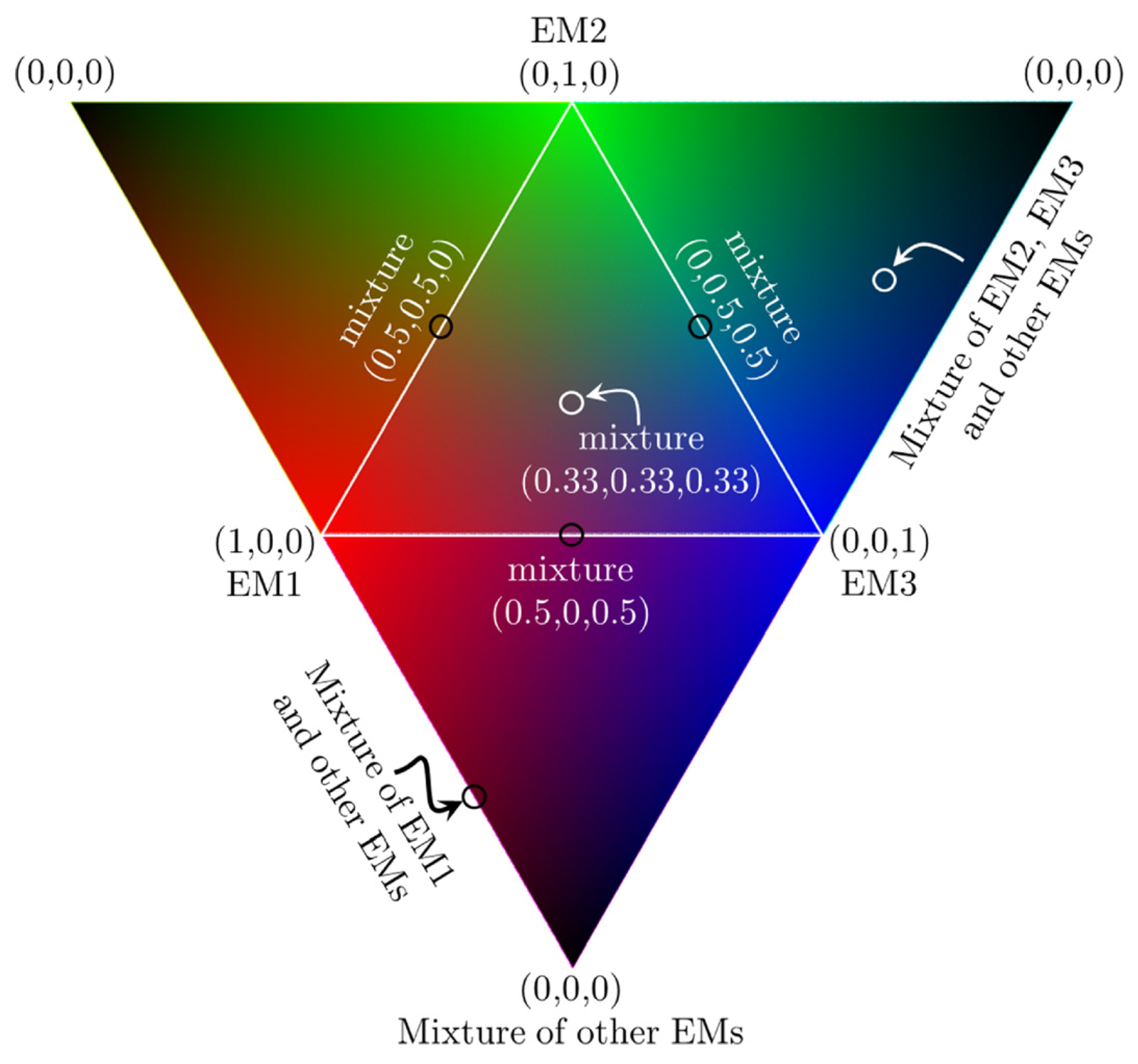

2.1. Spectral Unmixing

2.2. Invariant Points (IPs) for Supervised Learning and Fusion of Data from Different Sensors

2.3. Automatic Extraction of IPs

2.4. Rotation Invariant Spatial Features for Learning for the Incorporation of Spatial Information

- Be robust to rotation.

- Map the spatial distribution of the original colors surrounding the pixels.

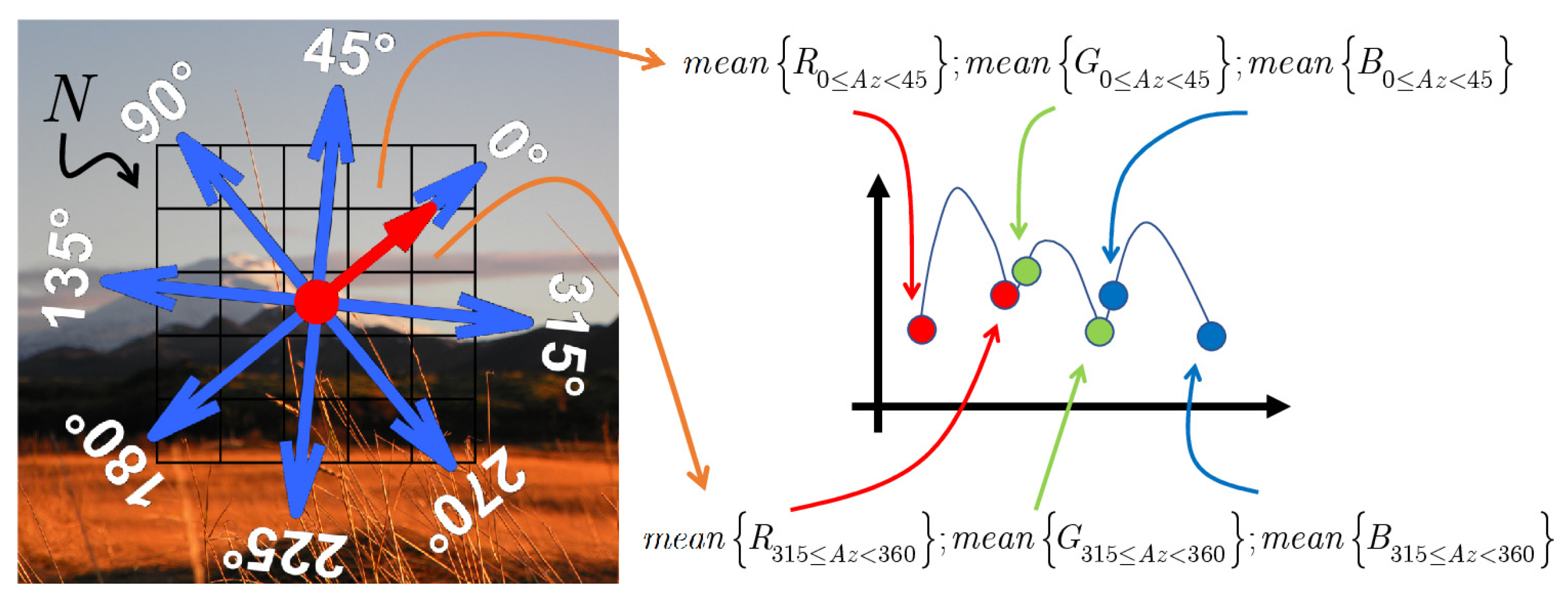

- Compute the direction (azimuth) of the local gradient at the pixel.

- Calculate the azimuth to each pixel in the neighborhood in a local coordinate system centered at the pixel and rotated by the gradient azimuth, such that the new azimuth of the gradient direction is zero.

- Divide the neighborhood into directional regions (see Figure 3), such that each region is the set of neighborhood pixels whose local azimuth falls within that region's angular range. The first directional region includes all pixels with azimuth values equal to or greater than the lower bound of the first range and smaller than its upper bound, and the last directional region includes all pixels with azimuths equal to or greater than the lower bound of the last range and smaller than its upper bound (see Figure 2).

- For each region, calculate the mean value of the R, G, and B for all pixels that fall within the region.
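The steps above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: the function name `risfl`, the central-difference gradient on the mean of R, G, and B, the equal-width angular sectors, the exclusion of the center pixel, and the zero fill for empty regions are all assumptions. The parameters `s` (window size) and `n` (number of directional regions) are named after the RISFL parameters listed in the experiment table.

```python
import math


def risfl(img, row, col, s=5, n=4):
    """Sketch of a rotation-invariant spatial feature for one pixel.

    img is a list of rows, each row a list of (R, G, B) tuples.
    The s x s window around (row, col) must lie inside the image.
    Returns 3 * n values: the mean R, G, B of each directional region.
    """
    half = s // 2

    def gray(r, c):
        return sum(img[r][c]) / 3.0

    # Step 1: azimuth of the local gradient (central differences, assumed).
    gx = gray(row, col + 1) - gray(row, col - 1)
    gy = gray(row + 1, col) - gray(row - 1, col)
    theta = math.atan2(gy, gx)

    width = 2.0 * math.pi / n  # assumed: n sectors of equal angular width
    sums = [[0.0, 0.0, 0.0] for _ in range(n)]
    counts = [0] * n

    for dr in range(-half, half + 1):
        for dc in range(-half, half + 1):
            if dr == 0 and dc == 0:
                continue  # the center pixel has no azimuth
            # Step 2: azimuth in the local system rotated by theta, so the
            # gradient direction itself maps to azimuth zero.
            az = (math.atan2(dr, dc) - theta) % (2.0 * math.pi)
            # Step 3: assign the pixel to its directional region.
            k = min(int(az / width), n - 1)
            for b in range(3):
                sums[k][b] += img[row + dr][col + dc][b]
            counts[k] += 1

    # Step 4: mean R, G, B per region (0.0 for an empty region, assumed).
    feats = []
    for k in range(n):
        for b in range(3):
            feats.append(sums[k][b] / counts[k] if counts[k] else 0.0)
    return feats
```

Because the azimuths are measured relative to the gradient direction, a step edge that is bright on the right and the same edge rotated by 90° place the bright pixels in the same directional region, which is the property the feature is meant to provide.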

2.5. Empirical Line Calibration

2.6. Data Fusion for Resolution Enhancement of Fraction Maps

2.6.1. SFANN for Fraction Estimation

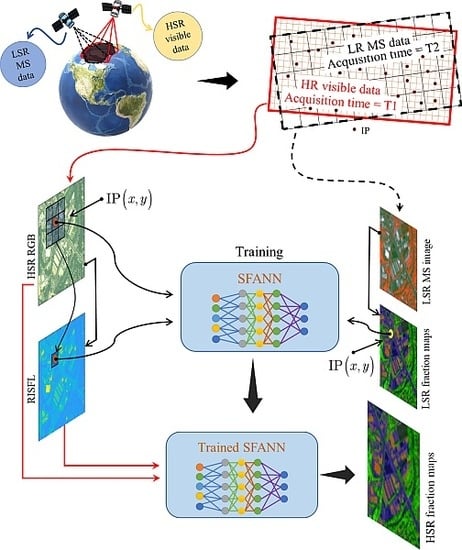

2.6.2. Methodological Framework

- Extract IPs between the spectral and visible RGB images.

- Calibrate the RGB values using robust empirical line (EL) calibration.

- Extract RISFLs.

- Automatically extract EMs from the spectral image.

- Estimate the fraction map for each EM using the unmixing process.

- Using the IPs, train the SFANN to model the relationship between the calibrated RGB values, the RISFLs, and the fractions of each EM.

- Apply the trained SFANN to all pixels in the visible image and their RISFLs to create fraction maps with HSR.
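The calibration step in the list above can be illustrated with a small sketch. It assumes that empirical line calibration fits, for each band, a gain and an offset that map the uncalibrated values at the IPs to the reflectance of the spectral image at the same points. The function names, the residual-based outlier rejection used to make the fit "robust", and the parameters `n_iter` and `k` are hypothetical choices for illustration; the robust scheme used in the paper may differ.

```python
def fit_empirical_line(dn, reflectance):
    """Least-squares gain and offset so that reflectance ~ gain * dn + offset."""
    n = len(dn)
    mean_x = sum(dn) / n
    mean_y = sum(reflectance) / n
    sxx = sum((x - mean_x) ** 2 for x in dn)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(dn, reflectance))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset


def robust_empirical_line(dn, reflectance, n_iter=3, k=2.5):
    """Refit after dropping points whose residual exceeds k times the RMS residual.

    This rejection rule is an assumed, simple way to limit the effect of
    mismatched IPs; it is not taken from the paper.
    """
    pts = list(zip(dn, reflectance))
    gain, offset = fit_empirical_line(dn, reflectance)
    for _ in range(n_iter):
        res = [y - (gain * x + offset) for x, y in pts]
        rms = (sum(r * r for r in res) / len(res)) ** 0.5
        if rms == 0.0:
            break
        kept = [p for p, r in zip(pts, res) if abs(r) <= k * rms]
        if len(kept) < 2 or len(kept) == len(pts):
            break
        pts = kept
        gain, offset = fit_empirical_line([p[0] for p in pts],
                                          [p[1] for p in pts])
    return gain, offset
```

In use, the fit would be run once per band on the IP pairs, and the resulting gain and offset applied to every pixel of that band in the visible image.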

2.7. Data and Experimental Evaluation

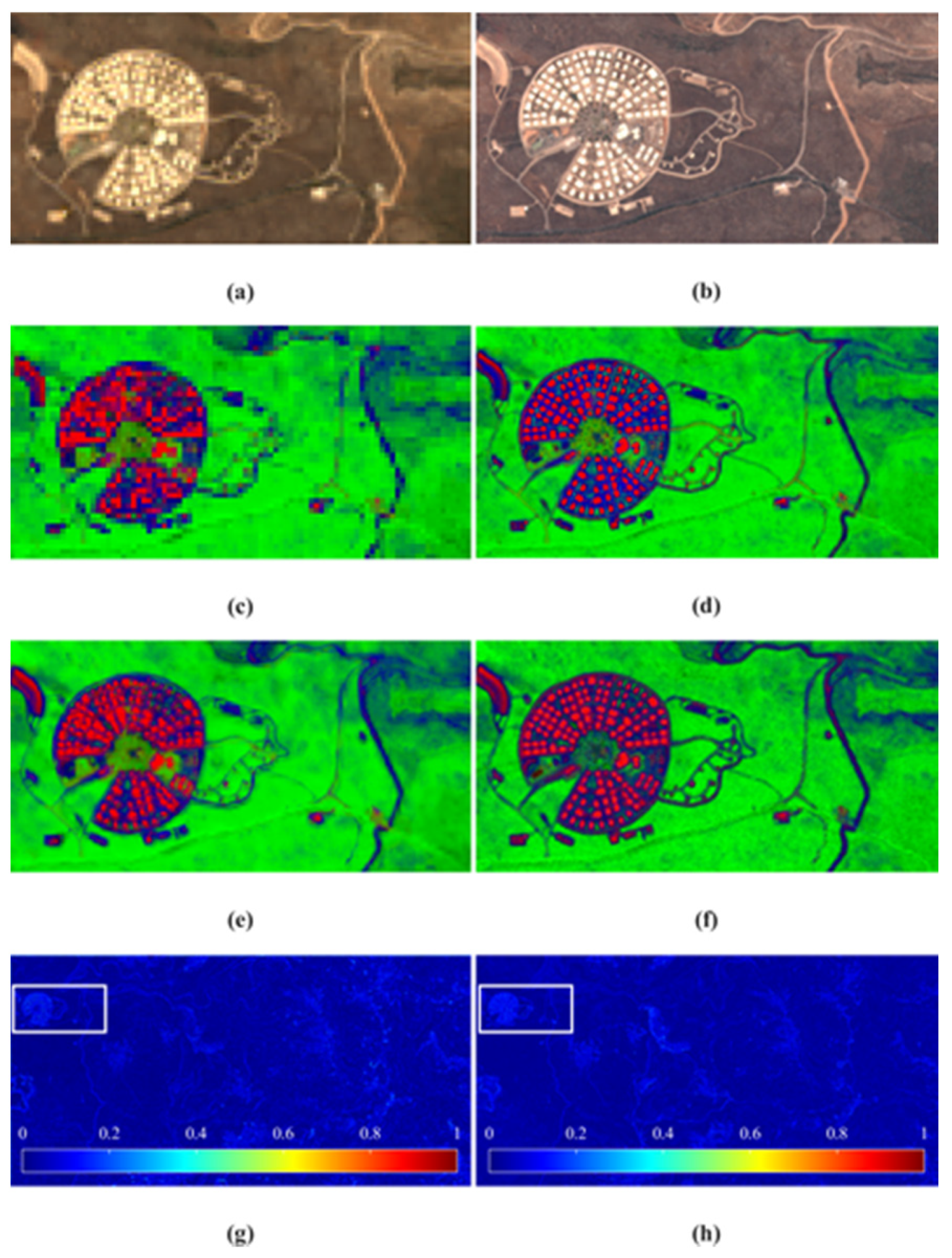

- Venus dataset-a, a spectral image with 12 bands and an SR of 10 m along with its corresponding uncalibrated spectral image with an SR of 5 m, both provided by the Venus satellite (Figure 6c,d).

- Venus dataset-b, similar to dataset 2 but for a different area that contains multiple homogeneous regions (Figure 6e,f).

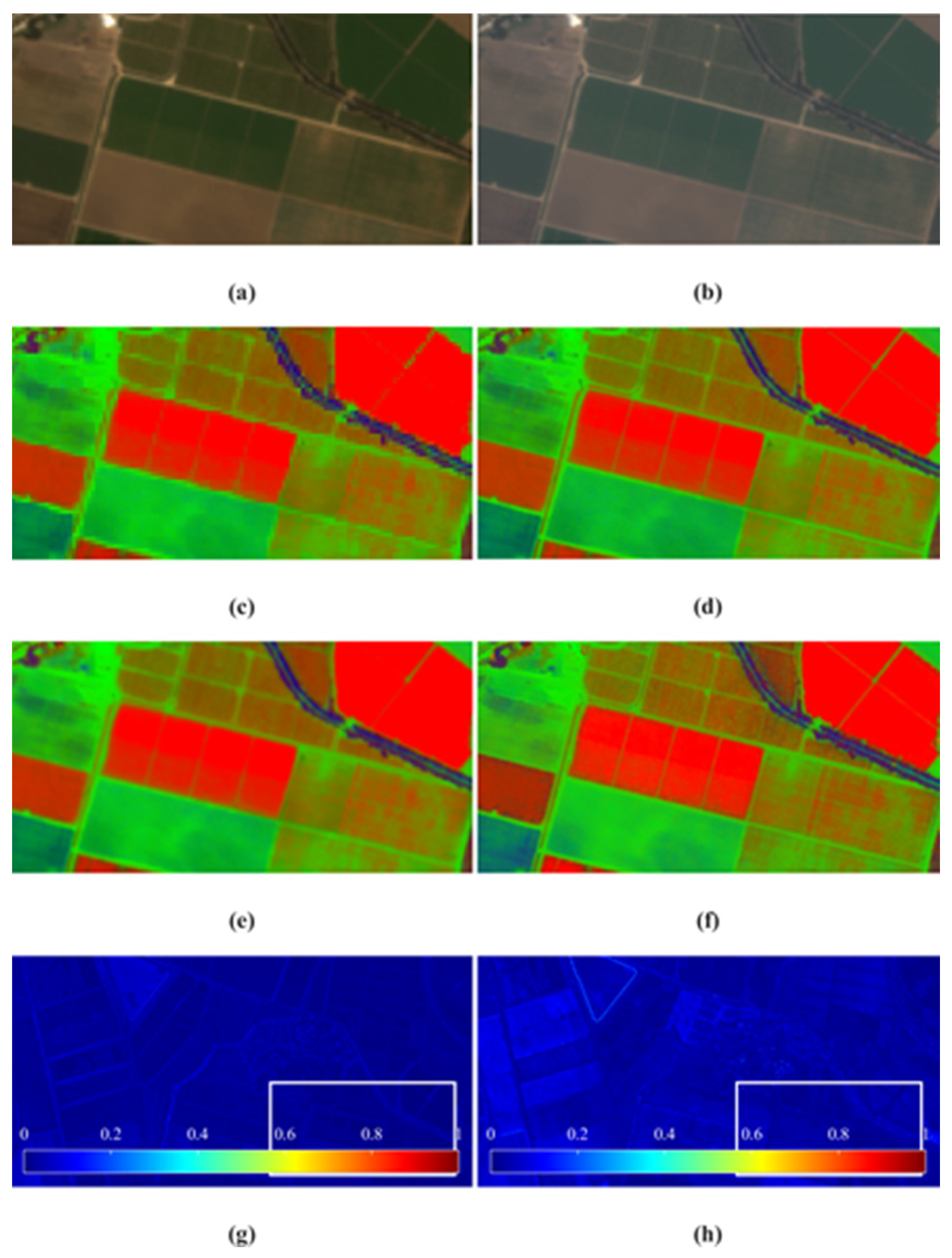

- A spectral image provided by the GeoEye-1 satellite with four bands and an SR of 1.84 m and a corresponding panchromatic image with an SR of 0.46 m.

- A spectral image provided by the Ikonos satellite with four bands and an SR of 3.28 m and a corresponding panchromatic image with an SR of 0.82 m.

- A spectral image provided by the WorldView-2 satellite with eight bands and an SR of 1.84 m and a corresponding panchromatic image with an SR of 0.46 m.

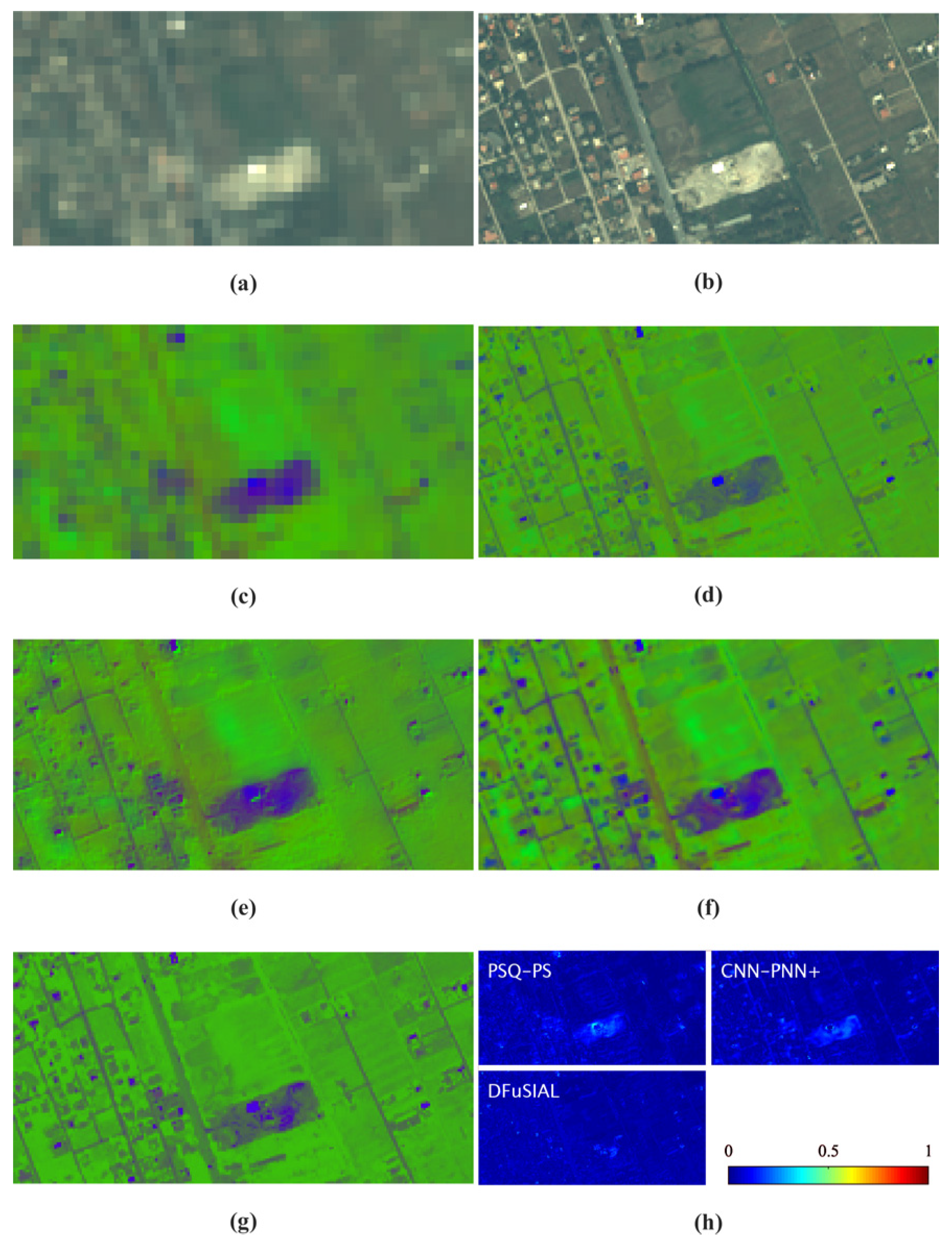

- An RGB image with an SR of 1 m, freely available on Google Earth, and a corresponding spectral image of the same area with an SR of 10 m provided by the Sentinel-2A satellite.

- Group-1 includes datasets 1, 2, and 3. In each of these three cases, a real full-reference HSR spectral image is available and used for the evaluation of the obtained HSR fraction maps.

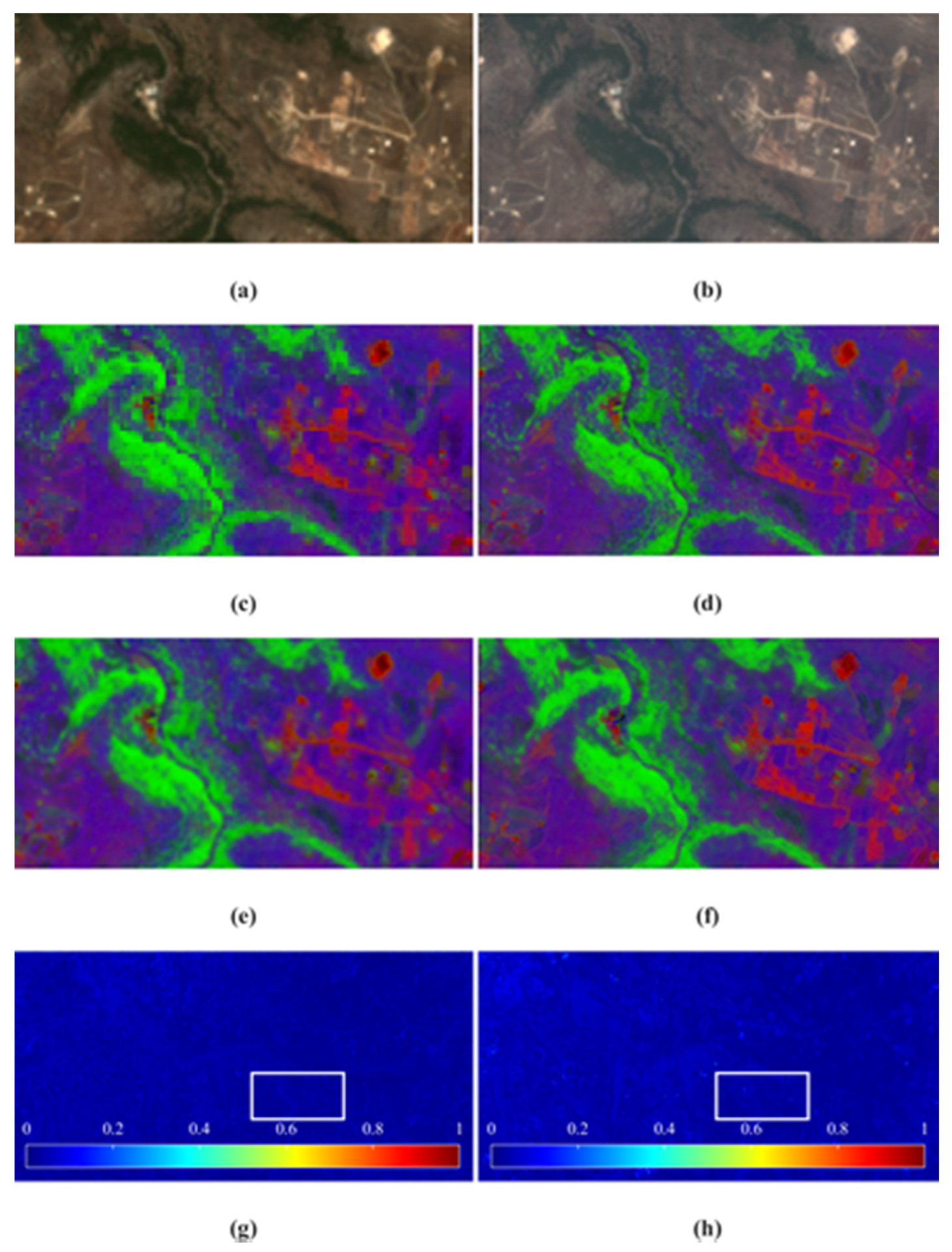

- The Sentinel image has 13 bands: 1–8, 8A, and 9–12. The central wavelengths of bands 1–4, 8A, 11, and 12 are similar to those of the Landsat bands, so we use these bands in our experiments. The visible bands, 2, 3, and 4, have an SR of 10 m and were used to create a corresponding RGB image. The resolution of bands 1, 8A, 11, and 12 was artificially resampled from 20 m to 10 m. The Landsat image was acquired on 28 May 2017, whereas the Sentinel image was acquired three days later, on 31 May 2017.

- The second and third datasets combine fully overlapping images that were simultaneously acquired on 17 June 2018 by the Venus satellite. The satellite provides the LSR (10 m) image with reflectance units, while the HSR (5 m) image is available only with uncalibrated DN units.

- Group-2 includes datasets 4, 5, and 6. Since a real full reference is not available for these images, we adopted the strategy presented in [25] and [26], following Wald’s protocol [60], for generating a simulated full reference. We reduced the SR of the original data by resampling the spectral and panchromatic images in these datasets by a factor of 0.25 to create new spectral images for GeoEye-1, Ikonos, and WorldView-2 with SRs of 7.36 m, 13.12 m, and 7.36 m, respectively. The original MS image is then used as the reference ground truth.

- Group-3 includes dataset 7. The RGB image, taken from Google Earth, was acquired on 8 August 2014 and is the most recent available HSR image of the selected area. A corresponding spectral image was derived from the Sentinel-2A image with an SR of 10 m from dataset 1. No reference ground truth is available in this group.
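The resolution reduction used for Group-2 can be checked with a short sketch. Resampling by a factor of 0.25 makes each pixel four times larger, so 1.84 m becomes 7.36 m and 3.28 m becomes 13.12 m, and a 320 × 320 image becomes 80 × 80. The block averaging used below is an assumption chosen for simplicity; the degradation filter actually applied under Wald's protocol in the paper is not stated here, and the function names are hypothetical.

```python
def degraded_sr(sr, factor):
    """Pixel size after resampling by the given factor (0.25 -> 4x coarser)."""
    return sr / factor


def downsample_band(band, block=4):
    """Average non-overlapping block x block windows of a 2-D band.

    band is a list of rows of numbers; block averaging is an assumed,
    simple stand-in for the degradation filter.
    """
    rows = len(band) // block
    cols = len(band[0]) // block
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [band[r * block + i][c * block + j]
                      for i in range(block) for j in range(block)]
            out_row.append(sum(window) / len(window))
        out.append(out_row)
    return out
```

The original image then serves as the reference against which results obtained from the degraded pair are compared.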

- (1) The conventional filter-based approach, presented in [61], is intended for preserving spectral quality (PSQ). For convenience, we use the term PSQ-PS to refer to this method in the rest of the paper.

- (2) The CNN-based approach presented in [26] utilizes a target-adaptive fine-tuning step to further improve on the results of its accurate baseline method, the pansharpening neural network (PNN) [25]. We use the term CNN-PNN+ to refer to this method in the rest of the paper.

- Type 1: The MAE, its STD, the RMSE, and the Max values of the fractions obtained by the different methods are computed according to Equations (7)–(9), with respect to HSR ground truth (GT) fraction maps derived by applying SUnSAL on the available HSR spectral image. All pixels in the HSR image are used in the evaluation.

- Type 2: The MAE, its STD, the RMSE, and the Max values are computed with respect to the fractions obtained by SUnSAL for the LSR spectral image. Only IPs are used in this evaluation. More specifically, we use 20% of the IPs for the evaluation, and the remaining 80% are used to train the SFANN.
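The error measures reported in the result tables (MAE, STD, RMSE, Max) can be sketched as follows. Equations (7)–(9) are not reproduced in this text, so the definitions below are assumptions: STD is taken as the population standard deviation of the absolute errors, and Max as MAE + 3·STD. These choices were inferred from the tabulated values, which are consistent with RMSE² ≈ MAE² + STD² and Max ≈ MAE + 3·STD (for example, 0.035, 0.046, 0.058, and 0.172 for PSQ-PS in Test 1). The function name `error_metrics` is hypothetical.

```python
import math


def error_metrics(estimated, reference):
    """MAE, STD, RMSE, and an assumed 3-sigma Max for two fraction lists."""
    abs_err = [abs(e - r) for e, r in zip(estimated, reference)]
    n = len(abs_err)
    mae = sum(abs_err) / n
    # Population standard deviation of the absolute errors (assumed).
    std = math.sqrt(sum((a - mae) ** 2 for a in abs_err) / n)
    rmse = math.sqrt(sum(a * a for a in abs_err) / n)
    # Assumed definition, inferred from the tables: MAE + 3 * STD.
    return {"MAE": mae, "STD": std, "RMSE": rmse, "Max": mae + 3.0 * std}
```

With these definitions, the identity RMSE² = MAE² + STD² holds exactly, so the RMSE column carries no information beyond the MAE and STD columns; it is still convenient as a single summary value.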

3. Results and Discussion

3.1. Quantitative Evaluation

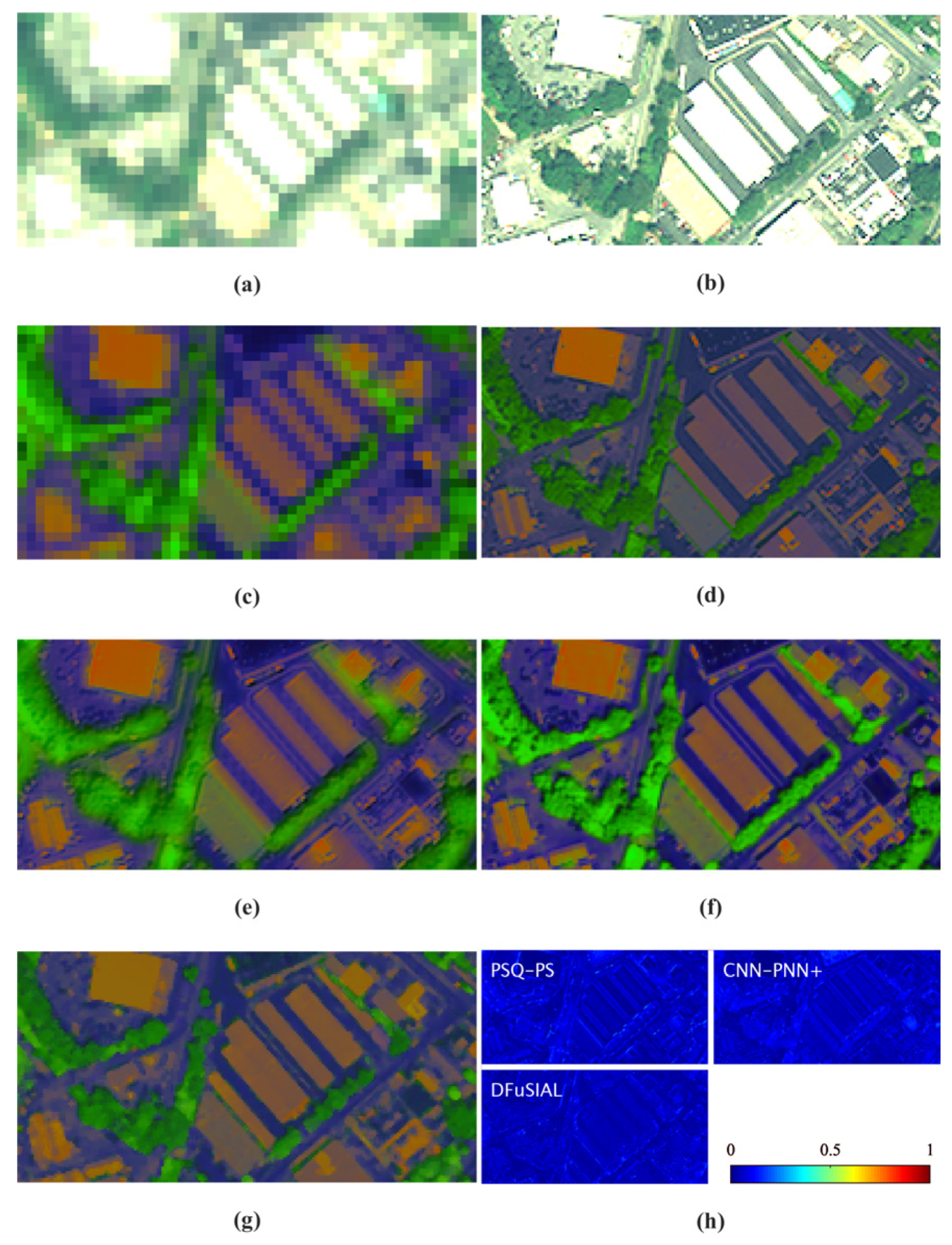

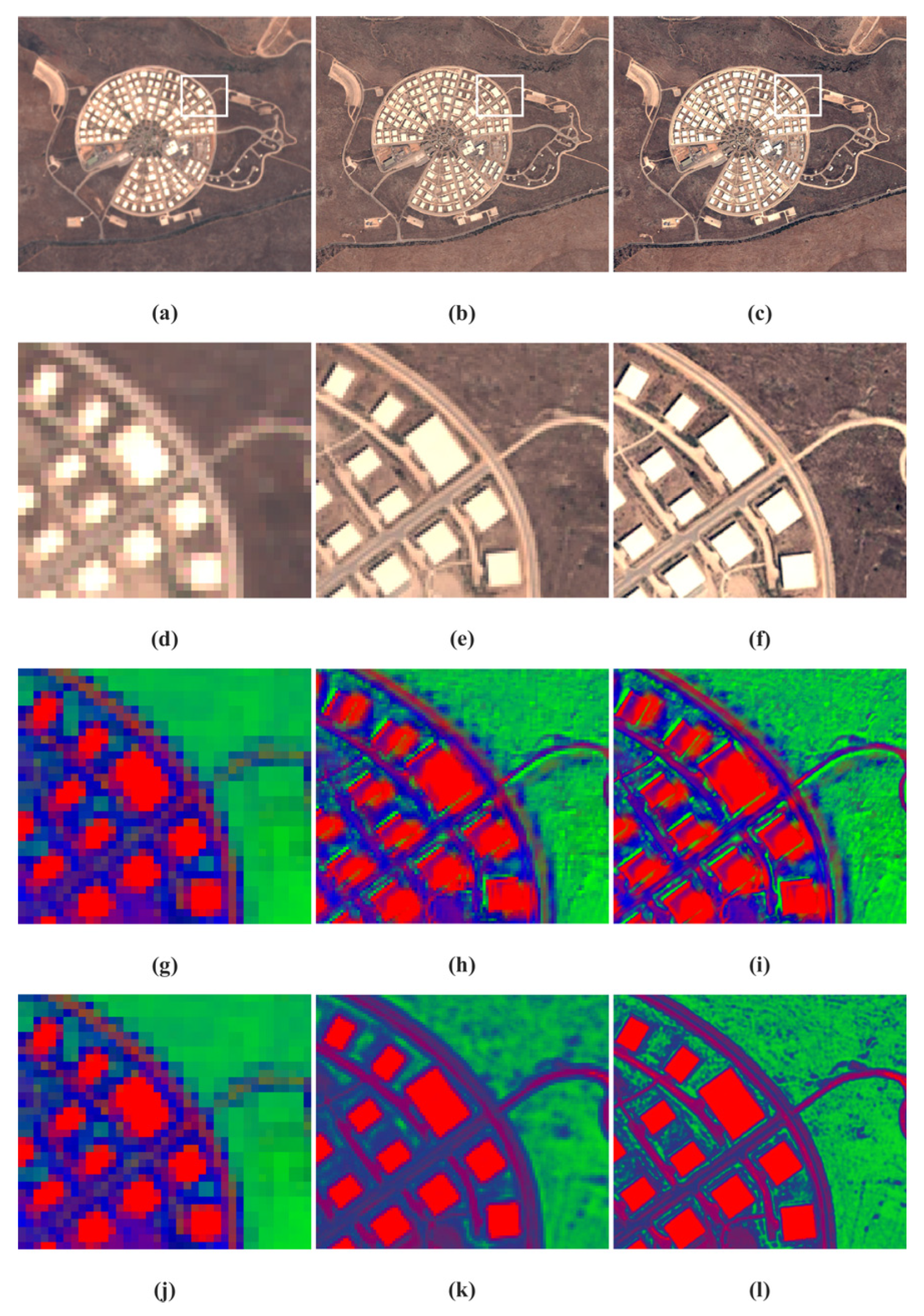

3.2. Visual Evaluation

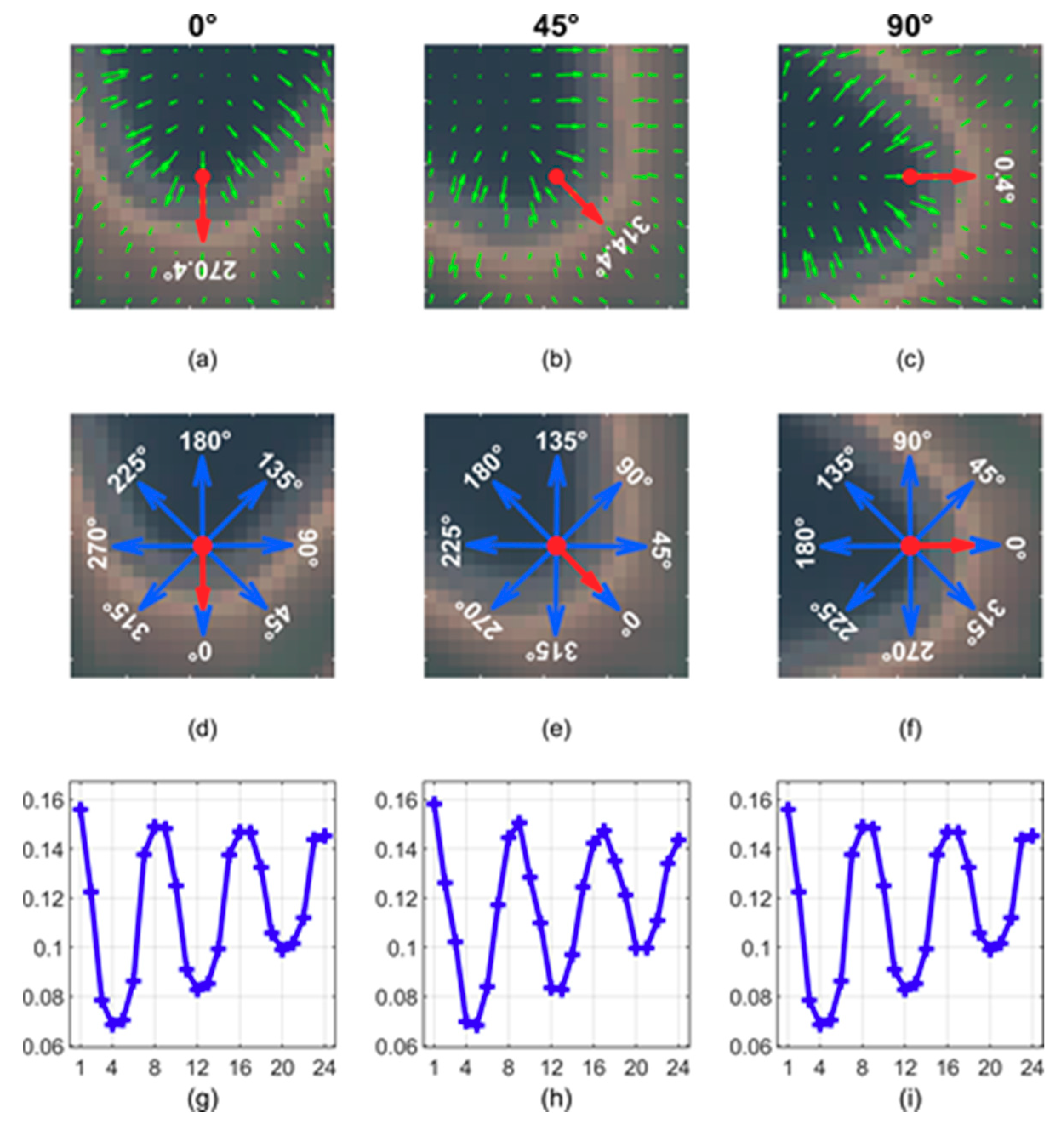

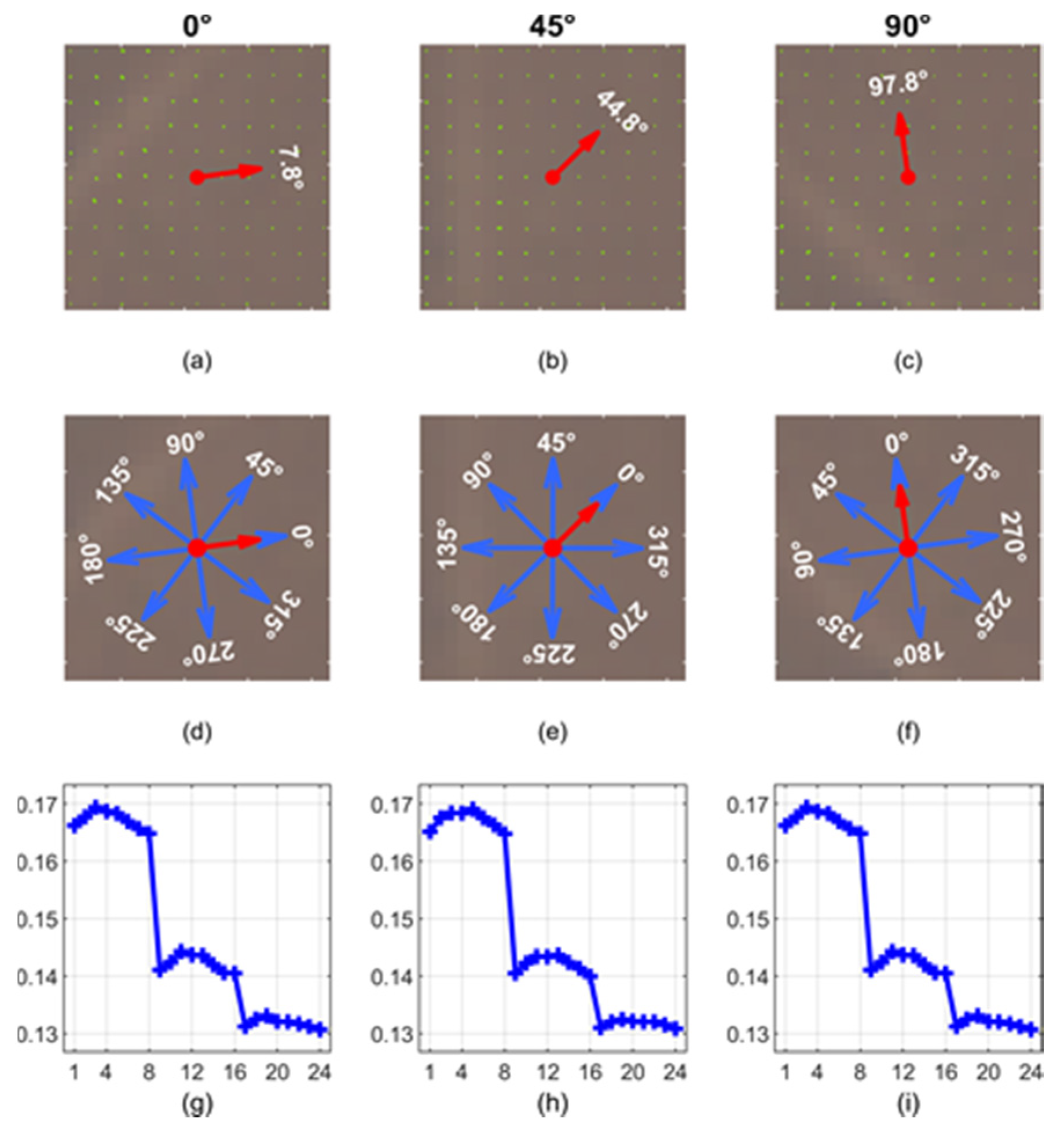

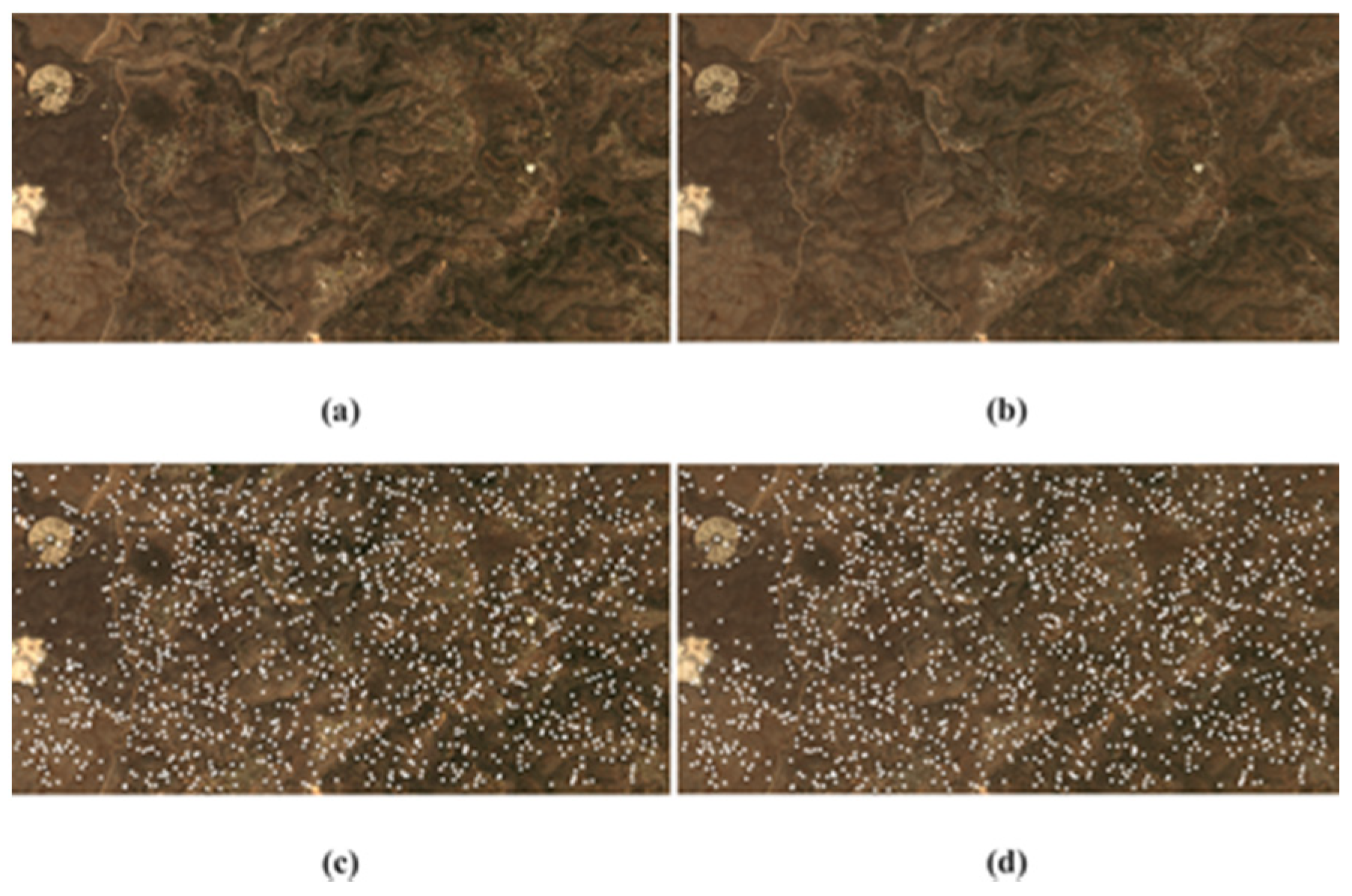

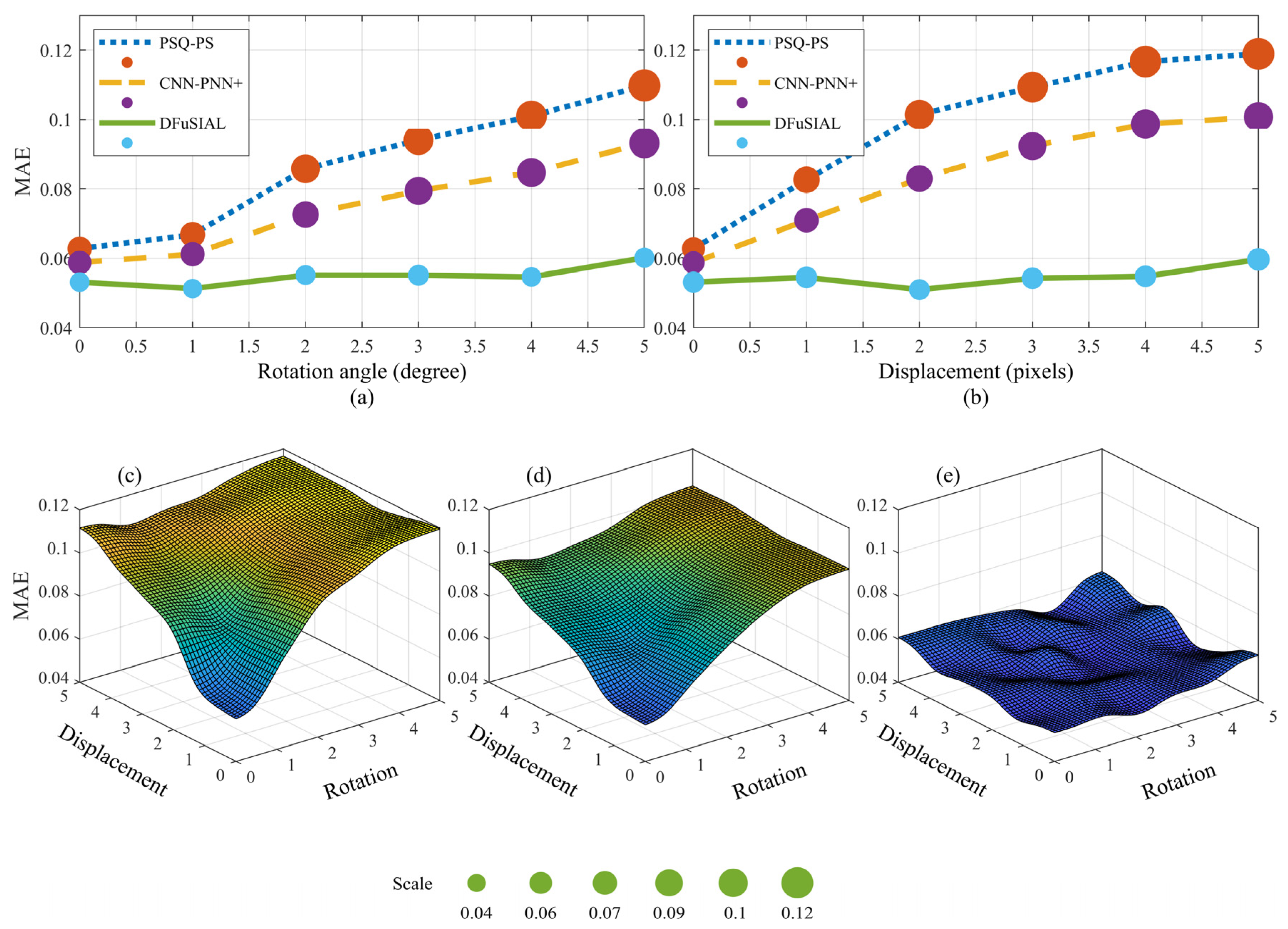

3.3. Evaluation of the Performance under Rotation and Displacement Mismatch between Data Sources (Experiment 4)

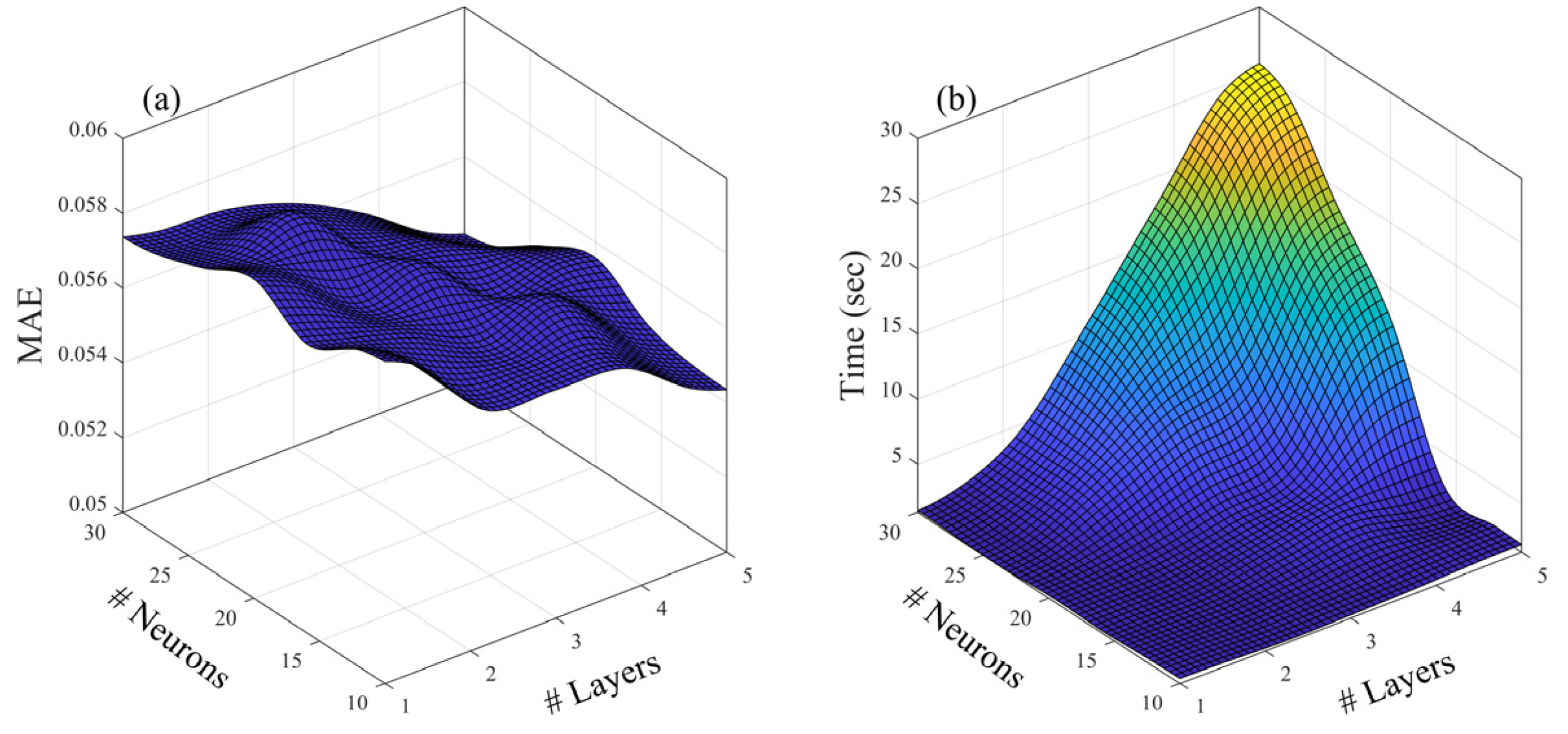

3.4. Sensitivity Test

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Boreman, G.D. Classification of imaging spectrometers for remote sensing applications. Opt. Eng. 2005, 44, 013602.

- Garini, Y.; Young, I.T.; McNamara, G. Spectral imaging: Principles and applications. Cytom. Part A 2006, 69, 735–747.

- Goetz, A.F.; Vane, G.; Solomon, J.E.; Rock, B.N. Imaging spectrometry for Earth remote sensing. Science 1985, 228, 1147–1153.

- Gat, N.; Subramanian, S.; Barhen, J.; Toomarian, N. Spectral imaging applications: Remote sensing, environmental monitoring, medicine, military operations, factory automation, and manufacturing. In Proceedings of the 25th Annual AIPR Workshop on Emerging Applications of Computer Vision, Washington, DC, USA, 26 February 1997; Volume 2962, pp. 63–77.

- Manolakis, D.; Shaw, G. Detection algorithms for hyperspectral imaging applications. IEEE Signal Process. Mag. 2002, 19, 29–43.

- Shaw, G.A.; Burke, H.K. Spectral imaging for remote sensing. Linc. Lab. J. 2003, 14, 3–28.

- Klein, M.; Aalderink, B.; Padoan, R.; De Bruin, G.; Steemers, T. Quantitative hyperspectral reflectance imaging. Sensors 2008, 8, 5576–5618.

- Bioucas-Dias, J.M.; Plaza, A.; Dobigeon, N.; Parente, M.; Du, Q.; Gader, P.; Chanussot, J. Hyperspectral unmixing overview: Geometrical, statistical, and sparse regression-based approaches. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 354–379.

- Li, W. Mapping urban impervious surfaces by using spectral mixture analysis and spectral indices. Remote Sens. 2020, 12, 94.

- Loncan, L.; De Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simões, M.; et al. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

- Meng, X.; Shen, H.; Li, H.; Zhang, L.; Fu, R. Review of the pansharpening methods for remote sensing images based on the idea of meta-analysis: Practical discussion and challenges. Inf. Fusion 2019, 46, 102–113.

- Yuan, Q.; Wei, Y.; Meng, X.; Shen, H.; Zhang, L. A multiscale and multidepth convolutional neural network for remote sensing imagery pan-sharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 978–989.

- Choi, J.; Yu, K.; Kim, Y. A new adaptive component-substitution-based satellite image fusion by using partial replacement. IEEE Trans. Geosci. Remote Sens. 2011, 49, 295–309.

- Nunez, J.; Otazu, X.; Fors, O.; Prades, A.; Pala, V.; Arbiol, R. Multiresolution-based image fusion with additive wavelet decomposition. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1204–1211.

- Amolins, K.; Zhang, Y.; Dare, P. Wavelet based image fusion techniques—An introduction, review and comparison. ISPRS J. Photogramm. Remote Sens. 2007, 62, 249–263.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. A new pansharpening algorithm based on total variation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 318–322.

- Yadaiah, N.; Singh, L.; Bapi, R.S.; Rao, V.S.; Deekshatulu, B.L.; Negi, A. Multisensor data fusion using neural networks. In Proceedings of the 2006 IEEE International Joint Conference on Neural Network Proceedings, Vancouver, BC, Canada, 16–21 July 2006.

- Huang, W.; Xiao, L.; Wei, Z.; Liu, H.; Tang, S. A new pan-sharpening method with deep neural networks. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1037–1041.

- Yang, J.; Fu, X.; Hu, Y.; Huang, Y.; Ding, X.; Paisley, J. PanNet: A deep network architecture for pan-sharpening. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Washington, DC, USA, 14–19 June 2017; pp. 1753–1761.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. Multispectral and hyperspectral image fusion using a 3-D-convolutional neural network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 639–643.

- Xing, Y.; Wang, M.; Yang, S.; Jiao, L. Pan-sharpening via deep metric learning. ISPRS J. Photogramm. Remote Sens. 2018, 145, 165–183.

- Ye, F.; Guo, Y.; Zhuang, P. Pan-sharpening via a gradient-based deep network prior. Signal Process. Image Commun. 2019, 74, 322–331.

- Guo, P.; Zhuang, P.; Guo, Y. Bayesian Pan-Sharpening With Multiorder Gradient-Based Deep Network Constraints. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 950–962.

- He, L.; Rao, Y.; Li, J.; Chanussot, J.; Plaza, A.; Zhu, J.; Li, B. Pansharpening via detail injection based convolutional neural networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 1188–1204.

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 2016, 8, 594.

- Scarpa, G.; Vitale, S.; Cozzolino, D. Target-adaptive CNN-based pansharpening. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5443–5457.

- Li, Z.; Cheng, C. A CNN-based pan-sharpening method for integrating panchromatic and multispectral images using Landsat 8. Remote Sens. 2019, 11, 2606.

- Vitale, S.; Scarpa, G. A detail-preserving cross-scale learning strategy for CNN-based pansharpening. Remote Sens. 2020, 12, 348.

- Yang, Y.; Tu, W.; Huang, S.; Lu, H. PCDRN: Progressive cascade deep residual network for pansharpening. Remote Sens. 2020, 12, 676.

- Hinton, G.E.; Osindero, S.; Teh, Y.-W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- Goh, G.B.; Hodas, N.O.; Vishnu, A. Deep learning for computational chemistry. J. Comput. Chem. 2017, 38, 1291–1307.

- Wang, D.; Li, Y.; Ma, L.; Bai, Z.; Chan, J. Going deeper with densely connected convolutional neural networks for multispectral pansharpening. Remote Sens. 2019, 11, 2608.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Carla, R.; Garzelli, A.; Santurri, L. Sensitivity of pansharpening methods to temporal and instrumental changes between multispectral and panchromatic data sets. IEEE Trans. Geosci. Remote Sens. 2017, 55, 308–319.

- Mazzia, V.; Khaliq, A.; Chiaberge, M. Improvement in land cover and crop classification based on temporal features learning from Sentinel-2 data using recurrent-convolutional neural network (R-CNN). Appl. Sci. 2019, 10, 238.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Markham, B.L.; Storey, J.C.; Williams, D.L.; Irons, J.R. Landsat sensor performance: History and current status. IEEE Trans. Geosci. Remote Sens. 2004, 42, 2691–2694.

- Chang, C.I. Constrained subpixel target detection for remotely sensed imagery. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1144–1159.

- Kizel, F.; Shoshany, M.; Netanyahu, N.S.; Even-Tzur, G.; Benediktsson, J.A. A stepwise analytical projected gradient descent search for hyperspectral unmixing and its code vectorization. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4925–4943.

- Netanyahu, N.S.; Goldshlager, N.; Jarmer, T.; Even-Tzur, G.; Shoshany, M.; Kizel, F. An iterative search in end-member fraction space for spectral unmixing. IEEE Geosci. Remote Sens. Lett. 2011, 8, 706–709.

- Iordache, M.-D.; Bioucas-Dias, J.M.; Plaza, A. Sparse unmixing of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2014–2039.

- Shi, C.; Wang, L. Incorporating spatial information in spectral unmixing: A review. Remote Sens. Environ. 2014, 149, 70–87.

- Plaza, A.; Martinez, P.; Perez, R.; Plaza, J. A quantitative and comparative analysis of endmember extraction algorithms from hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2004, 42, 650–663.

- Gao, G.; Gu, Y. Multitemporal Landsat missing data recovery based on tempo-spectral angle model. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3656–3668.

- Zhang, Q.; Yuan, Q.; Zeng, C.; Li, X.; Wei, Y. Missing data reconstruction in remote sensing image with a unified spatial–temporal–spectral deep convolutional neural network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4274–4288.

- Fischler, M.A.; Bolles, R.C. Random sample consensus. Commun. ACM 1981, 24, 381–395.

- Kizel, F.; Benediktsson, J.A. Data fusion of spectral and visible images for resolution enhancement of fraction maps through neural network and spatial statistical features. In Proceedings of the 9th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 23–26 September 2018.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

- Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary Robust invariant scalable keypoints. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.

- Kizel, F.; Shoshany, M. Spatially adaptive hyperspectral unmixing through endmembers analytical localization based on sums of anisotropic 2D Gaussians. ISPRS J. Photogramm. Remote Sens. 2018, 141, 185–207.

- Smith, G.M.; Milton, E.J. The use of the empirical line method to calibrate remotely sensed data to reflectance. Int. J. Remote Sens. 1999, 20, 2653–2662.

- Kizel, F.; Benediktsson, J.A.; Bruzzone, L.; Pedersen, G.B.M.; Vilmundardottir, O.K.; Falco, N. Simultaneous and constrained calibration of multiple hyperspectral images through a new generalized empirical line model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2047–2058.

- Svozil, D.; Kvasnicka, V.; Pospichal, J. Introduction to multi-layer feed-forward neural networks. Chemom. Intell. Lab. Syst. 1997, 39, 43–62.

- Hagan, M.T.; Menhaj, M.B. Training feedforward networks with the Marquardt algorithm. IEEE Trans. Neural Netw. 1994, 5, 989–993.

- Zhao, G.; Ahonen, T.; Matas, J.; Pietikainen, M. Rotation-invariant image and video description with local binary pattern features. IEEE Trans. Image Process. 2012, 21, 1465–1477.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Chen, Y.; Ye, X. Projection onto a simplex. arXiv 2011, arXiv:1101.6081.

- Khoshsokhan, S.; Rajabi, R.; Zayyani, H. Sparsity-constrained distributed unmixing of hyperspectral data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 1279–1288.

- Nascimento, J.M.P.; Dias, J.M.B. Vertex component analysis: A fast algorithm to unmix hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 898–910.

- Bioucas-Dias, J.M.; Figueiredo, M.A.T. Alternating direction algorithms for constrained sparse regression: Application to hyperspectral unmixing. In Proceedings of the 2nd Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing, Reykjavik, Iceland, 4–16 June 2010.

- Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

- Shahdoosti, H.R.; Ghassemian, H. Fusion of MS and PAN images preserving spectral quality. IEEE Geosci. Remote Sens. Lett. 2015, 12, 611–615.

- Peng, X. TSVR: An efficient twin support vector machine for regression. Neural Netw. 2010, 23, 365–372.

- Svetnik, V.; Liaw, A.; Tong, C.; Christopher, J.; Culberson, C.R.P.; Sheridan, R.P.; Feuston, B.P. Random forest: A classification and regression tool for compound classification and QSAR modeling. J. Chem. Inf. Comput. Sci. 2003, 43, 1947–1958.

| Experiment | Test | Data Set | HSR Data | LSR Data | Number of Detected IPs | RISFL Parameter s | RISFL Parameter n |
|---|---|---|---|---|---|---|---|
| Experiment 1 | Test 1 | 1 | Sentinel-2A, SR = 10 m, 900 × 1800 × 7 * | Landsat-8, SR = 30 m, 300 × 600 × 7 | 1696 | 5 × 5 | 6 |
| Experiment 1 | Test 2 | 2 | Venus, SR = 5 m, 800 × 1600 × 12 * | Venus, SR = 10 m, 400 × 800 × 12 | 3568 | 5 × 5 | 6 |
| Experiment 1 | Test 3 | 3 | Venus, SR = 5 m, 800 × 1600 × 12 * | Venus, SR = 10 m, 400 × 800 × 12 | 1791 | 7 × 7 | 6 |
| Experiment 1 | Test 4 | 1 | Sentinel-2A, SR = 10 m, 900 × 1800 × 7 * | Landsat-8, SR = 30 m, 300 × 600 × 7, with simulated missing-data pixels | 499 | 5 × 5 | 6 |
| Experiment 2 | Test 5 | 4 | GeoEye-1, SR = 1.84 m, 320 × 320 × 4 * | Resampled GeoEye-1, SR = 7.36 m, 80 × 80 × 4 * | 98 | 5 × 5 | 4 |
| Experiment 2 | Test 6 | 5 | Ikonos, SR = 3.28 m, 320 × 320 × 4 * | Resampled Ikonos, SR = 13.12 m, 80 × 80 × 4 * | 110 | 5 × 5 | 4 |
| Experiment 2 | Test 7 | 6 | WorldView-2, SR = 1.84 m, 320 × 320 × 7 * | Resampled WorldView-2, SR = 7.36 m, 80 × 80 × 7 * | 140 | 5 × 5 | 4 |
| Experiment 3 | Test 8 | 7 | Google Earth, SR = 1 m, 1975 × 2269 × 3 * | Sentinel-2A, SR = 10 m, 213 × 245 × 7 * | 200 ** | 5 × 5 | 4 |

| Type-1 Evaluation | PSQ-PS MAE | PSQ-PS STD | PSQ-PS RMSE | PSQ-PS Max | DFuSIAL MAE | DFuSIAL STD | DFuSIAL RMSE | DFuSIAL Max |
|---|---|---|---|---|---|---|---|---|
| Test 1 | 0.035 | 0.046 | 0.058 | 0.172 | 0.033 | 0.038 | 0.051 | 0.148 |
| Test 2 | 0.028 | 0.032 | 0.043 | 0.124 | 0.028 | 0.030 | 0.044 | 0.122 |
| Test 3 | 0.019 | 0.027 | 0.031 | 0.096 | 0.029 | 0.042 | 0.056 | 0.133 |
| Test 4 | 0.057 | 0.072 | 0.093 | 0.274 | 0.034 | 0.040 | 0.052 | 0.152 |

| Type-2 Evaluation | PSQ-PS MAE | PSQ-PS STD | PSQ-PS RMSE | PSQ-PS Max | DFuSIAL MAE | DFuSIAL STD | DFuSIAL RMSE | DFuSIAL Max |
|---|---|---|---|---|---|---|---|---|
| Test 1 | 0.024 | 0.034 | 0.043 | 0.126 | 0.022 | 0.031 | 0.043 | 0.121 |
| Test 2 | 0.024 | 0.030 | 0.038 | 0.113 | 0.024 | 0.031 | 0.039 | 0.117 |
| Test 3 | 0.015 | 0.021 | 0.026 | 0.074 | 0.020 | 0.025 | 0.029 | 0.080 |
| Test 4 | 0.060 | 0.072 | 0.094 | 0.272 | 0.034 | 0.036 | 0.051 | 0.141 |

| Type-1 Evaluation | PSQ-PS MAE | PSQ-PS STD | PSQ-PS RMSE | PSQ-PS Max | CNN-PNN+ MAE | CNN-PNN+ STD | CNN-PNN+ RMSE | CNN-PNN+ Max | DFuSIAL MAE | DFuSIAL STD | DFuSIAL RMSE | DFuSIAL Max |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Test 5 | 0.051 | 0.055 | 0.075 | 0.215 | 0.038 | 0.037 | 0.053 | 0.150 | 0.055 | 0.050 | 0.075 | 0.209 |
| Test 6 | 0.040 | 0.041 | 0.058 | 0.164 | 0.041 | 0.039 | 0.056 | 0.157 | 0.034 | 0.027 | 0.044 | 0.116 |
| Test 7 | 0.063 | 0.069 | 0.095 | 0.270 | 0.059 | 0.065 | 0.090 | 0.254 | 0.050 | 0.040 | 0.064 | 0.170 |

| Type-2 Evaluation | PSQ-PS MAE | PSQ-PS STD | PSQ-PS RMSE | PSQ-PS Max | DFuSIAL MAE | DFuSIAL STD | DFuSIAL RMSE | DFuSIAL Max |
|---|---|---|---|---|---|---|---|---|
| Test 8 | 0.078 | 0.101 | 0.128 | 0.353 | 0.043 | 0.050 | 0.065 | 0.172 |

| Test | PSQ-PS Runtime (s) | DFuSIAL Runtime (s) |
|---|---|---|
| Test 1 | 65 | 90 |
| Test 2 | 70 | 193 |
| Test 3 | 74 | 76 |
| Test 4 | 65 | 79 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kizel, F.; Benediktsson, J.A. Spatially Enhanced Spectral Unmixing Through Data Fusion of Spectral and Visible Images from Different Sensors. Remote Sens. 2020, 12, 1255. https://doi.org/10.3390/rs12081255
