1. Introduction

Olive trees are among the most ancient cultivated fruit trees. For many centuries, olive production has had an important impact on the Spanish economy. Specifically, Jaén, a province in southern Spain, is considered one of the most relevant producers of virgin olive oil in the world [1]. This province contains 550 thousand hectares of olive groves and produces around 50% (600 thousand tons per year) of the total national olive oil production and more than 20% of the world's total olive oil production. Therefore, advances that increase the final production and farming sustainability of olive trees have a high impact on the local economy.

Remote sensing techniques are effective solutions for data acquisition at different spatio-temporal resolutions [2]. Early approaches proposed methods to analyze the evolution of olive trees using satellite images and Geographic Information Systems (GIS) [3,4,5]. With the emergence of novel sensors for plant phenotyping, some studies assessed plant sustainability using heterogeneous data (spectral indices and temperature) [6,7]. More recently, Zarco et al. [8] used a hyperspectral sensor (visible and near-infrared (VNIR) model; Headwall Photonics) and a thermal camera (FLIR SC655; FLIR Systems) to study the propagation of Xylella fastidiosa in olive trees by analyzing several physiological traits over orthomosaic maps. In general, previous works include useful methods for monitoring olive trees and extracting their features. However, these methods rely on two-dimensional information organized in a multilayered plant-trait scheme. Therefore, many values from the image-based observations have to be interpolated into the same pixel of the orthomosaic map, so the overall measurements of the plant traits are only coarse estimates. The novelty of our approach lies in automatically mapping meaningful plant traits onto a 3D model of an olive orchard. Our object of study is the point cloud of every olive tree, which is enriched with the leaf reflectance response to plant stress. Drone technology contributes positively to this field by providing 3D models with a higher spatial resolution and detailed observations of plant reflectance through a more efficient approach [9,10].

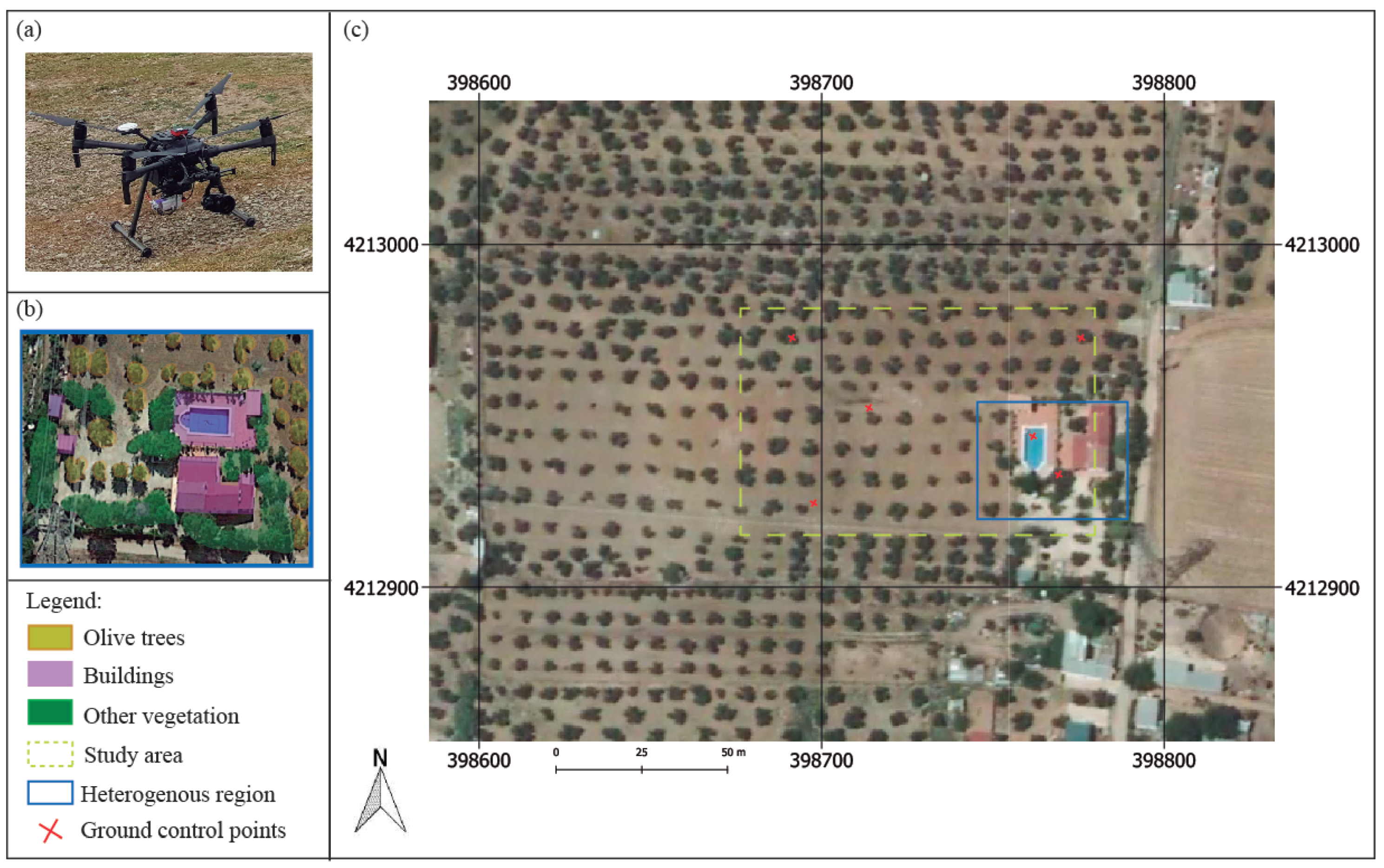

Among the available aerial remote sensing platforms, Unmanned Aerial Vehicles (UAVs) are considered cost-effective, and they can capture high-resolution imagery from multiple viewpoints [11,12]. In contrast to satellite images, UAV-based cameras provide a higher spatial resolution and a more detailed observation of individual plants [13]. These platforms are increasingly used for several monitoring tasks with various types of sensors [14]. As a result, drones provide high versatility during acquisition, since custom flights can be planned at different heights or camera angles. Regarding UAV-based applications in Precision Agriculture (PA), Vanegas et al. [15] used hyperspatial and hyperspectral data to improve pest surveillance in vineyards. Other approaches used drones either for the classification of tree species [16] or for the acquisition of thermographic and multispectral features to inspect the plant status [17,18]. In this paper, the input images are collected by two UAV-based systems (a high-resolution RGB camera and a multispectral sensor), which provide accurate data on plant shape and reflectance response. Moreover, a multi-temporal approach is considered to monitor the variation of the observed plant features.

Regarding the study of plant morphology, the branching structure of a tree can be reconstructed in 3D by photogrammetric techniques as well as by Light Detection and Ranging (LiDAR) sensors. Nevertheless, modeling 3D plant structures is still challenging because olive trees contain many overlapping branches in the crown and a high leaf density, which make the generation of plant geometry quite complicated. Some studies proposed different methods for the assessment of geometric features such as the height, area or volume of fruit trees [19,20,21] and for the detection of potential phytosanitary problems at the canopy level [22,23]. The complete plant geometry provides a realistic perception of many morphological traits that cannot be directly identified in the images. Zarco-Tejada et al. [24] measured the height of olive trees through a photogrammetric process based on UAV imagery acquired with a consumer-grade camera onboard a low-cost unmanned platform. The generated 3D scenes had sufficient resolution to quantify single-tree height with an accuracy similar to that of more complex and costly LiDAR systems. However, the resulting point cloud mainly represents the ground and the upper branches of olive trees, with a significant lack of lateral and internal structures. Our method provides a more accurate reconstruction of plant structure with a higher density of 3D points for each olive tree. Consequently, the trunk, main branches, and many leaves are modeled in a dense point cloud. The efficient management of these heavy geometric models, and their merging with spectral layers, is also discussed in this work.

Research in ecology studies the evolution of plants through a detailed plant inventory by monitoring at different canopy scales and image-based remote sensing [

25]. Unlike a direct visual disease detection on-site, UAV-based sensors are commonly used to measure plant reflectance in several narrow-bands [

26]. Leaf reflectance can be observed at different wavelengths, in which the reflected light depends on some biochemical components of the leaf internal structure (chlorophylls and carotenoids) [

27]. Multispectral sensors capture several significant bands to detect many properties for diagnosing the plant physiological status such as the drought and heat stress, nutrient content, and plant biomass. Several approaches provide advances for the vegetation assessment using different spectral traits of plants [

17,

28]. Thus, vegetation monitoring is possible through feature extraction to promote sustainable farming [

29]. Other approaches used multispectral data to propose a 2D-based analysis for disease detection [

30] and segmentation of vegetation areas [

31]. In addition, recent contributions are provided by the fusion of LiDAR and hyperspectral remotely sensed data [

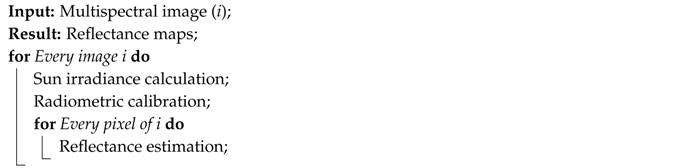

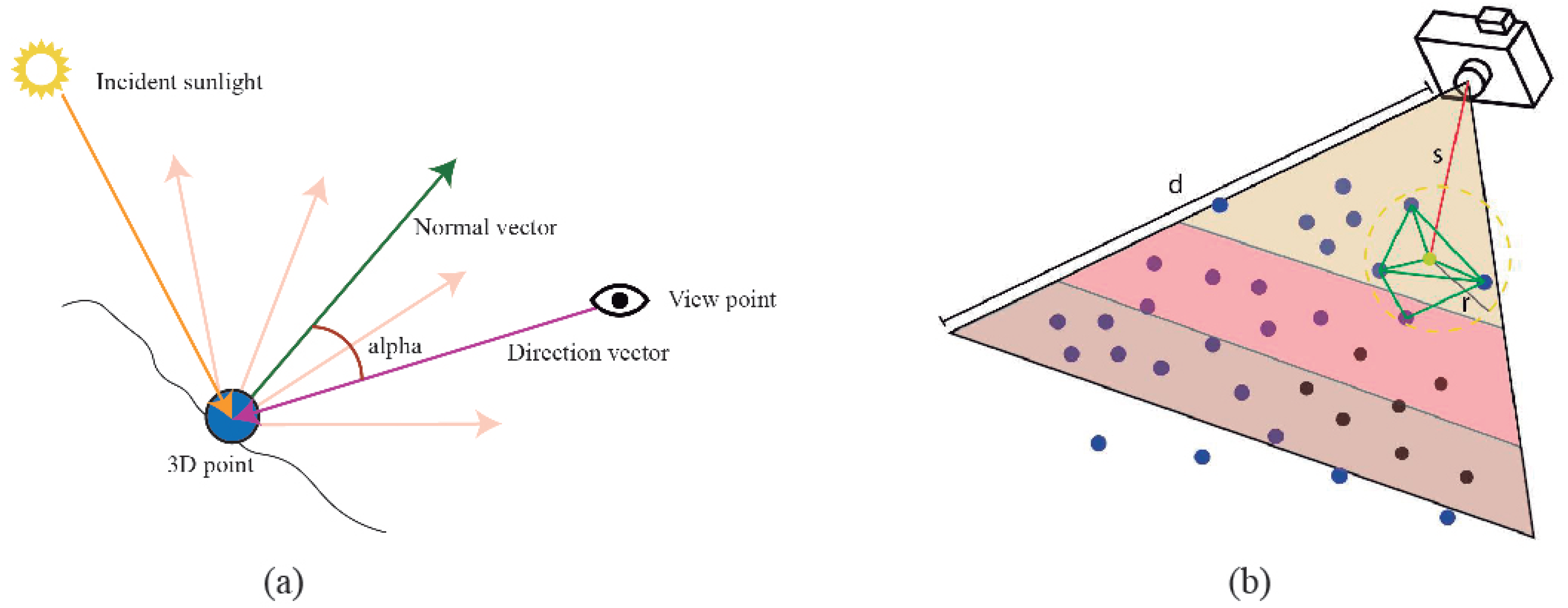

32]. Regarding the novelty of our methodology to previous works, we propose the generation of a reflectance map for each multispectral image to project all pixel values on the point cloud. The plant reflectance is observed from multiple viewpoints to detect the light interactions with top, lateral and lower branches of the tree.
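Projecting reflectance maps onto the point cloud can be illustrated with a standard pinhole camera model. The sketch below is an illustration under stated assumptions, not the authors' implementation: it assumes undistorted images, known intrinsics (focal length `f` in pixels, principal point `cx`, `cy`) and a world-to-camera pose (`R`, `t`) per image, uses a nearest-pixel lookup, and ignores occlusion. The functions `project` and `sample_reflectance` are hypothetical names.

```python
from statistics import mean

def project(point, R, t, f, cx, cy):
    """Project a 3D world point to pixel coordinates with a pinhole model.

    R is a 3x3 world-to-camera rotation (list of rows) and t a translation,
    so the camera-frame point is R @ point + t. Returns None if the point
    lies behind the camera.
    """
    xc, yc, zc = (sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))
    if zc <= 0:
        return None
    return f * xc / zc + cx, f * yc / zc + cy

def sample_reflectance(point, views):
    """Average the reflectance of every view whose image contains the point.

    Each view is a dict with keys R, t, f, cx, cy and 'image', a 2D list of
    reflectance values indexed as image[row][col]. Occlusion is ignored.
    """
    samples = []
    for v in views:
        uv = project(point, v["R"], v["t"], v["f"], v["cx"], v["cy"])
        if uv is None:
            continue
        col, row = int(round(uv[0])), int(round(uv[1]))  # nearest pixel
        img = v["image"]
        if 0 <= row < len(img) and 0 <= col < len(img[0]):
            samples.append(img[row][col])
    return mean(samples) if samples else None
```

Averaging over views is the simplest fusion rule; weighting samples by viewing angle or distance, or discarding occluded views with a depth test, would be natural refinements.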

Recently, several studies have presented methods that fuse heterogeneous image datasets to extract key feature patterns of the monitored plantation. Nevalainen et al. [33] used hyperspectral imaging for individual tree detection with UAV-based photogrammetric point clouds. Degerickx et al. [34] assessed urban tree health using airborne hyperspectral and LiDAR imagery. Other studies focus on multi-temporal plant diagnosis from very high-resolution satellite images [35] and on individual crop measurements based on the clustering of terrestrial LiDAR data [36]. Specifically, UAV-based approaches use drone sensors for the accurate reconstruction of photogrammetric point clouds [37] and even for forest inventory [38]. The resulting 3D models of the plant structure can be used to extract tree height and volume, which provide information about the morphology of olive trees. With the proposed methodology, many input feature layers can be mapped onto the 3D model of plants without any human intervention. A fully automated method is proposed to enrich the point cloud with spectral data related to plant health. Our results can be inspected in a 3D virtual environment for visual assessment by an expert.

Individual tree detection plays an increasingly significant role in automated plant monitoring. Mohan et al. [39] used UAV-based imagery for individual tree detection with a local-maxima algorithm on Canopy Height Models (CHMs). Individual Tree Crown Detection and Delineation (ITCD) algorithms have advanced through novel approaches that integrate heterogeneous data sources [40,41]. Marques et al. [42] proposed a fully automated process to monitor chestnut plantations. This method uses RGB and multispectral imagery for tree identification and counting as well as for plant feature extraction. However, it relies on image segmentation instead of 3D classification. Previous works mainly describe image-based segmentation of orthomosaics. In our research, the multispectral and geometric data are used to identify every olive tree as a single entity in the point cloud. In this way, a multi-temporal inventory is developed to study the evolution of morphological and spectral features of olive trees.
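The local-maxima detection on a CHM mentioned for Mohan et al. [39] can be sketched as follows. This is a simplified illustration, not their published algorithm: the CHM is assumed to be a 2D grid of heights in meters, and a cell counts as a tree top if it is the highest value in a square window of radius `r` cells and is at least `min_height`. Both parameters and their defaults are hypothetical.

```python
def detect_tree_tops(chm, r=1, min_height=2.0):
    """Return (row, col) cells that are local maxima of a canopy height model.

    chm: 2D list of canopy heights in meters.
    r: half-size of the square search window in cells (hypothetical default).
    min_height: cells lower than this (ground, shrubs) are ignored.
    """
    rows, cols = len(chm), len(chm[0])
    tops = []
    for i in range(rows):
        for j in range(cols):
            h = chm[i][j]
            if h < min_height:
                continue
            window = [
                chm[a][b]
                for a in range(max(0, i - r), min(rows, i + r + 1))
                for b in range(max(0, j - r), min(cols, j + r + 1))
            ]
            # Plateaus are not resolved: tied maxima are all reported.
            if h >= max(window):
                tops.append((i, j))
    return tops
```

In practice the window radius is often tied to the expected crown size, since a window that is too small splits one crown into several tops and one that is too large merges neighbouring trees.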

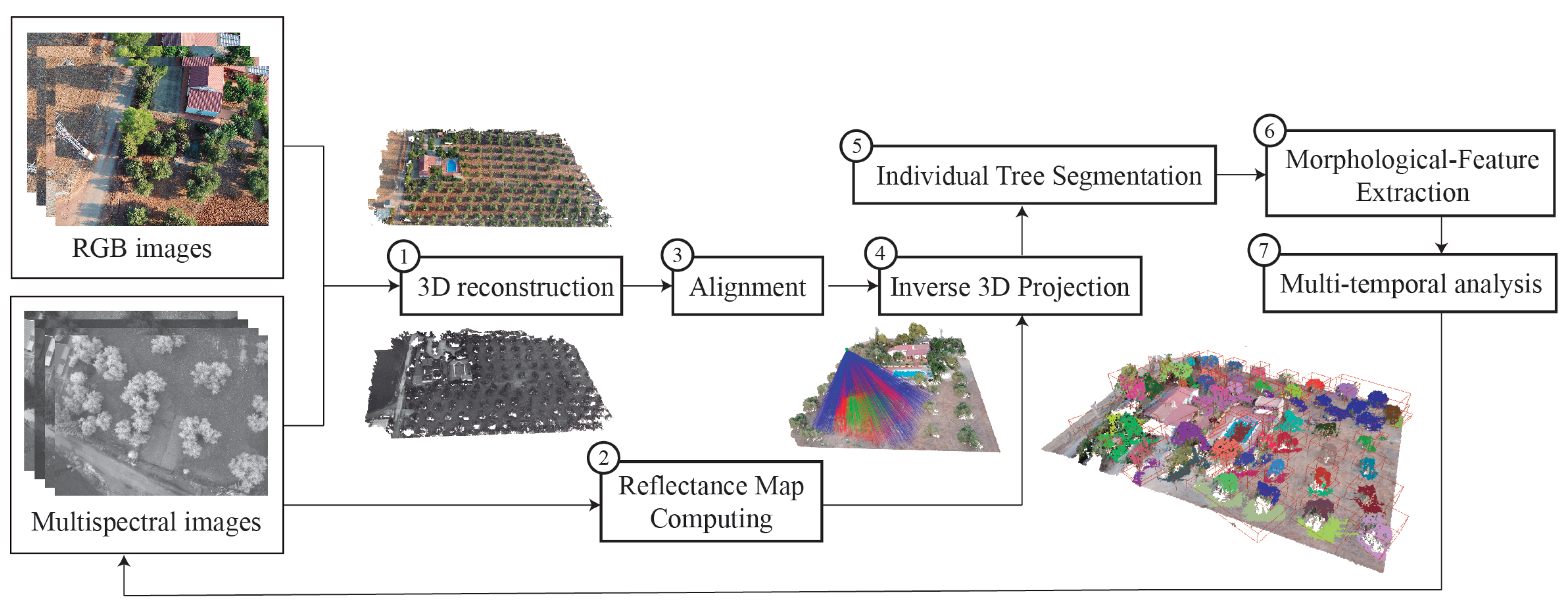

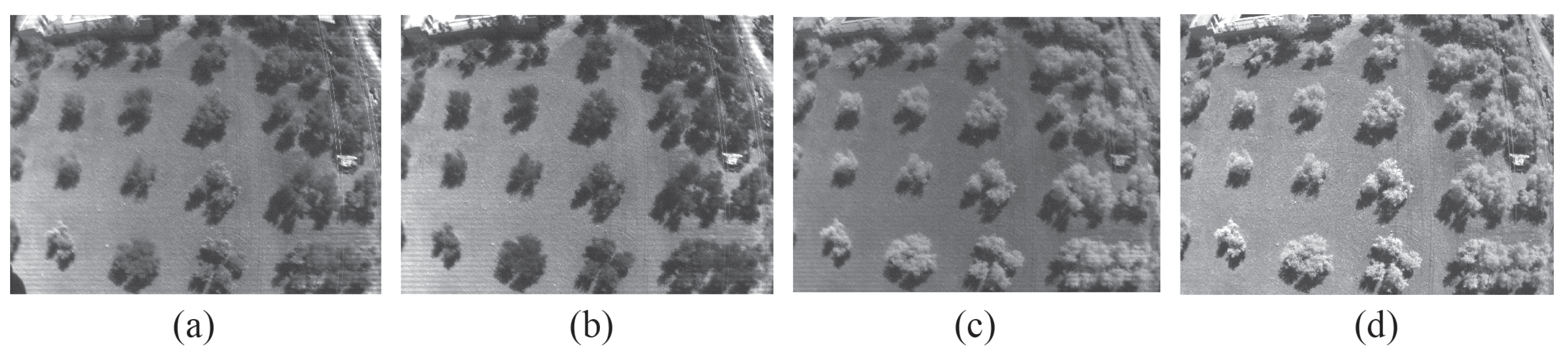

Our study focuses on the fusion of multispectral imagery and photogrammetric 3D point clouds, and on the multi-temporal analysis of individual olive trees. UAV image sets were acquired using a high-resolution camera and a multispectral sensor. The mapping of image-based spectral information onto the 3D model of the olive plantation is the core of this paper. The use of multispectral monitoring for the multi-temporal analysis of plant development is also addressed. The presented methodology provides a novel framework for the fully automated fusion of UAV-based heterogeneous data over highly detailed 3D tree models. This paper is organized as follows:

Section 2 describes the materials and methods used in this research;

Section 3 shows the results of the proposed methodology;

Section 4 analyzes the results and the novelty of our approach;

Section 5 presents the main conclusions.

4. Discussion

The results obtained with our methodology demonstrate its utility for extracting meaningful traits from the plant structure of olive trees. In this farming sector, the use of novel remote sensing techniques at each cultivation stage is still not sufficient for monitoring plant status and for the early detection of diseases that have a negative effect on olive production. Therefore, emerging platforms such as UAVs, together with efficient processing methods, are becoming increasingly important to gather and combine heterogeneous data that provide meaningful features of olive tree health [9,10]. This paper proposes a novel approach focused on fusing geometric and spectral traits of an olive orchard. The core of our method is the extraction of morphological and multispectral features from comprehensive 3D models, as well as the multi-temporal monitoring of these features to assess the development of olive trees.

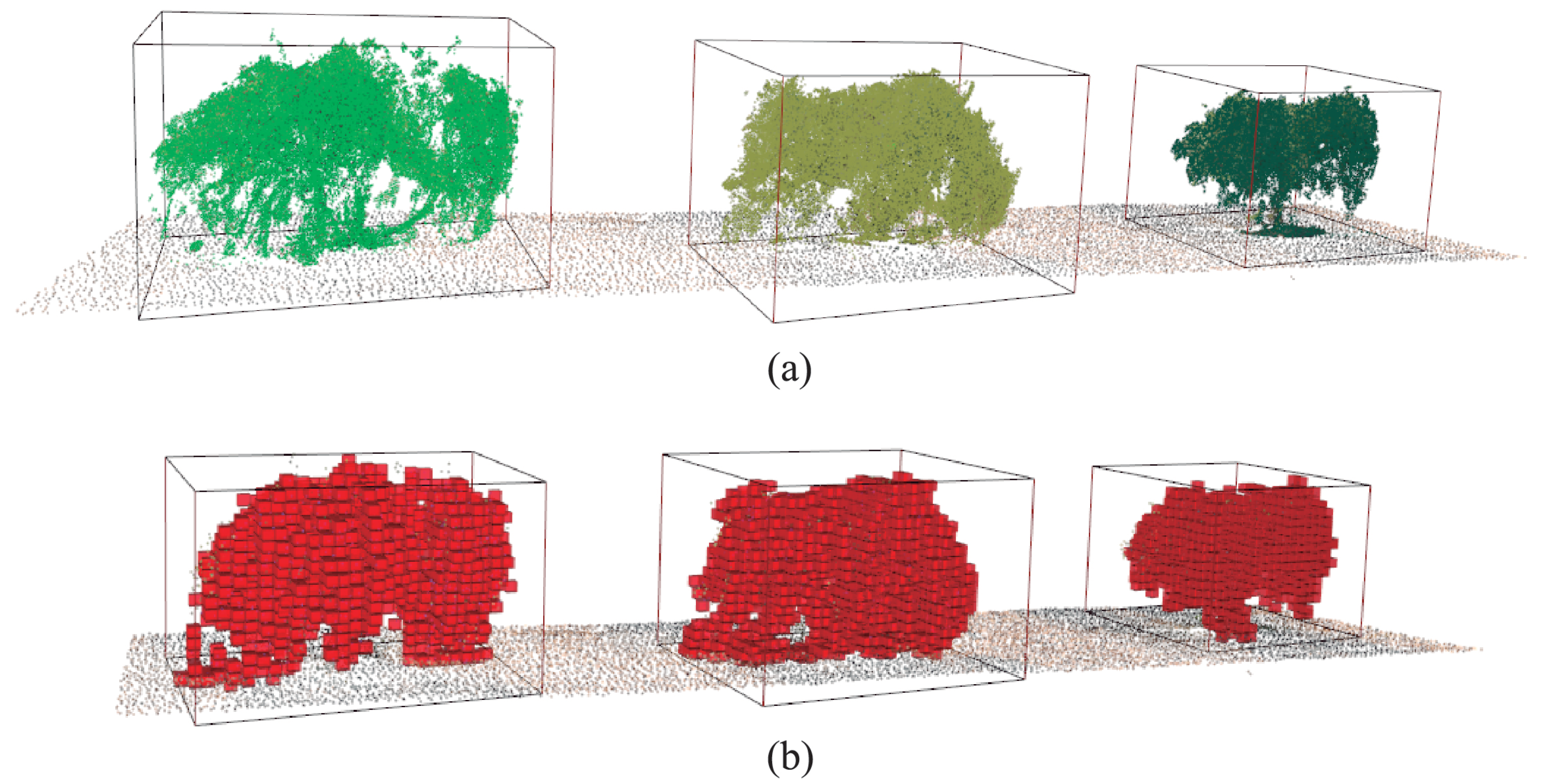

Remote sensing methods for estimating plant height and volume, using either LiDAR or photogrammetry, are increasingly important for PA. In this study, image-based sensors are used to observe the structure and reflectance of each olive tree. Consequently, olive trees were characterized by their spectral reflectance and by morphological properties such as height and volume. Yan et al. [58] proposed the use of a concave hull operation for the extraction of structural plant parameters. In contrast, our solution is based on a voxel-based decomposition of the plant space using a 3D octree, which is widely used to partition three-dimensional space by recursive subdivision [56,59]. An advantage of our method is that it is not affected by the irregular shape and dispersed branching of the tree canopy, for which the concave hull is not the most adequate solution. Moreover, triangulating the concave hull requires correcting non-manifold geometry in the photogrammetric model and generating a 3D mesh, which negatively affects the final performance.
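The voxel-based volume estimate can be illustrated with a minimal octree sketch. It is an assumption, not the paper's exact implementation: the points of one tree are enclosed in a cube of side `size`, only non-empty octants are subdivided down to `max_depth`, and the volume is the number of occupied leaf voxels times the leaf volume. Height is taken here as the vertical extent of the tree's points, which assumes the lowest point lies at ground level. Function names are hypothetical.

```python
def octree_volume(points, origin, size, max_depth):
    """Estimate occupied volume as (occupied leaf voxels) x (leaf volume).

    points: iterable of (x, y, z) inside the cube [origin, origin + size]^3.
    Only non-empty octants are subdivided, so empty space costs nothing.
    """
    def recurse(pts, ox, oy, oz, s, depth):
        if not pts:
            return 0.0
        if depth == max_depth:
            return s ** 3  # one occupied leaf voxel
        half = s / 2
        children = [[] for _ in range(8)]
        for x, y, z in pts:
            # Octant index with one bit per axis: x -> 1, y -> 2, z -> 4.
            i = int(x >= ox + half) | int(y >= oy + half) << 1 | int(z >= oz + half) << 2
            children[i].append((x, y, z))
        return sum(
            recurse(child, ox + half * (i & 1), oy + half * (i >> 1 & 1),
                    oz + half * (i >> 2 & 1), half, depth + 1)
            for i, child in enumerate(children)
        )

    return recurse(list(points), *origin, size, 0)

def tree_height(points):
    """Vertical extent of a tree's points (assumes the lowest point is ground)."""
    zs = [p[2] for p in points]
    return max(zs) - min(zs)
```

The leaf edge length is `size / 2**max_depth`; for example, an 8 m cube at depth 5 gives 0.25 m voxels. Finer voxels follow gaps between branches more closely but require a denser point cloud to avoid underestimating the volume.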

One of the goals of our approach is to automate every step of the proposed methodology. Unlike other approaches [45] based on field data acquisition and GCP measurement in every flight campaign, the morphological features were validated by measuring the dimensions of fixed physical objects in the olive plantation. This is a more efficient way to obtain validation data for further flight campaigns without repeating time-consuming field data acquisition. Regarding the results of this study, the creation of a spatio-temporal inventory of individual olive trees from 3D plant structures, spectral reflectance and VIs, as well as the vegetation evolution, are discussed in the following sections.
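Validation against fixed physical objects reduces to comparing dimensions measured on the point cloud with known reference values. A minimal sketch of the root-mean-square error (RMSE) used for such comparisons follows; the measured and reference values in the usage lines are made up for illustration and are not measurements from this study.

```python
from math import sqrt

def rmse(measured, reference):
    """Root-mean-square error between paired measurements (same units)."""
    if len(measured) != len(reference) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(m - r) ** 2 for m, r in zip(measured, reference)]
    return sqrt(sum(squared) / len(squared))

# Hypothetical example: dimensions (m) of fixed objects measured on the
# point cloud versus tape-measured reference values.
measured = [1.02, 2.48, 0.99]
reference = [1.00, 2.50, 1.00]
print(round(rmse(measured, reference), 4))
```

Because errors are squared before averaging, a single badly reconstructed object weighs more than several small deviations, which makes RMSE a conservative summary of geometric accuracy.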

4.1. Inventory of Individual Olive Trees

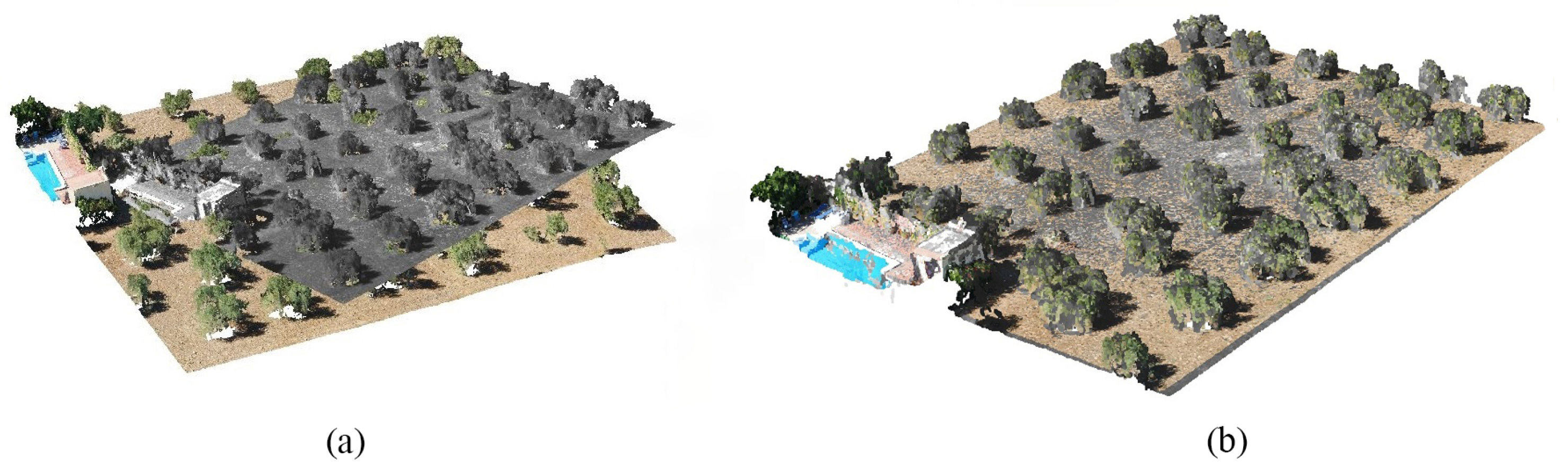

One of the main contributions of our approach is the automatic fusion of the photogrammetric point cloud of olive trees with multispectral images. The resulting geometry of olive trees has been modeled with a high spatial density (about 20 thousand points per cubic meter on average). The management of the highly detailed model of the olive plantation has been accelerated by a spatial data structure, the k-d tree. Therefore, the point cloud can be visualized interactively in real time on the canvas of the proposed application. This is an important novelty of our method in contrast to other approaches based on two-dimensional, fixed observations [6,7,8].
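The k-d tree supports fast spatial queries on large point clouds. The following minimal sketch is a generic 3D k-d tree with nearest-neighbor search, not the application's actual code: points are split recursively at the median along alternating axes, and a query skips any subtree whose splitting plane is farther away than the best match found so far.

```python
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, float, float]

@dataclass
class Node:
    point: Point
    axis: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None

def build(points: list[Point], depth: int = 0) -> Optional[Node]:
    """Build a k-d tree by splitting at the median along x, y, z in turn."""
    if not points:
        return None
    axis = depth % 3
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2
    return Node(pts[mid], axis, build(pts[:mid], depth + 1), build(pts[mid + 1:], depth + 1))

def sq_dist(a: Point, b: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(node: Optional[Node], q: Point, best: Optional[Point] = None) -> Optional[Point]:
    """Return the stored point closest to q (Euclidean distance)."""
    if node is None:
        return best
    if best is None or sq_dist(q, node.point) < sq_dist(q, best):
        best = node.point
    diff = q[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, q, best)
    # Visit the far side only if the splitting plane is closer than the best match.
    if diff ** 2 < sq_dist(q, best):
        best = nearest(far, q, best)
    return best
```

Building costs O(n log² n) with this sort-per-level approach, and a nearest-neighbor query visits only a small fraction of the nodes for well-distributed points, which is what makes interactive picking and neighborhood lookups feasible on dense clouds.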

In this study, the high-resolution RGB point cloud is enriched with the reflectance response at various multispectral bands. The RMSE of the data alignment is lower than 3 cm, which is considered valid because the mean GSD of the multispectral images is greater than 3.38 cm; that is, the misalignment is smaller than one multispectral pixel. As a result, every 3D point contains reflectance values at visible bands (green and red), at the red-edge (REG) and near-infrared (NIR) bands, and several vegetation indices (NDVI, RVI, GRVI, and NDRE). With this characterization of the point cloud, different semantic layers can be analyzed using the proposed framework. This is a novel advance in PA, since the spectral response of target crops can be reviewed from any viewpoint in a 3D scenario. Previous works that use VIs for plant assessment [60] can benefit from our approach by studying the spatial distribution of reflectance on the point cloud.
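The vegetation indices are per-point combinations of band reflectances. A minimal sketch of NDVI and NDRE using their standard normalized-difference definitions follows. We assume the framework uses these textbook formulas, and we omit RVI and GRVI because their definitions vary across the literature. The point layout `(x, y, z, green, red, reg, nir)` and the reflectance values are illustrative assumptions.

```python
def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or 0.0 when both reflectances are zero."""
    total = a + b
    return (a - b) / total if total else 0.0

def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    return normalized_difference(nir, red)

def ndre(nir: float, red_edge: float) -> float:
    """Normalized Difference Red Edge index: (NIR - RE) / (NIR + RE)."""
    return normalized_difference(nir, red_edge)

# Illustrative points (x, y, z, green, red, reg, nir): canopy, then bare soil.
points = [
    (0.0, 0.0, 2.1, 0.06, 0.05, 0.15, 0.45),
    (3.0, 1.0, 0.0, 0.10, 0.12, 0.15, 0.18),
]
# Append NDVI and NDRE to each point as two extra attributes.
enriched = [p + (ndvi(p[6], p[4]), ndre(p[6], p[5])) for p in points]
```

Storing the indices as extra per-point attributes keeps them aligned with the geometry, so any index can be color-mapped onto the 3D model in the same way as a raw band.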

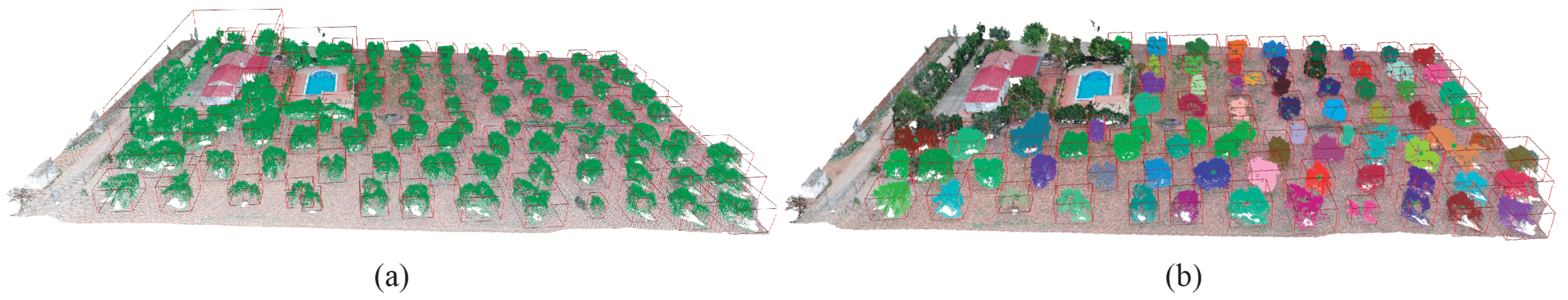

Moreover, characterizing the point cloud with multispectral features is meaningful for recognizing individual olive trees. With our method, all 72 olive trees are correctly identified. Therefore, the overall reflectance, height and volume can be obtained for each tree. In contrast to previous work based on satellite or airborne images for monitoring olive trees [3], we can characterize an olive plantation with a higher spatial resolution of plant structures and their multispectral responses from a 3D perspective. This work focuses on automating every method to reduce time-consuming tasks in heterogeneous data fusion and field data acquisition. Consequently, an efficient multi-temporal approach is provided to assess the evolution of plant health by visualizing the enriched 3D models and analyzing data variability.
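Separating individual trees becomes simpler once points carry a spectral label. The sketch below is a deliberately simple stand-in, not the segmentation used in this work: it keeps points whose NDVI exceeds a hypothetical threshold `ndvi_threshold`, groups them into connected clusters on a 2D grid with a hypothetical cell size `cell`, and then reports per-tree height and mean NDVI. Points are assumed to be `(x, y, z, ndvi)` tuples.

```python
from collections import defaultdict

def segment_trees(points, ndvi_threshold=0.4, cell=0.5):
    """Group vegetation points into trees by 8-connectivity on an XY grid.

    points: iterable of (x, y, z, ndvi). Returns a list of point lists.
    """
    cells = defaultdict(list)
    for p in points:
        if p[3] > ndvi_threshold:  # keep vegetation, drop soil
            cells[(int(p[0] // cell), int(p[1] // cell))].append(p)
    trees, seen = [], set()
    for start in cells:
        if start in seen:
            continue
        seen.add(start)
        stack, cluster = [start], []
        while stack:  # flood fill over occupied neighbouring cells
            cx, cy = stack.pop()
            cluster.extend(cells[(cx, cy)])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    nb = (cx + dx, cy + dy)
                    if nb in cells and nb not in seen:
                        seen.add(nb)
                        stack.append(nb)
        trees.append(cluster)
    return trees

def tree_summary(tree):
    """Height (vertical extent) and mean NDVI of one segmented tree."""
    zs = [p[2] for p in tree]
    return max(zs) - min(zs), sum(p[3] for p in tree) / len(tree)
```

Grid connectivity works when crowns are well separated, as in a regularly planted orchard; touching crowns would need an additional split, for example seeded by tree tops detected on a CHM.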

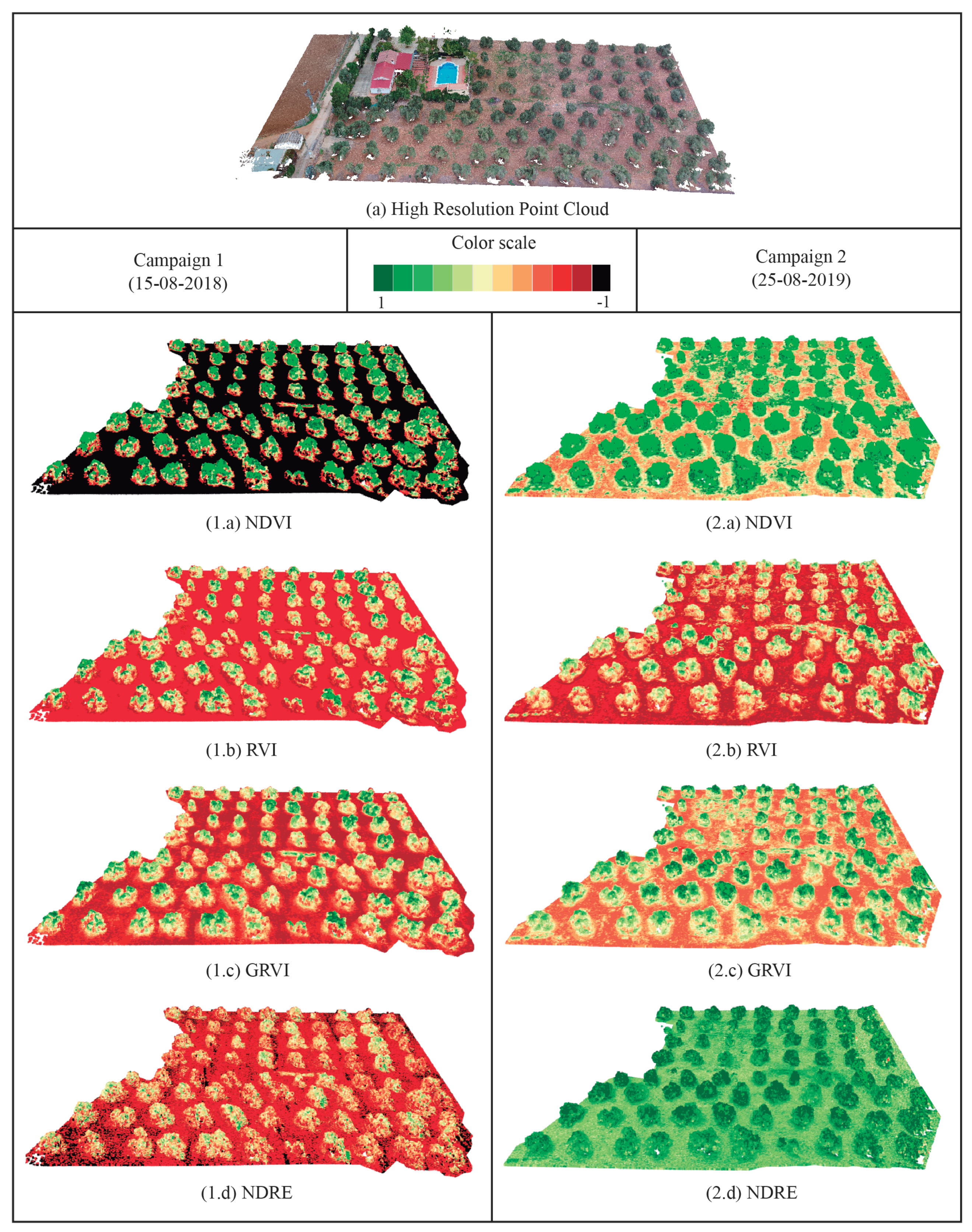

4.2. Vegetation Evolution

The use of several sources of remotely sensed data and field data, which may differ in spatial resolution, spatio-temporal coverage and sensor origin, is becoming increasingly popular due to the recent rapid development of remote sensing techniques [33,34]. In this work, we address the evolution of olive trees by considering their morphological and multispectral traits. In both flight campaigns, the olive trees were at the same point of their vegetative cycle, the final stage of olive ripening. From the qualitative and quantitative analysis of plant development shown in Figure 13 and Figure 14, some meaningful conclusions can be drawn.

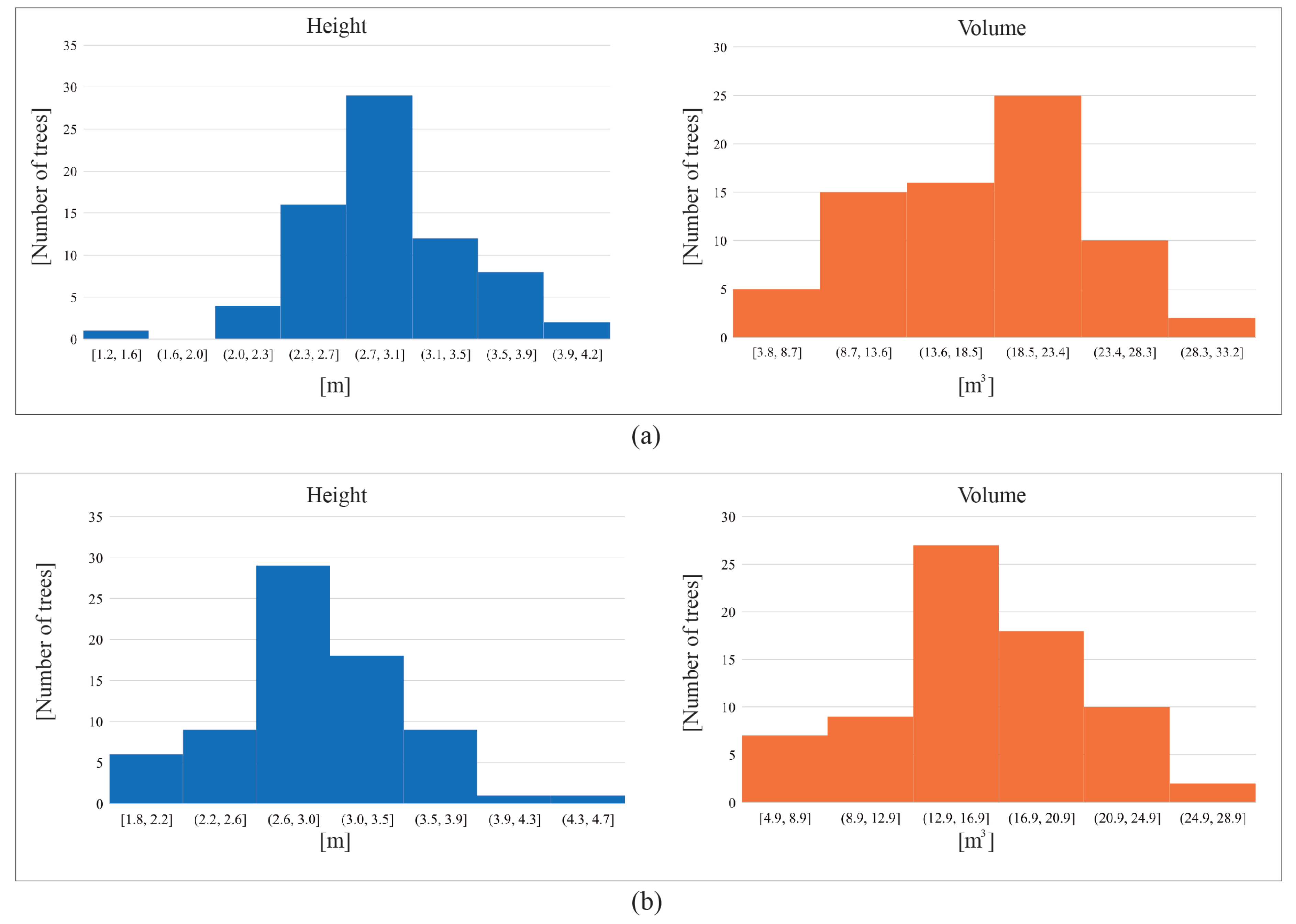

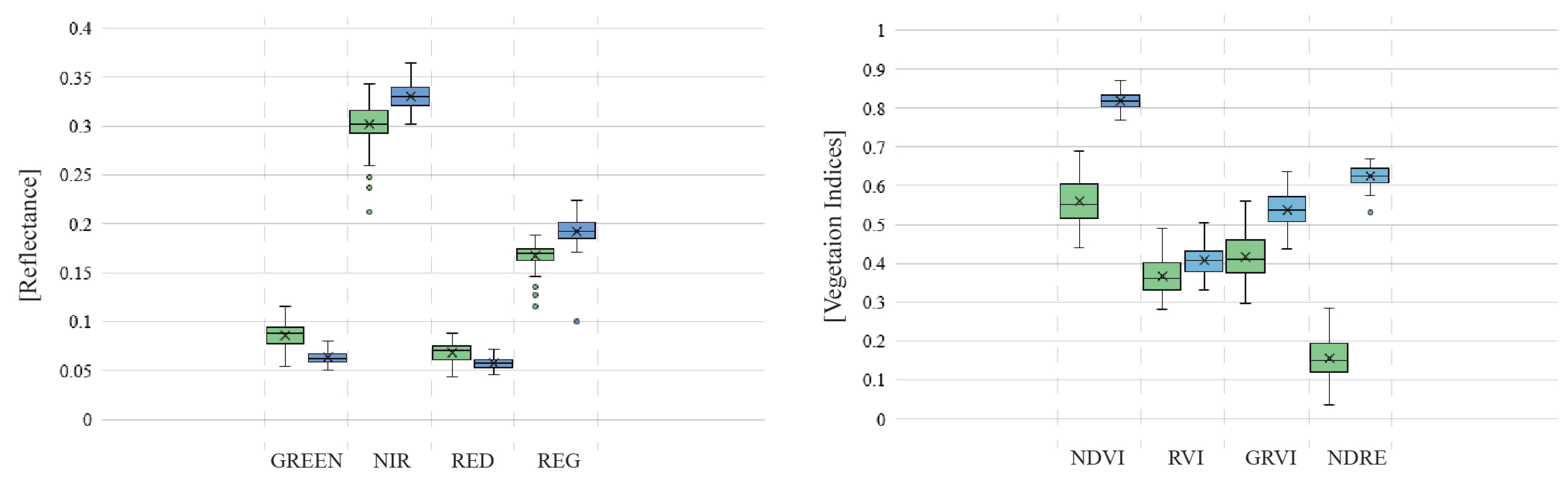

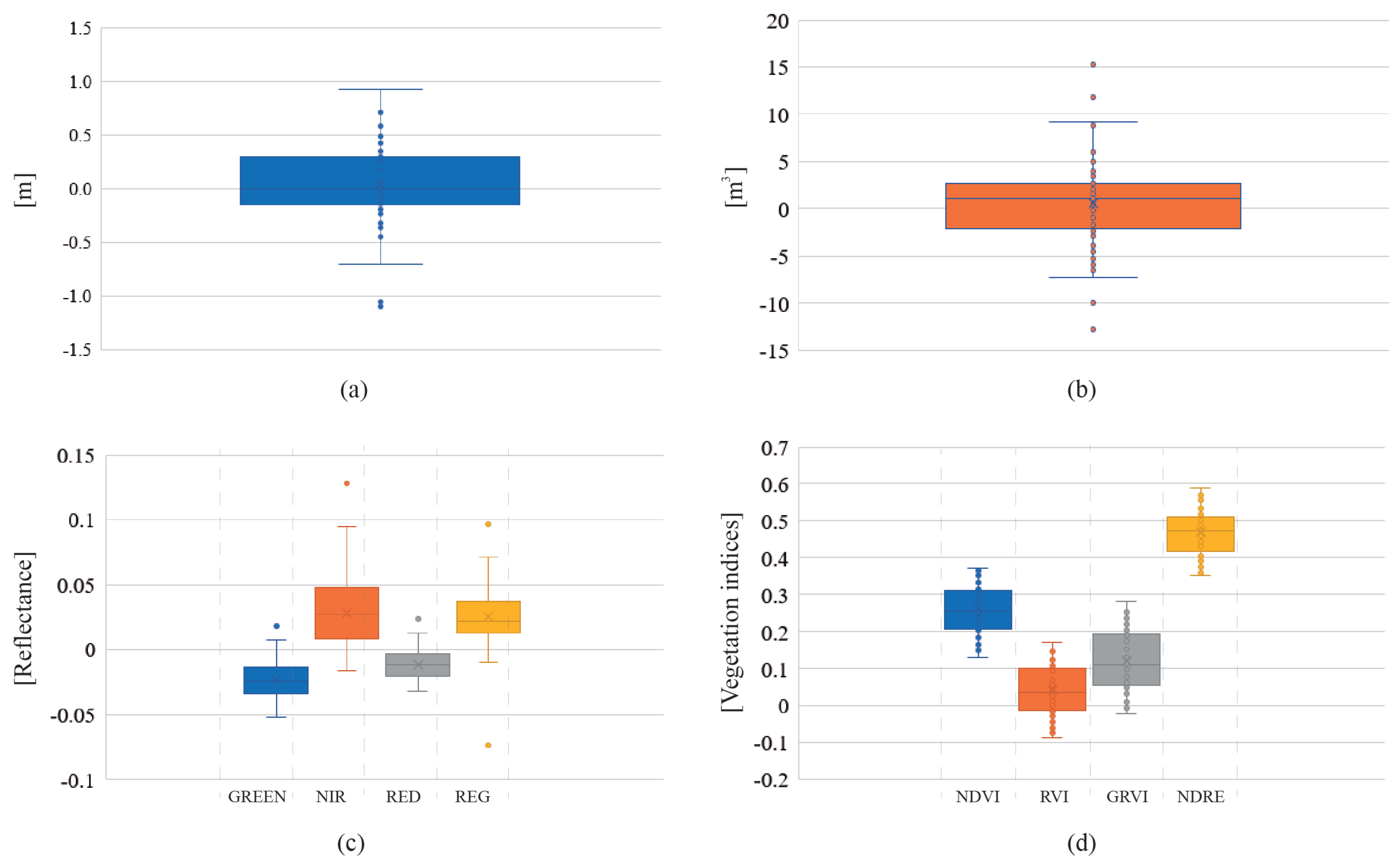

In the first campaign, olive trees have a mean volume of 16 m³ and a mean height of 2.9 m. The reflectance in the visible range is quite low: 8% in green and 6% in red. By contrast, the NIR and REG wavelengths show higher reflectance, close to 30% and 17%, respectively. This ratio of values is largely determined by the leaf pigments of the plant: chlorophyll absorbs most visible light, especially red light, whereas near-infrared light is hardly absorbed by chlorophyll and is therefore reflected to a much larger extent. Among the studied VIs, which combine these multispectral bands, the mean NDVI of olive trees is low (0.55). This suggests that plants might present nutritional stress, low vigor, or mid-low canopy cover. In the enriched 3D model, there is a high contrast between the soil and the trees. Focusing on olive trees, healthier regions of the plants are easily detected by their green color, in contrast to stressed parts, which appear browner. In the middle of the surveyed area, a high number of olive trees were detected with lower VI values. These key observations were taken into account in the next monitoring campaign.

In the second campaign, olive trees show a positive trend in comparison with the first one. The mean tree volume is 17.28 m³, which corresponds to a volume growth of 3%. No significant differences in tree height have been detected. In the studied multispectral bands, the visible reflectance is 5% in green (down from 8%) and 6% in red. The NIR and REG values have increased to 35% and 20%, respectively. These changes are interpreted as a significant improvement in plant health, since the VIs indicate better plant sustainability: the NDVI increases to 0.81 and the mean NDRE is 0.59. These differences are caused by the higher reflectance in the infrared domain. Moreover, GRVI and RVI show a slight increase, to 0.35 and 0.47, respectively. The visual analysis in our 3D scenario confirms the adequate evolution of olive trees in the studied plantation. A large difference is detected in the NDRE model, where both soil and plants show higher values. This is due to the emergence of low vegetation around the olive trees in this time frame. Finally, in the area where the lowest reflectance values were obtained in the first campaign, only three olive trees maintain a similar behaviour. In these cases, the NIR values are lower than those of the rest, close to 20%. Nevertheless, the surrounding olive trees have increased their NDVI by 10%. This approach enables a more complete characterization of olive tree parameters, as well as a fully automatic process to analyze plant evolution through visual and statistical analysis of each tree's profile.
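The per-tree comparison between campaigns can be expressed as the relative change of each tree's mean feature value. A minimal sketch follows; the dictionaries keyed by a tree identifier and the `min_change` threshold for flagging trees that did not improve are hypothetical choices for illustration, and the values in the usage lines are made up.

```python
def relative_change(before: float, after: float) -> float:
    """Relative change (after - before) / before, e.g. 0.10 for +10%."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    return (after - before) / before

def compare_campaigns(first: dict, second: dict, min_change: float = 0.05):
    """Per-tree relative change of a feature (e.g. mean NDVI) between two
    campaigns, plus the trees whose change stays below min_change."""
    common = sorted(first.keys() & second.keys())
    changes = {tid: relative_change(first[tid], second[tid]) for tid in common}
    stagnant = [tid for tid, c in changes.items() if c < min_change]
    return changes, stagnant

# Hypothetical mean NDVI per tree identifier in each campaign.
changes, stagnant = compare_campaigns({1: 0.50, 2: 0.60, 3: 0.40},
                                      {1: 0.55, 2: 0.61, 3: 0.60})
```

Only trees present in both campaigns are compared, so a tree missed by segmentation in one flight does not produce a spurious change value.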

5. Conclusions

The potential of novel sensors in precision farming creates a great opportunity to develop efficient methods and techniques for UAV data processing. The huge quantity of data that this technology provides non-intrusively, together with the high number of sensors available to monitor vegetation status, requires advanced applications for fusing heterogeneous information.

The proposed tool is highly useful in PA for processing heterogeneous and multi-temporal data series. Our approach advances morphological and spectral feature extraction, 3D segmentation of vegetation, and the fusion of plant geometry and multispectral traits to characterize the complete plant structure. In addition, a visual analysis is carried out using the interactive canvas of our framework. The intuitive interaction with the point cloud and the fluent visualization of every 3D model make it a suitable environment for monitoring and discovering relevant variables in the target crop. Likewise, data variability is studied statistically to detect meaningful changes in morphological and multispectral features between two flight campaigns. Our method maps many multispectral images onto the plant geometry, thereby obtaining spectral information about every region in the 3D plant space. One of our goals was an efficient and fully automated process for fusing multispectral data with the plant geometry. Thus, experts can directly inspect plant health on a detailed 3D model enriched with multispectral traits of the tree structure.

Although this solution focuses on olive trees, it can also be applied to other fruit trees. In this study, the surveyed area contains 72 olive trees. High-resolution RGB and multispectral images were captured by UAV-based cameras to reconstruct a 3D model of the olive plantation and to measure the reflected light in specific spectral bands. The resulting geometry was highly detailed, with accurate modeling of the branches, the trunk, and the tree crown. The 3D model of each olive tree was characterized by its height and volume, as well as by the mean spectral reflectance and VIs computed by combining narrow bands. To compare these features across time frames, the position, orientation and scale of the RGB point clouds were corrected using GCPs. As a result, an enriched point cloud was obtained with a complete 3D model and accurate reflectance measurements of the plant structure. From the variability of the studied features, several conclusions were drawn. In general, the target olive plantation shows significant signs of a positive trend. According to our analysis, the mean volume of each olive tree slightly increases in the second campaign. The NDVI and the infrared reflectance increase significantly in the plant space. Most olive trees present more greenery in the second campaign, and adequate plant growth is observed. This trend is visible when reviewing the enriched models of the olive tree structure in the 3D environment.
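Correcting position, orientation and scale with GCPs amounts to estimating a 3D similarity transform between corresponding points. The sketch below uses the closed-form least-squares solution of Umeyama (1991) with NumPy. It assumes the GCP coordinates have already been matched between the two point clouds, and it is not necessarily the procedure used by the photogrammetric software in this study; the function names are hypothetical.

```python
import numpy as np

def similarity_transform(src, dst):
    """Estimate scale s, rotation R and translation t minimizing
    sum ||dst_i - (s * R @ src_i + t)||^2 (Umeyama's method).

    src, dst: (N, 3) arrays of corresponding GCP coordinates, N >= 3.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    a, b = src - mu_src, dst - mu_dst
    cov = b.T @ a / len(src)                      # cross-covariance matrix
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:  # avoid reflections
        S[2, 2] = -1.0
    R = U @ S @ Vt
    var_src = (a ** 2).sum(axis=1).mean()         # mean squared distance to centroid
    s = np.trace(np.diag(D) @ S) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t

def apply_transform(points, s, R, t):
    """Map (N, 3) points into the reference frame: s * R @ p + t."""
    return s * np.asarray(points, dtype=float) @ R.T + t
```

Once estimated from the GCPs, the same transform is applied to every point of the second campaign's cloud, so both campaigns share one coordinate frame before per-tree features are compared.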

Several open problems remain for further research. Building on the gathered morphological and spectral features of each tree, we will focus on disease detection. Moreover, we plan to apply the proposed method to soil monitoring. Finally, applying this framework to plant species classification is an interesting topic for future work.