Joint Spatial-spectral Resolution Enhancement of Multispectral Images with Spectral Matrix Factorization and Spatial Sparsity Constraints

Abstract

1. Introduction

1.1. Spatial Resolution Improvement of HSI

1.2. Spectral Resolution Enhancement Techniques

- Improves spatial and spectral resolution simultaneously by unifying the spatial and spectral enhancement steps in one framework, in which high-resolution spectral features and spatial information act as mutual constraints in an alternating solution process. To the best of our knowledge, this is the first attempt to do so.

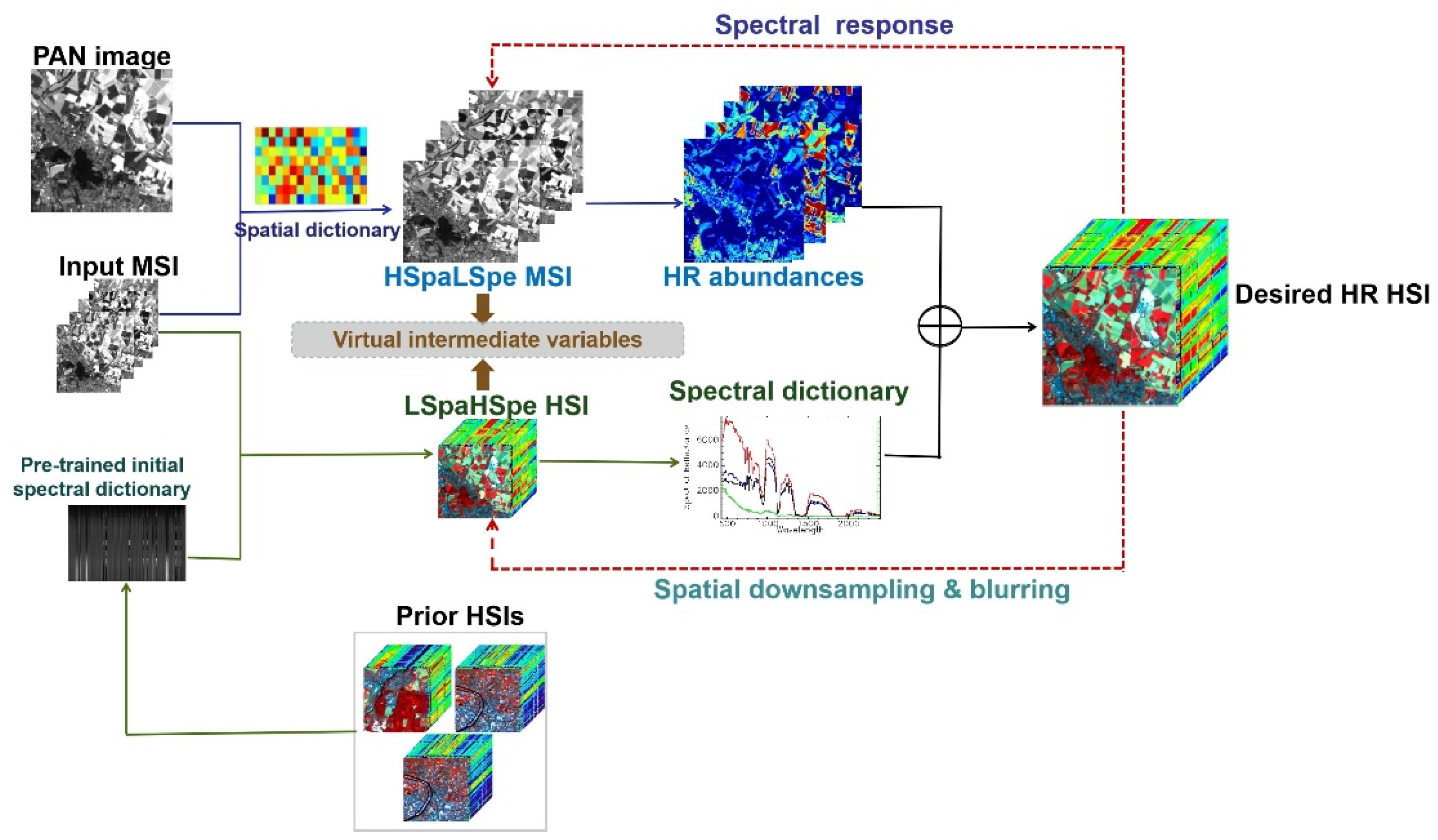

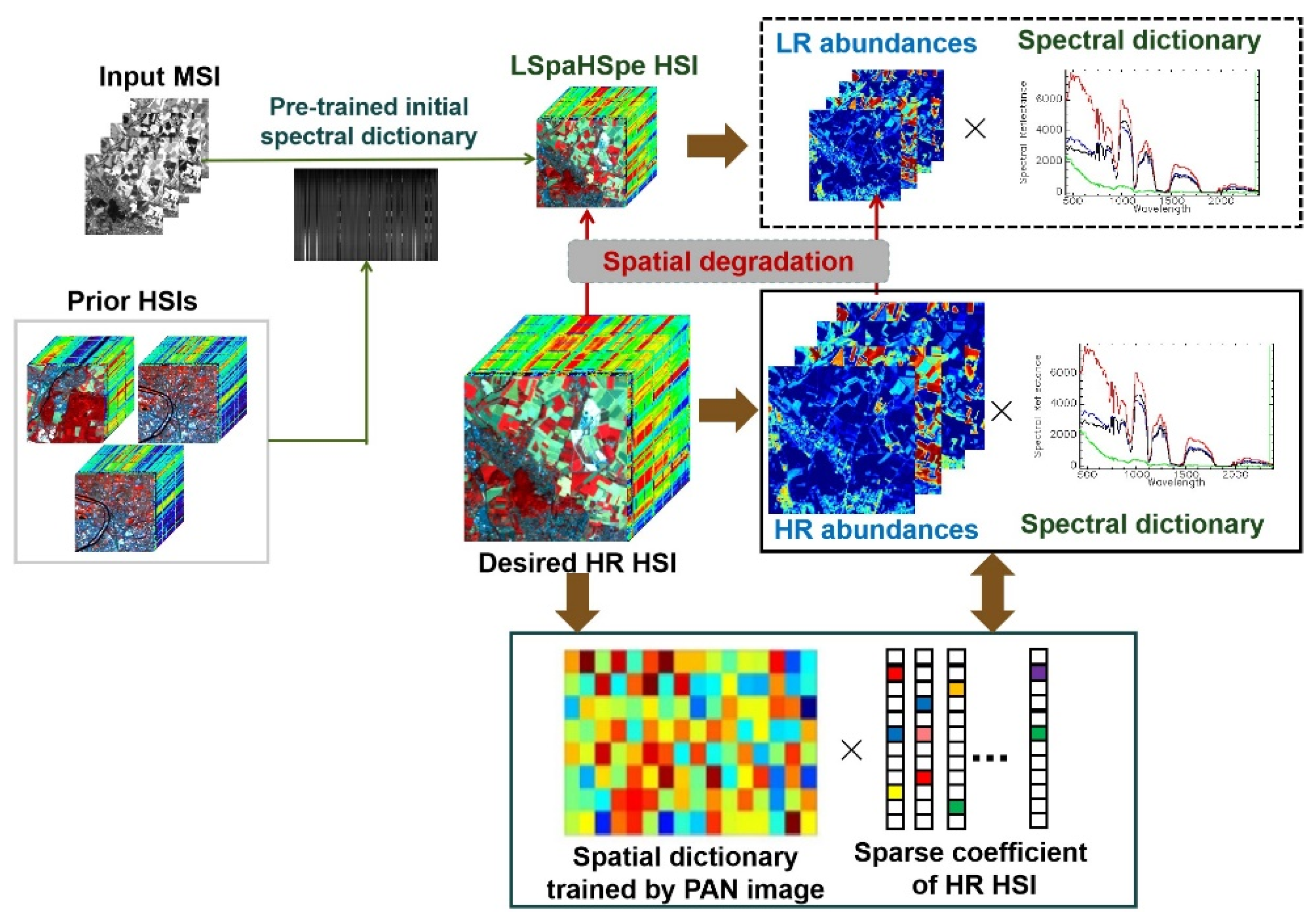

- Designs spectral and spatial observation models for the joint spatial-spectral enhancement problem. The virtual intermediate variables LSpaHSpe (low spatial, high spectral resolution) and HSpaLSpe (high spatial, low spectral resolution) are introduced in the spectral and spatial observation models to relate the input LR MSI to the desired HR HSI. The high-spectral-resolution dictionary and the corresponding high-spatial-resolution abundances are solved alternately to recover an HSI with both high spatial and high spectral resolution. LSpaHSpe and HSpaLSpe are only virtual intermediate variables; the proposed method never has to solve for them explicitly.

- Applies the proposed joint spatial-spectral enhancement algorithm to real remote sensing data, namely ALI/Hyperion (ALI: 30 m, 9 bands; Hyperion: 30 m, 242 bands). For a target scene, the ALI PAN image (10 m) provides high-spatial-resolution features, while prior Hyperion HSIs (30 m, 242 bands) of different scenes are used to train a spectral dictionary with high-spectral-resolution characteristics. In this way, Hyperion data with high spatial and spectral resolution (10 m, 242 bands) are obtained for the target scene from the input LR ALI data (30 m, 9 bands).

2. Spatial Observation Model and Spectral Observation Model

2.1. Spatial Observation Model

2.2. Spectral Observation Model

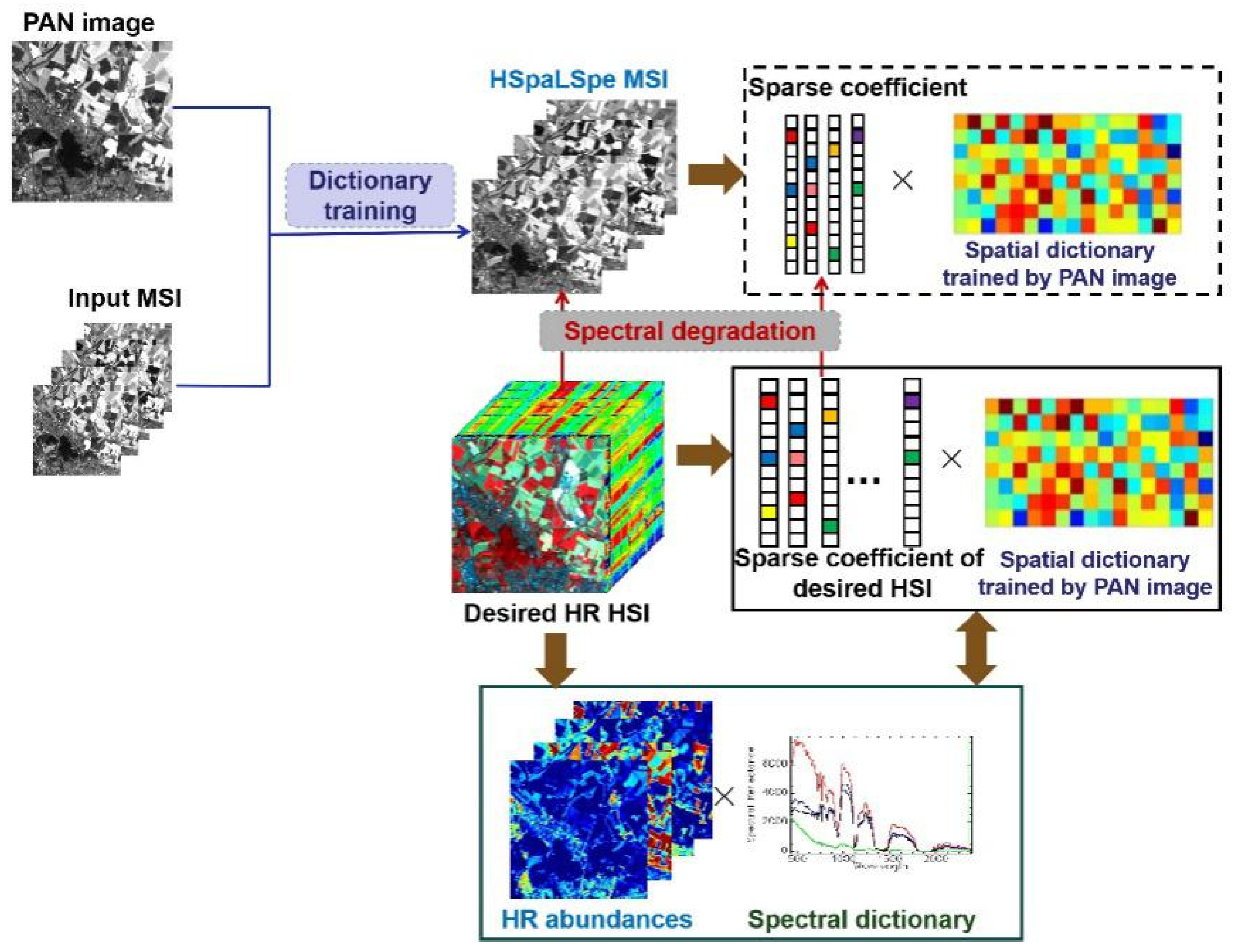
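The equations of Sections 2.1 and 2.2 are not reproduced in this version. As a minimal sketch, assuming the commonly used linear forms (spatial degradation as blurring plus downsampling, simplified here to block averaging; spectral degradation as a band-response matrix `R`), the code below illustrates why LSpaHSpe and HSpaLSpe can stay virtual: degrading the HR HSI spatially then spectrally, or spectrally then spatially, yields the same LR MSI. The helper names, the block-averaging operator, `R`, and the synthetic sizes are all hypothetical.

```python
import numpy as np

def spatial_degrade(cube, d):
    """Block-average a (bands, H, W) cube by factor d along both spatial axes.

    Hypothetical stand-in for the spatial operator (blur + downsampling)."""
    b, h, w = cube.shape
    return cube.reshape(b, h // d, d, w // d, d).mean(axis=(2, 4))

def spectral_degrade(cube, R):
    """Apply an (ms_bands, hs_bands) spectral response matrix R to every pixel."""
    return np.einsum("ml,lhw->mhw", R, cube)

rng = np.random.default_rng(0)
L_hs, L_ms, H, W, d = 30, 4, 12, 12, 3
Z = rng.random((L_hs, H, W))              # desired HR HSI (synthetic)
R = rng.random((L_ms, L_hs))
R /= R.sum(axis=1, keepdims=True)         # each MS band = weighted mean of HS bands

lspa_hspe = spatial_degrade(Z, d)         # LSpaHSpe: low spatial, high spectral
hspa_lspe = spectral_degrade(Z, R)        # HSpaLSpe: high spatial, low spectral

# Both routes reach the same LR MSI because the two linear operators commute.
Y_via_spectral = spectral_degrade(lspa_hspe, R)
Y_via_spatial = spatial_degrade(hspa_lspe, d)
print(np.allclose(Y_via_spectral, Y_via_spatial))  # True
```

Because both degradations are linear and act on different axes, the LR MSI constrains the HR HSI through either intermediate without the intermediate itself being estimated.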

3. Proposed Joint Spatial-spectral Enhancement Algorithm

3.1. Formulation of Spectral Observation Model to Solve Spectral Dictionary

3.2. Formulation of Spatial Observation Model to Solve Abundances

3.3. Joint Spatial-spectral Enhancement Algorithm

3.4. Solver

3.4.1. Solution of Initial Spectral Dictionary

- (1) Solve with respect to a fixed

- (2) Update with respect to a fixed
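The two sub-steps of Section 3.4.1 alternate between solving one factor with the other held fixed. Equation (15) is not reproduced here, so the following is only a minimal sketch that assumes a nonnegative matrix factorization of prior-HSI pixels (bands × pixels), using the Lee-Seung multiplicative updates as a stand-in solver. The function name `nmf_spectral_dictionary`, the rank `k`, and the iteration count are hypothetical.

```python
import numpy as np

def nmf_spectral_dictionary(X, k, n_iter=200, eps=1e-9, seed=0):
    """Factor nonnegative prior-HSI pixels X (bands x pixels) as X ~ D @ A.

    Each iteration performs two alternating sub-steps with Lee-Seung
    multiplicative updates for the squared Frobenius loss:
    (1) update the coefficients A with D fixed,
    (2) update the spectral dictionary D with A fixed.
    """
    rng = np.random.default_rng(seed)
    bands, pixels = X.shape
    D = rng.random((bands, k)) + eps
    A = rng.random((k, pixels)) + eps
    for _ in range(n_iter):
        A *= (D.T @ X) / (D.T @ D @ A + eps)   # sub-step (1)
        D *= (X @ A.T) / (D @ A @ A.T + eps)   # sub-step (2)
    return D, A

rng = np.random.default_rng(1)
X = rng.random((50, 5)) @ rng.random((5, 400))  # synthetic nonnegative "prior HSI" pixels
D, A = nmf_spectral_dictionary(X, k=5)
print(D.shape, A.shape)  # (50, 5) (5, 400)
```

Multiplicative updates keep both factors nonnegative automatically, which matches the physical interpretation of spectral atoms and abundances.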

3.4.2. Solution of Spatial Sparse Information

- (1) Solve the spatial sparse coefficient matrix

- (2) Solve the spatial sparse coefficient matrix with respect to a fixed
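Section 3.4.2 solves a spatial sparse coefficient matrix over the spatial dictionary pre-trained on the HR PAN image. Assuming the sub-problem takes the usual ℓ1-regularized least-squares form, min over C of 0.5‖P − ΦC‖²_F + λ‖C‖₁, one standard solver is ISTA (iterative soft thresholding; Daubechies et al. appear in the reference list). This is a sketch under that assumption, not Equation (16) itself; `P`, `Phi`, `lam`, and the function names are illustrative.

```python
import numpy as np

def soft_threshold(V, tau):
    """Elementwise soft thresholding: the proximal operator of tau * ||.||_1."""
    return np.sign(V) * np.maximum(np.abs(V) - tau, 0.0)

def ista(Phi, P, lam, n_iter=300):
    """ISTA for min_C 0.5 * ||P - Phi @ C||_F^2 + lam * ||C||_1."""
    step = 1.0 / np.linalg.norm(Phi, 2) ** 2   # 1 / Lipschitz constant of the gradient
    C = np.zeros((Phi.shape[1], P.shape[1]))
    for _ in range(n_iter):
        grad = Phi.T @ (Phi @ C - P)           # gradient of the data-fit term
        C = soft_threshold(C - step * grad, step * lam)
    return C

def objective(Phi, P, C, lam):
    return 0.5 * np.linalg.norm(P - Phi @ C) ** 2 + lam * np.abs(C).sum()

rng = np.random.default_rng(0)
Phi = rng.standard_normal((64, 128))
Phi /= np.linalg.norm(Phi, axis=0)             # unit-norm atoms (hypothetical spatial dictionary)
C_true = np.zeros((128, 50))
for j in range(50):                            # 3 active atoms per patch
    C_true[rng.choice(128, 3, replace=False), j] = rng.standard_normal(3)
P = Phi @ C_true                               # vectorized patches (synthetic)
C = ista(Phi, P, lam=0.05)
print(objective(Phi, P, C, 0.05) < objective(Phi, P, np.zeros_like(C), 0.05))  # True
```

With step size 1/L, each ISTA iteration cannot increase the objective, so the result always improves on the all-zero starting point.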

3.4.3. Solution of High Spatial Resolution Abundance

- (1) Solve with respect to a fixed

- (2) Solve with respect to a fixed
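For the high-spatial-resolution abundance of Section 3.4.3, a plausible sub-problem, assumed here rather than taken from Equation (17), is a nonnegative least-squares fit with a quadratic pull toward a prior estimate A0 (for example, one derived from the spatial sparse code): min over A ≥ 0 of 0.5‖Y − MA‖²_F + (μ/2)‖A − A0‖²_F. The sketch solves it with ADMM (Boyd et al. appear in the reference list) by splitting A = V with V ≥ 0. The symbols `M`, `Y`, `A0`, `mu`, `rho` and the function name are hypothetical.

```python
import numpy as np

def admm_nonneg_ls(M, Y, A0, mu=0.1, rho=1.0, n_iter=500):
    """ADMM for min_{A >= 0} 0.5*||Y - M A||_F^2 + 0.5*mu*||A - A0||_F^2.

    Splits A = V with V >= 0 and uses the scaled dual variable U."""
    k = M.shape[1]
    lhs = M.T @ M + (mu + rho) * np.eye(k)     # constant system matrix of the A-update
    rhs_const = M.T @ Y + mu * A0
    V = np.maximum(A0, 0.0)
    U = np.zeros_like(V)
    for _ in range(n_iter):
        A = np.linalg.solve(lhs, rhs_const + rho * (V - U))  # quadratic sub-problem
        V = np.maximum(A + U, 0.0)                           # projection onto A >= 0
        U += A - V                                           # scaled dual update
    return V

rng = np.random.default_rng(0)
M = rng.random((20, 5))            # e.g. a spectrally degraded dictionary (synthetic)
A_true = rng.random((5, 30))       # nonnegative abundances (synthetic)
Y = M @ A_true
A_hat = admm_nonneg_ls(M, Y, A0=np.zeros((5, 30)), mu=0.0, n_iter=1000)
print(np.abs(A_hat - A_true).max())
```

Returning `V` rather than `A` guarantees that the output satisfies the nonnegativity constraint exactly.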

3.4.4. Solution of High Resolution Spectral Dictionary

- (1) Update with respect to a fixed

- (2) Solve with respect to a fixed
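Section 3.4.4 updates the high-resolution spectral dictionary with the abundances fixed. Equation (18) is not reproduced here; assuming the update reduces to a ridge-regularized least-squares fit of a bands × pixels matrix `X` given abundances `A`, it has the closed form D = XAᵀ(AAᵀ + δI)⁻¹. The function name, `X`, and the weight `delta` are hypothetical, and any nonnegativity or normalization constraint the paper may impose is not modeled.

```python
import numpy as np

def update_dictionary(X, A, delta=1e-3):
    """Ridge least squares: argmin_D ||X - D A||_F^2 + delta * ||D||_F^2.

    Closed form D = X A^T (A A^T + delta I)^{-1}, computed with a linear solve."""
    k = A.shape[0]
    gram = A @ A.T + delta * np.eye(k)
    # gram is symmetric, so solving gram @ D.T = A @ X.T yields D.T directly.
    return np.linalg.solve(gram, A @ X.T).T

rng = np.random.default_rng(0)
D_true = rng.random((60, 5))       # synthetic high-resolution spectral atoms
A = rng.random((5, 200))           # fixed abundances
X = D_true @ A
D_hat = update_dictionary(X, A, delta=1e-8)
print(D_hat.shape)  # (60, 5)
```

Using `np.linalg.solve` instead of forming an explicit inverse is cheaper and numerically safer.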

| Algorithm 1: Joint spatial-spectral resolution enhancement algorithm (J-SpeSpaRE) |
|---|
| Input: LR MSI, prior HSIs, spatial dictionary pre-trained on the HR PAN image. |
| Initialization: iteration index i = 1. |
| Step 1: Train the initial spectral dictionary with Equation (15). |
| Step 2: Solve the sparse coefficient matrix with Equation (16). |
| Begin |
| Step 3: Solve the high spatial resolution abundance with Equation (17). |
| Step 4: Solve the high resolution spectral dictionary with Equation (18). |
| Step 5: Recover the HR HSI. |
| Step 6: i = i + 1. |
| End. Return when the stopping condition is satisfied. |
| Output: HR HSI. |
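The control flow of Algorithm 1 can be sketched as an outer loop over Steps 3 to 6, with the sub-problem solvers passed in as callables. This is a minimal sketch: the relative-change stopping rule is an assumption (the paper's exact criterion is not reproduced here), and the toy solvers below are plain alternating least squares on a fully observed synthetic matrix, a hypothetical stand-in for Equations (17) and (18) used only to exercise the loop.

```python
import numpy as np

def alternating_enhancement(D0, A0, update_A, update_D, tol=1e-6, max_iter=100):
    """Outer loop of Algorithm 1 (Steps 3-6) with pluggable sub-problem solvers.

    D0: initial spectral dictionary (Step 1); A0: initial abundances.
    Stops when the recovered image changes by less than `tol` (relative),
    which is an assumed stopping rule."""
    D, A = D0, A0
    Z_prev = D @ A
    for i in range(1, max_iter + 1):
        A = update_A(D)            # Step 3: abundances with D fixed
        D = update_D(A)            # Step 4: dictionary with A fixed
        Z = D @ A                  # Step 5: recover the HR HSI
        if np.linalg.norm(Z - Z_prev) <= tol * np.linalg.norm(Z_prev):
            return Z, i
        Z_prev = Z                 # Step 6: move to the next iteration
    return Z_prev, max_iter

rng = np.random.default_rng(0)
X = rng.random((40, 4)) @ rng.random((4, 300))   # synthetic rank-4 "HR HSI"

def solve_A(D):
    return np.linalg.lstsq(D, X, rcond=None)[0]

def solve_D(A):
    return np.linalg.lstsq(A.T, X.T, rcond=None)[0].T

Z, n_iter = alternating_enhancement(rng.random((40, 4)), rng.random((4, 300)), solve_A, solve_D)
print(n_iter, np.allclose(Z, X))
```

Keeping the solvers as arguments separates the alternating structure of the algorithm from the specific sub-problem formulations.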

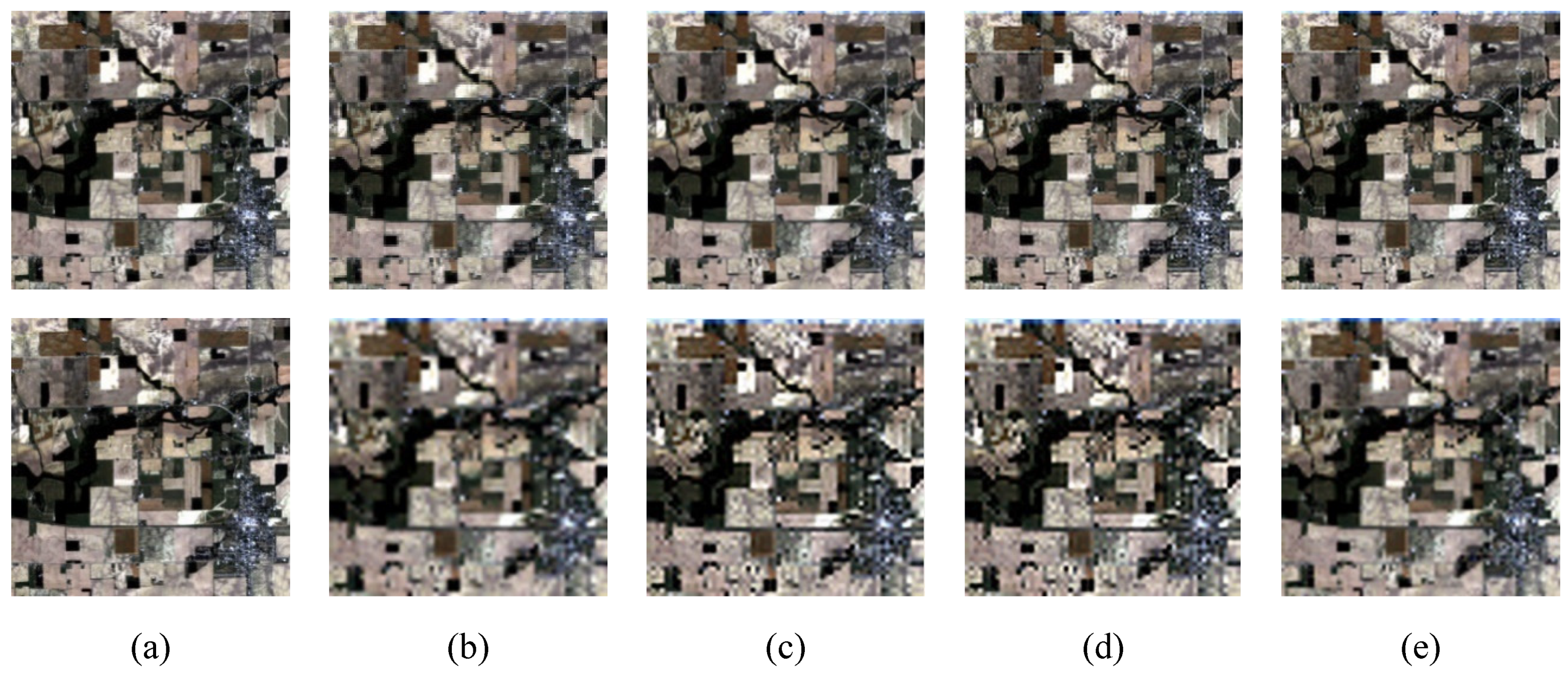

4. Experiment Results

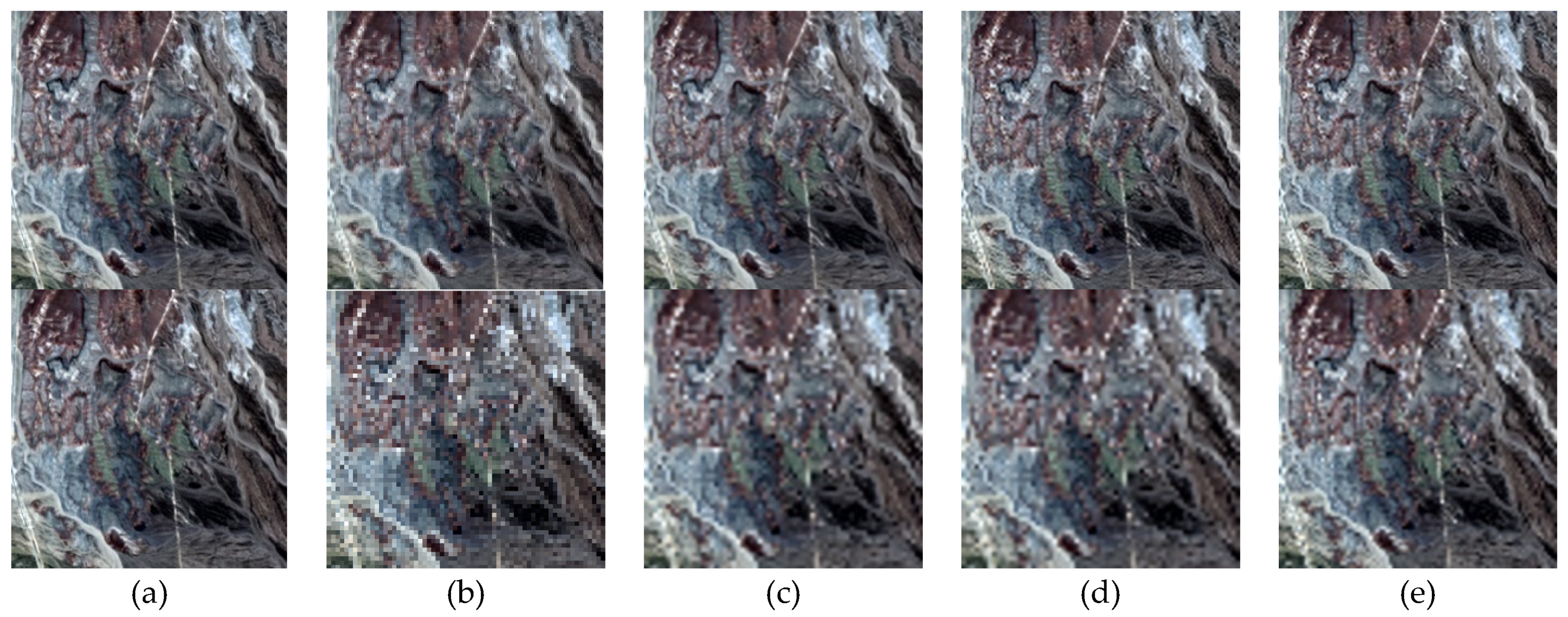

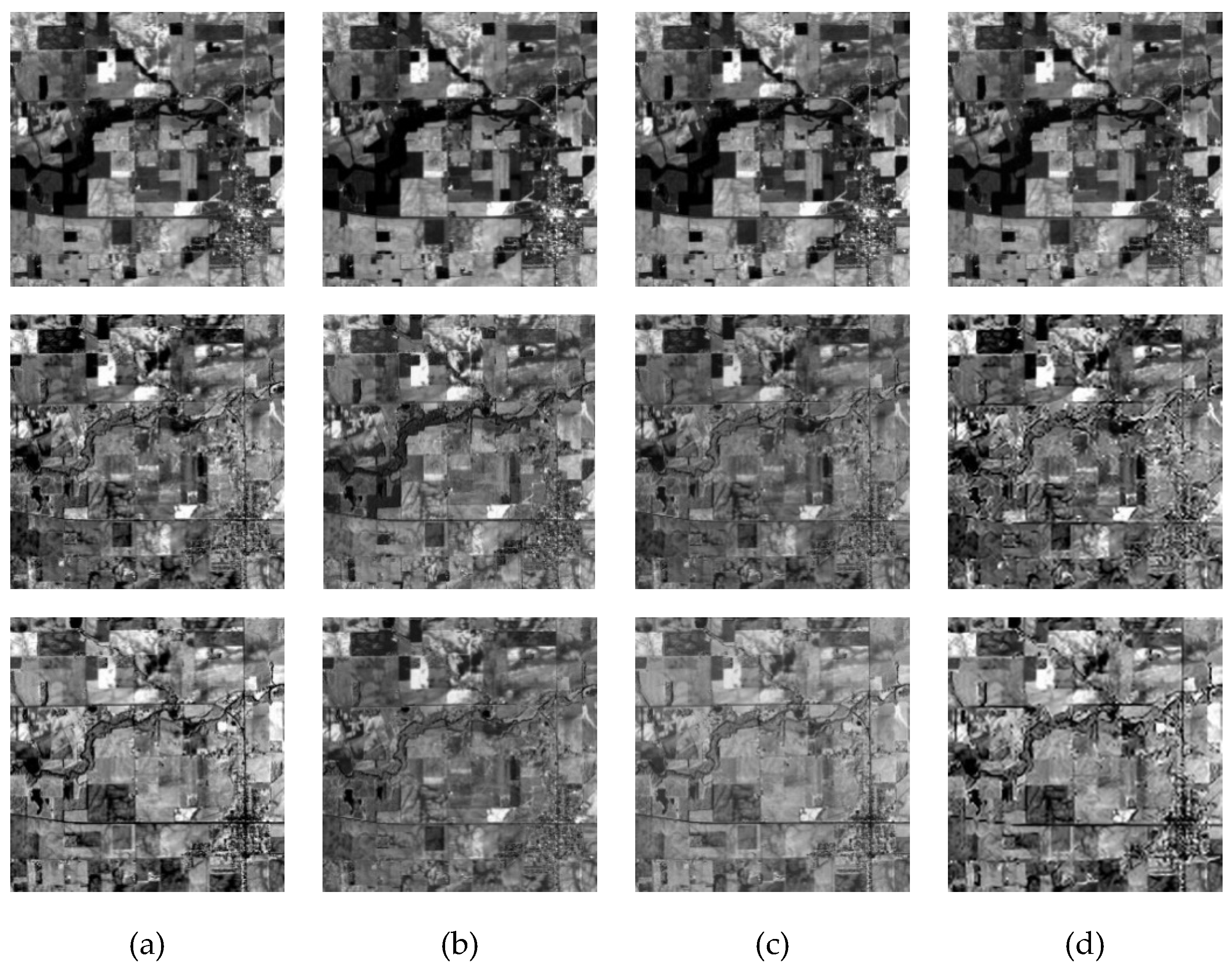

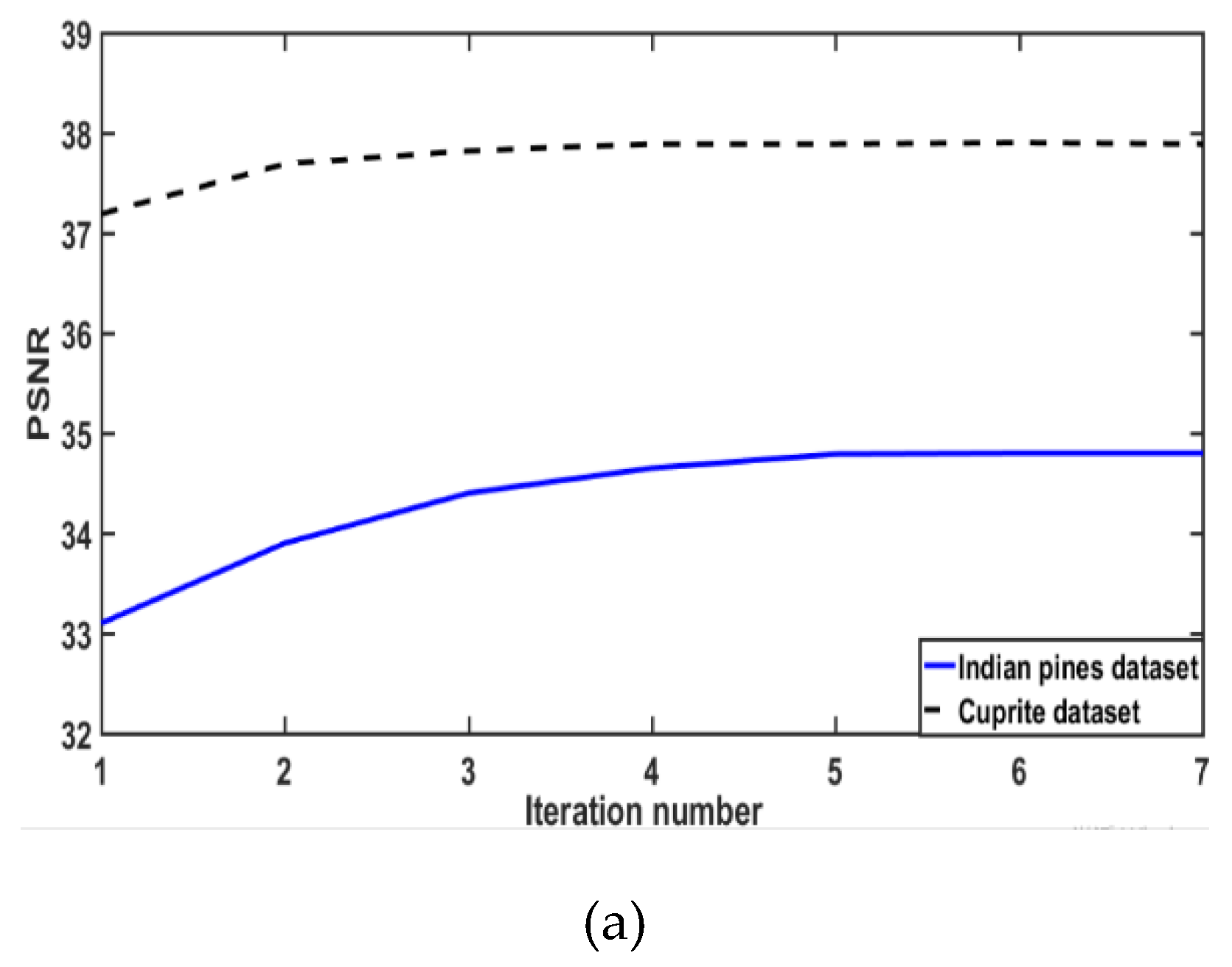

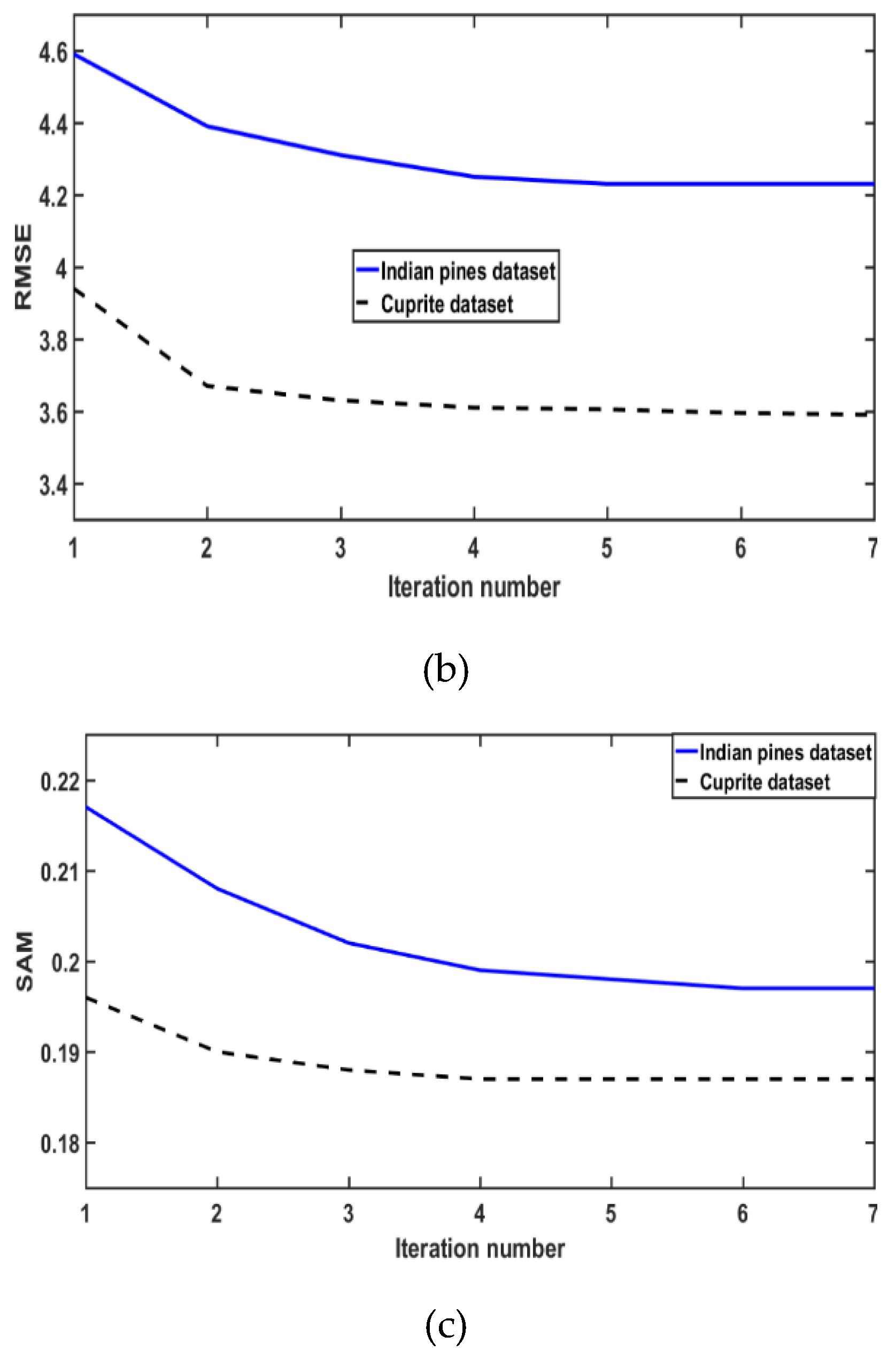

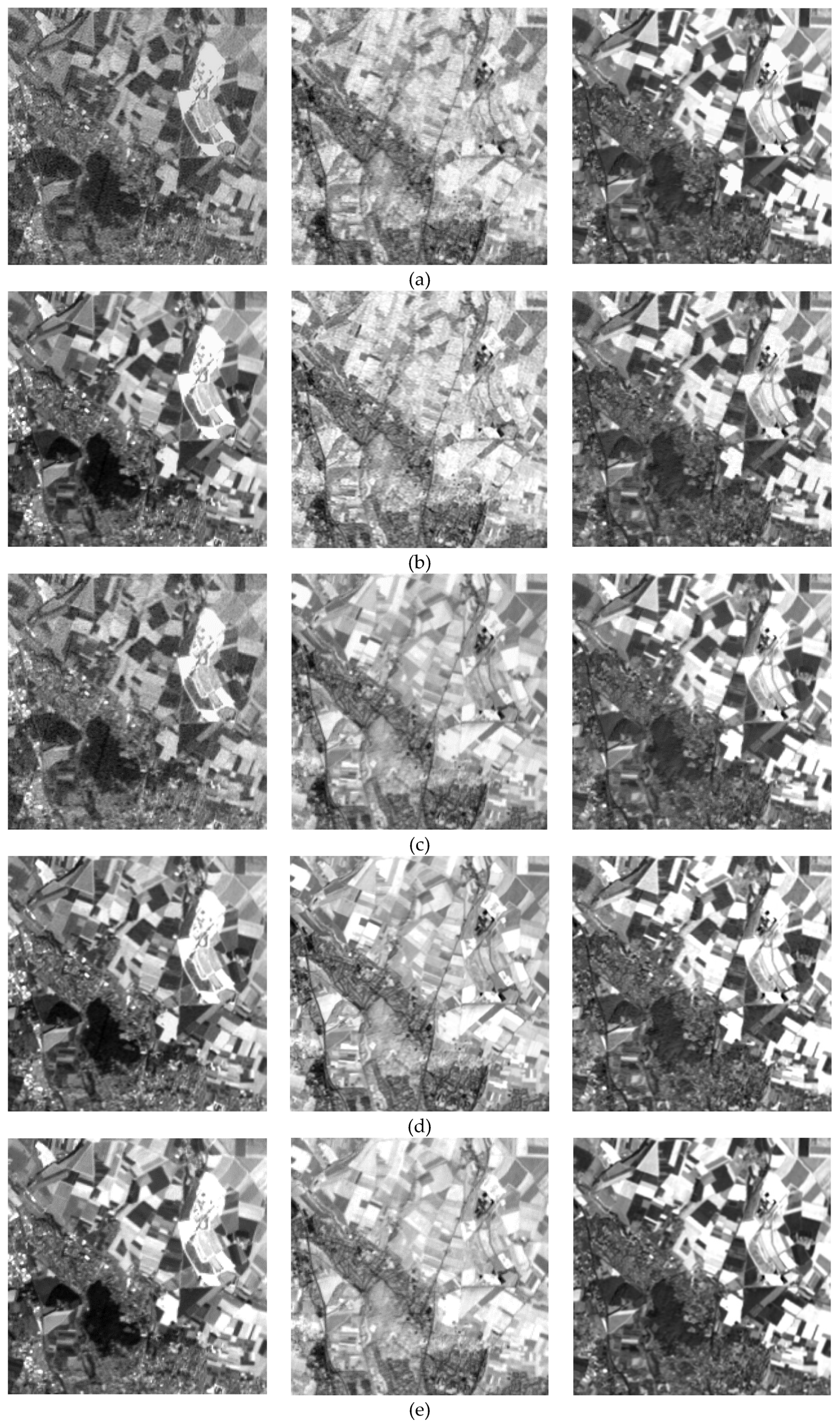

4.1. Experiments on Simulated Datasets

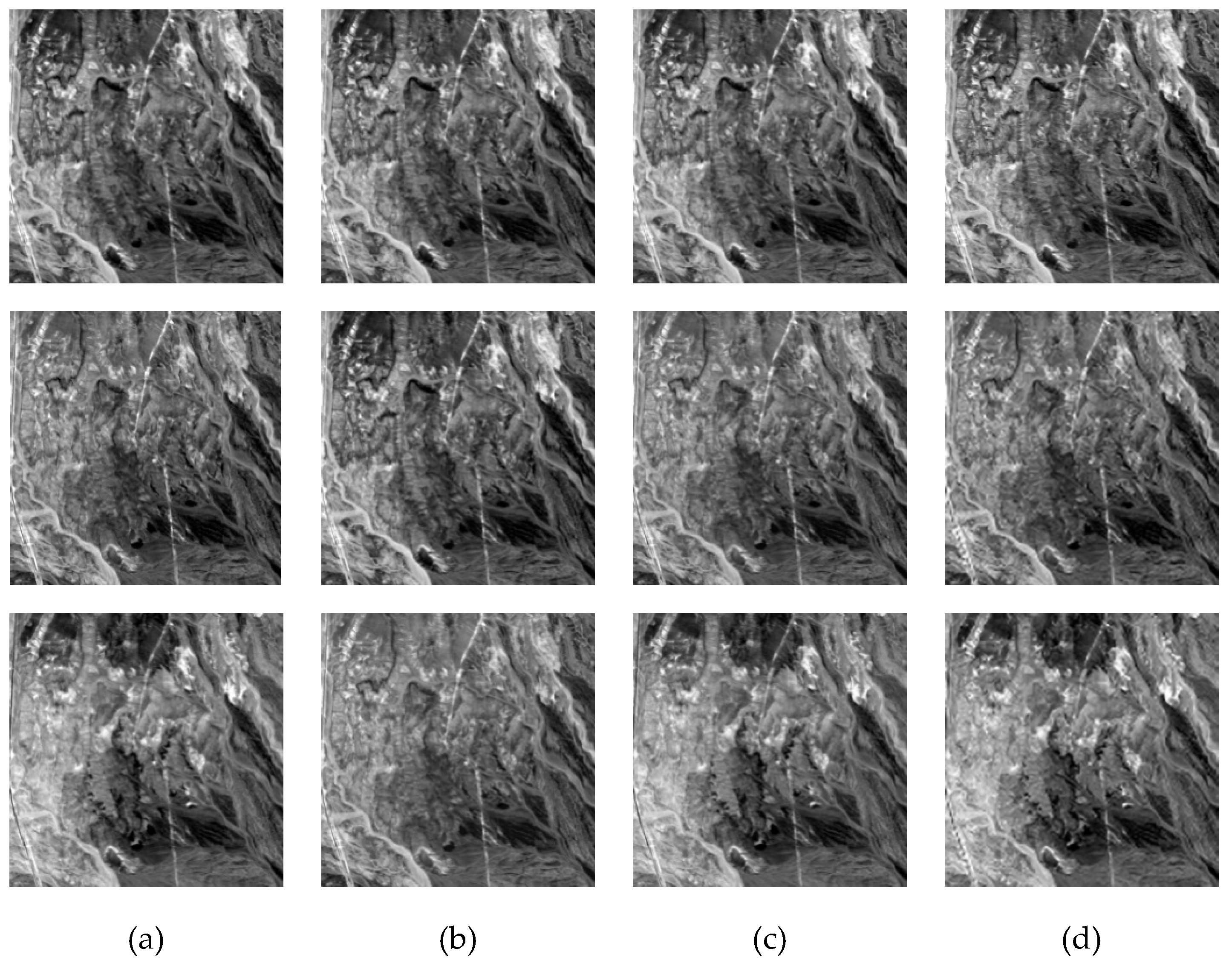

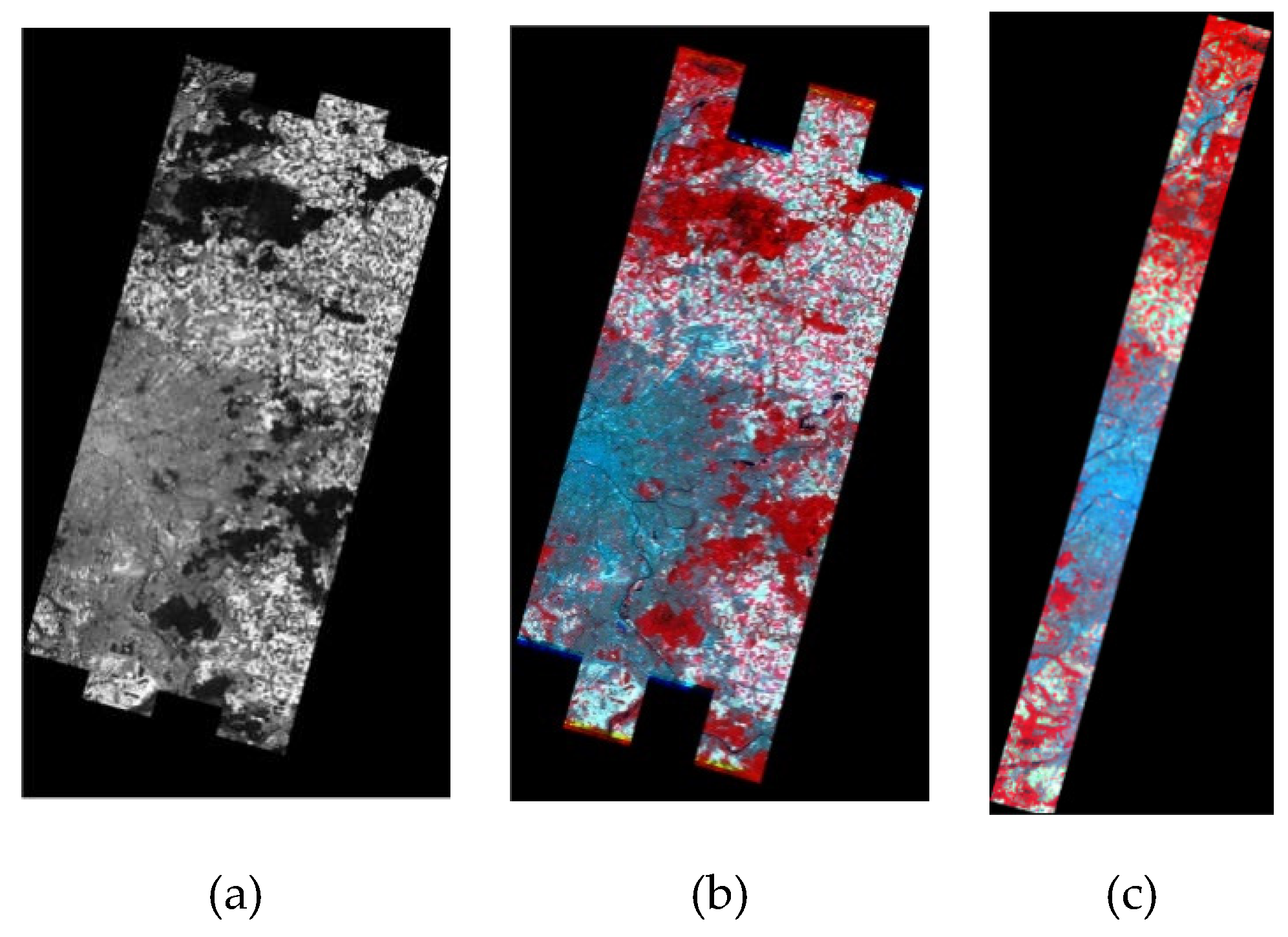

4.2. Effectiveness of Joint Spatial and Spectral Enhancement Framework

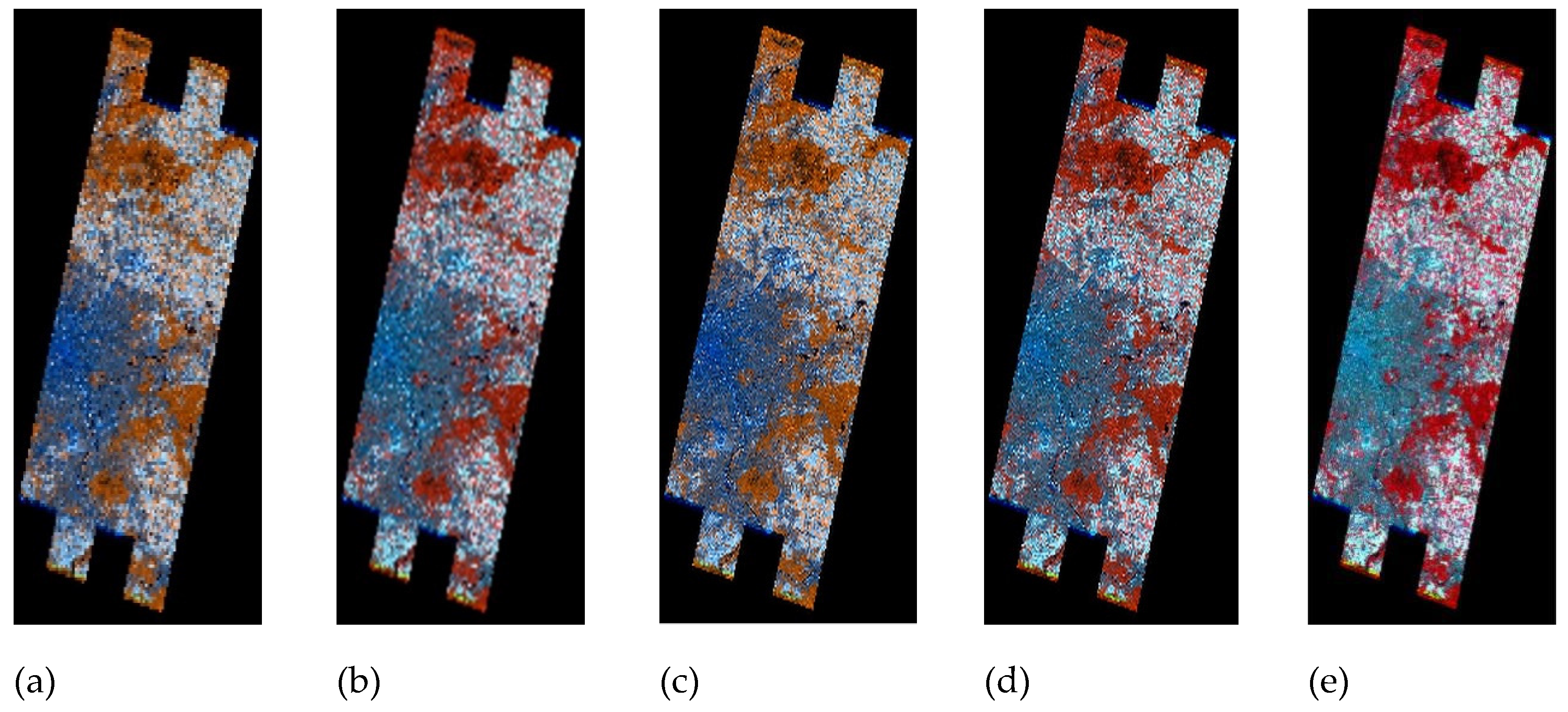

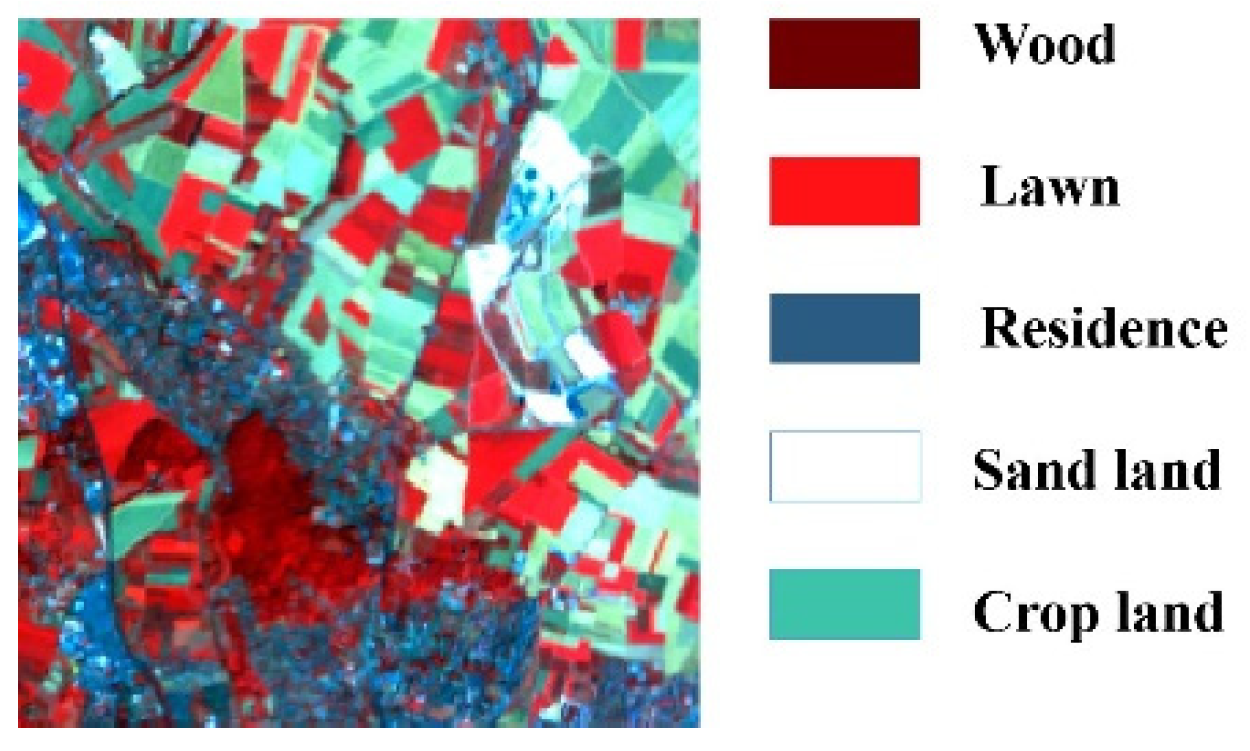

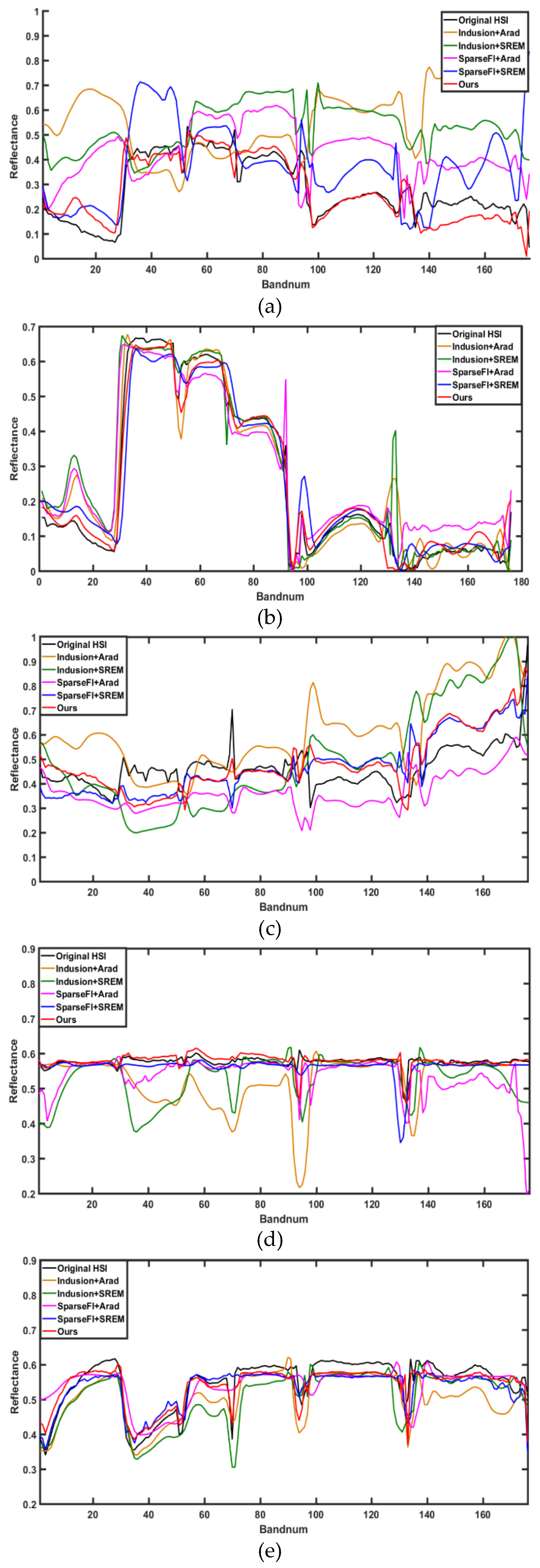

4.3. Experiment on Real Dataset

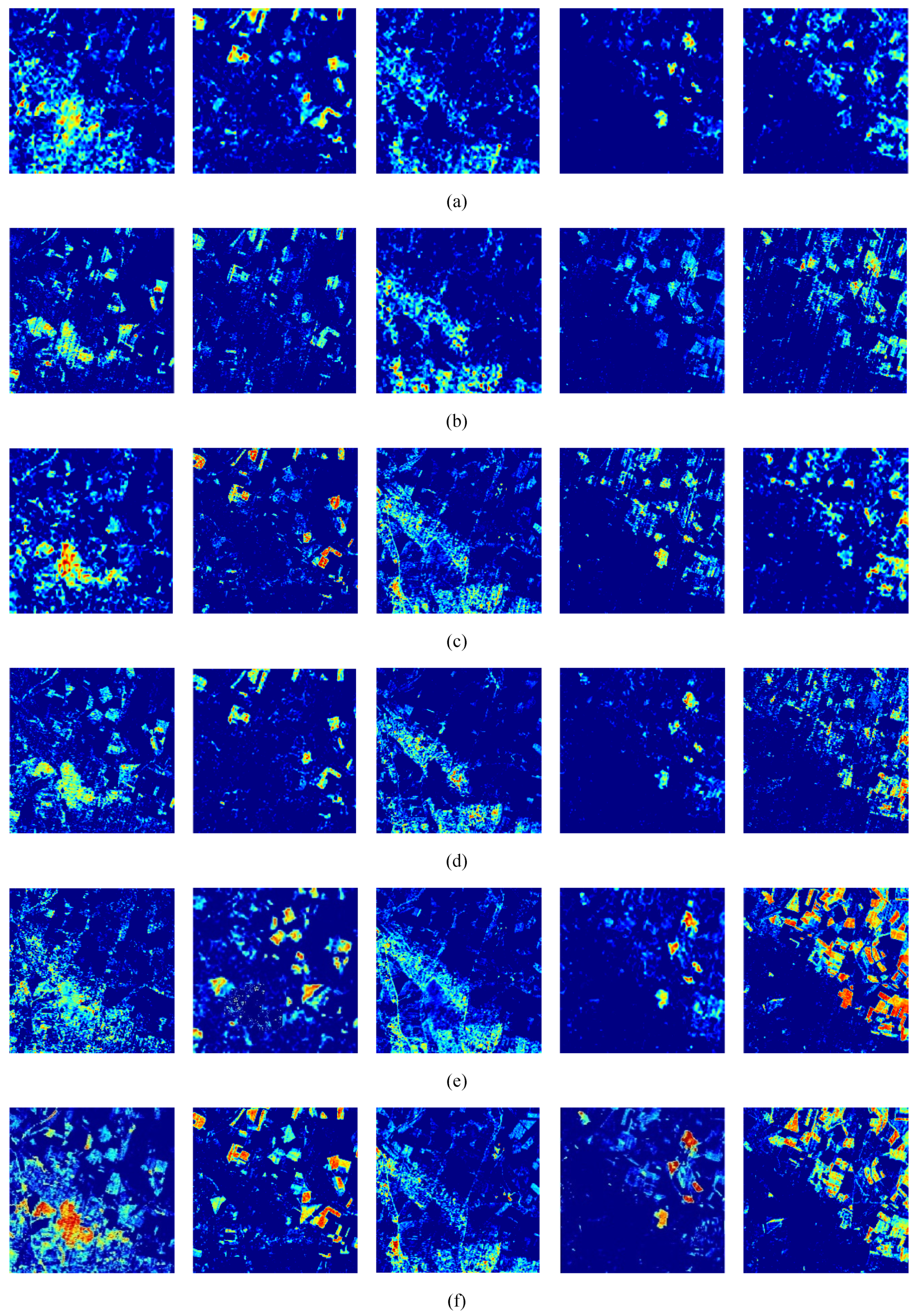

4.4. Spectral Unmixing on Real Dataset

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Yang, J.; Zhao, Y.Q.; Chan, J.C.W. Learning and transferring deep joint spectral-spatial features for hyperspectral classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4729–4742.

- Zhang, Y.; Du, B.; Zhang, L.; Liu, T. Joint sparse representation and multitask learning for hyperspectral target detection. IEEE Trans. Geosci. Remote Sens. 2017, 55, 894–906.

- Lorente, D.; Aleixos, N.; Gómez-Sanchis, J.; Cubero, S.; García-Navarrete, O.L.; Blasco, J. Recent advances and applications of hyperspectral imaging for fruit and vegetable quality assessment. Food Bioprocess Technol. 2012, 5, 1121–1142.

- Yokoya, N.; Chan, J.C.W.; Segl, K. Potential of resolution-enhanced hyperspectral data for mineral mapping using simulated EnMAP and Sentinel-2 images. Remote Sens. 2016, 8, 172.

- Yi, C.; Zhao, Y.Q.; Chan, J.C.W. Hyperspectral image superresolution based on spatial and spectral correlation fusion. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4165–4177.

- Yang, J.; Zhao, Y.Q.; Chan, J.C.W.; Xiao, L. A Multi-Scale Wavelet 3D-CNN for Hyperspectral Image Super-Resolution. Remote Sens. 2019, 11, 1557.

- Loncan, L.; De Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M.; et al. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

- Dalla Mura, M.; Vivone, G.; Restaino, R.; Addesso, P.; Chanussot, J. Global and local Gram-Schmidt methods for hyperspectral pansharpening. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Milan, Italy, 26–31 July 2015; pp. 37–40.

- Licciardi, G.A.; Khan, M.M.; Chanussot, J.; Montanvert, A.; Condat, L.; Jutten, C. Fusion of hyperspectral and panchromatic images using multiresolution analysis and nonlinear PCA band reduction. EURASIP J. Adv. Signal Process. 2012, 1, 207.

- Yang, S.; Zhang, K.; Wang, M. Learning low-rank decomposition for pan-sharpening with spatial-spectral offsets. IEEE Trans. Neural Netw. Learn. Syst. 2017, 20, 3647–3657.

- Yi, C.; Zhao, Y.Q.; Yang, J.; Chan, J.C.W.; Kong, S.G. Joint hyperspectral superresolution and unmixing with interactive feedback. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3823–3834.

- Wei, Y.; Yuan, Q.; Shen, H.; Zhang, L. Boosting the accuracy of multispectral image pansharpening by learning a deep residual network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1795–1799.

- Yuan, Q.; Wei, Y.; Meng, X.; Shen, H.; Zhang, L. A multiscale and multidepth convolutional neural network for remote sensing imagery pan-sharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 978–989.

- Chan, R.H.; Chan, T.F.; Shen, L.; Shen, Z. Wavelet algorithms for high-resolution image reconstruction. SIAM J. Sci. Comput. 2003, 24, 1408–1432.

- Eismann, M.T.; Hardie, R.C. Hyperspectral resolution enhancement using high-resolution multispectral imagery with arbitrary response functions. IEEE Trans. Geosci. Remote Sens. 2005, 43, 455–465.

- Yokoya, N.; Grohnfeldt, C.; Chanussot, J. Hyperspectral and multispectral data fusion: A comparative review of the recent literature. IEEE Geosci. Remote Sens. Mag. 2017, 5, 29–56.

- Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

- Akhtar, N.; Shafait, F.; Mian, A. Bayesian sparse representation for hyperspectral image super resolution. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3631–3640.

- Dong, W.; Fu, F.; Shi, G.; Cao, X.; Wu, J.; Li, G.; Li, X. Hyperspectral image super-resolution via non-negative structured sparse representation. IEEE Trans. Image Process. 2016, 25, 2337–2352.

- Yi, C.; Zhao, Y.Q.; Chan, J.C.W. Spectral super-resolution for multispectral image based on spectral improvement strategy and spatial preservation strategy. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9010–9024.

- Chi, C.; Yoo, H.; Ben-Ezra, M. Multi-spectral imaging by optimized wide band illumination. Int. J. Comput. Vis. 2010, 86, 140.

- Gat, N. Imaging spectroscopy using tunable filters: A review. Proc. SPIE 2000, 4056, 50–64.

- Oh, S.W.; Brown, M.S.; Pollefeys, M.; Kim, S.J. Do it yourself hyperspectral imaging with everyday digital cameras. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2461–2469.

- Sun, X.; Zhang, L.; Yang, H.; Wu, T.; Cen, Y.; Guo, Y. Enhancement of spectral resolution for remotely sensed multispectral image. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2198–2211.

- Arad, B.; Ben-Shahar, O. Sparse recovery of hyperspectral signal from natural RGB images. In Computer Vision—ECCV 2016; Springer: Cham, Switzerland, 2016; pp. 19–34.

- Wu, J.; Aeschbacher, J.; Timofte, R. In defense of shallow learned spectral reconstruction from RGB images. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 471–479.

- Galliani, S.; Lanaras, C.; Marmanis, D.; Baltsavias, E.; Schindler, K. Learned Spectral Super-Resolution. arXiv 2017, arXiv:1703.09470. Available online: https://arxiv.org/abs/1703.09470 (accessed on 28 March 2017).

- Bioucas-Dias, J.M.; Plaza, A.; Dobigeon, N.; Parente, M.; Du, Q.; Gader, P.; Chanussot, J. Hyperspectral unmixing overview: Geometrical, statistical, and sparse regression-based approaches. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 354–379.

- Chakrabarti, A.; Zickler, T. Statistics of real-world hyperspectral images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 20–25 June 2011; pp. 193–200.

- Zhu, X.X.; Bamler, R. A sparse image fusion algorithm with application to pan-sharpening. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2827–2836.

- Xue, J.; Zhao, Y.; Liao, W.; Chan, J.C.W. Nonlocal tensor sparse representation and low-rank regularization for hyperspectral image compressive sensing reconstruction. Remote Sens. 2019, 11, 193.

- Keshava, N.; Mustard, J.F. Spectral unmixing. IEEE Signal Process. Mag. 2002, 19, 44–57.

- Akhtar, N.; Shafait, F.; Mian, A. Sparse spatio-spectral representation for hyperspectral image super-resolution. In Computer Vision; Springer: Cham, Switzerland, 2014; pp. 63–78.

- Zhao, Y.Q.; Yang, J. Hyperspectral image denoising via sparse representation and low-rank constraint. IEEE Trans. Geosci. Remote Sens. 2014, 53, 296–308.

- Zhu, X.X.; Grohnfeldt, C.; Bamler, R. Exploiting joint sparsity for pansharpening: The J-SparseFI algorithm. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2664–2681.

- Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

- Afonso, M.V.; Bioucas-Dias, J.M.; Figueiredo, M.A.T. An augmented Lagrangian approach to the constrained optimization formulation of imaging inverse problems. IEEE Trans. Image Process. 2011, 20, 681–695.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

- Daubechies, I.; Defrise, M.; De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 2004, 57, 1413–1457.

- Friedman, J.; Hastie, T.; Höfling, H.; Tibshirani, R. Pathwise coordinate optimization. Ann. Appl. Statist. 2007, 1, 302–332.

- Donoho, D.L. For most large underdetermined systems of equations, the minimal ℓ1-norm near-solution approximates the sparsest near-solution. Commun. Pure Appl. Math. 2006, 59, 907–934.

- Golub, G.H.; Van Loan, C.F. Matrix Computations; The Johns Hopkins University Press: Baltimore, MD, USA, 1983.

- Aiazzi, B.; Baronti, S.; Selva, M. Improving Component Substitution Pansharpening through Multivariate Regression of MS + Pan Data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Khan, M.M.; Chanussot, J.; Condat, L.; Montanvert, A. Indusion: Fusion of Multispectral and Panchromatic Images Using the Induction Scaling Technique. IEEE Geosci. Remote Sens. Lett. 2008, 5, 98–102.

- Xue, J.; Zhao, Y.; Liao, W.; Chan, J.C.W. Nonlocal Low-Rank Regularized Tensor Decomposition for Hyperspectral Image Denoising. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5174–5189.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Lanaras, C.; Baltsavias, E.; Schindler, K. Hyperspectral Super-Resolution with Spectral Unmixing Constraints. Remote Sens. 2017, 9, 1196.

- Wald, L. Data fusion. In Definitions and Architectures—Fusion of Images of Different Spatial Resolutions; Ecole de Mines de Paris: Paris, France, 2002.

- Zhang, L.; Wei, W.; Bai, C.; Gao, Y.; Zhang, Y. Exploiting clustering manifold structure for hyperspectral imagery super-resolution. IEEE Trans. Image Process. 2018, 27, 5969–5982.

- Folkman, M.A.; Pearlman, J.; Liao, L.B.; Jarecke, P.J. EO-1/Hyperion hyperspectral imager design, development, characterization, and calibration. Proc. SPIE 2001, 4151, 40–51.

- Ma, W.K.; Bioucas-Dias, J.M.; Chan, T.H.; Gillis, N.; Gader, P.; Plaza, A.J.; Chi, C.Y. A signal processing perspective on hyperspectral unmixing. IEEE Signal Process. Mag. 2014, 31, 67–81.

| Metric | GSA | Indusion | SparseFI | J-SpeSpaRE (After Spectral Degradation) |
|---|---|---|---|---|
| MPSNR | 37.030 | 38.234 | 38.735 | 39.097 |
| MSSIM | 0.693 | 0.729 | 0.738 | 0.756 |
| MFSIM | 0.797 | 0.823 | 0.844 | 0.899 |
| SAM | 0.160 | 0.151 | 0.147 | 0.120 |
| PD | 7.874 | 6.350 | 5.192 | 4.318 |

| Metric | GSA | Indusion | SparseFI | J-SpeSpaRE (After Spectral Degradation) |
|---|---|---|---|---|
| MPSNR | 41.065 | 42.371 | 43.012 | 43.855 |
| MSSIM | 0.699 | 0.728 | 0.740 | 0.759 |
| MFSIM | 0.754 | 0.797 | 0.831 | 0.884 |
| SAM | 0.160 | 0.142 | 0.139 | 0.114 |
| PD | 8.347 | 6.468 | 5.703 | 4.911 |

| Metric | Arad | SREM | J-SpeSpaRE (After Spatial Degradation) |
|---|---|---|---|
| RMSE | 4.872 | 4.548 | 4.230 |
| SAM | 0.230 | 0.211 | 0.209 |
| MPSNR | 33.682 | 34.177 | 34.802 |
| CC | 0.930 | 0.967 | 0.970 |

| Metric | Arad | SREM | J-SpeSpaRE (After Spatial Degradation) |
|---|---|---|---|
| RMSE | 4.5372 | 4.1020 | 3.5989 |
| SAM | 0.245 | 0.216 | 0.191 |
| MPSNR | 35.936 | 37.294 | 37.997 |
| CC | 0.955 | 0.971 | 0.932 |
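The tables above report MPSNR and SAM among other quality indices. Their exact definitions are not reproduced in this version, so the following is a minimal sketch of common forms, assuming MPSNR is the PSNR averaged over bands with a known peak value and SAM is the mean angle, in radians, between reference and estimated pixel spectra. The function names and the `peak` argument are hypothetical, and the images are assumed to be flattened to (bands, pixels).

```python
import numpy as np

def mpsnr(ref, est, peak=1.0):
    """Mean PSNR over bands; ref and est are (bands, pixels) arrays."""
    mse = np.mean((ref - est) ** 2, axis=1)            # per-band mean squared error
    return float(np.mean(10.0 * np.log10(peak ** 2 / mse)))

def sam(ref, est, eps=1e-12):
    """Mean spectral angle (radians) between corresponding pixel spectra."""
    num = np.sum(ref * est, axis=0)
    den = np.linalg.norm(ref, axis=0) * np.linalg.norm(est, axis=0) + eps
    return float(np.mean(np.arccos(np.clip(num / den, -1.0, 1.0))))

ref = np.zeros((3, 4))
est = np.full((3, 4), 0.1)                             # constant error of 0.1 in every band
print(round(mpsnr(ref, est), 6))  # 20.0
```

SAM ignores per-pixel scaling of a spectrum, which is why it complements band-wise fidelity measures such as MPSNR.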

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yi, C.; Zhao, Y.-q.; Chan, J.C.-W.; Kong, S.G. Joint Spatial-spectral Resolution Enhancement of Multispectral Images with Spectral Matrix Factorization and Spatial Sparsity Constraints. Remote Sens. 2020, 12, 993. https://doi.org/10.3390/rs12060993
