1. Introduction

With the advent of systematic earth observation from satellites, a remarkable archive of remotely sensed image times series has been developed with a diverse set of variables, including atmospheric and ocean temperature, precipitation, vegetation and ocean productivity, and the like. From analysis of these time series, it has become apparent the many phenomena exhibit preferred patterns of variability [

1]. In the atmospheric and ocean sciences, these are commonly known as

teleconnections—patterns of variability that show degrees of synchrony over large distances in space [

2]. Another common description is to refer to them as climate modes—major patterns of variability in the climate system [

3].

A variety of techniques have been used or developed to discover these patterns in earth observation image series, including principal components analysis (PCA), also known as empirical orthogonal function (EOF) analysis, and empirical orthogonal teleconnections (EOTs). In this paper, we introduce a new procedure based on a sequential autoencoder that is related to, but different from, these two former procedures. The technique will be shown to be exceptionally fast and powerful in its ability to extract meaningful teleconnection patterns.

One of the most common tools in the search for such patterns has been principal components analysis (PCA) [

4] (pp. 53–54). At its core, PCA is a dimensionality reduction technique that seeks to describe a multivariate data set with a reduced number of features, called components, such that those components are independent and can describe the bulk of the variability in the original data [

5]. While it is remarkably successful in achieving this reduction, interpretability is constrained by the fact that the components are bi-orthogonal [

5] (p. 291), [

4] (p. 54)—orthogonal in both space and time in the context of image time series. It has been noted by many (e.g., [

6] (p. 296); [

5] (p. 270)), that this strict orthogonality can sometimes make these components very difficult to interpret, and unlikely to relate to physical processes. A common solution has been to relax this bi-orthogonality in favor of obliquely rotated components that achieve some form of simple structure [

5] (pp. 270–279). However, this approach has been criticized as being somewhat subjective (in terms of the number of components to rotate and the specific algorithm to use [

4] (pp. 53–78), and capable of splitting patterns between components [

6] (p. 306).

As an alternative to rotation, [

7] introduced an iterative regression-based procedure that produces components that are independent in time, but not in space. EOT seeks to find the single location in space (a pixel), whose values over time (a vector) can best describe the time vectors for all other pixels over the area analyzed (e.g., the entire earth). In [

7], it determines this based on explained variance using regression (essentially an unstandardized analysis). It then takes the vector at that location, and uses it to create a residual series of images where that vector is subtracted from the vector of every location. For example, if the data analyzed were monthly sea surface temperature (SST) anomalies over a period of many years, the technique would invariably find a point in the central Pacific where the pattern of ENSO (El Niño/Southern Oscillation) anomalies would dominate. This would be the first EOT (a one-dimensional vector over time). The procedure then removes this ENSO vector from the temporal vectors associated with every pixel in the image. The series is now free of ENSO. At this point, it repeats the process to find the second EOT, and so on. In the end, the EOTs extracted are orthogonal in time, but not necessarily orthogonal in space. Thus, they are similar to an oblique rotation in comparison to PCA [

4] (p. 66).

In the original formulation, EOT was unstandardized (based on covariance) and uncentered (without subtracting the mean). [

8] introduced a variant of EOT in the Earth Trends Modeler (a software program for remotely sensed image time series analysis within the TerrSet modeling suite) with options for centered and standardized (based on correlation) EOT as well. Generally, it has been the experience of the second author here that the centered and standardized options work best and readily find meaningful teleconnections (e.g., [

9], [

10]). As indicated by [

4] (p. 66), the advantage of EOTs is that they yield a natural rotation without the need for subjective decisions.

Comparing EOTs to PCA, one of the striking characteristics is that EOTs are regional in character, whereas PCA is global (in the context of a global image time series). Despite the fact that when it searches for an EOT, it looks for a location that can best describe the temporal vector at all locations globally, it then characterizes the EOT by the vector at the single location found. Further, it is this local vector which is subtracted to produce the residual series before calculating the next EOT. In the implementation of the Earth Trends Modeler, after the requested EOTs have all been calculated, it then creates partial correlation images to characterize the loadings—the correlations between the EOTs and the original image series analyzed. These images typically exhibit patterns which are quite regional in character.

PCA/EOF and EOT are both seeking latent structure—deep structural patterns that have high explanatory value in describing the variability of the image series over space and time. In recent years, a parallel interest in latent structure has arisen in the field of artificial intelligence, in the context of autoencoders.

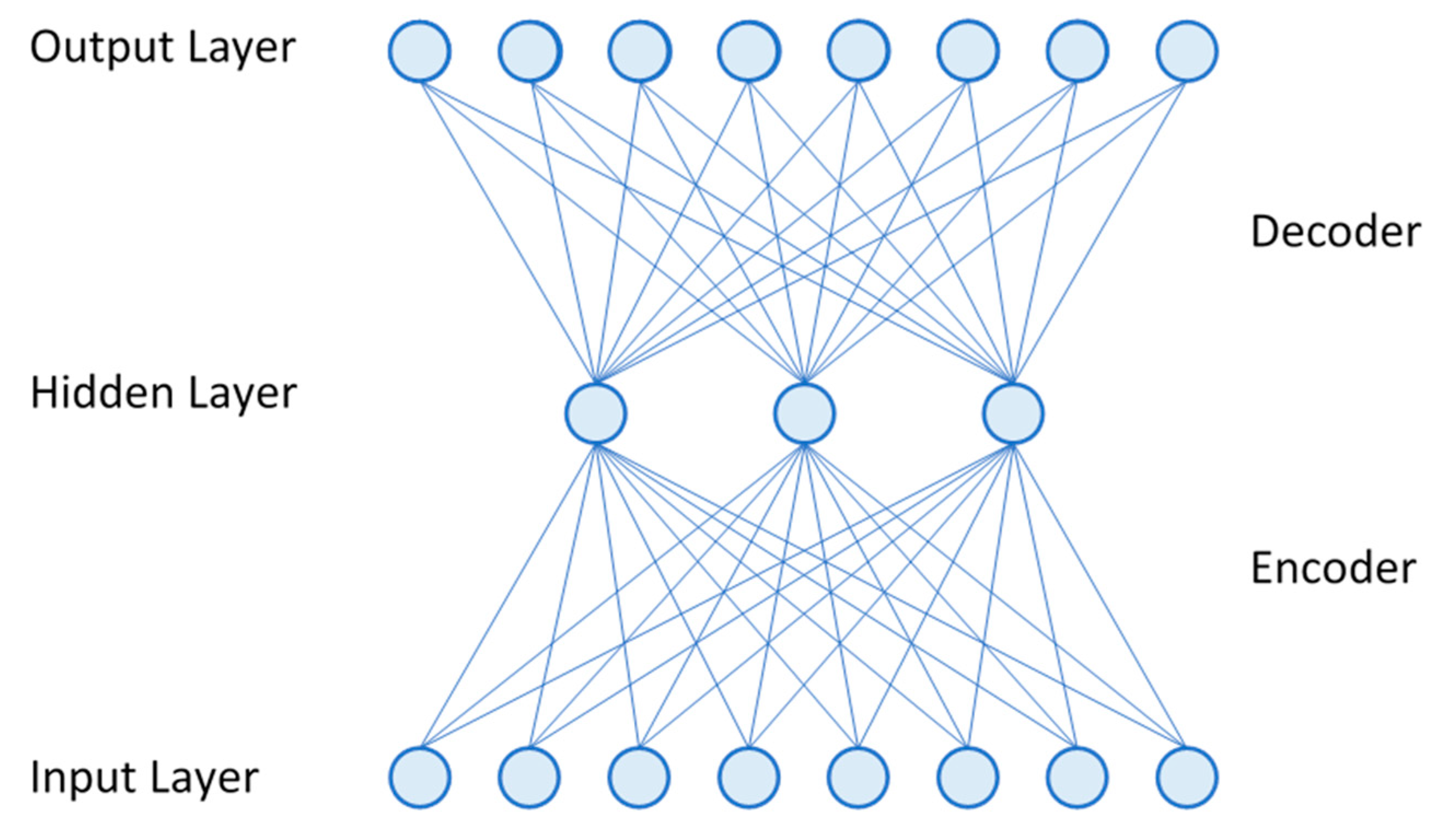

An autoencoder [

11], in its simplest form, is an artificial neural network with one hidden layer where the output layer is meant to be as faithful a reproduction as possible of the input layer (

Figure 1). Interpreting this in the context of image times series (where a series of images for some variable such as sea surface temperature has been captured over time), the input layer nodes may each (for example) represent a one-dimensional time series (a vector) at a single pixel in space. The output nodes are also temporal vectors at each pixel, and are meant to be the closest possible match to the input nodes, given the structure of the network. When the number of hidden layer nodes is less than the number of input and output nodes, the hidden layer needs to determine a latent structure that can best reproduce the original inputs with a reduced dimensionality. In a basic autoencoder, each node in the hidden and output layers performs a weighted linear combination of its inputs, which may subsequently be modified by a non-linear activation function. Thus, each connection in

Figure 1 represents a weight, and each node in the hidden and output layers represents a weighted linear combination (a dot product), optionally followed by a non-linear activation. It is frequently stated that when a linear activation is used (i.e., the activation step is left out), the autoencoder behaves similarly, but not identical to, a principal component analysis [

12].

As is typical of neural networks, the architecture of the autoencoder is exposed to multiple training examples with expected output values, and weights are progressively altered through backpropagation [

11,

13]. Eventually, the network converges on a set of connection weights that maximize the correspondence between the inputs and the outputs (which, in this case, are similar to the inputs, albeit generated only from the limited number of hidden nodes). Note also that the architecture is flexible. The interpretation offered here where the nodes represent temporal vectors corresponds with what is known as S-mode analysis [

14]. It is equally possible to consider the nodes as vectors over space for a single time step, which would be the equivalent of T-mode analysis [

14].

Autoencoders have been known since the 1980s [

11] and have commonly been used for unsupervised feature learning. In this case, the hidden layer nodes represent important features of the inputs. For example, if the inputs are faces, the hidden layer nodes may represent important aspects of faces, in general. These discovered features can then be used in classification tasks, such as face recognition. Other applications include noise removal [

11], dimensionality reduction [

15] and image compression [

16]. In each if these cases, the reduced number of nodes of the hidden layer serve to reject noise and capture the essence of the image with a lower and more efficient dimensionality. Another application is a generative variational autoencoder [

17] that can use the learned latent structure to generate new plausible instances. For example, based on a sample of faces, such an architecture might generate new plausible faces based on the latent structure of the faces analyzed. This, however, uses a somewhat different architecture that is not the focus of this research.

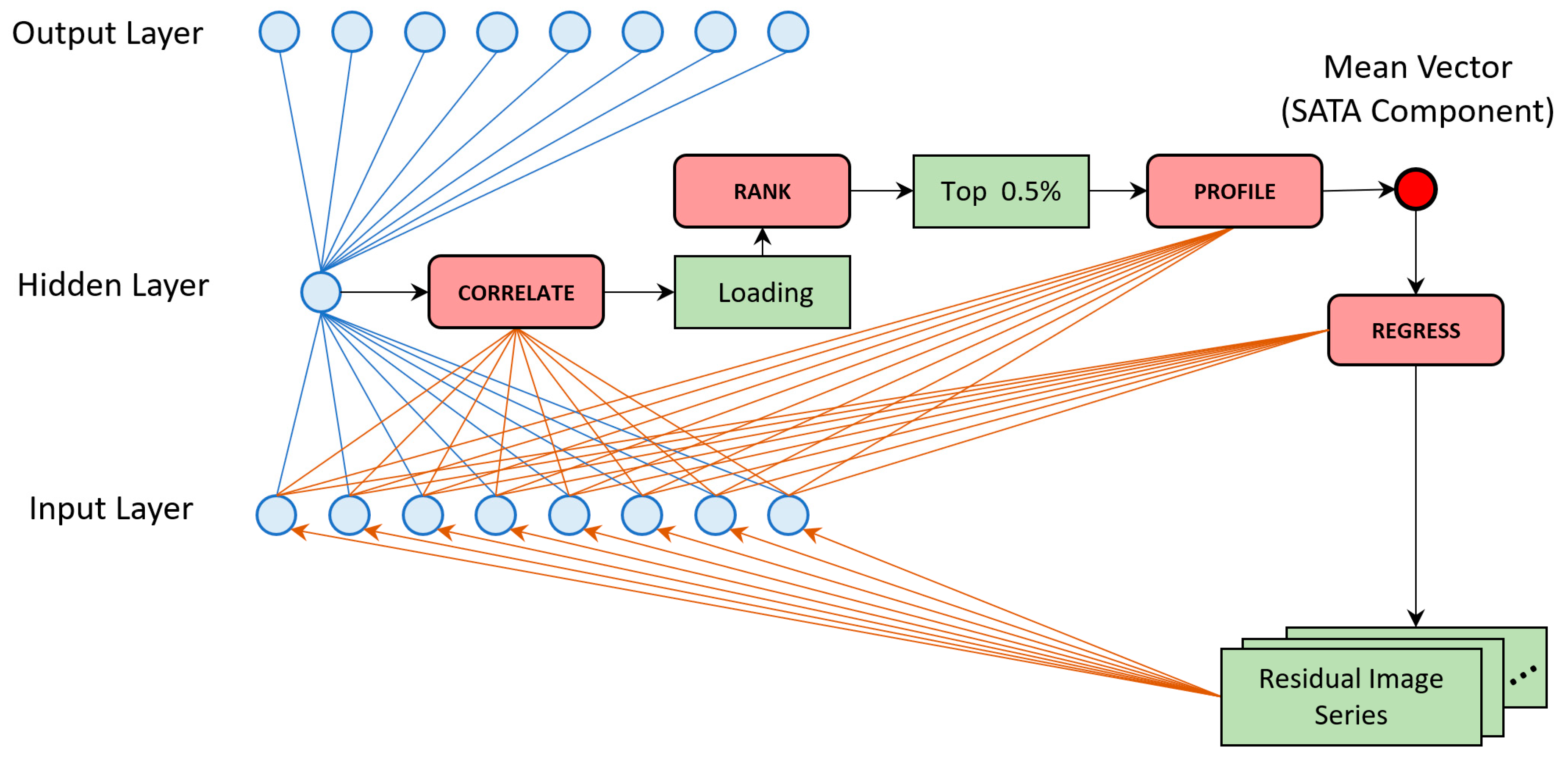

Latent structure lies at the heart of an autoencoder – the capture of essential information with a limited number of dimensions. Thus, they are similar in intent to PCA and EOT. To explore their potential use in the analysis of teleconnections, [

18] explored varying architectures of a basic linear autoencoder to examine the nature of the latent structure encoded from an analysis of global monthly sea surface temperature anomalies from 1982 to 2017. What she found was that as the number of hidden nodes increased, the latent structure became distributed across the nodes in a manner that no longer made sense geographically or temporally (despite being able to produce an output that was a good reproduction of the input series). However, if she created an autoencoder with a single node, similar to the logic of [

19], the result was identical to the first S-mode PCA of the series. She then showed that by applying the autoencoder sequentially, fitting the single node to produce the component, then removing that component by creating a residual series (just as is done with EOT), she could produce a full sequence of PCAs identical to a PCA solved by eigendecomposition. In this paper, we therefore extend this work to consider an autoencoder as the basis for a similar analysis to EOT. EOT is a brute force technique that can take many days to solve a typical application. A faster alternative would be a welcome contribution.

3. Results

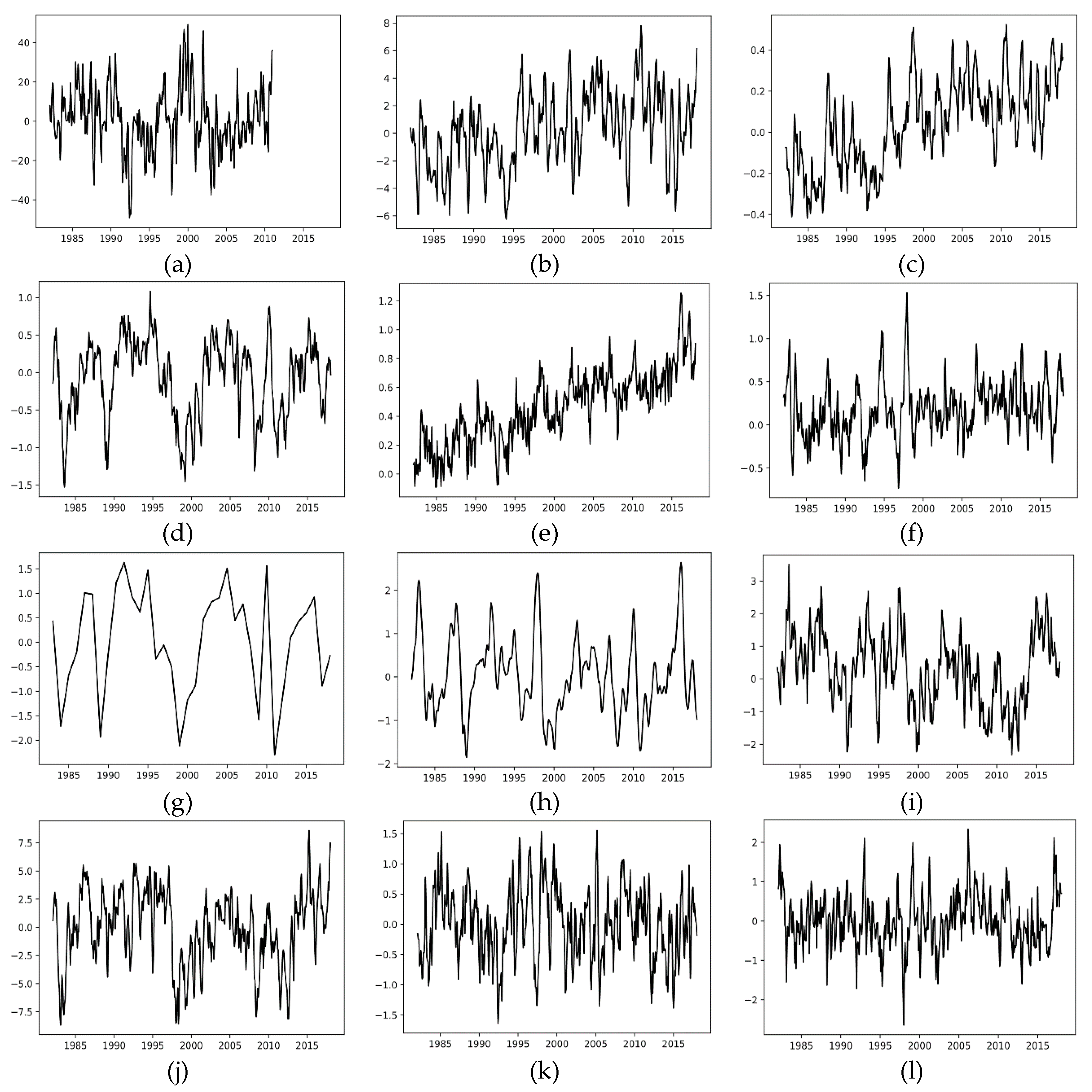

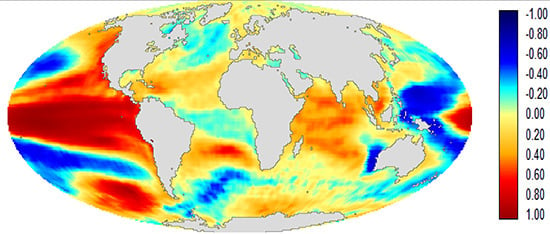

Figure 4,

Figure 5,

Figure 6,

Figure 7,

Figure 8,

Figure 9,

Figure 10,

Figure 11,

Figure 12 and

Figure 13 show the first 10 SATA components (SCs) produced along with their loading images (maps of the degree of correlation between the time series at each pixel and the SC vector). Comparable figures for the EOT analysis can be found in

Supporting Materials Figures S1-S10. For the SATA and EOT components, the X axis is time and the Y axis is the component score measured in the same units as the data analyzed (degrees Celsius in this case).

Table 2 shows the association between the SCs (numbered in column

a) and EOTs (numbered in column

c) and the climate indices listed in

Table 1 along with the lag of maximum correlation (in column

b for the SATA components and column

d for the EOT components). Finally, column

e indicates the cross-correlation between corresponding SATA and EOT components. All correlations with a prewhitened significance of p < 0.05 or stronger are tabulated, with those more significant than p < 0.01 and p < 0.001 being noted with asterisks.

For the first three SCs and EOTs, the two methods produce very similar results. After this, they diverge, although SC8 and EOT4, SC9 and EOT7, and SC10 and EOT10 are clearly corresponding patterns.

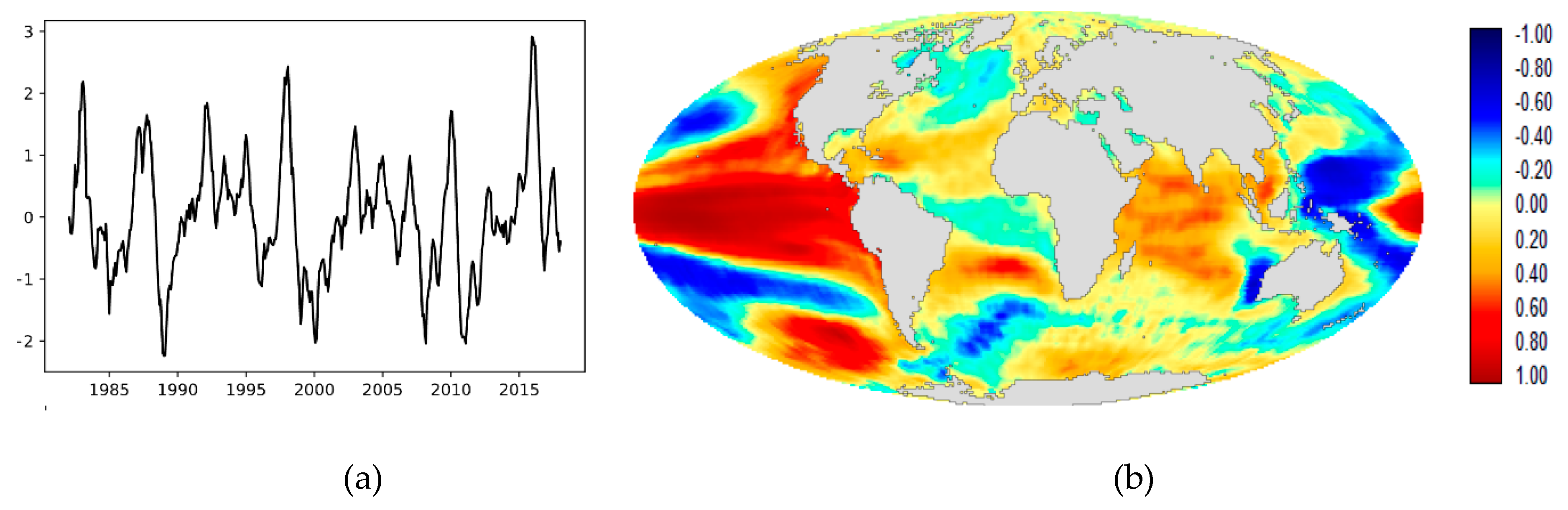

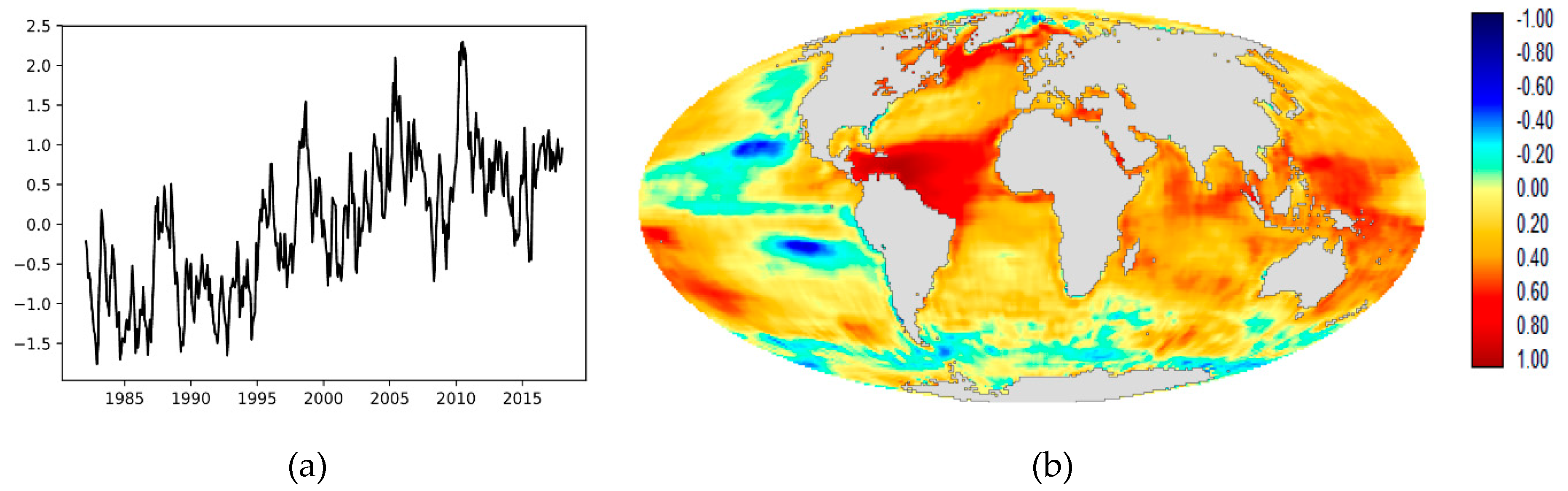

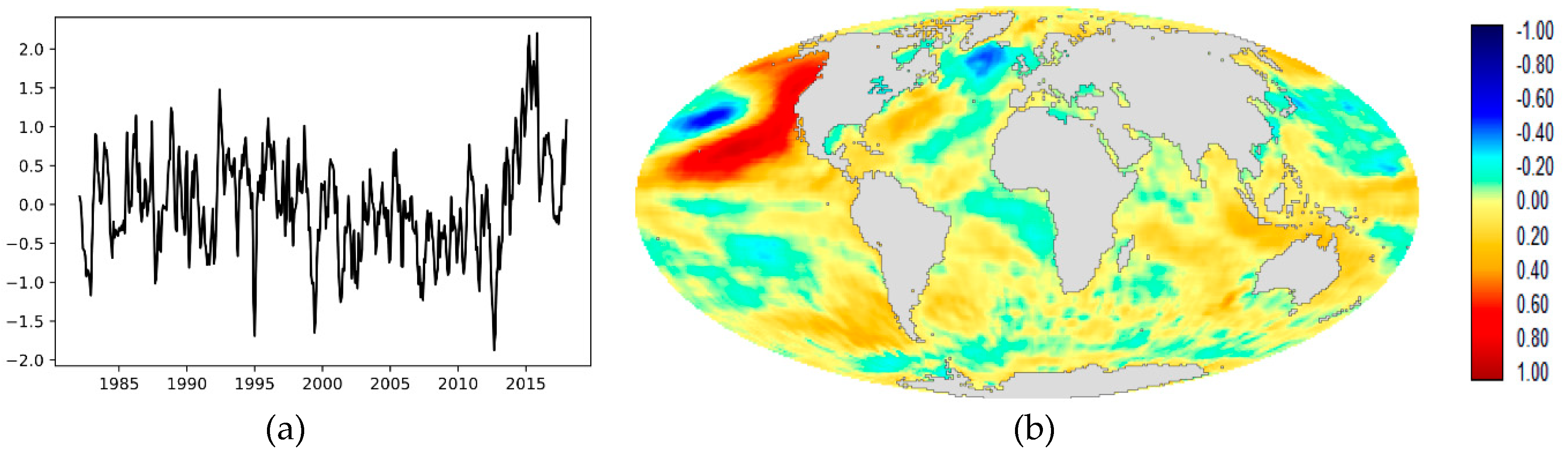

SC1 (

Figure 4) clearly describes the El Niño/Southern Oscillation (ENSO) phenomenon [

34]. The correlation with the Oceanic Niño Index (ONI), as seen in

Table 2, is very high—0.96 at lag +1. The lag relationship indicates that it found a pattern that peaks, in the case of El Niño, 1 month after that indicated by the ONI index. SC1 is also correlated with the IOD at 0.35 at lag +1, indicating that the IOD index precedes the component by 1 month. This is interesting given the evidence provided by [

35] that the Indian Ocean Dipole has a role to play in the development of Super El Niños. Note that SC1 is very similar to EOT1 (

Figure S1), r=0.97, although the correlations with the ONI and IOD indices are higher with SC1.

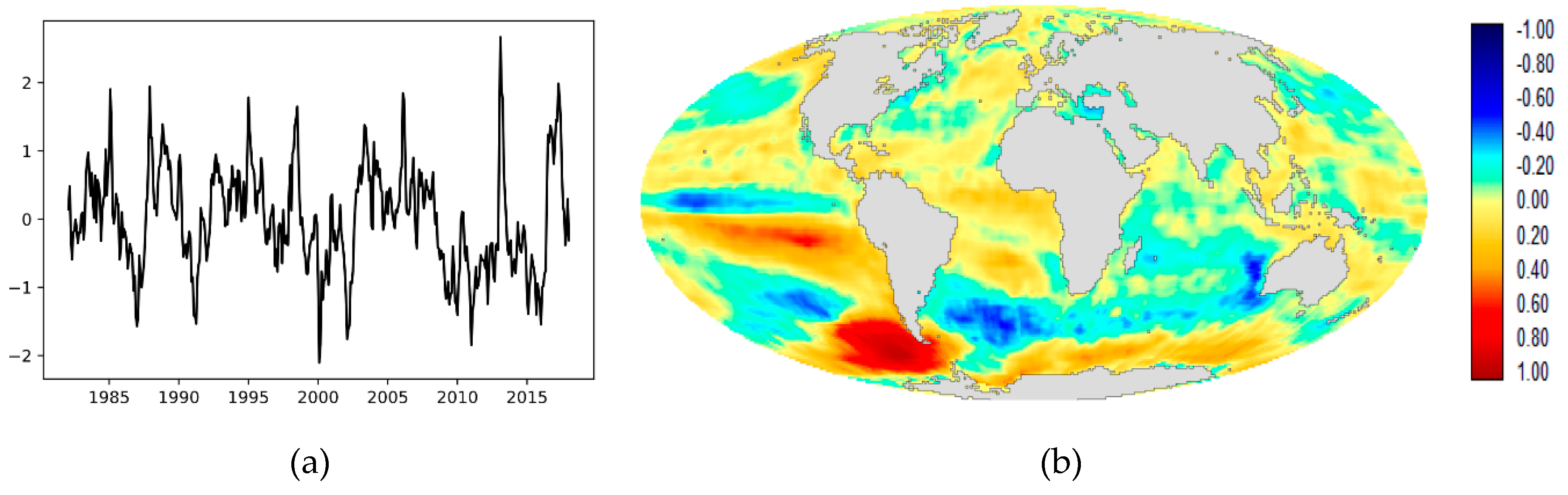

SC2 (

Figure 5) correlates highly (0.84) with the Atlantic Multidecadal Oscillation [

32], [

33] and with Global Mean Sea Surface Temperature Anomaly (0.72). The latter association (tested with trend-preserving prewhitening) is logical given the time frame analyzed (1982-2017) which spans the half cycle of the AMO from a trough in the mid-1970s to a peak in the 2000s [

34]. Looking at the loading image, it is clear that the areas of positive association with the component cover large parts of the globe, and not just the Atlantic. Thus, an element of global ocean warming seems plausible. SC2 is also correlated with the Atlantic Meridional Mode (AMM) at 0.67. Note that these correlations with the AMO and the AMM are significant at the p < 0.001 level, even though the first difference prewhitening removed the secular trend. EOT2 (

Figure S2), which is cross-correlated with SC2 at 0.80, is visually quite similar. However, there was no significant correlation between EOT2 and the AMO and AMM. The only significant correlation was with Global Mean Sea Surface Temperature Anomaly (using trend-preserving prewhitening).

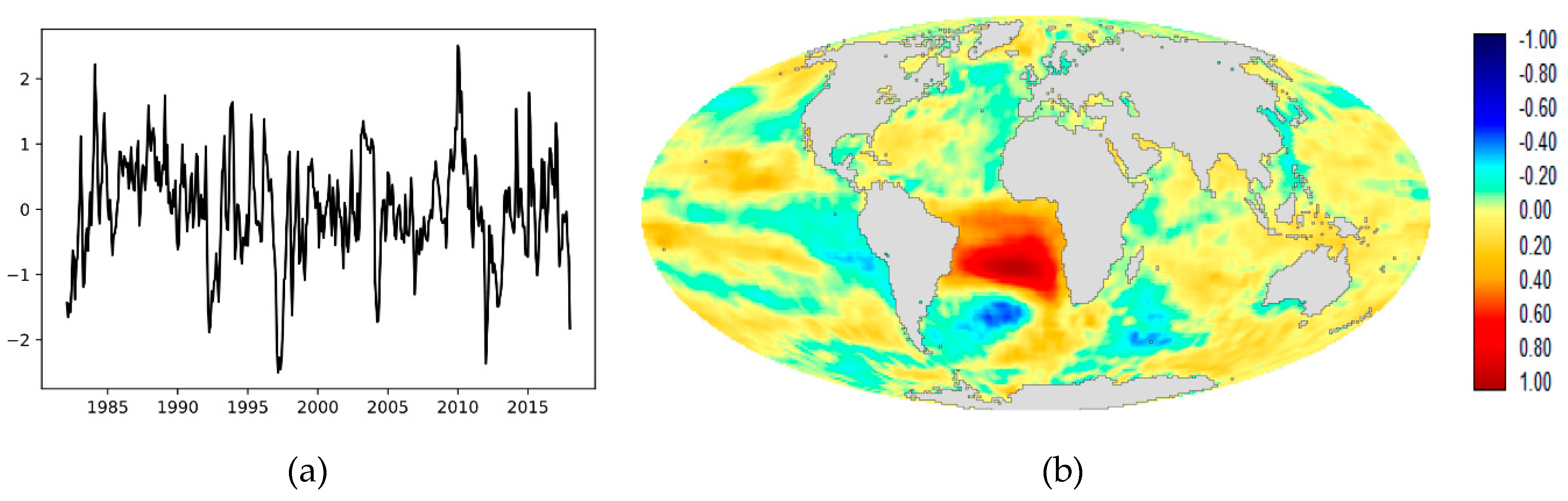

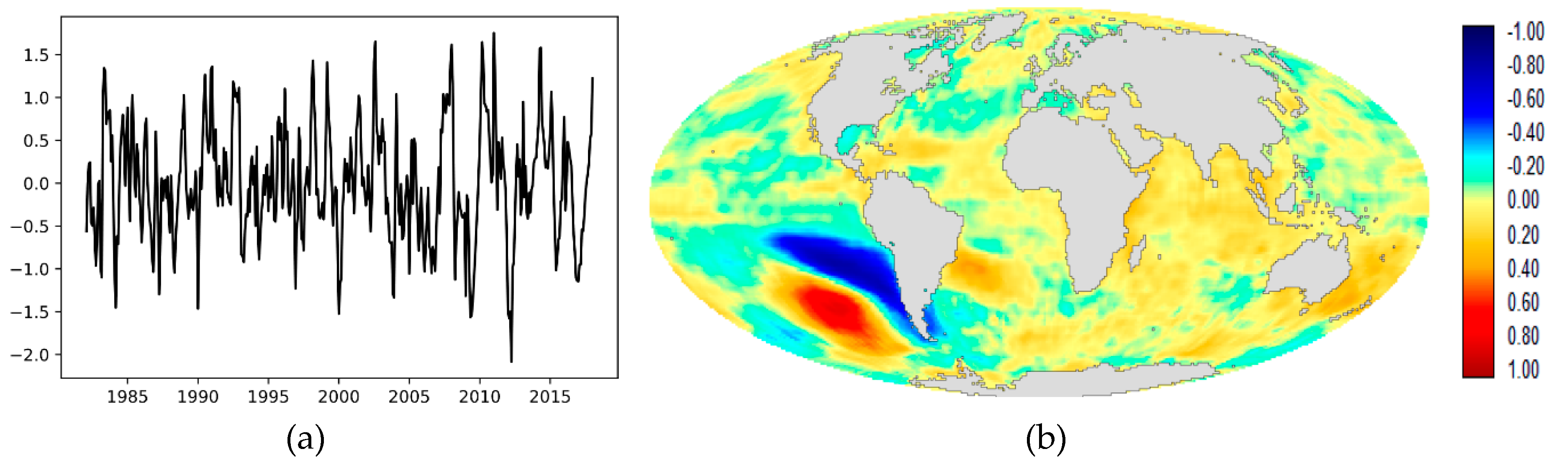

SC3 (

Figure 6) and EOT3 (

Figure S3) are cross-correlated at 0.69 and appear to be similar and related to the ENSO Modoki phenomenon [

35]. ENSO Modoki is a central Pacific Ocean warming that is distinct from canonical ENSO events which have stronger patterns in the eastern Pacific. The correlation with the EMI for SC3 is 0.50 at lag -4, and for EOT it is 0.46. The MII index [

36] shows an even stronger relationship, with a correlation of 0.72 with SC3, but no significant relationship with EOT3. [

37] postulated that there are two types of ENSO Modoki event, and developed the MII index [

36] to help distinguish between them. The EMI considers only SST anomalies, while the MII index is derived from a multivariate EOF analysis that considers the normalized EMI, the Niño4 index and 850 hPa relative vorticity anomalies averaged over the Philippine Sea in the autumn months (September, October, and November—SON). Type I events originate in the central Pacific, while Type II events originate in the subtropical northeastern Pacific. The correlations would suggest that SC3 captures a Modoki Type II pattern.

SC4 (

Figure 7) is also related to ENSO Modoki. SC4 is clearly a La Niña Modoki event, with correlations of −0.31 and −0.40 with the EMI and MII indices. Most striking, however, is its association with the Philippine Sea which is known to exhibit positive anomalies during a La Niña Modoki event [

38]. To investigate the possibility that SC4 is representative of a Modoki Type I, the EMI index was averaged over the autumn months (SON) to develop an annual index comparable to the MII index. MII was then subtracted from this autumn EMI to get an index to Modoki Type I. The correlation of SC4 with this index was 0.35 (p < 0.001 when prewhitened), lending some support for the possibility that SC4 represents Modoki Type I.

SC5 (

Figure 8) is a very distinctive pattern involving the southeastern Pacific and southwest Atlantic oceans that is related to the Antarctic Dipole [

39], [

40]. The pattern correlates with the Antarctic Dipole Index (ADI) at −0.43. [

40] indicated a positive lag relationship between ENSO and the Antarctic Dipole. This is confirmed here using the ONI, with a significant correlation of 0.26 (p < 0.001) at lag +5. Note that this pattern was not found in the EOT analysis.

SC6 (

Figure 9) presents a distinctive dipole in the south Atlantic Ocean. Its correlation with the South Atlantic Ocean Dipole (SAOD) [

41] is 0.42. However, the positive pole appears slightly south of the northeast pole defined by [

41] and the negative pole is centered to the southern end of their southwest pole. Again, this pattern was not found in the EOT analysis.

SC7 (

Figure 10) is also in the southern oceans and again has no counterpart in the EOT analysis. It correlates with the Subtropical Indian Ocean Dipole (SIOD) [

42] at −0.46. The pattern mapped corresponds with the negative phase of the SIOD. The index is specified as the difference between SST anomalies in the Indian Ocean southeast of Madagascar (seen in light blue) and the area in the eastern subtropical Indian Ocean just off the west coast of Australia. However, the dominant focus of this SC is warming off Australia, and the primary focus is slightly more easterly than the area measured for the dipole index. [

43] also noted a discrepancy between definitions of the SIOD poles and an evident dipole in cases of extremely wet and extremely dry years in southwest Western Australia. The pattern here seems to match best their discussion of extremely dry years. The relationship in the Pacific is unknown to the authors, although it can be noted that the correlations with the ONI (Lag -10) and EMI indices are −0.27 and −0.18, respectively.

SC8 (

Figure 11) and EOT4 (

Figure S4) are similar. The former is correlated with the EMI at 0.40 and the latter at −0.41, while the correlation between them is −0.37. The identity of the pattern is unclear. It would appear to involve variability of the Pacific Cold Tongue (a region of cold water extending westward from South America along the equator [

44], [

45]) that is unrelated to ENSO, and which is related to ENSO Modoki. It is possible that it is an evolutionary relationship. SC8 is related to SC3 at lag +6 with a correlation of 0.26 (p < 0.01 prewhitened). This suggests that SC8 may be an earlier stage in the development towards SC3. However, this requires further research.

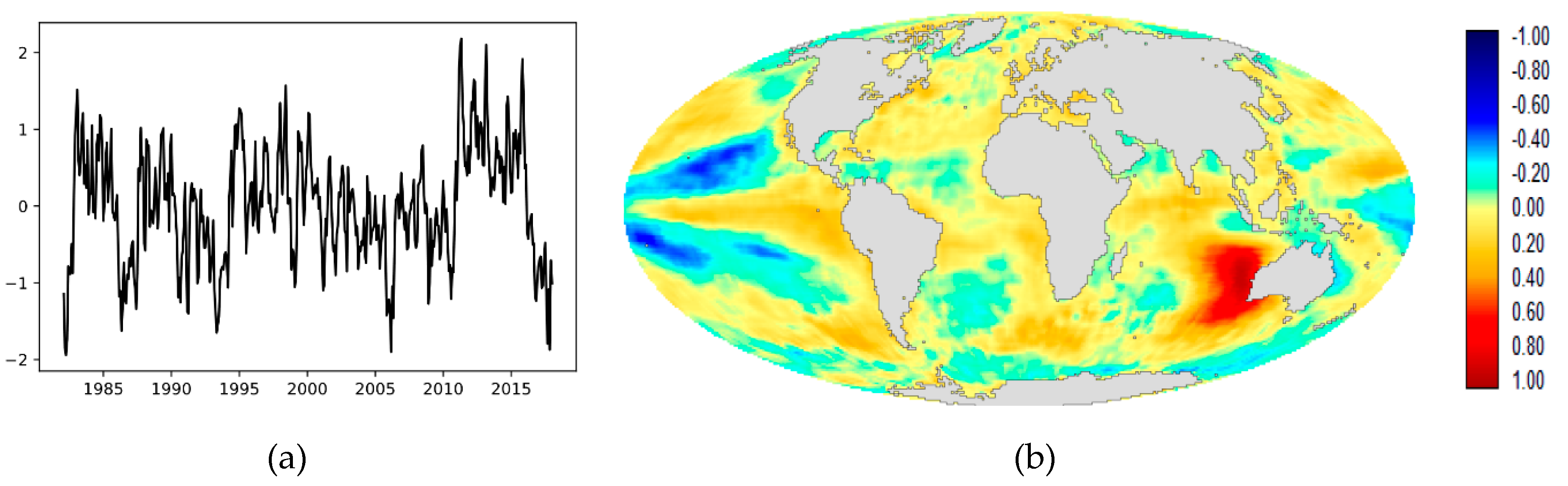

SC9 (

Figure 12) is distinctive and is clearly the same pattern as that found in EOT7 (

Figure S7), with a cross-correlation of 0.57. SC9 and EOT7 correlate with the PDO at 0.46 and 0.41, respectively. It is also interesting to note that SC9 and EOT7 correlate with the Pacific Meridional Mode (PMM) at 0.53 and 0.50, respectively.

SC10 (

Figure 13) is a very distinctive pattern in the southeast Pacific that is unknown to the authors at this time. However, it is apparently the same pattern as is found by EOT10 (

Figure S10). SC10 and EOT10 are correlated at 0.62. However, SATA sees this as a clear dipole, which is not so evident with EOT.

Note that while the SATA found four components that had no counterpart in the EOT analysis, it is also the case that there were four EOT components that had no counterpart in the SATA output. These were EOTs 5 (

Figure S5), 6 (

Figure S6), 8 (

Figure S8) and 9 (

Figure S9). EOT 5 is associated with tropical Pacific dynamics and is correlated both with ENSO Modoki and with ENSO. However, it is not significantly correlated with any of the SATA components. EOTs 6 and 9 did not correlate with any of the climate indices and are not patterns recognized by the authors. EOT 8, however, did correlate with the Atlantic Meridional Mode (AMM) at 0.63, as will be discussed further below.

In summary, the SATA analysis found nine components that it could significantly correlate with known climate indices.

Figures S11–S19 show temporal plots between these SATA components and their most closely associated climate index.

4. Discussion

Looking at the SATA components and their relationship with established climate indices, it is evident that the technique is highly successful in finding teleconnection patterns, and it can do so very deep into the data. For example, SATA Component 9 nicely captures the Pacific Meridional Mode, eight residuals deep into the data. Like EOT, the components are independent in time (because the analysis is based on successive residuals) but not necessarily in space. Thus, they are similar in character to oblique rotations of PCA.

Comparing SATA with EOT, there are frequent correspondences between the two techniques. However, there are also differences. The SATA correlations with known teleconnection indices are generally, and noticeably, higher than those for EOT. We tested the effect of using only a single pixel as opposed to the mean vector of the top 0.5%, for the first three components, and found that the correlations matched exactly those of EOT with that change. Thus, the additional explanatory power would appear to come from the regularizing effect of capturing the mean vector of the top 0.5%. We see this as an important feature, as using a single pixel tends to overfit.

Another distinct advantage of the SATA approach is that it is better able to capture teleconnections with multiple centers of action. This is clearly evident in SC10. Both EOT and SATA found this pattern. However, SATA characterized the pattern clearly as a dipole, which was not as strongly evident with EOT. To confirm its character, the SATA component was correlated with monthly anomalies in 500 hPa geopotential heights from the NCEP/NCAR Reanalysis I data [

46]. The result is indicated in

Supporting Materials Figure S20, where the index provided by SC10 shows a clear and matching dipole structure in the geopotential height data. Thus, we conclude that it really is a dipole. This is clearly an advantage of the SATA approach. EOT characterizes a pattern from a single location. Thus, in a case with multiple centers of action, it will favor one of the poles. Examining the 180 pixels chosen as the top 0.5% of vectors for SC10, 47% came from the negative pole and 53% came from the positive pole. Thus, the SATA vector developed was fairly evenly representative of the two poles.

Comparing the ability of the two techniques to discover known teleconnections, SATA found four teleconnections not detected by EOT within the first 10—the Atlantic Multidecadal Oscillation (AMO), the Antarctic Dipole (ADI), the South Atlantic Ocean Dipole (SAOD) and the Subtropical Indian Ocean Dipole (SIOD). The omission of the AMO in the EOT results is interesting. EOT2 correlates with the AMO at 0.71 and with the Atlantic Meridional Mode at 0.50 at Lag -1. However, after prewhitening with first differencing, which removed the substantial secular trend, neither is significant (p>0.1). Thus, the apparent correlation without prewhitening appears to only be related to the secular trend. However, SATA Component 2 is significantly correlated to both after the trend is removed by prewhitening. As was noted above, the EOT component is based on a single location, while the SATA component is based on an average of the top 0.5% of pixels. It would appear that the larger sample and broader geographic distribution provided a better characterization of the AMO and AMM that stood up to significance testing in the first difference prewhitened series.

While SATA found four teleconnections not found by EOT, EOT found one that was not found by SATA among the first 10—the Atlantic Meridional Mode (AMM), as a separate teleconnection distinct from the AMO. It is interesting to note that there are perspectives that the AMM and the AMO are both part of a single phenomenon, as well as those that see them as distinct [

47]. In this analysis, EOT sees a separate component related to the AMM (EOT8), while SATA, at least within the first 10 SCs, only finds the one associated with both the AMO and AMM in SC2. This is interesting because EOT8 is again based on a single pixel vector, which it found in the tropical Atlantic. Of the 180 pixel vectors (top 0.5%) selected to characterize SC2, most also came from the tropical Atlantic, but 14% of them came from the North Atlantic Sub-Polar Gyre, supporting the concept of a very broad geographic pattern.

Another difference between SATA and EOT is the issue of efficiency. EOT is a brute force technique that is computationally intensive and quite time consuming. On the contrary, SATA is surprisingly fast in comparison. In a test on identical hardware (see

Appendix B for details), our implementation of SATA in Python using the TensorFlow and TerrSet libraries ran two orders of magnitude faster than EOT in R [

48] and three orders of magnitude faster than the implementation in the Earth Trends Modeler [

8], which is currently a single-threaded application. Part of this derives from the remarkable speed with which the backpropagation by gradient descent in TensorFlow converges on a solution, and part derives from the efficiency of calculating the many dot products involved using a GPU.

This research was developed through an exploration of the potential of autoencoders for image times series analysis. Note, however, that in the implementation described here, where the activation function is linear, the autoencoder could be replaced with an S-mode [

14] PCA to extract the first component as a substitute for the autoencoder hidden node in each iteration. Since a linear autoencoder with one hidden layer spans the same subspace as PCA [

11,

12], a PCA with one component is, in fact, a basic linear autoencoder, as demonstrated by [

18]. Only the mathematical procedure for the solution is different. However, as indicated by [

12], a neural network-based autoencoder is better able to deal with high dimensional data. S-mode PCA of image time series, where each pixel is a separate variable over time, is extremely high dimensional. A neural network autoencoder derives weights by iterative adjustment, requires no matrix inversion, and has been demonstrated here to be very efficient. Indeed, Deep Learning frameworks, such as the TensorFlow library used in this implementation are routinely applied to problems with many billions of weights to be solved [

22].

This ability to handle high dimensional data would also allow a simple implementation of an Extended SATA (ESATA), similar in spirit to an Extended PCA [

5]. With an Extended SATA, multiple image series would be analyzed simultaneously for the presence of common patterns by simple concatenation over space of the series data. Again, the ability to find patterns with multiple centers of actions would be a distinct advantage. There is an implementation of Extended EOT (EEOT) in the Earth Trends Modeler [

8]. However, it is computationally intensive, and as with regular EOT, EEOT derives its pattern from a single pixel in just one of the series. With suitable adjustment of the threshold, ESATA could easily accommodate multiple centers of action across the series. Note also that the various series analyzed can be of different resolutions and even different locations in space, as long as all series analyzed share the same temporal resolution and duration.

Finally, it is evident that the SATA patterns, like EOT, are very regional in character. Both techniques search for patterns globally, but then characterize them locally (although for SATA, the characterization does not need to be derived from a contiguous group of pixels, but may involve multiple centers of action). This regional character raises the question about how to measure the relative importance of the components. With PCA/EOF, this is indicated by the percent variance explained. With regional patterns such as these, however, this does not seem appropriate. For example, with SATA Component 9, most of the Earth’s ocean surface shows no appreciable relationship with the pattern over time. However, in the North Pacific, the relationship is strong. A global measure of percent variance explained would be so diluted that it would make the component seem inconsequential. Perhaps a better indicator would be to measure the surface area over which the explanatory value (the square of the loading) exceeds a threshold, such as 50% variance explained. The area can then be compared to other components.

Planned future research includes the Extended SATA mentioned above as well as an investigation of SATA in T-mode. The current implementation is S-mode where the variables differ in their spatial location (i.e., each pixel represents a different series over time). In T-mode, the variables differ in time. Thus, each time step represents a different image. As demonstrated by [

14], S-mode and T-mode can potentially yield very different insights about the data. Also to be explored is the potential of using non-linear activations in the autoencoder architecture.