Abstract

Many people use smartphone cameras to record their living environments and share aspects of their daily lives on social networks such as Facebook, Instagram, and Twitter. These platforms provide volunteered geographic information (VGI), which enables the public to know where and when events occur. Image-based VGI can also indicate environmental changes and disaster conditions, such as flooding ranges and relative water levels. However, little image-based VGI has been applied to quantify flood water levels because it is difficult to identify water lines in image-based VGI and link them to detailed terrain models. In this study, flood detection was achieved through image-based VGI obtained by smartphone cameras. Digital image processing and a photogrammetric method were used to determine the water levels. In digital image processing, random forest classification was applied to simplify ambient complexity and highlight flooding regions, and the HT-Canny method was used to detect the flooding line in the classified image-based VGI. Through the photogrammetric method and a fine-resolution digital elevation model produced by unmanned aerial vehicle mapping, the detected flooding lines were employed to determine water levels. The proposed approach was demonstrated by identifying water levels during an urban flood event in Taipei City. Classified images were produced using random forest supervised classification for three classes with an average overall accuracy of 80.05%. The quantified water levels, with a resolution of centimeters (<3-cm difference on average), can validate flood modeling so as to extend point-basis observations to area-basis estimations. Therefore, the limited performance of image-based VGI quantification has been improved to help in flood disasters. Consequently, the proposed approach using VGI images provides a reliable and effective flood-monitoring technique for disaster management authorities.

1. Introduction

Much evidence shows that rainfall has intensified globally in recent years [1,2]. Within only a few hours, considerable rainfall can occur in an urban area, leading to large amounts of water in the drainage system. When the accumulated water exceeds the design capacity of a drainage system, flooding occurs on roads. The characteristics of a flash flood in urban areas can be estimated using simulation models, such as SOBEK [3], SWMM [4], and Flash Flood Guidance [5,6]. These models assume a small area, uniform rainfall, and an operating drainage system. Moreover, these simple simulations rely on rainfall, the design capacity of the drainage system, and numerical elevation model data. Thus, flood simulations are limited by data indeterminacy and physical complexity and are subject to the appropriateness of the model and computational efficiency [7]. Several studies have attempted to use open-source and multiperiod data, such as optical and synthetic aperture radar (SAR) remotely sensed images, to overcome model limitations and assess the extent of flooding [8,9,10,11,12,13]. In large river basins, remote sensing data provide geographical identification of flooding areas and are combined with local hydrological monitoring data to effectively predict or reconstruct flooding impacts. However, these satellite data mostly have ground resolutions on the order of meters. When in situ water level monitoring data are lacking, the accuracy of flooding range assessments is limited by the spatial data resolution. Moreover, satellite and airborne optical and radar images are not suitable for detecting the water level on a city road, especially under severe weather.

A condensed urban perspective of critical geospatial technologies and techniques includes four components: (i) remote sensing; (ii) geographic information systems; (iii) object-based image analysis; and (iv) sensor webs, all of which were recommended to be integrated within the language of Open Geospatial Consortium (OGC) standards [14]. Schnebele and Cervone combined volunteered geographic information (VGI) with high-resolution remote sensing data, and the resultant modified contour regions dramatically assisted in the presentation of detailed flood hazard maps [15]. In addition to employing smartphone sensors in professional fields, such as construction inspection [16], many people use smartphone cameras to record their living environments and share aspects of their daily lives on social networks, such as Facebook, Instagram, and Twitter, so that crowdsourced data regarding various phenomena can be inexpensively acquired. Kaplan and Haenlein classified social media applications into specific categories by characteristic, including collaborative projects, blogs, content communities, social networking sites, virtual game worlds, and virtual social worlds [17]. Social networks, which provide platforms for sharing VGI, enable citizens to easily obtain information such as texts, images, times, and locations. Recently, the framework and applications of a civic social network, FirstLife, were developed following a participatory design approach and an agile methodology that combine VGI with social networking functionalities [18]. Such platforms allow users to create a crowd-based entity description and offer an opportunity to disseminate information and engage people at an affordable cost. Therefore, VGI has grown in popularity with the development of citizen sensors in natural hazards [19].
Granell and Ostermann employed systematic mapping to investigate research using VGI and geo-social media in the disaster management context, and found that the majority of the studies searched for potential solutions to data handling [20]. Many applications for disaster detection and flood positioning using crowdsourcing have been built to identify disaster-relevant documents based merely on keyword filtering or classical language processing of user-generated texts. Currently, most VGI applications handle text information with positioning and time, so-called text-based VGI. With a dramatic increase in multi-source images, image-based VGI provides great opportunities for relatively low-cost, fine-scale, and quantitative complementary data. A collective sensing approach was proposed to incorporate imperfect VGI and very-high-resolution optical remotely sensed data for the mapping of individual trees by using an individual tree crown detection technique [21]. In addition to accurate image localization [22], massive amounts of street view photographs from Google Street View were used for estimating the sky view factor, which was proven to assist with urban climate and urban planning research [23].

In one study, user-generated text and photographs concerning rainfall and flooding were retrieved from social media by using feature matching, and deep learning was used to detect flooding events through spatiotemporal clustering [24]. Based on credible and accurate data, image-based VGI was evaluated for validation of land cover maps [25,26,27] and flood disaster management plans [28,29,30]. In the aforementioned studies, image-based VGI was mostly used for land cover and change identification. In addition, the scales of detected objects, such as flooding areas and heights, are expected to be quantified through spatial computation of image-based VGI and three-dimensional spatial information; however, VGI studies still face challenges, which include exploring the use of image-based VGI as data interpreters; improving methods to estimate water level from images; and harmonizing the time frequency and spatial distribution of models with those of crowdsourced data [31,32]. Rosser et al. mentioned that, when image-based VGI, hydrological monitoring data, and flooding simulations are integrated to estimate flooding extents, the accuracy of calculation is affected by the resolution of the terrain model [33]. Although high-resolution urban models from LiDAR and airborne photogrammetry can provide 3D information over a large urban area, such data are not always available due to budget and time limitations. As a novel remote sensing detection technology, unmanned aerial vehicles (UAVs) equipped with cameras can help build high-resolution spatial information and monitor disasters [34]. UAV-based spatial data provide a high ground resolution that helps match VGI to the corresponding ground information, and VGI supplies a reference for flooding simulation to confirm the timing and verify the assumptions of flood modeling.

Quantitative observations of rainfall and flooding events extracted from social media by applying machine learning approaches to user-generated photos can play a significant role in further analyses. Thus, the aim of the present study is to develop an image-based VGI water level detection approach that accounts for unknown smartphone camera parameters and imaging positions. Image-based VGI records flooding scenes but also contains a lot of flooding-irrelevant information, such as trees, buildings, cars, and pedestrians. Image classification was first employed to reduce scene complexity and identify flooding regions, in which water lines were extracted by edge detection and straight line detection. Then, in order to reveal the imaging positions of the image-based VGI and the heights of the detected water lines, a UAV-based orthophoto with a centimeter ground resolution was used to identify the same scene features as in the image-based VGI. A UAV-derived digital elevation model was applied to obtain camera parameters and determine water levels based on the photogrammetric principle. The proposed method of water level calculation was then performed in an urban flood case study and compared with the water levels simulated through flood modeling. The assumptions of the flood modeling were evaluated through the difference between the VGI-derived and simulated water level values. The quantified water levels, with a resolution of centimeters, can validate flood modeling so as to extend point-basis observation to area-basis estimation, which verifies the applicability and reliability of the image-based VGI method. Finally, analyses and case studies are conducted during an urban flooding event in Gongguan in Taipei City, Taiwan for demonstration and discussion.

2. Methods

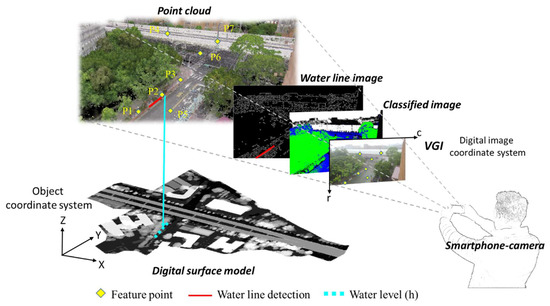

The proposed water level detection using VGI involves two processes: identifying water lines in an image-based VGI and measuring the water level based on photogrammetric principles (i.e., collinearity equations). A schematic is presented in Figure 1.

Figure 1. The geometry of VGI water level calculation.

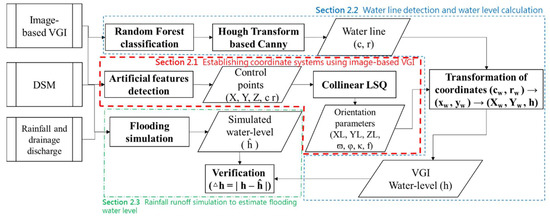

The proposed method aims to cope with three problems in VGI water level detection: (1) unknown smartphone camera orientation parameters and VGI shooting positions, (2) VGI water line detection, and (3) VGI water level measurement. Figure 2 presents the analysis flowchart for the proposed method. To solve the collinearity equations, the coordinate system of the object (world) must be defined according to the description in Section 2.1, which also introduces the parameters establishing the relationship between the object space and image space. Subsequently, Section 2.2 introduces a compound method for detecting water lines and measuring water levels. Section 2.3 describes a rainfall runoff simulation to estimate flooding water levels. Finally, the simulated water levels were compared with the corresponding VGI-derived water levels at a designated time.

Figure 2. Flowchart for the proposed VGI water level detection method.

2.1. Establishing Coordinate Systems Using Image-Based VGI

Several pretreatment processes need to be conducted to establish the relationship between the object coordinate system and image coordinate system. These processes are described as follows: (1) identifying feature points in the image-based VGI such as road markings, zebra crossings, street lights, traffic signals, and buildings; (2) measuring the coordinates of calibration points by using a digital surface model (DSM); and (3) determining the interior and exterior orientation of the camera. Based on image-based VGI, categories such as flooding, vegetation, and buildings are classified by RF classifiers to generate a classified image. Using HT-Canny, the classified image is then transformed into an edge image to detect water line positions in an image system.

Object-scale computing using the photogrammetric method can be facilitated by introducing control points to link an image to world coordinates [35]. Previous studies employed image information, including known camera parameters and water gauges, to develop water level monitoring systems [36,37,38]. The interior orientation includes the focal length, the location of the principal point, and the description of lens distortion. These parameters are determined based on camera calibration or recommended reference values. The exterior orientation describes the position and orientation of the camera in the object space and contains six independent parameters: three for position (XL, YL, ZL) and three rotation angles for orientation. These parameters can be obtained by solving the following collinearity equations [35]:

where (x, y) represent the image coordinates of the calibration point, (X, Y, Z) represent the object coordinates of the calibration point, m11–m33 are the elements in a 3 × 3 rotation matrix M, (x0, y0) are the offsets from the fiducial-based origin to the perspective center origin, and f is the focal length.
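For reference, the textbook form of the collinearity equations that matches the symbols defined above can be written as follows. The camera position symbols $(X_L, Y_L, Z_L)$ are taken from the case study; the sign convention is an assumption based on the standard photogrammetric formulation and is not a transcription of the authors' Equation (1).

$$
\begin{aligned}
x &= x_0 - f\,\frac{m_{11}(X - X_L) + m_{12}(Y - Y_L) + m_{13}(Z - Z_L)}{m_{31}(X - X_L) + m_{32}(Y - Y_L) + m_{33}(Z - Z_L)},\\
y &= y_0 - f\,\frac{m_{21}(X - X_L) + m_{22}(Y - Y_L) + m_{23}(Z - Z_L)}{m_{31}(X - X_L) + m_{32}(Y - Y_L) + m_{33}(Z - Z_L)}.
\end{aligned}
$$

Each calibration point therefore contributes two observation equations, one for $x$ and one for $y$.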

In Equation (1), (x, y) represent the calibration point in the photo coordinates transformed from the digital image coordinates (c, r) and can be expressed as follows:

where W and D are the pixel dimensions, and dW and dD are the nominal sizes of the pixel.

The rotation matrix M represents the camera orientation in the object space and can be expressed as follows:

In Equation (1), x0, y0, and f are interior parameters, and their initial values should be determined in advance based on a smartphone camera reference. Each calibration point yields two equations, so once seven or more calibration points have been identified and measured, at least 14 equations can be written based on Equation (1). The camera’s position (XL, YL, ZL), its three orientation angles, and the focal length f can then be determined through a least squares (LSQ) technique.
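As a minimal sketch of how the observation equations enter the adjustment, the following Python code builds the two residuals per calibration point that a least-squares solver would minimize. The omega-phi-kappa rotation convention, the function names, and the sign convention of the projection are assumptions based on the textbook formulation; the source does not state them explicitly.

```python
import math


def rotation_matrix(omega, phi, kappa):
    """Sequential omega-phi-kappa rotation matrix M (angles in radians).

    Assumed convention; the paper only states that M is a 3 x 3 rotation matrix.
    """
    so, co = math.sin(omega), math.cos(omega)
    sp, cp = math.sin(phi), math.cos(phi)
    sk, ck = math.sin(kappa), math.cos(kappa)
    return [
        [cp * ck, so * sp * ck + co * sk, -co * sp * ck + so * sk],
        [-cp * sk, -so * sp * sk + co * ck, co * sp * sk + so * ck],
        [sp, -so * cp, co * cp],
    ]


def project(point, camera, m, f, x0=0.0, y0=0.0):
    """Project an object point (X, Y, Z) to photo coordinates (x, y)."""
    dx, dy, dz = (p - c for p, c in zip(point, camera))
    r = m[0][0] * dx + m[0][1] * dy + m[0][2] * dz
    s = m[1][0] * dx + m[1][1] * dy + m[1][2] * dz
    q = m[2][0] * dx + m[2][1] * dy + m[2][2] * dz
    return x0 - f * r / q, y0 - f * s / q


def residuals(params, control_points, x0=0.0, y0=0.0):
    """Two residuals per control point for the seven unknowns.

    params = (XL, YL, ZL, omega, phi, kappa, f)
    control_points = [((X, Y, Z), (x_observed, y_observed)), ...]
    """
    xl, yl, zl, omega, phi, kappa, f = params
    m = rotation_matrix(omega, phi, kappa)
    out = []
    for obj, (x_obs, y_obs) in control_points:
        x_calc, y_calc = project(obj, (xl, yl, zl), m, f, x0, y0)
        out.extend([x_calc - x_obs, y_calc - y_obs])
    return out
```

With seven calibration points the residual vector has 14 entries for the seven unknowns, which is the redundancy the text refers to. Any nonlinear least-squares routine could be used to minimize the sum of squared residuals starting from the initial parameter values.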

2.2. Water Line Detection and Water Level Calculation

To highlight notable objects in images, image blur processing and deep learning techniques have been applied to reduce noise interference and remove background objects [39,40]. Other studies used supervised classification methods, such as random forest (RF), nearest neighbor, support vector machines, genetic algorithm, wavelet transform, and maximum likelihood classification, to identify land coverage [41,42,43,44]. Of these methods, RF outperforms most others because having fewer tuning parameters prevents overfitting and retains key variables [45,46,47]. According to relevant research on classification algorithms, an RF classifier effectively improves the classification accuracy and provides the best classification results, even when it is used to classify remotely sensed data with strong noise [48,49]. In this study, RF classification is used to simplify complex scenes and highlight water lines in collected image-based VGI. The RF algorithm is developed through bootstrap aggregating (bagging) and the random selection of features to be used for classification and regression [50,51]. In bagging, each decision tree is trained on K examples (pixels) drawn at random with replacement from the training dataset, and each pixel is then labeled by all decision trees. Growing the decision trees requires an attribute selection measure; a commonly used one is the Gini impurity, which measures the impurity of an attribute with respect to the classes involved. When randomly selected pixels belong to class Ci, the Gini impurity according to a given training set T can be expressed as follows:

where f(Ci, T)/|T| is the probability of the selected case belonging to class Ci.
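To make the measure concrete, the sketch below computes the Gini impurity of a set of labeled pixels. It uses the common form 1 − Σ p_i², which equals the sum of products p_i p_j over all pairs of different classes; the function name and the toy labels are illustrative assumptions, not part of the original study.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity of a collection of class labels.

    p_i is the fraction of samples in class i; the impurity is
    1 - sum(p_i ** 2), i.e. 0 for a pure node.
    """
    n = len(labels)
    if n == 0:
        return 0.0
    probabilities = [count / n for count in Counter(labels).values()]
    return 1.0 - sum(p * p for p in probabilities)


# A node holding only water pixels is pure; a mixed node is not.
pure = gini_impurity(["water"] * 4)
mixed = gini_impurity(["water", "water", "vegetation", "building"])
```

A split that lowers the impurity of the child nodes relative to the parent is preferred when a tree is grown.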

In the random selection of features, the number of features and the number of trees are the two user-defined parameters of the RF classifier [52]. After the bagging process and parameter setting are completed, the classification analysis generates classified images and the accuracy of classified pixels. The accuracy of the classification results can be assessed through commonly used indicators, including producer’s accuracy, user’s accuracy, F1 score, and Kappa statistics [53]. These indexes were calculated from a validation dataset obtained from more than one-third of the reference locations [54].
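The indicators listed above can all be derived from a confusion matrix. The following sketch assumes rows hold the reference classes and columns hold the classified classes; the function name and the example matrix are hypothetical and are not the study's data.

```python
def accuracy_metrics(cm):
    """Accuracy indicators from a square confusion matrix.

    cm[i][j] = number of samples of reference class i classified as class j.
    Assumes every class has at least one reference and one classified sample.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_totals = [sum(row) for row in cm]                       # reference totals
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # classified totals
    diagonal = [cm[i][i] for i in range(k)]

    producers = [diagonal[i] / row_totals[i] for i in range(k)]
    users = [diagonal[i] / col_totals[i] for i in range(k)]
    f1 = [2 * p * u / (p + u) if (p + u) > 0 else 0.0
          for p, u in zip(producers, users)]
    overall = sum(diagonal) / n
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (overall - expected) / (1 - expected)
    return {"producers": producers, "users": users, "f1": f1,
            "overall": overall, "kappa": kappa}


# Hypothetical three-class example (vegetation, water, building).
metrics = accuracy_metrics([[50, 5, 5], [10, 40, 10], [0, 10, 70]])
```

Producer's accuracy reflects omission errors per reference class, whereas user's accuracy reflects commission errors per classified class.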

After the categories are classified by the RF classifier, the boundaries between categories are identified through edge detection. Popular edge detection methods include the Canny, Sobel, Roberts, Prewitt, and Laplacian operators, among which the Canny edge detector is considered to perform best [55,56]. In Canny edge detection, the image is first smoothed with a Gaussian filter, the edge gradient is then calculated by moving a mask over the smoothed image, and the gradient image is used as a reference for identifying edge lines. The Hough transform applied to the output of the Canny edge detector (hereafter, “HT-Canny”) is useful for detecting straight line features [57,58,59]. In the Hough transform, the detected edge lines are represented by a standard line model consisting of the slope and length of the detected line. By properly setting the thresholds of slope and length, straight water lines are found in the image-based VGI. The water line, generally a straight line representing the intersection between an object and the water surface, is a recognizable feature in a classified image and thus can be identified using a line detection method. The equation of the water line is expressed in the Hesse normal form as follows:

where ρ is the distance from the origin to the closest point on the straight line, and θ is the angle between the x-axis and the line connecting the origin to the closest point.
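The voting step of the Hough transform can be sketched in a few lines. The code below assumes the standard Hesse normal form ρ = x cos θ + y sin θ, takes a list of edge pixel coordinates (for example, the output of an edge detector), and returns the line with the most votes. It is a simplified illustration with hypothetical names and a one-degree angular step, not the HT-Canny implementation used in the study.

```python
import math
from collections import Counter


def hough_strongest_line(edge_points, theta_step_deg=1):
    """Return (rho, theta_deg, votes) of the most supported straight line.

    Each edge pixel (x, y) votes for every (rho, theta) pair satisfying
    rho = x*cos(theta) + y*sin(theta), with rho rounded to whole pixels.
    """
    votes = Counter()
    for x, y in edge_points:
        for theta_deg in range(0, 180, theta_step_deg):
            theta = math.radians(theta_deg)
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(rho, theta_deg)] += 1
    (rho, theta_deg), count = votes.most_common(1)[0]
    return rho, theta_deg, count


# Synthetic horizontal water line at image row y = 20.
edge_pixels = [(x, 20) for x in range(50)]
rho, theta_deg, count = hough_strongest_line(edge_pixels)
```

Thresholds on the angle and on the number of supporting pixels would then play the role of the slope and length thresholds described in the text.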

After the water line parameters, the camera’s shooting position (XL, YL, ZL), its orientation angles, and the focal length f have been determined, the collinearity equations define the relationship between the photo coordinate system and the object coordinate system. The collinearity equations can then be rewritten to infer the water level in the following basic form:

The approximate location (X, Y) corresponding to the detected water line is searched in the UAV orthophoto and DSM data. UAV imagery through image-based modeling has been proven efficient in 3D scene reconstruction and damage estimation [60]. At the beginning of urban flooding, the flooding depth starts from the road surface, so the initial water level h is set to the road elevation. With the water line, the camera’s shooting position, orientation, and focal length f held fixed, (X, Y) and h are iteratively updated by the least squares (LSQ) technique. After i iterations, the elevation is treated as a new candidate water level hi+1 and compared with the previous water level hi. When the elevation difference |hi+1 − hi| is less than 0.1 m, the water level hi+1 is determined at the location (Xi+1, Yi+1).
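One way to picture the iteration is as a ray from the camera through a water-line pixel that is repeatedly intersected with a horizontal plane at the current candidate level, after which the DSM elevation at the intersection becomes the next candidate. The sketch below follows that reading. It is an assumption-laden illustration: the function name, the `dsm` callable, and the simple fixed-point update (in place of the LSQ update) are not taken from the source.

```python
def water_level_from_ray(image_xy, camera, m, f, dsm, h0,
                         x0=0.0, y0=0.0, tol=0.1, max_iter=50):
    """Iterate the water level along the image ray of a water-line pixel.

    image_xy : photo coordinates (x, y) of a point on the detected water line
    camera   : camera position (XL, YL, ZL)
    m        : 3 x 3 rotation matrix M as nested lists
    dsm      : callable dsm(X, Y) -> ground elevation (hypothetical interface)
    h0       : initial water level, e.g. the road elevation
    """
    # Ray direction in object space: M^T * [x - x0, y - y0, -f]
    u = (image_xy[0] - x0, image_xy[1] - y0, -f)
    d = [sum(m[r][c] * u[r] for r in range(3)) for c in range(3)]
    xl, yl, zl = camera
    h = h0
    for _ in range(max_iter):
        t = (h - zl) / d[2]            # scale at which the ray reaches height h
        x_obj = xl + t * d[0]
        y_obj = yl + t * d[1]
        h_next = dsm(x_obj, y_obj)     # candidate water level h_{i+1}
        if abs(h_next - h) < tol:      # stopping rule |h_{i+1} - h_i| < tol
            return x_obj, y_obj, h_next
        h = h_next
    raise RuntimeError("water level iteration did not converge")


# Toy example: vertical camera 10 m above the origin, gently sloping ground.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
X, Y, h = water_level_from_ray((0.001, 0.0), (0.0, 0.0, 10.0), identity,
                               0.005, lambda X, Y: 1.0 + 0.05 * X, h0=1.0)
```

The default tolerance mirrors the 0.1 m threshold stated in the text; a tighter value simply adds iterations.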

2.3. Rainfall Runoff Simulation to Estimate Flooding Water Level

The rainfall runoff model used in this study is a simple conceptual model also known as the lumped model. A lumped model is the most widely used tool for operational applications, since the model is implemented easily with limited climate inputs and streamflow data [61]. Water storage can be expressed as the following discrete time continuity equation:

where the inflow and outflow terms are both taken at time i, the outflow being the designed drainage capacity of the sewage system, and the initial storage at time 0 is assumed to be 0. All units of storage are cubic meters.

The proposed method is based on the following basic assumptions: (1) Rainfall and design drainage capacity in the small study area are uniform; (2) Rainfall in the study area is drained directly through the sewage system with a completely impermeable landcover and without baseflow and soil infiltration; (3) The sewage system is fully functioning; and (4) Water detention by buildings and trees is ignored. Based on these assumptions, rainfall is accumulated in a DSM, and the design drainage capacity is the homogeneous outflow in each DSM grid. When the accumulated water exceeds the design drainage capacity of the DSM grid, the inundation water level is obtained from the lowest elevation. Finally, the flooding water level and flooding map are updated by unit observation time. The flooding water level can be expressed as follows:

where A is the study area, the storage term is the water storage at time j, and Z is the ground elevation.
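The lumped model described in this section can be sketched as two small steps: a storage balance per time step, followed by filling the DSM from its lowest cell until the ponded volume equals the storage. The code below is a simplified reading under stated assumptions: storage is clamped at zero, every DSM cell has the same area, and the water level is found by bisection. The function names are hypothetical and the exact form of the authors' equations is not reproduced.

```python
def storage_series(inflows, outflow_capacity, s0=0.0):
    """Discrete-time storage balance: S_i = max(0, S_{i-1} + I_i - O_i).

    inflows and outflow_capacity are volumes per time step (cubic meters).
    The clamp at zero is an assumption (storage cannot become negative).
    """
    storage = [s0]
    for inflow in inflows:
        storage.append(max(0.0, storage[-1] + inflow - outflow_capacity))
    return storage


def water_level(storage, elevations, cell_area, tol=1e-6):
    """Level h such that sum(max(h - Z, 0)) * cell_area equals the storage."""
    lo = min(elevations)
    if storage <= 0:
        return lo
    # Upper bound: all cells flooded with at least the mean ponding depth.
    hi = max(elevations) + storage / (cell_area * len(elevations))

    def volume(h):
        return sum(max(h - z, 0.0) for z in elevations) * cell_area

    while hi - lo > tol:
        mid = (lo + hi) / 2
        if volume(mid) < storage:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Toy example with three 1 m2 cells at 5, 6, and 7 m elevation.
series = storage_series([10, 30, 5], 12)
level = water_level(1.5, [5.0, 6.0, 7.0], 1.0)
```

In the toy example the water first fills the 5 m cell and then spreads to the 6 m cell, which is the "filling from the lowest elevation" behavior described above.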

3. Case Study

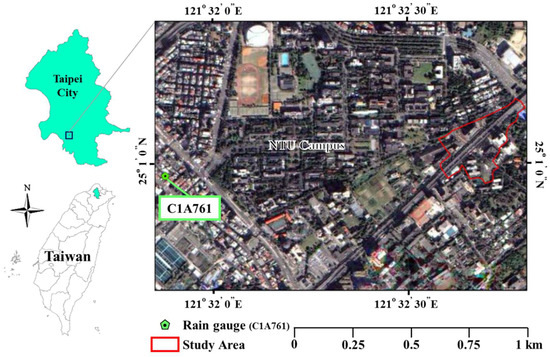

The proposed VGI water level detection method was tested in an urban field. The test was conducted in Gongguan in Taipei City, Taiwan, which repeatedly faces flash floods and road safety problems because of its high population density and heavy traffic. A heavy rainfall event occurred in Gongguan between 13:00 and 17:00 on 14 June 2015. This event had high-intensity rainfall over a short duration (≤131.5 mm/h), with precipitation exceeding the design drainage capacity of 78.8 mm/h [62]. Floods this severe rarely occur on this important road near National Taiwan University, so no water level monitoring equipment had been installed in the neighborhood. The nearest hydrological station, located 1.3 km from the flooding area, is the C1A761 rain gauge station (Figure 3). In this study, the C1A761 rain gauge record was used as the reference value of rainfall and was converted to the volume of water inflow in the area. The inflow volume minus the design drainage capacity was distributed in a spatial model to show the elevation of the flooding level.

Figure 3. Study area (red polygon) at Gongguan, Taipei City, Taiwan.

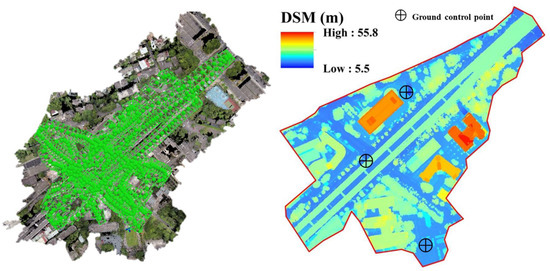

Spatial information was collected by an unmanned aerial vehicle, DJI Phantom 2 Vision+ (Dà-Jiāng Innovations Science and Technology Co, Shenzhen). In total, 589 positioned images with a spatial resolution of 2.84 cm were used to generate a DSM with a 0.03-m ground resolution and an orthophoto in Pix4Dmapper Pro Version 1.4.46 (Prilly, Switzerland). The study area covered 0.0637 km², and elevations from 5.5 m to 55.8 m are denoted in blue to red in Figure 4. The accuracy of the DSM was examined using three ground control points acquired through static positioning using the Global Navigation Satellite System. The root mean square errors in the X, Y, and Z directions are ±0.018 m, ±0.046 m, and ±0.009 m, respectively.

Figure 4. UAV orthophoto (left) and DSM with 0.03-m ground resolution (right) generated from 589 positioned unmanned aerial vehicle images.

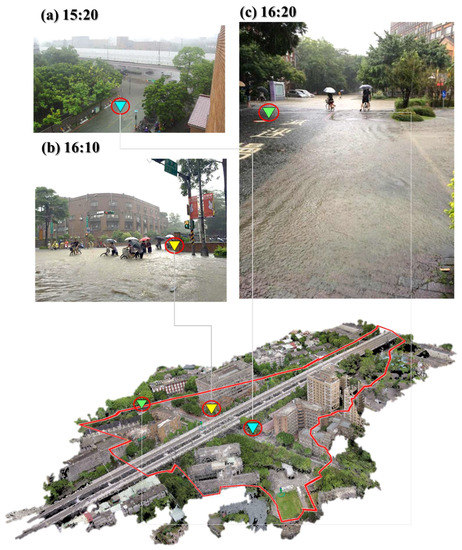

Three image-based VGI photographs collected from social networks, including Facebook and PTT, a popular bulletin board system in Taiwan, were used in this study. The photographs were posted at approximately 15:20, 16:10, and 16:20 on 14 June 2015. Approximate shooting locations for these photographs could be visualized in the study area, as shown in Figure 5. The coordinates of these locations were obtained from the DSM as references for calculating the VGI water level.

Figure 5. Image-based VGI illustrating photograph shooting locations and acquisition times of (a) 15:20, (b) 16:10, and (c) 16:20 through the point cloud.

4. Results

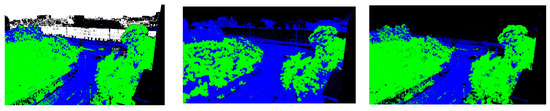

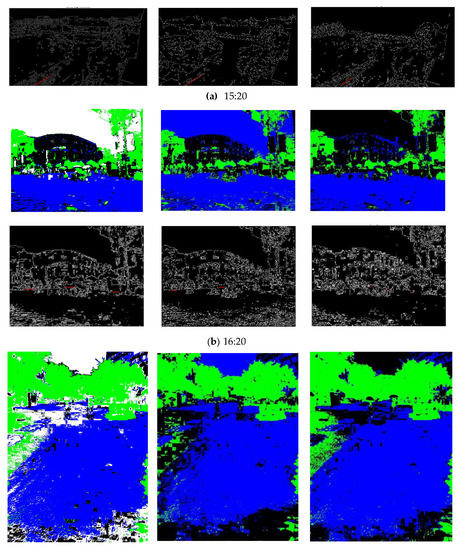

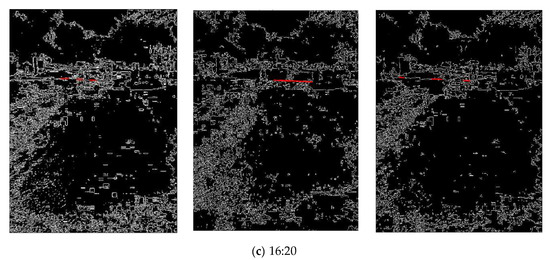

In this study, RF, maximum likelihood (ML), and support vector machine (SVM) classification were used to identify three classes (vegetation, water, and building) in the image-based VGI. A total of 26,933 pixels were selected for all three classes through equalized random sampling and divided into 13,000 pixels as a training set and 13,933 pixels as a test set. Regarding the RF parameter settings, three features at each node and 60 trees were used. Table 1 shows the producer’s accuracy, user’s accuracy, F1 score, overall accuracy, and kappa statistic, in percent, for the three classification categories at the three times. The RF classification performed best, with overall accuracies of 80.10% (15:20), 80.12% (16:10), and 79.93% (16:20). The average overall accuracy on the test data is 80.05%. The kappa coefficient values are 70.03% (15:20), 70.11% (16:10), and 68.80% (16:20). In the classified image-based VGI, vegetation appears in green, water areas in blue, and buildings in black. The water lines detected using HT-Canny were marked as red lines, and their positions in each digital photo coordinate system were calculated (Figure 6).

Table 1. The percentage of producer’s accuracy, user’s accuracy, F1 score, overall accuracy, and kappa statistic for three classification categories of RF, ML, and SVM classifications.

Figure 6. VGI classified images (upper) and water line detection (bottom). Image-based VGIs were simplified into three classification categories, namely water in blue, vegetation in green, and buildings in black based on the classifications of RF (left), ML (middle), and SVM (right). The classified image-based VGIs were processed using HT-Canny to detect water lines (red lines) at the acquisition times of (a) 15:20, (b) 16:10, and (c) 16:20.

After water line extraction was completed, the calculation of VGI water levels relied on the shooting position, camera orientation parameters, and control point coordinates. The approximate shooting positions were confirmed using the collected image-based VGI, Google Street View, and DSM. The camera interior orientation parameters, including the focal length and charge-coupled device (CCD) size, are referred to as smartphone camera parameters.

This study used the average of publicly available smartphone camera parameters as initial values. For the initial interior parameters, the focal length was 4.6 mm, and the CCD size was 1/2.5”. Considering the posture in which a person normally holds a camera to photograph a ground scene, the initial three-axis camera orientations of the exterior parameters were set as (80°, 5°, 80°). To solve for the seven unknown parameters, namely the shooting position (XL, YL, ZL), the three orientation angles, and the focal length f, more than seven control points were provided as redundant observations for the LSQ calculation. In this case study, nine control points could be distinguished in both the image-based VGI and the UAV orthophoto. The point coordinates are listed in Table 2.

Table 2. Control point coordinates.

Through the rainfall observations and simple discrete time continuity Equations (8) and (9), the flooding process and simulated water level were determined. The parameters of rainfall runoff simulation are shown in Table 3. The initial flood water level is assumed to be the lowest ground elevation (5.670 m), which was lower than the average ground elevation (8.989 m) of the VGI scene. When the simulated water level, which refers to the lowest ground elevation plus the depth of flooding, is higher than the ground elevations of the DSM, the flooding ranges are drawn in the UAV-derived DSM and orthophoto.

Table 3. The parameters of the rainfall runoff simulation.

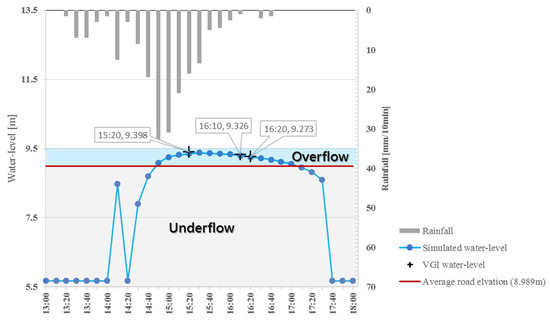

Using Equation (7) with the control points and detected water lines, the orientation parameters of the image-based VGI and the VGI water levels were calculated through LSQ, as listed in Table 4. The VGI water levels of 9.398 m, 9.326 m, and 9.273 m occurred at 15:20, 16:10, and 16:20, respectively. The differences between the VGI water levels and simulated water levels were between 0.018 m and 0.045 m. Notably, all of the VGI water levels were higher than the simulated water levels, possibly because the simulated water levels had been underestimated; this highlighted the problem of baseflow not being considered in the simple conceptual model. Conversely, these differences could be used to estimate the baseflow in the study area. Since VGI images convey ground-truth information to assist in the correction of hypothetical lumped models, the water level differences were considered a result of neglecting baseflow and soil infiltration. The neglected values are between 0.318 and 0.796 m³/s, which are estimated by multiplying the study area (0.0637 km²) by the hourly water level differences (between 0.018 and 0.045 m per hour) and expressing the result per second. The estimated values provided references for revising hydrological modeling.
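The arithmetic behind the neglected flow can be checked directly. The short sketch below multiplies the study area by an hourly water level difference and converts the hourly volume to a per-second rate; under that conversion the results reproduce the reported range of roughly 0.318 to 0.796. The function name is illustrative.

```python
AREA_M2 = 0.0637 * 1_000_000  # study area of 0.0637 km2 expressed in m2


def neglected_flow_m3_per_s(level_difference_m, area_m2=AREA_M2):
    """Flow implied by a water level difference accumulated over one hour."""
    volume_per_hour = area_m2 * level_difference_m  # cubic meters per hour
    return volume_per_hour / 3600.0                 # cubic meters per second


low = neglected_flow_m3_per_s(0.018)   # about 0.3185
high = neglected_flow_m3_per_s(0.045)  # about 0.7963
```

Without the division by 3600, the same products are about 1147 and 2867 cubic meters per hour.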

Table 4. Comparison between simulated water levels and VGI water levels determined based on the orientation parameters.

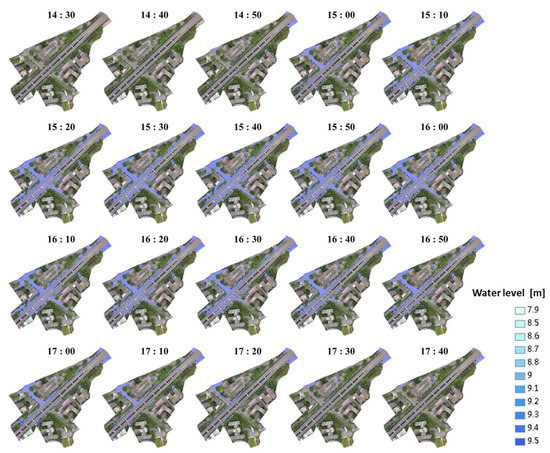

The rainfall hyetograph and simulated water level are shown in Figure 7, and the simulated flooding process from 14:30 to 17:40 is shown in Figure 8. The simulation analysis confirmed that the significant water level of 9.252 m was reached at 15:00, and the flood area was located on the trunk road. At 15:20, the peak water level of 9.353 m was reached, and the flood spread over all roads in the study area. By 16:30, the flood had gradually receded, and the water level decreased to 8.586 m. Finally, at 17:40, the flooding event ended.

Figure 7. Rainfall hyetograph and corresponding water level distribution.

Figure 8. Serial illustration of the simulated flooding area from 14:30 to 17:40.

The analysis was conducted in MATLAB R2018a 64-bit (Natick, MA, USA) and was tested on a personal computer with the following configuration: processor, Intel Core i7-4700HQ 2.4 GHz (Santa Clara, CA, USA); memory, 8.00 GB DDR3; operating system, Windows 7 (Redmond, WA, USA). The average computation time required to classify image-based VGI and detect water lines was 355 s. The average time required to determine VGI water levels and orientations was 42 s. In summary, the proposed approach quantified the water levels at centimeter resolution by using image-based VGI and real field information; thus, this approach can determine warning water levels and positions as references for disaster relief.

5. Discussion and Conclusions

In the past few decades, many studies have used urban flood simulation models to estimate the temporal impact of flooding. These flood simulations are limited by hydrological observations and physical complexity, which affect the model’s suitability and calculation efficiency. Furthermore, the collection and verification of in situ observation data are crucial for the evaluation of the simulation. Especially on busy roads in urban areas and in the absence of water gauges, image-based VGI that records flooding provides an opportunity for spatial and temporal verification.

This study combined image classification, line detection, collinearity equations, and LSQ to quantify water levels based on image-based VGI acquired by smartphone cameras. Based on the theoretical analysis and validation described in this paper, image-based VGI classified using the RF classifier can be used to identify flooded areas. The proposed novel approach successfully manages the ambient complexity of urban image-based VGI to detect water lines. Through a centimeter-accurate DSM, the detected waterlines are used to quantify flood water levels. Therefore, the fact that there is no water level monitoring equipment at the flooding site is no longer a barrier to the acquisition of information. Moreover, by employing photogrammetric principles, the proposed method can determine imaging locations, water levels, and smartphone camera parameters. In addition, differences between VGI and simulated water levels provide a baseflow reference for simple flood modeling. The quantified water levels with a resolution of centimeters (<3-cm difference on average) can validate flood modeling so as to extend point-basis observations to area-basis estimations. Therefore, the limited performance of image-based VGI quantification has been improved to deal with flood disasters.

Overall, this research reveals that flooding water levels can be quantified by linking the VGI classification images with a UAV-based DSM through photogrammetry techniques, thus overcoming the limitations of past studies that used only image-based VGI to qualitatively assess the impact ranges of flooding. Based on our results, we suggest that the use of image-based VGI to obtain flooding water levels must meet two requirements. The first requirement is the availability of orthographic images and a DSM with a centimeter-level ground resolution, which allow control point features in image-based VGI, such as building corners, ground markings, and streetlights, to be spatially identified. The second requirement is that the shooting perspective of image-based VGI should not be close to parallel or vertical to the ground, because such perspectives make the flooding water line harder to identify on images and increase the resulting errors. Fortunately, most VGI images are shot at an oblique perspective. In other words, image-based VGI with a favorable spatial distribution captures the outlines of buildings or bridges within the frame, as in Google Street View, and these outlines serve as spatial references.

In the future, the proposed VGI setup can be promoted through street-monitoring techniques that supply long-term continuous imagery; these images, acquired by smartphone cameras, can then be incorporated into Google Street View to construct and update local spatial information. Eventually, by employing more deep learning techniques with distributed computing [63], a large number of VGI photos can be processed and integrated into a disaster-monitoring system for flooding and traffic management in an economical and time-efficient manner.

Author Contributions

Conceptualization, Y.-T.L. and M.-D.Y.; methodology, Y.-T.L. and M.-D.Y.; software, Y.-T.L., Y.-F.S. and J.-H.J.; validation, Y.-T.L., Y.-F.S. and J.-H.J.; formal analysis, Y.-T.L. and M.-D.Y.; writing—original draft preparation, Y.-T.L. and M.-D.Y.; writing—review and editing, Y.-T.L. and M.-D.Y.; visualization, Y.-T.L. and M.-D.Y.; supervision, M.-D.Y. and J.-Y.H.; project administration, M.-D.Y.; funding acquisition, M.-D.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Pervasive AI Research (PAIR) Labs, Taiwan, and the Innovation and Development Center of Sustainable Agriculture via The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project of the Ministry of Education (MOE) in Taiwan. This research was partially funded by the Ministry of Science and Technology, Taiwan, under Grant Number 108-2634-F-005-003.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Panthou, G.; Vischel, T.; Lebel, T. Recent trends in the regime of extreme rainfall in the Central Sahel. Int. J. Climatol. 2014, 34, 3998–4006.

- Bao, J.; Sherwood, S.C.; Alexander, L.V.; Evans, J.P. Future increases in extreme precipitation exceed observed scaling rates. Nat. Clim. Chang. 2017, 7, 128–132.

- Deltares. SOBEK User Manual—Hydrodynamics, Rainfall Runoff and Real Time Control; Deltares: HV Delft, The Netherlands, 2017; pp. 58–73.

- Rossman, L.A.; Huber, W.C. Storm Water Management Model Reference Manual Volume I—Hydrology; United States Environmental Protection Agency: Washington, DC, USA, 2016; pp. 20–25.

- Reed, S.; Schaake, J.; Zhang, Z. A distributed hydrologic model and threshold frequency-based method for flash flood forecasting at ungauged locations. J. Hydrol. 2007, 337, 402–420.

- Norbiato, D.; Borga, M.; Degli Esposti, S.; Gaume, E.; Anquetin, S. Flash flood warning based on rainfall thresholds and soil moisture conditions: An assessment for gauged and ungauged basins. J. Hydrol. 2008, 362, 274–290.

- Neal, J.; Villanueva, I.; Wright, N.; Willis, T.; Fewtrell, T.; Bates, P. How much physical complexity is needed to model flood inundation? Hydrol. Process. 2012, 26, 2264–2282.

- Van der Sande, C.J.; De Jong, S.M.; De Roo, A.P.J. A segmentation and classification approach of IKONOS-2 imagery for land cover mapping to assist flood risk and flood damage assessment. Int. J. Appl. Earth Obs. Geoinf. 2003, 4, 217–229.

- Duncan, J.M.; Biggs, E.M. Assessing the accuracy and applied use of satellite-derived precipitation estimates over Nepal. Appl. Geogr. 2012, 34, 626–638.

- Wood, M.; De Jong, S.M.; Straatsma, M.W. Locating flood embankments using SAR time series: A proof of concept. Int. J. Appl. Earth Obs. Geoinf. 2018, 70, 72–83.

- Yang, M.D.; Yang, Y.F.; Hsu, S.C. Application of remotely sensed data to the assessment of terrain factors affecting Tsao-Ling landslide. Can. J. Remote Sens. 2004, 30, 593–603.

- Yang, M.D.; Su, T.C.; Hsu, C.H.; Chang, K.C.; Wu, A.M. Mapping of the 26 December 2004 tsunami disaster by using FORMOSAT-2 images. Int. J. Remote Sens. 2007, 28, 3071–3091.

- Yang, M.D. A genetic algorithm (GA) based automated classifier for remote sensing imagery. Can. J. Remote Sens. 2007, 33, 593–603.

- Blaschke, T.; Hay, G.J.; Weng, Q.; Resch, B. Collective sensing: Integrating geospatial technologies to understand urban systems—An overview. Remote Sens. 2011, 3, 1743–1776.

- Schnebele, E.; Cervone, G. Improving remote sensing flood assessment using volunteered geographical data. Nat. Hazards Earth Syst. Sci. 2013, 13, 669–677.

- Yang, M.D.; Su, T.C.; Lin, H.Y. Fusion of infrared thermal image and visible image for 3D thermal model reconstruction using smartphone sensors. Sensors 2018, 18, 2003.

- Kaplan, A.M.; Haenlein, M. Users of the world, unite! The challenges and opportunities of Social Media. Bus. Horiz. 2010, 53, 59–68.

- Boella, G.; Calafiore, A.; Grassi, E.; Rapp, A.; Sanasi, L.; Schifanella, C. FirstLife: Combining Social Networking and VGI to Create an Urban Coordination and Collaboration Platform. IEEE Access 2019, 7, 63230–63246.

- Longueville, B.D.; Luraschi, G.; Smits, P.; Peedell, S.; Groeve, T.D. Citizens as sensors for natural hazards: A VGI integration workflow. Geomatica 2010, 64, 41–59.

- Granell, C.; Ostermann, F.O. Beyond data collection: Objectives and methods of research using VGI and geo-social media for disaster management. Comput. Environ. Urban Syst. 2016, 59, 231–243.

- Vahidi, H.; Klinkenberg, B.; Johnson, B.A.; Moskal, L.M.; Yan, W. Mapping the Individual Trees in Urban Orchards by Incorporating Volunteered Geographic Information and Very High Resolution Optical Remotely Sensed Data: A Template Matching-Based Approach. Remote Sens. 2018, 10, 1134.

- Zamir, A.R.; Shah, M. Accurate image localization based on google maps street view. In European Conference on Computer Vision; Heraklion: Crete, Greece, 2010; pp. 255–268.

- Liang, J.; Gong, J.; Sun, J.; Zhou, J.; Li, W.; Li, Y.; Shen, S. Automatic sky view factor estimation from street view photographs—A big data approach. Remote Sens. 2017, 9, 411.

- Feng, Y.; Sester, M. Extraction of pluvial flood relevant volunteered geographic information (VGI) by deep learning from user generated texts and photos. ISPRS Int. J. Geoinf. 2018, 7, 39.

- Comber, A.; See, L.; Fritz, S.; Van der Velde, M.; Perger, C.; Foody, G. Using control data to determine the reliability of volunteered geographic information about land cover. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 37–48.

- Foody, G.M.; See, L.; Fritz, S.; Van der Velde, M.; Perger, C.; Schill, C.; Boyd, D.S. Assessing the accuracy of volunteered geographic information arising from multiple contributors to an internet based collaborative project. Trans. GIS 2013, 17, 847–860.

- Fonte, C.C.; Bastin, L.; See, L.; Foody, G.; Lupia, F. Usability of VGI for validation of land cover maps. Int. J. Geogr. Inf. Sci. 2015, 29, 1269–1291.

- Poser, K.; Dransch, D. Volunteered geographic information for disaster management with application to rapid flood damage estimation. Geomatica 2010, 64, 89–98.

- Hung, K.C.; Kalantari, M.; Rajabifard, A. Methods for assessing the credibility of volunteered geographic information in flood response: A case study in Brisbane, Australia. Appl. Geogr. 2016, 68, 37–47.

- Kusumo, A.N.L.; Reckien, D.; Verplanke, J. Utilising volunteered geographic information to assess resident’s flood evacuation shelters. Case study: Jakarta. Appl. Geogr. 2017, 88, 174–185.

- Assumpção, T.H.; Popescu, I.; Jonoski, A.; Solomatine, D.P. Citizen observations contributing to flood modelling: Opportunities and challenges. Hydrol. Earth Syst. Sci. 2018, 22, 1473–1489.

- See, L.M. A Review of Citizen Science and Crowdsourcing in Applications of Pluvial Flooding. Front. Earth Sci. 2019, 7, 44.

- Rosser, J.F.; Leibovici, D.G.; Jackson, M.J. Rapid flood inundation mapping using social media, remote sensing and topographic data. Nat. Hazards 2017, 87, 103–120.

- Liu, P.; Chen, A.Y.; Huang, Y.N.; Han, J.Y.; Lai, J.S.; Kang, S.C.; Tsai, M.H. A review of rotorcraft unmanned aerial vehicle (UAV) developments and applications in civil engineering. Smart Struct. Syst. 2014, 13, 1065–1094.

- Mikhail, E.M.; Bethel, J.S.; McGlone, J.C. Introduction to Modern Photogrammetry, 1st ed.; John Wiley and Sons Inc.: New York, NY, USA, 2001; p. 237.

- Royem, A.A.; Mui, C.K.; Fuka, D.R.; Walter, M.T. Technical note: Proposing a low-tech, affordable, accurate stream stage monitoring system. Trans. ASABE 2012, 55, 2237–2242.

- Lin, F.; Chang, W.Y.; Lee, L.C.; Hsiao, H.T.; Tsai, W.F.; Lai, J.S. Applications of image recognition for real-time water-level and surface velocity. In Proceedings of the 2013 IEEE International Symposium on Multimedia, Anaheim, CA, USA, 9–11 December 2013; pp. 259–262.

- Lin, Y.T.; Lin, Y.C.; Han, J.Y. Automatic water-level detection using single-camera images with varied poses. Measurement 2018, 127, 167–174.

- Yang, M.D.; Su, T.C.; Pan, N.F.; Yang, Y.F. Systematic image quality assessment for sewer inspection. Expert Syst. Appl. 2011, 38, 1766–1776.

- Yang, W.; Tan, R.T.; Feng, J.; Liu, J.; Guo, Z.; Yan, S. Deep joint rain detection and removal from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1357–1366.

- Yang, M.D.; Su, T.C. Automation model of sewerage rehabilitation planning. Water Sci. Technol. 2006, 54, 225–232.

- Yang, M.D.; Su, T.C.; Pan, N.F.; Liu, P. Feature extraction of sewer pipe defects using wavelet transform and co-occurrence matrix. Int. J. Wavelets Multiresolut. Inf. Process. 2011, 9, 211–225.

- Su, T.C.; Yang, M.D. Application of morphological segmentation to leaking defect detection in sewer pipelines. Sensors 2014, 14, 8686–8704.

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293.

- Ghosh, A.; Sharma, R.; Joshi, P.K. Random forest classification of urban landscape using Landsat archive and ancillary data: Combining seasonal maps with decision level fusion. Appl. Geogr. 2014, 48, 31–41.

- Li, M.; Ma, L.; Blaschke, T.; Cheng, L.; Tiede, D. A systematic comparison of different object-based classification techniques using high spatial resolution imagery in agricultural environments. Int. J. Appl. Earth Obs. Geoinf. 2016, 49, 87–98.

- Phiri, D.; Morgenroth, J.; Xu, C.; Hermosilla, T. Effects of pre-processing methods on Landsat OLI-8 land cover classification using OBIA and random forests classifier. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 170–178.

- Tian, S.; Zhang, X.; Tian, J.; Sun, Q. Random forest classification of wetland landcovers from multi-sensor data in the arid region of Xinjiang, China. Remote Sens. 2016, 8, 954.

- Zurqani, H.A.; Post, C.J.; Mikhailova, E.A.; Schlautman, M.A.; Sharp, J.L. Geospatial analysis of land use change in the Savannah River Basin using Google Earth Engine. Int. J. Appl. Earth Obs. Geoinf. 2018, 69, 175–185.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Cutler, A.; Cutler, D.R.; Stevens, J.R. Random forests. In Ensemble Machine Learning; Springer: Boston, MA, USA, 2012; pp. 157–175.

- Rodriguez-Galiano, V.F.; Ghimire, B.; Rogan, J.; Chica-Olmo, M.; Rigol-Sanchez, J.P. An assessment of the effectiveness of a random forest classifier for land-cover classification. ISPRS J. Photogramm. Remote Sens. 2012, 67, 93–104.

- Deus, D. Integration of ALOS PALSAR and Landsat data for land cover and forest mapping in northern Tanzania. Land 2016, 5, 43.

- Tsutsumida, N.; Comber, A.J. Measures of spatio-temporal accuracy for time series land cover data. Int. J. Appl. Earth Obs. Geoinf. 2015, 41, 46–55.

- Yang, M.D.; Huang, K.S.; Wan, J.; Tsai, H.P.; Lin, L.M. Timely and quantitative damage assessment of oyster racks using UAV images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 6.

- Batra, B.; Singh, S.; Sharma, J.; Arora, S.M. Computational analysis of edge detection operators. Int. J. Appl. Res. 2016, 2, 257–262.

- Li, Y.; Chen, L.; Huang, H.; Li, X.; Xu, W.; Zheng, L.; Huang, J. Nighttime lane markings recognition based on Canny detection and Hough transform. In Proceedings of the 2016 IEEE International Conference on Real-time Computing and Robotics, Angkor Wat, Cambodia, 6–9 June 2016; pp. 411–415.

- Gabriel, E.; Hahmann, F.; Böer, G.; Schramm, H.; Meyer, C. Structured edge detection for improved object localization using the discriminative generalized Hough transform. In Proceedings of the 11th Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Rome, Italy, 27–29 February 2016; pp. 393–402.

- Deng, G.; Wu, Y. Double Lane Line Edge Detection Method Based on Constraint Conditions Hough Transform. In Proceedings of the 2018 17th International Symposium on Distributed Computing and Applications for Business Engineering and Science (DCABES), Wuxi, China, 19–23 October 2018; pp. 107–110.

- Yang, M.D.; Huang, K.S.; Kuo, Y.H.; Tsai, H.P.; Lin, L.M. Spatial and spectral hybrid image classification for rice-lodging assessment through UAV imagery. Remote Sens. 2017, 9, 583.

- Lampert, D.J.; Wu, M. Development of an open-source software package for watershed modeling with the Hydrological Simulation Program in Fortran. Environ. Model. Softw. 2015, 68, 166–174.

- Chen, C.F.; Liu, C.M. The definition of urban stormwater tolerance threshold and its concept estimation: An example from Taiwan. Nat. Hazards 2014, 73, 173–190.

- Yang, M.D.; Tseng, H.H.; Hsu, Y.C.; Tsai, H.P. Semantic Segmentation Using Deep Learning with Vegetation Indices for Rice Lodging Identification in Multi-date UAV Visible Images. Remote Sens. 2020, 12, 633.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).