PCDRN: Progressive Cascade Deep Residual Network for Pansharpening

Abstract

1. Introduction

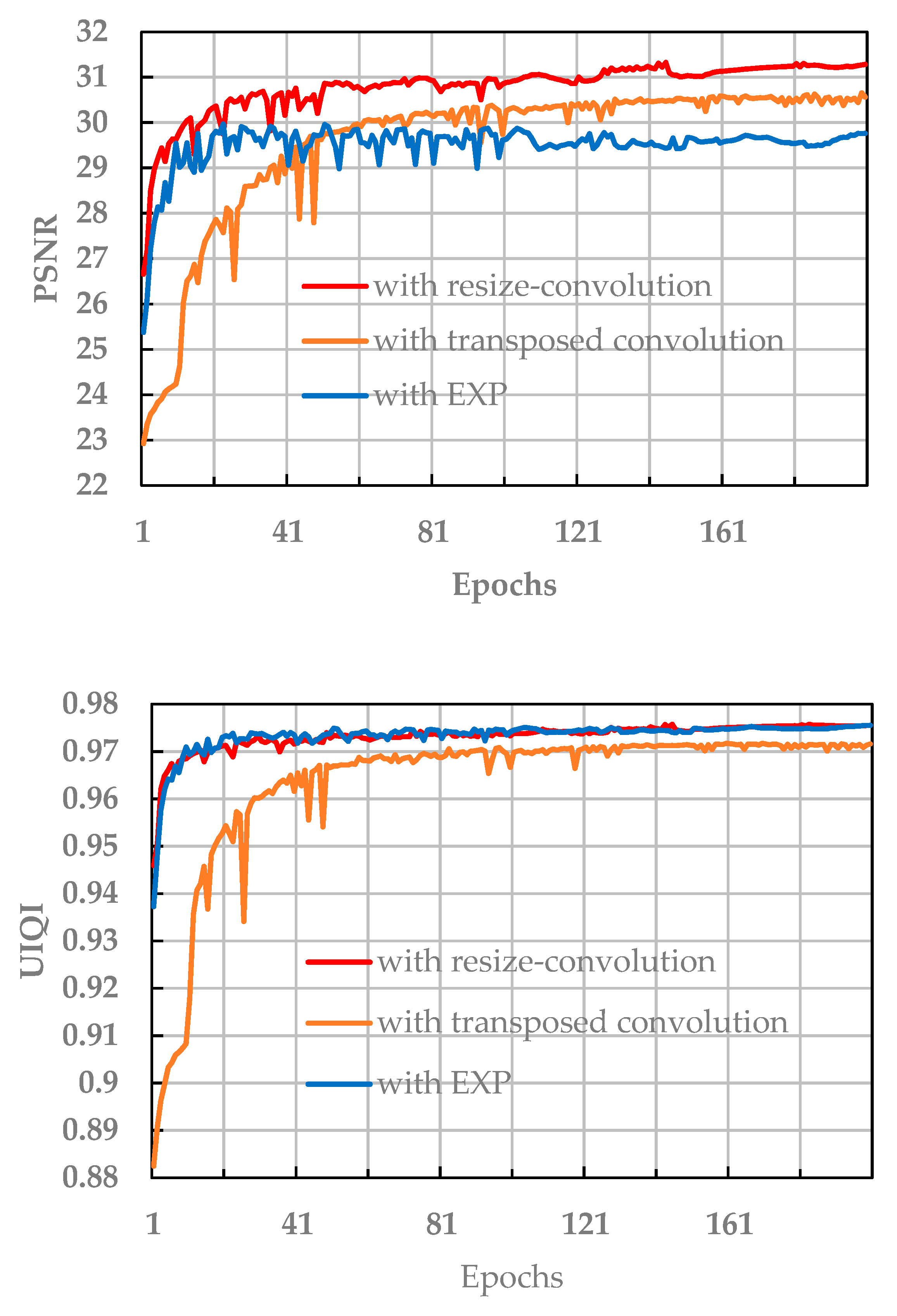

2. Related Work

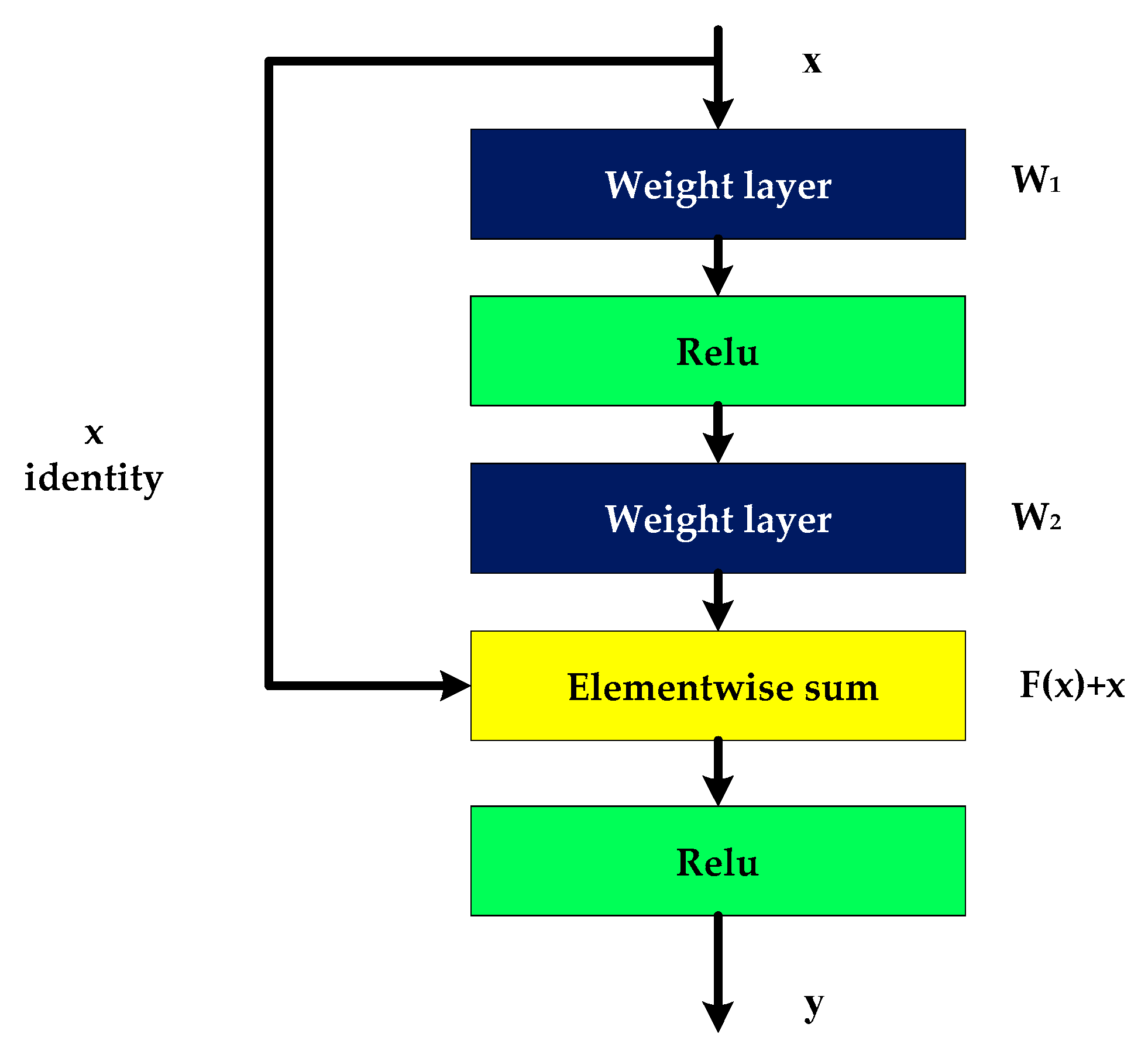

2.1. Residual Network

2.2. Universal Image Quality Index
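As a minimal sketch of the index named in this heading, the following plain-Python function evaluates the universal image quality index of Wang and Bovik [23] over one global window. The function name `uiqi` and the flat pixel lists are illustrative assumptions; the original index is computed over sliding windows and averaged, and the exact windowing used in this paper is not stated here.

```python
from statistics import fmean


def uiqi(x, y):
    """Global universal image quality index of two equal-length pixel sequences.

    Hypothetical helper: a single window covering all pixels is assumed.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mx, my = fmean(x), fmean(y)
    # Unbiased (n - 1) variances and covariance.
    vx = sum((a - mx) ** 2 for a in x) / (n - 1)
    vy = sum((b - my) ** 2 for b in y) / (n - 1)
    cxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    denom = (vx + vy) * (mx ** 2 + my ** 2)
    if denom == 0:
        raise ZeroDivisionError("index is undefined when the denominator is zero")
    # Q = 4 * cov(x, y) * mean(x) * mean(y) / ((var x + var y) * (mean x^2 + mean y^2))
    return 4 * cxy * mx * my / denom


reference = [10.0, 20.0, 30.0, 40.0]
distorted = [12.0, 18.0, 33.0, 37.0]
print(uiqi(reference, reference))  # identical inputs give a value of (approximately) 1
print(uiqi(reference, distorted))  # any distortion lowers the index below 1
```

The index combines loss of correlation, luminance distortion, and contrast distortion in a single value in the range [-1, 1], which is why higher values are better in the UIQI columns of the result tables.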

3. Proposed Method

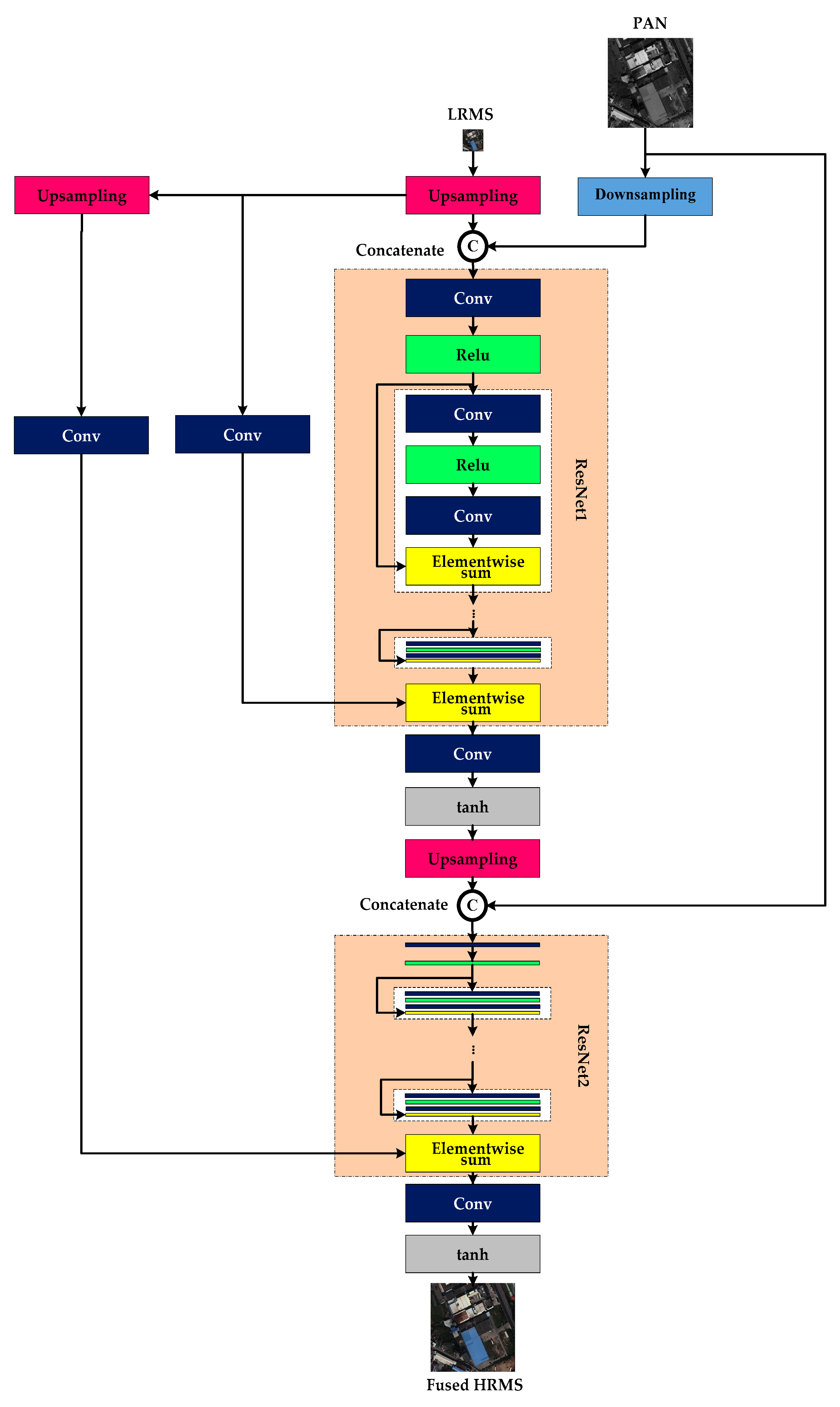

3.1. Flowchart of PCDRN

3.2. Multitask Loss Function

| Algorithm 1. Normalization method |

| Input: LRMS image (lrms), PAN image (pan), the number of training epochs (max_train_epoch) |

| Output: the normalized coefficients and |

| Initialize: |

| 1) c ← 0.00001 |

| For i = 0 to max_train_epoch do |

| 2) Input and to compute by and by , respectively |

| If (converge) |

| 3) Compute by |

| 4) Compute by |

| 5) Compute by averaging the |

| 6) Compute by averaging the |

| Endif |

| Endfor |

| 7) Compute the coefficient by and the coefficient by |

3.3. Resize-Convolution
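Resize-convolution is commonly described as an interpolation step followed by an ordinary convolution, used in place of a transposed convolution. The sketch below illustrates that idea in plain Python under several assumptions that are not taken from this paper: nearest-neighbour interpolation, a scale factor of 2, edge-replication padding, and a fixed 3 × 3 box kernel standing in for learned weights. All function names are hypothetical.

```python
def upsample_nearest(img, factor):
    """Nearest-neighbour upsampling of a 2-D list by an integer factor."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def conv2d_same(img, kernel):
    """Same-size 2-D correlation with edge-replication padding."""
    h, w = len(img), len(img[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    # Clamp indices so that border pixels are replicated.
                    r = min(max(i + a - ph, 0), h - 1)
                    c = min(max(j + b - pw, 0), w - 1)
                    acc += kernel[a][b] * img[r][c]
            out[i][j] = acc
    return out


def resize_convolution(img, kernel, factor=2):
    """Upsample first, then convolve (the kernel would be learned in a network)."""
    return conv2d_same(upsample_nearest(img, factor), kernel)


box = [[1 / 9] * 3 for _ in range(3)]  # stand-in for learned weights
low_res = [[1.0, 2.0], [3.0, 4.0]]
high_res = resize_convolution(low_res, box)
print(len(high_res), len(high_res[0]))  # 4 4
```

Because every output pixel is produced by the same kernel applied to an already upsampled grid, the kernel overlaps neighbouring pixels uniformly, which is the usual motivation for preferring this layer over a transposed convolution.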

4. Experimental Results

4.1. Experimental Settings

4.1.1. Datasets

4.1.2. Training Details

4.1.3. Compared Methods

- EXP: an interpolation method based on a polynomial kernel [31];

- AIHS: adaptive IHS [35];

- ATWT: à trous wavelet transform [9];

- GSA: Gram-Schmidt adaptive [36];

- BT: Brovey transform [37];

- MMMT: a matting model and multiscale transform [16];

- GS: Gram-Schmidt [6];

- ASIM: adaptive spectral-intensity modulation [40];

- DRPNN: a deep ResNet for pansharpening [41];

- MSDCNN: a multiscale and multidepth CNN for pansharpening [12].

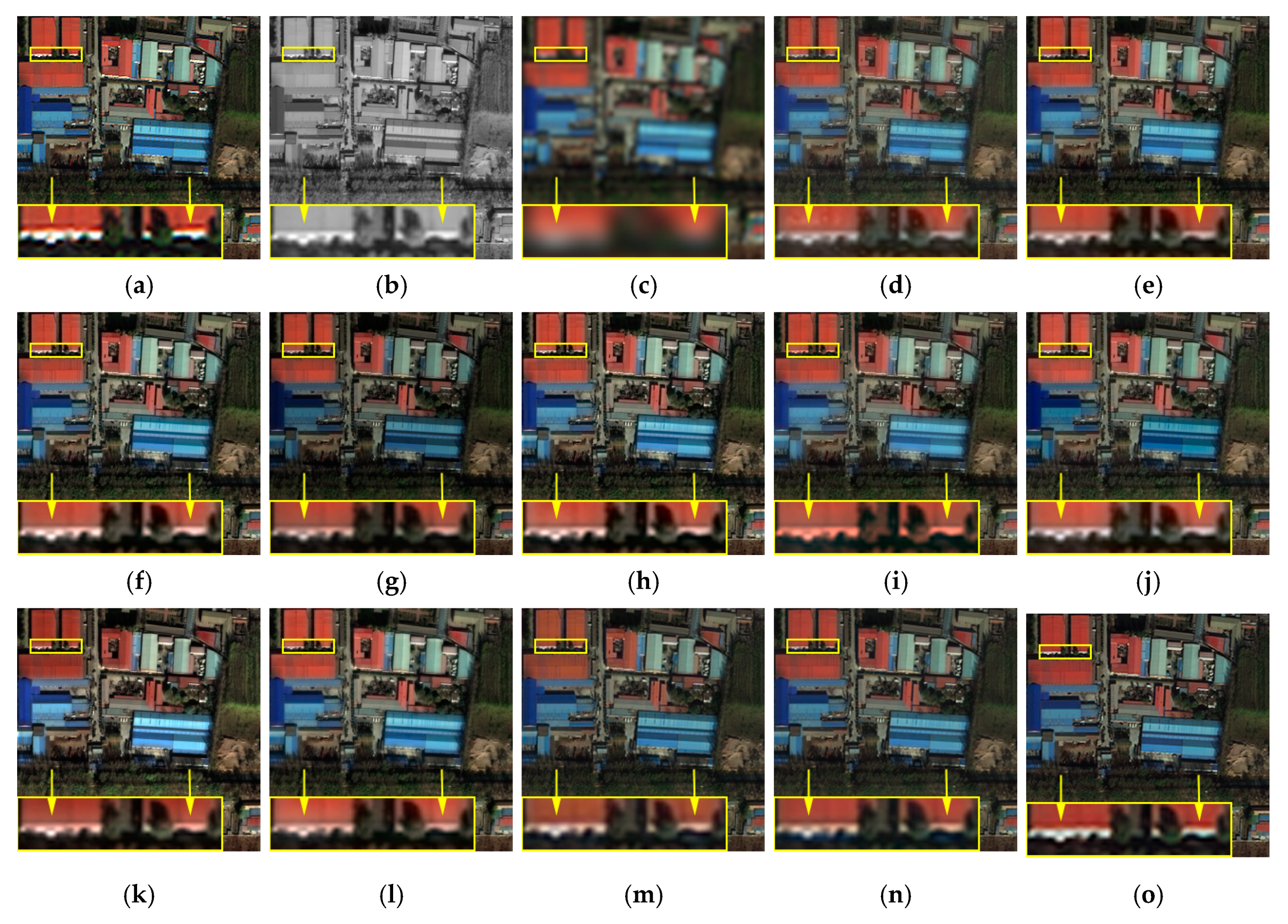

4.2. Experiments on Simulated Data

4.2.1. Experiments on Pléiades Dataset

4.2.2. Experiments on WorldView-3 Dataset

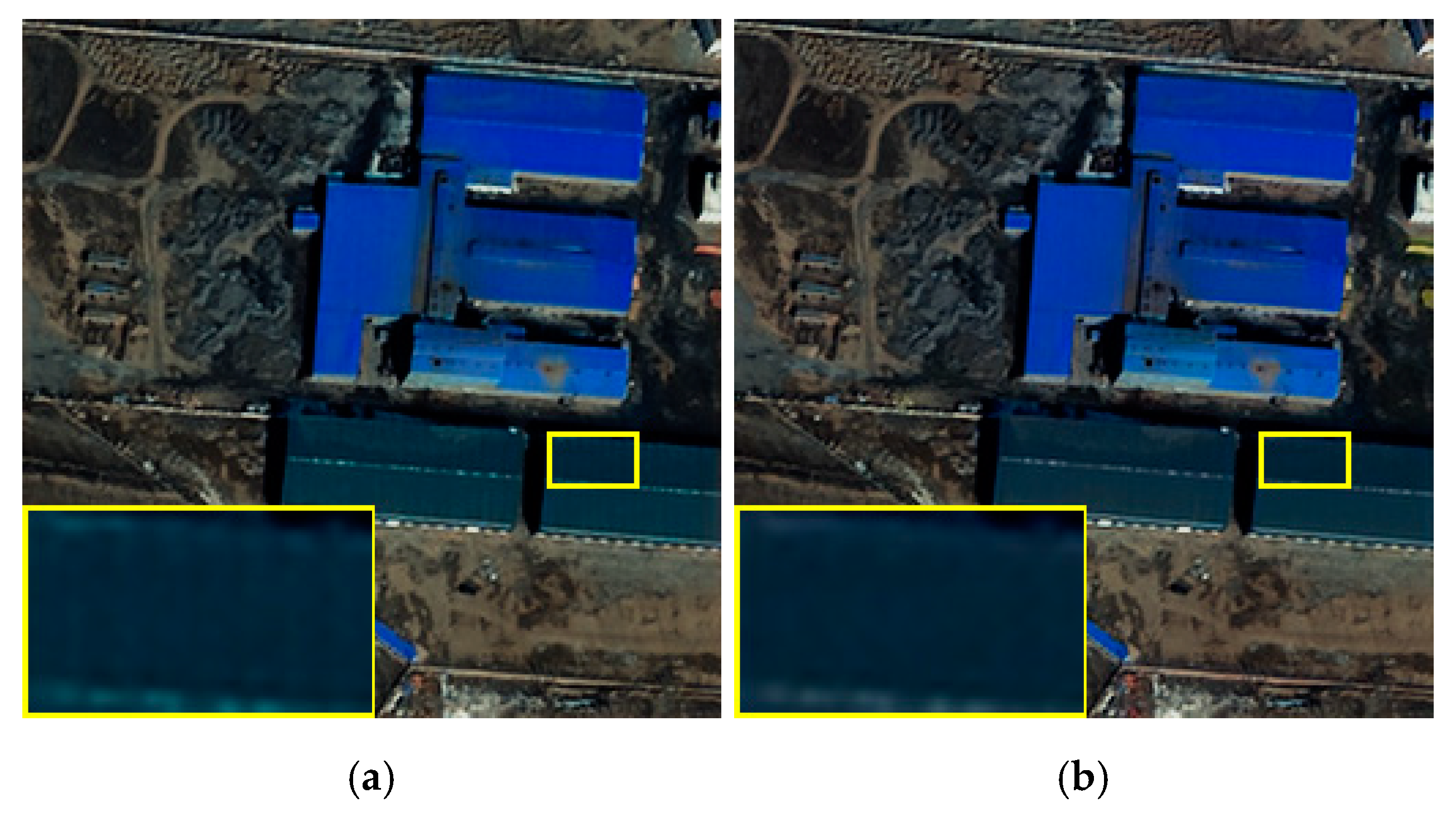

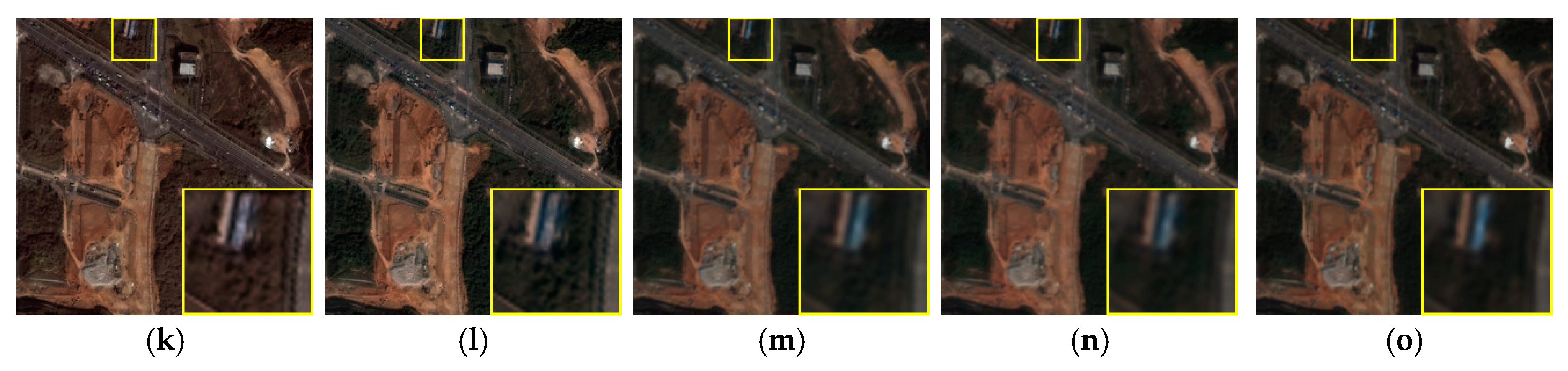

4.3. Experiments on Real Data

4.3.1. Experiments on Pléiades Dataset

4.3.2. Experiments on WorldView-3 Dataset

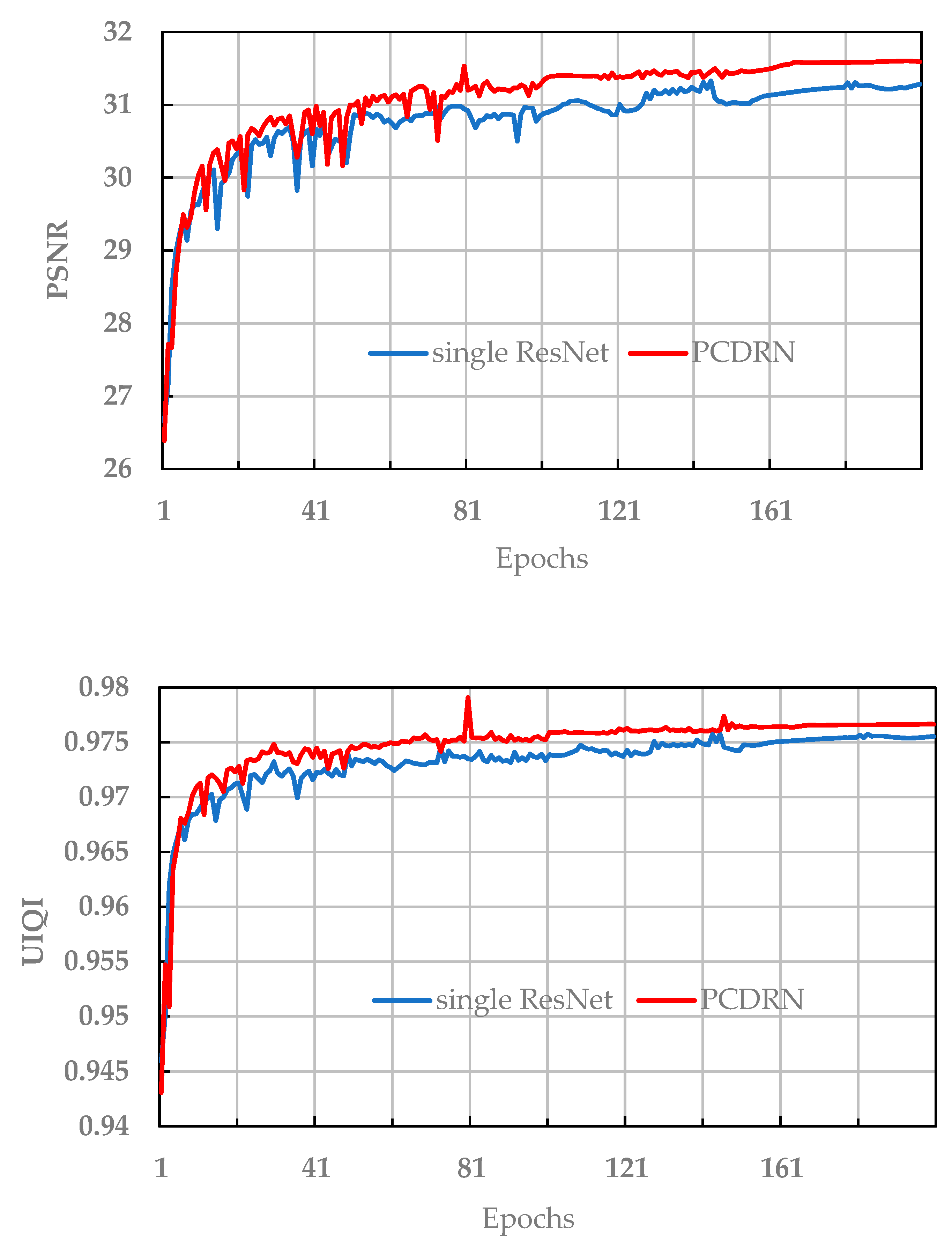

5. Further Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Ghassemian, H. A review of remote sensing image fusion methods. Inf. Fusion 2016, 32, 75–89.

2. Zhang, Y.; Hong, G. An IHS and wavelet integrated approach to improve pan-sharpening visual quality of natural colour IKONOS and QuickBird images. Inf. Fusion 2005, 6, 225–234.

3. Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS like image fusion methods. Inf. Fusion 2001, 2, 177–186.

4. Vivone, G.; Alparone, L.; Chanussot, J.; Mura, M.D.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A Critical Comparison Among Pansharpening Algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

5. Wang, Z.; Ziou, D.; Armenakis, C.; Li, D.; Li, Q. A comparative analysis of image fusion methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1391–1402.

6. Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. US Patent 6,011,875, 4 January 2000.

7. Loncan, L.; Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simoes, M.; et al. Hyperspectral Pansharpening: A Review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

8. Miao, Q.; Wang, B. Multi-sensor image fusion based on improved laplacian pyramid transform. Acta Opt. Sin. 2007, 27, 1605–1610.

9. Nunez, J.; Otazu, X.; Fors, O.; Prades, A.; Pala, V.; Arbiol, R. Multiresolution-based image fusion with additive wavelet decomposition. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1204–1211.

10. Li, S.; Kwok, J.T.; Wang, Y. Using the discrete wavelet frame transform to merge Landsat TM and SPOT panchromatic images. Inf. Fusion 2002, 3, 17–23.

11. Yang, Y.; Tong, S.; Huang, S.; Lin, P. Multi-focus Image Fusion Based on NSCT and Focused Area Detection. IEEE Sens. J. 2015, 15, 2824–2838.

12. Yuan, Q.; Wei, Y.; Meng, X.; Shen, H.; Zhang, L. A multiscale and multidepth convolutional neural network for remote sensing imagery pan-sharpening. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 978–989.

13. Fasbender, D.; Radoux, J.; Bogaert, P. Bayesian data fusion for adaptable image pansharpening. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1847–1857.

14. Joshi, M.; Jalobeanu, A. MAP estimation for multiresolution fusion in remotely sensed images using an IGMRF prior model. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1245–1255.

15. Cheng, J.; Liu, H.; Liu, T.; Wang, F.; Li, H. Remote sensing image fusion via wavelet transform and sparse representation. ISPRS J. Photogramm. Remote Sens. 2015, 104, 158–173.

16. Yang, Y.; Wan, W.; Huang, S.; Lin, P.; Que, Y. A Novel Pan-Sharpening Framework Based on Matting Model and Multiscale Transform. Remote Sens. 2017, 9, 391.

17. Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 2016, 8, 594.

18. Yang, J.; Fu, X.; Hu, Y.; Huang, Y.; Ding, X.; Paisley, J. PanNet: A deep network architecture for pan-sharpening. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

19. Wei, Y.; Yuan, Q. Deep residual learning for remote sensed imagery pansharpening. In Proceedings of the 2017 International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shanghai, China, 18–21 May 2017.

20. Shao, Z.; Cai, J. Remote sensing image fusion with deep convolutional neural network. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 1656–1669.

21. He, L.; Rao, Y.; Li, J.; Chanussot, J.; Plaza, A.; Zhu, J.; Li, B. Pansharpening via Detail Injection Based Convolutional Neural Networks. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2019, 12, 1188–1204.

22. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

23. Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

24. Nezhad, Z.H.; Karami, A.; Heylen, R.; Scheunders, P. Fusion of hyperspectral and multispectral images using spectral unmixing and sparse coding. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2016, 9, 2377–2389.

25. Dumoulin, V.; Visin, F. A Guide to Convolution Arithmetic for Deep Learning [Online]. Available online: https://arxiv.org/abs/1603.07285 (accessed on 5 February 2020).

26. Chong, N.; Wong, L.; See, J. GANmera: Reproducing aesthetically pleasing photographs using deep adversarial networks. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019.

27. Zhu, X.X.; Bamler, R. A sparse image fusion algorithm with application to pan-sharpening. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2827–2836.

28. Alparone, L.; Wald, L.; Chanussot, J.; Thomas, C.; Gamba, P.; Bruce, L.M. Comparison of pansharpening algorithms: Outcome of the 2006 GRSS data fusion contest. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3012–3021.

29. Wald, L. Quality of high resolution synthesised images: Is there a simple criterion? In Proceedings of the 3rd Conference Fusion Earth Data: Merging Point Measurement, Raster Maps and Remotely Sensed Images, Sophia Antipolis, France, 26–28 January 2000.

30. Garzelli, A.; Nencini, F. Hypercomplex quality assessment of multi-/hyper-spectral images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 662–665.

31. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A. Context-driven fusion of high spatial and spectral resolution images based on oversampled multiresolution analysis. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2300–2312.

32. Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

33. Selva, M.; Santurri, L.; Baronti, S. On the use of the expanded image in quality assessment of pansharpened images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 320–324.

34. Yang, Y.; Nie, Z.; Huang, S.; Lin, P.; Wu, J. Multilevel features convolutional neural network for multifocus image fusion. IEEE Trans. Comput. Imag. 2019, 5, 262–273.

35. Rahmani, S.; Strait, M.; Merkurjev, D.; Moeller, M.; Wittman, T. An adaptive IHS pan-sharpening method. IEEE Geosci. Remote Sens. Lett. 2010, 7, 746–750.

36. Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS+Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

37. Gillespie, A.; Kahle, A.; Walker, R. Color enhancement of highly correlated images. II. Channel ratio and “Chromaticity” transformation techniques. Remote Sens. Environ. 1987, 22, 343–365.

38. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored multiscale fusion of high-resolution MS and Pan imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

39. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. An MTF-based spectral distortion minimizing model for pan-sharpening of very high resolution multispectral images of urban areas. In Proceedings of the 2nd GRSS/ISPRS Joint Workshop Remote Sens. Data Fusion URBAN Areas, Berlin, Germany, 22–23 May 2003.

40. Yang, Y.; Wu, L.; Huang, S.; Tang, Y.; Wan, W. Pansharpening for multiband images with adaptive spectral-intensity modulation. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 3196–3208.

41. Wei, Y.; Yuan, Q.; Shen, H.; Zhang, L. Boosting the accuracy of multispectral image pansharpening by learning a deep residual network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1795–1799.

42. Alparone, L.; Aiazzi, B.; Baronti, S.; Garzelli, A.; Nencini, F.; Selva, M. Multispectral and panchromatic data fusion assessment without reference. Photogramm. Eng. Remote Sens. 2008, 74, 193–200.

| Methods | PSNR (↑) | UIQI (↑) |

|---|---|---|

| PCDRN using MSE loss | 31.3716 | 0.9754 |

| PCDRN using MSE+UIQI loss | 31.5914 | 0.9767 |

| Methods | PSNR (↑) | CC (↑) | UIQI (↑) | Q2n (↑) | SAM (↓) | ERGAS (↓) |

|---|---|---|---|---|---|---|

| Transposed convolution | 26.3580 | 0.9705 | 0.9576 | 0.8460 | 5.0280 | 5.3059 |

| Resize-convolution | 26.6129 | 0.9760 | 0.9621 | 0.8412 | 4.4173 | 5.1850 |

| Satellite | PAN | MS |

|---|---|---|

| Pléiades | 0.5 m GSD (0.7 m GSD at nadir) | 2 m GSD (2.8 m GSD at nadir) |

| WorldView-3 | 0.31 m GSD at nadir | 1.24 m GSD at nadir |

| Methods | PSNR (↑) | CC (↑) | UIQI (↑) | Q2n (↑) | SAM (↓) | ERGAS (↓) |

|---|---|---|---|---|---|---|

| EXP * | 26.5538 | 0.9008 | 0.8900 | 0.8129 | 4.1067 | 5.0701 |

| AIHS | 27.4025 | 0.9231 | 0.9074 | 0.8491 | 4.5483 | 4.6003 |

| ATWT | 28.0016 | 0.9235 | 0.9279 | 0.8745 | 4.5283 | 4.2789 |

| GSA | 27.0666 | 0.9130 | 0.9265 | 0.8702 | 4.5274 | 4.7951 |

| BT | 20.6228 | 0.8936 | 0.8179 | 0.4855 | 4.3264 | 15.1748 |

| MTF_GLP_CBD | 27.2334 | 0.9129 | 0.9276 | 0.8712 | 4.4921 | 4.6934 |

| MMMT | 27.7634 | 0.9212 | 0.9225 | 0.8698 | 4.7816 | 4.3933 |

| GS | 26.7568 | 0.9046 | 0.8889 | 0.8366 | 4.5298 | 4.9587 |

| MTF_GLP_HPM | 22.3144 | 0.7899 | 0.8482 | 0.7274 | 4.7561 | 7.6598 |

| ASIM | 28.8910 | 0.9383 | 0.9448 | 0.9059 | 4.1985 | 3.8388 |

| DRPNN | 29.2947 | 0.9712 | 0.9500 | 0.9070 | 3.4037 | 3.9624 |

| MSDCNN | 28.0166 | 0.9608 | 0.9394 | 0.8660 | 3.4079 | 4.6946 |

| PCDRN | 30.2689 | 0.9846 | 0.9681 | 0.9171 | 2.9567 | 3.5617 |
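The SAM and ERGAS columns above can be illustrated with a minimal sketch of their standard definitions. It assumes that SAM is reported in degrees, that each image is given as a list of per-pixel spectra with no all-zero spectrum, and that the PAN/MS resolution ratio is 4 (for example 2 m / 0.5 m); the function names are hypothetical and this is not the evaluation code used for the tables.

```python
import math


def sam_degrees(ref, fused):
    """Mean spectral angle, in degrees, between corresponding pixel spectra."""
    angles = []
    for r, f in zip(ref, fused):
        dot = sum(a * b for a, b in zip(r, f))
        norm_r = math.sqrt(sum(a * a for a in r))
        norm_f = math.sqrt(sum(b * b for b in f))
        # Clamp to [-1, 1] to guard against rounding before acos.
        cos = max(-1.0, min(1.0, dot / (norm_r * norm_f)))
        angles.append(math.degrees(math.acos(cos)))
    return sum(angles) / len(angles)


def ergas(ref, fused, ratio=4):
    """ERGAS = (100 / ratio) * sqrt(mean over bands of MSE_k / mean_k^2)."""
    n_bands = len(ref[0])
    total = 0.0
    for k in range(n_bands):
        r = [p[k] for p in ref]
        f = [p[k] for p in fused]
        mse = sum((a - b) ** 2 for a, b in zip(r, f)) / len(r)
        mean_ref = sum(r) / len(r)
        total += mse / mean_ref ** 2
    return 100.0 / ratio * math.sqrt(total / n_bands)


reference = [[10.0, 20.0], [10.0, 20.0]]   # two pixels, two bands
brighter = [[11.0, 22.0], [11.0, 22.0]]    # same spectra scaled by 1.1
print(sam_degrees(reference, brighter))    # scaling leaves the angle near zero
print(ergas(reference, brighter))          # relative error of 10% per band gives about 2.5
```

The toy example shows why the two metrics are reported together: a pure brightness change leaves SAM essentially unchanged while ERGAS increases, so SAM isolates spectral distortion and ERGAS captures overall radiometric error.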

| Methods | PSNR (↑) | CC (↑) | UIQI (↑) | Q2n (↑) | SAM (↓) | ERGAS (↓) |

|---|---|---|---|---|---|---|

| EXP * | 26.9511 | 0.9279 | 0.9257 | 0.8161 | 3.1093 | 3.9761 |

| AIHS | 28.2598 | 0.9502 | 0.9431 | 0.8659 | 3.4181 | 3.4507 |

| ATWT | 28.7868 | 0.9504 | 0.9550 | 0.8832 | 3.3870 | 3.2380 |

| GSA | 27.9760 | 0.9454 | 0.9530 | 0.8757 | 3.5046 | 3.5725 |

| BT | 19.8484 | 0.9355 | 0.8576 | 0.5249 | 3.2301 | 12.8501 |

| MTF_GLP_CBD | 27.9234 | 0.9437 | 0.9519 | 0.8717 | 3.5005 | 3.5770 |

| MMMT | 28.6014 | 0.9496 | 0.9528 | 0.8839 | 3.5549 | 3.2942 |

| GS | 27.5243 | 0.9400 | 0.9331 | 0.8536 | 3.5229 | 3.7475 |

| MTF_GLP_HPM | 23.8909 | 0.8678 | 0.8995 | 0.7632 | 3.9791 | 5.5050 |

| ASIM | 29.4570 | 0.9594 | 0.9643 | 0.9067 | 3.2244 | 2.9717 |

| DRPNN | 30.1216 | 0.9805 | 0.9680 | 0.8846 | 2.6809 | 2.9884 |

| MSDCNN | 29.2428 | 0.9739 | 0.9629 | 0.8638 | 2.7117 | 3.2713 |

| PCDRN | 31.5914 | 0.9884 | 0.9767 | 0.9004 | 2.3488 | 2.6322 |

| Methods | PSNR (↑) | CC (↑) | UIQI (↑) | Q2n (↑) | SAM (↓) | ERGAS (↓) |

|---|---|---|---|---|---|---|

| EXP * | 21.7015 | 0.8676 | 0.8758 | 0.6946 | 4.9591 | 7.5501 |

| AIHS | 23.4222 | 0.9412 | 0.9097 | 0.8054 | 5.9824 | 5.6479 |

| ATWT | 25.3147 | 0.9466 | 0.9433 | 0.8957 | 5.7960 | 4.9356 |

| GSA | 25.6204 | 0.9480 | 0.9502 | 0.9045 | 7.0358 | 4.7366 |

| BT | 20.9394 | 0.9353 | 0.8793 | 0.6533 | 5.0673 | 10.7228 |

| MTF_GLP_CBD | 25.6786 | 0.9485 | 0.9490 | 0.9053 | 6.7749 | 4.6830 |

| MMMT | 24.9489 | 0.9422 | 0.9367 | 0.8862 | 6.0845 | 5.1410 |

| GS | 23.5740 | 0.9267 | 0.9081 | 0.8455 | 6.6026 | 6.1103 |

| MTF_GLP_HPM | 23.1149 | 0.9185 | 0.9277 | 0.8365 | 5.2112 | 5.9757 |

| ASIM | 25.6169 | 0.9473 | 0.9488 | 0.9083 | 5.9963 | 4.7283 |

| DRPNN | 24.5411 | 0.9604 | 0.9258 | 0.8676 | 5.8623 | 5.6337 |

| MSDCNN | 23.9079 | 0.9508 | 0.9175 | 0.8470 | 5.8183 | 6.1578 |

| PCDRN | 26.0631 | 0.9817 | 0.9566 | 0.9206 | 4.7731 | 4.9920 |

| Methods | PSNR (↑) | CC (↑) | UIQI (↑) | Q2n (↑) | SAM (↓) | ERGAS (↓) |

|---|---|---|---|---|---|---|

| EXP * | 24.4725 | 0.8696 | 0.8754 | 0.6853 | 4.5627 | 6.2634 |

| AIHS | 26.0239 | 0.9431 | 0.9087 | 0.7960 | 5.1772 | 4.9621 |

| ATWT | 27.7866 | 0.9460 | 0.9419 | 0.8771 | 4.9188 | 4.1494 |

| GSA | 28.3915 | 0.9501 | 0.9534 | 0.8962 | 5.3194 | 3.8844 |

| BT | 21.8128 | 0.9367 | 0.8728 | 0.5729 | 4.6377 | 10.9669 |

| MTF_GLP_CBD | 28.2251 | 0.9483 | 0.9510 | 0.8906 | 5.2584 | 3.9354 |

| MMMT | 27.5249 | 0.9433 | 0.9355 | 0.8650 | 5.0332 | 4.2669 |

| GS | 26.8001 | 0.9399 | 0.9183 | 0.8504 | 5.2075 | 4.7135 |

| MTF_GLP_HPM | 23.6310 | 0.8831 | 0.8986 | 0.7906 | 5.7262 | 6.3586 |

| ASIM | 28.1808 | 0.9475 | 0.9484 | 0.8869 | 4.8056 | 3.9356 |

| DRPNN | 27.0167 | 0.9566 | 0.9337 | 0.8449 | 4.9077 | 4.5371 |

| MSDCNN | 26.5101 | 0.9506 | 0.9263 | 0.8300 | 5.0109 | 4.8904 |

| PCDRN | 28.8141 | 0.9781 | 0.9571 | 0.8872 | 4.2326 | 3.7930 |

| Methods | Dλ (↓) | DS (↓) | QNR (↑) |

|---|---|---|---|

| EXP * | 0.0012 | 0.1961 | 0.8029 |

| AIHS | 0.0954 | 0.1035 | 0.8110 |

| ATWT | 0.1055 | 0.1080 | 0.7978 |

| GSA | 0.1447 | 0.1621 | 0.7167 |

| BT | 0.1509 | 0.1192 | 0.7479 |

| MTF_GLP_CBD | 0.1145 | 0.1042 | 0.7932 |

| MMMT | 0.0997 | 0.0908 | 0.8185 |

| GS | 0.1110 | 0.1452 | 0.7599 |

| MTF_GLP_HPM | 0.1284 | 0.1497 | 0.7410 |

| ASIM | 0.1008 | 0.0996 | 0.8096 |

| DRPNN | 0.0581 | 0.0162 | 0.9266 |

| MSDCNN | 0.0495 | 0.0243 | 0.9274 |

| PCDRN | 0.0376 | 0.0276 | 0.9358 |
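The three columns above are linked by the usual definition of the no-reference index, QNR = (1 − Dλ)^α (1 − DS)^β [42]. The following sketch recomputes QNR for a few rows of the table, assuming α = β = 1 (an assumption, since the exponents are not stated here); the function name `qnr` is illustrative.

```python
def qnr(d_lambda, d_s, alpha=1.0, beta=1.0):
    """Quality with no reference from spectral (d_lambda) and spatial (d_s) distortion."""
    return (1.0 - d_lambda) ** alpha * (1.0 - d_s) ** beta


# (D_lambda, D_S, reported QNR) copied from the table above.
rows = {
    "EXP": (0.0012, 0.1961, 0.8029),
    "DRPNN": (0.0581, 0.0162, 0.9266),
    "MSDCNN": (0.0495, 0.0243, 0.9274),
    "PCDRN": (0.0376, 0.0276, 0.9358),
}

for name, (d_lambda, d_s, reported) in rows.items():
    print(f"{name}: computed {qnr(d_lambda, d_s):.4f}, reported {reported:.4f}")
```

For the rows checked here, the product reproduces the reported QNR to four decimals, which also makes clear why EXP scores poorly despite its very small Dλ: its large DS dominates the product.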

| Methods | Dλ (↓) | DS (↓) | QNR (↑) |

|---|---|---|---|

| EXP * | 0.0026 | 0.2055 | 0.7924 |

| AIHS | 0.1021 | 0.1142 | 0.7961 |

| ATWT | 0.1121 | 0.1172 | 0.7848 |

| GSA | 0.1347 | 0.1619 | 0.7259 |

| BT | 0.1660 | 0.1433 | 0.7153 |

| MTF_GLP_CBD | 0.1098 | 0.1011 | 0.8009 |

| MMMT | 0.1013 | 0.0982 | 0.8107 |

| GS | 0.1076 | 0.1465 | 0.7625 |

| MTF_GLP_HPM | 0.1424 | 0.1427 | 0.7368 |

| ASIM | 0.1105 | 0.1142 | 0.7887 |

| DRPNN | 0.0533 | 0.0363 | 0.9123 |

| MSDCNN | 0.0434 | 0.0462 | 0.9123 |

| PCDRN | 0.0375 | 0.0382 | 0.9256 |

| Methods | Dλ (↓) | DS (↓) | QNR (↑) |

|---|---|---|---|

| EXP * | 0.0005 | 0.1082 | 0.8913 |

| AIHS | 0.0391 | 0.0457 | 0.9169 |

| ATWT | 0.0676 | 0.0730 | 0.8643 |

| GSA | 0.0881 | 0.1130 | 0.8089 |

| BT | 0.1255 | 0.1113 | 0.7772 |

| MTF_GLP_CBD | 0.0749 | 0.0738 | 0.8568 |

| MMMT | 0.0493 | 0.0602 | 0.8935 |

| GS | 0.0534 | 0.0633 | 0.8866 |

| MTF_GLP_HPM | 0.1167 | 0.1460 | 0.7544 |

| ASIM | 0.0857 | 0.0907 | 0.8314 |

| DRPNN | 0.0589 | 0.0586 | 0.8859 |

| MSDCNN | 0.0554 | 0.0683 | 0.8801 |

| PCDRN | 0.0281 | 0.0480 | 0.9253 |

| Methods | Dλ (↓) | DS (↓) | QNR (↑) |

|---|---|---|---|

| EXP * | 0.0028 | 0.1163 | 0.8813 |

| AIHS | 0.0585 | 0.1032 | 0.8451 |

| ATWT | 0.0679 | 0.1113 | 0.8292 |

| GSA | 0.0778 | 0.1324 | 0.8011 |

| BT | 0.0994 | 0.1066 | 0.8058 |

| MTF_GLP_CBD | 0.0778 | 0.1041 | 0.8272 |

| MMMT | 0.0689 | 0.1024 | 0.8366 |

| GS | 0.0507 | 0.1106 | 0.8452 |

| MTF_GLP_HPM | 0.1011 | 0.1363 | 0.7782 |

| ASIM | 0.0803 | 0.1046 | 0.8241 |

| DRPNN | 0.0644 | 0.0836 | 0.8583 |

| MSDCNN | 0.0554 | 0.0849 | 0.8649 |

| PCDRN | 0.0279 | 0.0450 | 0.9285 |

| Methods | PSNR (↑) | CC (↑) | UIQI (↑) | Q2n (↑) | SAM (↓) | ERGAS (↓) |

|---|---|---|---|---|---|---|

| EXP * | 27.1185 | 0.9287 | 0.9286 | 0.7936 | 3.3655 | 4.9278 |

| AIHS | 27.2114 | 0.9276 | 0.9273 | 0.7744 | 4.2540 | 5.0893 |

| ATWT | 27.3592 | 0.9231 | 0.9343 | 0.7742 | 4.5509 | 5.0941 |

| GSA | 26.2029 | 0.9143 | 0.9286 | 0.7534 | 5.3822 | 5.8476 |

| BT | 23.2679 | 0.9145 | 0.8656 | 0.6511 | 5.0901 | 9.0333 |

| MTF_GLP_CBD | 26.4855 | 0.9177 | 0.9313 | 0.7586 | 5.2536 | 5.6210 |

| MMMT | 27.2816 | 0.9275 | 0.9353 | 0.8046 | 4.5076 | 4.9474 |

| GS | 26.4368 | 0.9136 | 0.9164 | 0.7423 | 4.8918 | 5.6067 |

| MTF_GLP_HPM | 21.9264 | 0.8428 | 0.8587 | 0.6403 | 6.1466 | 7.9485 |

| ASIM | 27.6697 | 0.9336 | 0.9400 | 0.8234 | 4.2760 | 4.8197 |

| DRPNN | 28.7108 | 0.9692 | 0.9525 | 0.8474 | 3.5782 | 4.3122 |

| MSDCNN | 27.4453 | 0.9538 | 0.9405 | 0.8234 | 3.7876 | 4.9131 |

| PCDRN | 29.5375 | 0.9853 | 0.9603 | 0.8691 | 3.2530 | 4.0998 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, Y.; Tu, W.; Huang, S.; Lu, H. PCDRN: Progressive Cascade Deep Residual Network for Pansharpening. Remote Sens. 2020, 12, 676. https://doi.org/10.3390/rs12040676
