Abstract

Hyperspectral image classification methods may not achieve good performance when only a limited number of training samples is provided. However, labeling sufficient samples of hyperspectral images to achieve adequate training is quite expensive and difficult. In this paper, we propose a novel sample pseudo-labeling method based on sparse representation (SRSPL) for hyperspectral image classification, in which sparse representation is used to select the purest samples to extend the training set. The proposed method consists of the following three steps. First, intrinsic image decomposition is used to obtain the reflectance components of hyperspectral images. Second, hyperspectral pixels are sparsely represented using an overcomplete dictionary composed of all training samples. Finally, information entropy is defined for the vectorized sparse representation, and then the pixels with low information entropy are selected as pseudo-labeled samples to augment the training set. The quality of the generated pseudo-labeled samples is evaluated based on classification accuracy, i.e., overall accuracy, average accuracy, and Kappa coefficient. Experimental results on four real hyperspectral data sets demonstrate excellent classification performance using the newly added pseudo-labeled samples, which indicates that the generated samples are of high confidence.

1. Introduction

Hyperspectral images (HSIs) have hundreds of spectral bands that contain detailed spectral information. Thus, hyperspectral images are widely used in precision agriculture [1], geological prospecting [2], target detection [3], and landscape classification [4]. Classification is one of the important branches in hyperspectral image processing [5] because it can help people understand hyperspectral image scenes via visual classification. However, in the context of supervised classification, the existence of the “Hughes phenomenon” [6] caused by an imbalance between the limited training samples and the extreme spectral dimension of hyperspectral images influences the classification performance [7].

To alleviate the “Hughes phenomenon”, dimensionality reduction [8,9] and semi-supervised classification [10,11] have been extensively studied. The former reduces the dimensions of hyperspectral images, and the latter increases the number of training samples. In general, dimensionality reduction methods can be divided into two categories: feature extraction and feature selection. Feature extraction [12,13] reduces computational complexity by projecting high-dimensional data into a low-dimensional data space, and feature selection [14] selects appropriate bands from the original set of spectral bands. In addition to dimensionality reduction, semi-supervised classification is often used in the case of small samples. For example, graph-based [15,16], active learning-based [17,18], and generative model-based [19,20] methods have attracted the attention of researchers seeking to tackle the problems caused by the limited availability of labeled samples. These semi-supervised methods can compensate for the lack of labeled samples and improve the classification results.

Pseudo-labeled sample generation is also a commonly used method for semi-supervised classification, which attempts to assign pseudo-labels to more samples. In [21], a combination of active learning (AL) and semi-supervised learning (SSL) is used in a novel manner to obtain more pseudo-labeled samples. In [22], high-confidence semi-labeled samples whose class labels are determined by an ML classifier are added to the training samples to improve hyperspectral image classification performance. In [23], a constrained Dirichlet process mixture model is proposed that produces high-quality pseudo-labels for unlabeled data. In [24], a sifting generative adversarial network is used to generate more numerous, more diverse, and more authentic labeled samples for data augmentation. However, active learning requires manual intervention, and the other methods require multiple iterations or deep learning to converge.

In recent years, sparse representation-based classification (SRC) methods have drawn much attention in dealing with small training sample problems in hyperspectral image classification [25,26]. The goal of SRC is to represent an unknown sample compactly using only a small number of atoms in an overcomplete dictionary. The class label with the minimal representative error will be assigned to the unlabeled samples. Some researchers extended the SRC-based methods to utilize spatial information in hyperspectral images, e.g., joint SRC [27,28], kernel SRC [29,30,31], adaptive SRC [32,33], etc. In addition, there was also some work that used multi-objective optimization to improve SRC directly [34,35]. Ideally, the nonzero elements in a sparse representation vector will be associated with atoms of a single class in the dictionary, and we can easily assign a test sample to that class.

Motivated by sparse representation, a novel sparse representation-based sample pseudo-labeling (SRSPL) method is proposed. In the field of remote sensing, if a pixel contains only one type of land cover, this pixel is called a pure pixel; otherwise, it is a mixed pixel. Due to the limitation of the spatial resolution of the imaging spectrometers, the problem of mixed pixels is quite common in hyperspectral remote sensing images [36]. In general, for the mixed pixels of HSIs, the sparse representation of their spectral features usually involves multiple classes, so the representation coefficient vectors have larger entropies. Conversely, for the pure pixels of HSIs, the spectral features can be linearly represented using atoms from a single class in the overcomplete dictionary, so the representation coefficient vectors of pure pixels will have smaller entropies. Thus, the pixels with small sparse representation entropies can be used to augment the training samples. Using this method, the size of the training sample set can be effectively increased. However, due to factors such as illumination, shading, and noise, the spectral features of different land covers in natural scenes are usually subject to some degree of distortion. Consequently, the generated pseudo-labeled samples cannot guarantee a high quality when the sparse representation method is applied to the hyperspectral pixels. To solve this problem, the intrinsic image decomposition (IID) [37] method is used in this paper before generating samples to extract the spectral reflectance components of the original hyperspectral image. The classification accuracy on four HSIs verifies that the pseudo-labeled samples generated by the proposed method are of high quality.

The main innovations of the proposed SRSPL method are summarized as follows:

1. The information entropy of sparse representation coefficient vectors is used, for the first time, in the training sample generation of HSIs. The SRC method assigns class labels with minimal sparse representation errors to hyperspectral pixels other than training samples and test samples. However, we only select pixels with small class uncertainty as pseudo-labeled samples to augment the original training set.

2. We have found that, for HSI, remote sensing reflectance extraction is necessary before using the sparse representation method. In this paper, the effective IID method is performed on the HSI, which is beneficial for reducing the sparse representation error.

The experimental results illustrate that the proposed method for pseudo-labeled sample generation based on sparse representation is very effective in improving the classification results.

2. Related Work

2.1. Intrinsic Image Decomposition of Hyperspectral Images

Intrinsic image decomposition (IID) [37] is a challenging problem in computer vision that aims at modeling the perceiving function of human vision to distinguish the reflectance and shading of the objects from a single image [38]. Since the intrinsic components of an image reflect different physical characteristics of the scene, e.g., reflectance, illumination, and shading, many issues such as natural image segmentation [39] can benefit from IID. Given a single RGB image $I$, the IID algorithm decomposes $I$ into two components: the spectral reflectance component $R$ and the shading component $S$:

$$I = R \circ S \tag{1}$$

We denote each pixel $i$ as $I_i = R_i s_i$, where $I_i = (I_{ir}, I_{ig}, I_{ib})$ and $R_i = (R_{ir}, R_{ig}, R_{ib})$. The parameters r, g, and b refer to the red, green, and blue channels of a color image, respectively.

The optimization-based IID [40] method is based on the assumption of the local color characteristics in images; in a local window of an image, the changes in pixel values are usually caused by changes in the reflectance [41]. Under this assumption, the shading component could be separated from the input image. Thus, the reflectance value of one pixel can be represented by the weighted summation of its neighborhood pixel values:

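$$R_i = \sum_{j \in N(i)} a_{ij} R_j, \qquad a_{ij} = \exp\left(-\left[\frac{(Y_i - Y_j)^2}{\sigma_{iY}^2} + \frac{T(I_i, I_j)^2}{\sigma_{iT}^2}\right]\right) \tag{2}$$

where $N(i)$ is the set of neighboring pixels around pixel i; $a_{ij}$ measures the similarity of the intensity value and the spectral angle value between pixel i and pixel j; $Y$ represents the intensity image, which is calculated by averaging all the image bands; $T(I_i, I_j)$ denotes the angle between the pixel vectors $I_i$ and $I_j$; and $\sigma_{iY}^2$ and $\sigma_{iT}^2$ denote the variance of the intensities and the angle in a local window around i, respectively.

Based on Equations (1) and (2), the shading component $S$ and the reflectance component $R$ can be obtained by optimizing the following energy function:

$$E(R, S) = \sum_{i} \Big(R_i - \sum_{j \in N(i)} a_{ij} R_j\Big)^2 + \sum_{i} \Big(\frac{I_i}{s_i} - R_i\Big)^2 \tag{3}$$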

The complete description of the optimized process can be found in [40].

Kang et al. [37] first extended the IID as a feature extraction method from a three-band image to a hyperspectral image (HSI) of more than 100 bands and achieved excellent results. Here, the pixel values of HSIs are determined by the spectral reflectance, which is determined by the material of different objects, and the shading component, which consists of light and shape-dependent properties. The shading component is not directly related to the material of the object. Therefore, IID is adopted to extract the spectral reflectance components of the HSI to distinguish more classes, and remove useless spatial information preserved in the shading component of the HSIs.

2.2. Sparse Representation Classification of Hyperspectral Images

The sparse representation classification (SRC) framework was first introduced for face recognition [42]. Chen et al. [43] extended the SRC to pixelwise HSI classification, which relied on the observation that spectral pixels of a particular class should lie in a low-dimensional subspace spanned by dictionary atoms (training pixels) from the same class. An unknown test pixel can be represented as a linear combination of training pixels from all classes. For HSI classification, suppose that we have C distinct classes and stack an overcomplete dictionary $D = [D_1, D_2, \dots, D_C] \in \mathbb{R}^{B \times N}$, where B denotes the number of bands and N is the number of training samples. $\mathcal{L} = \{1, 2, \dots, C\}$ is the set of labels, and $c \in \mathcal{L}$ refers to the cth class. For a test sample $x \in \mathbb{R}^{B}$ of the HSI, $x$ can be represented as follows:

$$x = D\alpha \tag{4}$$

where $\alpha \in \mathbb{R}^{N}$ represents the sparse vector. Intuitively, the sparsity of the vector can be measured by its $\ell_0$-norm (the $\ell_0$-norm counts the number of nonzero entries in a vector). Since combinatorial $\ell_0$-norm minimization is an NP-hard problem, $\ell_1$-norm minimization, as the closest convex function to $\ell_0$-norm minimization, is widely employed in sparse coding, and it was shown that $\ell_0$-norm and $\ell_1$-norm minimizations are equivalent if the solution is sufficiently sparse [44].

As the hyperspectral signals from the same class often span the same low-dimensional subspace that is constructed by the corresponding training samples, which involves the non-zero entries of the sparse vector, the class of the hyperspectral signal can be directly determined by the characteristics of the recovered sparse vector $\alpha$. Given the dictionary of training samples $D$, the sparse representation coefficient vector $\alpha$ can be recovered by solving the following Lasso problem:

$$\hat{\alpha} = \arg\min_{\alpha} \frac{1}{2}\|x - D\alpha\|_2^2 + \lambda \|\alpha\|_1 \tag{5}$$

where $\|\cdot\|_2$ represents the $\ell_2$ norm, $\|\alpha\|_1$ represents the $\ell_1$ norm of the vector $\alpha$, and $\lambda$ is a scalar regularization parameter. This optimization problem can be solved by Proximal Gradient Descent (PGD) [45]. After the sparse representation vector $\hat{\alpha}$ is obtained, the label of the test sample $x$ of the HSI can be assigned by the minimal reconstructed residual:

$$\mathrm{class}(x) = \arg\min_{c = 1, \dots, C} \|x - D_c \hat{\alpha}_c\|_2 \tag{6}$$

where $D_c$ is the subdictionary of the cth class and $\hat{\alpha}_c$ denotes the representative coefficient of the cth class.
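The recovery and labeling steps described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the common Lasso form $\frac{1}{2}\|x - D\alpha\|_2^2 + \lambda\|\alpha\|_1$, uses plain proximal gradient descent with a fixed step size and a fixed iteration count, and the names `lasso_pgd`, `src_label`, and `atom_labels` are hypothetical.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (elementwise shrinkage toward zero)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_pgd(D, x, lam, n_iter=500):
    """Approximately minimize 0.5*||x - D a||_2^2 + lam*||a||_1 (assumed form)."""
    # Step size 1/L, where L = ||D||_2^2 is the Lipschitz constant of the gradient.
    step = 1.0 / (np.linalg.norm(D, 2) ** 2)
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - x)          # gradient of the quadratic term
        a = soft_threshold(a - step * grad, step * lam)
    return a

def src_label(D, atom_labels, x, lam):
    """Return (label, coefficients): the class with the smallest residual wins."""
    a = lasso_pgd(D, x, lam)
    classes = np.unique(atom_labels)
    residuals = [
        np.linalg.norm(x - D[:, atom_labels == c] @ a[atom_labels == c])
        for c in classes
    ]
    return classes[int(np.argmin(residuals))], a
```

With an identity dictionary the solution reduces to soft-thresholding the sample itself, which makes the behavior easy to check by hand. In practice the columns of `D` would be the training spectra, and an accelerated solver or a convergence test would likely replace the fixed iteration count.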

3. Proposed Method

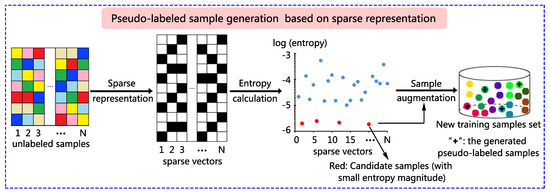

A novel sparse representation-based sample pseudo-labeling (SRSPL) method is proposed in this paper. The SRSPL method consists of the following three steps. First, IID is used to reduce the dimension and noise of the original hyperspectral image. Second, sparse representation is applied to generate the sparse representation vectors of the hyperspectral pixels other than the training samples and test samples. The information entropies of all these hyperspectral pixels are calculated from the sparse representation vectors to discriminate purity, and the samples with low representation entropies are selected as candidate samples. Third, these candidate samples are assigned pseudo-labels by the minimal reconstructed residual. The pseudo-labeled samples are then added to the original training set, and an extended random walker (ERW) classifier is trained to evaluate the sample quality. Figure 1 shows a graphical example illustrating the principle of the proposed SRSPL method.

Figure 1.

Graphical example illustrating the process of pseudo-labeled sample generation based on sparse representation. In the new training sample set, small balls of different colors represent different classes of training samples, and the plus sign represents a newly generated pseudo-labeled sample.

3.1. Feature Extraction of Hyperspectral Images

(1) Spectral Dimensionality Reduction

The initial hyperspectral image $I \in \mathbb{R}^{N \times B}$ is divided into M groups of equal band sizes [37], where N is the number of pixels and B is the spectral dimension of the initial image. The number of bands in each group is denoted by $N_m$.

The averaging-based image fusion is applied to each group and the resulting fused bands are used for further processing:

$$\tilde{I}_m = \frac{1}{N_m} \sum_{n=1}^{N_m} I_m^n \tag{7}$$

where m is the index of the group, $I_m^n$ is the nth band in the mth group of the original hyperspectral data, $N_m$ is the number of bands in the mth group, and $\tilde{I}_m$ is the mth fused band. Here, the averaging-based method ensures that the pixels of the dimensionality-reduced hyperspectral image can still be directly interpreted in a physical sense. In other words, the pixels of the dimensionality-reduced image remain related to the reflectance of the scene. Moreover, this method can effectively remove image noise.
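The averaging-based fusion can be illustrated with a short NumPy sketch. It assumes the image is stored as a `(rows, cols, B)` array and that groups consist of adjacent bands; when B is not divisible by M, the sketch falls back on near-equal group sizes, which is an assumption rather than something the text specifies. The function name `average_fusion` is hypothetical.

```python
import numpy as np

def average_fusion(cube, M):
    """Fuse a (rows, cols, B) cube into M bands by averaging adjacent-band groups."""
    B = cube.shape[2]
    # Near-equal groups of adjacent band indices (assumed handling when B % M != 0).
    groups = np.array_split(np.arange(B), M)
    # Each fused band is the per-pixel mean over the bands of its group.
    return np.stack([cube[:, :, g].mean(axis=2) for g in groups], axis=2)
```

Because each fused band is a plain mean of neighboring bands, its values stay on the same physical scale as the input, which is the property the paragraph above relies on.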

(2) Band Grouping

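The dimensionality-reduced image $\tilde{I}$ is partitioned into several subgroups of adjacent bands as follows:

$$\tilde{I}^k = \begin{cases} (\tilde{I}_{(k-1)Z+1}, \dots, \tilde{I}_{(k-1)Z+Z}), & k = 1, \dots, \lfloor M/Z \rfloor \\ (\tilde{I}_{(k-1)Z+1}, \dots, \tilde{I}_{M}), & k = \lceil M/Z \rceil \neq \lfloor M/Z \rfloor \end{cases} \tag{8}$$

where $\tilde{I}^k$ refers to the kth subgroup, $\lfloor M/Z \rfloor$ is the largest integer not greater than $M/Z$, and $\lceil M/Z \rceil$ is the smallest integer not less than $M/Z$. Here, Z refers to the number of bands in each subgroup.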
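As a small illustration of the grouping step, the following sketch lists which fused bands fall into each subgroup. It assumes that when M is not a multiple of Z the last subgroup simply holds the remaining bands, so that the subgroups together still cover exactly M bands; the helper name `subgroup_indices` is hypothetical and indices are 0-based.

```python
import math

def subgroup_indices(M, Z):
    """Return 0-based band indices for each subgroup of up to Z adjacent bands."""
    K = math.ceil(M / Z)  # number of subgroups
    # The final subgroup is truncated at M when M is not a multiple of Z (assumption).
    return [list(range(k * Z, min((k + 1) * Z, M))) for k in range(K)]
```

For example, `subgroup_indices(32, 4)` yields eight subgroups of four bands each, so no truncated subgroup arises for that choice of M and Z.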

(3) Reflectance Extraction with the Optimization-Based IID

For each subgroup $\tilde{I}^k$, the optimization-based IID method is used to obtain the reflectance and shading components, and the equation is as follows:

$$(R^k, S^k) = \mathrm{IID}(\tilde{I}^k) \tag{9}$$

where $R^k$ and $S^k$ refer to the reflectance and the shading components of the kth subgroup, respectively.

The reflectance components of the different subgroups are combined to obtain the resulting IID features, which is an M-dimensional feature matrix that can be used for subsequent processing.

3.2. Candidate Sample Selection Based on Sparse Representation

To find the purest pixels and use them as candidate samples, we first calculate the sparse representation of all the hyperspectral samples other than the training samples and test samples (denoted as $X_u$). Then, we determine the purity of each sample by calculating the information entropy of its sparse representation. When a sample has a lower sparse representation information entropy, it is very likely to be a pure pixel. The process can be described as follows:

(1) Calculating the Hyperspectral Sample’s Sparse Representation

For a given sample $x_i \in X_u$, $r_i$ denotes the reflectance component of $x_i$, and $r_i \in \mathbb{R}^{M}$. The goal of sparse representation is to represent $r_i$ by a sparse linear combination of the training samples that make up the dictionary. Suppose $\mathcal{L} = \{1, 2, \dots, C\}$ is a set of labels and $c \in \mathcal{L}$ refers to the cth class. The representation coefficient of $r_i$ can be calculated based on an overcomplete dictionary $D$ according to Equation (5). Therefore, $r_i$ is assumed to lie in the union of the C different subspaces, which can be seen as the sparse linear combination of all the training samples:

$$r_i = D_1 \alpha_i^1 + D_2 \alpha_i^2 + \dots + D_C \alpha_i^C = D\alpha_i \tag{11}$$

where $D = [D_1, D_2, \dots, D_C] \in \mathbb{R}^{M \times N}$ is the structured overcomplete dictionary, which consists of the class sub-dictionaries $\{D_c\}_{c=1}^{C}$. N is the number of training samples, and $\alpha_i$ is an N-dimensional representation coefficient vector formed by concatenating the sparse vectors $\{\alpha_i^c\}_{c=1}^{C}$.

The representation coefficient of $r_i$ can be recovered by solving the following optimization problem:

$$\hat{\alpha}_i = \arg\min_{\alpha_i} \frac{1}{2}\|r_i - D\alpha_i\|_2^2 + \lambda \|\alpha_i\|_1 \tag{12}$$

where $\lambda$ is a scalar regularization parameter whose optimal value is determined experimentally.

(2) Discriminating the Purity of Hyperspectral Samples based on Information Entropy

The information entropy of each $r_i$ is calculated based on its representation coefficient:

$$h_i = H(\hat{\alpha}_i) \tag{13}$$

where $H(\cdot)$ denotes an entropy function and $\hat{\alpha}_i$ is the representation coefficient vector of $r_i$. The purity of $x_i$ is determined according to the magnitude of the entropy.

(3) Finding the Candidate Samples

First, the information entropy for the reflectance component $r_i$ of each $x_i \in X_u$ is obtained. Second, the samples corresponding to these reflectance components are sorted in ascending order according to the magnitude of their entropies. Third, the first T samples are selected as the candidate sample set $X_c$, where the parameter T is determined experimentally.
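The selection procedure can be sketched as follows. The text does not spell out the entropy function, so this sketch assumes one plausible choice: the Shannon entropy of the absolute coefficients normalized to sum to one. A variant that first sums the coefficient magnitudes per class would be another reasonable reading. The function names are hypothetical.

```python
import math

def coefficient_entropy(alpha, eps=1e-12):
    """Shannon entropy of normalized absolute coefficients (assumed definition)."""
    mags = [abs(a) for a in alpha]
    total = sum(mags)
    if total < eps:
        # All-zero vector: no atom is preferred, so treat it as maximally uncertain.
        return math.log(len(alpha))
    probs = [m / total for m in mags if m > 0]
    return -sum(p * math.log(p) for p in probs)

def select_candidates(alphas, T):
    """Indices of the T samples with the lowest entropy, in ascending entropy order."""
    order = sorted(range(len(alphas)), key=lambda i: coefficient_entropy(alphas[i]))
    return order[:T]
```

Under this definition a coefficient vector concentrated on a single atom has entropy zero, while a vector spread evenly over n atoms has entropy log n, so sorting in ascending order puts the most concentrated representations first.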

3.3. Pseudo-Label Assignment for Candidate Samples

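For each candidate sample $x_i \in X_c$, one pseudo-label $y_i$ is determined based on the minimal reconstructed residual:

$$y_i = \arg\min_{c = 1, \dots, C} \|r_i - D_c \hat{\alpha}_i^c\|_2 \tag{14}$$

where $D_c$ is the sub-dictionary of the cth class and $\hat{\alpha}_i^c$ denotes the representative coefficient of the cth class. Thus, the pseudo-labeled sample set is $X_p = \{(x_i, y_i)\}_{i=1}^{T}$.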

3.4. Classification Model Optimization Using Pseudo-Labeled Samples

First, the pseudo-labeled sample set $X_p$ and the initial labeled sample set $X_l$ are combined to form the new labeled sample set $X_n = X_l \cup X_p$. Then, the ERW [46] algorithm is used as a classification model to evaluate the quality of these newly generated pseudo-labeled samples. The ERW algorithm calculates a set of optimized class probabilities for each pixel, and each pixel is assigned to the class with the maximum probability.

3.5. Pseudo-Code of the Proposed SRSPL Method

For a detailed illustration of the proposed SRSPL method, the pseudocode of the proposed SRSPL method is shown in Algorithm 1.

| Algorithm 1: Sparse Representation-based Sample Pseudo-Labeling (SRSPL) for Hyperspectral Image Classification |
| --- |
| Input: Hyperspectral image $I$; the initial labeled sample set $X_l$; the hyperspectral sample set other than the training samples and test samples, $X_u$ |
| Output: Classification map |
| 1: Reduce the dimension and noise of $I$ based on averaging image fusion (Equation (7)) to obtain the dimensionally reduced image $\tilde{I}$. |
| 2: According to Equation (8), partition $\tilde{I}$ into several subgroups of adjacent bands, denoted as $\tilde{I}^k$. |
| 3: According to Equations (9) and (10), obtain the spectral reflectance components $R^k$ of each $\tilde{I}^k$ (through the optimization-based IID method) and combine them to obtain the resulting IID features. |
| 4: For each $x_i \in X_u$ Do |
| (1) According to Equations (11) and (12), solve for the sparse representation coefficient $\hat{\alpha}_i$ of each sample $x_i$ based on the reflectance component $r_i$ and the overcomplete dictionary $D$. |
| (2) According to Equation (13), calculate the information entropy of each $\hat{\alpha}_i$ to discriminate the purity of each sample $x_i$. |
| 5: End For |
| 6: Sort the samples corresponding to these reflectance components in ascending order according to their entropy magnitudes. |
| 7: Select the first T samples as the candidate sample set $X_c$. |
| 8: According to Equation (14), assign a pseudo-label to each sample in set $X_c$ to obtain the pseudo-labeled sample set $X_p$. |
| 9: Combine the initial labeled sample set $X_l$ and the pseudo-labeled sample set $X_p$ into the new labeled sample set $X_n$. |
| 10: Classify the spectral reflectance components with the extended random walker (ERW) classifier and the new labeled sample set $X_n$ to obtain the final classification map. |
| 11: Return the classification map |

4. Experiment

4.1. Experimental Data Sets

(1) Indian Pines data set: The Indian Pines image was acquired by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor, which captured the Indian Pines unlabeled agricultural site in northwestern Indiana. The image contains 220 bands of size 145 × 145. Twenty water absorption bands (Nos. 104–108, 150–163, and 220) were removed before hyperspectral image classification. The spatial resolution of the Indian Pines image is 20 m per pixel, and the spectral coverage ranges from 0.4 to 2.5 μm. Figure 2 shows a color composite of the Indian Pines image and the corresponding ground-truth data.

Figure 2.

Indian Pines data set. (a) false-color composite; (b,c) ground truth data.

(2) University of Pavia data set: The University of Pavia image, which captured the campus of the University of Pavia, Italy, was recorded by the Reflective Optics System Imaging Spectrometer (ROSIS). This image contains 115 bands of size 610 × 340 and has a spatial resolution of 1.3 m per pixel and a spectral coverage ranging from 0.43 to 0.86 μm. Using a standard preprocessing approach before hyperspectral image classification, 12 noisy channels were removed. Nine classes of interest are considered for this image. Figure 3 shows a color composite of the University of Pavia image and the corresponding ground-truth data.

Figure 3.

University of Pavia data set. (a) false-color composite; (b,c) ground truth data.

(3) Salinas data set: The Salinas image was captured by the AVIRIS sensor over Salinas Valley, California, at a spatial resolution of 3.7 m per pixel. The Salinas image contains 224 bands of size 512 × 217. Twenty water absorption bands (Nos. 108–112, 154–167, and 224) were discarded before classification. Figure 4 shows a color composite of the Salinas image and the corresponding ground-truth data.

Figure 4.

Salinas data set. (a) false-color composite; (b,c) ground truth data.

(4) Kennedy Space Center data set: The Kennedy Space Center (KSC) image was captured by the National Aeronautics and Space Administration (NASA) Airborne Visible/Infrared Imaging Spectrometer instrument at a spatial resolution of 18 m per pixel. The KSC image contains 224 bands of size 512 × 614. The water absorption and low signal-to-noise ratio (SNR) bands were discarded before classification. Figure 5 shows the KSC image and the corresponding ground-truth data.

Figure 5.

Kennedy Space Center data set. (a) false-color composite; (b,c) ground truth data.

4.2. Parameter Analysis

(1) Analysis of the Influence of Parameter $\lambda$

For sparse representation, the regularization parameter $\lambda$ used in Equation (12) controls the relative importance between sparsity level and reconstruction error, leading to different classification accuracies. Figure 6 shows the effect of varying $\lambda$ on the classification accuracies for the Indian Pines, University of Pavia, Salinas, and Kennedy Space Center data sets. In the experiment, each of the four data sets is trained with 20 samples per class, and the remaining labeled samples are used for testing. The regularization parameter for sparse representation is chosen between and . In Figure 6, when , the overall accuracy (OA) shows an increasing trend on each data set. As $\lambda$ continues to increase, the overall trend of OA is decreasing. This is because more weight is placed on the sparsity level and less weight on the approximation accuracy. Therefore, is set as the optimal value in this paper.

Figure 6.

Effects of the regularization parameter $\lambda$ on the overall accuracy (OA) for four different data sets.

(2) Analysis of the Influence of Parameter T

The parameter T is selected by using a five-fold cross-validation strategy and repeating the fine-tuning procedure. As described in Section 3.2, when the entropy of the sparse representation vector of a sample is small, the probability of that sample being a pure sample is large. As T increases, the samples subsequently added have a larger entropy, and these newly pseudo-labeled samples have a high probability of being mixed pixels. Thus, although the number of samples increases, the gain in classification accuracy is small; consequently, it is important to choose a suitable T. In the experiment, each of the four data sets is trained with 20 samples per class, and the remaining labeled samples are used for testing. Figure 7 shows the impact of different numbers of pseudo-labeled samples on the OA for the four data sets. The OA corresponding to each T value is the mean value of 30 random replicate experiments. As Figure 7 shows, for the different data sets, when T < 40, the OA tends to grow, although there is a downward trend in the interval related to the quality of the generated samples and the selected sample points. When T > 40, the OA shows a significant downward trend. In addition, when T = 40, the OAs are highest. Therefore, in this paper, we selected T = 40 as the default parameter value.

Figure 7.

Effects of the number of generated pseudo-labeled samples (T) on the overall accuracy (OA) for different data sets.

(3) Analysis of the Influences of Parameters M and Z

The parameters M and Z from Equation (8) are hyperparameters that require tuning. We evaluated the influences of the parameters M and Z by objective and visual analyses. In the experiment, the four different data sets are trained with 5, 20, 3, and 15 samples per class, respectively, and the remaining labeled samples are used for testing. Figure 8 shows the OA of the proposed SRSPL method on the four data sets. As Figure 8 shows, the OA exhibits large fluctuations as M and Z change. When M is less than 10, the accuracy of the proposed method is relatively low. This result indicates that, when the number of features M is small, useful spectral information is lost. Furthermore, the results show that the proposed method achieves stable and high accuracy when the size of the subgroup is less than 8, because the IID method is suited to images with few channels. In this paper, the default parameter settings M = 32 and Z = 4 were adopted for subsequent testing of the data sets.

Figure 8.

Experimental results. (a) the results for the Indian Pines data set; (b) the results for University of Pavia data set; (c) the results for Salinas data set; (d) the results for Kennedy Space Center data set. Each image displays the overall classification accuracy of the proposed method with respect to varying feature numbers (M) and different subgroup sizes (Z).

(4) Sensitivity Analysis

The parameters involved in the proposed SRSPL method are mainly the regularization parameter $\lambda$, the number of generated pseudo-labeled samples T, the number of features M, and the subgroup size Z. We have conducted an extensive experimental analysis of each parameter for the SRSPL method. The one-parameter-at-a-time (OAT) [47] approach is used for model parameter sensitivity analysis; that is, one parameter is changed while the other parameters are held fixed, and the effect of that parameter on the model is studied. Figure 9 shows the sensitivity of the parameters $\lambda$, T, M, and Z for the four data sets. It can be seen that the sensitivity value of $\lambda$ is the smallest on all four data sets, whereas the parameters T, M, and Z show higher sensitivity values. Thus, $\lambda$ has only a slight effect on the SRSPL method, while T, M, and Z have a greater effect; these parameters are highly sensitive and should be tuned.
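The OAT procedure can be sketched generically. The text does not define how the sensitivity value is computed, so this sketch assumes a simple measure: the range (maximum minus minimum) of the OA observed while one parameter is swept and the others stay at their defaults. The function `oat_sensitivity` and the callable `evaluate` are hypothetical placeholders; `evaluate` stands for a full training and testing run that returns the OA.

```python
def oat_sensitivity(evaluate, defaults, grids):
    """Vary one parameter at a time; report the OA range (max - min) per parameter."""
    result = {}
    for name, values in grids.items():
        # Override only the swept parameter; all others keep their default values.
        scores = [evaluate(**{**defaults, name: v}) for v in values]
        result[name] = max(scores) - min(scores)
    return result
```

A call might look like `oat_sensitivity(run_srspl, {"lam": ..., "T": 40, "M": 32, "Z": 4}, grids)`, where `run_srspl` and the grid values are again placeholders to be supplied by the experimenter.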

Figure 9.

Sensitivity of the parameters for different data sets.

4.3. Performance Evaluation

We applied the proposed SRSPL method and other methods to four hyperspectral images to evaluate the effectiveness of various hyperspectral image classification methods. We compared the proposed SRSPL method with several other classification methods, including the traditional SVM [48]; three semi-supervised methods, i.e., extended random walker (ERW) [46], spatial-spectral label propagation based on SVM (SSLP-SVM) [49], and the maximizer of the posterior marginal by loopy belief propagation (MPM-LBP) [50]; and two deep learning methods, i.e., recurrent 2D convolutional neural network (R-2D-CNN) [51] and cascaded recurrent neural network (CasRNN) [52]. IID [37] is used to extract the useful features and reduce noise in the proposed SRSPL method. Therefore, in order to verify the validity of the IID, an experiment that does not use the IID method is added (denoted as Without-IID). In this section, the effect of different feature extraction methods on the proposed method is also analyzed, i.e., principal component analysis (PCA) [53] and image fusion and recursive filtering (IFRF) [54] (denoted as With-PCA and With-IFRF, respectively). Here, the setting of the parameter T in the With-PCA and With-IFRF methods is the same as in the SRSPL method. The SVM classifier was implemented with the LIBSVM library and used a Gaussian kernel. The tests were performed using five-fold cross-validation.

The parameters for the ERW, SSLP-SVM, MPM-LBP, R-2D-CNN, CasRNN, PCA and IFRF methods were set to the default parameters reported in the corresponding papers. In this paper, three common metrics, namely: the OA, average accuracy (AA), and Kappa coefficient, were used to evaluate classifier performance. The classification results for each method are given as the average of 30 experiments to reduce the influence of sample randomness. The data on the left side of Table 1, Table 2 and Table 3 represent the mean values, while those on the right represent the standard deviations.
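For reference, the three metrics can be computed from a confusion matrix as in the sketch below. It uses the standard definitions (OA as the fraction of correctly classified samples, AA as the mean of per-class recalls, and Cohen's Kappa as agreement corrected for chance) and assumes every class has at least one test sample. The function name and the `conf[true][pred]` layout are choices made for this illustration.

```python
def accuracy_metrics(conf):
    """Return (OA, AA, kappa) from a square confusion matrix conf[true][pred]."""
    k = len(conf)
    n = sum(sum(row) for row in conf)                 # total number of test samples
    diag = [conf[i][i] for i in range(k)]
    row_sums = [sum(conf[i]) for i in range(k)]       # samples per true class
    col_sums = [sum(conf[i][j] for i in range(k)) for j in range(k)]
    oa = sum(diag) / n
    aa = sum(diag[i] / row_sums[i] for i in range(k)) / k
    # Expected agreement by chance, from the row and column marginals.
    pe = sum(row_sums[i] * col_sums[i] for i in range(k)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa
```

For the two-class matrix `[[8, 2], [1, 9]]`, this gives OA = 0.85, AA = 0.85, and Kappa = 0.7.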

Table 1.

Classification performance of different methods performed on the Indian Pines data set with 5–25 training samples per class. Without-IID is the proposed method without the IID Step. With-PCA and With-IFRF denote the proposed method using different methods for feature extraction. (SVM, support vector machine; ERW, extended random walker; SSLP-SVM, spatial-spectral label propagation based on SVM; MPM-LBP, the maximizer of the posterior marginal by loopy belief propagation; R-2D-CNN, recurrent 2D convolutional neural network; CasRNN, cascaded recurrent neural network; SRSPL, sample pseudo-labeling method based on sparse representation. Three common metrics: overall accuracy (OA), average accuracy (AA), Kappa coefficient. The bold values indicate the greatest accuracy among the methods in each case.)

Table 2.

Classification accuracies (University of Pavia) in percentages for the tested methods when 20 training samples per class are provided. (The bold values indicate the greatest accuracy among the methods in each case.)

Table 3.

Classification accuracies (University of Pavia) in percentages for the tested methods when 20 training samples per class are provided. (The bold values indicate the greatest accuracy among the methods in each case.)

The experiment was first performed on the Indian Pines data set. As shown in Table 1, the training samples were randomly selected from the reference data, with 5, 10, 15, 20, and 25 samples per class. Note that, as the number of training samples increases, the classification accuracy grows steadily. The proposed SRSPL method always outperforms the other methods, such as ERW, SSLP-SVM, and MPM-LBP, in terms of overall classification accuracy; it obtained the highest accuracies in all cases (84.73%, 90.90%, 93.88%, 95.52% and 97.09%, respectively). Compared with the two deep learning methods R-2D-CNN and CasRNN, the SRSPL method achieves higher accuracy in the case of small samples. Compared with the three methods Without-IID, With-PCA, and With-IFRF, Table 1 shows that the proposed SRSPL method obtains higher accuracies, which indicates that feature extraction using the IID method is effective.

Figure 10 shows the classification maps obtained for the Indian Pines data set with the different tested methods when using 25 training samples from each class. As shown in Figure 10c–g, the proposed SRSPL method performs better than the other classification methods (i.e., ERW, SSLP-SVM, MPM-LBP, R-2D-CNN, and CasRNN) when few training samples are provided. For example, the classification maps of the SVM, SSLP-SVM, MPM-LBP, R-2D-CNN, and CasRNN methods include more noise, and the ERW method does not perform as well as the SRSPL method on certain classes.

Figure 10.

The classification maps of the different tested methods for the Indian Pines image. (a) ground-truth data; (b) SVM, support vector machine; (c) ERW, extended random walker; (d) SSLP-SVM, spatial-spectral label propagation based on SVM; (e) MPM-LBP, the maximizer of the posterior marginal by loopy belief propagation; (f) R-2D-CNN, recurrent 2D convolutional neural network; (g) CasRNN, cascaded recurrent neural network; (h) SRSPL, sample pseudo-labeling method based on sparse representation.

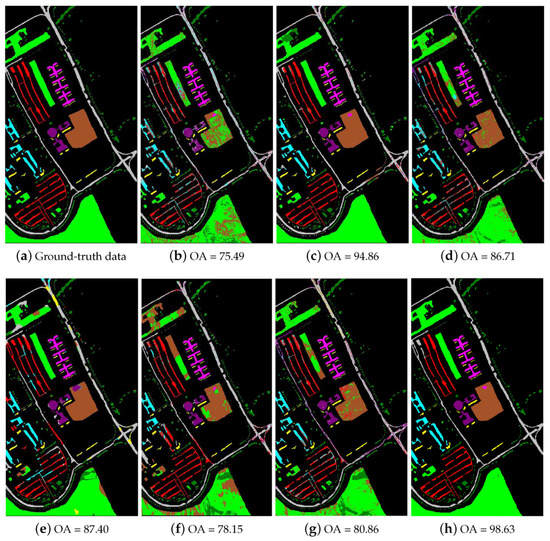

Table 2 shows the experimental results on the University of Pavia data set when 20 training samples per class are provided. As Table 2 shows, the nine compared methods perform quite differently under this setting. The proposed SRSPL method not only outperforms the R-2D-CNN and CasRNN methods, which are state-of-the-art deep learning methods for hyperspectral image classification, but also outperforms the other compared methods by 2–20%. Notably, on classes such as “Asphalt” and “Trees”, the proposed SRSPL method performs much better than the ERW method, by 12.57% and 12.94%, respectively. In the feature extraction experiment, the OA of the proposed SRSPL method exceeds that of the Without-IID, With-PCA, and With-IFRF methods by 2.01%, 15.14%, and 1.87%, respectively. Figure 11 shows the classification maps obtained for the University of Pavia data set with the different methods when using 20 training samples from each class. As Figure 11 shows, the classification map of the SRSPL method is visually superior to those of the other semi-supervised and deep learning methods, which demonstrates its effectiveness.

Figure 11.

The classification maps of different methods for the University of Pavia image. (a) ground-truth data; (b) SVM; (c) ERW; (d) SSLP-SVM; (e) MPM-LBP; (f) R-2D-CNN; (g) CasRNN; (h) SRSPL.

The third experiment was conducted on the Salinas data set. Three training samples per class were randomly selected for training, and the rest were used for testing. Given such limited samples, the experiment is quite challenging. The corresponding quantitative classification results are tabulated in Table 3. As shown, although only limited training samples were provided, some of the methods achieved high OA and Kappa scores. In fact, some of the methods achieved 100% classification accuracy on several classes. This is because the Salinas image includes many large uniform regions that make classification simpler. Compared with the other methods, the proposed SRSPL method greatly improves the classification accuracy when training samples are extremely limited. Because the R-2D-CNN and CasRNN methods require a large number of training samples, they tend to overfit when only a few samples are available, which leads to poor classification results. As seen from Figure 12, the proposed SRSPL method classifies most of the features correctly, which reflects the effectiveness of the method.
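The sampling protocol described above (a fixed number of randomly chosen training pixels per class, with all remaining labeled pixels used for testing) can be sketched as follows. This is an illustrative Python sketch, not the authors' implementation; the function name `split_per_class`, the `seed` parameter, and the toy label list are assumptions.

```python
import random


def split_per_class(labels, n_train, seed=0):
    """Randomly pick n_train indices per class for training; the rest are test.

    labels is a sequence of class labels, one per labeled pixel.
    If a class has fewer than n_train pixels, all of them go to training.
    (Hypothetical helper, for illustration only.)
    """
    rng = random.Random(seed)  # local generator so the split is reproducible

    # Group pixel indices by class label.
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)

    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)
        train.extend(indices[:n_train])
        test.extend(indices[n_train:])
    return sorted(train), sorted(test)


# Toy example: 5 pixels of class 0 and 4 pixels of class 1 (made-up labels).
toy_labels = [0, 0, 0, 0, 0, 1, 1, 1, 1]
train_idx, test_idx = split_per_class(toy_labels, n_train=3, seed=1)
print(len(train_idx), "training pixels;", len(test_idx), "test pixels")
```

Repeating the split with different seeds gives the kind of random replicate experiments over which means and standard deviations of OA, AA, and Kappa can be reported.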

Figure 12.

The classification maps of different methods for the Salinas image. (a) ground-truth data; (b) SVM; (c) ERW; (d) SSLP-SVM; (e) MPM-LBP; (f) R-2D-CNN; (g) CasRNN; (h) SRSPL.

The fourth experiment was performed on the Kennedy Space Center data set. In this experiment, the proposed SRSPL method was compared with the SVM method, three other semi-supervised methods (i.e., ERW, SSLP-SVM, and MPM-LBP), and two deep learning methods (i.e., R-2D-CNN and CasRNN). Figure 13 shows the changes in OA and Kappa coefficient as the number of training samples per class increases from 3 to 15. Compared with the deep learning-based classification methods R-2D-CNN and CasRNN, the proposed SRSPL method shows great advantages. As shown in Figure 13, the SRSPL method achieves the highest accuracy among the tested methods.

Figure 13.

Classification performance of the different methods for the Kennedy Space Center image as the number of training samples increases from 3 to 15 per class. (a) OA; (b) Kappa coefficient.

Finally, we evaluated the computational times of the three semi-supervised methods (i.e., MPM-LBP, SSLP-SVM, and SRSPL) on the four data sets with 20 training samples per class. All methods were run in MATLAB on a computer with a 3.6 GHz CPU and 8 GB of memory. Because the semi-supervised method proposed in this paper does not involve a single training process, its computational cost is greatly reduced. Table 4 shows that the proposed SRSPL method requires less time to process the Indian Pines, Salinas, and Kennedy Space Center data sets than the other tested methods.

Table 4.

Execution times of the three semi-supervised methods on the four data sets. (The bold values indicate the minimum computational times among the methods in each data set.)

5. Discussion

In hyperspectral image classification, it is difficult and expensive to obtain enough labeled samples for model training. Considering the strong spectral correlation between labeled and unlabeled samples in the image, we proposed a novel sparse representation-based sample pseudo-labeling method (SRSPL). The pseudo-labeled samples generated by this method can be used to augment the training set, thereby solving the problem of poor classification performance under the condition of small samples.

Compared with other pseudo-labeled sample generation methods (such as SSLP-SVM), the proposed SRSPL method is more reliable in generating pseudo-labeled samples. The SSLP-SVM method only adds a small number of pseudo-labeled samples near the labeled samples, and the added samples may be mixed samples. The proposed SRSPL method considers the relationship between sample purity and information entropy: the purer the sample’s spectrum, the lower the information entropy of its sparse representation coefficients, and vice versa. Specifically, the spectral characteristics of a pure sample can be linearly represented using a single class of atoms in an overcomplete dictionary, so its coefficient vector has smaller entropy. In our method, pure samples with smaller entropy are used to expand the initial training sample set, which can greatly improve classifier performance.
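The link between purity and entropy can be illustrated with a small sketch. It assumes that the sparse coefficient vector of a pixel has already been computed, and it uses one plausible entropy definition: the absolute coefficient mass is summed per class, normalized to a distribution, and scored with Shannon entropy. The exact definition used by SRSPL may differ; the function names, the threshold value, and the toy coefficients are illustrative assumptions.

```python
import math


def class_entropy(coefficients, atom_labels):
    """Entropy of the per-class share of absolute coefficient mass.

    coefficients[k] is the sparse coefficient of dictionary atom k, and
    atom_labels[k] is the class of that atom (a labeled training sample).
    Returns (entropy, label of the dominant class).
    (Assumed definition, for illustration only.)
    """
    mass = {}
    for value, label in zip(coefficients, atom_labels):
        mass[label] = mass.get(label, 0.0) + abs(value)
    total = sum(mass.values())
    if total == 0.0:
        raise ValueError("coefficient vector is all zeros")

    shares = {label: m / total for label, m in mass.items()}
    # Shannon entropy in nats; classes with zero share contribute nothing.
    entropy = -sum(p * math.log(p) for p in shares.values() if p > 0.0)
    dominant = max(shares, key=shares.get)
    return entropy, dominant


def select_pseudo_labels(coefficient_vectors, atom_labels, threshold):
    """Keep pixels whose entropy is at most threshold, with their pseudo-label."""
    selected = []
    for index, coefficients in enumerate(coefficient_vectors):
        entropy, label = class_entropy(coefficients, atom_labels)
        if entropy <= threshold:
            selected.append((index, label))
    return selected


# Toy dictionary with two atoms per class (made-up class names and values).
atom_labels = ["corn", "corn", "grass", "grass"]
pure_pixel = [0.7, 0.3, 0.0, 0.0]   # represented by a single class of atoms
mixed_pixel = [0.3, 0.2, 0.4, 0.1]  # spread evenly over both classes

print(select_pseudo_labels([pure_pixel, mixed_pixel], atom_labels, threshold=0.1))
```

In this toy case the pure pixel has zero entropy and is kept with the label of its dominant class, whereas the mixed pixel has entropy ln 2 and is rejected.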

In the case of small samples, compared with other classifiers (such as SVM, ERW, and MPM-LBP), the SRSPL method produces a visually good classification map for each hyperspectral data set, as shown in Figure 10, Figure 11 and Figure 12. This is because the hyperspectral image is first processed using the intrinsic image decomposition technique, which helps reduce errors in the subsequent sparse representation, and the pseudo-labeled samples generated by the SRSPL method help optimize the classification model. In addition, compared with deep-learning-based classifiers (such as R-2D-CNN and CasRNN), the proposed SRSPL method performs better for a limited number of samples because, in such cases, these two methods are more likely to overfit the training samples, resulting in poor classification results. Thus, the proposed SRSPL method shows better classification results than the other compared methods.

6. Conclusions

In this paper, we proposed a novel sample pseudo-labeling method based on sparse representation that addresses the problem of limited samples. The previously proposed semi-supervised methods for generating pseudo-labeled samples typically select some samples and assign them a pseudo-label based on spectral information correlations or local neighborhood information. However, due to the presence of mixed pixels, the selected samples are not necessarily representative. To find the purest samples, we designed a sparse representation-based pseudo-labeling method that utilizes the coefficient vector of sparse representation and draws on the definition and idea of entropy from information theory. Overall, the proposed SRSPL method provides a new option for semi-supervised learning, which is the first contribution of the paper. Moreover, the proposed SRSPL method also solves the problem of uneven sample distribution through sparse representation based on spectral features, which is beneficial for subsequent classification. In addition, by comparing the standard deviations of OA, AA, and Kappa of 30 random replicate experiments with other state-of-the-art classification methods, we found that the proposed SRSPL method had higher robustness and stability. This is the second contribution of the paper. The experimental results on four real-world hyperspectral images show that the proposed SRSPL method is superior to other state-of-the-art classification methods from the perspectives of both quantitative indicators and classification maps.

Author Contributions

B.C. conceived of the idea of this paper. J.C. designed the experiments and drafted the paper. B.C., Y.L., N.G., and M.G. revised the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work was co-supported by the National Natural Science Foundation of China (NSFC) (41406200, 61701272) and the National Key R&D Program of China (2017YFC1405600).

Acknowledgments

The authors would like to thank all reviewers and editors for their comments on this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kang, X.; Li, C.; Li, S.; Lin, H. Classification of hyperspectral images by Gabor filtering based deep network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 11, 1166–1178.

- Pontius, J.; Martin, M.; Plourde, L.; Hallett, R. Ash decline assessment in emerald ash borer-infested regions: A test of tree-level, hyperspectral technologies. Remote Sens. Environ. 2008, 112, 2665–2676.

- Imani, M. Manifold structure preservative for hyperspectral target detection. Adv. Space Res. 2018, 61, 2510–2520.

- Cao, X.; Zhou, F.; Xu, L.; Meng, D.; Xu, Z.; Paisley, J. Hyperspectral image classification with Markov random fields and a convolutional neural network. IEEE Trans. Image Process. 2018, 27, 2354–2367.

- Sharma, S.; Buddhiraju, K.M. Spatial–spectral ant colony optimization for hyperspectral image classification. Int. J. Remote Sens. 2018, 39, 2702–2717.

- Hughes, G. On the mean accuracy of statistical pattern recognizers. IEEE Trans. Inf. Theory 1968, 14, 55–63.

- Xia, J.; Du, P.; He, X.; Chanussot, J. Hyperspectral remote sensing image classification based on rotation forest. IEEE Geosci. Remote Sens. Lett. 2013, 11, 239–243.

- Mohanty, R.; Happy, S.; Routray, A. Spatial–Spectral Regularized Local Scaling Cut for Dimensionality Reduction in Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2018, 16, 932–936.

- Shamsolmoali, P.; Zareapoor, M.; Yang, J. Convolutional neural network in network (CNNiN): Hyperspectral image classification and dimensionality reduction. IET Image Process. 2018, 13, 246–253.

- Fang, B.; Li, Y.; Zhang, H.; Chan, J. Semi-supervised deep learning classification for hyperspectral image based on dual-strategy sample selection. Remote Sens. 2018, 10, 574.

- Cui, B.; Xie, X.; Hao, S.; Cui, J.; Lu, Y. Semi-supervised classification of hyperspectral images based on extended label propagation and rolling guidance filtering. Remote Sens. 2018, 10, 515.

- Liu, B.; Yu, X.; Zhang, P.; Yu, A.; Fu, Q.; Wei, X. Supervised deep feature extraction for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 56, 1909–1921.

- He, N.; Paoletti, M.E.; Haut, J.M.; Fang, L.; Li, S.; Plaza, A.; Plaza, J. Feature extraction with multiscale covariance maps for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2018, 57, 755–769.

- Medjahed, S.A.; Ouali, M. Band selection based on optimization approach for hyperspectral image classification. Egyptian J. Remote Sens. Space Sci. 2018, 21, 413–418.

- Cui, B.; Xie, X.; Ma, X.; Ren, G.; Ma, Y. Superpixel-based extended random walker for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3233–3243.

- Jamshidpour, N.; Homayouni, S.; Safari, A. Graph-based semi-supervised hyperspectral image classification using spatial information. In Proceedings of the Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Los Angeles, LA, USA, 21–24 August 2016; pp. 1–4.

- Haut, J.M.; Paoletti, M.E.; Plaza, J.; Li, J.; Plaza, A. Active learning with convolutional neural networks for hyperspectral image classification using a new bayesian approach. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6440–6461.

- Wang, Z.; Du, B.; Zhang, L.; Zhang, L.; Jia, X. A novel semisupervised active-learning algorithm for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3071–3083.

- Li, J.; Xi, B.; Li, Y.; Du, Q.; Wang, K. Hyperspectral classification based on texture feature enhancement and deep belief networks. Remote Sens. 2018, 10, 396.

- Zhou, S.; Chen, Q.; Wang, X. Fuzzy deep belief networks for semi-supervised sentiment classification. Neurocomputing 2014, 131, 312–322.

- Sun, B.; Kang, X.; Li, S.; Benediktsson, J.A. Random-walker-based collaborative learning for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2016, 55, 212–222.

- Imani, M.; Ghassemian, H. Adaptive expansion of training samples for improving hyperspectral image classification performance. In Proceedings of the Iranian Conference on Electrical Engineering (ICEE), Mashhad, Iran, 14–16 May 2013; pp. 1–6.

- Wu, H.; Prasad, S. Semi-supervised deep learning using pseudo labels for hyperspectral image classification. IEEE Trans. Image Process. 2017, 27, 1259–1270.

- Ma, D.; Tang, P.; Zhao, L. SiftingGAN: Generating and Sifting Labeled Samples to Improve the Remote Sensing Image Scene Classification Baseline In Vitro. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1046–1050.

- Prasad, S.; Labate, D.; Cui, M.; Zhang, Y. Morphologically decoupled structured sparsity for rotation-invariant hyperspectral image analysis. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4355–4366.

- Prasad, S.; Labate, D.; Cui, M.; Zhang, Y. Rotation invariance through structured sparsity for robust hyperspectral image classification. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 6205–6209.

- Li, J.; Zhang, H.; Zhang, L. Efficient superpixel-level multitask joint sparse representation for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 5338–5351.

- Tu, B.; Zhang, X.; Kang, X.; Zhang, G.; Wang, J.; Wu, J. Hyperspectral image classification via fusing correlation coefficient and joint sparse representation. IEEE Geosci. Remote Sens. Lett. 2018, 15, 340–344.

- Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Hyperspectral image classification via kernel sparse representation. IEEE Trans. Geosci. Remote Sens. 2012, 51, 217–231.

- Gan, L.; Xia, J.; Du, P.; Chanussot, J. Multiple feature kernel sparse representation classifier for hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5343–5356.

- Liu, J.; Xiao, Z.; Chen, Y.; Yang, J. Spatial-spectral graph regularized kernel sparse representation for hyperspectral image classification. ISPRS Int. J. Geo-Inf. 2017, 6, 258.

- Fang, L.; Wang, C.; Li, S.; Benediktsson, J.A. Hyperspectral image classification via multiple-feature-based adaptive sparse representation. IEEE Trans. Instrum. Meas. 2017, 66, 1646–1657.

- Tong, F.; Tong, H.; Jiang, J.; Zhang, Y. Multiscale union regions adaptive sparse representation for hyperspectral image classification. Remote Sens. 2017, 9, 872.

- Pan, B.; Shi, Z.; Xu, X. Multiobjective-based sparse representation classifier for hyperspectral imagery using limited samples. IEEE Trans. Geosci. Remote Sens. 2018, 57, 239–249.

- Jian, M.; Jung, C. Class-discriminative kernel sparse representation-based classification using multi-objective optimization. IEEE Trans. Signal Process. 2013, 61, 4416–4427.

- Sun, X.; Yang, L.; Zhang, B.; Gao, L.; Gao, J. An endmember extraction method based on artificial bee colony algorithms for hyperspectral remote sensing images. Remote Sens. 2015, 7, 16363–16383.

- Kang, X.; Li, S.; Fang, L.; Benediktsson, J.A. Intrinsic image decomposition for feature extraction of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2241–2253.

- Tappen, M.F.; Freeman, W.T.; Adelson, E.H. Recovering intrinsic images from a single image. In Advances in Neural Information Processing Systems; Massachusetts Institute of Technology Press: Cambridge, MA, USA, 2003; pp. 1367–1374.

- Yacoob, Y.; Davis, L.S. Segmentation using appearance of mesostructure roughness. Int. J. Comput. Vis. 2009, 83, 248–273.

- Shen, J.; Yang, X.; Li, X.; Jia, Y. Intrinsic image decomposition using optimization and user scribbles. IEEE Trans. Cybern. 2013, 43, 425–436.

- Shen, J.; Yang, X.; Jia, Y.; Li, X. Intrinsic images using optimization. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 20–25 June 2011; pp. 3481–3487.

- Wright, J.; Yang, A.Y.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 210–227.

- Chen, Y.; Nasrabadi, N.M.; Tran, T.D. Hyperspectral image classification using dictionary-based sparse representation. IEEE Trans. Geosci. Remote Sens. 2011, 49, 3973–3985.

- Tibshirani, R. Regression shrinkage and selection via the lasso: A retrospective. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2011, 73, 273–282.

- Nitanda, A. Stochastic proximal gradient descent with acceleration techniques. In Advances in Neural Information Processing Systems; Massachusetts Institute of Technology Press: Cambridge, MA, USA, 2014; pp. 1574–1582.

- Kang, X.; Li, S.; Fang, L.; Li, M.; Benediktsson, J.A. Extended random walker-based classification of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2014, 53, 144–153.

- Bouda, M.; Rousseau, A.N.; Gumiere, S.J.; Gagnon, P.; Konan, B.; Moussa, R. Implementation of an automatic calibration procedure for HYDROTEL based on prior OAT sensitivity and complementary identifiability analysis. Hydrol. Process. 2014, 28, 3947–3961.

- Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 27.

- Wang, L.; Hao, S.; Wang, Q.; Wang, Y. Semi-supervised classification for hyperspectral imagery based on spatial-spectral label propagation. ISPRS J. Photogramm. Remote Sens. 2014, 97, 123–137.

- Wu, Y.; Li, J.; Gao, L.; Tan, X.; Zhang, B. Graphics processing unit–accelerated computation of the Markov random fields and loopy belief propagation algorithms for hyperspectral image classification. J. Appl. Remote Sens. 2015, 9, 097295.

- Yang, X.; Ye, Y.; Li, X.; Lau, R.Y.; Zhang, X.; Huang, X. Hyperspectral image classification with deep learning models. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5408–5423.

- Hang, R.; Liu, Q.; Hong, D.; Ghamisi, P. Cascaded recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5384–5394.

- Farrell, M.D.; Mersereau, R.M. On the impact of PCA dimension reduction for hyperspectral detection of difficult targets. IEEE Geosci. Remote Sens. Lett. 2005, 2, 192–195.

- Kang, X.; Li, S.; Benediktsson, J.A. Feature extraction of hyperspectral images with image fusion and recursive filtering. IEEE Trans. Geosci. Remote Sens. 2013, 52, 3742–3752.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).