Structure-Aware Convolution for 3D Point Cloud Classification and Segmentation

Abstract

1. Introduction

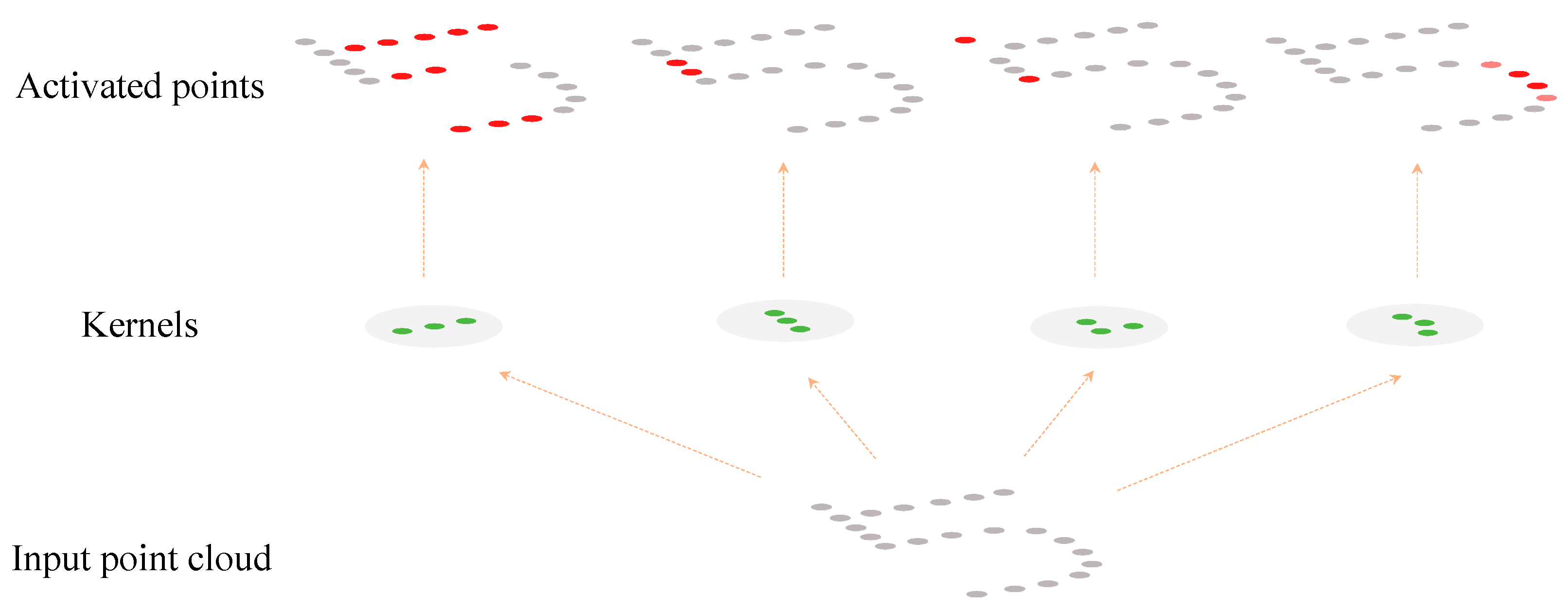

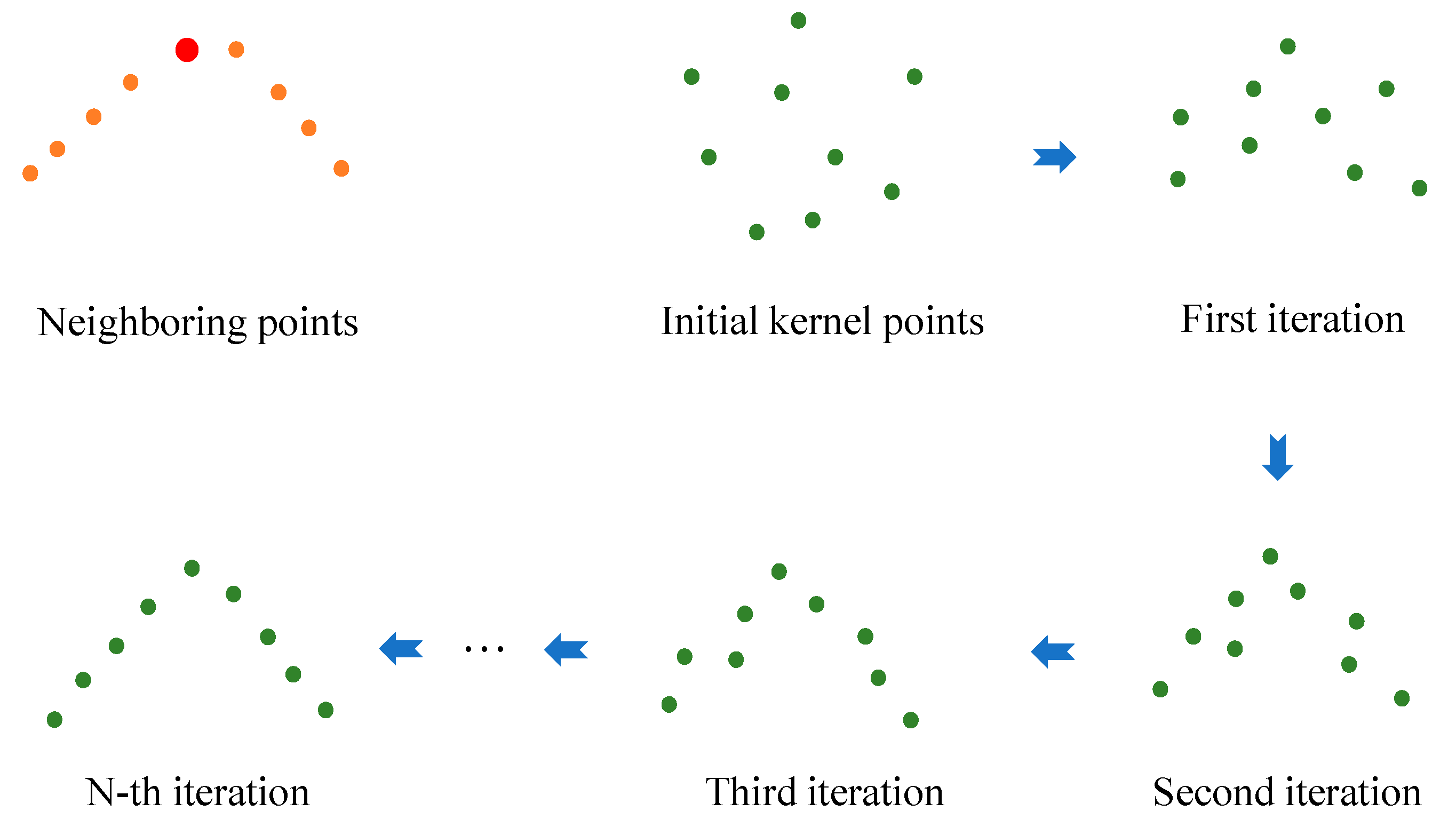

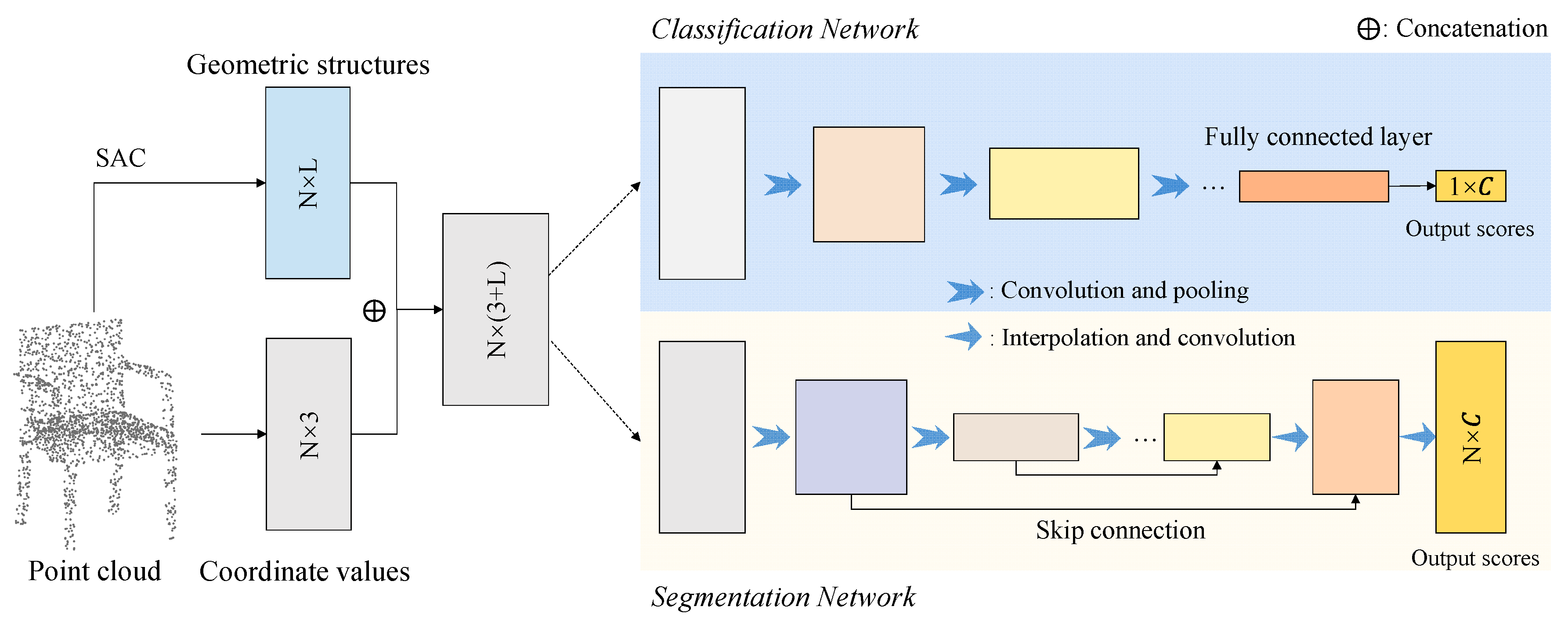

- We propose a novel structure-aware convolution (SAC) that explicitly captures the geometric structure of point clouds by matching each point’s neighborhood with a series of learnable 3D point kernels (which can be regarded as 3D geometric “templates”);

- We show how to integrate our SAC into existing point cloud deep learning networks, and train end-to-end point cloud classification and segmentation networks;
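
To make the matching idea in the first contribution concrete, the following is a minimal sketch and not the authors' implementation. It assumes that the score between a neighborhood and a kernel is a Gaussian kernel correlation in the style of KCNet [20]: the offsets of a point's k nearest neighbors are compared with the kernel points, and each pair contributes exp(-‖κ_m − (x_n − x_i)‖² / (2σ²)). The bandwidth σ, the number of kernel points, and the number of convolution kernels correspond to the quantities listed in the parameter table. The function names and toy kernels are hypothetical, and the kernels are fixed here, whereas in SAC they are learned end-to-end.

```python
import math


def knn_offsets(points, i, k):
    """Offsets x_n - x_i of the k nearest neighbors of point i (point i excluded)."""
    xi = points[i]
    others = [p for j, p in enumerate(points) if j != i]
    others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(p, xi)))
    return [tuple(a - b for a, b in zip(p, xi)) for p in others[:k]]


def kernel_correlation(offsets, kernel, sigma):
    """Gaussian kernel correlation between one neighborhood and one point kernel.

    Assumed KCNet-style form: sum over kernel points m and neighbors n of
    exp(-||kappa_m - d_n||^2 / (2 sigma^2)), divided by the neighborhood size.
    """
    total = 0.0
    for kappa in kernel:
        for d in offsets:
            sq = sum((a - b) ** 2 for a, b in zip(kappa, d))
            total += math.exp(-sq / (2.0 * sigma ** 2))
    return total / len(offsets)


def structure_responses(points, kernels, k, sigma):
    """One response per (point, kernel): N lists, each of length len(kernels)."""
    responses = []
    for i in range(len(points)):
        offsets = knn_offsets(points, i, k)
        responses.append([kernel_correlation(offsets, kern, sigma) for kern in kernels])
    return responses


# Hypothetical toy data: a flat 3 x 3 grid in the z = 0 plane.
grid = [(x * 0.1, y * 0.1, 0.0) for x in (-1, 0, 1) for y in (-1, 0, 1)]
# Two hand-made "templates" with 5 kernel points each: a plane and a vertical line.
plane_kernel = [(0.1, 0.0, 0.0), (-0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.0, -0.1, 0.0), (0.0, 0.0, 0.0)]
line_kernel = [(0.0, 0.0, 0.1), (0.0, 0.0, -0.1), (0.0, 0.0, 0.05), (0.0, 0.0, -0.05), (0.0, 0.0, 0.0)]

feats = structure_responses(grid, [plane_kernel, line_kernel], k=8, sigma=0.05)
center = feats[4]  # grid[4] is the origin (0, 0, 0)
print(f"planar template: {center[0]:.3f}, line template: {center[1]:.3f}")
```

On this flat grid the planar template responds more strongly at the center point than the line template, which is the sense in which the kernels act as geometric templates.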

2. Related Works

2.1. Feature Extraction for 3D Point Clouds

2.2. Classification with Extracted Features

2.3. Deep Learning on Point Clouds

3. Methods

3.1. Structure-Aware Convolution

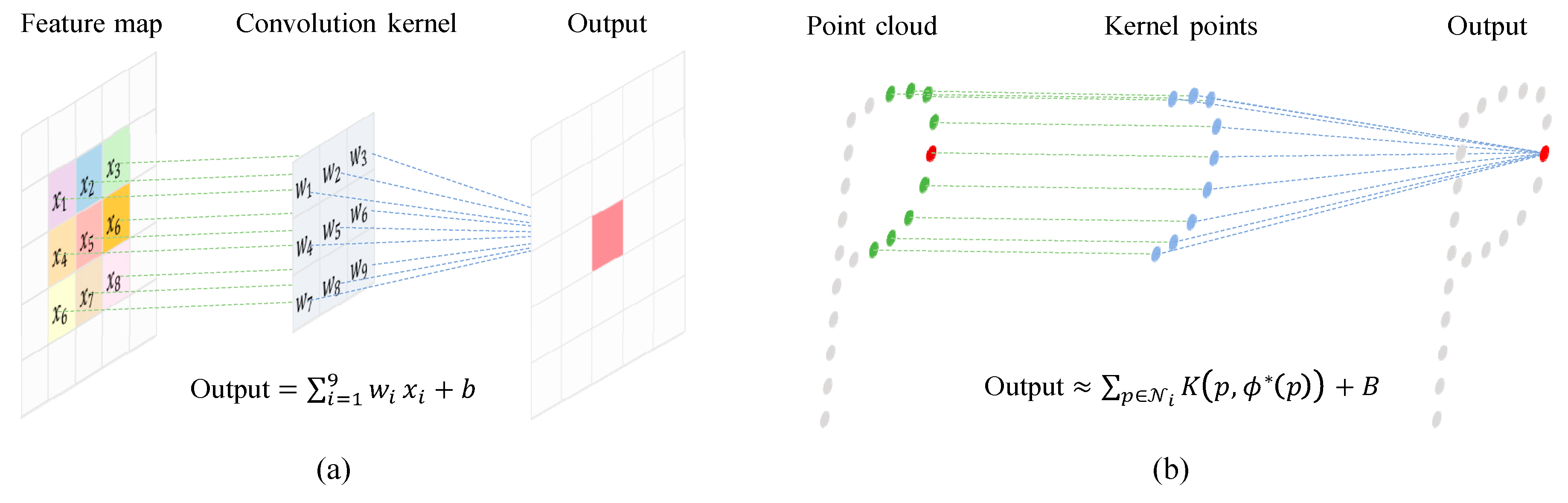

3.2. Relationship to Standard Convolution

3.2.1. Reformulation of Standard Convolution

3.2.2. Reformulation of SAC

3.3. Deep Learning Networks with the Proposed SAC

4. Materials and Experiments

4.1. Tasks and Evaluation Metrics

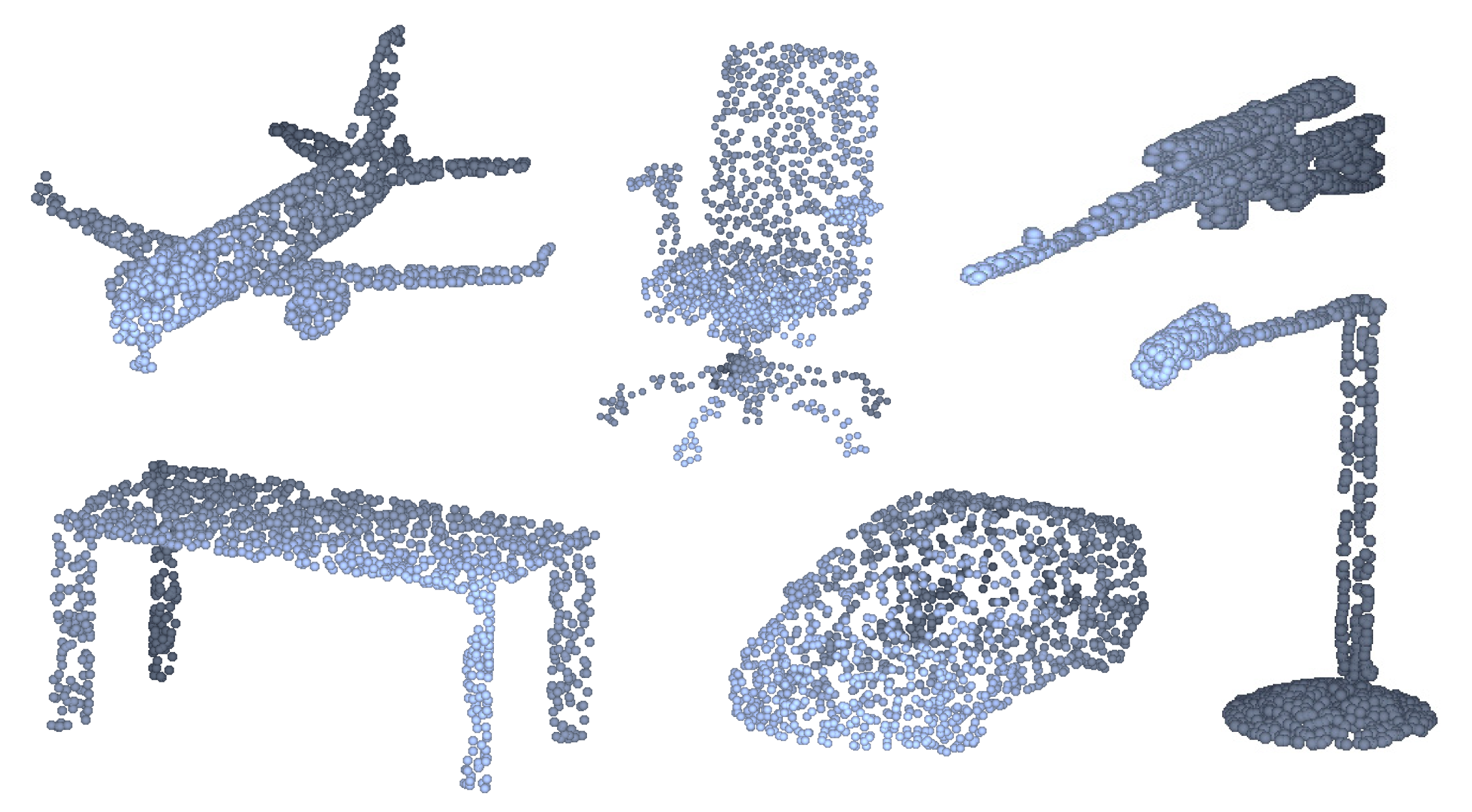

- Object classification. The input is the point cloud of a single 3D object, and the goal is to recognize which category it belongs to (e.g., airplane, car, or table);

- Semantic segmentation. The input is the point cloud of a 3D scene, and the goal is to assign a meaningful semantic category label to each point.
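
The tables report classification accuracy and, for segmentation, per-class intersection-over-union (IoU) together with its mean (mIoU). The formulas are not restated in this section, so the sketch below assumes the standard definitions: accuracy is the fraction of correctly labeled samples, IoU_c = TP_c / (TP_c + FP_c + FN_c) is read off a confusion matrix, and mIoU is the unweighted mean over classes. Skipping classes that appear neither in the ground truth nor in the predictions is a choice made for this sketch; the function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the ground-truth class, columns the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def overall_accuracy(cm):
    """Fraction of samples (objects or points) whose predicted label is correct."""
    correct = sum(cm[c][c] for c in range(len(cm)))
    return correct / sum(sum(row) for row in cm)


def per_class_iou(cm):
    """IoU_c = TP / (TP + FP + FN); None for classes absent from labels and predictions."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # class-c samples predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp   # other samples predicted as class c
        denom = tp + fp + fn
        ious.append(tp / denom if denom else None)
    return ious


def mean_iou(cm):
    """Unweighted mean of the per-class IoUs, skipping undefined classes."""
    valid = [v for v in per_class_iou(cm) if v is not None]
    return sum(valid) / len(valid)


# Hypothetical labels for six points and three classes.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(round(overall_accuracy(cm), 4), round(mean_iou(cm), 4))  # 0.6667 0.5
```

For these six labels, accuracy is 4/6 and mIoU is (1/3 + 2/3 + 1/2) / 3 = 0.5.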

4.2. Object Classification Results

4.3. Semantic Segmentation Results

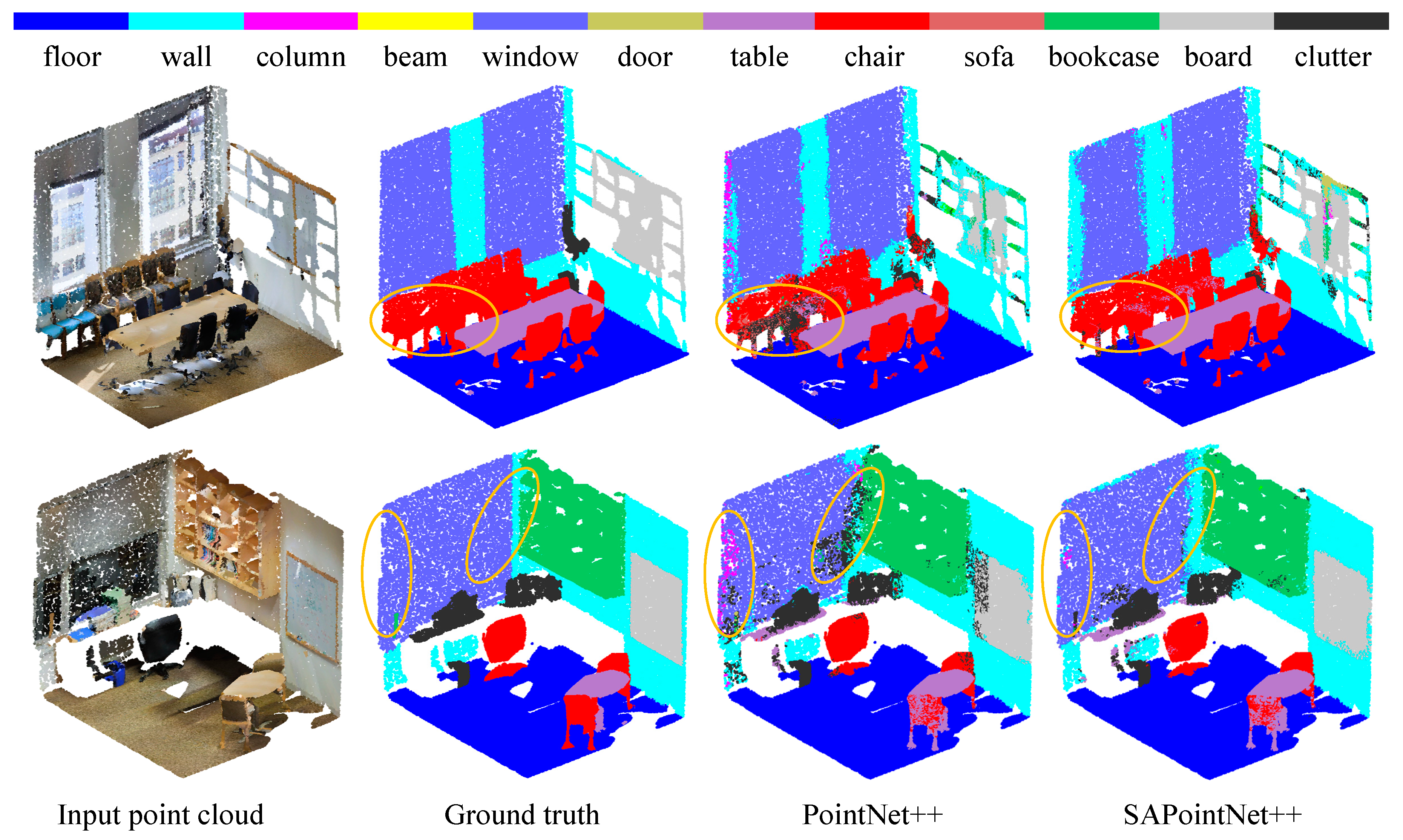

4.3.1. Semantic Segmentation for Indoor Scene

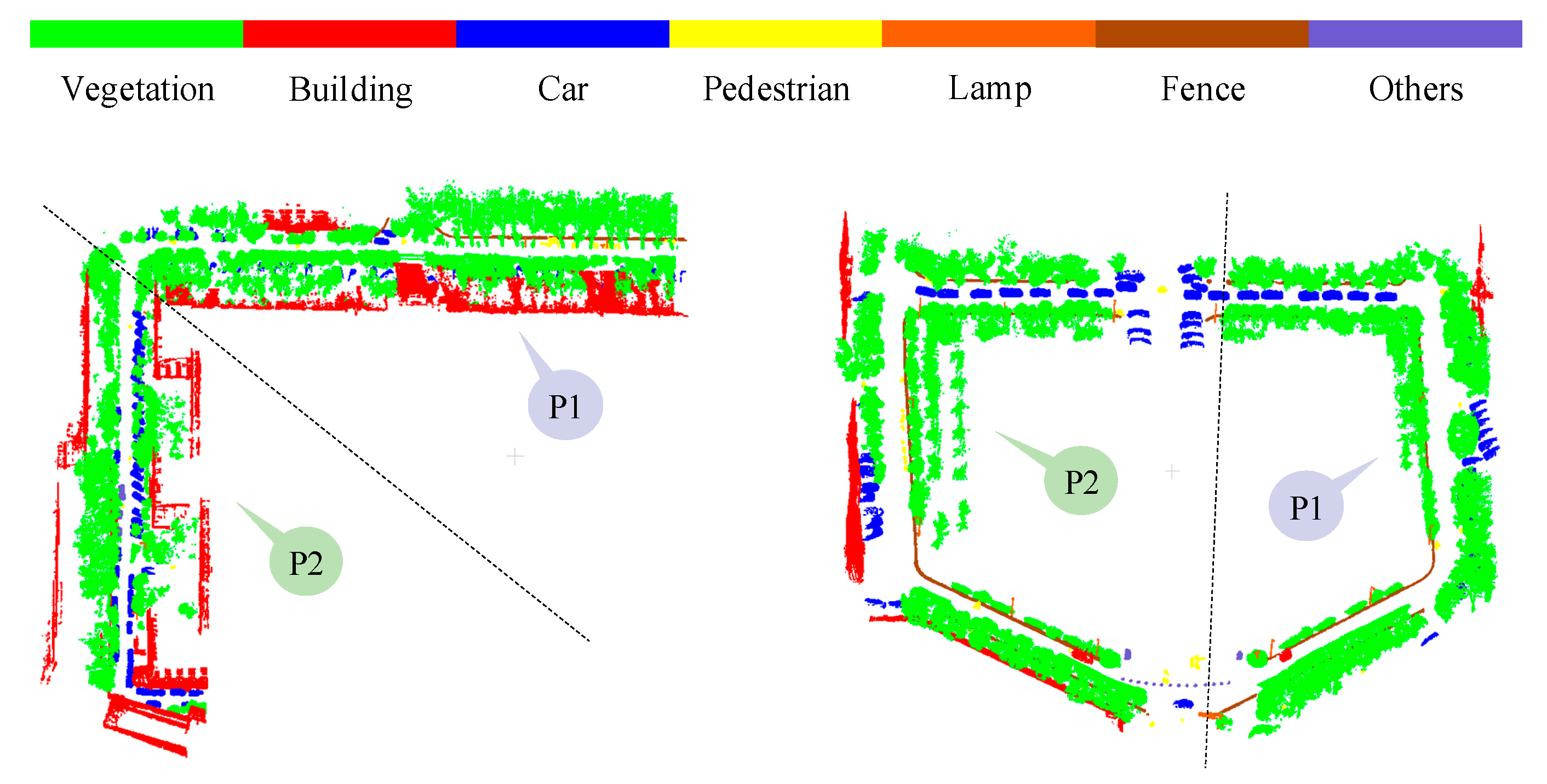

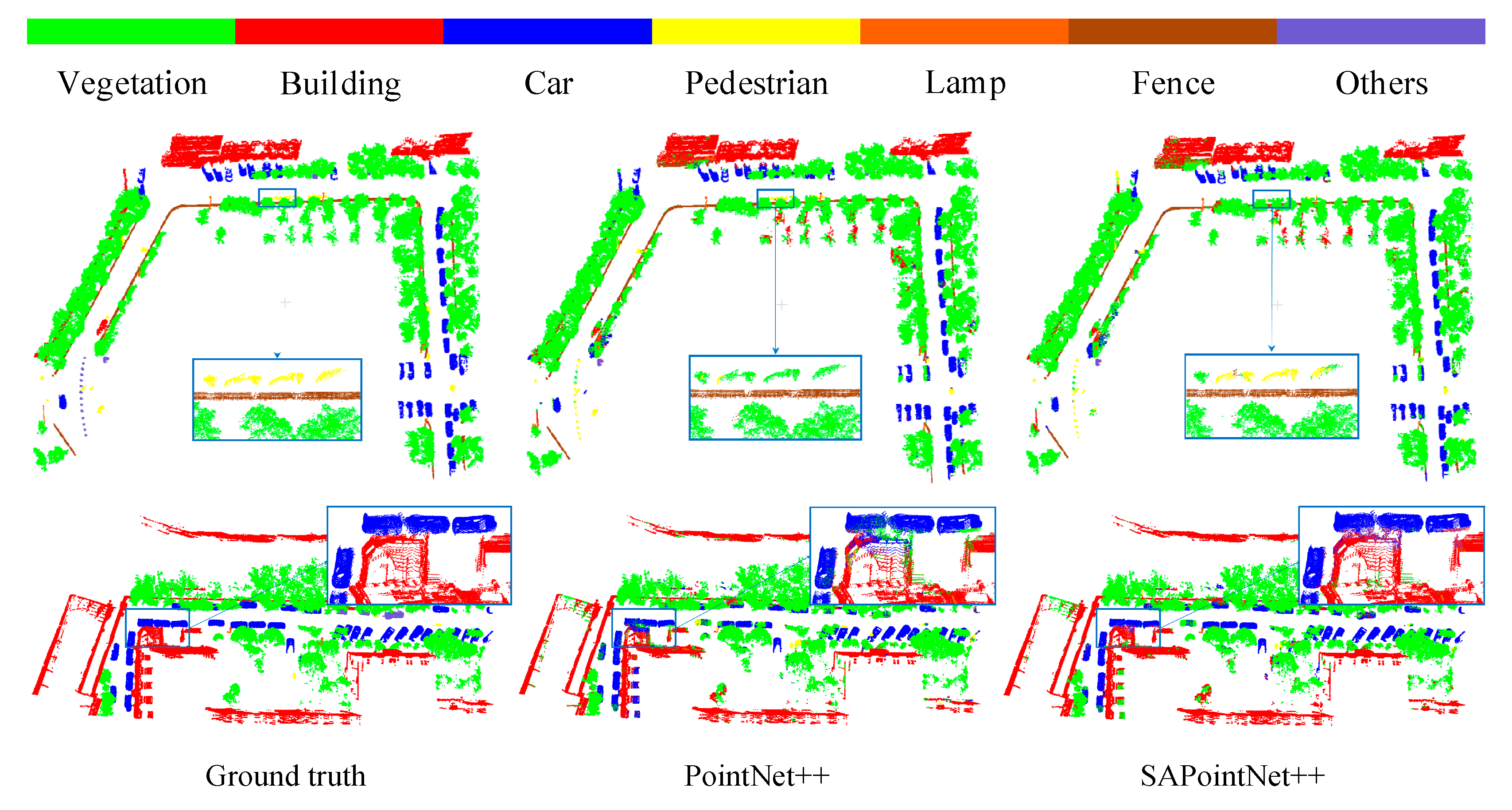

4.3.2. Semantic Segmentation for Outdoor Scene

5. Discussion

5.1. Parametric Sensitivity Analysis

5.2. KNN vs. Ball Query

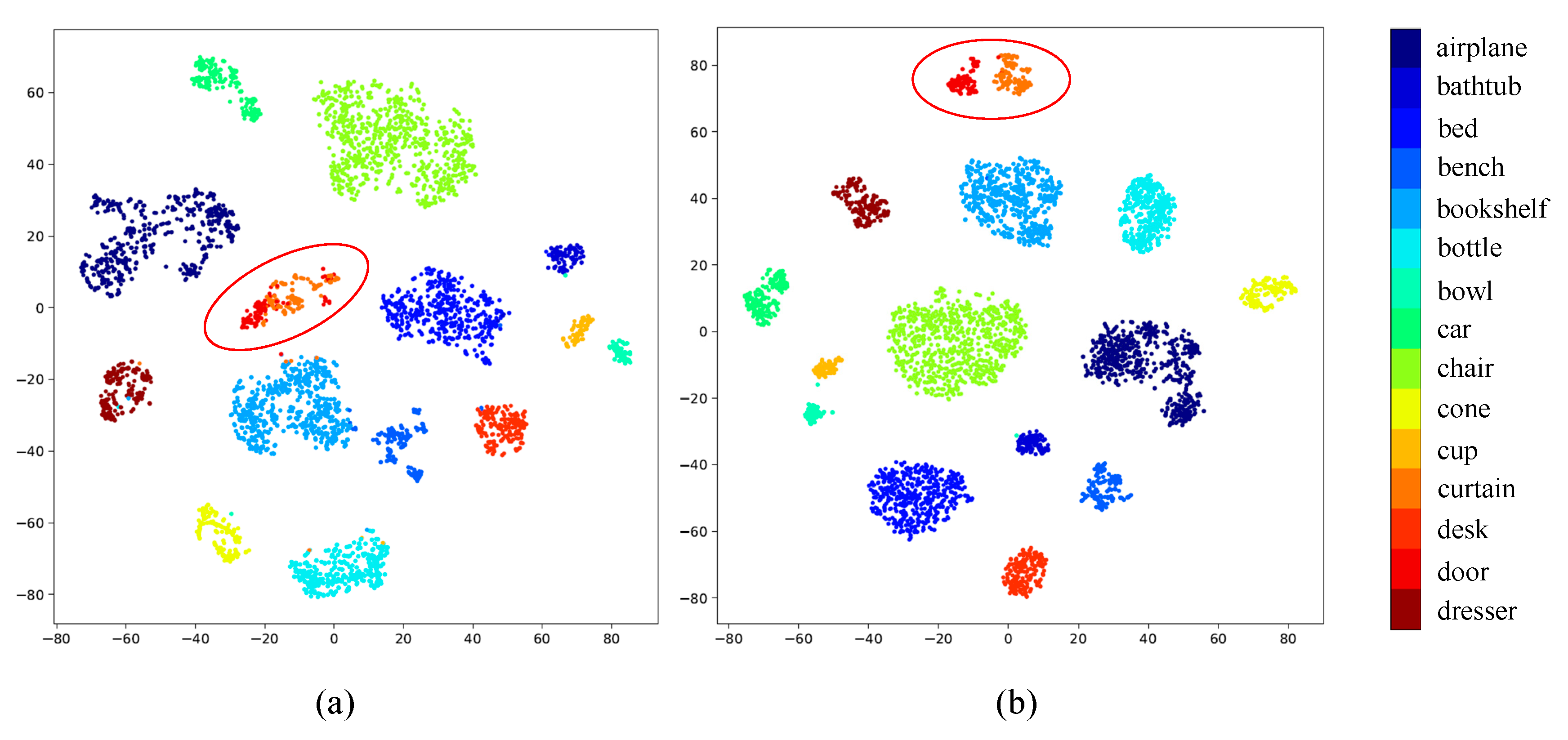

5.3. Latent Visualization

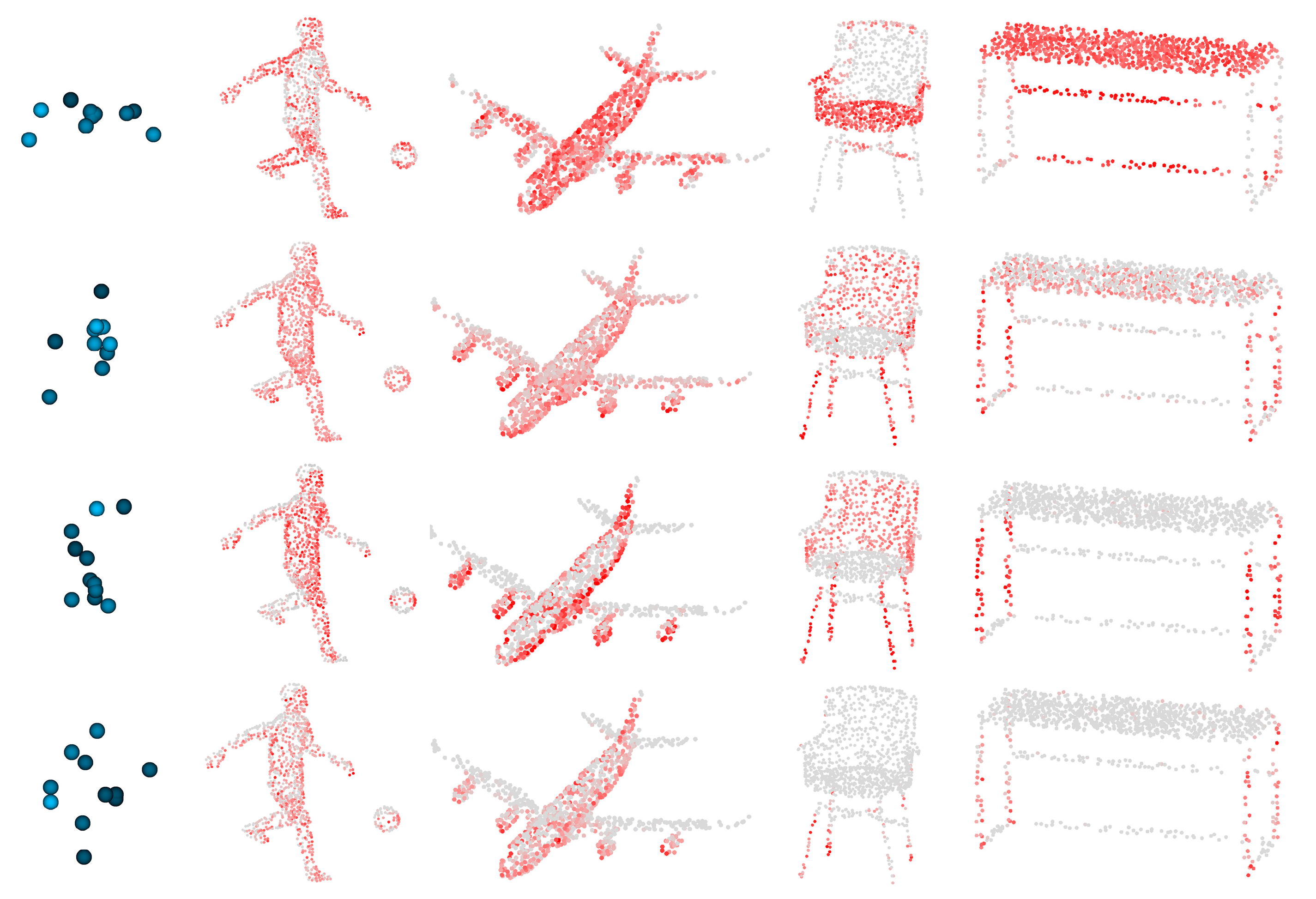

5.4. Visualization of the Learned Kernels

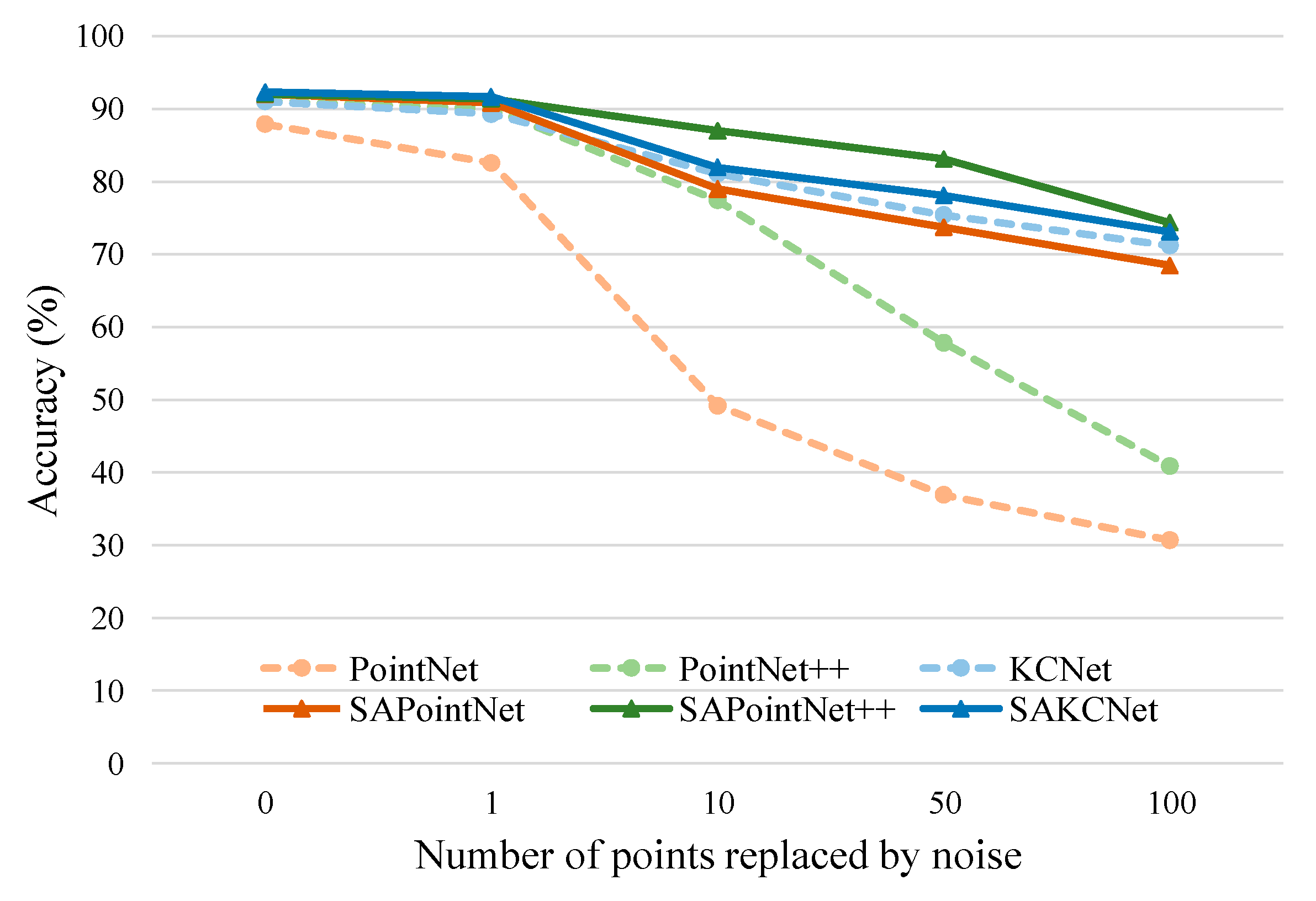

5.5. Robustness Test

6. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

2. Liu, Y.; Fan, B.; Xiang, S.; Pan, C. Relation-Shape Convolutional Neural Network for Point Cloud Analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8895–8904.

3. Zheng, M.; Wu, H.; Li, Y. An adaptive end-to-end classification approach for mobile laser scanning point clouds based on knowledge in urban scenes. Remote Sens. 2019, 11, 186.

4. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Available online: http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networ (accessed on 14 February 2020).

5. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

6. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

7. Wang, L.; Huang, Y.; Hou, Y.; Zhang, S.; Shan, J. Graph Attention Convolution for Point Cloud Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 10296–10305.

8. Graham, B.; Engelcke, M.; van der Maaten, L. 3D Semantic Segmentation with Submanifold Sparse Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9224–9232.

9. Tchapmi, L.P.; Choy, C.B.; Armeni, I.; Gwak, J.; Savarese, S. SEGCloud: Semantic Segmentation of 3D Point Clouds. In Proceedings of the 2017 International Conference on 3D Vision, Qingdao, China, 10–12 October 2017; pp. 537–547.

10. Yang, Z.; Jiang, W.; Xu, B.; Zhu, Q.; Jiang, S.; Huang, W. A convolutional neural network-based 3D semantic labeling method for ALS point clouds. Remote Sens. 2017, 9, 936.

11. Gadelha, M.; Wang, R.; Maji, S. Multiresolution Tree Networks for 3D Point Cloud Processing. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 103–118.

12. Maturana, D.; Scherer, S. VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Hamburg, Germany, 28 September–2 October 2015; pp. 922–928.

13. Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A Deep Representation for Volumetric Shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

14. Wang, L.; Huang, Y.; Shan, J.; He, L. MSNet: Multi-scale convolutional network for point cloud classification. Remote Sens. 2018, 10, 612.

15. Feng, Y.; Zhang, Z.; Zhao, X.; Ji, R.; Gao, Y. GVCNN: Group-View Convolutional Neural Networks for 3D Shape Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 264–272.

16. Su, H.; Maji, S.; Kalogerakis, E.; Learned-Miller, E. Multi-view Convolutional Neural Networks for 3D Shape Recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 945–953.

17. Kalogerakis, E.; Averkiou, M.; Maji, S.; Chaudhuri, S. 3D Shape Segmentation with Projective Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6630–6639.

18. Le, T.; Bui, G.; Duan, Y. A multi-view recurrent neural network for 3D mesh segmentation. Comput. Graph. 2017, 66, 103–112.

19. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. Available online: http://papers.nips.cc/paper/7095-pointnet-deep-hierarchical-feature-learning-on-point-se (accessed on 14 February 2020).

20. Shen, Y.; Feng, C.; Yang, Y.; Tian, D. Mining Point Cloud Local Structures by Kernel Correlation and Graph Pooling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4548–4557.

21. Rusu, R.B.; Blodow, N.; Beetz, M. Fast Point Feature Histograms (FPFH) for 3D registration. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 3212–3217.

22. Salti, S.; Tombari, F.; Di Stefano, L. SHOT: Unique signatures of histograms for surface and texture description. Comput. Vis. Image Underst. 2014, 125, 251–264.

23. Xu, Y.; Ye, Z.; Yao, W.; Huang, R.; Tong, X.; Hoegner, L.; Stilla, U. Classification of LiDAR Point Clouds Using Supervoxel-Based Detrended Feature and Perception-Weighted Graphical Model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 13, 72–88.

24. Niemeyer, J.; Rottensteiner, F.; Soergel, U. Contextual classification of lidar data and building object detection in urban areas. ISPRS J. Photogramm. Remote Sens. 2014, 87, 152–165.

25. Weinmann, M.; Schmidt, A.; Mallet, C.; Hinz, S.; Rottensteiner, F.; Jutzi, B. Contextual classification of point cloud data by exploiting individual 3D neigbourhoods. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 271–278.

26. Richter, R.; Behrens, M.; Döllner, J. Object class segmentation of massive 3D point clouds of urban areas using point cloud topology. Int. J. Remote Sens. 2013, 34, 8408–8424.

27. Anand, A.; Koppula, H.S.; Joachims, T.; Saxena, A. Contextually guided semantic labeling and search for three-dimensional point clouds. Int. J. Robot. Res. 2013, 32, 19–34.

28. Chen, G.; Maggioni, M. Multiscale Geometric Dictionaries for Point-Cloud Data. In Proceedings of the International Conference on Sampling Theory and Applications (SampTA), Singapore, 2–6 May 2011; Volume 4.

29. Zhang, Z.; Zhang, L.; Tong, X.; Mathiopoulos, P.T.; Guo, B.; Huang, X.; Wang, Z.; Wang, Y. A Multilevel Point-Cluster-Based Discriminative Feature for ALS Point Cloud Classification. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3309–3321.

30. Hackel, T.; Wegner, J.D.; Schindler, K. Fast semantic segmentation of 3D point clouds with strongly varying density. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 177–184.

31. Zhang, J.; Lin, X.; Ning, X. SVM-Based classification of segmented airborne LiDAR point clouds in urban areas. Remote Sens. 2013, 5, 3749–3775.

32. Ghamisi, P.; Höfle, B. LiDAR Data Classification Using Extinction Profiles and a Composite Kernel Support Vector Machine. IEEE Geosci. Remote Sens. Lett. 2017, 14, 659–663.

33. Lodha, S.K.; Fitzpatrick, D.M.; Helmbold, D.P. Aerial Lidar Data Classification Using AdaBoost. In Proceedings of the 6th International Conference on 3-D Digital Imaging and Modeling, Montreal, QC, Canada, 21–23 August 2007; pp. 435–442.

34. Niemeyer, J.; Rottensteiner, F.; Soergel, U. Classification of Urban LiDAR Data Using Conditional Random Field and Random Forests. In Proceedings of the Joint Urban Remote Sensing Event, Sao Paulo, Brazil, 21–23 April 2013; Volume 856, pp. 139–142.

35. Ni, H.; Lin, X.; Zhang, J. Classification of ALS point cloud with improved point cloud segmentation and random forests. Remote Sens. 2017, 9, 288.

36. Najafi, M.; Namin, S.T.; Salzmann, M.; Petersson, L. Non-Associative Higher-Order Markov Networks for Point Cloud Classification. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 500–515.

37. Niemeyer, J.; Rottensteiner, F.; Soergel, U. Conditional random fields for LiDAR point cloud classification in complex urban areas. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci. 2012, 3, 263–268.

38. Niemeyer, J.; Rottensteiner, F.; Soergel, U.; Heipke, C. Hierarchical higher order CRF for the classification of airborne lidar point clouds in urban areas. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 655–662.

39. Yan, J.; Shan, J.; Jiang, W. A global optimization approach to roof segmentation from airborne lidar point clouds. ISPRS J. Photogramm. Remote Sens. 2014, 94, 183–193.

40. Le, T.; Duan, Y. PointGrid: A Deep Network for 3D Shape Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9204–9214.

41. Wang, P.-S.; Liu, Y.; Guo, Y.-X.; Sun, C.-Y.; Tong, X. O-CNN: Octree-based Convolutional Neural Networks for 3D Shape Analysis. ACM Trans. Graph. 2017, 36, 1–11.

42. Bronstein, M.M.; Bruna, J.; LeCun, Y.; Szlam, A.; Vandergheynst, P. Geometric Deep Learning: Going beyond Euclidean data. IEEE Signal Process. Mag. 2017, 34, 18–42.

43. Simonovsky, M.; Komodakis, N. Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 29–38.

44. Yi, L.; Su, H.; Guo, X.; Guibas, L.J. SyncSpecCNN: Synchronized Spectral CNN for 3D Shape Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6584–6592.

45. Landrieu, L.; Simonovsky, M. Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

46. Engelmann, F.; Kontogianni, T.; Hermans, A.; Leibe, B. Exploring Spatial Context for 3D Semantic Segmentation of Point Clouds. In Proceedings of the IEEE International Conference on Computer Vision Workshop, Venice, Italy, 22–29 October 2017; pp. 716–724.

47. Ravanbakhsh, S.; Schneider, J.; Poczos, B. Deep Learning with Sets and Point Clouds. arXiv Prepr. 2016, arXiv:1611.04500.

48. Zaheer, M.; Kottur, S.; Ravanbakhsh, S.; Poczos, B.; Salakhutdinov, R.; Smola, A. Deep Sets. Available online: http://papers.nips.cc/paper/6931-deep-sets (accessed on 14 February 2020).

49. Xu, Y.; Fan, T.; Xu, M.; Zeng, L.; Qiao, Y. SpiderCNN: Deep Learning on Point Sets with Parameterized Convolutional Filters. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 87–102.

50. Komarichev, A.; Zhong, Z.; Hua, J. A-CNN: Annularly Convolutional Neural Networks on Point Clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7421–7430.

51. Thomas, H.; Qi, C.R.; Deschaud, J.-E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. KPConv: Flexible and Deformable Convolution for Point Clouds. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6411–6420.

52. Atzmon, M.; Maron, H.; Lipman, Y. Point convolutional neural networks by extension operators. ACM Trans. Graph. 2018, 37, 1–12.

53. Armeni, I.; Sener, O.; Zamir, A.R.; Jiang, H.; Brilakis, I.; Fischer, M.; Savarese, S. 3D Semantic Parsing of Large-Scale Indoor Spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1534–1543.

54. van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Method | Accuracy | Points | Params | Inference Speed | Device |
|---|---|---|---|---|---|
| PointNet [1] | 87.10% | 1024 | 0.8 M | 50.5 batch/s | GTX 1060 |
| SAPointNet (ours) | 92.02% | 1024 | 0.8 M | 9.8 batch/s | GTX 1060 |
| PointNet++ [19] | 90.07% | 1024 | 1.5 M | 6.1 batch/s | GTX 1060 |
| SAPointNet++ (ours) | 92.05% | 1024 | 1.5 M | 5.6 batch/s | GTX 1060 |
| KCNet [20] | 91.00% | 1024 | 0.9 M | 8.8 batch/s | GTX 1060 |
| SAKCNet (ours) | 92.40% | 1024 | 0.9 M | 8.6 batch/s | GTX 1060 |
| KPConv (deform)‡ [51] | 90.08% | ~1700 | 15.2 M | 3.5 batch/s | GTX 1060 |
| KPConv (rigid) [51] | 92.7% | ~6800 | 14.3 M | 8.7 batch/s | RTX 2080Ti |
| KPConv (deform) [51] | 92.9% | ~6800 | 15.2 M | 8.0 batch/s | RTX 2080Ti |

| Method | mIoU | Ceiling | Floor | Wall | Beam | Column | Window | Door | Chair | Table | Bookcase | Sofa | Board | Clutter |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointNet | 41.09 | 88.80 | 97.33 | 69.80 | 0.05 | 3.92 | 46.26 | 10.76 | 52.61 | 58.93 | 40.28 | 5.85 | 26.38 | 33.22 |
| SAPointNet | 45.62 | 90.06 | 97.56 | 72.56 | 0.00 | 3.19 | 42.44 | 5.42 | 68.03 | 70.51 | 48.47 | 22.22 | 30.54 | 42.09 |
| PointNet++ | 50.79 | 91.40 | 97.08 | 75.59 | 0.02 | 0.74 | 52.07 | 24.44 | 72.77 | 68.43 | 55.59 | 32.77 | 42.94 | 46.47 |
| SAPointNet++ | 53.12 | 92.01 | 97.69 | 75.62 | 0.00 | 2.77 | 52.85 | 29.48 | 77.39 | 72.56 | 56.55 | 36.87 | 46.55 | 50.20 |
| KCNet | 46.20 | 91.79 | 97.50 | 73.79 | 0.00 | 5.29 | 45.09 | 6.43 | 67.48 | 67.20 | 50.40 | 21.90 | 28.53 | 45.20 |
| SAKCNet | 48.27 | 91.44 | 97.97 | 72.35 | 0.00 | 6.22 | 47.43 | 4.73 | 68.20 | 67.25 | 55.11 | 32.61 | 38.12 | 46.08 |

| Method | mIoU | Vegetation | Building | Car | Pedestrian | Lamp | Fence | Others |
|---|---|---|---|---|---|---|---|---|
| PointNet | 27.81 | 65.44 | 49.52 | 43.89 | 3.92 | 0.26 | 29.39 | 2.24 |
| SAPointNet | 42.82 | 72.25 | 55.99 | 57.24 | 25.35 | 26.82 | 58.71 | 3.35 |
| PointNet++ | 52.52 | 82.28 | 75.78 | 73.63 | 28.62 | 26.85 | 72.10 | 8.38 |
| SAPointNet++ | 56.18 | 85.71 | 76.48 | 74.40 | 32.27 | 35.85 | 79.14 | 9.44 |
| KCNet | 44.07 | 71.65 | 58.25 | 66.02 | 23.24 | 28.02 | 57.61 | 3.72 |
| SAKCNet | 46.43 | 71.81 | 57.27 | 70.91 | 26.52 | 33.32 | 60.66 | 4.56 |
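
Because mIoU is an unweighted mean over classes, a rare class such as Others counts as much as Vegetation. The short check below recomputes the mIoU column of the seven-class outdoor table above as the plain mean of each row's per-class IoUs; the SAPointNet Vegetation entry is read as 72.25, the value consistent with its reported mIoU. This is an illustrative consistency check, not part of the authors' evaluation code, and the names `ROWS` and `recomputed_miou` are hypothetical.

```python
# Per-class IoU (%) copied from the outdoor segmentation table, in column order:
# Vegetation, Building, Car, Pedestrian, Lamp, Fence, Others; then the reported mIoU.
ROWS = {
    "PointNet":     ([65.44, 49.52, 43.89, 3.92, 0.26, 29.39, 2.24], 27.81),
    "SAPointNet":   ([72.25, 55.99, 57.24, 25.35, 26.82, 58.71, 3.35], 42.82),
    "PointNet++":   ([82.28, 75.78, 73.63, 28.62, 26.85, 72.10, 8.38], 52.52),
    "SAPointNet++": ([85.71, 76.48, 74.40, 32.27, 35.85, 79.14, 9.44], 56.18),
    "KCNet":        ([71.65, 58.25, 66.02, 23.24, 28.02, 57.61, 3.72], 44.07),
    "SAKCNet":      ([71.81, 57.27, 70.91, 26.52, 33.32, 60.66, 4.56], 46.43),
}


def recomputed_miou(ious):
    """Unweighted mean: every class counts equally, however rare it is."""
    return sum(ious) / len(ious)


for name, (ious, reported) in ROWS.items():
    miou = recomputed_miou(ious)
    # Table entries are rounded to two decimals, so allow a last-digit difference.
    assert abs(miou - reported) < 0.01, (name, miou, reported)
    print(f"{name:13s} recomputed {miou:7.3f}  reported {reported:6.2f}")
```

Every row agrees with the reported mIoU to within 0.01. For SAKCNet the recomputed mean is 46.436, which would round to 46.44 rather than 46.43, most likely because the per-class entries are themselves rounded.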

| Convolution kernels | 16 | 16 | 16 | 32 | 32 | 32 | 48 | 48 | 48 |
|---|---|---|---|---|---|---|---|---|---|
| Kernel points | 5 | 11 | 17 | 5 | 11 | 17 | 5 | 11 | 17 |
| σ = 0.01 | 89.79 | 90.03 | 90.07 | 89.81 | 90.08 | 90.19 | 89.83 | 90.32 | 90.68 |
| σ = 0.05 | 90.92 | 91.61 | 91.82 | 91.21 | 91.97 | 92.02 | 91.45 | 92.01 | 92.05 |
| σ = 0.1 | 90.68 | 91.33 | 91.45 | 91.01 | 91.75 | 91.71 | 91.31 | 91.69 | 91.85 |

| Method | KNN | Ball Query |
|---|---|---|
| SAPointNet | 92.02 | 91.04 |
| SAPointNet++ | 92.05 | 91.33 |
| SAKCNet | 92.40 | 91.23 |

| Method | S3DIS (KNN) | S3DIS (Ball Query) | WHU (KNN) | WHU (Ball Query) |
|---|---|---|---|---|
| SAPointNet | 45.03 | 45.62 | 36.87 | 42.82 |
| SAPointNet++ | 52.51 | 53.12 | 55.19 | 56.18 |
| SAKCNet | 47.09 | 48.27 | 38.53 | 46.43 |
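
Section 5.2 compares two ways of forming the local neighborhood that SAC matches against its kernels: k-nearest neighbors (KNN), which fixes the number of points, and ball query, which fixes the radius. The following is a minimal pure-Python sketch of both queries. The function names are hypothetical, and the padding rule for ball query (repeat the first index found until k points are collected) is an assumption borrowed from the PointNet++ [19] reference code rather than a detail stated here.

```python
def dist2(a, b):
    """Squared Euclidean distance between two 3D points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_query(points, center, k):
    """Indices of the k points nearest to `center`: fixed count, data-dependent radius."""
    order = sorted(range(len(points)), key=lambda j: dist2(points[j], center))
    return order[:k]


def ball_query(points, center, radius, k):
    """Up to k indices strictly within `radius` of `center`: fixed radius, variable count.

    Assumed padding convention (as in the PointNet++ reference code): when fewer
    than k points fall inside the ball, the first index found is repeated so that
    every group has exactly k entries.
    """
    r2 = radius * radius
    found = [j for j, p in enumerate(points) if dist2(p, center) < r2][:k]
    if not found:
        return []  # empty ball: the caller must decide how to handle it
    return found + [found[0]] * (k - len(found))


# Hypothetical toy cloud: a tight cluster near the origin plus two distant points.
pts = [(0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.0, 0.01, 0.0), (0.01, 0.01, 0.0),
       (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
print(knn_query(pts, pts[0], k=5))                # [0, 1, 2, 3, 4]
print(ball_query(pts, pts[0], radius=0.05, k=5))  # [0, 1, 2, 3, 0]
```

In the toy cloud, KNN with k = 5 reaches out to a point at distance 1 to fill its quota, whereas ball query with r = 0.05 keeps the group local and pads it by repeating index 0. In the tables above, KNN gives the higher classification accuracy and ball query the higher segmentation mIoU for all three SAC variants; the sketch by itself does not explain that difference.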

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, L.; Liu, Y.; Zhang, S.; Yan, J.; Tao, P. Structure-Aware Convolution for 3D Point Cloud Classification and Segmentation. Remote Sens. 2020, 12, 634. https://doi.org/10.3390/rs12040634


Chicago/Turabian style: Wang, Lei, Yuxuan Liu, Shenman Zhang, Jixing Yan, and Pengjie Tao. 2020. "Structure-Aware Convolution for 3D Point Cloud Classification and Segmentation" Remote Sensing 12, no. 4: 634. https://doi.org/10.3390/rs12040634
