1. Introduction

Hyperspectral satellite images have significant advantages in the recognition of ground contents, but they are not easily understood by the human eye. A hyperspectral image usually has hundreds of bands quantized to 16 bits, which are converted to an 8-bit Red-Green-Blue (RGB) image for screen presentation, i.e., hyperspectral visualization. The visualization of hyperspectral images is primarily needed by data centers with preprocessing or distribution systems. For these systems, the visualization produces quick views that help users judge the availability of selected hyperspectral images. Besides visual recognition, the visualization can improve the accuracy of registration and classification of hyperspectral images because the spatial information is aggregated to present enriched textural and structural characteristics.

Many algorithms have been proposed for hyperspectral visualization, which can be categorized into two groups, namely band-selection-based and dimension-reduction-based. Dimension-reduction-based methods are then classed into linear-projection-based and nonlinear-projection-based to account for the adaptive strategy.

Band-selection-based methods choose three separate bands from all the hyperspectral bands. Manually specifying the three bands depends on experience, so band selection is usually formulated as an optimization for purposes such as higher class separability or perceptual color distance. For example, Su et al. [1] used minimum estimated abundance covariance for band selection of true, infrared, and false color composites. Zhu et al. [2] used dominant set extraction to search a graph formulation of band selection, which was measured with structure awareness for band informativeness and independence. Amankwah and Aldrich [3] used both mutual information and spatial information to select the bands. Yuan et al. [4] proposed a multitask sparsity pursuit framework with compressive-sensing-based descriptors and a joint sparsity constraint to select the bands. Later, Yuan et al. [5] proposed a dual clustering method that includes contextual information in the clustering process, a new descriptor revealing the image context, and a strategy for selecting the cluster representatives. Demir et al. [6] utilized a one-bit transform to select suitable color bands at low complexity for dedicated hardware implementation.

It is commonly understood that the spectral range of each hyperspectral band is too narrow to hold rich spatial information. Therefore, more bands are involved by means of dimension reduction algorithms. Linear-projection-based methods were the first to be proposed for this purpose. For example, Du et al. [7] used principal component analysis (PCA), independent component analysis (ICA), Fisher’s linear discriminant analysis, and their variations for hyperspectral visualization and then compared their performance. Zhu et al. [8] used correlation coefficient and mutual information as the criteria to select three independent components with ICA for color representation. Meka and Chaudhuri [9] visualized hyperspectral images by summing up all the spectral points at each pixel location and optimizing the weights by minimizing the 3-D total-variation norm to improve statistical characteristics of the fused image. Jacobson and Gupta [10] investigated the CIE 1964 tristimulus color matching envelopes and transformed them to the sRGB color space to obtain fixed linear spectral weighting envelopes. These weights could stretch the visual bands of a hyperspectral image for the linear combination of the red, green, and blue bands, respectively. Algorithms based on PCA or color-matching functions (CMFs) do not take image content into account.

In addition to linear projection, nonlinear dimension reduction methods have also been exploited for hyperspectral visualization. Najim et al. [11] employed the modified stochastic proximity embedding algorithm to reduce the spectral dimension while avoiding similar colors for dissimilar spectral signatures. Kotwal and Chaudhuri [12] suggested a hierarchical grouping scheme and bilateral filtering for hyperspectral visualization, preserving edges and even minor details without introducing visible artifacts. In one of the latest works, Kang et al. [13] proposed the decolorization-based hyperspectral image visualization (DHV) framework. In the DHV framework, hundreds of hyperspectral bands are averaged into nine bands, which are then combined into three bands by means of decolorization algorithms [14,15,16,17] designed for natural images.

Dimension reduction methods do not guarantee natural colors. Therefore, most of them cannot produce good colors, except for the work based on color-matching functions (CMFs) in [10], where CIE 1964 was considered. Motivated by [10], many variations were proposed. Mahmood and Scheunders [18] used the wavelet transform for hyperspectral visualization by fusing CMFs at the low-level subbands and denoising at the high-level subbands. Moan et al. [19] excluded irrelevant bands by comparing entropy between bands, segmented the remaining bands by thresholding the CMFs, and used the normalized information at second and third orders to select the bands with minimal redundancy and maximal informative content. Sattar et al. [20] used dimension reduction methods, including PCA, maximum noise fraction, and ICA, to get nine bands from a hyperspectral image, and then combined them with the CMF stretching for higher class separability and consistent rendering. Masood et al. [21] proposed spectral residual and phase quaternion Fourier transform to generate saliency maps in both spatial and spectral domains, which were concatenated with the hyperspectral bands and CMFs to linearly combine the color image.

Although CMF-based linear methods can achieve good color and details, researchers have noticed that better visualization methods should adapt to local characteristics, i.e., use different visualization strategies for different categories of pixels. To illustrate more salient features, Cui et al. [22] clustered the spectral signatures of image pixels, mapped the points to the human vision color space, and then performed convex optimization on cluster representatives and interpolation of the remaining spectral samples. Long et al. [23] introduced the principle of equal variance to divide all hyperspectral bands into three subgroups of uniformly distributed energy, and treated normal pixels and outliers separately using two different mapping methods to enhance global contrast. Cai et al. [24] proposed a feature-driven multilayer visualization technique that analyzes the spatial distribution and importance of each endmember and then visualizes it adaptively based on its commonness. Erturk et al. [25] used bilateral filters to extract the base and detail images, reducing contrast in the base image but preserving the detail so that the significance of the detail image can be enhanced, which is a high-dynamic-range (HDR) technique for display devices. Mignotte [26] used the criterion of preserving spectral distance to measure the agreement between the distance of the spectra associated with each pair of pixels and their perceptual color distance in the final three-band image, which led to the optimization of a nonstationary Markov random field. Liao et al. [27] proposed a fusion approach based on constrained manifold learning, which preserves the image structures by forcing pixels with similar signatures to be displayed with similar colors.

Although many algorithms have been proposed, visualization methods for the near-infrared spectrum are still lacking. With the increasing number of hyperspectral sensors, more and more images are captured in the near-infrared range beyond 760 nm. For example, the spectral range of the shortwave infrared (SWIR) hyperspectral camera mounted on the TIANGONG-1 is 800–2800 nm, and the atmospheric detector mounted on the GAOFEN-5 is also in a similar spectral range. Commonly used algorithms focus on the visualization of images from sensors such as AVIRIS and ROSIS that span the visible light range [28,29,30], and they may not be suitable for visualizing data from near-infrared detectors.

When near-infrared bands are concerned, it is still challenging to show hyperspectral images with natural-looking colors. Band-selection-based and CMF-based methods may fail because they rely on the visible light bands for natural colors. Dimension reduction tends to produce unnatural colors even for the visible light bands. When the visible light bands are missing, none of the above-mentioned methods can guarantee quick-view images with natural colors. As an example, our earlier method [31] becomes ineffective because it requires visible light bands to correct the fused colors.

In this paper, a deep convolutional neural network is designed for the visualization of near-infrared hyperspectral bands. It is an end-to-end model, i.e., a hyperspectral image is fed into the network, which outputs a three-band image for visualization. Unlike the experience-driven methods for hyperspectral visualization, the proposed method employs supervised learning to train the network toward the expected natural colors. This is accomplished with repeated observation data. Multispectral images covering the red, green, and blue spectra can be easily obtained alongside the hyperspectral images, and they offer the targets that best describe the terrestrial content of the same place and time. These multispectral images guide the network to fuse natural colors while maintaining good detail.

The main contributions of this article are as follows.

The visualization of near-infrared hyperspectral images is discussed in detail for the first time in response to the growing trend.

An end-to-end deep convolution network is designed to visualize hyperspectral images, which is very straightforward and flexible to adapt to a variety of transformation styles.

A discriminator network is introduced to improve the training quality.

The rest of this article is arranged as follows. In Section 2, the proposed method is presented, where the adversarial framework, network architecture, training, and preprocessing are described in detail. In Section 3 and Section 4, the proposed method is tested on EO-1 Hyperion data without visible light bands and compared with five state-of-the-art visualization methods to demonstrate its feasibility. In Section 5, the new method is tested on the TIANGONG-1 shortwave infrared bands. Section 6 presents an extended experiment for the visualization of EO-1 Hyperion data where visible light bands are kept. Possible constraints and extensions are discussed in Section 7. Section 8 gives the conclusion.

2. Methodology

The mapping from hyperspectral images to multispectral images may not be a strict dimension reduction process. In our experience, objects should be rendered in fixed colors at a given time. This process implicitly introduces an understanding of the content of the image. We try to describe this mapping process here. The first step is to classify the features, that is, to assign small image blocks to different feature categories, such as woodland or artificial buildings. The second step is to find shallow features such as structures and textures. The third step is to color each shallow feature so that it can be understood by the human eye when it is restored back to the image. These mapping steps can be explained with an encoder-decoder system. The first step makes up an encoder for feature extraction, while the second and third steps correspond to a decoder for image reconstruction.

Recent encoder-decoder methods are implemented using deep convolutional neural networks, which have been widely used for image processing. In image segmentation, features are extracted using deep convolutional networks and then aggregated and rendered as labeled images. In conditional image generation, the encoder part of the deep convolutional network learns the conditional image features, merges them with the random features, and generates a new image through the decoder. In image restoration, the basic features of defective images are learned by the encoder and then sent to the decoder to repair missing information. These tasks are essentially the same as hyperspectral visualization as we understand it. Therefore, we harness an encoder-decoder neural network to visualize near-infrared hyperspectral images.

2.1. Framework with Neural Networks

The aim of hyperspectral visualization is to fuse a three-band image $I^{M}$ from a hyperspectral input image $I^{H}$. For a hyperspectral image, we describe $I^{H}$ by a real-valued tensor of size $W \times H \times C$ and $I^{M}$ by $W \times H \times 3$, respectively. Here, $W$, $H$, and $C$ denote the width, height, and number of channels, respectively.

Our ultimate goal is to train a generating function $G$ that estimates for a given hyperspectral input image its corresponding three-band multispectral counterpart. To achieve this, a generator network is trained as a feed-forward convolutional neural network (CNN) $G_{\theta_G}$ parameterized by $\theta_G$. Here $\theta_G = \{W_{1:L}; b_{1:L}\}$ denotes the weights and biases of an $L$-layer network and is obtained by optimizing a specific loss function $l^{G}$. For training input images $I^{H}_{n}$, $n = 1, \ldots, N$, with corresponding output images $I^{M}_{n}$, $n = 1, \ldots, N$,

$$\hat{\theta}_G = \arg\min_{\theta_G} \frac{1}{N} \sum_{n=1}^{N} l^{G}\big(G_{\theta_G}(I^{H}_{n}),\, I^{M}_{n}\big)$$

is solved, where $N$ denotes the number of training samples. In training, $I^{M}_{n}$ is obtained by finding the multispectral images whose spatial resolution and captured time are similar to $I^{H}_{n}$.

In the remainder of this section, the architecture, loss function, adversarial-based improvement, and data processing of this network will be introduced. For convenience, the proposed method is called Hyperspectral Visualization of Convolutional Neural Networks, or HVCNN for short.

2.2. Generative Network: Architecture

To describe the encoding-decoding process, the U-Net architecture [

32,

33] is used. The encoder is a downsampled convolutional network to aggregate features, where the stride between adjacent layers is 2. The typical input is a 128 × 128-sized multichannel image, then the encoder network has 7 layers to output a small number of high-level features. The filter sizes are 4 × 4 for all convolutional layers. The possible depth values are 64, 128, 256 as listed in

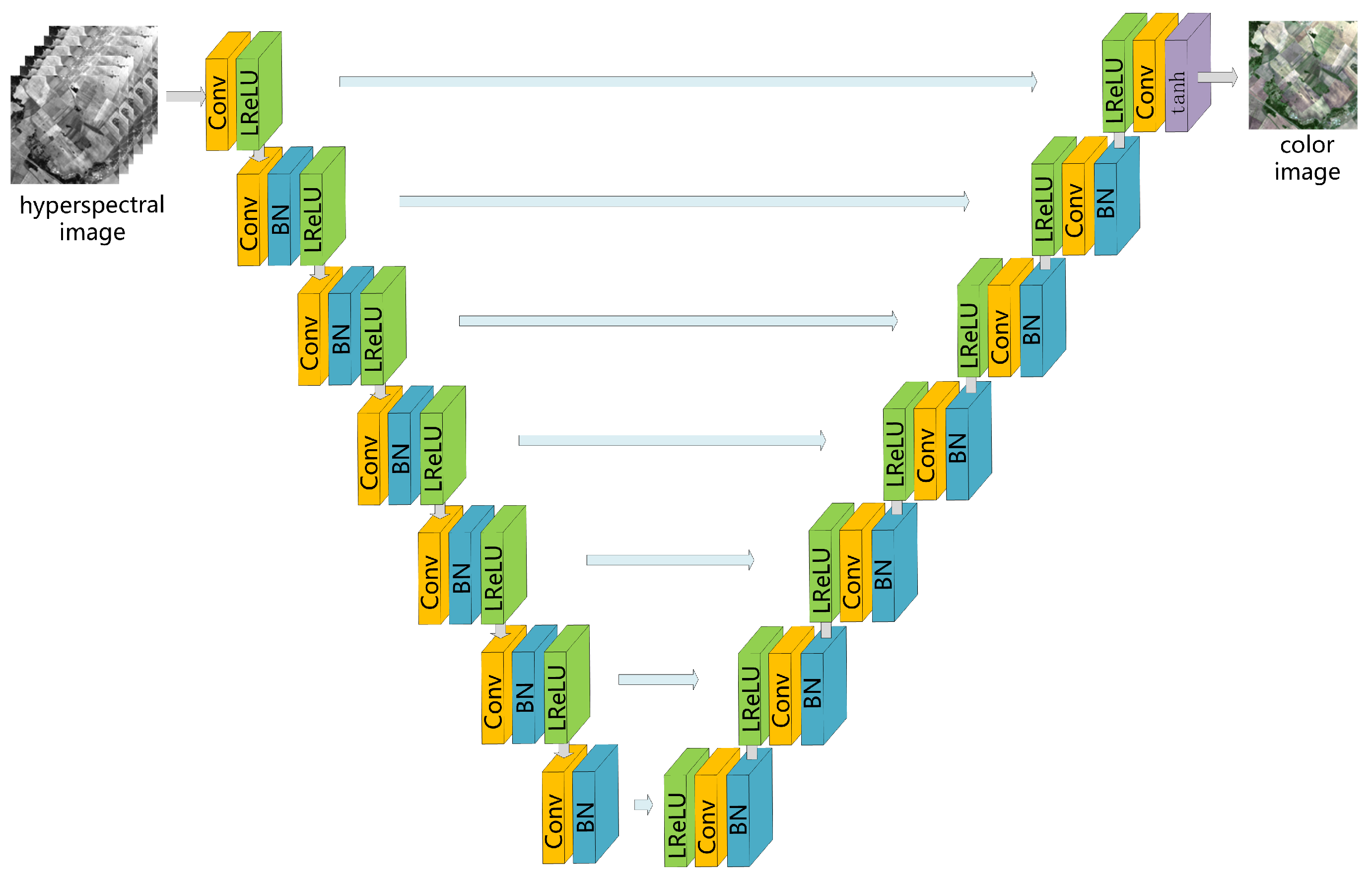

Table 1. The first convolutional layer is followed by a Leaky-ReLU function for activation, while other convolutional layers are followed by a batch norm (BN) layer and a Leaky-ReLU layer.

Symmetrical to the encoder, the decoder part contains seven 4 × 4 transposed convolutions with a stride of 2. Low-level features have higher resolution to hold position and detail, but they are noisy and carry little semantic information. In contrast, high-level features have stronger semantic information, but details are not perceivable. Concatenation is therefore used to combine low-level features and high-level features to improve model performance. In other words, the input of each transposed convolutional layer in the decoder is a thicker feature formed by concatenating the output of the previous layer and the corresponding encoder layer output. The function tanh is used for activation of the last convolutional layer. Therefore, the entire network has a total of 14 convolutional layers, as shown in Figure 1.

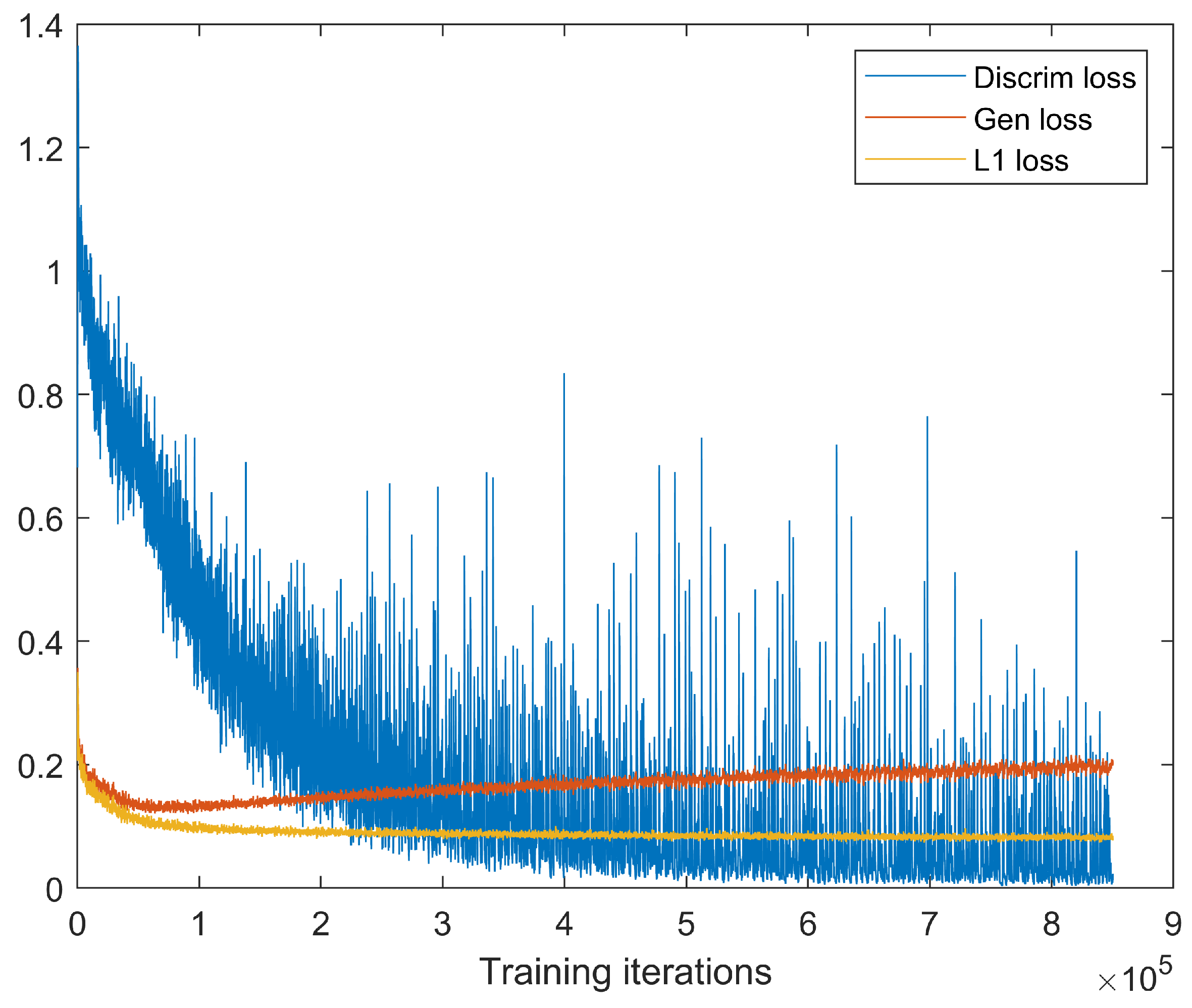
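The layer arithmetic of the encoder-decoder described above can be checked with a short shape trace. This is only a sketch under stated assumptions: a padding of 1 for every 4 × 4 convolution and the depth sequence `(64, 128, 256, 256, 256, 256, 256)` are hypothetical choices (the text names only the depth values 64, 128, and 256 and refers to Table 1 for the actual layout), and `trace_unet` is an illustrative helper, not part of the proposed method.

```python
def conv_out(size, kernel=4, stride=2, pad=1):
    """Spatial size after a strided convolution (pad=1 is an assumption)."""
    return (size + 2 * pad - kernel) // stride + 1


def deconv_out(size, kernel=4, stride=2, pad=1):
    """Spatial size after a transposed convolution with the same settings."""
    return (size - 1) * stride - 2 * pad + kernel


def trace_unet(input_size=128, out_channels=3,
               depths=(64, 128, 256, 256, 256, 256, 256)):
    """Trace feature-map shapes through a 7 + 7 layer U-Net.

    Returns (encoder, decoder): encoder entries are (size, channels_out),
    decoder entries are (size, channels_in, channels_out).
    The depth sequence is hypothetical.
    """
    encoder = []
    size = input_size
    for depth in depths:
        size = conv_out(size)
        encoder.append((size, depth))

    decoder = []
    # Decoder output depths mirror the encoder; the last layer emits 3 bands.
    out_depths = list(reversed(depths[:-1])) + [out_channels]
    prev_channels = depths[-1]
    for i, depth in enumerate(out_depths):
        in_channels = prev_channels
        if i > 0:
            # Skip connection: concatenate the encoder output that has the
            # same spatial size as the previous decoder output.
            in_channels += encoder[len(depths) - 1 - i][1]
        size = deconv_out(size)
        decoder.append((size, in_channels, depth))
        prev_channels = depth
    return encoder, decoder


if __name__ == "__main__":
    enc, dec = trace_unet()
    for layer in enc:
        print("encoder", layer)
    for layer in dec:
        print("decoder", layer)
```

Under these assumptions, a 128 × 128 input is reduced to a 1 × 1 feature map after seven stride-2 convolutions and restored to 128 × 128 after seven transposed convolutions, which is consistent with the 14 convolutional layers stated in the text.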

2.3. Adversarial Network: Architecture and Loss Function

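It is commonly known that the performance of a generator can be improved by a discriminator, which leads to a generative adversarial network (GAN). To distinguish the real target image from the generated color image samples, a discriminator network $D$ is further defined with parameter $\theta_D$. We adopt the idea in GANs where $D_{\theta_D}$ is optimized along with $G$ in an alternating manner to solve the minimum-maximum adversarial problem under the expectation $\mathbb{E}$ and distribution $p$:

$$\min_{\theta_G} \max_{\theta_D} \; \mathbb{E}_{I^{M} \sim p_{\mathrm{train}}(I^{M})}\Big[\log D_{\theta_D}\big([I^{H}, I^{M}]\big)\Big] + \mathbb{E}_{I^{H} \sim p_{G}(I^{H})}\Big[\log\Big(1 - D_{\theta_D}\big([I^{H}, G_{\theta_G}(I^{H})]\big)\Big)\Big]$$

Here $I^{H}$ is the hyperspectral input, $I^{M}$ is the three-band target, and $[\cdot, \cdot]$ denotes that two images are combined into one image as the input of the discriminator.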

This formula trains a high-quality generative model G to fool the discriminator D as much as possible. The discriminator D is trained to distinguish generated images from real images. Alternate training allows the generator and discriminator to find high-quality solutions in each single-step iteration, and they upgrade as the opponents upgrade. In this way, the generator can learn a solution that is highly similar to the target image.

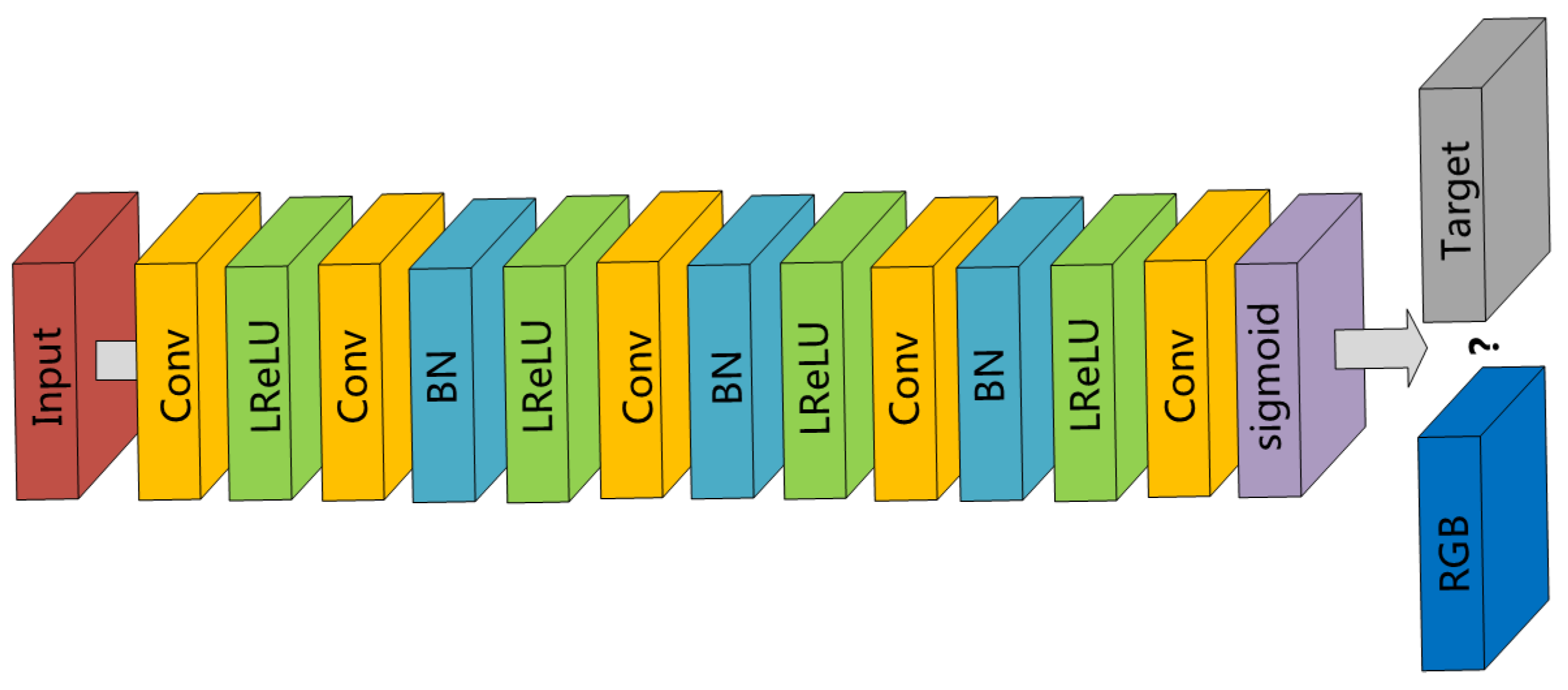

In our method, the adversarial network is also built upon CNNs (see Figure 2). It contains five convolutional layers. The sigmoid function is used in the last layer as the activation function to estimate the probability of the group to which each image belongs.

2.4. Generative Network: Loss Function

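The definition of the generative loss function $l^{G}$ is critical for the performance of the generator network. In our model, the generative loss is formulated as the weighted sum of two components to be minimized: a content loss and an adversarial loss.

The content loss $l^{G}_{\mathrm{MAE}}$ is defined with the $\ell_1$ norm, i.e., pixel-wise mean absolute error (MAE). MAE represents the average magnitude of the prediction error, regardless of the direction of the error. Compared to the mean square error (MSE), which is easier to optimize, MAE is more robust to outliers. $l^{G}_{\mathrm{MAE}}$ is calculated with

$$l^{G}_{\mathrm{MAE}} = \frac{1}{N} \sum_{n=1}^{N} \frac{1}{3WH} \left\| G_{\theta_G}(I^{H}_{n}) - I^{M}_{n} \right\|_{1}$$

The adversarial loss $l^{G}_{\mathrm{adv}}$ is defined based on the probability of the discriminator $D_{\theta_D}$ on all training samples as

$$l^{G}_{\mathrm{adv}} = \sum_{n=1}^{N} -\log D_{\theta_D}\big(G_{\theta_G}(I^{H}_{n})\big)$$

where $D_{\theta_D}(G_{\theta_G}(I^{H}))$ denotes the probability that the reconstructed image $G_{\theta_G}(I^{H})$ from the input image $I^{H}$ is an accepted color image, and $N$ denotes the number of training samples. To better update the gradient, $-\log D_{\theta_D}(G_{\theta_G}(I^{H}))$ is minimized instead of $\log[1 - D_{\theta_D}(G_{\theta_G}(I^{H}))]$.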
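The two loss components can be illustrated with a small NumPy sketch. It assumes that images are arrays of equal shape and that the discriminator output is a probability in (0, 1); the function names, the averaging over samples, and the two weights `content_weight` and `adv_weight` are hypothetical, since the text states only that the generative loss is a weighted sum. The last two helpers compare the gradient magnitudes of the two adversarial formulations to show why the negative-log form gives a stronger update when the discriminator rejects the generated image.

```python
import numpy as np


def content_loss(generated, target):
    """Pixel-wise mean absolute error between generated and target images."""
    return float(np.mean(np.abs(generated - target)))


def adversarial_loss(d_fake, eps=1e-12):
    """Generator loss -log D(G(x)), averaged over the samples (assumption)."""
    return float(np.mean(-np.log(d_fake + eps)))


def generator_loss(generated, target, d_fake,
                   content_weight=1.0, adv_weight=1.0):
    """Weighted sum of both terms; both weights are hypothetical knobs."""
    return (content_weight * content_loss(generated, target)
            + adv_weight * adversarial_loss(d_fake))


def grad_neg_log(d):
    """Derivative of -log(d) with respect to d."""
    return -1.0 / d


def grad_log_one_minus(d):
    """Derivative of log(1 - d) with respect to d."""
    return -1.0 / (1.0 - d)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fake = rng.uniform(-1.0, 1.0, size=(2, 8, 8, 3))
    real = rng.uniform(-1.0, 1.0, size=(2, 8, 8, 3))
    d_fake = np.array([0.01, 0.02])  # discriminator rejects both samples
    print("generator loss:", generator_loss(fake, real, d_fake))
    print("gradient of -log(d) at 0.01:", grad_neg_log(0.01))
    print("gradient of log(1 - d) at 0.01:", grad_log_one_minus(0.01))
```

Early in training the discriminator output for generated images is close to 0, where the derivative of $\log(1 - d)$ stays near $-1$ while that of $-\log d$ grows as $-1/d$, so the second form supplies a much larger gradient to the generator.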

2.5. Preprocessing

Prior to network training, each pair of hyperspectral images and the counterpart three-band image should preferably have the same quantization range to speed up network convergence. This can be achieved by stretching each band independently to 0–255. On the other hand, owing to the imaging environment and atmospheric pollution, remote sensing images contain many abnormal points, which are often very bright or very dark. Abnormal points in the target image degrade the training. To solve this problem, a nonlinear stretching method is used. After obtaining the cumulative distribution of the image histogram, the darkest 0.1% and brightest 0.1% of the pixel range are eliminated. All the pixels within the statistical threshold range are linearly stretched to 0–255. It should be noted that this operation should be performed not on a small image block but on a large image. For example, in our experiments, each nonlinear stretch is performed on a complete image of more than 1,000,000 pixels.

When further used in the network, the input and output images need to be adjusted once more, i.e., linearly stretched from [0, 255] to [−1, 1]. The network-synthesized image is linearly stretched back to 0–255 for display. If a 16-bit output image is required, the original thresholds can be used to map pixel values back to the approximate range.
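The preprocessing steps can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the tail pixels are saturated (clipped) to the thresholds rather than discarded, and the network range of [−1, 1] is an assumption chosen to match the tanh activation of the generator output.

```python
import numpy as np


def percent_stretch(band, clip_percent=0.1):
    """Clip the darkest and brightest tails of one band, then stretch to 0-255."""
    band = band.astype(np.float64)
    low, high = np.percentile(band, [clip_percent, 100.0 - clip_percent])
    if high <= low:  # constant band: nothing to stretch
        return np.zeros_like(band)
    clipped = np.clip(band, low, high)
    return (clipped - low) / (high - low) * 255.0


def stretch_image(image, clip_percent=0.1):
    """Stretch every band of an (H, W, C) image independently."""
    bands = [percent_stretch(image[..., c], clip_percent)
             for c in range(image.shape[-1])]
    return np.stack(bands, axis=-1)


def to_network_range(image_255):
    """Map 0-255 to [-1, 1] (assumed range, matching a tanh output)."""
    return image_255 / 127.5 - 1.0


def from_network_range(image_pm1):
    """Map [-1, 1] back to 0-255 for display."""
    return (image_pm1 + 1.0) * 127.5


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic 16-bit image standing in for a full scene; as the text notes,
    # the stretch should be computed on a large image, not a small block.
    scene = rng.integers(0, 65535, size=(1024, 1024, 4))
    stretched = stretch_image(scene)
    net_input = to_network_range(stretched)
    print(stretched.min(), stretched.max(), net_input.min(), net_input.max())
```

Keeping the `low` and `high` thresholds of each band would allow the inverse mapping to the original 16-bit range mentioned in the text.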

3. Experimental Scheme

The EO-1 Hyperion data were tested to illustrate the feasibility of the proposed method. All the visible light bands were removed from the EO-1 Hyperion data to simulate a near-infrared hyperspectral image. The red, green, and blue bands of the LandSat-8 data were used as the target for natural color. Therefore, there are no overlapping spectra between input images and output images.

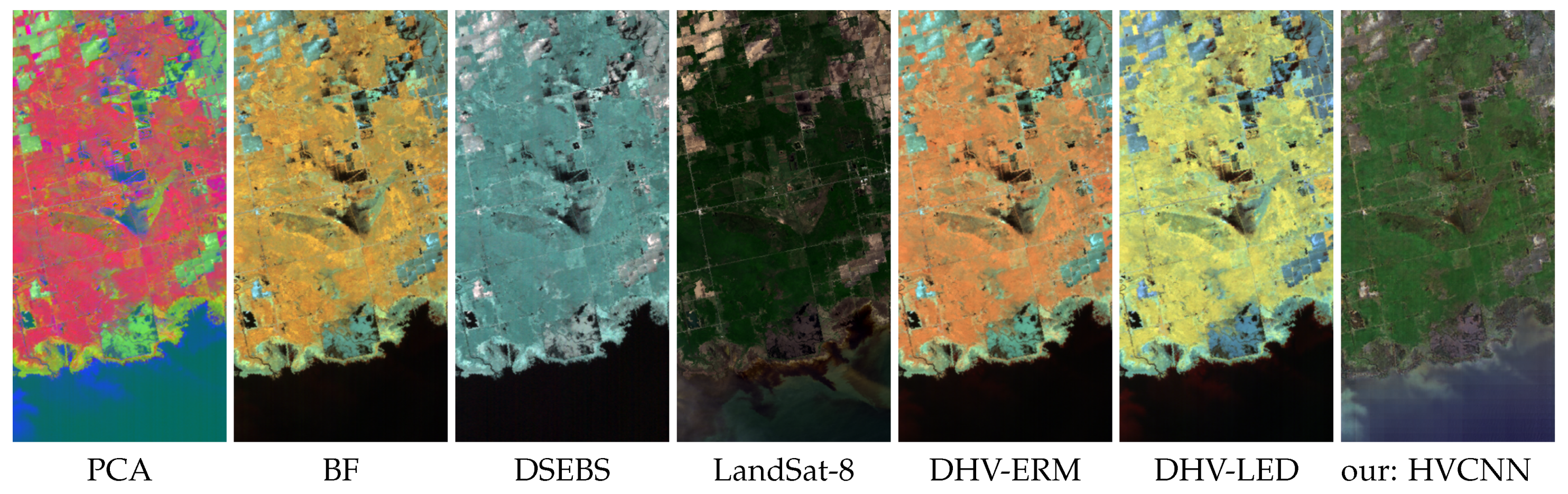

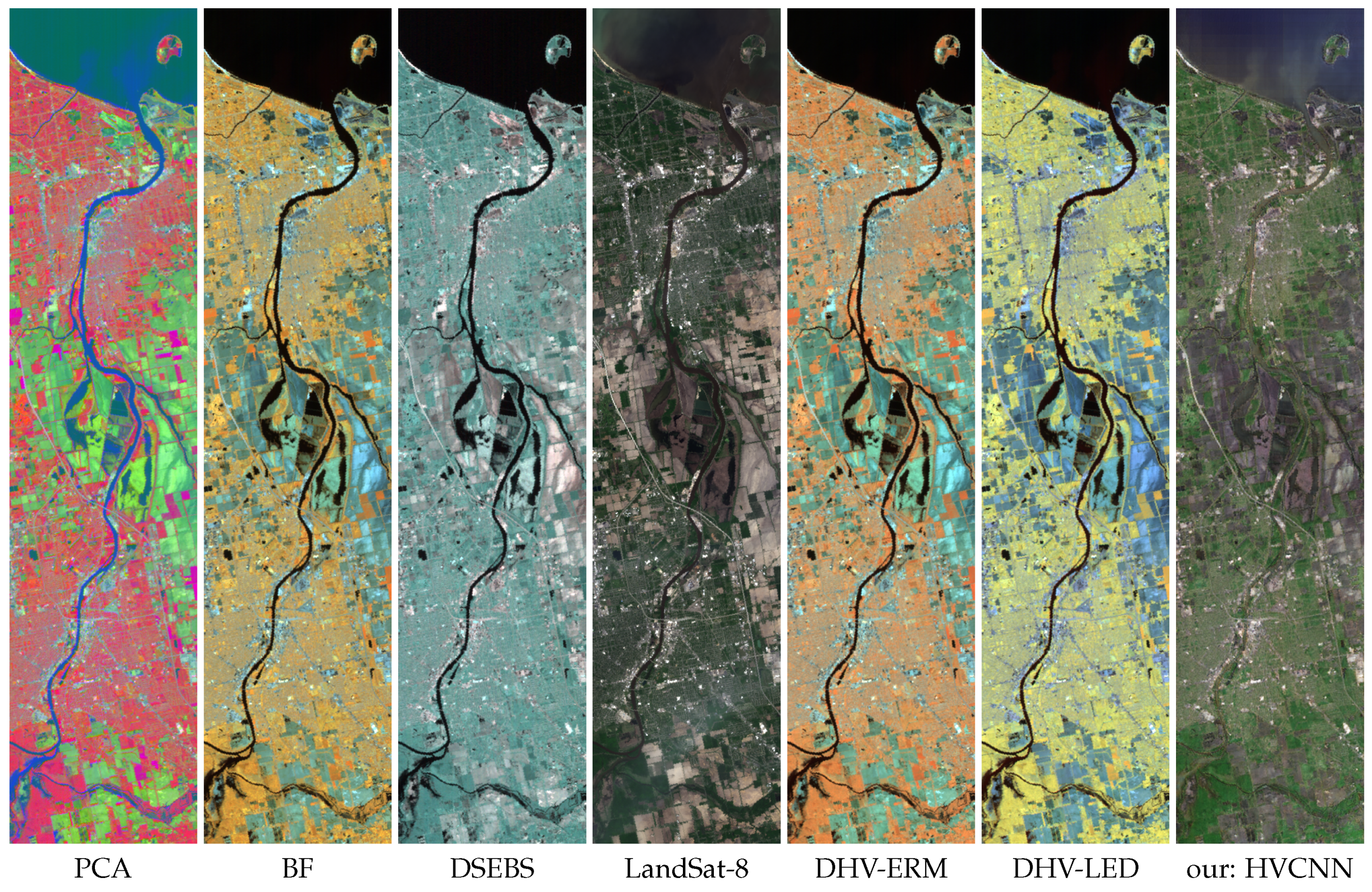

To assess the performance of the proposed visualization method, a variety of state-of-the-art methods were compared, including the classical principal component analysis method [7] (named PCA), the Bilateral Filtering based method [12] (named BF), the Dominant Set Extraction based Band Selection [2] (named DSEBS), and the Decolorization-based Hyperspectral image Visualization (DHV) framework [13]. Two decolorization models suggested in [13] along with the DHV framework, the Extended RGB2Gray Model (ERM) [15] and the Log-Euclidean metric based Decolorization (LED) [16], were compared and named DHV-ERM and DHV-LED, respectively.

Parameters of the proposed method were fixed in the experiment. The sizes of input and output image blocks for training were 128 × 128. The Adam optimizer was used with a momentum parameter $\beta_1$ of 0.5 and a learning rate of 0.0002. The weighting parameter in the loss function of the generative network was 100. The training ran for 200 epochs with the batch size set to 1.
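For reference, a single Adam update with the stated learning rate can be written out explicitly. This sketch assumes that the parameter value 0.5 refers to the first-moment decay rate (`beta1`); the values `beta2=0.999` and `eps=1e-8` are common defaults and are not given in the text, and `adam_step` is an illustrative helper.

```python
import numpy as np


def adam_step(param, grad, m, v, step,
              lr=0.0002, beta1=0.5, beta2=0.999, eps=1e-8):
    """One Adam update; `step` counts from 1.

    beta1=0.5 is assumed to be the parameter quoted in the text;
    beta2 and eps are assumed defaults.
    """
    m = beta1 * m + (1.0 - beta1) * grad          # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad ** 2     # second-moment estimate
    m_hat = m / (1.0 - beta1 ** step)             # bias correction
    v_hat = v / (1.0 - beta2 ** step)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v


if __name__ == "__main__":
    # Toy problem: minimize (x - 3)^2 starting from x = 0.
    x, m, v = 0.0, 0.0, 0.0
    for step in range(1, 1001):
        grad = 2.0 * (x - 3.0)
        x, m, v = adam_step(x, grad, m, v, step)
    print("x after 1000 steps:", x)
```

With these settings each step moves a parameter by roughly the learning rate, so the small value of 0.0002 keeps the alternating generator and discriminator updates gradual.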

In our algorithm, the 0.1% stretch, rather than a plain linear stretch, mapped the image nonlinearly to the range [0, 255]. To give a fair comparison, the 0.1% stretch was used for all algorithms. In other words, all the hyperspectral images were 0.1% stretched before being fed into the algorithms. This benefited all the competing algorithms by increasing their contrast levels.

The quality of the synthesized RGB images is assessed with several metrics. Structural SIMilarity (SSIM) measures the structural similarity. Correlation coefficient (CC) and peak signal-to-noise ratio (PSNR) measure the radiometric discrepancy. Spectral angle mapper (SAM) [34], relative dimensionless global error in synthesis (ERGAS) [35], and relative average spectral error (RASE) [36] measure the color consistency. Q4 [37] measures the general similarity. The three-band images from the LandSat-8 red, green, and blue bands are set as the reference. The ideal results are 1 for SSIM, CC, and Q4 and 0 for SAM, ERGAS, and RASE.
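Three of the simpler metrics can be sketched in NumPy using their standard definitions. The function names are illustrative, the peak value of 255 assumes 8-bit images, and the exact variants used in the experiments (for example, per-band versus global averaging) are not specified in the text.

```python
import numpy as np


def psnr(result, reference, peak=255.0):
    """Peak signal-to-noise ratio in dB (peak=255 assumes 8-bit images)."""
    diff = result.astype(np.float64) - reference.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))


def correlation_coefficient(result, reference):
    """Pearson correlation coefficient over all pixels and bands."""
    a = result.astype(np.float64).ravel()
    b = reference.astype(np.float64).ravel()
    a = a - a.mean()
    b = b - b.mean()
    return float(np.sum(a * b) / np.sqrt(np.sum(a * a) * np.sum(b * b)))


def spectral_angle(result, reference, eps=1e-12):
    """Mean spectral angle (radians) between per-pixel vectors of (H, W, C) images."""
    a = result.astype(np.float64)
    b = reference.astype(np.float64)
    dot = np.sum(a * b, axis=-1)
    norms = np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1)
    cosine = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.mean(np.arccos(cosine)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    reference = rng.integers(1, 256, size=(32, 32, 3)).astype(np.float64)
    noisy = np.clip(reference + rng.normal(0.0, 5.0, reference.shape), 0, 255)
    print("PSNR:", psnr(noisy, reference))
    print("CC:", correlation_coefficient(noisy, reference))
    print("SAM:", spectral_angle(noisy, reference))
```

Because the spectral angle ignores the length of each pixel vector, it responds to hue shifts rather than brightness changes, which is why it serves as a color-consistency measure.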

6. Extended Experiment: Visualization of Hyperspectral Images Covering Visible Light Spectra

The purpose of this article is to design a visualization method for near-infrared hyperspectral images so that they can be visually recognized by the human eye. However, the proposed method need not be limited to near-infrared images. The supervised-learning-based neural network can also deal with hyperspectral images containing visible light. Moreover, the algorithms involved in the comparisons are not specifically designed for near-infrared hyperspectral image visualization, so the above-mentioned comparisons are not strictly fair. We therefore want to know whether the new method remains as superior as in the earlier experiments on a traditional hyperspectral visualization task that covers the visible light spectrum. To this end, an additional experiment on Hyperion data was conducted in the traditional setting, i.e., visualizing hyperspectral images that include the visible light spectrum.

To illustrate the performance when the spectral range of the hyperspectral sensor completely covers that of the reference multispectral sensor, training was repeated with all the data and parameters unchanged except that the input images were extended to the visible light spectrum. Of the 242 bands of the Hyperion data, 145 spectral bands (10–55, 82–97, 102–119, 134–164, 187–220) were used, while the others were removed because they are uncalibrated or noisy. As correlation coefficients are in line with the PSNR evaluations, mutual information (MI) was calculated to measure the similarity between the overall structures.
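The band selection and the MI measure can be sketched as follows. The band ranges are taken from the text and are treated as 1-based and inclusive; the histogram-based MI estimator, the bin count of 64, and the function names are assumptions, since the text does not state how MI was computed.

```python
import numpy as np

# Inclusive, 1-based Hyperion band ranges quoted in the text.
HYPERION_BAND_RANGES = [(10, 55), (82, 97), (102, 119), (134, 164), (187, 220)]


def selected_bands(ranges=HYPERION_BAND_RANGES):
    """Expand the inclusive ranges into a flat list of band numbers."""
    return [b for start, end in ranges for b in range(start, end + 1)]


def mutual_information(image_a, image_b, bins=64):
    """Histogram-based mutual information (bits) of two single-band images.

    The bin count is a hypothetical choice.
    """
    joint, _, _ = np.histogram2d(image_a.ravel(), image_b.ravel(), bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of image_a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)   # marginal of image_b, shape (1, bins)
    nonzero = pxy > 0
    ratio = pxy[nonzero] / (px @ py)[nonzero]
    return float(np.sum(pxy[nonzero] * np.log2(ratio)))


if __name__ == "__main__":
    print("number of selected bands:", len(selected_bands()))
    rng = np.random.default_rng(0)
    a = rng.random((128, 128))
    b = rng.random((128, 128))
    print("MI(a, a):", mutual_information(a, a))
    print("MI(a, b):", mutual_information(a, b))
```

To index a zero-based array of 242 bands with this list, each band number would be reduced by one.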

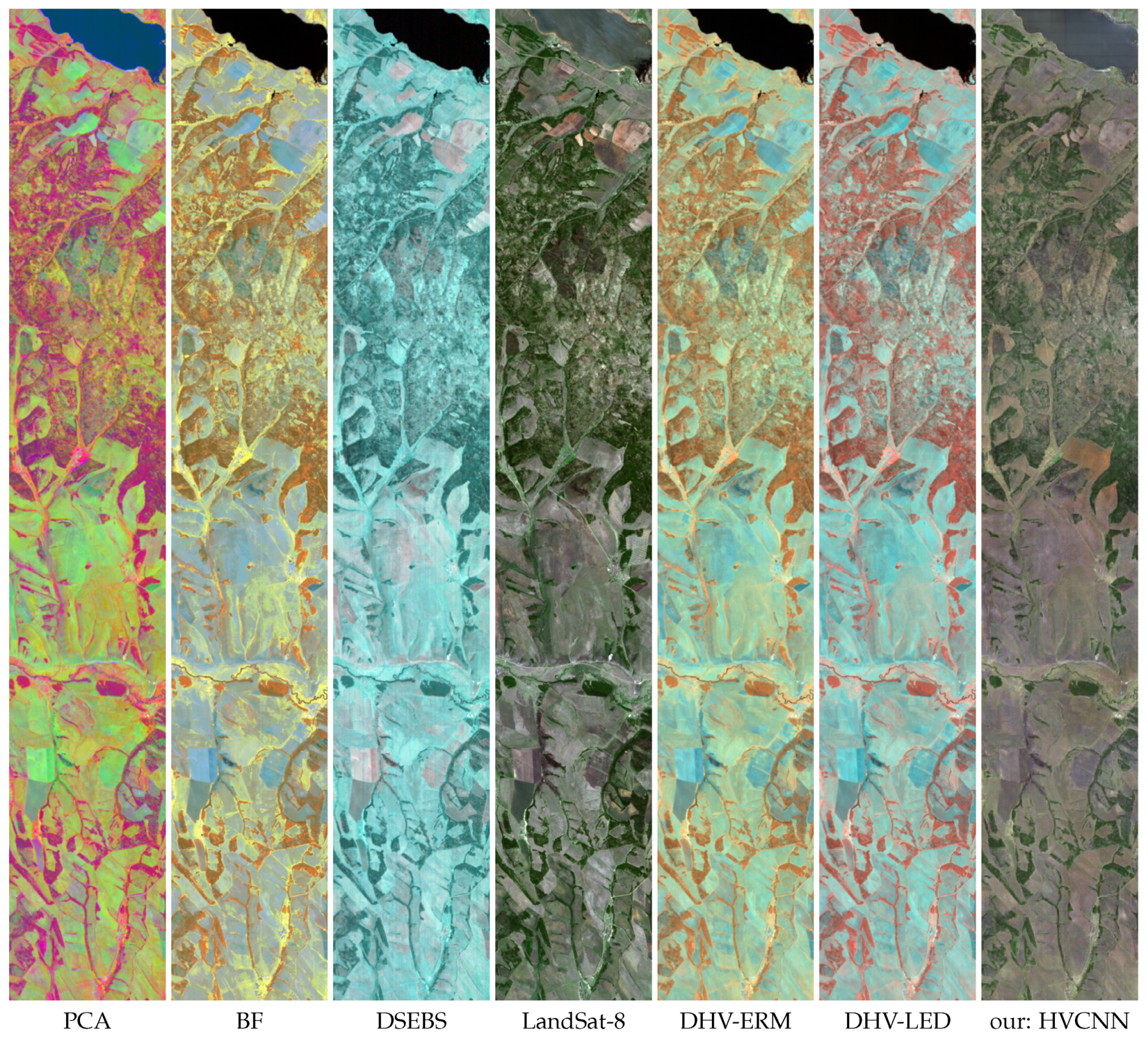

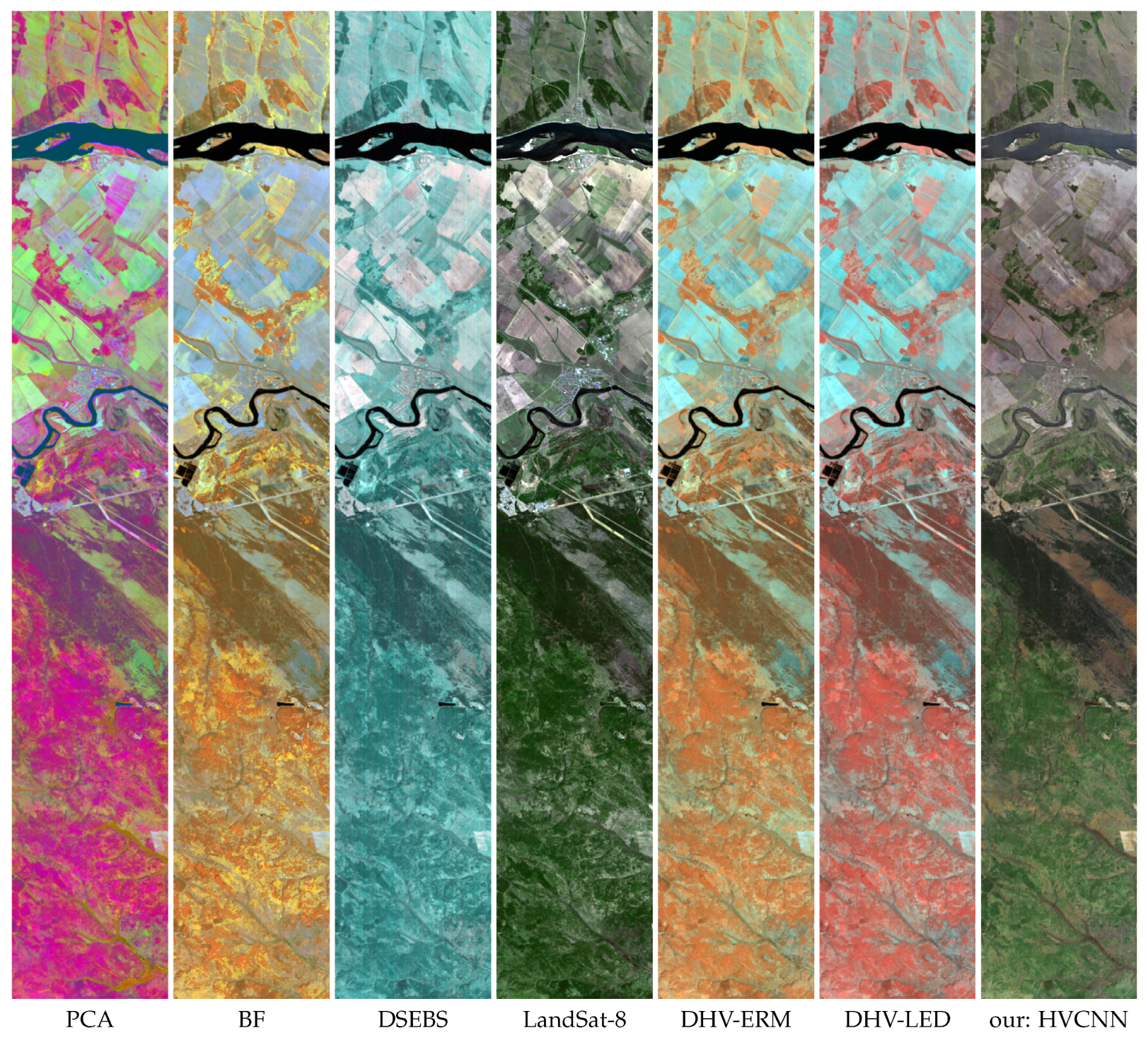

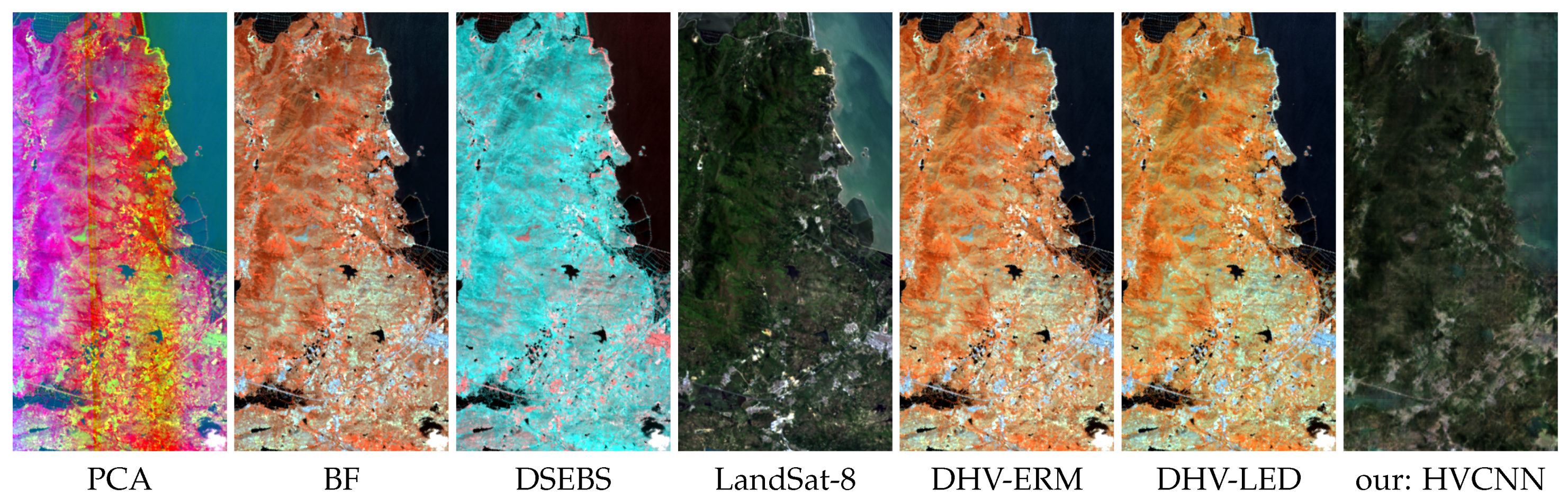

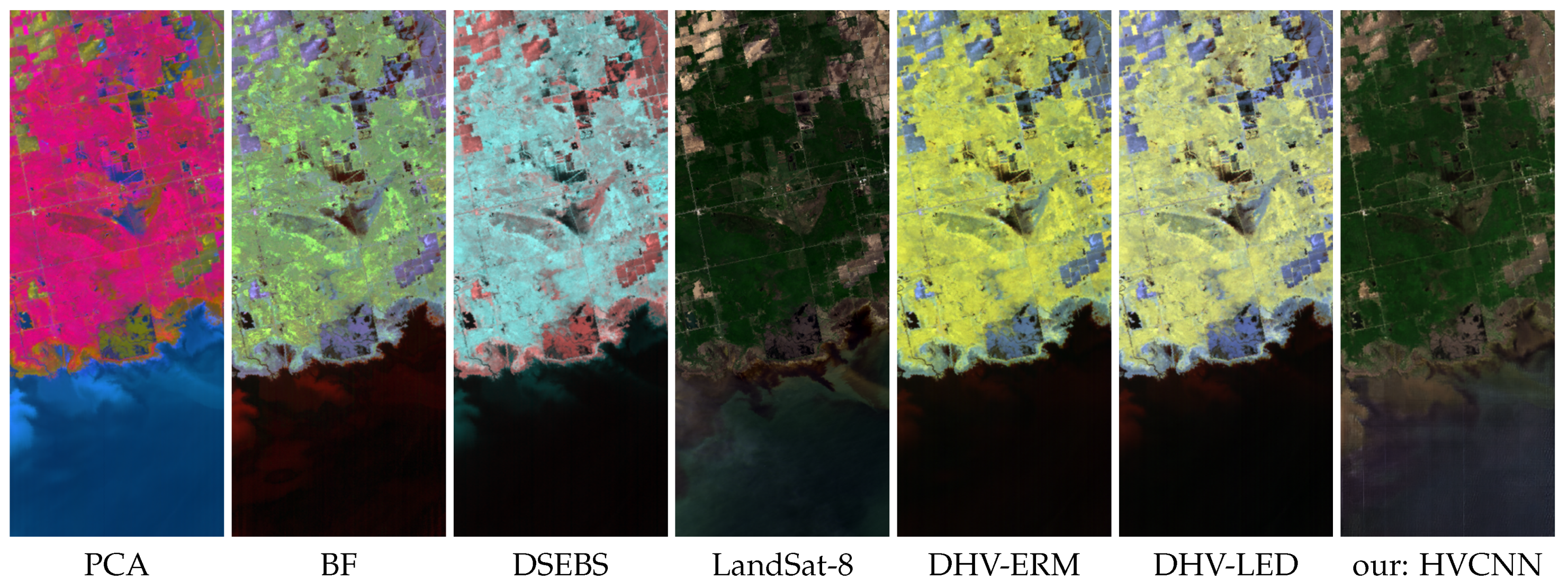

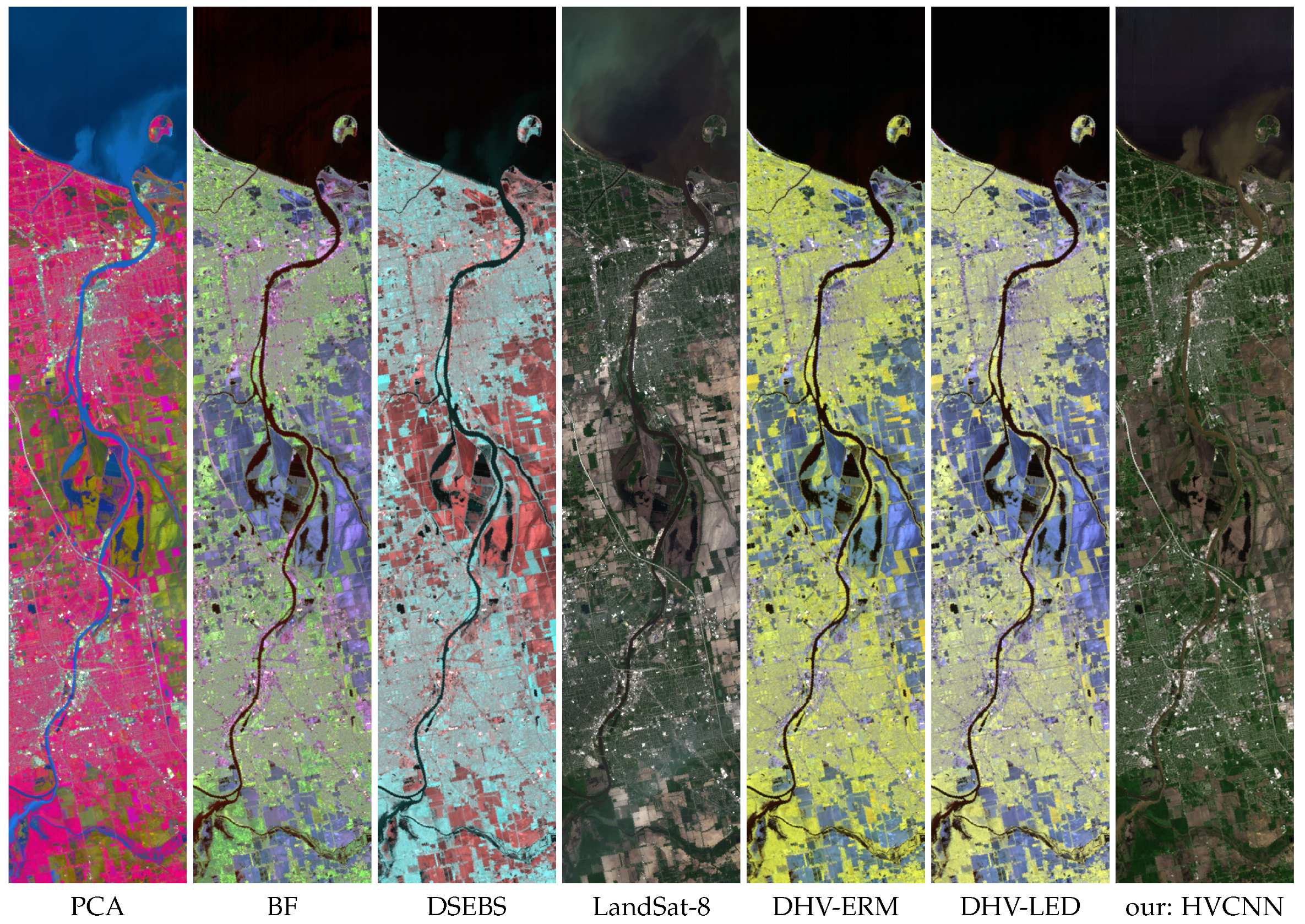

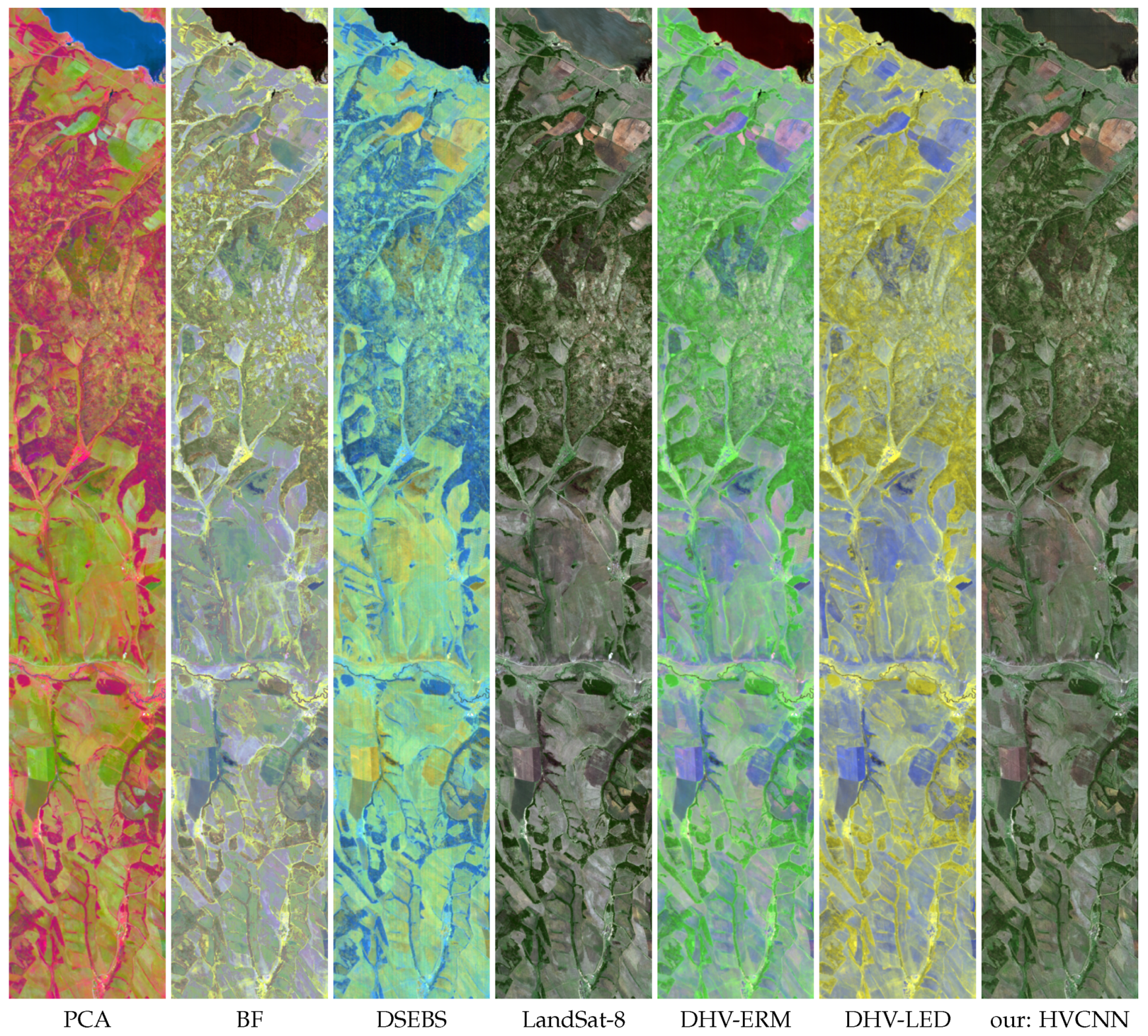

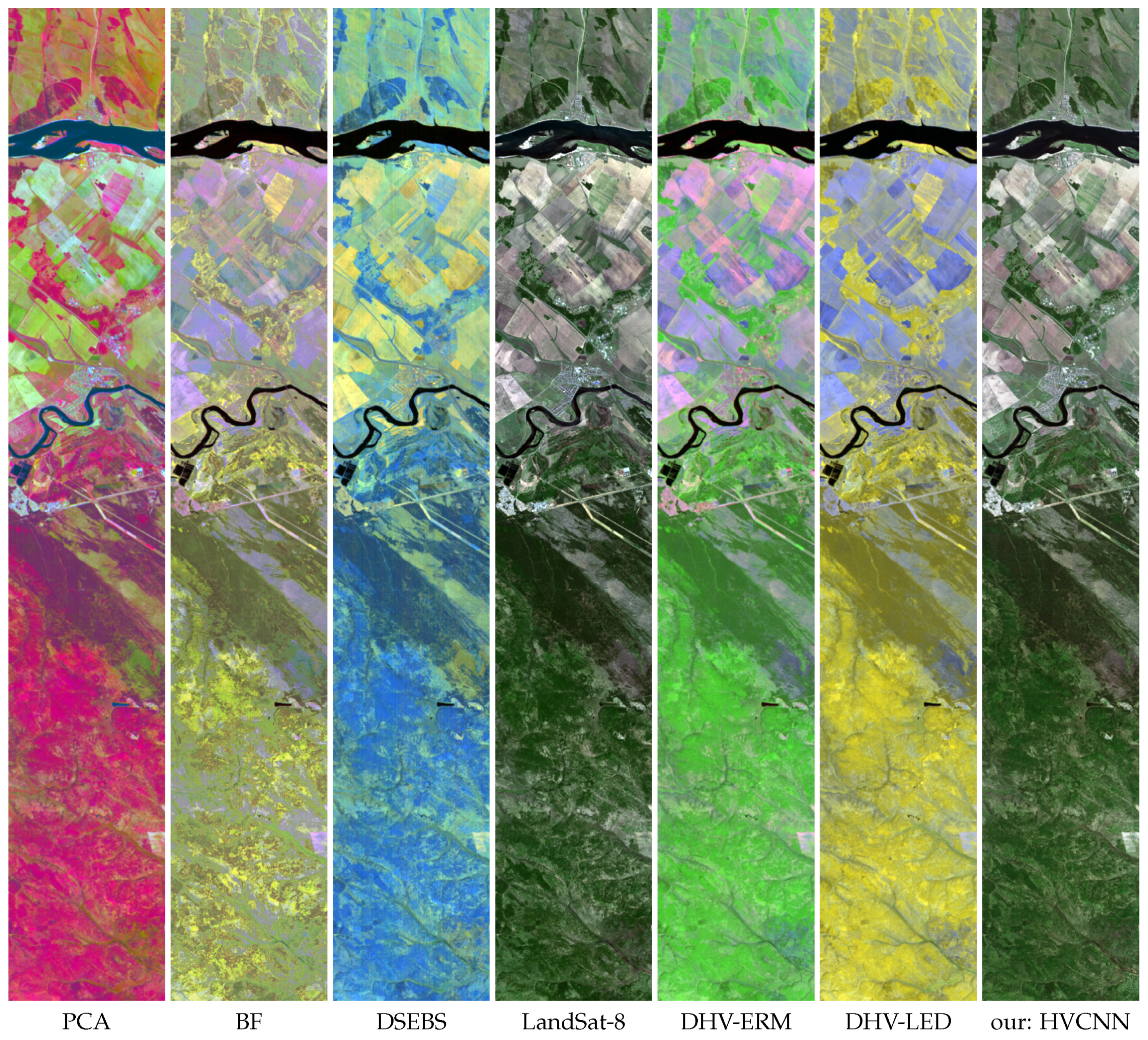

The visualization results of the full hyperspectral images are presented in Figure 9, Figure 10, Figure 11 and Figure 12. From a one-by-one comparison with the HVCNN results in Figure 3, Figure 4, Figure 5 and Figure 6, it is easily concluded that the fidelity of the synthesized red bands is greatly improved, making the overall color closer to the target images. At the same time, the edges and contours are clearer. The urban area in Figure 4 and Figure 10 strongly supports this conclusion. As for the competing algorithms, the colors are slightly improved and the details are greatly improved, but the images still cannot be directly understood.

As far as the evaluation values in Table 6, Table 7, Table 8 and Table 9 are concerned, both data fidelity and color consistency are improved. For images 3 and 4, the PSNR values have reached 25 dB, and the Q4 values are over 0.9, which shows that the HVCNN results can be understood as normal RGB images. Competing algorithms fail to fuse the expected colors, but they have good structure and detail according to SSIM and MI.