Abstract

The development of low-cost miniaturized thermal cameras has expanded the use of remotely sensed surface temperature and promoted advances in applications involving proximal and aerial data acquisition. However, deriving accurate temperature readings from these cameras is often challenging because the sensitivity of the sensor changes with its internal temperature. Moreover, the photogrammetric processing required to produce orthomosaics from aerial images can also be problematic and introduce errors into the temperature readings. In this study, we assessed the performance of the FLIR Lepton 3.5 camera in both proximal and aerial conditions based on precision and accuracy indices derived from reference temperature measurements. The aerial analysis was conducted at three flight altitudes replicated throughout the day, exploring the effects of the distance between the camera and the target and of the blending mode used to create orthomosaics. During the tests, the camera delivered results within the accuracy reported by the manufacturer when using the factory calibration, with a root mean square error (RMSE) of 1.08 °C under proximal conditions and ≤3.18 °C during aerial missions. Across flight altitudes, the overall precision remained stable (R² = 0.94–0.96), whereas the accuracy decreased towards higher altitudes (RMSE = 2.63–3.18 °C) due to atmospheric attenuation, which the factory calibration does not account for. The blending modes tested also influenced the final accuracy, with the best results obtained with the average (RMSE = 3.14 °C) and disabled (RMSE = 3.08 °C) modes. Furthermore, empirical line calibration models using ground reference targets were tested, reducing the errors in temperature measurements by up to 1.83 °C, with a final accuracy better than 2 °C.
Other important results include a simplified co-registering method developed to overcome alignment issues encountered during orthomosaic creation using non-geotagged thermal images, and a set of insights and recommendations to reduce errors when deriving temperature readings from aerial thermal imaging.

1. Introduction

Monitoring surface temperature is important in many fields of science and technology, with applications ranging from the simple inspection of electronic components to climate change analysis using sea surface temperature (SST) data. Most of these applications rely on remotely sensed measurements, in which temperature is derived from surface radiation emitted in the 8–14 µm wavelength range and registered by thermal infrared (TIR) cameras [1,2,3]. Since the 1970s, surface temperature has been monitored with worldwide coverage using TIR data retrieved from satellite platforms, which are routinely used in regional-scale studies such as hydrological modeling [3,4,5], forest fire detection [6,7], and environmental monitoring [8]. Within the last 20 years, the use of TIR cameras has expanded to proximal and aerial platforms, reflecting technological advances in the development of cost-effective miniaturized thermal sensors [9,10,11]. Some of these cameras are low-power and lightweight enough to fit onto unmanned aerial vehicles (UAVs), with potential use in small-scale remote sensing where high spatial and temporal resolution is required [11,12]. Thermal imagery from UAVs has enabled significant advances in agricultural applications, being used for plant phenotyping [13,14,15], crop water stress detection [16,17,18,19,20,21], evapotranspiration estimation [22], and plant disease detection [23].

Although the miniaturization of thermal cameras was fundamental to expanding the use of TIR data, this process ultimately affected the sensitivity and accuracy of these sensors [24]. To reduce size and weight, miniaturized thermal cameras do not include an internal cooling system and are therefore classified as uncooled thermal cameras. Without a cooling mechanism, the internal temperature of the camera is susceptible to change during operation, which alters the sensitivity of the microbolometers that compose the sensor (focal plane array, FPA) [25]. As a result, the temperature readings change with the FPA temperature [26,27], introducing errors in temperature measurements. This issue becomes more evident during UAV campaigns, in which the camera may experience abrupt changes in internal temperature due to wind and temperature drift during flight [28]. To minimize measurement bias caused by fluctuations in the FPA temperature, thermal cameras usually perform a non-uniformity correction (NUC) after a predefined time interval or temperature change by taking an image with the shutter closed [24,25]. Assuming that the temperature is uniform across the shutter, the offset of each microbolometer is adjusted so that subsequent images have a harmonized and accurate response signal [25,29]. Because NUC is based on the assumption that the shutter temperature is equivalent to that of the rest of the camera interior, it has been argued that it may not be valid for UAV imagery, since the shutter mechanism is more susceptible to wind-induced temperature drift than other parts inside the camera [28]. Moreover, it is not clear whether the frequency at which NUC is applied is sufficient to minimize sensor drift, especially for low-cost cameras that often do not allow the NUC rate to be adjusted.
For this reason, it is imperative that uncooled thermal cameras undergo a proper warm-up period before data acquisition so that the FPA temperature can stabilize, even when NUC is enabled [11,27].

Other sources of error that can affect the performance of thermal cameras are target emissivity, the distance between the sensor and the target object, and atmospheric conditions. While emissivity can be the main source of error when deriving temperature from TIR data [9], it can easily be adjusted using reference values that are well documented in the literature. On the other hand, the atmospheric attenuation of TIR radiation that affects airborne and orbital data demands more resources and can be more complex to correct [30]. Atmospheric attenuation can cause large errors in temperature measurements [31,32] and is mainly affected by meteorological variables, such as air temperature and humidity, along with the distance between the camera and the target object [30]. To correct temperature measurements, the amount of radiation attenuated by the atmosphere is estimated from radiative transfer models, using meteorological data and the distance between the sensor and the surface as input variables [33,34]. Although radiative transfer models are efficient, the required input data are sometimes unavailable, and the method is often considered time-consuming [30]. Therefore, straightforward calibration methods that account for more than one source of error are needed to achieve accurate measurements, especially when deriving temperature from UAV thermal surveys.

Another important aspect of TIR UAV imagery is the image processing used to generate orthomosaics. Because such thermal cameras produce images with low resolution and poor contrast, detecting common features among overlapping images during the alignment process becomes challenging, resulting in defective orthomosaics [22,35,36]. To overcome this issue, some authors propose a co-registration process based on camera positions obtained from images captured simultaneously by a second, higher-resolution camera (usually a red green blue (RGB) or multispectral camera) [36,37,38]. In this case, the dataset from the second camera is processed first and the camera positions are then transferred to the corresponding thermal dataset, which significantly improves alignment performance. In most cases, however, the thermal sensor is not coupled with a second camera or simultaneous triggering is not possible, demanding alternative solutions. Moreover, the blending mode used to generate the orthomosaic can also influence the final results [13,22]. To assign pixel values, overlapping images with distinct viewing angles can be combined in different ways, depending on the blending configuration [39]. As a result, temperature values from the same target might change according to the blending mode. Although some studies have discussed the effects of the blending configuration on thermal data [13,22,28,40], how it affects the overall precision and accuracy of temperature readings has not yet been investigated.

Within this context, we investigated the performance of a low-cost uncooled thermal camera based on the precision and accuracy of its temperature readings. We first assessed a proximal scenario, which aimed to analyze the Lepton camera under ideal conditions using the factory calibration. We then investigated the camera performance under aerial conditions at three flight altitudes flown at different times of day. To overcome alignment issues, a co-registering method was proposed, and the resulting orthomosaics were evaluated according to the blending modes tested. Finally, we tested calibration models based on ground reference temperatures, aiming to reduce the residuals of temperature readings.

2. Materials and Methods

The study was designed to analyze the performance of the low-cost radiometric thermal camera FLIR Lepton 3.5 (FLIR Systems, Inc., Wilsonville, OR, USA) in proximal and aerial conditions. As an original equipment manufacturer (OEM) sensor, the FLIR Lepton 3.5 is designed to be built into a variety of electronic products (e.g., smartphones) and requires additional hardware and programming to work properly. The camera used in this study is based on the open-source project “DIY-Thermocam V2” developed by Ritter (2017), in which the Lepton module is connected to a Teensy 3.6 microcontroller (PJRC, Sherwood, OR, USA) and assembled on a printed circuit board along with a lithium-polymer battery (3.7 V, 2000 mAh) and a touchscreen liquid crystal display (LCD). The Lepton sensor retails for around $200 (USD), and the final cost of building the prototype camera (hereafter referred to as the Lepton camera) was about $400 (USD), at least three times less than commercial solutions.

The Lepton camera features an uncooled VOx microbolometer FPA with a resolution of 160 × 120 pixels and a spectral range of 8–14 µm. Temperature readings are extracted from raw 14-bit images with a resolution of 0.05 °C. During the tests, NUC was enabled with the default configuration, being performed every three minutes or whenever the internal temperature changed by more than 1.5 °C [41].
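
As a rough illustration of the default NUC schedule described above, the sketch below flags when a NUC would be triggered for a logged series of FPA temperatures. It is a simplified model under our own assumptions (a NUC at power-up, the temperature change measured relative to the previous NUC, and the hypothetical function name `nuc_events`), not the Lepton firmware logic.

```python
def nuc_events(samples, interval_s=180.0, delta_c=1.5):
    """Return the times at which a NUC would fire under a simplified rule.

    samples: list of (time_s, fpa_temp_c) tuples sorted by time.
    Assumption: a NUC also happens at power-up (first sample); later ones fire
    when interval_s seconds have elapsed since the previous NUC or when the FPA
    temperature has changed by more than delta_c since the previous NUC.
    """
    if not samples:
        return []
    events = []
    last_t, last_temp = samples[0]  # reference state after the power-up NUC
    for t, temp in samples[1:]:
        if t - last_t >= interval_s or abs(temp - last_temp) > delta_c:
            events.append(t)
            last_t, last_temp = t, temp  # a new NUC resets both references
    return events


# Illustrative (made-up) FPA log: warm-up drift followed by a stable phase.
log = [(0, 30.0), (60, 30.5), (120, 32.1), (200, 32.3), (300, 32.4), (320, 32.5)]
print(nuc_events(log))  # [120, 300]
```

In this toy log the first NUC is triggered by the 2.1 °C drift and the second by the three-minute timer.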

Some other sensors were also used during the study to provide temperature reference measurements, enabling comparisons and further calibration procedures. Table 1 details the specifications of the sensors used to acquire temperature data.

Table 1.

Detailed specifications of temperature sensors used during the study [41,42,43,44].

2.1. Proximal Analysis

To evaluate the precision and accuracy of temperature measurements obtained with the Lepton camera, an experiment under controlled conditions was set up using liquid water as a blackbody-like target [3]. A FLIR E5 handheld camera (FLIR Systems, Inc., Wilsonville, OR, USA) was also used for comparison purposes; it was mounted on a tripod along with the Lepton camera, with both facing a polystyrene box perpendicularly at a distance of 1 m. The polystyrene box was filled with water, and a thermocouple (Testo 926, Testo SE & Co. KGaA, Lenzkirch, Germany) was attached to the side of the box, with the probe positioned parallel to the water surface at a depth of 2 mm to record reference temperature data.

Data collection started after a 15-min stabilization time [28], with both cameras triggered simultaneously to capture a single image; this process was repeated after each increase in target temperature of approximately 1 °C over a range from 9.1 to 52.4 °C. Each increment in target temperature was achieved by adding hot water to the polystyrene box, taking special care to homogenize the water, and then removing the same amount of water that had been added to maintain a constant level. Reference temperature measurements were taken from the thermocouple readings, extracted according to the registration time of each image file. The experiment was repeated three times (n = 102), and the laboratory temperature was kept stable at 22 °C.
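
Pairing each image with the thermocouple reading closest to its registration time can be sketched as follows. The function name `nearest_reading`, the nearest-neighbour rule, and the example log are our assumptions; the text only states that readings were extracted according to the registration time of each image file.

```python
from bisect import bisect_left
from datetime import datetime


def nearest_reading(image_time, log_times, log_values):
    """Return the logged value whose timestamp is closest to image_time.

    log_times must be sorted ascending and aligned index-by-index with
    log_values. Ties go to the earlier reading.
    """
    if not log_times:
        raise ValueError("empty reference log")
    i = bisect_left(log_times, image_time)
    if i == 0:
        return log_values[0]
    if i == len(log_times):
        return log_values[-1]
    # Compare the distance to the neighbour before and after image_time.
    if image_time - log_times[i - 1] <= log_times[i] - image_time:
        return log_values[i - 1]
    return log_values[i]


# Illustrative thermocouple log (made-up values, one reading every 5 s).
times = [datetime(2024, 1, 1, 10, 0, s) for s in (0, 5, 10)]
temps = [25.0, 25.2, 25.4]
print(nearest_reading(datetime(2024, 1, 1, 10, 0, 6), times, temps))  # 25.2
```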

Temperature data from the FLIR E5 were extracted using FLIR Tools software (FLIR Systems, Inc., Wilsonville, OR, USA), configured to adjust temperature data based on an emissivity of 0.99 [45,46], relative humidity of 80%, air temperature of 22 °C, and target distance of 1 m. Average temperature values were obtained from each image using a box measurement tool covering pixels within the central portion of the polystyrene box. For images captured by the Lepton camera, temperature data were extracted using the open-source software ThermoVision (v. 1.10.0, Johann Henkel, Berlin, Germany). The software converts raw digital number (DN) values into temperature data based on a standard equation from the manufacturer (Equation (1)). To obtain the average temperature from each image, the same process used for the FLIR E5 data was adopted, extracting temperature values from pixels located in the central portion of the reference target. It is worth mentioning that the bounding boxes used to extract average temperature values from each camera had equivalent dimensions, covering approximately 3200 pixels, and remained in the same position throughout the analysis, since both cameras were attached to a fixed support:

$$\text{Temperature}\ (^{\circ}\mathrm{C}) = 0.01\,\mathrm{DN} - 273.15 \qquad (1)$$
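
Equation (1) can be applied directly in code. This minimal sketch (function names are hypothetical) converts DN values to °C and averages a measurement box. The 0.01 factor means one DN step corresponds to 0.01 K, and because Equation (1) is linear, converting the mean DN gives the same result as averaging per-pixel temperatures.

```python
from statistics import fmean


def dn_to_celsius(dn: float) -> float:
    """Equation (1): Temperature (°C) = 0.01 * DN - 273.15."""
    return 0.01 * dn - 273.15


def celsius_to_dn(temp_c: float) -> float:
    """Inverse of Equation (1), useful for sanity checks."""
    return (temp_c + 273.15) / 0.01


def mean_box_temperature(dn_values) -> float:
    """Average the DN values inside a measurement box, then convert to °C."""
    return dn_to_celsius(fmean(dn_values))


print(round(dn_to_celsius(29815), 2))  # 25.0
print(round(mean_box_temperature([29815, 29915]), 2))  # 25.5
```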

Temperature values derived from each camera were then combined with the reference measurements in linear regression models, and the residuals were calculated to assess the precision and accuracy of each sensor individually.
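
A minimal ordinary least-squares sketch of this regression step is shown below, with reference temperature as the predictor and camera temperature as the response. That axis choice is our assumption; R² is the same either way. The `fit_line` name and the toy data are illustrative only.

```python
from statistics import fmean


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r_squared). For a simple linear regression,
    R^2 equals the squared Pearson correlation between x and y.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    mx, my = fmean(x), fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must both vary")
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept, sxy ** 2 / (sxx * syy)


# Toy pairs in °C: reference (x) vs camera (y).
reference = [20.0, 25.0, 30.0, 35.0]
camera = [20.0, 30.0, 25.0, 35.0]
slope, intercept, r2 = fit_line(reference, camera)
residuals = [c - r for c, r in zip(camera, reference)]  # camera minus reference
print(slope, intercept, r2)  # 0.8 5.5 0.64
```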

2.2. Aerial Analysis

To explore the spatial and temporal variation of surface temperature, thermal data are mostly used in the form of orthomosaics, which are obtained through photogrammetric techniques applied to aerial imagery. In comparison to proximal approaches, aerial thermal imaging is more complex and tends to have lower precision and accuracy due to the effects of environmental conditions and of factors linked to dynamic data acquisition [24,28,40,47,48]. In addition, the image processing leading up to orthomosaic generation can also influence the results and must be taken into account. To address these gaps, aerial data acquisition was conducted in distinct scenarios, covering different weather conditions and flight altitudes, followed by tests involving orthomosaic generation, calibration strategies, and their effect on the overall precision and accuracy of the Lepton camera.

2.2.1. Data Acquisition

UAV missions were conducted at the Biosystems Engineering Department of the Luiz de Queiroz College of Agriculture, University of São Paulo (ESALQ-USP), Piracicaba, Brazil (Figure 1a). The Lepton camera was attached to a DJI Phantom 4 Advanced quadcopter (SZ DJI Technology Co., Shenzhen, China) using a custom-made 3-D-printed fixed support, adjusted to collect near-nadir images. Three flight plans were used for image acquisition, one for each flight altitude tested: 35, 65, and 100 m. Prior to each mission, the camera was turned on at least 15 min before the flight to ensure that camera measurements were stable [28]. Flight speed was limited to 5 m/s and the image acquisition rate varied between 1.5 and 2 Hz among flights, resulting in forward and side overlaps of at least 80% and 70%, respectively.
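
The link between flight altitude, speed, capture rate, and forward overlap can be checked with simple nadir geometry over flat ground. The sketch assumes a 57° field of view along the flight direction (the nominal Lepton 3.5 horizontal FOV, not stated in this section) and that the 160-pixel side spans it. Both depend on the datasheet and on how the camera was mounted, so treat them as assumptions.

```python
import math

# Assumptions: 57° FOV along track and the 160-pixel image side spanning it.
FOV_DEG = 57.0
PIXELS_ACROSS_FOV = 160


def footprint_m(altitude_m, fov_deg=FOV_DEG):
    """Ground length covered by one image side, nadir view over flat terrain."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def gsd_m(altitude_m, fov_deg=FOV_DEG, pixels=PIXELS_ACROSS_FOV):
    """Approximate ground sample distance (m/pixel)."""
    return footprint_m(altitude_m, fov_deg) / pixels


def forward_overlap(altitude_m, speed_m_s, rate_hz, fov_deg=FOV_DEG):
    """Fraction of the along-track footprint shared by consecutive images."""
    spacing = speed_m_s / rate_hz  # ground distance travelled between exposures
    return 1.0 - spacing / footprint_m(altitude_m, fov_deg)


for h in (35, 65, 100):
    print(h, round(gsd_m(h), 3), round(forward_overlap(h, 5.0, 1.5), 3))
```

Under these assumptions, the GSD at 35 m is roughly 0.24 m and the forward overlap at 5 m/s and 1.5 Hz is roughly 91%, which is consistent with the ≥80% reported. The GSD values in Table 2 remain the reference.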

Figure 1.

Data acquisition scheme for aerial analysis. (a) Distribution and types of reference targets and ground control points; (b) Thermal image snapshot; (c) UAV platform with the Lepton camera onboard; (d) Lepton camera; (e) FLIR Lepton 3.5 OEM sensor; (f) Ground reference temperature platform with the MLX90614 sensor.

To capture a wider range of environmental conditions and target temperatures, the missions were performed in the early morning, close to solar noon, and at the end of the afternoon, with the three flight altitudes flown consecutively in each period and under cloudless conditions during all flights. More details regarding each mission and the flight conditions are listed in Table 2.

Table 2.

Flight mission details. AGL = Above Ground Level; GSD = Ground Sample Distance; Tair = Air temperature; RH = Relative humidity; SR = Shortwave Radiation.

Reference measurements were obtained from seven targets distributed across the study area (Figure 1a). These targets were selected to cover a wide range of temperatures and different types of surfaces and materials. Additionally, we only used targets covering at least 12 m² and with enough contrast to be distinguishable in the thermal images. The temperature of the targets was monitored during each flight using MLX90614 infrared sensors (Melexis, Ypres, Belgium; hereafter referred to as the reference sensor) (Figure 1f). Each reference sensor was installed with a nadir viewing angle 0.7 m above the target and connected to an Arduino microcontroller, recording temperature data at 5-s intervals. To ensure that reference temperature measurements were adjusted to an equivalent emissivity, the reference sensors were submitted to the protocol described in Section 2.1, in which an empirical line calibration was developed for each device using water as a blackbody-like target [3] (R²val = 0.999, RMSE = 0.28 °C). Even though emissivity may vary among the selected targets, we decided to use a standard value, since determining the emissivity of the targets in the field was not feasible and using emissivity values reported in the literature would add an extra layer of uncertainty to the analysis.

2.2.2. Flight Altitude Analysis

The performance of the Lepton camera in aerial conditions was first assessed based on the flight altitudes tested during the study. Although the accuracy of temperature readings is expected to decrease with increasing flight altitude, it is important to quantify the magnitude of these deviations and evaluate the overall precision to ensure that further calibration procedures are feasible.

Individual images, rather than orthomosaics, were used in this analysis so that temperature data were obtained without any processing that could alter the original values. This choice also considerably increased the number of samples available for statistical analysis, improving the robustness of the results. For each mission, six images per target were manually selected from the database: three images from each flight direction, with the target located in the central portion of the image to avoid the vignetting effect [22,28,40].

The selected images were first imported into the open-source software Thermal Data Viewer [49], which batch-converts the proprietary files into a spreadsheet format (.CSV), and then converted into 14-bit TIFF images with DN values using the R programming language [50]. The TIFF images were then processed individually in ArcGIS software (v. 10.2.2, ESRI Ltd., Redlands, CA, USA). The first step was to set the spatial resolution according to the GSD in Table 2, ensuring that the pixel dimensions were correct and expressed in metric units. Then, a circular buffer was used to extract the mean DN value by visually locating the target and manually positioning the center point over the identified spot. A buffer diameter of 1.6 m was used for all images, with average values derived from at least 4 pixels for missions conducted at a 100-m flight altitude, and from a minimum of 11 and 43 pixels for the 65- and 35-m missions, respectively. Finally, the average DN value was converted into temperature by applying the factory model (Equation (1)) and then paired with the reference temperature using the timestamp of each image file.
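
The circular-buffer extraction can be sketched outside ArcGIS as a mean over the pixels whose centres fall inside the circle. The function name, the north-up grid convention, and the centre-in-circle inclusion rule are our assumptions. ArcGIS applies its own rasterization rules, so pixel counts need not match exactly.

```python
import math
from statistics import fmean


def circular_buffer_mean(raster, center_xy, diameter_m, gsd_m, origin_xy=(0.0, 0.0)):
    """Mean DN of pixels whose centres lie inside a circular buffer.

    raster: list of rows (row 0 at the top), each a list of DN values.
    center_xy: buffer centre in map metres (x east, y north).
    origin_xy: map coordinates of the raster's top-left corner (north-up grid).
    Returns (mean_dn, pixel_count).
    """
    radius = diameter_m / 2.0
    ox, oy = origin_xy
    cx, cy = center_xy
    inside = []
    for r, row in enumerate(raster):
        py = oy - (r + 0.5) * gsd_m  # pixel-centre northing
        for c, dn in enumerate(row):
            px = ox + (c + 0.5) * gsd_m  # pixel-centre easting
            if math.hypot(px - cx, py - cy) <= radius:
                inside.append(dn)
    if not inside:
        raise ValueError("no pixel centre inside the buffer; check GSD and position")
    return fmean(inside), len(inside)


grid = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(circular_buffer_mean(grid, (1.5, 1.5), 2.2, 1.0, origin_xy=(0.0, 3.0)))  # (5.0, 5)
```

As a rough plausibility check, a 1.6-m circle covers π × 0.8² ≈ 2.01 m², so the expected pixel count is about 2.01 divided by the squared GSD.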

2.2.3. Orthomosaic Generation and Blending Modes Analysis

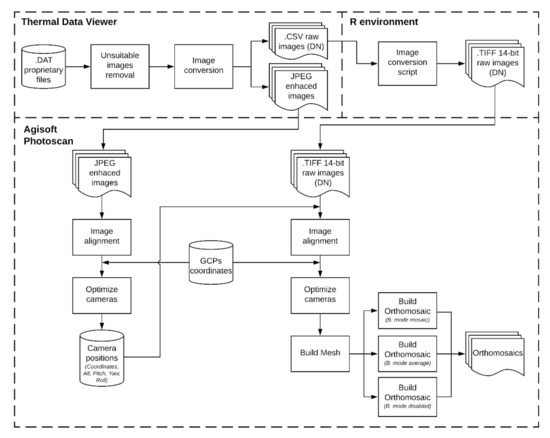

Since the Lepton camera used in the study works independently of the UAV platform and does not produce geotagged images, additional steps were necessary prior to orthomosaic generation. Geotagging the images with GNSS data from the UAV platform was impractical, mainly because the camera cannot precisely record the acquisition time of each image and because the image capture rate fluctuates. To solve this issue, a co-registering process was proposed and tested, in which the camera positions were estimated by applying the structure from motion (SfM) algorithm to upscaled (interpolated) thermal images with enhanced contrast. Through this process, the software can stitch overlapping images and generate a 3-D point cloud [51], yielding estimated camera positions once ground control points (GCPs) are added.

To obtain the estimated camera positions for each dataset, we first excluded all images that were not within the flight plan (take-off, landing, maneuvering), along with blurry images and images with bands of dead pixels. The remaining images were then batch-converted by Thermal Data Viewer into JPEG format with upscaled resolution and enhanced contrast. The software applies bilinear interpolation to increase the original resolution to 632 × 466 pixels and adjusts the contrast by applying adaptive gamma correction. The images were then loaded into Agisoft PhotoScan Professional (v. 1.2.6, Agisoft LLC, St. Petersburg, Russia), and image alignment was performed with the following settings: accuracy set to highest, generic pair selection, a standard key point limit of 20,000, and a tie point limit of 1000. Images that were not aligned in the first attempt were manually selected along with overlapping aligned images and realigned. After alignment, five GCPs were added to the project, with coordinates previously measured with an RTK GNSS receiver (Topcon GR-3, 1 cm accuracy, Topcon Corporation, Tokyo, Japan). As GCP targets, we used the corners of building roofs, whose temperatures differed enough from the surroundings to be distinguishable in thermal images. To reach the roofs, the GNSS receiver was attached to a telescopic pole, positioning the antenna right beside the roof corner. Finally, the camera alignment was optimized and the camera positions were exported.
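
The upscaling and contrast enhancement applied before alignment can be approximated as below. This is a generic sketch, not Thermal Data Viewer's implementation. The align-corners interpolation mapping and the "map mean brightness to mid-grey" gamma rule are our assumptions; the gamma rule is one simple adaptive choice among several.

```python
import math


def bilinear_resize(img, out_w, out_h):
    """Resize a 2-D list of numbers with bilinear interpolation.

    Uses an align-corners mapping, so the four corner values are preserved.
    """
    in_h, in_w = len(img), len(img[0])
    out = []
    for j in range(out_h):
        y = j * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        fy = y - y0
        row = []
        for i in range(out_w):
            x = i * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            fx = x - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


def gamma_to_8bit(img):
    """Stretch to 0-255 with a gamma chosen so the mean maps to mid-grey."""
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0] * len(row) for row in img]
    norm = [[(v - lo) / (hi - lo) for v in row] for row in img]
    mean = sum(v for row in norm for v in row) / len(flat)
    mean = min(max(mean, 1e-6), 1 - 1e-6)  # keep log() finite
    gamma = math.log(0.5) / math.log(mean)  # solves mean ** gamma == 0.5
    return [[round(255 * v ** gamma) for v in row] for row in norm]


# For a 160 x 120 frame: gamma_to_8bit(bilinear_resize(frame, 632, 466))
tiny = [[0, 10], [20, 30]]
print(bilinear_resize(tiny, 3, 3)[1][1])  # 15.0
```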

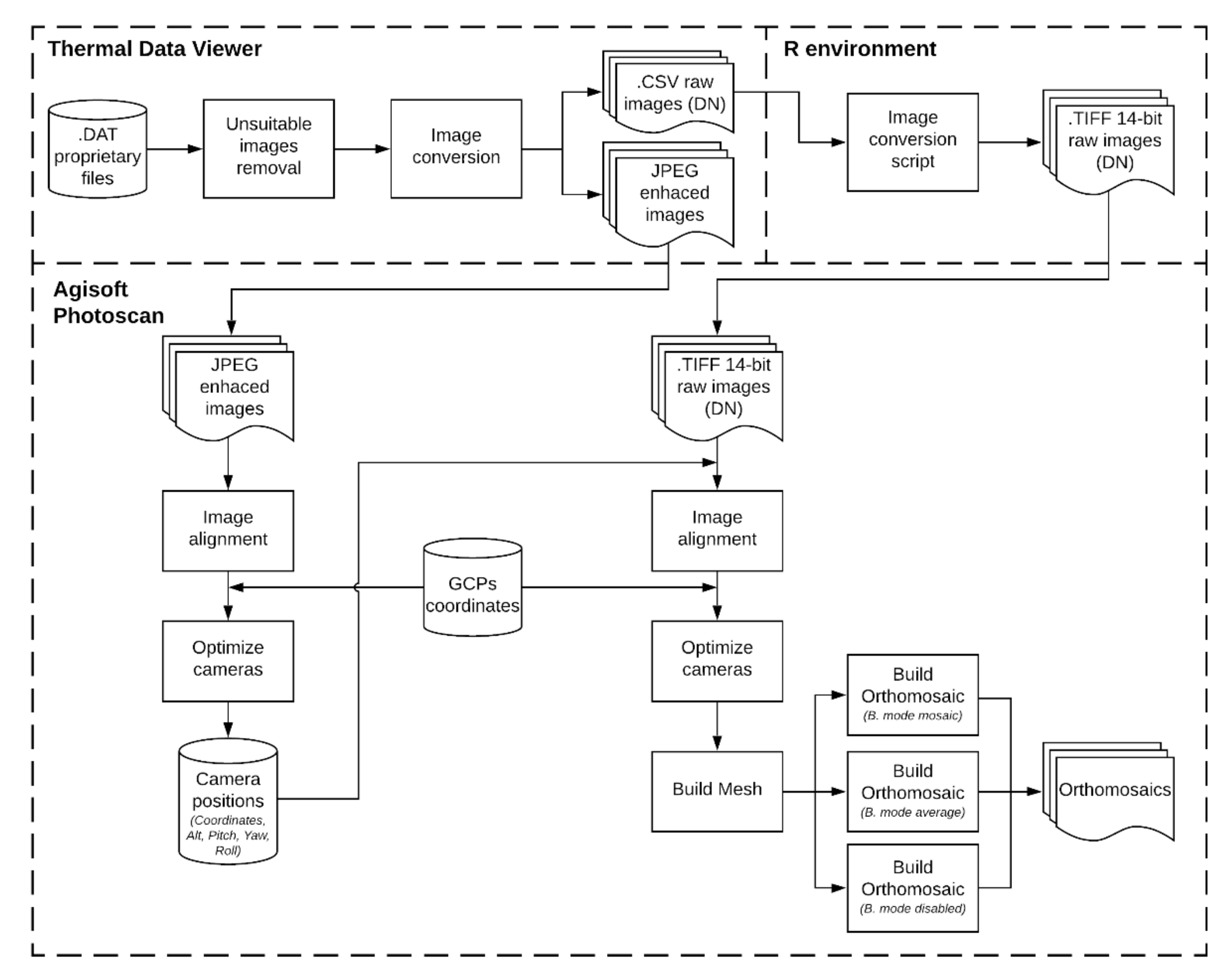

To produce the orthomosaics of each mission, the same dataset used to estimate the camera positions was employed. However, in this case, the raw images were converted into 14-bit TIFF files, preserving the original resolution (160 × 120 pixels). This was carried out by exporting the proprietary files into a spreadsheet format (.CSV) with Thermal Data Viewer and then converting them into the TIFF format using the R programming language. TIFF thermal images are composed of raw DN values and have reduced contrast, which, in addition to the low resolution of TIR sensors, affects the performance of SfM-based processing, especially if no camera positions are provided [22,35,36,52]. Adding the image positions obtained in the previous step significantly improved the alignment process, because overlapping images could be preselected based on the coordinates of each image and pitch-yaw-roll information was available. The aforementioned GCPs were then added to the project and the alignment optimization was executed, followed by mesh generation. The final step was orthomosaic creation, in which three orthomosaics were exported for each mission, one for each blending mode tested in the study: mosaic, average, and disabled. Among the other parameters available in Agisoft, color correction was turned off and the pixel size was set to the maximum for all projects. An overview of all processing steps to obtain the orthomosaics is provided in Figure 2.
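
The toy sketch below shows why the blending mode can change a target's temperature: it takes one ground cell observed in several overlapping images and combines the values in two ways. Agisoft describes its average mode as a weighted average, and its weights are not reproduced here, so this sketch uses an unweighted mean. The disabled mode is approximated by keeping the view closest to nadir, which for flat ground stands in for the view closest to the surface normal. The mosaic mode combines information from several images in a more elaborate way and is not reproduced. Function names and values are hypothetical.

```python
from statistics import fmean


def blend_average(observations):
    """Average-style blending (unweighted sketch): mean of all overlapping views.

    observations: list of (value, off_nadir_angle_deg) for one ground cell.
    """
    return fmean(value for value, _ in observations)


def blend_disabled(observations):
    """Disabled-style blending: keep the value from the most nadir-like view."""
    value, _ = min(observations, key=lambda obs: obs[1])
    return value


# Illustrative: one ground cell seen in three images (made-up °C values).
cell = [(30.2, 12.0), (31.0, 3.0), (29.8, 20.0)]
print(round(blend_average(cell), 2), blend_disabled(cell))  # 30.33 31.0
```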

Figure 2.

Overall flowchart for orthomosaic generation.

The extraction of temperature values from the orthomosaics representing each blending mode was carried out in ArcGIS software. Using the coordinates of each target location measured by the RTK-GNSS receiver, a buffer with a 1.6-m diameter was created. Then, using the zonal statistics tool, the average DN value of the pixels within the buffer of each target was extracted, converted into temperature using the factory calibration model (Equation (1)), and later compared with reference temperature readings. To provide more reliable reference measurements, the reference temperature was averaged over the time interval in which each target appears in the aerial images. This was achieved by manually selecting the sequences of images depicting the target and averaging the reference sensor readings within these intervals.
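
Averaging the reference sensor over the interval in which a target is visible can be sketched as below. The function name `window_mean` and the inclusive window bounds are our assumptions. The window would be set by the timestamps of the first and last images showing the target.

```python
from datetime import datetime
from statistics import fmean


def window_mean(log, start, end):
    """Mean of the reference readings logged within [start, end], inclusive.

    log: iterable of (timestamp, temperature_c) pairs; start/end: timestamps of
    the first and last image in which the target appears.
    """
    values = [temp for ts, temp in log if start <= ts <= end]
    if not values:
        raise ValueError("no reference readings inside the window")
    return fmean(values)


def t(second):
    """Illustrative timestamp helper (hypothetical date)."""
    return datetime(2024, 1, 1, 10, 0, second)


# Made-up reference log at 5-s intervals.
log = [(t(0), 40.0), (t(5), 40.4), (t(10), 40.8), (t(15), 41.2)]
print(window_mean(log, t(4), t(12)))  # approximately 40.6
```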

2.3. Empirical Line Calibration

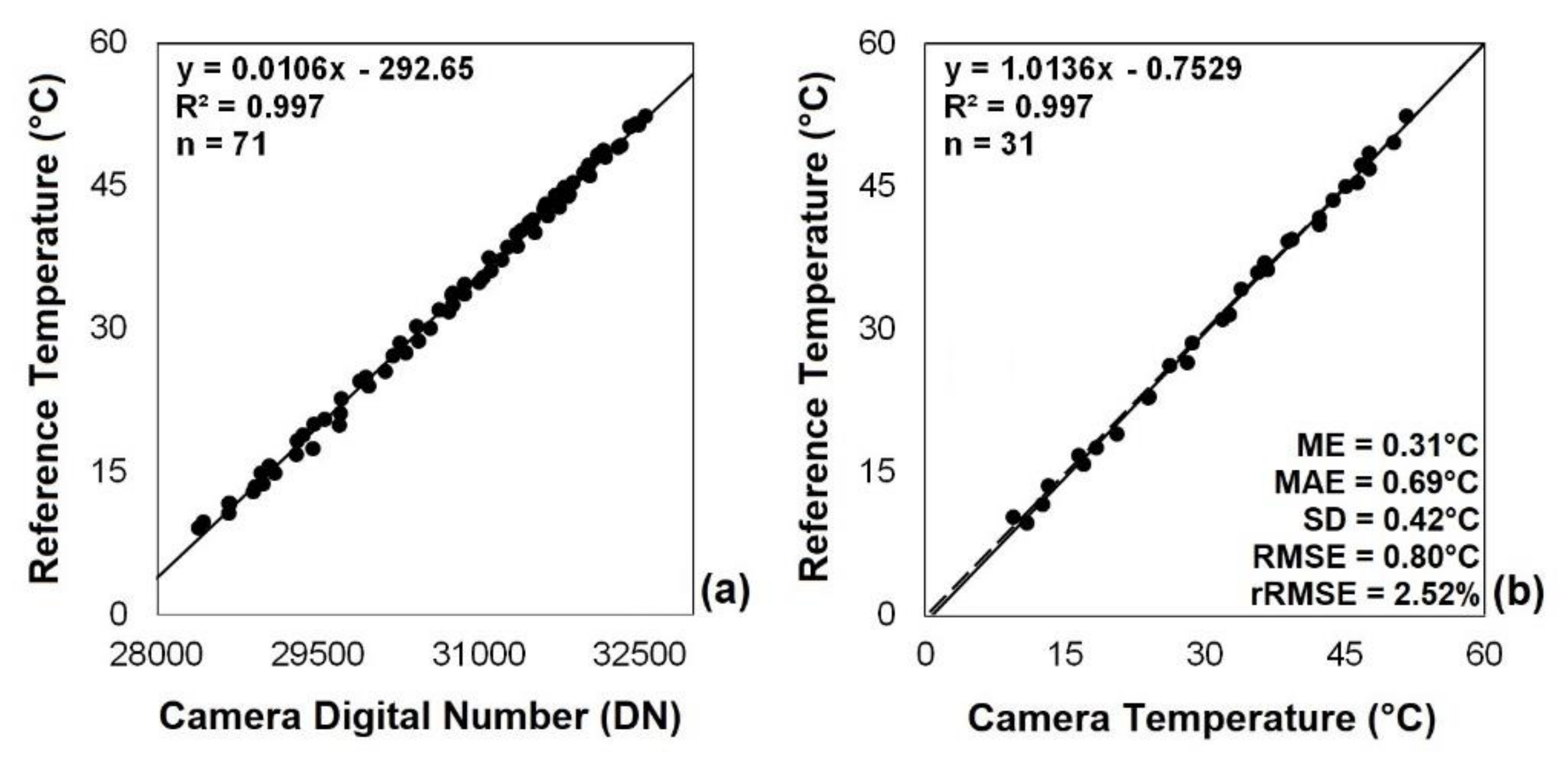

2.3.1. Proximal Calibration

To develop a proximal calibration model for the Lepton camera, the images collected during the proximal analysis (Section 2.1) were employed, with 70% used to calibrate the model (n = 71) and the remaining 30% used for validation (n = 31); the two subsets were selected to cover equivalent ranges of temperature values. First, the average DN value was extracted from each image with the box measurement tool of the ThermoVision software, using a fixed polygon to extract the pixel values within the central portion of the polystyrene box. The average DN values from the calibration images were then combined with reference temperature measurements in a linear regression model, which was later applied to convert DN data from the validation images into temperature readings. Finally, the temperature readings estimated through the linear regression model were compared with reference temperature data to evaluate the precision and accuracy of the calibration model.
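
One simple way to obtain a roughly 70/30 split in which both subsets cover the same temperature range is a systematic split on the ranked samples. This is not necessarily how the subsets were selected in the study. The name `systematic_split` and its slot pattern are hypothetical. With n = 102, this pattern happens to give 71 calibration and 31 validation samples.

```python
def systematic_split(samples, key, val_slots=(1, 4, 7), period=10):
    """Split samples roughly 70/30 so both subsets span the same value range.

    Samples are ranked by `key` (e.g. reference temperature); every sample whose
    rank modulo `period` falls in `val_slots` goes to validation, the rest to
    calibration.
    """
    ordered = sorted(samples, key=key)
    calibration, validation = [], []
    for rank, sample in enumerate(ordered):
        target = validation if rank % period in val_slots else calibration
        target.append(sample)
    return calibration, validation


# Illustrative: 102 made-up reference temperatures spread over 9.1-52.4 °C.
temps = [9.1 + i * (52.4 - 9.1) / 101 for i in range(102)]
cal, val = systematic_split(temps, key=lambda temp: temp)
print(len(cal), len(val))  # 71 31
```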

2.3.2. Aerial Calibration

Considering the wide range of conditions covered by the aerial data acquisition, which was performed at different flight altitudes and times of day, we developed multiple calibration models with different levels of specificity. The first was a general calibration model, based on a single dataset combining all flight altitudes and periods of the day analyzed in the study. We also evaluated calibration models generated for specific conditions, with individual models for each flight altitude and each period of the day. In addition, the proximal calibration model obtained in Section 2.3.1 was tested under aerial conditions as a benchmark for assessing the effect of atmospheric attenuation of TIR radiation.

To obtain the calibration models and properly compare the results, we first established a protocol that was used throughout the analysis. The dataset from the reference targets was separated into two groups, with four targets used for calibration (asphalt, brachiaria grass, clay tile roof, short grass) and the other three for the validation step (concrete, long grass, water) (Figure 1a). These targets were selected to provide the widest range of temperature values possible for the calibration and validation process, and were employed for all the models tested, ensuring that the different models were calibrated and validated with equivalent datasets from the same targets to promote fair comparisons.

All the calibration models were generated using individual images without any processing, based on the datasets obtained during the flight altitude analysis (Section 2.2.2). This way, we were able to increase the number of samples used to calibrate the models and avoided introducing any uncertainties from the orthomosaic processing, adding robustness to the method. To extract the average DN value from each image used for calibration, the same procedure described in the third paragraph of Section 2.2.2 was used, in which a buffer with a 1.6-m diameter was manually positioned over the target location to extract the mean DN value and then paired with the reference temperature according to the timestamp of the image. Moreover, the datasets were organized accordingly and used to build the linear regression model of each strategy mentioned above.
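
Building the general and condition-specific models from the same records can be sketched as grouping followed by one least-squares fit per group. The record field names (`dn`, `ref_c`, `altitude_m`, `period`) and the helper names are hypothetical. Each model maps DN to reference temperature, matching the direction in which the calibration is applied.

```python
from collections import defaultdict
from statistics import fmean


def fit_line(x, y):
    """Least-squares line y = intercept + slope * x; returns (slope, intercept)."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def fit_models(records, group_key=None):
    """Fit one DN -> reference-temperature model per group.

    records: dicts with 'dn' and 'ref_c' plus grouping fields such as
    'altitude_m' or 'period'. group_key=None pools every record into a single
    general model stored under "all".
    """
    groups = defaultdict(lambda: ([], []))
    for rec in records:
        key = "all" if group_key is None else rec[group_key]
        dns, refs = groups[key]
        dns.append(rec["dn"])
        refs.append(rec["ref_c"])
    return {key: fit_line(dns, refs) for key, (dns, refs) in groups.items()}


def predict(model, dn):
    """Apply a (slope, intercept) model to a DN value."""
    slope, intercept = model
    return intercept + slope * dn


# Illustrative records: a made-up offset that grows with altitude.
records = [
    {"dn": dn, "ref_c": 0.01 * dn - 273.15 + offset, "altitude_m": alt, "period": "noon"}
    for alt, offset in ((35, 1.0), (100, 3.0))
    for dn in (29000, 30000, 31000)
]
general = fit_models(records)["all"]
by_altitude = fit_models(records, "altitude_m")
print(predict(general, 30000), predict(by_altitude[35], 30000))
```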

To test the linear regression models obtained, we decided to use the orthomosaics instead of individual images, since the majority of users extract temperature data from aerial thermal images through orthomosaics. In addition, we decided to use only the orthomosaics generated by the blending mode that provided the best precision and accuracy during our tests, aiming to reproduce the best scenario to test calibration models. To calculate the average DN value from targets selected for validation, the method described in the fourth paragraph of Section 2.2.3 was used, with raw DN data extracted from a 1.6-m circular buffer positioned over the target location based on coordinates measured by an RTK-GNSS receiver. The average DN value from each target was then converted into temperature applying the corresponding linear regression model previously obtained, and paired with reference temperature measurements calculated considering the time interval in which the target appears on the aerial images used to build the orthomosaic.

2.4. Statistical Analysis

To assess the performance of the Lepton camera, we compared the thermal temperature data with reference temperature readings, analyzing the relationship between the measurements and the residuals. The precision of the camera was evaluated using the coefficient of determination values (R²), whereas the accuracy was assessed based on the residuals analysis, represented by the mean error (ME), mean absolute error (MAE), root mean square error (RMSE), and the relative root mean square error (rRMSE). The same methodology was used to assess the performance of the calibration strategies, extracting the coefficients mentioned above from the residuals of the validation step, and calculating the R² value along with the significance level from the regression model obtained in the calibration step.
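
The accuracy indices can be computed from paired readings as below. Here, errors are defined as camera minus reference, so ME > 0 indicates overestimation, and rRMSE is RMSE as a percentage of the mean reference temperature. R² comes from the regression itself. The function name is hypothetical.

```python
import math
from statistics import fmean


def accuracy_metrics(measured, reference):
    """ME, MAE, RMSE (°C) and rRMSE (% of mean reference) for paired readings.

    Errors are measured - reference, so ME > 0 means overestimation.
    """
    if len(measured) != len(reference) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [m - r for m, r in zip(measured, reference)]
    me = fmean(errors)
    mae = fmean(abs(e) for e in errors)
    rmse = math.sqrt(fmean(e * e for e in errors))
    rrmse = 100.0 * rmse / fmean(reference)
    return {"ME": me, "MAE": mae, "RMSE": rmse, "rRMSE": rrmse}


metrics = accuracy_metrics([21.0, 19.0, 23.0, 17.0], [20.0, 20.0, 20.0, 20.0])
print(metrics)
```

For any set of residuals, RMSE is always greater than or equal to MAE, which makes a quick consistency check when reporting both.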

3. Results

3.1. Proximal Analysis

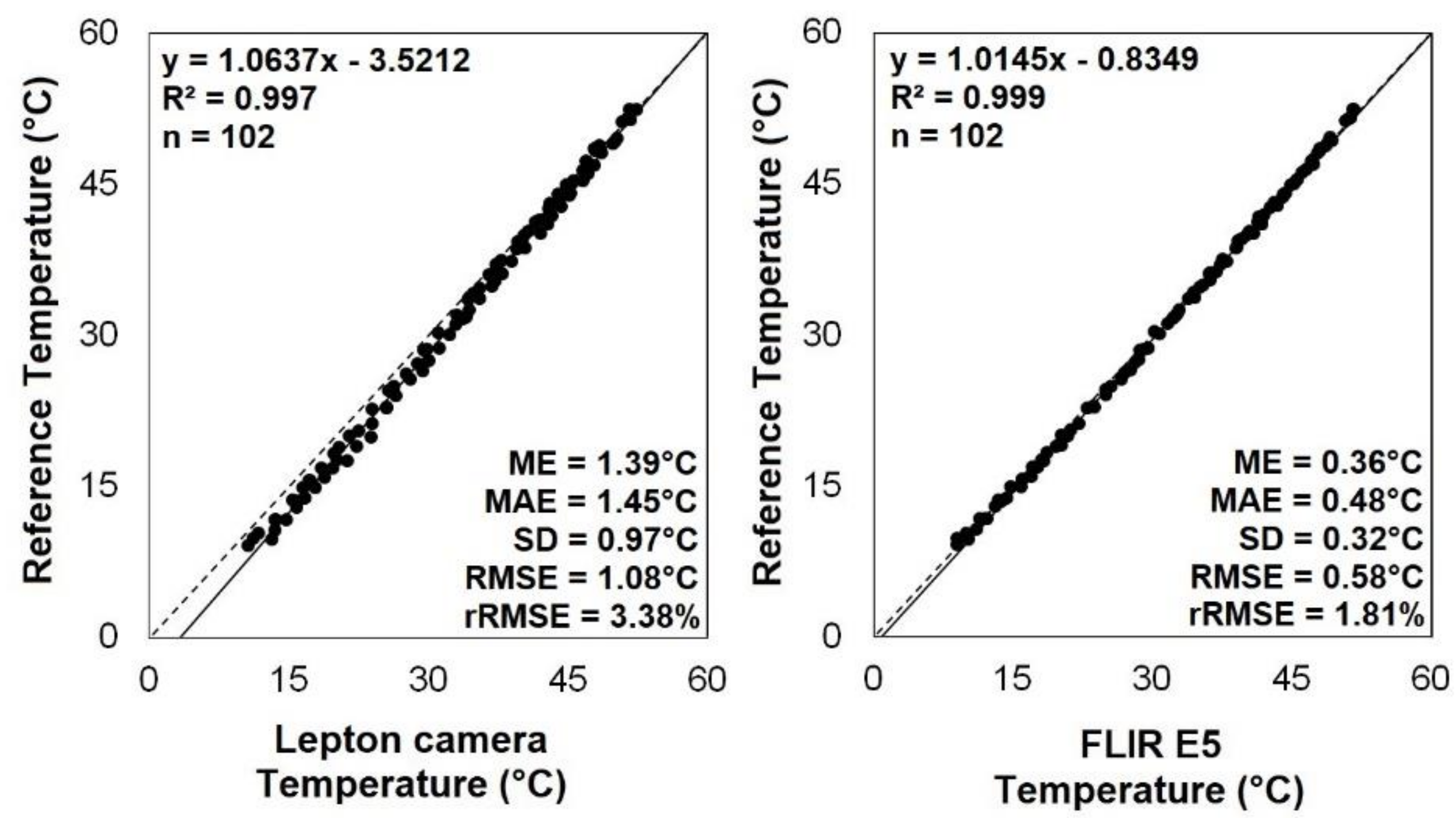

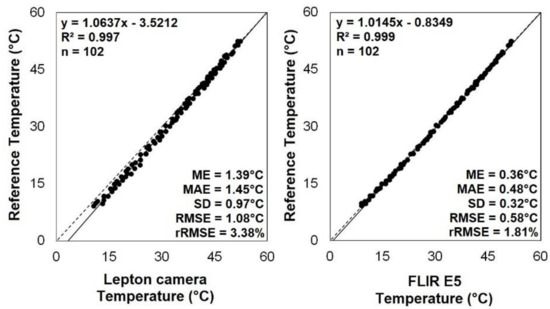

Temperature readings from the thermal cameras presented a strong relationship with the reference temperature data, with R² values higher than 0.99 (Figure 3), indicating that these devices provide high-precision measurements in the temperature range (9.1 to 52.4 °C) and conditions tested.

Figure 3.

Linear regression models between TIR temperature and reference temperature readings for the Lepton camera and FLIR E5. Dashed line = 1:1 line; solid line = linear regression line; n = number of samples; R² = coefficient of determination; ME = mean error; MAE = mean absolute error; RMSE = root mean square error; rRMSE = relative RMSE; p < 0.001 for all R².

In terms of accuracy, the cameras differed. The Lepton camera temperature readings had higher residuals, with MAE of 1.45 °C and RMSE of 1.08 °C, whereas the FLIR E5 results were 0.48 and 0.58 °C, respectively. Relative to the average reference temperature, the rRMSE was 3.38% for the Lepton camera and 1.81% for the FLIR E5.

3.2. Aerial Analysis

3.2.1. Flight Altitudes

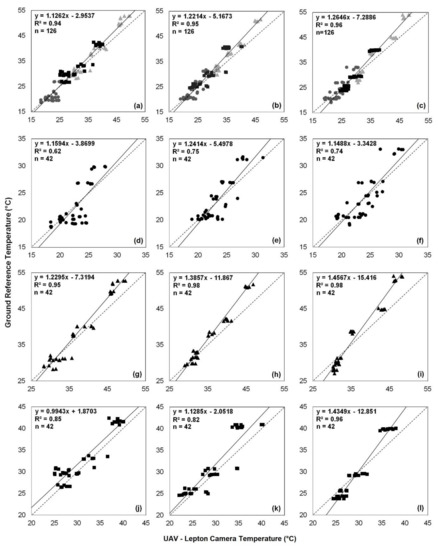

Based on the overall results (Table 3), the relationship between the thermal data and reference temperature, expressed by the R² values, was similar, demonstrating that the precision of TIR measurements was not affected by the flight altitudes tested in the study. On the other hand, the results related to the residuals, which express the accuracy of the camera readings, were slightly different between missions. Datasets collected at 35 m yielded the lowest residuals, with an overall RMSE of 2.63 °C (rRMSE = 8.35%). Missions conducted at 65 and 100 m presented nearly the same accuracy, with RMSE of 3.15 and 3.18 °C (rRMSE of 9.96% and 10.04%), respectively.

Table 3.

Relationship and residuals between TIR measurements and reference temperature for aerial conditions according to flight altitudes tested. n = number of samples; R² = coefficient of determination; ME = mean error; MAE = mean absolute error; RMSE = root mean square error; rRMSE = relative RMSE; p-value < 0.001 (**).

In contrast to the overall results, the 35-m flight had the weakest performance among the missions carried out in the early morning (A, D, and G), with an R² of 0.62 and an RMSE of 2.41 °C, and it was the only mission in which TIR temperature was overestimated relative to the reference values (ME > 0). The early morning missions at 65 and 100 m also showed lower precision, with R² values of 0.75 and 0.74, respectively. However, the best accuracy values were observed under this condition, with RMSE ranging from 2.06 °C (mission D) to 2.41 °C (mission A), indicating that the lower R² values might be linked to the narrower temperature range.

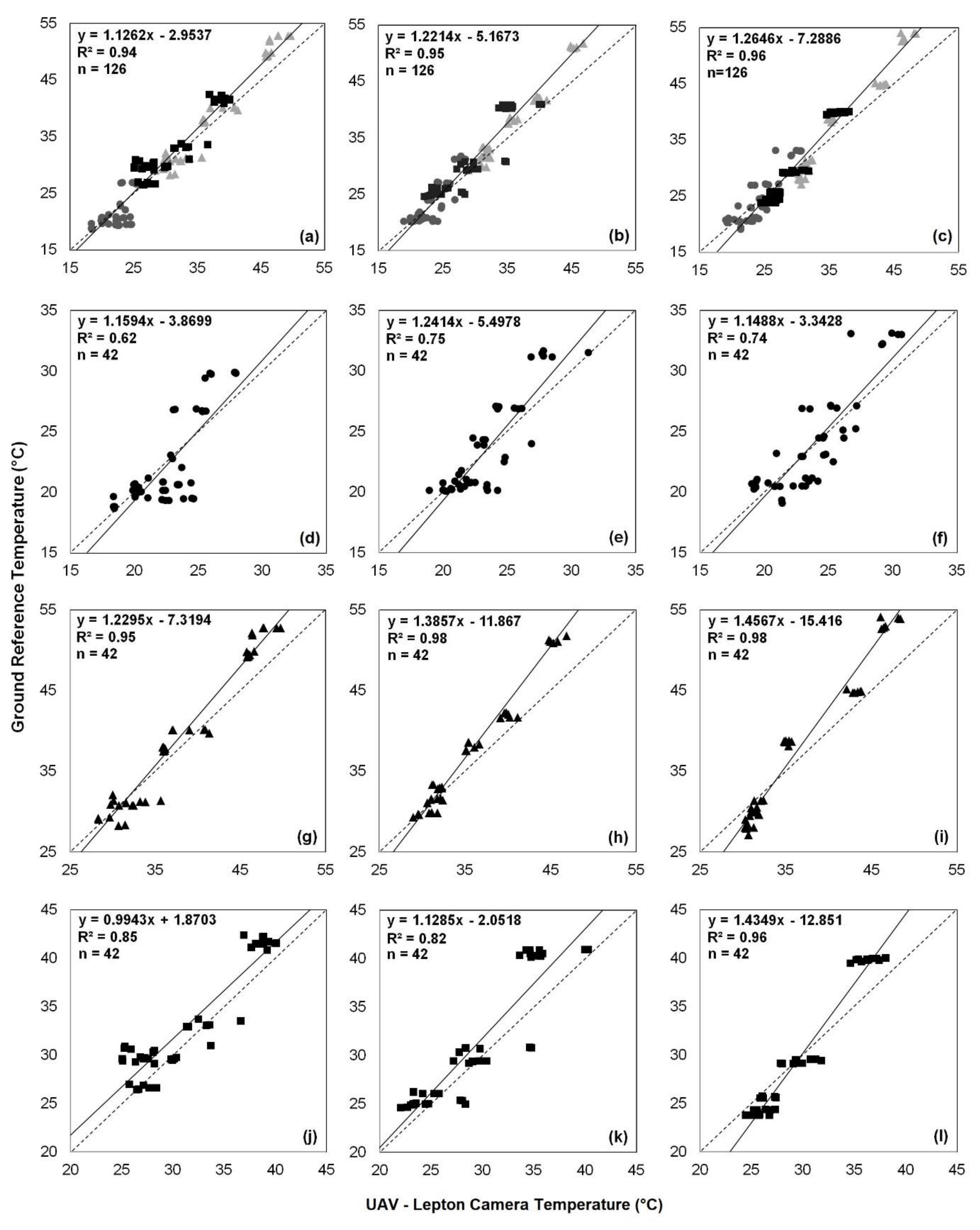

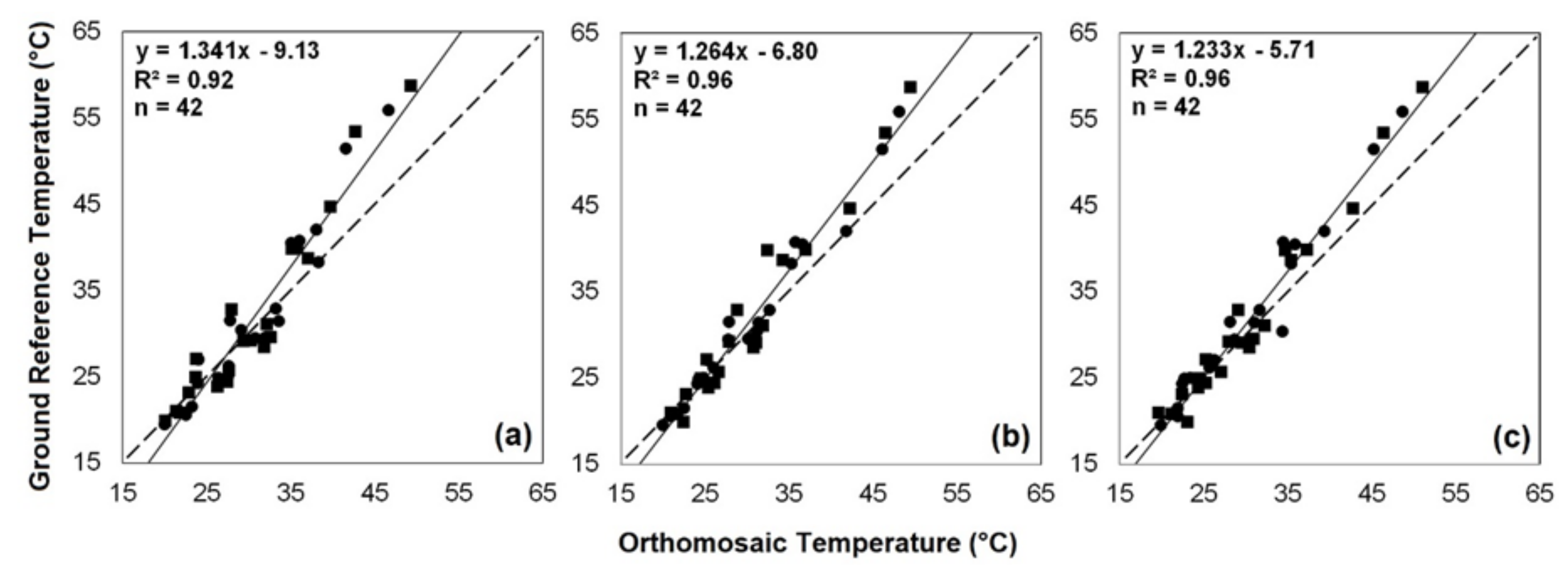

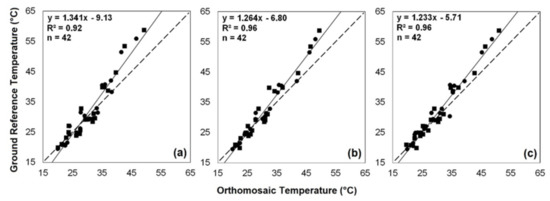

The linear regression models (Figure 4) showed different patterns of slope and intercept values according to the flight altitude. For the overall models and the temperature range analyzed, the slope tended to increase and the intercept to decrease with increasing flight altitude, indicating that, when TIR temperature is derived with the factory calibration, low temperatures would be increasingly overestimated and high temperatures increasingly underestimated at higher flight altitudes.
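
To make the slope and intercept reading concrete, the short sketch below computes the bias implied by a fitted line and the temperature at which that line crosses the 1:1 line. It assumes the regression is written as reference = slope × TIR + intercept (reference on the y-axis), the orientation under which a slope above 1 and a negative intercept produce the overestimation-then-underestimation pattern described above. The coefficients are hypothetical, not the values in Figure 4.

```python
def implied_bias(slope, intercept, tir_c):
    """Bias (TIR minus fitted reference) implied by reference = slope * TIR + intercept."""
    fitted_ref = slope * tir_c + intercept
    return tir_c - fitted_ref


def crossover_temperature(slope, intercept):
    """TIR value at which the fitted line meets the 1:1 line (zero bias)."""
    if slope == 1.0:
        return None  # parallel to the 1:1 line: the bias is constant
    return intercept / (1.0 - slope)


# Hypothetical coefficients, not the values reported in Figure 4.
a, b = 1.2, -6.0
print(crossover_temperature(a, b))  # zero-bias point, about 30 °C
print(implied_bias(a, b, 10.0))     # positive: overestimation at low temperature
print(implied_bias(a, b, 50.0))     # negative: underestimation at high temperature
```

Under this orientation, a steeper slope moves the two ends of the range further from the 1:1 line, so the same crossover point hides larger errors at the extremes.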

Figure 4.

Linear regression models between temperature readings from the Lepton camera and ground reference temperature throughout the missions at different flight altitudes. Overall models for the 35- (a), 65- (b), and 100-m flight altitudes (c); individual models for the 35- (d,g,j), 65- (e,h,k), and 100-m flight altitudes (f,i,l); symbols indicate datasets collected in the early morning (●), close to solar noon (▲), and at the end of the afternoon (■). Dashed line = 1:1 line; solid line = linear regression line; p < 0.001 for all R².

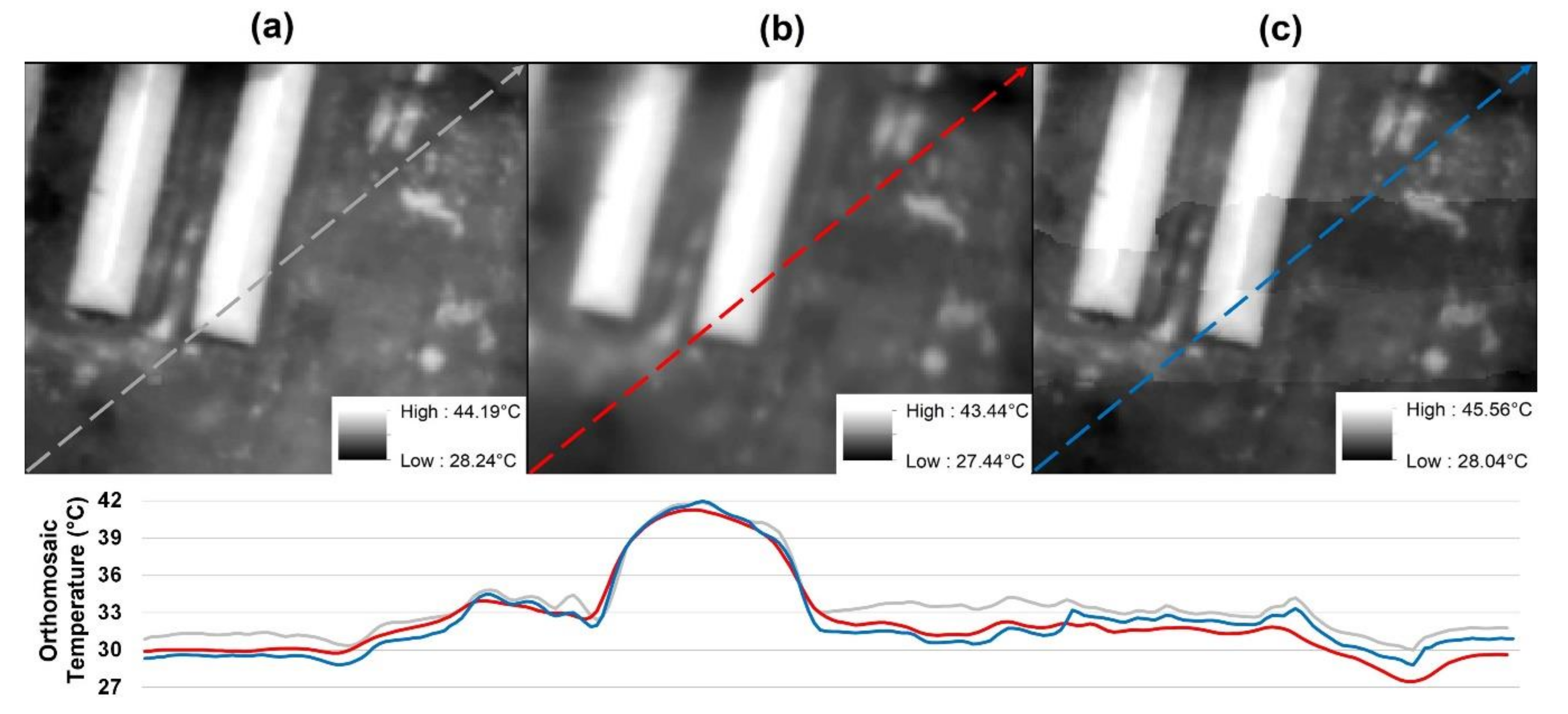

3.2.2. Blending Modes

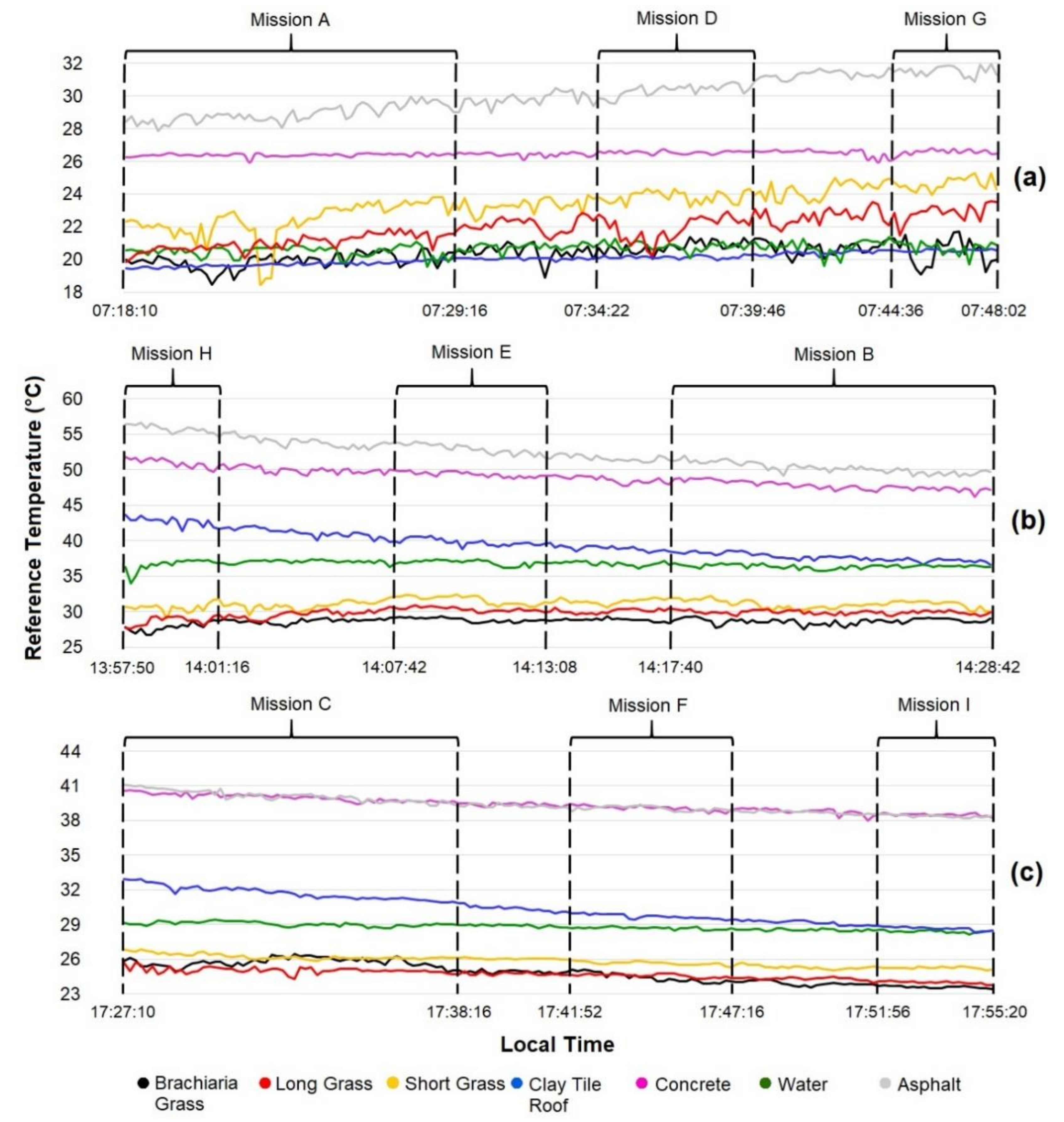

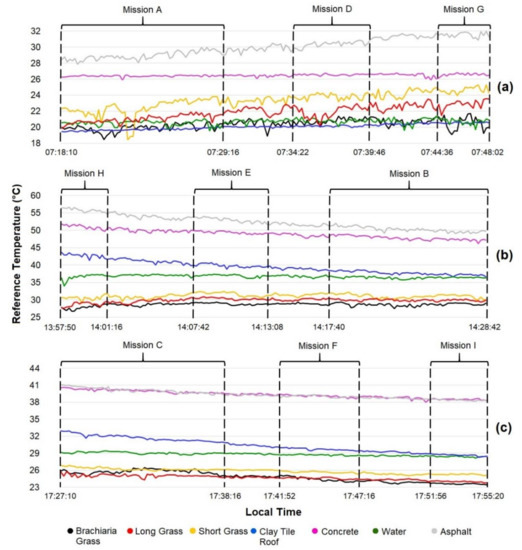

The performance of the blending modes was assessed using missions from the 65- and 100-m flight altitudes. Datasets from the 35-m missions (A, B, and C) were not included in the analysis because the orthomosaic from mission A could not be properly generated, and using only the two remaining orthomosaics would compromise the comparison with the other flight altitudes. The orthomosaic generation failed because some images covering a significant part of the study area could not be aligned and were therefore left out of the orthomosaic. The 35-m missions take at least twice as long as those at the other flight altitudes to complete image acquisition, and in the early morning the targets’ temperature increases dramatically (Figure 5a). Under these conditions, the image stitching performed by the SfM algorithm might be affected: DN values of the same object extracted from images of adjacent parallel flight lines can change abruptly, so the algorithm may not recognize that both values belong to the same target. This problem did not occur in the other 35-m missions (B and C), probably because the targets’ temperature was more stable under those conditions (Figure 5b,c).

Figure 5.

Ground reference temperature from validation targets across all missions. Missions conducted in the early morning (a), close to solar noon (b), and at the end of the afternoon (c).

The overall results, which combine datasets from the 65- and 100-m flight altitudes, indicate that the mosaic mode had the weakest performance, with an R² of 0.92 and an RMSE of 3.93 °C (rRMSE = 12.43%) (Table 4). The average blending mode and the disabled option yielded the best precision, with an overall R² of 0.96 in both cases. Their accuracy was also similar, with an RMSE of 3.14 °C (rRMSE = 9.93%) for the average mode and 3.08 °C (rRMSE = 9.74%) with blending disabled.

Table 4.

Relationship and residuals between the temperature extracted from the orthomosaics and the reference temperature for aerial conditions according to the blending modes tested. n = number of samples; R² = coefficient of determination; ME = mean error; MAE = mean absolute error; RMSE = root mean square error; rRMSE = relative RMSE; p-value < 0.001 (**); p-value < 0.01 (*).

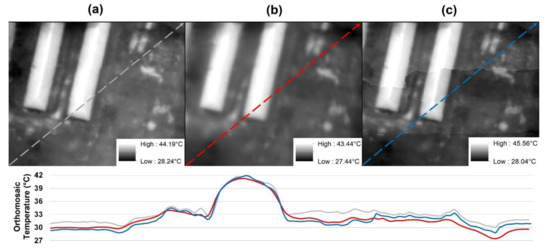

The overall regression models obtained for each blending mode (Figure 6) had slope and intercept values similar to those obtained in the flight altitude assessment. The model generated with the mosaic blending mode differed slightly from the others, with a higher slope and a lower intercept, meaning that low temperatures would be further overestimated and high temperatures further underestimated, compromising accuracy. The average mode and the disabled option resulted in very similar regression models, with comparable slope and intercept values closer to the 1:1 line.

Figure 6.

Linear regression models between the ground reference temperature and TIR temperature from orthomosaics obtained with the mosaic (a) and averaged (b) blending modes, and with blending disabled (c). Symbols indicate datasets from 65 (●) and 100 m (■) flight altitudes. Dashed line = 1:1 line; solid line = linear regression line; p < 0.001 for all R².

Beyond the statistical results, the contrast between the blending configurations is even more evident when comparing the orthomosaics visually. Figure 7 shows a zoomed view of the area, illustrating the effect of each blending mode through the variation of temperature values along a transect drawn on the orthomosaics obtained with the mosaic mode (Figure 7a), the average mode (Figure 7b), and blending disabled (Figure 7c). The visual difference is most evident between the average and disabled modes: the average mode produces a smoother surface, whereas the mosaic and disabled options show sharper transitions in temperature values, giving the impression of enhanced detail. Additionally, when blending is disabled, seamlines between the images used to compose the orthomosaic become visible, with abrupt changes in temperature values along the transect profile. Differences in accuracy are also visible when comparing the orthomosaic temperature values with the reference values of the ground reference targets shown in Figure 7, with larger discrepancies for the mosaic mode than for the average and disabled options.
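
A transect profile like the one in Figure 7 can be extracted once an orthomosaic has been loaded as a 2-D array of temperatures (reading the raster file itself is outside this sketch). The minimal Python example below samples values along a straight line by nearest neighbour and reports the largest step between consecutive samples, one simple way to flag seamlines such as those seen with blending disabled. The function names and the synthetic raster are hypothetical.

```python
import numpy as np


def transect_profile(raster, start, end, n_samples=200):
    """Sample a 2-D temperature array along a straight line.

    start and end are (row, col) pixel coordinates; sampling is
    nearest-neighbour, and distances are returned in pixels (multiply by
    the ground sampling distance to obtain metres).
    """
    raster = np.asarray(raster, dtype=float)
    rows = np.linspace(start[0], end[0], n_samples)
    cols = np.linspace(start[1], end[1], n_samples)
    r_idx = np.clip(np.rint(rows).astype(int), 0, raster.shape[0] - 1)
    c_idx = np.clip(np.rint(cols).astype(int), 0, raster.shape[1] - 1)
    distance = np.hypot(rows - rows[0], cols - cols[0])
    return distance, raster[r_idx, c_idx]


def largest_jump(profile):
    """Largest absolute change between consecutive samples (e.g., a seamline)."""
    return float(np.max(np.abs(np.diff(profile))))


# Synthetic orthomosaic with a 3 °C step at column 5, mimicking a seamline.
ortho = np.full((10, 10), 20.0)
ortho[:, 5:] = 23.0
dist, temps = transect_profile(ortho, start=(5, 0), end=(5, 9), n_samples=10)
print(largest_jump(temps))
```

Applied to the same transect on orthomosaics built with different blending modes, a smoother surface would be expected to produce smaller jumps than one with visible seamlines.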

Figure 7.

Zoomed view of the area based on orthomosaics from mission E with mosaic (a) and averaged (b) blending modes, and with blending disabled (c). The profile graph illustrates temperature values along the transect from orthomosaics using the blending modes tested.

3.2.3. Co-registration Process

The proposed co-registering method was essential for overcoming the alignment issues encountered with non-geotagged thermal images during the photogrammetry process. Without camera positions, an average of 37% of the images were properly aligned, with 1516 and 833 tie points overall for the missions conducted at the 65- and 100-m flight altitudes, respectively. When the generated camera positions were added to the project, the percentage of aligned images more than doubled, reaching an average of 84.7%. The overall number of tie points also increased, with an average of 3806 points for the 65-m missions and 1724 points for the 100-m missions. Detailed information is presented in Table 5.

Table 5.

Results from the alignment step of the orthomosaic processing with and without the use of camera positions obtained with the co-registering method.

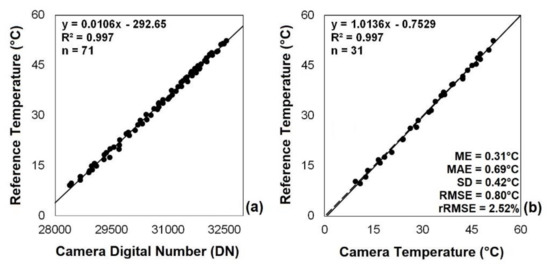

3.3. Calibration Strategies

3.3.1. Proximal Calibration

The calibration model generated with 70% of the proximal dataset, relating DN values from raw images to reference temperature, yielded an R² of 0.997 (Figure 8a). The residuals from the remaining 30% of the data resulted in an MAE of 0.69 °C and an RMSE of 0.80 °C, equivalent to an rRMSE of 2.52% (Figure 8b).
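
The proximal calibration described above (an empirical line fitted on 70% of the samples and validated on the remaining 30%) can be sketched as follows. This is a minimal illustration under stated assumptions: the split is random (this section does not say how samples were assigned), the model is a first-order polynomial from DN to temperature, and the gain and offset of the synthetic, noise-free data are hypothetical.

```python
import numpy as np


def calibrate_and_validate(dn, temp, cal_fraction=0.7, seed=0):
    """Fit an empirical line (temperature = gain * DN + offset) on a random
    calibration subset and validate it on the remaining samples."""
    dn = np.asarray(dn, dtype=float)
    temp = np.asarray(temp, dtype=float)
    idx = np.random.default_rng(seed).permutation(len(dn))
    n_cal = int(round(cal_fraction * len(dn)))
    cal, val = idx[:n_cal], idx[n_cal:]

    gain, offset = np.polyfit(dn[cal], temp[cal], deg=1)

    # R² of the calibration model.
    fitted = gain * dn[cal] + offset
    ss_res = np.sum((temp[cal] - fitted) ** 2)
    ss_tot = np.sum((temp[cal] - temp[cal].mean()) ** 2)
    r2_cal = 1.0 - ss_res / ss_tot

    # Validation residuals (calibrated TIR minus reference).
    resid = gain * dn[val] + offset - temp[val]
    rmse_val = float(np.sqrt(np.mean(resid ** 2)))
    return gain, offset, r2_cal, rmse_val, len(cal), len(val)


# Synthetic, noise-free data with a hypothetical gain and offset.
dn = np.linspace(7000, 8500, 100)
temp = 0.03 * dn - 200.0  # spans 10 to 55 °C
gain, offset, r2, rmse, n_cal, n_val = calibrate_and_validate(dn, temp)
print(n_cal, n_val, round(gain, 4), round(offset, 2))
```

With real data the validation residuals would also be passed to the accuracy indices (MAE, RMSE, rRMSE) used throughout this section.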

Figure 8.

Linear regression models of the proximal calibration analysis. (a) calibration model between DN values extracted from raw images and reference temperature data; (b) validation model between TIR calibrated temperature and reference data. Dashed line = 1:1 line; solid line = linear regression line; n = number of samples; R² = coefficient of determination; ME = mean error; MAE = mean absolute error; RMSE = root mean square error; rRMSE = relative RMSE; p < 0.001 for all R².

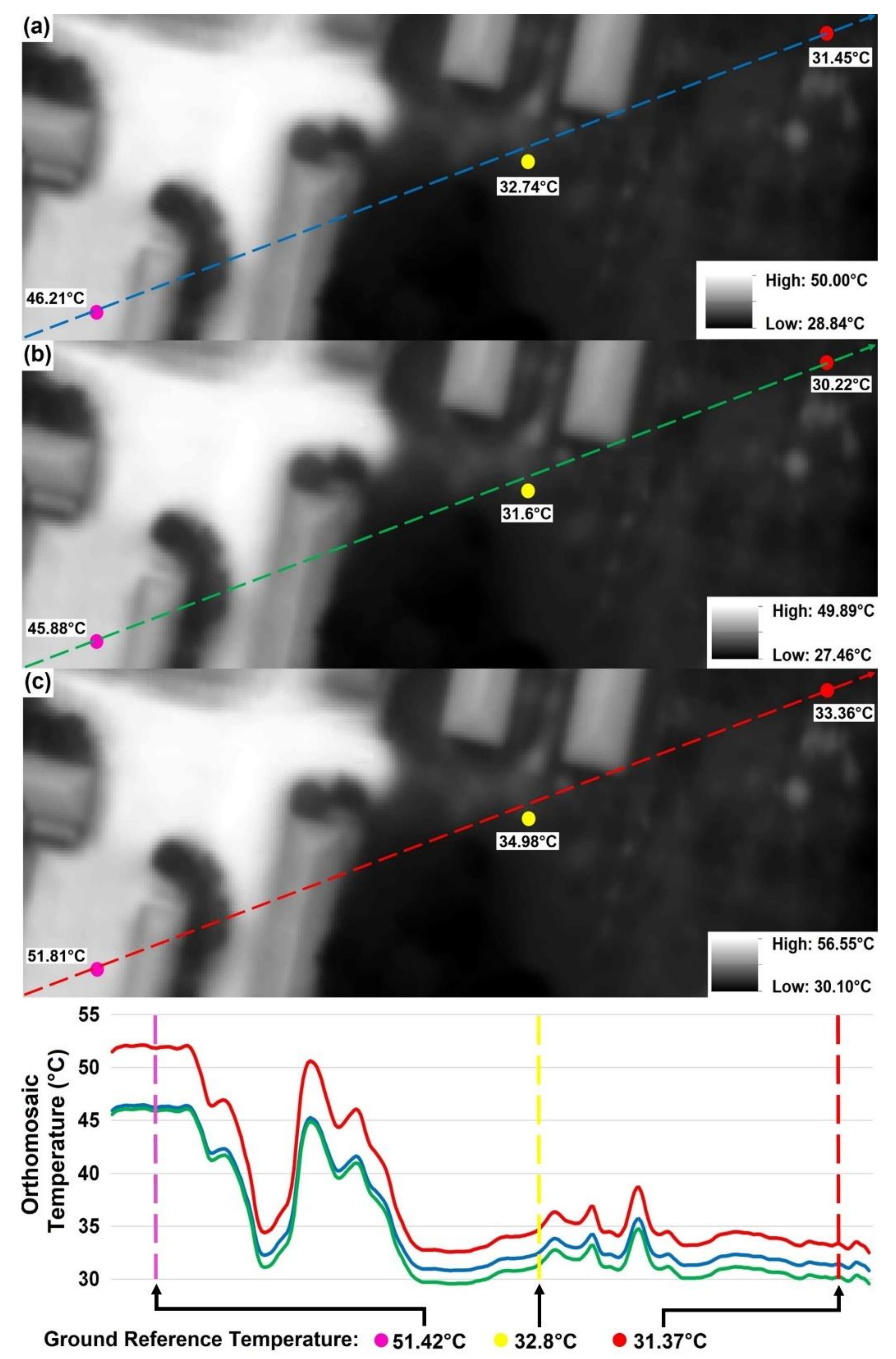

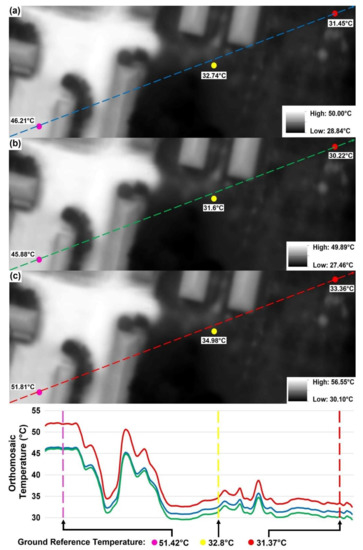

3.3.2. Aerial Calibration

Since the average and disabled modes had equivalent results in terms of precision and accuracy, we used datasets obtained with the average blending mode, avoiding the abrupt changes in temperature values observed in orthomosaics made with blending disabled, as described in Section 3.2.2.

The first strategy applied the model obtained from the proximal analysis (Section 3.3.1) to aerial data from the 65- and 100-m missions. Its residuals, which express the degree of accuracy, were the highest observed, with an RMSE of 3.40 °C (rRMSE = 10.71%) (Table 6). Additionally, it had the lowest ME observed (−2.75 °C), indicating that temperature values corrected by this model would be underestimated. The general model, generated by combining aerial images from the 65- and 100-m missions, had an R² of 0.95 for the calibration dataset and yielded the best accuracy in the validation step, with an RMSE of 1.31 °C (rRMSE = 4.12%). Two other models were generated and validated using the individual datasets from the 65- and 100-m missions, resulting in residuals slightly higher than those of the general model: the 65-m model produced an RMSE of 1.56 °C (rRMSE = 4.93%), whereas the 100-m model yielded an RMSE of 1.32 °C (rRMSE = 4.16%). The last strategy calibrated temperature with models generated specifically for each time of day at which data were acquired (early morning, noon, and end of the afternoon), each validated with the corresponding datasets. This strategy had the lowest accuracy among the calibrations based on aerial images, with RMSE ranging from 1.57 to 1.94 °C (rRMSE between 4.81% and 6.74%). In addition, temperature values calibrated by these models were underestimated (ME < 0), which was not observed in the other models based on aerial images.
The effect of the calibration models on temperature readings is illustrated by the profile graph in Figure 9, which compares orthomosaics calibrated with the factory (Figure 9a), proximal (Figure 9b), and general (Figure 9c) models: the factory and proximal models underestimate temperature, whereas the general model yields more accurate readings.
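
The aerial calibration strategies compared above differ mainly in which samples are pooled before fitting the DN-to-temperature line. The minimal sketch below shows that bookkeeping, assuming each sample carries a group label (flight altitude here; time of day would work the same way) and a precomputed calibration/validation mask. Labels, coefficients, and the noise-free synthetic data are hypothetical.

```python
import numpy as np


def fit_line(x, y):
    """Least-squares empirical line: temperature = gain * DN + offset."""
    gain, offset = np.polyfit(x, y, deg=1)
    return float(gain), float(offset)


def rmse(model, x, y):
    gain, offset = model
    return float(np.sqrt(np.mean((gain * x + offset - y) ** 2)))


def compare_strategies(dn, temp, group, is_cal):
    """Validation RMSE of a pooled ('general') model and of one model per group.

    is_cal is a boolean mask marking calibration samples; the rest are used
    for validation. Each group model is validated only on its own samples.
    """
    general = fit_line(dn[is_cal], temp[is_cal])
    results = {"general": rmse(general, dn[~is_cal], temp[~is_cal])}
    for g in np.unique(group):
        cal = is_cal & (group == g)
        val = ~is_cal & (group == g)
        results[str(g)] = rmse(fit_line(dn[cal], temp[cal]), dn[val], temp[val])
    return results


# Two hypothetical altitude groups following slightly different lines.
dn_one = np.linspace(7000, 8500, 60)
dn = np.concatenate([dn_one, dn_one])
group = np.array(["65m"] * 60 + ["100m"] * 60)
temp = np.where(group == "65m", 0.030 * dn - 200.0, 0.032 * dn - 215.0)
is_cal = np.arange(dn.size) % 3 != 0  # every third sample held out
print(compare_strategies(dn, temp, group, is_cal))
```

With noise-free data that follow two different lines, the per-group models fit perfectly and the pooled model does not; with real, noisy data the ranking can differ, and in Table 6 the general model had the lowest validation RMSE.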

Table 6.

Cross-validation results between the DN values of orthomosaics and the ground reference temperature based on different datasets. n = number of samples; R² = coefficient of determination; ME = mean error; MAE = mean absolute error; RMSE = root mean square error; rRMSE = relative RMSE; p-value < 0.001 (**); p-value < 0.01 (*).

Figure 9.

Zoomed view of the area based on orthomosaic from mission E, with temperature data calibrated with the factory (a), proximal (b), and general model (c). The profile graph illustrates temperature values along the transect from orthomosaics calibrated with the above-mentioned models. Dashed lines indicate where along the transect the reference targets (colored dots) are located.

4. Discussion

Considering that factory calibration of radiometric Lepton cameras is performed based on proximal analysis, using temperature readings of a blackbody radiator in a controlled environment [41], we first reproduced this scenario to assess the performance of the sensor under ideal conditions and compared the results with a commercial camera. Under a stable ambient temperature and target temperatures ranging from 9.1 to 52.4 °C, the Lepton camera delivered accuracy within the values reported by the manufacturer, with an MAE of 1.45 °C and an RMSE of 1.08 °C. These results were better than those reported by Osroosh et al. [53] for radiometric Lepton sensors, who obtained an MAE of 2.1 °C and an RMSE of 2.4 °C using a blackbody calibrator over a temperature range of 0 to 70 °C, at a room temperature of 23 °C and without replication. Their lower accuracy may be explained by the wider temperature range used in their tests, but also by the possible lack of a proper initial stabilization period, which uncooled thermal cameras require to stabilize temperature readings [11,28,54] and which is not mentioned in that article. Compared with the FLIR E5 camera, the accuracy of the Lepton camera readings was inferior, with residuals nearly double those of the E5 (MAE = 0.48 °C, RMSE = 0.58 °C). Since the E5 allows calibration adjustments that optimize temperature readings according to the user-supplied air temperature, relative humidity, distance, and target emissivity, more accurate results were expected. However, in terms of precision, the cameras yielded equivalent results, with R² > 0.99.

The camera performance in aerial mode was tested at different flight altitudes, replicated throughout the day to cover a wide range of environmental and flight conditions. We first assessed the effect of the camera-to-target distance using three flight altitudes (35, 65, and 100 m) and temperature data extracted from individual images without any processing, with the reference target positioned close to the center of the frame to avoid the vignetting effect. With factory-calibrated temperature data, the overall precision was similar among the flight altitudes tested (R² = 0.94–0.96). The accuracy, however, decreased with increasing flight altitude, with a larger variation between 35 and 65 m. Since TIR radiation is attenuated by the atmosphere [11,31,32], less radiation reaches the sensor as the distance between the camera and the target increases, resulting in lower temperature values and, ultimately, weaker accuracy. This effect can be clearly observed in the overall regression models (Figure 4), in which the slope of the regression line increases towards higher flight altitudes, showing that the factory calibration becomes gradually less accurate as the camera-to-target distance increases. To quantify the radiation attenuated by atmospheric scattering and absorption, radiative transfer models such as MODTRAN [55,56,57,58] are often employed to correct TIR temperature, deriving correction profiles according to the camera-to-target distance, relative humidity, and atmospheric temperature.
The correction profiles follow a logarithmic curve when plotted against the camera-to-target distance [59], which explains the larger difference in accuracy between the 35- and 65-m missions; they are also significantly affected by relative humidity at low altitudes [11], which accounts for the distinct patterns of the regression coefficients among missions conducted at different times of day. Although radiative transfer models are considered efficient, implementing this correction can be time-consuming for some users [30] and requires meteorological input data, which are often unavailable.
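
The attenuation mechanism discussed above can be illustrated with the standard radiometric balance used in thermography: the radiance reaching the sensor is the target's emitted radiance attenuated by the path transmittance τ, plus reflected and atmospheric contributions. The Python sketch below is a simplified broadband version that uses the Stefan–Boltzmann law in place of the band-integrated Planck radiance real cameras use over their longwave infrared band. τ is a hypothetical input that would normally come from a radiative transfer model such as MODTRAN, and the example values are not from this study.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant (W m^-2 K^-4)
K = 273.15              # offset between °C and K


def apparent_temperature(t_obj, tau, t_atm, emissivity=1.0, t_refl=None):
    """Temperature (°C) a camera would report for a target at t_obj (°C)
    seen through an atmospheric path of transmittance tau (broadband approximation)."""
    t_refl = t_atm if t_refl is None else t_refl
    w = (emissivity * tau * SIGMA * (t_obj + K) ** 4          # attenuated target emission
         + (1 - emissivity) * tau * SIGMA * (t_refl + K) ** 4  # attenuated reflected radiance
         + (1 - tau) * SIGMA * (t_atm + K) ** 4)               # path emission
    return (w / SIGMA) ** 0.25 - K


def object_temperature(t_app, tau, t_atm, emissivity=1.0, t_refl=None):
    """Invert apparent_temperature: recover the target temperature (°C)."""
    t_refl = t_atm if t_refl is None else t_refl
    w_obj = (SIGMA * (t_app + K) ** 4
             - (1 - emissivity) * tau * SIGMA * (t_refl + K) ** 4
             - (1 - tau) * SIGMA * (t_atm + K) ** 4) / (emissivity * tau)
    return (w_obj / SIGMA) ** 0.25 - K


# Hypothetical path transmittance of 0.9 and air temperature of 25 °C.
for t in (10.0, 50.0):
    t_app = apparent_temperature(t, tau=0.9, t_atm=25.0)
    print(t, round(t_app, 2), round(object_temperature(t_app, tau=0.9, t_atm=25.0), 2))
```

In this simplified model, targets colder than the air appear warmer and hotter targets appear cooler, compressing the apparent temperature range as τ decreases with distance; this is consistent with the slope and intercept trends in Figure 4, although a real correction also depends on humidity and the camera's spectral response.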

Furthermore, other sources of error must be taken into account, especially variations in internal camera temperature during flight, which change the sensitivity of the microbolometers and make temperature readings unstable over time [24,25,29]. A key strategy to mitigate these variations is allowing a stabilization (warm-up) period before image acquisition [11,28,54], combined with keeping non-uniformity correction (NUC) switched on to compensate for the internal temperature drift and provide a harmonized response signal across the FPA sensor [24]. The contrasting results of mission A, in which the 35-m flight delivered the worst performance among the early morning missions, were probably caused by insufficient stabilization time before image acquisition. Even though the same warm-up time was used for all missions, air temperature during the early morning flights was approximately 10 °C lower than during the other missions, increasing the temperature difference between the initial and stabilized conditions. This becomes evident when analyzing the subsequent early morning missions (D and G), in which the camera’s performance was significantly improved as a result of a steadier internal temperature. To avoid this issue, we recommend allowing extra time for camera stabilization before flight campaigns, especially when the difference between air and internal camera temperature is large. Another effective measure is adding extra flight lines at the beginning of the mission to allow the camera temperature to stabilize under the air temperature and wind conditions encountered during flight [28].
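
The extra warm-up time recommended above could be judged from the camera's internal (FPA) temperature rather than from a fixed delay. The minimal sketch below assumes that a log of timestamps and internal temperatures is available before takeoff (how it is recorded depends on the acquisition setup) and reports the first moment at which the drift over a trailing window falls below a threshold. The 60-s window, the 0.05 °C/min threshold, and the synthetic warm-up curve are hypothetical settings, not values tested in this study.

```python
import numpy as np


def stabilization_time(t_s, fpa_c, window_s=60.0, max_rate_c_per_min=0.05):
    """First time (s) at which the internal-temperature drift over the
    preceding window falls below a threshold; None if it never does."""
    t_s = np.asarray(t_s, dtype=float)
    fpa_c = np.asarray(fpa_c, dtype=float)
    for t in t_s:
        if t - t_s[0] < window_s:
            continue  # not enough history yet
        in_window = (t_s >= t - window_s) & (t_s <= t)
        # Linear drift rate over the window, in °C per second.
        slope_per_s = np.polyfit(t_s[in_window], fpa_c[in_window], deg=1)[0]
        if abs(slope_per_s) * 60.0 <= max_rate_c_per_min:
            return float(t)
    return None


# Synthetic warm-up curve: internal temperature approaching 35 °C with a
# hypothetical 2-minute time constant, logged every 5 s for 20 minutes.
t = np.arange(0, 1205, 5.0)
fpa = 35.0 - 10.0 * np.exp(-t / 120.0)
print(stabilization_time(t, fpa))
```

A larger initial gap between air and internal camera temperature, as in the early morning missions, lengthens the time returned by such a check.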

Regarding orthomosaic generation, the method developed to estimate camera positions was essential for overcoming the alignment issues encountered with raw images, which are frequently reported in studies using the SfM algorithm to mosaic thermal images [22,35,36,52]. In our study, the initial alignment of raw images was even more difficult because the Lepton camera did not record any coordinates for the captured images, and geotagging them in post-processing was not feasible. Our co-registration process, based on contrast-enhanced thermal images, differs from other methods in that it does not require additional images from a second camera (normally RGB) triggered simultaneously with the TIR sensor [36,37,38]. As a result, the number of aligned images more than doubled, and we were able to align the raw images and generate orthomosaics for eight of the nine missions. The only mission for which we could not produce an orthomosaic was mission A, which experienced the aforementioned issues of insufficient warm-up time and dramatic changes in target temperature during the flight, which we believe affected the image alignment process. For this reason, the 35-m missions were not used in the blending mode and calibration analyses, retaining only flight altitudes with complete replications to provide more reliable comparisons.

The blending modes available in Agisoft PhotoScan that were tested in our study produced orthomosaics with significant differences in precision and accuracy, as well as contrasting visual aspects. When orthomosaics are generated with blending disabled, each pixel is extracted from the single image whose view is closest to nadir [39]. When blending is enabled, temperature data from different images are merged: the average option combines the temperature values of all images covering the target in a simple average [13], whereas the mosaic option applies a weighted average in which pixels closer to the nadir viewing angle receive more weight [40]. The disabled mode provided the best overall results, with an R² of 0.96 and an RMSE of 3.08 °C (rRMSE = 9.74%), reflecting the benefit of using only near-nadir images to reduce the vignetting effect and of avoiding the additional uncertainty that blending may introduce, as reported in other studies [22,60]. However, the visual appearance of orthomosaics obtained with blending disabled (Figure 7c) can be an issue for applications focused on the spatial distribution of TIR data, since the seamlines between the individual images become apparent as a result of abrupt changes in temperature values from one image to another due to different viewing geometries [13]. Results from the average blending mode were equivalent to those obtained with the disabled option, with the same overall precision (R² = 0.96) and nearly the same accuracy (RMSE = 3.14 °C, rRMSE = 9.93%). Since the average mode combines all overlapping images, covering a wide range of camera positions and viewing angles, lower accuracy would be expected due to a more pronounced vignetting effect.
The residuals, however, were equivalent to those obtained with blending disabled, indicating that vignetting errors were not transferred to the orthomosaic generated with the average blending mode. Hoffmann et al. [22] obtained similar results: the orthomosaic produced with the average setting was equivalent to the same orthomosaic generated after excluding all image edges to eliminate the vignetting effect. The final method tested was the mosaic option, the default blending mode in Agisoft, which is commonly used to produce thermal orthomosaics [28,61,62]. Although it combines features of the average and disabled modes, its performance was significantly lower, with an R² of 0.92 and an RMSE of 3.93 °C (rRMSE = 12.43%), indicating that it might not be the most appropriate blending mode for thermal orthomosaics. Since this method assigns higher weights to near-nadir images when averaging pixel values, its accuracy would be expected to approach that obtained with blending disabled. However, it is unclear whether other factors are taken into account and exactly how the software weights each image when averaging each pixel. Other studies that used the mosaic blending mode to produce thermal orthomosaics achieved results comparable to those observed in our study, with R² ranging from 0.70 to 0.96 and RMSE between 3.55 and 5.45 °C [28,61,62].
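
The three blending behaviours described above can be contrasted for a single ground pixel observed by several overlapping images. In the minimal sketch below, "disabled" and "average" follow the descriptions given in the text, whereas "weighted" uses cos(view angle)^power weights purely as a hypothetical stand-in for a nadir-weighted scheme, since the exact weighting used by the mosaic mode is not documented. The observations and angles are illustrative.

```python
import numpy as np


def blend_pixel(values, view_angles_deg, mode="average", power=4.0):
    """Combine the temperatures that overlapping images assign to one pixel.

    'disabled': value from the image viewed closest to nadir.
    'average' : simple mean of all observations.
    'weighted': mean weighted by cos(view angle)**power, a hypothetical
                stand-in for a nadir-weighted scheme such as the mosaic mode.
    """
    values = np.asarray(values, dtype=float)
    angles = np.radians(np.abs(np.asarray(view_angles_deg, dtype=float)))
    if mode == "disabled":
        return float(values[np.argmin(angles)])
    if mode == "average":
        return float(values.mean())
    if mode == "weighted":
        w = np.cos(angles) ** power
        return float(np.sum(w * values) / np.sum(w))
    raise ValueError(f"unknown mode: {mode}")


# One ground pixel seen by three images at increasing off-nadir angles.
obs, ang = [30.0, 31.0, 34.0], [2.0, 10.0, 25.0]
for mode in ("disabled", "average", "weighted"):
    print(mode, round(blend_pixel(obs, ang, mode), 2))
```

If off-nadir observations are biased (here, warmer), the simple average absorbs that bias most, the weighted mean less, and the nadir-only value not at all; the actual behaviour in Agisoft depends on its internal implementation.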

The empirical line calibration significantly improved the camera performance under proximal and aerial conditions. With the proximal calibration model, the accuracy of the Lepton camera was significantly improved in relation to the factory calibration, with the MAE and RMSE reduced by 0.76 and 0.28 °C, respectively. The resulting residuals are comparable to those obtained with the FLIR E5 camera, which applies optimizations for proximal readings, and consistent with the accuracy reported by Osroosh et al. [53] after calibrating FLIR Lepton sensors in laboratory conditions. However, when employed in aerial conditions, the proximal calibration model yielded results equivalent to the factory calibration, with the lowest accuracy among the models tested (RMSE = 3.40 °C), indicating that proximal approaches may not be suitable for aerial imaging. To be valid for aerial use, a proximal calibration should account for variations in internal camera temperature, which are mainly influenced by ambient temperature [27,63], and the images should be corrected for the atmospheric attenuation of thermal radiance.

On the other hand, calibration models based on ground reference temperature consistently reduced the residuals from aerial missions, resulting in RMSE values between 1.31 and 1.94 °C. Because these models are fitted by combining TIR data with high-accuracy ground temperature, they can account for the main sources of error in aerial imagery, correcting the atmospheric attenuation of radiation and reducing errors caused by variations in camera temperature among flights [28]. The general model, which combines datasets from the 65- and 100-m flight altitudes, delivered the lowest residuals in the validation step (MAE = 0.99 °C, RMSE = 1.31 °C), a reduction of 1.19 and 1.83 °C in MAE and RMSE, respectively. Other calibration methods have also been proposed: the neural network calibration of Ribeiro-Gomes et al. [61], using sensor temperature and DN values as input variables, improved the accuracy of TIR measurements by 2.18 °C, with a final RMSE of 1.37 °C. Moreover, Mesas-Carrascosa et al. [24] proposed a method to correct TIR temperature by removing the drift effect of microbolometer sensors based on the features used by SfM in the mosaicking process, achieving a final accuracy within 1 °C. Using snow as the ground reference, Pestana et al. [64] corrected temperature derived from TIR imagery, improving accuracy by 1 °C. A similar approach was used by Gómez-Candón et al. [26], who calibrated thermal imagery to a final accuracy of around 1 °C using ground reference targets distributed across the flight path and deriving separate calibration models for the images closest to each overpass. In our study, the specific models using individual datasets from the 65- and 100-m missions, as well as those dividing missions by time of day, yielded slightly higher residuals, with RMSE values ranging from 1.32 to 1.94 °C.
Although the general model performed better in our tests, suggesting that a more robust calibration may deliver better accuracy, using individual models generated from ground reference data acquired specifically for the flight campaign to be corrected helps ensure that any specific conditions encountered are covered by the calibration, maintaining a reliable level of accuracy.

5. Conclusions

The low-cost Lepton camera tested in our study was able to deliver results within the specifications reported by the manufacturer, with accuracy values comparable to more expensive models in proximal and aerial conditions.

The aerial analysis focused on the use of orthomosaics to represent TIR data from UAV missions, for which the co-registering process proposed in our study was essential to overcome issues related to the use of non-geotagged images during the alignment step required to generate orthomosaics. Moreover, the blending modes tested significantly affected the overall accuracy of temperature derived from the orthomosaics, with the best results obtained with the average and disabled modes.

Although the accuracy decreased towards higher flight altitudes, indicating that the factory calibration does not account for atmospheric attenuation of TIR radiation, the precision remained stable among the flight altitudes tested, suggesting that alternative calibration methods can be used to improve the final accuracy. On this basis, calibration models relating camera DN to high-accuracy reference temperature were developed, reducing the error in TIR temperature readings by up to 1.83 °C, with a final accuracy better than 2 °C.

Author Contributions

M.G.A., L.M.G. and M.M. conceived the idea, M.G.A., L.M.G. and M.M. designed and performed the experiments, M.G.A. analyzed the data. All the authors contributed to writing and editing the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

We thank the Coordination for the Improvement of Higher Education Personnel (CAPES) for providing the scholarship to M.G.A. (grant: 8882.378424/2019-01).

Acknowledgments

The authors would like to thank: Rodrigo Gonçalves Trevisan and Tarik Marques Tanure for supporting the Lepton camera construction; André Zabini for lending us the FLIR E5 camera; Áureo Santana de Oliveira and Juarez Renó do Amaral for all the technical assistance with electronic instrumentation; The Precision Agriculture Laboratory (LAP-ESALQ) for providing the UAV platform used during flight campaigns; and the SmartAgri company for access to a 3D printer used for camera construction.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Prakash, A. Thermal Remote Sensing: Concepts, Issues and Applications. Int. Arch. Photogramm. Remote Sens. 2000, 33, 239–243. Available online: https://www.isprs.org/proceedings/XXXIII/congress/part1/239_XXXIII-part1.pdf (accessed on 21 July 2020).

- Khanal, S.; Fulton, J.; Shearer, S. An overview of current and potential applications of thermal remote sensing in precision agriculture. Comput. Electron. Agric. 2017, 139, 22–32.

- Baker, E.A.; Lautz, L.K.; McKenzie, J.M.; Aubry-Wake, C. Improving the accuracy of time-lapse thermal infrared imaging for hydrologic applications. J. Hydrol. 2019, 571, 60–70.

- Eschbach, D.; Piasny, G.; Schmitt, L.; Pfister, L.; Grussenmeyer, P.; Koehl, M.; Skupinski, G.; Serradj, A. Thermal-infrared remote sensing of surface water–groundwater exchanges in a restored anastomosing channel (Upper Rhine River, France). Hydrol. Process. 2017, 31, 1113–1124.

- Mundy, E.; Gleeson, T.; Roberts, M.; Baraer, M.; McKenzie, J.M. Thermal imagery of groundwater seeps: Possibilities and limitations. Groundwater 2017, 55, 160–170.

- Flasse, S.P.; Ceccato, P. A contextual algorithm for AVHRR fire detection. Int. J. Remote Sens. 1996, 17, 419–424.

- Quintano, C.; Fernandez-Manso, A.; Roberts, D.A. Burn severity mapping from Landsat MESMA fraction images and Land Surface Temperature. Remote Sens. Environ. 2017, 190, 83–95.

- Kustas, W.; Anderson, M. Advances in thermal infrared remote sensing for land surface modeling. Agric. For. Meteorol. 2009, 149, 2071–2081.

- Aubrecht, D.M.; Helliker, B.R.; Goulden, M.L.; Roberts, D.A.; Still, C.J.; Richardson, A.D. Continuous, long-term, high-frequency thermal imaging of vegetation: Uncertainties and recommended best practices. Agric. For. Meteorol. 2016, 228, 315–326.

- Klemas, V.V. Coastal and environmental remote sensing from unmanned aerial vehicles: An overview. J. Coast. Res. 2015, 31, 1260–1267.

- Berni, J.A.J.; Zarco-Tejada, P.J.; Suarez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

- Perich, G.; Hund, A.; Anderegg, J.; Roth, L.; Boer, M.P.; Walter, A.; Liebisch, F.; Aasen, H. Assessment of multi-image unmanned aerial vehicle based high-throughput field phenotyping of canopy temperature. Front. Plant Sci. 2020, 11, 150.

- Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S.; et al. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58.

- Natarajan, S.; Basnayake, J.; Wei, X.; Lakshmanan, P. High-throughput phenotyping of indirect traits for early-stage selection in sugarcane breeding. Remote Sens. 2019, 11, 2952.

- Gonzalez-Dugo, V.; Zarco-Tejada, P.; Nicolás, E.; Nortes, P.A.; Alarcón, J.J.; Intrigliolo, D.S.; Fereres, E. Using high resolution UAV thermal imagery to assess the variability in the water status of five fruit tree species within a commercial orchard. Precis. Agric. 2013, 14, 660–678.

- Martínez, J.; Egea, G.; Agüera, J.; Pérez-Ruiz, M. A cost-effective canopy temperature measurement system for precision agriculture: A case study on sugar beet. Precis. Agric. 2017, 18, 95–110.

- Zhang, L.; Niu, Y.; Zhang, H.; Han, W.; Li, G.; Tang, J.; Peng, X. Maize canopy temperature extracted from UAV thermal and RGB imagery and its application in water stress monitoring. Front. Plant Sci. 2019, 10, 1–18.

- Crusiol, L.G.T.; Nanni, M.R.; Furlanetto, R.H.; Sibaldelli, R.N.R.; Cezar, E.; Mertz-Henning, L.M.; Nepomuceno, A.L.; Neumaier, N.; Farias, J.R.B. UAV-based thermal imaging in the assessment of water status of soybean plants. Int. J. Remote Sens. 2020, 41, 3243–3265.

- Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.B.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2017, 183, 49–59.

- Matese, A.; Baraldi, R.; Berton, A.; Cesaraccio, C.; Di Gennaro, S.F.; Duce, P.; Facini, O.; Mameli, M.G.; Piga, A.; Zaldei, A. Estimation of Water Stress in grapevines using proximal and remote sensing methods. Remote Sens. 2018, 10, 114.

- Hoffmann, H.; Nieto, H.; Jensen, R.; Guzinski, R.; Zarco-Tejada, P.; Friborg, T. Estimating evaporation with thermal UAV data and two-source energy balance models. Hydrol. Earth Syst. Sci. 2016, 20, 697–713.

- Calderón, R.; Navas-Cortés, J.A.; Lucena, C.; Zarco-Tejada, P.J. High-resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 2013, 139, 231–245.

- Mesas-Carrascosa, F.J.; Pérez-Porras, F.; de Larriva, J.E.M.; Frau, C.M.; Agüera-Vega, F.; Carvajal-Ramírez, F.; Martínez-Carricondo, P.; García-Ferrer, A. Drift correction of lightweight microbolometer thermal sensors on-board unmanned aerial vehicles. Remote Sens. 2018, 10, 615. [Google Scholar] [CrossRef]

- Olbrycht, R.; Więcek, B.; De Mey, G. Thermal drift compensation method for microbolometer thermal cameras. Appl. Opt. 2012, 51, 1788–1794. [Google Scholar] [CrossRef]

- Gómez-Candón, D.; Virlet, N.; Labbé, S.; Jolivot, A.; Regnard, J.L. Field phenotyping of water stress at tree scale by UAV-sensed imagery: New insights for thermal acquisition and calibration. Precis. Agric. 2016, 17, 786–800. [Google Scholar] [CrossRef]

- Nugent, P.W.; Shaw, J.A.; Pust, N.J. Correcting for focal-plane-array temperature dependence in microbolometer infrared cameras lacking thermal stabilization. Opt. Eng. 2013, 52, 061304. [Google Scholar] [CrossRef]

- Kelly, J.; Kljun, N.; Olsson, P.-O.; Mihai, L.; Liljeblad, B.; Weslien, P.; Klemedtsson, L.; Eklundh, L. Challenges and best practices for deriving temperature data from an uncalibrated UAV thermal infrared camera. Remote Sens. 2019, 11, 567. [Google Scholar] [CrossRef]

- Budzier, H.; Gerlach, G. Calibration of uncooled thermal infrared cameras. J. Sens. Sens. Syst. 2015, 4, 187–197. [Google Scholar] [CrossRef]

- Torres-Rua, A. Vicarious calibration of sUAS microbolometer temperature imagery for estimation of radiometric land surface temperature. Sensors 2017, 17, 1499. [Google Scholar] [CrossRef]

- Hammerle, A.; Meier, F.; Heinl, M.; Egger, A.; Leitinger, G. Implications of atmospheric conditions for analysis of surface temperature variability derived from landscape-scale thermography. Int. J. Biometeorol. 2017, 61, 575–588. [Google Scholar] [CrossRef]

- Meier, F.; Scherer, D.; Richters, J.; Christen, A. Atmospheric correction of thermal-infrared imagery of the 3-D urban environment acquired in oblique viewing geometry. Atmos. Meas. Tech. 2011, 4, 909–922. [Google Scholar] [CrossRef]

- Kay, J.E.; Kampf, S.K.; Handcock, R.N.; Cherkauer, K.A.; Gillespie, A.R.; Burges, S.J. Accuracy of lake and stream temperatures estimated from thermal infrared images. JAWRA J. Am. Water Resour. Assoc. 2005, 41, 1161–1175. [Google Scholar] [CrossRef]

- Barsi, J.A.; Barker, J.L.; Schott, J.R. An atmospheric correction parameter calculator for a single thermal band earth-sensing instrument. In Proceedings of the IGARSS 2003. 2003 IEEE International Geoscience and Remote Sensing Symposium. Proceedings (IEEE Cat. No.03CH37477), Toulouse, France, 21–25 July 2003; pp. 3014–3016. Available online: https://atmcorr.gsfc.nasa.gov/Barsi_IGARSS03.PDF (accessed on 25 July 2020).

- Dillen, M.; Vanhellemont, M.; Verdonckt, P.; Maes, W.H.; Steppe, K.; Verheyen, K. Productivity, stand dynamics and the selection effect in a mixed willow clone short rotation coppice plantation. Biomass Bioenergy 2016, 87, 46–54. [Google Scholar] [CrossRef]

- Maes, W.H.; Huete, A.R.; Steppe, K. Optimizing the processing of UAV-based thermal imagery. Remote Sens. 2017, 9, 476. [Google Scholar] [CrossRef]

- Raza, S.-E.-A.; Smith, H.K.; Clarkson, G.J.J.; Taylor, G.; Thompson, A.J.; Clarkson, J.; Rajpoot, N.M. Automatic detection of regions in spinach canopies responding to soil moisture deficit using combined visible and thermal imagery. PLoS ONE 2014, 9, e97612. [Google Scholar] [CrossRef]

- Yahyanejad, S.; Rinner, B. A fast and mobile system for registration of low-altitude visual and thermal aerial images using multiple small-scale UAVs. ISPRS J. Photogramm. Remote Sens. 2015, 104, 189–202. [Google Scholar] [CrossRef]

- Aasen, H.; Bolten, A. Multi-temporal high-resolution imaging spectroscopy with hyperspectral 2D imagers—From theory to application. Remote Sens. Environ. 2018, 205, 374–389. [Google Scholar] [CrossRef]

- Aragon, B.; Johansen, K.; Parkes, S.; Malbeteau, Y.; Al-Mashharawi, S.; Al-Amoudi, T.; Andrade, C.F.; Turner, D.; Lucieer, A.; McCabe, M.F. A calibration procedure for field and UAV-based uncooled thermal infrared instruments. Sensors 2020, 20, 3316. [Google Scholar] [CrossRef]

- FLIR LEPTON: Engineering Datasheet. Available online: https://www.flir.com/globalassets/imported-assets/document/flir-lepton-engineering-datasheet.pdf (accessed on 12 July 2020).

- FLIR EX SERIES: Datasheet. Available online: https://flir.netx.net/file/asset/12981/original/attachment (accessed on 6 October 2020).

- MLX90614 Family: Datasheet Single and Dual Zone. Available online: https://www.melexis.com/-/media/files/documents/datasheets/mlx90614-datasheet-melexis.pdf (accessed on 6 October 2020).

- Testo 926: Datasheet. Available online: https://static-int.testo.com/media/ef/d7/e9ed0e5e694b/testo-926-Data-sheet.pdf (accessed on 6 October 2020).

- Buettner, K.J.; Kern, C.D. The determination of infrared emissivities of terrestrial surfaces. J. Geophys. Res. 1965, 70, 1329–1337. [Google Scholar] [CrossRef]

- Griggs, M. Emissivities of natural surfaces in the 8- to 14-micron spectral region. J. Geophys. Res. 1968, 73, 7545–7551. [Google Scholar] [CrossRef]

- Messina, G.; Modica, G. Applications of UAV thermal imagery in precision agriculture: State of the art and future research outlook. Remote Sens. 2020, 12, 1491. [Google Scholar] [CrossRef]

- Smigaj, M.; Gaulton, R.; Barr, S.L.; Suarez, J.C. Investigating the performance of a low-cost thermal imager for forestry applications. In Proceedings of the Image and Signal Processing for Remote Sensing XXII, Edinburgh, UK, 26–28 September 2016. [Google Scholar] [CrossRef]

- Ritter, M. Further Development of an Open-Source Thermal Imaging System in Terms of Hardware, Software and Performance Optimizations. Available online: https://github.com/maxritter/DIY-Thermocam (accessed on 12 July 2020).

- R Core Team. R: A Language and Environment for Statistical Computing. Available online: https://www.r-project.org/ (accessed on 17 July 2020).

- Verhoeven, G. Taking computer vision aloft—Archaeological three-dimensional reconstructions from aerial photographs with photoscan. Archaeol. Prospect. 2011, 18, 67–73. [Google Scholar] [CrossRef]

- Pech, K. Generation of Multitemporal Thermal Orthophotos from UAV Data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 1, 305–310. Available online: https://core.ac.uk/download/pdf/206789497.pdf (accessed on 7 July 2020). [CrossRef]

- Osroosh, Y.; Khot, L.R.; Peters, R.T. Economical thermal-RGB imaging system for monitoring agricultural crops. Comput. Electron. Agric. 2018, 147, 34–43. [Google Scholar] [CrossRef]

- Smigaj, M.; Gaulton, R.; Suarez, J.C.; Barr, S.L. Use of miniature thermal cameras for detection of physiological stress in conifers. Remote Sens. 2017, 9, 957. [Google Scholar] [CrossRef]

- Berk, A.; Bernstein, L.S.; Robertson, D.C. MODTRAN: A Moderate Resolution Model for LOWTRAN7; GL-TR-89-0122; Air Force Geophysics Laboratory: Hanscom AFB, MA, USA, 1989; Available online: https://apps.dtic.mil/sti/pdfs/ADA185384.pdf (accessed on 23 July 2020).

- Berk, A.; Bernstein, L.S.; Anderson, G.P.; Acharya, P.K.; Robertson, D.C.; Chetwynd, J.H.; Adler-Golden, S.M. MODTRAN cloud and multiple scattering upgrades with application to AVIRIS. Remote Sens. Environ. 1998, 65, 367–375. [Google Scholar] [CrossRef]

- Berk, A.; Anderson, G.P.; Acharya, P.K.; Bernstein, L.S.; Muratov, L.; Lee, J.; Fox, M.; Adler-Golden, S.M.; Chetwynd, J.H.; Hoke, M.L.; et al. MODTRAN 5: A reformulated atmospheric band model with auxiliary species and practical multiple scattering options: Update. Algorithms Technol. Multispectr. Hyperspectr. Ultraspectr. Imag. XI 2005, 5806, 662. [Google Scholar] [CrossRef]

- Berk, A.; Conforti, P.; Kennett, R.; Perkins, T.; Hawes, F.; van den Bosch, J. MODTRAN6: A major upgrade of the MODTRAN radiative transfer code. In Proceedings of the 2014 6th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Lausanne, Switzerland, 24–27 June 2014; pp. 113–119. [Google Scholar] [CrossRef]

- Sugiura, R.; Noguchi, N.; Ishii, K. Correction of low-altitude thermal images applied to estimating soil water status. Biosyst. Eng. 2007, 96, 301–313. [Google Scholar] [CrossRef]

- Deery, D.M.; Rebetzke, G.J.; Jimenez-Berni, J.A.; James, R.A.; Condon, A.G.; Bovill, W.D.; Hutchinson, P.; Scarrow, J.; Davy, R.; Furbank, R.T. Methodology for high-throughput field phenotyping of canopy temperature using airborne thermography. Front. Plant Sci. 2016, 7, 1808. [Google Scholar] [CrossRef]

- Ribeiro-Gomes, K.; Hernández-López, D.; Ortega, J.F.; Ballesteros, R.; Poblete, T.; Moreno, M.A. Uncooled thermal camera calibration and optimization of the photogrammetry process for UAV applications in agriculture. Sensors 2017, 17, 2173. [Google Scholar] [CrossRef]

- Song, B.; Park, K. Verification of accuracy of unmanned aerial vehicle (UAV) land surface temperature images using in-situ data. Remote Sens. 2020, 12, 288. [Google Scholar] [CrossRef]

- Sun, L.; Chang, B.; Zhang, J.; Qiu, Y.; Qian, Y.; Tian, S. Analysis and measurement of thermal-electrical performance of microbolometer detector. In Proceedings of the SPIE Optoelectronic Materials and Devices II, Wuhan, China, 19 November 2007. [Google Scholar] [CrossRef]

- Pestana, S.; Chickadel, C.C.; Harpold, A.; Kostadinov, T.S.; Pai, H.; Tyler, S.; Webster, C.; Lundquist, J.D. Bias correction of airborne thermal infrared observations over forests using melting snow. Water Resour. Res. 2019, 55, 11331–11343. [Google Scholar] [CrossRef]

Publisher's Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).