Abstract

Continuous observation of climate indicators, such as trends in lake freezing, is important to understand the dynamics of the local and global climate system. Consequently, lake ice has been included among the Essential Climate Variables (ECVs) of the Global Climate Observing System (GCOS), and there is a need to set up operational monitoring capabilities. Multi-temporal satellite images and publicly available webcam streams are among the viable data sources capable of monitoring lake ice. In this work we investigate machine learning-based image analysis as a tool to determine the spatio-temporal extent of ice on Swiss Alpine lakes as well as the ice-on and ice-off dates, from both multispectral optical satellite images (VIIRS and MODIS) and RGB webcam images. We model lake ice monitoring as a pixel-wise semantic segmentation problem, i.e., each pixel on the lake surface is classified to obtain a spatially explicit map of ice cover. We show experimentally that the proposed system produces consistently good results when tested on data from multiple winters and lakes. Our satellite-based method obtains mean Intersection-over-Union (mIoU) scores > 93% for both sensors. It also generalises well across lakes and winters, with mIoU scores > 78% and > 80% respectively. On average, our webcam approach achieves mIoU values of ≈87% and generalisation scores of ≈71% and ≈69% across different cameras and winters respectively. Additionally, we generate and make available a new benchmark dataset of webcam images (Photi-LakeIce) which includes data from two winters and three cameras.

1. Introduction

Climate change is one of the main challenges for humanity today and there is a great necessity to observe and understand the climate dynamics and quantify its past, present, and future state [1,2]. Lake observables such as ice duration, freeze-up, and break-up dynamics etc. play an important role in understanding climate change and provide a good opportunity for long-term monitoring. Lake ice (as part of lakes) is therefore considered an Essential Climate Variable (ECV) [3] of the Global Climate Observing System (GCOS). In addition, the European Space Agency (ESA) encourages climate research and long-term trend analysis through the Climate Change Initiative (CCI [4], CCI+ [5]). This consortium recently addressed the following variables: Lake water level, lake water extent, lake surface water temperature, lake ice, and lake water reflectance. Recent research also emphasises the socio-economic and biological role of lake ice [6]. Moreover, according to an analysis of data from 513 lakes, winter ice in lakes is depleting at a record pace due to global warming [2]. That study also underlined the importance of lake ice monitoring, observing that a comprehensive, large-scale assessment of lake ice loss is still missing. The vanishing ice affects winter tourism, cold-water ecosystems, hydroelectric power generation, water transportation, freshwater fishing, etc., which further emphasises the need to monitor lake ice in an efficient and repeatable manner [7]. Interestingly, an investigation of the long-term ice phenological patterns in Northern Hemisphere lakes [8] observed trends towards later freeze-up (average shift of 5.8 days per 100 years) and earlier break-up (average shift of 6.5 days per 100 years), which was also confirmed by another overview [9]. However, a previous 50-year (1951–2000) study [10] based on Canadian lakes confirmed the earlier break-up trend but reported less of a clear trend for freeze-up dates.

The idea of monitoring lake ice for climate studies is not new in the cryosphere research community. A main requirement for monitoring lake ice is high temporal resolution (daily) with an accuracy of ±2 days for phenological events such as ice-on/off dates (according to GCOS). Among the data sources that fulfil this requirement are optical satellite images such as MODIS and VIIRS. In the following, we delve deeper into the literature on using optical satellite images and webcams for monitoring lake ice.

1.1. Optical Satellite Images for Lake Ice Monitoring

At present, satellite images are the only means for systematic, dense, large-scale monitoring applications. Satellite observations with good temporal as well as spatial resolution are ideal for the remote sensing of lake ice phenology. Optical satellite sensors such as MODIS and VIIRS offer very good temporal resolution and satisfactory spatial resolution, making them a good choice. On the other hand, although sensors such as Landsat-8 and Sentinel-2 have a good spatial resolution, their insufficient temporal resolution rules them out as main sources for monitoring lake ice. Some literature exists which uses optical satellite data for lake ice analysis. Inter-annual changes in the temporal extent and intensity of lake ice and snow cover in the Alaska region have been studied using MODIS imagery [11]. In addition, studies by Brown and Duguay [12] and Kropáček et al. [13] demonstrated that MODIS data is effective for surveying lake ice. The former approach used MODIS and Interactive Multi-sensor Snow and Ice Mapping System (IMS) snow products to monitor daily ice cover changes. The latter derived ice phenology of 59 lakes (area larger than 100 km²) on the Tibetan Plateau from MODIS 8-day composite data for the period 2001–2010. The estimated area of open water was compared against the area extracted from high-resolution satellite images (Landsat, Envisat/ASAR, TerraSAR-X and SPOT) and achieved a Root Mean Square (RMS) error of 9.6 days. Recently, Qiu et al. [14] derived the daily lake ice extent from MODIS data by employing the snowmap algorithm [15]. The results of this approach were consistent with the reference observations from passive microwave data (AMSR-E and AMSR2, average correlation coefficient of 0.91). Additionally, the MODIS daily snow product was used to derive the lake ice phenology of more than 20 lakes in China (Xinjiang territory) using a threshold-based method [16]. On average, the estimated freeze-up start and break-up end dates differed by 7.33 and 4.73 days respectively (mean absolute error) from the reference dates derived from passive microwave data (AMSR-E and AMSR2). Very recently, another threshold-based technique [17] using MODIS data was proposed, which achieved a mean absolute error of 5.54 days and 7.31 days for break-up and freeze-up dates respectively.

Lake Ice Cover (LIC), a sub-product of the newly released CCI Lakes [18] product, provides the spatial cover (spatial resolution of 250 m) of lake ice and the associated uncertainty at a daily temporal resolution. At present, LIC is only available for 250 lakes spread across the globe. However, none of the target lakes in Switzerland are included in this list. Hence, a direct comparison with our results is not feasible. Lake Ice Extent (LIE) [19] is one of the Copernicus Global Land Service near-real-time products derived by thresholding the Top-of-Atmosphere reflectances from Level 1B calibrated radiances of Terra MODIS (Collection 6) for snow-covered ice, snow-free ice, and water. The 250 m resolution product has been validated against ice break-up observations over 34 Finnish lakes spanning four years (2010–2013). However, the LIE product has high uncertainty during the lake freezing period due to low light conditions in the higher latitudes as well as uncertainty in cloud cover detection. In addition, the LIE differs by an average of 3.3 days from the in-situ ground truth, not quite meeting the GCOS specification. The MODIS snow product [15,20] maps snow and ice cover (including ice on large, inland lakes) at a relatively coarse spatial resolution of 500 m and daily temporal resolution using Earth Observation System (EOS) MODIS data. A comparison of specifications of our machine learning-based product with the operational products is shown in Table 1.

Table 1.

Comparison of specifications of our machine learning-based product with the operational products such as CCI Lake Ice Cover (CCI LIC), Lake Ice Extent (LIE), MODIS Snow Product (MODIS SP), and VIIRS Snow Product (VIIRS SP).

Though in many aspects VIIRS and MODIS imagery are similar [22,23], the former has not been leveraged much to study lake ice. Previously, Sütterlin et al. [24] proposed to use VIIRS data to retrieve lake ice phenology in Swiss lakes using a threshold approach. Another algorithm [25] used VIIRS to detect inland lake ice in Canada. Using VIIRS as well as MODIS, Trishchenko and Ungureanu [26] constructed a long time series over Canada and neighbouring regions. They also developed ice probability maps using both sensors. Various approaches have been proposed using the Landsat-8 and/or Sentinel-2 optical satellite images [27,28,29,30]. However, we do not go into the details since our work is focused on sensors with at least daily coverage.

1.2. Webcams for Lake Ice Monitoring

To some extent, satellite remote sensing can be substituted by close-range webcams [31], especially in cloudy scenarios. As far as we know, Xiao et al. [33] were the first to use terrestrial webcam images for lake ice monitoring, employing the FC-DenseNet (Tiramisu) model [32]. This was followed by a joint approach [34] which, in addition to webcams, used in-situ temperature and pressure observations and a satellite-based technique. We note that these two works presented results only on cameras that capture a single lake (St. Moritz) and the generalisation performance was poor, especially for cross-camera predictions. In this work we achieve better prediction performance using webcams compared to such approaches. In addition, we report results on data from two lakes (St. Moritz, Sihl) and two winters (2016–2017, 2017–2018).

1.3. Machine (Deep) Learning Approaches for Lake Ice Monitoring

The literature on lake ice monitoring is vast. However, most works make use of elementary threshold-based or index-based methodologies. While machine learning approaches have been successfully leveraged for various remote sensing and environment monitoring applications, their use for lake ice detection remains under-explored. We intend to fill this research gap in our paper. To our knowledge, the previous version of our satellite-based method [35] and Xiao et al. [33] applied machine learning techniques for the first time to detect ice in lakes. Very recently, we also proposed a preliminary version of our webcam-based methodology [36]. In this paper, we extend our works [34,35,36] and perform thorough experimentation, targeting an operational system for lake ice monitoring. For completeness, we mention that, very recently, we have also explored the possibility of detecting lake ice using Sentinel-1 SAR data with deep learning [37].

1.4. Motivation and Contributions

Existing observations and data on lake ice from local authorities, fishermen, ice-skaters, police, internet media, publications, etc. are not well documented. Additionally, there has been a significant decrease in the number of such field observations in the past two decades [9,38]. At the same time, the potential of different remote sensing sensors has been demonstrated to measure the occurrence of lake ice. In this context we note that, for our target region of Switzerland, the database at the National Snow and Ice Data Centre (NSIDC) currently includes only the ice-on/off dates of a single lake (St. Moritz), and only until 2012. Given the need for automated, continuous monitoring of lake ice, we propose to explore the potential of artificial intelligence to support an operational system. In this paper, we put forward a system which monitors selected Alpine lakes in Switzerland and detects the spatio-temporal extent of ice and in particular the ice-on/off dates. Though satellite data is the best operational input for global coverage, close-range webcam data can be very valuable in regions with large enough camera networks (including Switzerland). Firstly, we use low spatial resolution (250–1000 m) but high temporal resolution (1 day) multispectral satellite images from two optical satellite sensors (Suomi NPP VIIRS [39], Terra MODIS [40]). Here, we tackle lake ice detection using XGBoost [41] and Support Vector Machines (SVM) [42]. Secondly, we investigate the potential of images from freely available webcams using Convolutional Neural Networks (CNN), for the independent estimation of lake ice. Given an input webcam image, such networks are designed to predict pixel-wise class probabilities. Additionally, we use webcam data for the validation of results from satellite data.

2. Materials and Methods

2.1. Definitions Used

By definition, ice-on date is the first day a lake is totally or in great majority frozen (≈90%), with a similar day after it (this is the same definition as in Franssen and Scherrer [43], i.e., end of freeze-up). Ice-off is used here as the symmetric of ice-on, i.e., the first day after having all or almost all lake frozen, when any significant amount of clear water appears and in the subsequent days this water area increases. We point out that our ice-off date marks the start of melting (break-up), such that the two dates symmetrically delimit the fully frozen period. As far as we know there is no universally accepted definition of ice-on/off dates. Hence, in the scientific cooperation we had with MeteoSwiss we adopted the above definition which is consistent throughout this work. Ice thickness plays no role in this definition. In very rare cases, in Switzerland, there may be more than one such date. In those cases we use the latest ice-on and the earliest ice-off dates. Some researchers, especially in North America and in the NSIDC database, define ice-off as the end of break-up, when almost everything is water [9]. That date can also be retrieved with our scheme, without any changes to the methodology. Clean pixels are those that are totally within the lake outline. In all subsequent investigations with satellite image data, only the cloud-free clean pixels are used. Additionally, non-transition dates are the days when a lake is mostly (≈90% or above) frozen (ice, snow) or non-frozen (water) while the partially frozen days are termed as transition dates.
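To make these definitions concrete, the following minimal Python sketch derives ice-on and ice-off dates from a daily series of frozen-area fractions. It is only an illustration under stated assumptions, not the procedure used in this work: the function names, the fixed 0.9 threshold (for "≈90%"), and the one-day look-ahead used both for "a similar day after it" and for "the water area increases" are hypothetical choices.

```python
from typing import List, Optional, Tuple

FROZEN_THRESHOLD = 0.9  # assumed numeric reading of "≈90%" in the definition


def ice_on_candidates(frozen: List[float], thr: float = FROZEN_THRESHOLD) -> List[int]:
    """Day indices that start a frozen spell.

    A day qualifies if it is (almost) fully frozen, the next day is similar,
    and the previous day (if any) was below the threshold.
    """
    days = []
    for d in range(len(frozen) - 1):
        starts_spell = d == 0 or frozen[d - 1] < thr
        if starts_spell and frozen[d] >= thr and frozen[d + 1] >= thr:
            days.append(d)
    return days


def ice_off_candidates(frozen: List[float], thr: float = FROZEN_THRESHOLD) -> List[int]:
    """Day indices where break-up starts.

    A day qualifies if the previous day was (almost) fully frozen, the frozen
    fraction drops below the threshold, and it keeps decreasing the next day
    (i.e., the open-water area increases).
    """
    days = []
    for d in range(1, len(frozen) - 1):
        if frozen[d - 1] >= thr and frozen[d] < thr and frozen[d + 1] < frozen[d]:
            days.append(d)
    return days


def ice_on_off(frozen: List[float], thr: float = FROZEN_THRESHOLD) -> Tuple[Optional[int], Optional[int]]:
    """Return (ice-on, ice-off) day indices, or None where no date is found.

    If several candidates exist, the latest ice-on and the earliest ice-off
    are returned, following the rule stated in the text.
    """
    on = ice_on_candidates(frozen, thr)
    off = ice_off_candidates(frozen, thr)
    return (max(on) if on else None, min(off) if off else None)
```

For a single freeze-thaw cycle such as `[0.0, 0.2, 0.6, 0.95, 1.0, 1.0, 0.97, 0.7, 0.4, 0.1, 0.0]`, the sketch selects day 3 as ice-on and day 7 as ice-off. Retrieving the end of break-up instead would only require changing the condition in `ice_off_candidates`.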

2.2. Target Lakes and Winters

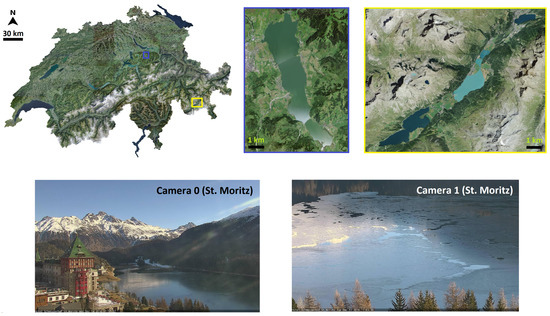

Using satellite images, we processed the Swiss Alpine lakes: Sihl, Sils, Silvaplana, and St. Moritz, see Figure 1. To assess the performance, the data from two full winters (16–17 and 17–18) are used, including the relatively short but challenging freeze-up and break-up periods. In each winter, we processed all available dates from the beginning of September until the end of May. The target lakes exhibited moderate to high difficulty, with a variable area (very small to mid-sized), altitude (low to high), and surrounding topography (flat/hilly to mountainous), and they freeze more or less often. More details of the target lakes are shown in Table 2, including the details of the nearest meteorological stations. For completeness, the temperature and precipitation data near the observed lakes were also plotted (see Figure 2 for 2016–2017 winter months). Additionally, we processed three different webcams monitoring lakes St. Moritz and Sihl from the same two winters. For satellite images, the lake outlines are digitised from Open Street Map (OSM) and have an accuracy of ≈25–50 m. For webcams, our algorithm automatically determines the lake outline.

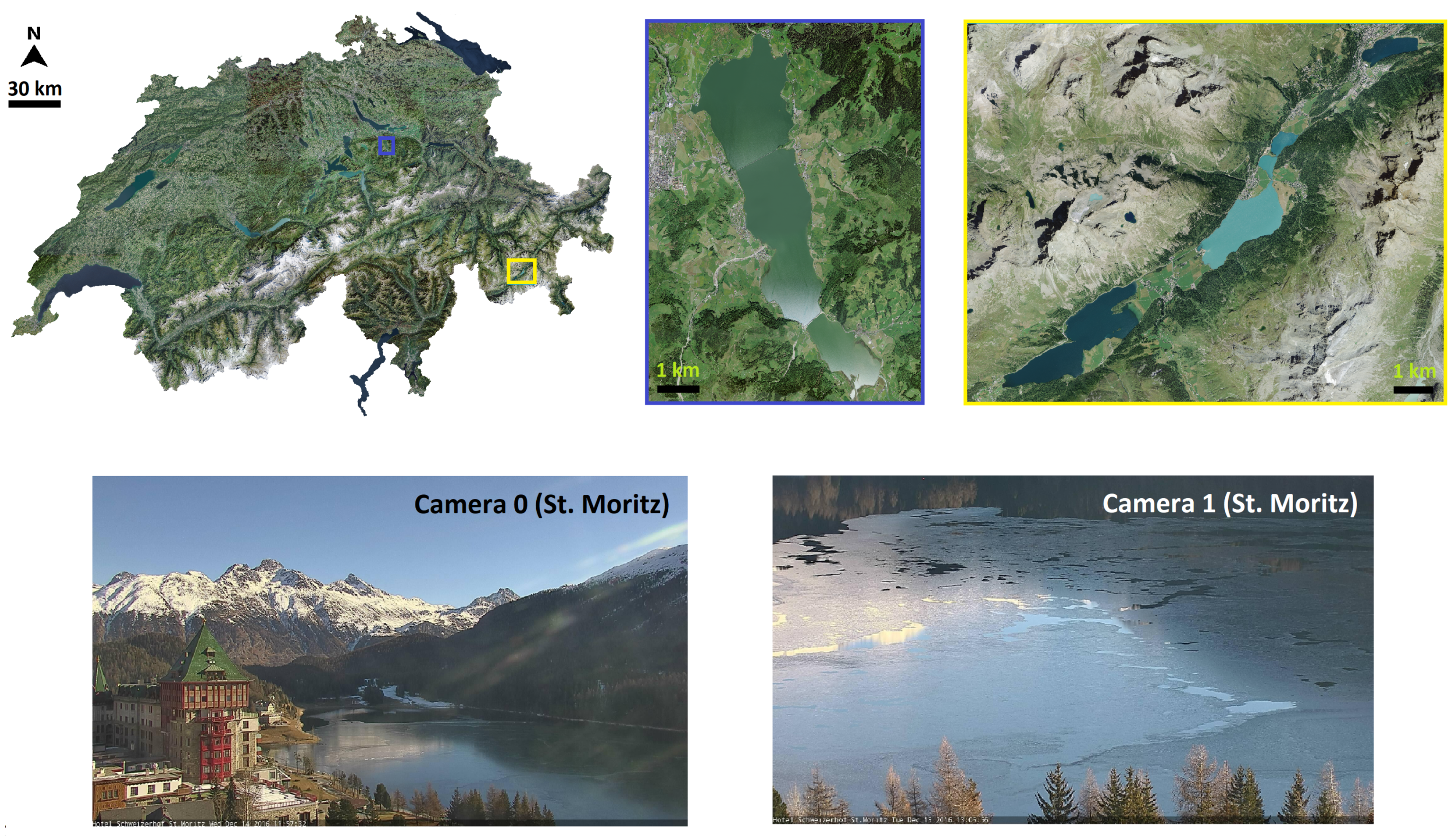

Figure 1.

On the first row, left image shows the orthophoto map of Switzerland (source: Swisstopo [44]). Regions around the four target lakes (shown as blue and yellow rectangles on the map) are zoomed in and shown on the right side of the map (lake Sihl on the left, region around lakes Sils, Silvaplana, St. Moritz on the right). On the second row, the image footprints of two webcams monitoring lake St. Moritz are displayed (Camera 0 and 1 images were captured on 14 December 2016 and 13 December 2016 respectively when the lake was partially frozen). Best if viewed on screen.

Table 2.

Characteristics of the target lakes (data mainly from Wikipedia). Latitude (lat, North), longitude (lon, East), altitude (alt, m), area (km²), volume (vol, Mm³), and the maximum and average depth [depth(M,A)] in m are shown. Additionally, for each lake, the nearest meteorological station (MS) is shown together with the corresponding latitude, longitude, and altitude.

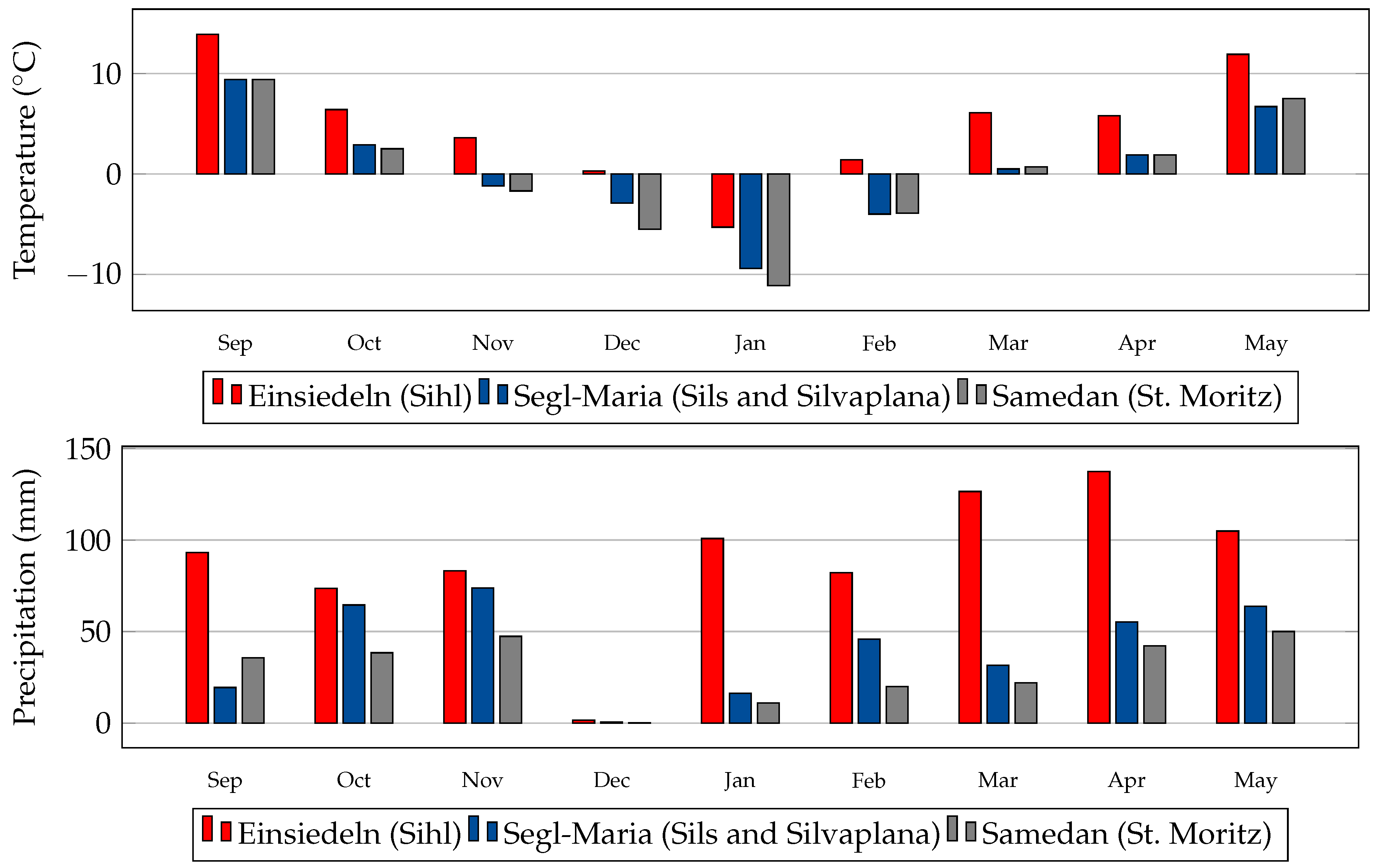

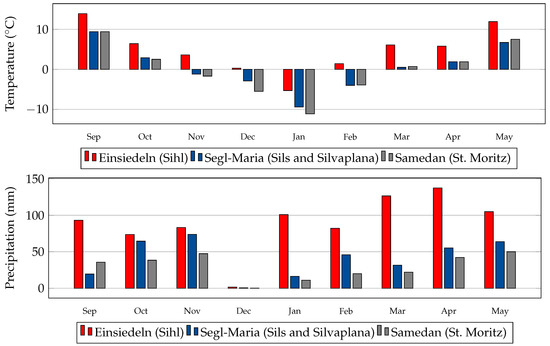

Figure 2.

Bar graphs of mean monthly air temperature 2 m above ground (top) and total monthly precipitation (bottom) in winter 2016–2017, recorded at the meteorological stations closest to the respective lakes. Data courtesy of MeteoSwiss.

2.3. Data

We use data from three different types of sensors for lake ice monitoring. Parameters of all these data types are shown in Table 3.

Table 3.

Parameters of the used data (GSD = Ground Sampling Distance).

2.3.1. Optical Satellite Images

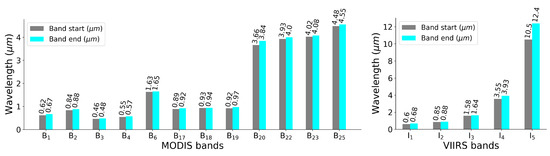

Both MODIS (aboard Terra [40] and Aqua [45] satellites) and VIIRS (Suomi NPP [39] satellite) images are freely available and have high temporal resolution. Due to the lower quality of Aqua imagery, we used Terra images in our analysis. Additionally, following the approach of Tom et al. [35], we used only 12 MODIS bands and discarded the rest. For our MODIS analysis, we downloaded the following products: MOD02 (calibrated and geolocated radiance, level 1B), MOD03 (geolocation), and MOD35 (48-bit cloud mask) from the LAADS DAAC (Level-1 and Atmosphere Archive & Distribution System Distributed Active Archive Center) archive [46]. Note that only the I-bands are used in our VIIRS analysis. See Figure 3 for the spectral range of MODIS and VIIRS bands used in our approach.

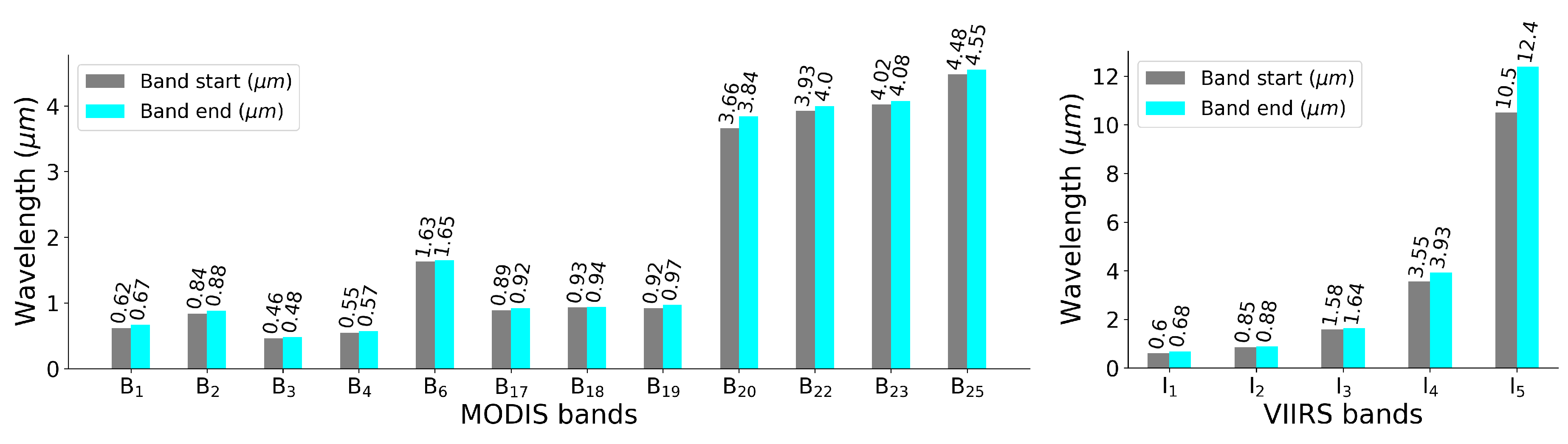

Figure 3.

Spectral range of MODIS (left) and VIIRS (right) bands used in our analysis. The start and end wavelengths are shown for each band.

Technicalities about the processed satellite data are shown in Table 4 and Table 5. We analysed less data in winter 17–18 than in the previous winter, because winter 17–18 was cloudier than 16–17 in the regions of interest. We only processed the dates when a lake was at least 30% cloud-free, which effectively lowered the temporal resolution from 1 day to approximately 2 days. The effective temporal resolution varies across sensors and winters (see Table 5). Additionally, for lakes Sihl and St. Moritz, there were more transition days in winter 17–18. Throughout, we used the non-transition dates for training the SVM model as referred to in Section 3.1. This factor, along with class imbalance, explains why the decrease in data is more evident for the class frozen. Note also that the transition dates are more likely to occur near the freezing and thawing periods. Class imbalance is present in the datasets of both winters: in each winter, we processed all available acquisitions from September to May, while the lakes were typically fully (or mostly) frozen during only a small subset of these dates. The class imbalance was particularly high for lake Sihl, because Sihl had only a moderate freezing frequency compared to the other three lakes, owing to its lower altitude and larger area (see Table 2). Note also that the presence of a dam near the northern part of lake Sihl makes its freezing pattern relatively less natural.
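The effect of the 30% cloud-free criterion on temporal resolution can be sketched in a few lines of Python. This is a hedged illustration only: the function names are hypothetical, the per-day cloud masks are toy inputs, and we assume that the effective temporal resolution is the length of the observation period divided by the number of usable acquisitions, which the text does not state explicitly.

```python
from typing import Dict, List

MIN_CLOUD_FREE = 0.30  # threshold from the text: at least 30% cloud-free


def cloud_free_fraction(cloudy: List[bool]) -> float:
    """Fraction of clean lake pixels not flagged as cloudy (True = cloudy)."""
    if not cloudy:
        return 0.0
    return sum(1 for c in cloudy if not c) / len(cloudy)


def usable_days(masks: Dict[int, List[bool]], min_cf: float = MIN_CLOUD_FREE) -> List[int]:
    """Day indices whose cloud-free fraction meets the threshold."""
    return sorted(day for day, mask in masks.items()
                  if cloud_free_fraction(mask) >= min_cf)


def effective_resolution(days_in_period: int, n_usable: int) -> float:
    """Assumed definition: average spacing (in days) between usable acquisitions."""
    if n_usable == 0:
        raise ValueError("no usable acquisitions in the period")
    return days_in_period / n_usable
```

With four days of which two pass the threshold, `effective_resolution(4, 2)` gives 2.0 days, which matches the order of magnitude reported above.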

Table 4.

Total number of clean, cloud-free pixels on non-transition dates from MODIS (M) and VIIRS (V) sensors (at least 30% cloud-free days) used in our experiments.

Table 5.

Dataset statistics. M/V format displays the respective numbers of MODIS/VIIRS. The effective temporal resolution (shown as ‘resolution’) and fraction of transition dates over all processed dates (Trans fraction) are also shown. #Pixels (clean) displays the number of clean pixels per acquisition.

Even after collecting data from a full winter, very few pixels are available to train a machine learning-based system using MODIS imagery, due to the low spatial resolution. For instance, in every acquisition, there exist only four clean pixels for lake St. Moritz (refer to Table 5). This problem is even worse for VIIRS, where there is no clean pixel at all for the same lake (see Table 5). Table 4 shows that the total number of clean VIIRS pixels processed is significantly lower than for MODIS, mainly due to the lower spatial resolution (see also Table 5). A challenge for machine learning is the scarcity of lake pixels. Note also that the small number of pixels per lake makes a correction of the lake outlines’ absolute geolocation a necessity (refer to Section 2.4). Furthermore, it is highly probable during the transition periods that both frozen and non-frozen classes coexist within a single clean pixel (mixels). For this reason, we also generate the probability for each pixel to be frozen as an end result, especially targeting such mixels during the transition periods. Note also that data-hungry deep learning approaches cannot be deployed, as they cannot be reliably trained with such small datasets.

2.3.2. Webcam Images

We report our results on various freely available webcams with different intrinsic and extrinsic parameters. For the experiments, we manually removed some unusable images; examples are shown in Figure 4. We point out that for oblique webcam viewpoints, the GSD varies greatly between nearby and distant parts of a lake, as does the angle between the viewing rays and the lake surface. As a consequence, webcam images are hard to interpret in the far field; in practice, usable distances tend to be up to ≈1 km. We note that the usable distance also depends on the surface material, e.g., snow on ice can be detected at distances where it is already impossible (for humans as well as machines) to distinguish black ice from water.

Figure 4.

Example images that were discarded from the dataset due to bad illumination (left), sun over-exposure (middle), and thick fog (right).

We make available (https://github.com/czarmanu/photi-lakeice-dataset) a new webcam dataset, termed Photi-LakeIce, for lake ice monitoring and report our results on it. Sample images and details of the dataset are presented in Figure 5 and Table 6 respectively. RGB images (and the corresponding ground truth annotations) from two lakes (Sihl and St. Moritz) and two winters (2016–2017, 2017–2018) are included in the dataset. Though the camera mounted at Hotel Schweizerhof in St. Moritz is rotating, in our analysis we consider it as two different fixed cameras (camera 0 and camera 1, see Figure 6). The major difference between these two streams is image scale: camera 0 captures images with larger GSD compared to camera 1. Another camera that monitors Sihl is non-stationary, but captures the lake at the same scale (refer to Figure 5, row 3). Hence, we consider it as a single rotating camera (camera 2). Among others, our dataset includes images with different lighting conditions (due to the sun’s angle, time of the year, presence of clouds, etc.), shadows (from both clouds and nearby mountains), fog conditions (we remove the extreme cases but keep the images from slightly foggy days), and wind scenarios.

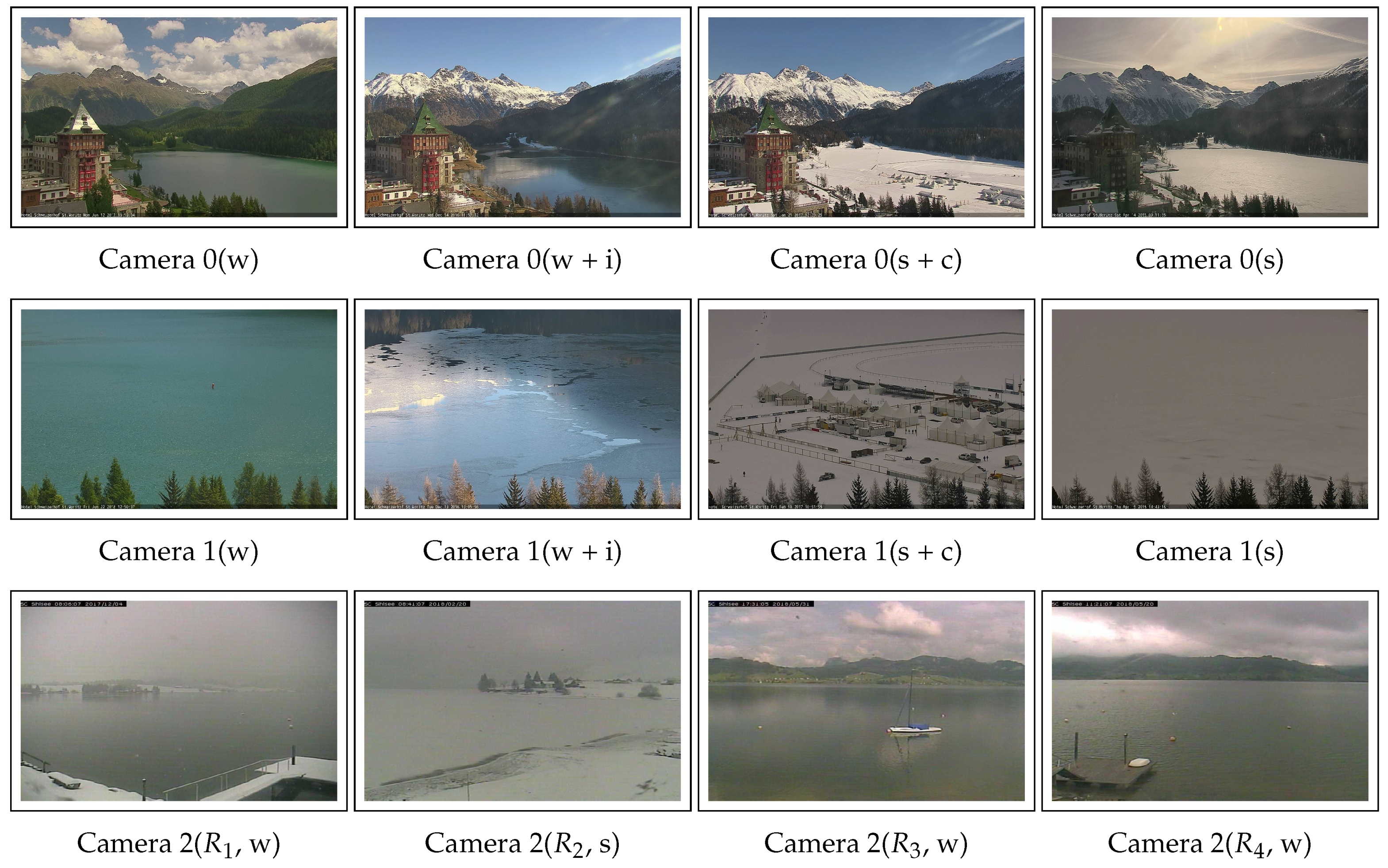

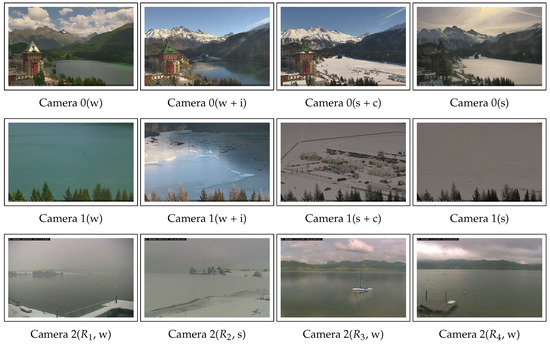

Figure 5.

Photi-LakeIce dataset. Rows 1 and 2 display sample images from cameras 0 and 1 (St. Moritz) respectively. Row 3 shows example images of camera 2 (Sihl, non-stationary; some rotations are also displayed). The state of the lake, i.e., water (w), ice (i), snow (s), and clutter (c), is also displayed in brackets.

Table 6.

Details of the Photi-LakeIce dataset. Lat and Long respectively denote latitude (North) and longitude (East) of the approximate camera location. Res stands for resolution and H and W represent height and width of the image in pixels (after cropping).

Figure 6.

Two webcams monitoring lake St. Moritz along with their approximate coverage. Image courtesy of Google.

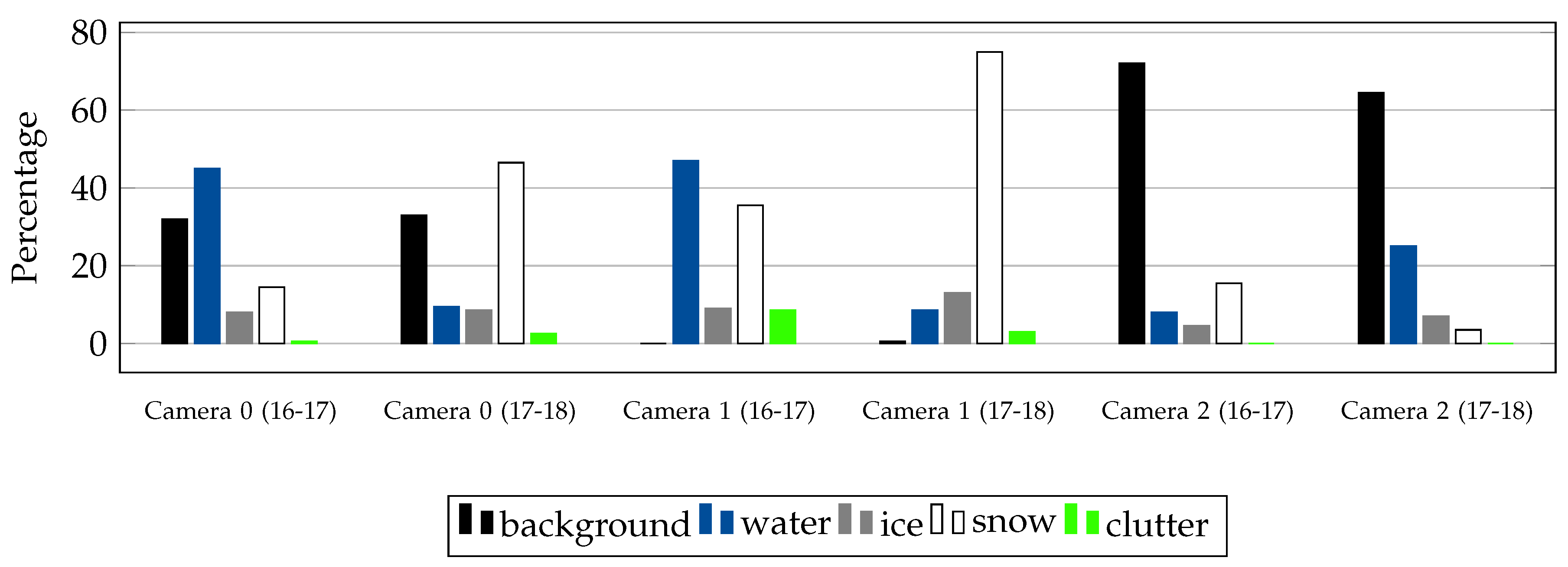

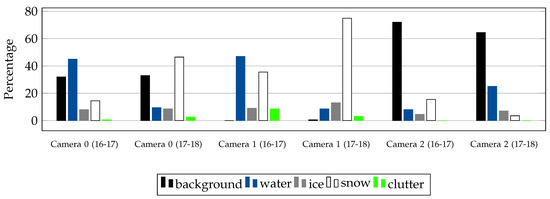

To study the class imbalance in our dataset, we plot the class distribution individually for each camera and winter, see Figure 7. It can be inferred that the classes are highly imbalanced in most of the sub-datasets, with the ice and clutter classes suffering the most. In Figure 7, we show the percentage of the class background in addition to the four main classes. Note that the percentage of clutter in camera 0 is lower than in camera 1. Note also that camera 1 has almost zero background, while the lake area (foreground) to background ratio for Sihl is very low, making it a very challenging case. Additionally, the number of ice pixels is consistently low in all the cameras across all years. It would therefore not be surprising if the performance on the classes clutter and ice were comparatively poor. Note that the background class frequencies differ from one year to another even for the same camera, since in each year the foreground-background separation was done by different human experts. The difference is even larger for camera 2 (Sihl), since it is rotating.

Figure 7.

Bar graphs displaying class imbalance (including the class background) in our dataset. Ice and clutter are the under-represented classes.

2.3.3. Ground Truth Generation for Webcam Analysis

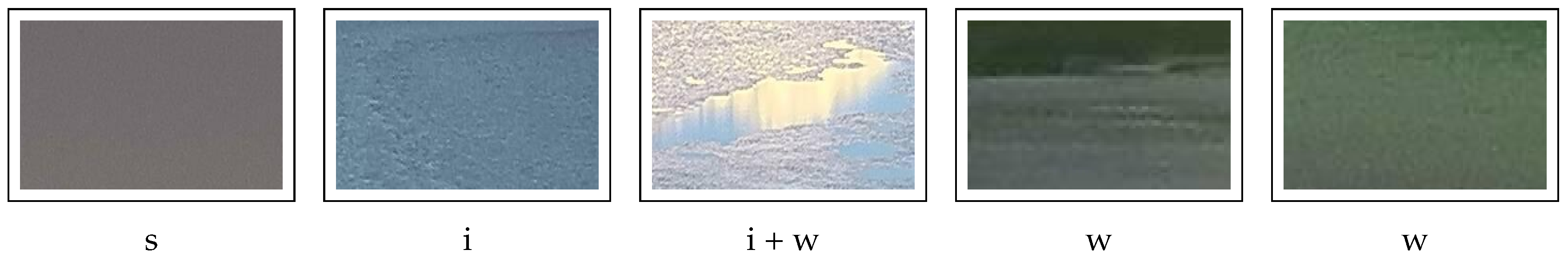

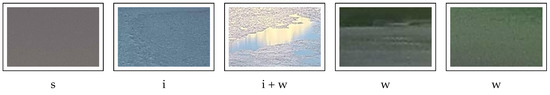

The main difficulty in designing a machine (deep) learning system is the requirement for accurately labelled data. However, to generate pixel-wise labels, the interpretation of webcam images is challenging for several reasons. Image quality is limited and off-the-shelf webcams only offer poor radiometric and spectral resolution and are subject to adverse lighting conditions like fog, which makes the image interpretation process difficult even for humans (see Figure 8). Besides the limitations of the sensor itself, the cameras are mounted with a rather horizontal viewing angle such that large parts of the water body can be observed. As a result, large differences in GSD within a single image are present. Significant intra-class appearance differences exist throughout image sequences. This is caused by different ice structures, partly frozen water surfaces, waves, varying illumination conditions, reflections, and shadows. Furthermore, inter-class appearance similarities exist, which impedes automatic interpretation. In fact, even manual interpretation for some examples is impossible without using additional temporal cues. Pixel-wise ground truth annotations are produced by human operators by labelling polygons within the input images using the LabelMe tool [47]. For the lake detection task, each pixel is either labelled as foreground (lake) or background. Foreground pixels are further annotated as water, ice, snow, and clutter for lake ice segmentation.

Figure 8.

Inter-class similarities and intra-class differences of states snow (s), ice (i), and water (w) in our webcam data.

2.3.4. Ground Truth Generation for MODIS and VIIRS Analysis

To generate the ground truth for our satellite image analysis, for each day, a human expert visually interpreted the state of a lake (completely frozen, partly frozen, completely non-frozen, or partly non-frozen) using webcam images of the same. Note also that most of the freely-available webcams are not optimally installed to monitor lakes. Hence, besides webcam images, we interpret cloud-free Sentinel-2 images whenever available. Additionally, we attempted to use online media reports to enrich the generated ground truth, which however only provided limited information. In our analysis, webcams have ground truth at a better granularity level (hourly, per pixel label) compared to satellite images (daily, global label). Accurately registering webcam pixels with satellite image pixels is beyond the scope of this work, hence we did not transfer the webcam-based per-pixel ground truth to the satellite images.

2.4. Methodology

2.4.1. Satellite Image Analysis

Pre-processing of MODIS data (re-sampling to UTM32N coordinate system, re-projection) is done using MRTSWATH [48] software. Similarly, VIIRS imagery data is pre-processed (assembling of data granules, re-sampling to UTM32N, and mapping) with the SatPy [49] package. VIIRS cloud masks are extracted with H5py [50] and re-sampled using Pyresample [51] and GDAL [52] libraries. Among the 12 selected MODIS bands (refer to Section 2.3.1), the lower resolution bands (500 m and 1000 m GSD) were upsampled to 250 m resolution using bilinear interpolation. This step is not necessary for VIIRS analysis, since we use only the I-bands (≈375 m GSD). For both VIIRS and MODIS, we only analysed the images with at least 30% cloud-free clean pixels. In MODIS images, there are also some pixels marked as invalid, which were masked out. For MODIS, we merged the cloudy and uncertain clear bits to construct a binary cloud-mask from the standard cloud-mask product. Similarly, a VIIRS pixel is considered non-cloudy only if it is either confidently clear or probably clear. After Douglas–Peucker generalisation [53], the outlines were further backprojected from the ground coordinate system onto the satellite images to steer the estimation of lake ice. In addition, only the clean pixels were analysed, after rectifying the outlines for absolute geolocation shifts and backprojecting them onto the VIIRS band (≈375 m GSD) and the MODIS band (250 m GSD) respectively, as in Tom et al. [35]. For MODIS, the mean offsets in the x and y directions were 0.75 and 0.85 pixels, respectively. For VIIRS, the mean offsets were 0 and 0.3 pixels in the x and y directions, respectively.

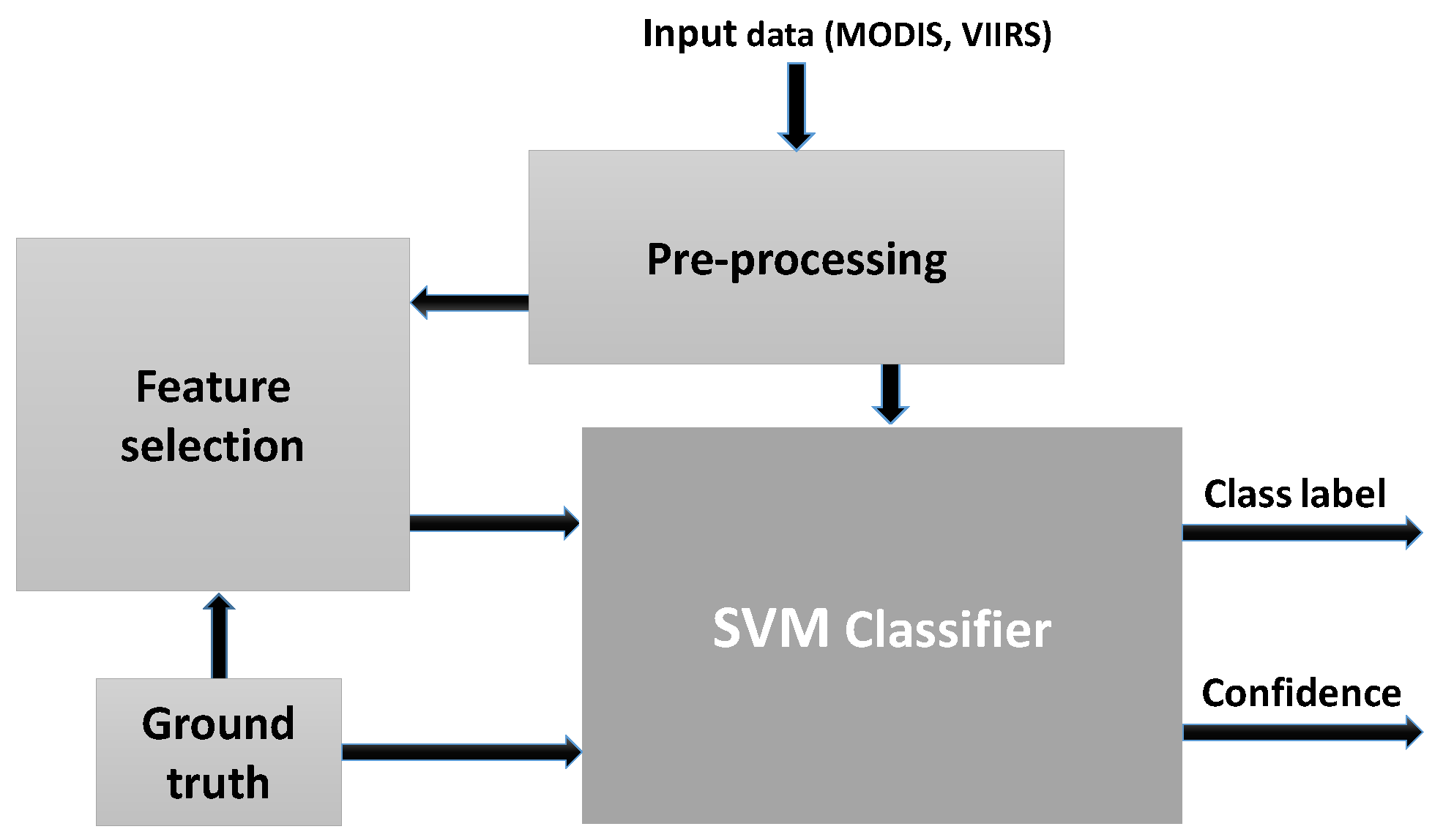

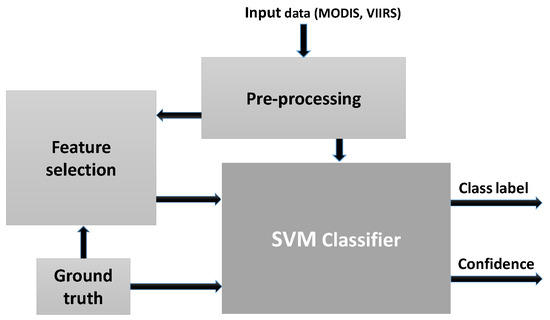

Figure 9 displays the block diagram of the proposed lake ice monitoring system using satellite images. Our semantic segmentation methodology is generic and is applicable to both VIIRS and MODIS imagery. Here, we formulated ice detection as a supervised pixel-wise classification problem (two classes: frozen and non-frozen). To assess the inter-class separability of different bands, we carried out a supervised variable importance analysis using the XGBoost feature learning system [41]. The training of that method, a gradient boosting approach based on ensembles of decision trees, makes explicit the variable importance conditioned on the class labels. The outcome (F-score) indicates how valuable each feature is in the formation of the boosted (shallow) decision trees within the model. The more a feature (in our case a band) is used to make correct predictions with the decision trees, the higher its relative importance. Though XGBoost is also a classifier by default, we only used the built-in variable selection to automatically determine the most informative bands. For the actual classification based on the selected channels we employed a support vector machine (SVM, [42]) classifier, mainly because with SVM it is straightforward to compare a linear and a non-linear variant.
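The idea behind such an importance score can be illustrated without the library itself. The sketch below assumes that the reported F-score corresponds to a split-count importance, i.e., the number of times a band is chosen as a split variable across all boosted trees; this reading, the toy tree structure, and the band names `band_a` and `band_b` are assumptions for illustration and are not taken from our experiments.

```python
from collections import Counter
from typing import Dict, List, Optional

# A toy tree node: {"band": name, "left": node, "right": node}; None marks a leaf.
Node = Optional[Dict]


def count_splits(node: Node, counts: Counter) -> None:
    """Recursively count how often each band is used as a split variable."""
    if node is None:
        return
    counts[node["band"]] += 1
    count_splits(node.get("left"), counts)
    count_splits(node.get("right"), counts)


def split_count_importance(trees: List[Node]) -> Counter:
    """Sum the split counts over all trees of a (toy) boosted ensemble."""
    counts: Counter = Counter()
    for tree in trees:
        count_splits(tree, counts)
    return counts
```

Ranking the bands by this count and keeping the top-scoring ones mirrors the band selection step described above; the selected bands would then be passed to the SVM classifier.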

Figure 9.

Block diagram of the proposed lake ice detection approach using satellite data.

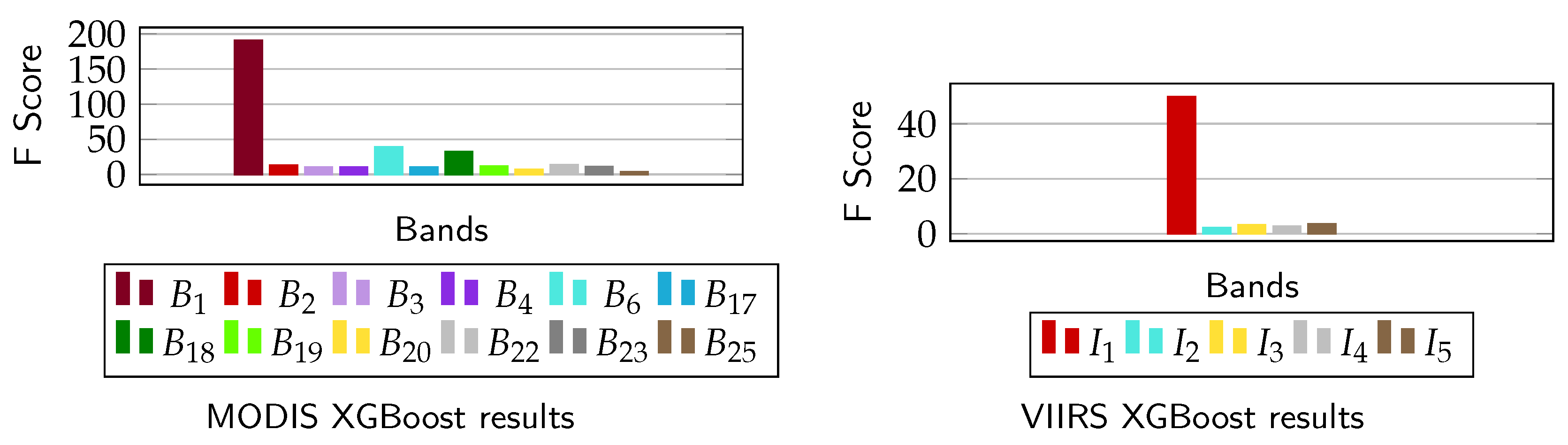

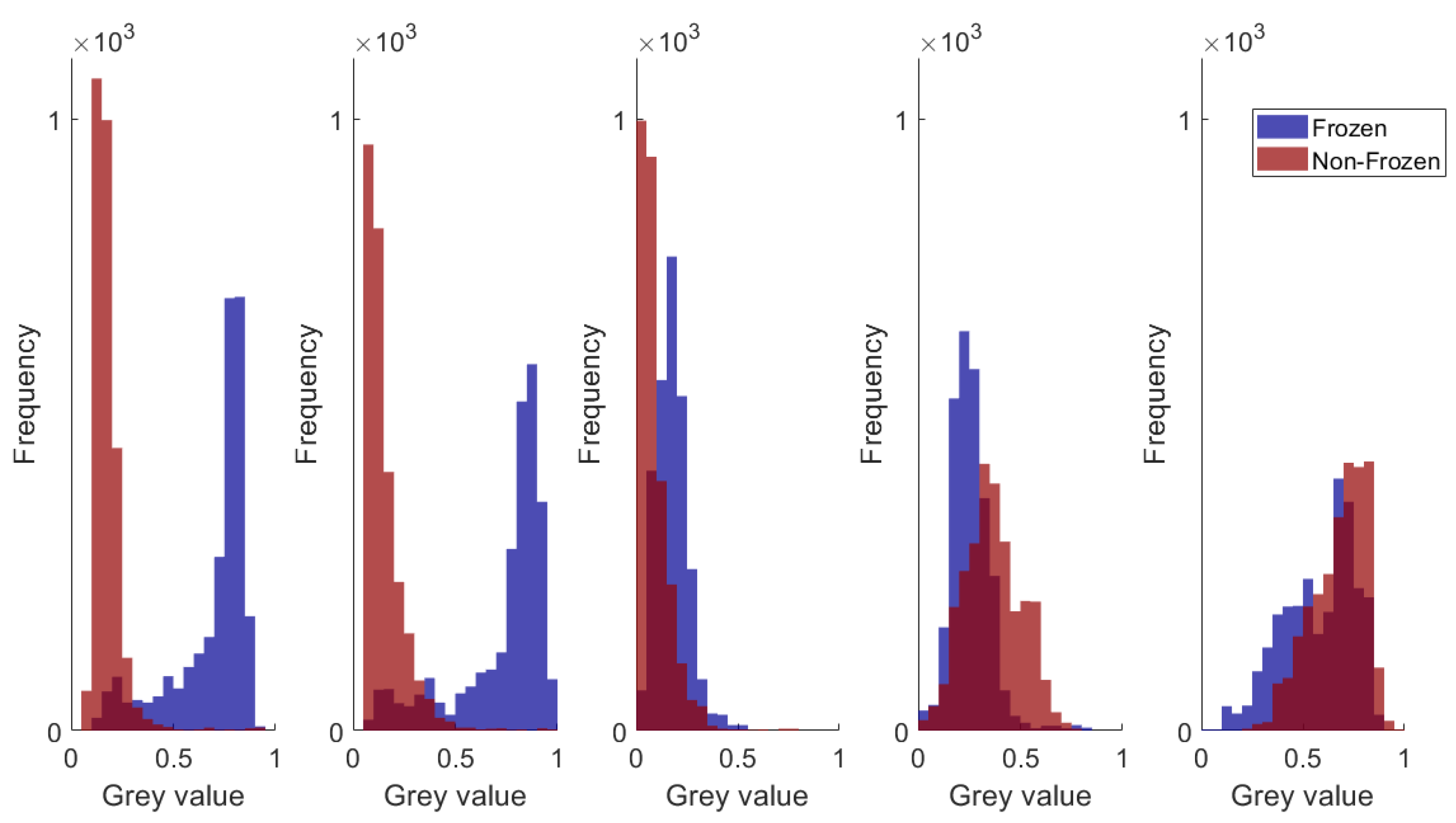

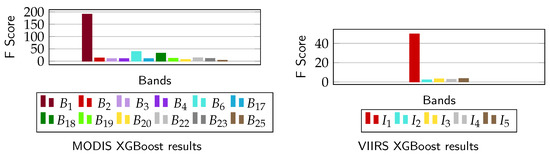

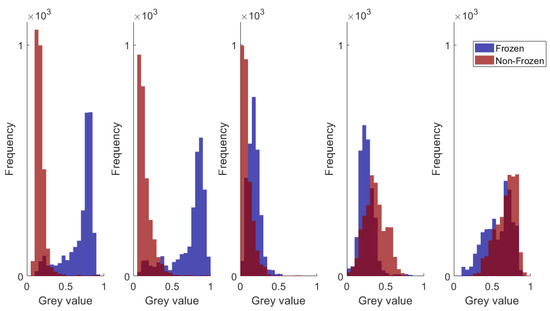

The 12 usable MODIS bands and 5 I-bands of VIIRS were independently analysed with XGBoost. All data from winter 16–17 (see Table 4) was used to perform this analysis and the results for both MODIS and VIIRS are shown in Figure 10 (left and right respectively). Bands and attained the best scores among the analysed MODIS and VIIRS bands respectively. Furthermore, we plotted the gray-value histograms (see Figure 11) in order to double-check the results generated by XGBoost. Due to space limitations, only the histograms for VIIRS are shown. Similar histograms for MODIS can be found in Tom et al. [54]. It can be judged from Figure 11 that the two classes are almost similarly separable in the two near-infrared bands and . Since those two bands have a similar spectrum and are highly correlated, XGBoost picks only one among them. The same holds for the two near-infrared MODIS bands and .

Figure 10.

Bar graphs for MODIS (left) and VIIRS (right) showing the significance of each of the selected bands (12 for MODIS, 5 for VIIRS) for frozen vs. non-frozen pixel separation using the XGBoost algorithm [41]. All non-transition days from winter 16–17 are included in the analysis.

Figure 11.

VIIRS grey-value histograms for sanity check (the five analysed bands are shown from left to right).

It is very likely that the physical state of a lake pixel is the same on subsequent days (except during the highly dynamic freezing and thawing periods). Hence, as a post-processing step, multi-temporal analysis (MTA) is applied. For each pixel, a moving average of the SVM class scores is computed along the time dimension. The average is computed within a fixed window length (smoothing parameter) that is determined empirically. Choosing the smoothing parameter is critical, as values that are too high can easily wash out the critical dynamics during the transition days. Since the pixels from each MODIS (or VIIRS) acquisition are predicted independently by the trained SVM model, MTA is expected to improve the SVM results by exploiting the temporal relationships. We test three different averaging schemes: mean, median, and Gaussian.
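The temporal smoothing described above can be sketched in a few lines. This is a minimal illustration, not the implementation used in the experiments: the function names, the handling of missing (cloudy) days by skipping them, the clipping of the window at the ends of the series, and the separate `sigma` parameter for the Gaussian scheme are all assumptions.

```python
import math
from statistics import mean, median


def gaussian_weights(window, sigma):
    """Normalised Gaussian weights for an odd window length (assumed form)."""
    half = window // 2
    raw = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-half, half + 1)]
    total = sum(raw)
    return [w / total for w in raw]


def smooth_scores(scores, window=3, scheme="gaussian", sigma=1.0):
    """Smooth one pixel's per-day class scores along the time axis.

    `scores` holds one classifier score per acquisition day; None marks a
    missing (e.g. cloudy) day, which is skipped rather than interpolated.
    `window` is assumed to be odd.
    """
    half = window // 2
    weights = gaussian_weights(window, sigma)
    smoothed = []
    for t in range(len(scores)):
        # Collect valid neighbours inside the window, clipped at the ends.
        pairs = [
            (scores[t + k], weights[k + half])
            for k in range(-half, half + 1)
            if 0 <= t + k < len(scores) and scores[t + k] is not None
        ]
        if not pairs:
            smoothed.append(None)
            continue
        values = [v for v, _ in pairs]
        if scheme == "mean":
            smoothed.append(mean(values))
        elif scheme == "median":
            smoothed.append(median(values))
        elif scheme == "gaussian":
            # Renormalise so that clipped or incomplete windows still sum to 1.
            norm = sum(w for _, w in pairs)
            smoothed.append(sum(v * w for v, w in pairs) / norm)
        else:
            raise ValueError(f"unknown scheme: {scheme}")
    return smoothed
```

With a window of three days, an isolated outlier such as the middle value in `[1.0, 1.0, -1.0, 1.0, 1.0]` is removed entirely by the median scheme and only attenuated by the mean and Gaussian schemes, which is one way to see why the choice of scheme and window length matters during transition periods.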

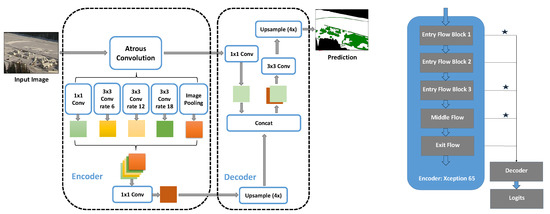

2.4.2. Webcam Image Analysis

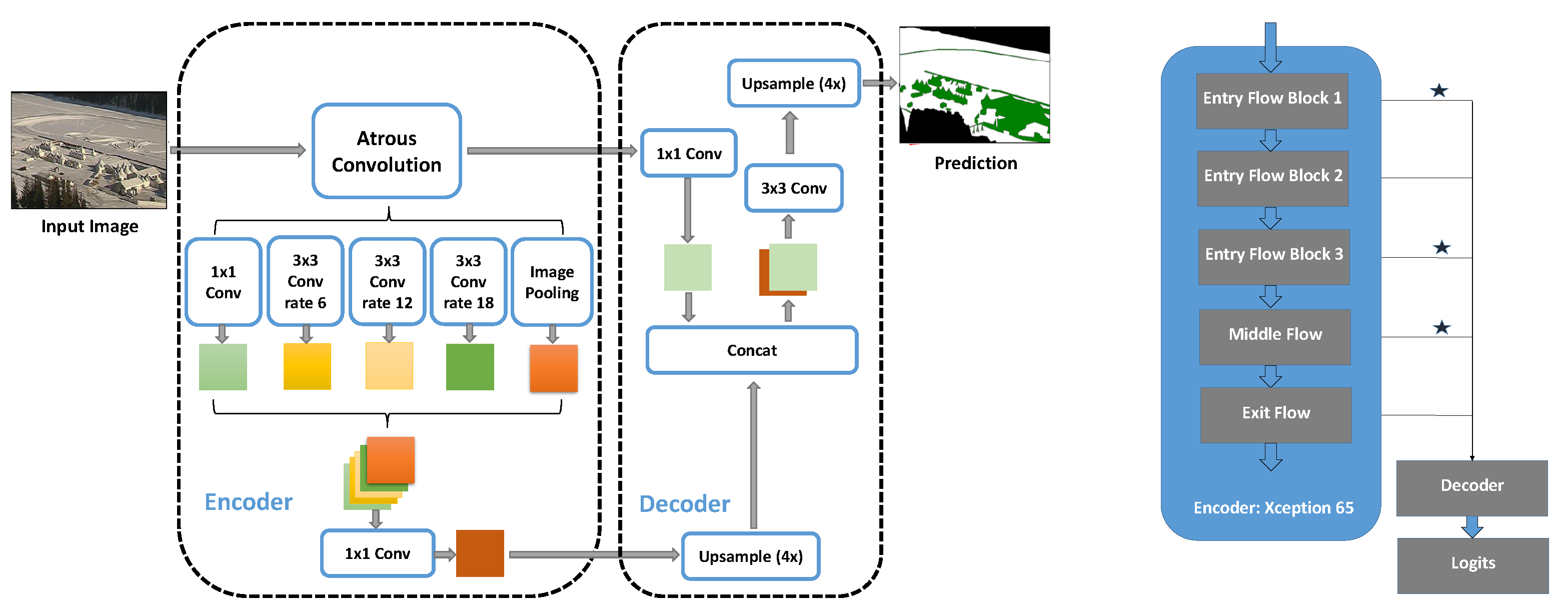

Similarly to the satellite analysis, we formulated our webcam approach as a supervised semantic segmentation problem. Here, we made use of the prominent high-performance deep learning architecture, Deeplab v3+ [55], with the Xception65 encoder branch, see Figure 12 (left). That method has a proven track record on other semantic segmentation benchmarks such as PASCAL VOC [56] and Cityscapes [57]. Our network classified each pixel in the RGB webcam image as water, ice, snow, or clutter. The clutter class incorporates man-made objects on the lake, e.g., structures built for sport events (such as tents in St. Moritz), boats, etc. Note that, as for satellite images, lake ice segmentation is done only for foreground (lake) pixels.

Figure 12.

Deeplab v3+ (left) and Deep-U-Lab (right) architectures. The “⋆” symbol indicates the additional skip connections for Deep-U-Lab.

By integrating the spatial pyramid pooling technique as well as atrous convolution into the standard encoder-decoder architecture, the Deeplab v3+ network encodes rich contextual information at arbitrary scales and retrieves segment boundaries more precisely. Spatial convolution was applied independently to each channel, followed by 1 × 1 (point-wise) convolution to combine the per-channel outputs. This markedly reduces the computational complexity without any noticeable performance drop. Where needed, these depthwise separable convolutions employ stride 2 in the spatial component, making separate pooling operations obsolete. Note that the atrous (dilated) convolution effectively increases the receptive field without blurring the signal. Using multiple atrous rates makes sure that features are extracted at various spatial scales.
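To make the two claims above concrete, the following sketch counts the weights of a standard versus a depthwise separable convolution and computes the effective kernel extent of an atrous convolution. The function names are hypothetical, biases are ignored, and the channel count and atrous rates in the usage note are illustrative values, not settings taken from this paper.

```python
def standard_conv_params(kernel, channels_in, channels_out):
    """Weights of a dense kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * channels_in * channels_out


def separable_conv_params(kernel, channels_in, channels_out):
    """Weights of a depthwise (per-channel spatial) convolution followed by
    a 1 x 1 point-wise convolution (biases ignored)."""
    depthwise = kernel * kernel * channels_in
    pointwise = channels_in * channels_out
    return depthwise + pointwise


def effective_kernel_size(kernel, rate):
    """Spatial extent covered by an atrous convolution with the given rate.

    Rate 1 is an ordinary convolution; larger rates insert (rate - 1) gaps
    between neighbouring kernel taps without adding weights.
    """
    return kernel + (kernel - 1) * (rate - 1)
```

For a 3 × 3 layer with 256 input and 256 output channels, the dense variant has 589,824 weights and the separable variant 67,840, roughly a factor of nine fewer. A 3 × 3 kernel with atrous rates 6, 12, and 18 spans 13, 25, and 37 pixels respectively, while still using only nine taps per channel.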

Inspired by U-net [58], and with the aim of sharpening the class boundaries, we introduced three more skip connections, in addition to the single one used in Deeplab v3+ by default, from different flow blocks (entry and mid-level flow) of the Xception65 encoder to the decoder. We call this new variant Deep-U-Lab, see Figure 12 (right). The new feature maps thus generated are concatenated with the existing ones, for better preservation of high-frequency information at the class boundaries.

In order to deal with the shortage (in deep learning terms) of labelled data, we made use of transfer-learning that allows one to re-use knowledge gained from other similar tasks. To do so, we employed a model pre-trained on the PASCAL VOC benchmark dataset (for both lake detection and ice segmentation tasks) rather than starting from scratch. The weights in all layers were fine-tuned since tuning just the final layer did not work, emphasising the fact that even low-level texture characteristics differ between our webcam images and the PASCAL dataset and must be adapted.

In previous works [33,34], the lake pixels were manually delineated before inferring their class. Locating the lake pixels makes the job easier for the classifier as it does not have to deal with the spectral appearance outside of the lake. We propose to automate this step, in order to make lake ice observation more practical in operational scenarios. Automated lake detection is very useful especially when scaling up the webcam network to also include non-stationary cameras. Hence, we formulate lake detection as a two-class (background, foreground) segmentation problem and train yet another instance of our Deep-U-Lab model. Then, a fine-grained classifier predicts the state (water, ice, snow, and clutter) of lake pixels.

With the intention to minimise overfitting of the model, we performed data augmentation, i.e., more synthetically generated variations were added to the training dataset. This was done by applying random rotations, zooming, up-down, and left-right flipping.
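For segmentation, each geometric augmentation has to be applied identically to the image and to its label mask, otherwise the pixel labels no longer line up. The sketch below illustrates this for the two flipping operations only (rotation and zooming are omitted for brevity); the function names, the list-of-rows image representation, and the 50% flip probability are assumptions made for illustration.

```python
import random


def flip_left_right(grid):
    """Mirror a 2-D grid (list of rows) horizontally."""
    return [list(reversed(row)) for row in grid]


def flip_up_down(grid):
    """Mirror a 2-D grid (list of rows) vertically."""
    return [list(row) for row in reversed(grid)]


def augment(image, mask, rng):
    """Apply the same random flips to an image and its label mask.

    `rng` is a random.Random instance, so that a run can be reproduced.
    """
    if rng.random() < 0.5:
        image, mask = flip_left_right(image), flip_left_right(mask)
    if rng.random() < 0.5:
        image, mask = flip_up_down(image), flip_up_down(mask)
    return image, mask
```

Calling `augment(image, mask, random.Random(0))` repeatedly with different seeds yields flipped variants in which every pixel keeps its original label.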

3. Results

For our evaluation we use the following metrics: recall, precision, overall accuracy, Intersection over Union (IoU, a.k.a. Jaccard index), and mean IoU across classes (mIoU).
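All of these metrics can be derived from a pixel-level confusion matrix. The following sketch shows the standard definitions; the function name and the returned data layout are illustrative choices, and the convention that rows are true classes and columns are predicted classes is an assumption of this example.

```python
def segmentation_metrics(confusion):
    """Compute per-class and overall metrics from a confusion matrix.

    confusion[i][j] is the number of pixels whose true class is i and
    whose predicted class is j.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    per_class = []
    for c in range(n_classes):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp  # true class c, predicted otherwise
        fp = sum(confusion[r][c] for r in range(n_classes)) - tp
        per_class.append({
            "recall": tp / (tp + fn) if tp + fn else 0.0,
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "iou": tp / (tp + fp + fn) if tp + fp + fn else 0.0,
        })
    accuracy = sum(confusion[c][c] for c in range(n_classes)) / total
    miou = sum(m["iou"] for m in per_class) / n_classes
    return per_class, accuracy, miou
```

For the two-class matrix `[[8, 2], [1, 9]]`, the overall accuracy is 0.85, while the per-class IoU values are 8/11 and 9/12. IoU penalises both false positives and false negatives of a class, so it is never higher than that class's recall or precision.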

3.1. Experiments with Satellite Images

Unless explicitly specified, the experimental settings are the same (but independent) for both MODIS and VIIRS. The time series is divided into two parts: Transition and non-transition dates. During the partially frozen transition days, ground truth annotation was very challenging, as one has to discriminate thin transparent ice from water. Hence, the quantitative results are reported only on non-transition dates. However, qualitative analysis was done on all the available dates. Furthermore, with VIIRS only three lakes (Sihl, Sils, Silvaplana) were processed since there exists no clean pixel for lake St. Moritz (see Table 5).

3.1.1. Four-Fold Cross Validation

As a first experiment, the data of all the lakes were combined from the two winters (16–17 and 17–18) and 4-fold cross validation was performed in order to determine the optimum SVM parameter settings for detailed experimentation. We did a grid-search for the two main hyperparameters of SVM (the cost of a misclassification and the kernel width gamma) and found that, for both sensors, the best results with the Radial Basis Function (RBF) kernel are obtained with values 10 and 1 for cost and gamma, respectively. With the linear kernel, a cost of 0.1 works best for both MODIS and VIIRS. We notice that classification of our dataset using the RBF kernel is fairly sensitive to the selection of hyperparameters, while the linear kernel provides consistent results. Note also that the optimum hyperparameters might vary from one dataset to another. Quantitative results of the 4-fold cross validation experiments with the optimum parameters are displayed in Table 7. For MODIS, the best results were obtained when all 12 bands are used as feature vector (and for VIIRS with all 5 bands). In addition, our results show that the RBF kernel performs slightly better than its linear counterpart. Additionally, we tested variants that use fewer bands, down to the single band with the highest F-score as selected by XGBoost for each sensor (see Figure 10). Since it does not make sense to run an RBF kernel with a single input band, only the linear kernel was tested for this experiment. Even with a single band and a simple linear kernel the results are fairly decent. The results are even better when using the top-five bands of MODIS, but slightly worse than with the full 12-band feature vector. Multi-temporal analysis (MTA) improves the results by a very small margin. For both MODIS and VIIRS, MTA with Gaussian kernel (smoothing parameter 3) gives the best results and is therefore used in all further experiments. For the best setting, we show the results in more detail in Table 8.

Table 7.

The 4-fold cross validation results on MODIS and VIIRS data from two winters (16–17 and 17–18). For the same SVM setup, results without and with multi-temporal analysis (MTA) are shown.

Table 8.

Detailed results on MODIS (left) and VIIRS (right) data for the best cases of 4-fold cross validation on combined data from two winters.

We note that feature selection may be beneficial especially with very small training sets. Ideally, SVM automatically prioritizes the more important dimensions in the feature vector, but when only few examples are available, the danger of spurious correlations in less discriminative bands increases. For lake ice detection, where few channels carry most of the information, we recommend the use of automatic feature selection in case the SVM over-fits.

For a practically useful and efficient learning-based monitoring framework, a model should be trained using annotated data from a handful of lakes as well as a few winters, but should be able to predict for lakes and winters not seen during training. To test the performance of our approach in such scenarios, we perform leave one lake out and leave one winter out cross validation experiments. In all the following experiments, we used all 12 (5) bands of MODIS (VIIRS), optimum hyperparameters chosen by grid-search (cost 10 and gamma 1 for RBF kernel, cost 0.1 for linear kernel), and MTA with Gaussian kernel (smoothing parameter 3).
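Both protocols are instances of the same grouped splitting scheme: hold out all samples of one group (a lake or a winter), train on the rest, and rotate. A minimal sketch follows; the function name and the dictionary-based sample records are hypothetical and stand in for the per-pixel feature vectors.

```python
def leave_one_group_out(samples, group_key):
    """Yield (held_out_group, train, test) splits in round-robin order.

    Each sample is a dict; `group_key` names the field to hold out on,
    e.g. "lake" or "winter".
    """
    groups = sorted({sample[group_key] for sample in samples})
    for held_out in groups:
        train = [s for s in samples if s[group_key] != held_out]
        test = [s for s in samples if s[group_key] == held_out]
        yield held_out, train, test


# Hypothetical sample records: one entry per lake and winter.
samples = [
    {"lake": lake, "winter": winter}
    for lake in ("Sihl", "Sils", "Silvaplana", "St. Moritz")
    for winter in ("16-17", "17-18")
]

for held_out, train, test in leave_one_group_out(samples, "lake"):
    print(held_out, len(train), len(test))
```

Passing `"winter"` instead of `"lake"` produces the two leave one winter out folds from the same records.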

3.1.2. Leave One Lake out Cross Validation

This experiment evaluates the across-lake generalisation capability of the classifier. We trained the SVM model on pixels of all but one lake (from both winters) and tested on the pixels from the remaining lake, in round-robin mode. MODIS and VIIRS results are presented in Table 9 and Table 10, respectively. As per the results, our models fare well even when trained using only pixels from different lakes. Using both RBF and linear kernels, our algorithm gives excellent results on lakes Sils and Silvaplana consistently with both VIIRS and MODIS data. Table 2 shows that both these lakes are similar in many aspects. It is expected that for a learning-based system, predictions are better if the test data is more similar to the training data. The performance of the RBF kernel on lakes St. Moritz and Sihl is also good, but not as good as on Sils and Silvaplana. Recall that St. Moritz has just four clean pixels per MODIS acquisition (see Table 5) and that the absolute geolocation accuracy could be critical for such a small lake. It appears that for St. Moritz, unlike for the other lakes, the linear kernel does not generalise, but we do not draw any firm conclusions based on these results as the lake is too small. Lake Sihl is slightly different compared to the other lakes (altitude, area etc., see Table 2) and hence the SVM encounters a larger generalisation loss. Still, a mean IoU of 0.78 (corresponding to 93% correctly classified pixels) for MODIS, respectively 0.85 (95%) for VIIRS, is a rather good result. For both sensors, the performance of the linear kernel on Sihl is better compared to RBF. Given the fact that Sihl has a somewhat different geography than the other lakes, it appears that the linear kernel generalises better.

Table 9.

MODIS leave one lake out results. Numbers are in A/B format where A and B represent the results using Radial Basis Function (RBF) and linear kernels, respectively. The better kernel for a given experiment is shown in black, worse kernel in grey.

Table 10.

VIIRS leave one lake out results. Numbers are in A/B format where A and B represent the results using RBF and linear kernels, respectively. The better kernel for a given experiment is shown in black, worse kernel in grey.

3.1.3. Leave One Winter out Cross Validation

To investigate the adaptability of a model to the potentially different conditions of an unseen winter, we trained the classifier using pixels from one of the two available winters (from all lakes), and tested on the data from the other winter. The results for MODIS and VIIRS are shown in Table 11 and Table 12, respectively. Comparing these results with Table 7, it can be inferred that, across winters, the SVM does encounter a generalisation loss, especially with the RBF kernel. The loss with the linear kernel is minimal. Apparently, the RBF overfitted to the data characteristics of the specific winter and did not generalise as well as its linear counterpart. Note also, it is possible that freezing patterns could vary across winters even for the same lake, and learning-based systems might fail in case a pattern appears while testing that was not encountered during training. It is encouraging that the linear kernel does not seem to overfit much, owing to its relatively lower capacity. Still, the results hint that the annotated data from more than one winter should be present in the training set when setting up an operational system.

Table 11.

MODIS leave one winter out results. The numbers are shown in A/B format where A and B represent the outcomes using RBF and linear kernels, respectively. The better kernel for a given experiment is shown in black, worse kernel in grey. Left is winter 16–17, right is winter 17–18.

Table 12.

VIIRS leave one winter out results. The numbers are shown in A/B format where A and B represent the outcomes using RBF and linear kernels respectively. The better kernel for a given experiment is shown in black, worse kernel in grey. Left is winter 16–17, right is winter 17–18.

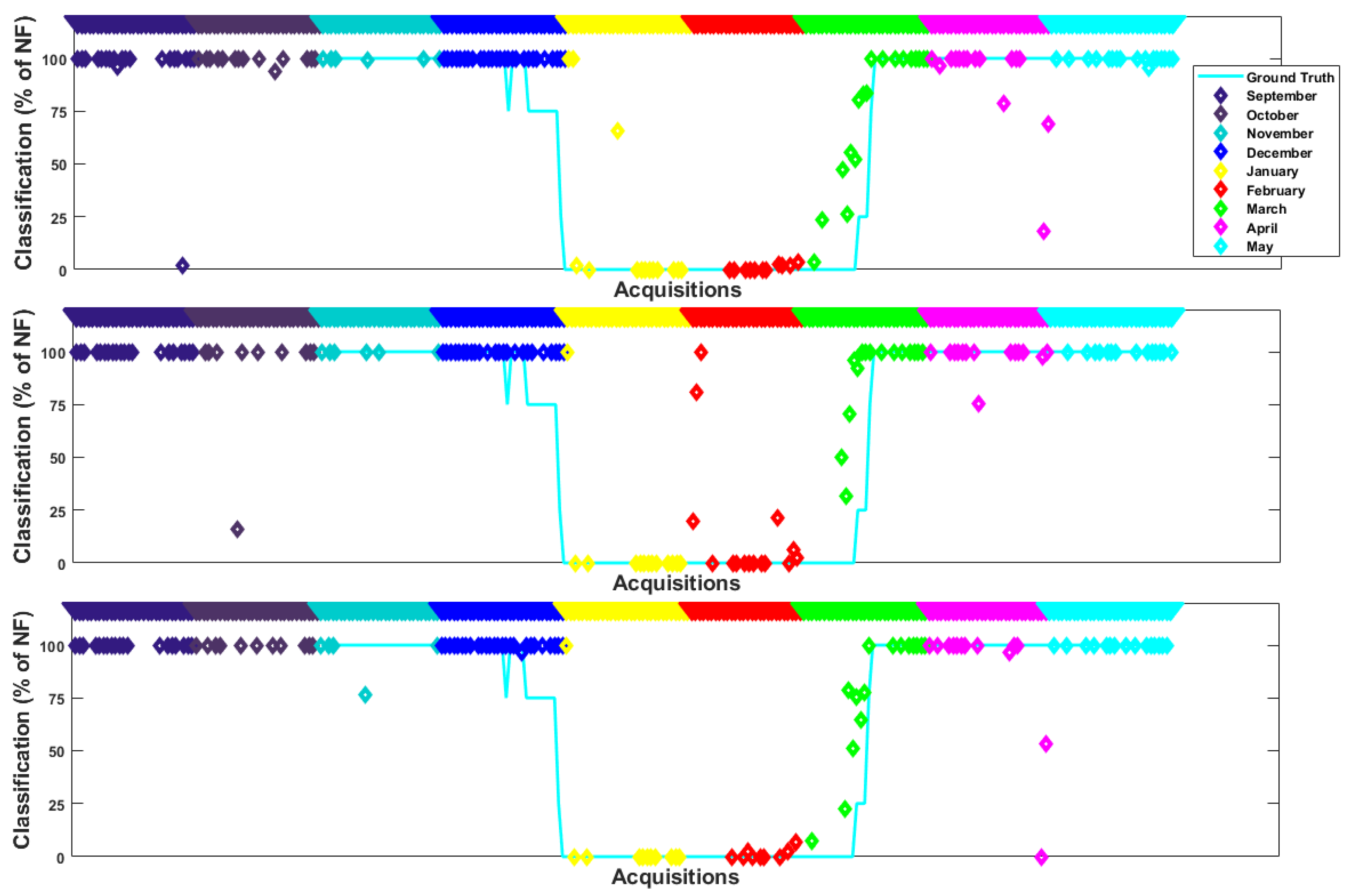

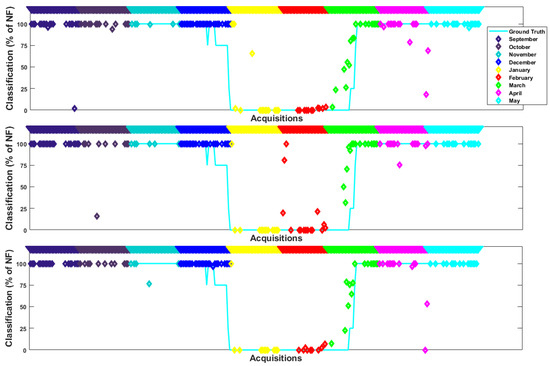

3.1.4. Timeline Plots and Qualitative Results

Figure 13 shows the results of lake Sihl from a full winter (September 2016 till May 2017), listed in chronological order on the x-axis. For each cloud-free day (at least 30% of the lake pixels non-cloudy), the SVM result is shown on the y-axis (in the top and middle timelines) as the percentage of cloud-free clean pixels that are classified as non-frozen. In the bottom timeline, we display the MODIS snow product (100 means no snow and 0 means fully snow covered). The webcam-based ground truth is shown as a cyan colour line in all timeline plots, with four levels (100 for fully non-frozen, 75 for more snow or more ice days, 25 for more water and 0 for fully-frozen). For each sensor, the combined training data of all available lakes from two winters (except Sihl from 16–17) is used for these timeline plots. Both timelines show that confusion between thin ice and water exists for MODIS as well as for VIIRS. This is evident during the freeze-up period (late December), when the model classifies a set of consecutive days as completely non-frozen, while the ground truth asserts more ice, probably thin ice floating on water.
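The per-day value plotted in the timelines can be expressed as a small function. This is a sketch under assumptions: the string labels for pixel states and the function name are hypothetical, and only the 30% cloud-free criterion and the definition of the plotted percentage are taken from the text.

```python
def percent_non_frozen(pixel_states, min_clear_fraction=0.3):
    """Percentage of cloud-free lake pixels classified as non-frozen.

    `pixel_states` lists one label per clean lake pixel for a single day:
    "frozen", "non-frozen", or "cloud". Returns None for days on which
    fewer than `min_clear_fraction` of the pixels are cloud-free, so that
    such days are left out of the timeline.
    """
    clear = [state for state in pixel_states if state != "cloud"]
    if not pixel_states or len(clear) / len(pixel_states) < min_clear_fraction:
        return None
    non_frozen = sum(1 for state in clear if state == "non-frozen")
    return 100.0 * non_frozen / len(clear)
```

Because cloudy pixels are excluded from the denominator, a day with half of the lake under cloud and the visible half evenly split between the two classes is reported as 50% non-frozen.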

Figure 13.

MODIS (top) and VIIRS (middle) timeline results of lake Sihl for full winter 16–17 using linear kernel. A timeline of the MODIS snow and ice product (bottom) is also plotted for comparison with our results and the webcam-based ground truth. In all timelines, the x-axis shows all dates that are at least 30% cloud-free in chronological order and the respective results [% of Non-Frozen (NF) pixels] are plotted on the y-axis.

In this paper, we compare our results of lake Sihl from winter 2016–2017 with the MODIS snow product [15]. It can be inferred from Figure 13 that, except for very few days, our MODIS results are in agreement with the MODIS snow product. Although the newly added MOD10A1F [20] (collection 6.1) seems to be a better option owing to its 'cloud gap filled' feature, we use the MOD10A1 product [15] (collection 6, daily cloud-free snow cover derived from the Terra MODIS) since the former product is not yet available [59] for winter 2016–2017. Note that the MODIS product has a relatively coarse spatial resolution of 500 m as opposed to our results at 250 m resolution.

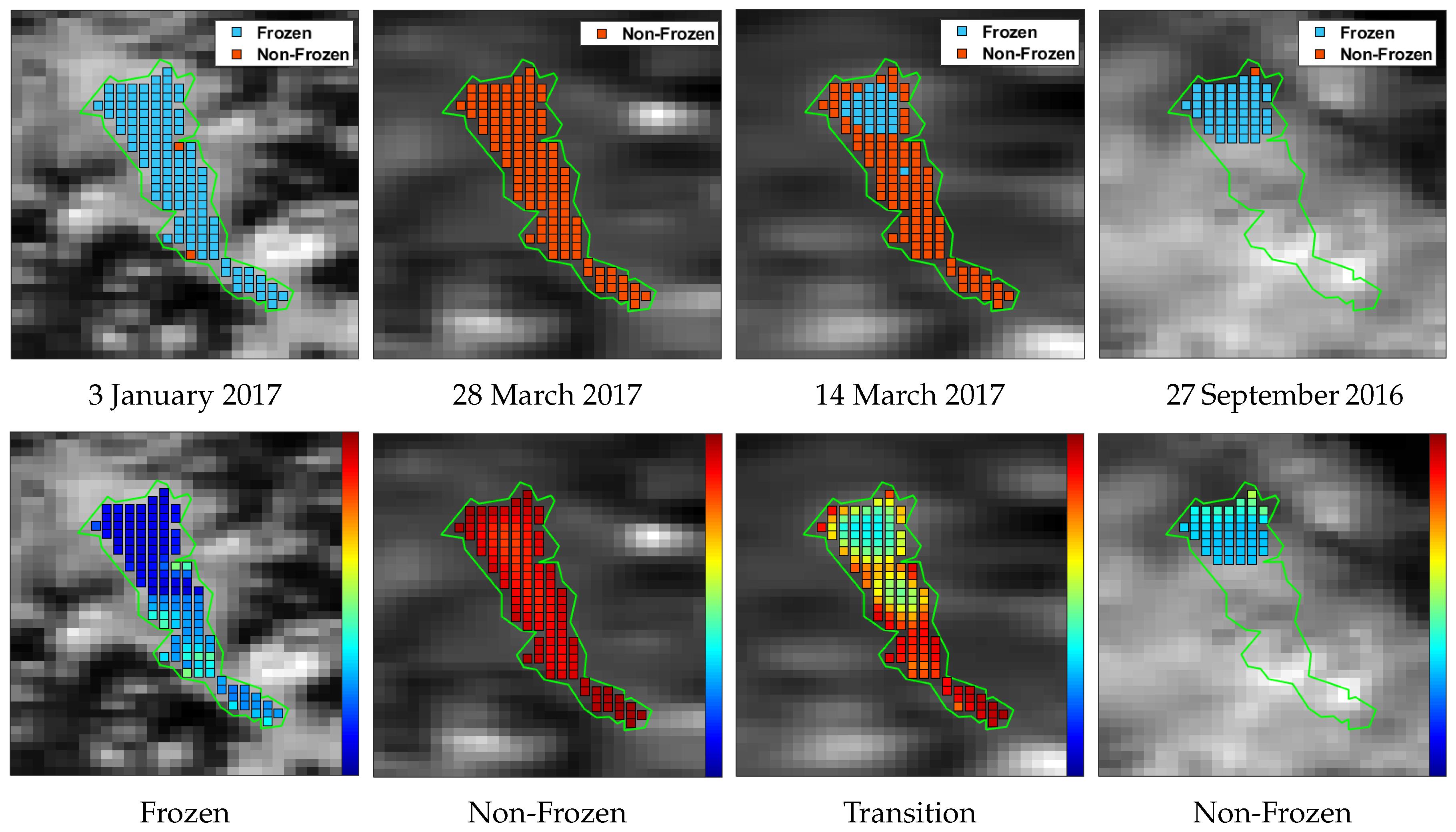

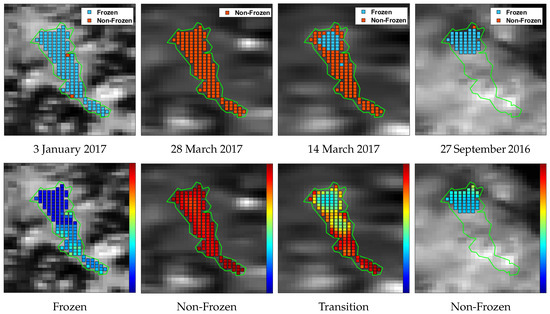

Figure 14 displays exemplary qualitative results (lake Sihl, MODIS data, winter 16–17). Three non-transition dates (27 September 2016, 3 January 2017, and 28 March 2017) and a transition date (14 March 2017) are shown. The first and second rows portray the classification results and the confidence of the classifier (soft probability maps) respectively. In row 1, a clean pixel is shown as blue if the classifier estimates it as frozen, and red if non-frozen. In the second row, more blue/less red colour denotes a higher probability of being frozen. A pixel is not processed if it is cloudy. All except the fourth column show successfully classified days. In column 4, we present the results of an actually fully non-frozen day (27 September 2016) that was detected as almost fully frozen. Note the missing cloudy pixels in this image. This example shows that erroneous cloud masks (especially the false negatives) also induce errors in our predictions. Similar effects can be observed for the end of April (MODIS) and early October (VIIRS). Confusion due to undetected clouds is also the reason why a few days were estimated as non-frozen during mid-winter (see VIIRS timeline, February).

Figure 14.

MODIS qualitative results using the linear kernel. Top and bottom rows show classification results and corresponding confidence respectively. Results of cloudy pixels are not displayed. First, second, and third columns show success cases while the fourth column displays a failure case. In the second row, more red means more non-frozen and more blue means more frozen. The dates and ground truth labels are shown below each sub-figure in the first and second rows respectively.

3.2. Experiments with Webcam Images

The neural network is implemented in Tensorflow [60]. We extracted square patches (crop size, see Table 13) from the images and trained the network by minimising the weighted cross-entropy loss, giving more attention to the under-represented classes to compensate for imbalances in the training data. Testing is performed at full image resolution without cropping. More details about the hyperparameters used are shown in Table 13.
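The effect of class weighting on the loss can be illustrated as follows. The text does not state how the class weights were chosen, so the inverse-frequency weighting used here is an assumption, as are the function names and the normalisation by the number of pixels.

```python
import math


def inverse_frequency_weights(pixel_counts):
    """Class weights inversely proportional to class frequency.

    Assumed scheme: weight = total / (n_classes * count), which gives a
    weight of 1 to every class when the classes are perfectly balanced.
    Every class must have a non-zero pixel count.
    """
    total = sum(pixel_counts.values())
    n_classes = len(pixel_counts)
    return {cls: total / (n_classes * count) for cls, count in pixel_counts.items()}


def weighted_cross_entropy(probabilities, labels, weights):
    """Mean weighted cross-entropy over a set of pixels.

    `probabilities` holds one dict (class -> predicted probability) per
    pixel and `labels` the corresponding true class names.
    """
    losses = [
        -weights[label] * math.log(probs[label])
        for probs, label in zip(probabilities, labels)
    ]
    return sum(losses) / len(losses)
```

With 900 snow pixels and 100 ice pixels, this scheme assigns a weight of about 0.56 to snow and 5.0 to ice, so a misclassified ice pixel contributes roughly nine times as much to the loss as a misclassified snow pixel with the same predicted probability.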

Table 13.

Hyperparameters for the Deep-U-Lab model.

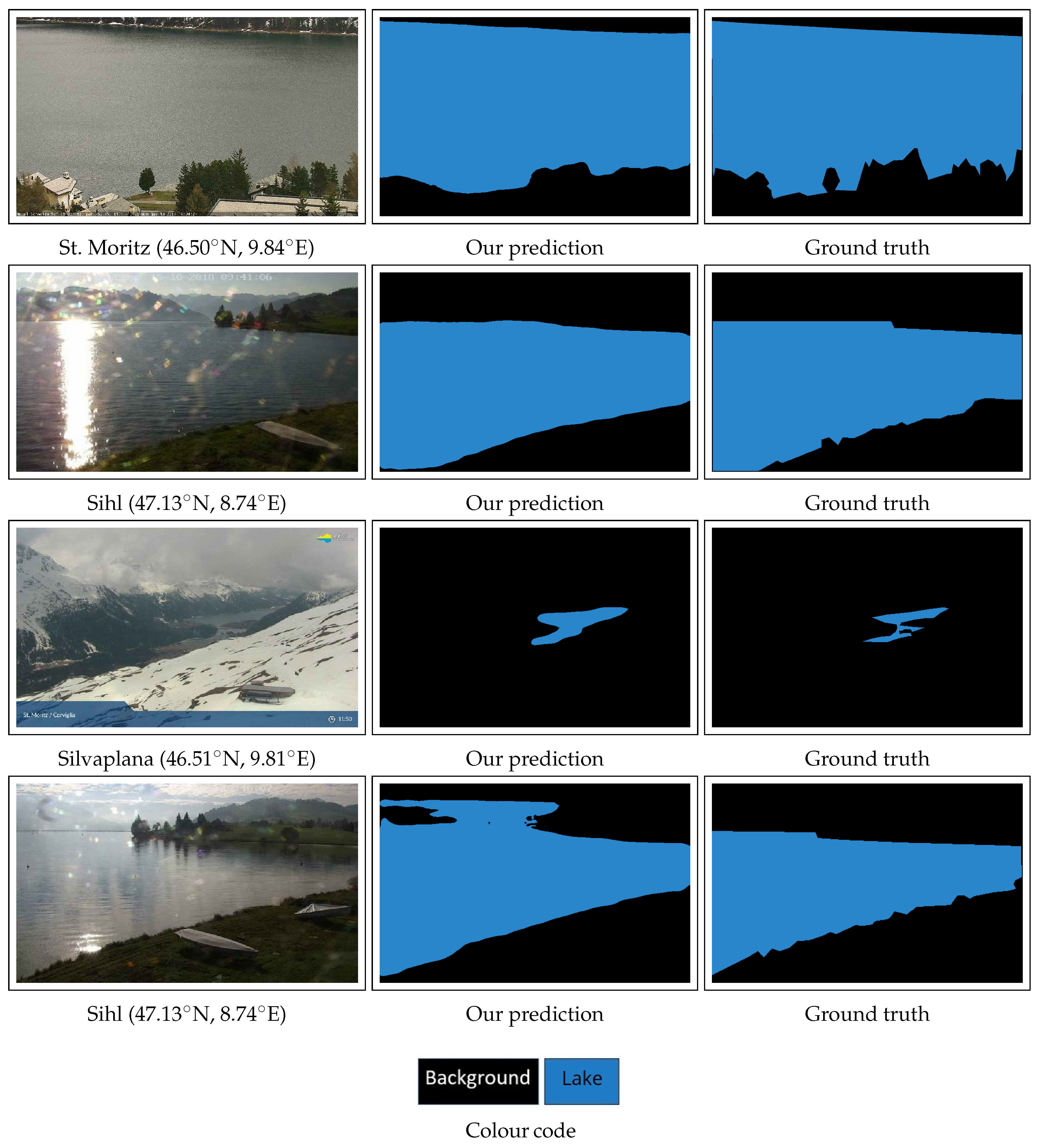

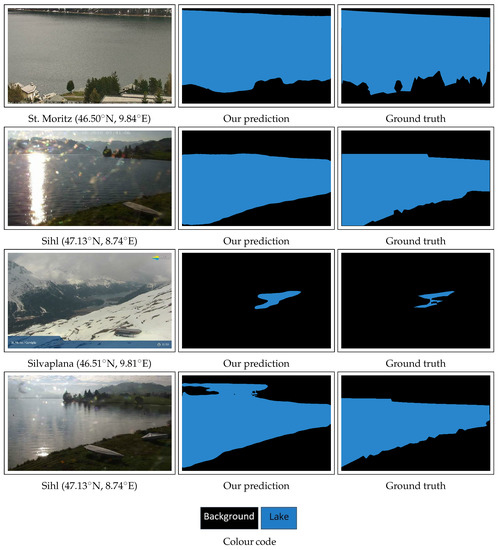

For the task of lake detection, we collected image streams from four different lakes, see Table 14. The cameras near lakes Sihl and St. Moritz are rotating while the others are stationary. Performance of the network for lake detection is assessed only on summer images in order to sidestep the complications in winter due to the presence of snow in and around the lake. A score of ≥0.9 mIoU is achieved, see Table 14. However, the IoU for the lake (class foreground, FG) is somewhat lower because of the severe class imbalance. Note that IoU is a rather strict measure, e.g., detection with 80% recall at 80% precision results in an IoU of only about 67%. Qualitative results are displayed in Figure 15. The first three rows show successful cases while the last row displays a case with some misclassification. Note that on such a low visibility day, even humans find it difficult to spot the transition from lake to sky. In contrast, our network detected the lake even in the presence of challenging sun reflections (row 2) and when the foreground lake area is very small (row 3).
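The strictness of IoU relative to precision and recall follows directly from their definitions. The short sketch below (function name chosen for illustration) derives the IoU of a class from its precision and recall.

```python
def iou_from_precision_recall(precision, recall):
    """IoU of a class expressed through its precision and recall.

    With TP, FP, FN the true positive, false positive and false negative
    counts:
        1 / precision = (TP + FP) / TP
        1 / recall    = (TP + FN) / TP
        1 / IoU       = (TP + FP + FN) / TP = 1 / precision + 1 / recall - 1
    Both arguments must be greater than zero.
    """
    return 1.0 / (1.0 / precision + 1.0 / recall - 1.0)


print(round(iou_from_precision_recall(0.8, 0.8), 3))
```

For precision and recall of 0.8 each, the function returns 2/3, i.e., an IoU well below either of the two input values.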

Table 14.

Lake detection results (mIoU). Lake Silvaplana is monitored by four cameras, each of which is reported separately. BG and FG denote background and foreground (lake area) respectively.

Figure 15.

Lake detection results. Both success (rows 1,2,3) and failure (row 4) cases are shown. The colour code used to visualise the results is also displayed. The first column shows the lakes being monitored, along with the approximate location (latitude, longitude) of the webcam.

To assess lake ice segmentation, we experimented exhaustively for the two lakes (St. Moritz, Sihl) and two winters (16–17, 17–18) annotated in the Photi-LakeIce dataset. The evaluation includes experiments for segmentation within the same camera, across cameras, across winters, and across lakes.

For same camera experiments, we employed a 75%/25% train-test split. Corresponding quantitative results are presented in Table 15. Note that, in all comparable experiments, we surpass previous state-of-the-art [34] by a significant margin. They produced results only on two cameras monitoring St. Moritz. We demonstrate our system also on a new lake (Sihl, camera 2) with images that are significantly harder to classify (see Figure 5 and Table 6) due to poor spatial resolution, image compression artefacts, frequent unfavourable lighting, etc. Additionally, the foreground to background pixel ratios in Sihl images are very low, which poses an additional challenge, and magnifies the influence of very small misclassified areas on the quantitative error metrics. As a result, the performance on lake Sihl is not as good as for St. Moritz. Nevertheless, the predictions have a mean IoU > 74%. The images with a mix of classes, like water with some ice or partially snow-covered ice are the most difficult ones to classify in part due to the fact that the ice class is especially rare and therefore under-represented in the training data, as snow that falls on the ice does not melt away for a long time.

Table 15.

Results (IoU) of same camera train/test experiments. We compare our results with Tiramisu Network [34] (shown in grey). Cameras 0 and 1 monitor lake St. Moritz while camera 2 captures lake Sihl.

All results shown so far are for networks trained with data augmentation. To quantify the influence of this common practice, we also report results without augmentation for camera 0, which are 2 percentage points lower, see Table 16.

Table 16.

Effect of data augmentation (IoU values) on the same camera train/test experiment (camera 0).

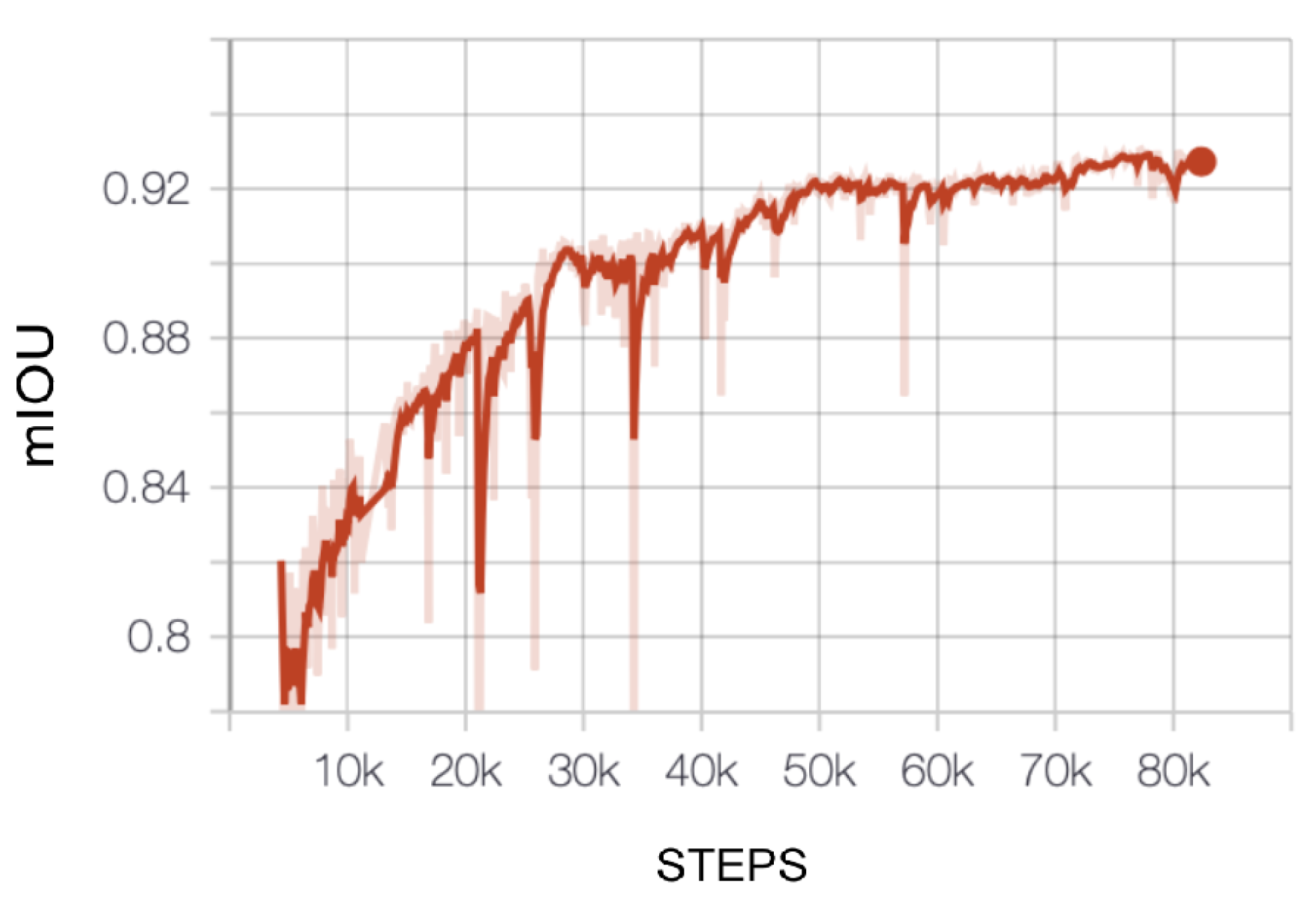

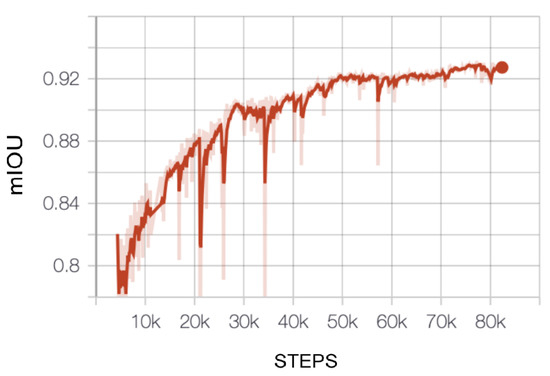

Additionally, in order to study how quickly the network learns, the mIoU is plotted on the training set against the number of training iterations. For that study, we use the example of lake St. Moritz (camera 0) from winter 16–17. Results are shown in Figure 16. The (smoothed) learning curve is very steep initially (<10 k steps) but does not completely saturate, which indicates that more training data could probably improve the results further.

Figure 16.

Evolution of mean IoU (mIoU) against the number of training steps (camera 0, St. Moritz, winter 2016–2017). Dark red curve represents a smoothed version of the original (light red) curve.

The generalisation performance (across cameras and winters) of the best webcam model reported in previous work [34] is still unsatisfactory, especially for the cross-camera case. As can be seen from our cross-camera results (within St. Moritz cameras, see Table 17), the Deep-U-Lab model trained using data from one camera works well on a different camera, meaning that our method generalises well across cameras with totally different viewpoints, image scales, and lighting conditions. Note that we improve significantly over the prior state-of-the-art [34] (gain of 35–40 percentage points), which implies that Deep-U-Lab has the capacity to learn generalisable class appearance, without overfitting to a specific camera geometry or viewpoint. Our results for winter 17–18 are not as good as for 16–17, primarily due to complicated lighting and ice patterns (e.g., black ice) which appeared only in that winter. In addition, the scores for the ice and clutter classes are low, primarily due to lower sample numbers. A comparison to prior work is not possible for winter 17–18, as that season has not been processed before.

Table 17.

Results (IoU) of cross-camera experiments. We compare our results with the Tiramisu Network [34] (shown in grey). Both cameras 0 and 1 monitor lake St. Moritz.

Deep-U-Lab also outperforms the prior state-of-the-art [34] in cross-winter experiments (Table 18), by about 14–20 percentage points. However, it does not generalise across winters as well on lake Sihl.

Table 18.

Results (IoU) of cross-winter experiments. We compare our results with the Tiramisu Network [34] (shown in grey). Cameras 0 and 1 monitor lake St. Moritz while camera 2 captures lake Sihl.

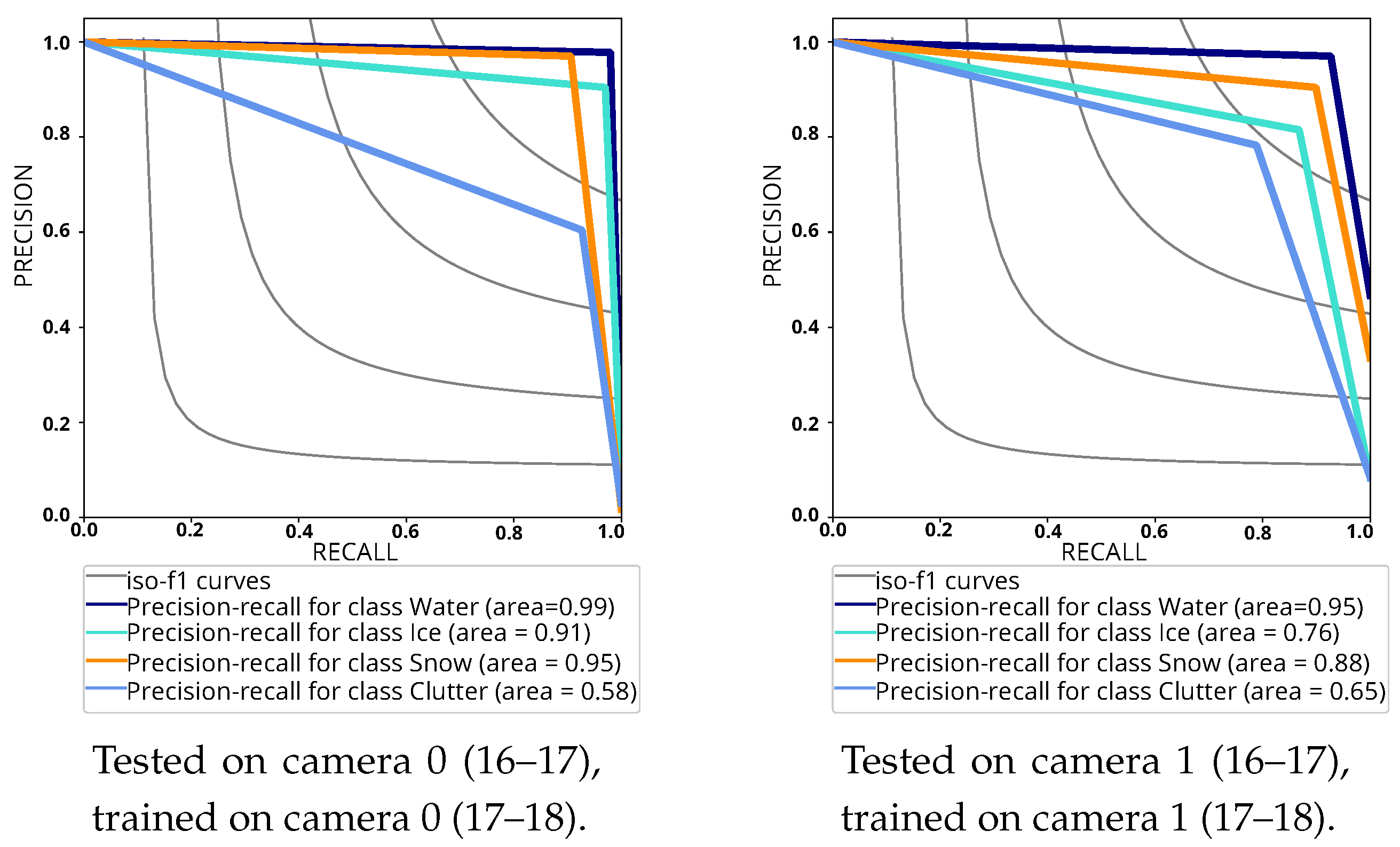

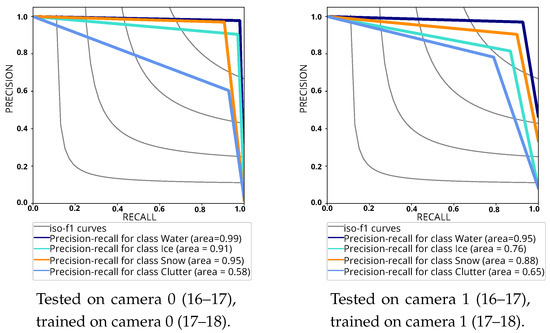

For a more complete picture of the cross-winter generalisation experiment, we also plot precision-recall curves (see Figure 17). Similar curves for same camera and cross-camera experiments can be found in Prabha et al. [36]. As expected, segmentation of the two under-represented classes (clutter and ice) is less accurate. Additionally, for the class clutter, a considerable share of the deviations from ground truth occurs due to improper annotations rather than erroneous predictions. As drawing pixel-accurate ground truth boundaries around narrow man-made items placed on frozen lakes such as tents, poles, etc. is time-consuming and tedious, the clutter objects are often annotated with rough summary masks that include considerable snow/ice background. This greatly exaggerates the (relative) number of clutter pixels in the annotations, thus increasing the relative error.

Figure 17.

Precision-recall plots (St. Moritz) of cross-winter experiments. Best if viewed on screen.

According to Figure 17, operating points around 85% recall are a good trade-off for cross-winter segmentation, if not every single pixel must be labelled. A more extreme test is generalisation across lakes, with different spatial resolution, image quality, reflection, and lighting patterns, shadows, etc. To our knowledge our work is the first one to try this. See Table 19 for results. Before training the models, we remove the clutter pixels from camera 0, since camera 2 does not have any clutter that could serve as training data. Classifying images from a lake with different characteristics and acquired with a different type of camera proves challenging. In one case, the results are acceptable for the more frequent classes despite a noticeable drop, as for the case camera 2→camera 0. In the other case camera 0→camera 2 the attempt largely fails. The images of lake Sihl (camera 2) are of clearly lower quality and more difficult to classify, challenging even human annotators. Consequently, training on St. Moritz does not equip the classifier to deal with them.

Table 19.

Results (IoU) of cross-lake experiments. Cameras 0 and 2 monitor lakes St. Moritz and Sihl respectively.

In a further experiment, we divide the Photi-LakeIce dataset into six folds, see Table 20. This makes it possible to perform experiments with a larger amount of training data, given that in previous experiments the loss had not fully saturated. As expected from a high-capacity statistical model, more training data improves the results i.e., it seems feasible to build a practical system if one is willing to undertake a bigger (but still reasonable and realistic) annotation effort. An exception in this experiment is lake Sihl (camera 2), where the performance drops. This confirms the observation above that this camera is the most difficult one to segment in our dataset, and the domain gap from St. Moritz to Sihl is too large to bridge without appropriate adaptation measures. One solution might be fine-tuning with at least a small set of cleverly picked samples from the target lake, but this is beyond the scope of the present paper.

Table 20.

Results (IoU) of leave one dataset out experiments. Cameras 0 and 1 monitor lake St. Moritz while camera 2 captures lake Sihl.

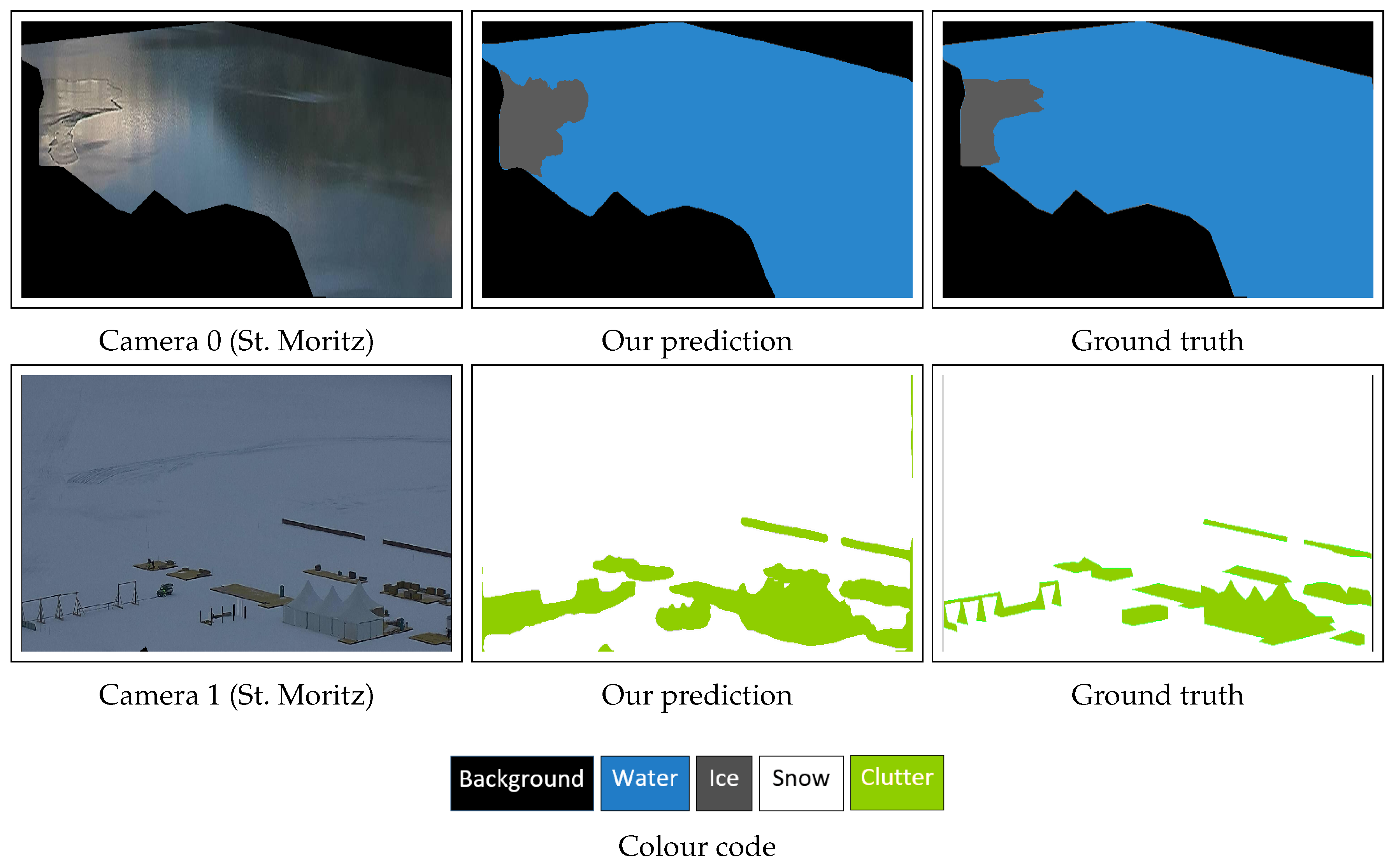

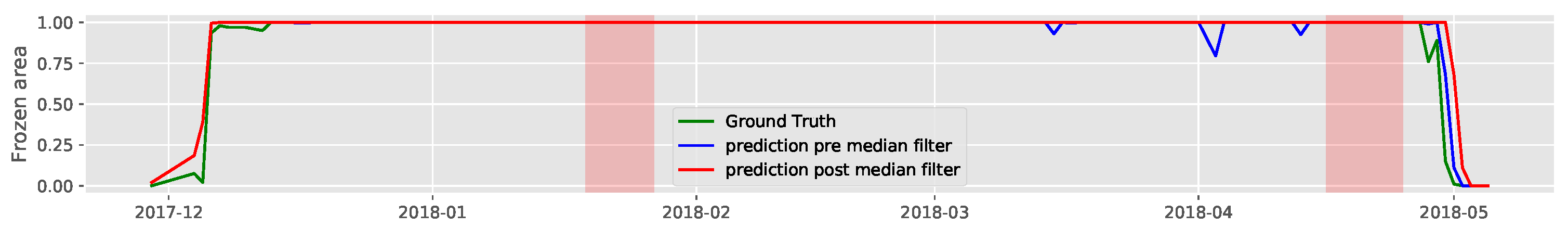

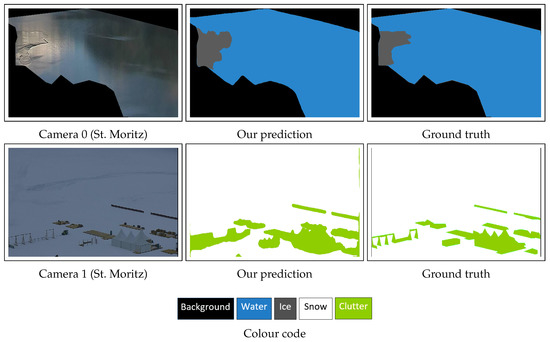

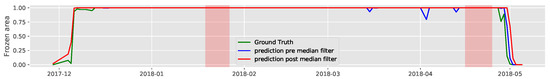

To assess the lake ice segmentation visually, we depict qualitative webcam results for cameras 0 and 1 in Figure 18. Deep-U-Lab segments the images correctly even in challenging scenarios. For instance, our network performed well even when shadows appeared on the lake either from clouds or nearby mountains (Figure 18 row 1). To determine how well the Deep-U-Lab predictions follow the ground truth, especially during freezing and thawing periods, we plot time series results that include all the transition as well as non-transition days from a full winter (17–18, see Figure 19). Per image, we sum the numbers of ice and snow pixels and divide by the sum of all lake pixels. The resulting fractions of frozen pixels are aggregated into a daily value by taking the median. Optionally, the daily values are further processed with another 3-day median to improve temporal coherence. The daily fractions of frozen pixels (y-axis) are displayed in chronological order (x-axis), for the ground truth, daily prediction, and smoothed prediction. Smoothing across time improves the final results by ≈3%.
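The aggregation from per-image predictions to a daily time series can be sketched as follows. The function names and the input layout (per-image pixel counts per class) are assumptions, as is the use of a centred 3-day window that is clipped at the ends of the series.

```python
from statistics import median

FROZEN_CLASSES = {"ice", "snow"}


def frozen_fraction(pixel_counts):
    """Fraction of lake pixels predicted as ice or snow in one image.

    `pixel_counts` maps each lake class (water, ice, snow, clutter) to its
    number of pixels; background pixels are assumed to be excluded already.
    """
    lake_total = sum(pixel_counts.values())
    frozen = sum(n for cls, n in pixel_counts.items() if cls in FROZEN_CLASSES)
    return frozen / lake_total


def daily_frozen_fraction(images_by_day):
    """Median frozen fraction over all images of each day."""
    return {
        day: median(frozen_fraction(counts) for counts in images)
        for day, images in images_by_day.items()
    }


def median_smooth(values, window=3):
    """Centred running median over chronologically ordered daily values."""
    half = window // 2
    return [
        median(values[max(0, t - half): t + half + 1])
        for t in range(len(values))
    ]
```

The running median removes isolated single-day outliers, for example `median_smooth([0.0, 1.0, 0.0, 0.0, 1.0, 1.0])` suppresses the spike on the second day while keeping the final step from 0 to 1 at the same position.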

Figure 18.

Qualitative lake ice segmentation results on webcam images. The colour code is also shown.

Figure 19.

Cross-camera time series results (winter 17–18) of lake St. Moritz using Deep-U-Lab. Results for camera 1 (when trained on camera 0 data) are displayed. All dates are shown in chronological order on the x-axis and the respective results (percentage of frozen pixels) are plotted on the y-axis. Data lost due to technical failures are shown as red bars.

3.3. Ice-on/off Results

We go on to estimate the ice-on and ice-off dates using our satellite- and webcam-based approaches. The results are shown in Table 21. A comparison with the ground truth dates (estimated by visual interpretation of webcams by a human operator) is also provided. Additionally, we compare our results with the in-situ temperature analysis results reported in Tom et al. [34]. We can only show the results for one winter (16–17), since ground truth is not available for 17–18.

Table 21.

Ice-on/off dates (winter 16–17). Ground truth dates are shown in the order of confidence in case of more than one candidate.

Prior to the estimation of the two dates, we combine the time series results of both MODIS and VIIRS (Figure 13) in order to minimise data gaps due to clouds, by simply filling in missing days in the VIIRS time series with MODIS results, whenever the latter are available. Even after merging the two time series, some gaps still exist during the critical transition periods. Note that the presence of gaps near the ice-on/off dates could affect the accuracy and confidence in the estimated dates. This is one of the risks when using optical satellite image analysis and where webcams could constitute a valuable alternative, if sufficient coverage can be ensured for a lake of interest.
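The gap-filling rule described above amounts to a dictionary merge in which VIIRS takes precedence. The sketch below assumes that each time series is a mapping from ISO-formatted date strings to the daily result and that cloudy days are simply absent; the function name and the example values are hypothetical.

```python
def merge_time_series(viirs, modis):
    """Fill days missing from the VIIRS series with MODIS results.

    Both inputs map ISO date strings (YYYY-MM-DD) to a daily value, e.g.
    the percentage of non-frozen pixels. Days missing from both series
    remain gaps in the merged result.
    """
    merged = dict(modis)
    merged.update(viirs)  # VIIRS values overwrite MODIS on shared days
    return dict(sorted(merged.items()))  # ISO dates sort chronologically


# Hypothetical values for illustration only.
viirs = {"2017-01-01": 0.0, "2017-01-03": 10.0}
modis = {"2017-01-01": 5.0, "2017-01-02": 0.0}
print(merge_time_series(viirs, modis))
```

In this example, 1 January keeps the VIIRS value, 2 January is filled from MODIS, and any day missing from both sensors stays a gap.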

We estimate the ice-on/off dates from the combined time series and show them as “satellite approach” in Table 21. The best results are obtained with an RBF kernel for St. Moritz and with a linear kernel for the rest of the lakes. In most cases the ice-on/off dates have an offset of 1–4 days from the ground truth. Exceptions are the ice-off dates of Sils and Silvaplana. Note that data from only one winter (of the lake being tested) is present in the corresponding training set. It appears that training data from more winters is critical to estimate accurate ice-on/off dates. We note that there could also be noise in the ground truth ice-on/off dates due to human interpretation errors. Using the satellite approach, significant errors in ice-on/off estimation exist for St. Moritz. Recall that the daily decision for lake St. Moritz is taken based on just four pixels and based only on cloud-free days in MODIS. This clearly points to the fact that MODIS (and even more so, VIIRS) imagery is not the best choice for very small lakes. The results obtained with webcams show a higher accuracy for lake St. Moritz (see Table 21 and Figure 19). Here, we use camera 0 to estimate the two phenological dates, since it has a better coverage of the lake than camera 1. Note that no data is available between 18 March 2017 and 26 April 2017 due to a technical problem with the camera, and ice-off unfortunately occurred during that period. While we obtain excellent results for St. Moritz with webcams, the accuracy of ice-on/off for lake Sihl is not good, primarily because of the limited image quality with low spatial resolution (see Table 6), compression artefacts, and acute view angles (see Figure 5).

4. Discussion

4.1. VIIRS and MODIS Analysis

Optical satellite sensors such as MODIS and VIIRS can clearly serve as a basis for routine monitoring of lake ice (especially for global coverage), and the results achieved show a high level of accuracy. One weakness is their inability to penetrate clouds, which is particularly problematic during lake freeze-up and break-up. The main advantage of MODIS is the availability of longer time series data. In addition, MODIS has useful bands in various areas of the electromagnetic spectrum. However, there are several disadvantages too: the radiometric quality is not very good, the sensor is very old, and its absolute geolocation is less accurate than that of VIIRS (which matters more for small lakes). Furthermore, MODIS data is expected to eventually be discontinued, whereas VIIRS operation is guaranteed over a longer future period (JPSS-1/NOAA-20 until 2024; JPSS-2, with the same suite of sensors, will be launched in 2021 with a design lifetime of 7 years; JPSS-3 and -4 are in the planning phase).

Poor spatial resolution (particularly for VIIRS) makes it impossible to operate our satellite methodology on small lakes, up to a size of at least 2 km. Another issue is the significant confusion between (thin) ice and water, since the similar reflectance of these two classes can confuse the classifier. Unfortunately, there exist very few non-transition dates with no clouds, snow-free ice, and a mixture of thin ice and water in both the MODIS and VIIRS datasets, such that training a reliable model for these situations still remains a challenge. Moreover, the presence of label noise in the ground truth impacts the training. Such noise occurs mainly because most of the webcams are not optimally configured and it is very difficult to capture the whole lake in a single webcam frame. This problem is even bigger for larger lakes. Integrating the visual interpretations from multiple cameras observing a lake is cumbersome as well as challenging. One possible solution for the noise problem could be to not train on dates near the transition period, for which label noise in the ground truth is more likely. It is, however, equally possible that this would even aggravate the problem, as the conditions seen in the training set would become even less representative of the transition periods. Large-scale in-situ measurements are an alternative to webcam-based ground truth, but are not realistic for wide-area coverage. Furthermore, imperfections of the cloud masks introduce additional errors in the high-accuracy range where our method operates.
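To make the idea of excluding training dates near the transition period concrete, the following sketch filters a list of candidate training dates. It only illustrates the option discussed above and was not part of our experiments; the function name `drop_transition_dates` and the width of the exclusion window (`margin_days`) are hypothetical choices.

```python
from datetime import date, timedelta


def drop_transition_dates(dates, transition_dates, margin_days):
    """Keep only dates farther than margin_days from every transition date.

    dates: candidate training dates (datetime.date objects).
    transition_dates: e.g., ground truth ice-on and ice-off dates.
    margin_days: half-width of the exclusion window (an assumed parameter).
    """
    margin = timedelta(days=margin_days)
    return [
        d for d in dates
        if all(abs(d - t) > margin for t in transition_dates)
    ]


# Made-up example: ten consecutive days, one transition date in the middle.
candidates = [date(2017, 1, 1) + timedelta(days=i) for i in range(10)]
kept = drop_transition_dates(candidates, [date(2017, 1, 5)], margin_days=2)
```

A wider margin removes more potentially mislabelled days but also, as noted above, makes the training set less representative of the transition periods.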

Regarding our SVM-based methodology, the RBF kernel tends to not generalise as well as its linear counterpart. However, this behaviour may depend on the available training data. Under our current experimental conditions, the linear kernel overall has the upper hand, but this assessment could still change when using data from multiple winters.

4.2. Webcam Analysis

For webcam data featuring sufficient spatial resolution, we see great potential for lake ice monitoring. We do note that webcam placement is restricted by practical considerations. Selecting and/or mounting webcams for lake ice monitoring will normally be a compromise between the ideal geometric configuration and finding a place where the device can be installed with reasonable effort. For the ideal placement, the usual perspective imaging rules apply: most importantly, viewing directions from above are preferable to grazing angles, and viewing directions directly towards the sun should be avoided as much as possible.

One question that still remains unanswered is why Deep-U-Lab outperforms the Tiramisu lake ice network [32,33,34]. One possible explanation is that our model profits from the dilated convolutions and multi-scale pyramid pooling at the feature extraction stage, effectively letting the network grasp a relatively broader context than the Tiramisu network. Additionally, our model benefits heavily from the pre-trained weights, which allow it to learn from the still limited training data for the lake ice task. Our Deep-U-Lab model did not converge when we tried to train it without transfer learning, whereas pre-trained weights for FC-DenseNet are not available, so we cannot at the moment quantify the influence of pre-training.

Regarding the computational efficiency of the CNN approach, (off-line) training for 100k steps on camera 0 (820 images) takes ≈24 h on a PC equipped with an NVIDIA GeForce GTX 1080 Ti graphics card (for the cross-camera experiment, lake St. Moritz, winter 16–17). Testing takes ≈10 min for the 1180 images of camera 1, i.e., roughly 0.5 s per image.

5. Conclusions

We investigated the potential of machine learning-based image analysis, in combination with various image sensors, to retrieve lake ice. So far such an approach has rarely been explored, especially with regard to the many small lakes on Earth (particularly in mountainous regions), but it can be a valuable source of information that is largely independent of in-situ observations as well as models of the freezing/thawing process. We put forward an easy-to-use, SVM-based approach to detect lake ice in MODIS and VIIRS satellite images and show that it delivers conclusive results. Additionally, we set a new state of the art for webcam-based lake ice monitoring, using the Deep-U-Lab network, and have in that context also automated the detection of lake outlines as a further step towards operational monitoring with webcams. Finally, we introduced a new, public webcam dataset with pixel-accurate annotations.

To detect lake ice from MODIS and VIIRS optical satellite imagery, we proposed a simple, generic machine learning-based approach that achieves high accuracy for all tested lakes. Though we focused on Swiss Alpine lakes, the proposed approach is very straightforward and hence could hopefully be directly applied to other lakes with similar conditions, in Switzerland and abroad, and possibly to other sensors with similar characteristics. We demonstrated that our approach generalises well across winters and lakes (with similar geographical and meteorological conditions). In addition to the lake ice detection from space, we have proposed the use of free data from terrestrial webcams for lake ice monitoring. Webcams are especially suitable for small lakes (ca. up to 2 km), which cannot be monitored by VIIRS-type sensors. Despite the limited image quality, we obtained promising results using deep learning. Webcams have good ice detection capability with a much higher spatial resolution compared to satellites. However, one has no control over the location, orientation, lake area coverage, and image quality (often poor) of public webcams. In addition, there are no, or too few, webcams for some lakes. On the positive side, webcams are largely unaffected by cloud cover. For continental/global coverage, satellite-based monitoring is clearly the method of choice, again confirming the advantage of satellite observations for large-scale Earth observation. For focused, local campaigns and as a source of reference data at selected sites, the webcam-based monitoring method may be an interesting alternative. Note also that it may in certain cases be warranted to install dedicated webcams (or surveillance cameras) with pan, tilt and zoom functionalities optimised for lake ice monitoring.

One way to circumvent the problem of clouds with optical satellite sensors is to use microwave data. In particular, Sentinel-1 SAR data (GSD 10 m, freely available) looks very promising [37]. Optical sensors such as Sentinel-2 and Landsat-8 are visually easier to interpret w.r.t. lake ice than radar, and have a better spatial resolution than MODIS and VIIRS. Although they are not suitable as a stand-alone source for lake ice monitoring due to their low temporal resolution (five days under ideal conditions), they may still in certain cases be useful to fill gaps in VIIRS/MODIS results.

We consider our satellite-based approach a first step and ultimately hope to produce a 20-year time series, using MODIS data since 2000. It will be interesting to correlate the longer-term lake freezing trends with other climate time series such as surface temperature or CO₂ levels. We expect that such a time series will be helpful to draw conclusions about local and global climate change.

One technical finding of our study is that the prior learning-based approaches [33,34] did not fully leverage the power of deep CNNs to observe lake ice. At the methodological level, we clearly demonstrated the potential of machine (deep) learning systems for lake ice monitoring, and hope that this research direction will be pursued further. Given the good cross-winter and cross-camera generalisation of the models and computational efficiency at inference time (on GPU for the CNN part), an operational deployment is within reach. Our results show that employing the state-of-the-art CNN frameworks was highly effective for ice analysis, especially during the transition periods. What still needs improvement is cross-lake generalisation. We do expect that a Deep-U-Lab model trained using data from a couple of winters could consistently reach >80% IoU on the four major classes. From the segmentation results we were in many cases able to determine the ice-on and ice-off dates to within 1–2 days and for that task the relatively better quality webcams were particularly helpful, as satellite-based segmentation was less reliable during the transition periods. An interesting direction may be to reduce the one-time effort for ground truth labelling with techniques such as domain adaptation or active learning.
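For reference, the IoU figures quoted above follow the usual definition for semantic segmentation: per class, the number of correctly labelled pixels divided by the size of the union of predicted and ground truth pixels of that class, averaged over classes to obtain the mIoU. The sketch below is a generic illustration rather than our evaluation code; the function names, the integer class ids, and the toy labels are assumptions.

```python
def per_class_iou(y_true, y_pred, num_classes):
    """Per-class IoU = TP / (TP + FP + FN) over flattened pixel labels.

    Classes that occur neither in y_true nor in y_pred yield None.
    """
    pairs = list(zip(y_true, y_pred))
    ious = []
    for c in range(num_classes):
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        union = tp + fp + fn
        ious.append(tp / union if union > 0 else None)
    return ious


def mean_iou(ious):
    """Average the IoU over all classes that are actually present."""
    valid = [v for v in ious if v is not None]
    return sum(valid) / len(valid)


# Toy example with four classes (ids 0..3) and eight pixels.
y_true = [0, 0, 1, 1, 2, 2, 3, 3]
y_pred = [0, 1, 1, 1, 2, 2, 3, 0]
ious = per_class_iou(y_true, y_pred, num_classes=4)
miou = mean_iou(ious)
```

In this toy example the four per-class IoU values are 1/3, 2/3, 1 and 1/2, so the mIoU is 0.625.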

For monitoring small lakes, integrating the webcam results with in-situ temperature measurements seems to be a possible future direction. Additionally, for such lakes, the use of UAVs equipped with both thermal and RGB cameras could be a plausible option, but may be difficult to operationalise due to the need for accurate geo-referencing, lack of robustness in cold weather, as well as legal flight restrictions. An intriguing extension of the webcam-based approach could be to use crowd-sourced imagery for lake ice detection. We published some preliminary results in one of our recent works [36]. A large, and exponentially growing, number of images are available on the internet and social media. With the advance of smartphones equipped with cameras and the habit of taking selfies, many personal images show a lake in the background. Still, regular coverage of a given site is hard to ensure, and accurate geo-referencing of such images is challenging.

Author Contributions

Conceptualization, M.T., R.P., E.B., L.L.-T., K.S.; Data curation, M.T., R.P. and T.W.; Methodology, M.T. and R.P.; Software, M.T. and R.P.; Supervision, M.T., E.B., L.L.-T. and K.S.; Validation, M.T., R.P. and T.W.; Writing–original draft, M.T.; Writing–review and editing, E.B., K.S. All authors have read and agreed to the submitted version of the manuscript.

Funding

This research was funded mainly by the Swiss Federal Office of Meteorology and Climatology MeteoSwiss (in the framework of GCOS Switzerland) and partially by the Sofja Kovalevskaja Award of the Humboldt Foundation. The APC was funded by ETH Zurich.

Acknowledgments

We express our gratitude to all the partners in our MeteoSwiss projects for their support. Additionally, we acknowledge Mathias Rothermel, Muyan Xiao, and Konstantinos Fokeas for their contributions in collecting and annotating the images of the Photi-LakeIce dataset. We also thank Hotel Schweizerhof for providing the webcam data of lake St. Moritz.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rolnick, D.; Donti, P.L.; Kaack, L.H.; Kochanski, K.; Lacoste, A.; Sankaran, K.; Ross, A.S.; Milojevic-Dupont, N.; Jaques, N.; Waldman-Brown, A.; et al. Tackling Climate Change with Machine Learning. arXiv 2019, arXiv:1906.05433v2.

- Sharma, S.; Blagrave, K.; Magnuson, J.J.; O’Reilly, C.M.; Oliver, S.; Batt, R.D.; Magee, M.R.; Straile, D.; Weyhenmeyer, G.A.; Winslow, L.; et al. Widespread loss of lake ice around the Northern Hemisphere in a warming world. Nat. Clim. Chang. 2019, 9, 227–231.

- WMO. 2020. Available online: https://public.wmo.int/en/programmes/global-climate-observing-system/essential-climate-variables (accessed on 24 March 2020).

- ESA Climate Change Initiative. Available online: https://www.esa.int/Applications/Observing_the_Earth/Space_for_our_climate/ESA_s_Climate_Change_Initiative (accessed on 11 October 2020).

- ESA CCI+ Overview. Available online: https://climate.esa.int/sites/default/files/01_180320%20CCI%2B%20Overview%20revised.pdf (accessed on 11 October 2020).

- Knoll, L.B.; Sharma, S.; Denfeld, B.A.; Flaim, G.; Hori, Y.; Magnuson, J.J.; Straile, D.; Weyhenmeyer, G.A. Consequences of lake and river ice loss on cultural ecosystem services. Limnol. Oceanogr. Lett. 2019, 4, 119–131.

- Schindler, D.W.; Beaty, K.G.; Fee, E.J.; Cruikshank, D.R.; DeBruyn, E.R.; Findlay, D.L.; Linsey, G.A.; Shearer, J.A.; Stainton, M.P.; Turner, M.A. Effects of Climatic Warming on Lakes of the Central Boreal Forest. Science 1990, 250, 967–970.

- Magnuson, J.J.; Robertson, D.M.; Benson, B.J.; Wynne, R.H.; Livingstone, D.M.; Arai, T.; Assel, R.A.; Barry, R.G.; Card, V.; Kuusisto, E.; et al. Historical Trends in Lake and River Ice Cover in the Northern Hemisphere. Science 2000, 289, 1743–1746.

- Duguay, C.; Bernier, M.; Gauthier, Y.; Kouraev, A. Remote sensing of lake and river ice. In Remote Sensing of the Cryosphere; Tedesco, M., Ed.; Wiley-Blackwell: Oxford, UK, 2015; pp. 273–306.

- Duguay, C.; Prowse, T.; Bonsal, B.; Brown, R.; Lacroix, M.; Menard, P. Recent trends in Canadian lake ice cover. Hydrol. Process. 2006, 20, 781–801.

- Spencer, P.; Miller, A.E.; Reed, B.; Budde, M. Monitoring lake ice seasons in southwest Alaska with MODIS images. In Proceedings of the Pecora Conference, Denver, CO, USA, 18–20 November 2008.

- Brown, L.C.; Duguay, C.R. Modelling Lake Ice Phenology with an Examination of Satellite-Detected Subgrid Cell Variability. Adv. Meteorol. 2012, 6, 431–446.

- Kropáček, J.; Maussion, F.; Chen, F.; Hoerz, S.; Hochschild, V. Analysis of ice phenology of lakes on the Tibetan Plateau from MODIS data. Cryosphere 2013, 7, 287–301.

- Qiu, Y.; Xie, P.; Leppäranta, M.; Wang, X.; Lemmetyinen, J.; Lin, H.; Shi, L. MODIS-based Daily Lake Ice Extent and Coverage dataset for Tibetan Plateau. Big Earth Data 2019, 3, 170–185.

- Riggs, G.A.; Hall, D.K.; Román, M.O. MODIS Snow Products Collection 6 User Guide. Available online: https://modis-snow-ice.gsfc.nasa.gov/uploads/C6_MODIS_Snow_User_Guide.pdf (accessed on 7 October 2020).

- Cai, Y.; Ke, C.Q.; Yao, G.; Shen, X. MODIS-observed variations of lake ice phenology in Xinjiang, China. Clim. Chang. 2020, 158, 575–592.

- Zhang, S.; Pavelsky, T.M. Remote Sensing of Lake Ice Phenology across a Range of Lakes Sizes, ME, USA. Remote Sens. 2019, 11, 1718.