A Comparison of UAV and Satellites Multispectral Imagery in Monitoring Onion Crop. An Application in the ‘Cipolla Rossa di Tropea’ (Italy)

Abstract

1. Introduction

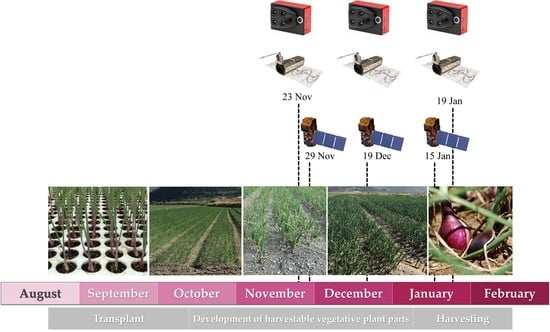

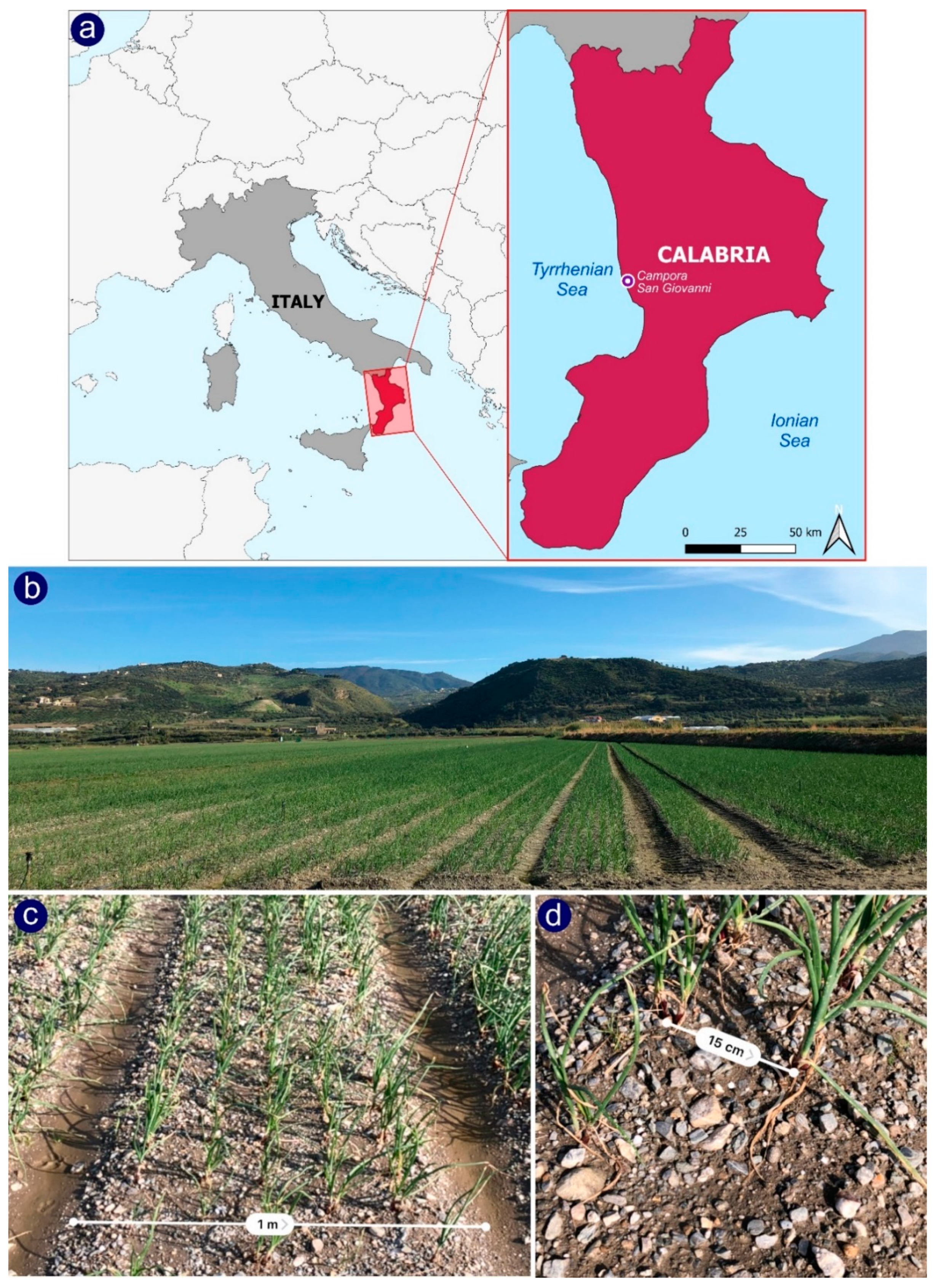

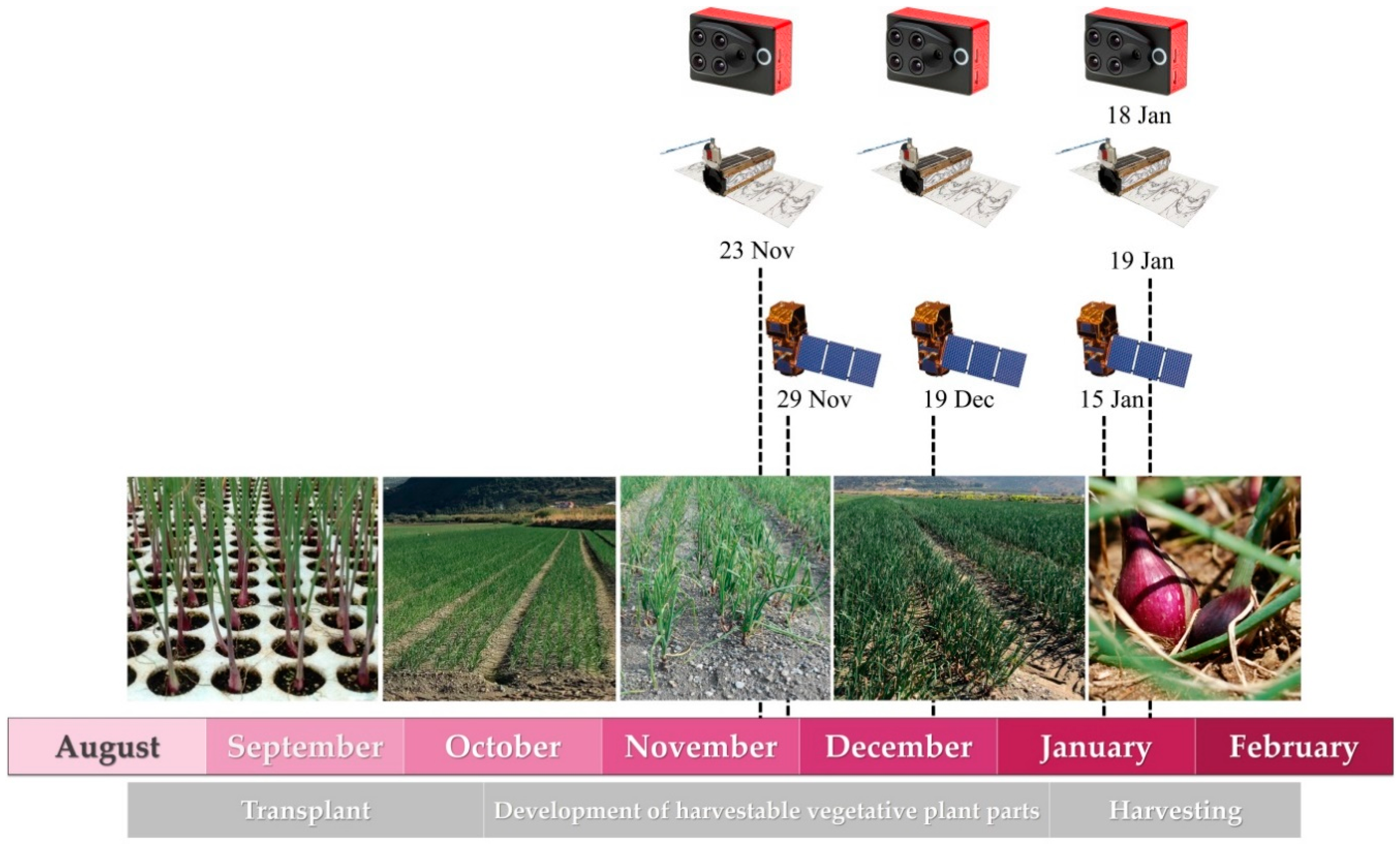

2. Materials and Methods

2.1. Study Site

2.2. Platforms and Data Acquisition

2.2.1. UAV Images

2.2.2. Satellite Images

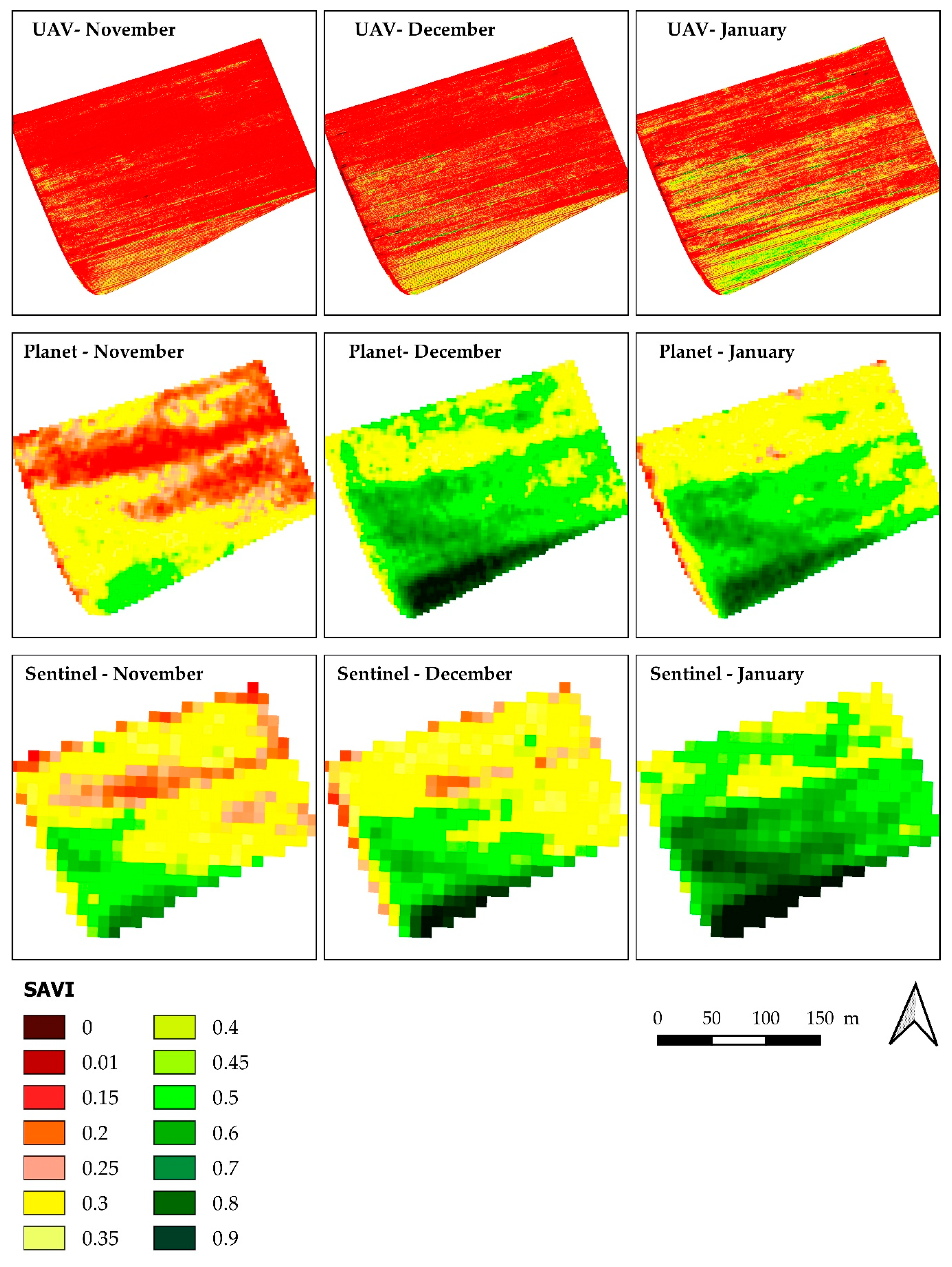

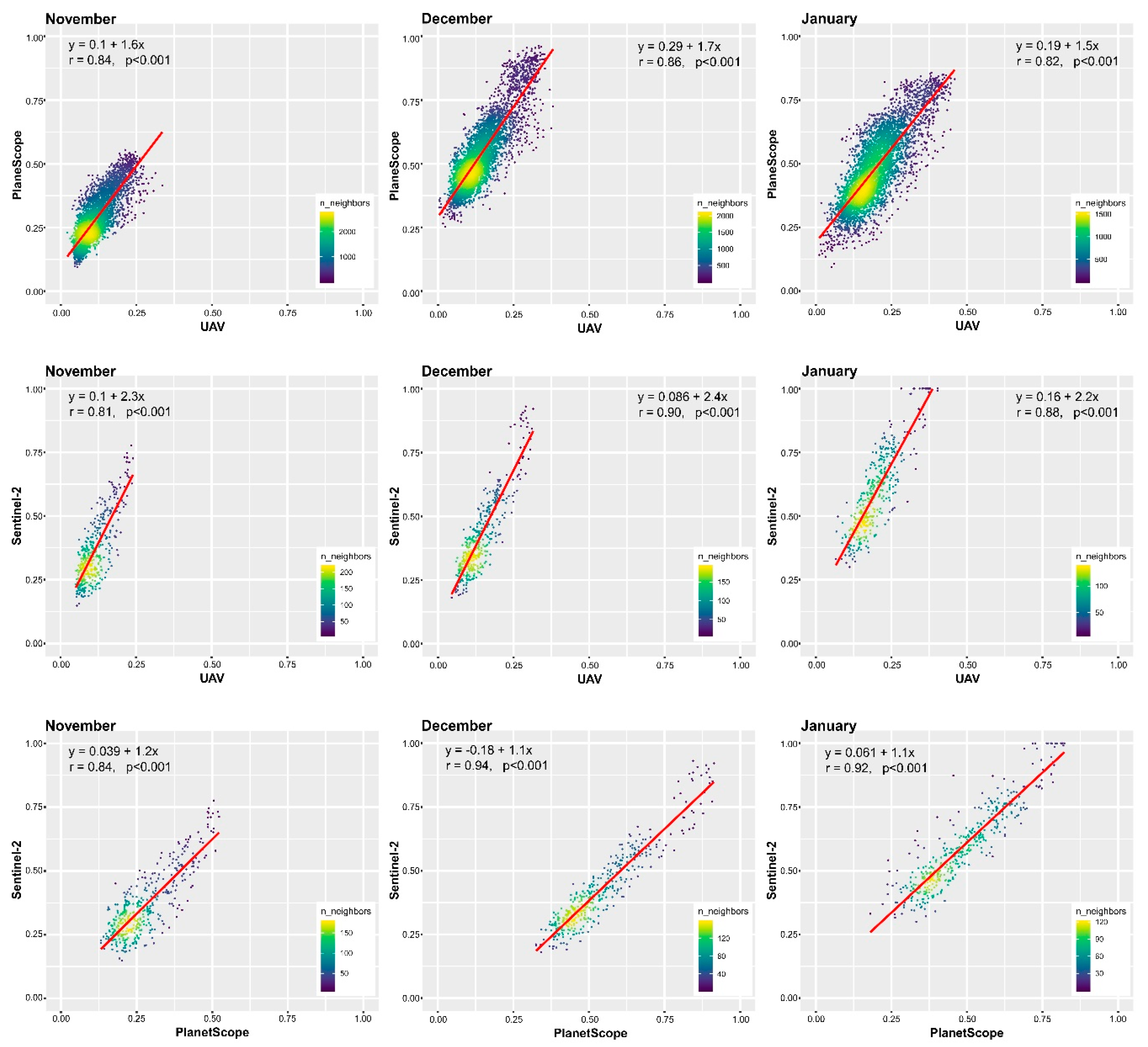

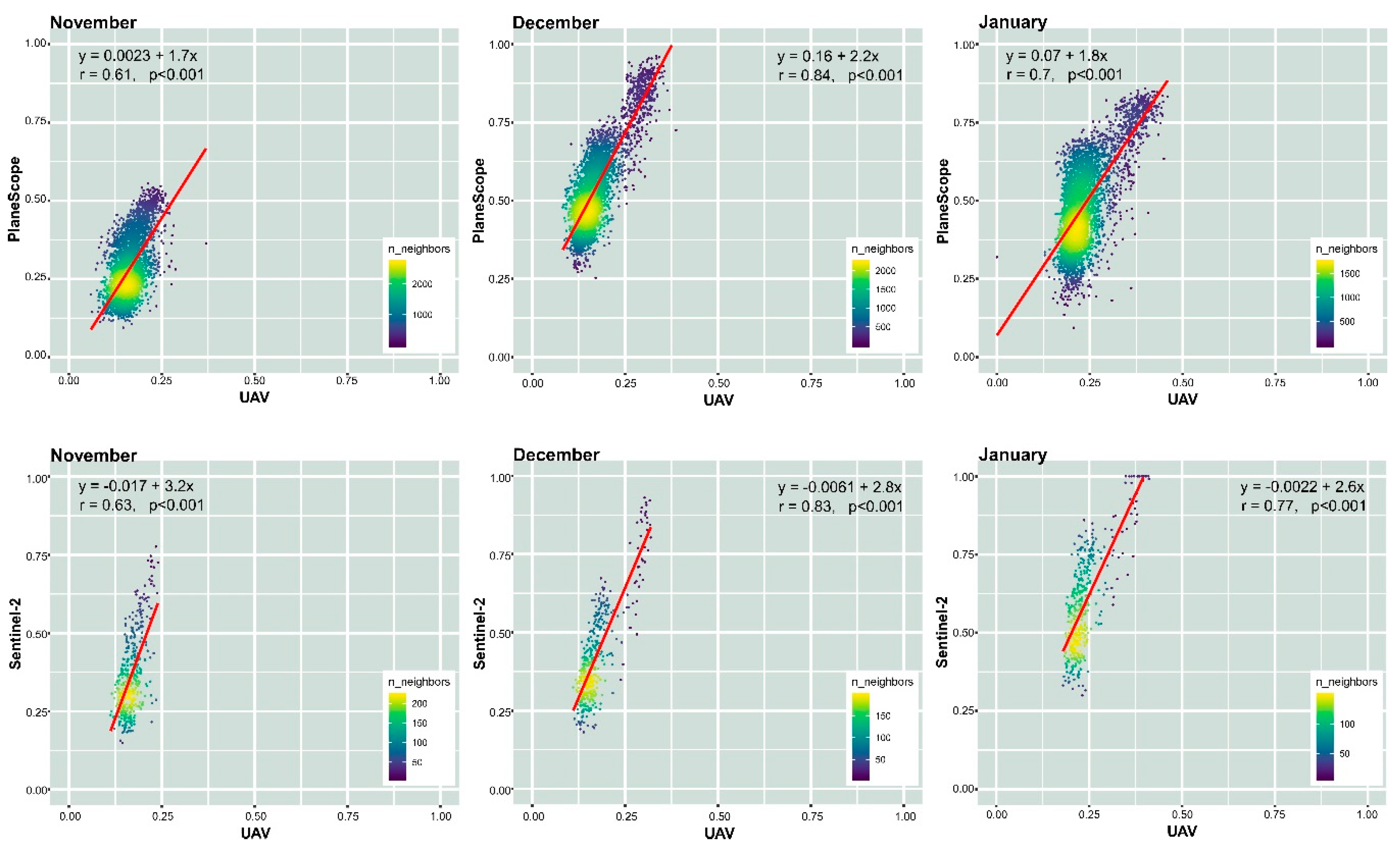

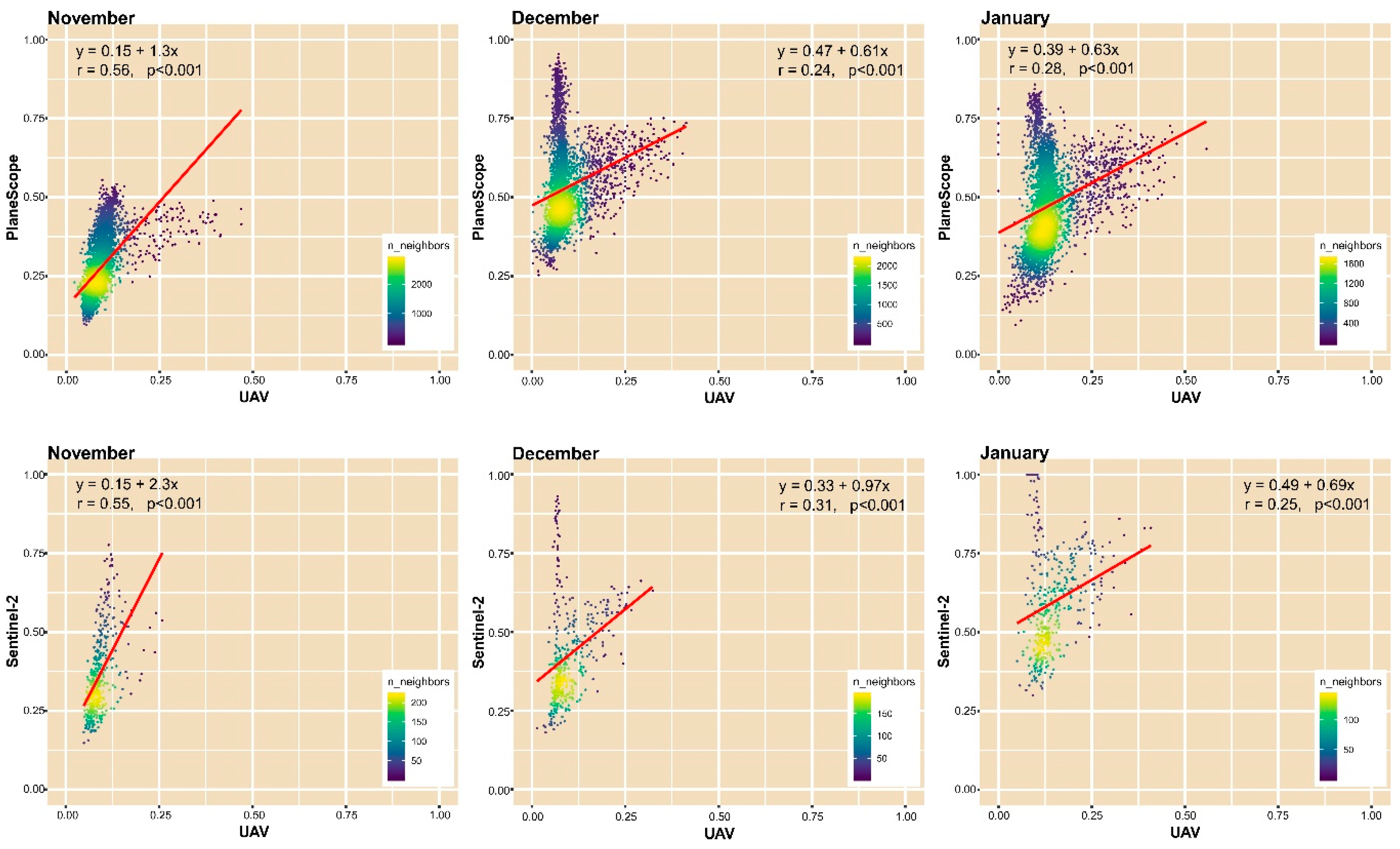

2.3. Comparison of Vegetation Indices (VIs) from the Three Platforms

2.4. Image Segmentation and Classification

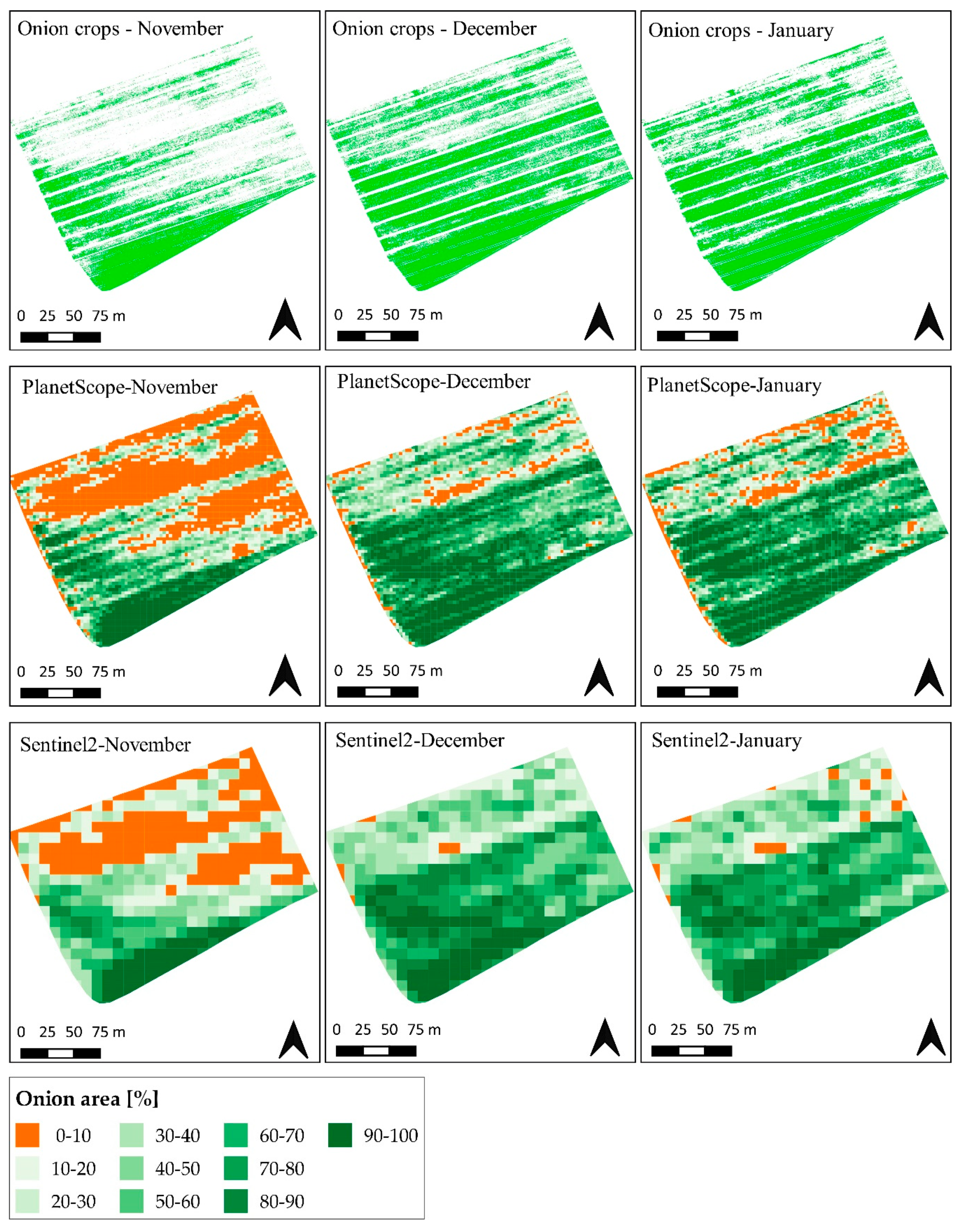

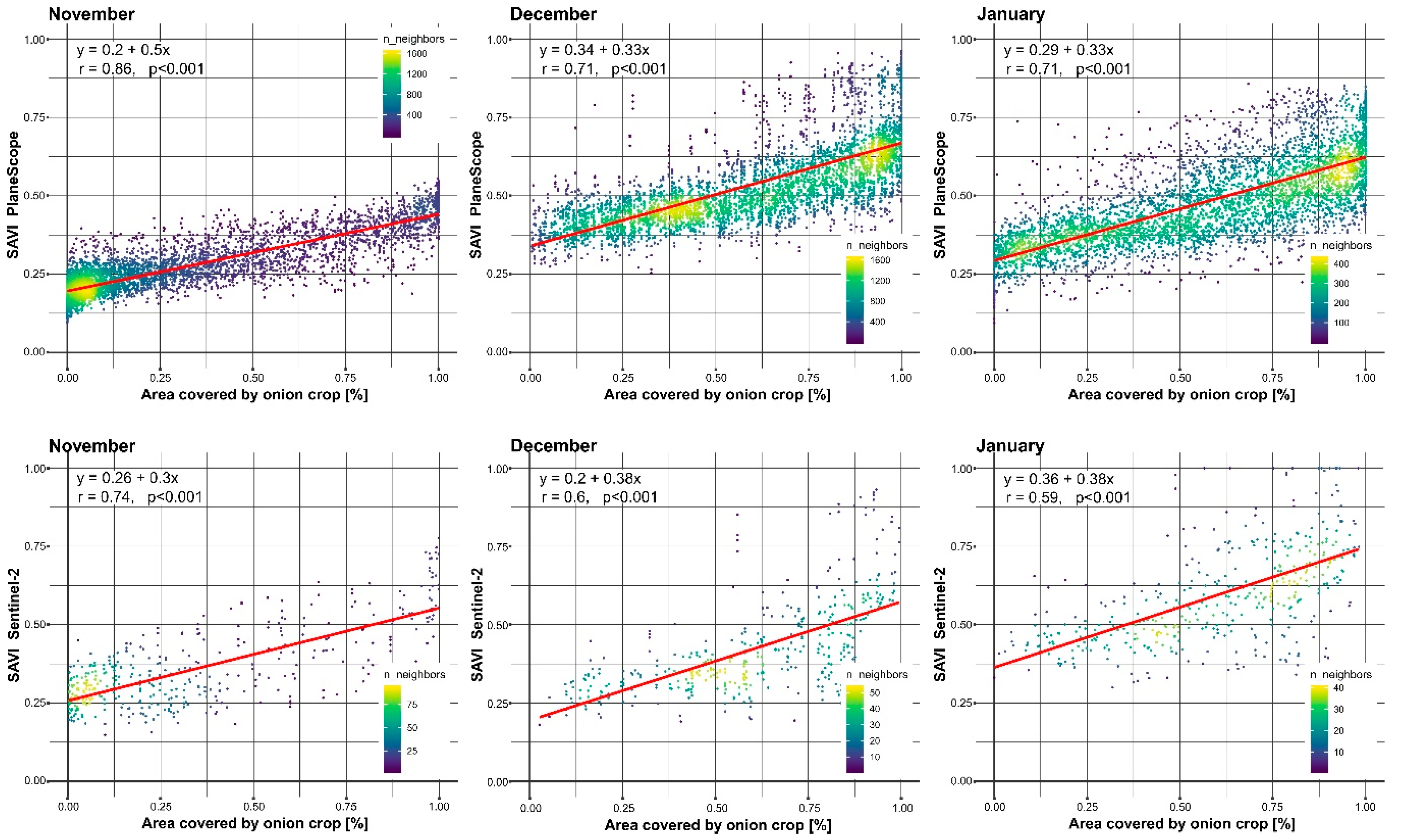

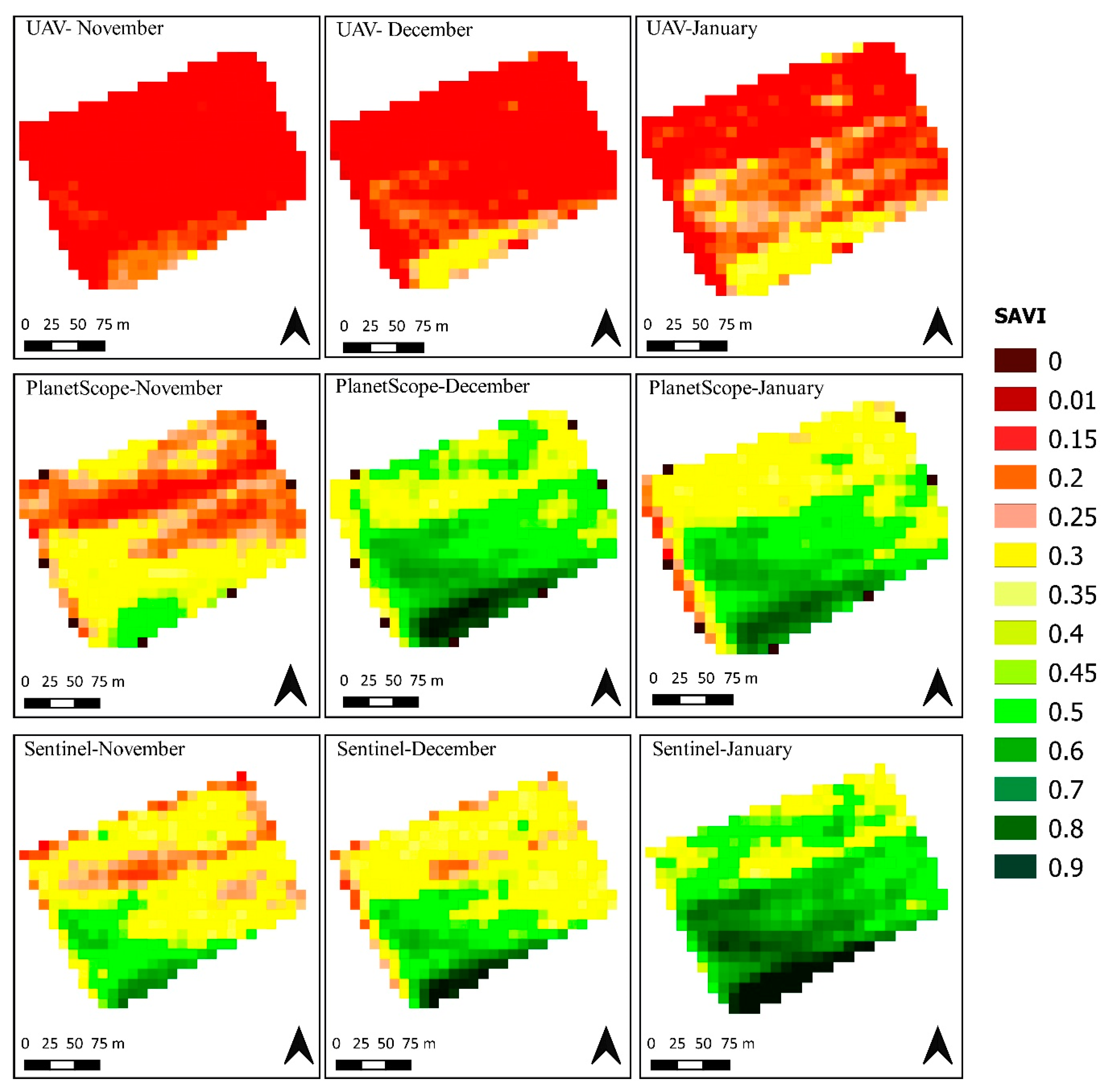

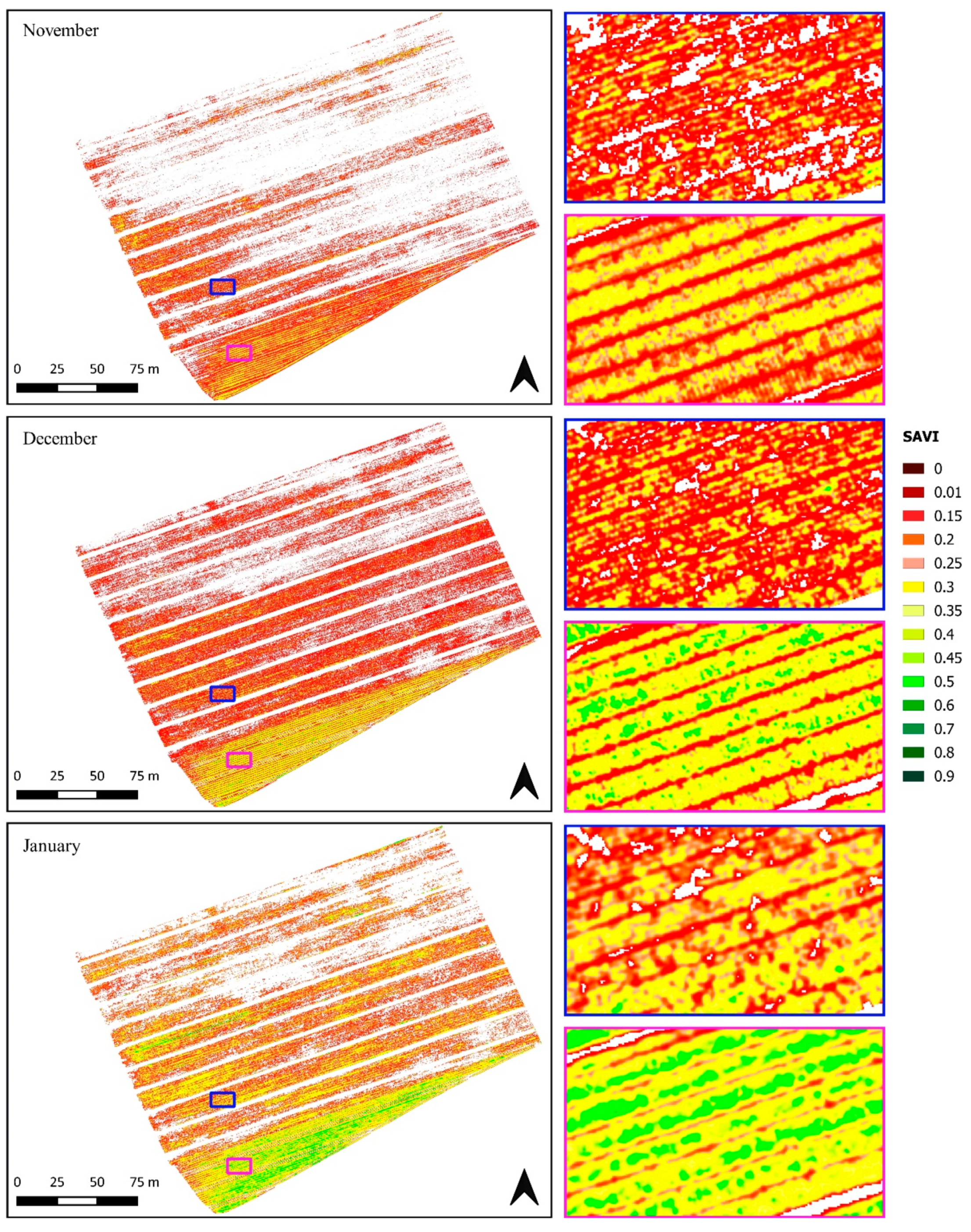

3. Results and Discussions

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A


| Platform | UAV: Parrot Disco-Pro AG (Parrot Sequoia camera) | Satellite: PlanetScope (3U CubeSat) | Satellite: Sentinel-2 |
|---|---|---|---|
| Number of channels used | 4 | 4 | 4 |
| Spectral wavebands (nm) | Green 550 (width 40); Red 660 (width 40); Red Edge 690 (width 10); NIR 790 (width 40) | Blue 464–517 (width 26.5); Green 547–585 (width 19); Red 650–682 (width 16); NIR 846–888 (width 21) | Blue 426–558 (width 66); Green 523–595 (width 36); Red 633–695 (width 31); NIR 726–938 (width 106) |
| Radiometric resolution | 10 bit | 16 bit | 16 bit |
| Dimensions | 59 mm × 41 mm × 28 mm | 100 mm × 100 mm × 300 mm | 3.4 m × 1.8 m × 2.35 m |
| Weight | 72 g | 4 kg | 1000 kg |
| FOV | HFOV: 62°; VFOV: 49° | HFOV: 24.6 km; VFOV: 16.4 km | HFOV: 290 km |
| Flight altitude AGL | 50 m | 475 km | 786 km |
| Ground sample distance (GSD) | 5 cm | 3.7 m | 10 m |
| Number of images to cover the study site | >1000 | 1 | 1 |
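To put the ground sample distances listed in the platform table into perspective, the following minimal sketch computes the ground footprint of a single pixel and the approximate number of pixels per hectare for each platform. The GSD values are taken from the table; the dictionary and function names are illustrative, and square pixels are assumed.

```python
# GSD values in metres, taken from the platform comparison table.
GSD_M = {
    "UAV (Parrot Sequoia)": 0.05,
    "PlanetScope": 3.7,
    "Sentinel-2": 10.0,
}

SQUARE_METRES_PER_HECTARE = 10_000.0


def pixels_per_hectare(gsd_m: float) -> float:
    """Approximate pixel count per hectare, assuming square pixels of side gsd_m."""
    return SQUARE_METRES_PER_HECTARE / (gsd_m ** 2)


for name, gsd in GSD_M.items():
    area = gsd ** 2  # ground footprint of one pixel in square metres
    print(f"{name}: {area:.4f} m2 per pixel, "
          f"{pixels_per_hectare(gsd):,.0f} pixels per hectare")
```

The four to five orders of magnitude between the UAV and the satellite pixel densities are one way to read the very different pixel counts that each platform contributes over the same field.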

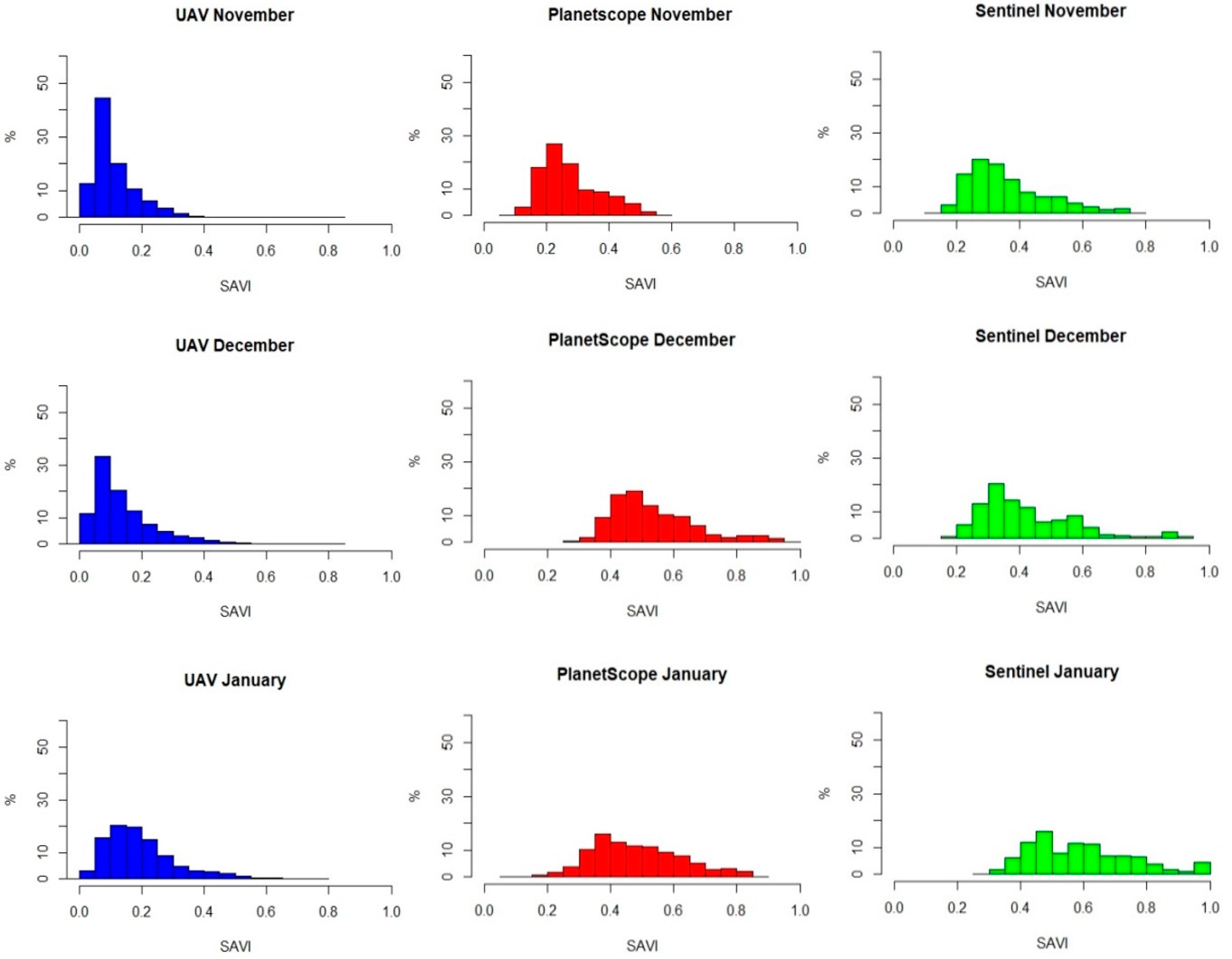

| Date | Platform | Number of Pixels | SAVI Mean | SAVI Standard Deviation | SAVI CV (%) |
|---|---|---|---|---|---|
| November 2018 | UAV | 28,132,559 | 0.112 | 0.07 | 62.5 |
| November 2018 | PlanetScope | 8118 | 0.276 | 0.09 | 32.6 |
| November 2018 | Sentinel-2 | 696 | 0.360 | 0.12 | 33.3 |
| December 2018 | UAV | 28,132,559 | 0.142 | 0.10 | 70.4 |
| December 2018 | PlanetScope | 8118 | 0.536 | 0.13 | 24.2 |
| December 2018 | Sentinel-2 | 696 | 0.420 | 0.15 | 35.7 |
| January 2019 | UAV | 28,132,559 | 0.199 | 0.11 | 55.2 |
| January 2019 | PlanetScope | 8118 | 0.484 | 0.14 | 28.9 |
| January 2019 | Sentinel-2 | 696 | 0.590 | 0.16 | 27.1 |
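The CV column in the SAVI statistics table is consistent with the usual definition of the coefficient of variation, CV = 100 × standard deviation / mean. The sketch below recomputes it from the rounded means and standard deviations reported in the table; the variable and function names are illustrative. Differences of up to about 0.1 percentage points can appear for some rows, presumably because the tabulated standard deviations are rounded to two decimals.

```python
# (date, platform, SAVI mean, SAVI standard deviation, reported CV in %)
# All values are copied from the SAVI statistics table.
ROWS = [
    ("November 2018", "UAV", 0.112, 0.07, 62.5),
    ("November 2018", "PlanetScope", 0.276, 0.09, 32.6),
    ("November 2018", "Sentinel-2", 0.360, 0.12, 33.3),
    ("December 2018", "UAV", 0.142, 0.10, 70.4),
    ("December 2018", "PlanetScope", 0.536, 0.13, 24.2),
    ("December 2018", "Sentinel-2", 0.420, 0.15, 35.7),
    ("January 2019", "UAV", 0.199, 0.11, 55.2),
    ("January 2019", "PlanetScope", 0.484, 0.14, 28.9),
    ("January 2019", "Sentinel-2", 0.590, 0.16, 27.1),
]


def cv_percent(mean: float, sd: float) -> float:
    """Coefficient of variation in percent: 100 * sd / mean."""
    return 100.0 * sd / mean


for date, platform, mean, sd, reported in ROWS:
    cv = cv_percent(mean, sd)
    print(f"{date:<14} {platform:<12} CV = {cv:5.1f}% (reported {reported}%)")
```

Read this way, the UAV imagery shows the highest relative variability on every date, while both satellites stay between roughly 24% and 36%.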

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Messina, G.; Peña, J.M.; Vizzari, M.; Modica, G. A Comparison of UAV and Satellites Multispectral Imagery in Monitoring Onion Crop. An Application in the ‘Cipolla Rossa di Tropea’ (Italy). Remote Sens. 2020, 12, 3424. https://doi.org/10.3390/rs12203424
