Abstract

Dead wood such as coarse dead wood debris (CWD) is an important component in natural forests since it increases the diversity of plants, fungi, and animals. It serves as habitat, provides nutrients and is conducive to forest regeneration, ecosystem stabilization and soil protection. In commercially operated forests, dead wood is often unwanted as it can act as an originator of calamities. Accordingly, efficient CWD monitoring approaches are needed. However, due to the small size of CWD objects satellite data-based approaches cannot be used to gather the needed information and conventional ground-based methods are expensive. Unmanned aerial systems (UAS) are becoming increasingly important in the forestry sector since structural and spectral features of forest stands can be extracted from the high geometric resolution data they produce. As such, they have great potential in supporting regular forest monitoring and inventory. Consequently, the potential of UAS imagery to map CWD is investigated in this study. The study area is located in the center of the Hainich National Park (HNP) in the federal state of Thuringia, Germany. The HNP features natural and unmanaged forest comprising deciduous tree species such as Fagus sylvatica (beech), Fraxinus excelsior (ash), Acer pseudoplatanus (sycamore maple), and Carpinus betulus (hornbeam). The flight campaign was controlled from the Hainich eddy covariance flux tower located at the Eastern edge of the test site. Red-green-blue (RGB) image data were captured in March 2019 during leaf-off conditions using off-the-shelf hardware. Agisoft Metashape Pro was used for the delineation of a three-dimensional (3D) point cloud, which formed the basis for creating a canopy-free RGB orthomosaic and mapping CWD. As heavily decomposed CWD hardly stands out from the ground due to its low height, it might not be detectable by means of 3D geometric information. For this reason, solely RGB data were used for the classification of CWD. 
The mapping task was accomplished using a line extraction approach developed within the object-based image analysis (OBIA) software eCognition. The achieved CWD detection accuracy can compete with results of studies utilizing high-density airborne light detection and ranging (LiDAR)-based point clouds. Out of 180 CWD objects, 135 objects were successfully delineated while 76 false alarms occurred. Although the developed OBIA approach only utilizes spectral information, it is important to understand that the 3D information extracted from our UAS data is a key requirement for successful CWD mapping as it provides the foundation for the canopy-free orthomosaic created in an earlier step. We conclude that UAS imagery is an alternative to laser data for CWD mapping, especially when a rapid update and quick response, e.g., after a storm event, are required.

1. Introduction

1.1. Role and Mapping of Dead Wood

Dead wood has been recognized as an important component of natural forests since it enriches forest ecosystems in terms of plant, fungus, and animal diversity [1,2,3,4,5,6]. It provides micro-habitats for several species and nutrients through the contribution of organic matter. Moreover, it is beneficial to forest regeneration, ecosystem stabilization, soil protection, and carbon sequestration [1,5,6]. However, in commercially used and managed forests, which are often characterized by a low diversity of tree species, dead wood can cause economic losses since it can be the originator of calamities such as insect outbreaks or other diseases [7].

In European natural forests, the stock of dead wood ranges from 50 up to 300 m³/ha [1,5,6]. Dead wood originates from the death of trees or tree components due to old age, windthrow, snow-break, fire, insect attacks, fungal infestation, bark injury, or logging activities [6]. Accordingly, aboveground dead wood takes diverse forms, comprising standing dead trees (snags), downed trees (logs), stumps, branches, and twigs [6,8]. Aboveground dead wood is often categorized into fine dead wood debris (FWD) and coarse dead wood debris (CWD) using a threshold of 10 cm, which refers to the diameter of the dead wood components [8]. Most studies on dead wood assessment focus on CWD [2,3,4,5,8,9,10,11,12,13,14]. Dead wood goes through various stages of decay until it is completely decomposed. Albrecht (1991) [6] distinguishes four stages of decay: fresh dead wood, beginning decay, advanced decay, and heavily decomposed. During the decomposition process, the color and three-dimensional (3D) structure of dead wood change, which has to be considered when developing dead wood assessment strategies [9,15]. For example, for heavily decomposed CWD, a 3D structure-based separation of dead wood and ground might become unfeasible [11,12,15,16].

Dead wood mapping can be conducted at the area or object level. While the latter aims at the direct mapping of individual downed stems, snags and other dead wood debris, area-based methods provide averages of dead wood for a given region. Traditional ground-based techniques use a suitable sampling strategy to make projections for an entire area [1,2,3,5]. Other area-based approaches involve predictors to model the total amount of dead wood for an area. The direct mapping of individual dead wood objects provides details on the spatial distribution of dead wood, which in turn is a requirement for several ecological and silvicultural applications [7,15]. Regardless of whether traditional ground-based inventories or remote sensing methods are used for the direct mapping of dead wood, the wall-to-wall detection of all individual dead wood objects can be considered a complex task as it is impeded by two major difficulties:

- Ground-based campaigns suffer from challenging carrier phase differential global navigation satellite system (CDGNSS) conditions. Thus, the subdecimeter positioning accuracy needed to survey CWD (especially when campaign data are used as reference for remote sensing-based inferences) can hardly be achieved. Alternatively, positioning based on tachymetry could be carried out. However, this would entail a great deal of effort in forest environments.

- The use of remote-sensing methods is limited as CWD objects are too small to be detected via satellite-borne data. Also, the forest canopy and potentially the undergrowth obstruct the view of the CWD objects. Consequently, previous research has mainly focused on the use of active systems such as airborne light detection and ranging (LiDAR) [7,8,9,12,14,15,16] or terrestrial laser scanning (TLS) [8,11,17,18].

1.2. Previous Studies on Dead Wood Mapping

1.2.1. Area-Based Dead Wood Mapping Using Airborne Light Detection and Ranging (LiDAR) Data

Area-based approaches are less demanding with respect to the required 3D point density and several studies report the successful use of LiDAR data for area-based dead wood assessments [9,10,19,20,21,22,23,24]. For example, Pesonen et al. [24] utilized airborne LiDAR data to predict downed and standing CWD in the Koli National Park in eastern Finland. The authors used height-intensity metrics as predictors and reported that LiDAR-based estimates for downed CWD are more accurate than the estimates of ground-survey based characteristics of living trees. In another study, Pesonen et al. [9] compared several ground-based sampling methods for CWD assessment including the usage of airborne LiDAR data as auxiliary information. The inclusion of LiDAR-based probability layers was found to be promising and could increase the efficiency of CWD inventories. Bright et al. [22] used a random forest algorithm to predict living and dead tree basal area from LiDAR-derived metrics. The intensity was shown to be an important discriminator between dead and living trees. Sumnall et al. [23] revealed that the season of LiDAR data acquisition (leaf-on vs. leaf-off) had a great impact on dead wood detection and the differentiation of decay stages for both snags and logs. Tanhuanpää et al. [10] used bi-temporal airborne LiDAR data to predict downed CWD in an urban boreal forest close to Helsinki, Finland. The prediction was based on detected canopy changes at the individual tree level as well as a set of allometric equations. Accordingly, trees missing in the second acquisition were related to CWD downed in between both LiDAR flights.

1.2.2. Object-Based Dead Wood Mapping Using Airborne LiDAR Data

Direct mapping of individual dead wood objects demands a sufficient sampling rate in terms of either laser pulses per area or geometric resolution if imaging methods are used. With respect to airborne LiDAR data, high-density point clouds (>20 pts/m²) are frequently used to detect downed logs. In [8] the authors found that the minimum size of the detectable CWD depends on point cloud density. As previously mentioned, one difficulty with direct mapping of individual downed CWD is the generation of valid reference data [12] since achieving accurate positioning in forests is complicated. Positional errors frequently exceed the diameters of CWD by one or two orders of magnitude [7,11,13,15,16]. Thus, in several studies reference data are generated through manual labeling of CWD based on the same laser or optical data used for automatic CWD delineation [11,14,16,18,25,26].

Positional errors in the reference data complicate the validation of CWD mapping results as suitable strategies for linking mapped CWD and reference data need to be developed [7,11,12,13]. Some studies manually assess map quality and thus introduce a certain degree of subjectivity [7,15,16,25]. Nevertheless, several of them demonstrate the potential of airborne LiDAR data for direct mapping of individual dead wood objects. Blanchard et al. [16] utilized rasterized multi-return LiDAR data as input for a rule-based object-based image analysis (OBIA) approach in order to classify downed logs, canopy cover, and the ground in California, USA. Their test site comprised bare ground, shrubs and isolated patches of sparse conifer forests. The authors achieved a classification accuracy of 73%. Muecke et al. [15] used airborne LiDAR data to detect downed trees with a stem diameter >30 cm on a site in Eastern Hungary (Nagyerdo). The site was covered with deciduous forest and the LiDAR data were collected during leaf-off conditions. The authors rasterized terrain-normalized LiDAR data and created a clean map of downed wood by removing height values between 2 and 7 m. Downed logs were identified by means of a classification scheme. The accuracy was assessed using expert knowledge. The authors reported a completeness of 75% and a correctness of 90%. Similar work was conducted by Leiterer et al. [14]. The study aimed at the LiDAR-based detection of downed stems with a diameter >30 cm at sites featuring mixed (Germany, Uckermark) or deciduous forest (Laegern, Switzerland). Overall, 70% of the logs were at least partially detected. Lindberg et al. [13] developed a line template-matching method to detect storm-felled trees in a managed hemiboreal forest in the south west of Sweden. The approach made direct use of the LiDAR-based point cloud. 
Only returns between 0.2 and 1.0 m above ground were considered for the line template matching; 41% of the ground-surveyed downed stems could be automatically linked to the LiDAR-derived stems. The reported overdetection rate using the proposed LiDAR approach amounted to 30%. Nyström et al. [7] used the same dataset to detect windthrown trees but developed a slightly different method. Again, a template matching approach was applied. The authors used a 10 cm resolution terrain-normalized height model of the forest floor comprising the windthrown trees. The templates had a rectangular shape and featured different widths (0.3–0.9 m) but equal lengths (8 m). The achieved accuracy was similar to the results achieved by Lindberg et al. [13]. Polewski et al. [12] applied a normalized cut approach which merged short segments into whole stems to detect fallen trees in the Bavarian Forest National Park, Germany. The main tree species occurring within the test site were Norway spruce and European beech. The LiDAR data were recorded under leaf-off conditions. For model training, simulated data of downed logs were used. The temporal offset between reference data and LiDAR data as well as overstory cover complicated the validation and only stems visible in the LiDAR data were used for accuracy assessment. The authors reported a detection rate between 75% and 85% for an overstory cover of 30–40%. The overdetection rate was below 20%.
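The detection figures quoted in this review can be related to simple object counts. The following minimal sketch assumes that completeness is the share of reference objects that were detected and that correctness is the share of mapped objects that are true detections; the cited studies may define their rates differently. The function name is hypothetical, and the counts are those reported in the abstract of this study (180 reference objects, 135 detections, 76 false alarms).

```python
def detection_metrics(n_reference, n_detected, n_false_alarms):
    """Return (completeness, correctness) from object counts.

    Assumed definitions (not taken from the cited studies):
      completeness = detected reference objects / all reference objects
      correctness  = detected reference objects / all mapped objects
    """
    if n_reference <= 0:
        raise ValueError("n_reference must be positive")
    completeness = n_detected / n_reference
    n_mapped = n_detected + n_false_alarms
    correctness = n_detected / n_mapped if n_mapped else 0.0
    return completeness, correctness


# Counts reported in the abstract of this study.
completeness, correctness = detection_metrics(180, 135, 76)
print(f"completeness = {completeness:.2f}")
print(f"correctness  = {correctness:.2f}")
```

Under these assumed definitions, the counts from the abstract correspond to a completeness of 0.75 and a correctness of about 0.64.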

1.2.3. Object-Based Dead Wood Mapping Using Terrestrial Laser Scanning (TLS) Data

As occlusion by overstory coverage or limitations originating from insufficient point density in airborne LiDAR data have been recognized as restrictive factors, some studies assess the potential of TLS data for CWD mapping. Polewski et al. [18] developed a cylinder detection framework for the automatic detection of downed logs. The perceptibility of cylindrical shapes in 3D point clouds sets minimum requirements in terms of point density which could be achieved using TLS scans featuring densities between 800 and 44,000 pts/m². The authors tested the approach at three different plots in the Bavarian Forest National Park. The reference data was manually digitized from the TLS point clouds. The study revealed a strong impact of the plot characteristics on detected tree length completeness and error rate. For an error rate of 0.2 the completeness ranged from 0.4 to 0.75. Yrttimaa et al. [11] suggest another method involving cylinder fitting. The workflow furthermore involves the rasterization of the point cloud, raster image segmentation and classification, and the delineation of the position of the logs. The study area is located in Evo, Finland and features Scots pine, Norway spruce, and birch. The TLS data were acquired at 20 sample plots, with each plot being covered by five scans. Even though reference data were collected in the field, validation was accomplished based on visual interpretation of the TLS data. Overall, 68% of the dead wood was automatically detected.

1.2.4. Object-Based Dead Wood Mapping Using Optical Data

Occlusion by overstory coverage is the major obstacle for employing high-resolution optical data to detect downed CWD. Indeed, some studies are concerned with the detection of individual snags using airborne optical data [27,28,29,30,31]. Bütler and Schlaepfer [30] used color infrared (CIR) aerial photos to detect spruce snags in mountain forests in Switzerland. The detection was conducted manually and required expert knowledge. In total, 82% of the snags were identified. Dunford et al. [29] collected red-green-blue (RGB) imagery by means of the paraglider drone “Pixy” over a Mediterranean riparian forest in southeastern France. Snags were classified using pixel-based and object-based classification. The authors report errors of omission and commission of 80% and 65%, respectively. Pasher and King [28] used CIR airborne optical imagery for canopy dead wood detection in a temperate hardwood forest in Gatineau Park, Canada. The direct detection approach involved Iterative Self-Organizing Data (ISODATA) clustering, object-based classification, and spectral unmixing. The validation revealed a detection accuracy of 90% for the control site. A recent publication by Krzystek et al. [27] reports on large scale mapping of snags in Šumava National Park and Bavarian Forest National Park. The entire site covers an area of 924 km². Due to bark beetle attacks, a great part is characterized by dead wood such as snags. The separation of dead standing trees and living trees involved multispectral aerial imagery and geometric features derived from LiDAR data. The authors used random forests and logistic regressions as classifiers and report an overall accuracy of above 90%.

The use of optical data for downed CWD detection requires free sight of the objects. This requirement can be met if the forest considered is very sparse or if there are large gaps in the forest canopy. Pirotti et al. [32] investigated high-resolution RGB imagery for damage assessment caused by windthrow in the Tuscany Region, Italy. Due to a heavy storm, a great percentage of forest was damaged resulting in large patches of logs. Three different machine learning approaches were tested. The authors report a high agreement (R² = 0.92) between field measurements and classification results. Jiang et al. [25] applied semantic segmentation on airborne CIR imagery for log detection in the Bavarian Forest National Park, Germany. The deep learning approach is implemented in the DenseNet framework. Training and test data were obtained from manual labelling of the CIR imagery. In general, only visible logs were considered. Although the illustrations provided suggest that parts of the forest are very sparse, a considerable percentage of logs might be occluded. The authors report almost no false positive alarms and recall rates of 0.95 and 0.68.

A feasible strategy to mitigate occlusion of the forest floor and thus of downed CWD in optical imagery is the use of wide aperture angles in conjunction with great overlap of the individual images. Sensing canopy gaps from different angles then reveals different patches of the ground. These patches can be combined into one orthomosaic created from structure from motion (SfM) processing (see following paragraph). Furthermore, the canopy gap fraction might be increased in deciduous forest during leaf-off conditions. Recent technical developments with respect to unmanned aerial systems (UAS) permit the acquisition of this kind of imagery. Also, the geometric resolution is sufficiently high for CWD mapping. Inoue et al. [26] tested UAS data for the detection of downed trees in a dense deciduous broadleaved forest in the Ogawa Forest Reserve in Kitaibaraki, Japan. The helicopter-like UAS (RMAX-G1) generated RGB images with a geometric resolution of 0.5–1.0 cm. As the data were recorded during leaf-off conditions, >80% of the logs could be manually identified. This study reveals that there is a need for further research in terms of data acquisition and processing.

1.3. Unmanned Aerial Systems (UAS) Imagery and Structure from Motion (SfM) for Small-Scale Mapping Tasks: Potential and Principles

The above studies highlight the great potential of UAS for CWD mapping. UAS have appeared in the past decade as a novel source of Earth observation (EO) data that can bridge the gap between in-situ and far range EO data and, thus, enable development of improved data upscaling approaches [33]. Often UAS are equipped with optical camera systems that provide ultra-high-resolution imagery (i.e., centimeter scale), which is one precondition for CWD mapping. Digital images can be recorded nearly anytime and at low cost. Flight settings and imaging parameters can be adapted according to the needs of the application in terms of spectral channels, image overlap, illumination conditions and/or geometric resolution. The high flexibility, scalability, and ease of use of UAS have led to an increased usage of UAS-borne data in many fields of application where high spatial resolution is needed such as structural health monitoring [34], forestry [35], archeology [36], coastal mapping [37] or topographic surveying [38]. Lately, this usage has been further pushed by the emergence of low-cost consumer UAS with even easier operation. Such drones have also triggered the widespread use of UAS data for citizen science or humanitarian crowdsourcing organizations such as the UAViators [39]. Besides ultra-high-resolution imagery, the ability to acquire 3D information is of particular interest for forestry research since it enables the separate investigation of different elevation layers (as demonstrated in this study).

Prevalent photogrammetric processing chains (commonly summarized as SfM) exist to generate 3D point clouds and orthomosaics based on overlapping UAS imagery [40]. The SfM approach employs stereoscopic principles [40]. SfM permits the simultaneous approximation of constant imaging properties, the motion of an individual camera, and 3D object characteristics. It comprises three main phases: (1) recognition of noticeable image features/points in overlapping images [41,42]; (2) estimation of an initial imaging geometry and the corresponding sparse 3D point cloud [43,44]; and (3) optimization of the model as a whole [45]. Eventually, the refined sparse 3D point cloud can be densified by applying dense stereo matching approaches [46]. The 3D positional accuracy of point clouds and orthomosaics derived from UAS image data depends on the UAS survey parameters (flight pattern, camera viewing angle, distribution of ground control points, GCPs) and the SfM processing details [38,47,48]. For a weak network of tie points, as commonly achieved with parallel (i.e., airborne campaign-like) flight tracks and nadir images, systematic errors such as doming of the 3D model can occur [47]. These errors can either be minimized by installing sufficient and well-distributed GCPs or by implementing more comprehensive flight patterns including oblique images [38,47,48]. Thereby, systematic errors can be avoided even with inexpensive equipment. Thanks to current technological developments, such constraints can be overcome by next generation UAS even with simple flight patterns and weak tie point networks. These systems comprise hardware for real time kinematic (RTK)-based positioning and permit positional accuracies better than 5 cm [49,50], thereby allowing for accurate and reliable direct georeferencing. In addition, systematic geometric errors such as doming can be avoided [48,51].

1.4. Scope and Remainder of This Publication

The introduction clarified the great importance of CWD in natural and commercial forests. It also showed which difficulties and challenges still exist regarding CWD mapping. Furthermore, the literature review reveals that most of the studies on CWD mapping have been conducted in boreal and hemiboreal forests and employ laser data. Although UAS imagery might have a great potential for CWD mapping, as discussed in the previous sections, there is a lack of studies that investigate the potential of such data for CWD mapping. Consequently, this study addresses these open questions. The overall goal of this research is to develop and validate a cost-effective and flexible approach to CWD mapping for deciduous forests that can be used to support forest inventories. We suggest a novel end-to-end approach using UAS optical imagery and SfM to map CWD underneath a forest canopy. The workflow exploits the three-dimensionality of the SfM point cloud to eliminate elements that obstruct the view of the forest floor.

The remainder of the publication is organized as follows: Section 2 presents the materials and methods, including a description of the test site, field work, data, UAS data processing, reference data collection, methodological development, and the framework for accuracy analysis. Section 3 lays out the results of this research, including the analysis of the achieved mapping accuracies. Section 4 is dedicated to the discussion of the results obtained, which is followed by concluding remarks in Section 5.

2. Materials

2.1. The Supersite ‘Huss’ Within the Hainich National Park (HNP)

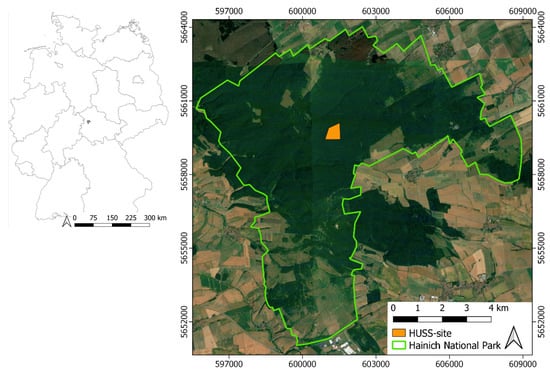

The Hainich National Park (HNP) is located in the center of Germany (Figure 1). It was established on 31 December 1997. Since 25 June 2011, the park has been part of the UNESCO World Heritage Site of primeval beech forests of the Carpathians and old beech forests in Germany. Although it comprises a rather small area (75 km²), the HNP plays an important role in the preservation and protection of beech-dominated ecosystems. The beech forests of the park thrive on soils that have developed from Middle Triassic limestone.

Figure 1.

Overview of the study area. The Hainich National Park is located in the center of Germany. The Huss supersite is part of the core area of the park. The satellite imagery in the backdrop is taken from Google Earth (2020). The coordinate system used for all figures of this paper is Universal Transverse Mercator (UTM), zone 32N, ellipsoid WGS84.

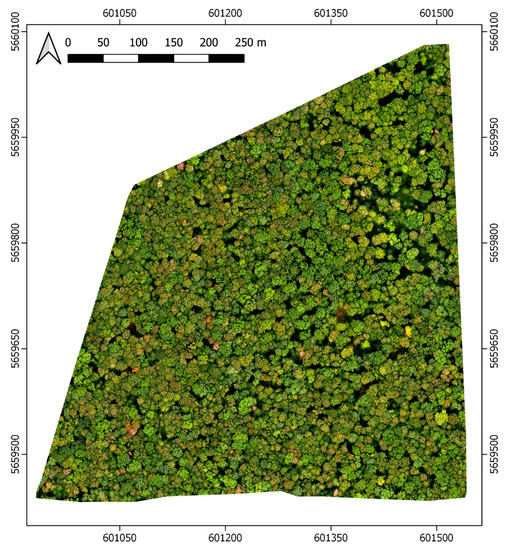

The study site “Huss” (Figure 2) has already been the focus of many forest ecological investigations and is equipped with a multitude of instruments for various long-term measurements and experiments [4,31,52,53,54,55,56]. It is located in the core area of the HNP near a flux tower operated by the University of Göttingen. The site covers an area of 28.2 ha. It is dominated by beeches (Fagus sylvatica) but comprises a great diversity of tree species, such as ash (Fraxinus excelsior), alder (Alnus glutinosa), sycamore maple (Acer pseudoplatanus), hornbeam (Carpinus betulus), wych elm (Ulmus glabra), common and sessile oak (Quercus robur, Quercus petraea), and chequers (Sorbus torminalis). The forest is unmanaged and home to a wide variety of flora, fungi, and fauna of around 10,000 species. Prominent examples of animals are European wildcats, various bat species, woodpeckers, roe deer, and more than 70 beetle species [56]. During the years 2018 and 2019, the Hainich forest experienced serious droughts and received only 60% of the long-term mean precipitation. This led to widespread and noticeable damage to the beech population.

Figure 2.

The Huss site in September 2019. The dimensions of the site are approximately 550 m × 550 m. However, its footprint is not exactly square, resulting in an area of 28.2 ha. Only deciduous tree species occur. The gaps in the canopy are caused by natural processes such as windthrow or the death of old trees. Since the forest is not managed, the dead wood remains on the forest floor until it decomposes completely. The dense canopy hardly permits woody undergrowth. Displayed data: Orthomosaic based on unmanned aerial systems (UAS) imagery. The data shown in this figure were acquired under leaf-on conditions on 20 August 2019 and are solely used for visualization purposes.

2.2. Field Work: UAS Mission and Check Point Surveying

We used the RTK version of the DJI (Da-Jiang Innovations Science and Technology Co., Ltd) Phantom 4 Pro to capture the UAS imagery. This UAS allows for accurate real-time positioning in the order of centimeters (see Table 1) if correction data from a reference station are received. For this campaign, the German satellite positioning service SAPOS was available. The correction data were received via a mobile internet connection. The nearest SAPOS reference station is “Muhlhausen 2” at an average distance of 14.5 km. The correction signal was received continuously during the flights. The Phantom 4 Pro RTK features a camera with a 1” CMOS (complementary metal-oxide-semiconductor) sensor and a mechanical shutter. The field of view of the camera is 84°. The 3D RTK coordinate of the image center is stored in the exchangeable image file format (EXIF) metadata along with several other parameters. For more UAS specifications see Table 1.

Table 1.

Specifications of the real time kinematic (RTK) version of the Phantom 4 Pro by Da-Jiang Innovations Science and Technology Co., Ltd (DJI) according to [49]. Abbreviations: JPEG = Joint Photographic Experts Group; EXIF = exchangeable image file format; CDGNSS = carrier-phase differential global navigation satellite system.

The UAS campaign was conducted in the leaf-off season in early spring 2019 (Table 2). During the flights, the sky was overcast. This resulted in diffuse and consistent illumination conditions. Accordingly, unwanted effects such as hard shadows and strong illumination differences between the forest canopy and forest floor were avoided. The wind speed was very low so that hardly any movements of the trees were observed during the flights. A simple airborne campaign-like flight pattern with parallel flight lines was chosen. To increase the probability of obtaining data from the forest floor, highly overlapping nadir images were acquired. Motion blur was avoided by setting the flight speed, shutter speed (fixed to 1/320 s), and spatial resolution to the values reported in Table 2. With respect to the aperture, the exposure value was set to –0.3. Take-off and landing were operated from the flux tower platform close to the Huss site. The platform enabled the visual observation of the UAS throughout the mission.
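The interplay of flight speed, shutter speed, and spatial resolution can be illustrated with a short calculation. The sketch below is not part of the original workflow: it assumes that motion blur can be approximated as the ground distance travelled during the exposure divided by the ground resolution. The shutter speed (1/320 s) and the nominal ground resolution (4.2 cm, see Section 3.1.1) are taken from the text, whereas the flight speed of 5 m/s and the blur limit of 0.5 px are hypothetical placeholders, since the actual values are listed in Table 2.

```python
def motion_blur_px(flight_speed_m_s, exposure_time_s, gsd_m):
    """Approximate forward motion blur in pixels for a nadir image."""
    if gsd_m <= 0:
        raise ValueError("gsd_m must be positive")
    return flight_speed_m_s * exposure_time_s / gsd_m


def max_speed_m_s(blur_limit_px, exposure_time_s, gsd_m):
    """Highest flight speed that keeps the blur below blur_limit_px."""
    if exposure_time_s <= 0:
        raise ValueError("exposure_time_s must be positive")
    return blur_limit_px * gsd_m / exposure_time_s


EXPOSURE_TIME_S = 1 / 320   # shutter speed stated in the text
GSD_M = 0.042               # nominal ground resolution (Section 3.1.1)
ASSUMED_SPEED_M_S = 5.0     # hypothetical, not the value from Table 2
ASSUMED_BLUR_LIMIT_PX = 0.5 # hypothetical tolerance

blur = motion_blur_px(ASSUMED_SPEED_M_S, EXPOSURE_TIME_S, GSD_M)
v_max = max_speed_m_s(ASSUMED_BLUR_LIMIT_PX, EXPOSURE_TIME_S, GSD_M)
print(f"blur at {ASSUMED_SPEED_M_S} m/s: {blur:.2f} px")
print(f"max speed for {ASSUMED_BLUR_LIMIT_PX} px blur: {v_max:.2f} m/s")
```

With these assumed values the blur stays at roughly 0.37 px, and a half-pixel tolerance would allow a flight speed of about 6.7 m/s.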

Table 2.

UAS mission and acquisition parameters. Wind speed was measured at the weather station Weberstedt/Hainich located 5 km to the NE of the test site. The mission footprint (i.e., the entire area covered by the UAS campaign) is noticeably larger than the Huss site because the flight planning accounted for a buffer surrounding the site. Abbreviation: ISO = International Organization for Standardization.

Due to the application of direct georeferencing during the SfM processing, GCPs were not needed. Nevertheless, five check points were evenly distributed across glades of the Huss site to allow for evaluating the positional accuracy of the processed orthomosaic and digital elevation model (DEM). To identify and locate the check points precisely in the UAS imagery, 50 cm × 50 cm Teflon panels featuring a black cross to mark the panel center were utilized. The positions of the Teflon panels were measured using survey-grade equipment. More specifically, a ppm10xx-04 full RTK CDGNSS sensor was employed in combination with a Novatel Vexxis GNSS L1/L2-Antenna [57]. Each check point was surveyed 50 times. The root-mean-square error (RMSE) (computed separately for x, y, z) was below 2 cm for all check points. In this study, the averaged positions of the 50 measurements were used.
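The averaging of the repeated check point measurements can be sketched as follows. This is a minimal illustration that assumes the per-axis RMSE describes the scatter of the 50 fixes around their mean position; the text does not state the exact definition. The function name, the base coordinate, and the synthetic scatter are hypothetical.

```python
import math


def mean_and_rmse(fixes):
    """Return the mean position and the per-axis RMSE around that mean.

    fixes: list of (x, y, z) tuples in metres.
    """
    n = len(fixes)
    if n == 0:
        raise ValueError("at least one fix is required")
    mean = tuple(sum(f[i] for f in fixes) / n for i in range(3))
    rmse = tuple(
        math.sqrt(sum((f[i] - mean[i]) ** 2 for f in fixes) / n)
        for i in range(3)
    )
    return mean, rmse


# Synthetic example: 50 fixes alternating around a hypothetical UTM position.
BASE = (598000.0, 5660000.0, 440.0)   # hypothetical coordinate (m)
AMPLITUDE = (0.010, 0.005, 0.015)     # hypothetical scatter per axis (m)
fixes = [
    tuple(b + (a if k % 2 == 0 else -a) for b, a in zip(BASE, AMPLITUDE))
    for k in range(50)
]

mean, rmse = mean_and_rmse(fixes)
print("mean position:", tuple(round(m, 3) for m in mean))
print("RMSE x, y, z (m):", tuple(round(r, 3) for r in rmse))
```

In this synthetic case the RMSE of each axis equals the chosen scatter amplitude, so all three values stay below the 2 cm level reported for the surveyed check points.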

2.3. Light Detection and Ranging (LiDAR) Data

For the terrain normalization of the SfM model (see Figure 3 for workflow), LiDAR data made openly available by the Thuringian land surveying office were used [58]. The LiDAR data were acquired in February 2017 and contained returns classified into ground and non-ground points. The average density of the ground points was 8 pts/m². According to the metadata provided by the Thuringian land surveying office, the horizontal and vertical accuracy of the LiDAR point clouds were 0.15 m and 0.09 m, respectively.

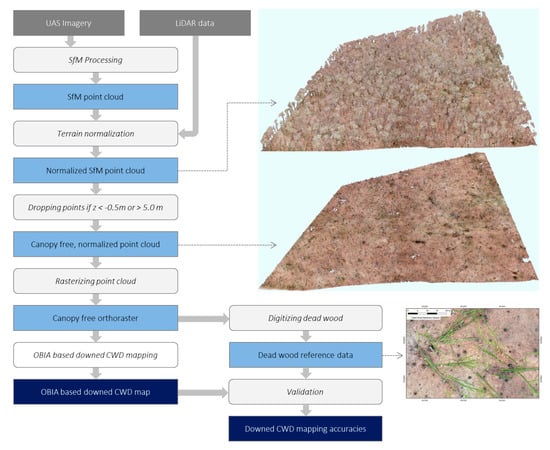

Figure 3.

The overall workflow developed and applied in this study. Color coding: Grey = input data, white = processing steps, light blue = intermediate products, dark blue = final products. The reference data for validation were digitized using the canopy-free raster data.

3. Methods

This section provides details on the method for automatically detecting dead wood and on the scheme for evaluating the mapping results. The workflow is depicted in Figure 3. In summary, the workflow begins with the processing of the UAS data into a point cloud using an SfM approach. This point cloud is normalized with respect to the terrain using LiDAR data. Subsequently, all points with a normalized height below –0.5 m or above 5 m are removed. The remaining points, which represent the forest floor including CWD and tree stumps, are converted to a raster data set that forms the basis for CWD mapping.

3.1. UAS Data Processing

3.1.1. Delineation of SfM Point Cloud

Using the SfM approach, a dense 3D point cloud was created based on the UAS images (Figure 3). The orthomosaic (raster) of the forest floor was delineated later in a separate step (see Section 3.1.2). The 3D reconstruction software Metashape 1.5.1 (Agisoft LLC) was used for SfM processing. The UAS images were not modified before processing. Based on the accurate position information of the UAS data, direct georeferencing was applied and no GCPs were used for processing. Accordingly, the parameter for the position accuracy of the camera was set to 0.02 m (Table 3). The exact determination of the camera positions (in the order of a few centimeters) ensures reliable internal calibration of the camera parameters and thus prevents systematic errors in the height models created, such as doming or bowling.

Table 3.

UAS data-processing parameters (Agisoft Metashape 1.5.1). Camera parameters according to Brown–Conrady model [59]. Abbreviations: f = focal length; cx, cy = principal point offset; k1, k2, k3 = radial distortion coefficients; p1, p2 = tangential distortion coefficients.

All images were aligned during processing and approximately 105,000 tie points were detected. According to flight altitude and camera hardware, the nominal ground resolution was roughly 4.2 cm. The dense point cloud comprised circa 650 million points for the entire UAS-mission area and 410 million points for the Huss site, which corresponded to an average point density of 1424 pts/m². As mentioned above, five check points were installed to assess the geometric accuracy of the SfM-based model. At all points, the deviation between check point coordinate and model coordinate was less than 5 cm (measured separately for x, y, z). As shown in Table 4, the RMSE of the check points (x, y, z) is below 3.5 cm. Further indications of the high geometric accuracy of the SfM model are the minor camera positional error and the low effective reprojection error (Table 4).

Table 4.

UAS data-processing results and camera (Brown–Conrady) model [59].

3.1.2. Generation of Canopy-Free Orthomosaic

The processing workflow for the generation of the canopy-free orthomosaic is provided in Figure 3. Processing was carried out using LAStools version 181108 [60]. First, the SfM point cloud was normalized for terrain using the ground points of the LiDAR dataset (LAStools command lasheight). Accordingly, the height of the terrain surface was subtracted from the SfM model, thus creating a surface model that only contains the object heights. Subsequently, all points featuring either a height below –0.5 m or above 5.0 m were removed, which resulted in an average point density of 688 pts/m² (Figure 3). The remaining points represent the forest floor, the lower part of tree trunks, and downed CWD. The lower threshold of –0.5 m was chosen to remove invalid points. Since the pulled-out roots of some windthrown trees cause cavities in the forest floor, a higher threshold would have resulted in holes in the point cloud data. The upper threshold of 5 m excludes most branches while maintaining the points representing downed CWD. To transfer this method to another area, this value needs to be adjusted according to the forest structure. It is also necessary to consider that trees may not immediately fall over completely, but first become caught in neighboring trees. In our study, all downed CWD was less than 5 m above ground. At this point it should be mentioned that supplementary data such as LiDAR data are not necessarily required for terrain normalization. This operation can also be performed using the generated SfM point cloud. However, this requires an accurate identification of the ground points. Since this is not the focus of our study, we used freely available LiDAR-based ground points as described above.
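The normalization and height filtering described above can be illustrated with a minimal sketch. It is not the LAStools implementation: the function names and the toy coordinates are hypothetical, and the nearest-neighbor ground lookup is a deliberate simplification of a proper terrain interpolation. Only the thresholds (–0.5 m and 5.0 m) are taken from the text.

```python
import math

def nearest_ground_height(x, y, ground_points):
    """Return the z value of the ground point closest to (x, y) in plan view.

    Simplification: a real workflow would interpolate a terrain surface
    from the ground points instead of using the nearest neighbor.
    """
    _gx, _gy, gz = min(ground_points, key=lambda g: math.hypot(g[0] - x, g[1] - y))
    return gz

def normalize_and_filter(points, ground_points, lower=-0.5, upper=5.0):
    """Replace z by height above ground and keep lower <= height <= upper."""
    kept = []
    for x, y, z, rgb in points:
        height = z - nearest_ground_height(x, y, ground_points)
        if lower <= height <= upper:
            kept.append((x, y, height, rgb))
    return kept

# Hypothetical toy data: flat terrain at 300 m above sea level.
ground = [(0.0, 0.0, 300.0), (1.0, 0.0, 300.0)]
cloud = [
    (0.1, 0.0, 300.2, (120, 100, 80)),  # log on the forest floor: kept
    (0.9, 0.1, 318.0, (90, 90, 90)),    # branch in the crown: removed
    (0.5, 0.0, 299.0, (0, 0, 0)),       # invalid point below ground: removed
]
near_ground = normalize_and_filter(cloud, ground)
print(len(near_ground))
```

When transferring the approach to another stand, `upper` is the parameter that would need to be adapted to the forest structure.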

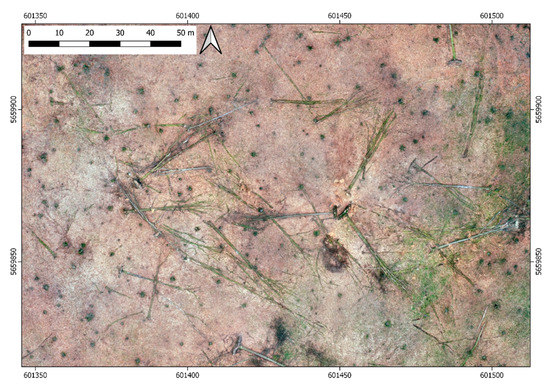

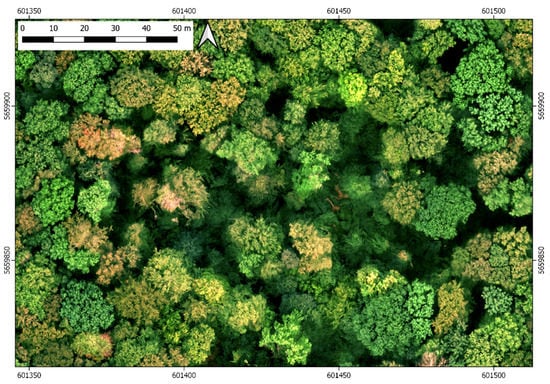

In a last step, the canopy-free orthomosaic was created by rasterizing the point cloud using the LAStools command lasgrid with the argument -rgb (to generate an RGB raster). The cell size of the raster was set to 5 cm. After running lasgrid, some voids occurred underneath a few tree crowns. These voids were filled by using the argument -fill 10 (searching and filling voids in the grid with a square search radius of 10 cells, i.e., 0.5 m). Figure 4 shows a subset of the final canopy-free orthomosaic, on the basis of which the automatic CWD detection is carried out. For comparison, the same area is shown in Figure 5 during leaf-on conditions. In Figure 5, foliage and dense canopy cover prevent the view of the forest floor and dead wood might only be detected in glades.
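The rasterization and void-filling step can be sketched as follows. This is an illustration under stated assumptions, not a description of how lasgrid works internally: averaging the point colors per cell and filling voids with the mean of populated neighbors are assumed rules, and all function names are hypothetical. The cell size (5 cm) and the search radius (10 cells) follow the text.

```python
import math

def rasterize_rgb(points, cell_size=0.05):
    """Average the RGB values of all points falling into each grid cell.

    Returns a dict mapping (row, col) to a mean (r, g, b) tuple.
    Assumption: per-cell averaging; the rule used by the original tool may differ.
    """
    sums = {}
    for x, y, _height, (r, g, b) in points:
        key = (math.floor(y / cell_size), math.floor(x / cell_size))
        acc = sums.setdefault(key, [0, 0, 0, 0])
        acc[0] += r
        acc[1] += g
        acc[2] += b
        acc[3] += 1
    return {key: (a[0] / a[3], a[1] / a[3], a[2] / a[3]) for key, a in sums.items()}

def fill_voids(grid, n_rows, n_cols, search_cells=10):
    """Fill empty cells with the mean color of populated cells in a square window."""
    filled = dict(grid)
    for row in range(n_rows):
        for col in range(n_cols):
            if (row, col) in grid:
                continue
            neighbours = [
                grid[(r, c)]
                for r in range(row - search_cells, row + search_cells + 1)
                for c in range(col - search_cells, col + search_cells + 1)
                if (r, c) in grid
            ]
            if neighbours:
                filled[(row, col)] = tuple(sum(ch) / len(neighbours) for ch in zip(*neighbours))
    return filled

# Hypothetical points: two fall into cell (0, 0), one into cell (0, 2).
pts = [
    (0.01, 0.01, 0.1, (100, 100, 100)),
    (0.02, 0.03, 0.1, (200, 200, 200)),
    (0.12, 0.01, 0.0, (50, 50, 50)),
]
grid = rasterize_rgb(pts)
filled = fill_voids(grid, n_rows=1, n_cols=3)
print(filled[(0, 1)])
```

With a cell size of 0.05 m, a search radius of 10 cells corresponds to the 0.5 m stated above.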

Figure 4.

Subset of the canopy-free orthomosaic created and used for coarse wood debris (CWD) mapping in this study. The subset is identical to the one shown in Figure 5. UAS imagery was acquired during leaf-off season (acquisition date 24 March 2019). The dark dots represent the lower part of the stems of standing trees. The varying color of the dead wood corresponds to different species and stages of decomposition. Since some trunks have been lying on the forest floor for several years, advanced decay is found. Such logs feature almost the same elevation as the surrounding ground and might not be detected when only geometric properties are considered.

Figure 5.

Subset of a UAS imagery-based orthomosaic (acquisition date 19 September 2019) for the Huss site. Due to the summer drought, foliage coloring and defoliation have already started. Still, the leaves prevent the view of the forest floor and dead wood can only be detected in canopy gaps.

3.2. Collection of Reference Data for Accuracy Assessment

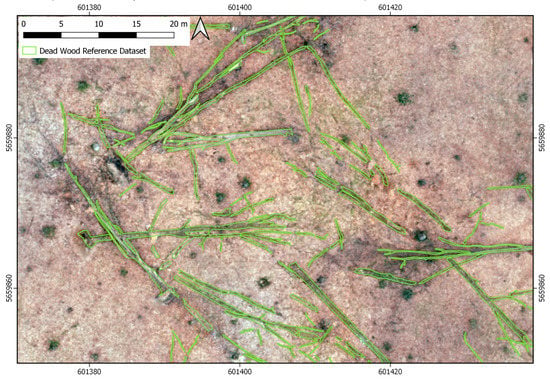

The obligations of the HNP administration include the monitoring of the natural forest development in the park with a special geographic focus on the core zone, including the Huss site. One of the tasks is the regular mapping of downed CWD. According to the specifications of the park administration, CWD objects with a minimum diameter of 0.15 m and a minimum length of 2 m need to be inventoried. During a field campaign carried out by rangers of the HNP, CWD was surveyed within the Huss site using RTK CDGNSS survey grade equipment. Similar to other studies [7,11,13,15,16], challenging GNSS conditions prevented accurate positioning and the positional accuracy was rather low (on the order of 10 m). Consequently, this data set was not appropriate to validate the mapping results of our study. In order to validate the map product, CWD was manually digitized for one quarter (north-eastern quadrant) of the Huss site, which was representative of the entire site. This approach has been used in many similar studies [11,14,16,18,25,26]. Figure 6 shows a subsection of the validation area including digitized CWD.

Figure 6.

Subset of the reference data used for validation. Since no suitable in-situ dead wood measurements were available, the dead wood was digitized manually for a quarter of the Huss site. A total of 225 dead wood objects met the criteria of the HNP administration.

The total length of downed CWD within the validation area fulfilling the specified criteria with regard to minimum length and minimum diameter was 6.473 km, which corresponded to 225 dead wood objects (essentially downed trees and several dismantled major branches).

3.3. Automated Dead Wood Detection Using a Raster Data-Based Object-Based Image Analysis (OBIA) Approach

In our study, we developed a line recognition approach that is exclusively based on spectral information. This approach utilizes the canopy-free orthomosaic as an input data set. For each of the three image layers (blue, green, and red) a line extraction algorithm was applied (Table 5, “Extract lines for RGB layers”). Different variables were defined describing the characteristics of the extracted linear structures, including line length (“length of the line in pixels”), line width (“width of the line in pixels”), border width (“width of the homogeneous border along the extracted line”), and line direction (“direction of the line in degrees”). In order to find lines in all possible directions, the line extraction algorithm was embedded in a loop covering all angles from 0 to 179 degrees. The grey values of the resulting layers (‘Blines’, ‘Glines’, and ‘Rlines’) were subsequently summed up utilizing the ‘layer arithmetics’ algorithm to obtain a final layer highlighting lines which occur in each individual band.
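The structure of this step (one line layer per band, a loop over all directions, and a final sum of the three layers) can be sketched in a few lines. The sketch does not reproduce the eCognition line extraction algorithm, whose internals are not described here. The contrast measure, the use of the maximum over all angles, the assumption of bright lines on a darker background, and all names and parameter values are hypothetical.

```python
import math

def line_response(band, row, col, angle_deg, length=9, border=2):
    """Mean grey value along a line through (row, col) minus the mean of two
    parallel lines offset by `border` pixels (a crude contrast measure).

    Assumption: lines are brighter than their surroundings.
    """
    theta = math.radians(angle_deg)
    d_row, d_col = -math.sin(theta), math.cos(theta)  # direction along the line
    n_row, n_col = d_col, -d_row                      # direction across the line
    half = length // 2

    def mean_along(offset):
        values = []
        for step in range(-half, half + 1):
            r = round(row + step * d_row + offset * n_row)
            c = round(col + step * d_col + offset * n_col)
            if 0 <= r < len(band) and 0 <= c < len(band[0]):
                values.append(band[r][c])
        return sum(values) / len(values) if values else 0.0

    sides = (mean_along(border) + mean_along(-border)) / 2
    return max(0.0, mean_along(0) - sides)

def line_layer(band, angles=range(0, 180), **kwargs):
    """Per-pixel maximum response over all tested directions (assumed rule)."""
    return [[max(line_response(band, r, c, a, **kwargs) for a in angles)
             for c in range(len(band[0]))] for r in range(len(band))]

def sum_layers(*layers):
    """Pixel-wise sum of several layers of identical size."""
    return [[sum(values) for values in zip(*rows)] for rows in zip(*layers)]

# Hypothetical grey toy image: one bright horizontal line in row 5.
band = [[0] * 11 for _ in range(11)]
band[5] = [100] * 11
blines = glines = rlines = line_layer(band)  # identical bands in this toy case
lines = sum_layers(blines, glines, rlines)
print(lines[5][5])
```

Summing the three band layers rewards structures that appear as lines in every band, which is the property the final layer is meant to highlight.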

Table 5.

The eCognition processing parameters used for raster data-based dead wood mapping.

After the generation of the lines layer, a threshold-based segmentation and classification (Table 5, “Segment and classify lines”) was applied. This routine utilizes the line layer to create a new image object level (“lvl1”) and to classify all resulting objects into line and non-line objects. A threshold of 30 for the grey value has been set after visually comparing the line layer to the RGB image. The generation of image objects generally reduces noise effects and increases the information basis for further analyses by adding shape, texture, and contextual features.

The resulting classification of linear structures was further adapted to meet certain object criteria and to eliminate misclassifications (Table 5, “Reshaping”). First, linear objects smaller than a minimum mapping unit of 30 pixels were removed. In order to ensure a connection between objects belonging to the same dead wood cluster, classified segments were grown into other objects with values larger than 0 in the line layer. Afterwards, all neighboring objects classified as dead wood were merged. In addition, the resulting objects were grown by two pixels in all directions to join parallel linear segments which very likely belong to the same dead wood object (Table 5, “Pixel-based growing”).
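The thresholding and the minimum mapping unit can be illustrated with a small connected-component sketch. It is a stand-in for the eCognition routines, not a reimplementation: treating grey values of at least 30 as line pixels and using an 8-neighborhood are assumptions, and the function name is hypothetical. The two numeric values (threshold 30, minimum mapping unit 30 pixels) follow the text.

```python
from collections import deque

def label_line_objects(line_layer, threshold=30, min_pixels=30):
    """Group pixels with grey value >= threshold into connected objects
    (8-neighborhood) and drop objects smaller than min_pixels."""
    rows, cols = len(line_layer), len(line_layer[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or line_layer[r0][c0] < threshold:
                continue
            seen[r0][c0] = True
            queue, pixels = deque([(r0, c0)]), []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        nr, nc = r + dr, c + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and not seen[nr][nc]
                                and line_layer[nr][nc] >= threshold):
                            seen[nr][nc] = True
                            queue.append((nr, nc))
            if len(pixels) >= min_pixels:  # minimum mapping unit
                objects.append(pixels)
    return objects

# Hypothetical line layer: one 35-pixel line and one 4-pixel blob.
layer = [[0] * 40 for _ in range(40)]
for col in range(2, 37):
    layer[10][col] = 60
for row in (30, 31):
    for col in (5, 6):
        layer[row][col] = 90
objects = label_line_objects(layer)
print(len(objects), len(objects[0]))
```

In this toy case only the 35-pixel line survives; the 4-pixel blob exceeds the grey value threshold but falls below the minimum mapping unit.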

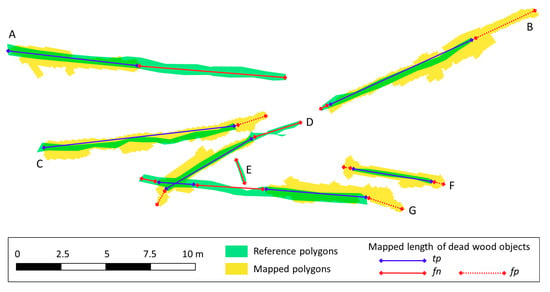

3.4. Accuracy Analysis

For assessing the accuracy of the downed CWD detection, two object-based approaches have been considered: (a) length-based assessment and (b) count-based assessment. For (a) we measured the length of correctly detected (true positive, tp length), missed out (false negative, fn length), and wrongly detected (false positive, fp length) CWD objects (i.e., CWD meters). For (b) the number of correctly detected, missed out, and wrongly detected CWD objects was determined (Figure 7).

Figure 7.

Mapped and manually digitized CWD for a small subset of the Huss site to illustrate the length-based accuracy analysis approach. Seven dead wood objects are shown (A–G). The length of the overlap area of reference polygons and mapped polygons corresponding to the same CWD object (solid blue lines) was defined as correctly detected (tp length). Missed out parts of CWD objects (solid red lines) correspond to fn length, while dotted red lines refer to overestimation (fp length). The length measurements were summed up for the entire validation area (one fourth of the Huss site).

The length measurements required for the first of the above assessments were performed manually. Accordingly, correctly detected, missed out, and wrongly detected fractions of CWD objects were digitized. To this end, the geographic information system (GIS) software QGIS 3.10.1 was used. The length of the overlap between a CWD reference polygon and an automatically detected CWD polygon was defined as a correctly detected length (Figure 7). Missed out and wrongly detected dead wood was measured in the same way. Finally, the individual object-wise length measurements were summed up for an overall assessment.

The count-based validation approach considers dead wood objects as entities (e.g., one log or one dismantled major branch). The accuracy of detecting individual dead wood objects was based on the following criteria. A dead wood object was tagged as correctly identified (tp) if >50% of its length was correctly detected. All other object segments were either tagged fn (missed out) or fp (overdetection) (Figure 7). For example, for object A in Figure 7, the length of the correctly recognized (tp) partition of the object is less than 50% of the total length of this object. Consequently, this dead wood object was tagged as missed out (fn). Except for object E, the remaining objects were considered as correctly identified (tp). Following this evaluation scheme, the figures for precision and recall were computed according to the following equations:

$$\mathrm{precision} = \frac{tp}{tp + fp}, \qquad \mathrm{recall} = \frac{tp}{tp + fn}$$
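The tagging rule for individual reference objects (more than 50% of the object length correctly detected) can be written down as a short sketch. The function name and the example lengths are hypothetical and only serve to illustrate the criterion.

```python
def tag_reference_object(total_length_m, detected_length_m):
    """Tag a reference CWD object as 'tp' if more than half of its length
    was correctly detected, otherwise as 'fn' (missed out)."""
    if total_length_m <= 0:
        raise ValueError("total length must be positive")
    return "tp" if detected_length_m / total_length_m > 0.5 else "fn"

# Hypothetical objects: (total length, correctly detected length) in meters.
reference_objects = {
    "log_1": (20.0, 8.0),   # 40% detected
    "log_2": (12.0, 9.5),   # about 79% detected
    "log_3": (6.0, 3.0),    # exactly 50% detected, not more than 50%
}
tags = {name: tag_reference_object(total, detected)
        for name, (total, detected) in reference_objects.items()}
print(tags)
```

Because the criterion is a strict inequality, an object with exactly half of its length detected is counted as missed out.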

4. Results

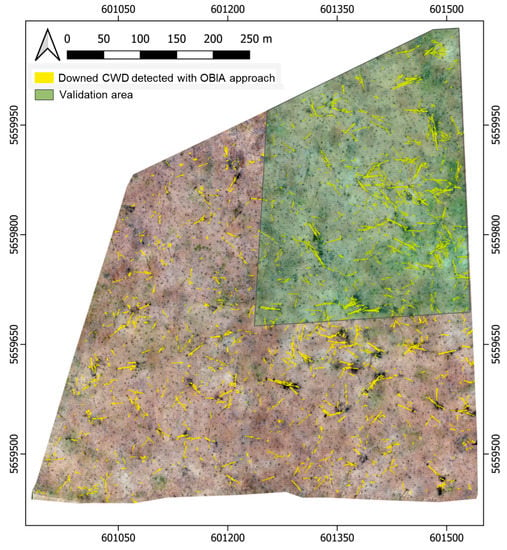

4.1. Coarse Wood Debris (CWD) Map

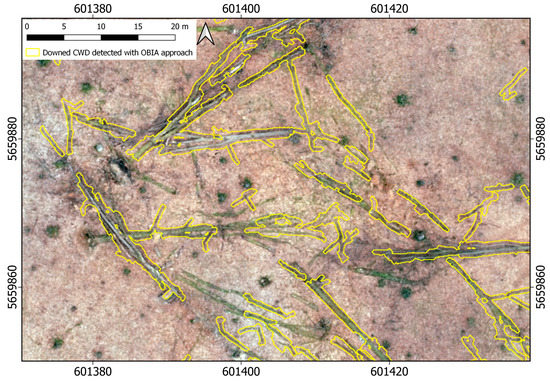

Figure 8 shows a map of the automatically detected downed CWD for the entire Huss site. Although this illustration does not facilitate detailed discussions on the quality of the dead wood detection, it is obvious that the OBIA approach is capable of identifying linear features. From a visual perspective, there is no risk of confusion with non-elongated objects such as tree stumps and/or patches covered with short green vegetation (dominant species: Allium ursinum). Even though some dead wood objects are missed out, the false negative rate of the mapping result seems to be rather low.

Figure 8.

CWD map of the entire Huss site including validation area. An initial visual assessment suggests that the developed object-based image analysis (OBIA) approach is able to identify the predominant part of the CWD.

Figure 9 provides a detailed view of the generated CWD map. It shows the same subset of the Huss site as Figure 6 and reveals that the majority of the dead wood was detected within this map section. Moreover, as the presented mapping method focuses on the recognition of linear features, it was possible to avoid confusion with trunks of standing trees. Still, some CWD objects were missed out entirely and some false positive detections occur that can most likely be attributed to linear artefacts in the canopy-free orthomosaic.

Figure 9.

Subset of Figure 8 providing a detailed view of the mapped CWD. According to the map, the majority of the dead wood was detected, even though the object shapes do not match the dead wood boundaries precisely for all examples. Since the mapping method was designed to detect linear features, confusion between CWD and standing stems was avoided.

4.2. Accuracy Analysis

As explained in Section 3.4, the accuracy of the dead wood mapping was estimated on the basis of the total length of the downed CWD objects (in meters) as well as the number of dead wood objects identified. According to the reference data set, the total length of dead wood in the validation area (north-eastern quadrant of the Huss site, see Figure 8) is 6473 m, of which 4478 m were correctly identified. A total of 1995 m was missed out (Table 6) and the overdetection amounts to 887 m, which corresponds to a precision of 83.5% and a recall of 69.2%.

Table 6.

Statistics obtained from the accuracy assessment. For explanation see Section 3.4 and Figure 7. Abbreviations: tp = true positive, fn = false negative, fp = false positive.

Regarding the count-based CWD assessment, 180 out of 225 downed CWD objects were correctly detected according to the criteria defined in Section 3.4. In total, 45 objects were missed out (fn) while 76 false alarms (fp) occurred. The figures for precision and recall are 70.3% and 80.0%, respectively (Table 6).
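The reported figures can be reproduced from the tp, fn, and fp values given above, assuming the standard definitions precision = tp / (tp + fp) and recall = tp / (tp + fn). The following sketch is only an arithmetic check; the function and variable names are hypothetical.

```python
def precision(tp, fp):
    """Share of the detected CWD (length or count) that is correct."""
    return tp / (tp + fp)

def recall(tp, fn):
    """Share of the reference CWD (length or count) that was detected."""
    return tp / (tp + fn)

# Values reported in the text: lengths in meters, counts in objects.
length_based = {"tp": 4478, "fn": 1995, "fp": 887}
count_based = {"tp": 180, "fn": 45, "fp": 76}

results = {}
for name, s in (("length", length_based), ("count", count_based)):
    results[name] = (
        round(100 * precision(s["tp"], s["fp"]), 1),
        round(100 * recall(s["tp"], s["fn"]), 1),
    )
print(results)  # {'length': (83.5, 69.2), 'count': (70.3, 80.0)}
```

The check also confirms that tp and fn add up to the reference totals (4478 m + 1995 m = 6473 m and 180 + 45 = 225 objects).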

5. Discussion

To our knowledge, this is the first study investigating the usage of UAS-borne imagery for automatic detection of downed CWD underneath a dense canopy of deciduous forest. Inoue et al. [26] used a comparable UAS dataset in a deciduous forest to manually detect logs, but did not remove the canopy. According to their report, the recognition of the logs was hindered due to occlusion caused by tree crowns. In our study, the impact of occlusion was minimized due to the usage of a canopy-free orthomosaic. Other publications using optical data for dead wood recognition either focused on snag detection or on mapping of logs within large patches of storm-felled trees. Accordingly, the accuracies reported in these papers might not be comparable to the validation results of our study.

LiDAR-based research aiming at downed CWD detection was carried out in several studies [7,8,9,12,14,15,16,18]. The results of those publications dealing with direct mapping of individual dead wood objects can be used as a benchmark to assess the performance of our approach. Nonetheless, it should be mentioned that the majority of the reported LiDAR-based results employ high density point clouds (>20 pts/m²) which are usually not (yet) generated in the framework of regular LiDAR acquisitions commissioned by land surveying offices or similar authorities.

Overall, a comparison with studies reporting accuracy measures based on airborne LiDAR data reveals that our results are among the better in terms of CWD detection rate (precision and recall; see Section 1, [8]). This outcome is particularly promising when considering that we even included small CWD objects with a minimum diameter of 15 cm. As in several other studies [11,14,16,18,25,26], reference data were obtained from the same data set that was also used to classify downed CWD and a simple, objective, and reproducible validation approach was developed.

Table 6 reveals certain discrepancies for the results obtained from the length- and count-based assessments. While a considerable fraction of the total length of the dead wood was missed out, the number of missed out dead wood objects was comparably small. This imbalance originates from the definition of correctly identified CWD objects. Several times, branches of downed trees were missed out using the OBIA approach. This results in an underestimation of the overall CWD length for the validation site. Nonetheless, the correctly recognized fraction of the individual CWD object can be above 50% and thus causes a true positive vote for this object.

The proposed method requires a sufficient proportion of gaps in the forest canopy to grant free sight of the downed CWD objects. This requirement can be met if the forest is very sparse or if there are large gaps in the forest canopy. Another option is to acquire the UAS data during leaf-off conditions, as in this study. However, some patches of the forest floor might remain occluded by stems or other tree elements which results in areas of no data. Thanks to the high percentage of image overlap (Table 2) and the wide aperture angle of the used camera (Table 1), only six no data patches greater than 0.01 m² occurred in this study. Their sizes range from 0.017 m² to 0.887 m². As the patches were rather small, they were filled during the processing.

Without any doubt, no data areas are a source of uncertainty due to the potential omission of CWD objects. The number and size of no data areas can further be reduced by more sophisticated flight patterns including multiple flight directions, even greater image overlap, and off-nadir images. However, passive optical data will fail over dense and non-deciduous forests. Under such conditions, active systems such as LiDAR are superior.

Despite these limitations, great advantages of UAS for CWD detection are their general flexibility and the scalability of the imagery they produce. If there is a need to detect even smaller CWD objects or FWD, UAS missions can be executed at lower flight altitudes and UAS camera lenses with smaller aperture angles can be employed to improve ground resolution. UAS campaigns can be conducted at comparably low costs and at almost any time, provided that weather conditions are suitable.

Another advantage of using UAS is the availability of high-resolution spectral and geometric information. Although it is understood that the full potential of such UAS data can only be exploited if both spectral and geometric information is integrated, this study only uses RGB orthomosaics for CWD detection (yet, it should be emphasized again that without the geometric information of the 3D point cloud, the creation of a canopy-free mosaic would not have been possible). The rationale behind this is to detect CWD of various stages of decay. In particular, heavily decomposed downed CWD hardly stands out from the ground due to its low height and thus might not be detectable using the geometric information [13] only. At the same time, these linear structures are still visible in RGB images. The integration of spectral and geometric information becomes particularly interesting when it comes to CWD monitoring on a regular (e.g., yearly) basis. For such applications, geometric (height) information is likely to improve the detection of net gains of CWD, especially for fresh dead wood. This matter has to be further investigated in future research. Another research question to be answered is to what extent additional spectral information, such as a red-edge or near infrared (NIR) channel, can improve CWD detection. UAS equipped with multispectral cameras are available and allow for the acquisition of the needed data.

In this study, an image analysis approach was developed and tested for CWD mapping. Despite its simplicity, the mapping results and accuracy statistics can compete with those of previous studies. The line detection algorithm used is likely to produce similar results for other study areas. Nevertheless, other CWD mapping approaches based on RGB imagery might result in increased detection rates and need to be tested in future studies. Machine learning, and in particular deep learning, is widely used for various EO applications and has already been successfully demonstrated for the mapping of storm-felled trees [32] and downed logs [25] using optical data. The major disadvantages of these techniques are the need for massive amounts of training data and their limitations with respect to the transferability to other sites with different characteristics. Nonetheless, it is likely that CWD recognition will improve when recent machine-learning developments are taken into account [25].

Due to the mainly regular shapes of downed CWD, template matching approaches have already been implemented for CWD mapping using optical [32] and airborne LiDAR data [7,15]. Depending on the EO data, site characteristics, and CWD detection requirements (e.g., minimum size of CWD), the templates need to be adjusted to optimize the detection rate. Thus, existing region- and data-adjusted templates and template-based methods might not be transferable to other regions and data sets.

In summary, ultra high-resolution UAS imagery holds a large potential to reliably detect and monitor small scale forest objects such as downed CWD. However, suitable methods need to be (further) developed and adjusted in order to accurately extract these forest attributes from the wealth of available information. Hence, it is without doubt that ultra high-resolution data will trigger the development of novel as well as the refinement of existing image analysis methods.

6. Conclusions

This study aimed at investigating the potential of UAS imagery for mapping downed CWD in a natural and dense deciduous forest. The developed OBIA approach utilized only spectral (RGB) data for the actual dead wood classification. However, the 3D information contained in the acquired UAS data set was still a key requirement for achieving the study objective. The reason for this is that the available multi-view stereoscopic UAS imagery enabled the generation of 3D point clouds. These permitted the separation of objects (or points of interest) according to their height above ground. In this work, we took advantage of this possibility by extracting only ground and near-ground points from the 3D data. This filtered point cloud was then used to delineate a canopy-free orthomosaic containing only spectral information. The resulting RGB raster provided a detailed representation of the forest floor and formed the very basis for detecting dead wood in the studied area.

A simple and transferable line detection method was developed to map downed CWD. The approach led to expedient classification accuracies that are comparable to the results achieved in previous studies using airborne high-density LiDAR point clouds.

The data for this study were generated using an inexpensive RTK-capable UAS. For forest applications in particular, these systems feature several advantages. One major benefit is the dispensability of GCPs. RTK technology allows for very accurate measurements of the UAS position that propagate to the acquired images and to the final 3D models created from the imagery. In this way, change detection approaches without the effort of additional co-registration become possible [50]. This is of particular interest for monitoring purposes such as the observation of the development of CWD. However, it is worth noting that the specific application of CWD change detection might still require the co-registration of multi-temporal UAS orthomosaics due to the small size of dead wood objects.

For once-off surveys aiming at the evaluation of the status-quo of downed CWD, the centimeter-level positioning capability of RTK systems is not needed (if a centimeter to decimeter-level location of the CWD objects is not mandatory). Conventional GNSS positional accuracies and sophisticated flight patterns still permit the generation of sufficient 3D models with negligible systematic errors [38,47]. Such models can be produced using images acquired with low-cost off-the-shelf consumer drones.

As a final remark, CWD mapping in deciduous forests is feasible at low cost and with high flexibility. Besides CWD mapping, several other domains do and will benefit from the very high-resolution and inexpensive spectral and height data that can be acquired by UAS. These disciplines include, but are not limited to, agriculture [61,62], geology [63], archeology [36], structural health monitoring [34], topographic surveying [38], and general mapping [64]. Consequently, an extensive use of UAS in agencies, businesses, and in the private domain (including citizen science) is expected.

Author Contributions

All the authors have made substantial contribution towards the successful completion of this manuscript: Conceptualization, C.T. (Christian Thiel); methodology, C.T. (Christian Thiel), M.V., C.T. (Christian Thau), A.H., S.H.; validation, L.E., C.T. (Christian Thiel), A.H.; formal analysis, C.T. (Christian Thiel), M.M.M.; investigation, C.T. (Christian Thiel), M.M.M.; resources, C.T. (Christian Thiel), S.H.; data curation, C.T. (Christian Thiel), M.M.M.; writing—original draft preparation, C.T. (Christian Thiel), L.E., M.M.M.; writing—review and editing, C.T. (Christian Thau), M.M.M.; visualization, M.M.M., C.T. (Christian Thiel); supervision, C.T. (Christian Thiel); project administration, C.T. (Christian Thiel); funding acquisition, C.T. (Christian Thiel). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors thank the HNP administration for general support during the field work. The authors also thank the University of Göttingen for providing access to the flux tower platform in order to launch and land the UAS.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Puletti, N.; Giannetti, F.; Chirici, G.; Canullo, R. Deadwood distribution in European forests. J. Maps 2017, 13, 733–736. [Google Scholar] [CrossRef]

- Ifadis, I.M.; Demertzi, A.A. Mapping Based on Dead Wood Availability. Locating Biodiversity Hotspots in Managed Forests. In Proceedings of the 14th International Multidisciplinary Scientific Geoconference (SGEM), Albena, Bulgaria, 17–26 June 2014; pp. 391–398. [Google Scholar]

- Bauerle, H.; Nothdurft, A.; Kandler, G.; Bauhus, J. Monitoring habitat trees and coarse woody debris based on sampling schemes. Allg. Forst Jagdztg. 2009, 180, 249–260. [Google Scholar]

- Holzwarth, F.; Kahl, A.; Bauhus, J.; Wirth, C. Many ways to die—Partitioning tree mortality dynamics in a near-natural mixed deciduous forest. J. Ecol. 2013, 101, 220–230. [Google Scholar] [CrossRef]

- Mataji, A.; Sagheb-Talebi, K.; Eshaghi-Rad, J. Deadwood assessment in different developmental stages of beech (Fagus orientalis Lipsky) stands in Caspian forest ecosystems. Int. J. Environ. Sci. Technol. 2014, 11, 1215–1222. [Google Scholar] [CrossRef][Green Version]

- Albrecht, L. The importance of dead woody material in forests. Forstwiss. Cent. 1991, 110, 106–113. [Google Scholar] [CrossRef]

- Nystrom, M.; Holmgren, J.; Fransson, J.E.S.; Olsson, H. Detection of windthrown trees using airborne laser scanning. Int. J. Appl. Earth Obs. Geoinf. 2014, 30, 21–29. [Google Scholar] [CrossRef]

- Marchi, N.; Pirotti, F.; Lingua, E. Airborne and terrestrial laser scanning data for the assessment of standing and lying deadwood: Current situation and new perspectives. Remote Sens. 2018, 10, 1356. [Google Scholar] [CrossRef]

- Pesonen, A.; Leino, O.; Maltamo, M.; Kangas, A. Comparison of field sampling methods for assessing coarse woody debris and use of airborne laser scanning as auxiliary information. For. Ecol. Manag. 2009, 257, 1532–1541. [Google Scholar] [CrossRef]

- Tanhuanpaa, T.; Kankare, V.; Vastaranta, M.; Saarinen, N.; Holopainen, M. Monitoring downed coarse woody debris through appearance of canopy gaps in urban boreal forests with bitemporal ALS data. Urban For. Urban Green. 2015, 14, 835–843. [Google Scholar] [CrossRef]

- Yrttimaa, T.; Saarinen, N.; Luoma, V.; Tanhuanpaa, T.; Kankare, V.; Liang, X.L.; Hyyppa, J.; Holopainen, M.; Vastaranta, M. Detecting and characterizing downed dead wood using terrestrial laser scanning. ISPRS J. Photogramm. Remote Sens. 2019, 151, 76–90. [Google Scholar] [CrossRef]

- Polewski, P.; Yao, W.; Heurich, M.; Krzystek, P.; Stilla, U. Detection of fallen trees in ALS point clouds using a normalized cut approach trained by simulation. ISPRS J. Photogramm. Remote Sens. 2015, 105, 252–271. [Google Scholar] [CrossRef]

- Lindberg, E.; Hollaus, M.; Mücke, W.; Fransson, J.E.S.; Pfeifer, N. Detection of Lying Tree Stems from Airborne Laser Scanning Data Using a Line Template Matching Algorithm. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Antalya, Turkey, 11–13 November 2013. [Google Scholar]

- Leiterer, R.; Mucke, W.; Morsdorf, F.; Hollaus, M.; Pfeifer, N.; Schaepman, M.E. Operational forest structure monitoring using airborne laser scanning. Photogramm. Fernerkund. Geoinf. 2013, 173–184. [Google Scholar] [CrossRef]

- Mucke, W.; Deak, B.; Schroiff, A.; Hollaus, M.; Pfeifer, N. Detection of fallen trees in forested areas using small footprint airborne laser scanning data. Can. J. Remote Sens. 2013, 39, S32–S40. [Google Scholar] [CrossRef]

- Blanchard, S.D.; Jakubowski, M.K.; Kelly, M. Object-based image analysis of downed logs in disturbed forested landscapes using lidar. Remote Sens. 2011, 3, 2420–2439. [Google Scholar] [CrossRef]

- Aicardi, I.; Dabove, P.; Lingua, A.M.; Piras, M. Integration between TLS and UAV photogrammetry techniques for forestry applications. iForest Biogeosci. For. 2017, 10, 41–47. [Google Scholar] [CrossRef]

- Polewski, P.; Yao, W.; Heurich, M.; Krzystek, P.; Stilla, U. A voting-based statistical cylinder detection framework applied to fallen tree mapping in terrestrial laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2017, 129, 118–130. [Google Scholar] [CrossRef]

- Sherrill, K.R.; Lefsky, M.A.; Bradford, J.B.; Ryan, M.G. Forest structure estimation and pattern exploration from discrete-return lidar in subalpine forests of the central Rockies. Can. J. For. Res. 2008, 38, 2081–2096. [Google Scholar] [CrossRef]

- Hauglin, M.; Gobakken, T.; Lien, V.; Bollandsas, O.M.; Naesset, E. Estimating potential logging residues in a boreal forest by airborne laser scanning. Biomass Bioenergy 2012, 36, 356–365. [Google Scholar] [CrossRef]

- Andersen, H.E.; McGaughey, R.J.; Reutebuch, S.E. Estimating forest canopy fuel parameters using LIDAR data. Remote Sens. Environ. 2005, 94, 441–449. [Google Scholar] [CrossRef]

- Bright, B.C.; Hudak, A.T.; McGaughey, R.; Andersen, H.E.; Negron, J. Predicting live and dead tree basal area of bark beetle affected forests from discrete-return lidar. Can. J. Remote Sens. 2013, 39, S99–S111. [Google Scholar] [CrossRef]

- Sumnall, M.J.; Hill, R.A.; Hinsley, S.A. Comparison of small-footprint discrete return and full waveform airborne lidar data for estimating multiple forest variables. Remote Sens. Environ. 2016, 173, 214–223. [Google Scholar] [CrossRef]

- Pesonen, A.; Maltamo, M.; Eerikainen, K.; Packalen, P. Airborne laser scanning-based prediction of coarse woody debris volumes in a conservation area. For. Ecol. Manag. 2008, 255, 3288–3296. [Google Scholar] [CrossRef]

- Jiang, S.; Yao, W.; Heurich, M. Dead Wood Detection Based on Semantic Segmentation of VHR Aerial CIR Imagery Using Optimized FCN-DenseNet. In Proceedings of the Photogrammetric Image Analysis & Munich Remote Sensing Symposium, Munich, Germany, 18–20 September 2019.

- Inoue, T.; Nagai, S.; Yamashita, S.; Fadaei, H.; Ishii, R.; Okabe, K.; Taki, H.; Honda, Y.; Kajiwara, K.; Suzuki, R. Unmanned aerial survey of fallen trees in a deciduous broadleaved forest in Eastern Japan. PLoS ONE 2014, 9, 7.

- Krzystek, P.; Serebryanyk, A.; Schnorr, C.; Cervenka, J.; Heurich, M. Large-scale mapping of tree species and dead trees in Sumava National Park and Bavarian Forest National Park using lidar and multispectral imagery. Remote Sens. 2020, 12, 661.

- Pasher, J.; King, D.J. Mapping dead wood distribution in a temperate hardwood forest using high resolution airborne imagery. For. Ecol. Manag. 2009, 258, 1536–1548.

- Dunford, R.; Michel, K.; Gagnage, M.; Piegay, H.; Tremelo, M.L. Potential and constraints of Unmanned Aerial Vehicle technology for the characterization of Mediterranean riparian forest. Int. J. Remote Sens. 2009, 30, 4915–4935.

- Butler, R.; Schlaepfer, R. Spruce snag quantification by coupling colour infrared aerial photos and a GIS. For. Ecol. Manag. 2004, 195, 325–339.

- Butler-Manning, D. Stand Structure, Gap Dynamics and Regeneration of a Semi-Natural Mixed Beech Forest on Limestone in Central Europe—A Case Study. Ph.D. Thesis, Albert-Ludwigs-Universität, Freiburg, Germany, 2007.

- Yang, B.; Travaglini, D.; Giannetti, F.; Kutchartt, E.; Bottalico, F.; Chirici, G. Kernel feature cross-correlation for unsupervised quantification of damage from windthrow in forests. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 17–22.

- Yao, H.; Qin, R.J.; Chen, X.Y. Unmanned aerial vehicle for remote sensing applications-A review. Remote Sens. 2019, 11, 1443.

- Kersten, J.; Rodehorst, V.; Hallermann, N.; Debus, P.; Morgenthal, G. Potentials of Autonomous UAS and Automated Image Analysis for Structural Health Monitoring. In Proceedings of the 40th IABSE Symposium, Nantes, France, 19–21 September 2018.

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

- Tscharf, A.; Rumpler, M.; Fraundorfer, F.; Mayer, G.; Bischof, H. On the use of UAVs in mining and archaeology—Geo-accurate 3D reconstructions using various platforms and terrestrial views. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 15–22.

- Taddia, Y.; Stecchi, F.; Pellegrinelli, A. Coastal mapping using DJI Phantom 4 RTK in post-processing kinematic mode. Drones 2020, 4, 9.

- James, M.R.; Robson, S.; d’Oleire-Oltmanns, S.; Niethammer, U. Optimising UAV topographic surveys processed with structure-from-motion: Ground control quality, quantity and bundle adjustment. Geomorphology 2017, 280, 51–66.

- Humanitarian UAV Network. UAViators. Available online: http://uaviators.org/ (accessed on 20 September 2020).

- Schonberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 4104–4113.

- Förstner, W. A framework for Low Level Feature Extraction. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 383–394.

- Zhuo, X.Y.; Koch, T.; Kurz, F.; Fraundorfer, F.; Reinartz, P. Automatic UAV image geo-registration by matching UAV images to georeferenced image data. Remote Sens. 2017, 9, 376.

- Nister, D. An efficient solution to the five-point relative pose problem. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 756–770.

- Chum, O.; Matas, J.; Obdrzalek, S. Enhancing Ransac by Generalized Model Optimization. In Proceedings of the Asian Conference on Computer Vision, Jeju, Korea, 27–30 January 2004; pp. 812–817.

- Triggs, B.; McLauchlan, P.; Hartley, R.I.; Fitzgibbon, A. Bundle Adjustment–A Modern Synthesis. In Proceedings of the International Workshop on Vision Algorithms, Corfu, Greece, 21–22 September 1999; pp. 298–372.

- Tippetts, B.; Lee, D.-J.; Lillywhite, K.; Archibald, J. Review of stereo vision algorithms and their suitability for resource-limited systems. J. Real Time Image Process. 2013, 11, 5–25.

- James, M.R.; Robson, S. Mitigating systematic error in topographic models derived from UAV and ground-based image networks. Earth Surf. Process. Landf. 2014, 39, 1413–1420.

- Griffiths, D.; Burningham, H. Comparison of pre- and self-calibrated camera calibration models for UAS-derived nadir imagery for a SfM application. Prog. Phys. Geogr. Earth Environ. 2018, 43, 215–235.

- DJI. DJI Phantom 4 RTK User Manual v1.4; DJI: Shenzhen, China, 2018.

- Thiel, C.; Müller, M.M.; Berger, C.; Cremer, F.; Dubois, C.; Hese, S.; Baade, J.; Klan, F.; Pathe, C. Monitoring selective logging in a pine-dominated forest in Central Germany with repeated drone flights utilizing a low cost RTK quadcopter. Drones 2020, 4, 11.

- Forlani, G.; Dall’Asta, E.; Diotri, F.; di Cella, U.M.; Roncella, R.; Santise, M. Quality assessment of DSMs produced from UAV flights georeferenced with on-board RTK positioning. Remote Sens. 2018, 10, 311.

- Beneke, C.; Butler-Manning, D. Coarse Woody Debris (CWD) in the Weberstedter Holz, a Near Natural Beech Forest in Central Germany; Forest & Landscape Denmark: Copenhagen, Denmark, 2003.

- Guse, T. Regeneration und Etablierung von Sechs Mitteleuropäischen Laubbaumarten in Einem „Naturnahen“ Kalkbuchenwald im Nationalpark Hainich/Thüringen; University Jena: Jena, Germany, 2009.

- Seele, C. The Influence of Deer Browsing on Natural Forest Regeneration; University Jena: Jena, Germany, 2011.

- Ratcliffe, S.; Holzwarth, F.; Nadrowski, K.; Levick, S.; Wirth, C. Tree neighbourhood matters—Tree species composition drives diversity-productivity patterns in a near-natural beech forest. For. Ecol. Manag. 2015, 335, 225–234.

- Fritzlar, D.; Henkel, A.; Hornschuh, M.; Kleidon-Hildebrandt, A.; Kohlhepp, B.; Lehmann, R.; Lorenzen, K.; Mund, M.; Profft, I.; Siebicke, L.; et al. Exkursionsführer—Wissenschaft im Hainich; Nationalparkverwaltung Hainich, ThüringenForst: Bad Langensalza, Germany, 2016; p. 63.

- PPM. 10xx GNSS Sensor. Available online: http://www.ppmgmbh.com/ppm_design/10xx-GNSS-Sensor.html (accessed on 5 November 2019).

- Landesamt für Bodenmanagement und Geoinformation. Geodaten in Thueringen. Available online: www.geoportal-th.de (accessed on 20 September 2020).

- Conrady, A.E. Lens-systems, decentered. Mon. Not. R. Astron. Soc. 1919, 79, 384–390.

- Isenburg, M. LASTools. Available online: https://rapidlasso.com (accessed on 20 September 2020).

- Padua, L.; Vanko, J.; Hruska, J.; Adao, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

- Tsouros, D.C.; Triantafyllou, A.; Bibi, S.; Sarigannidis, P.G. Data acquisition and Analysis Methods in UAV-based Applications for Precision Agriculture. In Proceedings of the 15th International Conference on Distributed Computing in Sensor Systems, Santorini, Greece, 29–31 May 2019; IEEE: New York, NY, USA, 2019; pp. 377–384.

- Fleming, Z.D.; Pavlis, T.L. An orientation based correction method for SfM-MVS point clouds-Implications for field geology. J. Struct. Geol. 2018, 113, 76–89.

- Milas, A.S.; Cracknell, A.P.; Warner, T.A. Drones—The third generation source of remote sensing data. Int. J. Remote Sens. 2018, 39, 7125–7137.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).