1. Introduction

Ship detection in optical remote sensing images is a challenging task with a wide range of applications, such as ship positioning, maritime traffic control and vessel salvage [1]. Unlike natural images, which are taken at close range from a horizontal view, remote sensing images are acquired by satellite sensors from a top-down perspective and are vulnerable to factors such as weather. Offshore and inland river ship detection has been studied on both synthetic aperture radar (SAR) and optical remote sensing imagery. Some alternative machine learning approaches have also been proposed [2,3,4,5]. However, the classic ship detection methods based on SAR images produce a high false alarm ratio and are influenced by the sea surface model, especially on inland rivers and in offshore areas. Schwegmann et al. [6] used deep highway networks to avoid the vanishing gradient problem. They developed their own three-class SAR dataset, which allows for more meaningful analysis of ship discrimination performance, using data from Sentinel-1 (Extra Wide Swath), Sentinel-3 and RADARSAT-2 (Scan-SAR Narrow). They used Deep Highway Networks 2, 20, 50 and 100 with 5-fold cross-validation and obtained an accuracy of 96%, outperforming classical techniques such as SVM, decision trees and AdaBoost. Carlos Bentes et al. [7] used a custom CNN with TerraSAR-X Multi Look Ground Range Detected (MGD) images to detect ships and icebergs. They compared their results with SVM and PCA+SVM and showed that the proposed model outperforms these classical techniques. The classic detection methods based on SAR images do not perform well on small and densely gathered ships. With the increasing resolution and quantity of optical remote sensing images, ship detection in optical remote sensing images has attracted considerable research interest. This paper mainly discusses ship detection in optical remote sensing images. In the object detection task, natural images are mainly used for front-back object detection. By contrast, remote sensing images are mainly used for left-right object detection [8]. Ship detection in remote sensing images is strongly affected by viewpoint changes, cloud occlusion, wave interference and background clutter. In particular, characteristics of optical remote sensing images such as the diversity of target sizes, the high complexity of the background and the small size of targets make ship detection particularly difficult.

In recent years, ship detection methods for optical remote sensing images have mainly adopted a two-stage, coarse-to-fine detection strategy [9,10]. The first step is the ship candidate extraction stage. All candidate regions that possibly contain ship targets are searched out in the entire image by region proposal algorithms, which is a coarse extraction process. In this stage, image information such as color, texture and shape is usually taken into account [1,11]. Region proposal algorithms include the sliding window-based method [12], the image segmentation-based method [10], and the saliency analysis-based method [13,14]. These methods preliminarily extract the ship candidate regions, which are then filtered and merged according to shape, scale information and neighborhood analysis [15]. The selective search (SS) algorithm [16] is a representative algorithm for candidate region extraction and is widely used in object detection tasks.

The second step is the ship recognition stage. The ship candidate regions are classified by a binary classifier, which determines whether a ship target is located in the candidate region [17]. It is a fine recognition process: the features of ships are extracted and then the candidate regions are classified. Many traditional methods extract low-level features, such as the scale-invariant feature transform (SIFT) [18], the histogram of oriented gradients (HOG) [19], the deformable part model (DPM) feature [20] and the structure-local binary pattern (LBP) feature [21,22], to classify candidate regions. With the popularization of deep learning, some methods use convolutional neural networks (CNNs) to extract ship features, which are high-level features carrying more semantic information. The extracted features are combined with a classifier to classify all candidate regions and distinguish ships from the background. Many classifiers, such as the support vector machine (SVM) [1,23], AdaBoost [24], and the unsupervised discrimination strategy [13], are adopted to recognize the candidate regions.

Although traditional methods have achieved considerable detection results in clear and calm ocean environments, they still have many deficiencies. Yao et al. [25] found that the traditional methods have several shortcomings. First, the extracted candidate regions contain a large amount of redundancy, which leads to expensive computation. Second, the hand-crafted features focus on the shape or texture of ships, which requires manual observation of all ships. The complex background and the variability in ship size lead to poor detection robustness and low detection speed. Most importantly, when the ships are very small or are concentrated at a port, the extraction of ship candidate regions is particularly difficult. As a result, the accuracy and efficiency of ship detection are greatly reduced.

Recently, convolutional neural networks (CNNs), which have good feature expression capability, have been widely used in image classification [26], object detection [27,28], semantic segmentation [29], image segmentation [30] and image registration [31,32]. Object detection based on deep convolutional neural networks has achieved good performance on large-scale natural image data sets. These methods fall into two main categories: two-stage methods and one-stage methods. Two-stage methods originated from R-CNN [33], followed successively by Fast R-CNN [34] and Faster R-CNN [28]. R-CNN is the first object detection framework based on deep convolutional neural networks [35]; it uses the selective search (SS) algorithm to extract candidate regions and computes features with a CNN. A set of class-specific linear SVMs [36] and regressors are used to classify and fine-tune the bounding boxes, respectively. Fast R-CNN improves on R-CNN by avoiding repeated calculation of candidate region features. Faster R-CNN proposes a region proposal network (RPN) to replace the selective search (SS) method for extracting candidate regions, which improves computational efficiency by sharing features between the RPN and the object detection network. One-stage methods, such as YOLO [27] and SSD [37], treat detection as a regression problem and achieve an end-to-end mapping directly from image pixels to bounding box coordinates with a fully convolutional network. SSD detects objects on multiple feature maps with different resolutions from a deep convolutional network and achieves better detection results than YOLO.

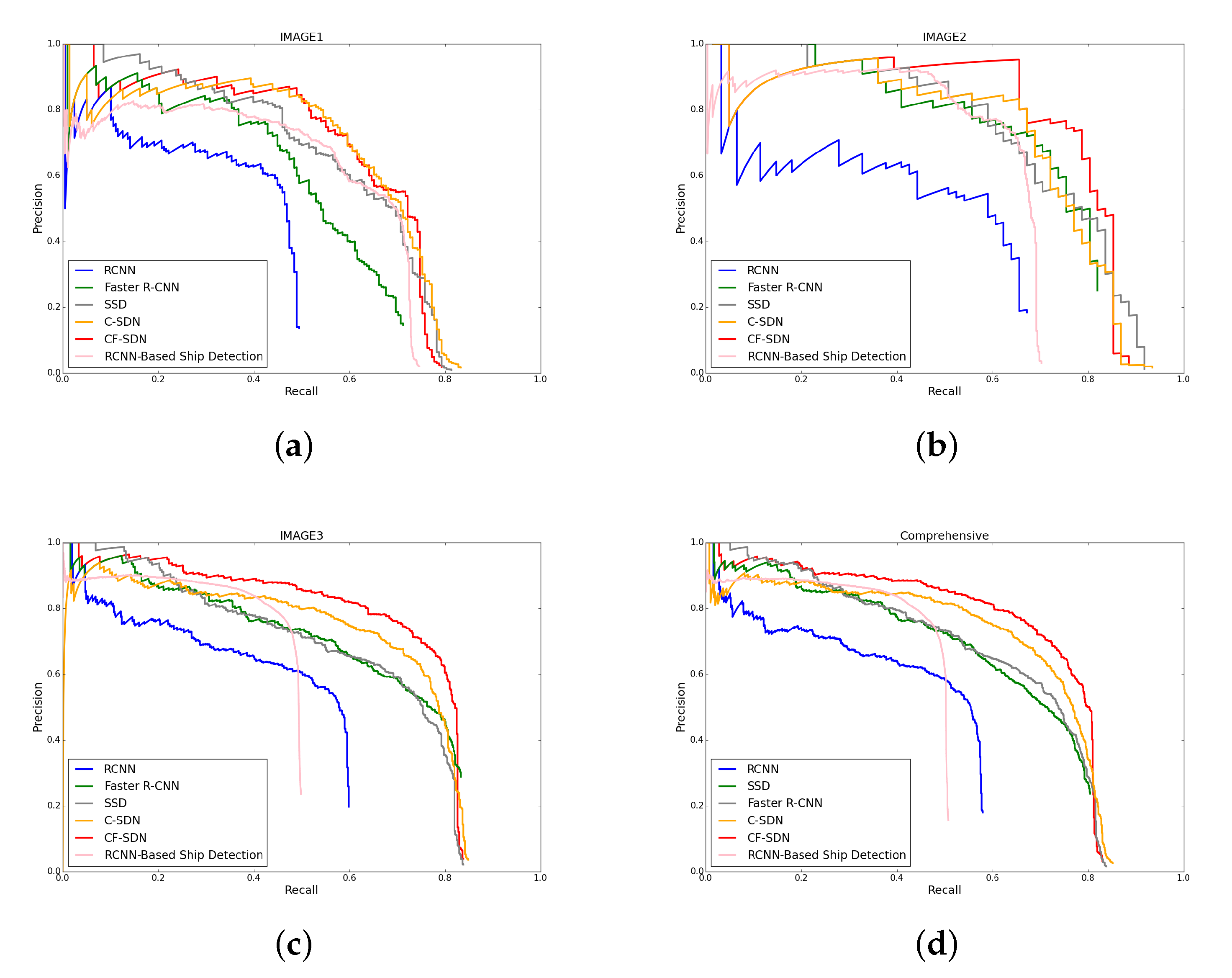

In recent years, many ship detection algorithms [25] based on deep convolutional neural networks have been proposed. These methods extract image features directly with a CNN, avoiding complex shape and texture analysis, which significantly improves the accuracy and efficiency of ship detection in optical remote sensing images. Zhang et al. [38] proposed S-CNN, which combines a CNN with specially designed proposals extracted from two ship models. Zou et al. [23] proposed SVD Networks, which use a CNN to adaptively learn image features and adopt a feature pooling operation and a linear SVM classifier to determine the position of the ship. Hou et al. [39] proposed a size-adapted CNN to enhance ship detection performance for different ship sizes; it contains multiple fully convolutional networks of different scales to adapt to different ship sizes. Yao et al. [25] applied a region proposal network (RPN) [28] to discriminate ship targets and regress the detection bounding boxes, in which the anchors are designed according to the intrinsic shape of ship targets. Wu et al. [40] trained a classification network to detect the locations of ship heads and adopted an iterative multitask network to perform bounding-box regression and classification [41]. However, these methods must first perform feature region extraction, which reduces the efficiency of the algorithm. Most importantly, these methods produce more false detections on land and fail to detect small ships.

This paper includes three main contributions:

(1) To reduce false detections on land, we use a sea-land separation algorithm [42] that combines gradient information and gray-level information. This method uses gradient and gray-level information to achieve a preliminary separation of land and sea, and then eliminates non-connected regions through a series of morphological operations and by ignoring small regions.

(2) To address the problem that small ships cannot be detected, we use the Feature Pyramid Network (FPN) [43] and a multi-scale detection strategy. FPN adds a top-down path with lateral connections that combines low-resolution, semantically strong features with high-resolution, semantically weak features, which effectively addresses the small target detection problem. The multi-scale detection strategy is proposed to achieve ship detection with different degrees of refinement.

(3) We designed a two-stage detection network for ship detection in optical remote sensing images. It obtains the positions of the predicted ships directly from the image without additional candidate region extraction operations, which greatly improves the efficiency of ship detection. Specifically, we propose a coarse-to-fine ship detection network (CF-SDN) whose feature extraction structure takes the form of a feature pyramid network, achieving an end-to-end mapping directly from image pixels to bounding boxes with confidence scores. The CF-SDN contains multiple detection layers, with a coarse-to-fine detection strategy employed at each detection layer.

The remainder of this paper is organized as follows. Section 2 introduces our method, including the preprocessing of optical remote sensing images (the sea-land separation algorithm, the multi-scale detection strategy and two strategies to eliminate the influence of image cutting) and the structure of the coarse-to-fine ship detection network (CF-SDN) (the feature extraction structure, the distribution of anchors, the coarse-to-fine detection strategy and the details of training and testing). Section 3 describes the experiments performed on an optical remote sensing image data set, and Section 4 presents conclusions.

2. Methodology

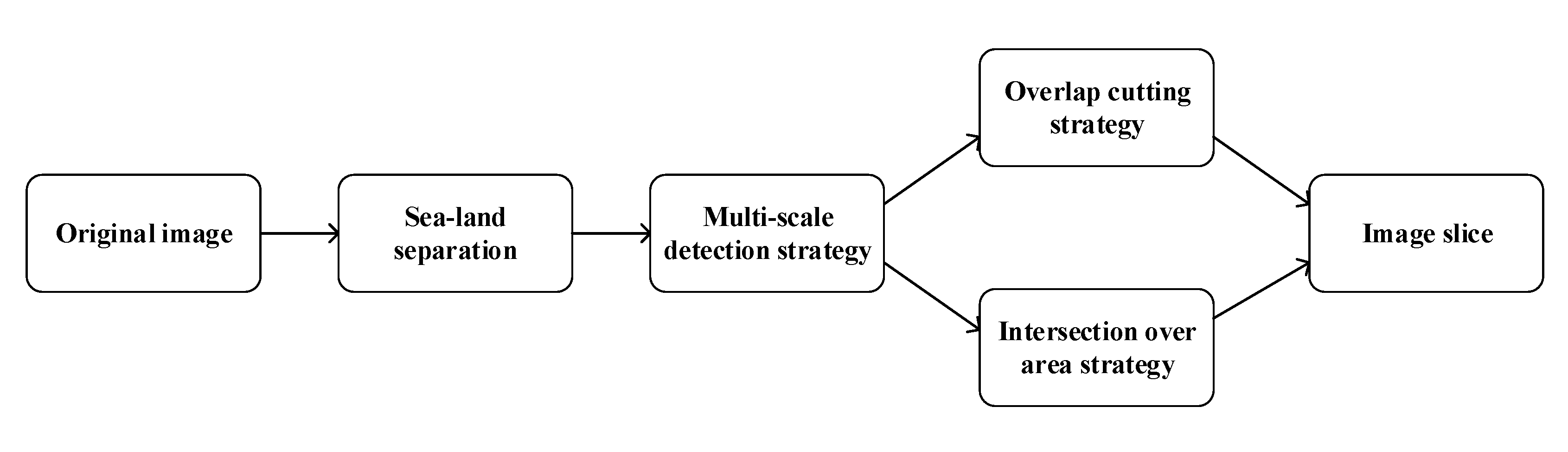

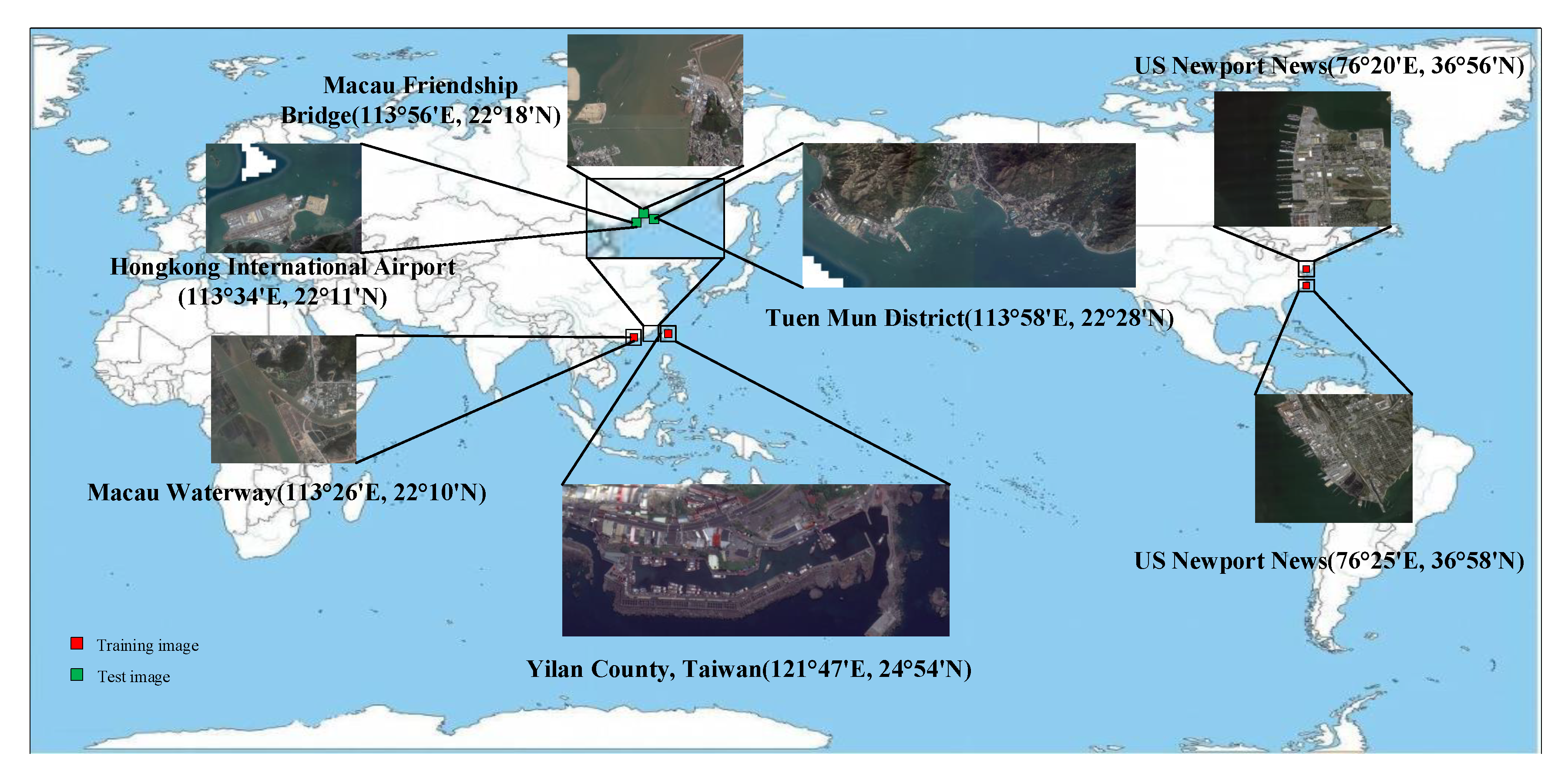

In this section, we introduce the preprocessing of optical remote sensing images (the sea-land separation algorithm, the multi-scale detection strategy and two strategies to eliminate the influence of image cutting) and the structure of the coarse-to-fine ship detection network (CF-SDN) (the feature extraction structure, the distribution of anchors, the coarse-to-fine detection strategy and the details of training and testing). The preprocessing procedure for optical remote sensing images is shown in Figure 1.

2.1. Sea-land Separation Algorithm

Optical remote sensing images are obtained by satellites and aerial sensors, so the area covered by an image is wide and the geographical background is complex. In the ship detection task, ships are usually scattered in water areas (sea areas) or inshore areas. In general, land and ship areas present relatively high gray levels and complex textures, in contrast to the sea area. Due to the complexity of the background in optical remote sensing images, the characteristics of some land areas are very similar to those of ships. This can easily lead to ships being detected on land, which is called a false alarm. Therefore, it is necessary to use a sea-land separation algorithm to distinguish the sea area (or water area) from the land area before the actual detection.

The sea-land separation algorithm [

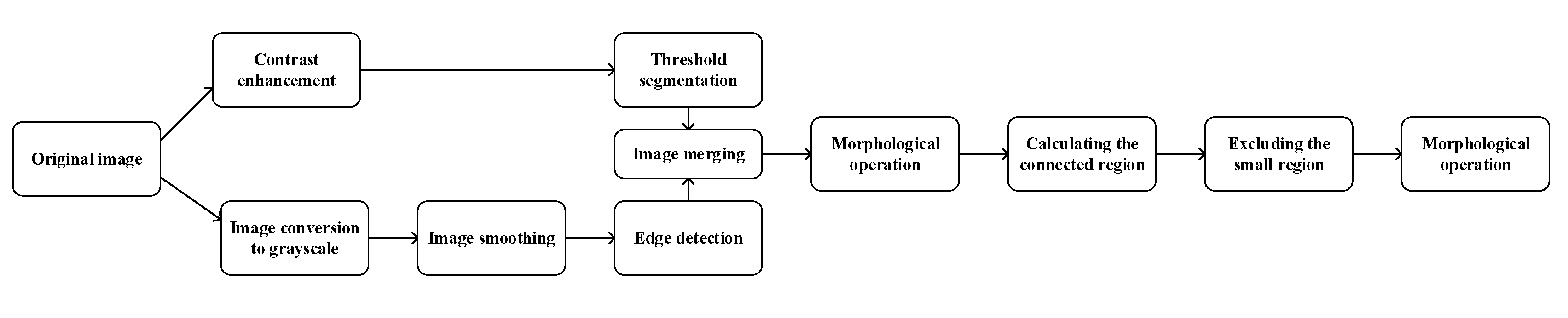

42] used in this paper considers the gradient information and the gray information of the optical remote sensing image comprehensively, combines some typical image morphology algorithms, and finally generates a binary image. In the process of sea-land separation, the algorithm that only considers the gradient information of the image performs well when the sea surface is relatively calm and the land texture is complex. However, the algorithm is difficult to achieve sea-land separation when the sea surface texture is complicated. The algorithm considering gray-scale information of the image is suitable for processing uniform texture images, but is difficult to process a complex image region. Therefore, the advantages of these two algorithms can be complemented with each other. The combination of gradient information and gray scale information can adapt to the complex situation of the optical remote sensing images, and can overcome the problem of poor sea-land separation performance caused by considering single information. The sea-land separation process is shown in

Figure 2. The specific implementation details of the algorithm are as follows:

(1) Threshold segmentation and edge detection are performed separately on the original image. Before threshold segmentation, the contrast of the image is enhanced to highlight regions whose pixel values differ greatly. Similarly, the image is smoothed before edge detection, because traditional edge detection methods produce many subtle wavy textures on the sea surface, which can be eliminated by filtering. Here, we enhance the contrast of the image by histogram equalization and perform threshold segmentation with the Otsu algorithm. At the same time, the median filter is used to smooth the image, and the median filter size selected in our experiment is , because the median filter is a nonlinear filter that not only removes noise but also preserves the edge information of the image when the image background is complex. Then the Canny operator is used to detect the image edges, with the low and high thresholds set to 10% and 20% of the maximum, respectively.

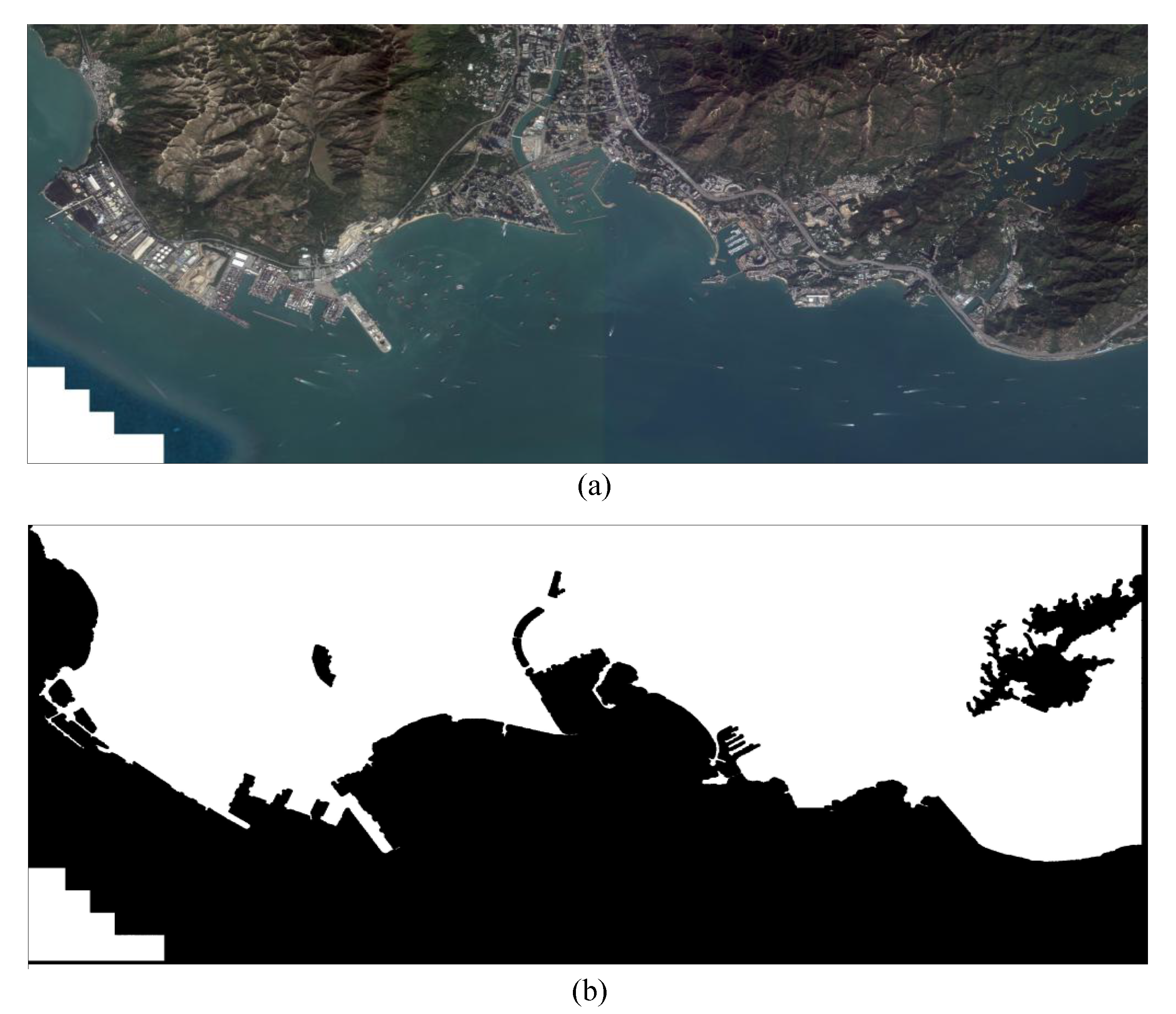

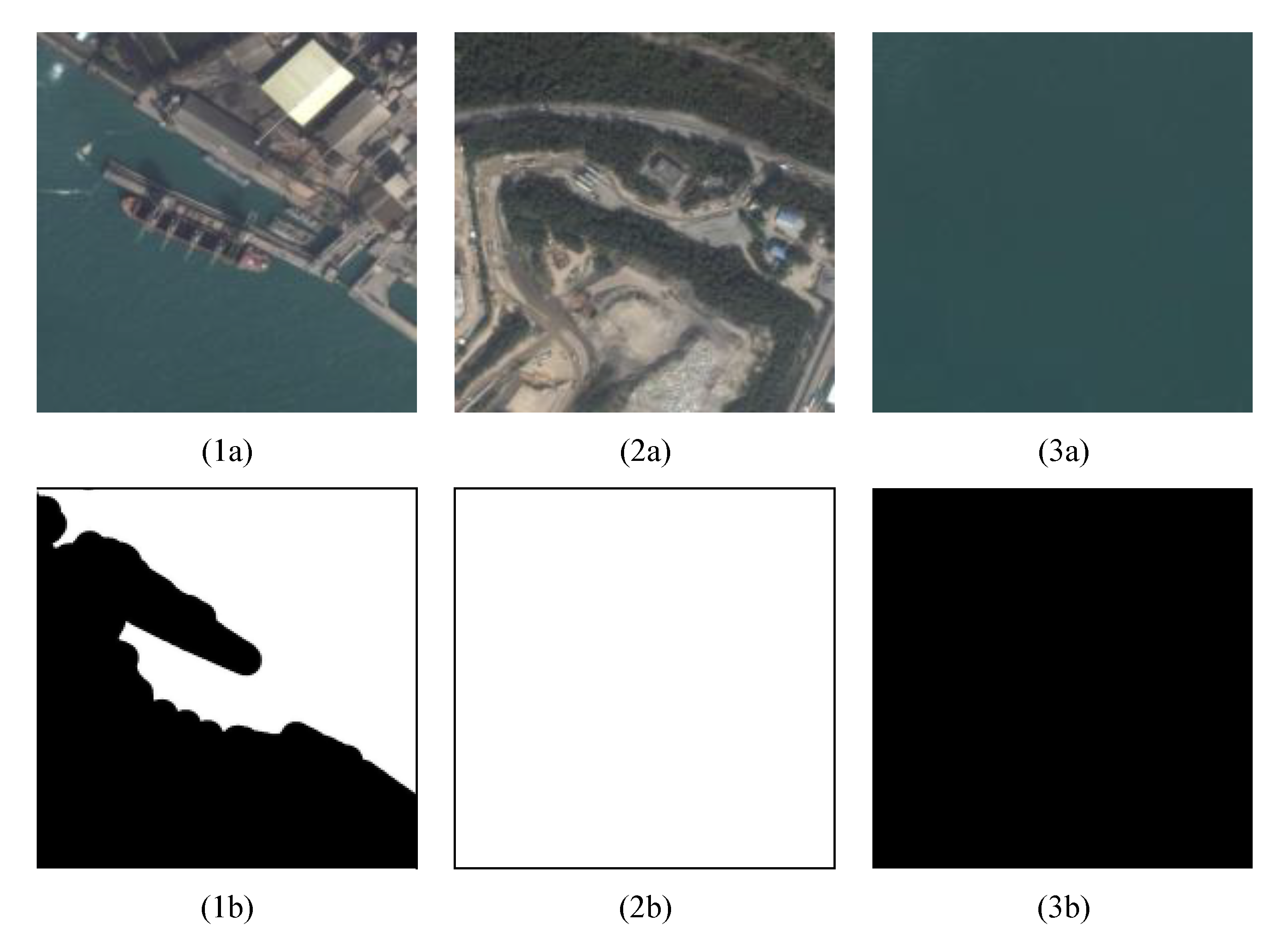

(2) The threshold segmentation results and the edge detection results are combined by a logical OR operation, generating a binary image that highlights non-water areas and is regarded as the preliminary sea-land separation result. In the binary image, the pixel value of the land area is set to 1 and the pixel value of the water area is set to 0. The result (for example, for IMAGES3) is shown in Figure 3.

(3) Finally, a series of morphological operations are performed on this binary image. The basic morphological operations include dilation, erosion, opening and closing. Among them, dilation and closing can fill gaps in the land contours of the binary map and remove small holes, while erosion and opening can eliminate small protrusions and narrow sections in the land area. Here, we first apply dilation and closing to the binary image to eliminate small holes in the land area. Then we compute the connected regions of the processed binary image and exclude the small regions (corresponding to ships or small islands at sea). The bumps on the land edges are eliminated by erosion and opening. These morphological operations can be repeated to ensure that the sea and land areas are completely separated. The size and shape of the structuring elements are determined by analyzing the characteristics of the non-connected areas on land in each experiment. The structuring elements selected in our experiment are disks of size 5 and 10.
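A minimal pure-Python sketch of three of the building blocks named in steps (1) to (3) is given below: Otsu thresholding, the logical OR of the two masks, and the removal of small connected regions. It is an illustration under stated assumptions, not the implementation used in this paper: histogram equalization, median filtering, Canny edge detection and the dilation, erosion, opening and closing steps are omitted, and the function names, the 4-connectivity and the `min_area` parameter are hypothetical choices.

```python
from collections import deque

def otsu_threshold(pixels, levels=256):
    """Gray level t that maximizes between-class variance; pixels > t are foreground."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = sum0 = 0  # pixel count and gray-level sum of the class <= t
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var_between = w0 * w1 * (m0 - m1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t

def combine(thresh_mask, edge_mask):
    """Logical OR of two binary masks (1 = non-water, 0 = water)."""
    return [[a | b for a, b in zip(r1, r2)] for r1, r2 in zip(thresh_mask, edge_mask)]

def remove_small_regions(mask, min_area):
    """Set to 0 every 4-connected region of 1s with fewer than min_area pixels."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    seen = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if out[y][x] != 1 or seen[y][x]:
                continue
            region, queue = [], deque([(y, x)])
            seen[y][x] = True
            while queue:  # breadth-first flood fill of one region
                cy, cx = queue.popleft()
                region.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and out[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) < min_area:
                for ry, rx in region:
                    out[ry][rx] = 0
    return out
```

Removing small regions from the land mask is what lets ships and small islands fall back into the water class, so that they are still passed to the detector.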

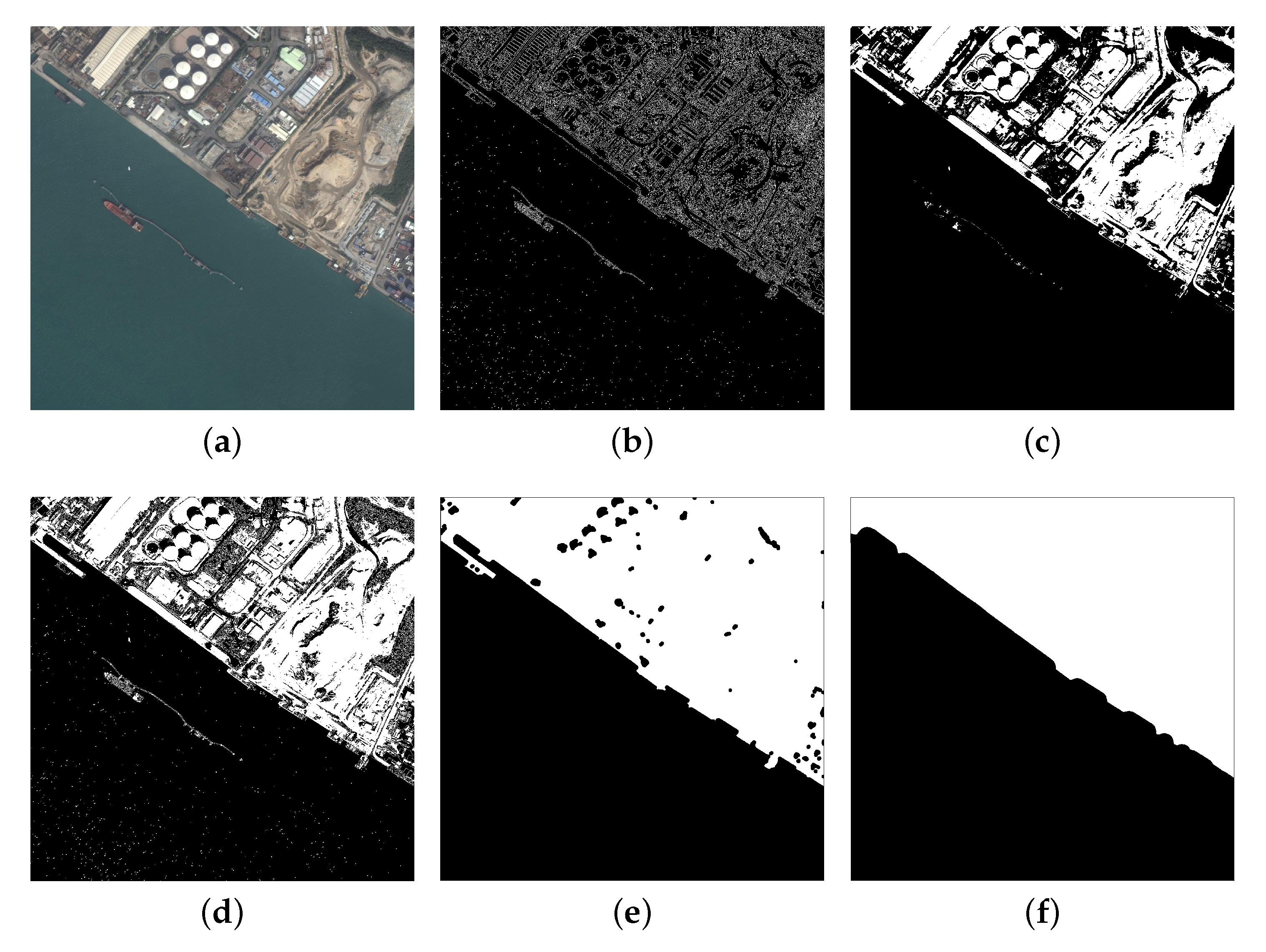


During testing, only areas that contain water are sent to CF-SDN for ship detection; pure land areas are ignored.

Figure 4 gives the intermediate results of a typical image slice in the sea-land separation process. The results of edge detection and threshold segmentation complement each other to highlight non-water areas more completely. When only threshold segmentation is used, land areas with low gray values may be classified as sea. Edge detection highlights the areas with complex textures and complements the results of threshold segmentation. We apply dilation and closing to the combined results in sequence. Then the connected regions are computed and the small regions are removed. The final sea-land separation results highlight the land area, while ships on the water surface are classified as sea area.

2.2. Multi-Scale Detection Strategy

Optical remote sensing images are generally very large. The length and width of an image are usually several thousand pixels, so ship targets appear very small relative to the entire image. Therefore, it is necessary to cut the entire remote sensing image into image slices and run detection on each slice separately. These image slices are normalized to the fixed size () in a certain proportion. The coarse-to-fine ship detection network then outputs the detection results for these image slices. The outputs of the network are rescaled according to the corresponding proportion. Finally, the detection results are mapped back to the original image according to the cutting position.
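The cut, rescale and map-back steps can be sketched as follows. This is a minimal illustration under assumptions: square slices, an axis-aligned `(x1, y1, x2, y2)` box format, and the hypothetical names `slice_origins`, `map_box_to_image`, `slice_size` and `net_size` (the fixed network input size), none of which come from the original text.

```python
def slice_origins(length, size, stride):
    """Start offsets of slices along one axis; a final slice is added to reach the border."""
    if length <= size:
        return [0]
    origins = list(range(0, length - size + 1, stride))
    if origins[-1] != length - size:
        origins.append(length - size)
    return origins

def map_box_to_image(box, origin, slice_size, net_size):
    """Map a box from network-input coordinates back to full-image coordinates."""
    scale = slice_size / net_size  # undo the resize applied to the slice
    ox, oy = origin                # top-left corner of the slice in the full image
    x1, y1, x2, y2 = box
    return (x1 * scale + ox, y1 * scale + oy, x2 * scale + ox, y2 * scale + oy)
```

For example, a box predicted on a 200-pixel network input that came from a 400-pixel slice is first scaled by 2 and then shifted by the slice origin.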

The sea-land separation results obtained in the previous subsection are also used in this subsection. Most ship detection methods set the pixel values of the land area in the remote sensing image to zero or to the image's mean value in order to mask the land during detection. However, crudely removing the original pixel values of the land area can easily lead to missed detections of ships at the boundary between sea and land, and if the separation results are not accurate enough, detection performance is greatly reduced. In this paper, we use a threshold to quickly exclude areas that contain only land, and detect ships in areas that contain water (including the boundary between sea and land). The specific method is as follows:

First, when the test image is cut, the corresponding sea-land separation result (a binary image) is cut into binary image slices with the same cutting method, so each remote sensing image slice corresponds to a binary image slice. In the cut image, the ratio of ship area to slice area becomes larger. Through extensive experiments and statistical analysis, we found that when the average value of a binary image slice exceeds a certain threshold, that is, when the slice is almost entirely land, the remaining water area in the slice does not contain ships.

Figure 5 lists 3 examples. We calculate the average value of each binary image slice to determine whether the slice contains water. If the value is greater than the set threshold (0.8), the corresponding remote sensing image slice is considered to contain almost no water area, so we skip it and do not run detection on it.
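The slice-skipping rule can be written in a few lines. The sketch below assumes the binary mask is a list of rows with land encoded as 1 and water as 0, as described for the sea-land separation result; the function names are hypothetical.

```python
def land_fraction(mask_slice):
    """Average value of a binary mask slice (1 = land, 0 = water)."""
    total = sum(len(row) for row in mask_slice)
    return sum(sum(row) for row in mask_slice) / total

def should_detect(mask_slice, threshold=0.8):
    """Skip the slice when its average value is greater than the threshold."""
    return land_fraction(mask_slice) <= threshold
```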

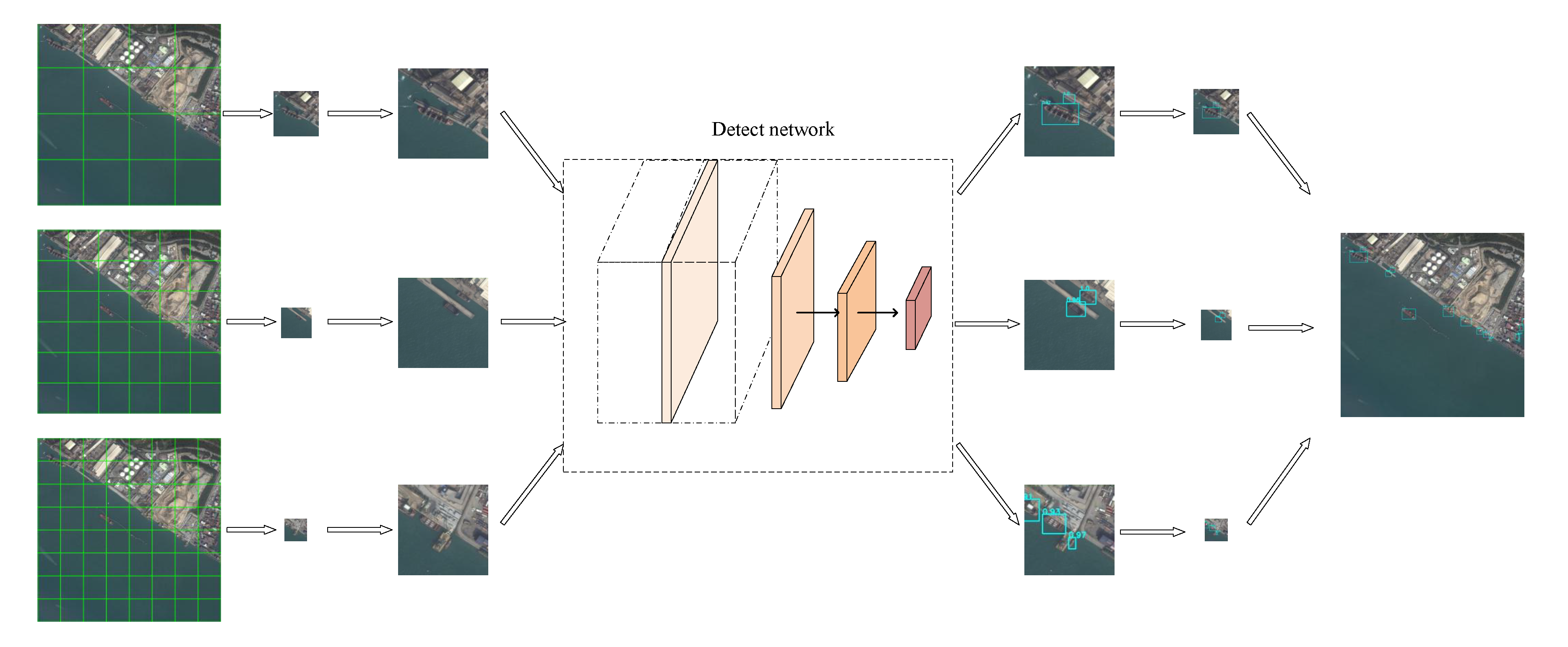

The method described above uses a single cutting size to cut and detect the test image. However, the scale distribution of ships is wide: a small ship covers only dozens of pixels, while a large ship covers tens of thousands of pixels. It is difficult to choose one cutting size that ensures ships at all scales are accurately predicted. If the cutting size is small, many large ships are cut off, which leads to missed detections. If the cutting size is large, many small ships appear even smaller and are difficult to detect. We propose the multi-scale detection strategy shown in Figure 6 to solve this dilemma.

In the multi-scale detection strategy, multiple cutting sizes are used during testing to cut the test image into image slices of different scales. The test image is thus detected with multiple cutting sizes to achieve detection at different degrees of refinement. The detection results at each cutting size are then combined to make ship detection in optical remote sensing images more detailed and accurate.

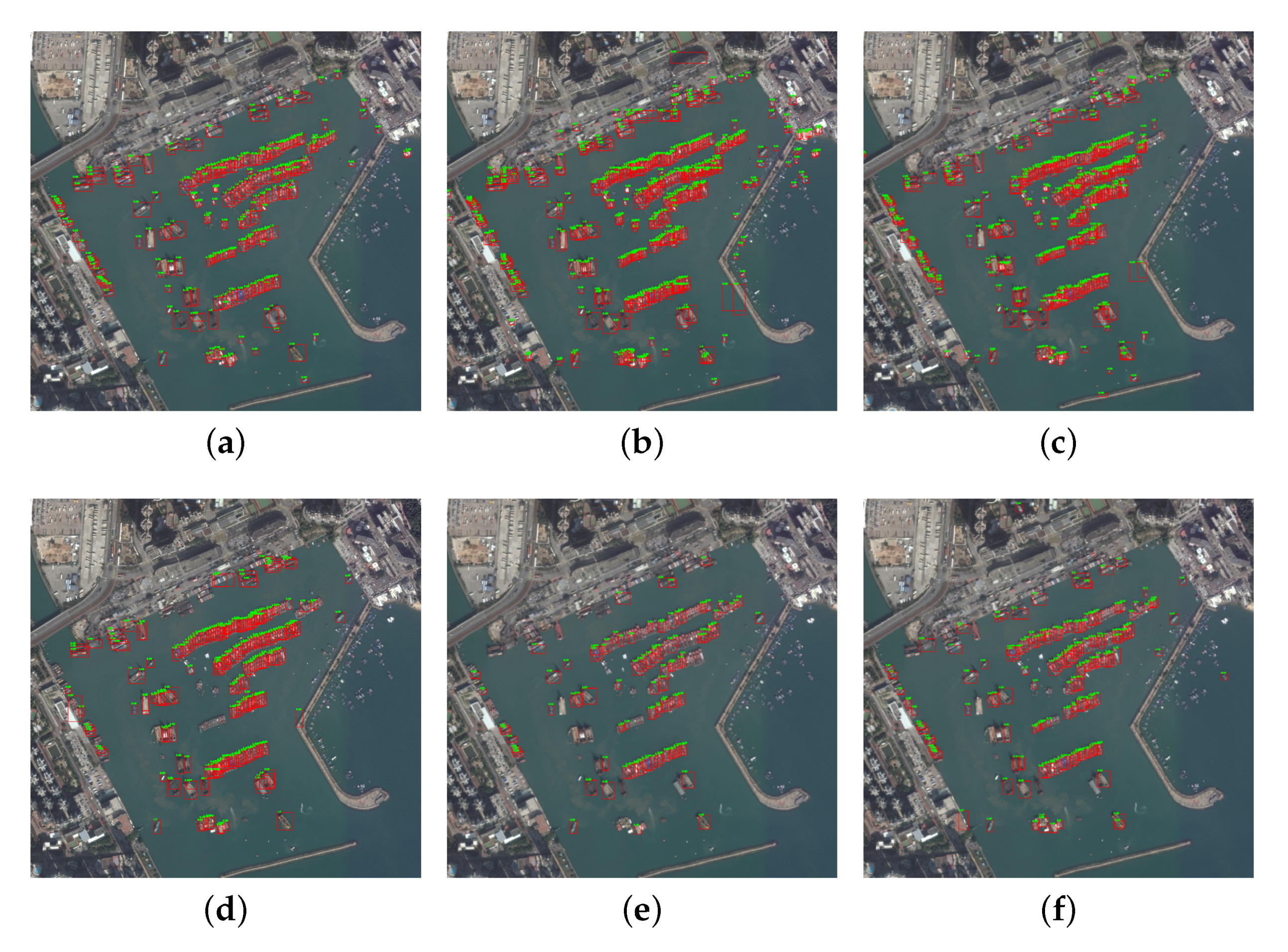

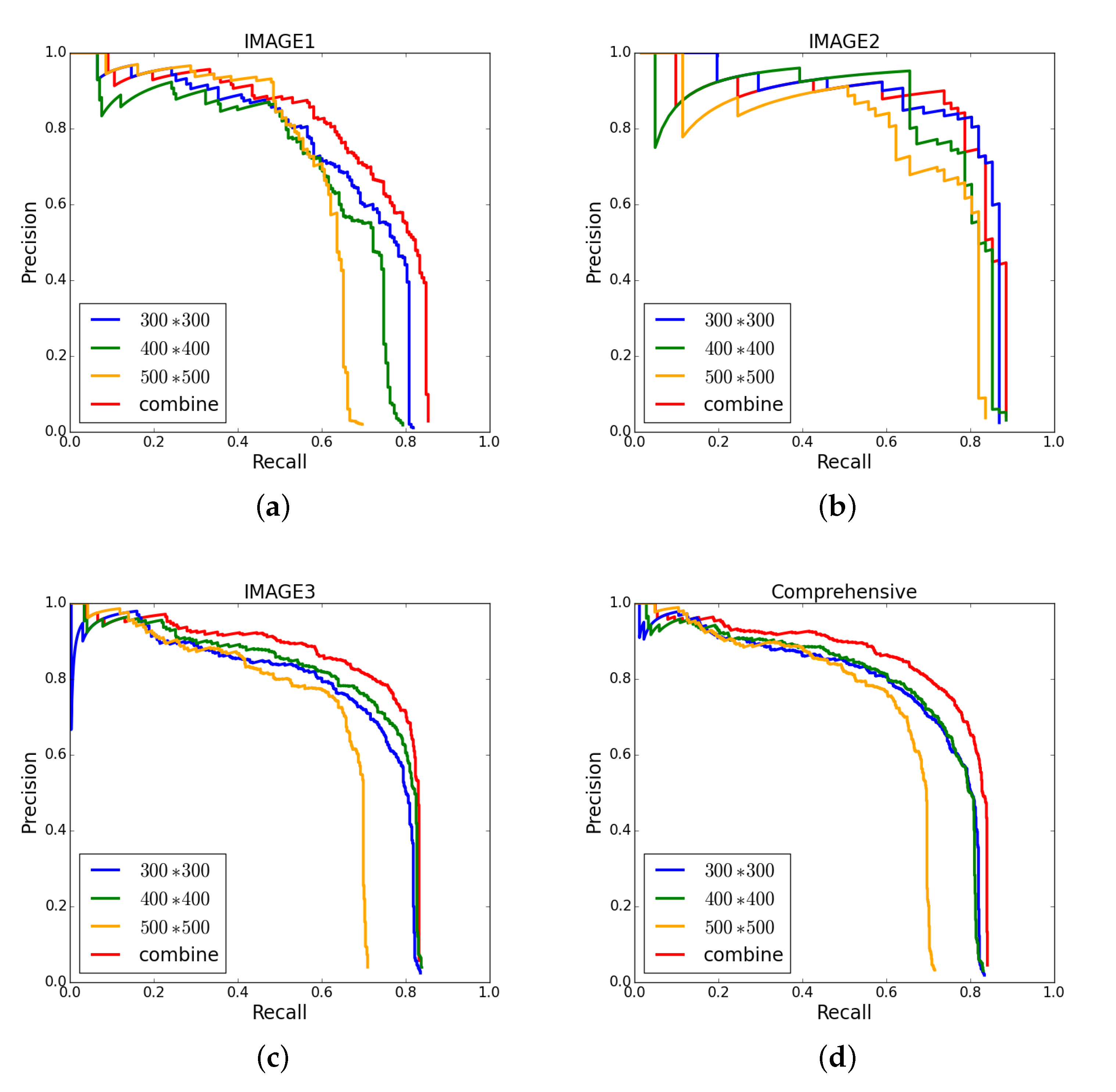

Based on tests and statistical analysis of the data set used in the experiments, we find that the maximum length of a ship in the data does not exceed 200 pixels, the maximum width does not exceed 60 pixels, the minimum length is greater than 30 pixels, and the minimum width is greater than 10 pixels. The image slices achieve satisfactory results when we choose the three cutting scales, , and respectively. Image slices of each scale are detected separately. The detection results at the multiple cutting sizes are combined, most of the redundant bounding boxes are deleted by non-maximum suppression (NMS), and we obtain the final detection results.

2.3. Elimination of Cutting Effect

Because optical remote sensing images need to be cut during the detection process, many ships are likely to be cut off. As a result, some bounding boxes output by the network contain only a portion of a ship. We adopt two strategies to eliminate the effect of cutting.

(1) We slice the image by overlap cutting, a strategy that ensures each ship appears completely at least once among the image slices. This strategy produces overlapping slices by using a stride smaller than the slice size. For example, when the slice size is 300 × 300, the stride must be less than 300, so the resulting slices necessarily overlap. Moreover, different cutting scales are used in the test process, so a ship that is cut off at one scale may appear completely at another scale. The bounding boxes detected in each image slice are mapped back to the original image according to the cutting position, which ensures that at least one of the bounding boxes of a given ship completely contains the ship. The overlap cutting size used in the experiments is 100 and the stride is 100.

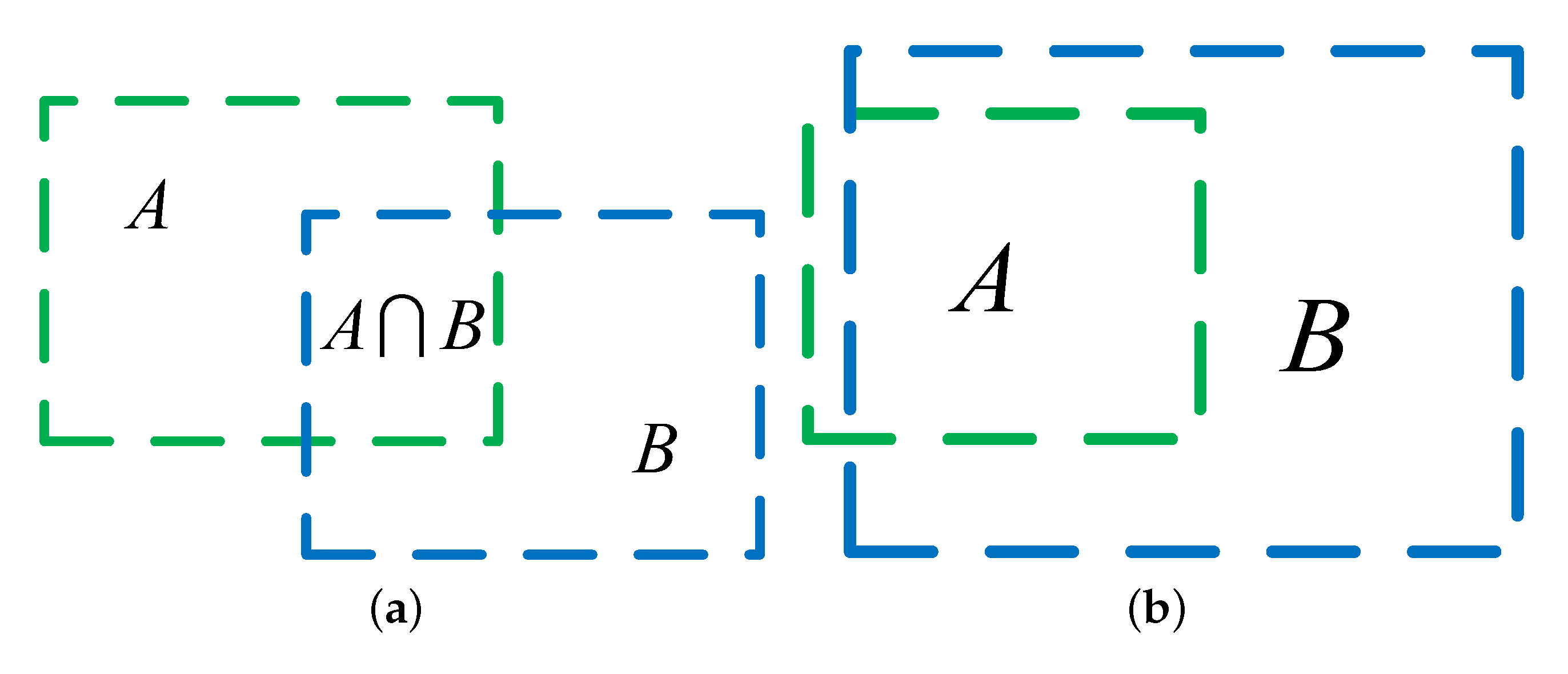

(2) Suppose there are two bounding boxes A and B, as shown in Figure 7a. The original NMS method calculates the Intersection over Union (IoU) of the two bounding boxes and compares it with a threshold to decide whether to delete the bounding box with lower confidence. However, ship detection in optical remote sensing images is a special case. As shown in Figure 7b, assume that bounding box A contains only part of a ship and bounding box B completely contains the same ship, so most of the area of A is contained in B. According to the above calculation, however, the IoU between A and B may not exceed the threshold, so bounding box A is retained and becomes a redundant bounding box.

To handle this situation, a new metric named IOA (intersection over area) is used in the NMS to determine whether to delete a bounding box. We define the IOA between box A and box B as:

$$\mathrm{IOA}(A, B) = \frac{\mathrm{area}(A \cap B)}{\mathrm{area}(B)} \tag{1}$$

Here, the confidence of B is assumed to be lower than that of A (if the confidences of the two boxes are equal, box B is the smaller one), and $\mathrm{area}(A \cap B)$ refers to the area of the overlap between box A and box B.

During testing, we first perform non-maximum suppression on all detection results, using the IoU between overlapping bounding boxes (with a threshold of 0.5) to remove redundant bounding boxes. For the remaining bounding boxes, the IOA between overlapping bounding boxes is calculated. If the IOA between two bounding boxes exceeds the threshold, which is set to 0.8 in the experiments, the bounding box with lower confidence is removed. The remaining bounding boxes are the final detection results.
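The two-pass suppression can be sketched as below. This is an illustrative greedy implementation under assumptions, not the code used in the experiments: boxes are `(x1, y1, x2, y2)` tuples, detections are `(box, score)` pairs, and IOA is taken as the overlap area divided by the area of the lower-confidence box (ties in confidence are broken by treating the smaller box as the lower-ranked one). All function names are hypothetical.

```python
def area(b):
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])

def overlap(a, b):
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, w) * max(0.0, h)

def iou(a, b):
    inter = overlap(a, b)
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0

def ioa(a, b):
    """Overlap divided by the area of b, where b is the lower-ranked box."""
    return overlap(a, b) / area(b) if area(b) > 0 else 0.0

def suppress(dets, metric, threshold):
    """Greedy suppression: keep a box unless metric(kept, box) exceeds the threshold."""
    # Higher score first; for equal scores the larger box is ranked first.
    ordered = sorted(dets, key=lambda d: (d[1], area(d[0])), reverse=True)
    kept = []
    for box, score in ordered:
        if all(metric(kept_box, box) <= threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept

def two_pass_nms(dets, iou_thr=0.5, ioa_thr=0.8):
    """IoU-based NMS followed by IOA-based suppression on the survivors."""
    return suppress(suppress(dets, iou, iou_thr), ioa, ioa_thr)
```

A partial-ship box that lies entirely inside a higher-confidence full-ship box can have an IoU below 0.5 and survive the first pass, but its IOA is close to 1, so the second pass removes it.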

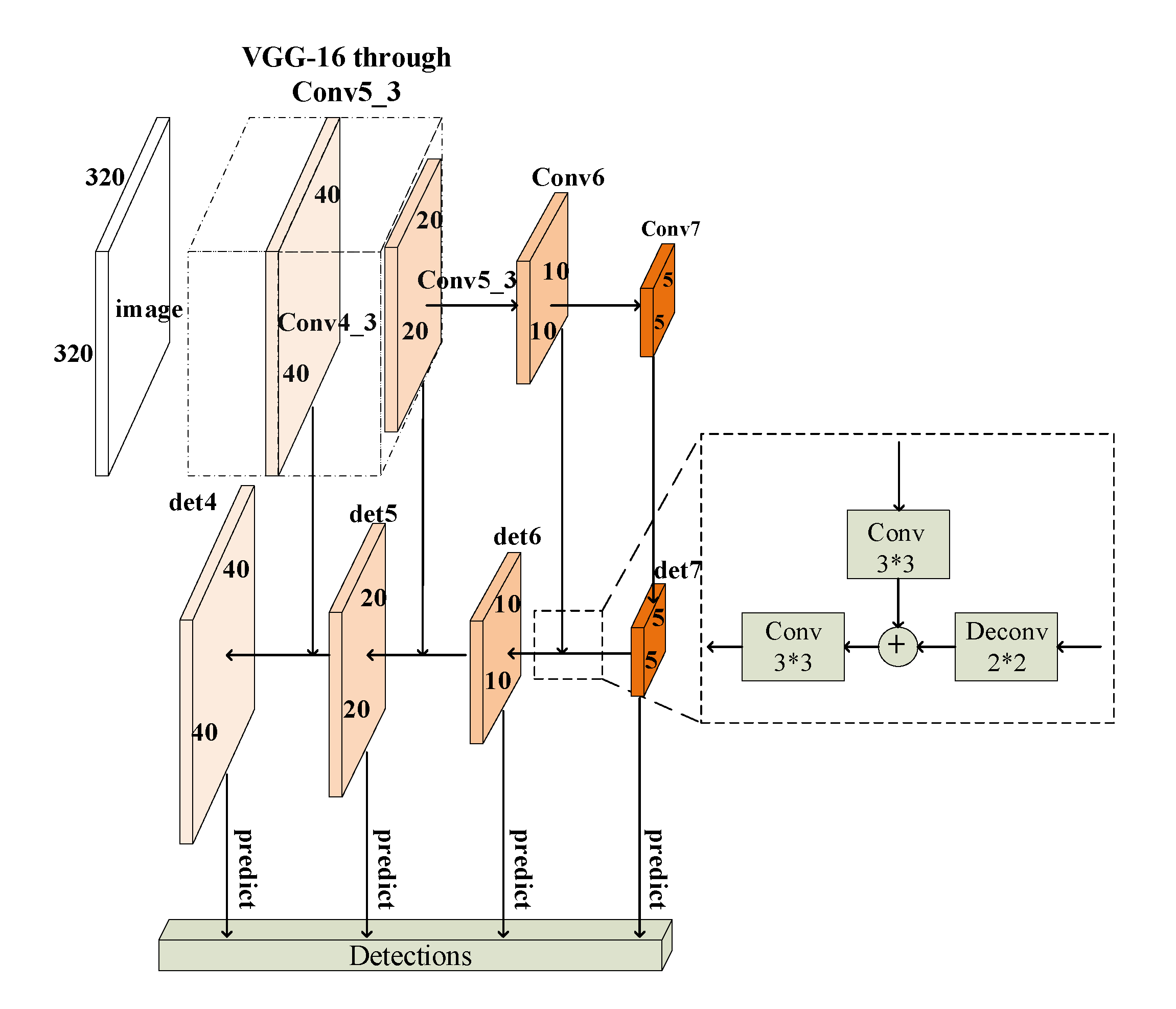

2.4. The Feature Extraction Structure

Using deep convolutional neural networks for target detection raises an important problem: the feature maps output by the convolutional layers become smaller as the network deepens, and the information about small targets is lost. This causes low detection accuracy for small targets. Considering that shallow feature maps have higher resolution and deep feature maps contain more semantic information, we use FPN [43] to solve this problem. This structure fuses features from different layers and predicts object positions independently at each feature layer. Therefore, the CF-SDN not only preserves the information of small ships but also carries more semantic information. The input of the network is an image slice cut from an optical remote sensing image, and the output is the predicted bounding boxes and the corresponding confidences. The feature extraction structure of the CF-SDN is shown in Figure 8.

We select the first 13 convolutional layers and the first 4 max pooling layers of VGG-16 which is pre-trained with ImageNet dataset [

44] as the basic network, and add 2 convolutional layers (

and

) at the end of the network. The two convolutional layers (

and

) reduce the resolution of the feature map to half in sequence. With the deepening of the network, the features are continuously sampled by the max pooling layer, and the resolution of the output feature map get smaller, but the semantic information is more abundant. This is similar to the bottom-up process in FPN networks(A deep convnet computes an inherent multi-scale and pyramidal shape feature hierarchy). We select four different resolution feature maps that output from

,

,

and

, as shown in

Figure 8. The strides of the selected feature maps are 8, 16, 32 and 64. The input size of this network is

pixels and the resolutions of the selected feature map are

(

),

(

),

(

) and

(

).

We set four detection layers in the network, and generate four feature maps of corresponding size through the selected feature maps. Then these feature maps are used as the input of four detection layers respectively. The deepest feature map (

) output by

is directly considered as the feature map of the last detection layer input, which is named det7. The feature maps used as inputs of the remaining detection layers are generated sequentially from the back to front in a lateral connections manner. The dotted line in the

Figure 8 demonstrates the lateral connections manner. The deconvolution layer doubles the resolution of the deep feature map, while the convolution layer only changes the channel number of the feature map without changing the resolution. Feature maps are fused by element addition, and a

convolutional layer is added to decrease the aliasing effect caused by up-sampling. The fusion feature map serves as the input of the detection layer.

2.5. The Distribution of Anchors

In this subsection, we design the distribution of anchors at each detection layer. Anchors [28] are a set of reference boxes at each feature map cell, which tile the feature map in a convolutional manner. At each feature map cell, we predict the offsets relative to the anchor shapes in the cell and the confidence that indicates the presence of a ship in each of those boxes. In optical remote sensing images, the scale distribution of ships is discrete, and ships usually have diverse aspect ratios depending on their orientation. Anchors with multiple sizes and aspect ratios are therefore set at each detection layer to increase the number of matched anchors.

Feature maps from different detection layers have different resolutions and receptive field sizes. Two types of receptive fields are distinguished in CNNs [45,46]: the theoretical receptive field, which is the input region that can theoretically affect the value of a unit, and the effective receptive field, which is the input region that has an effective influence on the output value. Zhang et al. [47] point out that the effective receptive field is smaller than the theoretical receptive field, and that anchors should be significantly smaller than the theoretical receptive field in order to match the effective receptive field. They also state that the stride of a detection layer determines the interval of its anchors on the input image.

As listed in the second and third columns of Table 1, the stride and the theoretical receptive field size of each detection layer are fixed. Considering that the anchor size set for each layer should be smaller than the calculated theoretical receptive field, we design the anchor sizes of each detection layer as shown in the fourth column of Table 1. The anchors of each detection layer have two scales and five aspect ratios. The aspect ratios are set to , so there are $2 \times 5 = 10$ anchors at each feature map cell on each detection layer.
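To make the anchor layout concrete, the sketch below tiles two scales and five aspect ratios over a square feature map, placing anchor centers at intervals equal to the layer stride. The scale and ratio values are placeholders chosen only for illustration, since the actual settings are those of Table 1; the area-preserving parameterization (width `s*sqrt(r)`, height `s/sqrt(r)`) and the function names are likewise assumptions.

```python
import math

def cell_anchors(cx, cy, scales, ratios):
    """Anchors (x1, y1, x2, y2) centered at (cx, cy); each keeps an area of scale**2."""
    anchors = []
    for s in scales:
        for r in ratios:  # r is the width / height ratio
            w, h = s * math.sqrt(r), s / math.sqrt(r)
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors

def layer_anchors(fmap_size, stride, scales, ratios):
    """Tile the anchors over a square feature map; centers are spaced by the stride."""
    anchors = []
    for row in range(fmap_size):
        for col in range(fmap_size):
            cx, cy = (col + 0.5) * stride, (row + 0.5) * stride
            anchors.extend(cell_anchors(cx, cy, scales, ratios))
    return anchors

# Hypothetical values for illustration only, not the settings of Table 1.
SCALES = (16, 32)
RATIOS = (1 / 3, 1 / 2, 1, 2, 3)
```

With two scales and five ratios, each cell yields ten anchors, so a 4 × 4 feature map produces 160 anchors.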

2.6. The Coarse-to-Fine Detection Strategy

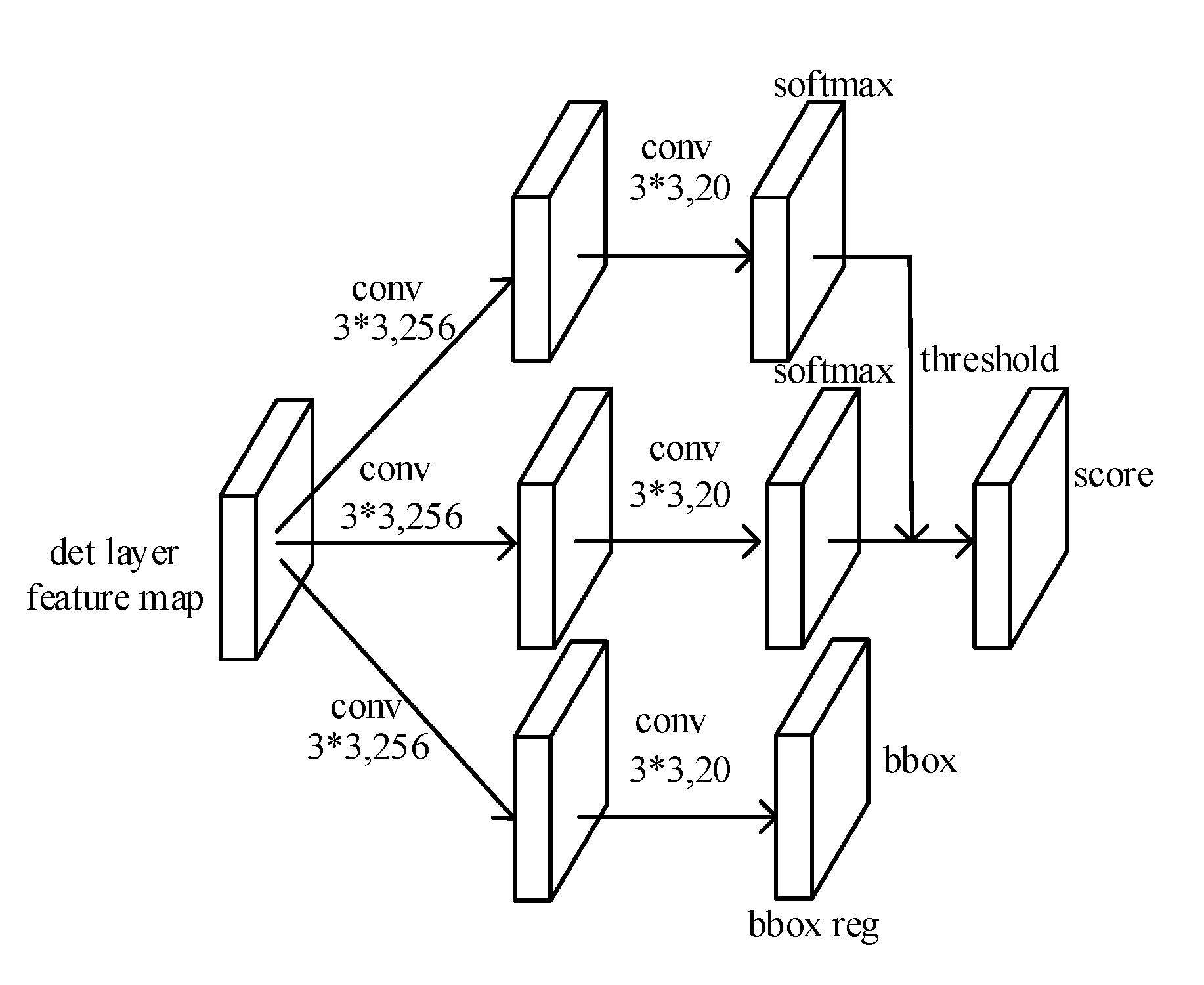

The structure of the detection layer is shown in Figure 9. We set up three parallel branches at each detection layer, two for classification and one for bounding box regression. In Figure 9, the branches from top to bottom are the coarse classification network, the fine classification network and the bounding box regression network, respectively. At each feature map cell, the bounding box regression network predicts the offsets relative to the anchor shapes in the cell, and the coarse classification network predicts the confidence that indicates the presence of a ship in each of those boxes. This is a coarse detection process, which yields bounding boxes with confidences. Then, the image block contained in each bounding box whose confidence is higher than the threshold (set to 0.1) is further classified (ship or background) by the fine classification network to obtain the final detection result. This is a fine detection process.

2.6.1. Loss Function

For this detection layer structure, the multi-task loss $L$ is used to jointly optimize the model parameters:

$$L = \frac{\lambda_1}{N_{cls1}} \sum_i L_{cls}(p_i, p_i^*) + \frac{\lambda_2}{N_{reg}} \sum_i p_i^* L_{reg}(t_i, t_i^*) + \frac{\lambda_3}{N_{cls2}} \sum_j L_{cls}(p_j, p_j^*) \tag{2}$$

In Equation (2), $i$ is the index of an anchor from the coarse classification network and the bounding box regression network in a batch, and $p_i$ is the predicted probability that anchor $i$ is a ship. If the anchor is positive, the ground-truth label $p_i^*$ is 1; otherwise $p_i^*$ is 0. $t_i$ is a vector representing the 4 parameterized coordinates of the predicted bounding box, and $t_i^*$ is that of the ground-truth box associated with a positive anchor. The term $p_i^* L_{reg}$ means the regression loss is activated only for positive anchors and disabled otherwise. $j$ is the index of an anchor from the fine classification network in a mini-batch, and the meanings of $p_j$ and $p_j^*$ are similar to those of $p_i$ and $p_i^*$. The three terms are normalized by $N_{cls1}$, $N_{reg}$ and $N_{cls2}$ and weighted by the balancing parameters $\lambda_1$, $\lambda_2$ and $\lambda_3$. $N_{cls1}$ represents the number of positive and negative anchors from the coarse classification network in the batch, $N_{reg}$ represents the number of positive anchors from the bounding box regression network in the batch, and $N_{cls2}$ represents the number of positive and negative anchors from the fine classification network in the batch. In our experiment, we set

.

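In Equation (2), the classification loss $L_{cls}$ is the log loss from the coarse classification network, where $p$ is the predicted probability and $p^*$ is the binary ground-truth label:

$$L_{cls}(p, p^*) = -\left[ p^* \log p + (1 - p^*) \log (1 - p) \right]$$

The regression loss $L_{reg}$ is the smooth L1 loss from the bounding box regression network, summed over the 4 parameterized coordinates of the predicted vector $t$ and the ground-truth vector $t^*$:

$$L_{reg}(t, t^*) = \sum_{k=1}^{4} \mathrm{smooth}_{L1}(t_k - t_k^*), \qquad \mathrm{smooth}_{L1}(x) = \begin{cases} 0.5x^2, & |x| < 1 \\ |x| - 0.5, & \text{otherwise} \end{cases}$$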
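As a numerical illustration of how the three terms combine, the sketch below computes a log loss for each classification branch and a smooth L1 loss over positive anchors only, each divided by its own normalizer. It is a plain-Python sketch under assumptions: the data layout (`coarse` as tuples of probability, label, predicted coordinates and target coordinates; `fine` as probability and label pairs), the function names and the default balancing weights of 1.0 are all hypothetical and are not taken from the experiments.

```python
import math

def log_loss(p, label, eps=1e-12):
    """Binary log loss for predicted probability p and a 0/1 label."""
    p = min(max(p, eps), 1 - eps)  # avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

def smooth_l1(x):
    ax = abs(x)
    return 0.5 * x * x if ax < 1 else ax - 0.5

def multitask_loss(coarse, fine, lambdas=(1.0, 1.0, 1.0)):
    """coarse: list of (p, label, t, t_star); fine: list of (p, label)."""
    n_cls1 = max(1, len(coarse))
    n_reg = max(1, sum(1 for _, label, _, _ in coarse if label == 1))
    n_cls2 = max(1, len(fine))
    cls1 = sum(log_loss(p, label) for p, label, _, _ in coarse)
    # Regression loss is accumulated for positive anchors only.
    reg = sum(
        sum(smooth_l1(a - b) for a, b in zip(t, t_star))
        for _, label, t, t_star in coarse
        if label == 1
    )
    cls2 = sum(log_loss(p, label) for p, label in fine)
    l1, l2, l3 = lambdas  # hypothetical default weights
    return l1 * cls1 / n_cls1 + l2 * reg / n_reg + l3 * cls2 / n_cls2
```

Negative anchors may carry `None` for their coordinate vectors, since they never enter the regression term.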

2.6.2. Training Phase

In the training phase, the three branches are trained at the same time. A binary class label is assigned to each anchor in each branch.

(1) For the coarse classification network and the bounding box regression network, an anchor is assigned a positive label if it satisfies one of the following two conditions: (i) it has the highest Intersection-over-Union (IoU) overlap with a ground-truth box; (ii) it has an IoU overlap higher than 0.5 with a ground-truth box. Anchors whose IoU overlap is lower than 0.3 for all ground-truth boxes are assigned a negative label. The SoftMax layer outputs the confidence of each anchor at each cell of the feature map. Anchors whose confidence is higher than 0.1 are selected as the training samples of the fine classification network.

(2) For the fine classification network, the anchors selected in the previous step are further assigned positive and negative labels. Here, the IoU overlap threshold for selecting positive anchors is raised from 0.5 to 0.6. The larger threshold means that the selected positive anchors are closer to the ground-truth boxes, which makes the classification more precise. Since the number of negative samples in remote sensing images is much larger than the number of positive samples, we randomly select negative samples to ensure that the ratio between positive and negative samples in each mini-batch is 1:3. If the number of positive samples is 0, the number of negative samples is set to 256.
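The labeling and sampling rules above can be sketched as follows. The sketch assumes a precomputed IoU matrix `ious[a][g]` between anchor `a` and ground-truth box `g`, and marks anchors that are neither positive nor negative with -1 so that they are ignored; this ignore label, the function names and the choice to apply condition (i) regardless of the threshold passed in are assumptions made for illustration.

```python
import random

def assign_labels(ious, pos_thr=0.5, neg_thr=0.3):
    """Return 1 (positive), 0 (negative) or -1 (ignored) for each anchor."""
    n_gt = len(ious[0]) if ious else 0
    labels = []
    for row in ious:
        best = max(row) if row else 0.0
        labels.append(1 if best > pos_thr else 0 if best < neg_thr else -1)
    # Condition (i): the anchor with the highest IoU for each ground-truth box is positive.
    for g in range(n_gt):
        best_a = max(range(len(ious)), key=lambda a: ious[a][g])
        if ious[best_a][g] > 0:
            labels[best_a] = 1
    return labels

def sample_negatives(labels, ratio=3, fallback=256, rng=random):
    """Keep all positives and draw negatives at a 1:ratio rate (fallback if no positives)."""
    pos = [i for i, label in enumerate(labels) if label == 1]
    neg = [i for i, label in enumerate(labels) if label == 0]
    n_neg = ratio * len(pos) if pos else fallback
    return pos, rng.sample(neg, min(n_neg, len(neg)))
```

For the fine classification branch, the same function could be called with `pos_thr=0.6` to reflect the raised threshold.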

2.6.3. Testing Phase

In the testing phase, the bounding box regression network first outputs the coordinate offsets for each anchor at each feature map cell. We then adjust the position of each anchor according to the box regression strategy to obtain the bounding boxes. The outputs of the two classification networks are the confidence scores s1 and s2 corresponding to each bounding box, which encode the probability that a ship appears in the bounding box. First, if the score s1 output by the coarse classification network is lower than 0.1, the corresponding bounding box is removed. Then the confidence of each remaining bounding box is computed as the product of s1 and s2, and bounding boxes with a confidence larger than 0.2 are selected. Finally, non-maximum suppression (NMS) is applied to obtain the final detection results.
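The score filtering in the testing phase can be sketched as below, assuming each detection is given as a `(box, s1, s2)` tuple; the function name and data layout are hypothetical, and the final NMS step is not included.

```python
def fuse_scores(dets, coarse_thr=0.1, final_thr=0.2):
    """Drop boxes with s1 below coarse_thr, then keep boxes whose s1 * s2 exceeds final_thr."""
    selected = []
    for box, s1, s2 in dets:
        if s1 < coarse_thr:
            continue  # rejected by the coarse classification score
        score = s1 * s2
        if score > final_thr:
            selected.append((box, score))
    return selected
```

The returned `(box, score)` pairs are the input to the subsequent non-maximum suppression.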