UAV Photogrammetry for Concrete Bridge Inspection Using Object-Based Image Analysis (OBIA)

Abstract

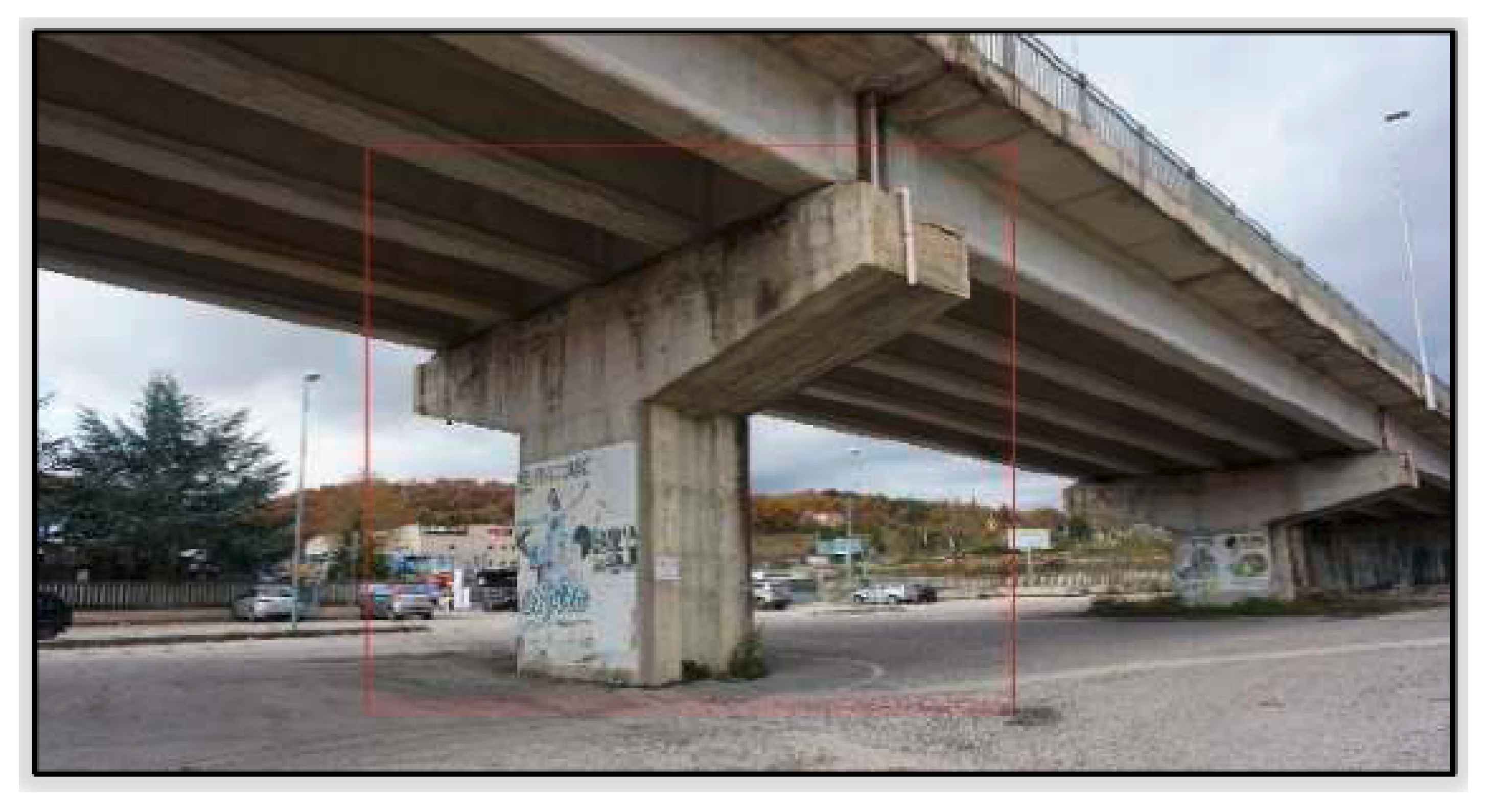

1. Introduction

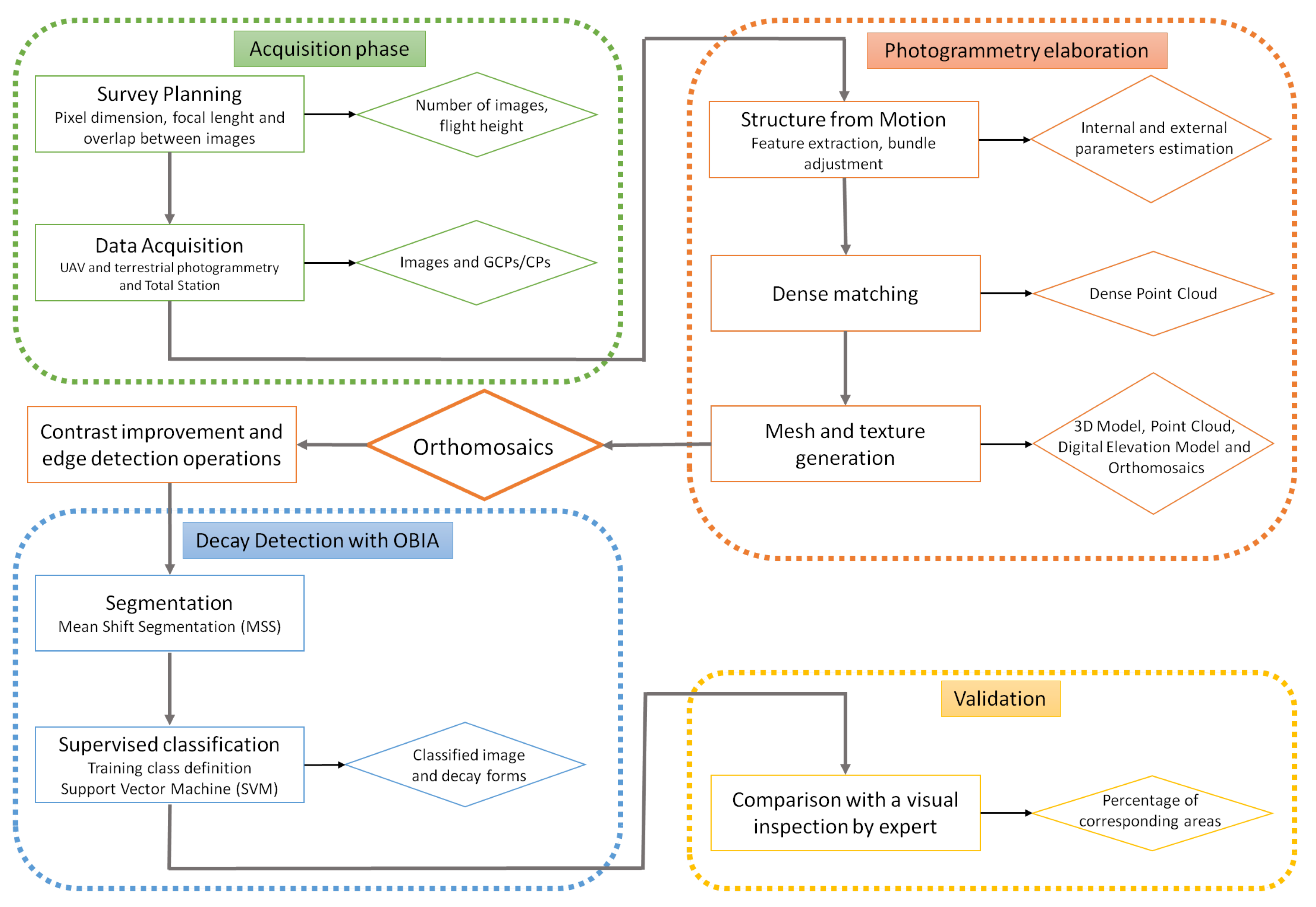

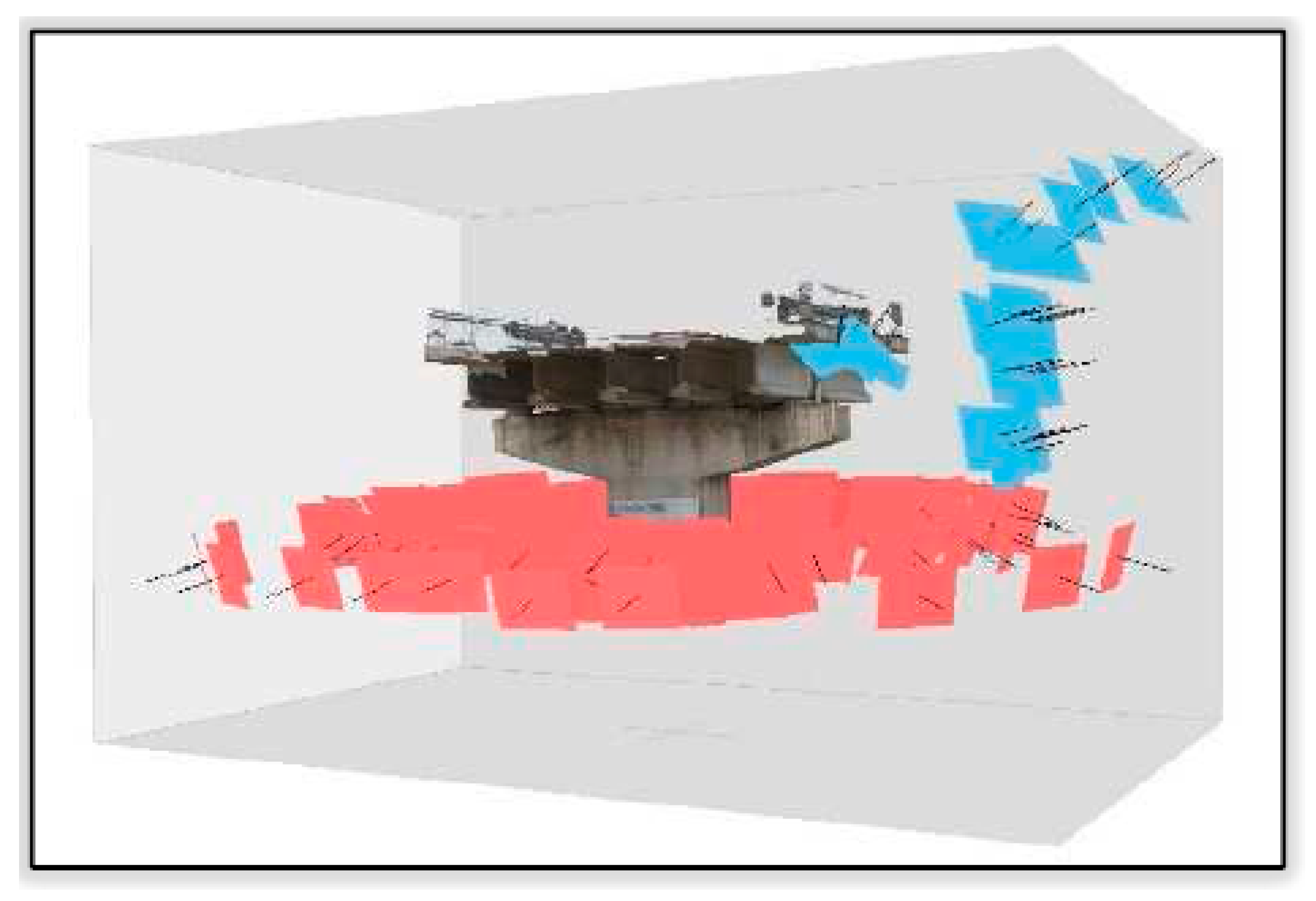

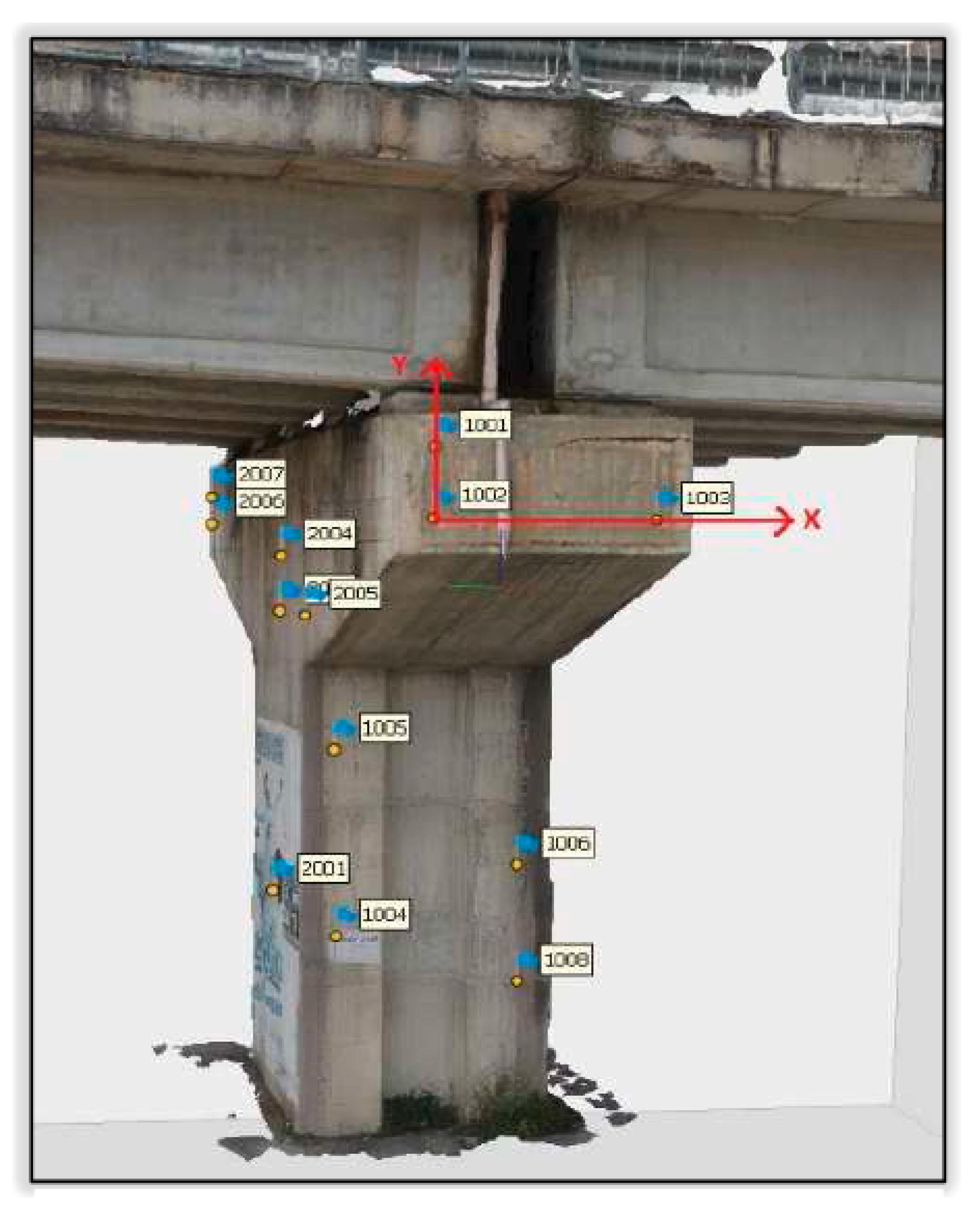

2. Materials and Methods

- spatial radius (expressed in pixels): affects the connectivity of the elements and the smoothness of the generated segments. It sets the distance (number of pixels) considered when grouping pixels into image segments [53]. The choice depends on the size of the objects in the image;

- range radius (expressed in radiometric units): affects the number of segments. It is the degree of spectral variability (distance in the n-dimensional spectral space) allowed within an image segment. The choice depends on the contrast (the lower the contrast, the lower the range radius should be);

- minimum region size M (expressed in pixels): the minimum size of a region; it controls noise, since the smaller it is, the more small objects are kept as separate segments. It is chosen according to the size of the smallest objects in the image to be segmented.
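To make the role of the first two parameters concrete, the following is a minimal sketch of mean-shift filtering with a flat kernel on a tiny greyscale image, written in plain Python. It is an illustration only, not the Orfeo ToolBox implementation: the function name `mean_shift_filter`, the test image, and the parameter values are assumptions, and the minimum region size step (merging or discarding small segments) is not shown.

```python
def mean_shift_filter(img, spatial_radius, range_radius, max_iter=20, tol=1e-3):
    """Toy mean-shift filtering of a 2D greyscale image (flat kernel).

    For every pixel, a point (y, x, value) is moved repeatedly to the mean of
    all pixels lying within `spatial_radius` (in pixels) and `range_radius`
    (in grey levels) of its current position. The value reached at
    convergence replaces the original pixel value.
    """
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            y, x, v = float(r), float(c), float(img[r][c])
            for _ in range(max_iter):
                members = [
                    (py, px, img[py][px])
                    for py in range(rows)
                    for px in range(cols)
                    if abs(py - y) <= spatial_radius
                    and abs(px - x) <= spatial_radius
                    and abs(img[py][px] - v) <= range_radius
                ]
                if not members:  # guard: no neighbour left in the window
                    break
                n = len(members)
                ny = sum(m[0] for m in members) / n
                nx = sum(m[1] for m in members) / n
                nv = sum(m[2] for m in members) / n
                shift = abs(ny - y) + abs(nx - x) + abs(nv - v)
                y, x, v = ny, nx, nv
                if shift < tol:
                    break
            out[r][c] = v
    return out


# Hypothetical 6 x 6 image: dark left half, bright right half.
image = [[0, 0, 0, 100, 100, 100] for _ in range(6)]

# Small range radius: pixels across the edge are never averaged together.
sharp = mean_shift_filter(image, spatial_radius=2, range_radius=10)

# Large range radius: the edge is blurred, so the two regions would merge.
blurred = mean_shift_filter(image, spatial_radius=2, range_radius=200)
```

With the small range radius the step edge is preserved and two segments would result; with the large one, pixels next to the edge take intermediate values, which is why a low-contrast image calls for a low range radius.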

3. Results

3.1. Digital Terrestrial and UAV Photogrammetry Results

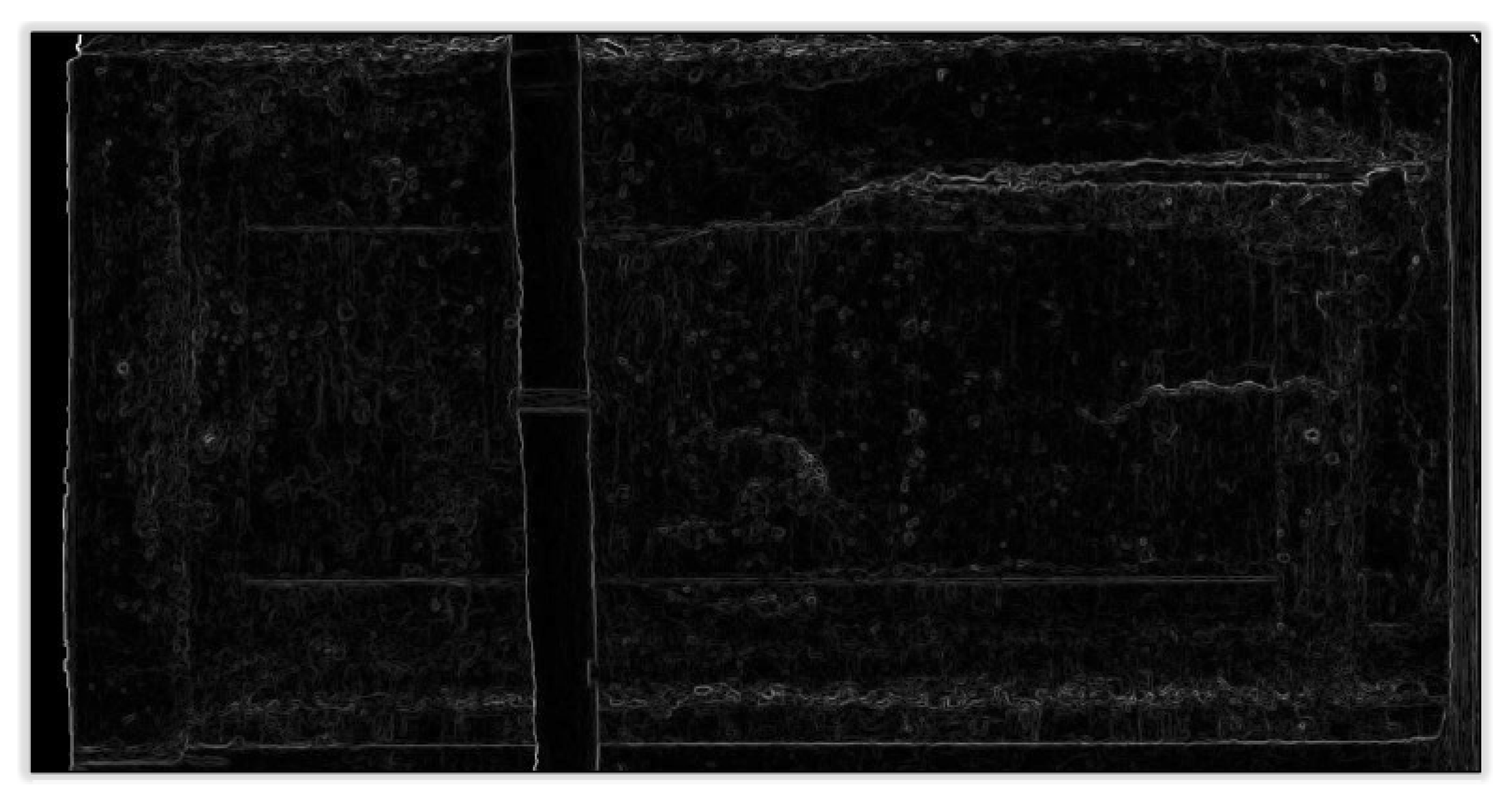

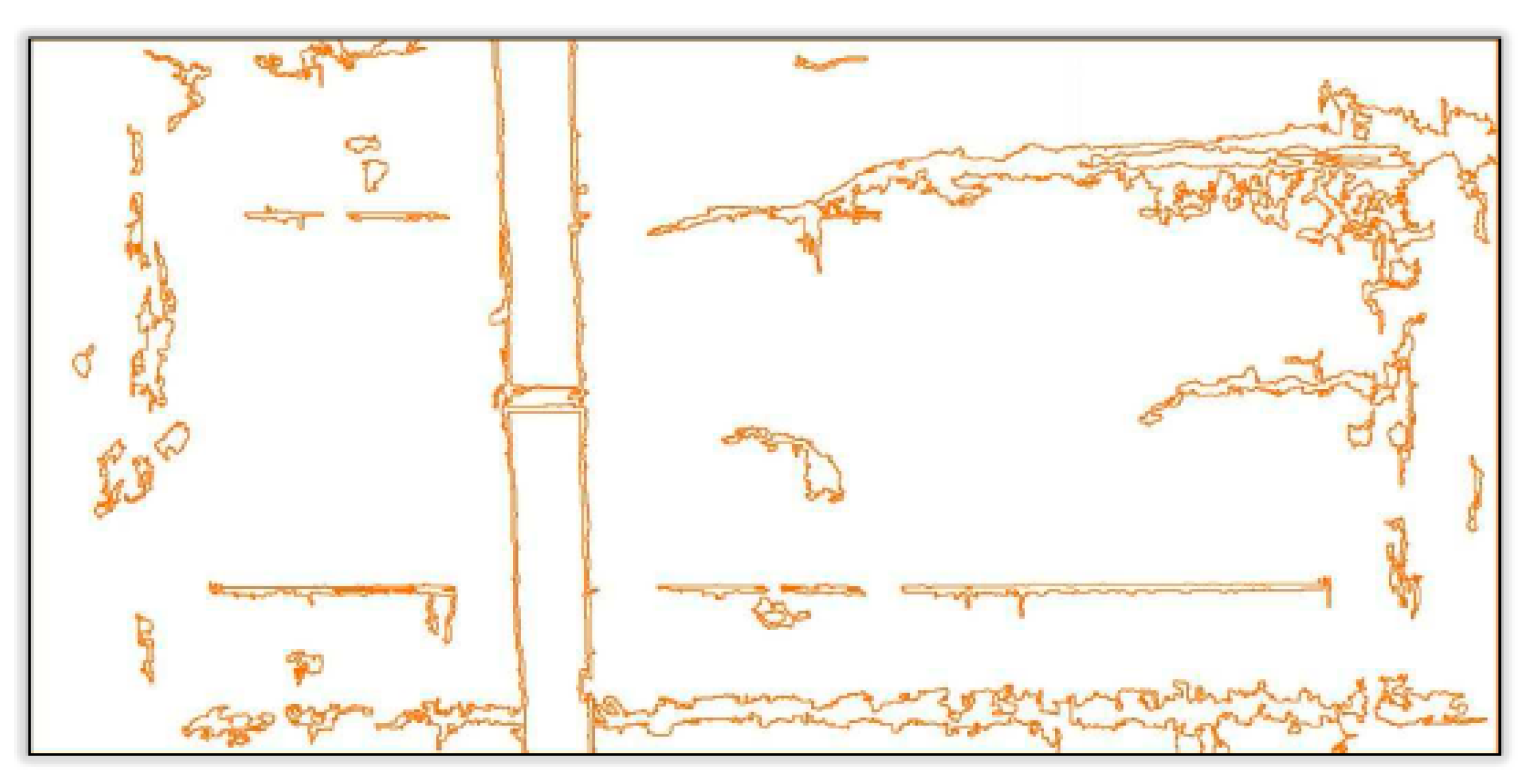

3.2. Image Processing Techniques

3.2.1. Image Contrast Enhancement

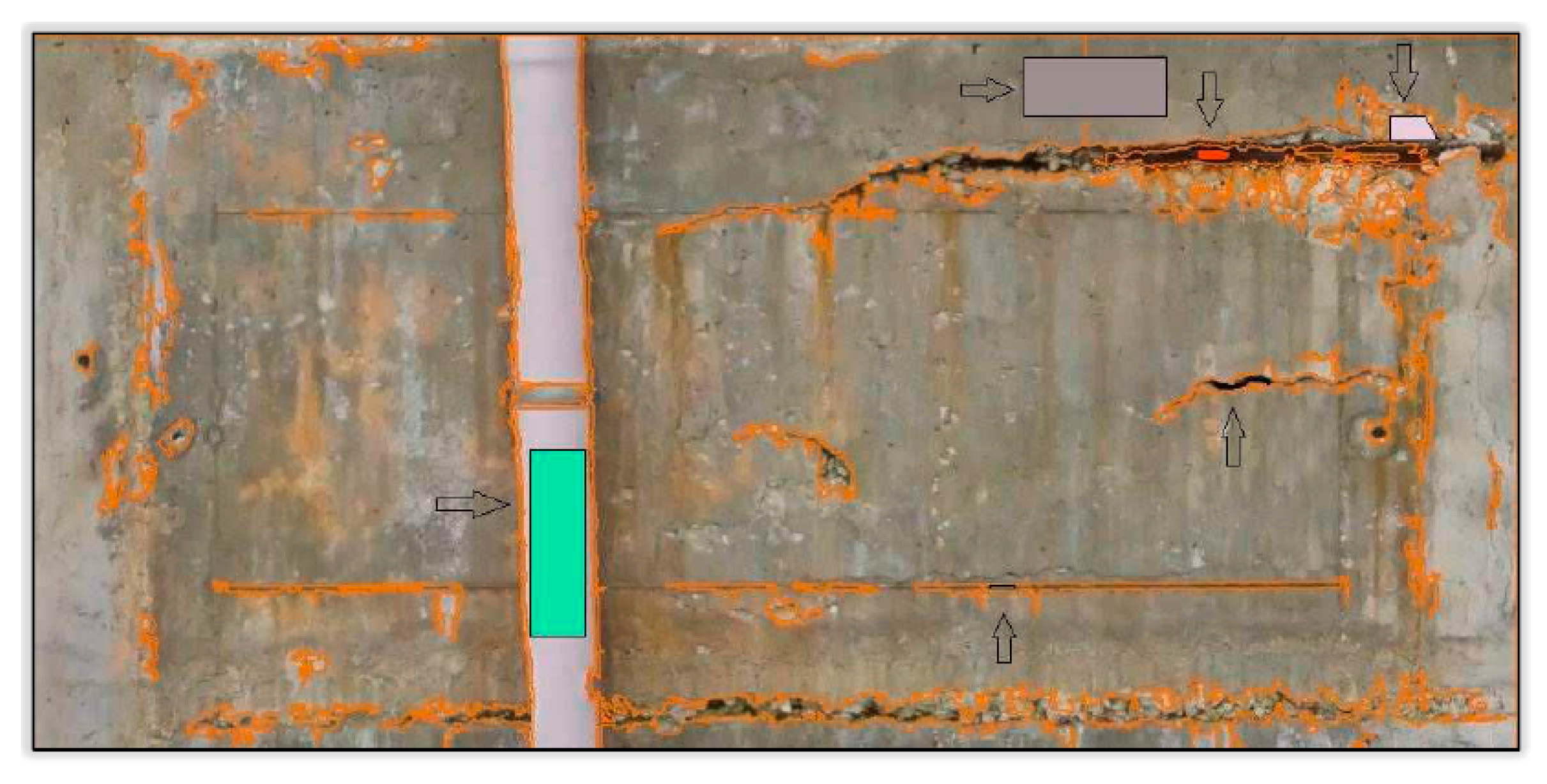

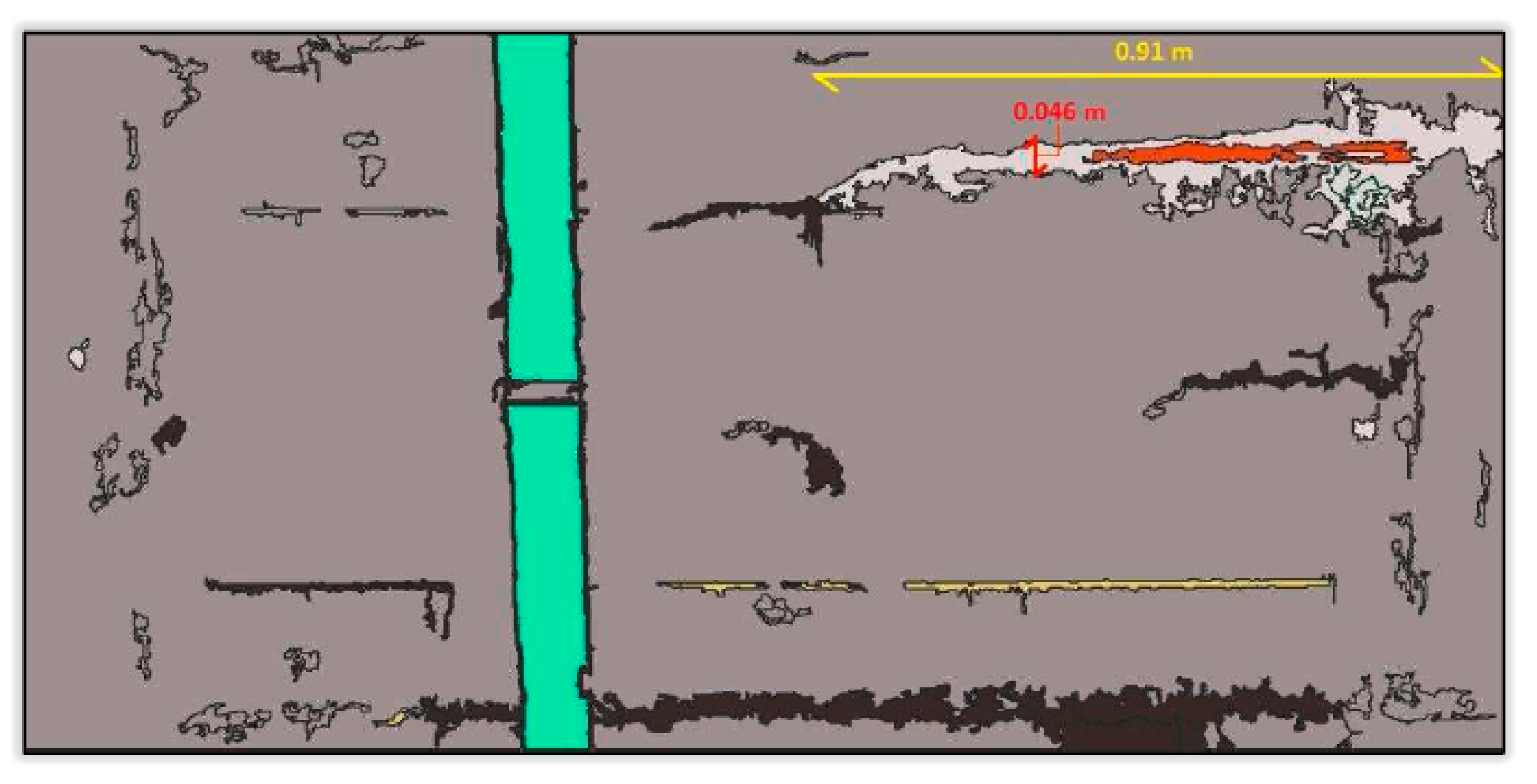

3.2.2. OBIA: Segmentation and Classification

- Class creation: a label is created for each type of segment present in the scene to be classified; the six classes reported in Table 2 were defined;

- Training area definition for each class: training areas for each class were selected on the orthomosaic. The spectral information of the orthomosaic (average value and standard deviation) was assigned to the corresponding class of the segmented image. Since the training data are used to build the decision model, known and well-located areas that best represent each class are chosen in the part of the image where that class is clearly visible and differentiated (Figure 10). To ensure an accurate classification, the areas need to cover the full range of variability of each class and to exclude boundaries between two or more different classes [56];

- Classifier training: the classifier is trained using the training areas as examples. At the end, the algorithm creates a decision model, that is, the set of rules used to classify the other segments in the image. The Support Vector Machine (SVM) algorithm was used; the SVM was not originally designed for automatic image classification; however, in the last decade, it has demonstrated great effectiveness in various applications of high-resolution image analysis [24,57,58,59,60]. The SVM algorithm is a supervised non-parametric classifier based on Vapnik’s statistical learning theory [24,61];

- Classification: the decision model was applied to the entire segmented image in order to generate a vector thematic map. Every pixel of the image is associated with a class. The result is shown in Figure 11.
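The four steps above can be sketched in a few lines of plain Python. This is a hedged illustration, not the workflow used in the paper: it reduces the problem to two classes ("crack" and "background"), uses a linear SVM trained by batch subgradient descent on the hinge loss rather than the Orfeo ToolBox classifier, and all segment values, names, and hyperparameters (`lam`, `lr`, `epochs`) are assumptions chosen for the example.

```python
from statistics import mean, pstdev


def segment_features(pixels_by_segment):
    """Return {segment id: (mean, standard deviation)} of the pixel values."""
    return {sid: (mean(px), pstdev(px)) for sid, px in pixels_by_segment.items()}


def standardise(train_rows):
    """Return a function that z-scores a feature row using training statistics."""
    cols = list(zip(*train_rows))
    mu = [mean(col) for col in cols]
    sigma = [pstdev(col) or 1.0 for col in cols]  # avoid division by zero
    return lambda row: [(v - m) / s for v, m, s in zip(row, mu, sigma)]


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=500):
    """Batch subgradient descent on the L2-regularised hinge loss (labels +1/-1)."""
    n = len(X)
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [lam * wj for wj in w], 0.0
        for xi, yi in zip(X, y):
            score = sum(wj * xj for wj, xj in zip(w, xi)) + b
            if yi * score < 1:  # inside the margin or misclassified
                grad_w = [g - yi * xj / n for g, xj in zip(grad_w, xi)]
                grad_b -= yi / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def classify(w, b, x):
    """Apply the decision model to one standardised feature row."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return "background" if score >= 0 else "crack"


# Hypothetical segments: id -> grey values scaled to [0, 1].
segments = {1: [0.08, 0.12], 2: [0.16, 0.24], 3: [0.68, 0.72],
            4: [0.76, 0.84], 5: [0.12, 0.18], 6: [0.72, 0.78]}
# Training areas: segments 1-2 labelled crack (-1), 3-4 background (+1).
training = {1: -1, 2: -1, 3: +1, 4: +1}

features = segment_features(segments)
scale = standardise([features[sid] for sid in training])
w, b = train_linear_svm([scale(features[sid]) for sid in training],
                        list(training.values()))

# Apply the decision model to every segment, labelled or not.
thematic_map = {sid: classify(w, b, scale(f)) for sid, f in features.items()}
```

Segments 5 and 6 carry no training label and receive their class from the decision model alone. Extending the sketch to the six classes of Table 2 would require a multi-class scheme such as one-vs-rest, which is omitted here.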

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| 2D | Two-Dimensional |
| 3D | Three-Dimensional |
| ANAS | Azienda Nazionale Autonoma delle Strade |
| CP | Check Point |
| ENAC | Italian Civil Aviation Authority |
| GCP | Ground Control Point |
| GNSS | Global Navigation Satellite System |
| GSD | Ground Sample Distance |
| MSS | Mean-Shift Segmentation |
| NDT | Non-Destructive Tests |
| OBIA | Object-Based Image Analysis |
| OTB | Orfeo ToolBox |
| RFI | Rete Ferroviaria Italiana |
| RGB | Red Green Blue |
| RMS | Root Mean Square |
| ROI | Region Of Interest |
| SVM | Support Vector Machine |
| UAV | Unmanned Aerial Vehicle |

References

1. Bellino, F. Un intervento poco risolutivo. Il G. Dell’Ingegnere 2019, 6, 25.

2. D’Amato, A. Quali Sono i Ponti e i Viadotti a Rischio Nell’italia Che Crolla. Nextquotidiano 2019. Available online: https://www.nextquotidiano.it/quali-sono-i-ponti-e-i-viadotti-a-rischio-nellitalia-che-crolla/ (accessed on 18 June 2020).

3. Alessandrini, S. Il crollo del ponte Morandi a Genova. Ingenio 2020. Available online: https://www.ingenio-web.it/20966-il-crollo-del-ponte-morandi-a-genova#:~:text=Alle%2011.36%20del%2014%20agosto,del%20viadotto%20sul%20Polcevera%2C%20un (accessed on 19 June 2020).

4. Gomarasca, M.A. Basics of Geomatics; Springer Science & Business Media: Dordrecht, The Netherlands, 2009.

5. Dominici, D.; Alicandro, M.; Massimi, V. UAV photogrammetry in the post-earthquake scenario: Case studies in L’Aquila. Geomat. Nat. Hazards Risk 2017, 8, 87–103.

6. Barazzetti, L.; Forlani, G.; Remondino, F.; Roncella, R.; Scaioni, M. Experiences and achievements in automated image sequence orientation for close-range photogrammetric projects. Videometrics Range Imaging Appl. XI 2011, 8085, 80850F.

7. Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In International Workshop on Vision Algorithms; Springer: Berlin/Heidelberg, Germany, 1999; pp. 298–372.

8. Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

9. Chen, S.; Laefer, D.F.; Mangina, E.; Zolanvari, S.I.; Byrne, J. UAV bridge inspection through evaluated 3D reconstructions. J. Bridge Eng. 2019, 24, 05019001.

10. Avsar, Ö.; Akca, D.; Altan, O. Photogrammetric deformation monitoring of the second Bosphorus Bridge in Istanbul. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 71–76.

11. Marmo, F.; Demartino, C.; Candela, G.; Sulpizio, C.; Briseghella, B.; Spagnuolo, R.; Xiao, Y.; Vanzi, I.; Rosati, L. On the form of the Musmeci’s bridge over the Basento river. Eng. Struct. 2019, 191, 658–673.

12. Jiang, R.; Jáuregui, D.V.; White, K.R. Close-range photogrammetry applications in bridge measurement: Literature review. Measurement 2008, 41, 823–834.

13. Maas, H.G.; Hampel, U. Photogrammetric techniques in civil engineering material testing and structure monitoring. Photogramm. Eng. Remote Sens. 2006, 72, 39–45.

14. Valença, J.; Júlio, E.N.B.S.; Araújo, H.J. Applications of photogrammetry to structural assessment. Exp. Tech. 2012, 36, 71–81.

15. Whiteman, T.; Lichti, D.D.; Chandler, I. Measurement of deflections in concrete beams by close-range digital photogrammetry. Proc. Symp. Geospat. Theory Process. Appl. 2002, 9, 12.

16. Khaloo, A.; Lattanzi, D.; Jachimowicz, A.; Devaney, C. Utilizing UAV and 3D computer vision for visual inspection of a large gravity dam. Front. Built Environ. 2018, 4, 31.

17. Buffi, G.; Manciola, P.; Grassi, S.; Barberini, M.; Gambi, A. Survey of the Ridracoli Dam: UAV–based photogrammetry and traditional topographic techniques in the inspection of vertical structures. Geomat. Nat. Hazards Risk 2017, 8, 1562–1579.

18. Rau, J.Y.; Hsiao, K.W.; Jhan, J.P.; Wang, S.H.; Fang, W.C.; Wang, J.L. Bridge crack detection using multi-rotary UAV and object-base image analysis. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 311.

19. Fernandez Galarreta, J.; Kerle, N.; Gerke, M. UAV-based urban structural damage assessment using object-based image analysis and semantic reasoning. Nat. Hazards Earth Syst. Sci. 2015, 15.

20. Karantanellis, E. Photogrammetry techniques for object-based building crack detection and characterization. In Proceedings of the 16th European Conference in Earthquake Engineering, Thessaloniki, Greece, 18–21 June 2018.

21. Marsella, M.; Scaioni, M. Sensors for deformation monitoring of large civil infrastructures. Sensors 2018, 18, 3941.

22. Mistretta, F.; Sanna, G.; Stochino, F.; Vacca, G. Structure from motion point clouds for structural monitoring. Remote Sens. 2019, 11, 1940.

23. Schweizer, E.A.; Stow, D.A.; Coulter, L.L. Automating near real-time, post-hazard detection of crack damage to critical infrastructure. Photogramm. Eng. Remote Sens. 2018, 84, 75–86.

24. Teodoro, A.C.; Araujo, R. Comparison of performance of object-based image analysis techniques available in open source software (Spring and Orfeo Toolbox/Monteverdi) considering very high spatial resolution data. J. Appl. Remote Sens. 2016, 10, 016011.

25. Valença, J.; Puente, I.; Júlio, E.; González-Jorge, H.; Arias-Sánchez, P. Assessment of cracks on concrete bridges using image processing supported by laser scanning survey. Constr. Build. Mater. 2017, 146, 668–678.

26. Duque, L.; Seo, J.; Wacker, J. Synthesis of unmanned aerial vehicle applications for infrastructures. J. Perform. Constr. Facil. 2018, 32, 04018046.

27. Beshr, A.A.E.-W.; Kaloop, M.R. Monitoring bridge deformation using auto-correlation adjustment technique for total station observations. Sci. Res. 2013, 4.

28. Beltempo, A.; Cappello, C.; Zonta, D.; Bonelli, A.; Bursi, O.S.; Costa, C.; Pardatscher, W. Structural health monitoring of the Colle Isarco viaduct. In Proceedings of the IEEE Workshop on Environmental, Energy, and Structural Monitoring Systems (EESMS), Trento, Italy, 9–10 July 2015; pp. 7–11.

29. Lachat, E.; Landes, T.; Grussenmeyer, P. Investigation of a combined surveying and scanning device: The Trimble SX10 Scanning total station. Sensors 2017, 17, 730.

30. Elnabwy, M.T.; Kaloop, M.R.; Elbeltagi, E. Talkha steel highway bridge monitoring and movement identification using RTK-GPS technique. Measurement 2013, 46, 4282–4292.

31. Kaloop, M.; Elbeltagi, E.; Hu, J.; Elrefai, A. Recent advances of structures monitoring and evaluation using GPS-time series monitoring systems: A review. ISPRS Int. J. Geo-Inf. 2017, 6, 382.

32. Chen, Q.; Jiang, W.; Meng, X.; Jiang, P.; Wang, K.; Xie, Y.; Ye, J. Vertical deformation monitoring of the suspension bridge tower using GNSS: A case study of the forth road bridge in the UK. Remote Sens. 2018, 10, 364.

33. Tang, P.; Akinci, B.; Garrett, J.H. Laser scanning for bridge inspection and management. IABSE Symp. Rep. 2007, 93, 17–24.

34. Teza, G.; Galgaro, A.; Moro, F. Contactless recognition of concrete surface damage from laser scanning and curvature computation. NDT E Int. 2009, 42, 240–249.

35. Liu, W.; Chen, S.; Hauser, E. Lidar-based bridge structure defect detection. Exp. Tech. 2011, 35, 27–34.

36. Guldur, E.; Hajjar, J. Laser-based surface damage detection and quantification using predicted surface properties. Autom. Constr. 2017, 83, 285–302.

37. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

38. Fiorillo, S.; Villa, G.; Marchesi, A. Tecniche di telerilevamento per il riconoscimento dei soggetti arborei appartenenti al genere Platanus spp. In Proceedings of the ASITA Conference, Lecco, Italy, 29 September–1 October 2015.

39. Grizonnet, M.; Michel, J.; Poughon, V.; Inglada, J.; Savinaud, M.; Cresson, R. Orfeo ToolBox: Open source processing of remote sensing images. Open Geospat. Data Softw. Stand. 2017, 2, 1–8.

40. De Luca, G.; N Silva, J.M.; Cerasoli, S.; Araújo, J.; Campos, J.; Di Fazio, S.; Modica, G. Object-based land cover classification of cork oak woodlands using UAV imagery and orfeo toolbox. Remote Sens. 2019, 11, 1238.

41. Agisoft Metashape. 2019. Available online: https://www.agisoft.com/ (accessed on 22 April 2020).

42. Orfeo ToolBox. 2020. Available online: https://www.orfeo-toolbox.org/CookBook/recipes/contrast_enhancement.html (accessed on 8 July 2020).

43. Dermanis, A.; Biagi, L.G.A. Il Telerilevamento, Informazione Territoriale Mediante Immagini da Satellite; CEA: Casa Editrice Ambrosiana, Milano, 2002.

44. Davis, L.S. A survey of edge detection techniques. Comput. Graph. Image Process. 1975, 4, 248–270.

45. Nadernejad, E.; Sharifzadeh, S.; Hassanpour, H. Edge detection techniques: Evaluations and comparisons. Appl. Math. Sci. 2008, 2, 1507–1520.

46. OTB CookBook, 6.6.1. 2018. Available online: https://www.orfeo-toolbox.org/CookBook-6.6.1/Applications/app_EdgeExtraction.html?highlight=edge%20extraction (accessed on 7 July 2020).

47. Reddy, G.O.; Singh, S.K. (Eds.) Geospatial Technologies in Land Resources Mapping, Monitoring and Management; Springer International Publishing: Berlin/Heidelberg, Germany, 2018.

48. Hsu Geospatial Sites, Object Based Classification. 2020. Available online: http://gsp.humboldt.edu/OLM/Courses/GSP_216_Online/lesson6-1/object.html (accessed on 11 March 2020).

49. Schiewe, J. Segmentation of high-resolution remotely sensed data-concepts, applications and problems. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2002, 34, 380–385.

50. Michel, J.; Youssefi, D.; Grizonnet, M. Stable mean-shift algorithm and its application to the segmentation of arbitrarily large remote sensing images. IEEE Trans. Geosci. Remote Sens. 2014, 53, 952–964.

51. Fukunaga, K.; Hostetler, L. The estimation of the gradient of a density function, with applications in pattern recognition. IEEE Trans. Inf. Theory 1975, 21, 32–40.

52. Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

53. Image Segmentation of UAS Imagery. Available online: http://myweb.facstaff.wwu.edu/wallin/esci497_uas/labs/Image_seg_otb.htm (accessed on 4 September 2020).

54. Hay, G.J.; Castilla, G. Object-based image analysis: Strengths, weaknesses, opportunities and threats (SWOT). In Proceedings of the 1st International Conference on Object-based Image Analysis, Salzburg, Austria, 4–5 July 2006.

55. Orfeo Toolbox, Docs, All Applications, Feature Extraction, EdgeExtraction. Available online: https://www.orfeo-toolbox.org/CookBook/Applications/app_EdgeExtraction.html (accessed on 5 September 2020).

56. Hsu Geospatial Sites, Supervised Classification. 2020. Available online: http://gsp.humboldt.edu/OLM/Courses/GSP_216_Online/lesson6-1/supervised.html#:~:text=Training%20sites%20are%20areas%20that,of%20each%20of%20the%20classes (accessed on 7 September 2020).

57. Bruzzone, L.; Carlin, L. A multilevel context-based system for classification of very high spatial resolution images. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2587–2600.

58. Camps-Valls, G.; Bruzzone, L. (Eds.) Kernel Methods for Remote Sensing Data Analysis; John Wiley & Sons: Chichester, UK, 2009.

59. Inglada, J. Automatic recognition of man-made objects in high resolution optical remote sensing images by SVM classification of geometric image features. ISPRS J. Photogramm. Remote Sens. 2007, 62, 236–248.

60. Tuia, D.; Pacifici, F.; Kanevski, M.; Emery, W.J. Classification of very high spatial resolution imagery using mathematical morphology and support vector machines. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3866–3879.

61. Vapnik, V.N. An overview of statistical learning theory. IEEE Trans. Neural Netw. 1999, 10, 988–999.

62. Croft, C.; Macdonald, S. (Eds.) Concrete: Case Studies in Conservation Practice; Getty Publications: Los Angeles, CA, USA, 2019; Volume 1.

63. Macdonald, S. (Ed.) Concrete: Building Pathology; John Wiley & Sons: Hoboken, NJ, USA, 2008.

64. Christophe, E.; Inglada, J.; Giros, A. Orfeo toolbox: A complete solution for mapping from high resolution satellite images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1263–1268.

65. Lee, K.; Kim, K.; Lee, S.G.; Kim, Y. Determination of the Normalized Difference Vegetation Index (NDVI) with Top-of-Canopy (TOC) reflectance from a KOMPSAT-3A image using Orfeo ToolBox (OTB) extension. ISPRS Int. J. Geo-Inf. 2020, 9, 257.

| Component | Parameter | Value |
|---|---|---|
| Sensor | Camera | Sony Alpha 6000 |
| | Resolution | 24 MP |
| | Focal length | 16 mm |
| | Sensor width | 23.5 mm |
| | Sensor height | 15.6 mm |
| | Weight | 345 g |
| UAV | Typology | Micro UAV hexacopter |
| | Brand | Flytop |
| | Model | FlyNovex |
| | Weight at takeoff | 6.00 kg |
| | Maximum wind velocity for safe operation | Gusts up to 30 km/h (8 m/s) |
| | Autonomy | 20 min in hovering at 25 °C |
| | Operating altitude | 1–150 m |
| | ENAC certification | Yes |

| Class ID | Colour | Object Typology |
|---|---|---|
| 1 | Grey | Background (not deteriorated concrete) |
| 2 | Orange | Exposed rebars |
| 3 | Light grey | Spalling |
| 4 | Black | Cracks |
| 5 | Yellow | Formwork lines |
| 6 | Light blue | Drainpipe |

| Class ID and Colour | Object Typology | Associated Objects Number | % Associated Objects | Area (cm²) | % Area |
|---|---|---|---|---|---|
| 1 | Background | 43 | 67.19 | 16,734.85 | 88.08 |
| 2 | Exposed rebars | 1 | 1.56 | 78.51 | 0.41 |
| 3 | Spalling | 3 | 4.69 | 504.27 | 2.65 |
| 4 | Cracks/Rock pockets | 9 | 14.06 | 733.94 | 3.86 |
| 5 | Formwork lines | 4 | 6.25 | 79.54 | 0.42 |
| 6 | Drainpipe | 4 | 6.25 | 868.72 | 4.57 |
| TOTAL | | 64 | 100 | 19,000 | 100 |

| Object Typology | Area (cm²) | % Area |
|---|---|---|
| Background | 13,597.00 | 71.56 |
| Exposed rebars | 110.00 | 0.58 |
| Spalling | 510.00 | 2.68 |
| Cracks/Rock pockets | 650.00 | 3.42 |
| Formwork line | 143.00 | 0.75 |
| Drainpipe | 960.00 | 5.05 |
| Detachment | 260.00 | 1.37 |
| Map cracking | 430.00 | 2.26 |
| Washout | 2,340.00 | 12.32 |
| Total image area | 19,000.00 | 100 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zollini, S.; Alicandro, M.; Dominici, D.; Quaresima, R.; Giallonardo, M. UAV Photogrammetry for Concrete Bridge Inspection Using Object-Based Image Analysis (OBIA). Remote Sens. 2020, 12, 3180. https://doi.org/10.3390/rs12193180