Abstract

Fog affects transportation due to low visibility and also aggravates air pollutants. Thus, accurate detection and forecasting of fog are important for the safety of transportation. In this study, we developed a decision tree type fog detection algorithm (hereinafter GK2A_FDA) using the GK2A/AMI and auxiliary data. Because of the responses of the various channels depending on the time of day and the underlying surface characteristics, several versions of the algorithm were created to account for these differences according to the solar zenith angle (day/dawn/night) and location (land/sea/coast). Numerical model data were used to distinguish the fog from low clouds. To test the detection skill of GK2A_FDA, we selected 23 fog cases that occurred in South Korea and used them to determine the threshold values (12 cases) and validate GK2A_FDA (11 cases). Fog detection results were validated using the visibility data from 280 stations in South Korea. For quantitative validation, statistical indices, such as the probability of detection (POD), false alarm ratio (FAR), bias ratio (Bias), and equitable threat score (ETS), were used. The total average POD, FAR, Bias, and ETS for training cases (validation cases) were 0.80 (0.82), 0.37 (0.29), 1.28 (1.16), and 0.52 (0.59), respectively. In general, validation results showed that GK2A_FDA effectively detected the fog irrespective of time and geographic location, in terms of accuracy and stability. However, its detection skill and stability were slightly dependent on geographic location and time. In general, the detection skill and stability of GK2A_FDA were found to be better on land than on coast at all times, and at night than day at any location.

1. Introduction

Fog is a meteorological phenomenon that occurs near the Earth’s surface and affects human activities in various ways [1,2,3]. Fog affects transportation due to low visibility [1,2,4,5,6]. Moreover, it often damages crops and aggravates air pollutants due to solar energy reduction and temperature inversion [7,8]. Therefore, its accurate detection is important for not only reducing traffic problems but also studying climate and air quality [1].

Many studies have analyzed the characteristics of fog and detected it using ground observation, numerical model, and satellite data [3,5,9,10,11,12,13]. As the spatio-temporal variation of fog is strong, fog detection, especially with satellite data, is significantly advantageous [1,14,15]. Geostationary satellites have wide spatial coverage, unlike polar-orbit satellites and ground observation data [14,16,17,18], with high temporal resolution being their biggest advantage because of the possibility of continuous monitoring of fog and utilization of their data for short-term forecasts [1].

The quality of satellite data, in terms of accuracy, number of channels, spatial resolution, and temporal frequency, has significantly improved, which has led to the development of many fog detection techniques using satellite data. As satellites have different channels available for day and night, fog detection algorithms have been developed separately for day and night in most studies, according to the solar zenith angle (SZA) [2]. Solar radiation is present during the day; thus, reflectance is the main parameter used to detect fog during daytime [3,5,17]. Conversely, solar radiation is not present at night; therefore, the dual channel difference (DCD: brightness temperature (BT) of 3.8 μm − BT11.2) method, presented by Eyre et al. [19], is mainly used for nighttime. This method uses the difference in the brightness temperatures of two channels, which arises from the difference in the emissivity of water droplets between the short-wave infrared channel (3.8 μm) and the infrared (IR) channel at 11.2 μm [16,20]. However, distinguishing fog from other objects using only these two test elements for day and night has certain limitations. In particular, low clouds are difficult to distinguish because their physical properties are similar to those of fog [21]. To resolve this difficulty, some researchers have used the roughness of the upper surface of clouds and the difference between surface and cloud top temperatures [2,3,13,16,20,22,23]. In addition, to eliminate cirrus clouds in the fog product, the brightness temperature difference (BTD) between 10 and 12 μm has been utilized [13,17]. As the reflectance of snow-covered areas is similar to that of fog, the normalized difference snow index (NDSI) has been used to determine whether the pixel is snow or fog [24,25].

Despite these efforts, fog detection using satellite data remains a major issue because of the limitations of the satellites (such as those of polar orbiting satellites) and the complexity of fog. A polar orbiting satellite carries many channels and can detect fog with significantly high spatial resolution; however, detecting a rapidly changing fog by observing the same area only twice a day is difficult. Conversely, fog can be detected in real time using geostationary satellites, such as the Communication, Ocean and Meteorological Satellite (COMS) and the Multifunction Transport Satellite (MTSAT). However, these satellites have lower spatial resolutions than the polar orbiting satellites, which makes the detection of locally occurring fog difficult. In addition, they have only five channels, and many researchers have recommended more channels to separate fog from clear ground, cirrus or middle-high clouds, and snow [16,17]. Recently, GEO-KOMPSAT-2A (GK2A) and Himawari-8, launched by South Korea and Japan, respectively, significantly addressed these limitations [2,3,20]. The Advanced Meteorological Imager (AMI) of GK2A and the Advanced Himawari Imager (AHI) of Himawari-8 have 16 channels and high spatial resolutions (0.5, 1, and 2 km at 0.64 μm, other visible (VIS), and infrared channels, respectively). GK2A is the second geostationary satellite of South Korea, launched in December 2018. The remarkable improvement in the observing capability of GK2A compared to COMS, in terms of the number of channels (5 to 16), spatial resolution (1–4 to 0.5–2 km), and observing frequency (15 to 10 min), provides an opportunity to detect local and variable fog [26,27].

To develop an algorithm for real-time fog detection using GK2A/AMI data, we utilized a decision tree method. As the availability of satellite data differed between day and nighttime, the fog detection algorithm was developed separately, according to the SZA. In addition, as the geographic background and characteristics of fog over the Korean Peninsula differed, according to geographic location, the algorithms were developed differently based on land, sea, and coast. Additionally, we used numerical model data as it was difficult to distinguish the fog from the low clouds using only the satellite data. Details about the fog detection methods and data used are described in Section 2. In Section 3, the qualitative and quantitative results of fog detection are presented. Finally, discussions on the performance of fog detection algorithms and conclusions are presented in Section 4 and Section 5, respectively.

2. Materials and Methods

2.1. Materials

In this study, GK2A/AMI and numerical weather prediction (NWP) model data, obtained from the National Meteorological Satellite Center (NMSC) of the Korea Meteorological Administration (KMA), were mainly used for fog detection. As auxiliary data, land sea mask, land cover, and snow cover data were used. Visibility data (unit: km) measured by visibility meters and obtained from KMA were used to validate the fog detection results. The characteristics of the selected channels among the 16 channels of GK2A/AMI are presented in Table 1.

Table 1.

Characteristics of the selected channels among the 16 channels of advanced meteorological imager (AMI) of GK2A used in this study.

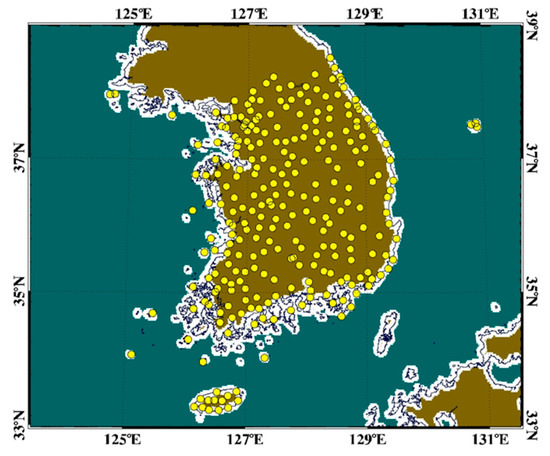

The brightness temperature at 11 μm (CSR_BT11) obtained from clear sky radiance (CSR) was used as the surface temperature for clear sky to distinguish between fog and low clouds. The CSR data were obtained through the simulation of the radiative transfer model, RTTOV version 12.1, using the vertical profile data of temperature and moisture provided by the numerical weather prediction model operated by KMA [20]. The spatial resolution and temporal frequency of CSR_BT11 were 10 km and 1 h, respectively. As the spatio-temporal resolution differs between CSR_BT11 and the satellite data, spatio-temporal matching was necessary for the simultaneous use of both data sets. Therefore, CSR_BT11 was interpolated to fit the spatio-temporal resolution of the satellite data. The land sea mask data were categorized into three types: land, sea, and coast. The coastal area was defined by 3 × 3 pixels from the coastline, as shown in Figure 1 (white lines). In addition, a land cover map was used to reduce the falsely detected pixels in desert areas, whose spectral emissivity is similar to that of fog, with a lower emissivity in channel 7 than in channel 13. The snow cover data obtained from NMSC were also used to minimize the false detection of fog in snow-covered areas. The spatial distribution of the visibility meters is shown in Figure 1. The number of stations was approximately 280, with an observing frequency of 1 min. We used the recalculated 10 min average visibility data obtained from KMA, as the temporal frequency of the satellite fog product was 10 min.
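The matching of CSR_BT11 to the satellite grid can be illustrated with a minimal sketch. The text does not state which interpolation scheme was used, so the linear interpolation in time and the nearest-neighbour upsampling in space below are assumptions, as are the function names and the sample values.

```python
def interpolate_in_time(field_t0, field_t1, minutes_after_t0, step_minutes=60):
    """Linearly blend two 2-D fields (lists of rows) valid step_minutes apart."""
    if not 0 <= minutes_after_t0 <= step_minutes:
        raise ValueError("target time must lie between the two model times")
    w = minutes_after_t0 / step_minutes  # weight of the later field
    return [
        [(1.0 - w) * a + w * b for a, b in zip(row0, row1)]
        for row0, row1 in zip(field_t0, field_t1)
    ]


def upsample_nearest(field, factor):
    """Repeat each coarse pixel `factor` times along both axes."""
    fine = []
    for row in field:
        fine_row = [value for value in row for _ in range(factor)]
        fine.extend(list(fine_row) for _ in range(factor))
    return fine


# Hypothetical CSR_BT11 values (K) at two consecutive model hours on a 1 x 2 coarse grid.
bt_first_hour = [[280.0, 282.0]]
bt_next_hour = [[286.0, 282.0]]

# Field valid 30 min after the first hour, then 10 km -> 2 km (factor 5).
bt_half_hour = interpolate_in_time(bt_first_hour, bt_next_hour, minutes_after_t0=30)
bt_half_hour_fine = upsample_nearest(bt_half_hour, factor=5)
```

A smoother spatial scheme (for example bilinear interpolation) would be an equally plausible choice; nearest-neighbour is used here only to keep the sketch short.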

Figure 1.

Spatial distribution of the visibility data with land sea mask. Yellow dots represent the stations of the visibility meter data. The white, blue, and brown shaded pixels represent coast, sea, and land, respectively.

For qualitative analysis, the fog detection results of the COMS Meteorological Data Processing System (CMDPS) and the fog product of the red-green-blue (RGB) composite using GK2A were used. The NMSC has been operationally detecting fog using CMDPS since April 2011. Here, CMDPS is a set of algorithms that retrieve various weather elements, such as fog and sea surface temperature, from the data of COMS, the first geostationary satellite of Korea. The fog product of RGB using GK2A uses BT12.3 − BT10.5, BT10.5 − BT3.9, and BT10.5 in red, green, and blue, respectively, like the Night Microphysics RGB used in EUMETSAT to derive a composite image [28].

We selected 23 fog cases (23 cases × 24 h/case × 6 scenes/h = 3312 scenes) that occurred around the Korean Peninsula; 12 of these were used as training cases (T#) for determining the threshold values and 11 were used as validation cases (V#) for validating the fog detection algorithm, as shown in Table 2. The total numbers of scenes for the training and validation cases were 1728 (=12 training cases × 24 h × 6 scenes/h) and 1584 (=11 validation cases × 24 h × 6 scenes/h), respectively. These training and validation fog cases were selected based on the order of fog occurrence time, from July to September 2019 and from October 2019 to March 2020, respectively. To assess the detection level of the fog detection algorithm developed in this study, we included local fog cases with very few fog stations (V8 and V9 in Table 2).

Table 2.

Summary of the fog cases used for training (T) and validation (V) of the fog detection algorithm. The number of fogs and stations indicate the number of visibility meters, showing a visibility of less than 1 km and the total number of visibility meters used for validation, respectively.

2.2. Methods

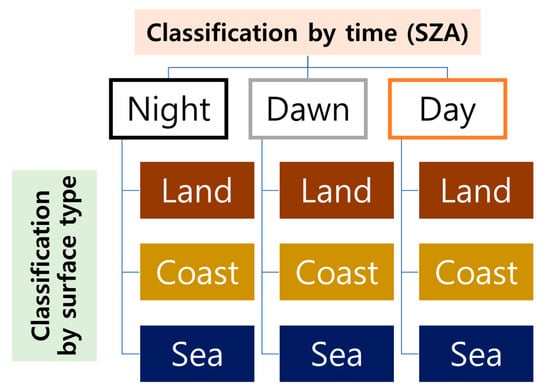

The fog detection algorithm of GK2A (GK2A_FDA) consists of three parts, as shown in Figure 2. The upper left part sets the initial threshold values of various test elements (e.g., DCD, BTD) through histogram analysis and prepares the background data, such as the 30-day composite visible channel reflectance (Vis_Comp). The lower left part optimizes the threshold values of various test elements through sensitivity tests based on Hanssen–Kuiper skill score (KSS) using the training cases and visibility meter data. The right part shows the entire process of the fog detection algorithm from reading the data to validating the fog detection results. As the availability of AMI channels depends on the time of the day and the characteristics of the background differ based on the geographic location, we developed nine types of fog detection algorithms based on SZA (day, dawn/twilight, and night) and surface type (land, coast, and sea), as shown in Figure 3. The threshold values of SZA for separating fog detection algorithms were determined based on the maximum total fog detection level obtained through intensive sensitivity tests.

Figure 2.

Flow chart of the fog detection algorithm prepared using the GK2A/AMI and auxiliary data.

Figure 3.

Differentiation of fog detection algorithms, according to the solar zenith angle (SZA) and geographic location.

As fog is a complex phenomenon and AMI has 16 channels, we used various test elements based on the optical and textural properties of the fog to distinguish it from other objects (clear, low cloud, middle-high cloud, snow, etc.), as shown in Table 3. In this study, an algorithm based on a decision tree method was developed that first detects the fog candidate pixels among all pixels and then removes the non-fog pixels, using the following steps. In the first step, the fog candidate pixels were mainly detected by the difference of reflectance (ΔVIS) at daytime and the DCD at nighttime [5,6,16]. ΔVIS is the difference between the reflectance at 0.64 μm and Vis_Comp, which serves as the clear sky reflectance. Vis_Comp of a pixel was obtained through the minimum value composition of the reflectance at 0.64 μm during the past 30 days, including the fog detection day [5]. The Vis_Comp data were obtained every 10 min to account for the different SZAs. We used 30 days for generating Vis_Comp as the Korean Peninsula is affected by the monsoon system, with frequent occurrence of clouds for more than 15 days, especially during summer.
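The first daytime step can be sketched as follows: a per-pixel minimum composite over the past 30 days gives Vis_Comp, and ΔVIS is the current reflectance minus that composite. The function names, the tiny sample grid, and the 3% threshold are illustrative assumptions; the operational thresholds are those listed in Table 3.

```python
def minimum_composite(daily_reflectance):
    """Per-pixel minimum over a stack of 2-D reflectance fields.

    daily_reflectance: list of 2-D fields (lists of rows), one per day,
    all taken at the same 10-min time slot so that the SZA is comparable.
    """
    if not daily_reflectance:
        raise ValueError("at least one day of reflectance is required")
    n_rows = len(daily_reflectance[0])
    n_cols = len(daily_reflectance[0][0])
    return [
        [min(day[r][c] for day in daily_reflectance) for c in range(n_cols)]
        for r in range(n_rows)
    ]


def delta_vis(current, vis_comp):
    """Difference between the current reflectance and the clear-sky composite."""
    return [
        [cur - ref for cur, ref in zip(cur_row, ref_row)]
        for cur_row, ref_row in zip(current, vis_comp)
    ]


def fog_candidates(dvis, threshold):
    """Flag pixels whose reflectance excess is above the threshold."""
    return [[value > threshold for value in row] for row in dvis]


# Hypothetical reflectance (%) for three days (30 in practice) on a 1 x 2 grid.
days = [[[10, 30]], [[8, 35]], [[12, 28]]]
vis_comp = minimum_composite(days)          # [[8, 28]]
dvis = delta_vis([[20, 29]], vis_comp)      # [[12, 1]]
candidates = fog_candidates(dvis, threshold=3)
```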

Table 3.

Summary of the threshold values of the test elements, according to time and geographic location. The calculation method of each test element is described in Table A1 in Appendix A.

It is difficult to distinguish between fog and clouds, such as low cloud or ice cloud, with only ΔVIS and DCD. Therefore, the difference (ΔFTs) between the brightness temperature of the top of the fog and the surface temperature, the local standard deviation (LSD), and the normalized LSD (NLSD) were used. As the top height of the fog is lower than that of the clouds (low, middle, and high clouds), ΔFTs was used to distinguish the fog from low clouds. In general, the top of the fog is smoother than that of the cloud because fog mainly occurs when the boundary layer of the atmosphere is stable. Thus, we used the LSD of the brightness temperature (LSD_BT11.2) and the NLSD of the reflectance (NLSD_vis). NLSD is the standard deviation divided by the average of 3 × 3 pixels [2]. Clear pixels misclassified as fog in the first step were reclassified using the 13.3 μm and 8.6 μm channels [29,30]. BTD between 13.3 and 11.2 μm was only used during daytime. BTD between 8.6 and 10.5 μm was mainly used to distinguish between ice and water clouds [31,32]. To distinguish fog from clear pixels or ice cloud (e.g., cirrus), the brightness temperature at 8.6 μm was used as it is available regardless of day and night. In addition, remaining pixels with optically thin middle-high clouds or partial cloud cover were removed using BTD between 10.5 and 12.3 μm [13,17].
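As an illustration of the texture tests, the sketch below computes LSD and NLSD for one pixel from its 3 × 3 neighbourhood. The use of the population standard deviation and the clipping of the window at image edges are assumptions, since the text does not specify either; the function names are hypothetical.

```python
from statistics import mean, pstdev


def window_values(field, r, c):
    """Values of the 3 x 3 window centred on (r, c), clipped at the image edges."""
    n_rows, n_cols = len(field), len(field[0])
    return [
        field[i][j]
        for i in range(max(0, r - 1), min(n_rows, r + 2))
        for j in range(max(0, c - 1), min(n_cols, c + 2))
    ]


def lsd(field, r, c):
    """Local standard deviation (population form) of the 3 x 3 window."""
    return pstdev(window_values(field, r, c))


def nlsd(field, r, c):
    """Local standard deviation divided by the local mean of the 3 x 3 window."""
    values = window_values(field, r, c)
    avg = mean(values)
    if avg == 0:
        raise ValueError("NLSD is undefined for a zero local mean")
    return pstdev(values) / avg


# A smooth (fog-like) patch gives zero texture; a patch with one bright pixel does not.
smooth = [[40, 40, 40], [40, 40, 40], [40, 40, 40]]
rough = [[1, 1, 1], [1, 1, 1], [1, 1, 10]]
smooth_nlsd = nlsd(smooth, 1, 1)
rough_nlsd = nlsd(rough, 1, 1)
```

For the rough patch the local mean is 2 and the population variance is 8, so NLSD is √8 / 2 = √2.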

In South Korea, various types of fog, such as radiation fog, occur, and although there are differences depending on geographic location, about 26.5 days of fog occur on average. According to Lee and Suh [33], radiation fog occurs frequently at a rate of 58.5% (especially, 71.3% in inland) for annual total fog events occurring in South Korea from 2015 to 2017. Even for thick fog with visibility less than 100 m, 62.4% is radiation fog (especially, 86.2% in inland). Most radiation fog dissipates rapidly after sunrise [34]. Thus, we applied a fog life cycle and strict threshold tests to remove the falsely detected fog after sunrise (SZA < 60°) assuming the non-occurrence of new radiation fog during the day. The life cycle test reclassified the newly detected fog after sunrise into non-fog pixels as fog disappeared after sunrise. NDSI was used to reclassify the fog pixels with vague properties into non-fog pixels. NDSI, which is usually used to detect snow, can be used for separating clear pixels from falsely detected fog pixels due to their different characteristics on the spectrum, compared to soil and fog [35].
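The life cycle test can be sketched as a per-pixel rule: after sunrise (SZA < 60°), a pixel may remain fog only if it was already fog in the earlier scene. Comparing against the immediately preceding 10-min scene is an assumption made for this sketch, and the function and argument names are hypothetical.

```python
def apply_life_cycle(current_fog, previous_fog, sza_deg, sza_limit=60.0):
    """Reclassify fog that newly appears after sunrise as non-fog.

    current_fog, previous_fog: 2-D boolean masks (lists of rows).
    sza_deg: 2-D solar zenith angle in degrees for the current scene.
    """
    result = []
    for cur_row, prev_row, sza_row in zip(current_fog, previous_fog, sza_deg):
        result.append([
            # Before the limit is reached the test is inactive; after it,
            # fog is kept only where it already existed.
            cur and (sza >= sza_limit or prev)
            for cur, prev, sza in zip(cur_row, prev_row, sza_row)
        ])
    return result


# Pixel 0: persistent fog (kept); pixel 1: new fog after sunrise (removed);
# pixel 2: new fog while SZA is still above 60 degrees (kept).
filtered = apply_life_cycle(
    current_fog=[[True, True, True]],
    previous_fog=[[True, False, False]],
    sza_deg=[[50.0, 50.0, 70.0]],
)
```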

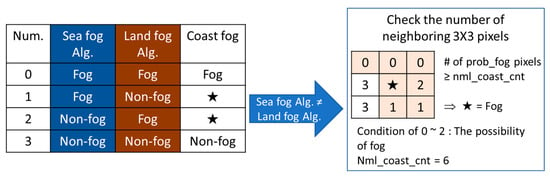

As the algorithms were developed differently according to SZA and geographic location, a spatio-temporal discontinuity in the fog detection results was inevitable. Therefore, we applied the blending method to minimize the discontinuity at dawn and in the coastal area. Fog detection using satellite data during dawn/twilight and in coastal areas has been significantly difficult because of the unavailability of visible channel and DCD and complex background [36]. In order to mitigate the temporal discontinuity that occurs during dawn, the night fog detection results and the dawn fog detection results were blended under the assumption that the fog generated at night persists even during the dawn period. Additionally, in order to mitigate the spatial discontinuity occurring at the coast, the fog detection algorithm of land and sea was applied at the same time, and the two detection results were blended. In this case, if the results of both algorithms were the same, the final result remained the same as shown in Figure 4. Whereas, if the two detection results were different from each other, they were adjusted using the results of the surrounding detection. If the majority of the 3 × 3 pixels around the given pixel were fog, then it was regarded as fog. During post-processing, falsely detected pixels appearing in the snow and desert areas were removed using the snow and land cover data, respectively.
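The spatial blending at the coast can be sketched as below. Where the land and sea algorithms agree, their common result is kept; where they disagree, the pixel is set to fog only if the majority of its surrounding pixels are fog. Which map supplies the surrounding detection is not specified in the text, so it is passed in as a separate, hypothetical `surrounding_fog` argument, and the centre pixel is excluded from the vote by assumption.

```python
def blend_coast(land_fog, sea_fog, surrounding_fog):
    """Blend land and sea fog masks (2-D boolean lists of rows) pixel by pixel."""
    n_rows, n_cols = len(land_fog), len(land_fog[0])
    blended = []
    for r in range(n_rows):
        row = []
        for c in range(n_cols):
            if land_fog[r][c] == sea_fog[r][c]:
                row.append(land_fog[r][c])  # both algorithms agree
                continue
            # Disagreement: vote among the neighbours in the 3 x 3 window.
            neighbours = [
                surrounding_fog[i][j]
                for i in range(max(0, r - 1), min(n_rows, r + 2))
                for j in range(max(0, c - 1), min(n_cols, c + 2))
                if (i, j) != (r, c)
            ]
            row.append(sum(neighbours) * 2 > len(neighbours))
        blended.append(row)
    return blended


T, F = True, False
land = [[T, T, T], [T, T, T], [F, F, F]]
sea = [[T, T, T], [T, F, T], [F, F, F]]  # differs from `land` at the centre

# Five of the eight neighbours are fog, so the centre becomes fog.
mostly_fog = blend_coast(land, sea, surrounding_fog=land)
# Only two of the eight neighbours are fog, so the centre becomes non-fog.
mostly_clear = blend_coast(land, sea, surrounding_fog=[[F, F, F], [T, T, T], [F, F, F]])
```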

Figure 4.

Conceptual diagram for the spatial blending method. The part marked with a star indicates a case where the results of the sea fog algorithm and the land fog algorithm are different from each other.

Finally, fog detection results were validated using the visibility meter data as the ground observation data. Because the spatial representativeness of the two data sets differs (it is relatively lower for the visibility data) and GK2A/AMI has a navigation error, validation was performed using the 3 × 3 pixels centered on the satellite pixel closest to the visibility meter for the same observation time [1]. The performance levels of GK2A_FDA were validated using the following evaluation metrics (probability of detection: POD, false alarm ratio: FAR, bias ratio: Bias, and equitable threat score: ETS) based on a 2 × 2 contingency table (Table 4). Pixels detected as fog and matched with a visibility of less than 1 km (more than 1 km) were defined as hit (false alarm). POD, FAR, Bias, and ETS were calculated using Equations (1)–(6). POD and FAR range between 0 and 1 and indicate higher detection levels as they approach 1 and 0, respectively. Bias was used as the indicator of over- or under-detection of fog. ETS ranges from −1/3 to 1. Like POD, ETS indicates a higher detection level as it approaches 1. KSS is the difference between POD and FAR and ranges from −1 to 1; the closer the KSS is to 1, the higher the fog detection level:

POD = Hit/(Hit + Miss),

FAR = False alarm/(Hit + False alarm),

Bias = (Hit + False alarm)/(Hit + Miss),

KSS = POD − FAR,

Hit_ref = (Hit + Miss)(Hit + False alarm)/(Hit + Miss + False alarm + Correct Negative),

ETS = (Hit − Hit_ref)/(Hit − Hit_ref + Miss + False alarm).
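The equations above translate directly into a short function. This is a minimal sketch using the definitions exactly as given here (including KSS = POD − FAR); the function name and the sample counts are hypothetical.

```python
def skill_scores(hit, miss, false_alarm, correct_negative):
    """Compute POD, FAR, Bias, KSS, and ETS from a 2 x 2 contingency table."""
    total = hit + miss + false_alarm + correct_negative
    if hit + miss == 0 or hit + false_alarm == 0:
        raise ValueError("scores are undefined without observed and detected fog")

    pod = hit / (hit + miss)
    far = false_alarm / (hit + false_alarm)
    bias = (hit + false_alarm) / (hit + miss)
    kss = pod - far
    # Number of hits expected by chance.
    hit_ref = (hit + miss) * (hit + false_alarm) / total
    ets = (hit - hit_ref) / (hit - hit_ref + miss + false_alarm)
    return {"POD": pod, "FAR": far, "Bias": bias, "KSS": kss, "ETS": ets}


# Hypothetical counts: 80 hits, 20 misses, 20 false alarms, 880 correct negatives.
scores = skill_scores(hit=80, miss=20, false_alarm=20, correct_negative=880)
```

With these counts, Hit_ref = 100 × 100 / 1000 = 10, so ETS = 70 / 110 ≈ 0.64, while POD = 0.8, FAR = 0.2, and Bias = 1.0.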

Table 4.

Contingency table for fog detection validation.

To evaluate the stability of the fog detection level of the GK2A_FDA, we used the standard deviation (SD) of each evaluation metric over the selected fog cases:

$\mathrm{SD} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i - \bar{x}\right)^2}$,

where $N$ is the total number of fog cases (training cases: 12 and validation cases: 11); $x_i$ is the evaluation metric (POD, FAR, Bias, or ETS) of each fog case; and $\bar{x}$ is the average of $x_i$. Like FAR, the closer the standard deviation is to zero, the better the stability of the detection level.

3. Results

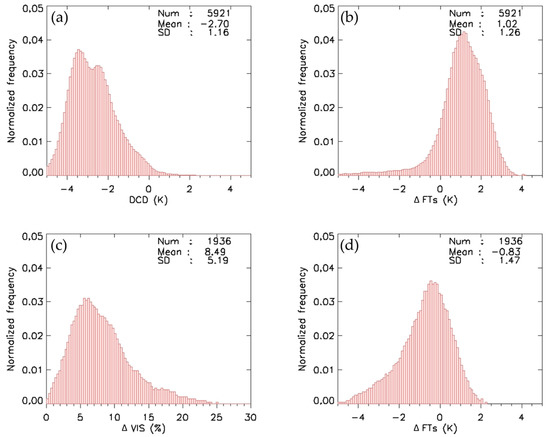

3.1. Frequency Analysis for Setting Threshold Values

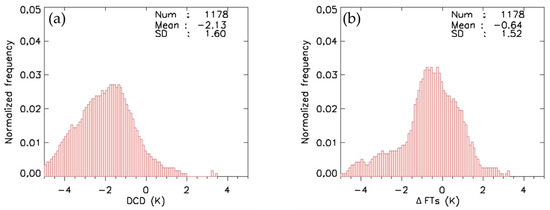

To set the initial thresholds for each test element, the frequency analysis of the test elements over the fog was performed using the 1728 scenes from the training cases. Fog pixels were selected using the co-located visibility meter data and visual inspection. Figure 5 shows the frequency distribution of the three test elements according to day and night on land. The average DCD was −2.7 K, and most DCDs were less than 0 K (98%) during nighttime (Figure 5a). Pixels above 0 K were mainly found in sub-pixel-sized fog. ΔFTs showed a non-negligible difference between day and night (Figure 5b,d). Most ΔFTs during the nighttime were greater than 0 K (Figure 5b); however, most ΔFTs during daytime were less than 1 K (Figure 5d). This could be due to the diurnal variation in the temperature profile of the boundary layer: strong inversion during nighttime and a lapse-rate profile during daytime. Most ΔVISs were greater than 3%, with some reaching 25% (Figure 5c). Fog pixels with ΔVISs less than 2–3% corresponded to sub-pixel-sized fog, weak fog, or limitations of the visibility meter. The histogram of each test element appeared broad due to the complexity and diversity of the fog and background. The initial threshold values determined from the frequency analysis were statistically optimized using iterative sensitivity tests. The optimized threshold values were those for which KSS was maximum.
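The threshold optimization can be sketched as a one-dimensional search: each candidate threshold is scored by KSS (defined in Section 2.2 as POD − FAR) against the visibility observations, and the best one is kept. The actual sensitivity tests are described only as iterative, so the simple grid search, the function names, and the synthetic DCD samples below are assumptions.

```python
def kss_for_threshold(values, is_fog, threshold):
    """KSS (POD - FAR) when pixels with value < threshold are called fog."""
    hit = miss = false_alarm = 0
    for value, fog in zip(values, is_fog):
        detected = value < threshold
        if detected and fog:
            hit += 1
        elif detected:
            false_alarm += 1
        elif fog:
            miss += 1
    if hit + miss == 0 or hit + false_alarm == 0:
        return None  # POD or FAR undefined for this threshold
    return hit / (hit + miss) - false_alarm / (hit + false_alarm)


def best_threshold(values, is_fog, candidates):
    """Return (threshold, KSS) with the highest KSS among the candidates."""
    best = None
    for threshold in candidates:
        score = kss_for_threshold(values, is_fog, threshold)
        if score is None:
            continue
        if best is None or score > best[1]:
            best = (threshold, score)
    return best


# Synthetic nighttime DCD samples (K) with matching visibility-based fog flags.
dcd = [-4.0, -3.0, -2.0, -1.0, 0.5, 1.0]
observed_fog = [True, True, True, False, False, False]
optimum = best_threshold(dcd, observed_fog, candidates=[-3.5, -1.5, 0.0, 2.0])
```

In this synthetic example the candidate −1.5 K separates the two groups perfectly, so it is selected with KSS = 1.0.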

Figure 5.

Frequency distribution of the optical/textural properties of fog pixels in the training cases at nighttime (a,b) and daytime (c,d). (a) Dual Channel Difference (DCD), (b,d) Difference between the BT of the fog top and surface temperature (ΔFTs), and (c) Difference between the reflectance of 0.64 μm (VIS0.6) and Vis_Comp (ΔVIS).

Since there is no visibility system in the ocean, the threshold was set by visual analysis of the spatial distribution of the test elements along with the frequency analysis using the visibility meter installed on the coast (including the islands) as shown in Figure 6. The final thresholds were set after the sensitivity test from initial thresholds through the visibility meter on the coast and islands.

Figure 6.

Frequency distribution of the optical/textural properties of fog pixels in the training cases at nighttime (a,b) and daytime (c,d) on the coast. (a) DCD, (b,d) ΔFTs, and (c) ΔVIS.

3.2. Validation Results of Fog Detection Algorithm

3.2.1. Fog Detection Results

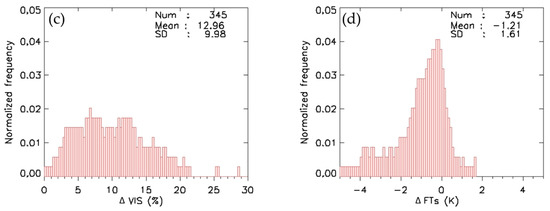

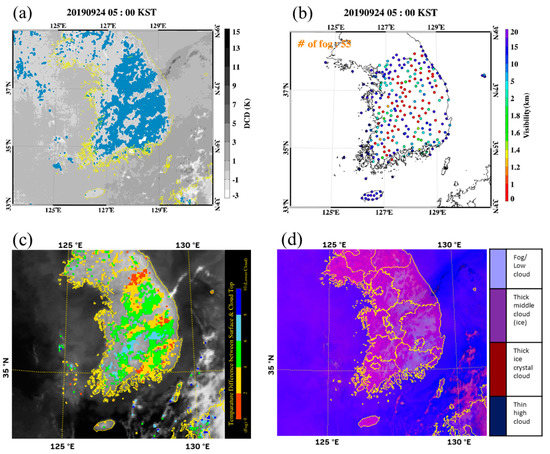

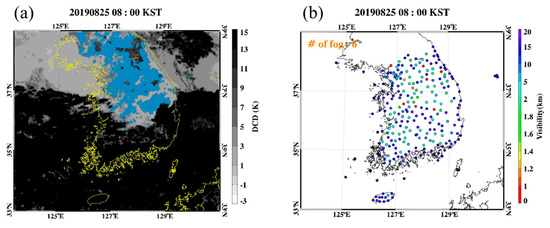

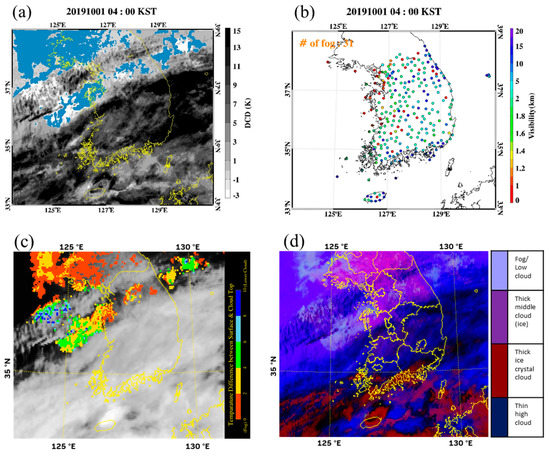

Fog detection results from the GK2A/AMI data set are shown in Figure 7, Figure 8, Figure 9 and Figure 10 along with the fog detection results of CMDPS, the fog product of the RGB composite using GK2A, the image of the visible channel (0.64 μm), and ground-observed visibility data. Qualitative analysis was conducted by selecting two fog cases each from the training cases (Figure 7 and Figure 8) and validation cases (Figure 9 and Figure 10). To qualitatively evaluate the detection level based on fog intensity, two types of fog cases (strong and widespread fog, weak and local fog) were used. As shown in the visibility meter data and color composite (Figure 7b,d), the fog was strong and widespread in most parts of South Korea. This was a typical radiation fog that occurred in the inland areas of South Korea during fall. The visual comparison of fog detection results with the visibility data and color composite indicated that both GK2A_FDA and CMDPS effectively detected the fog in terms of spatial distribution in South Korea (Figure 7a,c). Compared to the visibility and color composite map, GK2A_FDA appeared to slightly over-detect the fog.

Figure 7.

Sample image of fog detection results at 05:00 KST (South Korea Standard Time) 24 September 2019. (a) Fog image of GK2A_FDA, (b) ground-observed visibility, (c) fog image of CMDPS, and (d) fog product of GK2A RGB. Sky blue color in (a) indicates foggy pixels.

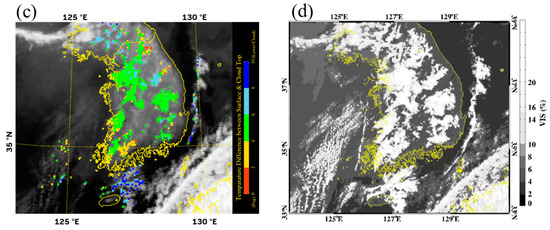

Figure 8.

Sample image of fog detection results at 08:00 KST on 25 August 2019. (a) Fog image of GK2A_FDA, (b) ground-observed visibility, (c) fog image of CMDPS, and (d) image of visible channel (0.64 μm). Sky blue color in (a) indicates foggy pixels.

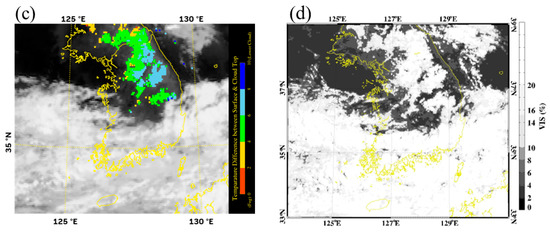

Figure 9.

Sample image of fog detection results at 09:00 KST on 20 October 2019. (a) Fog image of GK2A_FDA, (b) ground-observed visibility, (c) fog image of CMDPS, and (d) image of visible channel (0.64 μm). Sky blue color in (a) indicates foggy pixels.

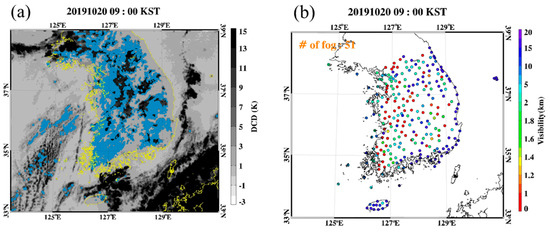

Figure 10.

Sample image of fog detection results at 04:00 KST on 01 October 2019. (a) Fog image of GK2A_FDA, (b) ground-observed visibility, (c) fog image of CMDPS, and (d) fog product of GK2A RGB. Sky blue color in (a) indicates foggy pixels.

Figure 8 shows a relatively weak and localized case of fog. The visibility meter showed that fog occurred only at the very few stations located in the central to northern parts of South Korea; however, the visibility in most inland areas in South Korea was less than 2 km. Moreover, the image of the visible channel showed a wide distribution of fog/low cloud and middle cloud over the central and northern parts of South Korea. GK2A_FDA and CMDPS were found to effectively detect the spatial pattern of the fog over the central and northern parts of South Korea. However, compared to the visibility meter (Figure 8b), both algorithms certainly over-detected the fog. This over-detection was attributed to the dissipation pattern of radiation fog after sunrise. The solar heating of the land surface can increase the land surface and surface boundary layer temperatures, thereby dissipating the lowest layer fog. Thus, the visibility is increased to the level of mist, and fog is changed into low cloud, with no changes taking place at the top of the fog. This could be a main cause of false detection of low cloud as fog during daytime.

In the validation case (Figure 9), both GK2A_FDA and CMDPS effectively detected the fog similar to the training case (Figure 7). In this case, GK2A_FDA slightly over-detected the fog and CMDPS under-detected the fog compared to the visibility meter and image of the visible channel, in particular, in the south-western part of South Korea.

Figure 10 shows the case of a localized fog with mid to high clouds. Fog was observed at scattered locations across most parts of South Korea, with widespread low visibility. Both fog detection algorithms detected the fog in the north-western part of South Korea but could not detect it in the central to southern parts of South Korea due to the presence of mid to high clouds. This is a typical case where any satellite equipped with optical sensors is unable to observe the lower parts of the clouds. Therefore, in this study, when the cloud top height was more than 2 km (ΔFTs greater than 10 K), the pixel was regarded as a cloud and excluded from validation.

3.2.2. Quantitative Validation Results

Table 5 shows the results of quantitative validation using the visibility meter for the 12 training cases. The total average POD, FAR, Bias, and ETS were 0.80, 0.37, 1.28, and 0.52, respectively. In general, GK2A_FDA effectively detected the fog irrespective of the time and geographic location, in terms of POD, FAR, Bias, and ETS. However, the detection skill of GK2A_FDA was clearly dependent on the geographic location and time. In addition, the stability (SD: standard deviation) of GK2A_FDA was slightly different based on the geographic location and time. As shown by the KSS, the temporally averaged detection level on the land (KSS: 0.46) was better than that (KSS: 0.31) over the coastal area, and the spatially averaged detection level during nighttime (POD: 0.80, FAR: 0.34, and ETS: 0.54) and dawn (POD: 0.85, FAR: 0.36, and ETS: 0.53) was better than that (POD: 0.77, FAR: 0.47, and ETS: 0.44) during daytime. The SDs of POD, FAR, Bias, and ETS (0.16, 0.10, 0.28, and 0.11) were the smallest at night, which indicated that the fog detection algorithm for night was least sensitive to the fog cases.

Table 5.

Summary of the validation results for the training cases according to time and geographic location.

As shown in Table 6 (validation cases), the fog detection level of GK2A_FDA was very good and stable regardless of the region and time; the total POD, FAR, Bias, and ETS and their SDs were similar to those of the training cases. The total average POD, FAR, Bias, and ETS for the 11 validation cases were 0.82, 0.29, 1.16, and 0.59, respectively; however, the SDs of POD, FAR, Bias, and ETS were 0.19, 0.28, 1.00, and 0.21, respectively, higher than those of the training cases. In general, the fog detection level was slightly higher on land and slightly lower in the coastal area, irrespective of the time. In terms of fog detection time, the detection level was improved at all times, especially during the day (POD: 0.77 to 0.78, FAR: 0.47 to 0.30). However, GK2A_FDA over-detected the fog irrespective of the geographic location and time, similar to the training cases. Further, the detection skill and stability of GK2A_FDA were evidently dependent on the geographic location (better on land than at the coast) and time (better during the night than the day), similar to the training cases.

Table 6.

Same as Table 5 except for validation cases.

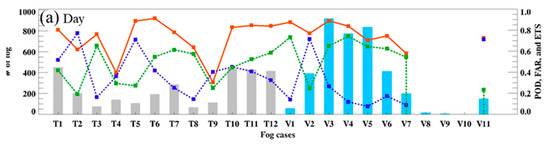

Figure 11 shows the validation results based on the time for the 12 training and 11 validation cases used in this study. In general, the fog detection level was found to be significantly dependent on the characteristics (e.g., fog area) of the fog cases, irrespective of the detection time. Higher POD and lower FAR were observed for the cases with a large number of fog points, and vice versa. The best results were found in the V3–V6 cases and the worst in the T4 and V9–V10 cases, which had the highest and lowest numbers of fog points, respectively. The larger SD in the validation cases compared to the training cases was due to the larger variation in the number of fog points in the validation cases. For 26 July 2019 (T4), POD was equal to FAR at all times. A detailed analysis of the satellite images to find the causes of the low level of fog detection (not shown) revealed that many fog pixels were covered with low-mid clouds and that these cloud pixels were not removed during validation because of the forecast error of CSR_BT11. This suggested that the quality of CSR_BT11 is very important in separating low to mid clouds from the fog. For 10 February 2020 (V9), FAR was higher than POD, regardless of the time. In this case, detecting the fog was significantly difficult because of the extremely local occurrence of the fog, with a semi-transparent cirrus above it. In the cases of T9 and V2, detection levels were found to be good at night and dawn, but POD was found to be a little higher than or similar to FAR during the day (Figure 11a). Similar to the V9 case, these were cases of extremely locally occurring fog. Although GK2A_FDA was developed to remove partly cloudy pixels and thin cirrus clouds, it still falsely detected partly cloudy pixels as fog on 17 September 2019 (T9).

Figure 11.

Validation results for the training (T) and validation cases (V) with ground-observed visibility data according to the time ((a) day, (b) night, (c) dawn/twilight, and (d) total). Red, blue, and green lines with dots indicate POD, FAR, and ETS for each fog case, respectively. The bars represent the total number of fog points used for validation. The gray and blue bar graphs are used to distinguish between training and validation cases.

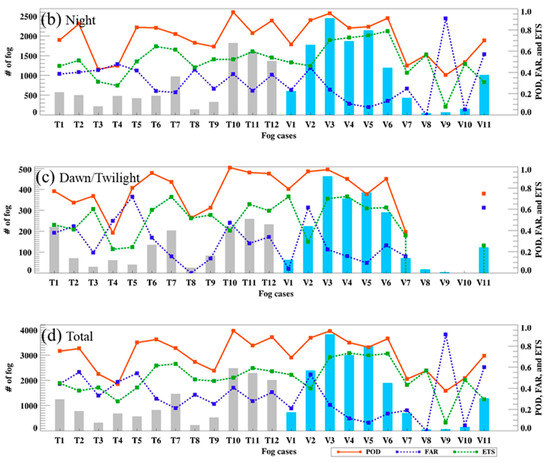

Figure 12 shows the relation between the number of fog stations and the detection skill of GK2A_FDA according to the time of day. The detection level of GK2A_FDA was closely linked with the number of fog stations, irrespective of the time of day. In general, POD and FAR were positively and negatively related to the number of fog stations, respectively. In particular, POD was significantly affected by the number of fog stations during dawn (Corr.: 0.65) and nighttime (Corr.: 0.74). However, the positive relation between POD and the number of fog stations was not clear for daytime, when the correlations for the training (Corr.: 0.47) and validation (Corr.: −0.13) cases had opposite signs. Moreover, the negative relation (Corr.: −0.18 to −0.33) of FAR with the number of fog stations was not only weak but also dependent on the time of day, indicating that the detection skill of GK2A_FDA was clearly dependent on the characteristics of fog, such as fog area and intensity.
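The correlation between per-case fog counts and a skill score can be computed as in the following minimal sketch. It assumes that Corr. denotes the Pearson correlation coefficient, which the text does not state explicitly. The function name `pearson_corr` and the sample values are hypothetical and are not taken from the study.

```python
import math


def pearson_corr(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-case values for illustration only (not from the study).
n_fog_stations = [12, 40, 75, 130, 210]
pod_per_case = [0.55, 0.70, 0.78, 0.85, 0.90]

corr_pod = pearson_corr(n_fog_stations, pod_per_case)
print(f"Corr. (number of fog stations vs. POD): {corr_pod:.2f}")
```

Applying the same function to FAR instead of POD gives the second correlation for each panel.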

Figure 12.

Scatter plots between the number of fogs and statistical skill scores (POD and FAR) based on the time of the day ((a) day, (b) night, (c) dawn/twilight, and (d) total). Correlation coefficients (Corr.) between the number of fogs and statistical skill scores are shown at the top of each figure according to the training cases (T), validation cases (V), and all cases (A). Square and triangle points in each figure indicate POD and FAR, respectively. The training and validation cases are shown separately, with or without shading.

4. Discussion

In this study, we developed a decision tree type fog detection algorithm using GK2A/AMI and different types of auxiliary data, including the output of a numerical forecast model. Fog detection using GK2A_FDA requires data from a recent-generation geostationary satellite (e.g., GK2A/AMI, Himawari-8/AHI, or Geostationary Operational Environmental Satellite (GOES)-R/Advanced Baseline Imager (ABI)), along with numerical model results, a land/sea mask, land cover, snow cover data, etc. The main advantage of GK2A_FDA is that it accounts for the different availability of satellite data based on the time of day (day/dawn/night) and the different backgrounds based on geographic location (land/coast/sea). In addition, we evaluated not only the detection skill (POD, FAR, Bias, and ETS) but also the stability (their standard deviations) of GK2A_FDA using ground-observed visibility data for the 23 fog cases.

The total average POD, FAR, Bias, and ETS for the 12 training (11 validation) cases were 0.80 (0.82), 0.37 (0.29), 1.28 (1.16), and 0.52 (0.59), respectively, with standard deviations of 0.15 (0.19), 0.13 (0.28), 0.38 (1.00), and 0.11 (0.21), respectively. Table 7 summarizes the validation results of previous fog detection studies that used various satellite and auxiliary data and were validated with ground observation data during the past five years (2014–2019). Because the areas analyzed, analysis periods, and satellite and auxiliary data differ from those used in this study, a direct comparison was difficult. However, considering the number of fog cases and the visibility meter data used for validation, the detection level of the fog detection algorithm developed in this study was similar or superior to that of the past algorithms. In the previous studies, all PODs were less than 0.7 and all FARs were greater than 0.31.
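For reference, the four indices can be computed from a 2 × 2 contingency table as in the sketch below. It uses the standard definitions of these scores for dichotomous forecasts. The function name `skill_scores` and the sample counts are hypothetical and are not taken from the study.

```python
def skill_scores(hits, misses, false_alarms, correct_negatives):
    """Return POD, FAR, Bias, and ETS from 2 x 2 contingency counts."""
    observed = hits + misses        # points where fog was observed
    detected = hits + false_alarms  # points where fog was detected
    total = observed + false_alarms + correct_negatives
    if observed == 0 or detected == 0:
        raise ValueError("need at least one observed and one detected fog point")
    # Hits expected by chance; ETS discounts this random agreement.
    hits_random = observed * detected / total
    ets_denominator = hits + misses + false_alarms - hits_random
    if ets_denominator == 0:
        raise ValueError("ETS is undefined for these counts")
    return {
        "POD": hits / observed,
        "FAR": false_alarms / detected,
        "Bias": detected / observed,
        "ETS": (hits - hits_random) / ets_denominator,
    }


# Hypothetical counts for illustration only (not from the study).
scores = skill_scores(hits=80, misses=20, false_alarms=40, correct_negatives=860)
for name, value in scores.items():
    print(f"{name}: {value:.2f}")
```

With these definitions, Bias equals POD / (1 − FAR) for a single contingency table, so a Bias above 1 indicates over-detection.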

Table 7.

Summary of the validation results using ground observation data of previous studies during the past five years (2014–2019).

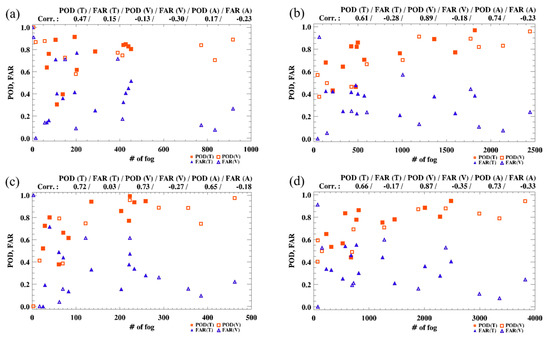

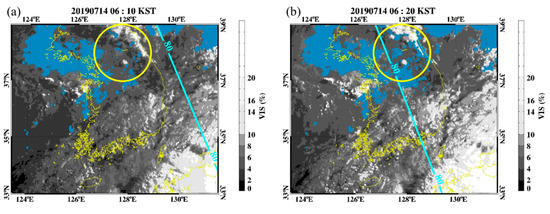

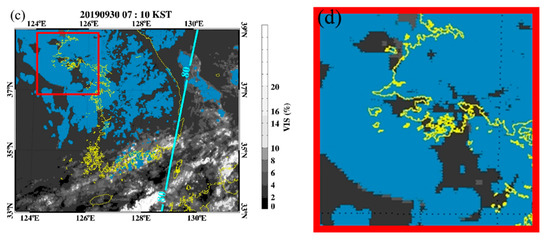

Although we tried to minimize the spatio-temporal discontinuities in the fog detection results, non-negligible discontinuities remained, as shown in Figure 13. They were found at the temporal boundary between dawn and daytime (Figure 13a,b) and at the spatial boundary along coastlines (Figure 13c,d). Compared to the fog detection result at dawn (Figure 13a), new fog was abruptly detected during daytime on the right side of the boundary line (Figure 13b). Along the coastline, many non-fog areas were surrounded by fog areas (Figure 13c,d). Considering the strong spatial continuity of sea fog, these blank areas can be regarded as a type of spatial discontinuity. We optimized the SZA threshold for separating dawn and daytime using various sensitivity tests; however, the discontinuities persisted in many cases. A complete elimination of spatio-temporal discontinuities was impossible because the channels used were fundamentally different. Despite these fundamental limitations, more studies should be carried out to detect fog continuously, regardless of time and geographic location.

Figure 13.

Sample images of spatio-temporal discontinuities obtained from (a) 06:10 KST to (b) 06:20 KST on 14 July 2019 and at (c) 07:10 KST on 30 September 2019. Bright sky blue lines indicate the SZA. (d) An enlarged picture of the red box area shown in (c).

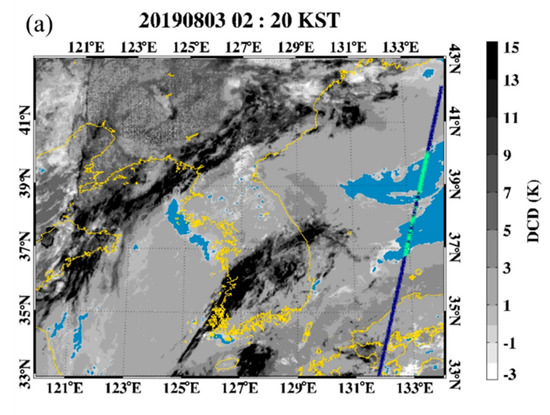

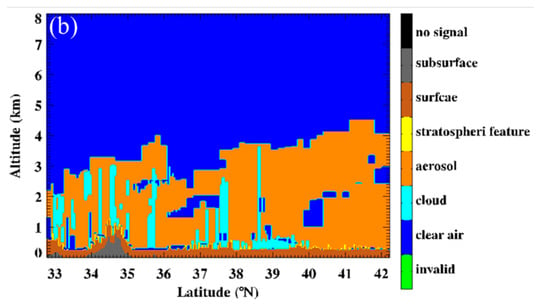

The detection skill of GK2A_FDA over the sea was not quantitatively evaluated in this study because of the limited validation data. In many studies, Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observation (CALIPSO) data have been used to validate fog detection over the sea [15,16,17,20,40]. Figure 14 shows a validation result for the sea fog detection of GK2A_FDA using Vertical Feature Mask (VFM) data produced from CALIPSO. The VFM data have the issue of incorrectly defining the sea fog layer as the surface (e.g., 37°N–40°N). As suggested by Wu et al. [40], if the backscattering of the layer defined as the surface is greater than 0.03, it is redefined as the sea fog layer. As sea fog, unlike land fog, lasts a long time, the GK2A data within ±20 min of the CALIPSO overpass time were used for validation. The validation with the VFM data showed that GK2A_FDA effectively detected the sea fog (POD: 0.80, FAR: 0.20, KSS: 0.60, and Bias: 1.00). Considering the advection fogs that frequently occur in the seas west and south of the Korean Peninsula, quantitative validation of the fog detection algorithm over the sea should be performed using the CALIPSO data and other data [15,32,41].
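The redefinition rule and the KSS can be sketched as follows. The 0.03 threshold is the value quoted from Wu et al. [40]; its unit is not given in the text and is therefore left unspecified here. The label strings, function names, and sample counts are hypothetical. The counts were chosen only because they reproduce the reported ratios; the actual counts along the CALIPSO track are not given in the text.

```python
SURFACE = "surface"
FOG = "fog"
BACKSCATTER_THRESHOLD = 0.03  # value quoted in the text; unit not stated there


def redefine_surface_as_fog(labels, backscatter, threshold=BACKSCATTER_THRESHOLD):
    """Relabel 'surface' layers with backscatter above the threshold as fog."""
    if len(labels) != len(backscatter):
        raise ValueError("labels and backscatter must have the same length")
    return [
        FOG if label == SURFACE and value > threshold else label
        for label, value in zip(labels, backscatter)
    ]


def kss(hits, misses, false_alarms, correct_negatives):
    """Hanssen-Kuiper skill score: POD minus probability of false detection."""
    observed = hits + misses
    not_observed = false_alarms + correct_negatives
    if observed == 0 or not_observed == 0:
        raise ValueError("KSS needs both fog and non-fog observations")
    return hits / observed - false_alarms / not_observed


# Hypothetical VFM profile along a track (illustration only).
relabeled = redefine_surface_as_fog(
    ["surface", "surface", "clear"], [0.05, 0.01, 0.20]
)

# Hypothetical counts that happen to give POD 0.80, FAR 0.20, and Bias 1.00.
kss_value = kss(hits=8, misses=2, false_alarms=2, correct_negatives=8)
print(relabeled, round(kss_value, 2))
```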

Figure 14.

Sample image of (a) the fog detection result for the sea fog at 02:00 KST on 03 August 2019. The navy blue line represents the CALIPSO track, and green points on the track indicate the fog pixels redefined from the VFM data. (b) VFM data of the track depicted in (a) over the sea.

In addition, for stable long-term fog detection, it is necessary to improve the level of fog detection over land during daytime and for weak or local fog. In particular, the quality of numerical models and background data should be improved for the accurate separation of fog from low-to-mid clouds.

5. Conclusions

Fog affects transportation due to low visibility and aggravates air pollutants. Thus, accurate detection and forecasting of fog are important for the safety of transportation and air quality management. We developed a decision tree type fog detection algorithm (GK2A_FDA) using the second geostationary satellite of South Korea, GK2A/AMI, and auxiliary data, such as numerical model output and land cover data. The ultimate goal of this study was to reduce the damage caused by fog through real-time fog detection using the high-resolution geostationary GK2A/AMI data. In fact, the fog detection algorithm developed in this study has been temporarily in operation since July 2019. The GK2A/AMI has 16 channels, with a 2 (0.5–1) km spatial resolution in infrared (visible) channels. It observes the full disk and the East Asian region every 10 and 2 min, respectively. The spatial resolution and temporal frequency of the surface temperature predicted by the numerical model operated by the KMA were 10 km and 1 h, respectively. The results of GK2A_FDA were validated using the visibility data from 280 stations in South Korea. We selected 23 fog cases (3312 scenes) that occurred across South Korea and used 1728 scenes to determine the threshold values and 1584 scenes to validate GK2A_FDA. As the spatio-temporal resolutions of the satellite data, model output, and visibility data differed, a spatio-temporal co-location process was applied so that the data sets could be used together.
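The scene counts are consistent with each case covering one full day at the 10 min full-disk interval. This is an inference from the numbers and is not stated in the text. The short check below makes the arithmetic explicit; the variable names are hypothetical.

```python
# Assumption (inferred, not stated in the text): each fog case spans one
# full day of scenes at the 10 min observation interval.
SCENES_PER_DAY = 24 * 60 // 10  # 144 scenes per case

training_scenes = 12 * SCENES_PER_DAY    # 12 training cases
validation_scenes = 11 * SCENES_PER_DAY  # 11 validation cases
total_scenes = training_scenes + validation_scenes

print(training_scenes, validation_scenes, total_scenes)
```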

Fog is a complicated phenomenon in terms of size and intensity. Additionally, AMI has 16 channels and the quality of auxiliary data (e.g., numerical model output) has significantly improved; thus, we used various combinations of test elements to improve fog detection. We developed GK2A_FDA as nine types of fog detection algorithms, according to the SZA (day, dawn, and night) and surface type (land, sea, and coast), considering the availability of satellite data during day and nighttime and the different background characteristics based on geographic location. As a result, the number of test steps was 6 (6) and 5 (4) for day and nighttime on land (sea), respectively. The main test elements used for day and night were the reflectance difference at 0.63 μm and the DCD between fog and other objects, respectively. The temperature difference between the top of the fog and the surface has been commonly used for separating fog from low-to-mid clouds. The threshold values were first set through a histogram analysis of the selected fog pixels and then optimized through sensitivity tests for every test element using the 12 training cases. The fog detection results had spatio-temporal discontinuities because GK2A_FDA was developed separately according to SZA and geographic location. Therefore, we included the fog detected at nighttime to minimize the discontinuity at dawn and calculated the weighted sum of the land and sea algorithms in the coastal area. In addition, we eliminated the falsely detected fog pixels appearing in snow or desert areas using the snow cover map and land cover data.
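The nine-way split and the coastal blending can be sketched as below. This is only an illustration under stated assumptions: the SZA limits passed in the usage lines (80° and 90°) are placeholder values and not the thresholds used in the study, the blending weight is hypothetical, and treating the land and sea algorithm outputs as numeric scores is also an assumption. All function and variable names are hypothetical.

```python
TIME_CLASSES = ("day", "dawn", "night")
SURFACE_TYPES = ("land", "sea", "coast")

# The nine algorithm variants: every combination of time class and surface.
ALL_VARIANTS = [(t, s) for t in TIME_CLASSES for s in SURFACE_TYPES]


def time_class(sza_deg, day_max_sza, night_min_sza):
    """Classify a pixel as day, dawn, or night from its solar zenith angle.

    The two limits are supplied by the caller; the text does not give them.
    """
    if day_max_sza >= night_min_sza:
        raise ValueError("day_max_sza must be smaller than night_min_sza")
    if sza_deg < day_max_sza:
        return "day"
    if sza_deg >= night_min_sza:
        return "night"
    return "dawn"


def select_variant(sza_deg, surface, day_max_sza, night_min_sza):
    """Return the (time class, surface type) pair identifying a variant."""
    if surface not in SURFACE_TYPES:
        raise ValueError(f"unknown surface type: {surface!r}")
    return (time_class(sza_deg, day_max_sza, night_min_sza), surface)


def coastal_blend(land_value, sea_value, land_weight):
    """Weighted sum of land and sea algorithm outputs for a coastal pixel."""
    if not 0.0 <= land_weight <= 1.0:
        raise ValueError("land_weight must be between 0 and 1")
    return land_weight * land_value + (1.0 - land_weight) * sea_value


# Usage with placeholder limits (80 and 90 degrees are illustration values).
variant = select_variant(85.0, "coast", day_max_sza=80.0, night_min_sza=90.0)
blended = coastal_blend(land_value=1.0, sea_value=0.0, land_weight=0.25)
print(variant, blended)
```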

The validation results of the 12 training and 11 validation cases showed that GK2A_FDA effectively detected the fog, irrespective of time and geographic location, in terms of accuracy and stability. The total average POD, FAR, Bias, and ETS for the training (validation) cases were 0.80 (0.82), 0.37 (0.29), 1.28 (1.16), and 0.52 (0.59), respectively. The SDs of the total averages of POD, FAR, Bias, and ETS for the training (validation) cases were 0.15 (0.19), 0.13 (0.28), 0.38 (1.00), and 0.11 (0.21), respectively. In general, the detection skill of GK2A_FDA slightly increased and its stability slightly decreased in the validation cases. These changes were mainly related to the fog area (variations in the number of fog pixels), as shown in Figure 11, and were slightly dependent on geographic location and time. In the training cases, the detection skill of GK2A_FDA was better on land (POD: 0.82, FAR: 0.37, and ETS: 0.54) than over the coastal area (POD: 0.72, FAR: 0.40, and ETS: 0.46). The detection levels during nighttime (POD: 0.80, FAR: 0.34, and ETS: 0.54) and dawn (POD: 0.85, FAR: 0.36, and ETS: 0.53) were better than that during daytime (POD: 0.77, FAR: 0.47, and ETS: 0.44). In addition, the detection skill of GK2A_FDA was the most stable at night in terms of the standard deviation of POD (0.16), FAR (0.10), Bias (0.28), and ETS (0.11). Further, the detection skill of GK2A_FDA for the validation cases was better on land (POD: 0.83, FAR: 0.28, Bias: 1.15, and ETS: 0.60) than over the coastal area (POD: 0.72, FAR: 0.43, Bias: 1.25, and ETS: 0.46), and better at night (POD: 0.83, FAR: 0.28, Bias: 1.16, and ETS: 0.60) than during the day (POD: 0.78, FAR: 0.30, Bias: 1.12, and ETS: 0.57), similar to the training cases.

For the fog detection algorithm developed in this study to be used in a forecast office (e.g., NMSC/KMA), it is necessary to evaluate not only fog cases but also non-fog cases (e.g., precipitation cases, clear cases). Therefore, we will continue to evaluate the performance of this fog detection algorithm using various fog and non-fog cases.

Author Contributions

Conceptualization, M.-S.S.; methodology, M.-S.S.; software, J.-H.H. and H.-Y.Y.; validation, J.-H.H.; formal analysis, J.-H.H., M.-S.S., and H.-Y.Y.; investigation, N.-Y.R.; resources, M.-S.S.; data curation, J.-H.H., N.-Y.R., and H.-Y.Y.; writing—original draft preparation, J.-H.H.; writing—review and editing, M.-S.S.; visualization, J.-H.H. and H.-Y.Y.; supervision, M.-S.S.; project administration, M.-S.S.; funding acquisition, M.-S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Korea Meteorological Administration Research and Development Program under Grant KMI2018-06510. This work was also supported by “Development of Scene Analysis & Surface Algorithms” project, funded by ETRI, which is a subproject of “Development of Geostationary Meteorological Satellite Ground Segment (NMSC-2019-01)” program funded by NMSC (National Meteorological Satellite Center) of KMA (Korea Meteorological Administration).

Acknowledgments

The authors would like to thank the anonymous reviewers for their insightful comments and helpful suggestions. We are also grateful to the National Meteorological Satellite Center of the Korea Meteorological Administration for providing vast amounts of satellite and numerical data.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Test Elements and Acronyms

Table A1.

Definition of each test element.

| Test Elements | Unit | Definition |
|---|---|---|
| DCD | K | BT3.8 − BT11.2 |
| ΔVIS | % | VIS0.6 − Vis_Comp |
| Vis_Comp | % | Minimum value composite of visible channel reflectance during 30 days |
| ΔFTs | K | BT11.2 − CSR_BT11 |
| LSD | K | Standard deviation of 3 × 3 pixels |
| NLSD | – | LSD/Average of 3 × 3 pixels |
| BTD_08_10 | K | BT8.7 − BT10.5 |
| BTD_10_12 | K | BT10.5 − BT12.3 |
| BTD_13_11 | K | BT13.3 − BT11.2 |
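The definitions in Table A1 translate directly into code. The sketch below is illustrative only: the function names and sample values are hypothetical, and the use of the population standard deviation for LSD is an assumption, since the table does not say whether the population or sample form is used.

```python
from statistics import mean, pstdev


def dcd(bt38, bt112):
    """Dual channel difference: BT3.8 - BT11.2 (K)."""
    return bt38 - bt112


def delta_fts(bt112, csr_bt11):
    """Difference between fog-top BT and clear-sky BT: BT11.2 - CSR_BT11 (K)."""
    return bt112 - csr_bt11


def delta_vis(vis06, vis_comp):
    """Reflectance minus the 30-day minimum composite: VIS0.6 - Vis_Comp (%)."""
    return vis06 - vis_comp


def _flatten_3x3(window):
    """Return the nine values of a 3 x 3 window as a flat list."""
    values = [value for row in window for value in row]
    if len(window) != 3 or len(values) != 9:
        raise ValueError("window must be 3 x 3")
    return values


def lsd(window):
    """Local standard deviation of a 3 x 3 window (population form assumed)."""
    return pstdev(_flatten_3x3(window))


def nlsd(window):
    """Normalized LSD: LSD divided by the mean of the 3 x 3 window."""
    values = _flatten_3x3(window)
    return pstdev(values) / mean(values)


# Hypothetical values for illustration only (not from the study).
window = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
print(dcd(280.0, 283.5), round(lsd(window), 3), round(nlsd(window), 3))
```

The remaining brightness temperature differences in the table (BTD_08_10, BTD_10_12, and BTD_13_11) follow the same subtraction pattern as `dcd`.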

Table A2.

List of acronyms.

| Acronyms | Description |
|---|---|
| ABI | Advanced Baseline Imager |
| AHI | Advanced Himawari Imager |
| AMI | Advanced Meteorological Imager |
| ASOS | Automated Surface Observing System |
| AWOS | Automated Weather Observing System |
| Bias | Bias Ratio |
| BT | Brightness Temperature |
| BTD | Brightness Temperature Difference |
| CALIPSO | Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observation |
| COMS | Communication, Ocean and Meteorological Satellite |
| Corr. | Correlation coefficients |
| CMDPS | COMS Meteorological Data Processing System |
| CSR | Clear Sky Radiance |
| CSR_BT11 | Clear sky radiance for brightness temperature at 11 µm |
| DCD | Dual Channel Difference |
| ETS | Equitable Threat Score |
| FAR | False Alarm Ratio |
| ΔFTs | Difference between the BT of the fog top and surface temperature |
| GK2A | GEO-KOMPSAT-2A |
| GK2A_FDA | Fog detection algorithm of GK2A |
| GOES | Geostationary Operational Environmental Satellite |
| IASI | Infrared Atmospheric Sounding Interferometer |
| IR | Infrared |
| KMA | Korea Meteorological Administration |
| KSS | Hanssen-Kuiper skill score |
| LSD | Local Standard Deviation |
| LSD_BT11.2 | LSD of Brightness Temperature at 11.2 μm |
| METAR | Meteorological Terminal Aviation Routine Weather Report |
| MTSAT | Multifunction Transport Satellite |
| NDSI | Normalized Difference Snow Index |
| NIR | Near Infrared |
| NLSD | Normalized LSD |
| NLSD_vis | NLSD of reflectance |
| NMSC | National Meteorological Satellite Center |
| NWP | Numerical Weather Prediction |
| POD | Probability Of Detection |
| RGB | Red-Green-Blue |
| RTTOV | Radiative Transfer of TOVS |
| SD | Standard Deviation |
| SEVIRI | Spinning Enhanced Visible and Infrared Imager |
| SYNOP | Surface Synoptic Observations |
| SZA | Solar Zenith Angle |
| UM | Unified Model |
| VFM | Vertical Feature Mask |
| VIS | Visible |
| ΔVIS | Difference between the reflectance of 0.64 μm (VIS0.6) and Vis_Comp |
| Vis_Comp | Minimum value composite of visible channel reflectance during 30 days |

References

1. Cermak, J. SOFOS—A New Satellite-Based Operational fog Observation Scheme. Ph.D. Thesis, Phillipps-University, Marburg, Germany, 2006.

2. Suh, M.S.; Lee, S.J.; Kim, S.H.; Han, J.H.; Seo, E.K. Development of Land fog Detection Algorithm based on the Optical and Textural Properties of Fog using COMS Data. Korean J. Remote Sens. 2017, 33, 359–375.

3. Han, J.H.; Suh, M.S.; Kim, S.H. Development of Day Fog Detection Algorithm Based on the Optical and Textural Characteristics Using Himawari-8 Data. Korean J. Remote Sens. 2019, 35, 117–136.

4. Bendix, J. A satellite-based climatology of fog and low-level stratus in Germany and adjacent areas. Atmos. Res. 2002, 64, 3–18.

5. Yi, L.; Thies, B.; Zhang, S.; Shi, X.; Bendix, J. Optical thickness and effective radius retrievals of low stratus and fog from MTSAT daytime data as a prerequisite for Yellow Sea fog detection. Remote Sens. 2015, 8, 8.

6. Ellrod, G.P. Advances in the detection and analysis of fog at night using GOES multispectral infrared imagery. Weather Forecast. 1995, 10, 606–619.

7. Jhun, J.G.; Lee, E.J.; Ryu, S.A.; Yoo, S.H. Characteristics of regional fog occurrence and its relation to concentration of air pollutants in South Korea. Atmosphere 1998, 23, 103–112.

8. Underwood, S.J.; Ellrod, G.P.; Kuhnert, A.L. A multiple-case analysis of nocturnal radiation-fog development in the central valley of California utilizing the GOES nighttime fog product. J. Appl. Meteorol. 2004, 43, 297–311.

9. Heo, K.Y.; Ha, K.J. Classification of synoptic pattern associated with coastal fog around the Korean peninsula. Atmosphere 2004, 40, 541–556.

10. Gultepe, I.; Müller, M.D.; Boybeyi, Z. A new visibility parameterization for warm-fog applications in numerical weather prediction models. J. Appl. Meteorol. Climatol. 2006, 45, 1469–1480.

11. Van der Velde, I.R.; Steeneveld, G.J.; Wichers Schreur, B.G.J.; Holtslag, A.A.M. Modeling and forecasting the onset and duration of severe radiation fog under frost conditions. Mon. Weather Rev. 2010, 138, 4237–4253.

12. Lee, H.D.; Ahn, J.B. Study on classification of fog type based on its generation mechanism and fog predictability using empirical method. Atmosphere 2013, 23, 103–112.

13. Musial, J.P.; Hüsler, F.; Sütterlin, M.; Neuhaus, C.; Wunderle, S. Daytime low stratiform cloud detection on AVHRR imagery. Remote Sens. 2014, 6, 5124–5150.

14. Lee, J.R.; Chung, C.Y.; Ou, M.L. Fog detection using geostationary satellite data: Temporally continuous algorithm. Asia Pac. J. Atmos. Sci. 2011, 47, 113–122.

15. Shin, D.; Kim, J.H. A new application of unsupervised learning to nighttime sea fog detection. Asia Pac. J. Atmos. Sci. 2018, 54, 527–544.

16. Shin, D.G.; Park, H.M.; Kim, J.H. Analysis of the Fog Detection Algorithm of DCD Method with SST and CALIPSO Data. Atmosphere 2013, 23, 471–483.

17. Ishida, H.; Miura, K.; Matsuda, T.; Ogawara, K.; Goto, A.; Matsuura, K.; Sato, Y.; Nakajima, T.Y. Scheme for detection of low clouds from geostationary weather satellite imagery. Atmos. Res. 2014, 143, 250–264.

18. Egli, S.; Thies, B.; Bendix, J. A hybrid approach for fog retrieval based on a combination of satellite and ground truth data. Remote Sens. 2018, 10, 628.

19. Eyre, J.R.; Brownscombe, J.L.; Allam, R.J. Detection of fog at night using advanced very high resolution radiometer (AVHRR) imagery. Meteorol. Mag. 1984, 113, 266–271.

20. Kim, S.H.; Suh, M.S.; Han, J.H. Development of Fog Detection Algorithm during Nighttime Using Himawari-8/AHI Satellite and Ground Observation Data. Asia Pac. J. Atmos. Sci. 2019, 55, 337–350.

21. Cermak, J. Fog and low cloud frequency and properties from active-sensor satellite data. Remote Sens. 2018, 10, 1209.

22. Ellrod, G.P.; Gultepe, I. Inferring low cloud base heights at night for aviation using satellite infrared and surface temperature data. Pure Appl. Geophys. 2007, 164, 1193–1205.

23. Park, H.M.; Kim, J.H. Detection of sea fog by combining MTSAT infrared and AMSR microwave measurements around the Korean Peninsula. Atmosphere 2012, 22, 163–174.

24. Cermak, J.; Bendix, J. A novel approach to fog/low stratus detection using Meteosat 8 data. Atmos. Res. 2008, 87, 279–292.

25. Jeon, J.Y.; Kim, S.H.; Yang, C.S. Fundamental research on spring season daytime sea fog detection using MODIS in the yellow sea. Korean J. Remote Sens. 2016, 32, 339–351.

26. Choi, Y.S.; Ho, C.H. Earth and environmental remote sensing community in South Korea: A review. Remote Sens. Appl. Soc. Environ. 2015, 2, 66–76.

27. Chung, S.R.; Ahn, M.H.; Han, K.S.; Lee, K.T.; Shin, D.B. Meteorological Products of Geo-KOMPSAT 2A (GK2A) Satellite. Asia Pac. J. Atmos. Sci. 2020, 56, 185.

28. EUMETSAT. Best Practices for RGB Compositing of Multi-Spectral Imagery; User Service Division, EUMETSAT: Darmstadt, Germany, 2009; p. 8.

29. Cermak, J. Low clouds and fog along the South-Western African coast—Satellite-based retrieval and spatial patterns. Atmos. Res. 2012, 116, 15–21.

30. NMSC. Cloud Mask Algorithm Theoretical Basis Document. Available online: http://nmsc.kma.go.kr/homepage/html/base/cmm/selectPage.do?page=static.edu.atbdGk2a (accessed on 2 July 2020).

31. King, M.D.; Kaufman, Y.J.; Menzel, W.P.; Tanre, D. Remote sensing of cloud, aerosol, and water vapor properties from the moderate resolution imaging spectrometer(MODIS). IEEE Trans. Geosci. Remote Sens. 1992, 30, 2–27.

32. Baum, B.A.; Platnick, S. Introduction to MODIS cloud products. In Earth Science Satellite Remote Sensing, 1st ed.; Qu, J.J., Gao, W., Kafatos, M., Murphy, R.E., Salomonson, V.V., Eds.; Springer: Berlin/Heidelberg, 2006; Volume 1, pp. 74–91.

33. Lee, H.K.; Suh, M.S. Objective Classification of Fog Type and Analysis of Fog Characteristics Using Visibility Meter and Satellite Observation Data over South Korea. Atmosphere 2019, 29, 639–658.

34. Andersen, H.; Cermak, J. First fully diurnal fog and low cloud satellite detection reveals life cycle in the Namib. Atmos. Meas. Tech. 2018, 11.

35. Dong, C. Remote sensing, hydrological modeling and in situ observations in snow cover research: A review. J. Hydrol. 2018, 561, 573–583.

36. Schreiner, A.J.; Ackerman, S.A.; Baum, B.A.; Heidinger, A.K. A multispectral technique for detecting low-level cloudiness near sunrise. J. Atmos. Ocean Technol. 2007, 24, 1800–1810.

37. Lefran, D. A One-Year Geostationary Satellite-Derived Fog Climatology for Florida. Master’s Thesis, Florida State University, Tallahassee, FL, USA, 2015.

38. Nilo, S.T.; Romano, F.; Cermak, J.; Cimini, D.; Ricciardelli, E.; Cersosimo, A.; Paola, F.D.; Gallucci, D.; Gentile, S.; Geraldi, E.; et al. Fog detection based on meteosat second generation-spinning enhanced visible and infrared imager high resolution visible channel. Remote Sens. 2018, 10, 541.

39. Leppelt, T.; Asmus, J.; Hugershofer, K. Preliminary studies of MTG potential in satellite based fog and low cloud detection. In Proceedings of the 2018 EUMETSAT Meteorological Satellite Conference, Tallinn, Estonia, 17–21 September 2018.

40. Wu, D.; Lu, B.; Zhang, T.; Yan, F. A method of detecting sea fogs using CALIOP data and its application to improve MODIS-based sea fog detection. J. Quant. Spectrosc. Radiat. Transf. 2015, 153, 88–94.

41. Kang, T.H.; Suh, M.S. Detailed Characteristics of Fog Occurrence in South Korea by Geographic Location and Season—Based on the Recent Three Years (2016–2018) Visibility Data. J. Clim. Res. 2019, 14, 221–244.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).