1. Introduction

Accelerating climate and human changes have significant influences on hydrological systems, notably on surface water dynamics [1]. Accurate mapping and monitoring of lakes, reservoirs and wetlands is essential to understand inundation patterns, water availability for irrigation, domestic use or hydropower, as well as ecosystem health. Across large wetlands and multiple dispersed water bodies, remote sensing provides growing opportunities to monitor surface water variations, which can be difficult to capture by localised hydrological monitoring or modelling [2]. The latter notably face stark difficulties in representing flooded areas due to data scarcity and to inaccuracies in global digital elevation models, which struggle to represent the flat yet complex topography of large floodplains [3].

In recent years, several works have harnessed the growing number of free images with high spatial and temporal resolution from passive and active sensors to map and monitor small water bodies [4,5,6,7], lakes, floodplains and wetlands [8,9,10,11,12,13,14]. In parallel, numerous surface water databases have been developed at the global and regional scale. The Moderate Resolution Imaging Spectroradiometer (MODIS), with up to twice daily observations from the sensors aboard the Terra and Aqua satellites, has notably been used in several land cover applications. Carroll et al. [15] produced a global inventory of surface water for the year 2000 using MODIS and Shuttle Radar Topography Mission (SRTM) elevation data, updated by the 250 m Global Water Pack produced by Klein et al. [16]. Khandelwal et al. [17] developed an approach for global eight-day monitoring of surface water extent using 500 m MODIS imagery, while D’Andrimont and Defourny [18] used twice daily observations to characterise surface water at a 10 day time step over 2004–2010 across Africa.

The proliferation of higher resolution sensors, notably the Landsat and Sentinel missions, has led global-scale analyses to move towards moderate resolution [19]. Feng et al. [20] and Verpoorter et al. [21], for instance, produced 30 m inland water body datasets for the year 2000 based on Landsat imagery. Yamazaki et al. [22] developed the Global 3 arc-second (90 m) Water Body Map (G3WBM) based on Landsat multi-temporal imagery and the Global Land Survey (GLS) database. Using the full catalogue of Landsat imagery, Pekel et al. [23] developed the Global Surface Water (GSW) dataset, harnessing the capacity of Google Earth Engine (GEE) [24] to produce not only a global water map, but also a database of water dynamics from 1984 to 2019 at a 30 m spatial resolution and a monthly time step. These data can notably be combined with satellite altimetry (e.g., DAHITI [25]) to produce global lake and reservoir volume estimates. Donchyts et al. [26] produced a similar tool (Deltares Aqua Monitor) based on Landsat imagery at a 30 m resolution, while Yang et al. [27] recently used Level 1C Sentinel-2 imagery in GEE to map surface water dynamics across the whole of France.

Despite the vast opportunities provided by these global datasets, research has also shown their limitations in specific contexts. Optical sensors such as those aboard Landsat satellites are affected by cloud cover, leading to an incomplete representation of seasonal wetlands and inundation patterns [28]. To overcome these limitations, Yao et al. [29] used Landsat imagery since 1992 and enhanced cloud corrections to increase the number of available observations and create a long-term, high-frequency time series. Based on multiple sensors, including active sensors, a global database of surface water extent over 12 years (Global Inundation Extent from Multi-Satellites, GIEMS) was developed at a 0.25° (around 27 km) resolution, subsequently downscaled to 500 m based on ancillary data [30,31,32]. Combining optical and radar imagery can notably improve observations during the rainy season, when clouds obstruct the reflectance values of passive sensors [33]. Similarly, a growing number of works seek to combine the unparalleled long-term observations from Landsat (launched in 1972) with the recent advances of Sentinel-2 imagery [34,35], and NASA has distributed Harmonized Landsat and Sentinel-2 surface reflectance datasets at a 30 m resolution since 2013 [36]. However, these approaches notably suffer from the presence of vegetation within the pixel, which alters the spectral signature. Methods are indeed calibrated and designed to classify open water bodies, leading to omission errors on smaller water bodies, floodplains and wetlands containing large areas of flooded vegetation [22,23,28,37,38].

In mixed water environments such as floodplains, which concentrate meanders and shallow water basins and where temporary flood patterns require high image availability, novel approaches that build upon multiple sources of imagery are necessary [39,40,41]. Optimal approaches to characterise long-term surface water extent must seek to combine or fuse the observations from multiple sensors [28,42]. The large-scale geoprocessing capacities made available through Google Earth Engine have vastly increased the possibilities to exploit multiple imagery sources across multiple locations. However, combining and fusing satellite sources requires understanding the relative benefits of these observations, including in long-term studies, to guide their selection and combination [43,44]. The need for consistent long-term datasets to monitor land use also introduces further constraints, as data must be classified and indices thresholded in automated yet consistent ways [42,45,46], and the accuracy of these methods must be assessed and optimised against ground truth data.

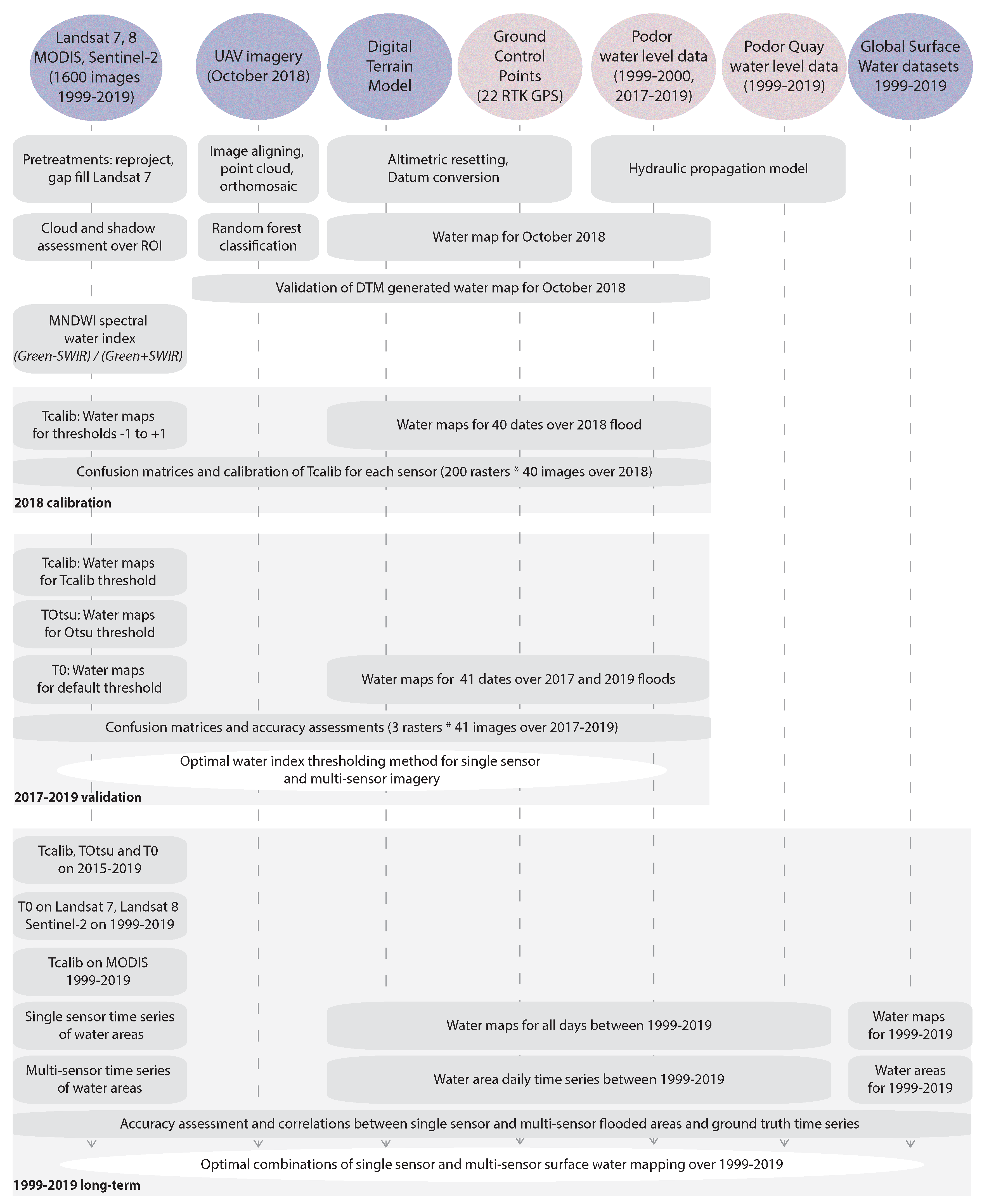

This paper seeks to evaluate and compare the relative benefits of combining multiple imagery sources to monitor surface water areas in floodplains, which are characterised by temporary inundation and heterogeneous water environments. Extensive, high accuracy field monitoring of surface water over 1999–2019 in the Senegal River floodplain is used to quantify the capacity and accuracy of four major sources of Earth observation to monitor long-term surface water variations. Three thresholding methods are compared to improve the classification of mixed waters with Landsat 7, Landsat 8, Sentinel-2 and MODIS, before all 1600 observations over 1999–2019 are combined to evaluate the skill of single-sensor and multi-sensor combinations and to guide the development of long-term studies of large floodplains and wetlands. The possibilities offered within Google Earth Engine are explored in order to reduce download and processing times and to make the approach and findings easy to replicate across the entire floodplains of the world's large rivers.

4. Discussion

The rising availability of remote sensing imagery provides increased opportunities for surface water monitoring. Multi-sensor approaches are the future direction [42], and this research provides essential information on the relative benefits of combining sensors over different periods of the past twenty years (1999–2019). Numerous multi-sensor approaches have been developed to combine the observations from a virtual constellation of satellites and provide greater temporal resolution, notably in cloud-affected settings and periods. Whitcraft et al. [19] and Claverie et al. [36] notably argued for the need to combine the Landsat 7, Landsat 8, Sentinel-2A and Sentinel-2B satellites to take full advantage of the increasing spatial resolution (up to 10 m on certain S2 bands). However, our research shows that for earlier years, coarse resolution MODIS imagery with a high revisit frequency remains essential, even for monitoring small (19 km²) water bodies, and should not be overlooked in favour of higher resolution sensors.
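The gain in temporal resolution from a virtual constellation can be illustrated with a minimal sketch that pools the acquisition dates of several sensors and computes the mean interval between consecutive observations. The idealised calendars below (16-day repeats for Landsat 7 and Landsat 8, offset by 8 days) and the helper names are assumptions for illustration only; actual per-site calendars also depend on orbit overlap, sensor lifetimes and cloud cover.

```python
from datetime import date, timedelta


def mean_revisit_days(*date_lists):
    """Mean gap in days between consecutive distinct acquisition dates
    after pooling the dates of one or more sensors."""
    merged = sorted({d for dates in date_lists for d in dates})
    if len(merged) < 2:
        raise ValueError("at least two distinct acquisition dates are needed")
    gaps = [(later - earlier).days for earlier, later in zip(merged, merged[1:])]
    return sum(gaps) / len(gaps)


def regular_dates(start, step_days, count):
    """Hypothetical idealised calendar: `count` acquisitions every `step_days`."""
    return [start + timedelta(days=step_days * i) for i in range(count)]


# Idealised one-year calendars (assumption): Landsat 8 offset by 8 days from Landsat 7.
landsat7 = regular_dates(date(2014, 1, 1), 16, 23)
landsat8 = regular_dates(date(2014, 1, 9), 16, 23)

print(mean_revisit_days(landsat7))            # 16.0 (single sensor)
print(mean_revisit_days(landsat7, landsat8))  # 8.0 (virtual constellation)
```

Pooling removes duplicate dates, so same-day acquisitions from two sensors count once; cloud-masked scenes would be dropped from each list before pooling.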

Previous research notably showed that MODIS was suited to the study of hydrological systems larger than 10,000 km² [8]. Khandelwal et al. [17], using a supervised classification algorithm based on Support Vector Machines (SVMs), showed that MODIS imagery could be used to study water trends on large lakes ranging between 240 km² and 5380 km². Our results show that site-specific thresholds on MNDWI can be used to monitor daily long-term variations of flood dynamics in systems under 19 km² and reduce the RMSE by 50% compared to Otsu segmentation. Field-calibrated thresholds are shown to be most suited to MODIS, forcing a lower threshold that takes into account the presence of shallow waters and flooded vegetation in the pixels, which supports results on other wetlands [12].
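The thresholding step can be sketched on a handful of pixels. MNDWI is computed as (Green - SWIR) / (Green + SWIR), pixels above a threshold are counted as water, and the count is converted to an area using the pixel footprint. The reflectances are invented, and the threshold of -0.2 below is a hypothetical stand-in for a field-calibrated MODIS threshold, not the value calibrated in this study. With a nominal 500 m MODIS pixel (0.25 km²), a 19 km² system spans only about 76 pixels, so each mixed pixel assigned to the wrong class shifts the estimated area by 0.25 km².

```python
import math


def water_area_km2(green, swir, threshold=0.0, pixel_area_km2=0.25):
    """Count pixels with MNDWI above `threshold` and convert to km2.

    MNDWI = (green - swir) / (green + swir). Pixels whose reflectances sum
    to zero are treated as no data and skipped. The default pixel area is
    the nominal 500 m x 500 m MODIS footprint.
    """
    n_water = 0
    for g, s in zip(green, swir):
        if g + s == 0:
            continue
        if (g - s) / (g + s) > threshold:
            n_water += 1
    return n_water * pixel_area_km2


def rmse(estimated, observed):
    """Root mean square error between estimated and field-observed areas."""
    if len(estimated) != len(observed) or not observed:
        raise ValueError("series must be non-empty and of equal length")
    squared = sum((e - o) ** 2 for e, o in zip(estimated, observed))
    return math.sqrt(squared / len(observed))


# Invented reflectances: open water, flooded vegetation, dry soil, shallow water.
green = [0.08, 0.07, 0.10, 0.06]
swir = [0.02, 0.09, 0.25, 0.05]

area_default = water_area_km2(green, swir, threshold=0.0)   # default threshold
area_lowered = water_area_km2(green, swir, threshold=-0.2)  # hypothetical calibrated value
print(area_default, area_lowered)  # 0.5 0.75
```

Lowering the threshold admits the flooded-vegetation pixel (MNDWI of about -0.125) as water. One way to calibrate such a threshold would be to pick the value that minimises `rmse` between mapped and field-observed areas over many dates.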

Global Surface Water datasets [

23] are often referred to as the best attempt to provide water maps at high resolution [

42] and many global datasets are developed solely on Landsat and Sentinel archives [

22,

23,

26,

36]. The results demonstrate the clear limitations before 2013 of GSW datasets built on one image every 30 days, to monitor flood dynamics in floodplains. These are notably insufficient to reproduce surface water dynamics in the smallest (<1 km

), temporary water bodies with flash floods [

7,

82,

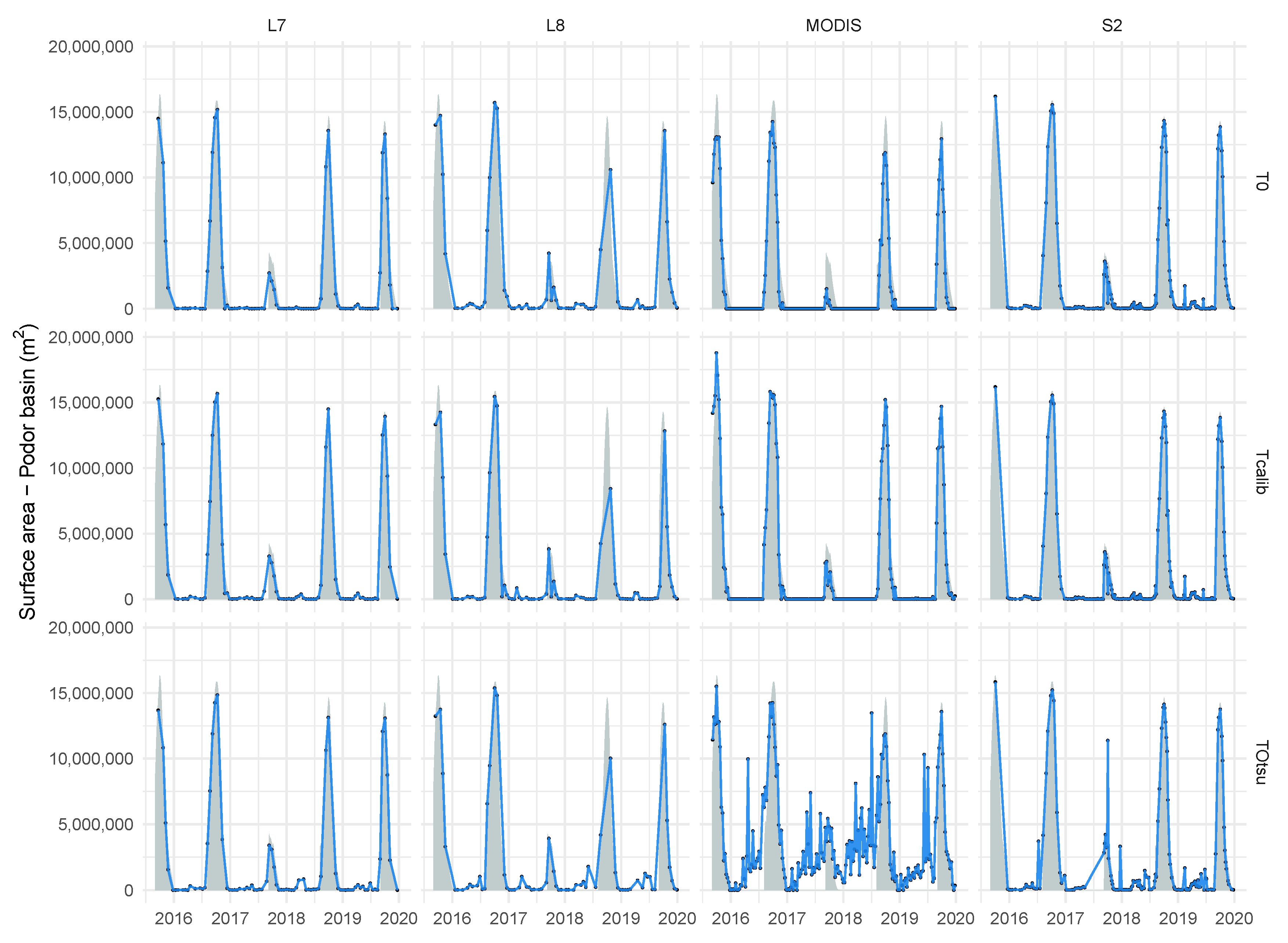

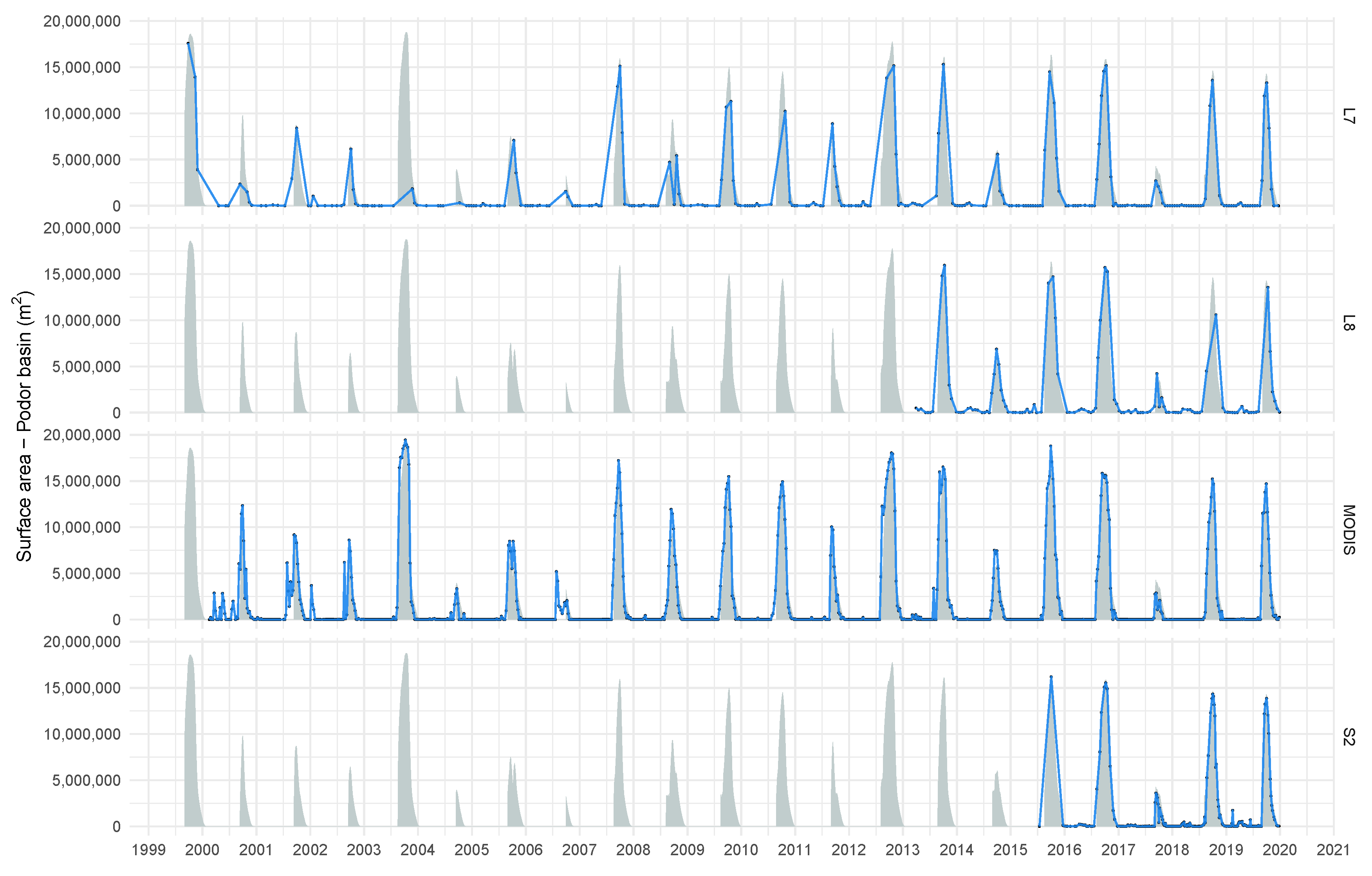

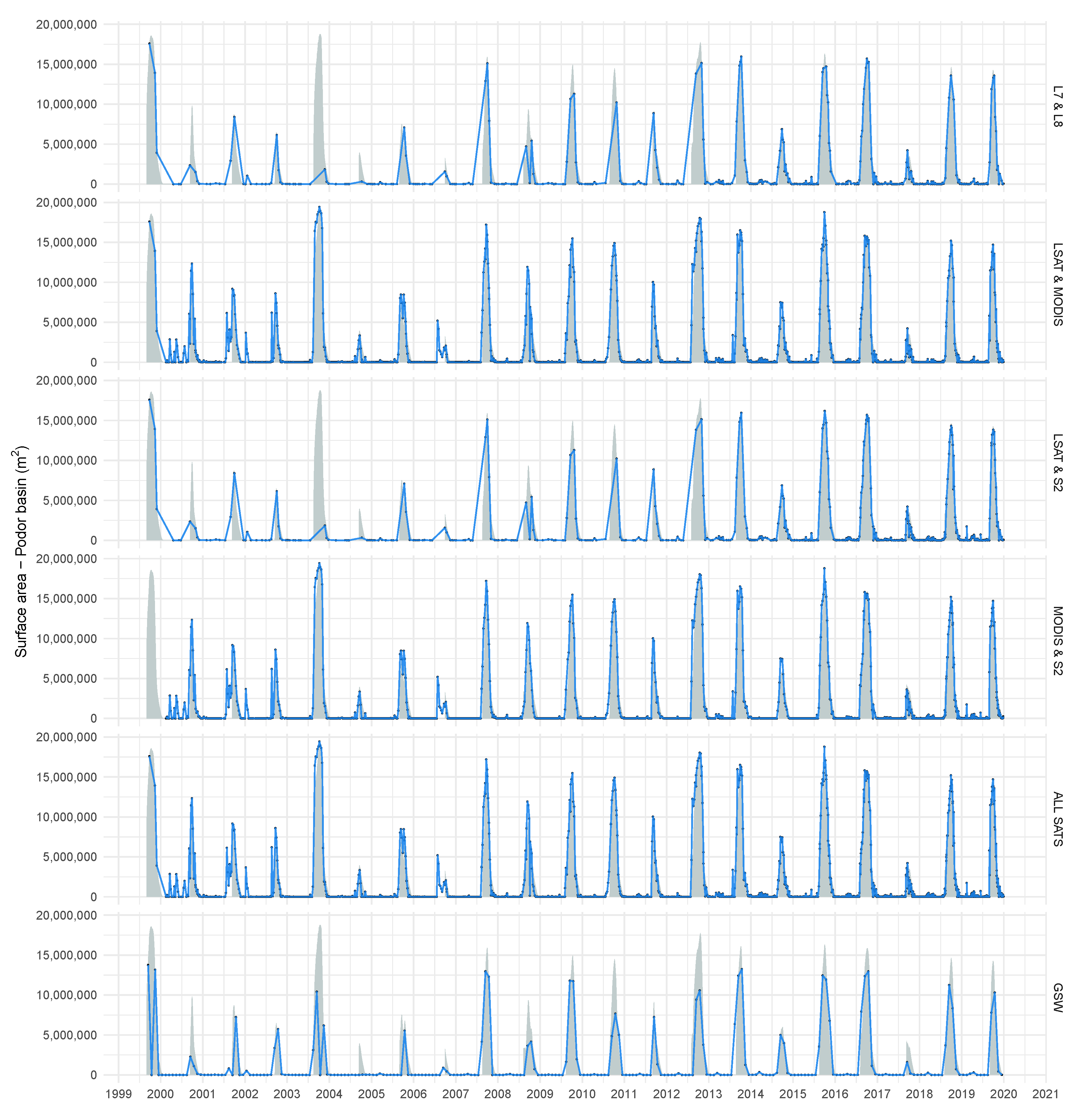

83] and are also insufficient in floodplains with slow flood dynamics. Using the full Landsat archive provides observations every 24 days on average over 1999–2019, but the benefits of integrating additional data sources remain indisputable, e.g., to capture flood peaks in 2003 and 2004 missed here by Landsat. Despite cloud presence during the flood rise, optical imagery is shown here to be sufficient to monitor surface water variations. Combining or fusing optical imagery with active radar imagery such as Sentinel-1 may be relevant in areas or periods where cloud presence is more frequent and problematic [

84], notably during the monsoon season at tropical latitudes. To capture the benefits of both Landsat spatial resolution and MODIS temporal resolution, the integration of data fusion methods such as the ESTARFM algorithm [

85,

86] within GEE has the potential to raise performance further. Similarly, when focussing on smaller hydrological objects or on low water periods, pansharpening and subpixel approaches [

42] may provide benefits and should be supported by the integration of the adequate panchromatic bands and algorithms in the GEE platform.
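Why a sparse revisit can miss a flood peak, as observed here for 2003 and 2004, can be shown with a synthetic hydrograph. The sketch below assumes a hypothetical symmetric flood peaking at 10 km² on day 50 and ignores clouds; it compares the peak area seen with a 24-day sampling, similar to the average Landsat revisit reported above, to that seen when a denser, MODIS-like 4-day sampling is added. The function names are illustrative only.

```python
def triangular_flood(peak_km2, peak_day, n_days):
    """Hypothetical flood: linear rise to `peak_day`, then linear recession."""
    return [peak_km2 * max(0.0, 1 - abs(d - peak_day) / peak_day) for d in range(n_days)]


def peak_capture_error(daily_area, sample_days):
    """Relative underestimation of the flood peak when the daily series is
    only observed on `sample_days` (day indices into `daily_area`)."""
    true_peak = max(daily_area)
    observed = [daily_area[d] for d in sample_days if 0 <= d < len(daily_area)]
    if not observed:
        raise ValueError("no acquisition falls within the series")
    return (true_peak - max(observed)) / true_peak


flood = triangular_flood(peak_km2=10.0, peak_day=50, n_days=101)
landsat_like = range(10, 101, 24)                       # days 10, 34, 58, 82
combined = set(landsat_like) | set(range(0, 101, 4))    # add a 4-day sampling

print(peak_capture_error(flood, landsat_like))  # about 0.16: peak underestimated by 16%
print(peak_capture_error(flood, combined))      # about 0.04
```

Real floods are neither symmetric nor cloud-free, so this only shows the mechanism: the denser the pooled sampling around the crest, the smaller the peak underestimation.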

The full MODIS and Landsat surface reflectance archives have been integrated into Google Earth Engine, and access to these cloud geoprocessing capacities greatly reduces download and processing times here. Level 2 surface reflectance Sentinel-2 imagery for our region of interest has, however, only been available since 16 December 2018, and the integration of the full Level 2 archive within GEE would be a welcome addition for the academic community. Results based on Level 1C imagery nevertheless show good stability and consistency between sensors, supporting similar studies using digital numbers and top-of-atmosphere reflectance [27,64,87]. Undetected clouds notably cause problems with the Otsu algorithm on Sentinel-2 imagery here. These are less prevalent with Landsat imagery, which benefits from cloud masks produced by the improved CFMask algorithm. Considering the relative weaknesses of the default cloud algorithms for MODIS and Sentinel-2 compared to CFMask, the integration within GEE of improved cloud masks or efficient cloud detection algorithms should be investigated to assess the potential improvements. Baetens et al. [88] showed that the Fmask or MAJA algorithms could improve further on the Sen2Cor approach employed by ESA. Nevertheless, optically thin clouds will remain challenging to identify and may be omitted by CFMask, including on Landsat imagery.
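Where cloud detection results are distributed as a quality band, masking reduces to testing individual bits. The sketch below assumes the bit layout of the Landsat Collection 2 Level-2 QA_PIXEL band (bit 1 dilated cloud, bit 3 cloud, bit 4 cloud shadow, bit 6 clear) and of the Sentinel-2 Level-1C QA60 band (bit 10 opaque clouds, bit 11 cirrus). Landsat Collection 1 products used a different layout, so the positions should be checked against the product guide before reuse, and thin clouds that the upstream algorithm fails to flag will pass through any such mask.

```python
# Assumed bit positions (verify against the product documentation in use).
LANDSAT_C2_QA_BITS = {"dilated_cloud": 1, "cloud": 3, "cloud_shadow": 4}
S2_QA60_BITS = {"opaque_cloud": 10, "cirrus": 11}


def bit_is_set(value, bit):
    """True if bit number `bit` (0 = least significant) is set in `value`."""
    return ((value >> bit) & 1) == 1


def landsat_c2_clear(qa_pixel):
    """Keep a Landsat pixel unless the QA band flags cloud, dilated cloud or shadow."""
    return not any(bit_is_set(qa_pixel, b) for b in LANDSAT_C2_QA_BITS.values())


def s2_qa60_clear(qa60):
    """Keep a Sentinel-2 Level-1C pixel unless QA60 flags opaque cloud or cirrus."""
    return not any(bit_is_set(qa60, b) for b in S2_QA60_BITS.values())


# Example QA values: bit 6 alone marks a clear pixel; 1 << 3 marks cloud.
print(landsat_c2_clear(1 << 6), landsat_c2_clear(1 << 3))  # True False
print(s2_qa60_clear(0), s2_qa60_clear(1 << 10))           # True False
```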

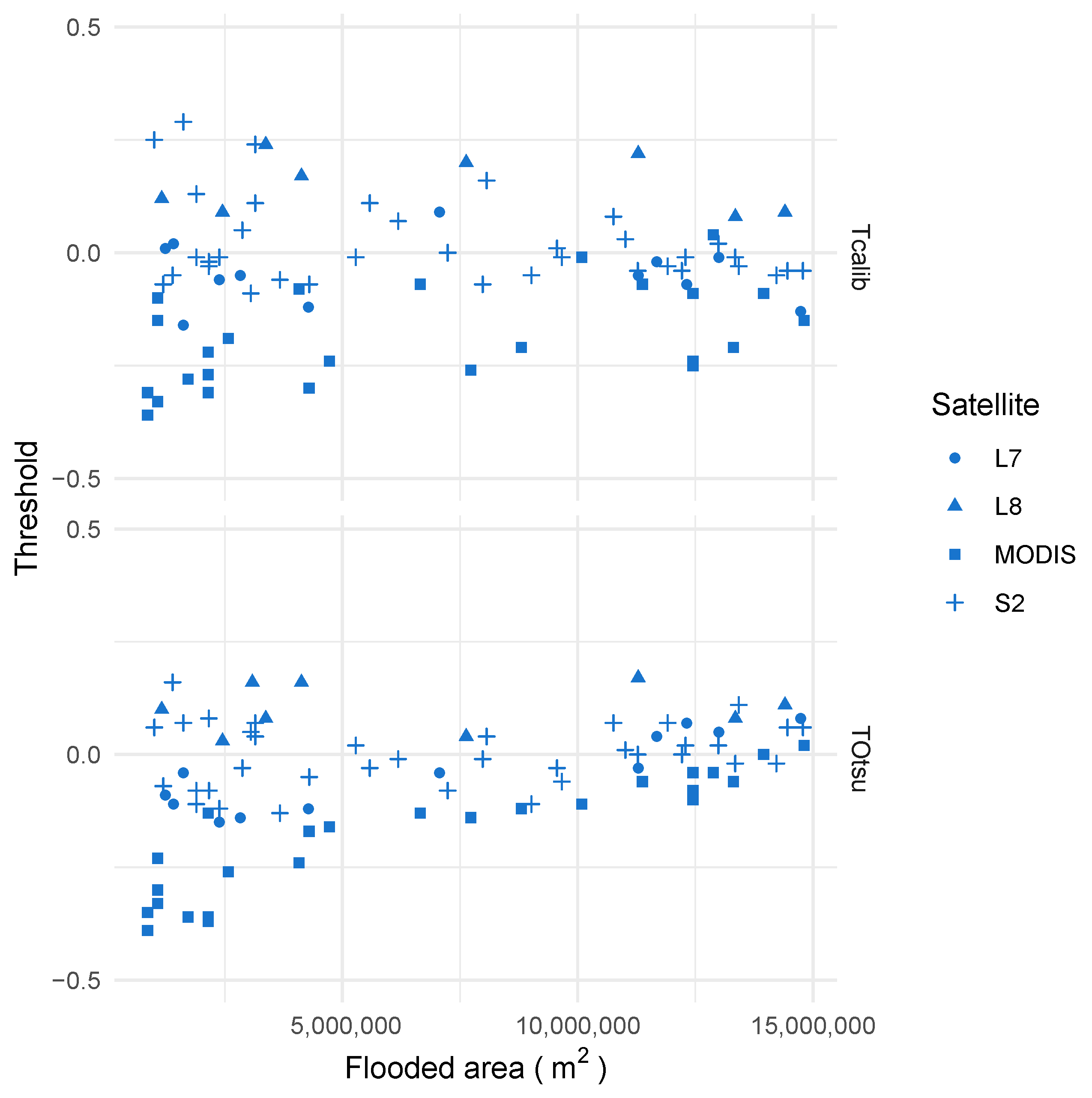

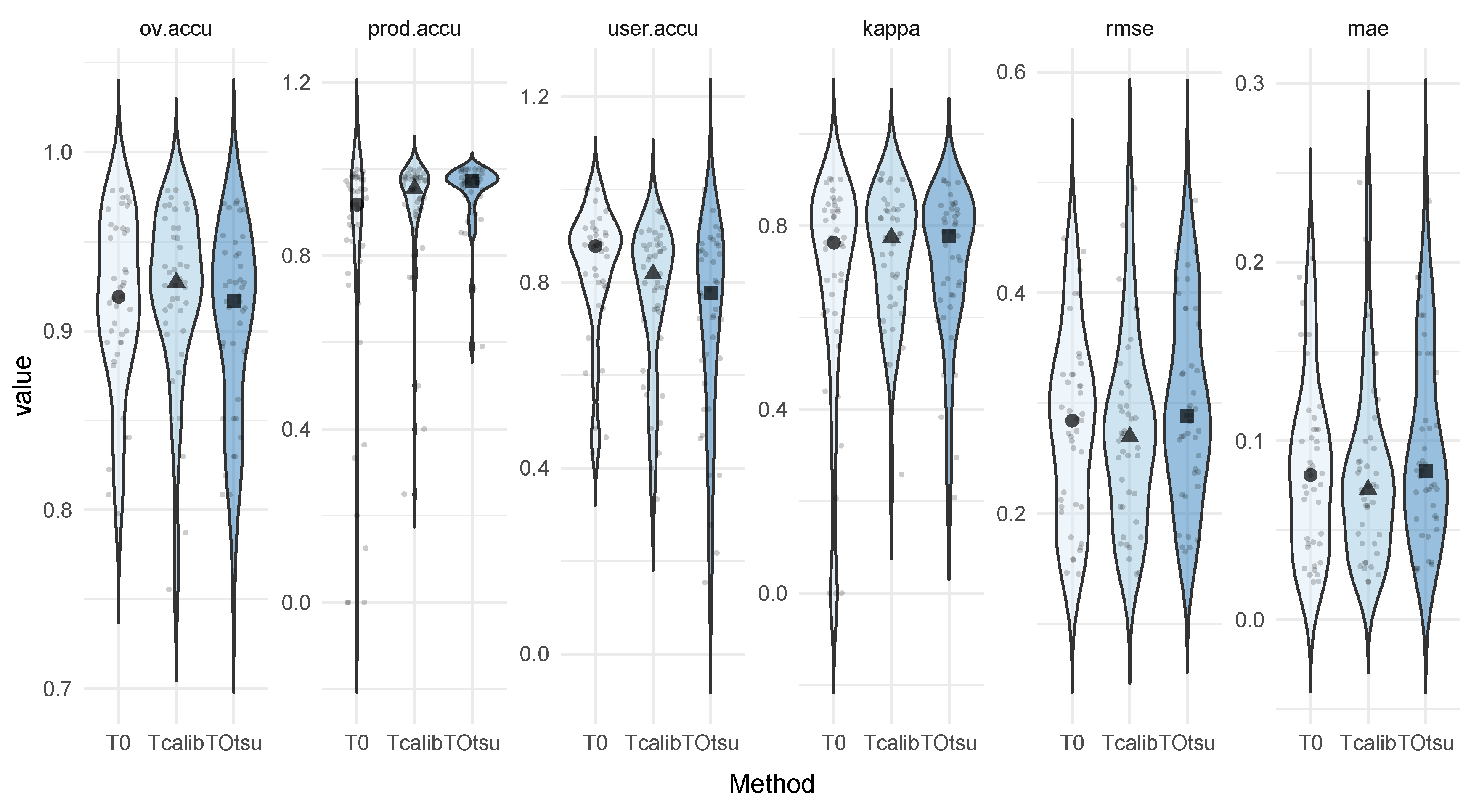

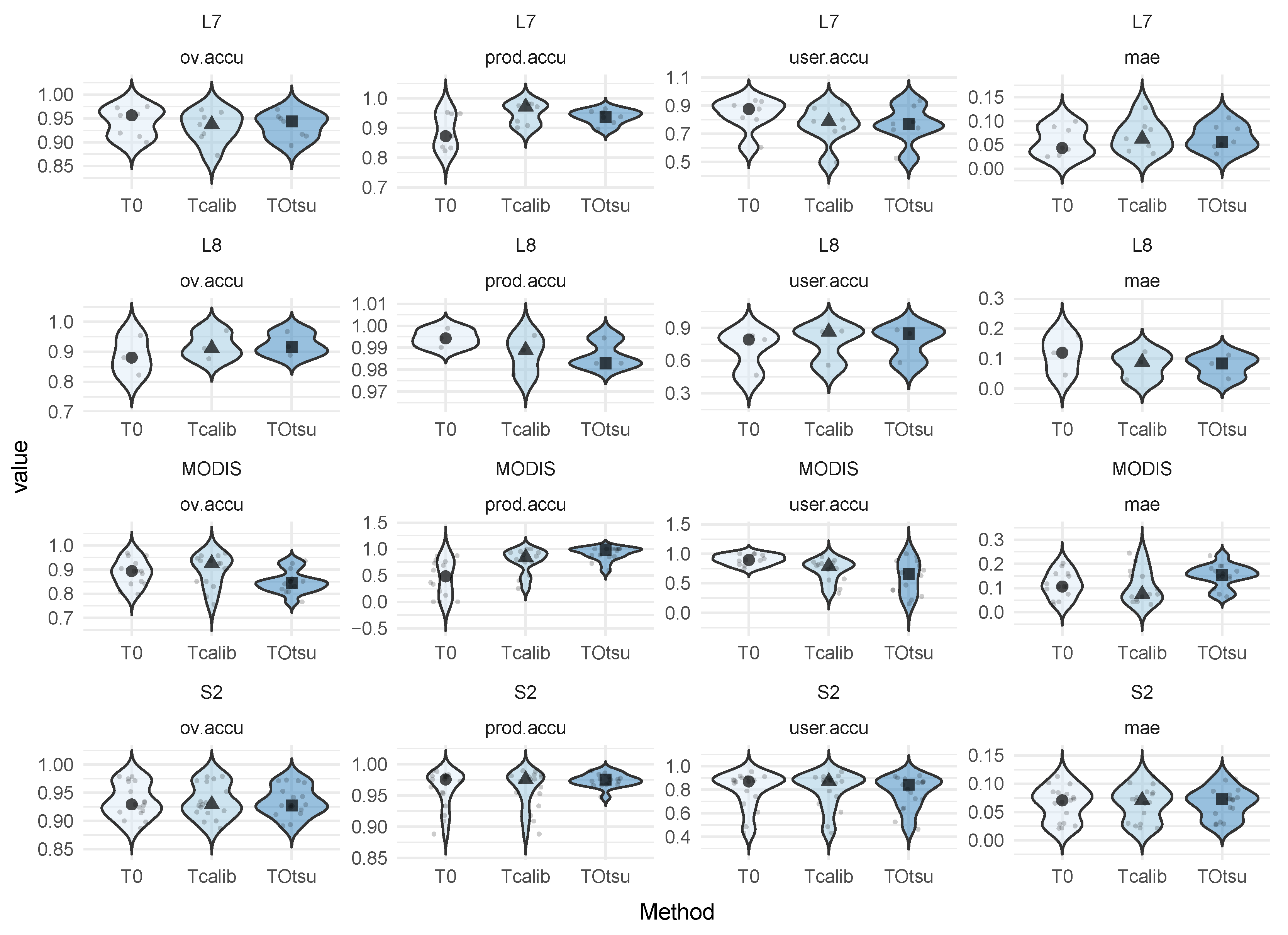

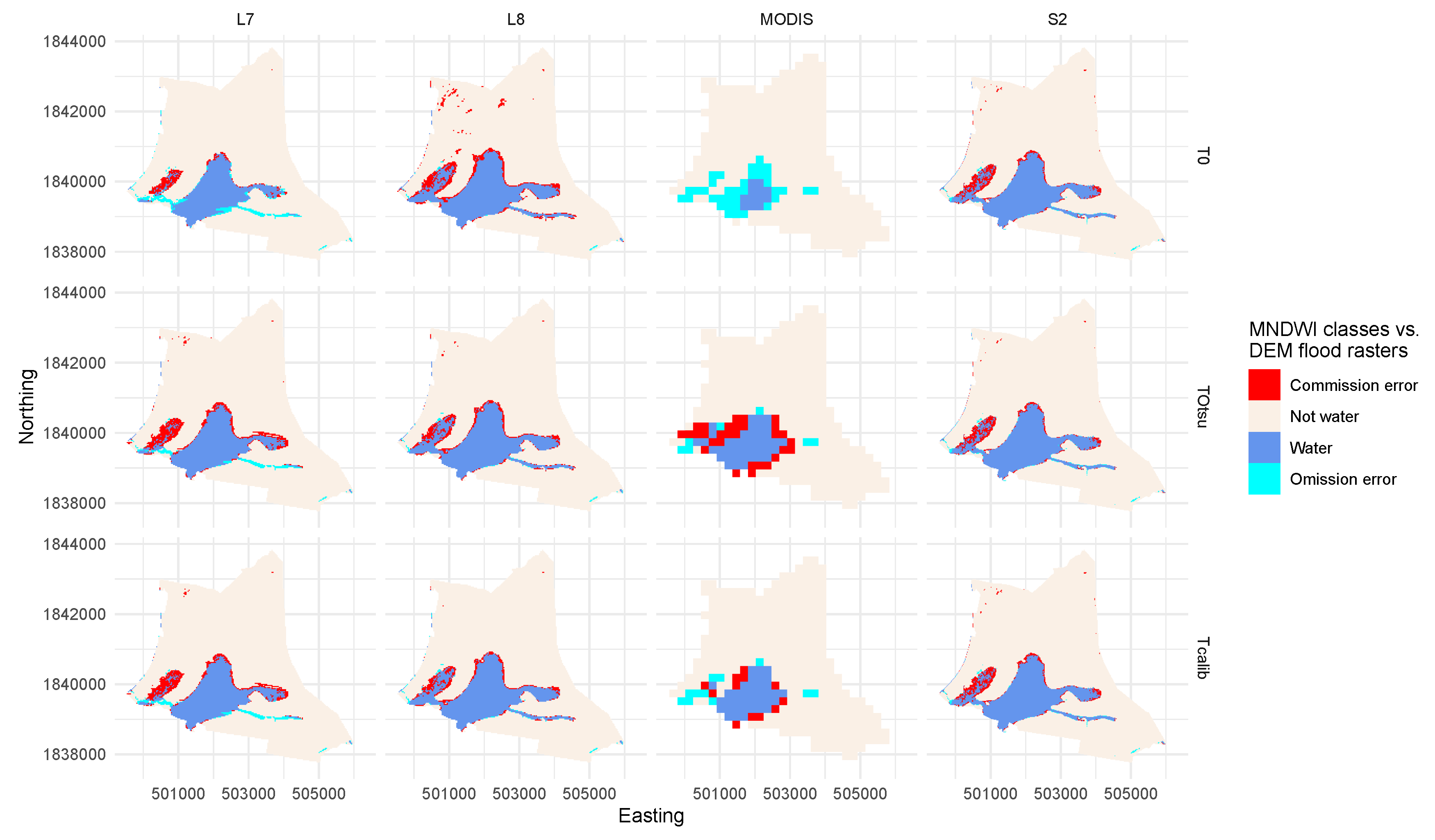

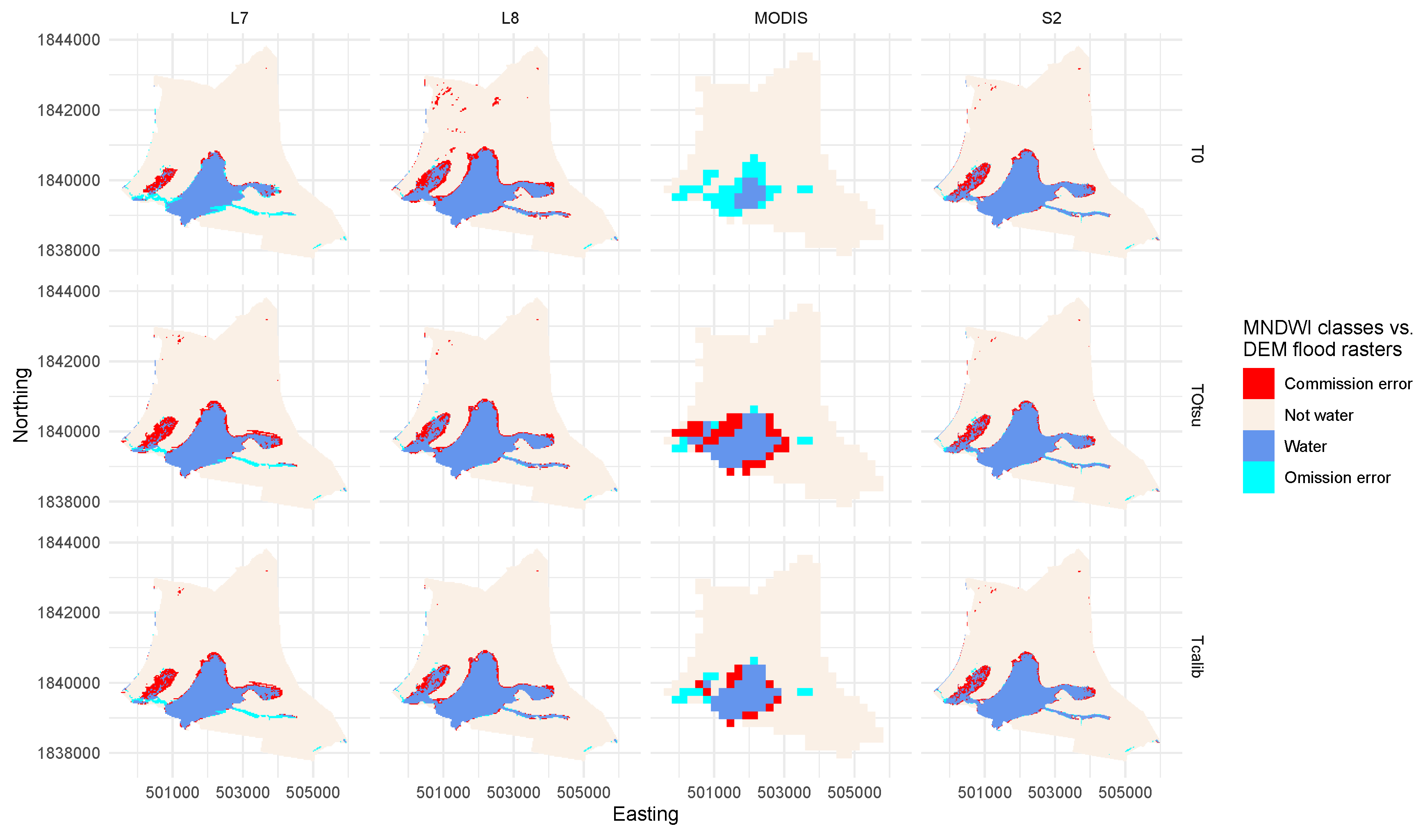

Water classification using MNDWI reaches very high levels of accuracy with the sensor-specific optimal thresholding methods developed in this paper. The results show that no single method is suitable for all sensors, due to the specificities of each sensor and the particularities of long-term monitoring. Otsu segmentation, known to perform well [64], notably suffers from the temporary nature of water bodies and the sharp decline in water areas. Even though image growing was used to specifically include the permanent river areas, a decline in the performance of the Otsu algorithm was observed when water occupied too small a fraction of the image (around 10%, according to Lee et al. [89]). Water indices, and especially MNDWI, are well suited to multi-temporal water mapping [42]; however, alternative classification approaches may help improve performance, especially in different settings. Replacing the green band with ultra-blue or blue bands in these water indices may provide benefits in open water environments with low turbidity, while red band-based water indices may assist classification in water bodies with high suspended sediment loads [64]. Machine learning algorithms such as random forest, integrated into GEE and used here on the UAV data, are known to perform well on complex imagery, though they can be more resource intensive and require additional training data, making them less suited to long-term studies [42]. Amani et al. [39] used a random forest approach with a large number of field samples to map Canadian wetlands, but overall accuracy reached only 71%, with omission and commission errors of over 34% and 37%, respectively. These errors can be explained by the difficulties of heterogeneous wetland environments, where water bodies are not continuous objects due to the presence of flooded vegetation and shallow disconnected meanders. The GSW datasets based on machine learning systems have also been shown to perform less well in the mixed water environments found in small water bodies and wetlands [7,83].
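The sensitivity of Otsu segmentation to a small water fraction can be reproduced with a plain-Python histogram version of the algorithm, which picks the threshold that maximises the between-class variance. This is an illustrative re-implementation, not the GEE code used in this study, and the MNDWI values are synthetic: 90 land pixels split between two hypothetical land-cover types (MNDWI of -0.5 and -0.3) plus a variable number of water pixels at 0.5. With 10 water pixels the threshold separates water from land, but with a single water pixel (about 1% of the scene) the variance criterion is maximised by splitting the two land classes instead; the exact fraction at which this breakdown occurs depends on the shape of the histogram.

```python
def otsu_threshold(values, n_bins=256):
    """Otsu's method on a histogram of `values`: return the bin edge that
    maximises the between-class variance of the two resulting classes."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return lo
    width = (hi - lo) / n_bins
    hist = [0] * n_bins
    for v in values:
        hist[min(int((v - lo) / width), n_bins - 1)] += 1
    centres = [lo + (i + 0.5) * width for i in range(n_bins)]
    total = len(values)
    sum_all = sum(h * c for h, c in zip(hist, centres))

    best_t, best_between = lo, -1.0
    w0, sum0 = 0, 0.0
    for i in range(n_bins - 1):  # candidate split after bin i
        w0 += hist[i]
        sum0 += hist[i] * centres[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (m0 - m1) ** 2  # proportional to between-class variance
        if between > best_between:
            best_between, best_t = between, lo + (i + 1) * width
    return best_t


def count_above(values, threshold):
    """Number of pixels classified as water (MNDWI above the threshold)."""
    return sum(1 for v in values if v > threshold)


LAND = [-0.5] * 45 + [-0.3] * 45  # synthetic MNDWI of two land-cover types

for n_water in (10, 1):
    scene = LAND + [0.5] * n_water
    t = otsu_threshold(scene)
    print(n_water, round(t, 4), count_above(scene, t))
# 10 -0.2969 10
# 1 -0.4961 46
```

In the second case, the 45 land pixels at -0.3 are labelled as water alongside the single true water pixel.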

In parallel, these results highlight the value of long-term hydrological observations to assess the accuracy and skill of remote sensing datasets. Remote sensing studies often rely on alternative high resolution satellite imagery or, where possible, in situ observations [90], such as geolocated sampling using GPS points, transects and contours to train and evaluate classifications. Validation is often based on random sampling of water bodies, but for reasons of cost or feasibility it is restricted in the number of observations over time, and it rarely indicates whether a product can reproduce daily water dynamics in long-term monitoring [7,42,91]. Many global water datasets are similarly developed against ground truth data for open water bodies, which, as discussed above, can reduce their value in wetlands and other heterogeneous water environments. Long-term hydrological observatories, supported by citizen science observations [92], should therefore be encouraged to support the development and assessment of remote sensing products and algorithms relevant to the water community. New products such as higher accuracy DEMs from TanDEM-X [3] or localised UAV-based methods [93] also have the potential to provide valuable ground truth data. Finally, our results point to the importance of using multiple metrics in classification accuracy assessments. Overall accuracy can lead to a difficult or incomplete interpretation of classifications [77] due to the effects of class prevalence [78] (e.g., the growing number of correctly classified non-water pixels as water recedes), and minor differences in accuracy (two to three percentage points) can cause large differences in the resulting surface areas.
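The effect of class prevalence on overall accuracy can be made concrete with a hypothetical confusion matrix for a scene of 10,000 pixels in which water covers only 500, as might happen late in the recession. The counts and function names below are invented for illustration.

```python
def accuracy_metrics(tp, fp, fn, tn):
    """Overall, producer's and user's accuracy for the water class.

    tp: water mapped as water, fp: non-water mapped as water,
    fn: water mapped as non-water, tn: non-water mapped as non-water.
    """
    overall = (tp + tn) / (tp + fp + fn + tn)
    producers = tp / (tp + fn)  # 1 - omission error
    users = tp / (tp + fp)      # 1 - commission error
    return overall, producers, users


def area_bias(tp, fp, fn):
    """Relative error of the mapped water area against the true water area."""
    return ((tp + fp) - (tp + fn)) / (tp + fn)


oa, pa, ua = accuracy_metrics(tp=350, fp=50, fn=150, tn=9450)
print(f"overall {oa:.3f}, producer's {pa:.3f}, user's {ua:.3f}")
# overall 0.980, producer's 0.700, user's 0.875
print(f"area bias {area_bias(tp=350, fp=50, fn=150):+.0%}")
# area bias -20%
```

An overall accuracy of 98% coexists here with a 20% underestimation of the water area, because the 9450 correctly classified non-water pixels dominate the metric.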

5. Conclusions

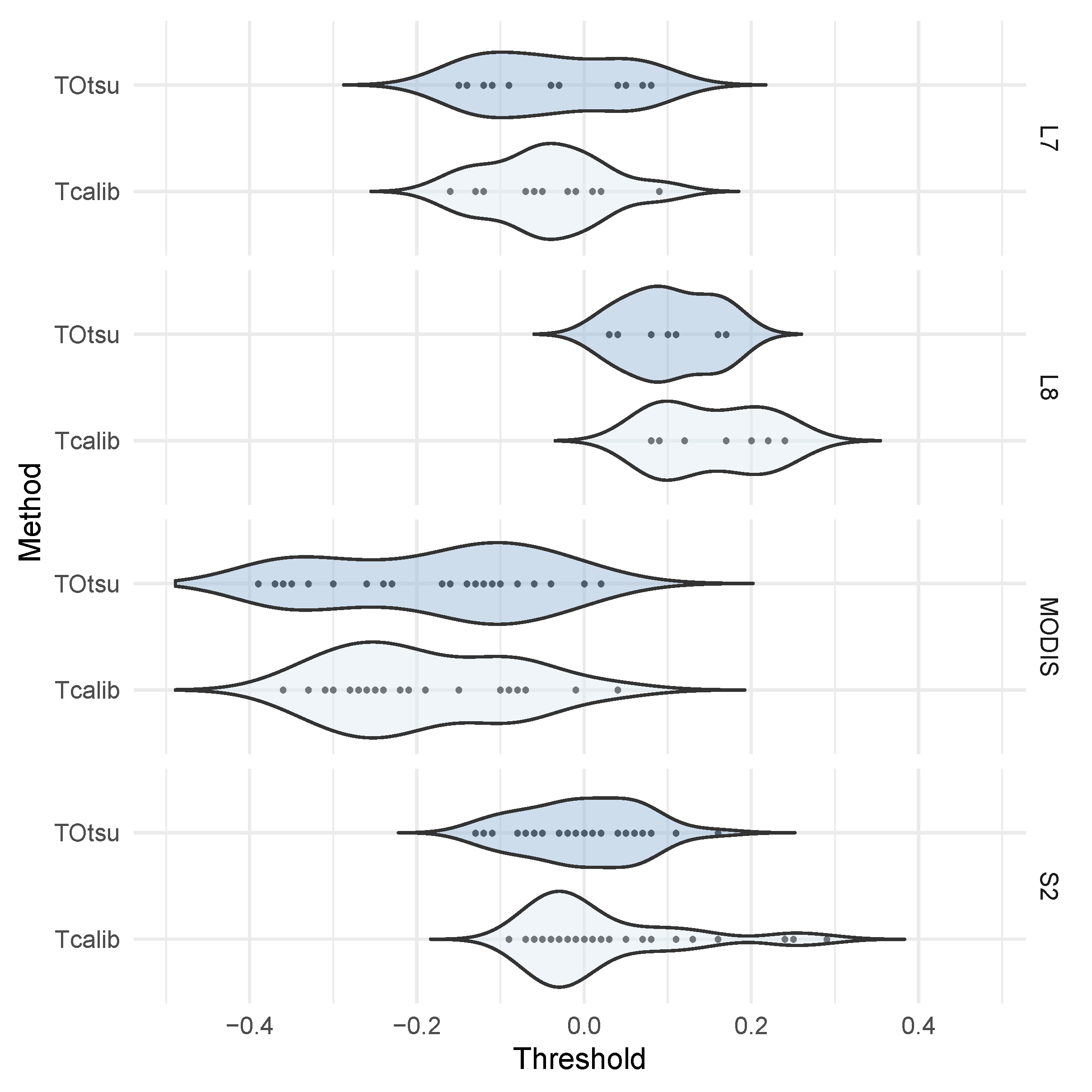

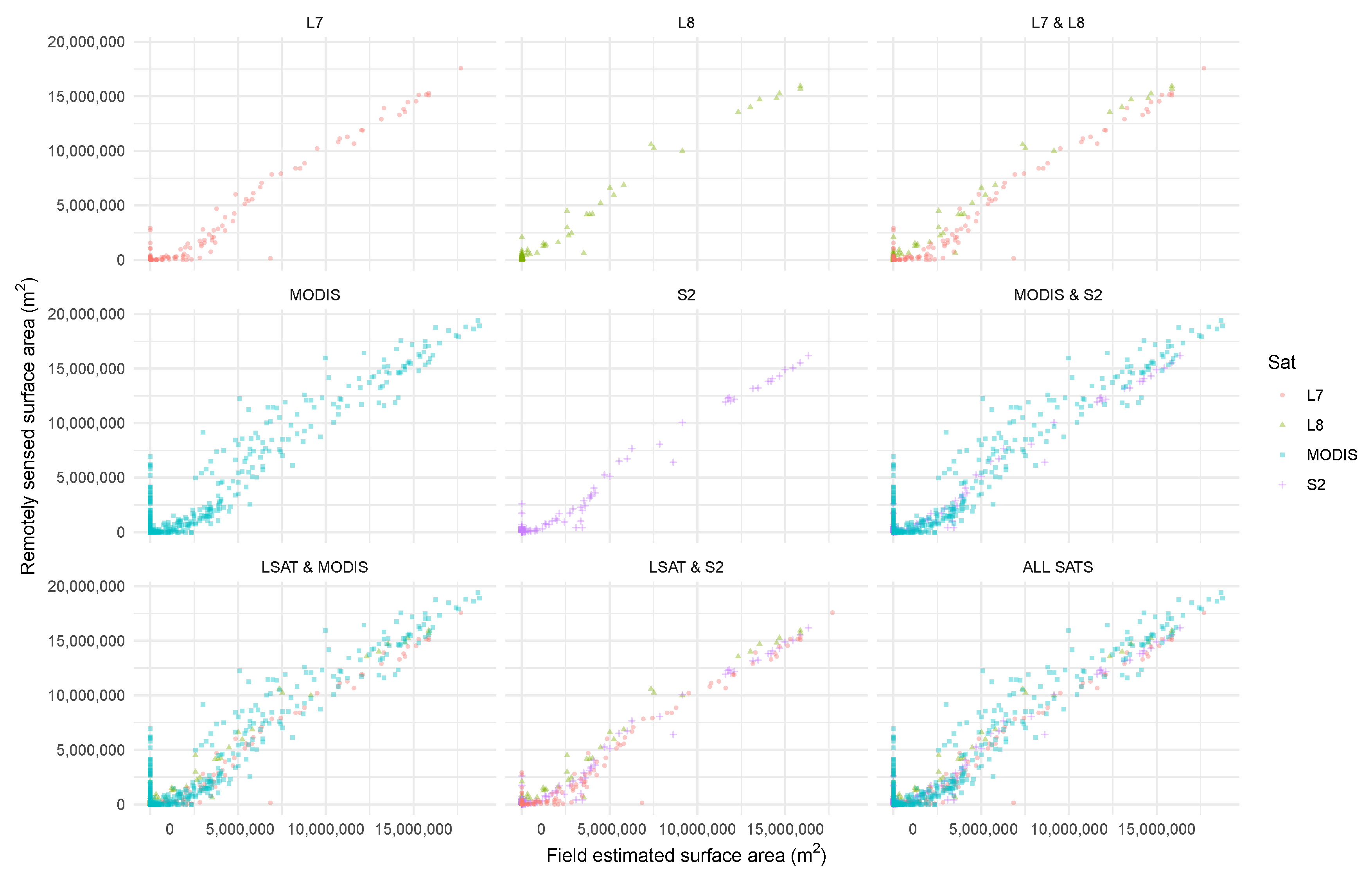

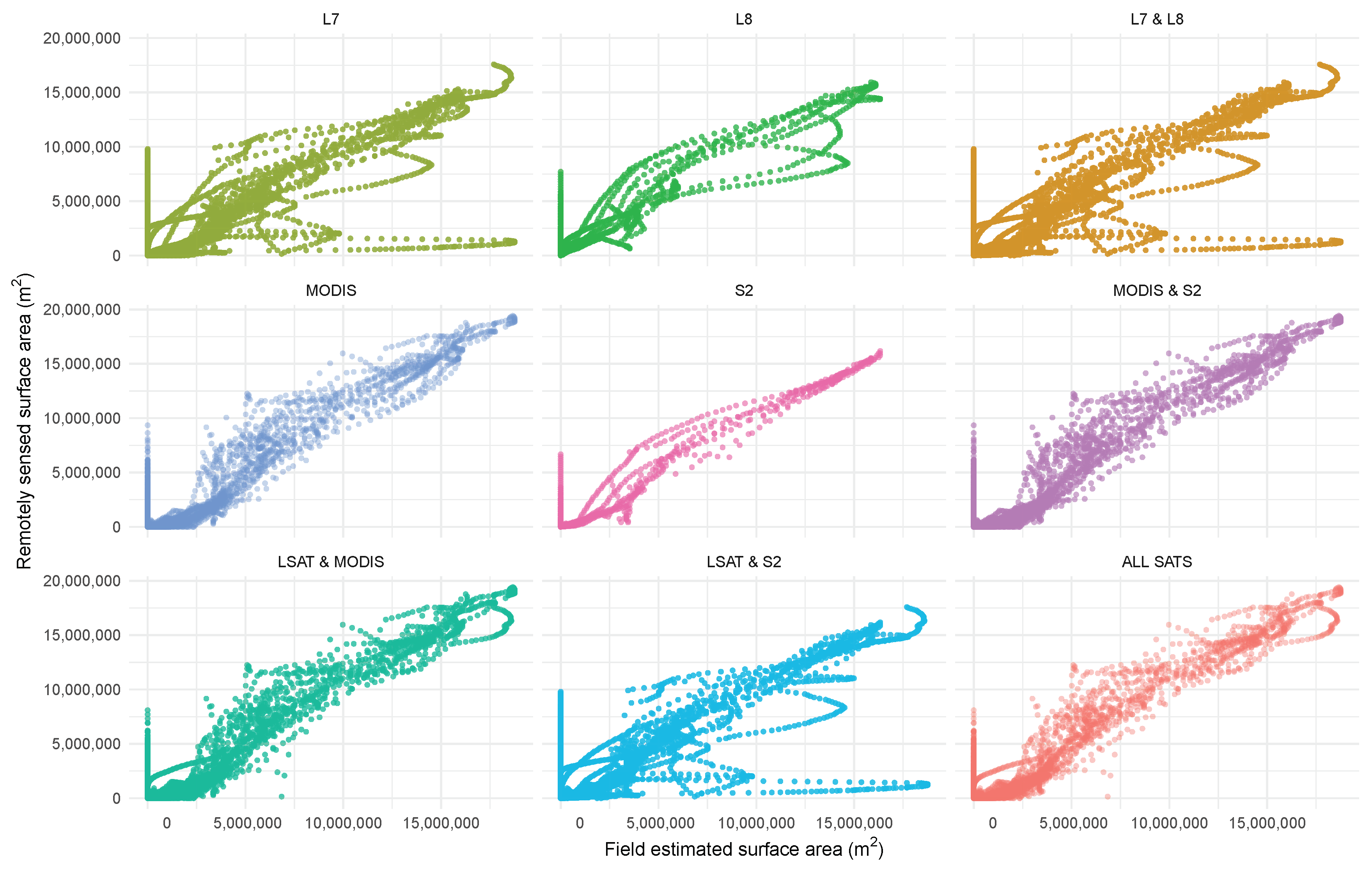

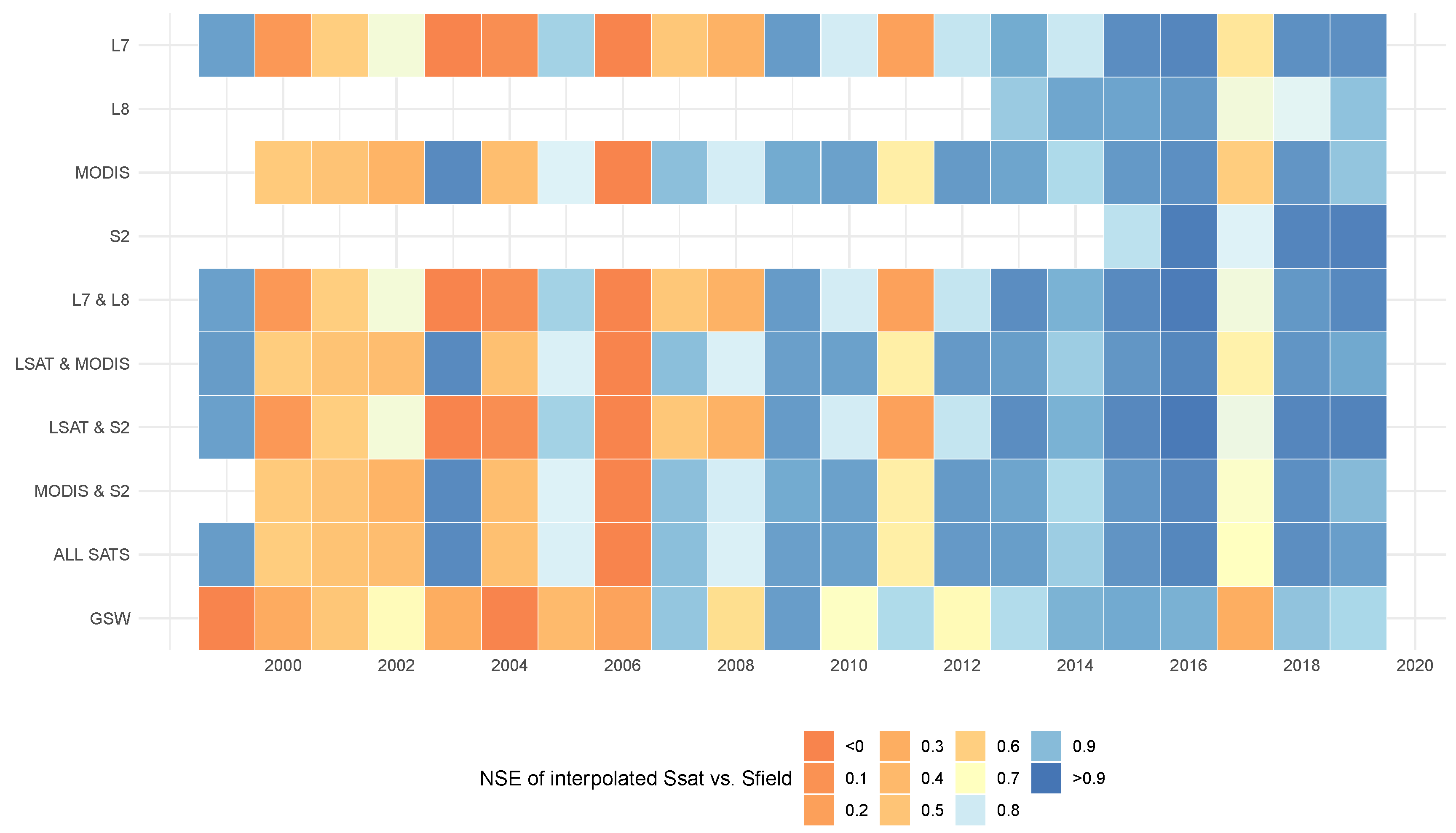

Detailed comparison of four major sources of optical Earth observations against extensive ground truth data highlights the heterogeneous performance of three MNDWI thresholding methods. The Otsu algorithm (TOtsu) is shown to be highly effective in classifying selected images from the Sentinel-2 and Landsat 8 sensors. However, when used for long-term observations of temporary water bodies, the Otsu method underperforms compared to a default threshold (T0). On Landsat 7, a default threshold performs best, while for MODIS imagery, a site-specific, data-intensive approach is essential to reduce the underestimation of pixels where water and flooded vegetation are mixed. The sensor-specific MNDWI thresholding methods identified here result in significant improvements in the accuracy of the mapped flooded areas. MODIS, despite its 500 m spatial resolution, performs remarkably well with a calibrated threshold and demonstrates its value in monitoring hydrological systems as small as 19 km². Landsat 7, even after the 2003 scan line corrector (SLC) failure and with gap filling, provides remarkably good observations, comparable with Sentinel-2 in terms of RMSE.

In long-term water mapping, Landsat imagery before 2013 fails to capture substantial information on flood peaks and flood dynamics. Combining observations from multiple sensors is essential, and before 2013, it is recommended to integrate MODIS and Landsat observations, notably on water bodies of several hundred hectares with similar temporary flood dynamics. After 2013, the combination of Landsat 7 and Landsat 8 becomes sufficient, and integrating MODIS observations can marginally degrade performance. The constellation of Sentinel-2A and 2B satellites, offering high spatial and temporal resolution, performs well in surface water monitoring, and integrating MODIS observations degrades overall accuracy. The integration of Landsat and Sentinel-2 yields modest improvements. This has important implications for developing long-term consolidated datasets designed to help researchers and stakeholders understand flood variations, model water balance and assess the water availability of wetlands and floodplains.

The frequent surface water maps produced by this semi-automated multi-sensor approach may be used to understand how water overflows into the floodplain and to improve hydrological representations of hysteresis as water recedes. Supported by the geoprocessing capacities of Google Earth Engine, this approach can be applied across entire wetlands and floodplains to provide high resolution maps of flooded areas, calibrate hydrological models, improve local digital elevation models and investigate relationships with agricultural practices.