1. Introduction

Automatic robots have become a hot topic over the last few years due to a growing market demand for products such as autonomous cars, rescue robots, and military robots. In order to succeed in their missions, these robots usually require accurate motion information (position, velocity, and attitude) for control and navigation. Many kinds of sensors can provide useful information for motion estimation, locally or globally, such as a camera, the IMU, and the global navigation satellite system (GNSS). Typically, a modern robot is equipped with several kinds of sensors with complementary sensing capacities, in order to make motion information estimation accurate and robust. Specifically, the inertial navigation system (INS) can provide position, velocity, and attitude information at a high frequency by integrating IMU measurements [

1,

2,

3,

4]. However, IMU measurements are typically corrupted by several kinds of errors, such as the scale factor error, misalignment, biases, and sensor-induced random output noise [

5]. Such measurement errors make the long-term integration of IMU measurements unreliable. In contrast to the short-term accuracy of the INS, the GNSS can provide position information in the geodetic reference frame with a relatively consistent accuracy and no position drift. However, the provided position information is low-frequency and discontinuous. For those reasons, the INS/GNSS integrated navigation system has been well-studied over the past several decades for providing high-precision, high-frequency motion information without drift [

6,

7,

8]. However, the performance of the integrated system greatly depends on the availability of GNSS signals, especially for the low-grade IMU [

7,

8]. Unfortunately, satellite signals are vulnerable to electromagnetic interference and easily shielded by high buildings, which makes GNSS measurements unreliable. Another well-known technique with the capacity for local motion information estimation is the visual-inertial navigation system (VINS), which fuses the measurements provided by the camera and the IMU [

9,

10,

11,

12,

13,

14,

15,

16,

17,

18,

19,

20,

21]. The two sensors are complementary in many ways. The IMU makes the metric scale and the gravity vector observable and provides accurate inter-frame motion information. The visual information aids the IMU in bias estimation. Nevertheless, the global position and yaw estimation of the VINS finally drifts [

14], unless other sensors/systems that provide global measurements, such as the GNSS, are integrated with the VINS [

15,

16]. According to the above analyses, there is an irresistible trend to fuse the complementary information from the redundant sensors for motion information estimation.

Information fusion of the INS/GNSS integrated navigation system can be implemented by Bayesian filters, such as the Kalman filter or extended Kalman filter [

6]. In comparison, the information fusion algorithms of the VINS become more complex, due to the high nonlinearity of visual residual functions and the high number of visual measurements. Generally, the information fusion algorithms of the VINS can be divided into two categories, namely the optimization-based methods [

9,

10,

11,

12,

13,

15,

16] and the Bayesian filter-based methods [

14,

17,

18,

19,

20,

21]. The optimization-based methods typically can achieve a higher accuracy than the filter-based methods by benefitting from the ability of re-linearization. The computational complexity of the filtered-based methods is cubic with the number of visible mappoints. For achieving a real-time performance, the number of mappoints in the state of the filter is strictly limited, which also decreases the accuracy of information fusion [

22]. The multistate constraint Kalman filter (MSCKF) is a special augmented Kalman filter. It keeps a sliding window of past poses in its state vector [

20,

21]. The positions of mappoints are marginalized first by using the left null space trick. Then, a probabilistic constraint between the poses is constructed. Hence, its computational complexity is linear with the number of mappoints and cubic with the number of poses in the window. For the optimization-based methods, by exploring the first- and second-order sparsity structure of the normal equation, they also become highly efficient [

23,

24]. However, full optimization finally becomes infeasible as the number of measurements increases. For limiting the computational complexity, sliding window optimization methods have been designed and widely used for solving the VINS problem. These kinds of methods work in the spirit of optimization, but only keep a sliding window of poses [

9,

10,

11,

12,

13]. The states outside the sliding window are either directly fixed [

9,

12] or marginalized out [

10,

11,

13].

Typically, the sampling frequency of the IMU is high—approximately hundreds of Hertz. For the filter-based methods, the time update is required to operate at the IMU sampling frequency. For the optimization-based methods, it is impracticable to perform optimization at the IMU sampling frequency. One possible solution to this problem is to integrate the IMU measurements during the sampling interval of the other sensors working at a low sampling frequency. However, this will cause another problem in that the initial integration values are unknown or inaccurate. Once the initial integration values are updated at each iteration of optimization, the IMU measurements need to be re-integrated, which makes the computational complexity increase. In order to achieve fusion with other sensors, uncertainty of the integrated IMU measurements is also necessary. For propagating the uncertainty, the initial uncertainty traditionally needs to be calculated by back propagating the measurement uncertainties of sensors after each iteration. Then, the uncertainty of the integrated IMU measurements is re-propagated, which will also cause additional computation.

For coping with these two challenges, the IMU preintegration technique was first developed by Lupton et al. [

25,

26] for the optimization-based VINS. The technique can also be used in other optimization-based multi-sensor fusion frameworks, such as the LiDAR/IMU/GNSS integrated navigation system [

27]. In [

25,

26], the attitude is parametrized by the Euler angles, which causes a singularity in specific cases [

28]. In [

29,

30], the attitude is parametrized by the specific rotation group SO (3), in order to avoid the singularity and carefully cope with uncertainty propagation of the preintegrated IMU measurements. In [

10], the attitude is parametrized by the attitude quaternion. The covariance propagation of the preintegrated IMU measurements is calculated by using the dynamic equations of the preintegration error states. In [

31,

32], closed-form solutions of IMU preintegration are derived by using linear system theory. However, all of the above IMU preintegration algorithms assume that the gravity vector is constant in the VINS reference frame. In practice, the gravity vector changes with the position relative to the VINS reference frame. The longer the relative position, the more obvious the change of gravity. The earth rotation is also not considered in the above IMU preintegration algorithms. In comparison, the gravity change and earth rotation are well-modeled in the strap-down inertial navigation algorithms [

1,

2,

3,

4], the INS/GNSS, and the INS/GNSS/Vision integrated navigation systems [

6,

7,

8], in order to remove their influence from the IMU measurements. Motivated by these conventional INS relevant algorithms, we redesigned the IMU preintegration algorithm by taking the change of gravity and the earth rotation into consideration. Both terms are functions of the geodetic position. There are several kinds of geodetic reference models for the earth, such as the geodetic reference system 1980 (GRS-80), the world geodetic system 1984 (WGS-84), and the Chinese Geodetic Coordinate System 2000 (CGCS-2000). In the INS relevant algorithms, the WGS-84 model has been widely used. In this paper, the WGS-84 model is also adopted.

The preintegrated IMU measurements can be used to construct a preintegration factor, and the preintegration factor needs to be weighted by its square-root information matrix before being added to the optimization. In this paper, the uncertainty of the preintegrated IMU measurements is propagated in the form of a square root information matrix [

33]. Compared with the covariance propagation form [

25,

26,

29,

30,

31,

32], the square-root information matrix is directly available for weighting the preintegrated IMU measurements and theoretically has a better numerical stability [

33]. IMU measurements are typically corrupted by several kinds of errors. In this paper, we assume that the IMU has been well-calibrated offline. Then, the remaining IMU measurement errors include the sensor-induced random output noise and the slowly time-varying biases. In this paper, the sensor-induced random output noise is modeled as a white noise process. Additionally, the slowly time-varying bias is modeled as a one-order Markov process.

In order to evaluate the performance of the improved IMU preintegration algorithm, we implement a tightly-coupled monocular visual-inertial system [

34], and the GNSS positioning results are fused in a loosely-coupled way. We perform experiments by using the dataset collected by our sensor platform. The sensor platform is equipped with two different-grade IMUs, in order to analyze the influence of the earth rotation and the change of gravity on different-grade IMUs.

Our contributions can be summarized as follows:

We have redesigned the IMU preintegration algorithm by taking the earth rotation and the change of gravity into consideration;

The uncertainty of the preintegrated IMU measurements has been propagated in the form of a square-root information matrix;

We have evaluated the influence of the earth rotation and the change of gravity on the preintegrated IMU measurements by using two different-grade IMUs.

The remainder of this paper is organized as follows. In

Section 2, the coordinate frames, notations, attitude parameterizations, and geodetic reference model of the earth are introduced. In

Section 3, all of the details of the improved IMU preintegration algorithm are given, including the position, velocity, and attitude dynamic equations in the

W frame; the IMU error model; the uncertainty propagation of preintegrated IMU measurements; and the IMU preintegration factors. In

Section 4, the joint optimization framework of the VINS is briefly described. In

Section 5, all of the details of experiment setup are introduced. In

Section 6, all experimental results are given. In

Section 7, we discuss the influence of the gravity change and the earth rotation on the IMU preintegration, according to the experimental results. In

Section 8, we draw the conclusions.

2. Preliminaries

In this section, some fundamental professional knowledge used in designing the IMU preintegration algorithm is introduced, including the definitions of coordinate frames and notations, the attitude parameterizations, and the earth geodetic reference model. This fundamental professional knowledge has been well-developed in the INS and GNSS research communities for several decades.

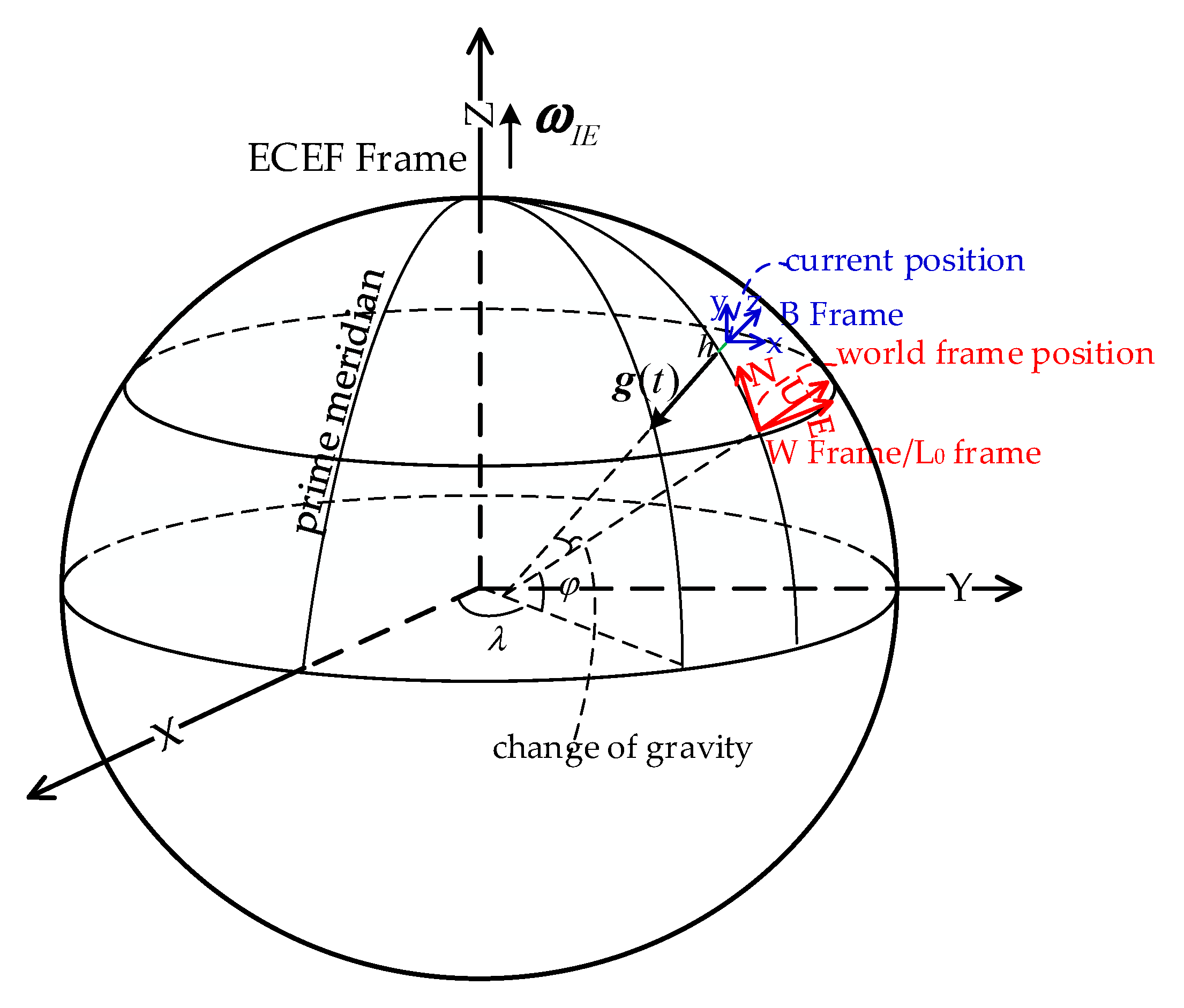

2.1. Coordinate Frames

A coordinate frame is defined by three unit vectors that are perpendicular to each other in a right-hand form. For making a mathematical operation valid, all related free vectors and points should be projected into the same reference coordinate frame.

The E frame is the earth-centered, earth-fixed coordinate frame, with its Z axis parallel to the earth polar axis, its X axis passing through the Greenwich meridian, and its Y axis lying in the equatorial plane to form a right-handed coordinate frame.

The I frame is the non-rotating and non-accelerating inertial coordinate frame used as the reference coordinate frame for the IMU measurement. Theoretically, its orientation can be arbitrary. In this paper, the E frame at the initial time is selected as a historically fixed inertial coordinate frame.

The L frame is the local level coordinate frame, with its X axis parallel to the east, Y axis parallel to the north, and Z axis vertical to the local earth surface.

The B frame is the IMU coordinate frame with axes parallel to the nominal orthogonal input axes.

The C frame is the camera coordinate frame with the origin at the optical center, the X axis horizontal and pointing to the right, and the Y axis vertical and pointing downwards.

The W frame is the reference coordinate frame for the VINS. In this paper, the L frame at the initial position or another fixed position is selected as the W frame.

2.2. Nomenclature

| A0, A1, A2 | arbitrary orthogonal right-handed coordinate frames; |

| specific force measured by the accelerometer, which is produced by a non-gravitational force; |

| local plumb-bob gravity vector that is a function of the geodetic position; |

| position vector of frame A2 relative to frame A1, with projection in frame A0; |

| velocity vector of frame A2 relative to frame A1, with projection in frame A0; |

| angular velocity vector of frame A2 relative to frame A1, with projection in frame A0; |

| direction cosine matrix that transforms a vector from frame A2 to frame A1; |

| attitude quaternion corresponding to ; |

| rotation/angle-axis vector defined by the axis of rotation and the magnitude of rotation around the axis; |

| n-dimension identity matrix; |

| zero matrix with m rows and n columns. |

| Set an arbitrary vector as , then |

| the skew-symmetric matrix of the vector, as follows: ; |

| the quaternion form of the vector, as follows: ; |

| the bar above the vector means it is a constant vector; |

| the hat above the vector means it is an estimation; |

| the tilde above the vector means it is a measurement; |

| the point above the vector means it is the time derivative of the vector. |

2.3. Attitude Parameterization

The direction cosine matrix, the rotation vector, and the attitude quaternion can be used to parameterize the attitude (rotation) between two coordinate frames with no singularity. Moreover, the three parameterization methods are mathematically equivalent.

The direction cosine matrix

is a unit and orthogonal matrix, and the columns are equal to the projections of the unit vectors along the axes of frame

A2 into frame

A1. For an arbitrary vector

with projections

,

in the coordinate frame

A1,

A2, respectively, the following relationship holds [

1]:

According to Equation (1), it is easy to derive the chain rule of the direction cosine matrix:

The rotation vector

is defined by a unit vector

and the magnitude

around the unit vector. According to the Rodrigues formula [

1,

35], the counterpart direction cosine matrix

is a function of the rotation vector, as follows:

The attitude quaternion is composed of four elements, as follows:

where

,

,

. We refer readers to reference [

35] for more details about the quaternion. The attitude quaternion is also a function of the rotation vector, as follows:

The chain rule of the attitude quaternion is defined on the basis of quaternion multiplication, as follows:

where

is the quaternion multiplication operator and

. The left and right quaternion multiplication matrices are as follows:

The conversion relationships between the direction cosine matrix and the attitude quaternion are as follows:

where

,

,

.

is the trace of

, i.e., the sum of the diagonal elements of the direction cosine matrix.

2.4. Time Derivative of the Attitude Parameter

The time derivative of the direction cosine matrix

is as follows:

The time derivative of the attitude quaternion

is as follows:

2.5. The Earth Model

In this paper, the WGS-84 geodetic reference model is adopted for modeling the earth as shown in

Figure 1. Set the geodetic position (latitude, longitude, and altitude) in the WGS-84 reference frame as

,

, and

. Then, the direction cosine matrix between the

L frame and

E frame is as follows:

The XYZ position in the

E frame can be recovered from the geodetic position, as follows:

where

is the eccentricity of the earth and

is the radius of curvature of the prime vertical plane. It is a function of the latitude, i.e.,

.

is the equatorial earth radius.

The geodetic position can also be recovered from the XYZ position in the

E frame. The longitude can be recovered directly as follows:

The latitude and altitude need to be recovered by the following iterative algorithm. The initial values are set as follows:

Then, the latitude and altitude are iteratively calculated until convergence, as follows:

For notation convenience, the computation process of Equations (15), (16), and (17) is donated as follows:

The normal gravity in the

L frame is as follows:

where

is calculated by the WGS-84 gravity model parameters, as follows:

The gravity anomaly is omitted in our algorithm. Therefore, the normal gravity is approximately considered as the local gravity in this paper.

The earth rotation vector in the

E frame is as follows:

where

.

The measurement of the accelerometer is a specific force. The influence of gravity needs to be removed from the preintegrated IMU measurements, in order to recover the metric motion. Therefore, we need to know the gravity vector projected in the W frame. The measurement of a gyroscope (rate sensor) is the angular velocity relative to the I frame. The influence of the earth rotation needs to be removed from the gyroscope measurement, in order to recover the real angular velocity relative to the earth.

Set the geodetic position of the W frame as , , and . The XYZ position in the E frame can be calculated by Equation (14). Then, the direction cosine matrix between the W frame and E frame can be calculated by Equation (13).

The earth rotation vector in the

W frame is calculated as follows:

Set the position of the IMU at time

t in the

W frame as

. Then, its position in the

E frame can be calculated as follows:

Then, the geodetic position of IMU at time

t can be calculated by Equation (18), i.e.,

. The local normal gravity vector

in the

L frame can be calculated by Equation (19). The direction cosine matrix

can be calculated by Equation (13). Therefore, the direction cosine matrix between the

L frame and

W frame is

. Finally, the gravity vector in the

W frame is calculated as follows:

3. The Improved IMU Preintegration Technology

The position, velocity, and attitude are obtained by integrating the IMU measurements. For performing such integrations, the dynamic equations for the position, velocity, and attitude should be derived first. Generally, the position, velocity, and attitude of the VINS are parametrized in the W frame. Hence, the dynamic equations are also derived in the W frame.

First, the relationship of the positions parameterized in the

W frame and

I frame is as follows:

where

because the origins of the

E frame and

I frame are coincident, i.e.,

, and the

W frame is fixed with respect to the earth.

According to Equation (11), the time derivative of Equation (25) is as follows:

where

,

.

is the earth rotation in the

E frame.

For obtaining the acceleration information, we need to further derive the time derivative of Equation (26) as follows:

where

.

Substituting the equation

into Equation (27), we have

Let us left-multiply both sides of Equation (28) by

. After re-organization, we have

According to the theory of the force composition and decomposition, the acceleration

relative to the inertial space is the resultant force of the specific force

and the gravitational force

, i.e.,

. Substituting the equation into Equation (29), we have

where

is the gravity in the

W coordinate frame.

is the well-known Coriolis acceleration.

Equation (30) is the velocity differential equation. The position differential equation can be easily expressed as follows:

The attitude is parameterized by the attitude quaternion. Furthermore, its time derivative can be derived according to Equation (12), as follows:

Then, the position, velocity, and attitude can be reckoned by numerically integrating the three dynamic equations, as follows:

where

.

is written as

k in many subscripts and superscripts for convenience.

is the sampling number during

and

is the IMU sampling interval. The change of gravity is negligible during

. Therefore,

is used to represent the gravity during the time interval. Moreover,

is the so-called IMU position preintegration, as follows:

The velocity is reckoned as follows:

where

is the so-called IMU velocity preintegration, as follows:

Finally, the attitude increment is the so-called IMU attitude preintegration. It can be reckoned by integrating Equation (32), as follows:

3.1. IMU Error Model and Preintegrated IMU Measurements

Typically, the IMU measurements are corrupted by several kinds of errors, such as the scale factor error, misalignment, sensor-induced random output noise, and slowly time-varying biases. In this paper, we assume that the IMU has been well-calibrated offline. Therefore, the scale factor error and misalignment are negligible. The sensor-induced random output noise is modeled as a white noise process. The slowly time-varying biases are molded as one-order Markov processes. Then, the IMU measurements are modeled as follows:

where

and

are Gaussian white noise processes, and

,

.

denotes a normal distribution.

and

are IMU time-varying biases and are modeled as follows:

where

and

are the correlation times of Markov processes.

and

are driving Gaussian white noise processes, and

,

.

The IMU preintegration Equations (34), (36), and (37) can be solved by numerical integration. The initial body frame is selected as the reference frame for integration. Additionally, the initial values are known and independent of the position, velocity, and attitude in the W coordinate frame, i.e., , , . This is the core idea of the IMU preintegration algorithm.

Set the IMU preintegrations at time

as

,

, and

, and the IMU bias estimations as

and

. When new IMU measurements

and

are received,

, and the IMU preintegrations are updated recursively, as follows:

where

.

3.2. Uncertainty Propagation and Jacobian Matrix of Bias Correction

The uncertainty propagation of IMU preintegration is similar to the time update of the Bayesian filter used in the INS/GNSS integrated navigation system. Due to the IMU preintegration errors being time-varying, we also need linear dynamic equations for tracking the errors, just like Psi- and Phi- angle error equations in the INS/GNSS integrated navigation system [

36]. The Jacobian matrices for belated bias correction are contained in the transition matrix of the IMU preintegration errors. For iteratively updating the transition matrix, we also need the linear dynamic equations of the IMU preintegration errors. Hence, in this section, we will derive the linear dynamic equations first.

(1). Attitude preintegration error dynamic equation: The attitude preintegration error is defined in a right perturbation form. Set the attitude preintegration error at time

t as

. Then we have

where

.

The gyroscope bias error is defined as follows:

Substituting the Equations (41) and (42) into Equation (2), we have

where

, and

,

is sufficiently small.

The expanded form of Equation (43) is as follows:

where

,

because the magnitudes of

and

are both quite small. Moreover,

. All second-order small terms are omitted.

Then, the attitude preintegration error dynamic equation can be derived as follows:

(2). Velocity preintegration error dynamic equation: The velocity preintegration error is defined as follows:

where

is the velocity preintegration error at time

t.

The accelerometer bias error is defined as follows:

According to the Equations (40), (41), (46), and (47), we have

The expanded form of Equation (48) is as follows:

where

.

Then, the velocity preintegration error dynamic equation can be derived as follows:

(3).

Position preintegration error dynamic equation: The position preintegration error is defined as follows:

where

is the position preintegration error at time

t.

The position preintegration is the integration of velocity preintegration, so

Rewriting the Equations (39), (45), (50), and (52) in a matrix form, we have

Rewriting Equation (53) in compact form,

where

and

.

According to the linear system theorem [

37], the system can be discretized as follows:

where

is the equivalent noise during

, as follows:

The mean of

is zero. The covariance is calculated as follows:

where

. Additionally, the equivalent noise

is a Gaussian white noise sequence.

Hence, the square root information matrix of the equivalent noise is as follows:

The transition matrix from

to

is propagated as follows:

where

and

are adjacent IMU sampling epochs, and

.

Set the square root information matrix of the IMU preintegration errors at time

as

. Then, the square root information matrix

can be calculated by performing QR decomposition on the following data matrix [

33], as follows:

where

is a unitary matrix. The right side of Equation (60) is an upper triangular matrix. The initial square-root information matrix at time

should be large enough, because the initial values of IMU preintegration are deterministic. In our implementation, the initial square-root information matrix is set as

.

3.3. IMU Preintegration Factor

Given the preintegrated IMU measurements, all residual functions are listed as follows:

where

,

,

,

,

,

, and

. The function

means the sub-block of

from

to

rows and

to

columns. Additionally,

All of the above residual functions should be weighted by the square-root information matrix before adding them to optimization.

4. Nonlinear Joint Optimization

We use the hybrid sliding window nonlinear optimization for state estimation (more details can be found in our previous work [

34]).

The full state vector is defined as follows:

where

where

is the inverse depth parameter of the

m-th mappoint from its first observing keyframe,

N is the number of keyframes in the sliding window,

M is the number of visible mappoints, and

is the camera-IMU calibration parameter.

The joint optimization problem can be formulated as a nonlinear least-squares problem. The cost function is the sum of the prior and the Mahalanobis norm of all measurement residuals within the sliding window, as follows:

where

and

represent the prior information;

,

, and

are the measurement set of the IMU, camera, and GNSS, respectively;

is the IMU preintegration residual function, as shown in Equation (61);

is the re-projection residual function of the

l-th mappoint in the

j-th image (more details can be found in [

34]);

is the Huber loss function for coping with potential outliers [

38];

is the square root information matrix of the visual measurement; and

is the GNSS residual at time

, as follows:

where

and

are functions of the geodetic position of the selected world frame;

is the lever arm vector between the GNSS antenna and IMU, measured manually; and

is the XYZ position of the GNSS antenna in the E frame, and provided by the GNSS receiver.

5. Experiments

In order to evaluate the performance of the proposed IMU preintegration algorithm, we conducted experiments by using the dataset collected by our sensor platform. Our sensor platform consists of a left-right stereo camera and two different grades of IMU, as shown in

Figure 2. The stereo camera consists of two Prosilica GT 1910 monocular cameras by AVT. Both of them are global shutter monochrome CCD cameras which deliver 1920 × 1080 images. The cameras are synchronously triggered by an Arduino Uno R3 microcontroller at 10 Hz. The microcontroller parses GPS time from the NMEA messages provided by Ublox NEO-M8N. The two cameras are equipped with LM8HC lenses by Kowa. The horizontal field of view of the lens is about 60 degrees. The two IMUs are MTi-G-710 and POS620, respectively. The MTi-G-710 is an industrial-grade MEMS-based IMU by Xsens. The POS620 is a quasi-navigation-grade IMU by the MPSTNAV company of China, and contains three-axis high-precision fiber optic gyroscopes (FOGs).

The technical parameters of the two different-grade IMUs are listed in

Table 1. During data collection, the two IMUs were sampled at 200 Hz.

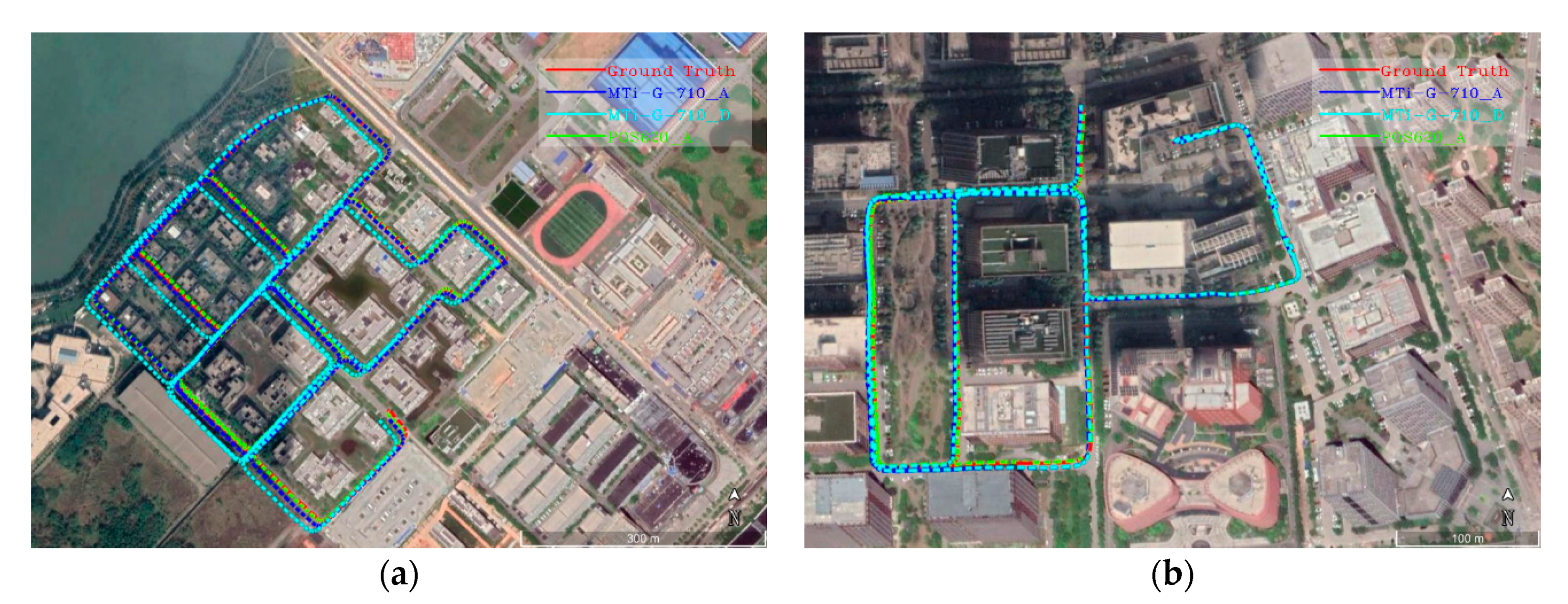

Our dataset contains two sequences collected at two different places in Wuhan city of China, namely the Wuhan Research and Innovation Center (WRIC) and the Guanggu financial harbor (GFH). There are many low-slung buildings and trees in the two places. These static objects provide a high-quality texture for visual measurements, as shown in

Figure 3. Additionally, navigation satellite signals are not easily shielded or reflected in the two places. The GNSS raw data was recorded by the Trimble receiver. Furthermore, we used the GINS software developed by the MPSTNAV company of China to post-process the recorded GNSS raw data, in order to obtain high-precision real-time kinematic (RTK) positioning results.

Generally, the RTK positioning error was less than 0.10 m. The integrated navigation results of fusing the RTK positions and the POS620 measurements by Rauch-Tung-Striebel (RTS) smoothing served as the ground truth data. The dataset was used to evaluate the proposed IMU preintegration algorithm. It did not contain GNSS deny environment and visual challenges, such as overexposure, illumination changes, and poorly textured scenes. The motions performed by the vehicle during data collection were variable, including linear acceleration and deceleration, zero-speed motion, turning motion, and backward motion, i.e., almost all motions that can be performed by a ground vehicle. Additionally, changing slopes also exist in the two places. Therefore, the motions in the collected dataset were complicated enough to evaluate the proposed IMU preintegration algorithm.

Because it is quite difficult to obtain sufficient disparities from images recorded by a monocular camera when a vehicle runs along a straight road, we could not initialize the monocular visual-inertial system. For coping with this issue, stereo images were used to initialize the VINS. After initialization, only the images of the left side camera were used in optimization.

It is well-known that the earth is an approximate spheroid, and the direction of gravity approximately points to the center of the earth. Therefore, the direction and amplitude of gravity change with the position on the earth. For two positions that are far apart, the gravity vectors will be quite different. Therefore, it is imprecise for the traditional IMU preintegration algorithms to assume that the gravity vector is constant in the

W frame, especially after the vehicle has traveled a long distance. An example is where the VINS system (maybe another system where the IMU preintegration technology is used) was initialized at city A and traveled to city B, where the

W coordinate frame was located at city A. To simulate this situation, i.e., to amplify the influence of the change of gravity, we deliberately moved the

W coordinate frame a certain distance from the starting position of the testing dataset. Specifically, if the geodetic position of the starting position was

,

, and

, the geodetic displacement was

,

, and

, respectively. Then, the new position of the

W coordinate frame was

,

, and

, respectively. The new position of the

W frame may not be located in a living area, and may be a mountain or a lake. However, this does not affect the evaluation of the proposed algorithm mathematically. In order to systematically evaluate the influence of the change of gravity, the geodetic displacement of the world frame was incrementally set as 0, 0.1, 0.25, 0.5, 1.0, and 2.0 degrees, where

,

. The corresponding Euclidean displacements were 0, 14.7, 36.7, 73.3, 146.4, and 292.1 kilometers. The new positions of the

W frame were drawn in Google Earth, as shown in

Figure 4.

In order to achieve good alignment with the geodetic frame, the RTK positions were added into optimization during the initial period, i.e., we directly used the RTK positioning results. Then, the RTK positions were removed after initialization, for better reflecting the impact of the IMU preintegration. At this time, the VINS system will have accurate prior information. For analyzing the influence of the earth rotation, the performance of the proposed IMU preintegration algorithm was also evaluated with or without a consideration of the earth rotation. Finally, the performance of the proposed IMU preintegration algorithm was evaluated under one of the four settings, as follows:

Setting A: Both the change of gravity and the earth rotation are considered, i.e., the proposed algorithm is used in a normal way;

Setting B: The change of gravity is considered, but the earth rotation is omitted;

Setting C: The change of gravity is omitted, but the earth rotation is considered;

Setting D: Both the change of gravity and the earth rotation are omitted, and the proposed IMU preintegration algorithm degenerates to a traditional IMU preintegration algorithm.

During the initial period, the proposed IMU preintegration algorithm was used under Setting A.

7. Discussion

In this section, we will discuss the influence of the gravity change and the earth rotation on the IMU preintegration, according to the results presented in the previous section.

According to the RMSEs of the horizontal attitude of the MTi-G-710 and POS620 under

Setting A and

Setting C presented in

Table 2,

Table 3 and

Table 4, we can observe that ignoring the change of gravity makes the horizontal attitude errors increase as the geodetic displacement increases linearly. The RMSEs of the horizontal attitude error are about 1.38 times greater than the geodetic displacement. This tendency is also obvious in

Figure 8b and

Figure 9b, where the pitch and roll errors fluctuate sharply. If the vehicle moves in a small area, i.e., the geodetic displacement is almost 0 degrees, ignoring the change of gravity does not have much of an influence on the horizontal attitude errors, as shown in

Table 2,

Table 3 and

Table 4, under

Setting C. This phenomenon can also be directly observed in

Figure 7.

According to the position RMSEs of MTi-G-710 under

Setting A and

Setting C presented in

Table 2, we can observe that the RMSEs of the position are lower than 5.6 m under

Setting A. The position RMSEs increase and become greater than 8.73 m under

Setting C in

Table 2, if the geodetic displacement is greater than 0.5 degrees. We can also observe a similar tendency under

Setting A and

Setting C in

Table 3. The increase of the position RMSE becomes obvious when the geodetic displacement is greater than 1 degree, due to the short length of the sequence GFH.

According to the position RMSEs of POS620 under

Setting A and

Setting C presented in

Table 4, we can observe that the position RMSEs are lower than 1.9 m in sequence WRIC and lower than 0.85 m in sequence GFH under

Setting A. Additionally, the position RMSEs become greater than 2.36 m in sequence WRIC and 1.83 m in sequence GFH, if the geodetic displacement is greater than 0.1 degrees. The motion estimation results diverge under

Setting C, if the geodetic displacement is greater than 0.25 degrees in the sequence WRIC and 0.1 degrees in the sequence GFH. Compared with the MTi-G-710, the POS620 is more sensitive to the change of gravity, due to its high-precision measurements, which means that it has a greater weight during information fusion. If the vehicle moves in a small area, i.e., the geodetic displacement is almost 0 degrees, ignoring the change of gravity has no obvious influence on the position errors for both low-grade and high-grade IMUs, as shown in

Table 2,

Table 3 and

Table 4 under

Setting C.

According to the RMSEs of the MTi-G-710 under

Setting A and

Setting B presented in

Table 2 and

Table 3, we can observe that ignoring the earth rotation has no obvious influence on the accuracy of the position and the horizontal attitude. From another perspective, the RMSEs of MTi-G-710 under

Setting B and

Setting D presented in

Table 2 and

Table 3 show that the positioning accuracy is obviously increased under large geodetic displacement by taking the change of gravity into consideration, but ignoring the earth rotation. Therefore, the earth rotation can be safely omitted for the industrial-grade MEMS-based IMU. In contrast, the motion estimation results diverge if the earth rotation is omitted from the measurements of the navigation-grade IMU (POS620), as shown in

Figure 5. This is because the earth rotation is about

, and the gyro bias instability and noise density of the angler random walk of the MTi-G-710 are

and

, respectively, making it impossible to sense the earth rotation. In contrast, the gyro bias instability and noise density of the angler random walk of the POS620 are about

and

, respectively. Therefore, the POS620 can sense the earth rotation effectively. At the same time, it should be noted that the higher noise density of MTi-G-710 makes the weight of its preintegrated IMU measurements lower, which makes the visual constrains more significant. However, the high grade of POS620 results in a greater weight of the preintegrated IMU measurements and reduces the effect of the visual constrains, making the system solution more sensitive to the modeling error of the earth rotation.

Since the yaw state is unobservable for the VINS and therefore cannot be estimated effectively [

14], the drift of the yaw angle is completely random, making the yaw RMSEs stochastic under different settings and geodetic displacements, as shown in

Table 2,

Table 3 and

Table 4.