Improved Classification of Urban Trees Using a Widespread Multi-Temporal Aerial Image Dataset

Abstract

1. Introduction

2. Materials and Methods

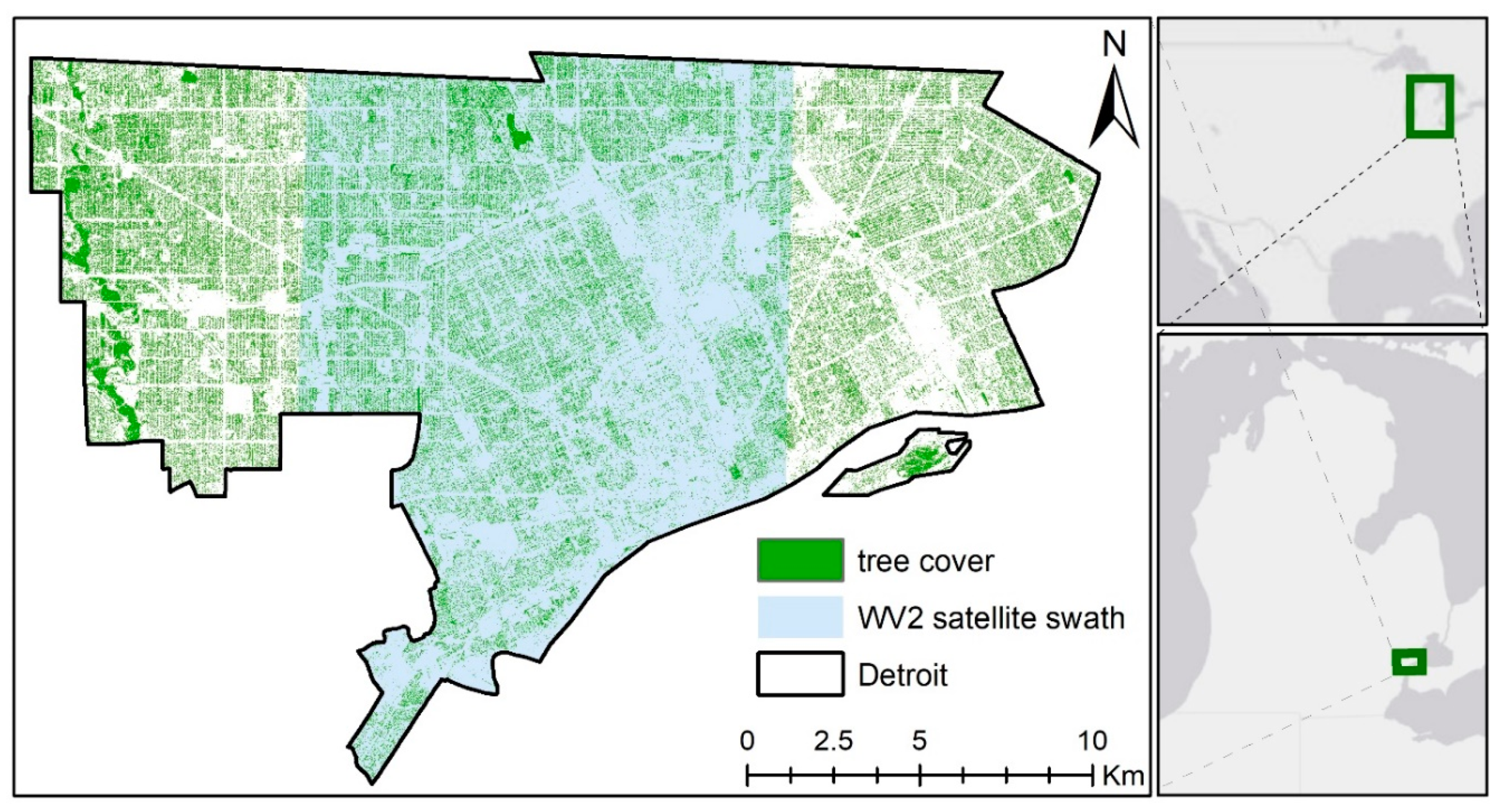

2.1. Study Area

2.2. Data Description: Tree Census

2.3. Data Description: Remote Sensing Data

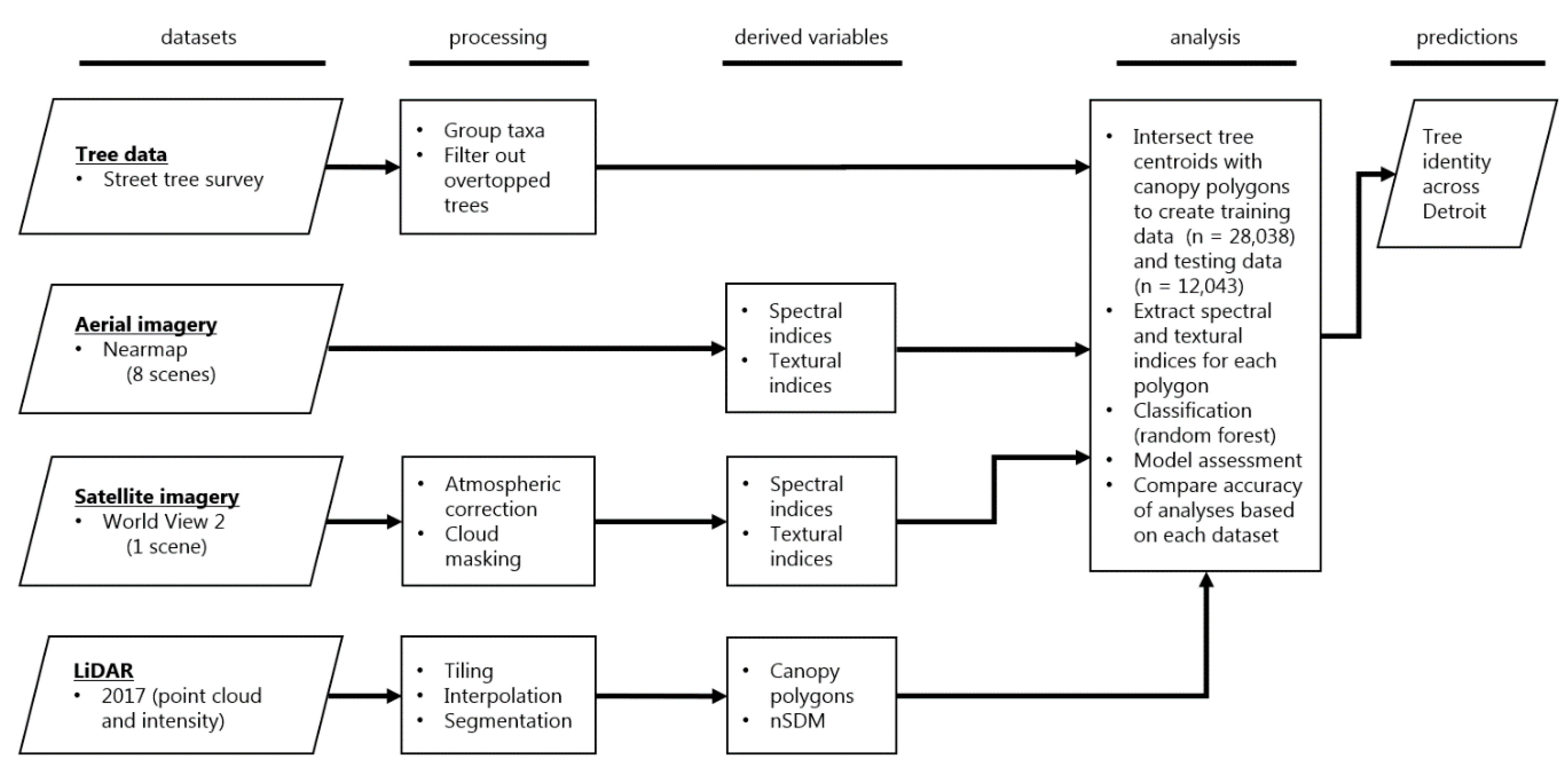

2.4. Workflow

3. Results

3.1. Classification Results

3.2. Variable Importance

3.3. Predictions

4. Discussion

4.1. Aerial Images for Tree Classification

4.2. Accuracy Compared to Other Studies

4.3. Leveraging Urban Tree Censuses

4.4. Applications

4.5. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Focal Taxa | Common Name | Abundant Anemophilous Species | Relative Basal Area of Street Trees (%) | n |
|---|---|---|---|---|
| Acer | maple | A. saccharinum, A. rubrum, A. saccharum, A. negundo | 35.5 | 38,363 |
| Acer platanoides | Norway maple | A. platanoides | 11.4 | 30,845 |
| Aesculus | horse chestnut | A. hippocastanum | 0.9 | 1167 |
| Ailanthus | tree of heaven | A. altissima | 0.4 | 962 |
| Catalpa | catalpa | C. speciosa | 1.6 | 1280 |
| Celtis | hackberry | C. occidentalis | 1.3 | 2240 |
| Fraxinus | ash | F. pennsylvanica, F. americana | 5.2 | 11,786 |
| Ginkgo | ginkgo | G. biloba | 0.2 | 1122 |
| Gleditsia | honey locust | G. triacanthos | 10.2 | 22,331 |
| Morus | mulberry | M. alba, M. rubra | 0.4 | 2237 |
| Platanus | sycamore, London planetree | P. occidentalis, P. × acerifolia | 9.1 | 12,499 |
| Populus | poplar | P. deltoides, P. alba | 2.0 | 1086 |
| Pyrus | pear | P. calleryana | 0.3 | 2644 |
| Quercus | oak | Q. palustris, Q. rubra, Q. macrocarpa, Q. alba, Q. robur | 5.6 | 6846 |
| Tilia | basswood | T. americana, T. cordata | 3.7 | 8144 |
| Ulmus | elm | U. pumila, U. americana, U. rubra | 10.4 | 9989 |
| other | - | - | 14.8 | 42,581 |
| total | | | 100 | 169,011 |

| Dataset | Scenes (n) | Years | Spatial Resolution | Spectral Resolution (bands) | RMSE (m) | Accessibility |
|---|---|---|---|---|---|---|
| WorldView-2 | 1 | 2011 | 0.5 m (panchromatic); 2.0 m (multispectral) | 1 (panchromatic); 8 (multispectral) | 1.62 | Private; purchase of individual scenes |
| Nearmap | 8 | 2014–2018 | 0.6 m | 3 | 1.06 | Private; subscription-based |
| LiDAR | 1 | 2017 | 2.2 ppm | - | - | Public |

| Model Name | Accuracy | User Accuracy | Producer Accuracy | Kappa Statistic |
|---|---|---|---|---|
| All datasets | 0.740 | 0.817 | 0.377 | 0.682 |
| LiDAR (all) | 0.443 | 0.195 | 0.143 | 0.309 |
| LiDAR (intensity only) | 0.371 | 0.107 | 0.105 | 0.217 |
| LiDAR (all) and Nearmap | 0.697 | 0.778 | 0.327 | 0.630 |
| LiDAR (all) and WorldView-2 (all) | 0.631 | 0.650 | 0.292 | 0.548 |
| Nearmap | 0.682 | 0.759 | 0.316 | 0.612 |
| WorldView-2 (all) | 0.578 | 0.502 | 0.234 | 0.479 |
| WorldView-2 (spectral indices) | 0.525 | 0.484 | 0.215 | 0.414 |
| WorldView-2 (raw) | 0.562 | 0.487 | 0.221 | 0.459 |

| | Acer | Acer platanoides | Aesculus | Ailanthus | Catalpa | Celtis | Coniferous | Fraxinus | Ginkgo | Gleditsia | Morus | Other | Platanus | Populus | Pyrus | Quercus | Tilia | Ulmus | Total | User Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Acer | 2105 | 182 | 36 | 37 | 28 | 27 | 9 | 137 | 41 | 130 | 24 | 61 | 243 | 57 | 21 | 190 | 99 | 300 | 3727 | 0.56 |
| Acer platanoides | 133 | 2555 | 48 | 2 | 0 | 6 | 2 | 10 | 1 | 8 | 2 | 21 | 8 | 1 | 7 | 12 | 20 | 30 | 2866 | 0.89 |
| Aesculus | 1 | 1 | 23 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 28 | 0.82 |
| Ailanthus | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.00 |
| Catalpa | 0 | 0 | 0 | 0 | 66 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 72 | 0.92 |
| Celtis | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.00 |
| Coniferous | 0 | 0 | 0 | 0 | 0 | 0 | 17 | 2 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 1 | 23 | 0.74 |
| Fraxinus | 21 | 7 | 17 | 1 | 7 | 6 | 0 | 350 | 0 | 33 | 1 | 1 | 3 | 5 | 3 | 3 | 17 | 14 | 489 | 0.72 |
| Ginkgo | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.00 |
| Gleditsia | 115 | 15 | 13 | 1 | 1 | 49 | 5 | 156 | 2 | 2194 | 10 | 19 | 42 | 11 | 10 | 97 | 69 | 66 | 2875 | 0.76 |
| Morus | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.00 |
| Other | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1.00 |
| Platanus | 44 | 4 | 0 | 1 | 0 | 2 | 0 | 6 | 1 | 9 | 2 | 3 | 687 | 20 | 0 | 6 | 7 | 13 | 805 | 0.85 |
| Populus | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.00 |
| Pyrus | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 13 | 0 | 0 | 0 | 13 | 1.00 |
| Quercus | 19 | 9 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 11 | 1 | 9 | 6 | 0 | 3 | 359 | 6 | 7 | 432 | 0.83 |
| Tilia | 18 | 12 | 10 | 1 | 0 | 1 | 0 | 10 | 0 | 6 | 1 | 3 | 2 | 0 | 1 | 11 | 317 | 3 | 396 | 0.80 |
| Ulmus | 38 | 3 | 0 | 0 | 1 | 0 | 0 | 20 | 0 | 2 | 0 | 3 | 14 | 3 | 2 | 1 | 1 | 228 | 316 | 0.72 |
| Total | 2494 | 2788 | 148 | 43 | 103 | 92 | 33 | 692 | 45 | 2397 | 41 | 125 | 1005 | 97 | 60 | 682 | 536 | 662 | 12,043 | |
| Producer Accuracy | 0.84 | 0.92 | 0.16 | 0.00 | 0.64 | 0.00 | 0.52 | 0.51 | 0.00 | 0.92 | 0.00 | 0.01 | 0.68 | 0.00 | 0.22 | 0.53 | 0.59 | 0.34 | | |
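The accuracy measures in these tables follow standard confusion-matrix definitions. User accuracy is the share of trees assigned to a class that belong to it in the reference data, computed along each row. Producer accuracy is the share of reference trees of a class that were classified correctly, computed down each column. Kappa corrects overall accuracy for chance agreement. The Python/NumPy sketch below recomputes these quantities from the confusion matrix above. It is an illustration, not the authors' original analysis code. The names `CLASSES`, `CM`, and `accuracy_metrics` are hypothetical. The sketch also assumes that rows are predicted classes and columns are reference (census) classes, and that the reported kappa statistic is Cohen's kappa.

```python
import numpy as np

# Class order used for both rows and columns of the confusion matrix above.
CLASSES = [
    "Acer", "Acer platanoides", "Aesculus", "Ailanthus", "Catalpa", "Celtis",
    "Coniferous", "Fraxinus", "Ginkgo", "Gleditsia", "Morus", "Other",
    "Platanus", "Populus", "Pyrus", "Quercus", "Tilia", "Ulmus",
]

# Counts copied from the confusion matrix above.
# Assumption: rows = classified (predicted) taxon, columns = reference taxon,
# as implied by the row-wise user accuracy and column-wise producer accuracy.
CM = np.array([
    [2105, 182, 36, 37, 28, 27, 9, 137, 41, 130, 24, 61, 243, 57, 21, 190, 99, 300],
    [133, 2555, 48, 2, 0, 6, 2, 10, 1, 8, 2, 21, 8, 1, 7, 12, 20, 30],
    [1, 1, 23, 0, 0, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0],
    [0] * 18,  # Ailanthus: never predicted
    [0, 0, 0, 0, 66, 0, 0, 0, 0, 3, 0, 0, 0, 0, 0, 3, 0, 0],
    [0] * 18,  # Celtis: never predicted
    [0, 0, 0, 0, 0, 0, 17, 2, 0, 0, 0, 3, 0, 0, 0, 0, 0, 1],
    [21, 7, 17, 1, 7, 6, 0, 350, 0, 33, 1, 1, 3, 5, 3, 3, 17, 14],
    [0] * 18,  # Ginkgo: never predicted
    [115, 15, 13, 1, 1, 49, 5, 156, 2, 2194, 10, 19, 42, 11, 10, 97, 69, 66],
    [0] * 18,  # Morus: never predicted
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0],
    [44, 4, 0, 1, 0, 2, 0, 6, 1, 9, 2, 3, 687, 20, 0, 6, 7, 13],
    [0] * 18,  # Populus: never predicted
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 13, 0, 0, 0],
    [19, 9, 1, 0, 0, 1, 0, 0, 0, 11, 1, 9, 6, 0, 3, 359, 6, 7],
    [18, 12, 10, 1, 0, 1, 0, 10, 0, 6, 1, 3, 2, 0, 1, 11, 317, 3],
    [38, 3, 0, 0, 1, 0, 0, 20, 0, 2, 0, 3, 14, 3, 2, 1, 1, 228],
])


def accuracy_metrics(cm):
    """Return overall accuracy, per-class user/producer accuracy, and Cohen's kappa."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    correct = np.diag(cm)
    predicted_totals = cm.sum(axis=1)  # row totals
    reference_totals = cm.sum(axis=0)  # column totals
    overall = correct.sum() / n
    # User accuracy is 0/0 (NaN) for classes that were never predicted.
    with np.errstate(divide="ignore", invalid="ignore"):
        user = correct / predicted_totals
        producer = correct / reference_totals
    # Chance agreement expected from the row and column marginals.
    expected = (predicted_totals * reference_totals).sum() / n**2
    kappa = (overall - expected) / (1.0 - expected)
    return overall, user, producer, kappa


overall, user, producer, kappa = accuracy_metrics(CM)
print(f"overall accuracy: {overall:.3f}")
print(f"Cohen's kappa:    {kappa:.3f}")
for name, ua, pa in zip(CLASSES, user, producer):
    print(f"{name:<18} user={ua:.2f} producer={pa:.2f}")
```

Applied to these counts, the sketch gives an overall accuracy of 8915/12,043 ≈ 0.740. This matches the "All datasets" row of the model comparison table. The per-class values also round to the printed User Accuracy column and Producer Accuracy row. For classes that were never predicted (for example Ailanthus), the sketch reports user accuracy as NaN rather than 0.00, because the ratio 0/0 is undefined.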

| Taxon | Trees (n) | Trees (%) | Area (m²) | Area (%) |
|---|---|---|---|---|
| Acer | 235,292 | 42.5 | 12,770,224 | 42.2 |
| Acer platanoides | 74,892 | 13.5 | 4,250,872 | 14.1 |
| Aesculus | 934 | 0.2 | 53,220 | 0.2 |
| Ailanthus | 110 | <0.1 | 6486 | <0.1 |
| Catalpa | 3926 | 0.7 | 185,373 | 0.6 |
| Celtis | 191 | <0.1 | 12,618 | <0.1 |
| conifers | 5757 | 1.0 | 290,176 | 1.0 |
| Fraxinus | 18,378 | 3.3 | 792,887 | 2.6 |
| Ginkgo | 96 | <0.1 | 4927 | <0.1 |
| Gleditsia | 126,330 | 22.8 | 6,615,295 | 21.9 |
| Morus | 130 | <0.1 | 8431 | <0.1 |
| other | 369 | <0.1 | 20,461 | 0.1 |
| Platanus | 16,312 | 2.9 | 974,651 | 3.2 |
| Populus | 251 | <0.1 | 16,793 | <0.1 |
| Pyrus | 514 | 0.1 | 29,022 | <0.1 |
| Quercus | 29,287 | 5.3 | 1,850,539 | 6.1 |
| Tilia | 13,222 | 2.4 | 778,361 | 2.6 |
| Ulmus | 27,227 | 4.9 | 1,585,638 | 5.2 |
| Total | 553,218 | 100.0 | 30,245,974 | 100.0 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Katz, D.S.W.; Batterman, S.A.; Brines, S.J. Improved Classification of Urban Trees Using a Widespread Multi-Temporal Aerial Image Dataset. Remote Sens. 2020, 12, 2475. https://doi.org/10.3390/rs12152475

Katz DSW, Batterman SA, Brines SJ. Improved Classification of Urban Trees Using a Widespread Multi-Temporal Aerial Image Dataset. Remote Sensing. 2020; 12(15):2475. https://doi.org/10.3390/rs12152475

Chicago/Turabian Style: Katz, Daniel S. W., Stuart A. Batterman, and Shannon J. Brines. 2020. "Improved Classification of Urban Trees Using a Widespread Multi-Temporal Aerial Image Dataset." Remote Sensing 12, no. 15: 2475. https://doi.org/10.3390/rs12152475

APA Style: Katz, D. S. W., Batterman, S. A., & Brines, S. J. (2020). Improved Classification of Urban Trees Using a Widespread Multi-Temporal Aerial Image Dataset. Remote Sensing, 12(15), 2475. https://doi.org/10.3390/rs12152475