A Robust and Versatile Pipeline for Automatic Photogrammetric-Based Registration of Multimodal Cultural Heritage Documentation

Abstract

1. Introduction

1.1. Structure

1.2. Related Literature

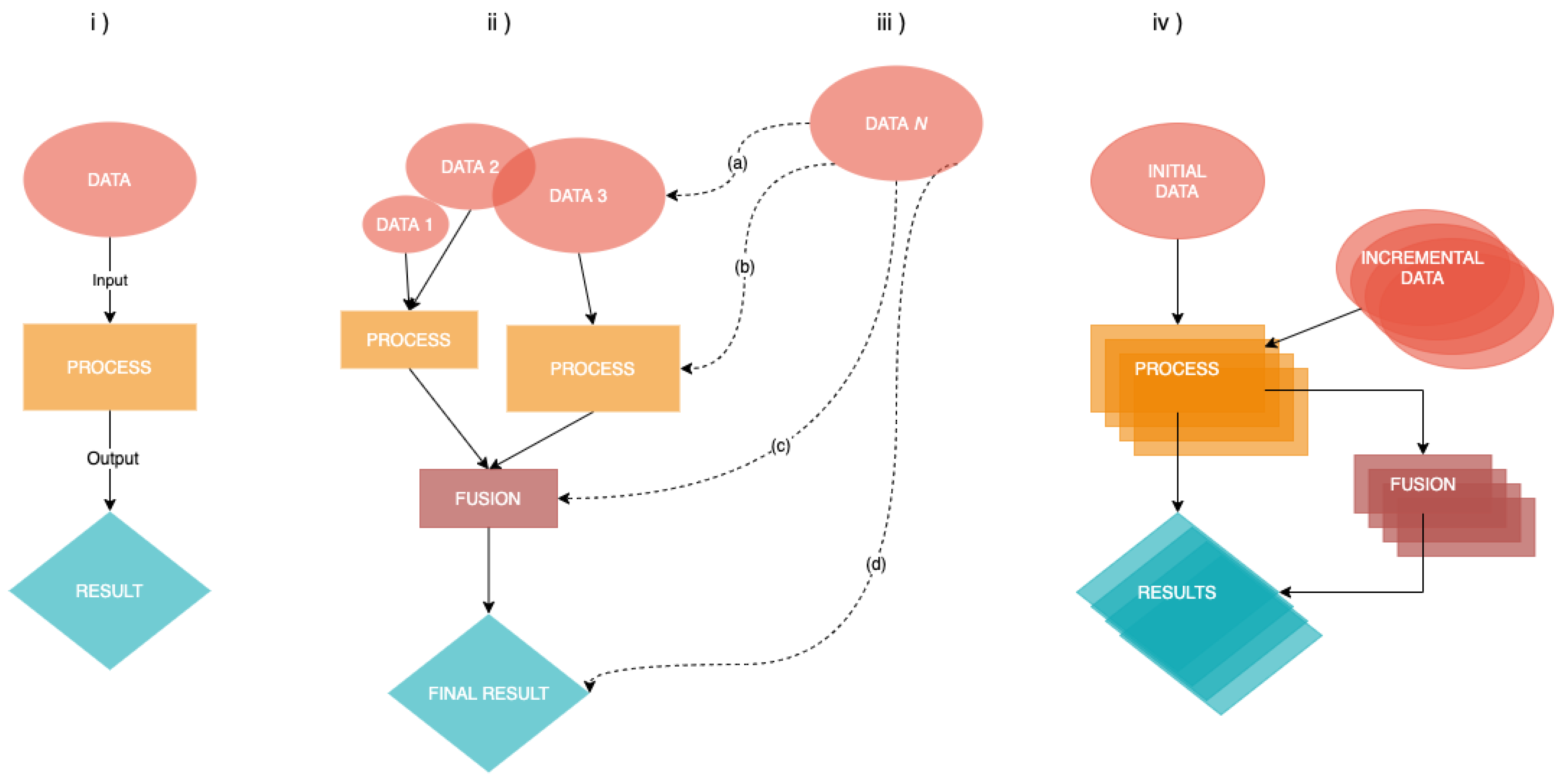

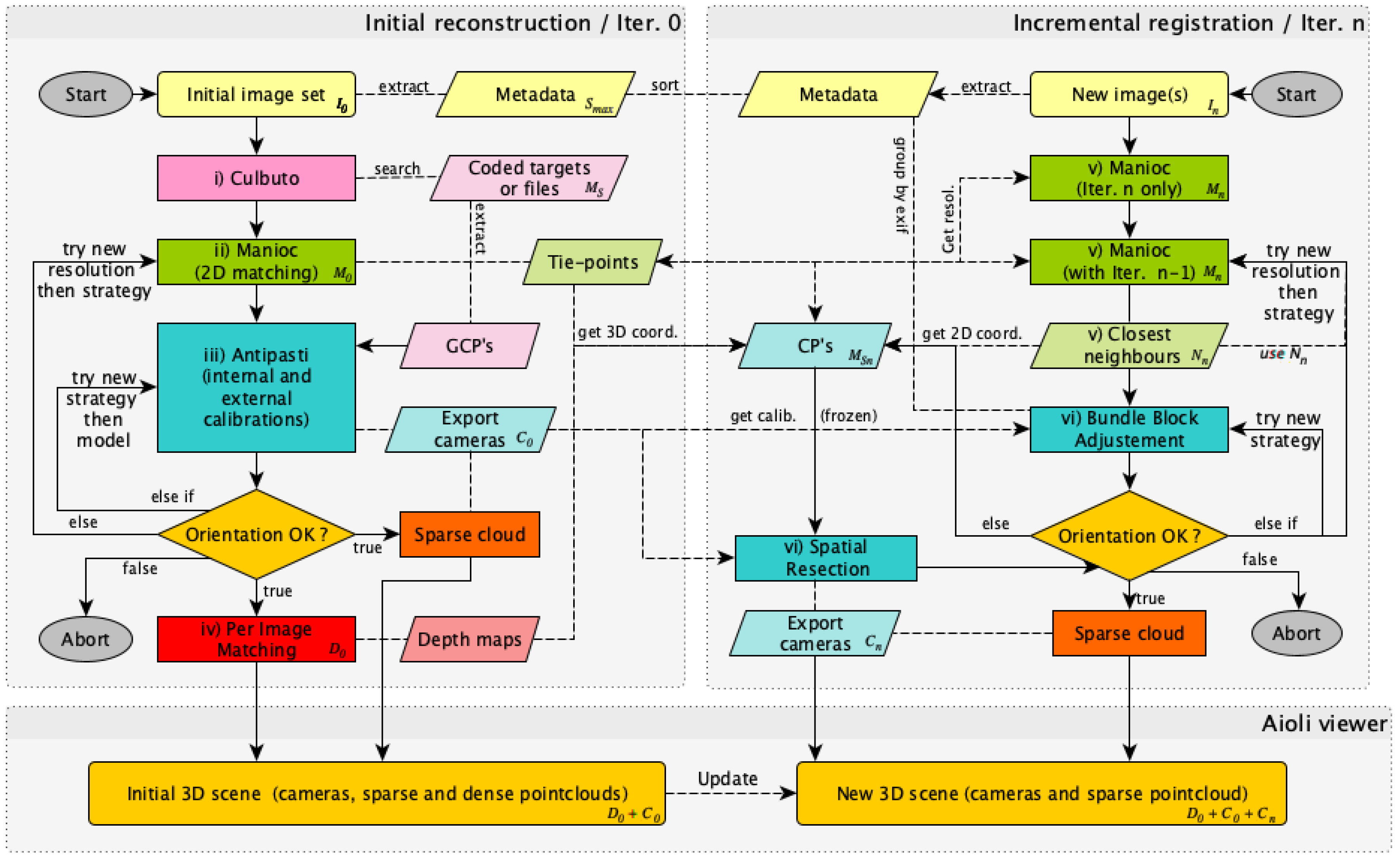

2. Totally Automated Co-Registration and Orientations

2.1. Overview and Technical Description

- (i)

- (ii) Manioc is an automatic sub-sampling parametrization for 2D matching: it takes the image set and the largest dimension of its images, subsampled at a given scale, and outputs image correspondences.

- (iii) Antipasti is an adaptive self-calibration method: it takes the images and their correspondences and outputs the external and internal calibrations.

- (iv) The fully automatic Per Image Matching tool produces the dense cloud from the images and their external and internal calibrations.

- (v) During incremental registration, Manioc takes a new image set together with the previously processed data and produces the correspondences between the new image set and all other image sets. It also returns the closest neighbours of the new image set.

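- (vi) Finally, a robust and versatile incremental co-registration is performed: given these correspondences, the closest neighbours and the existing calibrations, TACO computes the external and internal calibrations of the new images in the same spatial system as the initial image set, either by Bundle Block Adjustment or by Spatial Resection.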
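The subsampling and neighbour-selection steps in the list above can be sketched as follows. This is a minimal sketch under stated assumptions, not the Manioc implementation: the function names, the default target size of 2000 px, and the rule that ranks oriented images by the number of tie points they share with a new image (with a hypothetical floor of 20 matches) are all illustrative choices.

```python
def subsampling_scale(largest_dim_px, target_px=2000):
    """Hypothetical rule: scale so the largest image side becomes target_px.

    Never upsamples, so images already smaller than the target keep scale 1.0.
    """
    if largest_dim_px <= 0:
        raise ValueError("image dimension must be positive")
    return min(1.0, target_px / largest_dim_px)


def closest_neighbours(match_counts, k=3, min_matches=20):
    """Hypothetical rule: rank oriented images by tie points shared with a new image.

    match_counts maps an oriented image name to its number of matches with the
    new image. Ties are broken by name so the result is deterministic.
    """
    ranked = sorted(match_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, count in ranked if count >= min_matches][:k]


print(subsampling_scale(6000))                                     # 6000 px side -> 1/3
print(closest_neighbours({"a": 120, "b": 5, "c": 300, "d": 120}))  # ['c', 'a', 'd']
```

Capping the scale at 1.0 keeps small images at native resolution, and the match floor discards weakly connected images before they can anchor a registration.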

2.2. A Robust and Automated Initial Reconstruction for Complex Data Sets

2.3. A Versatile and Incremental Spatial Registration of New Images from Oriented Image Set
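Section 2.1 names Spatial Resection as one of the two ways new images can be registered into an oriented image set. As a generic, hedged illustration, the sketch below implements the classic 11-parameter Direct Linear Transformation (DLT), a linear resection from at least six non-coplanar 3D points and their image projections. It is not necessarily the resection solver used by TACO; the function names and the synthetic camera are hypothetical, and it is written in pure Python so it runs without NumPy.

```python
def solve_linear(M, y):
    """Solve M x = y by Gaussian elimination with partial pivoting."""
    n = len(M)
    A = [list(row) + [y[i]] for i, row in enumerate(M)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("singular system: points may be degenerate (e.g. coplanar)")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= factor * A[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (A[r][n] - sum(A[r][c] * x[c] for c in range(r + 1, n))) / A[r][r]
    return x


def dlt_resection(points_3d, points_2d):
    """Estimate a 3x4 projection matrix with p34 fixed to 1 (11 unknowns).

    Each correspondence gives two linear equations derived from
    u = (p1 . X) / (p3 . X) and v = (p2 . X) / (p3 . X).
    Fixing p34 = 1 fails if the true p34 is zero.
    """
    if len(points_3d) != len(points_2d) or len(points_3d) < 6:
        raise ValueError("need at least 6 matching 3D/2D points")
    rows, rhs = [], []
    for (X, Y, Z), (u, v) in zip(points_3d, points_2d):
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z])
        rhs.append(u)
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z])
        rhs.append(v)
    # Least squares through the normal equations (A^T A) p = A^T b.
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(11)] for i in range(11)]
    Atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(11)]
    p = solve_linear(AtA, Atb) + [1.0]
    return [p[0:4], p[4:8], p[8:12]]


def project(P, point):
    """Project a 3D point with a 3x4 matrix and dehomogenise."""
    X, Y, Z = point
    h = [row[0] * X + row[1] * Y + row[2] * Z + row[3] for row in P]
    return (h[0] / h[2], h[1] / h[2])


# Synthetic check: project known points with a made-up camera, then recover it.
P_true = [[2.0, 0.0, 0.5, 1.0],
          [0.0, 2.0, 0.3, 2.0],
          [0.1, 0.05, 0.02, 1.0]]
points_3d = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 0),
             (1, 0, 1), (0, 1, 1), (1, 1, 1), (2, 1, 0.5)]
points_2d = [project(P_true, X) for X in points_3d]
P_est = dlt_resection(points_3d, points_2d)
max_err = max(abs(a - b) for ra, rb in zip(P_est, P_true) for a, b in zip(ra, rb))
print(f"max parameter error: {max_err:.2e}")
```

The DLT returns the projection matrix only; separating it into internal and external calibration would need an additional RQ decomposition, and a production pipeline would typically refine the linear estimate with a non-linear adjustment.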

3. Results and Discussions

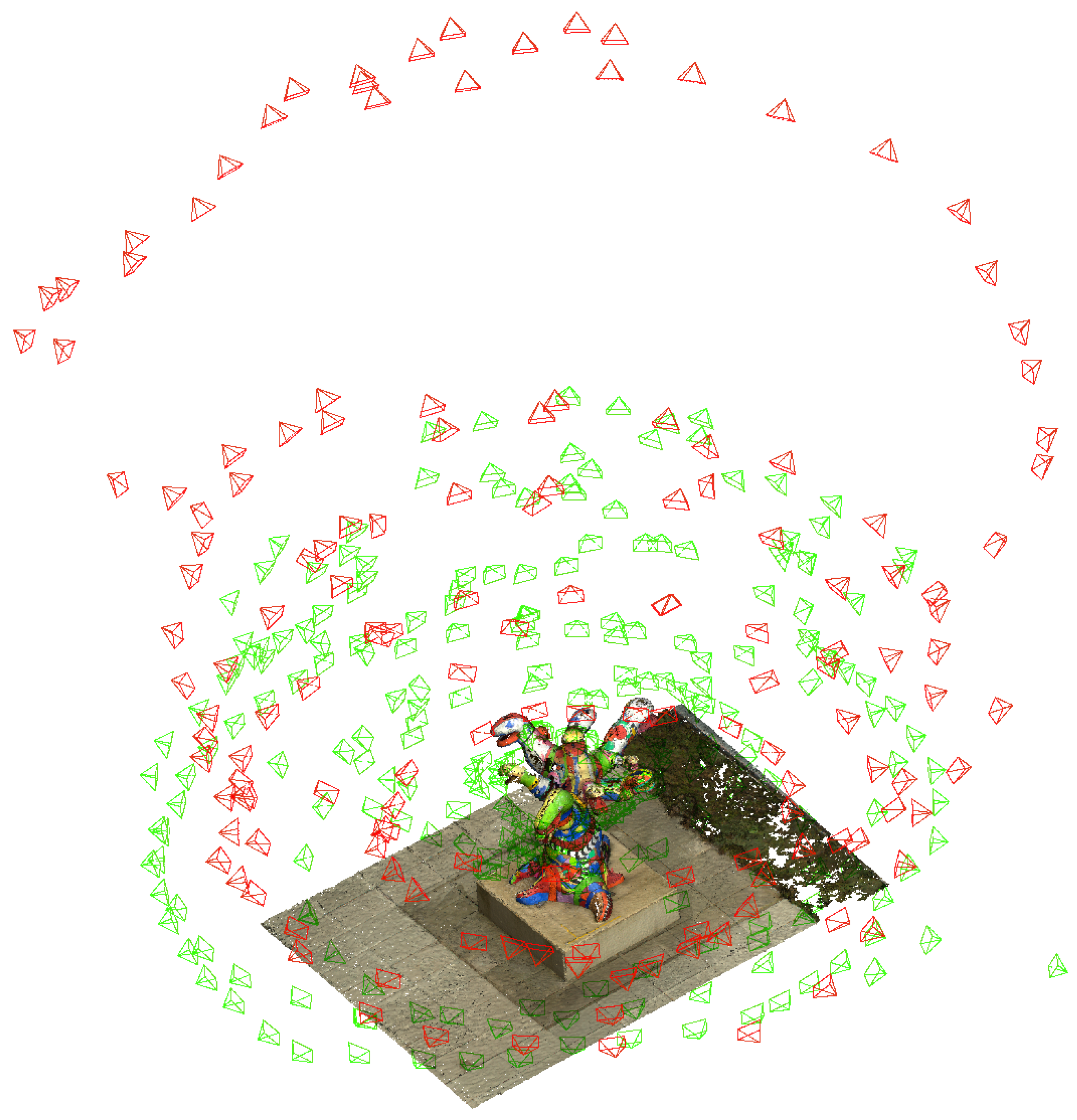

- The Arbre-Serpents dataset (Figure 5) demonstrates the versatility of the pipeline in handling complex UAV-based data acquisition.

- The SA13 dataset (Figure 6) shows the ability to handle varied multi-focal acquisitions.

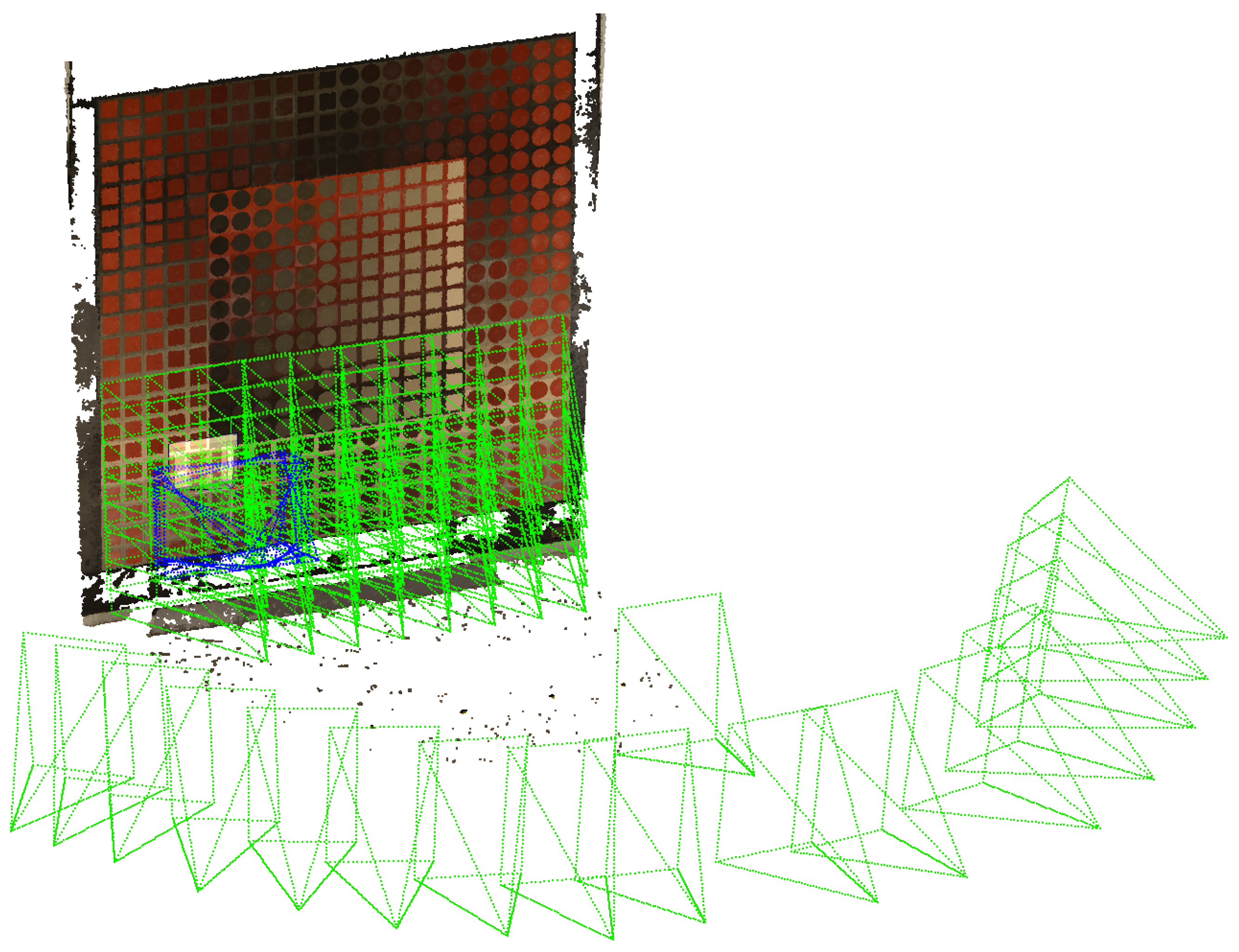

- The Vasarely dataset (Figure 7) highlights the capacity to deal with multi-scale acquisition (14× GSD magnification).

- The Old-Charity dataset (Figure 8) illustrates how quickly an isolated picture can be registered onto a large image set (40 s of computation).

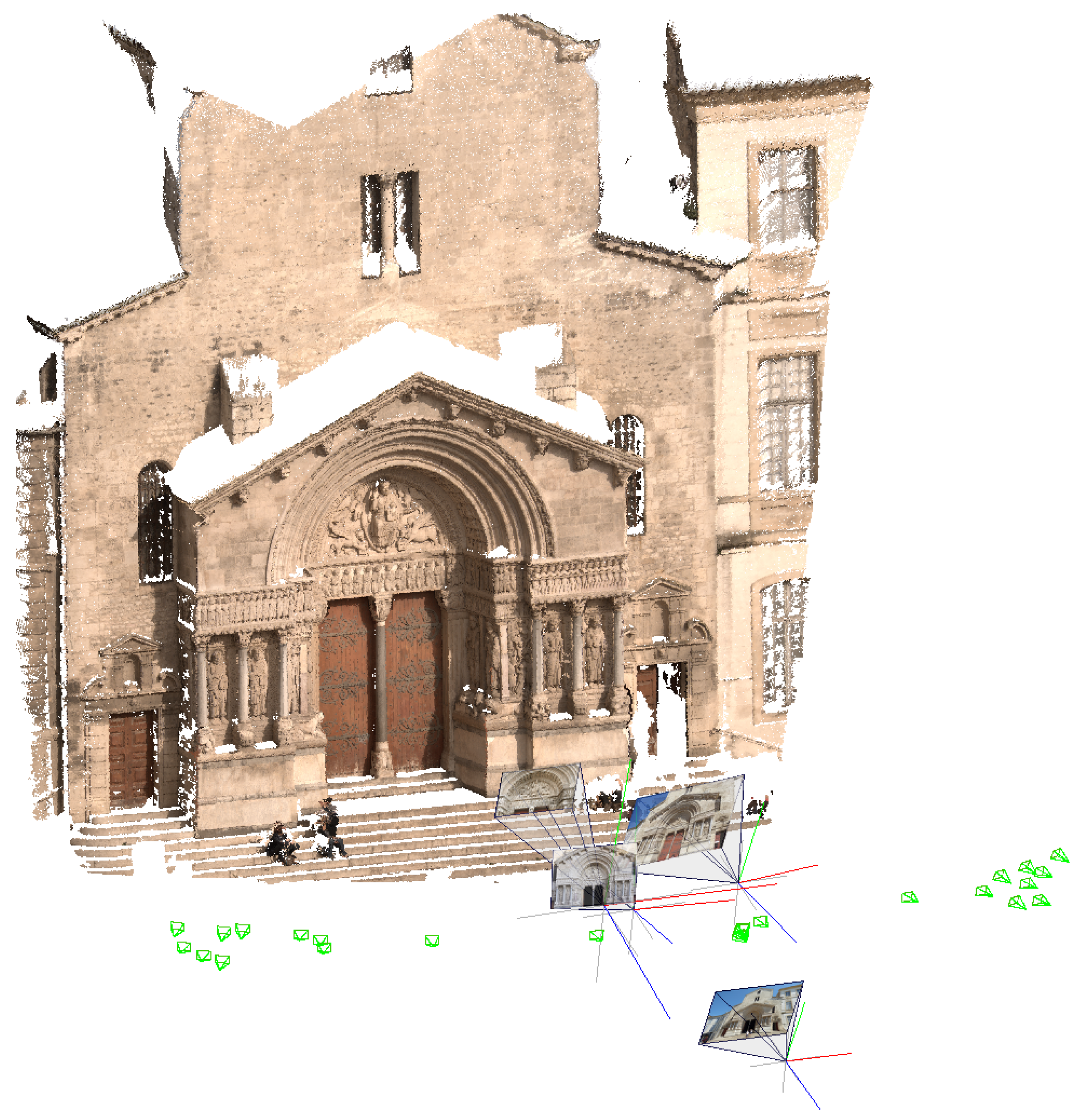

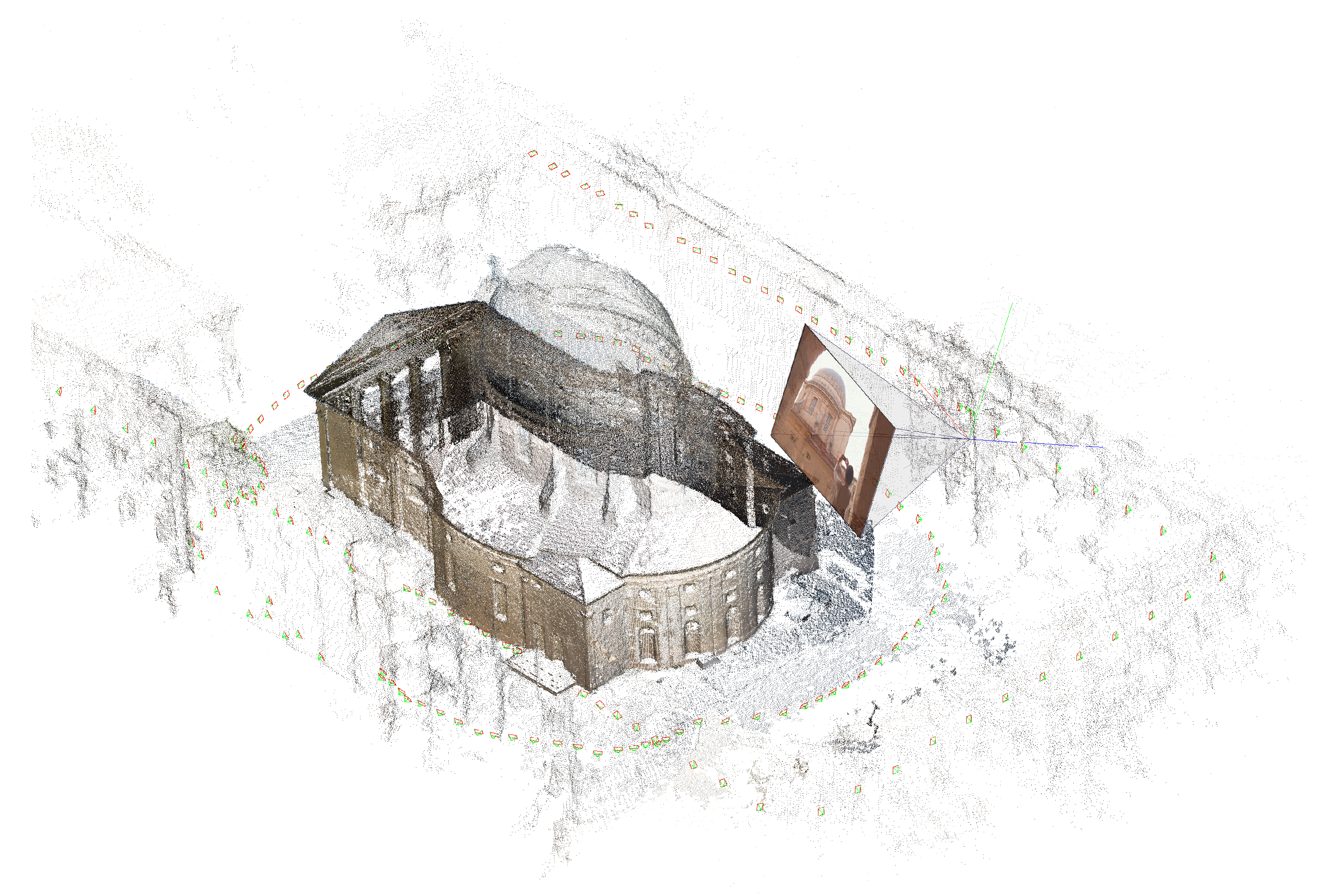

- The Saint-Trophisme dataset (Figure A2) shows robust integration of multi-temporal images, including images from web archives.
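To make the "14× GSD magnification" of the Vasarely dataset concrete, the standard pinhole relation for ground sample distance can be used, where $p$ is the sensor pixel pitch, $D$ the camera-to-object distance and $f$ the focal length. These symbols are introduced here for illustration and are not the paper's notation; the subscripts $c$ and $f$ mark the coarse (overview) and fine (detail) acquisitions.

$$
\mathrm{GSD} = \frac{p\,D}{f},
\qquad
\frac{\mathrm{GSD}_{c}}{\mathrm{GSD}_{f}}
= \frac{p_{c}\,D_{c}/f_{c}}{p_{f}\,D_{f}/f_{f}} = 14 .
$$

With the same sensor and lens, $p$ and $f$ cancel and the ratio reduces to $D_{c}/D_{f} = 14$, i.e. the detail images are taken 14 times closer; a longer focal length for the detail images lets part of that ratio come from the lens instead of the distance.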

3.1. Toward the Interoperability Challenge

- A reversible tie-point format between single- and multi-image observations.

- A lossless intrinsic calibration model that accommodates the varying conventions for intrinsic parameters.

- A generic format for external calibration preserving uncertainty metrics.

- A human- and machine-readable format to manage 2D/3D coordinates of GCPs (ground control points).

- An enriched point cloud format to compare and evaluate multi-source dense matching results.

- On the Nettuno dataset (Figure 9), the interoperable process solved a critical 2D matching issue between terrestrial and UAV acquisitions with very low overlap.

- On the Lys model dataset (Figure 10), the interoperable process oriented a complex dataset of 841 images in a single automated iteration.
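The reversible tie-point format listed above can be illustrated with a small conversion between pairwise matches and multi-image tracks. This is a hedged sketch, assuming that "single" observations means matches stored per image pair and "multi-image" observations means tracks that link one point across several images; the data layout and function names are hypothetical, and the pairs-to-tracks direction uses a standard union-find merge rather than any format actually used by TACO or AIOLI.

```python
from itertools import combinations


def tracks_to_pairs(tracks):
    """Expand each track [(image, x, y), ...] into matches grouped by image pair."""
    pairs = {}
    for track in tracks:
        for a, b in combinations(sorted(track), 2):
            pairs.setdefault((a[0], b[0]), []).append(((a[1], a[2]), (b[1], b[2])))
    return pairs


def pairs_to_tracks(pairs):
    """Merge pairwise matches into tracks with a union-find structure."""
    parent = {}

    def find(obs):
        parent.setdefault(obs, obs)
        while parent[obs] != obs:
            parent[obs] = parent[parent[obs]]  # path halving
            obs = parent[obs]
        return obs

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for (img_a, img_b), matches in pairs.items():
        for pa, pb in matches:
            union((img_a, *pa), (img_b, *pb))

    groups = {}
    for obs in parent:
        groups.setdefault(find(obs), []).append(obs)

    # A track seeing the same image twice is inconsistent, so it is dropped.
    tracks = []
    for observations in groups.values():
        images = [o[0] for o in observations]
        if len(set(images)) == len(images):
            tracks.append(sorted(observations))
    return sorted(tracks)


tracks = [[("img1", 10.0, 20.0), ("img2", 12.5, 19.0), ("img3", 30.0, 5.0)],
          [("img1", 50.0, 60.0), ("img2", 48.0, 61.0)]]
pairs = tracks_to_pairs(tracks)
round_trip = pairs_to_tracks(pairs)
print(len(pairs[("img1", "img2")]), round_trip == sorted(sorted(t) for t in tracks))
```

The round trip is lossless only when every observation belongs to a single track and each track sees an image at most once, which is why inconsistent merged tracks are discarded rather than silently kept.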

4. Conclusions and Perspectives

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. AIOLI

Cooperative Development between TACO and AIOLI

Sample Availability: Data sets presented in this paper are available and shareable on request, provided there is no conflict with the rights holders of the artworks. The TACO library will be released as a deliverable of the ANR SUMUM project, grant reference ANR-17-CE38-0004 of the French Agence Nationale de la Recherche.

| Dataset | Initial Number of Images | Computational Time | Sparse Cloud (k Points) | Dense Cloud (M Points) | Initial Avg. RE (px) |
|---|---|---|---|---|---|
| SA13 | 8 | 2 min | 102 | 2.35 | 1.08 |
| Fragment * | 21 | 4 min | 60 | 1.34 | 0.47 |
| Saint-Trophisme | 26 | 7 min | 94 | 3.13 | 0.54 |
| Owl | 40 | 7 min | 108 | 2.68 | 0.93 |
| Vasarely | 65 | 7 min | 127 | 1.76 | 0.74 |
| Excavation site * | 95 | 43 min | 641 | 1.34 | 0.49 |
| Small temple * | 134 | 120 min | 1850 | 4.04 | 0.74 |
| Old-charity | 181 | 170 min | 224 | 1.14 | 0.73 |
| Arbre-serpents | 273 | 390 min | 1468 | 5.62 | 1.26 |
| Nettuno | 256 | Nc. | 2806 | 9.87 | 0.43 |
| Lys model | 841 | Nc. | 20,989 | 44.4 | 0.66 |

| Dataset | Added Images | Number of Iterations | Number of Sensors | Number of Focal Lengths | Final Avg. RE (px) |
|---|---|---|---|---|---|
| SA13 | +107 | 12 | 1 | 6 | 1.54 |
| Saint-Trophisme | +4 | 4 | 4 | 4 | 0.6 * |
| Vasarely | +6 | 3 | 1 | 2 | 0.80 |
| Old-charity | +1 | 1 | 1 | 1 | 1.12 * |
| Arbre-serpents | +259 | 3 | 1 | 1 | 1.65 |
| Nettuno | Na | 1 | 2 | 2 | 0.59 |
| Lys model | Na | 1 | 2 | 3 | 1.21 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Pamart, A.; Morlet, F.; De Luca, L.; Veron, P. A Robust and Versatile Pipeline for Automatic Photogrammetric-Based Registration of Multimodal Cultural Heritage Documentation. Remote Sens. 2020, 12, 2051. https://doi.org/10.3390/rs12122051
