Robust Infrared Small Target Detection via Jointly Sparse Constraint of l1/2-Metric and Dual-Graph Regularization

Abstract

1. Introduction

2. Preliminaries and Related Algorithms

2.1. Graph Laplacian
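The body of this subsection is not preserved in this extract. For reference, the standard definition is given here as an assumption: a weighted undirected graph has a symmetric, non-negative affinity matrix $W$ over nodes $x_1,\dots,x_n$, which are the columns of a data matrix $X$, and a diagonal degree matrix $D$. Its graph Laplacian and the identity that makes it useful as a smoothness regularizer are

$$
L = D - W,\qquad D_{ii} = \sum_{j} W_{ij},
$$

$$
\operatorname{tr}\!\left(X L X^{\top}\right) = \sum_{i} D_{ii}\,\|x_i\|_2^2 - \sum_{i,j} W_{ij}\, x_i^{\top} x_j = \frac{1}{2}\sum_{i,j} W_{ij}\,\|x_i - x_j\|_2^2 .
$$

The second equality follows by expanding $\|x_i - x_j\|_2^2 = \|x_i\|_2^2 + \|x_j\|_2^2 - 2x_i^{\top}x_j$ and using the symmetry of $W$. Minimizing this trace therefore pulls strongly connected columns toward each other.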

2.2. Related Algorithms

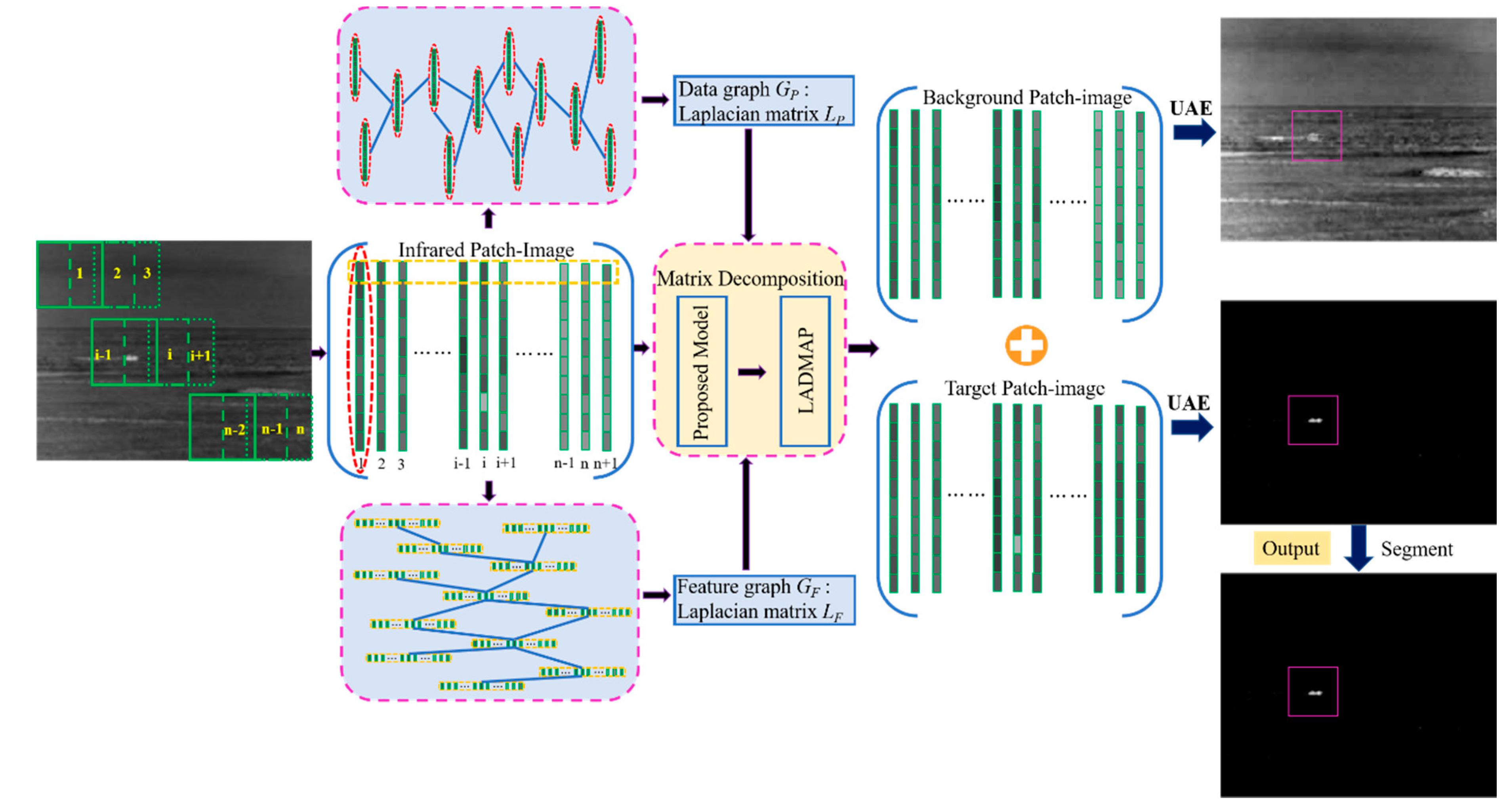

3. Algorithm Description

3.1. Patch and Feature Graph Regularizations
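The exact graph construction is not recoverable from this extract. Algorithm 1 does take "the number of nearest neighbors" as an input, so the sketch below assumes a common dual-graph setup in the spirit of [26,27,29]. A k-nearest-neighbour graph is built over the columns (patches) and another over the rows (features) of a matrix, and the penalty $\operatorname{tr}(B L_p B^{\top}) + \operatorname{tr}(B^{\top} L_f B)$ is evaluated. The names `knn_laplacian` and `dual_graph_penalty`, the heat-kernel weights, and the median bandwidth heuristic are all illustrative assumptions, not the authors' choices.

```python
import numpy as np


def knn_laplacian(X, k, sigma2=None):
    """Laplacian L = D - W of a symmetric k-nearest-neighbour graph.

    Each column of X is one node. Edge weights use a heat kernel
    exp(-||x_i - x_j||^2 / sigma2). By default sigma2 is the median non-zero
    squared distance (a hypothetical heuristic, not taken from the paper).
    """
    n = X.shape[1]
    sq = np.sum(X ** 2, axis=0)
    # Pairwise squared Euclidean distances between columns (clipped at 0).
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X.T @ X, 0.0)
    if sigma2 is None:
        sigma2 = np.median(d2[d2 > 0])
    W = np.zeros((n, n))
    for i in range(n):
        order = np.argsort(d2[i])
        nbrs = [j for j in order if j != i][:k]  # k nearest, excluding node i
        W[i, nbrs] = np.exp(-d2[i, nbrs] / sigma2)
    W = np.maximum(W, W.T)  # keep an edge if either endpoint selected it
    L = np.diag(W.sum(axis=1)) - W
    return L, W


def dual_graph_penalty(B, L_patch, L_feat):
    """tr(B L_patch B^T) + tr(B^T L_feat B).

    L_patch is built over the columns of B (patches), L_feat over its rows
    (features), so the two terms smooth B along both dimensions.
    """
    return np.trace(B @ L_patch @ B.T) + np.trace(B.T @ L_feat @ B)


# Usage on a random stand-in for a patch-image.
rng = np.random.default_rng(0)
B = rng.normal(size=(16, 30))
L_patch, _ = knn_laplacian(B, k=5)    # 30 x 30, one node per column
L_feat, _ = knn_laplacian(B.T, k=5)   # 16 x 16, one node per row
print(dual_graph_penalty(B, L_patch, L_feat))
```

Symmetrizing with the element-wise maximum keeps $W$ symmetric, which the trace identity for the Laplacian requires.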

3.2. l1/2-Norm Regularization with Non-Negative Constraint
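The l1/2 penalty has a closed-form proximal map, the half-thresholding operator derived by Xu et al. [25]. The sketch below assumes the scalar subproblem $\min_x (x - z)^2 + \lambda |x|^{1/2}$, which is the scaling used in [25]. Whether the target update in this paper uses exactly this scaling of $\lambda$ cannot be recovered from the extract. The non-negative constraint is handled by clipping the input at zero before thresholding. This is exact for the separable problem: for $z \le 0$ the objective is increasing on $x \ge 0$, so the constrained minimizer is $0$. For $z > 0$ the unconstrained minimizer is already non-negative. Function names are hypothetical.

```python
import numpy as np


def half_threshold(z, lam):
    """Half-thresholding operator of Xu et al. [25], applied elementwise.

    Returns argmin_x (x - z)^2 + lam * |x|^(1/2) for every entry of z.
    """
    z = np.asarray(z, dtype=float)
    out = np.zeros_like(z)
    # Entries whose magnitude does not exceed this threshold map exactly to zero.
    thresh = (54.0 ** (1.0 / 3.0) / 4.0) * lam ** (2.0 / 3.0)
    keep = np.abs(z) > thresh
    zk = z[keep]
    phi = np.arccos((lam / 8.0) * (np.abs(zk) / 3.0) ** (-1.5))
    out[keep] = (2.0 / 3.0) * zk * (1.0 + np.cos(2.0 * np.pi / 3.0 - (2.0 / 3.0) * phi))
    return out


def nonneg_half_threshold(z, lam):
    """Same proximal map restricted to x >= 0 (any z <= 0 maps to 0)."""
    return half_threshold(np.maximum(z, 0.0), lam)


# Negative and small entries are zeroed; large positive ones are shrunk.
print(nonneg_half_threshold(np.array([-2.0, 0.5, 2.0]), 1.0))
```

Compared with soft thresholding for the l1 norm, this operator shrinks large entries less while still zeroing small ones, which is the usual motivation for l1/2 sparsity.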

4. LADMAP for Solving the Proposed Model

4.1. Solution of the Proposed Method

| Algorithm 1: The revised LADMAP for Solving the Proposed Model |
|---|
| Input: Infrared small target image , , , and the number of nearest neighbors |
| Output: |
| Initialize: Construct infrared patch-image ; ; ; ; ; ; ; ; ; Compute and from graph and . |
| While not converged do |
| 1: Compute by Equation (10); |
| 2: Compute by Equation (15); |
| where ; |
| . |
| 3: Compute by Equation (20) and by Equation (21); |
| 4: Check convergence condition according to Equation (23); |
| 5: Update k: k = k + 1 |
| end |
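The update formulas referenced by Algorithm 1 (Equations (10), (15), (20), (21), and (23)) are not preserved in this extract. The sketch below therefore shows only the loop pattern they slot into: alternate variable updates, a multiplier update, a penalty update, and a relative-residual stopping test. It does this on the plain RPCA model $\min \|B\|_* + \lambda\|T\|_1$ subject to $D = B + T$, solved with the inexact ALM of Lin, Chen and Ma [49]. This is not the proposed model. The dual-graph terms, the l1/2 penalty, the non-negativity constraint, and the LADMAP linearization are all omitted. The parameter defaults (`rho`, `tol`, initial penalty) follow common inexact-ALM practice and are assumptions. Which variable each numbered step of Algorithm 1 updates cannot be recovered either, so the step labels in the comments are only a loose correspondence.

```python
import numpy as np


def svt(M, tau):
    """Singular value thresholding: proximal map of tau * nuclear norm."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def soft(M, tau):
    """Elementwise soft thresholding: proximal map of tau * l1 norm."""
    return np.sign(M) * np.maximum(np.abs(M) - tau, 0.0)


def rpca_ialm(D, lam=None, rho=1.5, tol=1e-7, max_iter=500):
    """Plain RPCA D = B + T by inexact ALM (NOT the paper's graph/l1/2 model)."""
    m, n = D.shape
    lam = 1.0 / np.sqrt(max(m, n)) if lam is None else lam
    norm_fro = np.linalg.norm(D, "fro")
    norm_two = np.linalg.norm(D, 2)
    Y = D / max(norm_two, np.abs(D).max() / lam)  # dual-feasible starting multiplier
    mu = 1.25 / norm_two
    mu_max = mu * 1e7
    T = np.zeros_like(D)
    for k in range(max_iter):
        B = svt(D - T + Y / mu, 1.0 / mu)    # cf. step 1: low-rank background
        T = soft(D - B + Y / mu, lam / mu)   # cf. step 2: sparse target
        R = D - B - T
        Y = Y + mu * R                       # cf. step 3: multiplier update
        mu = min(rho * mu, mu_max)           # cf. step 3: penalty update
        if np.linalg.norm(R, "fro") / norm_fro < tol:  # cf. step 4: convergence
            break
    return B, T, k + 1
```

In the proposed method, the two shrinkage steps would be replaced by the paper's own updates, for example a graph-regularized background step and a non-negative l1/2 target step.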

4.2. Complexity Analysis

5. Experimental Evaluation and Analysis

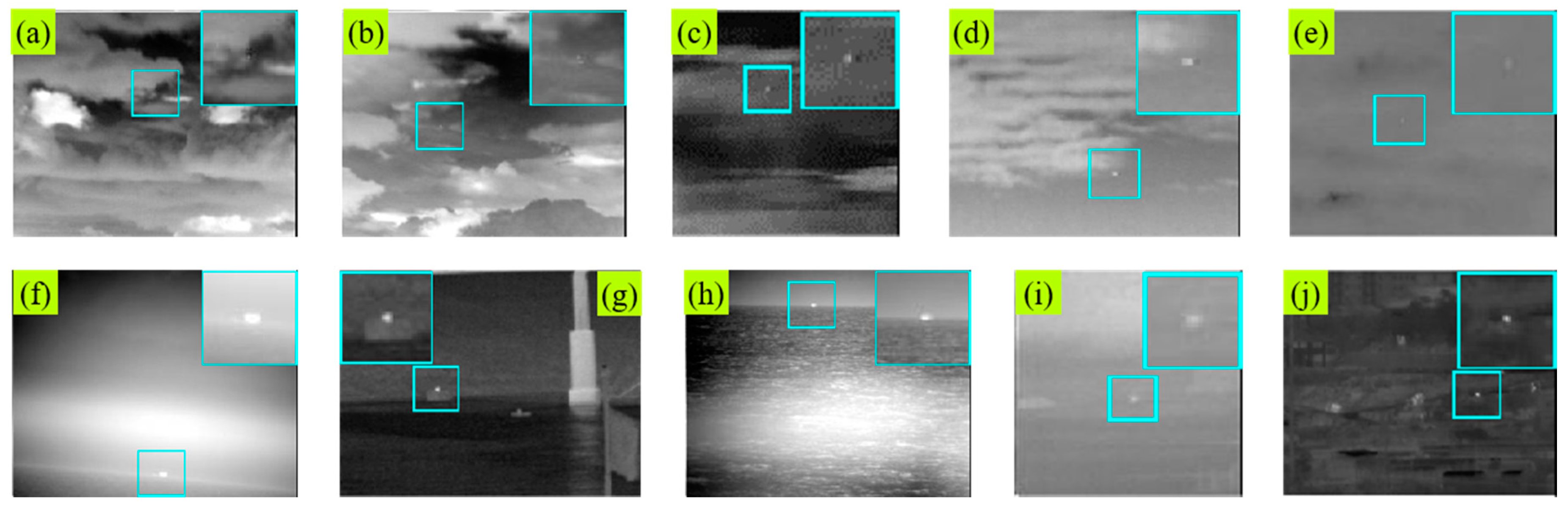

5.1. Datasets and Baselines

5.2. Evaluation Indicators

5.3. Validity of the Proposed Patch and Feature Sparse Regularizations

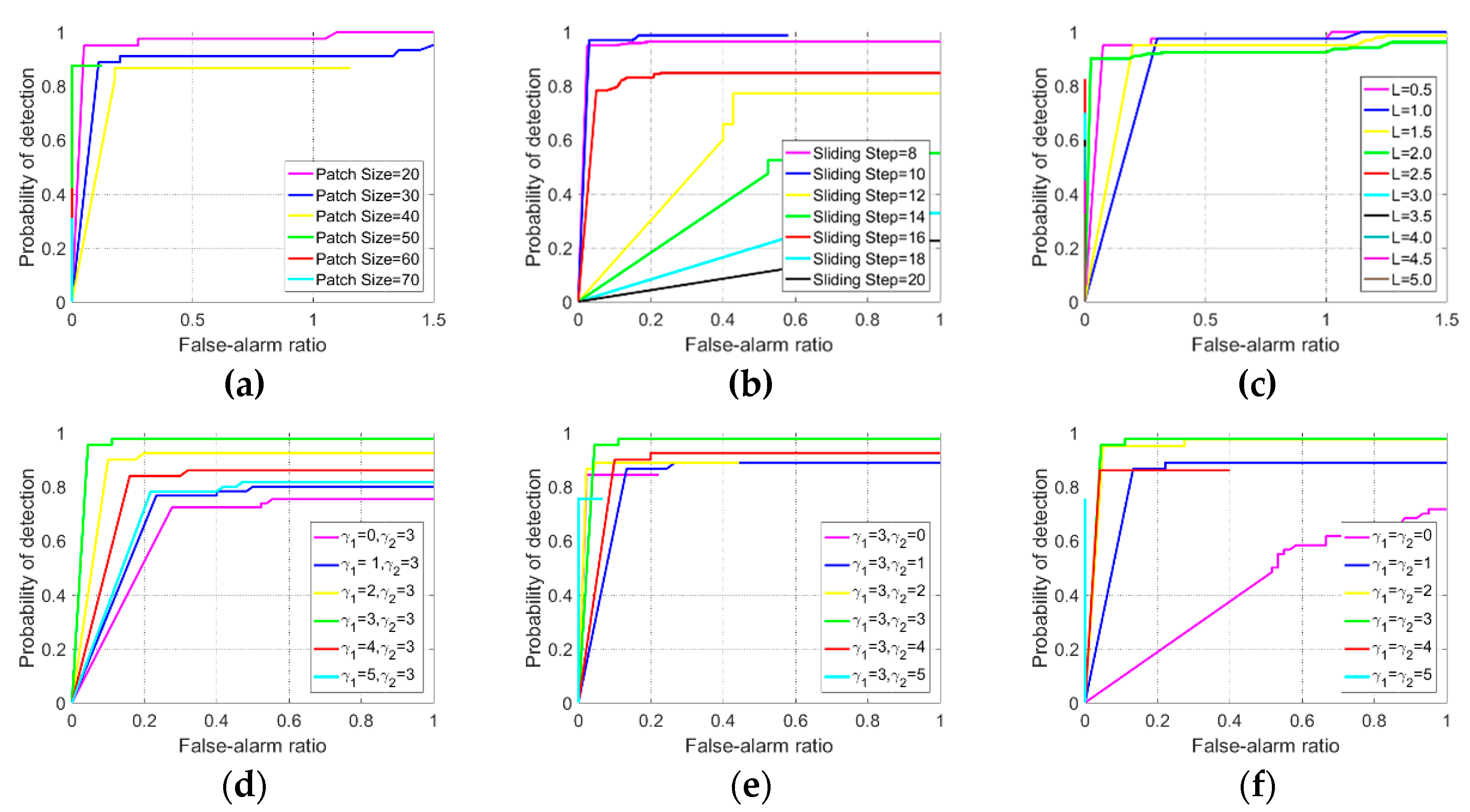

5.4. Sensitivity to Parameters

5.5. Qualitative Evaluations

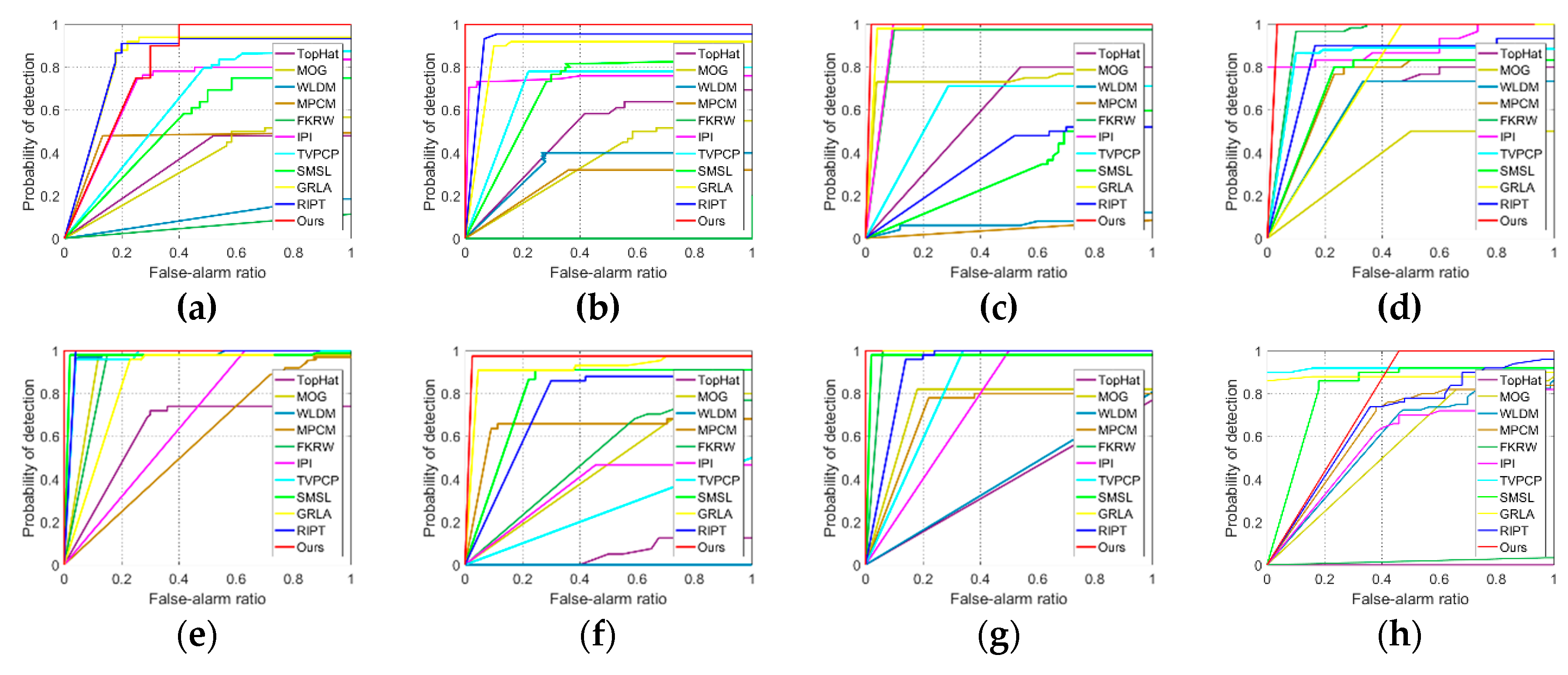

5.6. Quantitative Evaluations

5.7. Convergence Analysis

5.8. Execution Time Comparison

6. Discussion

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhou, A.R.; Xie, W.X.; Pei, J.H. Background modeling in the Fourier domain for maritime infrared target detection. IEEE Trans. Circuits Syst. Video Technol. 2019, 99, 1–16.

- Rozantsev, A.; Lepetit, V.; Fua, P. Detecting flying objects using a single moving camera. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 879–892.

- Li, Y.; Zhang, Y. Robust infrared small target detection using local steering kernel reconstruction. Pattern Recogn. 2017, 77, 113–125.

- Liu, D.L.; Li, Z.H.; Wang, X.R.; Zhang, J.Q. Moving target detection by nonlinear adaptive filtering on temporal profiles in infrared image sequences. Infrared Phys. Technol. 2015, 73, 41–48.

- Rodriguez-Blanco, M.; Golikov, V. Multiframe GLRT-based adaptive detection of multipixel targets on a sea surface. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1–7.

- Zhao, F.; Wang, T.T.; Shao, S.D.; Zhang, E.H.; Lin, G.F. Infrared moving small-target detection via spatiotemporal consistency of trajectory points. IEEE Geosci. Remote Sens. Lett. 2020, 17, 122–126.

- Gao, C.Q.; Wang, L.; Xiao, Y.X.; Zhao, Q.; Meng, D.Y. Infrared small-dim target detection based on Markov random field guided noise modeling. Pattern Recogn. 2018, 76, 463–475.

- Deshpande, S.D.; Meng, H.E.; Ronda, V.; Chan, P. Max-mean and Max-median filters for detection of small-targets. Proc. SPIE Int. Soc. Opt. Eng. 1999, 3809, 74–83.

- Bai, X.Z.; Zhou, F.G. Analysis of new top-hat transformation and the application for infrared dim small target detection. Pattern Recogn. 2010, 43, 2145–2156.

- Gu, Y.F.; Wang, C.; Liu, B.X.; Zhang, Y. A kernel-based nonparametric regression method for clutter removal in infrared small-target detection applications. IEEE Geosci. Remote Sens. Lett. 2010, 7, 469–473.

- Chen, C.L.; Li, H.; Wei, Y.T.; Xia, T.; Tang, Y.Y. A local contrast method for small infrared target detection. IEEE Trans. Geosci. Remote Sens. 2013, 52, 574–581.

- Wei, Y.T.; You, X.G.; Li, H. Multiscale patch-based contrast measure for small infrared target detection. Pattern Recogn. 2016, 58, 216–226.

- Deng, H.; Sun, X.P.; Liu, M.L.; Ye, C.H.; Zhou, X. Small infrared target detection based on weighted local difference measure. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4204–4214.

- Li, W.; Zhao, M.J.; Deng, X.Y.; Li, L.; Li, L.W.; Zhang, W.J. Infrared small target detection using local and nonlocal spatial information. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3677–3689.

- Qin, Y.; Bruzzone, L.; Gao, C.Q.; Li, B. Infrared small target detection based on facet kernel and random walker. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7104–7118.

- Yao, S.K.; Chang, Y.; Qin, X.J. A coarse-to-fine method for infrared small target detection. IEEE Geosci. Remote Sens. Lett. 2019, 16, 256–260.

- Han, J.H.; Liu, S.B.; Qin, G.; Zhao, Q.; Zhang, H.H.; Li, N.N. A local contrast method combined with adaptive background estimation for infrared small target detection. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1442–1446.

- Gao, C.Q.; Meng, D.Y.; Yang, Y.; Wang, Y.T.; Zhou, X.F.; Hauptmann, A.G. Infrared patch-image model for small target detection in a single image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

- Wright, J.; Ganesh, A.; Rao, S.; Ma, Y. Robust principal component analysis: Exact recovery of corrupted low-rank matrices via convex optimization. Adv. Neural Inf. Process. Syst. 2009, 58, 289–298.

- Wang, X.Y.; Peng, Z.M.; Kong, D.H.; He, Y.M. Infrared dim and small target detection based on stable multisubspace learning in heterogeneous scenes. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5481–5493.

- Dai, Y.M.; Wu, Y.Q. Reweighted infrared patch-tensor model with both nonlocal and local priors for single-frame small target detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3752–3767.

- Dai, Y.M.; Wu, Y.Q.; Song, Y. Infrared small target and background separation via column-wise weighted robust principal component analysis. Infrared Phys. Technol. 2016, 77, 421–430.

- Zhang, L.D.; Peng, Z.M. Infrared small target detection based on partial sum of the tensor nuclear norm. Remote Sens. 2019, 11, 382.

- Zhou, F.; Wu, Y.Q.; Dai, Y.M.; Wang, P. Detection of small target using Schatten 1/2 quasi-norm regularization with reweighted sparse enhancement in complex infrared scenes. Remote Sens. 2019, 11, 2058.

- Xu, Z.B.; Chang, X.; Xu, F.; Zhang, H. L1/2 regularization: A thresholding representation theory and a fast solver. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 1013–1027.

- Yin, M.; Gao, J.B.; Lin, Z.C. Laplacian regularized low-rank representation and its applications. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 504–517.

- Shang, F.H.; Jiao, L.C.; Wang, F. Graph dual regularization non-negative matrix factorization for co-clustering. Pattern Recogn. 2012, 45, 2237–2250.

- Javed, S.; Mahmood, A.; Al-Maadeed, S.; Bouwmans, T.; Jung, S.K. Moving object detection in complex scene using spatiotemporal structured-sparse RPCA. IEEE Trans. Image Process. 2019, 28, 1007–1022.

- Tang, C.; Liu, X.W.; Zhu, X.Z.; Xiong, J.; Li, M.M.; Xia, J.Y.; Wang, X.K.; Wang, L.Z. Feature selective projection with low-rank embedding and dual Laplacian regularization. IEEE Trans. Knowl. Data Eng. 2019, 1–14.

- Fan, B.J.; Cong, Y.; Tang, Y.D. Dual-graph regularized discriminative multitask tracker. IEEE Trans. Multimed. 2018, 20, 2303–2315.

- Lin, Z.C.; Liu, R.S.; Su, Z.X. Linearized alternating direction method with adaptive penalty for low-rank representation. Adv. Neural Inf. Process. Syst. 2011, 612–620.

- Zhao, Y.; Pan, H.B.; Du, C.P.; Peng, Y.R.; Zheng, Y. Bilateral two-dimensional least mean square filter for infrared small target detection. Infrared Phys. Technol. 2014, 65, 17–23.

- Sun, Y.; Yang, J.G.; Li, M.; An, W. Infrared small-faint target detection using non-i.i.d. mixture of Gaussians and flux density. Remote Sens. 2019, 11, 2831.

- Qin, Y.; Li, B. Effective infrared small target detection utilizing a novel local contrast method. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1890–1894.

- Bai, X.Z.; Bi, Y.G. Derivative entropy-based contrast measure for infrared small-target detection. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2452–2466.

- Deng, H.; Sun, X.P.; Zhou, X. A multiscale fuzzy metric for detecting small infrared targets against chaotic cloudy/sea-sky backgrounds. IEEE Trans. Cybern. 2018, 49, 1694–1707.

- Liu, D.P.; Cao, L.; Li, Z.Z.; Liu, T.M.; Che, P. Infrared small target detection based on flux density and direction diversity in gradient vector field. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2528–2554.

- Lu, Y.; Huang, S.C.; Zhao, W. Sparse representation based infrared small target detection via an online-learned double sparse background dictionary. Infrared Phys. Technol. 2019, 99, 14–27.

- Wang, X.; Shen, S.Q.; Ning, C.; Xu, M.X.; Yan, X.J. A sparse representation-based method for infrared dim target detection under sea–sky background. Infrared Phys. Technol. 2015, 71, 347–355.

- Gao, J.Y.; Guo, Y.L.; Lin, Z.P.; An, W.; Li, J. Robust infrared small target detection using multiscale gray and variance difference measures. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 5039–5052.

- Wu, S.C.; Zuo, Z.R. Small target detection in infrared images using deep convolutional neural networks. Int. J. Wavelets. Multi. 2019, 38, 03371.

- Lin, L.K.; Wang, S.Y.; Tang, Z.X. Using deep learning to detect small targets in infrared oversampling images. J. Syst. Eng. Electron. 2018, 29, 947–952.

- Zhao, D.; Zhou, H.X.; Rang, S.H.; Jia, X.P. An adaptation of CNN for small target detection in the infrared. In Proceedings of the IGARSS 2018-2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 669–672.

- Dey, M.; Rana, S.P.; Siarry, P. A robust FLIR target detection employing an auto-convergent pulse coupled neural network. Remote Sens. Lett. 2019, 10, 639–648.

- Wang, X.Y.; Peng, Z.M.; Kong, D.H.; Zhang, P.; He, Y.M. Infrared dim target detection based on total variation regularization and principal component pursuit. Image Vis. Comput. 2017, 63, 1–9.

- Zhou, F.; Wu, Y.Q.; Dai, Y.M.; Wang, P.; Ni, K. Graph-regularized Laplace approximation for detecting small infrared target against complex backgrounds. IEEE Access 2019, 7, 85354–85371.

- Zhu, H.; Liu, S.M.; Deng, L.Z.; Li, Y.S.; Xiao, F. Infrared small target detection via low-rank tensor completion with top-hat regularization. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1004–1016.

- Deng, Y.; Dai, Q.H.; Liu, R.S.; Zhang, Z.K.; Hu, S.Q. Low-rank structure learning via nonconvex heuristic recovery. IEEE Trans. Neural Netw. Learn. Syst. 2013, 24, 383–396.

- Lin, Z.; Chen, M.; Ma, Y. The augmented Lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv 2010, arXiv:1009.5055.

- Zhou, X.W.; Yang, C.; Zhao, H.Y.; Yu, W.C. Low-rank modeling and its applications in image analysis. ACM Comput. Surv. (CSUR) 2014, 47, 1–33.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

- Wen, Z.W.; Goldfarb, D.; Yin, W.T. Alternating direction augmented Lagrangian methods for semidefinite programming. Math. Program. Comput. 2010, 2, 203–230.

| Methods | Advantages | Disadvantages |
|---|---|---|
| IPI [18] | Performs well on uniform backgrounds | Over-shrinkage leads to missed detections or residual clutter; time-consuming |
| IPT [21] | Performs well in relatively complex scenes; computationally efficient | Loses dim targets; fails to eliminate target-like points |
| STPI [7] | Performs well on slowly changing backgrounds | Sensitive to strong edges and clutter; has difficulty handling non-Gaussian noise |
| STTM [47] | Performs well on homogeneous and slowly changing scenes | Has difficulty with highly dynamic scenes; tends to leave residuals |
| SMSL [20] | Performs well on scenes with salient targets; computationally efficient | Struggles to suppress strong edges; tends to miss weak targets |
| WIPI [22] | Works well on high-contrast scenes | Cannot handle sparse noise; time-consuming |
| Reference [23] | Eliminates sparse edges and noise; computationally efficient | Has difficulty suppressing interference that resembles targets |
| Reference [24] | Preserves target structure; suppresses non-target residuals | Cannot completely suppress strong edge structures |
| TVPCP [45] | Recovers homogeneous backgrounds well | Sensitive to ground disturbances with high thermal radiation; time-consuming |
| GRLA [46] | Performs well in background suppression | Weakens target energy; fails to preserve target structure |

| Scenes | Sequences | Frames/Resolution | Target Features | Background Features |
|---|---|---|---|---|
| Deep-space | 1, 2 | 100,100/, | Very small and weak with low contrast; moves along the cloud edge or is buried in cloud. | Contains numerous irregular, strong cloud clutter; brightness changes greatly. |
| Sky-cloudy | 3, 4, 5 | 50,30,100/ , , | Small with irregular shape; brightness varies greatly. | Contains substantial banded and floccus clouds and background noise. Low resolution. |
| Sea-sky | 6, 7, 8 | 100,100,200/ , , | Target size changes greatly. Relatively high contrast. Emerges on the sea-sky line. | Strong sea waves, bright glitter, and artificial buildings. Low signal-to-clutter ratio. |
| Terrain | 9, 10 | 100,100/, | Small square target with a fuzzy contour, moving fast. Contrast changes markedly. | Heavy noise, plants, mountains, and man-made buildings. Low contrast. |

| No. | Methods | Parameter Settings |
|---|---|---|
| 1 | TDLMS | Support size: , step size: |
| 2 | TopHat | Structure shape: square, structure size: |
| 3 | MOG | Patch size: , step size: , noise component: 3, frames: 3, , |
| 4 | WLDM | , , |
| 5 | MPCM | |
| 6 | FKRW | , , , window size: |
| 7 | IPI | Patch size: , step size: , , , |
| 8 | TVPCP | Patch size: , step size: , , , , , , |
| 9 | SMSL | Patch size: , step size: , , , |
| 10 | GRLA | Patch size: , step size: , , , , , , , |
| 11 | RIPT | Patch size: , step size: , , , , , |
| 12 | Ours | Patch size: , step size: , , , , , |

| S \ P | 20 | 30 | 40 | 50 | 60 | 70 |
|---|---|---|---|---|---|---|
| 8 | 0.68 | 1.84 | 3.58 | 5.71 | 9.53 | 13.75 |
| 10 | 0.38 | 0.95 | 1.74 | 3.23 | 5.35 | 7.69 |
| 12 | 0.27 | 0.61 | 1.17 | 2.15 | 3.64 | 4.76 |
| 14 | 0.21 | 0.43 | 0.83 | 1.52 | 2.17 | 3.46 |
| 16 | 0.16 | 0.35 | 0.63 | 1.04 | 1.60 | 2.45 |
| 18 | 0.14 | 0.26 | 0.46 | 0.81 | 1.19 | 1.73 |
| 20 | 0.11 | 0.22 | 0.38 | 0.68 | 0.88 | 1.44 |
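The table's caption is not preserved. Reading P and S as the patch size and step size listed in the parameter table (an assumption), the trend above, with values falling as S grows and rising as P grows, is consistent with how an IPI-style patch-image is built. Each P × P window taken with stride S becomes one vectorized column, so an m × n image yields a matrix with P² rows and roughly (⌊(m − P)/S⌋ + 1)(⌊(n − P)/S⌋ + 1) columns. The sketch below builds such a matrix and folds it back. The function names, the extra border-aligned window, and mean-based folding are illustrative assumptions. Some IPI-style methods use other conventions, such as a median over overlapping pixels.

```python
import numpy as np


def window_starts(length, patch, step):
    """Top-left offsets of sliding windows along one axis (assumes patch <= length).

    A final window flush with the border is appended when the stride does not
    land on it exactly (an assumed convention, so every pixel is covered).
    """
    starts = list(range(0, length - patch + 1, step))
    if starts[-1] != length - patch:
        starts.append(length - patch)
    return starts


def to_patch_image(img, patch, step):
    """Stack every patch x patch window, vectorized row-major, as one column."""
    rows = window_starts(img.shape[0], patch, step)
    cols = window_starts(img.shape[1], patch, step)
    columns = [img[r:r + patch, c:c + patch].reshape(-1) for r in rows for c in cols]
    return np.stack(columns, axis=1)


def from_patch_image(D, shape, patch, step):
    """Fold columns back into an image, averaging pixels covered by several patches."""
    acc = np.zeros(shape)
    cnt = np.zeros(shape)
    j = 0
    for r in window_starts(shape[0], patch, step):
        for c in window_starts(shape[1], patch, step):
            acc[r:r + patch, c:c + patch] += D[:, j].reshape(patch, patch)
            cnt[r:r + patch, c:c + patch] += 1
            j += 1
    return acc / cnt


img = np.random.default_rng(0).random((240, 320))
for step in (10, 20):
    print(step, to_patch_image(img, patch=50, step=step).shape)
```

Doubling the step roughly quarters the number of columns, and any per-iteration SVD of this matrix shrinks with it.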

| Scenes | Metrics | TopHat | MOG | WLDM | MPCM | FKRW | IPI | TVPCP | SMSL | GRLA | RIPT | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Deep-space (Sequences 1 and 2) | SCRG | 1.75 | 4.26 | 18.42 | 23.75 | 102.19 | 82.14 | 112.03 | 196.64 | 296.46 | 348.13 | 512.06 |
| | BSF | 3.23 | 3.12 | 16.49 | 13.75 | 142.19 | 68.68 | 83.02 | 168.43 | 182.69 | 212.46 | 364.04 |
| | CG | 1.78 | 1.06 | 126.49 | 248.37 | 26.22 | 108.27 | 113.67 | 22.35 | 80.26 | 82.19 | 186.44 |
| Sky-cloud (Sequences 3–5) | SCRG | 4.54 | 3.15 | 10.81 | 32.28 | 55.62 | 126.81 | 124.26 | 212.06 | 318.29 | 316.42 | 586.24 |
| | BSF | 1.04 | 2.08 | 6.81 | 21.24 | 48.63 | 122.19 | 110.13 | 182.56 | 286.92 | 228.89 | 426.25 |
| | CG | 3.93 | 0.44 | 86.81 | 224.88 | 136.86 | 62.27 | 48.53 | 29.25 | 102.71 | 57.98 | 146.42 |
| Sea-sky (Sequences 6–8) | SCRG | 1.21 | 3.48 | 8.16 | 42.23 | 45.18 | 104.15 | 144.58 | 162.10 | 206.38 | 172.61 | 332.62 |
| | BSF | 2.65 | 3.68 | 4.25 | 22.23 | 25.81 | 128.76 | 156.36 | 109.24 | 166.73 | 118.09 | 306.16 |
| | CG | 2.76 | 2.18 | 116.23 | 186.43 | 32.52 | 65.87 | 75.35 | 36.62 | 48.67 | 69.08 | 108.91 |
| Terrain-sky (Sequences 9 and 10) | SCRG | 1.42 | 2.69 | 15.35 | 58.39 | 70.92 | 96.00 | 108.66 | 134.69 | 266.73 | 232.56 | |
| | BSF | 1.55 | 1.22 | 8.16 | 48.39 | 82.93 | 104.20 | 88.66 | 146.28 | 216.22 | 197.86 | |
| | CG | 2.82 | 0.67 | 286.18 | 257.39 | 69.28 | 81.40 | 52.11 | 30.28 | 66.62 | 86.43 | |
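SCRG, BSF, and CG are commonly defined from the mean target intensity $\mu_t$, the mean background intensity $\mu_b$, and the background standard deviation $\sigma_b$, each measured before (in) and after (out) detection. With $\mathrm{CON} = |\mu_t - \mu_b|$ and $\mathrm{SCR} = \mathrm{CON}/\sigma_b$, the usual forms are $\mathrm{SCRG} = \mathrm{SCR}_{out}/\mathrm{SCR}_{in}$, $\mathrm{BSF} = \sigma_{in}/\sigma_{out}$, and $\mathrm{CG} = \mathrm{CON}_{out}/\mathrm{CON}_{in}$. The exact definitions and neighbourhood sizes used in Section 5.2 are not recoverable from this extract, so the sketch below assumes these common forms. The boolean masks, the function name, and the small `eps` guard are illustrative. The guard is needed when a method drives the output background to exactly zero.

```python
import numpy as np


def _stats(img, target_mask, bg_mask):
    """Mean target intensity, mean background intensity, background std."""
    return img[target_mask].mean(), img[bg_mask].mean(), img[bg_mask].std()


def detection_gains(inp, out, target_mask, bg_mask, eps=1e-12):
    """SCRG, BSF and CG between an input image and a detection output (assumed definitions)."""
    mt_in, mb_in, sb_in = _stats(inp, target_mask, bg_mask)
    mt_out, mb_out, sb_out = _stats(out, target_mask, bg_mask)
    con_in, con_out = abs(mt_in - mb_in), abs(mt_out - mb_out)
    scr_in = con_in / (sb_in + eps)
    scr_out = con_out / (sb_out + eps)
    return {
        "SCRG": scr_out / (scr_in + eps),
        "BSF": sb_in / (sb_out + eps),
        "CG": con_out / (con_in + eps),
    }


# Toy example: a 3 x 3 bright target inside a 15 x 15 local background window.
rng = np.random.default_rng(0)
img = rng.normal(size=(21, 21))
img[9:12, 9:12] += 8.0
target = np.zeros((21, 21), dtype=bool)
target[9:12, 9:12] = True
bg = np.zeros((21, 21), dtype=bool)
bg[3:18, 3:18] = True
bg &= ~target
print(detection_gains(img, 2.0 * img, target, bg))
```

Scaling the output by a constant illustrates why the three indicators are reported together. CG grows with the scale factor and BSF shrinks with it, but SCRG is unchanged because contrast and background spread scale together.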

| Methods | Seq 1 | Seq 2 | Seq 3 | Seq 4 | Seq 5 | Seq 6 | Seq 7 | Seq 8 | Seq 9 | Seq 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| TopHat | 0.069 | 0.063 | 0.034 | 0.059 | 0.052 | 0.085 | 0.065 | 0.071 | 0.042 | 0.056 |
| MOG | 269.9 | 274.5 | 7.73 | 121.7 | 77.5 | 156.2 | 235.7 | 172.4 | 1.64 | 126.32 |
| WLDM | 1.21 | 1.43 | 0.36 | 0.87 | 0.66 | 1.33 | 0.91 | 1.42 | 0.37 | 0.65 |
| MPCM | 0.58 | 0.56 | 0.42 | 0.36 | 0.58 | 0.69 | 0.62 | 1.07 | 0.47 | 0.15 |
| FKRW | 0.63 | 0.61 | 0.35 | 0.69 | 0.42 | 0.61 | 0.63 | 1.37 | 0.39 | 0.26 |
| IPI | 14.72 | 14.36 | 0.25 | 2.83 | 1.58 | 16.23 | 6.25 | 18.6 | 0.29 | 1.62 |
| TVPCP | 265.5 | 248.8 | 10.42 | 81.44 | 38.85 | 183.4 | 98.81 | 187.2 | 10.9 | 42.9 |
| SMSL | 0.39 | 0.35 | 0.14 | 0.21 | 0.19 | 0.36 | 0.59 | 0.38 | 0.10 | 0.49 |
| GRLA | 3.86 | 3.69 | 0.55 | 1.95 | 1.94 | 12.87 | 5.55 | 3.42 | 0.58 | 2.23 |
| RIPT | 1.81 | 1.91 | 0.18 | 0.85 | 0.37 | 1.54 | 1.22 | 2.09 | 0.20 | 0.60 |
| Ours | 2.34 | 2.09 | 0.22 | 1.41 | 0.73 | 1.99 | 1.39 | 2.31 | 0.37 | 0.86 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhou, F.; Wu, Y.; Dai, Y.; Ni, K. Robust Infrared Small Target Detection via Jointly Sparse Constraint of l1/2-Metric and Dual-Graph Regularization. Remote Sens. 2020, 12, 1963. https://doi.org/10.3390/rs12121963
