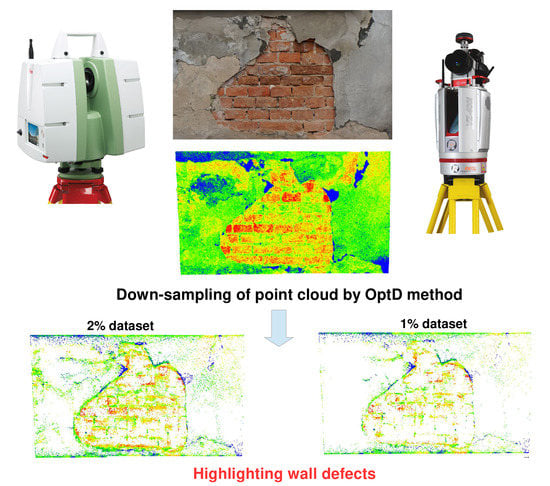

On the Use of the OptD Method for Building Diagnostics

Abstract

1. Introduction

2. Materials and Methods

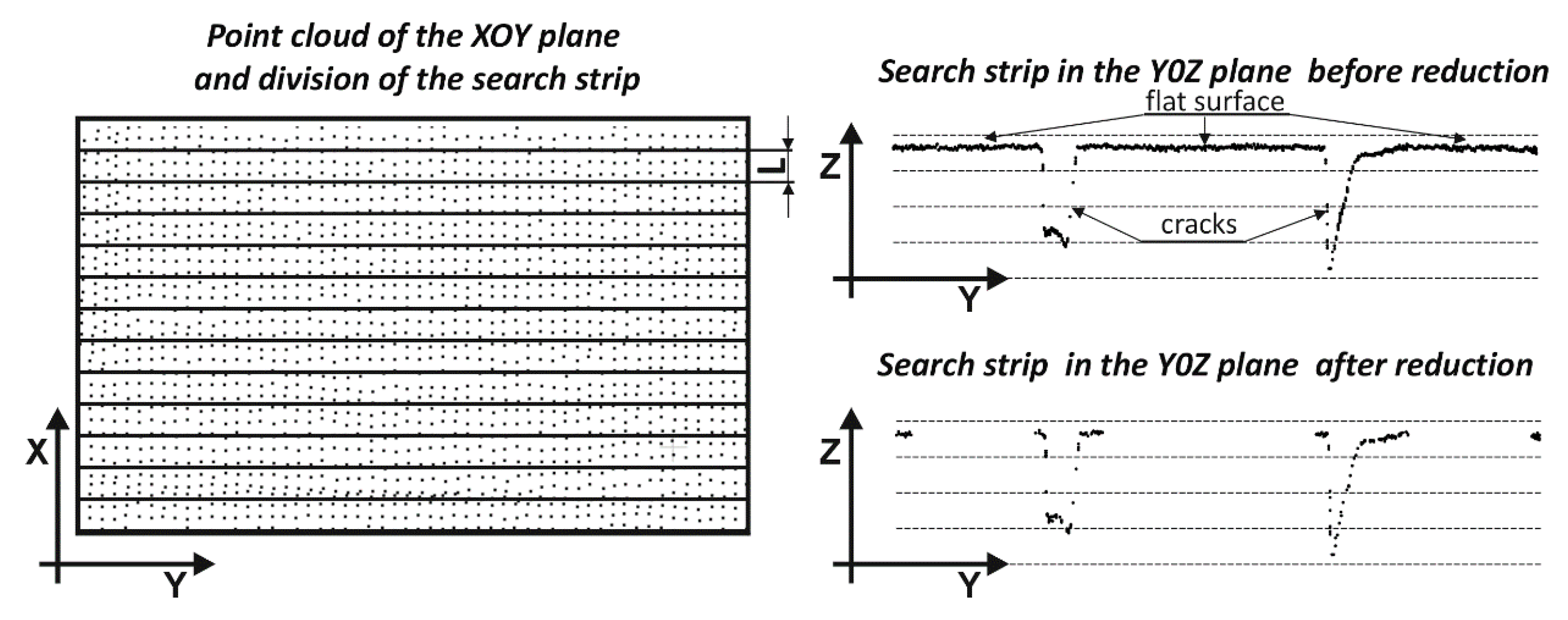

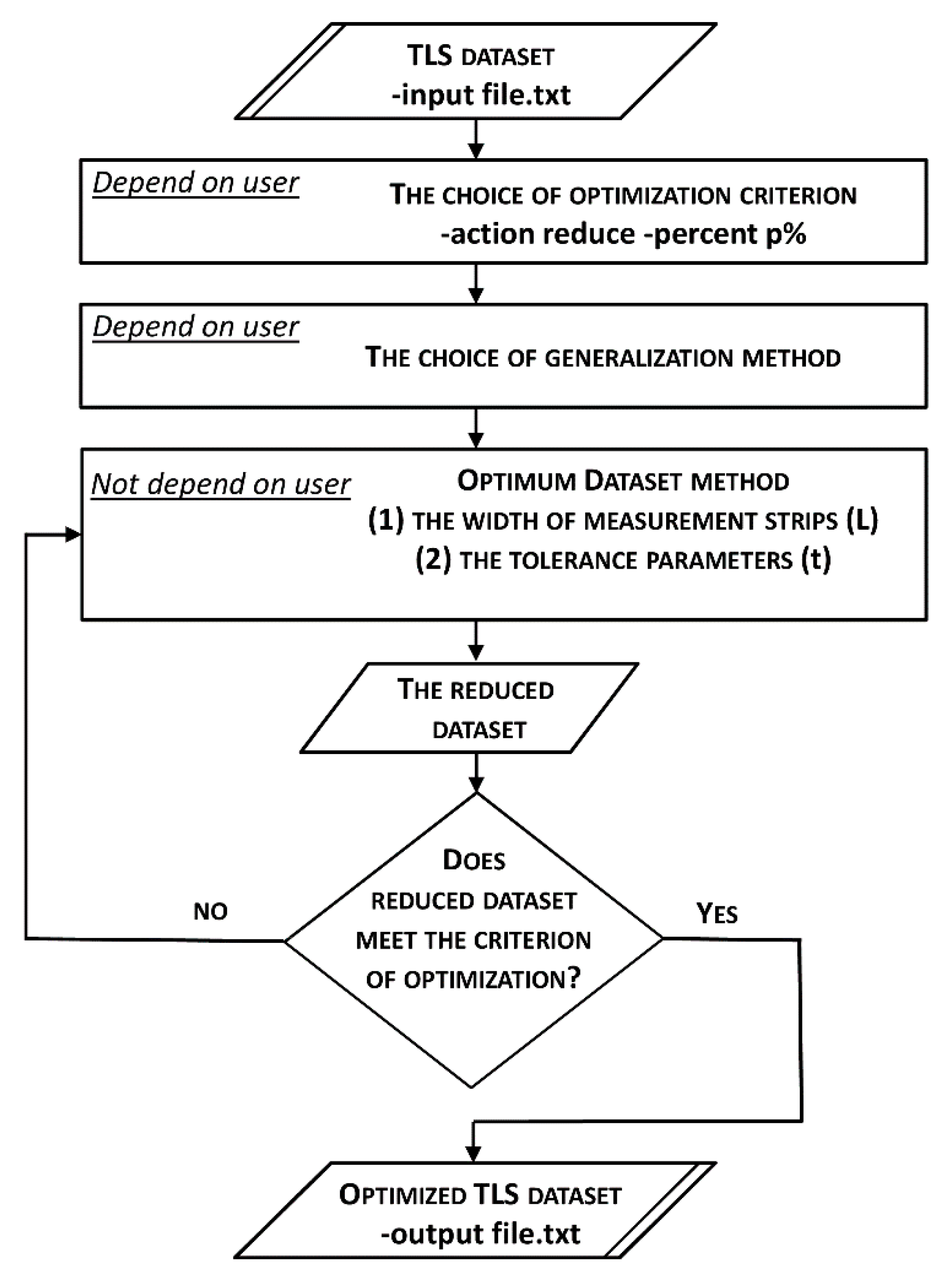

Theoretical Background of OptD Method

- Geometry visibility. OptD improves the visibility and readability of certain shape details. After reduction, object shapes that were hard to see in the original data because of the sheer number of points become easier to distinguish [34];

- Processing time. Reducing the dataset makes time-consuming analyses of the acquired data computationally more efficient [30].

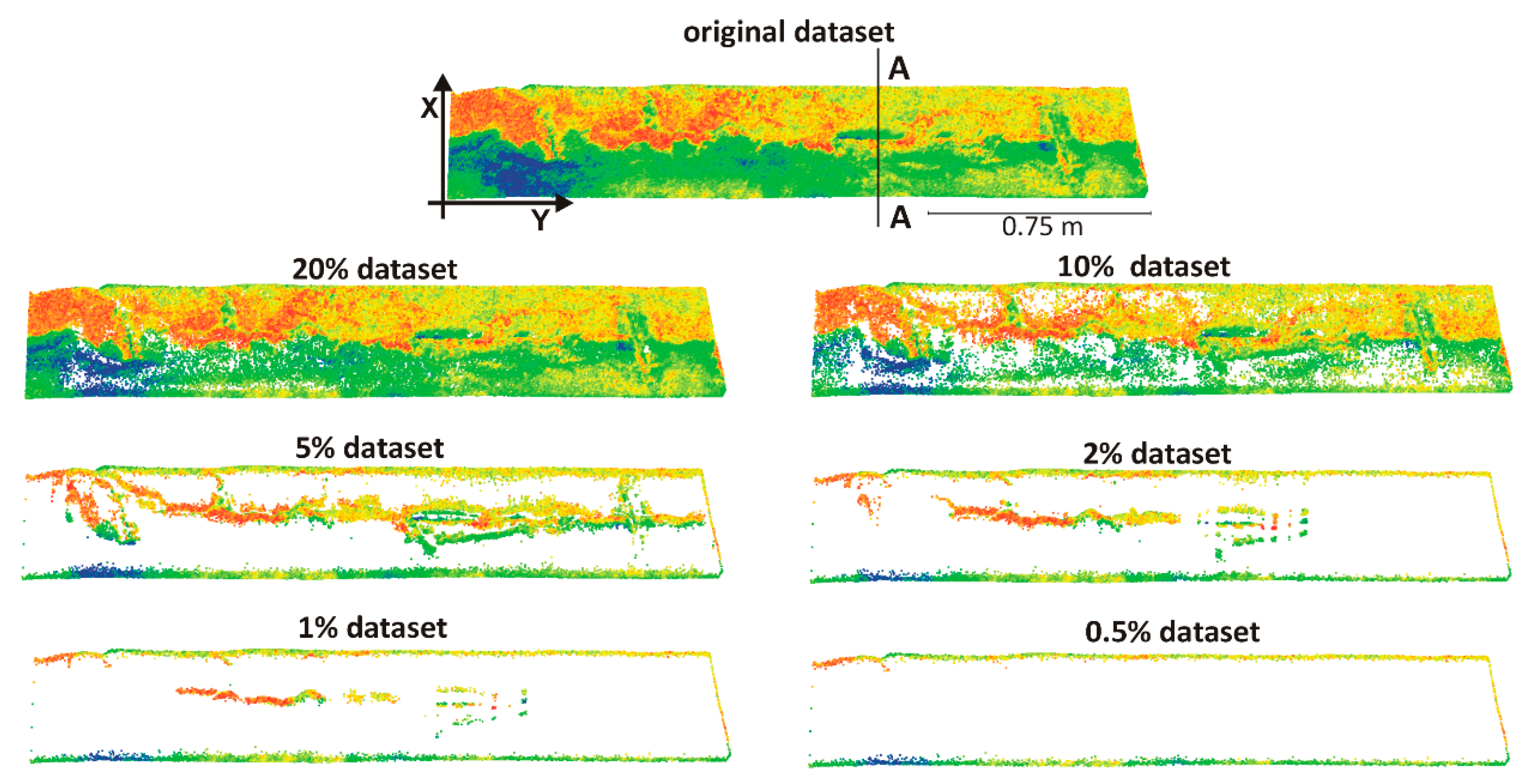

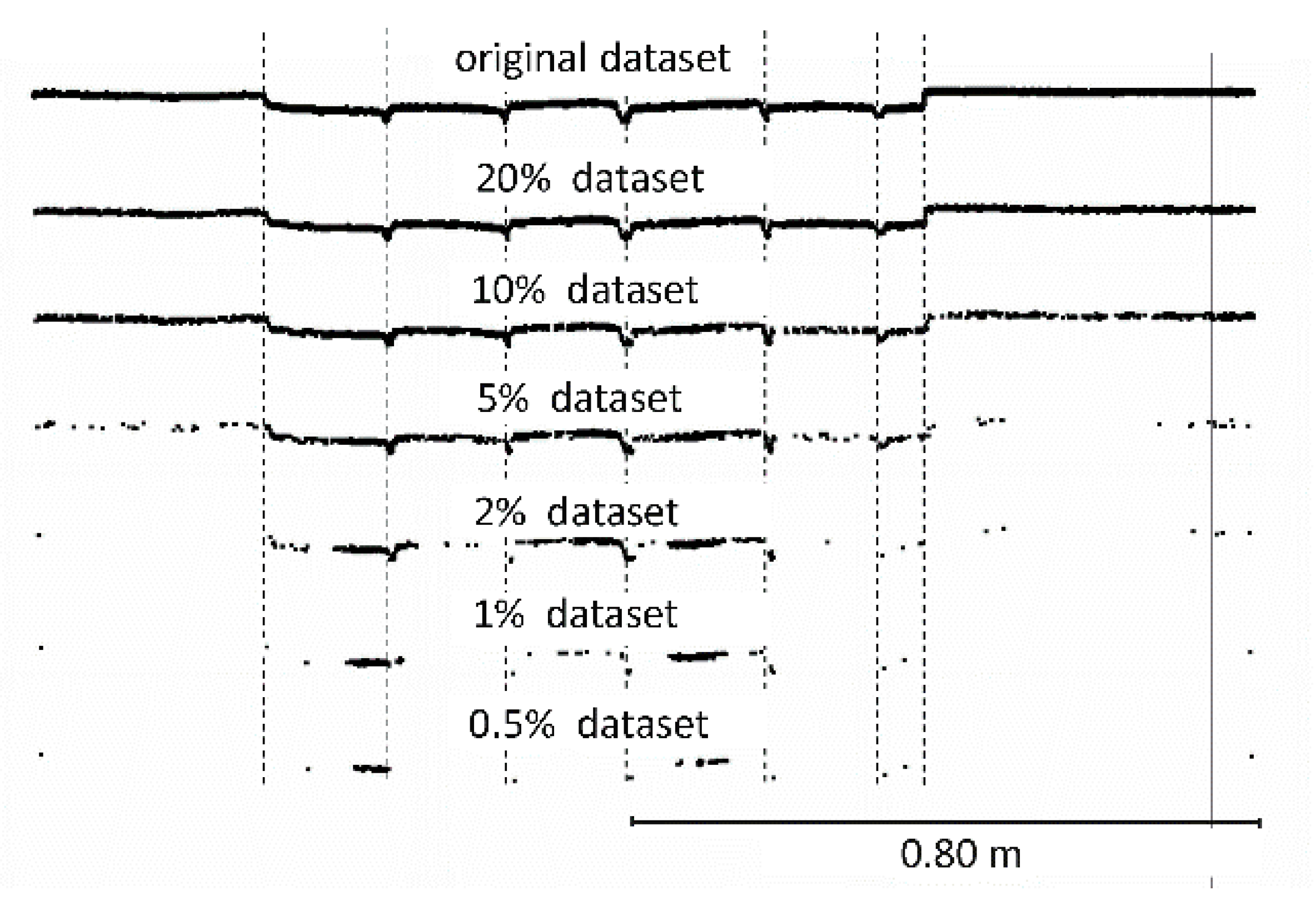

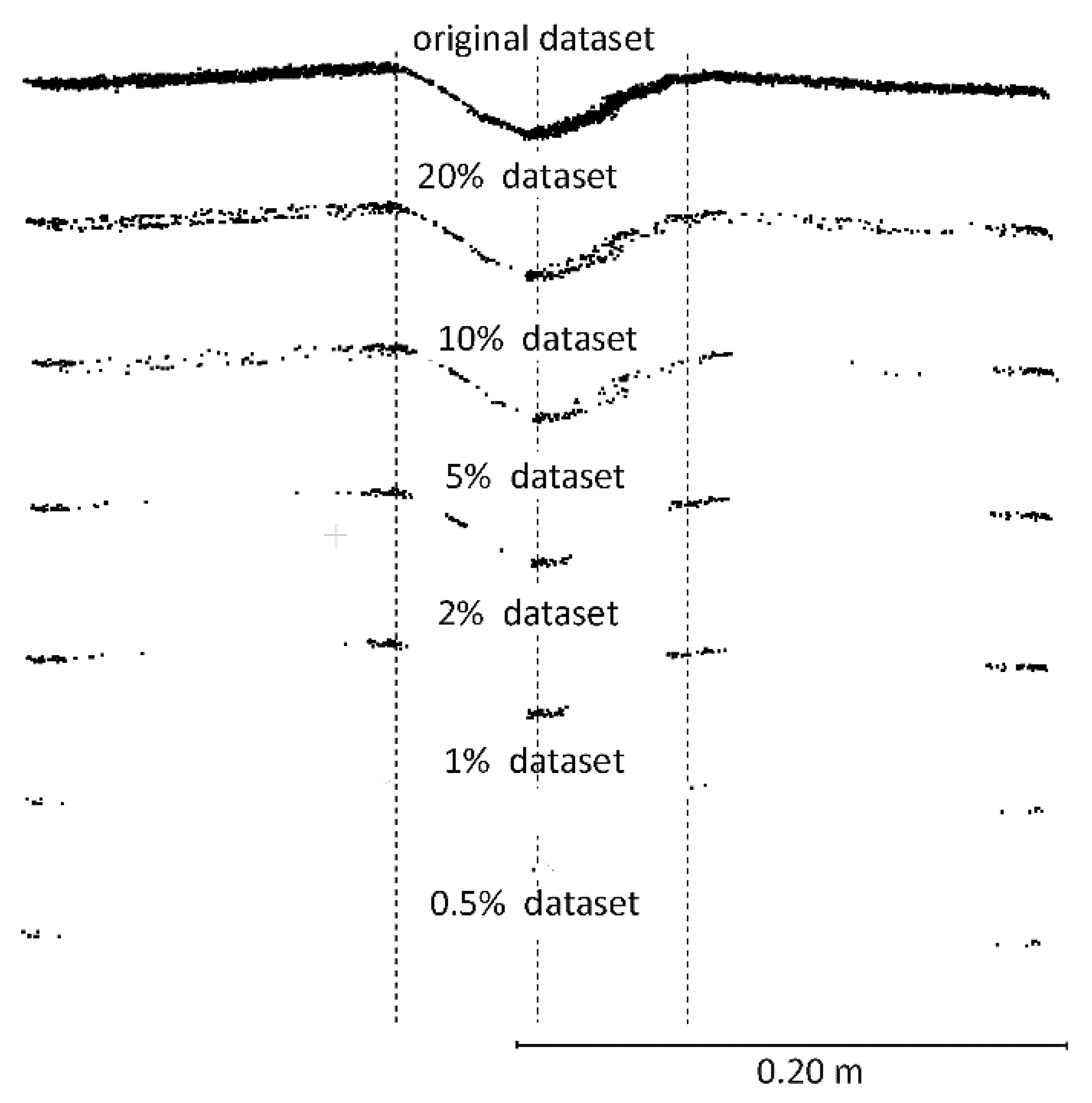

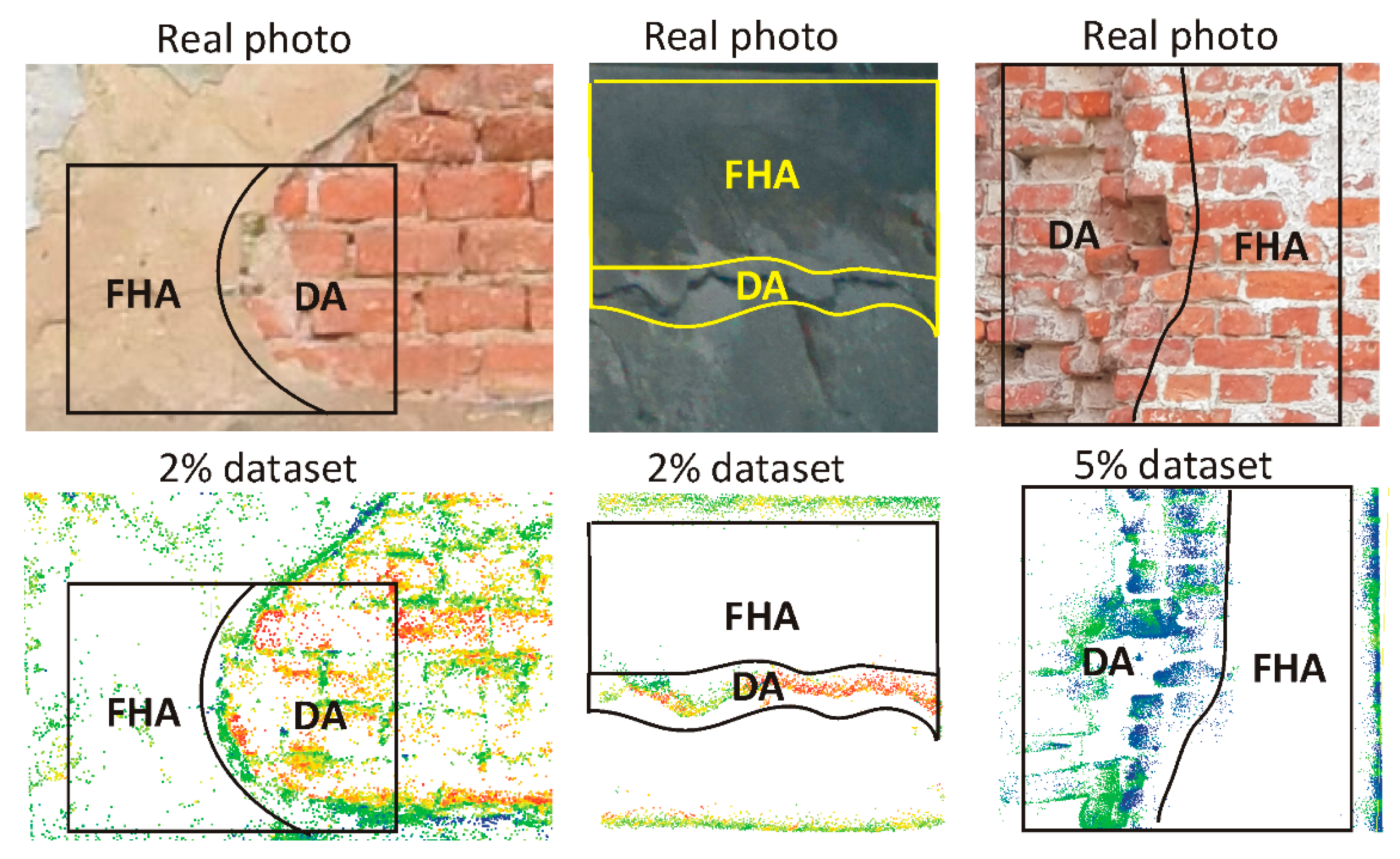

3. Results

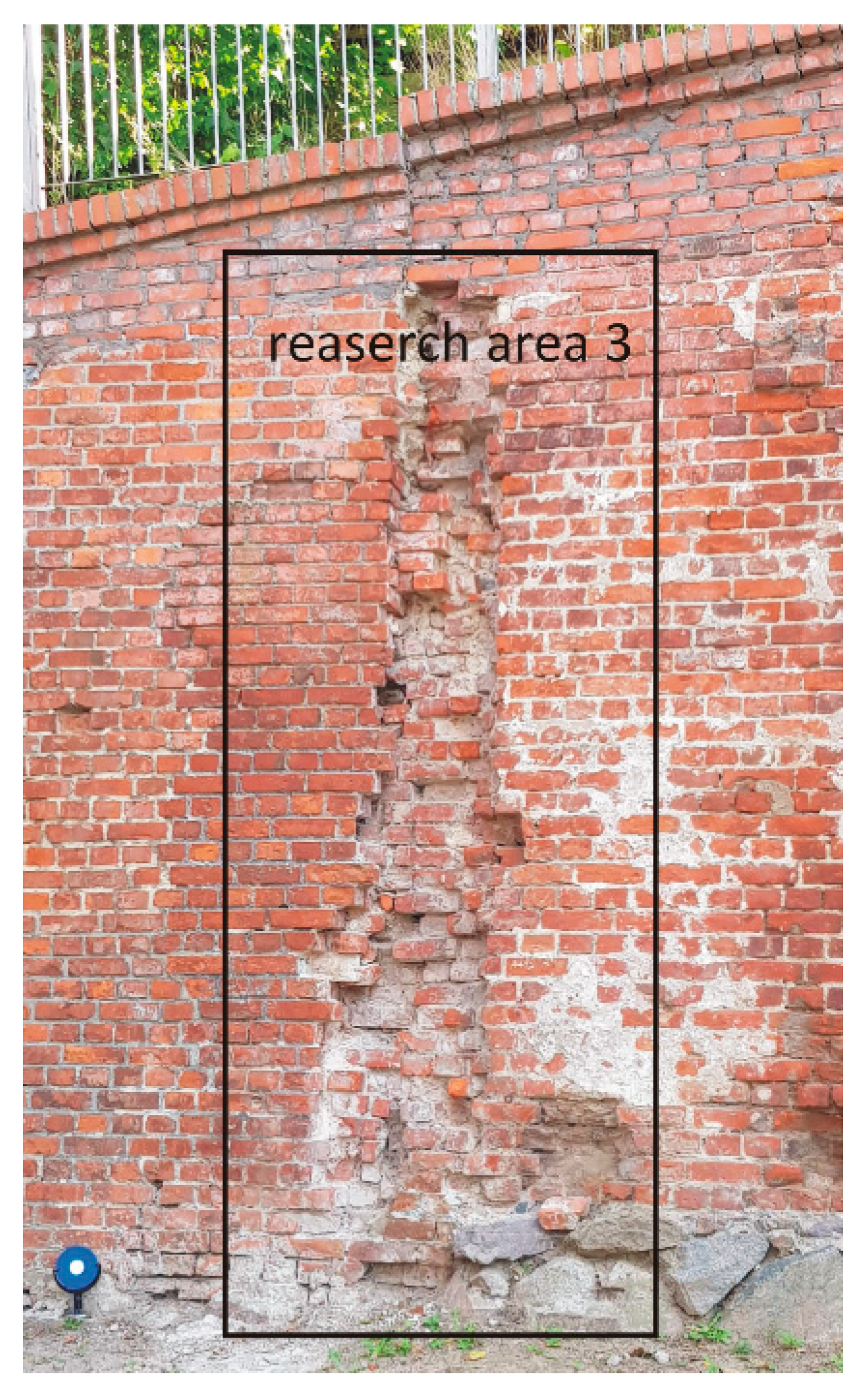

3.1. Objects of Research and Used Equipment

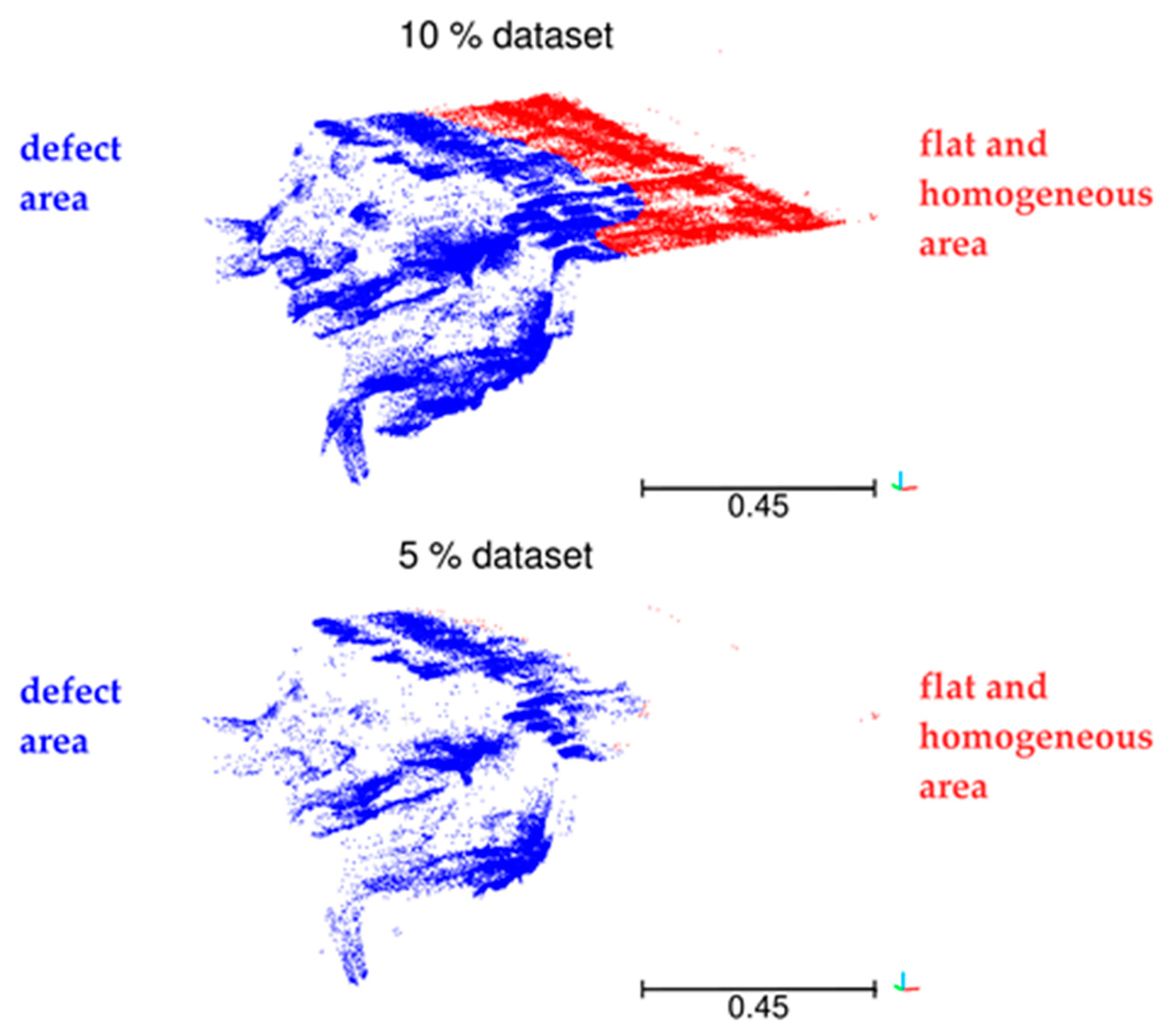

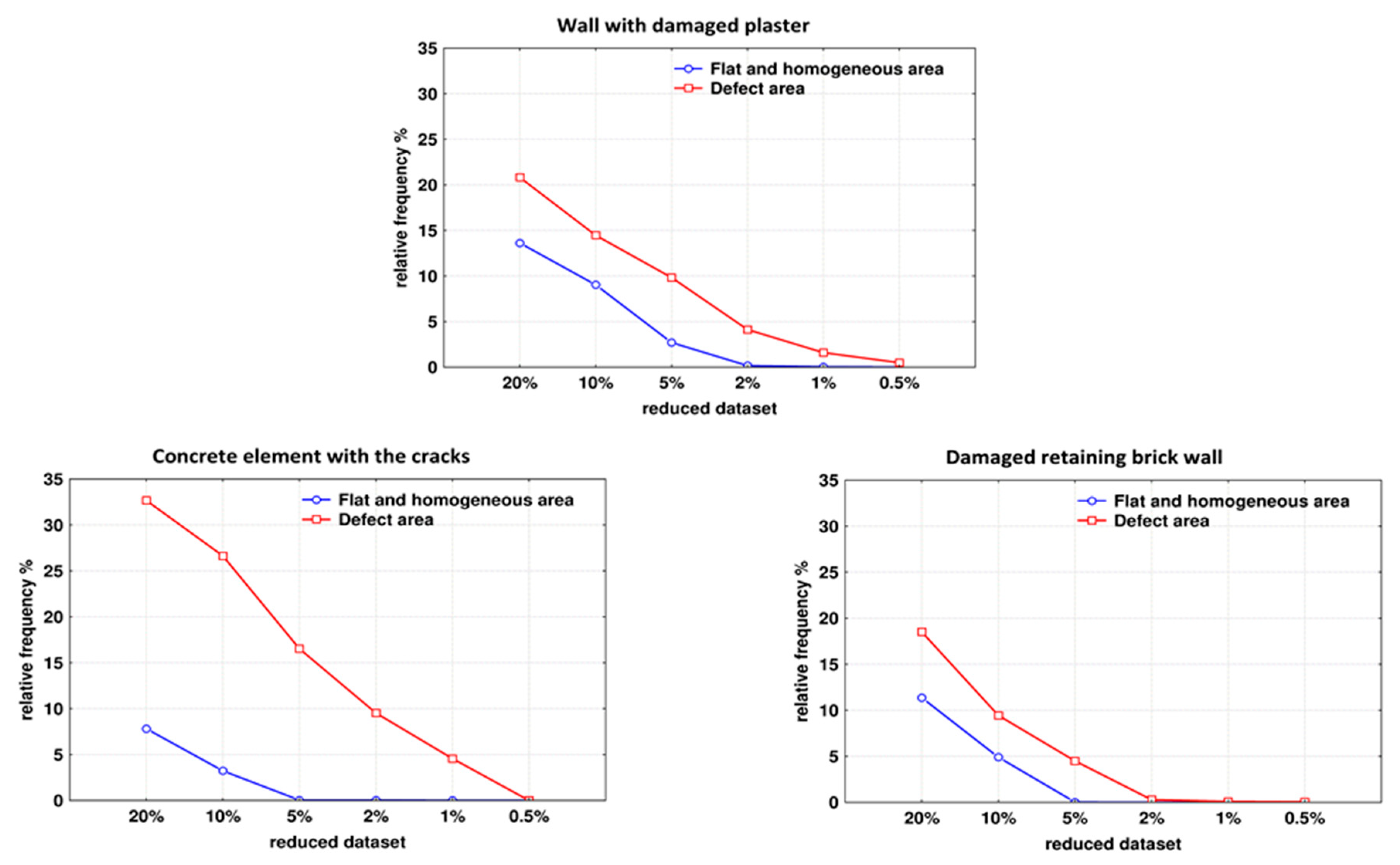

3.2. Data Processing Using the OptD Method

4. Discussion

5. Conclusions

- The reduced dataset obtained with OptD has a significantly lower point density on regular areas (wall without defects) than on defects (cavities and cracks).

- The results show that OptD can be used effectively to optimize point clouds for diagnostic measurements of buildings and other structures.

- The results of this work indicate the possibility of using OptD as a tool for easing the detection of defects in buildings and structures. Our future work will be dedicated to the investigation of this aspect.

- The main disadvantage of the OptD method is that it may retain a large number of points at the border of the region of interest.

- The authors are working on implementing the OptD method in point cloud data processing software.

Author Contributions

Funding

Conflicts of Interest

References

- Lague, D.; Brodu, N.; Leroux, J. Accurate 3D comparison of complex topography with terrestrial laser scanner: Application to the Rangitikei canyon (N-Z). ISPRS J. Photogramm. Remote Sens. 2013, 82, 10–26.

- Reshetyuk, Y.; Mårtensson, S.G. Terrestrial laser scanning for detection of landfill gas: A pilot study. J. Appl. Geod. 2014, 8, 87–96.

- Ossowski, R.; Przyborski, M.; Tysiac, P. Stability Assessment of Coastal Cliffs Incorporating Laser Scanning Technology and a Numerical Analysis. Remote Sens. 2019, 11, 1951.

- Kasperski, J.; Delacourt, C.; Allemand, P.; Potherat, P.; Jaud, M.; Varrel, E. Application of a Terrestrial Laser Scanner (TLS) to the study of the Séchilienne landslide (Isère, France). Remote Sens. 2010, 2, 2785–2802.

- Suchocki, C. Application of terrestrial laser scanner in cliff shores monitoring. Rocz. Ochr. Sr. 2009, 11, 715–725.

- Nowak, R.; Orłowicz, R.; Rutkowski, R. Use of TLS (LiDAR) for building diagnostics with the example of a historic building in Karlino. Buildings 2020, 10, 24.

- Kermarrec, G.; Kargoll, B.; Alkhatib, H. Deformation analysis using B-spline surface with correlated terrestrial laser scanner observations-a bridge under load. Remote Sens. 2020, 12, 829.

- Suchocki, C.; Katzer, J. TLS technology in brick walls inspection. In Proceedings of the 2018 Baltic Geodetic Congress (BGC Geomatics), Olsztyn, Poland, 21–23 June 2018; pp. 359–363.

- Suchocki, C.; Damięcka, M.; Jagoda, M. Determination of the building wall deviations from the vertical plane. In Proceedings of the 7th International Conference on Environmental Engineering, ICEE 2008, Conference Proceedings, Vilnius, Lithuania, 22–23 May 2008; pp. 1488–1492.

- Pu, S.; Rutzinger, M.; Vosselman, G.; Oude Elberink, S. Recognizing basic structures from mobile laser scanning data for road inventory studies. ISPRS J. Photogramm. Remote Sens. 2011, 66, S28–S39.

- Guan, H.; Li, J.; Yu, Y.; Wang, C.; Chapman, M.; Yang, B. Using mobile laser scanning data for automated extraction of road markings. ISPRS J. Photogramm. Remote Sens. 2014, 87, 93–107.

- Chmelina, K.; Jansa, J.; Hesina, G.; Traxler, C. A 3-d laser scanning system and scan data processing method for the monitoring of tunnel deformations. J. Appl. Geod. 2012, 6, 177–185.

- Tan, K.; Cheng, X.; Ju, Q.; Wu, S. Correction of Mobile TLS Intensity Data for Water Leakage Spots Detection in Metro Tunnels. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1711–1715.

- Yastikli, N. Documentation of cultural heritage using digital photogrammetry and laser scanning. J. Cult. Herit. 2007, 8, 423–427.

- Grilli, E.; Remondino, F. Classification of 3D digital heritage. Remote Sens. 2019, 11, 847.

- Armesto-González, J.; Riveiro-Rodríguez, B.; González-Aguilera, D.; Rivas-Brea, M.T. Terrestrial laser scanning intensity data applied to damage detection for historical buildings. J. Archaeol. Sci. 2010, 37, 3037–3047.

- Suchocki, C.; Jagoda, M.; Obuchovski, R.; Šlikas, D.; Sužiedelytė-Visockienė, J. The properties of terrestrial laser system intensity in measurements of technical conditions of architectural structures. Metrol. Meas. Syst. 2018, 25, 779–792.

- Suchocki, C.; Katzer, J.; Rapiński, J. Terrestrial Laser Scanner as a Tool for Assessment of Saturation and Moisture Movement in Building Materials. Period. Polytech. Civ. Eng. 2018, 62, 694–699.

- Suchocki, C.; Katzer, J. Terrestrial laser scanning harnessed for moisture detection in building materials: Problems and limitations. Autom. Constr. 2018, 94, 127–134.

- Li, Q.; Cheng, X. Damage detection for historical architectures based on TLS. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Beijing, China, 3–11 July 2018; Volume XLII, pp. 7–10.

- Peppe, P.J.; De Donno, G.; Marsella, M.; Orlando, L.; Renzi, B.; Salviani, S.; Santarelli, M.L.; Scifoni, S.; Sonnessa, A.; Verri, F.; et al. High-resolution geomatic and geophysical techniques integrated with chemical analyses for the characterization of a Roman wall. J. Cult. Herit. 2016, 17, 141–150.

- Nowak, R.; Orłowicz, R. Testing of Chosen Masonry Arched Lintels. Int. J. Archit. Herit. 2020.

- Peng, J.; Kim, C.S.; Kuo, C.C.J. Technologies for 3D mesh compression: A survey. J. Vis. Commun. Image Represent. 2005, 16, 688–733.

- Maglo, A.; Lavoue, G.; Dupont, F.; Hudelot, C. 3D Mesh Compression: Survey, Comparisons, and Emerging Trends. ACM Comput. Surv. 2015, 47, 1–44.

- Du, X.; Zhuo, Y. A point cloud data reduction method based on curvature. In Proceedings of the 2009 IEEE 10th International Conference on Computer-Aided Industrial Design & Conceptual Design (CAID CD’2009), Wenzhou, China, 26–29 November 2009; pp. 914–918.

- Mancini, F.; Castagnetti, C.; Rossi, P.; Dubbini, M.; Fazio, N.L.; Perrotti, M.; Lollino, P. An integrated procedure to assess the stability of coastal rocky cliffs: From UAV close-range photogrammetry to geomechanical finite element modeling. Remote Sens. 2017, 9, 1235.

- Lin, Y.-J.; Benziger, R.R.; Habib, A. Planar-Based Adaptive Down-Sampling of Point Clouds. Photogramm. Eng. Remote Sens. 2016, 82, 955–966.

- Katzer, J.; Kobaka, J. Combined non-destructive testing approach to waste fine aggregate cement composites. Sci. Eng. Compos. Mater. 2009, 16, 277–284.

- Saint-Pierre, F.; Philibert, A.; Giroux, B.; Rivard, P. Concrete Quality Designation based on Ultrasonic Pulse Velocity. Constr. Build. Mater. 2016, 125, 1022–1027.

- Błaszczak-Bąk, W.; Sobieraj-Żłobińska, A.; Kowalik, M. The OptD-multi method in LiDAR processing. Meas. Sci. Technol. 2017, 28, 7500–7509.

- Błaszczak-Bąk, W. New optimum dataset method in LiDAR processing. Acta Geodyn. Geomater. 2016, 13, 381–388.

- Bauer-Marschallinger, B.; Sabel, D.; Wagner, W. Optimisation of global grids for high-resolution remote sensing data. Comput. Geosci. 2014, 72, 84–93.

- Douglas, D.H.; Peucker, T.K. Algorithms for the reduction of the number of points required to represent a digitized line or its caricature. Can. Cartogr. 1973, 10, 112–122.

- Błaszczak-Bąk, W.; Sobieraj-Żłobińska, A.; Wieczorek, B. The Optimum Dataset method: Examples of the application. In Proceedings of the E3S Web of Conferences, Wrocław, Poland, 5 January 2018; Volume 26, pp. 1–6.

- Błaszczak-Bąk, W.; Poniewiera, M.; Sobieraj-Żłobińska, A.; Kowalik, M. Reduction of measurement data before Digital Terrain Model generation vs. DTM generalisation. Surv. Rev. 2019, 51, 422–430.

- Błaszczak-Bąk, W.; Koppanyi, Z.; Toth, C. Reduction Method for Mobile Laser Scanning Data. ISPRS Int. J. Geo-Inf. 2018, 7, 285.

| ID | ScanWorld | ScanWorld | Weight | Error [m] | Error Vector [m] | Error Horz [m] | Error Vert [m] |
|---|---|---|---|---|---|---|---|
| 1 | SW-002 | SW-003 | 1 | 0.001 | (−0.001, 0.000, 0.000) | 0.001 | 0.000 |
| 2 | SW-002 | SW-003 | 1 | 0.001 | (0.000, 0.001, 0.000) | 0.001 | 0.000 |
| 3 | SW-002 | SW-003 | 1 | 0.000 | (0.000, 0.000, 0.000) | 0.000 | 0.000 |
| 4 | SW-002 | SW-003 | 1 | 0.000 | (0.000, 0.000, 0.000) | 0.000 | 0.000 |

| Sample | Dimensions [m] | Number of points |
|---|---|---|
| Wall with damaged plaster | 1.50 × 0.90 | 2,377,449 |
| Concrete element with cracks | 2.30 × 0.38 | 762,480 |
| Damaged brick wall | 3.92 × 1.85 | 7,272,966 |
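As a rough cross-check of the table above, the mean point density of each sample can be estimated from its dimensions and point count. The sketch below assumes each sample is a plain rectangle with the listed side lengths; the function name `mean_density_per_patch` and the 0.01 m² patch size (chosen to match the density unit used later in the results) are illustrative choices, not taken from the article.

```python
# Side lengths [m] and point counts copied from the table above.
samples = {
    "wall with damaged plaster": (1.50, 0.90, 2_377_449),
    "concrete element with cracks": (2.30, 0.38, 762_480),
    "damaged brick wall": (3.92, 1.85, 7_272_966),
}


def mean_density_per_patch(width_m, height_m, n_points, patch_m2=0.01):
    """Average number of points per patch of `patch_m2` square metres.

    Assumes the sample is a rectangle of width_m x height_m (an assumption).
    """
    area_m2 = width_m * height_m
    return n_points / area_m2 * patch_m2


densities = {name: mean_density_per_patch(*dims) for name, dims in samples.items()}

for name, density in densities.items():
    print(f"{name}: {density:.0f} points per 0.01 m2")
```

For the plaster wall this gives roughly 17,600 points per 0.01 m², which is an average over the whole sample and not a value for any particular sub-area.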

| p% | 20% | 10% | 5% | 2% | 1% | 0.5% |
|---|---|---|---|---|---|---|
| Wall with damaged plaster | | | | | | |
| L [m] | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |
| t [m] | 0.002 | 0.003 | 0.004 | 0.009 | 0.016 | 0.031 |
| Iterations | 9 | 14 | 14 | 13 | 11 | 10 |
| Concrete element with cracks | | | | | | |
| L [m] | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |
| t [m] | 0.002 | 0.003 | 0.005 | 0.013 | 0.022 | 0.036 |
| Iterations | 15 | 13 | 13 | 12 | 12 | 10 |
| Damaged retaining brick wall | | | | | | |
| L [m] | 0.005 | 0.005 | 0.005 | 0.005 | 0.005 | 0.005 |
| t [m] | 0.073 | 0.215 | 0.678 | 0.752 | 0.821 | 0.954 |
| Iterations | 10 | 7 | 6 | 10 | 10 | 11 |
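The table above lists, for each target percentage p, a strip width L, a tolerance t and an iteration count. The sketch below illustrates how such parameters could interact, under loud assumptions: the cloud is cut into strips of width L, each strip is simplified as a 2D profile with the Douglas–Peucker algorithm (reference list: Douglas and Peucker, 1973) using tolerance t, and t is adjusted by bisection until the kept share is close to p. The axis convention (x along the wall, y up, z out of the wall plane), the bisection rule and all function names are assumptions made for illustration; this is not the authors' implementation of OptD.

```python
import math


def douglas_peucker(profile, t):
    """Return indices of the 2D points kept by Douglas-Peucker with tolerance t."""
    if len(profile) < 3:
        return list(range(len(profile)))
    keep = [False] * len(profile)
    keep[0] = keep[-1] = True
    stack = [(0, len(profile) - 1)]
    while stack:
        a, b = stack.pop()
        (x1, y1), (x2, y2) = profile[a], profile[b]
        seg = math.hypot(x2 - x1, y2 - y1)
        worst, worst_d = -1, t
        for i in range(a + 1, b):
            x0, y0 = profile[i]
            if seg == 0:
                d = math.hypot(x0 - x1, y0 - y1)
            else:  # perpendicular distance from point i to the line a-b
                d = abs((x2 - x1) * (y1 - y0) - (x1 - x0) * (y2 - y1)) / seg
            if d > worst_d:
                worst, worst_d = i, d
        if worst >= 0:  # farthest point exceeds t: keep it and split there
            keep[worst] = True
            stack.append((a, worst))
            stack.append((worst, b))
    return [i for i, k in enumerate(keep) if k]


def reduce_cloud(points, L, t):
    """Simplify (x, y, z) points strip by strip; strips have width L along x (assumed)."""
    strips = {}
    for p in points:
        strips.setdefault(math.floor(p[0] / L), []).append(p)
    kept = []
    for strip in strips.values():
        strip.sort(key=lambda p: p[1])              # order along the height axis
        profile = [(p[1], p[2]) for p in strip]     # (height, depth) profile
        kept.extend(strip[i] for i in douglas_peucker(profile, t))
    return kept


def fit_tolerance(points, L, p_percent, t_lo=0.0, t_hi=1.0,
                  max_iter=30, tol_percent=0.5):
    """Bisect on t (an assumed search rule) until about p_percent of points remain."""
    kept, t = points, t_hi
    for iteration in range(1, max_iter + 1):
        t = (t_lo + t_hi) / 2
        kept = reduce_cloud(points, L, t)
        share = 100.0 * len(kept) / len(points)
        if abs(share - p_percent) <= tol_percent:
            return kept, t, iteration
        if share > p_percent:
            t_lo = t      # too many points kept: raise the tolerance
        else:
            t_hi = t      # too few points kept: lower the tolerance
    return kept, t, max_iter
```

On a flat profile every intermediate point lies on the line between the strip's end points, so only the end points survive, while a cavity or crack produces deviations larger than t and keeps its points. This is the mechanism one would expect behind a lower point density on regular areas than on defects.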

| Dataset | FHA: number of points | FHA: percentage | DA: number of points | DA: percentage | DA: density [points/0.01 m²] | DA/FHA percentage ratio |
|---|---|---|---|---|---|---|
| Wall with damaged plaster | | | | | | |
| original dataset | 181,062 | 100.000% | 204,813 | 100.000% | 17,226 | 1 |
| 20% dataset | 24,664 | 13.622% | 42,651 | 20.824% | 3587 | 2 |
| 10% dataset | 16,370 | 9.041% | 29,638 | 14.471% | 2493 | 2 |
| 5% dataset | 4881 | 2.696% | 20,130 | 9.828% | 1693 | 4 |
| 2% dataset | 305 | 0.168% | 8441 | 4.121% | 710 | 24 |
| 1% dataset | 49 | 0.027% | 3257 | 1.590% | 274 | 59 |
| 0.5% dataset | 5 | 0.003% | 993 | 0.485% | 84 | 176 |
| Concrete element with cracks | | | | | | |
| original dataset | 45,351 | 100.000% | 20,912 | 100.000% | 12,560 | 1 |
| 20% dataset | 3544 | 7.815% | 6835 | 32.685% | 4105 | 4 |
| 10% dataset | 1464 | 3.228% | 5572 | 26.645% | 3347 | 8 |
| 5% dataset | 12 | 0.026% | 3456 | 16.526% | 2076 | 625 |
| 2% dataset | 11 | 0.024% | 1992 | 9.526% | 1196 | 393 |
| 1% dataset | 1 | 0.002% | 952 | 4.552% | 572 | 2065 |
| 0.5% dataset | 0 | 0.000% | 0 | 0.000% | 0 | – |
| Damaged retaining brick wall | | | | | | |
| original dataset | 466,071 | 100.000% | 663,249 | 100.000% | 9116 | 1 |
| 20% dataset | 52,965 | 11.364% | 122,862 | 18.524% | 1689 | 2 |
| 10% dataset | 22,841 | 4.901% | 62,539 | 9.429% | 860 | 2 |
| 5% dataset | 52 | 0.011% | 29,789 | 4.491% | 409 | 403 |
| 2% dataset | 15 | 0.003% | 1860 | 0.280% | 26 | 87 |
| 1% dataset | 10 | 0.002% | 497 | 0.075% | 7 | 35 |
| 0.5% dataset | 4 | 0.001% | 317 | 0.048% | 4 | 56 |
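The percentages in the table above can be recomputed from the point counts, and the last column appears to be consistent with the ratio of the retained percentage in the defect area (DA) to that in the flat and homogeneous area (FHA). The short sketch below checks this for three rows of the "Wall with damaged plaster" block; the ratio interpretation is inferred from the numbers, and the helper name `retention` is illustrative.

```python
def retention(original_count, reduced_count):
    """Percentage of the original points that survive the reduction."""
    return 100.0 * reduced_count / original_count


# Counts copied from the "Wall with damaged plaster" rows: (FHA points, DA points).
original = (181_062, 204_813)
reduced = {
    "20%": (24_664, 42_651),
    "5%": (4_881, 20_130),
    "0.5%": (5, 993),
}

ratios = {}
for name, (fha_points, da_points) in reduced.items():
    fha_pct = retention(original[0], fha_points)
    da_pct = retention(original[1], da_points)
    ratios[name] = da_pct / fha_pct
    print(f"{name} dataset: FHA {fha_pct:.3f}%, DA {da_pct:.3f}%, ratio {ratios[name]:.0f}")
```

The ratio grows as the target percentage shrinks, which is the numerical form of the conclusion that the reduced dataset is much sparser on defect-free wall than on defects.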

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Suchocki, C.; Błaszczak-Bąk, W.; Damięcka-Suchocka, M.; Jagoda, M.; Masiero, A. On the Use of the OptD Method for Building Diagnostics. Remote Sens. 2020, 12, 1806. https://doi.org/10.3390/rs12111806
