1. Introduction

The boundaries of agricultural fields are important features that define agricultural units and allow one to spatially aggregate information about fields and their characteristics. This information includes location, shape, spatial extent, and field characteristics such as crop type, soil type, fertility, yield, and taxation. Agricultural information is an important indicator for monitoring agricultural policies and developments; thus, it needs to be up-to-date, accurate, and reliable [1]. Mapping the spatiotemporal distribution and the characteristics of agricultural fields is paramount for their effective and sound management.

According to [2], agricultural areas refer to land suitable for agricultural practices, which includes arable land, permanent cropland, and permanent grassland. Agricultural field boundaries (AFB) can be conceptualized as the natural disruptions that partition locations where a change of crop type occurs, or where comparable crops naturally detach [3]. Traditionally, AFB were established through surveying techniques, which are laborious, costly, and time-consuming. Currently, the availability of very high resolution (VHR) satellite imagery and advances in deep learning-based image analysis have shown potential for the automated delineation of agricultural field boundaries [4]. Notwithstanding the benefits of VHR images for the delineation of agricultural field boundaries, these data are often expensive and subject to closed data sharing policies. For this reason, in this study we focus on Sentinel-2 images, which are freely available under an open data policy. Among other applications, Sentinel-2 images are extensively used for crop classification [5,6,7]. The images convey relatively good spectral information (13 spectral bands) at medium spatial resolution (up to 10 m) [8]. However, their potential for the detection and delineation of agricultural field boundaries has not been fully explored; existing studies are limited to cropland classification [6] and instance segmentation [9].

Spectral and spatial-contextual features form the basis for the detection of agricultural field boundaries. Standard edge detection methods such as the Canny detector have been applied extensively to extract edges from a variety of images [10]. Multiresolution image segmentation techniques [11] and contour detection techniques such as global probability of boundary (gPb) [12] are more recent techniques that can be applied to extract edges. Multi-Resolution Segmentation (MRS) is a region-merging segmentation technique, which merges pixels into uniform regions according to a homogeneity criterion. It stops when all possible merges exceed a predefined threshold for the homogeneity criterion. gPb combines multiscale spatial cues based on color, brightness, and texture with global image information to predict boundary probabilities. Both approaches use spectral and spatial information in an unsupervised manner and are therefore designed to detect generic edges in an image. For this reason, these techniques cannot extract specific types of image edges (semantic contours), such as AFB.
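
To make the stopping rule of region merging concrete, the sketch below greedily merges adjacent regions of a single-band image as long as the cheapest merge keeps the increase in size-weighted standard deviation below a threshold. It is a simplified illustration loosely modelled on the spectral part of MRS, not eCognition's implementation: the shape criterion, band weights, and scale parameter are omitted, and the function name `region_merge` and the `threshold` values are hypothetical.

```python
import numpy as np


def region_merge(img, threshold):
    """Greedy region merging on a single-band image (simplified, MRS-like sketch).

    Every pixel starts as its own region; the adjacent pair with the smallest
    heterogeneity increase is merged until every possible merge exceeds `threshold`.
    """
    h, w = img.shape
    labels = np.arange(h * w).reshape(h, w)
    values = {int(labels[r, c]): [float(img[r, c])] for r in range(h) for c in range(w)}

    def adjacent_pairs():
        # 4-connected pairs of distinct region labels
        pairs = set()
        for r in range(h):
            for c in range(w):
                a = int(labels[r, c])
                for rr, cc in ((r + 1, c), (r, c + 1)):
                    if rr < h and cc < w and labels[rr, cc] != a:
                        pairs.add(tuple(sorted((a, int(labels[rr, cc])))))
        return pairs

    def merge_cost(a, b):
        # Increase in size-weighted standard deviation caused by merging a and b
        va, vb = values[a], values[b]
        merged = va + vb
        return len(merged) * np.std(merged) - (len(va) * np.std(va) + len(vb) * np.std(vb))

    while True:
        pairs = adjacent_pairs()
        if not pairs:
            break
        a, b = min(pairs, key=lambda p: merge_cost(*p))
        if merge_cost(a, b) > threshold:
            break  # all possible merges exceed the homogeneity threshold
        values[a].extend(values.pop(b))
        labels[labels == b] = a
    return labels


img = np.zeros((4, 4))
img[:, 2:] = 10.0  # two homogeneous "fields"
print(len(np.unique(region_merge(img, threshold=1.0))))  # 2
```

Because the criterion only measures homogeneity, the result is a set of generic uniform regions; nothing in it knows which region edges are field boundaries, which is the limitation noted above.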

Deep learning in image analysis is a collection of machine learning techniques based on algorithms that can learn features such as edges and curves from a given input image to address classification problems. Examples of deep learning methods used in remote sensing are convolutional neural networks (CNNs) and fully convolutional networks (FCNs). CNNs can learn spatial-contextual features from a 3D input volume and produce a one-dimensional (feature) vector, which is connected to a fully connected layer [13]. The fully connected layer predicts the class labels using the features extracted by the convolutional layers. FCNs are pixel-wise classification techniques that extract hierarchical features automatically from the input image. In FCNs, fully connected layers are usually replaced by transposed convolutional layers. CNNs and FCNs have recently been used for land cover and land use (LCLU) classification. For instance, in [14], CNNs are applied to identify slums’ degree of deprivation, considering different levels of deprivation including socio-economic aspects. FCN applications include the detection of cadastral boundaries [15] and the delineation of agricultural fields in smallholder farms [4]. Despite the wide application of these methods in LCLU classification, their capabilities for boundary delineation have not been explored for medium resolution data such as Sentinel-2; existing studies are limited to VHR images [4].
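
The upsampling role of transposed convolution in FCNs can be sketched in NumPy for a single channel. This is an illustrative sketch assuming one input and one output channel, no padding, and a hypothetical function name `conv_transpose2d`; deep learning libraries implement the same operation with many channels and learned kernels. With a stride of 2, each input pixel spreads its value into the output through the kernel, so the output grid is twice as fine (for example, a 10 m grid would map onto a 5 m grid).

```python
import numpy as np


def conv_transpose2d(x, kernel, stride=2):
    """Single-channel transposed convolution without padding.

    Each input pixel adds `x[i, j] * kernel` into the output at an offset of
    `stride` pixels, so the output size is (H - 1) * stride + kernel_size.
    """
    h, w = x.shape
    kh, kw = kernel.shape
    out = np.zeros(((h - 1) * stride + kh, (w - 1) * stride + kw))
    for i in range(h):
        for j in range(w):
            out[i * stride:i * stride + kh, j * stride:j * stride + kw] += x[i, j] * kernel
    return out


coarse = np.array([[1.0, 2.0],
                   [3.0, 4.0]])  # e.g. a 2 x 2 patch of coarse class scores
fine = conv_transpose2d(coarse, np.ones((2, 2)), stride=2)
print(fine.shape)  # (4, 4): each coarse pixel now covers a 2 x 2 block
```

With a 2 x 2 all-ones kernel and stride 2 the operation reduces to nearest-neighbour upsampling; a learned kernel, or one larger than the stride so that neighbouring contributions overlap, lets the network produce sharper per-pixel outputs.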

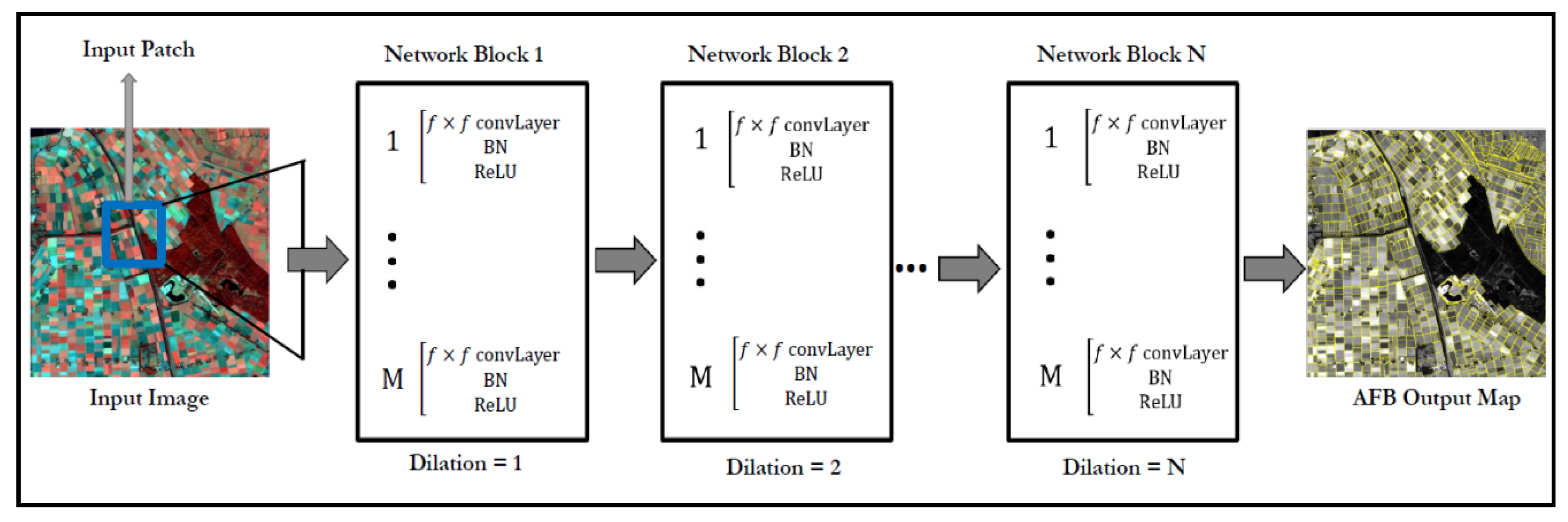

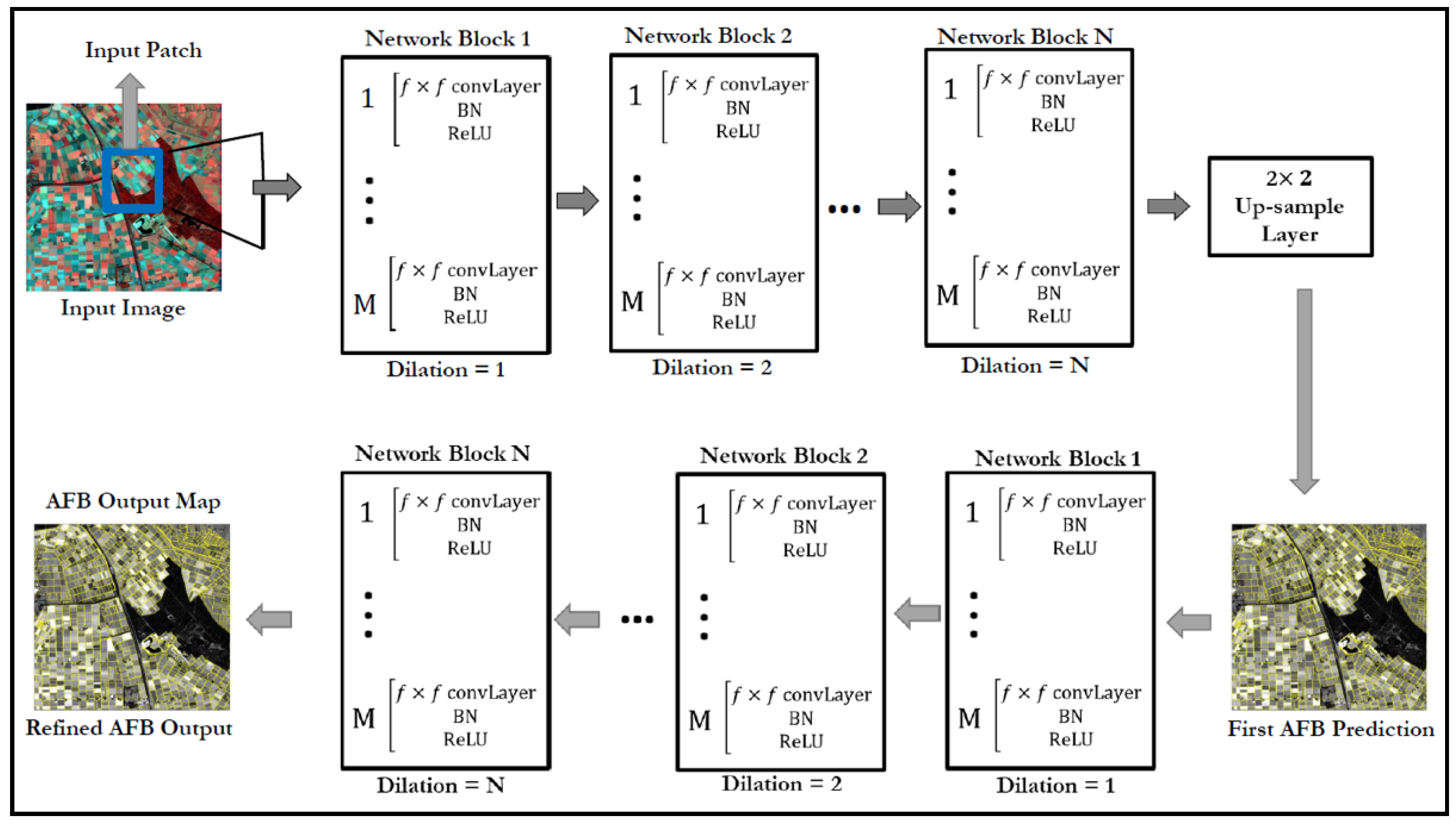

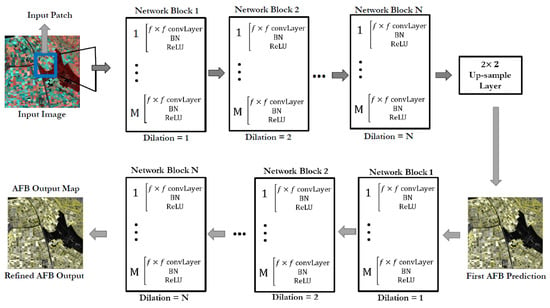

This study introduces a multiple dilation FCN (MD-FCN) for AFB delineation using free, medium resolution Sentinel-2 images. The MD-FCN is a dilated fully convolutional network that is trained end-to-end to extract spatial features from 10 m resolution input and produce the AFB at the same resolution. However, a spatial resolution of 10 m is relatively coarse for mapping the boundaries of agricultural fields, resulting in large uncertainty in the exact location of the boundaries. Super-resolution mapping (SRM) techniques can increase the spatial resolution of a thematic map using approaches such as the two-point histogram [16], Markov-random-field-based super-resolution mapping [17], and the Hopfield neural network [18]. Inspired by the idea of SRM, this paper introduces a novel super-resolution contour detector based on FCN (SRC-Net) to delineate agricultural field boundaries from Sentinel-2 images. SRC-Net learns features from 10 m resolution images and predicts the AFB at 5 m resolution. SRC-Net also learns contextual features from the label space of boundaries to produce refined boundaries. To assess the performance of SRC-Net, we compare its results with those obtained by applying MD-FCN to a 5 m resolution image acquired by RapidEye. In both methods (MD-FCN and SRC-Net), we address AFB delineation as a binary classification problem with two classes: boundary and non-boundary.
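
The idea behind dilated convolutions is that spacing the kernel taps enlarges the receptive field without downsampling, so the output stays on the 10 m input grid. The NumPy sketch below shows a "same"-padded, single-channel dilated convolution and the receptive field of a stack of stride-1 layers. The function names and the dilation rates 1, 2, 3 are hypothetical choices for illustration, not the MD-FCN configuration.

```python
import numpy as np


def dilated_conv2d(x, kernel, dilation=1):
    """'Same'-padded single-channel dilated convolution (cross-correlation, as in CNN libraries).

    Assumes an odd kernel size so that the output has the same shape as `x`.
    """
    kh, kw = kernel.shape
    eh, ew = dilation * (kh - 1) + 1, dilation * (kw - 1) + 1  # effective kernel extent
    xp = np.pad(x, ((eh // 2, eh // 2), (ew // 2, ew // 2)))
    out = np.zeros(x.shape)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            # Sample every `dilation`-th pixel inside the effective window
            out[i, j] = np.sum(xp[i:i + eh:dilation, j:j + ew:dilation] * kernel)
    return out


def receptive_field(kernel_size, dilations):
    """Receptive field (in pixels) of stacked stride-1 convolutions."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


impulse = np.zeros((9, 9))
impulse[4, 4] = 1.0
response = dilated_conv2d(impulse, np.ones((3, 3)), dilation=2)
print(response.shape)                 # (9, 9): same grid as the input
print(receptive_field(3, [1, 2, 3]))  # 13 pixels, i.e. 130 m at 10 m resolution
print(receptive_field(3, [1, 1, 1]))  # 7 pixels without dilation
```

The same three 3 x 3 layers see a 13-pixel window instead of a 7-pixel one, which is the kind of wider spatial context that helps decide whether a pixel lies on a field boundary.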

5. Discussion

Identifying boundaries is a difficult problem, especially when they are detected from medium spatial resolution data such as Sentinel-2 images. Under the definition of AFB adopted in this study, an AFB is not necessarily associated with objects such as roads and ditches. We regard the AFB as the transition from one agricultural field to another, or from an agricultural field to a non-agricultural area. While roads and ditches may appear as boundaries in the image, in this study we classified them as non-agricultural land cover types rather than as boundaries themselves. Taking these criteria into consideration, we mapped the boundaries as the outer extent of agricultural fields, which do not have a specific size.
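
Under this definition, a boundary raster can be derived from a raster of field labels by marking each agricultural pixel that has a 4-neighbour with a different label (another field or non-agricultural land). The sketch below assumes a hypothetical label convention (0 for non-agricultural land, positive integers for field IDs) and a one-pixel-wide boundary on the field side; it illustrates the definition rather than reproducing how the reference data of this study were produced.

```python
import numpy as np


def field_boundaries(labels):
    """Mark agricultural pixels (label > 0) that touch a pixel with a different label.

    Edge padding means the image border itself is not treated as a boundary.
    """
    padded = np.pad(labels, 1, mode="edge")
    h, w = labels.shape
    is_boundary = np.zeros((h, w), dtype=bool)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        neighbour = padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w]
        is_boundary |= neighbour != labels
    return is_boundary & (labels > 0)


labels = np.zeros((6, 6), dtype=int)
labels[1:5, 1:5] = 1  # one 4 x 4 field surrounded by non-agricultural land
print(field_boundaries(labels).sum())  # 12: the outer ring of the field
```

Roads or ditches would simply carry label 0 here, so they contribute to the boundary of the adjacent field without being labelled as boundary pixels themselves.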

In this section, we discuss the results of the compared methods in three subsections: we first describe the accuracy assessment strategy, then the applicability of the methods, and finally the limitations of the developed methods.

5.1. Accuracy Assessment Strategies

The F-score is an accuracy measure commonly used to assess land cover classification maps. We used the F-score to assess the performance of our methods in detecting agricultural field boundaries. Arguably, the F-score is a desirable measure for multi-class classification because it analyzes the accuracy of each specific class [26] rather than the overall aggregate accuracy for all classes. In this study, our aim was to assess the performance of the methods in detecting agricultural field boundaries, so we considered the boundaries as one class against the rest. The classes are unbalanced: the boundary class is much smaller than the non-boundary class. In this specific case, the F-score is therefore an appropriate and reliable accuracy measure, because it is computed from the precision and recall of the boundary class and, unlike overall accuracy, is not inflated by the large number of correctly classified non-boundary pixels.
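
The effect of class imbalance on the choice of measure can be illustrated with a small, made-up example. The sketch below (function name hypothetical) computes precision, recall, and F-score for the boundary class from two binary rasters, using the standard definitions P = TP / (TP + FP), R = TP / (TP + FN), and F = 2PR / (P + R). A map that predicts no boundaries at all still reaches 95% overall accuracy when only 5% of the pixels are boundaries, but its F-score is zero.

```python
import numpy as np


def boundary_scores(pred, ref):
    """Precision, recall and F-score of the boundary class (True = boundary)."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    tp = np.sum(pred & ref)
    fp = np.sum(pred & ~ref)
    fn = np.sum(~pred & ref)
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    f_score = 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0
    return precision, recall, f_score


ref = np.zeros(100, dtype=bool)
ref[:5] = True  # 5 % boundary pixels (made-up example)
all_background = np.zeros(100, dtype=bool)
overall_accuracy = np.mean(all_background == ref)
print(overall_accuracy, boundary_scores(all_background, ref)[2])  # 0.95 0.0
```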

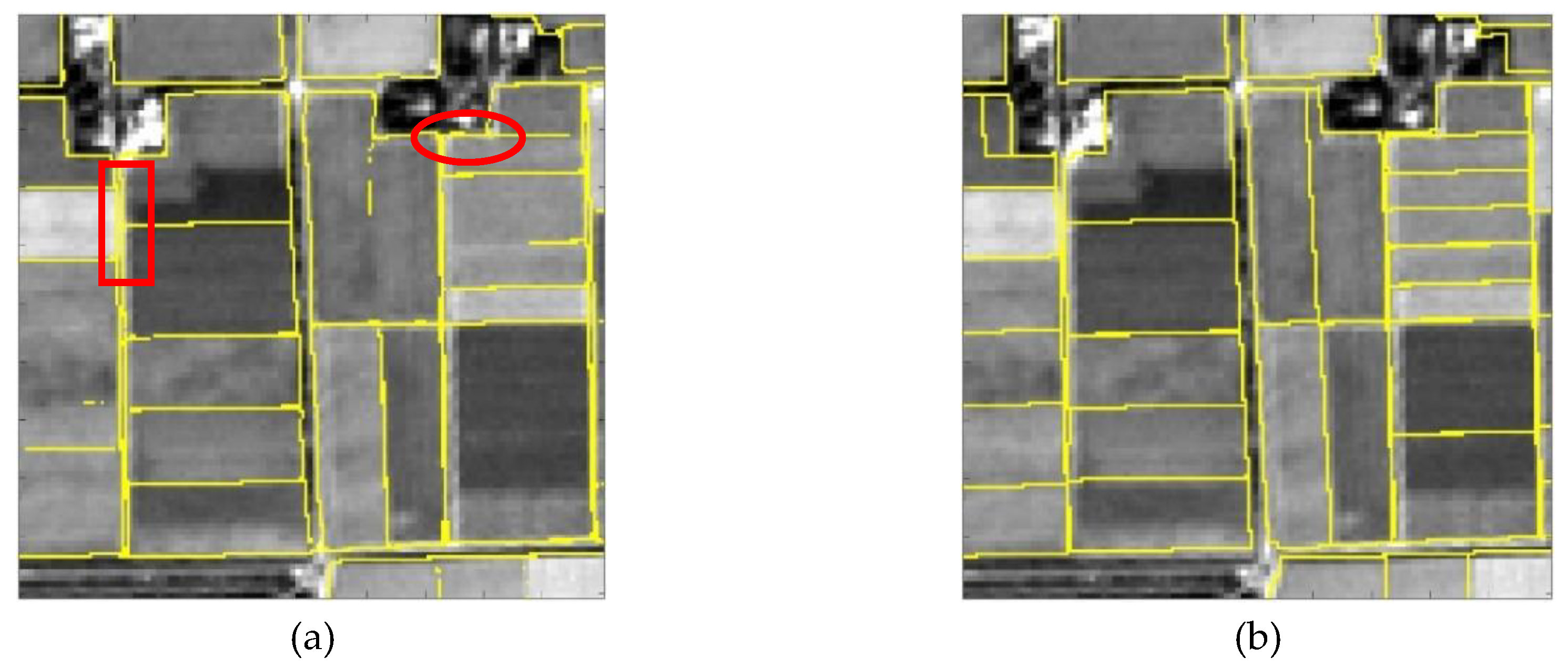

In this study, we assessed the accuracy of the method outputs against reference data. The reference data were rasterized from their original vector format to allow an automatic, pixel-based accuracy analysis of the FCN outputs, in which the classification results are compared with the reference data pixel by pixel. Despite its wide application and automated nature, the pixel-by-pixel approach requires both the classification output and the reference data to be in raster format, and thus forces the rasterization of the reference data. Rasterization of vector data, especially polylines, typically degrades data quality because of the stair-like structure of the rasterized lines. Such quality loss may bias the accuracy assessment. Using vector data as the reference for the accuracy assessment of boundaries will be considered in further studies.
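
The stair-like structure can be seen by rasterizing a single straight segment. The sketch below uses Bresenham's line algorithm as a stand-in for whatever rasterization tool was actually used (an assumption; GIS software may apply different burn-in rules). A diagonal reference line becomes a sequence of 8-connected steps, so a predicted boundary that is shifted by a single pixel no longer matches it pixel by pixel.

```python
import numpy as np


def bresenham(r0, c0, r1, c1):
    """Grid cells (row, col) visited by Bresenham's line from (r0, c0) to (r1, c1)."""
    cells = []
    dx, dy = abs(c1 - c0), -abs(r1 - r0)
    sx = 1 if c0 < c1 else -1
    sy = 1 if r0 < r1 else -1
    err = dx + dy
    r, c = r0, c0
    while True:
        cells.append((r, c))
        if r == r1 and c == c1:
            break
        e2 = 2 * err
        if e2 >= dy:  # step in the column direction
            err += dy
            c += sx
        if e2 <= dx:  # step in the row direction
            err += dx
            r += sy
    return cells


grid = np.zeros((3, 5), dtype=int)
for r, c in bresenham(0, 0, 2, 4):
    grid[r, c] = 1
print(grid)
# [[1 0 0 0 0]
#  [0 1 1 0 0]
#  [0 0 0 1 1]]
```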

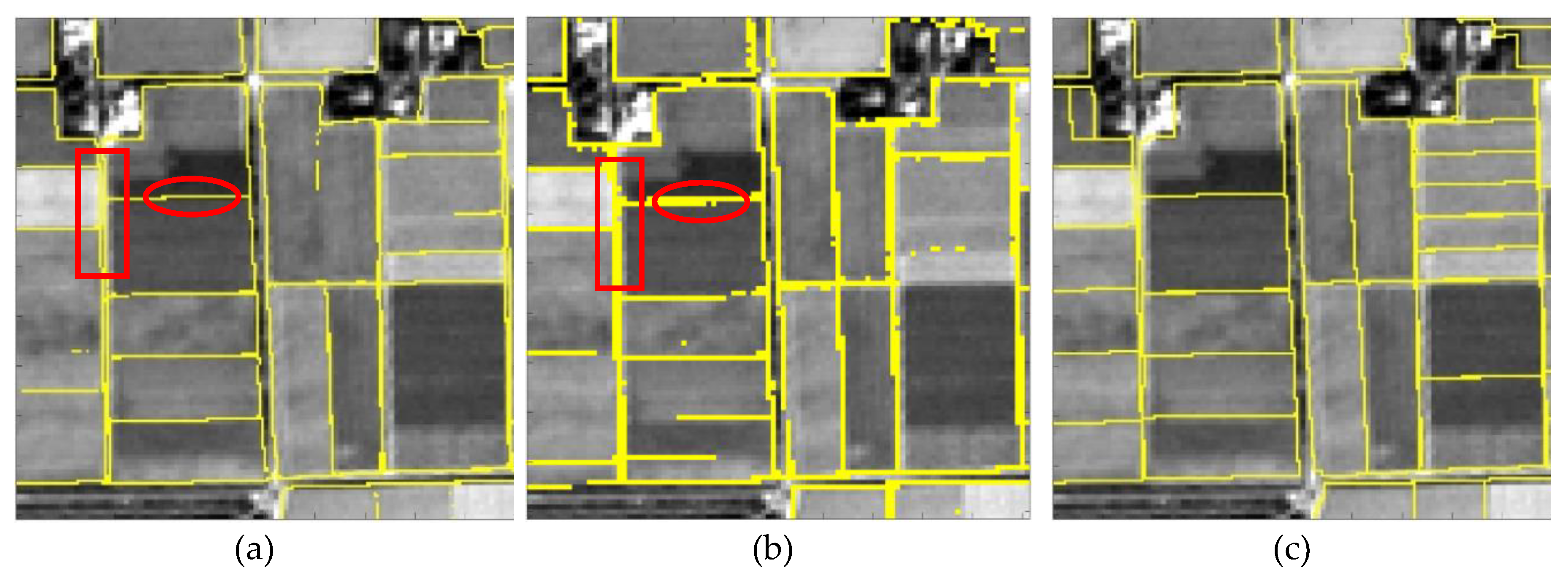

Based on the AFB results from SRC-Net, we conclude that delineating agricultural field boundaries from Sentinel-2 images using a novel super-resolution contour detector based on fully convolutional networks can produce boundaries at 5 m resolution with reasonable accuracy, as shown in Section 4.

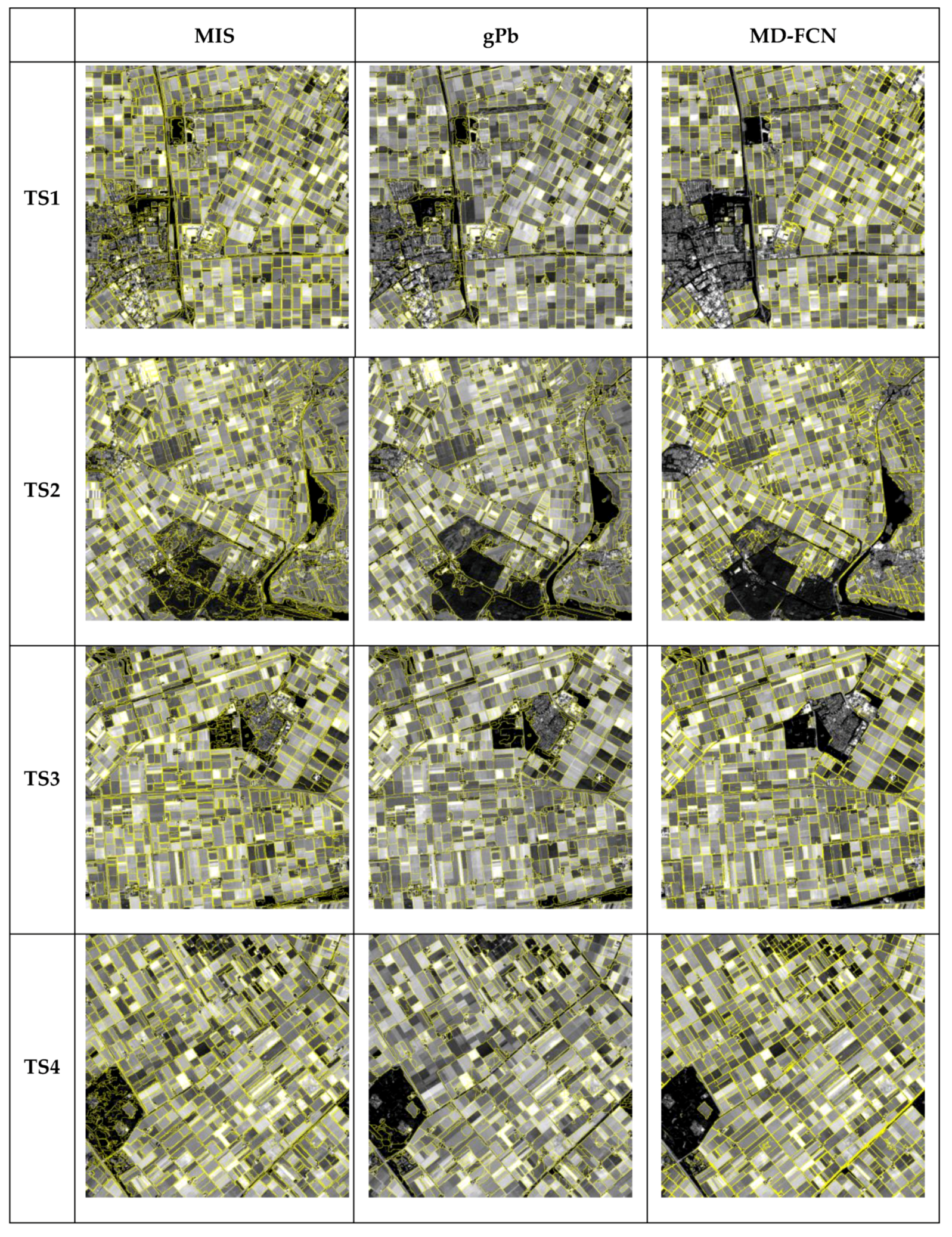

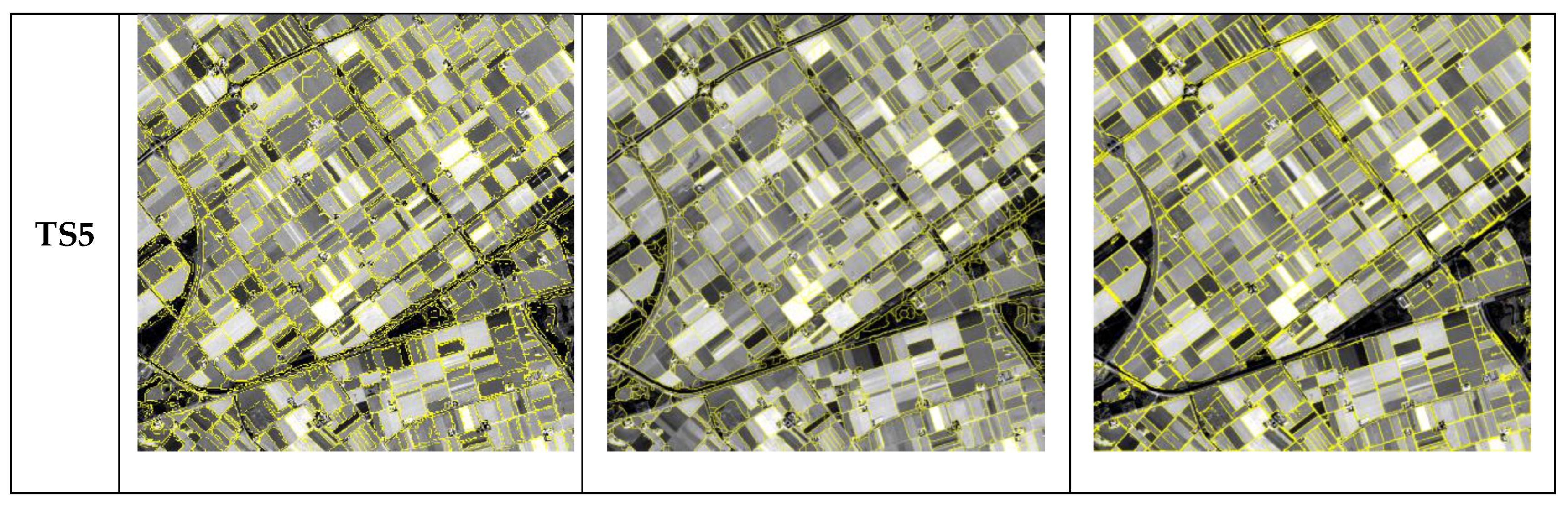

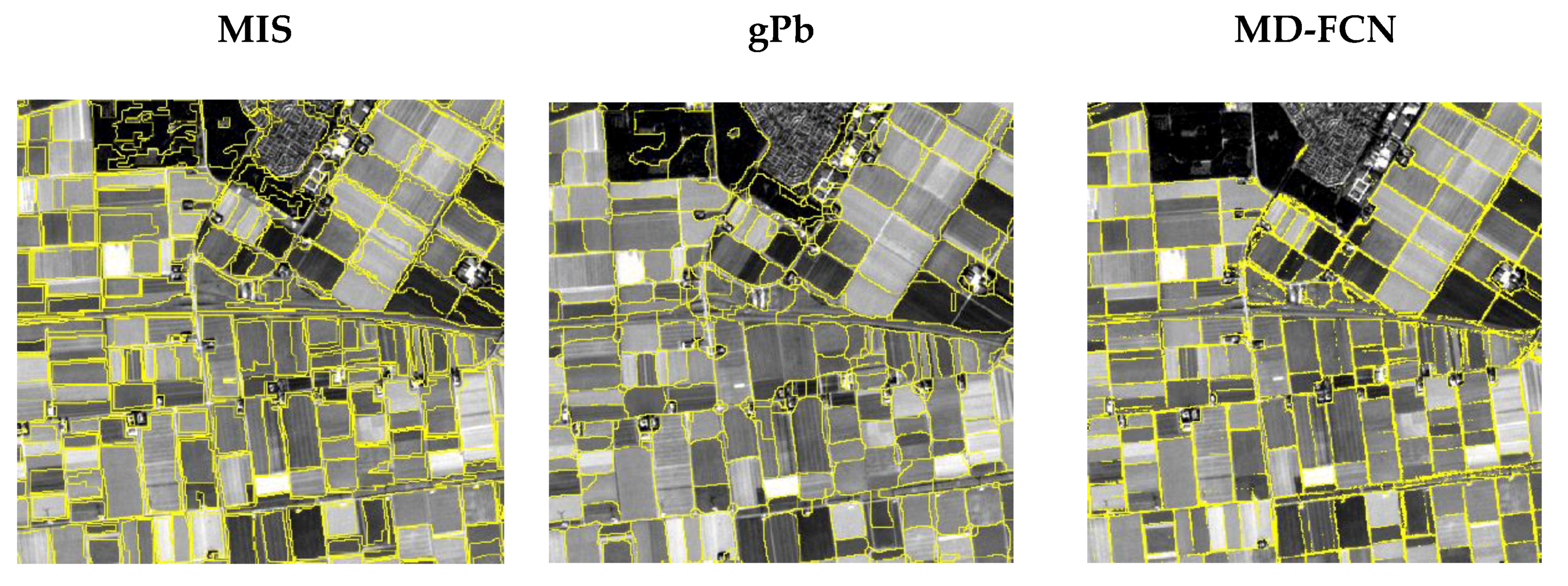

5.2. Applicability of the Methods

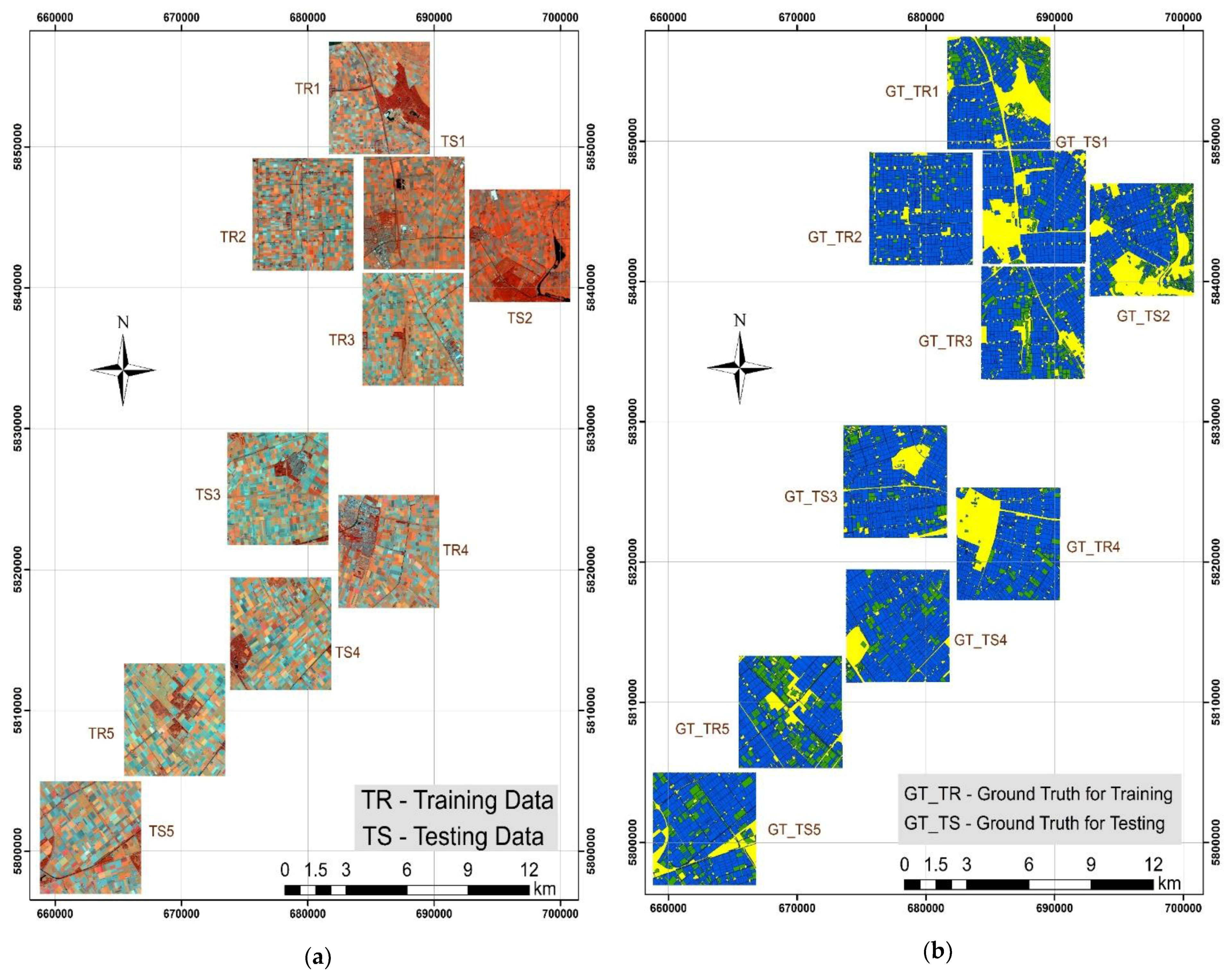

The deep learning methods developed in this study (MD-FCN and SRC-Net) are robust for detecting agricultural field boundaries. The methods are replicable and scalable to the whole province of Flevoland, because the data used for training the networks were representative of the whole province. It is therefore feasible to produce the map over a large area such as the entire province of Flevoland. Additionally, these methods can be applied to the whole of the Netherlands and to other countries, provided that enough training data are available. A challenging aspect is the processing time, especially in the training phase; in the testing phase, FCNs are computationally efficient.
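
Mapping a whole province with a trained FCN is commonly done tile by tile, so that memory use stays bounded. The paper does not describe its tiling scheme; the sketch below is a generic, hypothetical approach in which `predict_in_tiles`, `tile`, and `overlap` are illustrative names and `predict_fn` stands in for a trained network. Each tile is read with a margin of `overlap` pixels and only its central part is written back, so pixels near tile edges still see their full neighbourhood as long as the margin is at least the network's receptive-field radius.

```python
import numpy as np


def predict_in_tiles(image, predict_fn, tile=256, overlap=32):
    """Apply `predict_fn` to overlapping windows and stitch the central parts.

    `image` is (H, W) or (H, W, bands); `predict_fn` must return an (h, w) map
    for an input window of spatial size (h, w).
    """
    step = tile - 2 * overlap
    assert step > 0, "tile must be larger than twice the overlap"
    h, w = image.shape[:2]
    out = np.zeros((h, w))
    for r0 in range(0, h, step):
        for c0 in range(0, w, step):
            r1, c1 = min(r0 + step, h), min(c0 + step, w)          # core region
            wr0, wc0 = max(r0 - overlap, 0), max(c0 - overlap, 0)  # window with margin
            wr1, wc1 = min(r1 + overlap, h), min(c1 + overlap, w)
            pred = predict_fn(image[wr0:wr1, wc0:wc1])
            out[r0:r1, c0:c1] = pred[r0 - wr0:r1 - wr0, c0 - wc0:c1 - wc0]
    return out


def local_max(x):
    """Stand-in 'network' with a 3 x 3 receptive field (edge-padded max filter)."""
    p = np.pad(x, 1, mode="edge")
    h, w = x.shape
    return np.max([p[i:i + h, j:j + w] for i in range(3) for j in range(3)], axis=0)


rng = np.random.default_rng(0)
scene = rng.random((50, 70))
tiled = predict_in_tiles(scene, local_max, tile=16, overlap=2)
print(np.allclose(tiled, local_max(scene)))  # True: same result as one pass over the scene
```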

5.3. Limitation of the Methods

In this study, both methods that we developed (MD-FCN and SRC-Net) are time consuming as they approximately require 2 and 4 h for MD-FCN and SRC-Net respectively to train the model from a tile of 800

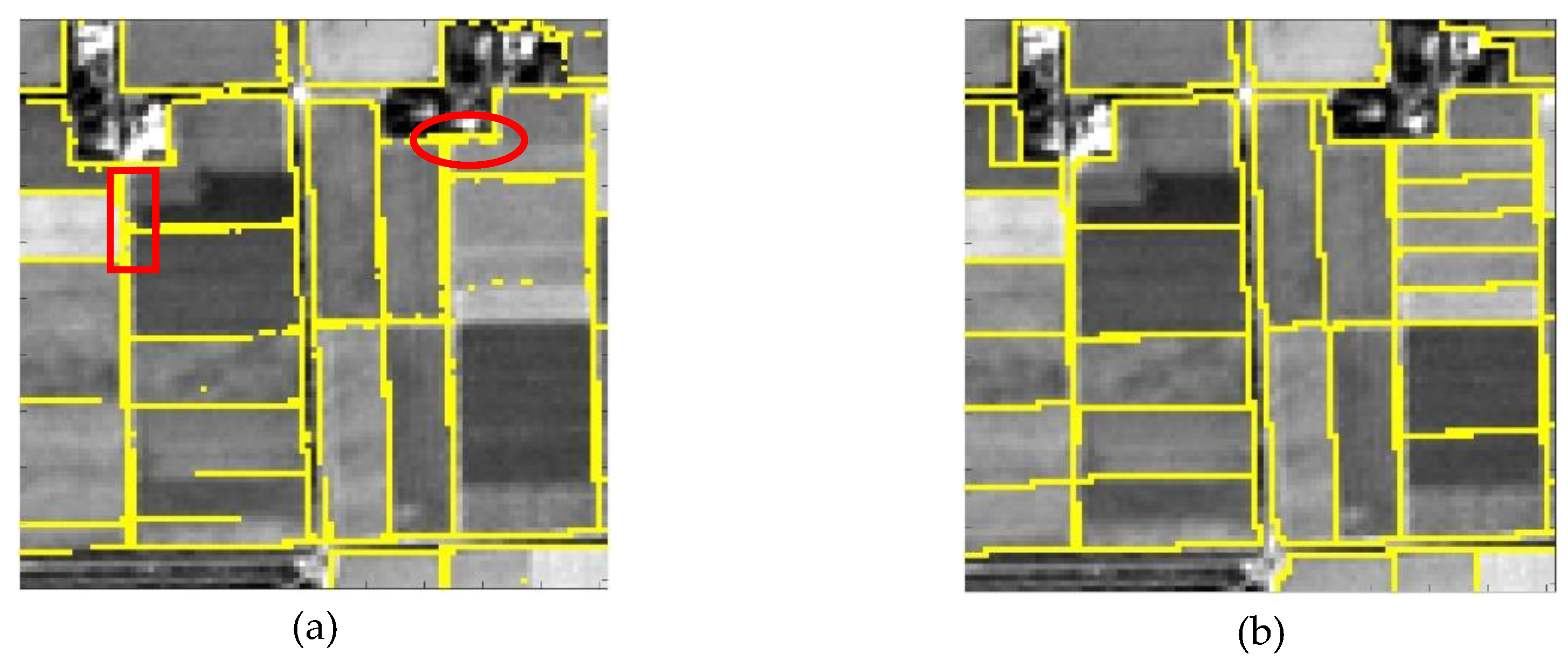

pixels at 10 m resolution using a graphical processing unit (GPU). This is because the methods are deep as they comprised a large number of convolutional layers. Therefore, the methods need more processing power including both central processing unit (CPU) and GPU. Additionally, for training and testing a large area, a random access memory (RAM) of at least 16 GB is needed for convenience performance of processing. In addition, all methods produced fragmented boundary maps. These outputs depict that the boundary delineation problem using FCN is a challenge because the networks learn small and thin features such as lines. The limitation can be solved by deriving a hierarchical segmentation as in [

4].

6. Conclusions

In reference to the accuracy assessment presented in Section 5.1, both MD-FCN and SRC-Net can be applied to detect the boundaries of agricultural fields. As baselines, we applied gPb and multiresolution image segmentation in eCognition. Based on the obtained accuracy, MD-FCN performs significantly better than the baseline methods, with an increase of 31%–32% in the average F-score. This is because MD-FCN can automatically learn discriminative spatial-contextual features from the input images and precisely generate the required outputs. Furthermore, SRC-Net reduces noise and increases the capability of separating different fields. The results of SRC-Net show that this network is applicable to detecting agricultural field boundaries, demonstrating the opportunity to use open and free Sentinel-2 data to automatically detect boundaries at 5 m resolution.

In future work, we aim to develop strategies to produce segmentations in which fragmented boundaries are connected to obtain closed contours. Additionally, we may apply MD-FCN to study areas other than Flevoland that have irregular fields. Future work should also investigate the use of vector reference data, as this could increase the measured classification accuracy, especially when a buffer is applied around the reference features. This technique would allow us to include a location tolerance, hence improving the accuracy assessment.
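
A location tolerance of this kind can be sketched on rasters by buffering (dilating) each boundary map before matching: a predicted boundary pixel counts as correct if it lies within `radius` pixels of a reference boundary, and vice versa for recall. This is a hypothetical, raster-based illustration with a square buffer; the names `dilate` and `tolerant_f_score` and the radius values are assumptions, and a vector-based assessment would buffer the reference polylines instead.

```python
import numpy as np


def dilate(mask, radius):
    """Binary dilation with a (2 * radius + 1)-pixel square structuring element."""
    h, w = mask.shape
    padded = np.pad(mask, radius)
    out = np.zeros_like(mask)
    for dr in range(2 * radius + 1):
        for dc in range(2 * radius + 1):
            out |= padded[dr:dr + h, dc:dc + w]
    return out


def tolerant_f_score(pred, ref, radius=1):
    """F-score of the boundary class allowing `radius` pixels of positional error."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    precision = (pred & dilate(ref, radius)).sum() / pred.sum() if pred.any() else 0.0
    recall = (ref & dilate(pred, radius)).sum() / ref.sum() if ref.any() else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0


ref = np.zeros((10, 10), dtype=bool)
ref[:, 5] = True   # reference boundary
pred = np.zeros((10, 10), dtype=bool)
pred[:, 6] = True  # prediction shifted by one pixel (10 m)
print(tolerant_f_score(pred, ref, radius=0), tolerant_f_score(pred, ref, radius=1))  # 0.0 1.0
```

With no tolerance, a one-pixel offset scores zero even though the boundary is essentially correct; a one-pixel buffer recovers it, which is why the choice of tolerance should be reported alongside the F-score.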