1. Introduction

Numerous monitoring and surveillance imaging systems for outdoor and indoor environments have been developed during the past decades, based mainly on standard RGB optical cameras, usually CCTV. However, RGB sensors provide relatively limited spectral information, especially in challenging scenes under problematic conditions such as constantly changing irradiance and illumination, or the presence of smoke or fog.

During the last decade, research and development in optics, photonics, and nanotechnology have enabled the production of innovative video sensors that cover a wide range of the ultraviolet, visible, and near-, shortwave-, and longwave-infrared spectrum. Multispectral and hyperspectral video sensors have been developed, based mainly on (a) filter wheels, (b) micropatterned coatings on individual pixels, and (c) optical filters monolithically integrated on top of CMOS image sensors. In particular, hyperspectral video technology has been employed for the detection and tracking of moving objects in engineering, security, and environmental monitoring applications. Indeed, several detection algorithms have been proposed for various applications, with moderate to sufficient effectiveness [1,2]. Hyperspectral video systems have also been employed for developing object tracking solutions through hierarchical decomposition for chemical gas plume tracking [3]. Multiple object tracking based on background estimation in hyperspectral video sequences, as well as multispectral change detection through joint dictionary data, have also been addressed [4,5]. Certain processing pipelines have also been proposed to address changing environmental illumination conditions [2]. Scene recognition and video summarization have also been proposed based on machine learning techniques and RGB data [6,7].

These detection capabilities are gradually starting to be integrated with other video modalities like, e.g., standard optical (RGB), thermal, and other sensors towards the effective automation of the recognition modules. For security applications, the integration of multisensor information has been recently proposed towards the efficient fusion of the heterogeneous information towards robust large-scale video surveillance system [

8]. In particular, multiple target detection, tracking, and security event recognition is an important application of computer vision with significant attention on human motion/activity recognition and abnormal event detection [

9]. Most algorithms are based on learning robust background models from standard optical RGB cameras [

10,

11,

12,

13,

14] and more recently from other infrared sensors and deep learning architectures [

15]. However, estimating a foreground/background model is very sensitive to illumination changes. Moreover, extracting the foreground objects as well as recognizing its semantic class/label is not always trivial. In particular, in challenging outdoor (like in

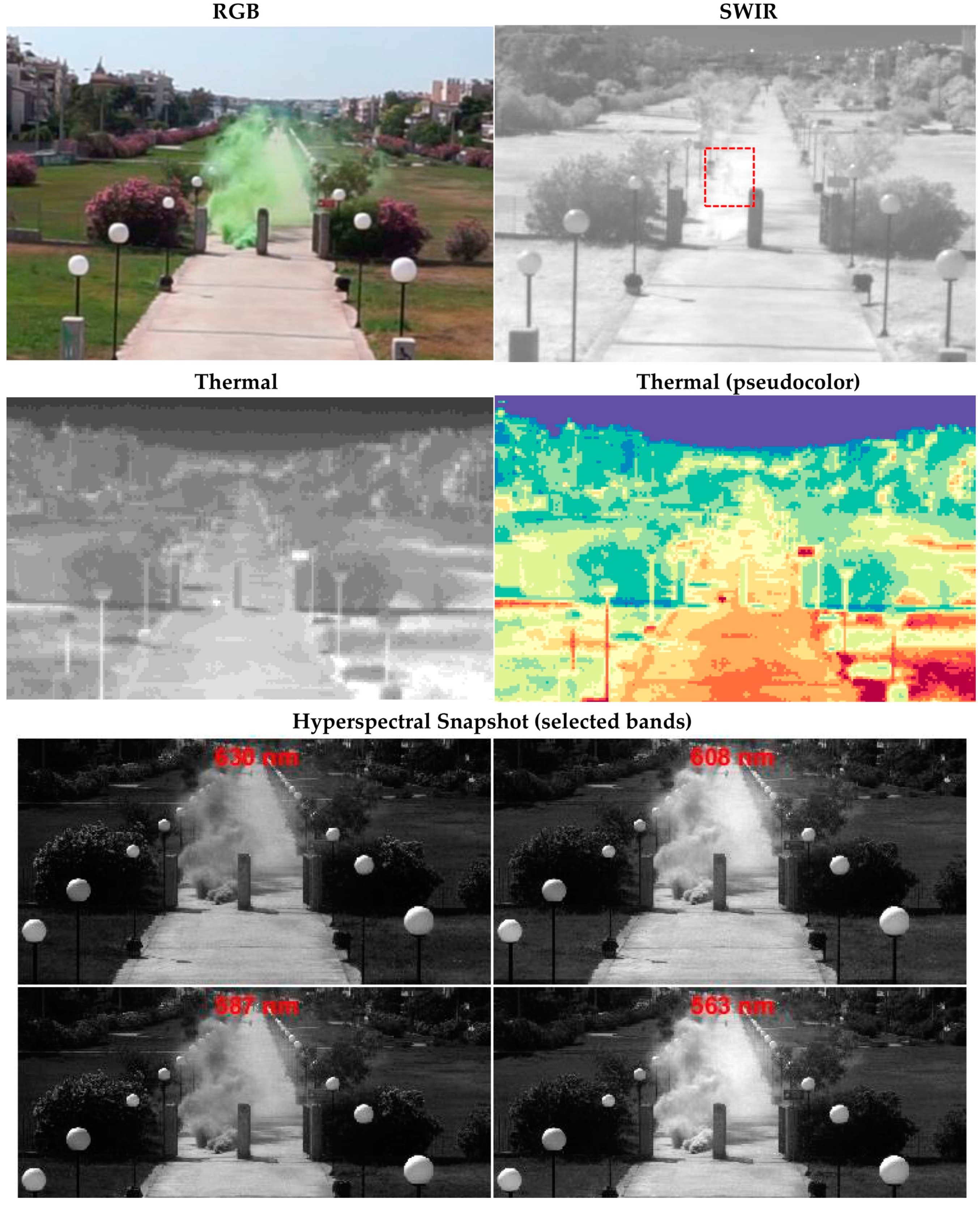

Figure 1) and indoor scenes with a rapidly changing background such algorithms fail to model the background efficiently, resulting to several false positives or negatives.

Recent advances in machine learning have provided robust and efficient tools for object detection (i.e., predicting a bounding box around each object of interest in order to locate it within the image plane) based on deep neural network architectures. Since the number of object occurrences in the scene is not known a priori, a standard convolutional network followed by a fully connected layer, which has a fixed-size output, is not adequate. In order to limit the search space (the number of image regions to search for objects), the R-CNN [16] method proposed extracting just 2000 regions from the image, generated by a selective search algorithm. Building on R-CNN, the Fast R-CNN method was proposed: instead of feeding each region proposal to the CNN, it feeds the whole input image to the CNN to generate a convolutional feature map. Thus, it is not necessary to feed 2000 region proposals to the convolutional neural network; instead, the convolution operation is done only once per image [17]. Improving upon this, a more recent approach utilizes a separate network to predict the region proposals. The proposals are reshaped to a fixed size by a RoI pooling layer, and the pooled features are then used to classify the content of each proposed region and to predict the offset values for the bounding boxes (Faster R-CNN, hereafter FR-CNN [17]). In the same direction, the Mask R-CNN deep framework [18] decomposes the instance segmentation problem into the subtasks of bounding box object detection and mask prediction. These subtasks are handled by dedicated networks that are trained jointly. Briefly, Mask R-CNN is based on the FR-CNN [17] bounding box detection model with an additional mask branch, which is a small fully convolutional network (FCN) [19]. At inference, the mask branch is applied to each detected object in order to predict an instance-level foreground segmentation mask.
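The RoI pooling step described above, which turns region proposals of arbitrary size into fixed-size inputs for the classification and box-regression heads, can be illustrated with a minimal NumPy sketch. It assumes a single (C, H, W) feature map, integer RoI coordinates already mapped to feature-map resolution, and max pooling over a fixed output grid. The function name `roi_max_pool` is hypothetical; this is not the FR-CNN reference implementation, which operates on batches inside the network.

```python
import numpy as np


def roi_max_pool(feature_map, roi, out_size=(2, 2)):
    """Max-pool one region of interest of a (C, H, W) feature map to a fixed
    (C, out_h, out_w) grid, in the spirit of a RoI pooling layer.

    roi = (x0, y0, x1, y1) in feature-map coordinates, end-exclusive, non-empty.
    """
    x0, y0, x1, y1 = roi
    region = feature_map[:, y0:y1, x0:x1]
    c, h, w = region.shape
    out_h, out_w = out_size
    # Bin edges: split the RoI into out_h x out_w roughly equal cells.
    ys = np.linspace(0, h, out_h + 1).astype(int)
    xs = np.linspace(0, w, out_w + 1).astype(int)
    pooled = np.empty((c, out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            # Guarantee that every bin covers at least one feature-map cell.
            y_end = max(ys[i + 1], ys[i] + 1)
            x_end = max(xs[j + 1], xs[j] + 1)
            pooled[:, i, j] = region[:, ys[i]:y_end, xs[j]:x_end].max(axis=(1, 2))
    return pooled


fm = np.arange(16, dtype=float).reshape(1, 4, 4)
print(roi_max_pool(fm, (0, 0, 4, 4)).shape)  # a 4x4 RoI pooled to 2x2
print(roi_max_pool(fm, (0, 0, 3, 2)).shape)  # a 3x2 RoI pooled to the same 2x2
```

Whatever the size of the proposal, the output grid is the same, which is what allows a single fully connected head to process all proposals of an image.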

All of the aforementioned object detection algorithms use regions to localize the object within the image: the network does not look at the complete image but, instead, at parts of the image that have high probabilities of containing an object/target. In contrast, the YOLO (‘You Only Look Once’) method [20] employs a single convolutional network that predicts the bounding boxes and the class probabilities for these boxes directly from the full image. YOLO has demonstrated state-of-the-art performance on a number of benchmark datasets, while further advancements have already been proposed [21] towards improving both accuracy and computational performance for real-time applications.
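To make the single-network formulation more concrete, the following sketch decodes an output tensor laid out as in the original YOLO formulation: an S × S grid in which each cell predicts B boxes (x, y, w, h, objectness) and one shared set of C class probabilities. It is a simplified illustration under stated assumptions: `decode_yolo_v1` is a hypothetical helper, w and h are assumed to already be fractions of the image size (the original network regresses their square roots), and non-maximum suppression is omitted. The YOLO version integrated in this work may use a different, anchor-based output layout.

```python
import numpy as np


def decode_yolo_v1(pred, num_boxes, img_w, img_h, score_thresh=0.5):
    """Decode a YOLOv1-style S x S x (B*5 + C) tensor into detections.

    Assumed layout per cell: B blocks of (x, y, w, h, objectness), followed by
    C class probabilities shared by all boxes of that cell. x, y are offsets
    inside the cell in [0, 1]; w, h are fractions of the image size.
    Returns a list of (x0, y0, x1, y1, class_id, score) in pixels.
    """
    s = pred.shape[0]
    class_probs = pred[..., num_boxes * 5:]
    detections = []
    for row in range(s):
        for col in range(s):
            for b in range(num_boxes):
                x, y, w, h, obj = pred[row, col, b * 5:(b + 1) * 5]
                # Class-specific confidence = objectness * conditional class probability.
                scores = obj * class_probs[row, col]
                cls = int(np.argmax(scores))
                score = float(scores[cls])
                if score < score_thresh:
                    continue
                # Convert the cell-relative centre to absolute image coordinates.
                cx = (col + x) / s * img_w
                cy = (row + y) / s * img_h
                bw, bh = w * img_w, h * img_h
                detections.append((cx - bw / 2, cy - bh / 2,
                                   cx + bw / 2, cy + bh / 2, cls, score))
    return detections
```

For example, a 7 × 7 grid with B = 2 and C = 3, in which only the centre cell has a confident box, yields a single detection centred in a 448 × 448 image.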

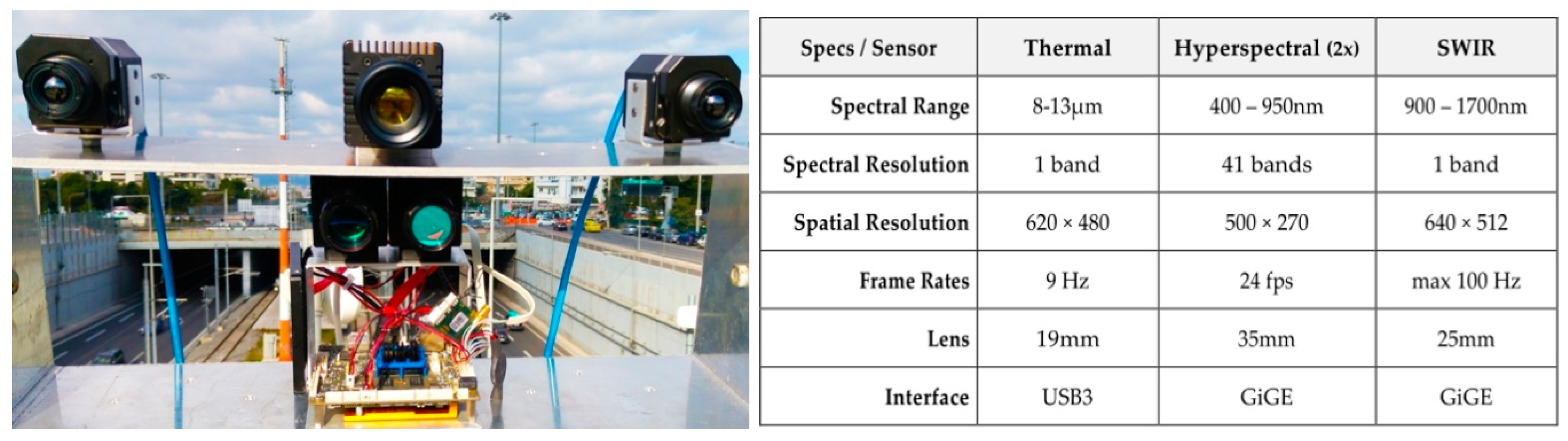

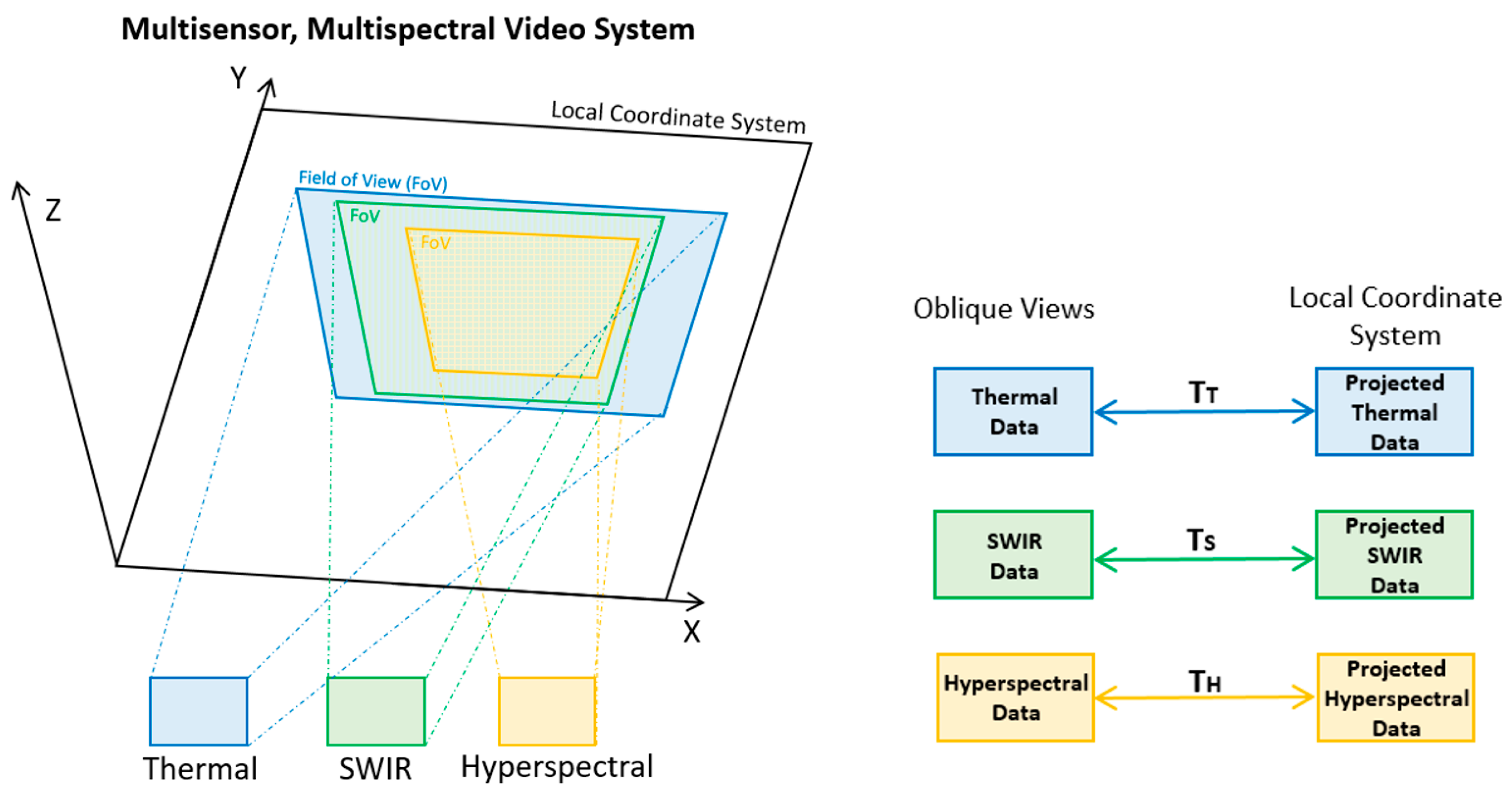

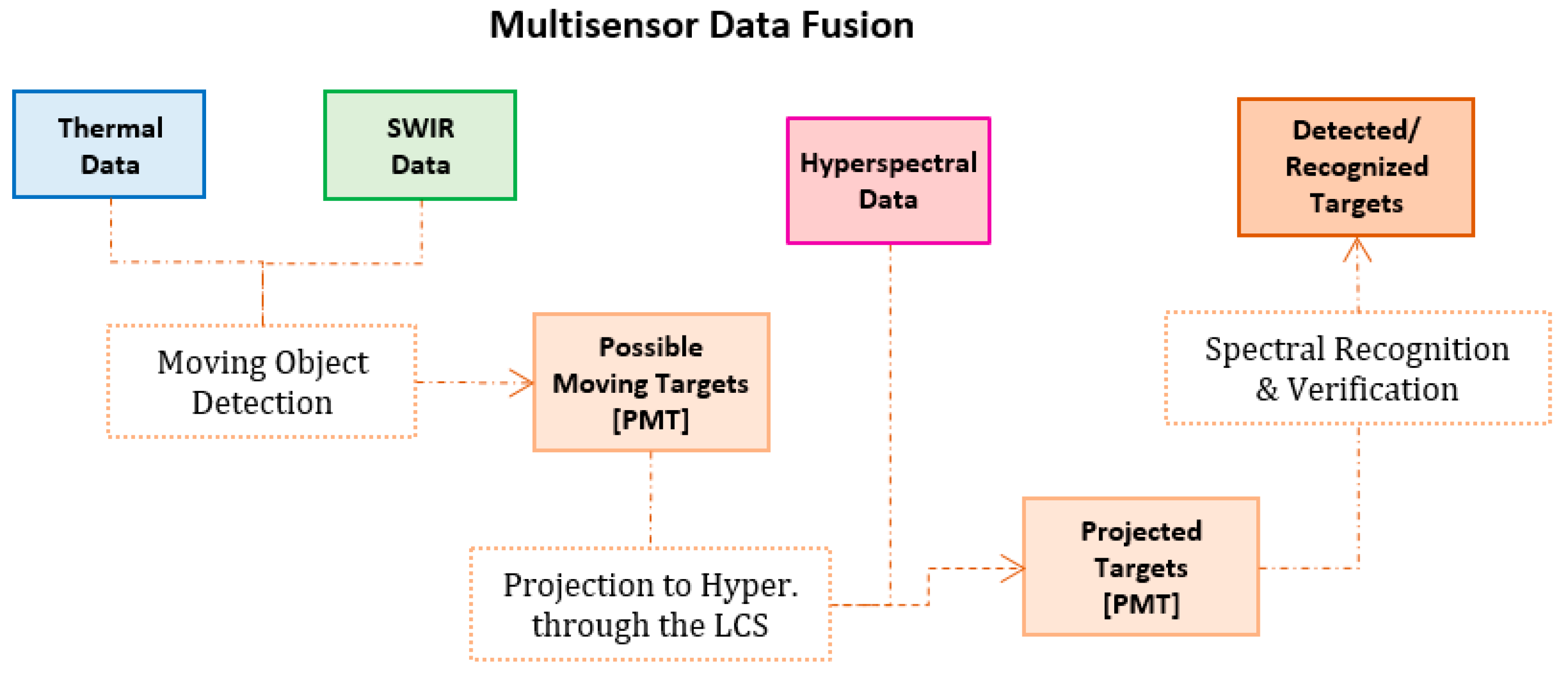

Towards a similar direction, and additionally aiming at exploiting multisensor imaging systems for challenging indoor and outdoor scenes, in this paper we propose a fusion strategy along with an object/target detection and verification processing pipeline for monitoring and surveillance tasks. In particular, we build upon recent developments [4,22] on classification and multiple object tracking from a single hyperspectral sensor and have additionally integrated thermal and shortwave infrared (SWIR) video sensors, similar to [23]. However, apart from the dynamic background modeling and subtraction scheme of [23], here we have integrated state-of-the-art deep architectures for supervised target detection, making the developed framework detector-agnostic. Therefore, the main novelty of the paper lies in the proposed processing framework, which can be deployed on single-board computers locally near the sensors (at the edge). It is able to exploit all imaging modalities without having to process every acquired hypercube in full; only selected parts, i.e., candidate regions, are processed, allowing for real-time performance. Moreover, the developed system has been validated on both indoor and outdoor datasets in challenging scenes under different conditions, such as the presence of fog, smoke, and rapidly changing illumination.
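The idea of processing only candidate regions rather than whole hypercubes can be illustrated with a minimal NumPy sketch. It assumes the hypercube is stored as a (rows, cols, bands) array and that each candidate has already been mapped into hypercube pixel coordinates as an axis-aligned box (e.g., the enclosing rectangle of a projected detection). The helper name `candidate_spectra` and the choice of a per-region mean spectrum as the extracted feature are illustrative assumptions, not the implementation used in this work.

```python
import numpy as np


def candidate_spectra(hypercube, boxes):
    """Return the mean spectrum of each candidate region of a hypercube.

    hypercube: array of shape (rows, cols, bands).
    boxes: iterable of (x0, y0, x1, y1) integer pixel boxes, end-exclusive,
           already expressed in hypercube pixel coordinates.
    Only the pixels inside the boxes are read, not the full cube.
    """
    rows, cols, _ = hypercube.shape
    spectra = []
    for x0, y0, x1, y1 in boxes:
        # Clip each box to the image extent so slicing never goes out of bounds.
        x0, x1 = max(0, x0), min(cols, x1)
        y0, y1 = max(0, y0), min(rows, y1)
        if x1 <= x0 or y1 <= y0:
            spectra.append(None)  # the box lies entirely outside the cube
            continue
        # Basic slicing returns a view of just the candidate region.
        roi = hypercube[y0:y1, x0:x1, :]
        spectra.append(roi.mean(axis=(0, 1)))
    return spectra
```

Because the cost scales with the candidate area rather than with the full spatial extent of the cube, this kind of region-only access is what makes processing on a single-board computer plausible.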

3. Experimental Results and Validation

3.1. Indoor/Outdoor Datasets, Implementation Details, and Evaluation Metrics

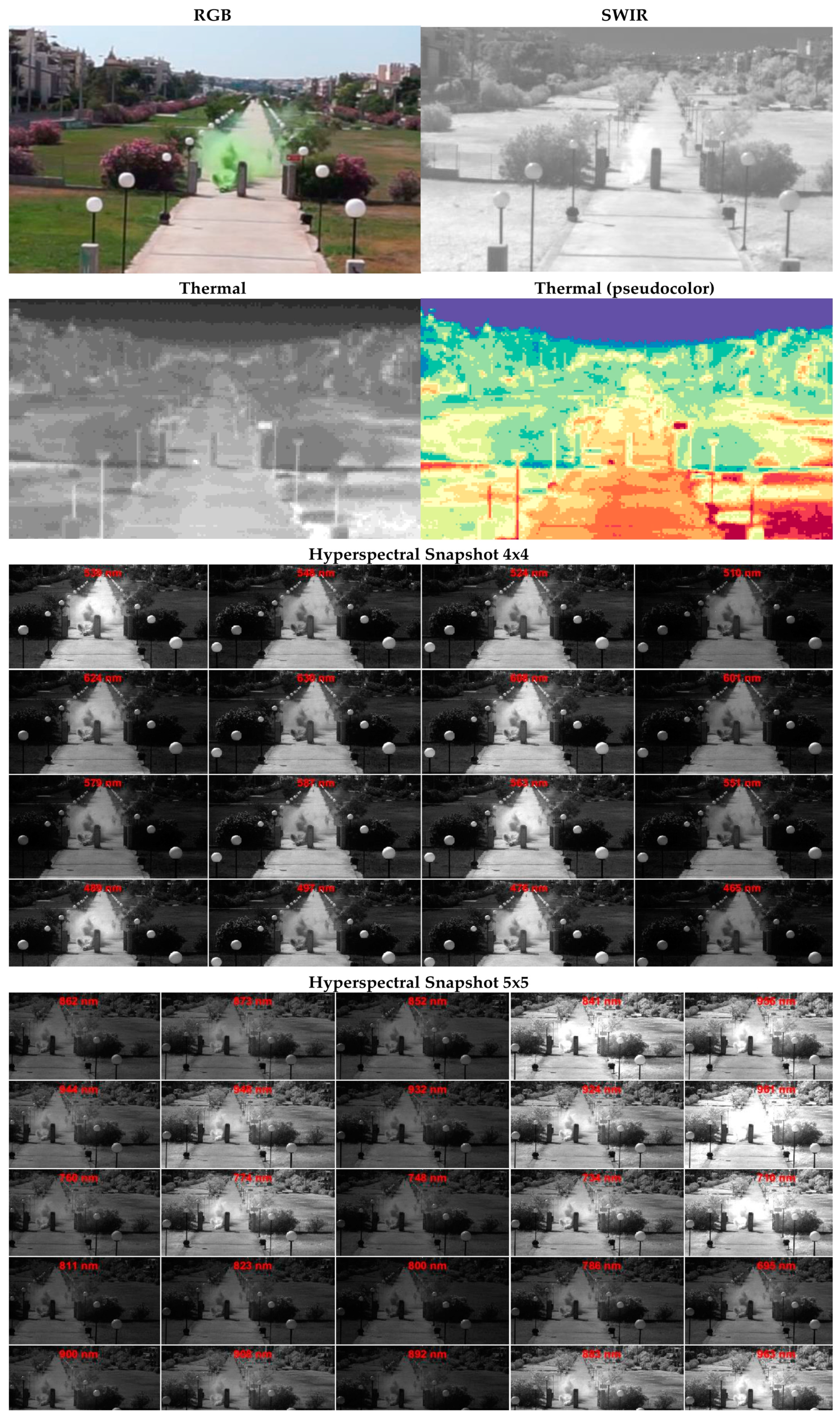

Several experiments were performed in order to develop and validate the performance of the different hardware and software modules. Three benchmark datasets acquired in challenging environments were employed for the quantitative and qualitative evaluation, i.e., one outdoor (outD1) and two indoor ones (inD1 and inD2). The first one (outD1) was collected from the rooftop of a building overlooking a park walkway, situated above the Vrilissia Tunnel of Attica Tollways, in Northern Attica, Greece. The distance between the camera and the object was ~70 m. The conditions were sunny, but gusts of wind disrupted the data acquisition, leading to a video sequence that was not perfectly stabilized; this was addressed by the coregistration software modules in near real time. For the generation of smoke, a smoke bomb was used, which produced a dense green cloud of smoke that lasted a couple of minutes. As a result, smoke was present in only a small number of the acquired frames. The two indoor datasets (inD1, inD2) were collected at the School of Rural and Surveying Engineering, in the Zografou Campus of the National Technical University of Athens, Greece. The lecture room has an array of windows on two walls. The distance between the camera and the region of interest (ROI) was approximately 15 m. The day was partially cloudy, with illumination changes from moving clouds scattered throughout both datasets. Smoke was generated by a fog/smoke machine. Dataset inD1 was collected such that the initial frames were clear and the rest contained a relatively small amount of smoke. Dataset inD2 was acquired under the most challenging conditions: the room was almost full of smoke, with relatively lower intensity and contrast, and with illumination changes caused by the partially opened windows.

Regarding the implementation details of the integrated supervised deep learning methods (FR-CNN and YOLO), which require a training procedure, Table 1 briefly overviews the procedure. Although, according to the literature, both deep learning methods require large amounts of training data, we performed experiments with a limited amount of training data in order to evaluate their robustness for classes (e.g., pedestrians, people) on which they had already been pretrained, albeit not in challenging environments like those considered here. Indeed, for the outdoor dataset (outD1), only 100 frames were used for training. This can be considered a significantly limited number, especially as only one moving object appeared in all frames. The training process lasted 7 h for FR-CNN and 6 h for YOLO. For the indoor datasets, in order to examine the robustness of the training procedure, we built one single training model by collecting a small number of the initial frames from each dataset. In particular, 156 frames were taken from inD1 and 100 frames from inD2. The training process lasted 5 h for FR-CNN and 8 h for YOLO.

3.2. Quantitative Evaluation

The quantitative evaluation was performed in two stages: validation and testing. Validation was performed on a smaller number of annotated frames to provide an initial assessment of the overall achieved accuracy. Testing was then performed on a relatively large number of frames, for which the ground truth and the true positives (TPs), false positives (FPs), and false negatives (FNs) were counted through an intensive and laborious manual procedure.
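Once TPs, FPs, and FNs have been counted, the precision, recall, and multiple object detection accuracy (MODA) reported in the following tables follow directly from the counts. The sketch below assumes the common MODA definition with unit costs for misses and false positives, MODA = 1 − (FN + FP)/N_gt, where N_gt = TP + FN is the number of ground-truth objects, and assumes the counts are pooled over all frames; averaging per-frame scores would give slightly different values. The helper name `detection_metrics` and the example counts are hypothetical.

```python
def detection_metrics(tp, fp, fn):
    """Precision, recall and MODA from detection counts pooled over frames.

    Assumes MODA with unit costs for misses and false positives:
        MODA = 1 - (FN + FP) / N_gt,  with N_gt = TP + FN ground-truth objects.
    """
    n_gt = tp + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / n_gt if n_gt else 0.0
    moda = 1.0 - (fn + fp) / n_gt if n_gt else 0.0
    return {"precision": precision, "recall": recall, "moda": moda}


# Hypothetical counts, not taken from the paper's tables.
print(detection_metrics(tp=90, fp=10, fn=10))
```

Unlike precision and recall, MODA penalizes misses and false alarms jointly and is unbounded below, so a detector flooding the scene with false positives (as background subtraction can under illumination changes) may obtain a negative score.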

The quantitative evaluation results for the outdoor dataset outD1 are presented in Table 2. More specifically, the background subtraction (BS) method achieved the highest accuracy rates, with a precision of 0.90, a recall of 0.97, and a multiple object detection accuracy (MODA) of 0.88. The supervised methods (FR-CNN, YOLO) did not perform adequately, mainly due to the limited training data (only 100 frames with just one object) as well as the relatively small size of the objects/targets. For such small objects, it is difficult to retain strong discriminative features through the deep convolutional layers, whose feature maps progressively decrease in size.
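The effect of the progressively smaller feature maps on small targets can be quantified with simple arithmetic: an object of w × h pixels covers roughly (w/s) × (h/s) cells of a feature map with cumulative stride s. The sketch assumes strides of 4, 8, 16, and 32, which are typical of common detection backbones but are not necessarily those of the networks used here, and a hypothetical 12 × 24 pixel person, as might be expected at a ~70 m distance.

```python
def footprint_per_stage(obj_w_px, obj_h_px, strides=(4, 8, 16, 32)):
    """Approximate object size, in feature-map cells, at each cumulative stride."""
    return {s: (obj_w_px / s, obj_h_px / s) for s in strides}


def deepest_resolving_stride(obj_w_px, obj_h_px, strides=(4, 8, 16, 32)):
    """Largest stride at which the object still spans at least one cell in
    both dimensions, or None if it is sub-cell at every stride."""
    ok = [s for s in strides if min(obj_w_px, obj_h_px) / s >= 1.0]
    return max(ok) if ok else None


print(footprint_per_stage(12, 24))       # hypothetical 12 x 24 px target
print(deepest_resolving_stride(12, 24))  # deeper stages see it as sub-cell
```

At a stride of 32, such a target occupies less than one cell (0.375 × 0.75), so its features are mixed with the surrounding background in the deepest layers, which is consistent with the weaker FR-CNN and YOLO performance on outD1.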

In Table 3 and Table 4, the results from the two indoor datasets are presented. In particular, the quantitative results for the inD1 dataset in Table 3 indicate that the BS method failed to successfully detect the moving objects in the scene. This was mainly due to the significant illumination changes that dynamically affected every video frame. In particular, the windows around the room allowed the varying daylight of a cloudy day to constantly affect the scene illumination, resulting in challenging detection conditions for background modeling methods. Therefore, the BS method produced numerous false positives and relatively low accuracy rates. On the other hand, the supervised FR-CNN and YOLO methods achieved relatively high detection rates. In the validation phase, both algorithms performed similarly, producing very few false negatives (with a recall > 0.96 in both cases). Regarding false positives, FR-CNN delivered marginally more precise results (by approximately 5%). However, on the testing dataset, the precision scores were reversed, with the YOLO detector achieving a high precision rate of 0.99, while FR-CNN produced more false positives, achieving a precision of 0.77. Regarding false negatives, which are crucial for our proposed solution, FR-CNN provided very robust results, achieving a recall rate of 0.97, i.e., 14 percentage points higher than YOLO’s 0.83. Therefore, although the reported overall accuracy favors YOLO by 8%, FR-CNN remains a strong candidate, given our overall design and the crucial role of false negatives in monitoring and surveillance tasks.

For the second indoor dataset, which had a significant presence of smoke, the calculated quantitative results are presented in Table 4. In the validation phase, FR-CNN fit the same, relatively small, training set better. As in the previous case, YOLO did not achieve high accuracy rates during the validation phase. However, on the large testing dataset, the YOLO method achieved higher overall accuracy rates. The BS method again failed to provide any valuable results. The recall and precision rates were slightly lower than those for the inD1 dataset, which can be attributed to the presence of smoke. Indeed, the heavy smoke in this indoor scene was a challenge for the detectors, resulting in a number of false negatives, as objects were less likely to stand out from the background. Moreover, sudden random spikes and illumination changes resulted in false positives, albeit at a lower rate than in inD1.

Moreover, in order to assess the overall performance of each detector across all datasets, the overall accuracy results are presented in Table 5, for all indoor datasets (left) and for all, both indoor and outdoor, datasets (right). The overall accuracy of the considered detectors was 0.43, 0.66, and 0.59 for the BS, FR-CNN, and YOLO methods, respectively. These results are largely constrained by the relatively lower performance of the deep architectures on the outdoor (outD1) dataset, which can be attributed to the small number of frames available for training, given the demanding learning process of deep convolutional networks. Although FR-CNN and YOLO achieved relatively similar overall precision, FR-CNN delivered significantly higher recall rates and thus performed better overall than the other two methods. Nevertheless, the detection of small objects remains a challenge, mainly associated with the progressive downsampling within the convolutional neural networks.

3.3. Qualitative Evaluation

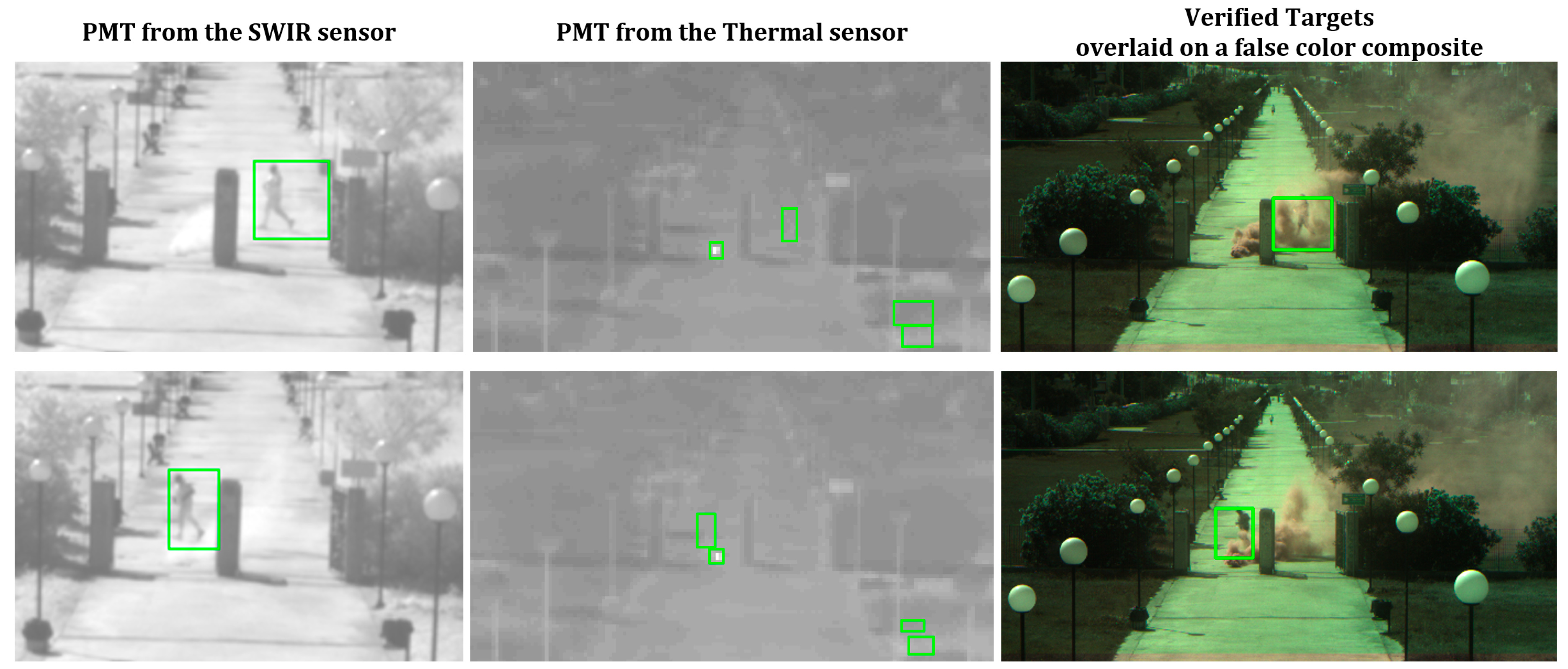

Apart from the quantitative evaluation, a qualitative one was also performed in order to better understand and report on the performance of the developed methodology and each integrated detector. Figure 8 presents indicative detection results based on the BS detector on the outD1 dataset. For example, at frame #33, the outline of the detected object describes the ground truth adequately. The final detected target is similarly accurate, and the person, who was moving behind low-density smoke, is visible in both the SWIR and the hyperspectral images. For frames #47 and #90, the outlines of the detected objects had a relatively irregular shape that did not perfectly match the actual ground truth. This can be attributed to the higher velocity of the detected object in this particular batch of frames, as well as to its movement behind relatively thick smoke clouds, which challenged the developed detection methodology. As a result, the corresponding final bounding boxes included more background pixels than the one in frame #33. It is also important to note that in these frames the object is not visible in any of the hyperspectral bands, as the accumulated smoke is too dense.

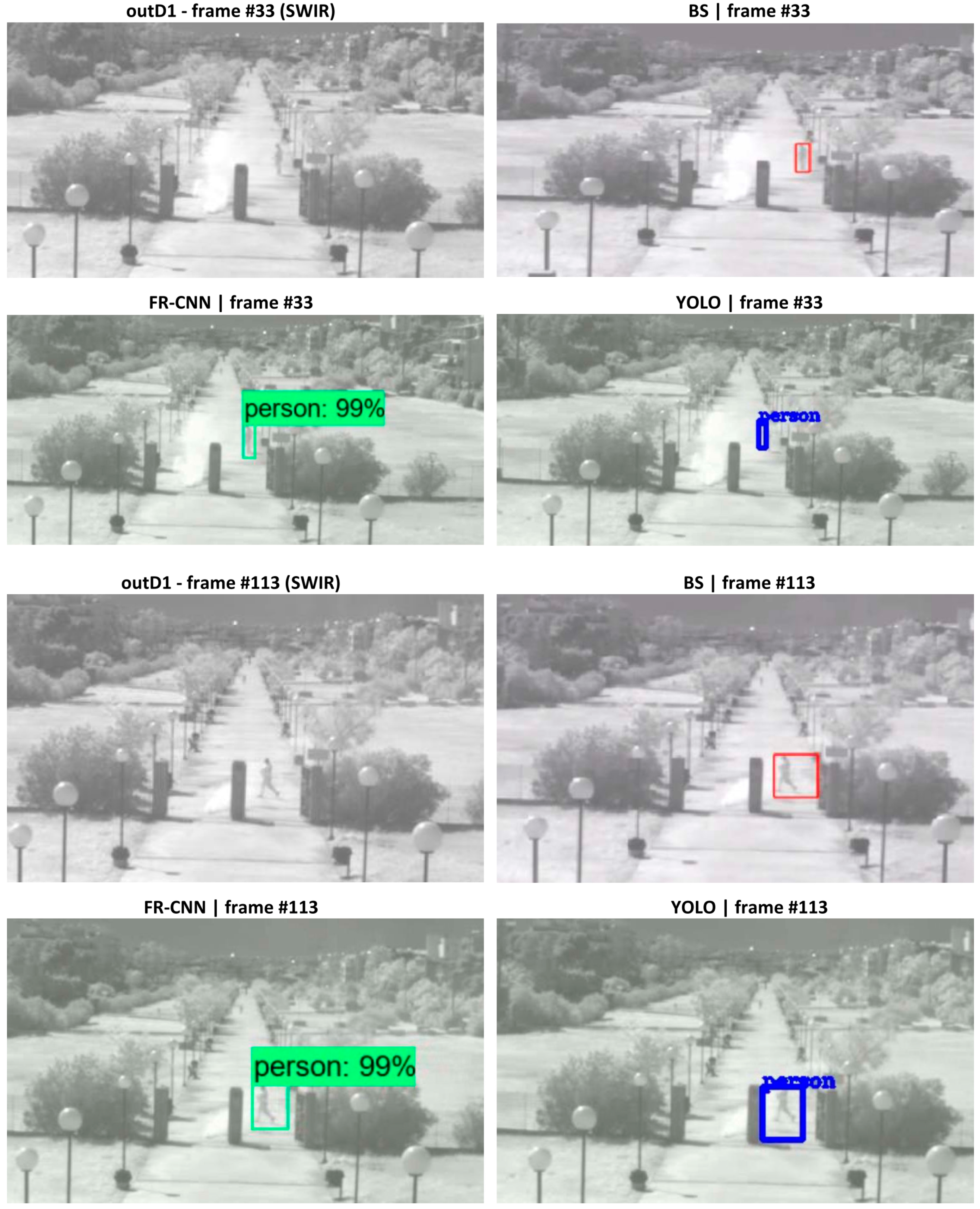

In Figure 9, indicative successful detection results on the outdoor outD1 dataset are presented from the integrated BS, FR-CNN, and YOLO detectors on the SWIR footage. At frame #33, the detection from the BS method is shown with a red bounding box, the FR-CNN result with a green bounding box, and the YOLO result with a blue bounding box. In all three cases, the detection result was adequate, with the corresponding bounding boxes tightly enclosing the target. At frame #113, which is presented using the same layout, the resulting bounding boxes successfully included the desired target; however, they were relatively larger and contained more background than those of frame #33, affecting the object and background statistics and the multitemporal modeling.
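How tightly a bounding box encloses the target, as discussed for frames #33 and #113, is commonly quantified with the intersection over union (IoU) between the detected and ground-truth boxes. The paper does not state that IoU was used in its evaluation; the sketch below only illustrates how a loose box that still fully contains the target is penalized. The helper name `iou` and all box coordinates are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x0, y0, x1, y1) axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    # Clamp to zero so that disjoint boxes have an empty intersection.
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


gt = (100, 100, 120, 160)       # hypothetical 20 x 60 px target
tight = (100, 100, 120, 160)    # box matching the target
loose = (90, 90, 130, 170)      # 40 x 80 px box that still contains it
print(iou(gt, tight), iou(gt, loose))
```

The loose box still counts as a correct detection, but its IoU drops to 0.375, meaning that 62.5% of its pixels are background; such pixels then enter the object statistics used by the multitemporal modeling.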

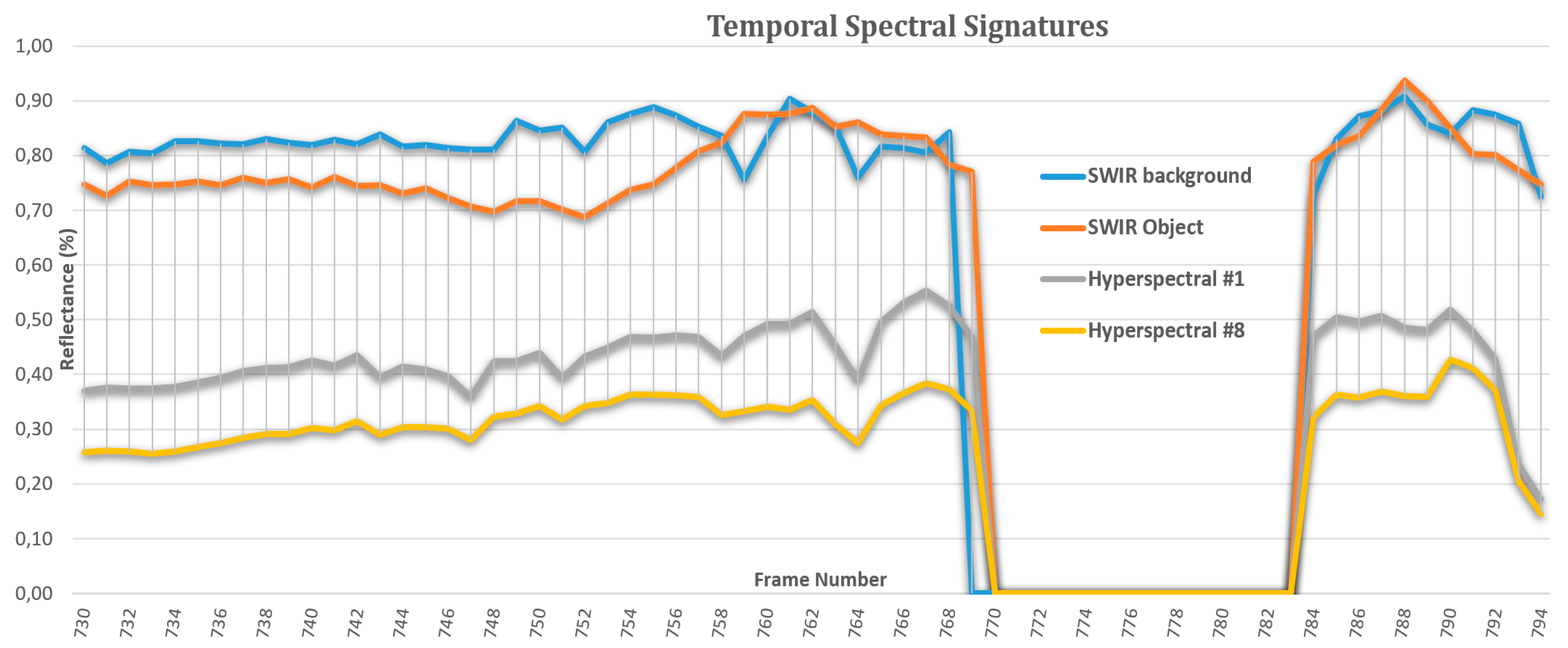

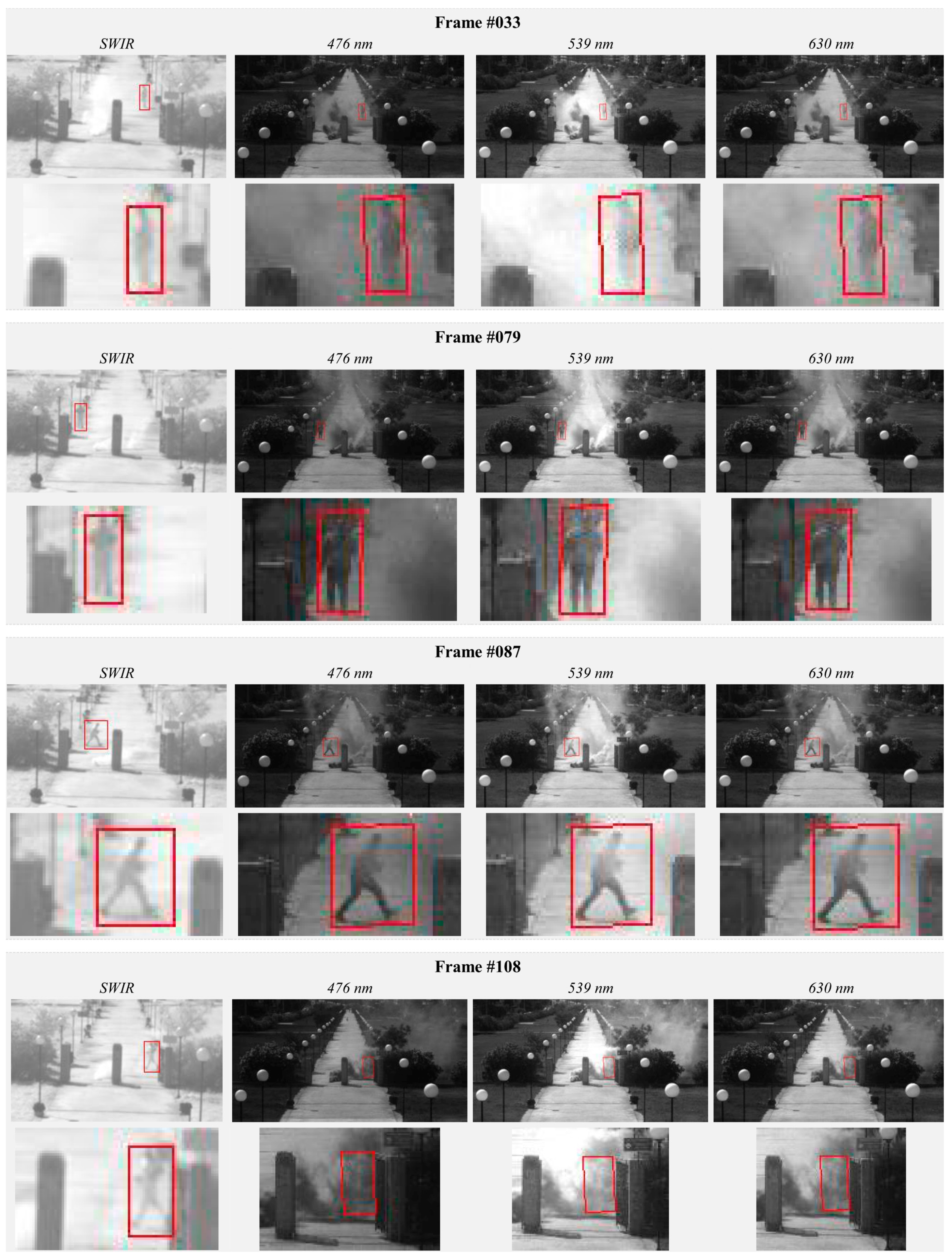

Moreover, in order to evaluate the overall performance of the developed approach, experimental results based on the BS detector are presented in Figure 10 with indicative frames (#033, #079, #087, and #108) from the outD1 dataset. In particular, the final detected moving targets are overlaid on the respective acquired SWIR image that was employed in the detection process, as well as on three hyperspectral bands centered at approximately 476, 539, and 630 nm. The bounding boxes are outlined in red, and zoomed-in views are also provided. It can be observed that projecting the rectangular bounding box onto the hyperspectral image plane slightly distorts it into a more general polygon.

In particular, for frame #033, the moving target can hardly be discriminated from the background in the SWIR imagery. However, the developed detection and data association procedure managed to correctly detect the target and project its bounding box onto the hyperspectral image plane. At frame #079, the detection also worked adequately, with the bounding box containing 100% of the moving target. At frame #087, even with the target moving relatively fast (running), the developed detection procedure achieved correct localization in the SWIR image and correct projection onto the hypercube. At frame #108, which represents a rather challenging scene with a dense smoke cloud, the algorithm managed to detect and successfully track the moving object. The projection onto the hyperspectral cube indicated relatively high reflectance values that matched neither the actual target nor the background, but rather the dense green smoke. The data association term indicated high confidence in the initial SWIR detection and, therefore, the final verified target correctly delineated the object boundaries.
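The slight distortion of the projected boxes can be reproduced with a short sketch, assuming that the SWIR-to-hyperspectral coregistration is expressed as a 3 × 3 planar homography H; this section does not specify the transformation actually used, and the matrix below is a hypothetical shear. Under a homography, the four corners of a rectangle map to a general quadrilateral, whose enclosing axis-aligned rectangle can then be used to crop the hypercube. The helper names `project_box` and `enclosing_box` are hypothetical.

```python
import numpy as np


def project_box(box, H):
    """Map the four corners of an axis-aligned (x0, y0, x1, y1) box through a
    3x3 homography H; returns a (4, 2) array of projected corner coordinates."""
    x0, y0, x1, y1 = box
    corners = np.array([[x0, y0, 1.0], [x1, y0, 1.0],
                        [x1, y1, 1.0], [x0, y1, 1.0]])
    mapped = corners @ H.T                 # homogeneous coordinates
    return mapped[:, :2] / mapped[:, 2:3]  # divide by w to get pixel coordinates


def enclosing_box(polygon):
    """Axis-aligned bounding rectangle of a projected polygon (for cropping)."""
    (x0, y0), (x1, y1) = polygon.min(axis=0), polygon.max(axis=0)
    return x0, y0, x1, y1


H_shear = np.array([[1.0, 0.1, 0.0],   # hypothetical coregistration matrix
                    [0.0, 1.0, 0.0],
                    [0.0, 0.0, 1.0]])
poly = project_box((0, 0, 10, 10), H_shear)
print(poly)                 # corners of a sheared, no longer axis-aligned box
print(enclosing_box(poly))  # rectangle enclosing the projected polygon
```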

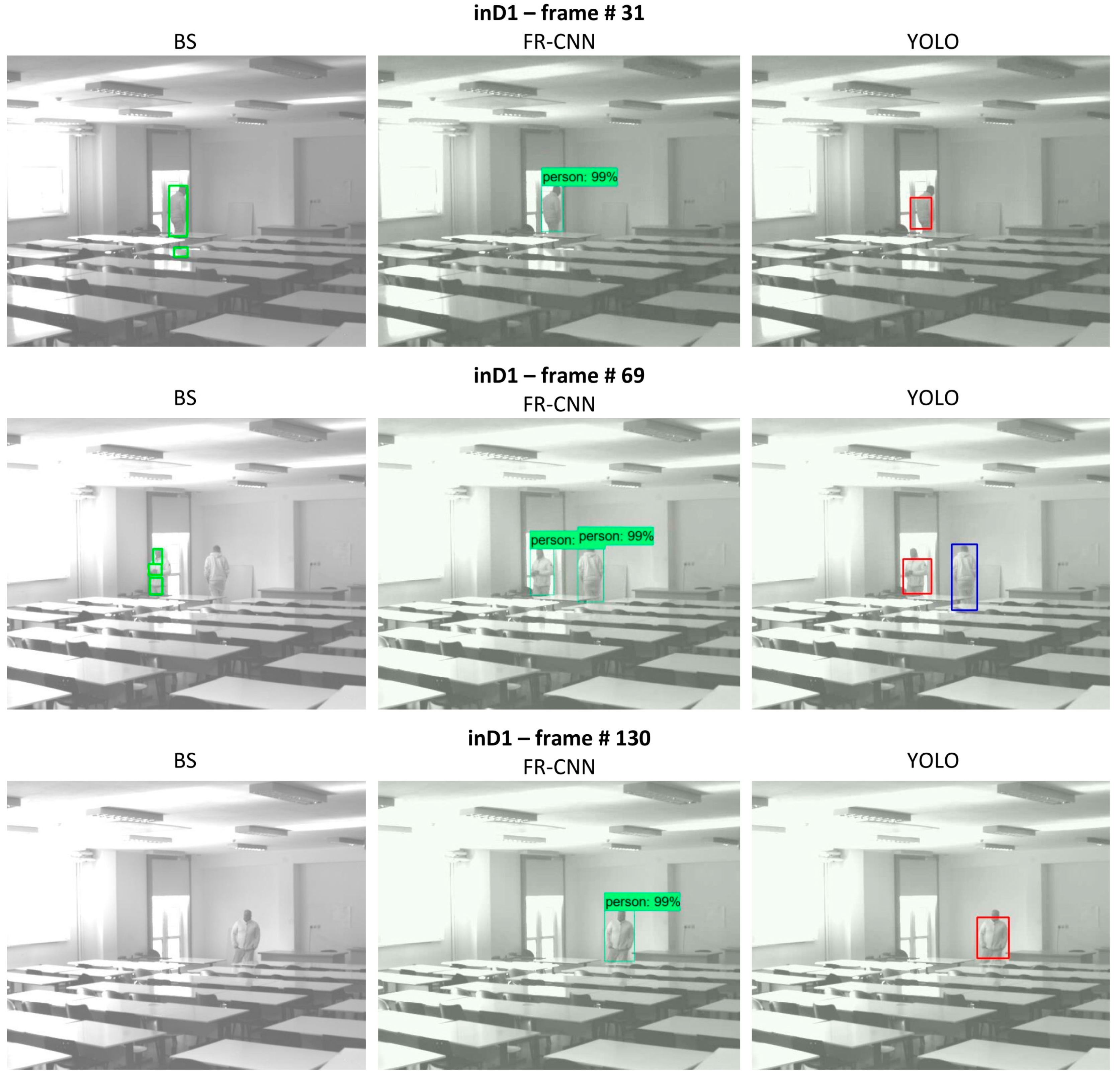

Regarding the performance on the indoor datasets, Figure 11 and Figure 12 present indicative detection results overlaid onto the SWIR data, based on the integrated BS, FR-CNN, and YOLO detectors. In particular, as already reported from the quantitative results (Section 3.2), the BS method failed to deliver acceptable results in the challenging indoor environments. This can be attributed to changes in illumination, which caused numerous false positives; the BS method also produced false negatives. In the inD1 dataset, at frame #31 (Figure 11, top), the reflection of the person on the table was wrongly detected as a target by the BS method. FR-CNN presented the best result in this frame, since the bounding box from the YOLO method did not optimally describe the person’s silhouette. At frame #69 (Figure 11, middle), there are two moving targets in the ground truth; the BS method detected only one of them, split into multiple instances. FR-CNN delivered the best results, with YOLO not adequately describing the corresponding silhouettes. At frame #130, the BS method produced a false negative, while both supervised methods again managed to detect the object, with FR-CNN delineating the target’s silhouette more precisely.

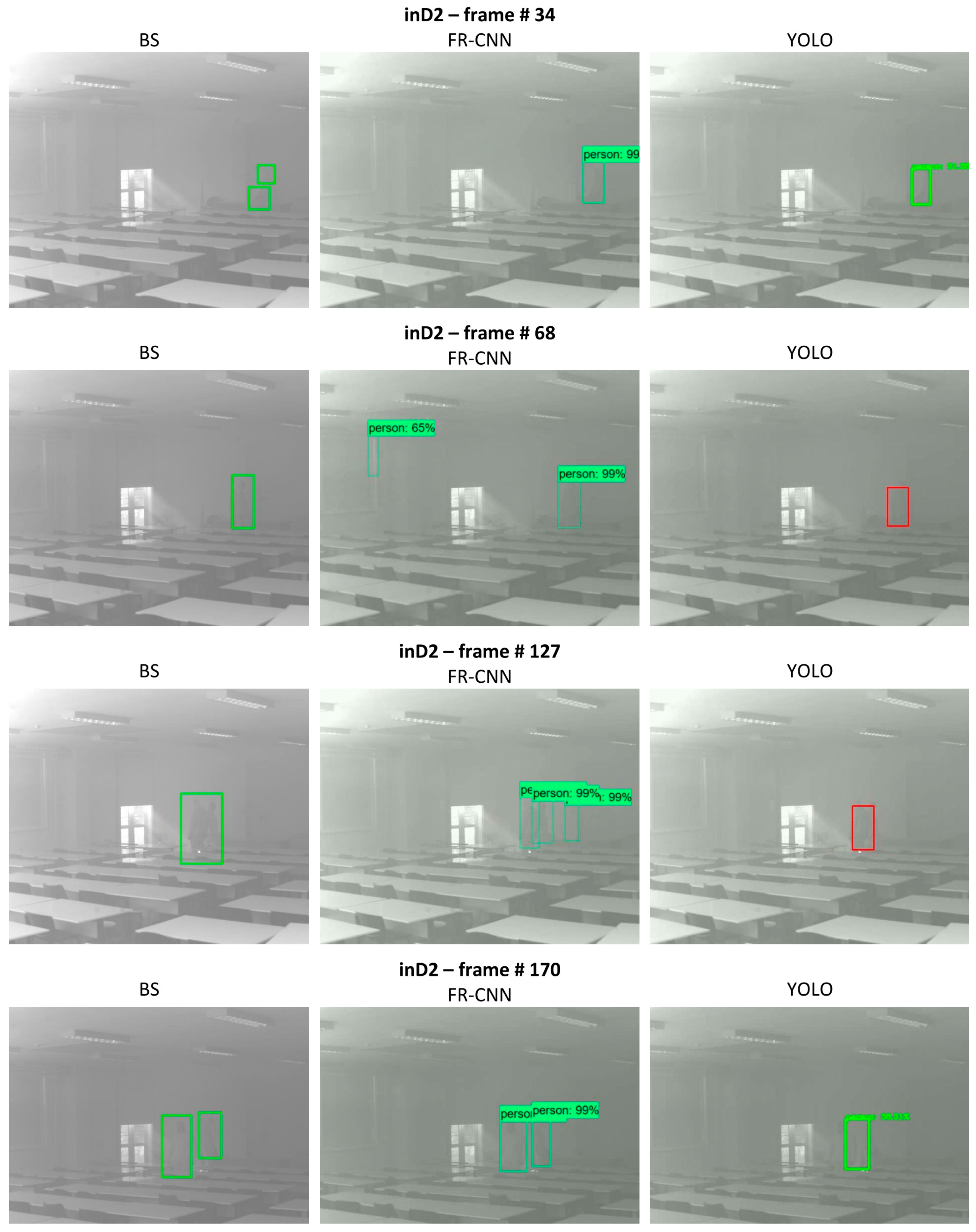

Regarding the performance on the challenging indoor dataset inD2, with the presence of dense smoke and rapidly changing illumination, indicative detection results are presented in Figure 12. As in the previous indoor case, the BS method failed to adequately detect the moving objects, because the smoke lowered the intensity and contrast of the brightness values. At frame #34, all considered methods managed to detect the target, with BS delivering two different instances/segments. At frame #68, FR-CNN produced a false positive, which, however, was associated with a relatively lower confidence level (65% against >85%). At frame #127, the ground truth indicates two moving objects that are partially occluded, since the silhouettes of the two moving people overlap. The YOLO and BS methods failed to detect the two targets separately and instead delivered a single, relatively large bounding box containing both objects. The FR-CNN output highlighted both existing targets accurately but additionally delivered a false positive. At frame #170, the ground truth indicates two targets in this highly challenging scene, and both the BS and FR-CNN methods detected them. The single bounding box delivered by the YOLO detector was located in the image region between the two objects, partially including both of them. It should be noted that the aforementioned indicative results were selected in favor of the BS method, since the goal was to also compare the detection performance in terms of silhouette precision. In most cases, the BS method failed to accurately detect targets, delivering numerous false positives and negatives.
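The frame #68 observation suggests that a confidence threshold lying between the score of the false positive (65%) and those of the correct detections (>85%) would suppress it. The sketch below shows such a filter with hypothetical detections and a threshold of 0.75 chosen purely for illustration; the paper does not state which threshold its detectors used. Raising the threshold also risks discarding true but low-confidence targets in dense smoke, i.e., trading false positives for false negatives, which matters given the emphasis placed on recall in surveillance tasks.

```python
def filter_by_confidence(detections, threshold):
    """Keep detections whose confidence score is at least `threshold`.

    Each detection is a hypothetical (label, score, box) tuple; the threshold
    is a tunable parameter, not a value reported in the paper.
    """
    return [d for d in detections if d[1] >= threshold]


frame_dets = [("person", 0.91, (10, 10, 40, 90)),
              ("person", 0.87, (60, 12, 88, 95)),
              ("person", 0.65, (120, 30, 150, 70))]  # low-confidence false alarm
print(filter_by_confidence(frame_dets, threshold=0.75))
```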

4. Conclusions

In this paper, we propose a multisensor monitoring system based on different spectral imaging sensors whose data can be fruitfully fused to address surveillance tasks under a range of critical environmental and illumination conditions, such as smoke, fog, and day and night acquisitions. These conditions have proven challenging for conventional RGB cameras and single-sensor setups. In particular, we have developed and integrated the required hardware and software modules in order to perform near-real-time video analysis for detecting and tracking moving objects/targets. Different unsupervised and supervised object detectors have been integrated in a detector-agnostic manner. The experimental results and validation procedure demonstrated the capabilities of the proposed system to monitor critical infrastructure in challenging conditions. Regarding the integrated detectors, although the BS method performed successfully in the outdoor scene (where the small number of video frames available for training limited the deep learning detectors), it produced numerous false positives and false negatives in the indoor environments, which posed significant challenges due to varying illumination conditions with or without the presence of smoke. Even with a relatively small training set, the deep learning architectures could be adequately fine-tuned. In particular, FR-CNN and YOLO achieved high accuracy rates in the most difficult and challenging scenes. Apart from the large training sets they normally require, another shortcoming observed for FR-CNN and YOLO was their limited performance on small (few-pixel) objects, attributed to the successive, decreasing-in-size convolutional layers of their deep architectures. Future work includes the development and validation of multiple object tracking for different object types (e.g., vehicles, bicycles, and pedestrians) in challenging scenes and environments.