A Novel Object-Based Deep Learning Framework for Semantic Segmentation of Very High-Resolution Remote Sensing Data: Comparison with Convolutional and Fully Convolutional Networks

Abstract

1. Introduction

2. Related Work

2.1. Convolutional Networks for Semantic Segmentation (Patch-Based Learning)

2.2. Fully-Convolutional Networks for Semantic Segmentation (Pixel-Based Learning)

2.3. Object-Based Learning for Semantic Segmentation

3. Materials and Methods

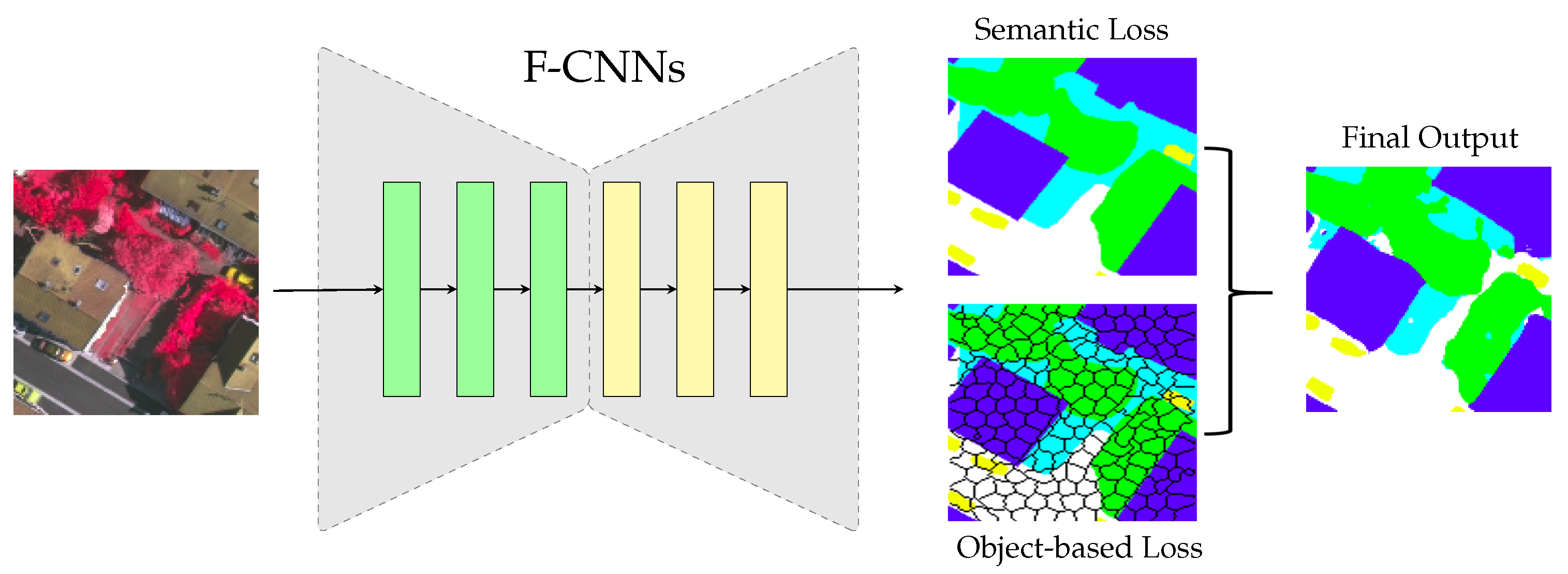

3.1. The Developed Object-Based Learning Framework

3.2. Implementation Details

3.2.1. Patch-Based Learning

3.2.2. Pixel-Based Learning

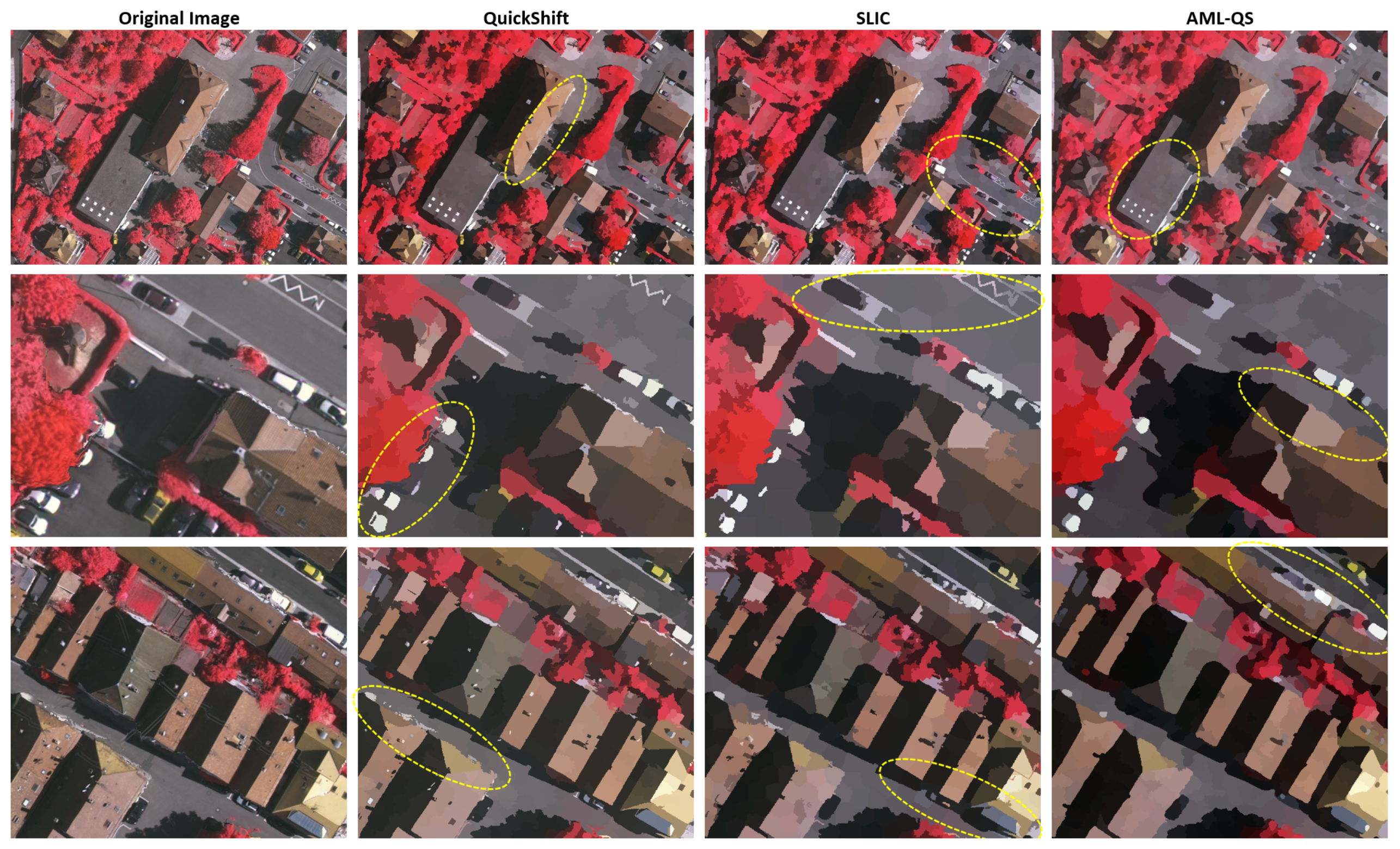

3.2.3. Generation of Objects/Superpixels

- (i) Perform a nonlinear, anisotropic diffusion, taking into account that signal continuity along the spectral direction is usually more plausible than continuity in space

- (ii) Take into account that objects/segments/superpixels in the spatial directions should be enhanced, smoothed and simplified, while their contours/edges/boundaries must remain perfectly spatially localized: no edge displacements, intensity shifts or spurious extrema should occur

- (iii) Tackle only the kind of noise that never forms a coherent structure in both the spatial and spectral directions
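As a rough illustration of requirements (i) to (iii), the following one-dimensional sketch applies a morphological leveling in the sense of Meyer and Maragos: a smoothed marker is iteratively pulled back toward the original signal. It is not the authors' AML implementation. The box-blur marker (`box_blur`), the 3-sample flat structuring element and the toy `signal` are assumptions made purely for illustration, whereas item (i) calls for a nonlinear, anisotropic diffusion on multispectral data.

```python
def dilate(g):
    """Flat dilation with a 3-sample window (window truncated at the borders)."""
    n = len(g)
    return [max(g[max(i - 1, 0):min(i + 2, n)]) for i in range(n)]


def erode(g):
    """Flat erosion with a 3-sample window (window truncated at the borders)."""
    n = len(g)
    return [min(g[max(i - 1, 0):min(i + 2, n)]) for i in range(n)]


def leveling(f, marker, max_iter=1000):
    """Iterate g <- max(min(f, dilate(g)), erode(g)) until g stops changing."""
    g = list(marker)
    for _ in range(max_iter):
        d, e = dilate(g), erode(g)
        new_g = [max(min(fi, di), ei) for fi, di, ei in zip(f, d, e)]
        if new_g == g:
            break
        g = new_g
    return g


def box_blur(f, radius=2):
    """Hypothetical marker: a plain moving average standing in for the diffusion."""
    n = len(f)
    out = []
    for i in range(n):
        window = f[max(i - radius, 0):min(i + radius + 1, n)]
        out.append(sum(window) / len(window))
    return out


# Toy signal (assumed): an isolated spike at index 2, then a step edge at index 5.
signal = [0, 0, 5, 0, 0, 10, 10, 10, 10, 10]
marker = box_blur(signal)
leveled = leveling(signal, marker)
print(leveled)
```

On this toy input the isolated spike at index 2 loses contrast (5 over a background of 0 becomes 3 over a background of 1), while the large step stays exactly between indices 4 and 5, which is the edge-preserving behaviour that item (ii) asks for.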

3.3. Dataset and Training Procedure

Training Time and Optimal Stop Points

3.4. Quantitative Evaluation Metrics
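Two of the metrics listed under Abbreviations, Overall Accuracy (OA) and Intersection over Union (IoU), are conventionally derived from a confusion matrix. The sketch below shows those standard definitions only; the function names and the toy labels are hypothetical, and it is not taken from the authors' evaluation code.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the reference class, columns the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def overall_accuracy(cm):
    """OA = correctly labelled pixels / all pixels."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def iou_per_class(cm):
    """IoU_c = TP / (TP + FP + FN) for every class c."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(n)) - tp
        denom = tp + fp + fn
        ious.append(tp / denom if denom else float("nan"))
    return ious


# Toy per-pixel labels (assumed), three classes.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, 3)
oa = overall_accuracy(cm)
ious = iou_per_class(cm)
print(oa, ious)
```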

4. Experimental Results and Discussion

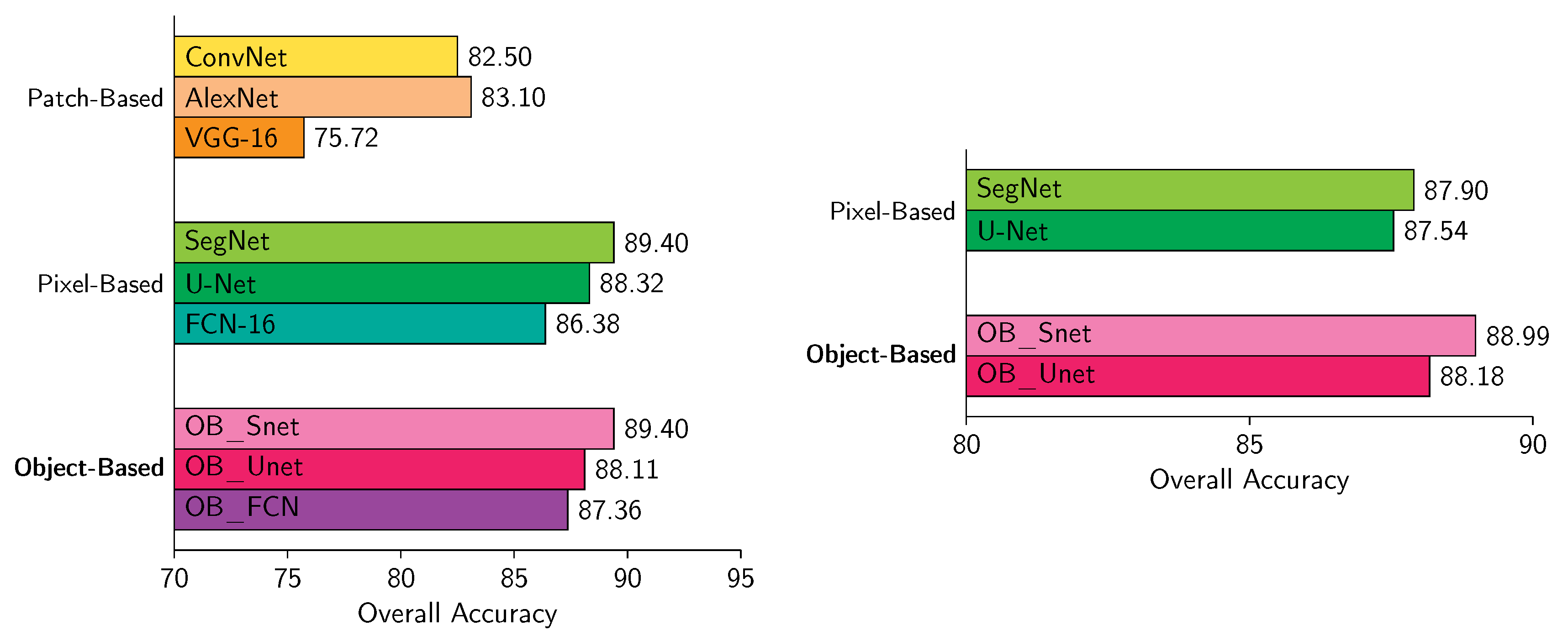

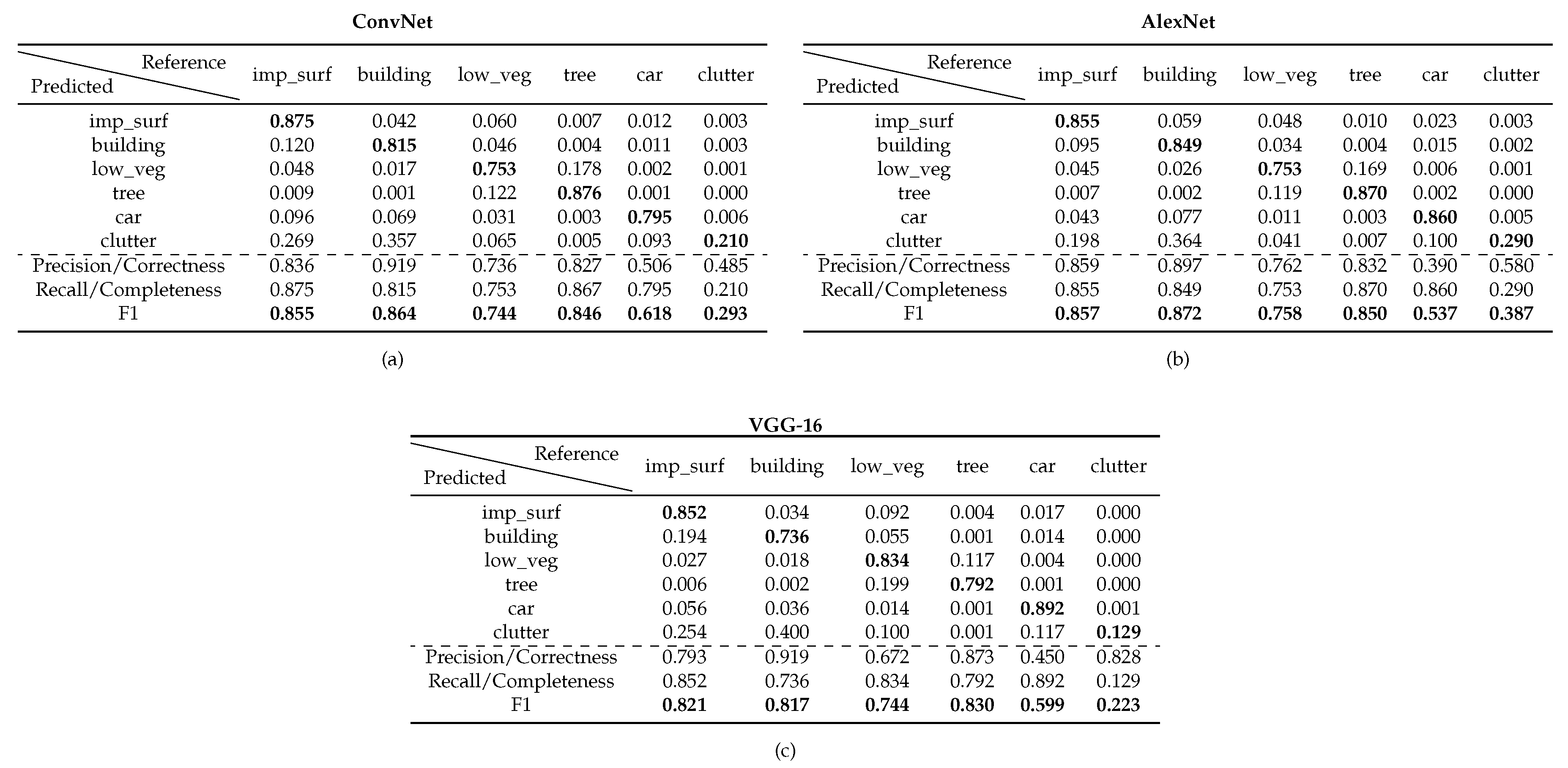

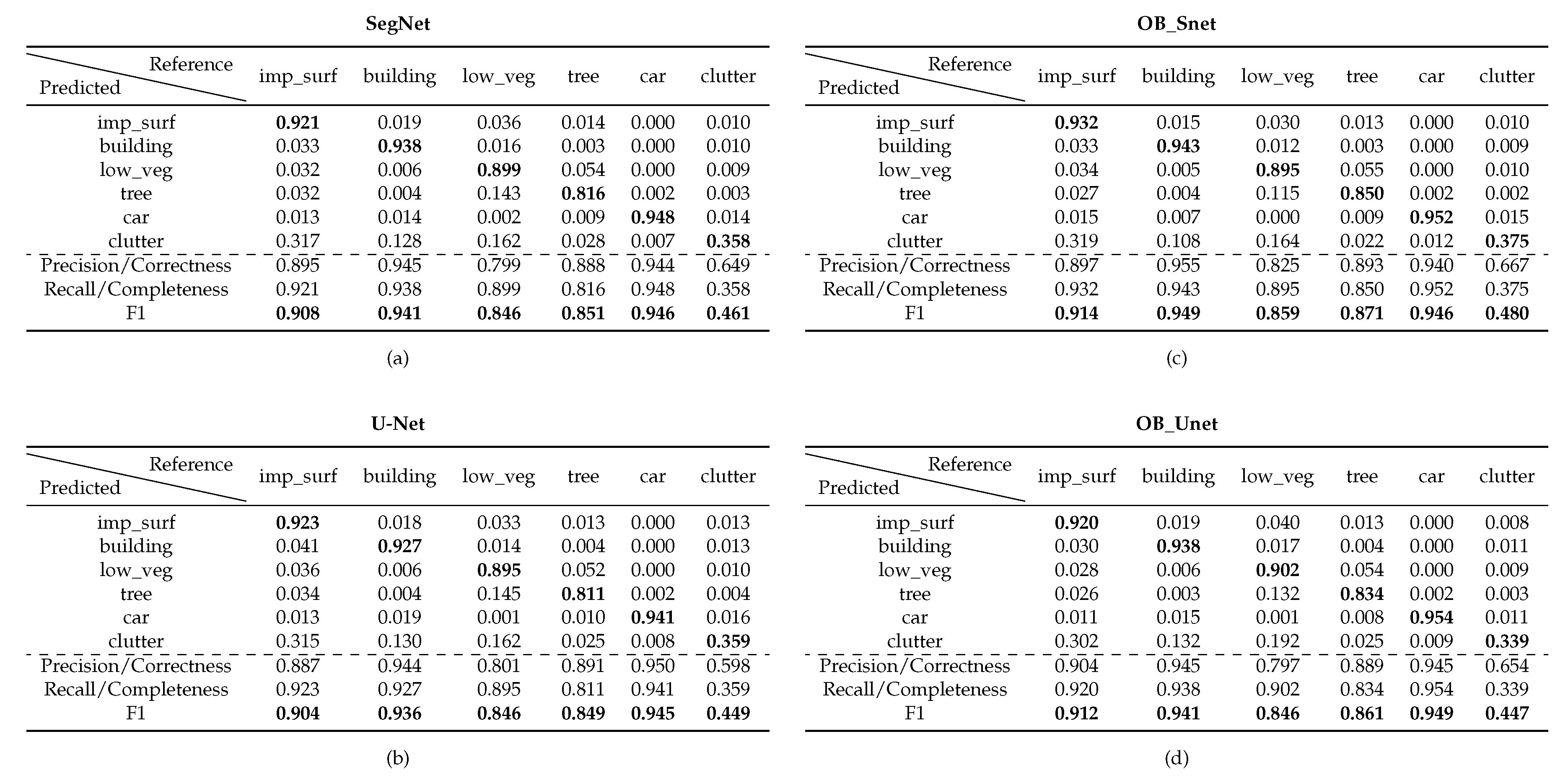

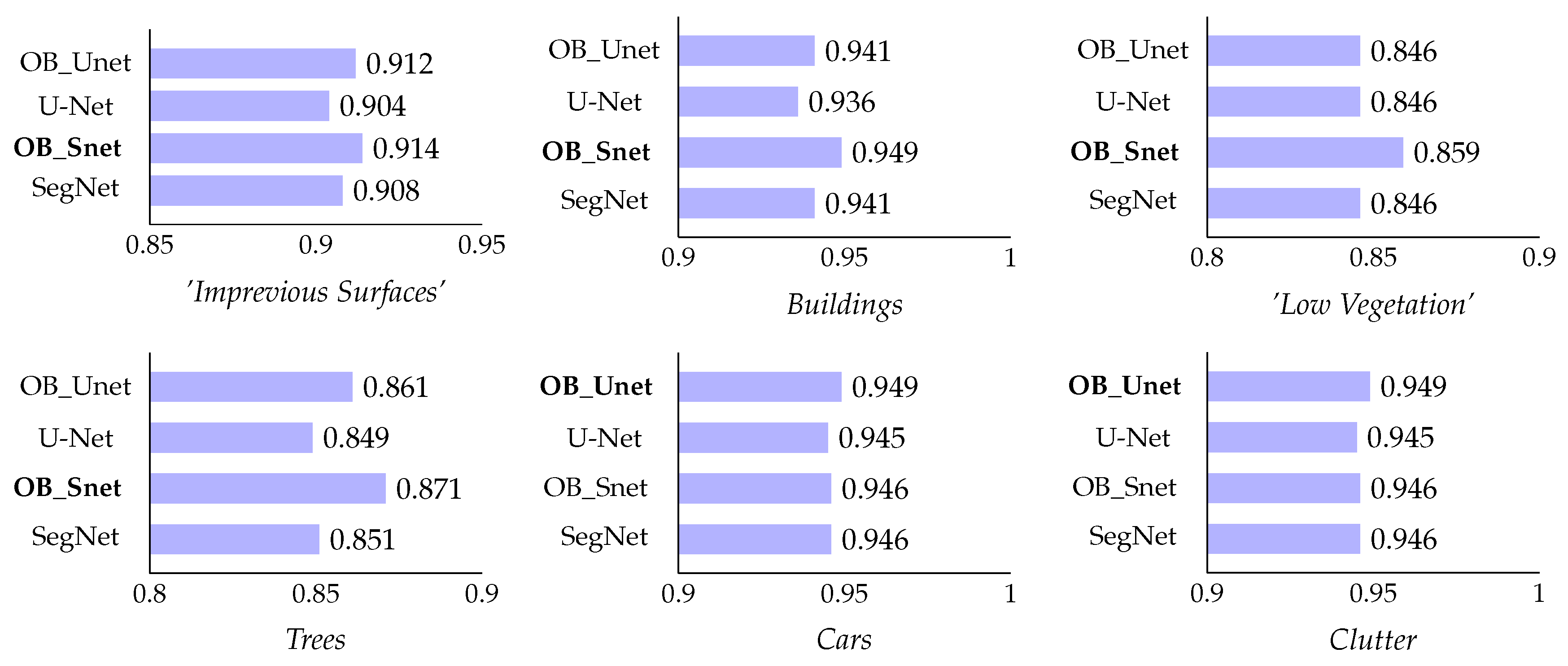

4.1. Quantitative Evaluation

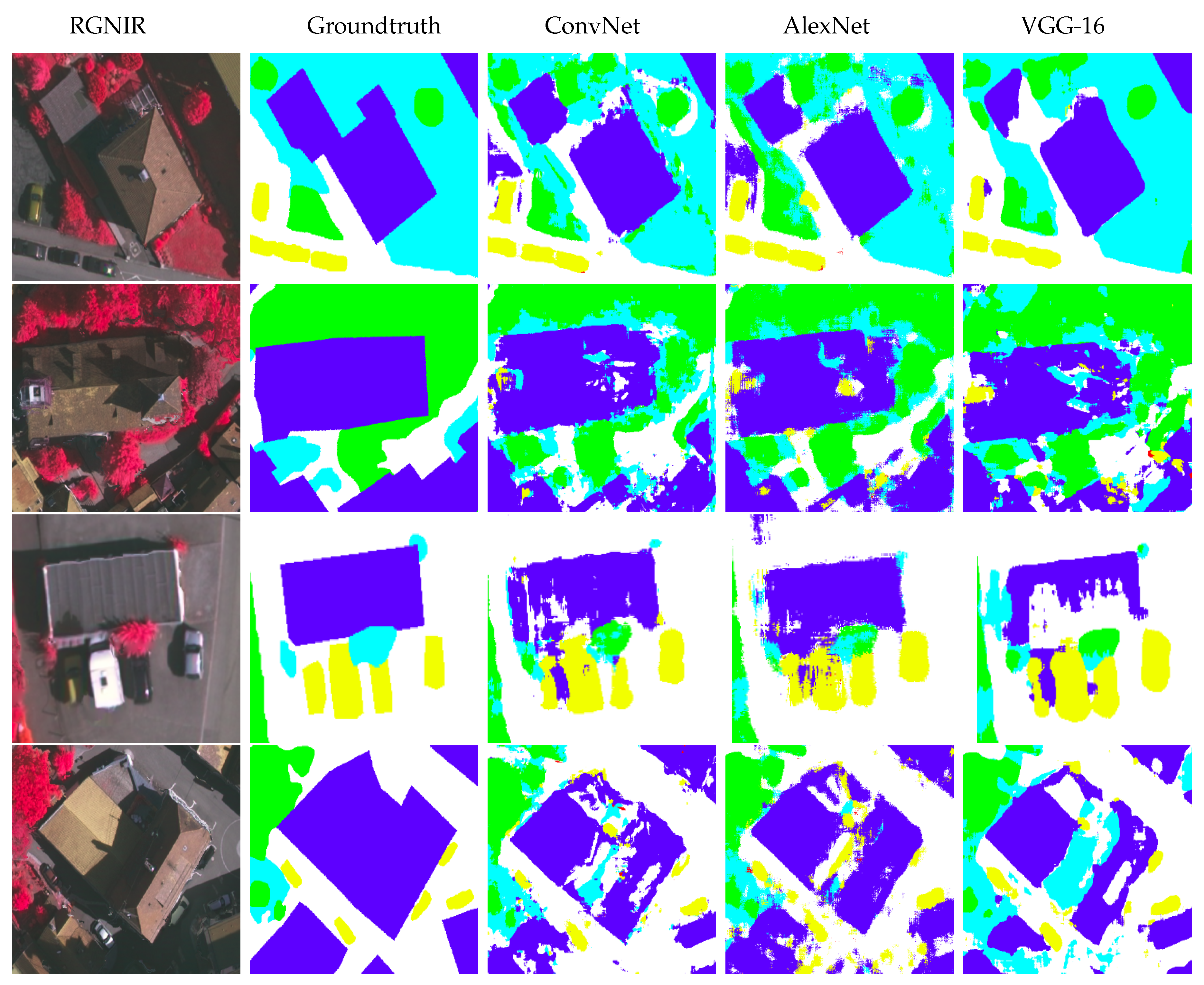

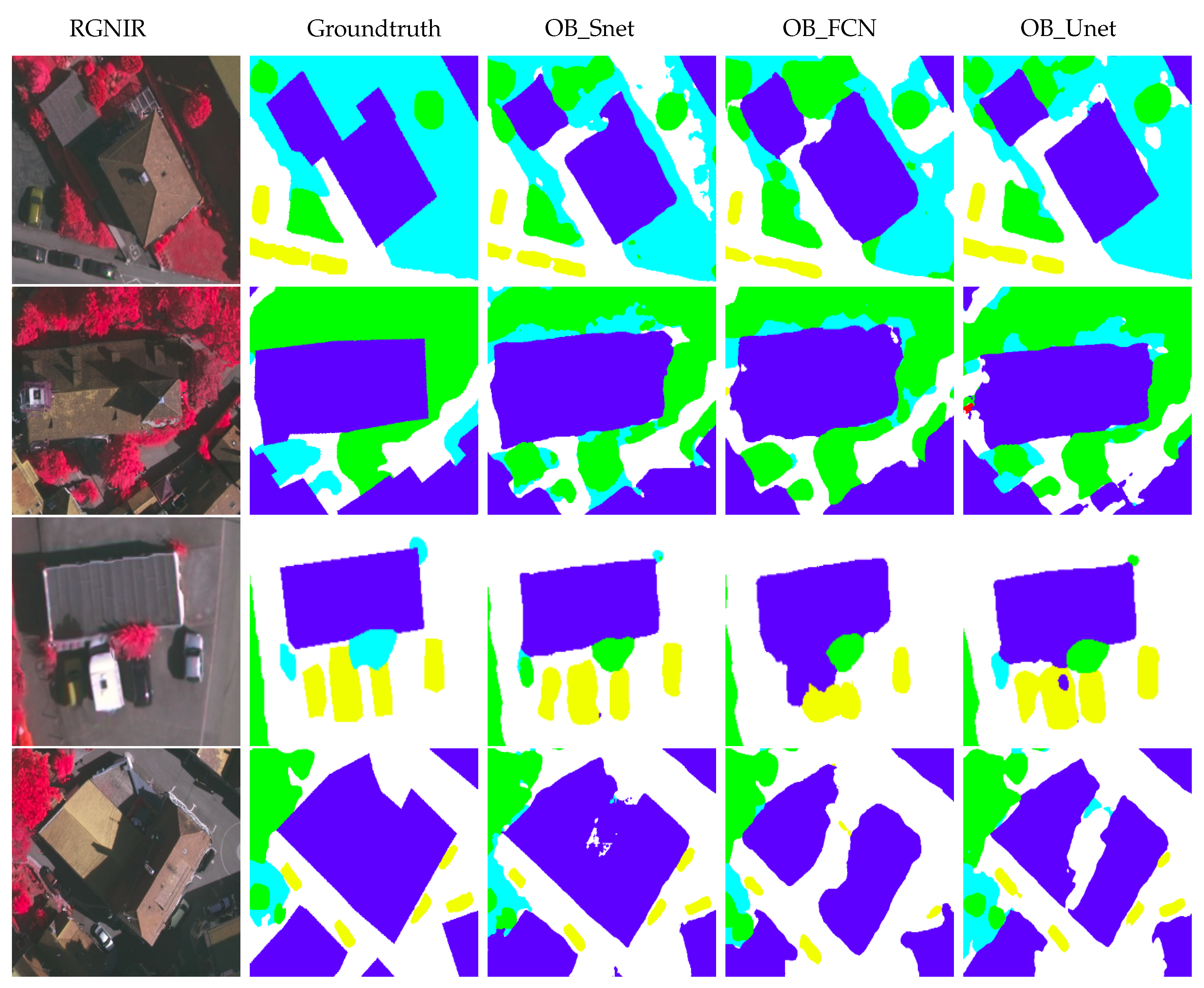

4.2. Qualitative Evaluation

4.3. Discussion

Comparison with Other State-Of-The-Art Methods on the ISPRS Dataset

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNNs | Convolutional Neural Networks |
| F-CNNs | Fully Convolutional Neural Networks |
| AML | Anisotropic Morphological Levelings |
| AML-QS | Anisotropic Morphological Levelings-Quickshift segmentation algorithm |
| OB_Snet | Object-based SegNet |
| OB_Unet | Object-based U-Net |
| OB_FCN | Object-based FCN-16 |
| OA | Overall Accuracy |
| IoU | Intersection over Union |
| HD | Hausdorff Distance |
| SLIC | Simple Linear Iterative Clustering |
| VGG | Visual Geometry Group |
| DSM | Digital Surface Model |
| VHR | Very High Resolution |
| CRF | Conditional Random Field |


| Category | Vaihingen | Potsdam |
|---|---|---|
| Impervious_Surfaces | 0.293 | 0.299 |
| Buildings | 0.269 | 0.282 |
| Low_Vegetation | 0.194 | 0.209 |
| Trees | 0.224 | 0.144 |
| Cars | 0.013 | 0.017 |
| Clutter | 0.007 | 0.048 |

(a)

| Model | Mins/Epoch | Optimal Epoch | Total Mins |
|---|---|---|---|
| ConvNet | 1 | 30 | 30 |
| AlexNet | 1.5 | 32 | 48 |
| VGG-16 | 12 | 22 | 264 |
| SegNet | 20 | 51 | 1020 |
| U-Net | 20 | 31 | 620 |
| FCN-16 | 20 | 50 | 1000 |
| OB_Snet | 30 | 42 | 1260 |
| OB_Unet | 30 | 38 | 1140 |
| OB_FCN | 30 | 40 | 1200 |

(b)

| Model | Mins/Epoch | Optimal Epoch | Total Mins |
|---|---|---|---|
| SegNet | 30 | 34 | 1020 |
| U-Net | 30 | 43 | 1290 |
| OB_Snet | 45 | 32 | 1440 |
| OB_Unet | 80 | 39 | 3120 |
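The total-minutes column in both training-time tables appears to be the product of minutes per epoch and the optimal epoch. A small sketch to verify that reading and to convert the totals to hours follows; the numbers are copied from tables (a) and (b), while the helper names are hypothetical.

```python
# (model, minutes per epoch, optimal epoch, reported total minutes)
TABLE_A = [
    ("ConvNet", 1, 30, 30),
    ("AlexNet", 1.5, 32, 48),
    ("VGG-16", 12, 22, 264),
    ("SegNet", 20, 51, 1020),
    ("U-Net", 20, 31, 620),
    ("FCN-16", 20, 50, 1000),
    ("OB_Snet", 30, 42, 1260),
    ("OB_Unet", 30, 38, 1140),
    ("OB_FCN", 30, 40, 1200),
]
TABLE_B = [
    ("SegNet", 30, 34, 1020),
    ("U-Net", 30, 43, 1290),
    ("OB_Snet", 45, 32, 1440),
    ("OB_Unet", 80, 39, 3120),
]


def inconsistent_rows(rows):
    """Return the models whose reported total differs from mins/epoch * epoch."""
    return [name for name, mins, epoch, total in rows if mins * epoch != total]


def total_hours(rows):
    """Map each model to its reported training time in hours."""
    return {name: total / 60 for name, _, _, total in rows}


print(inconsistent_rows(TABLE_A), inconsistent_rows(TABLE_B))
print(total_hours(TABLE_B))
```

Every row passes the check, so the reported totals are consistent with training up to the optimal epoch at the listed per-epoch cost.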

| Category | Vaihingen: SegNet | Vaihingen: OB_Snet | Vaihingen: U-Net | Vaihingen: OB_Unet | Vaihingen: FCN-16 | Vaihingen: OB_FCN | Potsdam: SegNet | Potsdam: OB_Snet | Potsdam: U-Net | Potsdam: OB_Unet |
|---|---|---|---|---|---|---|---|---|---|---|
| Impervious_Surfaces | 78.38 | 78.64 | 77.74 | 77.53 | 74.47 | 76.13 | 79.50 | 80.27 | 79.01 | 80.21 |
| Buildings | 85.85 | 85.41 | 83.74 | 83.83 | 78.69 | 80.45 | 86.70 | 88.07 | 85.74 | 86.79 |
| Low_Vegetation | 63.13 | 63.10 | 60.07 | 60.50 | 57.72 | 58.09 | 68.73 | 70.36 | 68.81 | 68.77 |
| Trees | 73.45 | 74.09 | 72.21 | 72.15 | 70.94 | 72.71 | 69.58 | 72.37 | 69.43 | 70.87 |
| Cars | 62.20 | 63.43 | 64.59 | 64.07 | 47.96 | 49.81 | 81.05 | 80.87 | 81.28 | 81.69 |
| Clutter | 0.00 | 0.00 | 8.22 | 9.66 | 7.13 | 0.00 | 24.14 | 25.92 | 24.16 | 24.04 |

| Category | Vaihingen: SegNet | Vaihingen: OB_Snet | Vaihingen: U-Net | Vaihingen: OB_Unet | Vaihingen: FCN-16 | Vaihingen: OB_FCN | Potsdam: SegNet | Potsdam: OB_Snet | Potsdam: U-Net | Potsdam: OB_Unet |
|---|---|---|---|---|---|---|---|---|---|---|
| Buildings | 21.35 | 20.89 | 21.52 | 22.08 | 26.63 | 25.05 | 45.36 | 41.87 | 59.66 | 44.70 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Papadomanolaki, M.; Vakalopoulou, M.; Karantzalos, K. A Novel Object-Based Deep Learning Framework for Semantic Segmentation of Very High-Resolution Remote Sensing Data: Comparison with Convolutional and Fully Convolutional Networks. Remote Sens. 2019, 11, 684. https://doi.org/10.3390/rs11060684
