Earth Observation and Machine Learning to Meet Sustainable Development Goal 8.7: Mapping Sites Associated with Slavery from Space

Abstract

1. Introduction

2. Materials and Methods

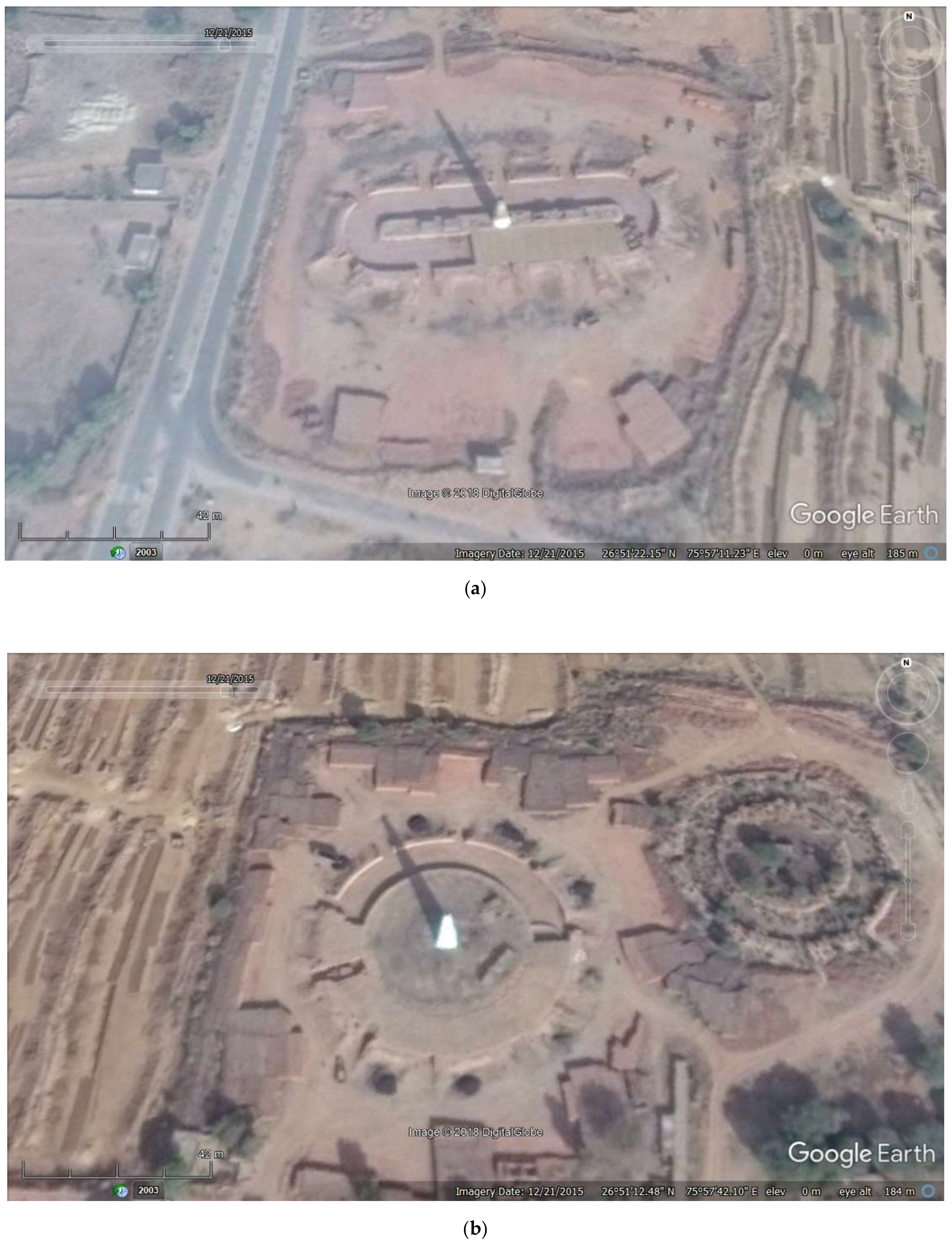

2.1. Study Area and Imagery

2.2. Analyses

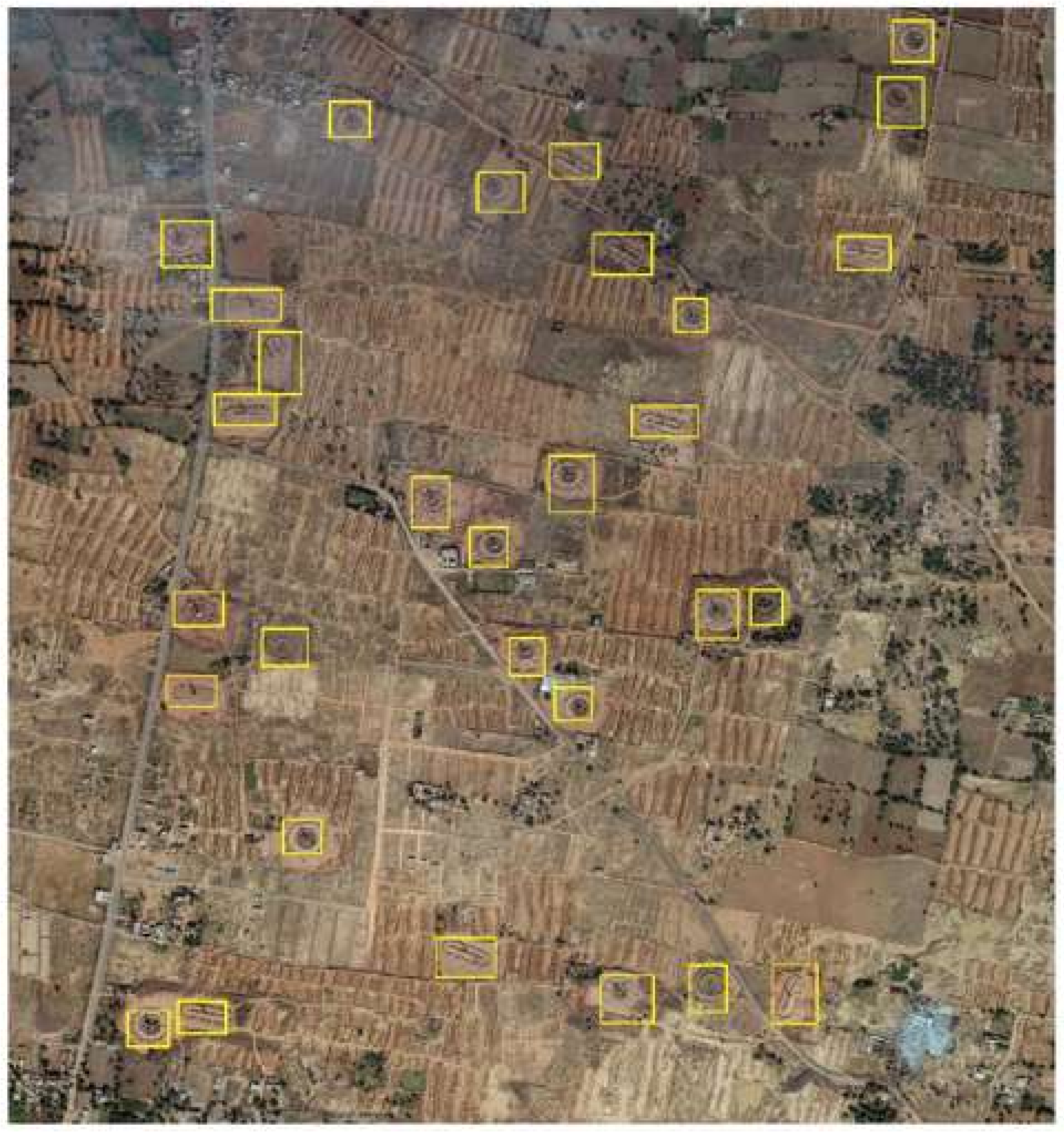

2.2.1. Classification with Faster R-CNN

2.2.2. Re-Classification with a CNN

2.2.3. Accuracy Assessment

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Classified \ Reference | Kiln | Non-Kiln | ∑ |
|---|---|---|---|
| Kiln | 178 | 188 | 366 |
| Non-Kiln | 0 | 0 | 0 |
| ∑ | 178 | 188 | 366 |

| Classified \ Reference | Kiln | Non-Kiln | ∑ |
|---|---|---|---|
| Kiln | 169 | 9 | 178 |
| Non-Kiln | 9 | 179 | 188 |
| ∑ | 178 | 188 | 366 |
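The two confusion matrices above can be summarised with standard accuracy measures. The sketch below is a minimal illustration, not the accuracy assessment procedure reported by the authors. It assumes that rows hold the classified label and columns the reference label, which is the only reading under which the reference totals (178 kilns, 188 non-kilns) agree across both tables. It also assumes that the first table summarises the Faster R-CNN detections and the second the CNN re-classification. The helper name `accuracy_measures` and the inclusion of Cohen's kappa are illustrative choices, not taken from the paper.

```python
import math
from typing import Dict, List


# Hypothetical helper: rows = classified label, columns = reference label (assumed layout).
def accuracy_measures(m: List[List[int]], labels: List[str]) -> Dict[str, float]:
    k = len(m)
    n = sum(sum(row) for row in m)
    row_totals = [sum(row) for row in m]
    col_totals = [sum(m[i][j] for i in range(k)) for j in range(k)]
    correct = sum(m[i][i] for i in range(k))

    # Overall accuracy: proportion of sites on the matrix diagonal.
    out: Dict[str, float] = {"overall": correct / n}

    # Cohen's kappa in integer form: (N*sum(diag) - sum(r_i*c_i)) / (N^2 - sum(r_i*c_i)).
    chance = sum(r * c for r, c in zip(row_totals, col_totals))
    out["kappa"] = (n * correct - chance) / (n * n - chance)

    for i, name in enumerate(labels):
        # User's accuracy: of the sites labelled `name`, the share that truly are `name` (row-wise).
        out[f"users_{name}"] = m[i][i] / row_totals[i] if row_totals[i] else math.nan
        # Producer's accuracy: of the true `name` sites, the share labelled `name` (column-wise).
        out[f"producers_{name}"] = m[i][i] / col_totals[i] if col_totals[i] else math.nan
    return out


faster_rcnn = [[178, 188], [0, 0]]  # first table (assumed: Faster R-CNN output)
after_cnn = [[169, 9], [9, 179]]    # second table (assumed: after CNN re-classification)

for stage, matrix in [("Faster R-CNN", faster_rcnn), ("CNN re-classification", after_cnn)]:
    r = accuracy_measures(matrix, ["kiln", "non_kiln"])
    print(f"{stage}: overall={r['overall']:.3f}, kappa={r['kappa']:.3f}")
# Faster R-CNN: overall=0.486, kappa=0.000
# CNN re-classification: overall=0.951, kappa=0.902
```

On these counts the re-classification stage raises overall accuracy from about 0.486 to about 0.951. The kappa of zero for the first table reflects that labelling every detected site as a kiln agrees with the reference no better than chance, given the marginal totals; the user's accuracy for the non-kiln class is undefined there because no site received that label.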

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Foody, G.M.; Ling, F.; Boyd, D.S.; Li, X.; Wardlaw, J. Earth Observation and Machine Learning to Meet Sustainable Development Goal 8.7: Mapping Sites Associated with Slavery from Space. Remote Sens. 2019, 11, 266. https://doi.org/10.3390/rs11030266
