Single Remote Sensing Image Dehazing Using a Prior-Based Dense Attentive Network

Abstract

1. Introduction

- (1)

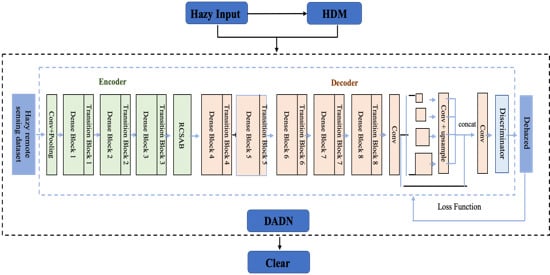

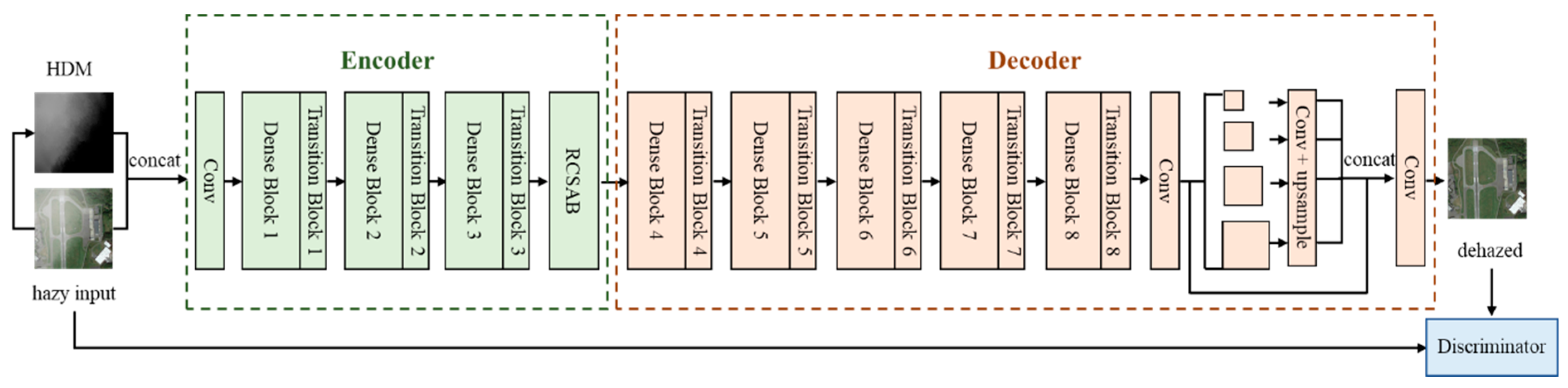

- A single hazy remote sensing image dehazing solution, which combines both physical prior and deep learning technology, is presented to better describe the haze distribution in remote sensing images, and thus deal with non-uniform haze removal. In this solution, we first extract an HDM from the original hazy image, and subsequently leverage the HDM prior as input of the network together with the original hazy image.

- (2)

- An encoder-decoder structured dehazing framework is proposed to directly learn clear images from input images, without estimation of any intermediate parameters. The proposed network is constructed based on dense blocks and attention blocks for accurate clear image estimation. Furthermore, we leverage a discriminator at the end of net to fine-tune the output and ensure that the estimated dehazed result is undifferentiated from the corresponding clear image.

- (3)

- A large-scale hazy remote sensing dataset is created as a benchmark which contains both uniform and non-uniform, high-resolution and low-resolution, synthetic and real hazy remote sensing images. Experimental results on the proposed dataset demonstrate the outstanding performance of the proposed method.

2. Methodology

2.1. Atmospheric Scattering Model (ASM)

2.2. Network Architecture

2.2.1. Haze Density Map (HDM)

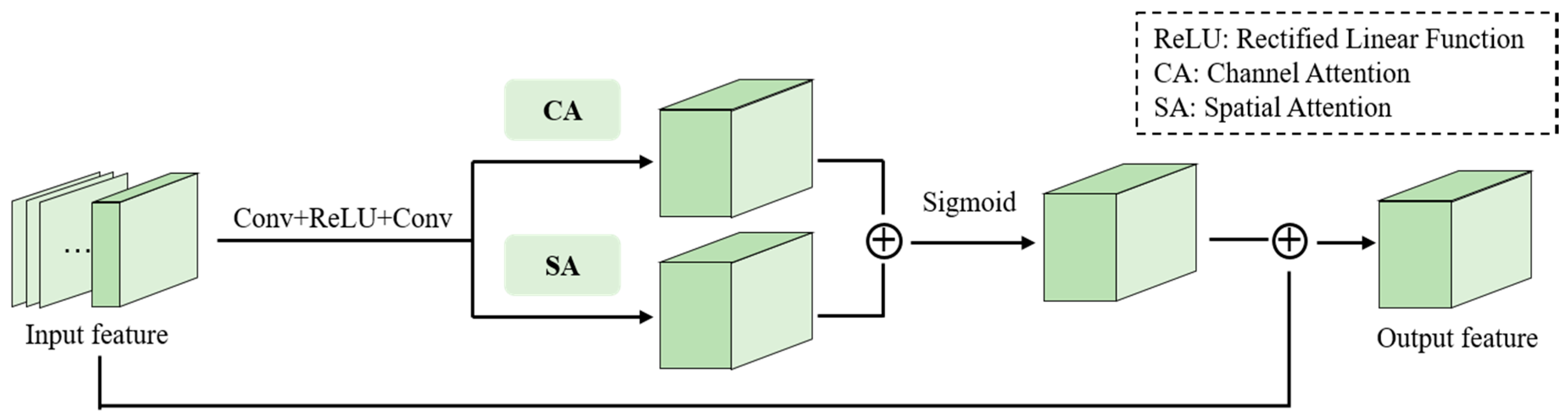

2.2.2. Encoder

2.2.3. Decoder

2.2.4. Discriminator

2.3. Loss Function

3. Experiments and Discussion

3.1. Experimental Settings

3.1.1. Datasets

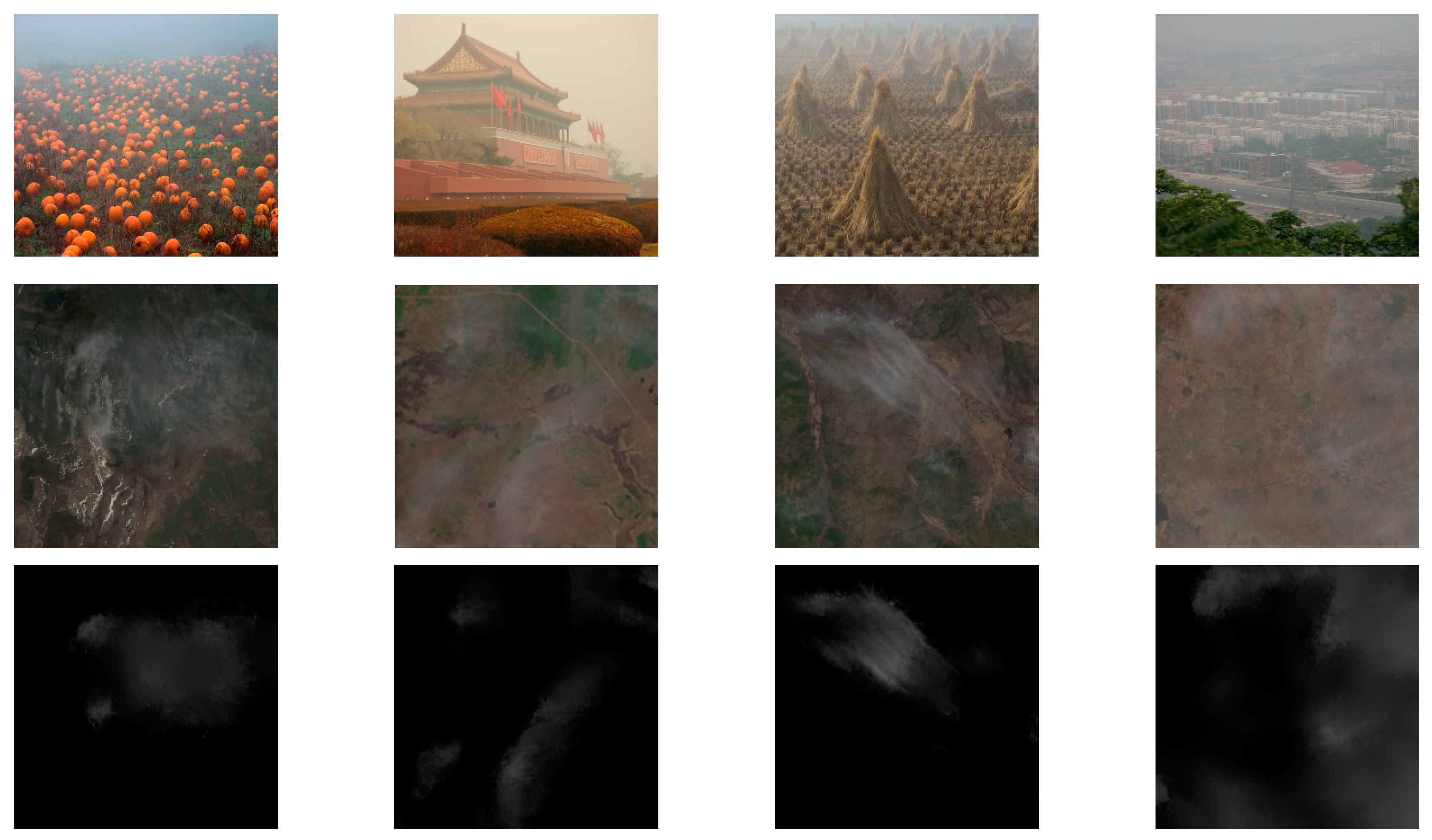

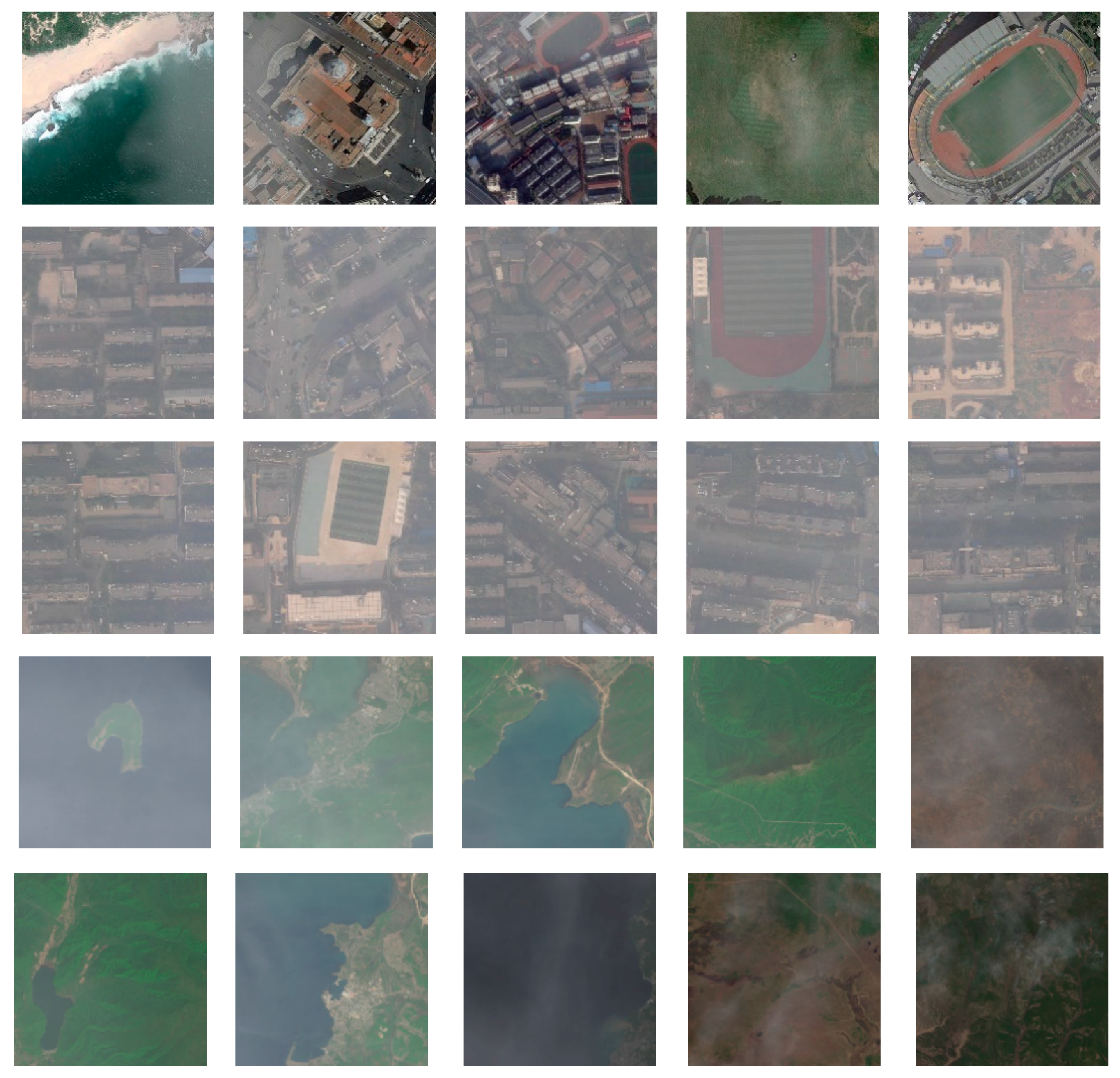

- Test Dataset 1: Test dataset 1 consisted of 1650 synthetic uniform hazy remote sensing images. We simulated the images through the classical ASM Equation (1), with the rest of the AID dataset (1650 in total) as the clear images and (see examples in the first two rows of Figure 8).

- Test Dataset 2: Test dataset 2 contained 1650 synthetic non-uniform hazy remote sensing images. With the non-uniform transition maps extracted from the real hazy images, we added these non-uniform transition maps to the clear images and created 1650 non-uniform hazy remote sensing images (see examples in the second two rows of Figure 8).

- Test Dataset 3: Test dataset 3 was made up of real unmanned aerial vehicle (UAV) images obtained in August 2017 in Daye, China, under hazy weather conditions (see examples in the third two rows of Figure 8).

- Test Dataset 4: Test dataset 4 consisted of real hazy remote sensing images from the Landsat 8 Operational Land Imager making use of the bands (2), (3), and (4) as BGR true color, (see examples in the last two rows of Figure 8).

3.1.2. Training Details

3.1.3. Evaluation Criteria

3.2. Experimental Results

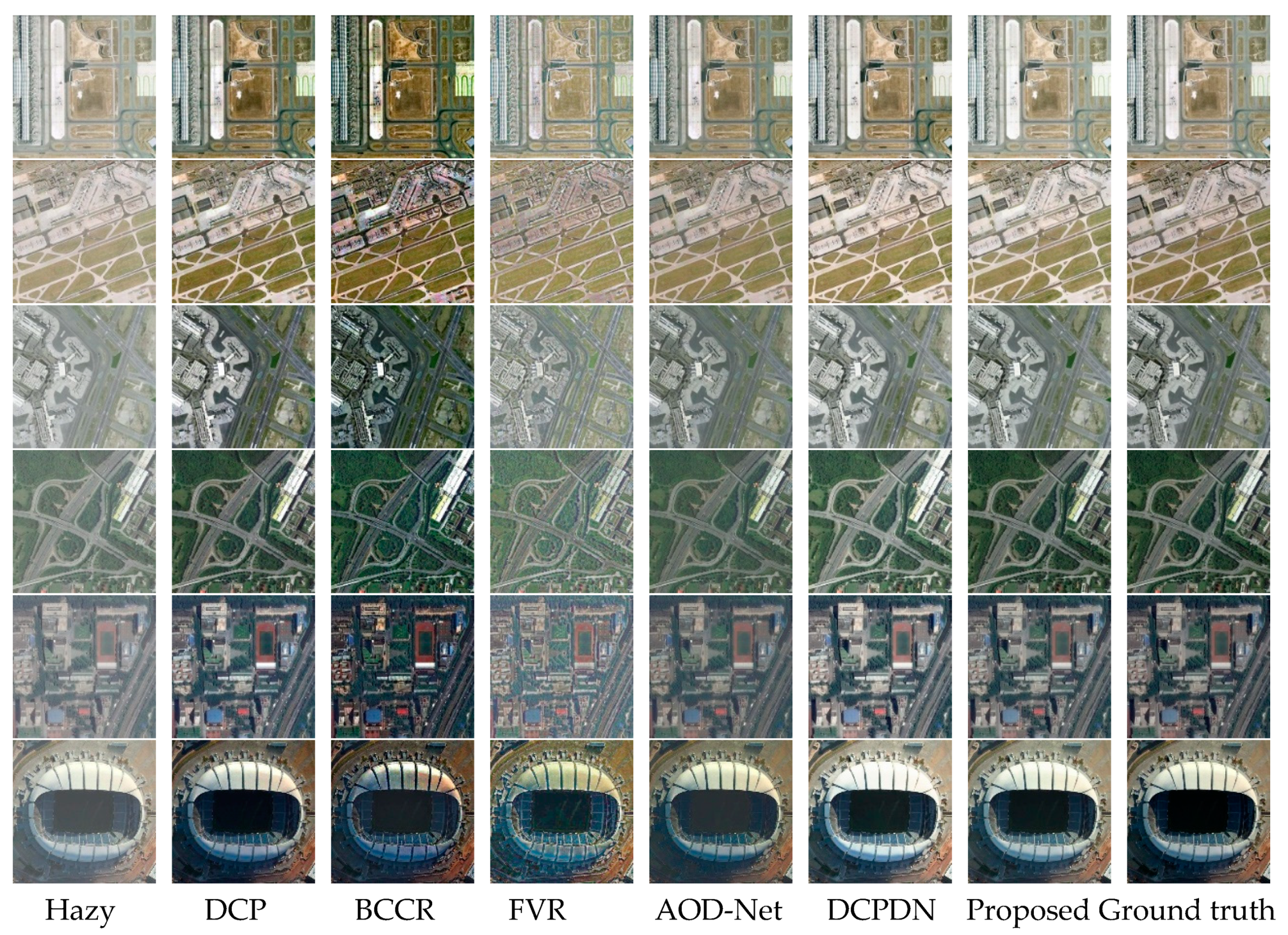

3.2.1. Results on Test Dataset 1

3.2.2. Results on Test Dataset 2

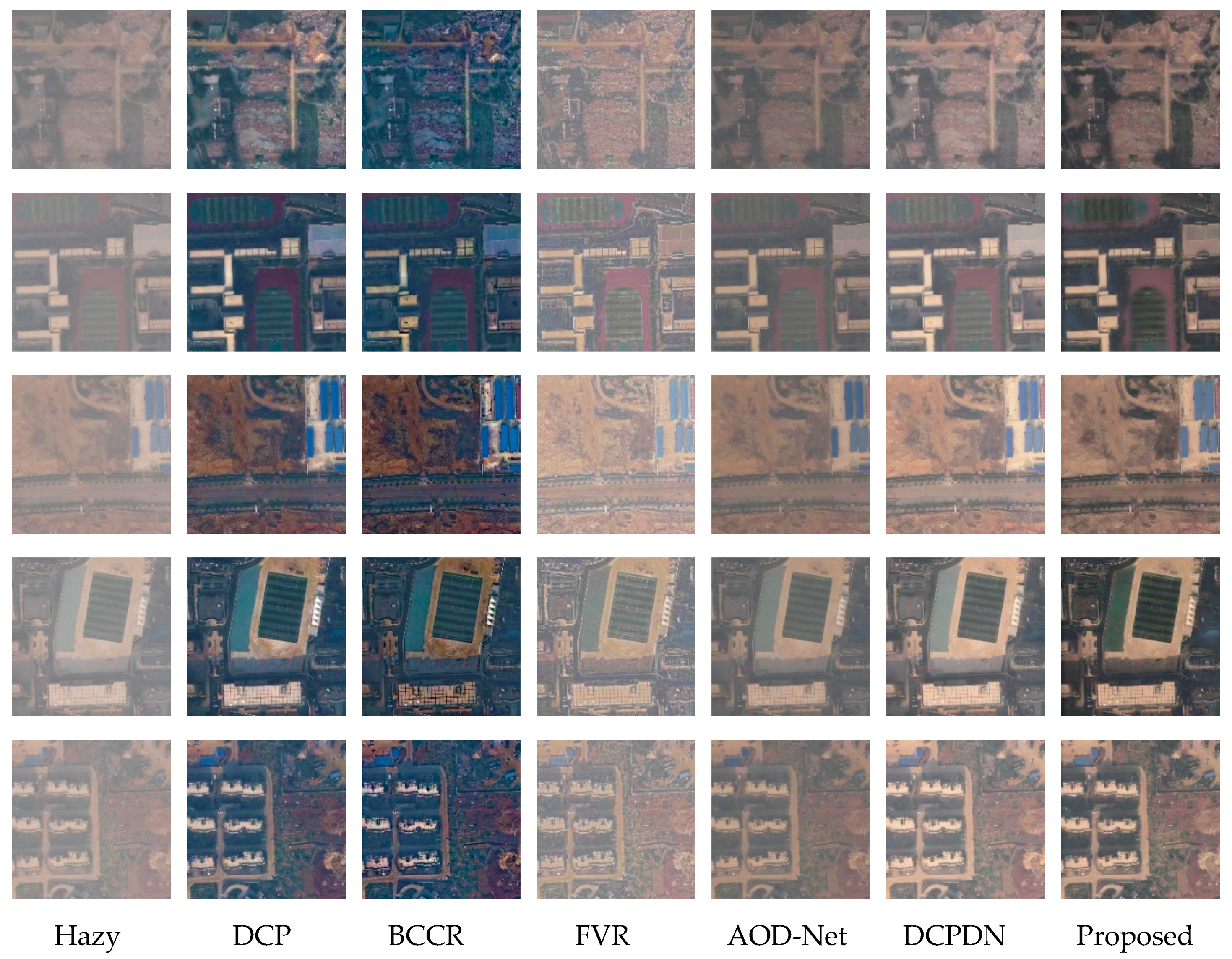

3.2.3. Results on Test Dataset 3

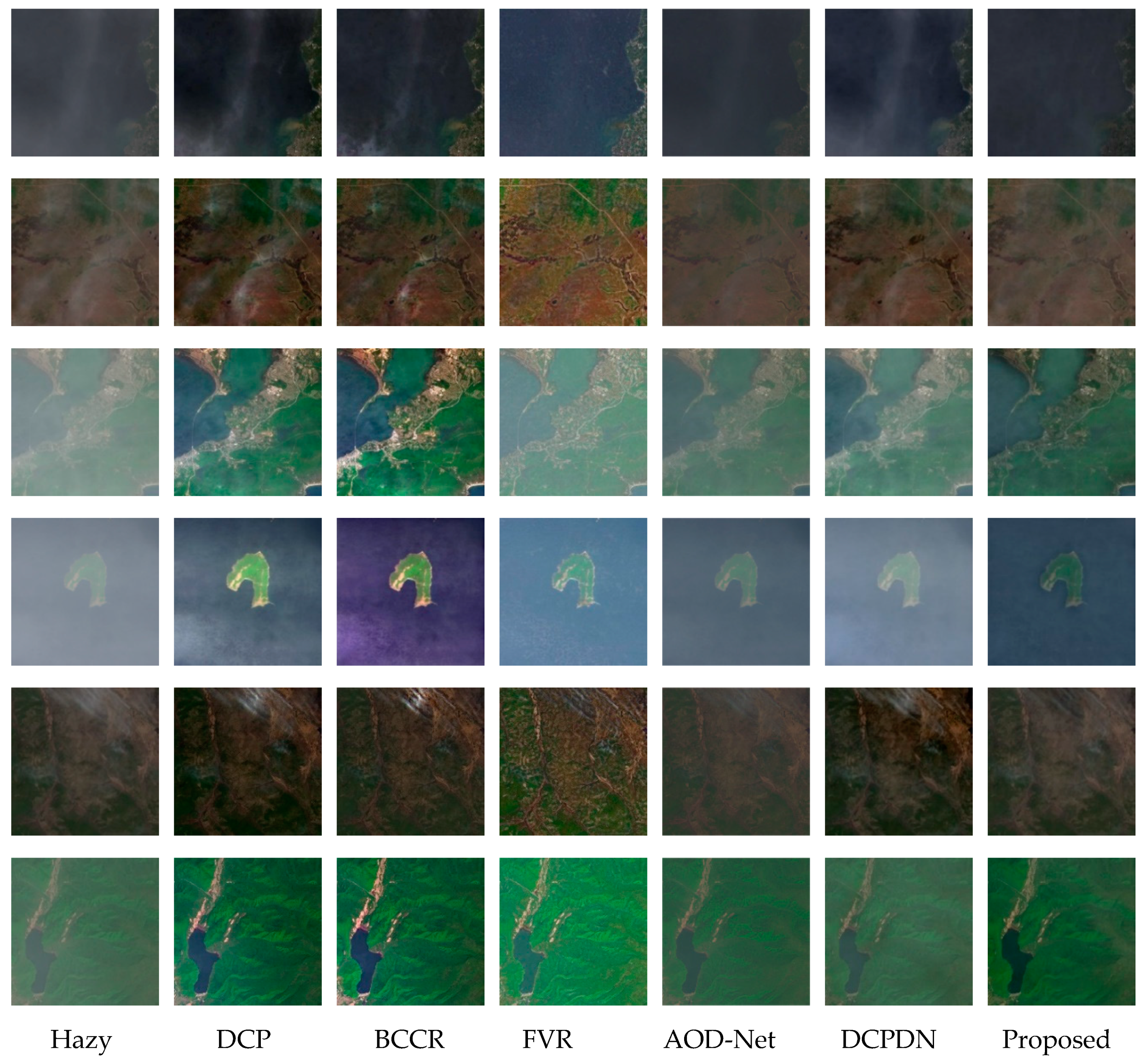

3.2.4. Results on Test Dataset 4

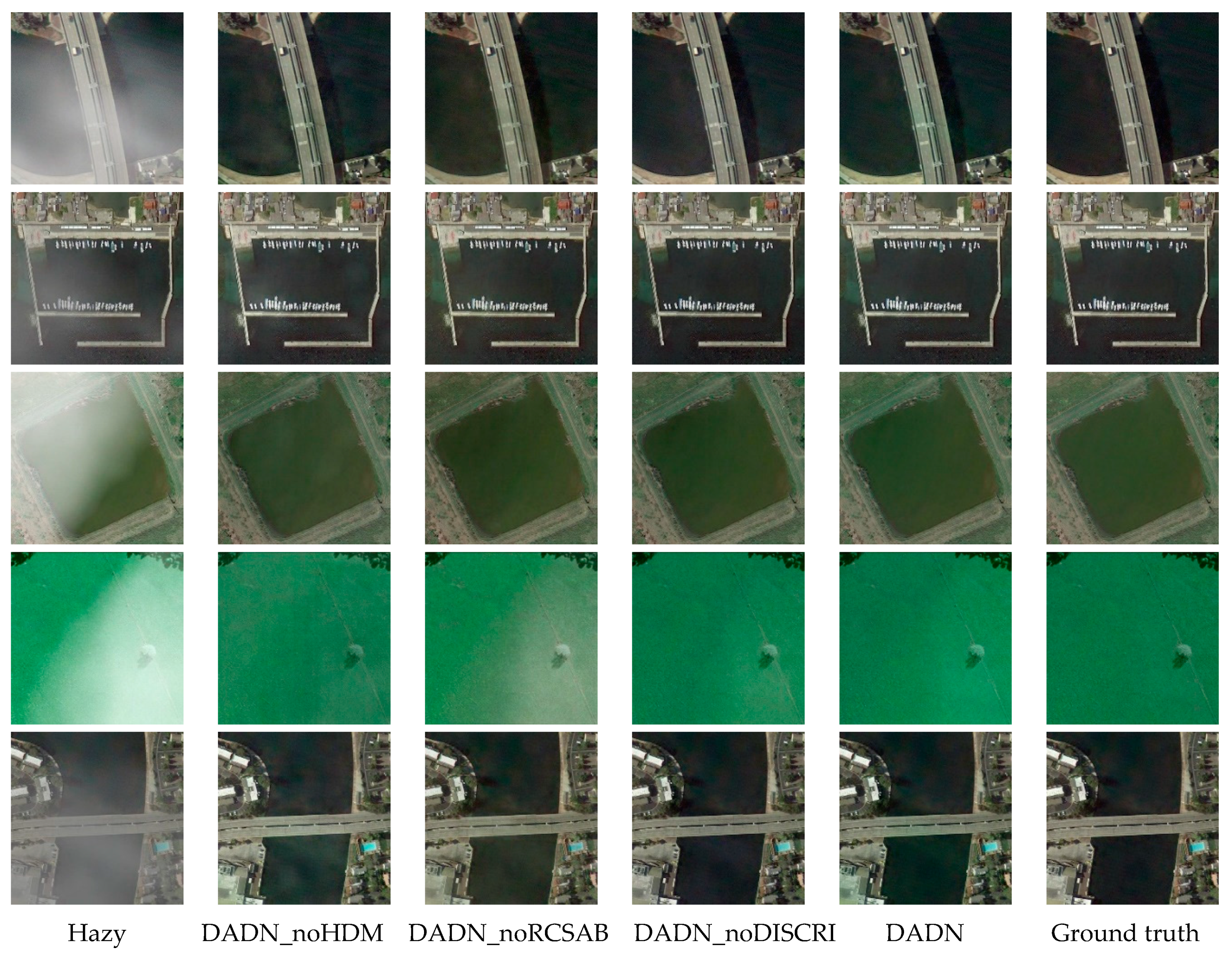

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Govender, M.; Chetty, K.; Bulcock, H. A review of hyperspectral remote sensing and its application in vegetation and water resource studies. Water SA 2007, 33, 145–151. [Google Scholar] [CrossRef]

- Casson, B.; Delacourt, C.; Allemand, P. Contribution of multi-temporal remote sensing images to characterize landslide slip surface‒Application to the La Clapière landslide (France). Nat. Hazards Earth Syst. Sci. 2005, 5, 425–437. [Google Scholar] [CrossRef]

- Sobrino, J.A.; Raissouni, N. Toward remote sensing methods for land cover dynamic monitoring: Application to Morocco. Int. J. Remote Sens. 2000, 21, 353–366. [Google Scholar] [CrossRef]

- Valero, S.; Chanussot, J.; Benediktsson, J.A.; Talbot, H.; Waske, B. Advanced directional mathematical morphology for the detection of the road network in very high resolution remote sensing images. Pattern Recognit. Lett. 2010, 31, 1120–1127. [Google Scholar] [CrossRef]

- Kopf, J.; Neubert, B.; Chen, B.; Cohen, M.; Cohen-Or, D.; Deussen, O.; Uyttendaele, M.; Lischinski, D. Deep Photo: Model-Based Photograph Enhancement and Viewing; ACM: New York, NY, USA, 2008. [Google Scholar]

- Narasimhan, S.G.; Nayar, S.K. Removing weather effects from monochrome images. In Proceedings of the IEEE computer society conference on computer vision and pattern recognition, Kauai, HI, USA, 8–14 December 2001; p. II. [Google Scholar]

- Schechner, Y.Y.; Narasimhan, S.G.; Nayar, S.K. Instant dehazing of images using polarization. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, Kauai, HI, USA, 8–14 December 2001. [Google Scholar]

- Treibitz, T.; Schechner, Y.Y. Polarization: Beneficial for visibility enhancement. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 525–532. [Google Scholar]

- Xu, Z.; Liu, X.; Ji, N. Fog removal from color images using contrast limited adaptive histogram equalization. In Proceedings of the 2009 2nd International Congress on Image and Signal Processing, Tianjin, China, 17–19 October 2009; pp. 1–5. [Google Scholar]

- Narasimhan, S.G.; Nayar, S.K. Contrast restoration of weather degraded images. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 713–724. [Google Scholar] [CrossRef]

- McCartney, E.J. Optics of the Atmosphere: Scattering by Molecules and Particles; John Wiley and Sons, Inc.: New York, NY, USA, 1976; 421p. [Google Scholar]

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353. [Google Scholar]

- Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 617–624. [Google Scholar]

- Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533. [Google Scholar]

- Berman, D.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682. [Google Scholar]

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. Dehazenet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198. [Google Scholar] [CrossRef]

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Ynag, M.-H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 154–169. [Google Scholar]

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. Aod-net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778. [Google Scholar]

- Zhang, H.; Patel, V.M. Densely connected pyramid dehazing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3194–3203. [Google Scholar]

- Fu, X.; Wang, J.; Zeng, D.; Huang, Y.; Ding, X. Remote sensing image enhancement using regularized-histogram equalization and DCT. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2301–2305. [Google Scholar] [CrossRef]

- Makarau, A.; Richter, R.; Müller, R.; Reinartz, P. Haze detection and removal in remotely sensed multispectral imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5895–5905. [Google Scholar] [CrossRef]

- Long, J.; Shi, Z.; Tang, W.; Zhang, C. Single remote sensing image dehazing. IEEE Geosci. Remote Sens. Lett. 2014, 11, 59–63. [Google Scholar] [CrossRef]

- Shen, H.; Li, H.; Qian, Y.; Zhang, L.; Yuan, Q. An effective thin cloud removal procedure for visible remote sensing images. ISPRS J. Photogramm. Remote Sens. 2014, 96, 224–235. [Google Scholar] [CrossRef]

- Zhang, Y.; Guindon, B.; Cihlar, J. An image transform to characterize and compensate for spatial variations in thin cloud contamination of Landsat images. Remote Sens. Environ. 2002, 82, 173–187. [Google Scholar] [CrossRef]

- Jiang, H.; Lu, N.; Yao, L. A high-fidelity haze removal method based on hot for visible remote sensing images. Remote Sens. 2016, 8, 844. [Google Scholar] [CrossRef]

- Liu, Q.; Gao, X.; He, L.; Lu, W. Haze removal for a single visible remote sensing image. Signal Process. 2017, 137, 33–43. [Google Scholar] [CrossRef]

- Xie, F.; Chen, J.; Pan, X.; Jiang, Z. Adaptive Haze Removal for Single Remote Sensing Image. IEEE Access 2018, 6, 67982–67991. [Google Scholar] [CrossRef]

- Narasimhan, S.G.; Nayar, S.K. Chromatic framework for vision in bad weather. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hilton Head Island, SC, USA, 15 June 2000; Volume 1, pp. 598–605. [Google Scholar]

- Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254. [Google Scholar] [CrossRef]

- Zhang, H.; Patel, V.M. Density-aware single image de-raining using a multi-stream dense network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 695–704. [Google Scholar]

- Pelt, D.M.; Sethian, J.A. A mixed-scale dense convolutional neural network for image analysis. Proc. Natl. Acad. Sci. USA 2018, 115, 254–259. [Google Scholar] [CrossRef]

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301. [Google Scholar]

- Kim, J.H.; Choi, J.H.; Cheon, M.; Lee, J.-S. RAM: Residual Attention Module for Single Image Super-Resolution. arXiv 2018, arXiv:1811.12043. [Google Scholar]

- Pan, X.; Xie, F.; Jiang, Z.; Shi, Z.; Luo, X. No-reference assessment on haze for remote-sensing images. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1855–1859. [Google Scholar] [CrossRef]

- Dougherty, E.R.; Lotufo, R.A. Hands-on Morphological Image Processing; SPIE Press: Bellingham, WA, USA, 2003. [Google Scholar]

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409. [Google Scholar] [CrossRef] [PubMed]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Lazebnik, S.; Schmid, C.; Ponce, J. Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 2, pp. 2169–2178. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 2672–2680. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Xia, G.-S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A benchmark data set for performance evaluation of aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981. [Google Scholar] [CrossRef]

- Ketkar, N. Introduction to PyTorch. In Deep Learning with Python; Apress: Berkeley, CA, USA, 2017; pp. 195–208. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference for Learning Representations, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Tarel, J.P.; Hautiere, N. Fast visibility restoration from a single color or gray level image. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2201–2208. [Google Scholar]

| Layer | Operation | Output Size | Output Channels | |

|---|---|---|---|---|

| Input | 512 × 512 | 6 | ||

| Convolution | 7 × 7 convolution, stride = 2 | 256 × 256 | 64 | |

| Pooling | 3 × 3 max pooling, stride = 2 | 128 × 128 | 64 | |

| Dense block 1 | 128 × 128 | 256 | ||

| Transition block 1 | 1 × 1 convolution, stride = 1 | 128 × 128 | 128 | |

| 2 × 2 average pooling, stride = 2 | 64 × 64 | |||

| Dense block 2 | 64 × 64 | 512 | ||

| Transition block 2 | 1 × 1 convolution, stride = 2 | 64 × 64 | 256 | |

| 2 × 2 average pooling, stride = 2 | 32 × 32 | |||

| Dense block 3 | 32 × 32 | 1024 | ||

| Transition block 3 | 1 × 1 convolution, stride = 2 | 32 × 32 | 512 | |

| 2 × 2 average pooling, stride = 2 | 16 × 16 | |||

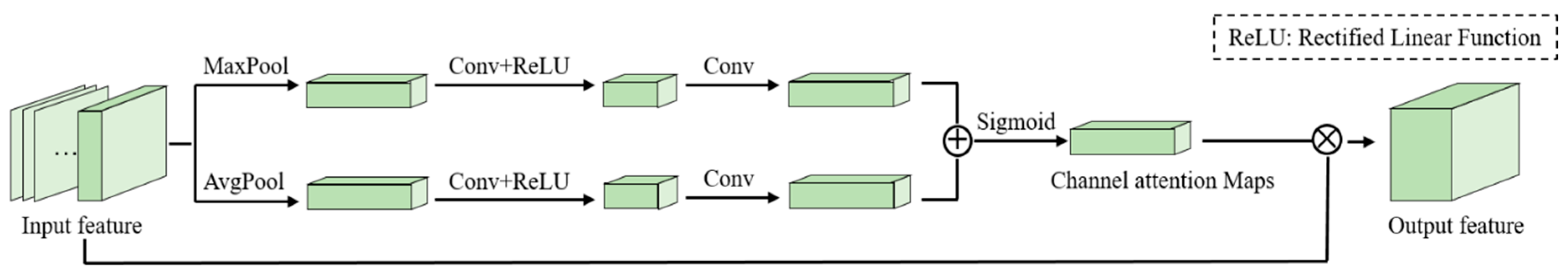

| RCSAB | Convolution | 16 × 16 | 512 | |

| Channel attention | 16 × 16 average pooling, 16 × 16 max pooling | |||

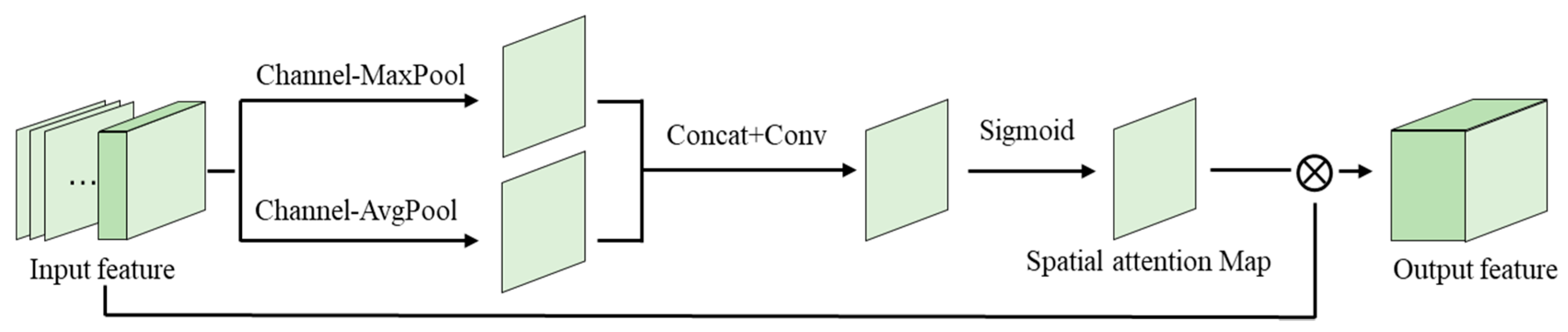

| Spatial attention | channel average pooling, channel max pooling | |||

| Layer | Operation | Output Size | Output Channels |

|---|---|---|---|

| Dense block 4 | 1 × 1 convolution, 3 × 3 convolution | 16 × 16 | 768 |

| Transition block 4 | 1 × 1 convolution | 16 × 16 | 384 |

| nearest upsample, scale = 2 | 32 × 32 | ||

| Dense block 5 | 1 × 1 convolution, 3 × 3 convolution | 32 × 32 | 640 |

| Transition block 5 | 1 × 1 convolution | 32 × 32 | 256 |

| nearest upsample, scale = 2 | 64 × 64 | ||

| Dense block 6 | 1 × 1 convolution, 3 × 3 convolution | 64 × 64 | 384 |

| Transition block 6 | 1 × 1 convolution | 64 × 64 | 64 |

| nearest upsample, scale = 2 | 128 × 128 | ||

| Dense block 7 | 1 × 1 convolution, 3 × 3 convolution | 128 × 128 | 128 |

| Transition block 7 | 1 × 1 convolution | 128 × 128 | 32 |

| nearest upsample, scale = 2 | 256 × 256 | ||

| Dense block 8 | 1 × 1 convolution, 3 × 3 convolution | 256 × 256 | 64 |

| Transition block 8 | 1 × 1 convolution | 256 × 256 | 16 |

| nearest upsample, scale = 2 | 512 × 512 | ||

| Convolution | 3 × 3 convolution | 512 × 512 | 20 |

| Pyramid pooling block | 32 × 32 average pooling, 1 × 1 convolution, nearest upsample | 512 × 512 | 1 |

| 16 × 16 average pooling, 1 × 1 convolution, nearest upsample | 512 × 512 | 1 | |

| 8 × 8 average pooling, 1 × 1 convolution, nearest upsample | 512 × 512 | 1 | |

| 4 × 4 average pooling, 1 × 1 convolution, nearest upsample | 512 × 512 | 1 | |

| Convolution | 3 × 3 convolution | 512 × 512 | 3 |

| Dehazed output | 512 × 512 | 3 |

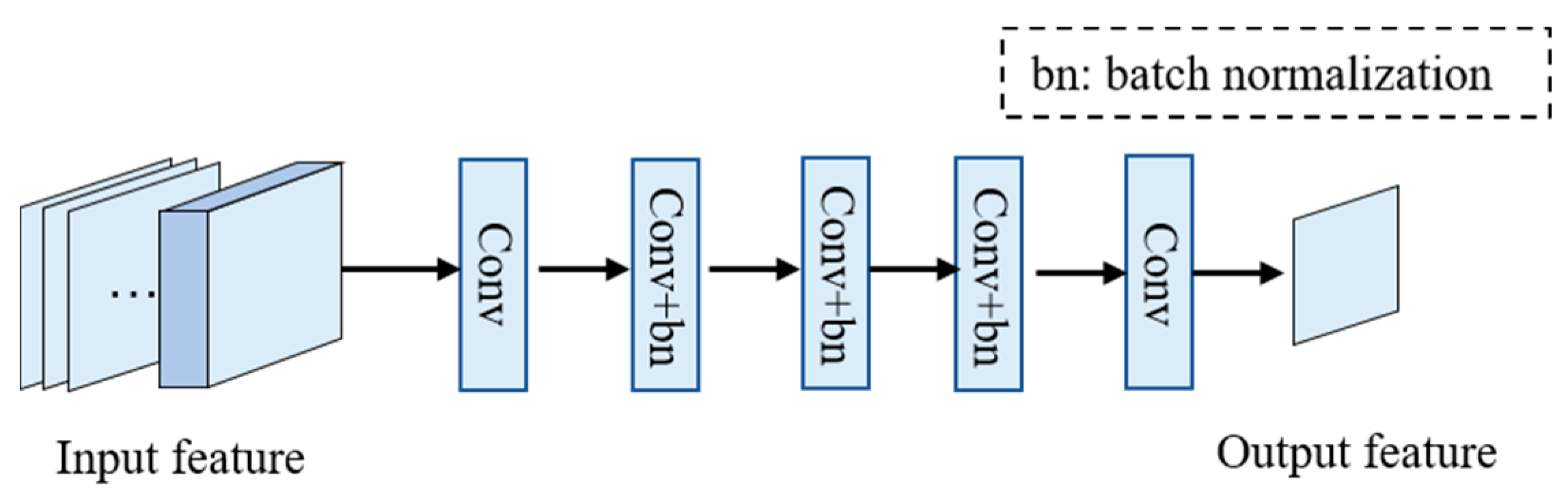

| Layer | Operation | Output Size | Output Channels |

|---|---|---|---|

| input | 512 × 512 | 3 | |

| Convolution | 4 × 4 convolution, stride = 2 | 256 × 256 | 64 |

| Convolution | 4 × 4 convolution, stride = 2 | 128 × 128 | 128 |

| Batch normalization | batch normalization | 128 × 128 | 128 |

| Convolution | 4 × 4 convolution, stride = 2 | 64 × 64 | 256 |

| Batch normalization | batch normalization | 64 × 64 | 256 |

| Convolution | 4 × 4 convolution, stride = 1 | 63 × 63 | 512 |

| Batch normalization | batch normalization | 63 × 63 | 512 |

| Convolution | 4 × 4 convolution, stride = 1 | 62 × 62 | 1 |

| DCP | BCCR | FVR | AOD-Net | DCPDN | Proposed | |

|---|---|---|---|---|---|---|

| PSNR | 22.4270 | 20.1578 | 16.0833 | 22.7267 | 23.8002 | 31.5095 |

| SSIM | 0.8853 | 0.78460 | 0.8109 | 0.9137 | 0.9397 | 0.9888 |

| DCP | BCCR | FVR | AOD-Net | DCPDN | Proposed | |

|---|---|---|---|---|---|---|

| PSNR | 19.9344 | 17.7576 | 16.1356 | 19.5275 | 28.0737 | 34.9841 |

| SSIM | 0.8132 | 0.7399 | 0.4773 | 0.82133 | 0.8886 | 0.8990 |

| DADN_noHDM | DADN_noRCSAB | DADN_noDISCRI | Proposed | |

|---|---|---|---|---|

| PSNR | 30.4668 | 29.3695 | 34.6575 | 35.1895 |

| SSIM | 0.8919 | 0.8894 | 0.8964 | 0.8965 |

| Time | 0.1841 | 0.2262 | 0.1891 | 0.2368 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gu, Z.; Zhan, Z.; Yuan, Q.; Yan, L. Single Remote Sensing Image Dehazing Using a Prior-Based Dense Attentive Network. Remote Sens. 2019, 11, 3008. https://doi.org/10.3390/rs11243008

Gu Z, Zhan Z, Yuan Q, Yan L. Single Remote Sensing Image Dehazing Using a Prior-Based Dense Attentive Network. Remote Sensing. 2019; 11(24):3008. https://doi.org/10.3390/rs11243008

Chicago/Turabian StyleGu, Ziqi, Zongqian Zhan, Qiangqiang Yuan, and Li Yan. 2019. "Single Remote Sensing Image Dehazing Using a Prior-Based Dense Attentive Network" Remote Sensing 11, no. 24: 3008. https://doi.org/10.3390/rs11243008

APA StyleGu, Z., Zhan, Z., Yuan, Q., & Yan, L. (2019). Single Remote Sensing Image Dehazing Using a Prior-Based Dense Attentive Network. Remote Sensing, 11(24), 3008. https://doi.org/10.3390/rs11243008