Void Filling of Digital Elevation Models with a Terrain Texture Learning Model Based on Generative Adversarial Networks

Abstract

1. Introduction

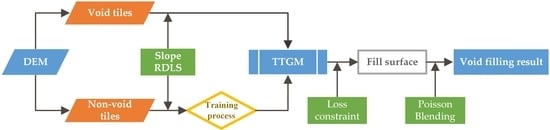

2. Methodology

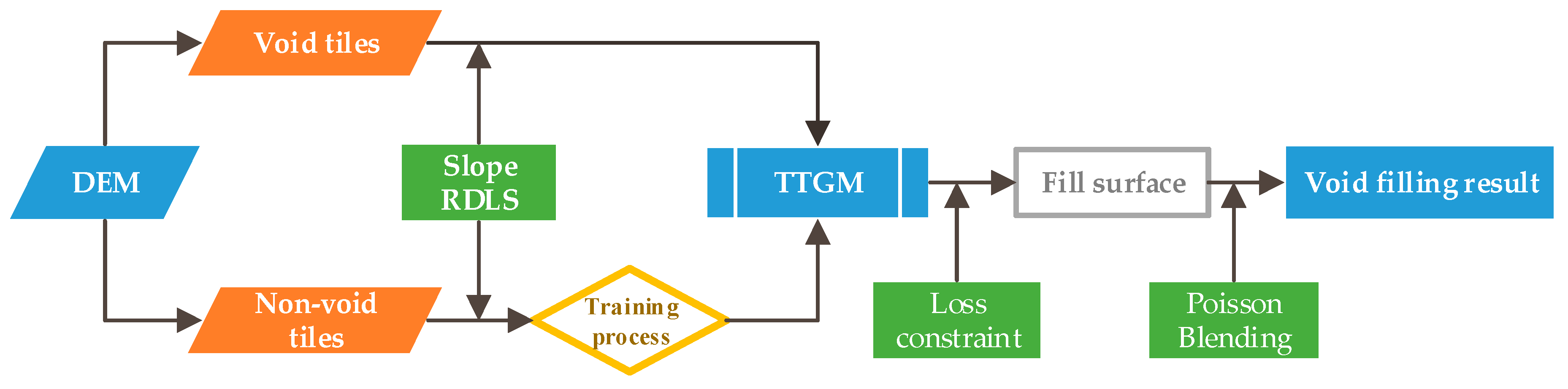

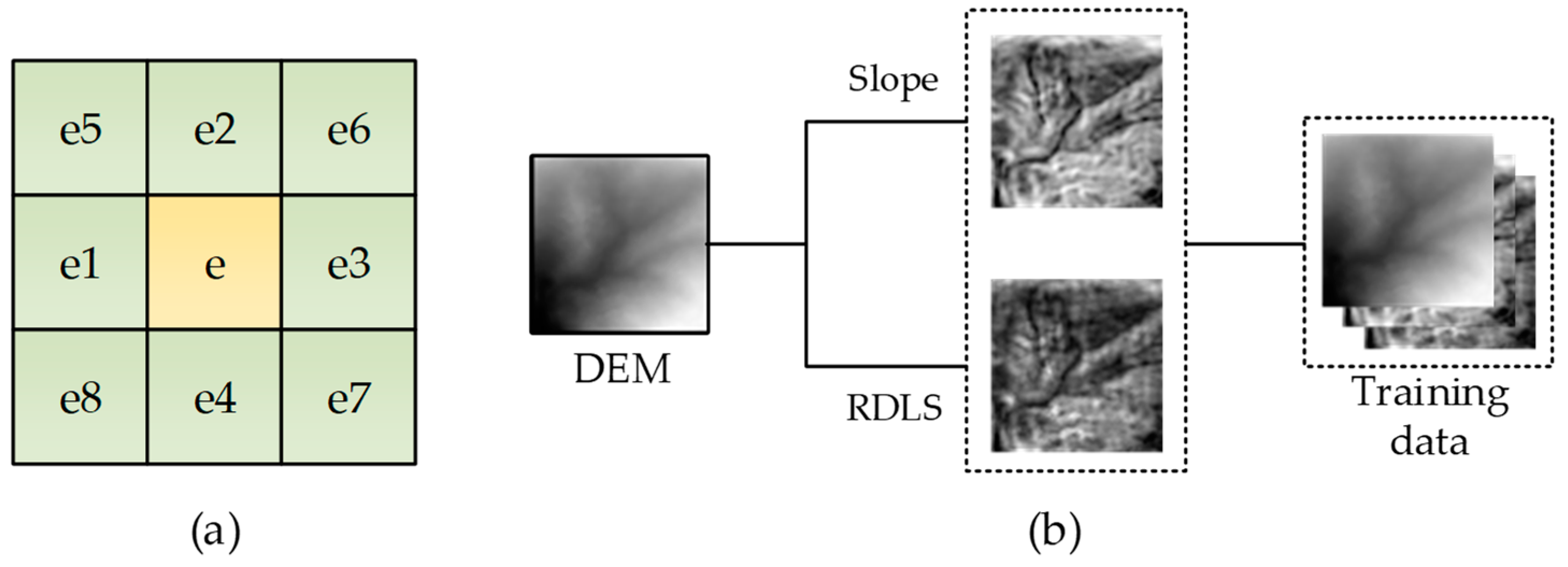

2.1. Terrain Texture Generation Model

2.1.1. Generative Adversarial Networks

2.1.2. The Framework of the Terrain Texture Generation Model

2.2. DEM Void Filling by Constrained Image Generation

2.2.1. Design of Loss Functions

Pixel-Wise Loss

Context Loss

Perceptual Loss

2.2.2. Seamless Blending

3. Experiments

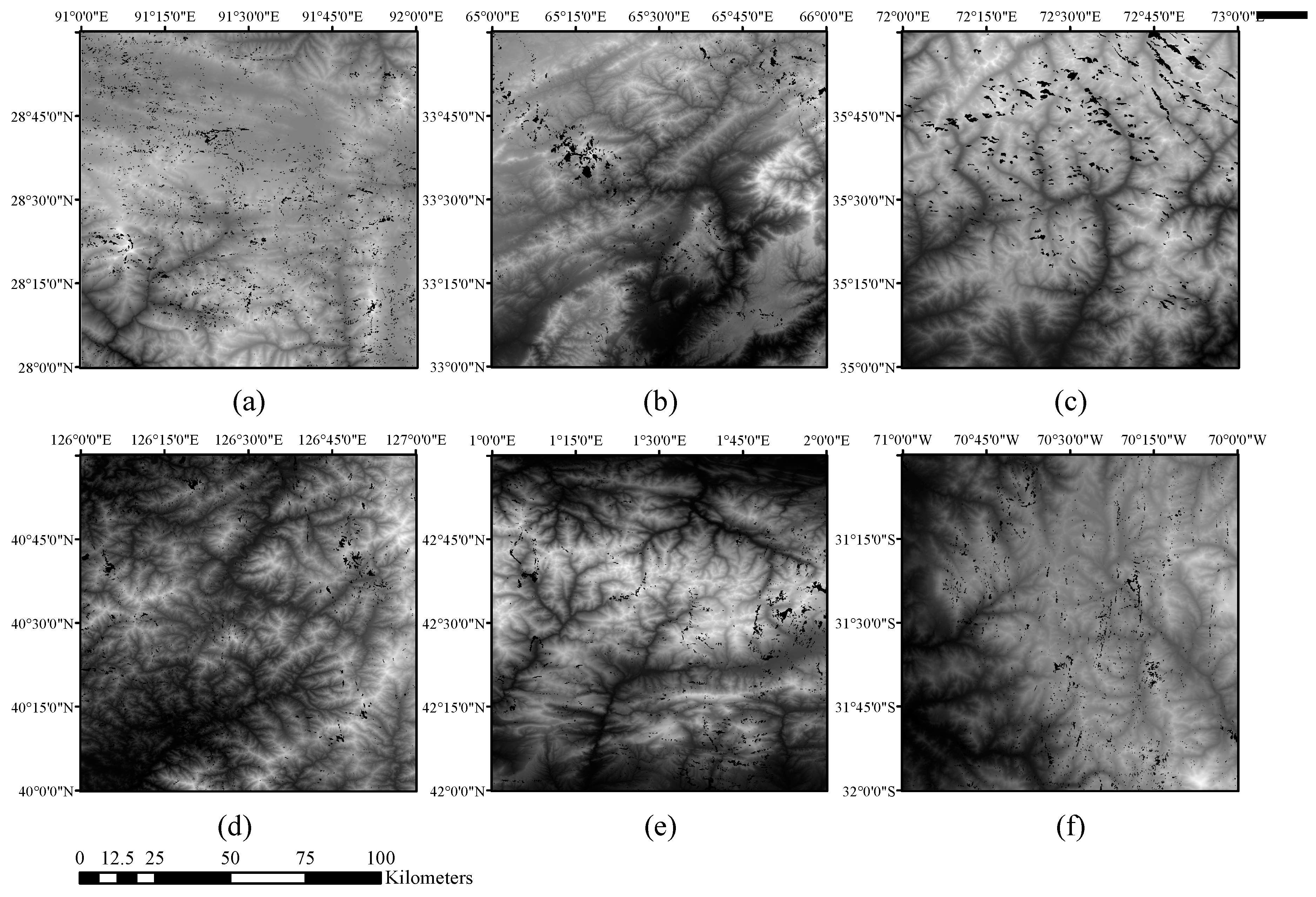

3.1. Data Description and Evaluation Metrics

3.2. Simulated Experiments

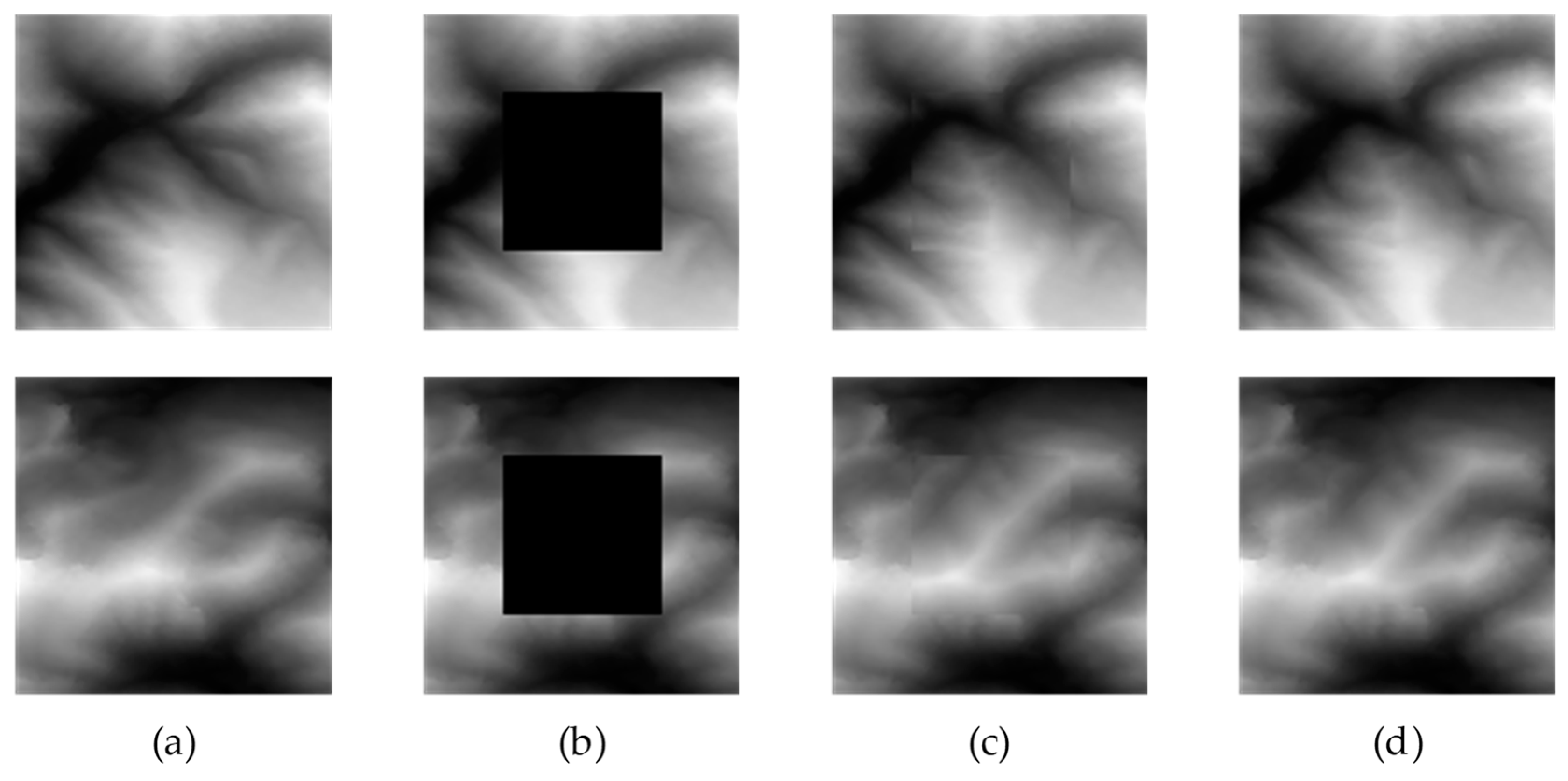

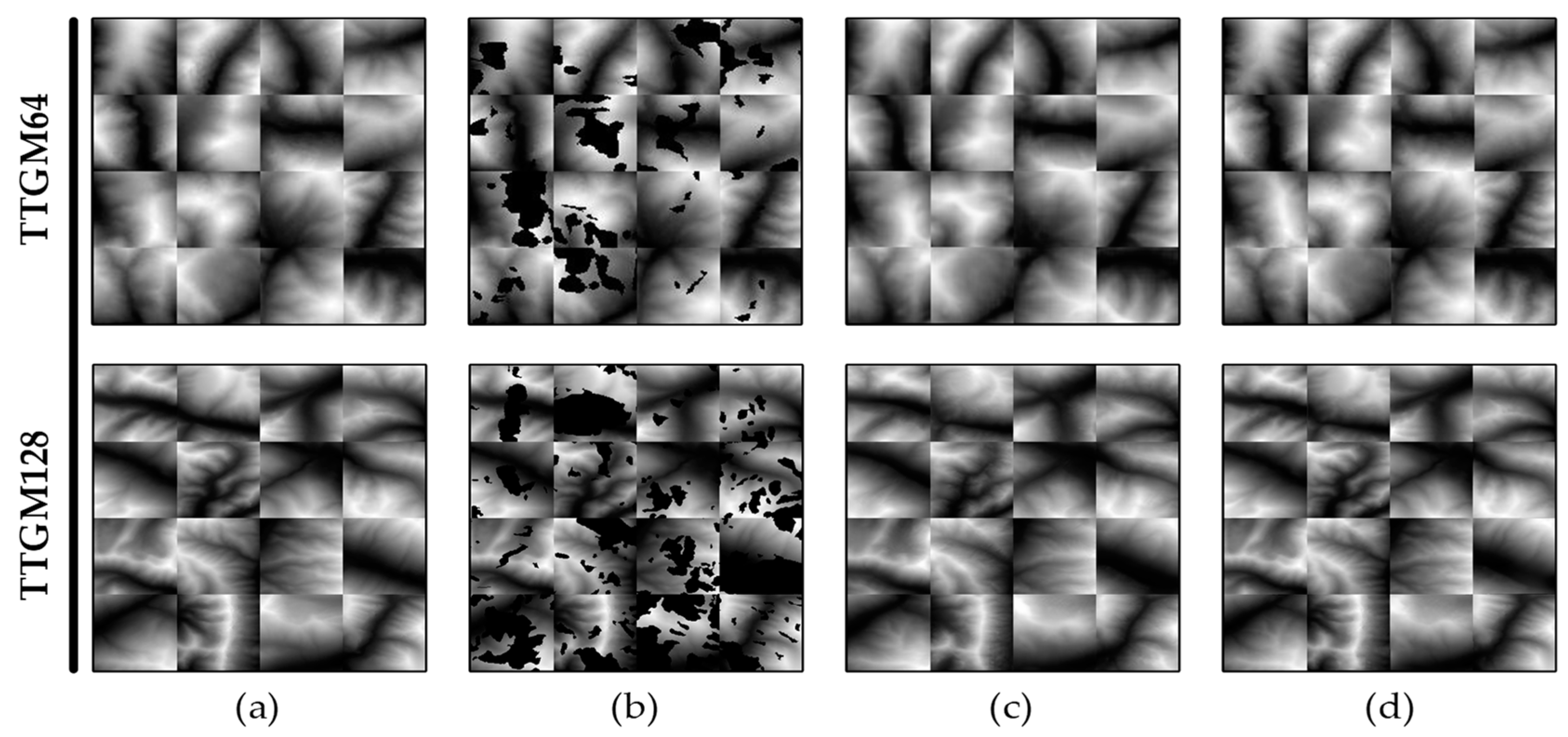

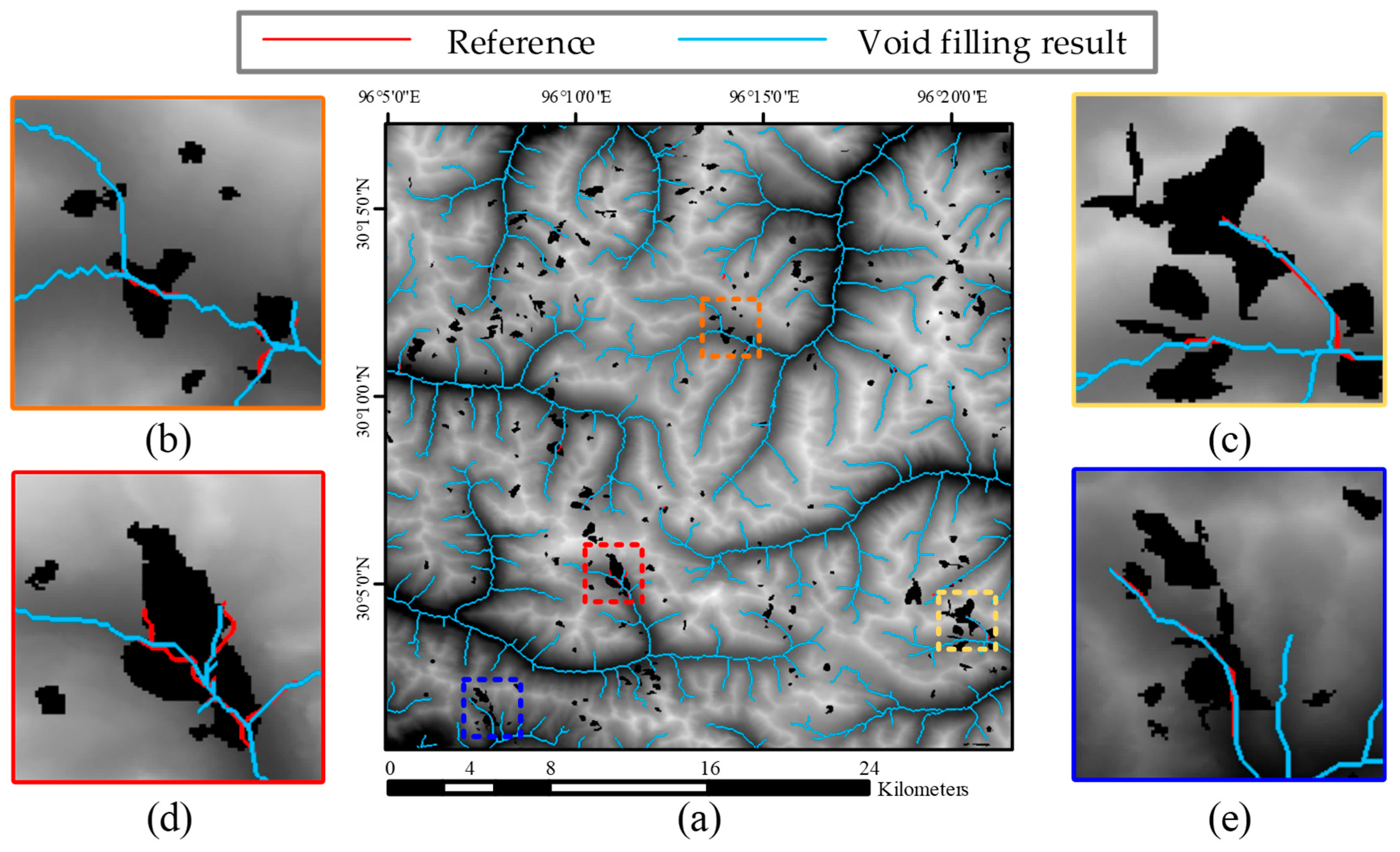

3.2.1. Void Filling of Simulated Real Pattern Voids

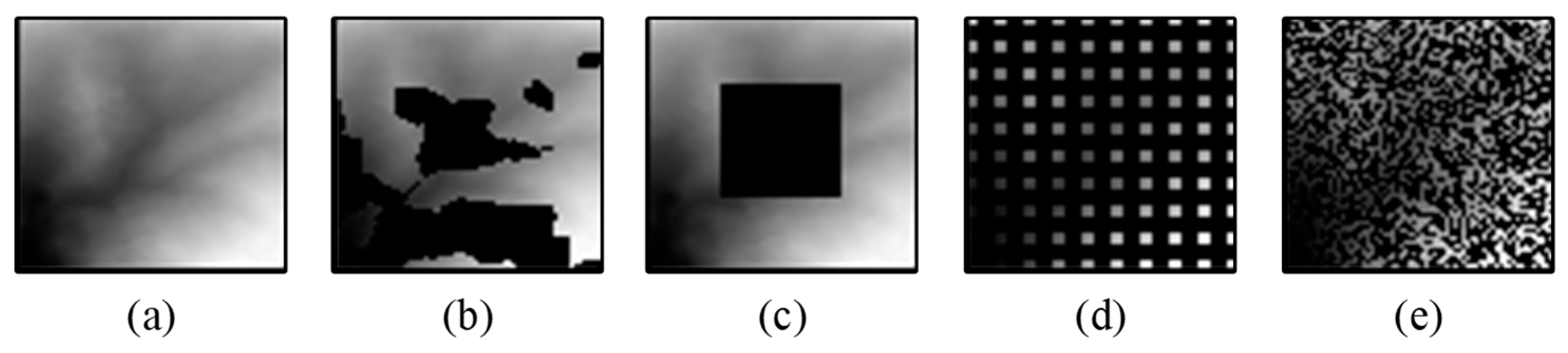

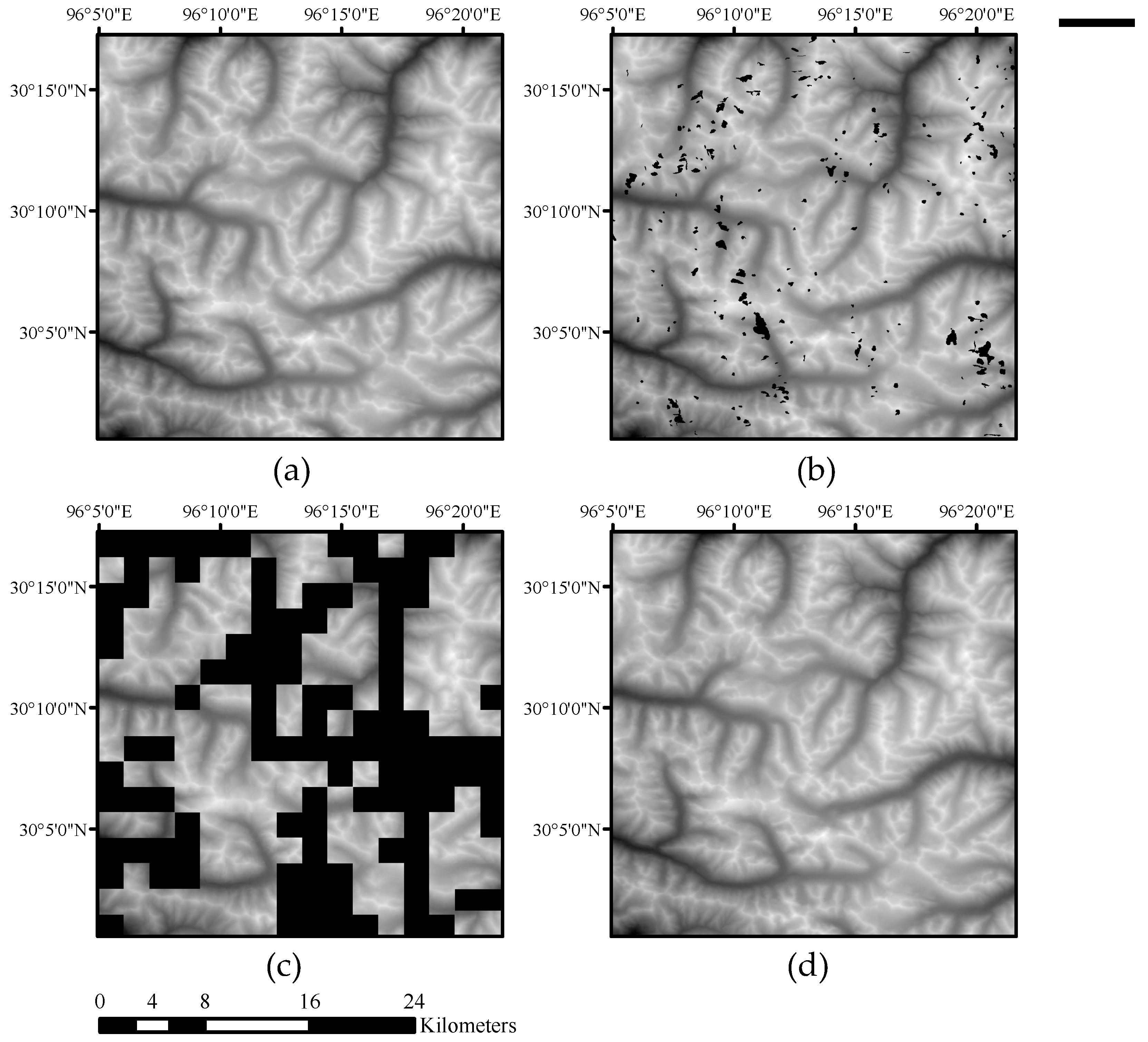

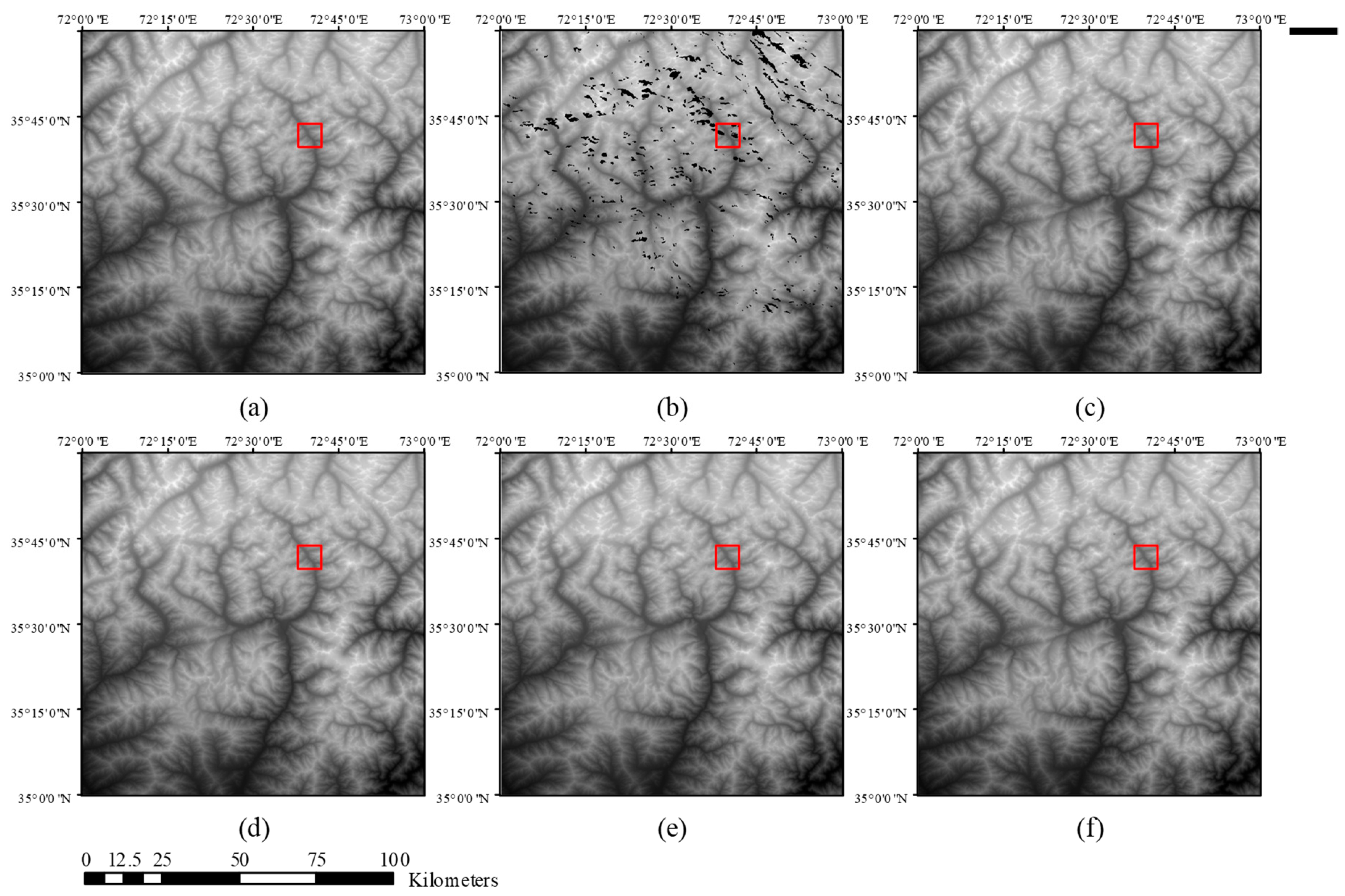

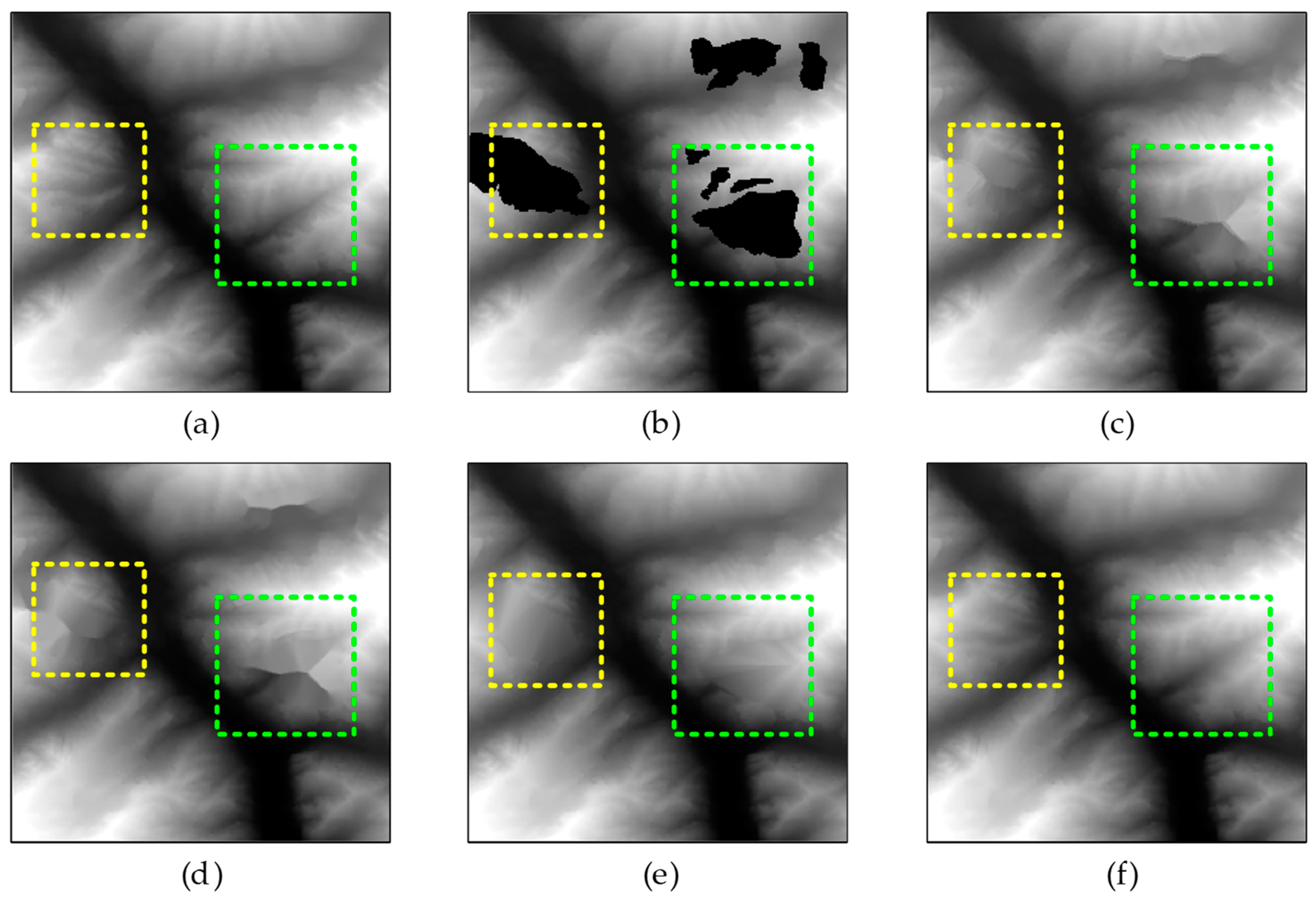

3.2.2. Application of the Proposed Method on Simulated SRTM Void Data

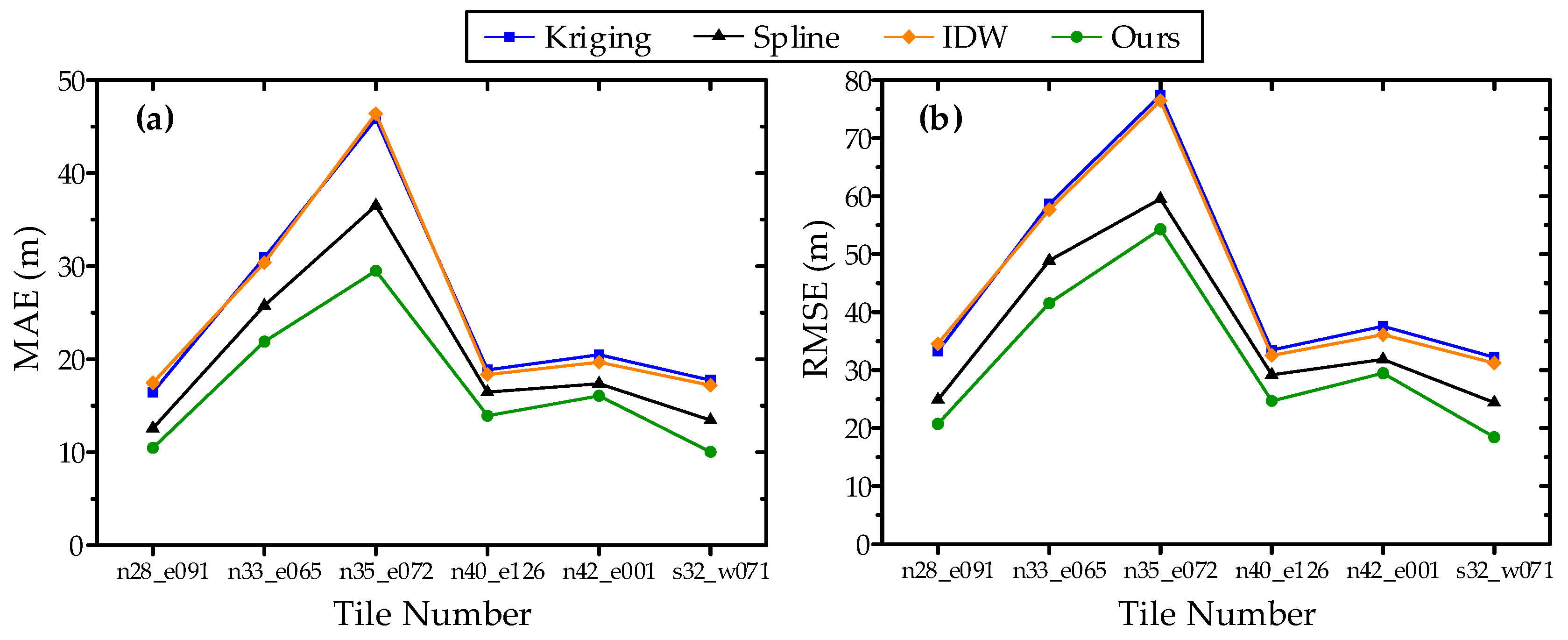

3.3. Comparison with Interpolation Methods

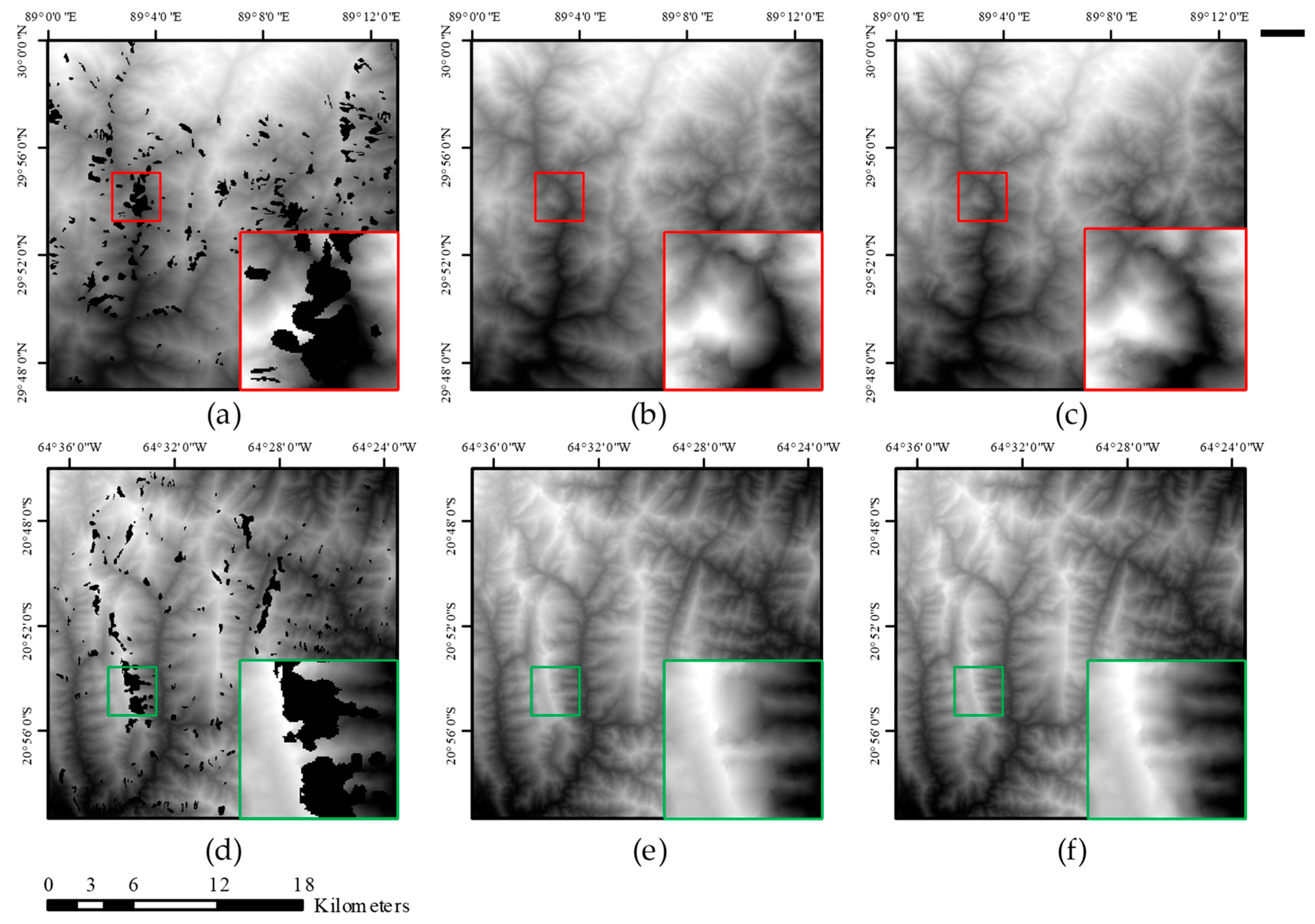

3.4. Real-Data Experiments

4. Discussions

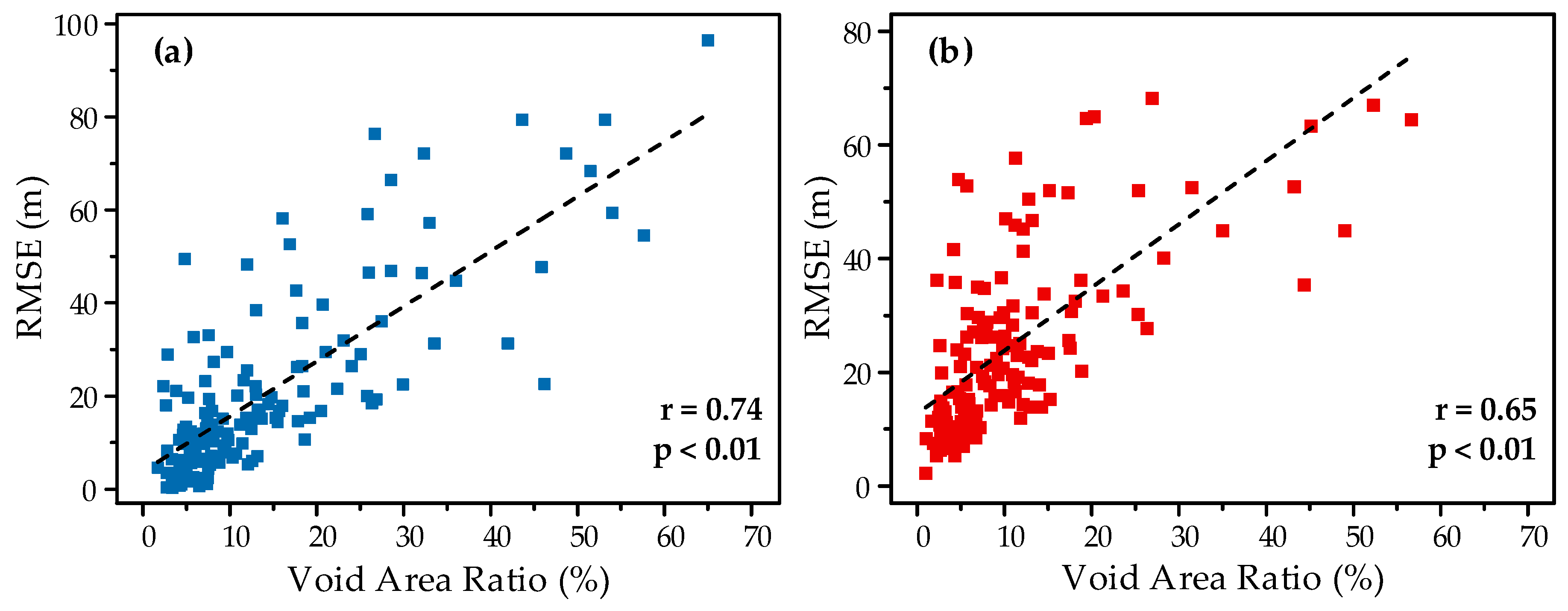

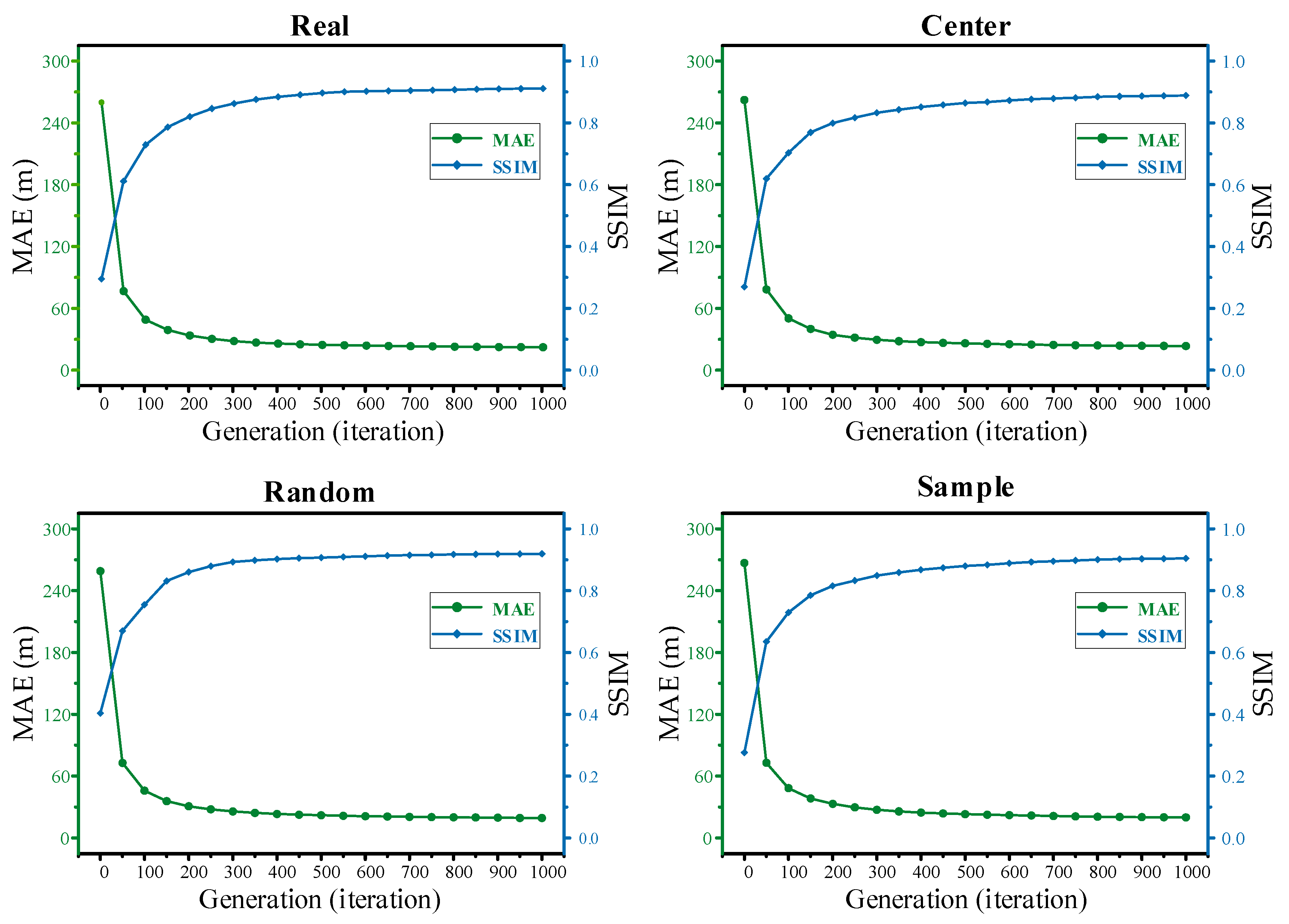

4.1. Evaluation of the Void Filling Method with Respect to Input

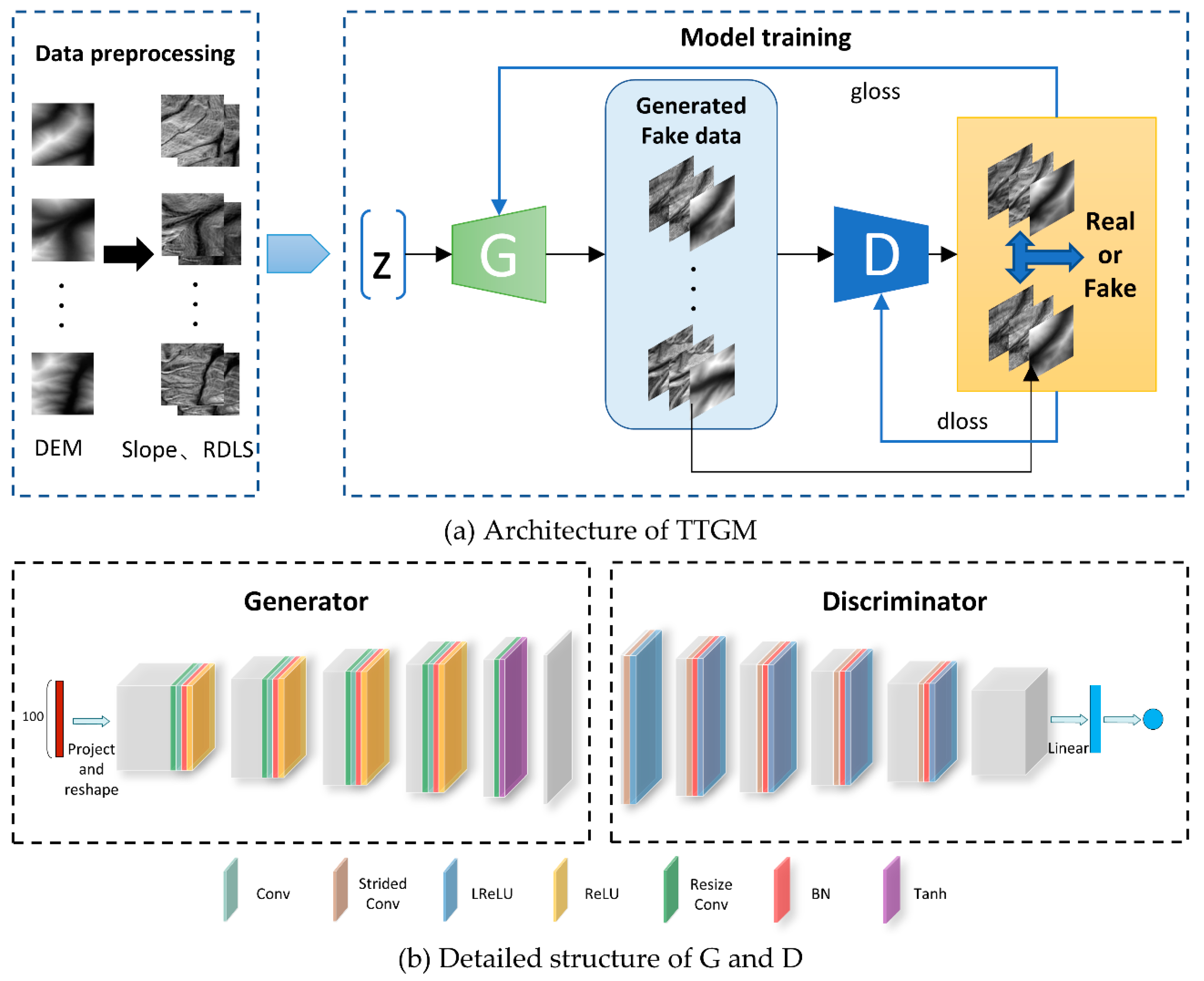

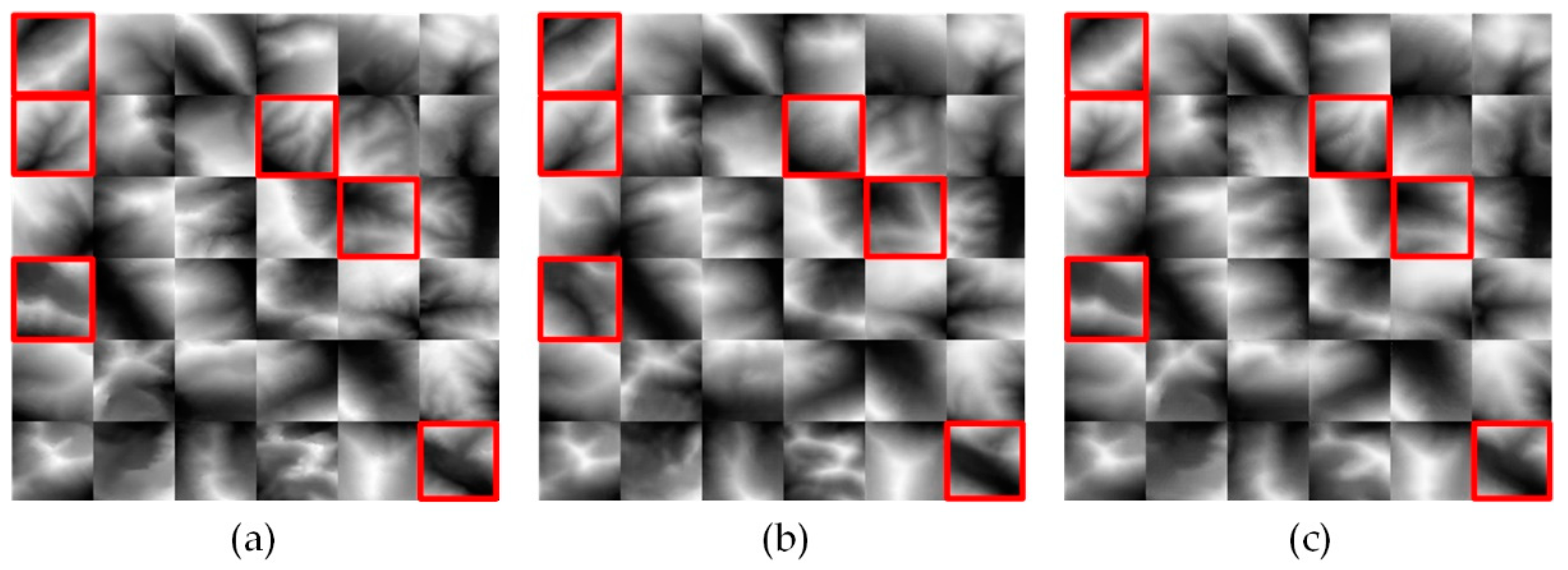

4.2. Validation of the Model’s Ability to Simulate Terrain Texture in the DEM

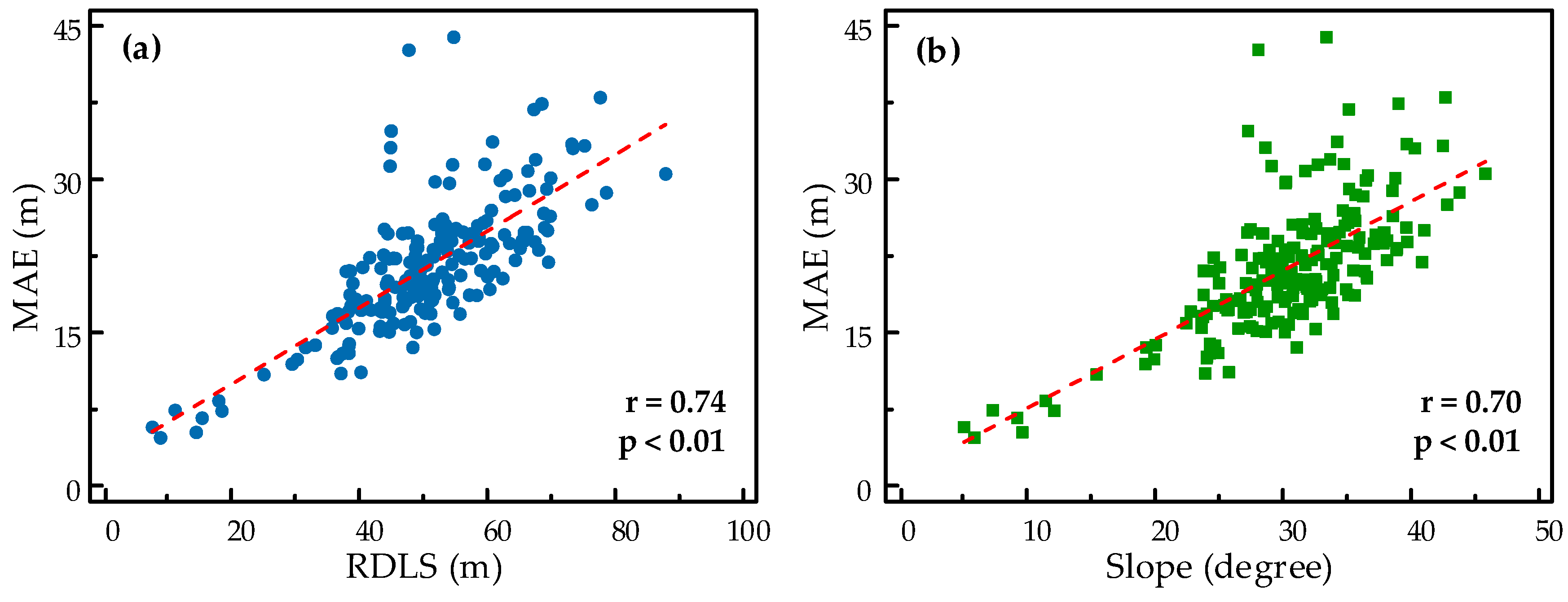

4.3. Influence of Terrain Factors on Generated Images

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Unit: m | ME | SD | MAE | RMSE | SSIM |
|---|---|---|---|---|---|
| TTGM64 | 0.86 | 18.81 | 15.22 | 20.21 | 0.92 |
| TTGM128 | 0.12 | 22.99 | 17.18 | 24.02 | 0.90 |
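
The accuracy tables report the mean error (ME), the standard deviation of the error (SD), the mean absolute error (MAE) and the root-mean-square error (RMSE), in metres. A minimal NumPy sketch of these statistics follows. It assumes that they are computed only over the void pixels, that the error is the filled elevation minus the reference elevation, and that SD is the population standard deviation. The function name `void_error_stats` and these conventions are illustrative assumptions, not the authors' code.

```python
import numpy as np


def void_error_stats(reference, filled, void_mask):
    """ME, SD, MAE and RMSE of a filled DEM, evaluated on void pixels only.

    Assumed conventions (not taken from the paper):
      * error = filled - reference, so a positive ME means the fill is too high;
      * SD is the population standard deviation of the errors (ddof=0).
    """
    reference = np.asarray(reference, dtype=float)
    filled = np.asarray(filled, dtype=float)
    mask = np.asarray(void_mask, dtype=bool)
    if not mask.any():
        raise ValueError("void_mask selects no pixels")

    # Only pixels inside the void contribute to the statistics.
    err = filled[mask] - reference[mask]
    return {
        "ME": float(err.mean()),
        "SD": float(err.std()),
        "MAE": float(np.abs(err).mean()),
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
    }
```

With one pooled set of errors and the population SD, RMSE² = ME² + SD² holds exactly, which offers a quick consistency check on computed values.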

| Unit: m | Min | Max | Mean | STD |
|---|---|---|---|---|
| Original DEM | 4090 | 5537 | 4928 | 226 |
| Void filling result | 4096 | 5529 | 4931 | 225 |

| Unit: m | Kriging | Spline | IDW | Ours |
|---|---|---|---|---|
| ME | 0.10 | 0.73 | −0.46 | −0.59 |
| SD | 77.42 | 59.51 | 76.44 | 54.28 |
| MAE | 45.84 | 35.52 | 46.41 | 29.51 |
| RMSE | 77.42 | 59.51 | 76.44 | 54.29 |

| Void Type | Model | ME | SD | MAE | RMSE | SSIM |
|---|---|---|---|---|---|---|
| Real | TTGM-D | 1.39 | 18.58 | 14.75 | 20.67 | 0.89 |
| Real | TTGM-DSR | 2.01 | 18.42 | 14.85 | 19.78 | 0.91 |
| Center | TTGM-D | 1.48 | 27.53 | 23.35 | 29.55 | 0.88 |
| Center | TTGM-DSR | −2.98 | 26.51 | 21.39 | 28.14 | 0.90 |
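
The SSIM columns use the structural similarity index of Wang et al. (2004). The window, constants and dynamic range behind the reported values are not stated here, so the sketch below is a simplified mean SSIM under assumed settings: a uniform square window (the original formulation uses an 11 × 11 Gaussian window with σ = 1.5), K1 = 0.01, K2 = 0.03, and the dynamic range L taken from the reference DEM's elevation range by default. The function `mean_ssim` and its parameters are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view  # NumPy >= 1.20


def mean_ssim(x, y, win=7, data_range=None, k1=0.01, k2=0.03):
    """Mean SSIM between a reference DEM x and a filled DEM y.

    Simplification (assumption): statistics are taken over uniform
    win x win windows rather than a Gaussian-weighted window.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape or x.ndim != 2:
        raise ValueError("x and y must be 2-D arrays of the same shape")
    if min(x.shape) < win:
        raise ValueError("window larger than the image")
    if data_range is None:
        # Assumed dynamic range L: the reference elevation range (1.0 if flat).
        data_range = float(x.max() - x.min()) or 1.0
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    # Every valid window position: shape (H-win+1, W-win+1, win, win).
    xw = sliding_window_view(x, (win, win))
    yw = sliding_window_view(y, (win, win))
    mu_x = xw.mean(axis=(-2, -1))
    mu_y = yw.mean(axis=(-2, -1))
    var_x = xw.var(axis=(-2, -1))
    var_y = yw.var(axis=(-2, -1))
    cov = ((xw - mu_x[..., None, None]) * (yw - mu_y[..., None, None])).mean(axis=(-2, -1))

    # Local SSIM map, then its average over all window positions.
    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return float(ssim_map.mean())
```

Because L enters the stabilising constants, SSIM values computed on raw elevations depend on this choice; passing the same `data_range` for every method keeps the comparison consistent.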

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Qiu, Z.; Yue, L.; Liu, X. Void Filling of Digital Elevation Models with a Terrain Texture Learning Model Based on Generative Adversarial Networks. Remote Sens. 2019, 11, 2829. https://doi.org/10.3390/rs11232829
