Lane-Level Road Extraction from High-Resolution Optical Satellite Images

Abstract

1. Introduction

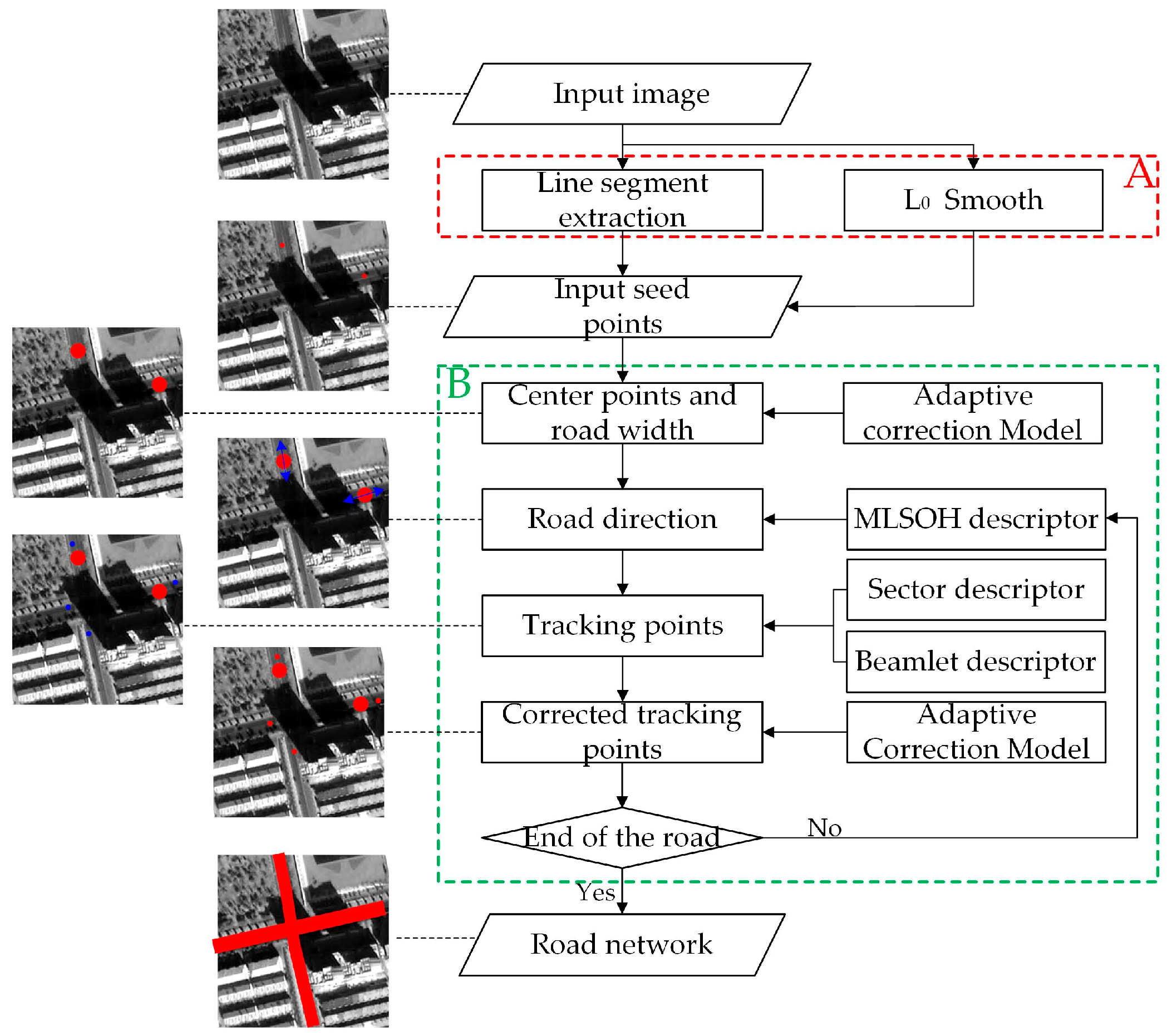

2. Methodology

2.1. Preprocessing

2.1.1. L0 Smoothing

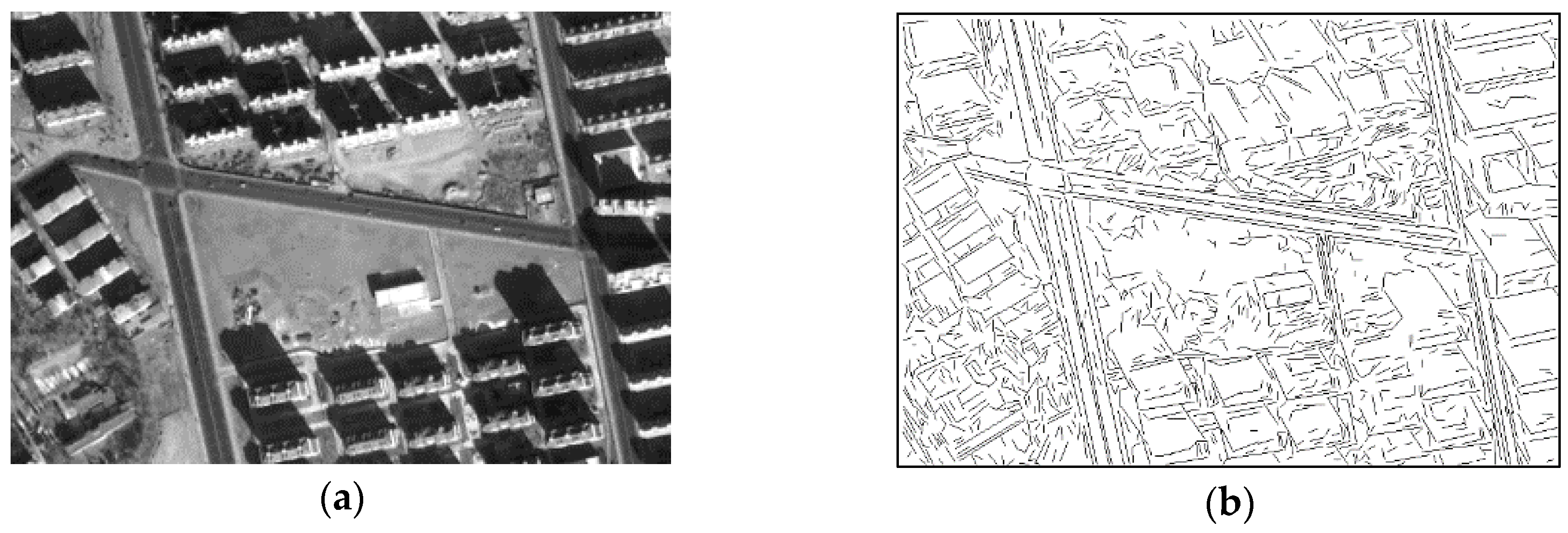

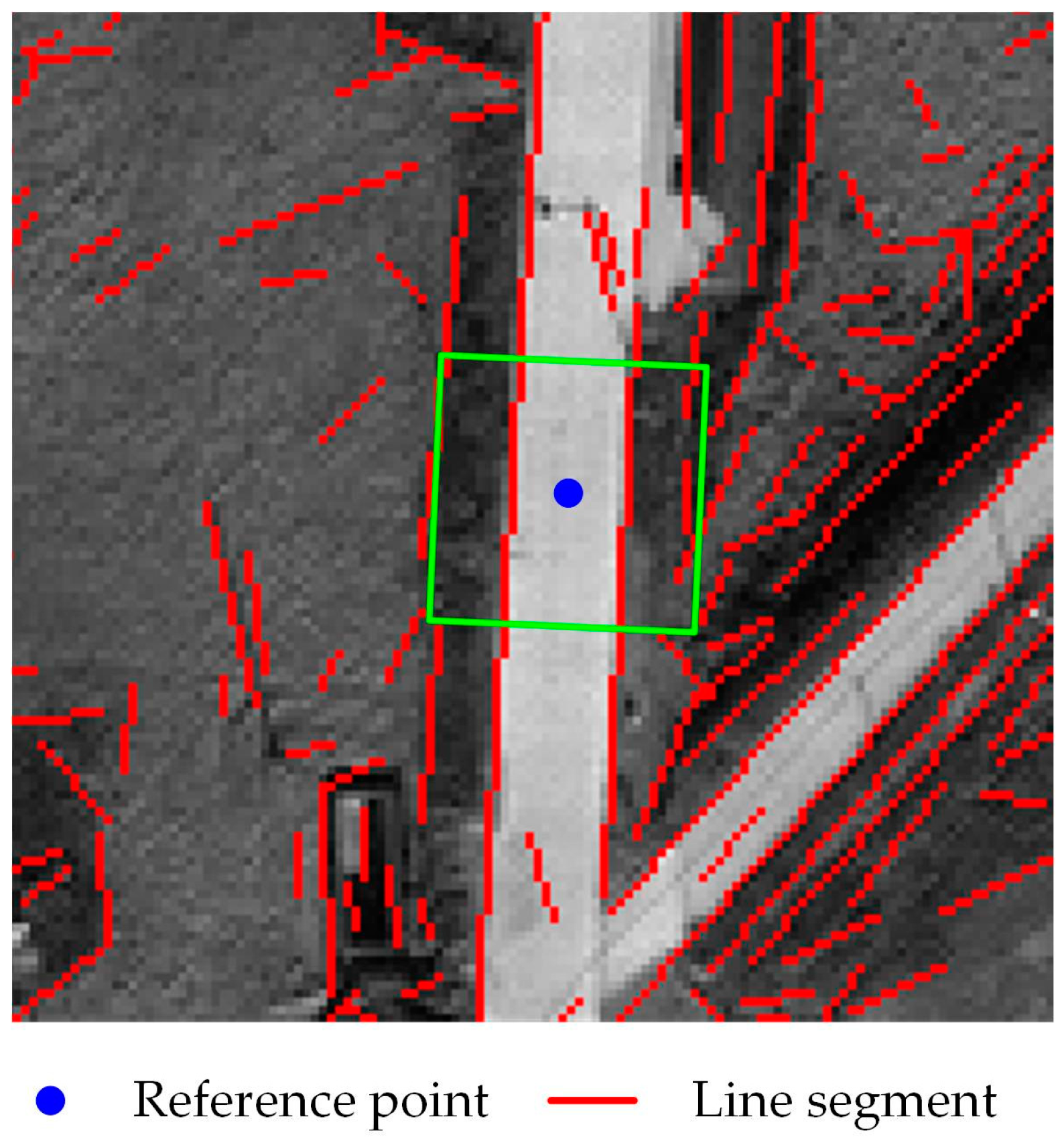

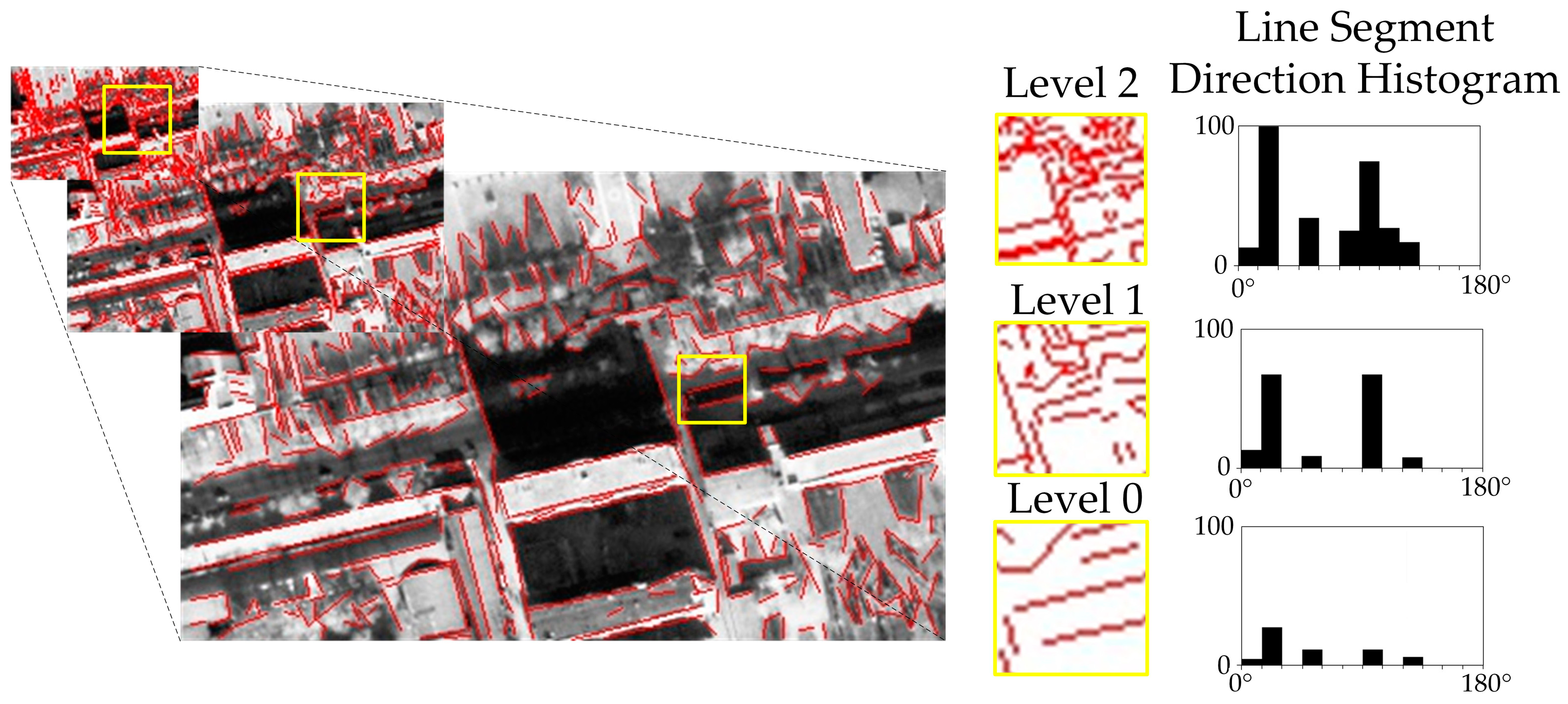

2.1.2. Line Segment Extraction

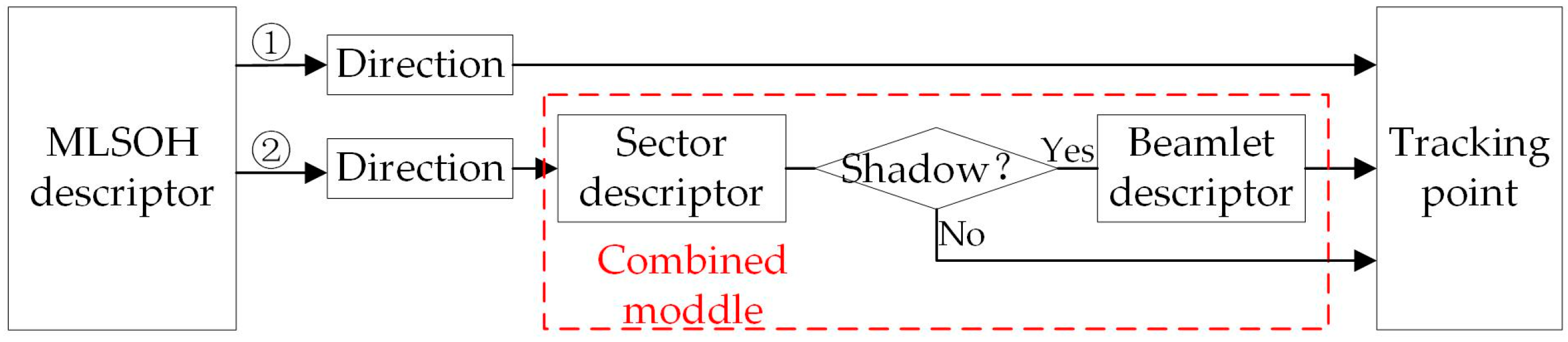

2.2. Road Matching Model

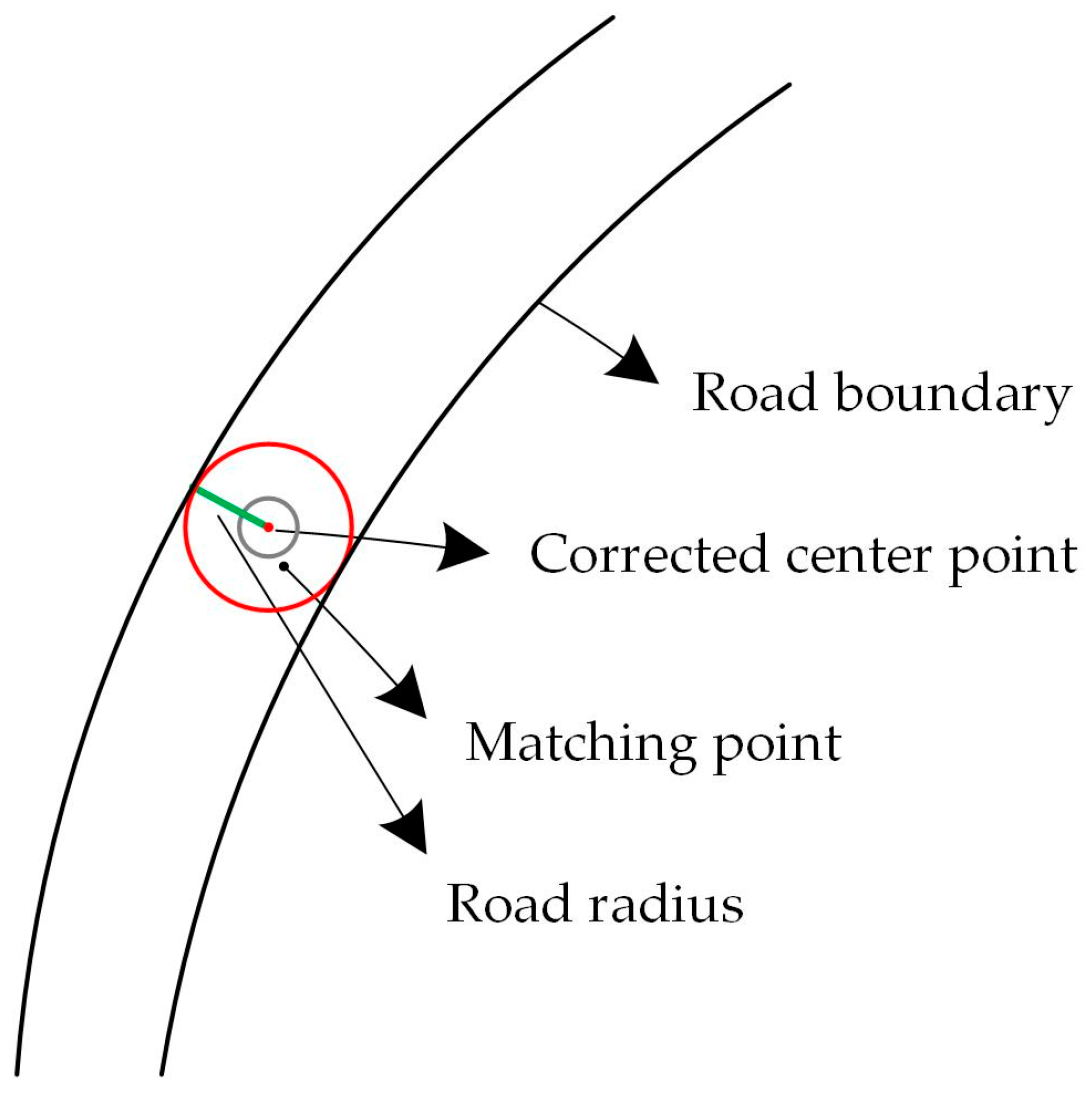

2.2.1. Adaptive Correction Model

2.2.2. MLSOH Descriptor

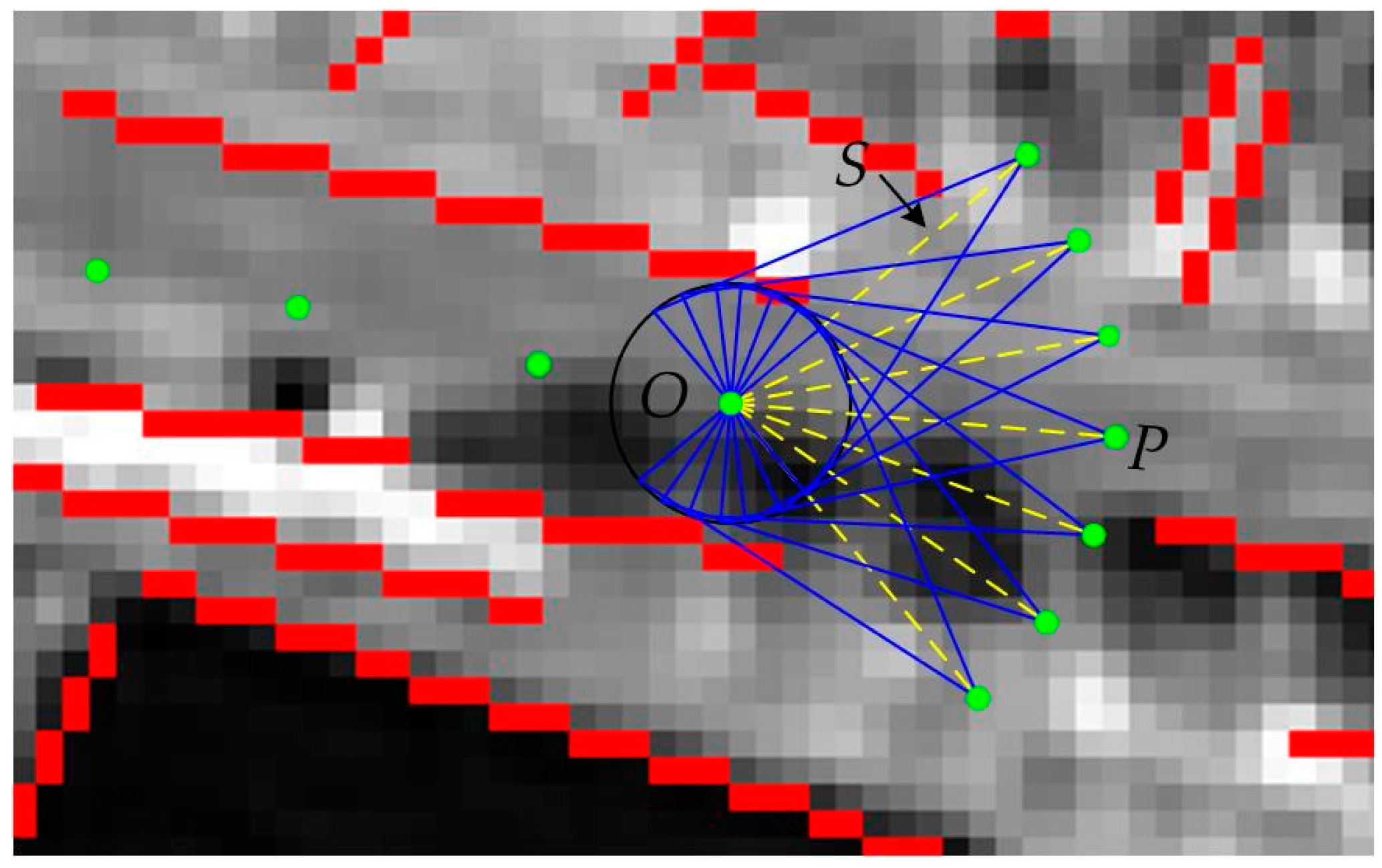

2.2.3. Sector Descriptor

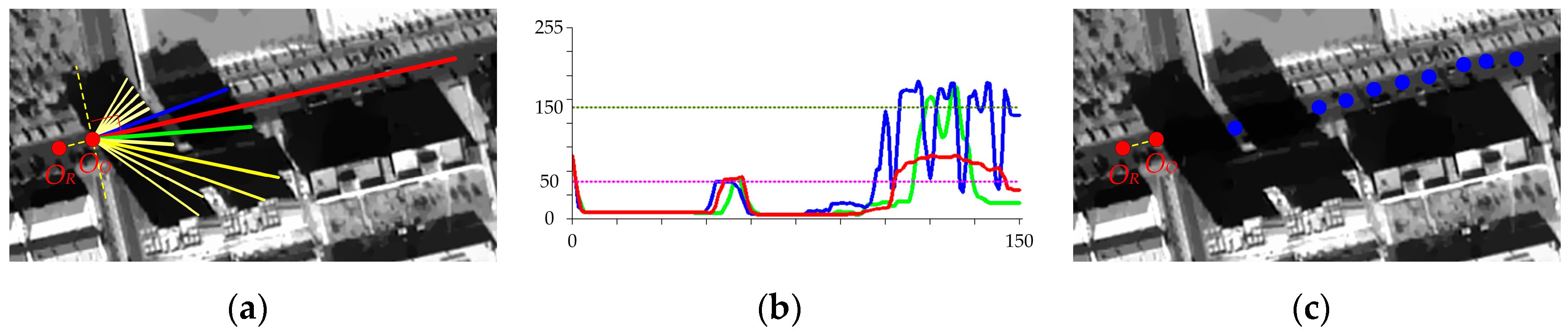

2.2.4. Beamlet Descriptor

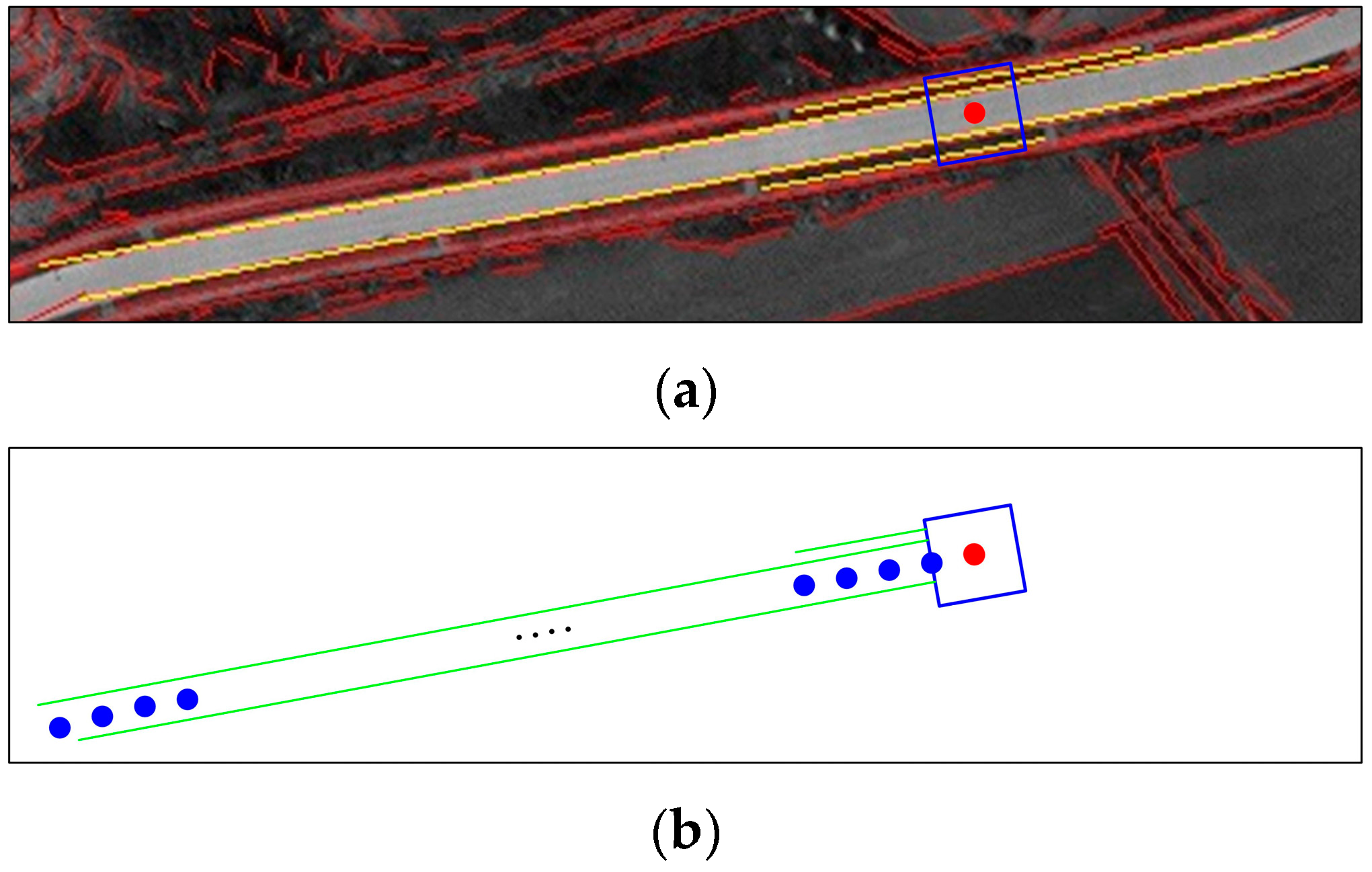

2.3. Road Tracking Progress

2.3.1. Single-Lane Tracking Mode

2.3.2. Double-Lane Tracking Mode

3. Experimental Analysis and Discussion

3.1. Experimental Setup

3.1.1. Description of Test Images and Compared Methods

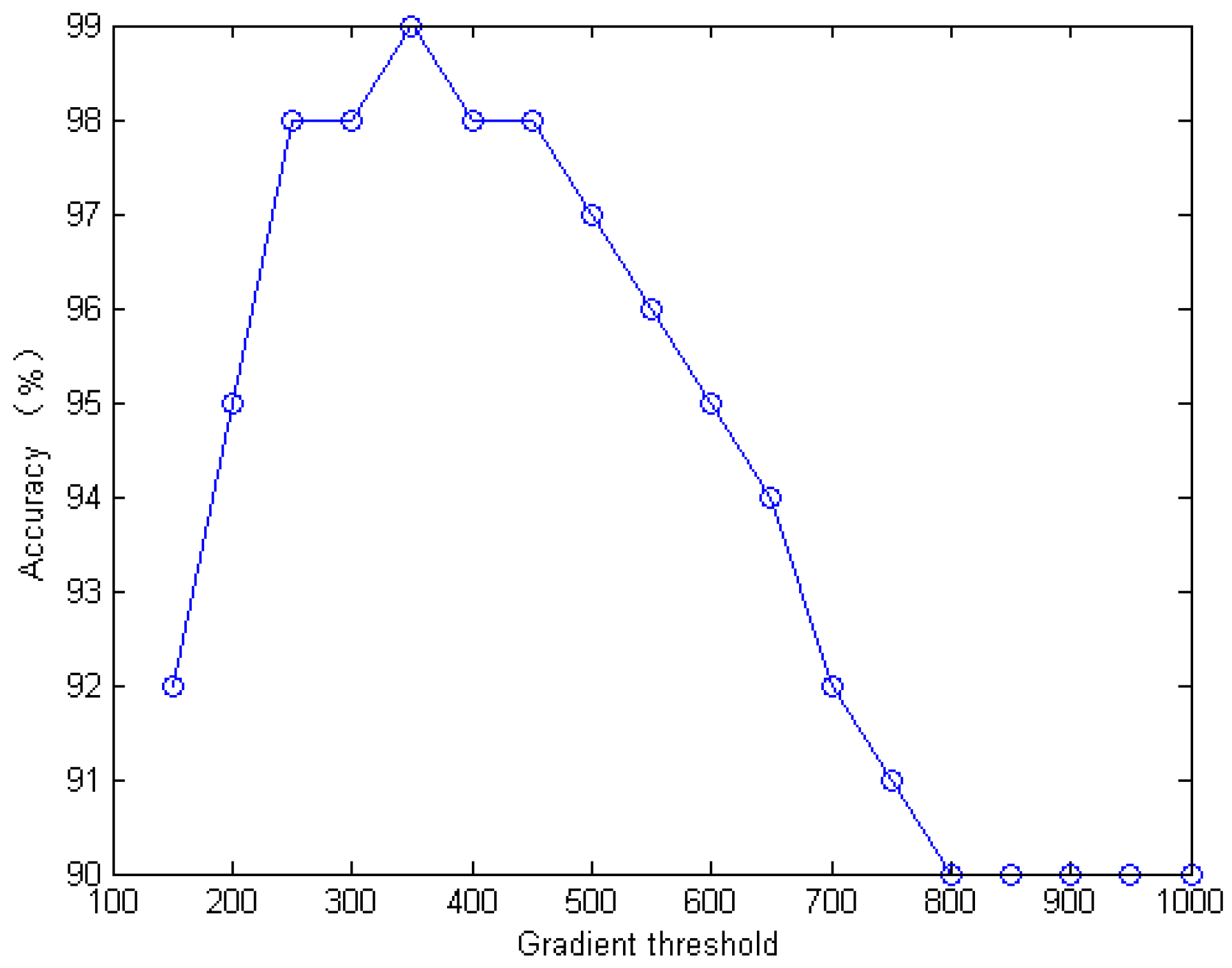

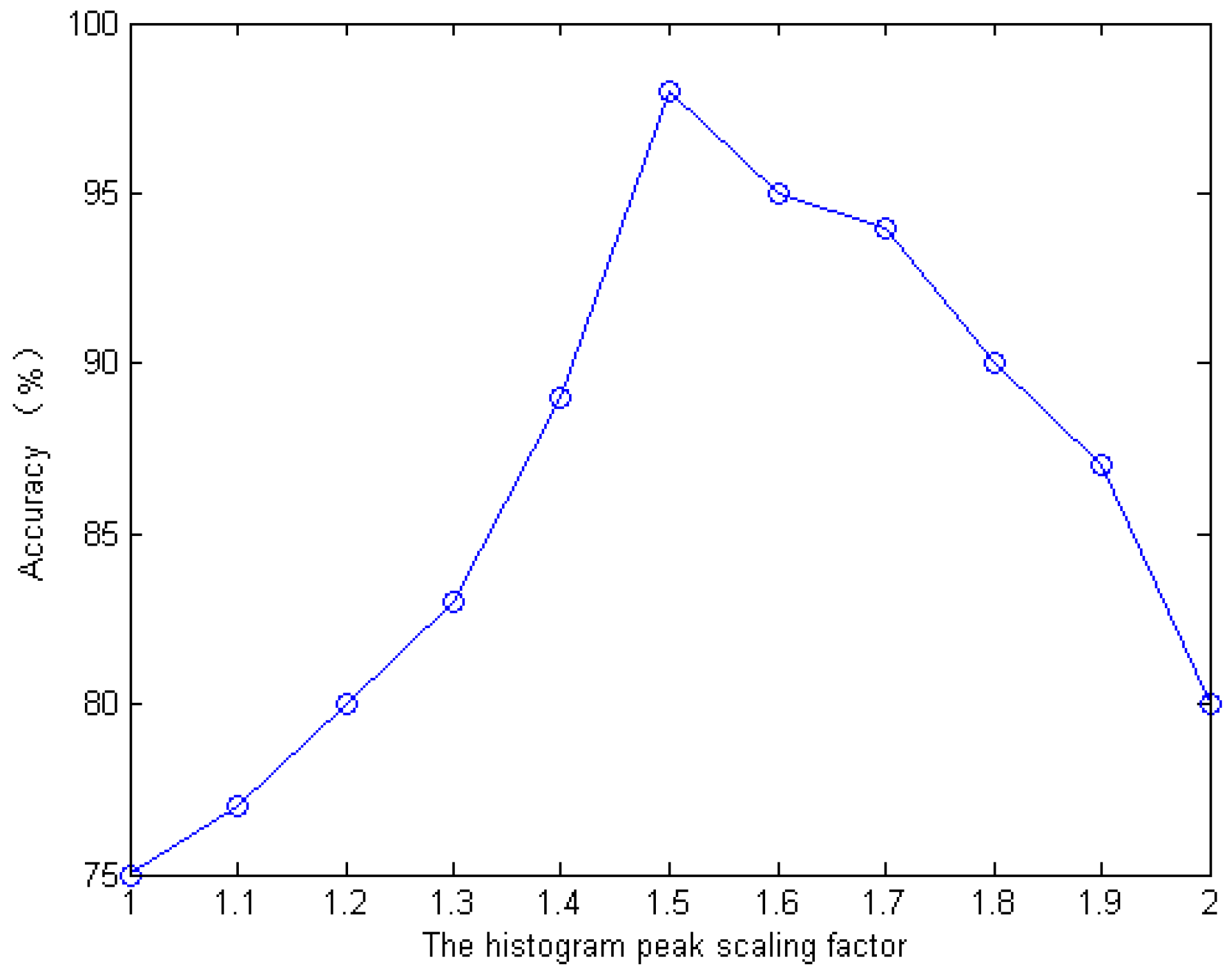

3.1.2. Parameter Settings

3.1.3. Evaluation Metrics

3.2. Results

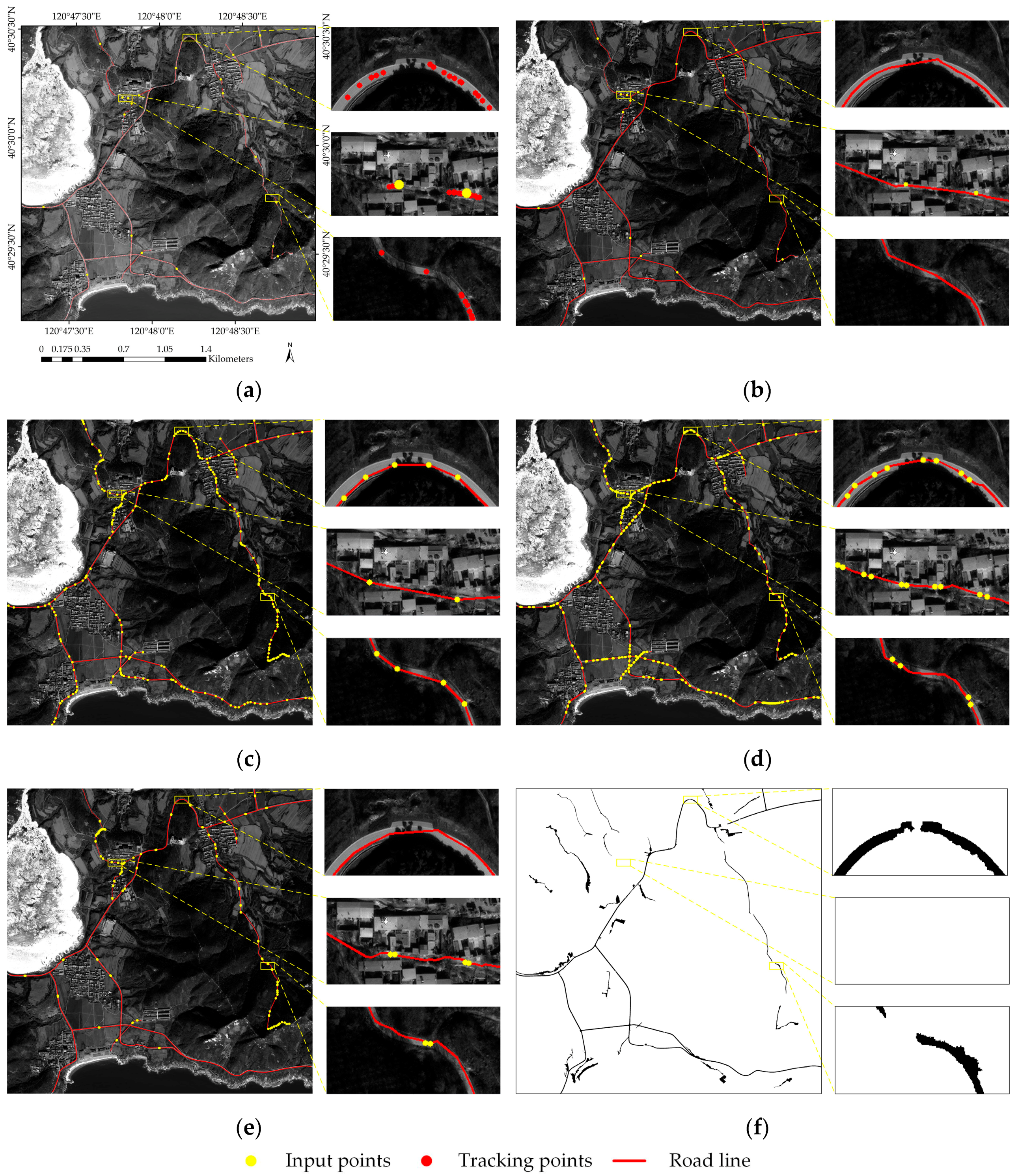

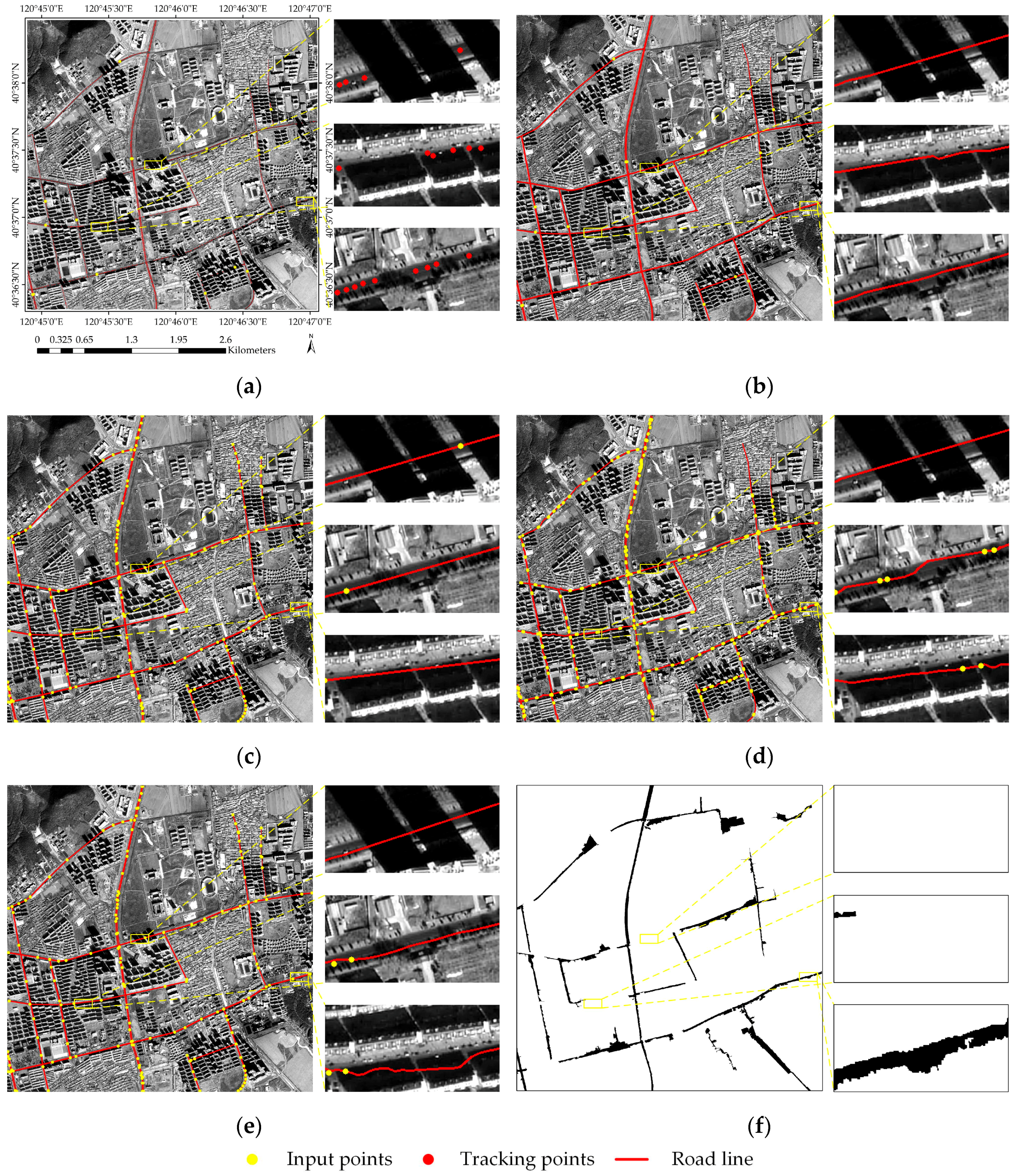

3.2.1. Pleiades Satellite Data

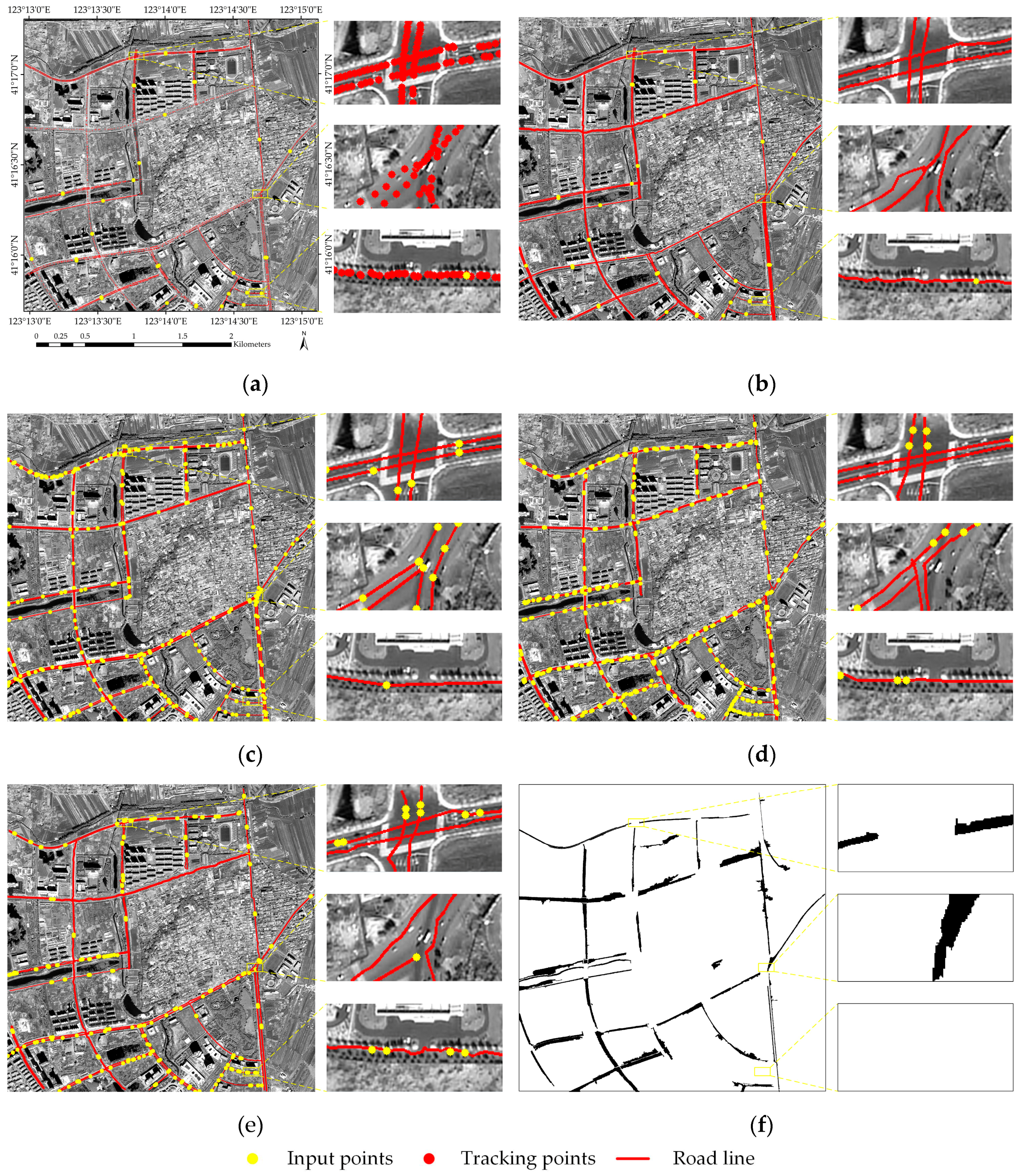

3.2.2. GF2 Data

3.3. Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Method | Proposed Method | ERDAS Method | eCognition Method | T-shaped Method | Rectangle Method |
|---|---|---|---|---|---|
| Completeness (%) | 98.34 | 98.10 | 86.84 | 97.74 | 98.36 |
| Correctness (%) | 98.69 | 97.42 | 51.80 | 97.84 | 98.54 |
| Quality (%) | 97.04 | 95.62 | 48.03 | 95.37 | 96.95 |
| Seed Points | 21 | 237 | 0 | 364 | 144 |
| Time (s) | 1089 | 2396 | 2038 | 3584 | 1741 |

| Method | Proposed Method | ERDAS Method | eCognition Method | T-shaped Method | Rectangle Method |
|---|---|---|---|---|---|
| Completeness (%) | 98.44 | 97.56 | 62.63 | 97.16 | 98.36 |
| Correctness (%) | 98.97 | 98.69 | 40.86 | 98.51 | 98.48 |
| Quality (%) | 97.44 | 96.31 | 32.84 | 95.75 | 96.89 |
| Seed Points | 16 | 171 | 0 | 394 | 120 |
| Time (s) | 800 | 1257 | 1479 | 2547 | 1039 |

| Method | Proposed Method | ERDAS Method | eCognition Method | T-shaped Method | Rectangle Method |
|---|---|---|---|---|---|
| Completeness (%) | 98.01 | 97.33 | 52.67 | 97.12 | 97.29 |
| Correctness (%) | 98.40 | 97.38 | 52.83 | 97.68 | 97.87 |
| Quality (%) | 96.57 | 94.85 | 35.83 | 94.93 | 95.27 |
| Seed Points | 33 | 277 | 0 | 488 | 204 |
| Time (s) | 1650 | 2493 | 2530 | 3857 | 2236 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dai, J.; Zhu, T.; Zhang, Y.; Ma, R.; Li, W. Lane-Level Road Extraction from High-Resolution Optical Satellite Images. Remote Sens. 2019, 11, 2672. https://doi.org/10.3390/rs11222672
