Daytime Rainy Cloud Detection and Convective Precipitation Delineation Based on a Deep Neural Network Method Using GOES-16 ABI Images

Abstract

1. Introduction

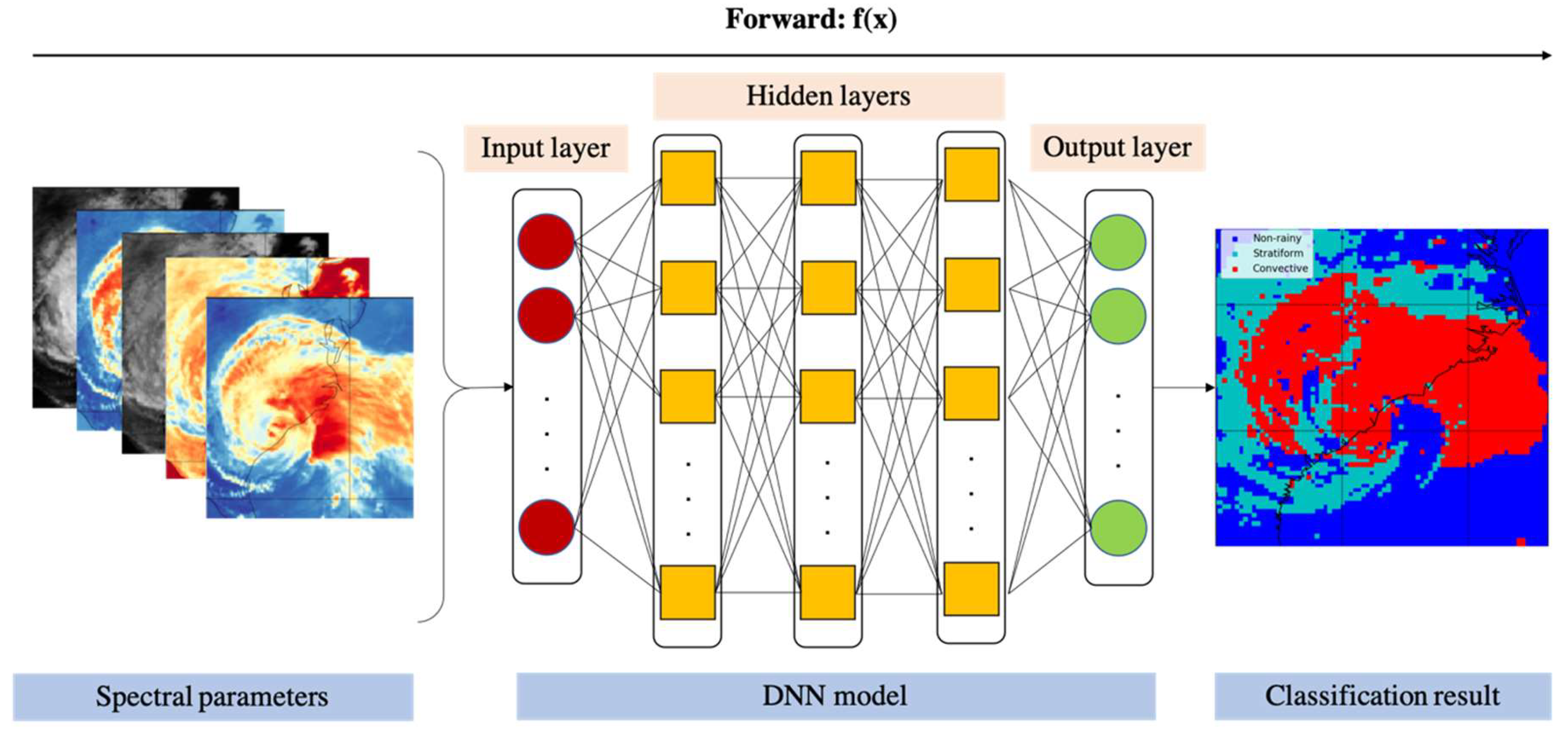

2. Data and Spectral Parameters

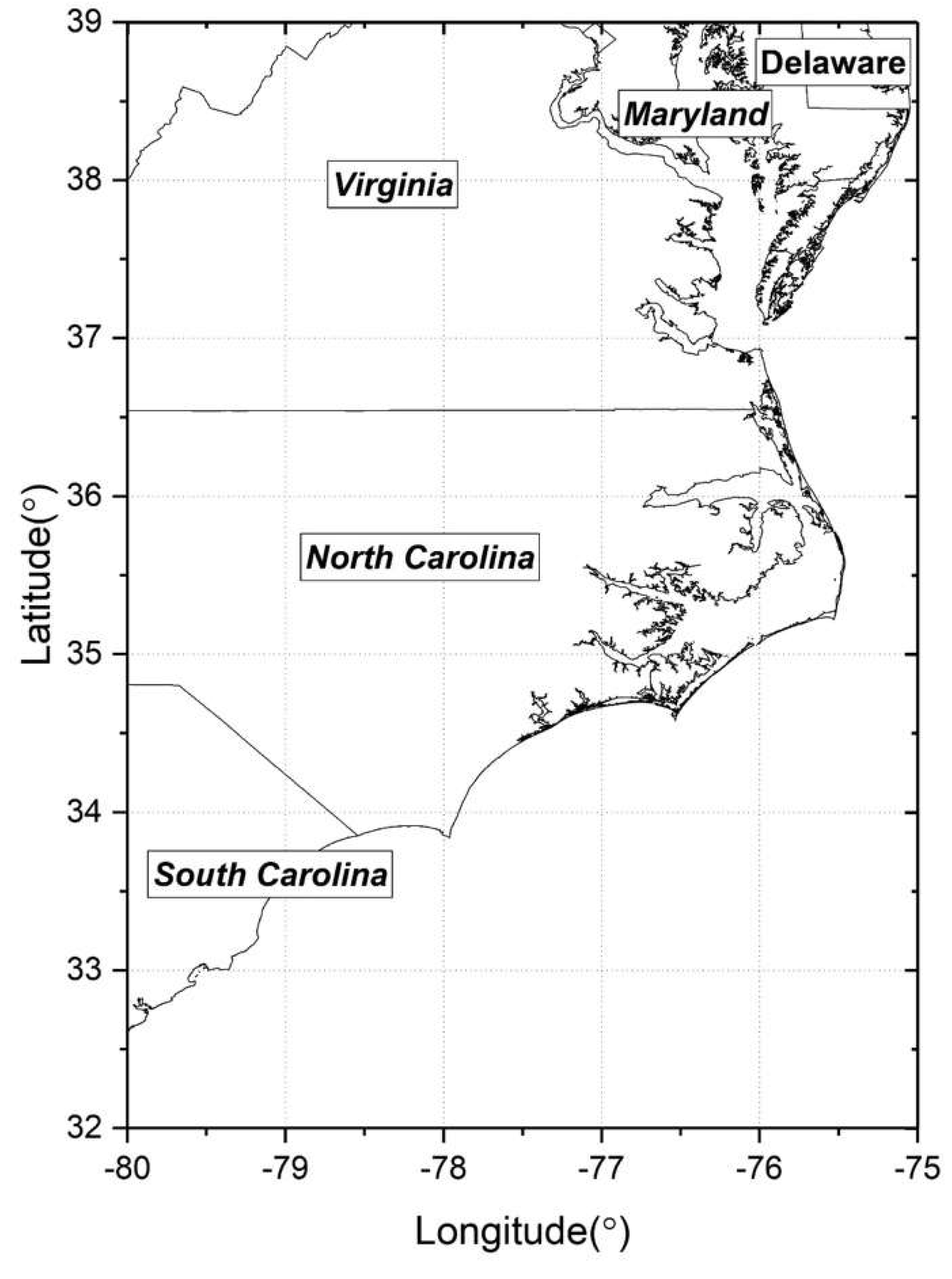

2.1. Study Area

2.2. GPM-IMERG Precipitation Estimates and Gauge Data

2.3. GOES-16 ABI Data

2.4. Spectral Parameters

3. Methodology and Models

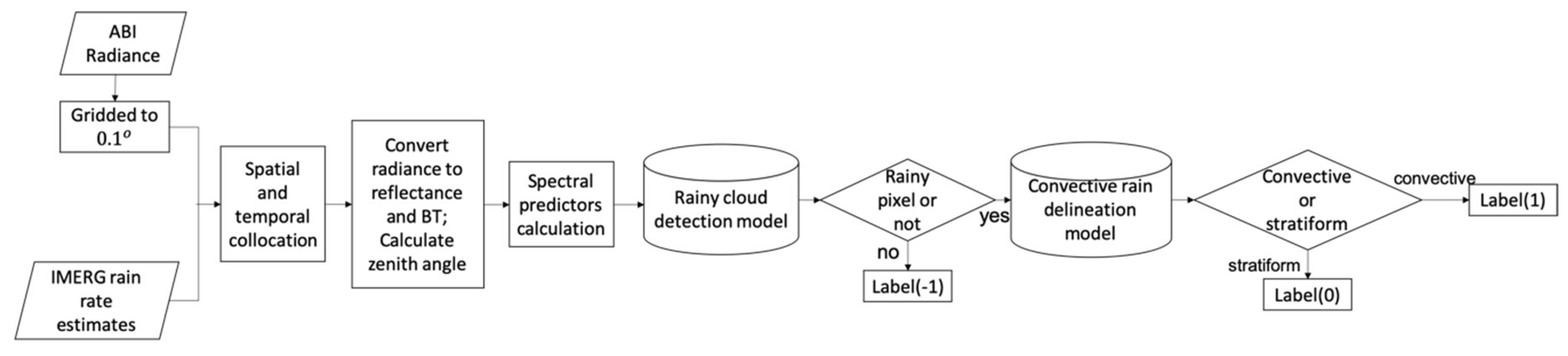

3.1. Data Processing

- Data preprocessing. First, the 16 bands of ABI radiance are gridded to a spatial resolution of 0.1°, the same as that of the IMERG precipitation estimates. Second, the six ABI scans whose scan times fall within one half-hourly IMERG time step are averaged, which completes the spatial and temporal collocation of the two datasets. Third, reflectance (Ref), brightness temperature (BT), and sun zenith angle (z) are calculated. Reflectance is derived as follows:

  $$\mathrm{Ref}_\lambda = \kappa_\lambda L_\lambda \tag{2}$$

  where λ is the central wavelength of the channel, $L_\lambda$ is the radiance value, and $\kappa_\lambda$ is the reflectance conversion factor. BT is derived from Equation (3):

  $$\mathrm{BT} = \frac{fk_2 / \ln\left(fk_1 / L_\lambda + 1\right) - bc_1}{bc_2} \tag{3}$$

  where $fk_1$, $fk_2$, $bc_1$, and $bc_2$ are the Planck function constants. The sun zenith angle (z) is calculated from the variables in the ABI data file and the pixel locations.

- Spectral parameters are calculated and normalized.

- Each set of parameters is labeled as a rainy or non-rainy cloud according to the associated IMERG rain rate (r): if r < 0.1 mm/hr, the sample is classified as non-rainy; otherwise, it is labeled as rainy. Rainy cloud detection models are then built with DNNs, which learn to separate rainy samples from non-rainy ones automatically.

- After rainy and non-rainy samples are distinguished, the rainy samples are split into convective and stratiform rain clouds based on their rain rates. The threshold that discriminates convective from stratiform rain is derived from the Z–r relation [49]:

  $$Z = 300\, r^{1.4} \tag{4}$$

  $$\mathrm{dBZ} = 10 \log_{10} Z \tag{5}$$

  where Z (mm⁶/m³) is the reflectivity factor of the radar, r (mm/hr) is the corresponding rain rate, and dBZ is the decibel relative to Z. Lazri [18] uses dBZ = 40 as the threshold for convective precipitation; combining Equations (4) and (5) then gives $r = (10^{40/10}/300)^{1/1.4} \approx 12.24$ mm/hr. Because precipitation rates measured by meteorological radar tend to be overestimated owing to anomalous propagation of the radar beam [50], a lower threshold for r is more reasonable. This research therefore uses r = 10 mm/hr as the final threshold, since it is frequently adopted and has worked well in previous studies [20,51,52].

- Convective precipitation delineation models are built with DNNs, using the training samples labeled convective or stratiform in the previous step.

- Accuracy is evaluated using validation data and case studies.
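The collocation and labeling rules in the steps above can be sketched in Python as follows. This is a minimal sketch, not the authors' code: it assumes the ABI scans have already been gridded to 0.1° and flattened into equal-length lists, and it assumes the Z–r relation Z = 300 r^1.4 with dBZ = 10 log10 Z, the form that reproduces the 12.24 mm/hr value quoted for dBZ = 40. All function and constant names are hypothetical, and assigning r = 10 mm/hr exactly to the convective class is an assumption, since the text does not state how the boundary is handled.

```python
from statistics import fmean

RAIN_THRESHOLD = 0.1         # mm/hr; IMERG pixels below this are non-rainy
CONVECTIVE_THRESHOLD = 10.0  # mm/hr; final convective/stratiform threshold


def average_scans(scans: list[list[float]]) -> list[float]:
    """Average co-gridded ABI scans (one list per scan) within one IMERG time step."""
    if len({len(scan) for scan in scans}) != 1:
        raise ValueError("all scans must cover the same 0.1° grid")
    # zip(*scans) yields, for each pixel, its values across all scans
    return [fmean(pixel) for pixel in zip(*scans)]


def rain_rate_from_dbz(dbz: float, a: float = 300.0, b: float = 1.4) -> float:
    """Invert the assumed Z = a * r**b, with Z = 10**(dBZ / 10); returns r in mm/hr."""
    z = 10.0 ** (dbz / 10.0)
    return (z / a) ** (1.0 / b)


def label_sample(r: float) -> str:
    """Two-stage label from the IMERG rain rate r (mm/hr)."""
    if r < RAIN_THRESHOLD:
        return "non-rainy"
    # Assumption: r exactly equal to the threshold counts as convective.
    return "convective" if r >= CONVECTIVE_THRESHOLD else "stratiform"


print(round(rain_rate_from_dbz(40.0), 2))  # 12.24
print([label_sample(r) for r in (0.0, 0.1, 9.9, 10.0, 25.0)])
# ['non-rainy', 'stratiform', 'stratiform', 'convective', 'convective']
```

Computing the radar-based 12.24 mm/hr value rather than hard-coding it makes explicit how far the adopted 10 mm/hr threshold lowers the cutoff.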

3.2. Model Development

4. Model Performance Evaluation

4.1. Evaluation Metrics

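- The probability of detection (POD). The POD is the fraction of IMERG rainy/convective samples that the model correctly recognizes as rainy/convective:

  $$\mathrm{POD} = \frac{a}{a + c}$$

- The probability of false detection (POFD). The POFD is the fraction of IMERG non-rainy/stratiform samples that the model incorrectly predicts as rainy/convective:

  $$\mathrm{POFD} = \frac{b}{b + d}$$

- The false alarm ratio (FAR). The FAR is the ratio of the number of pixels incorrectly detected as rainy/convective to the total number of pixels recognized as rainy/convective by the model:

  $$\mathrm{FAR} = \frac{b}{a + b}$$

- The bias. The bias indicates whether the model overestimates (bias > 1) or underestimates (bias < 1) the number of rainy/convective pixels relative to IMERG:

  $$\mathrm{Bias} = \frac{a + b}{a + c}$$

- The critical success index (CSI). The CSI is the ratio of the number of rainy/convective pixels correctly predicted by the model to the total number of pixels detected as rainy/convective by either IMERG or the model:

  $$\mathrm{CSI} = \frac{a}{a + b + c}$$

- The model accuracy (MA). The MA is the probability that the model correctly predicts both rainy/convective and non-rainy/stratiform pixels:

  $$\mathrm{MA} = \frac{a + d}{a + b + c + d}$$

  where the contingency parameters a, b, c, and d are summarized in Table 4: a is the number of pixels classified as rainy/convective by both the model and IMERG, b the number classified as rainy/convective by the model but not by IMERG, c the number classified as rainy/convective by IMERG but not by the model, and d the number classified as rainy/convective by neither.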
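All six scores can be computed from the four cells of the contingency table (Table 4). The Python sketch below uses the standard definitions POD = a/(a+c), POFD = b/(b+d), FAR = b/(a+b), Bias = (a+b)/(a+c), CSI = a/(a+b+c), and MA = (a+d)/(a+b+c+d), where a counts hits, b false alarms, c misses, and d correct negatives relative to IMERG. The names `Contingency`, `contingency`, and `scores` are hypothetical, and per-pixel labels are assumed to be booleans (True for rainy/convective).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contingency:
    a: int  # hits: rainy/convective in both the model and IMERG
    b: int  # false alarms: rainy/convective in the model only
    c: int  # misses: rainy/convective in IMERG only
    d: int  # correct negatives: rainy/convective in neither


def contingency(predicted: list[bool], observed: list[bool]) -> Contingency:
    """Count the four cells from per-pixel model predictions and IMERG labels."""
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must have the same length")
    pairs = list(zip(predicted, observed))
    return Contingency(
        a=sum(1 for p, o in pairs if p and o),
        b=sum(1 for p, o in pairs if p and not o),
        c=sum(1 for p, o in pairs if not p and o),
        d=sum(1 for p, o in pairs if not p and not o),
    )


def scores(t: Contingency) -> dict[str, float]:
    """POD, POFD, FAR, Bias, CSI, and MA; assumes every denominator is non-zero."""
    return {
        "POD": t.a / (t.a + t.c),
        "POFD": t.b / (t.b + t.d),
        "FAR": t.b / (t.a + t.b),
        "Bias": (t.a + t.b) / (t.a + t.c),
        "CSI": t.a / (t.a + t.b + t.c),
        "MA": (t.a + t.d) / (t.a + t.b + t.c + t.d),
    }


table = contingency([True, True, False, False], [True, False, True, False])
print(table, scores(Contingency(a=40, b=10, c=10, d=40)))
```

Because POD/(1 − FAR) = (a + b)/(a + c), the bias follows directly from POD and FAR. For example, the DNN row of the first results table gives 0.86/(1 − 0.20) ≈ 1.08, which agrees with the reported 1.07 to within the rounding of the inputs.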

4.2. Comparison of DNN, SVM, and RF Performance on Testing Data

5. Case Study

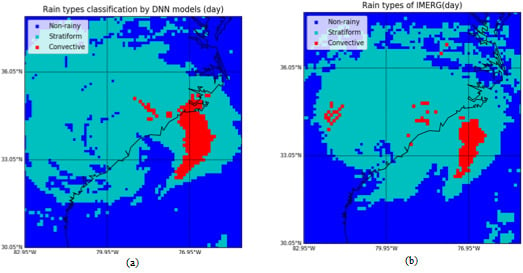

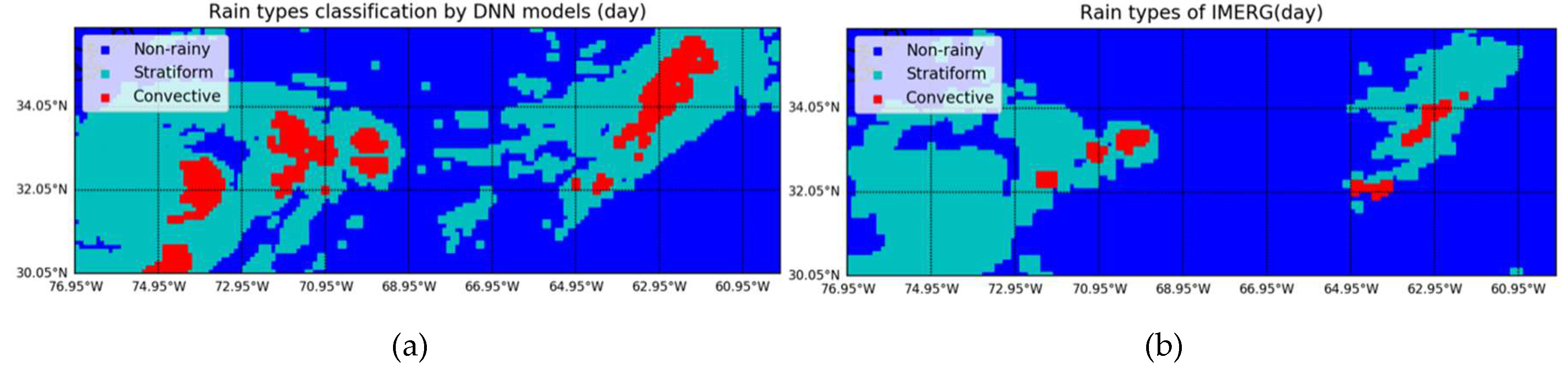

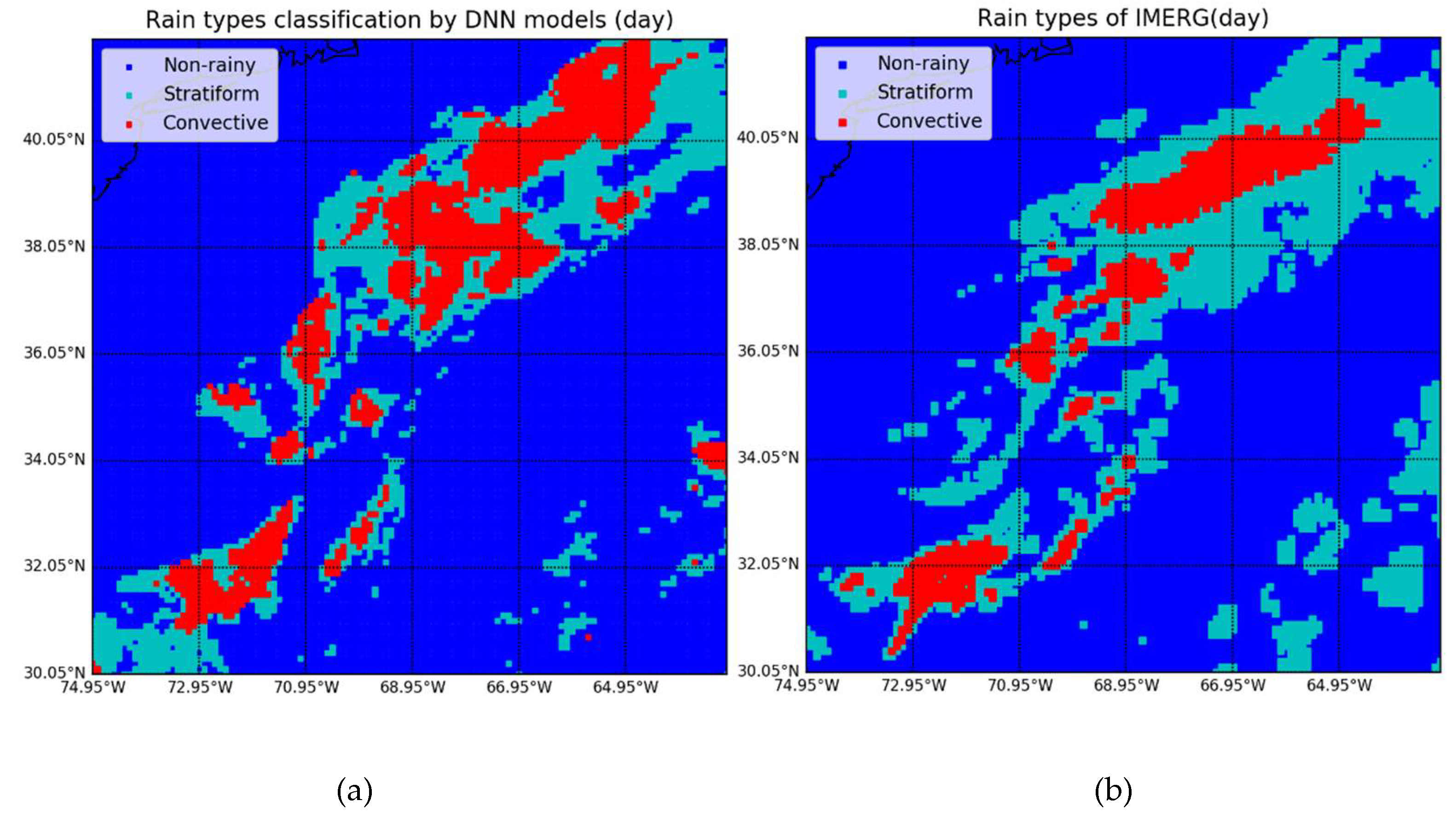

5.1. Normal Precipitation Events

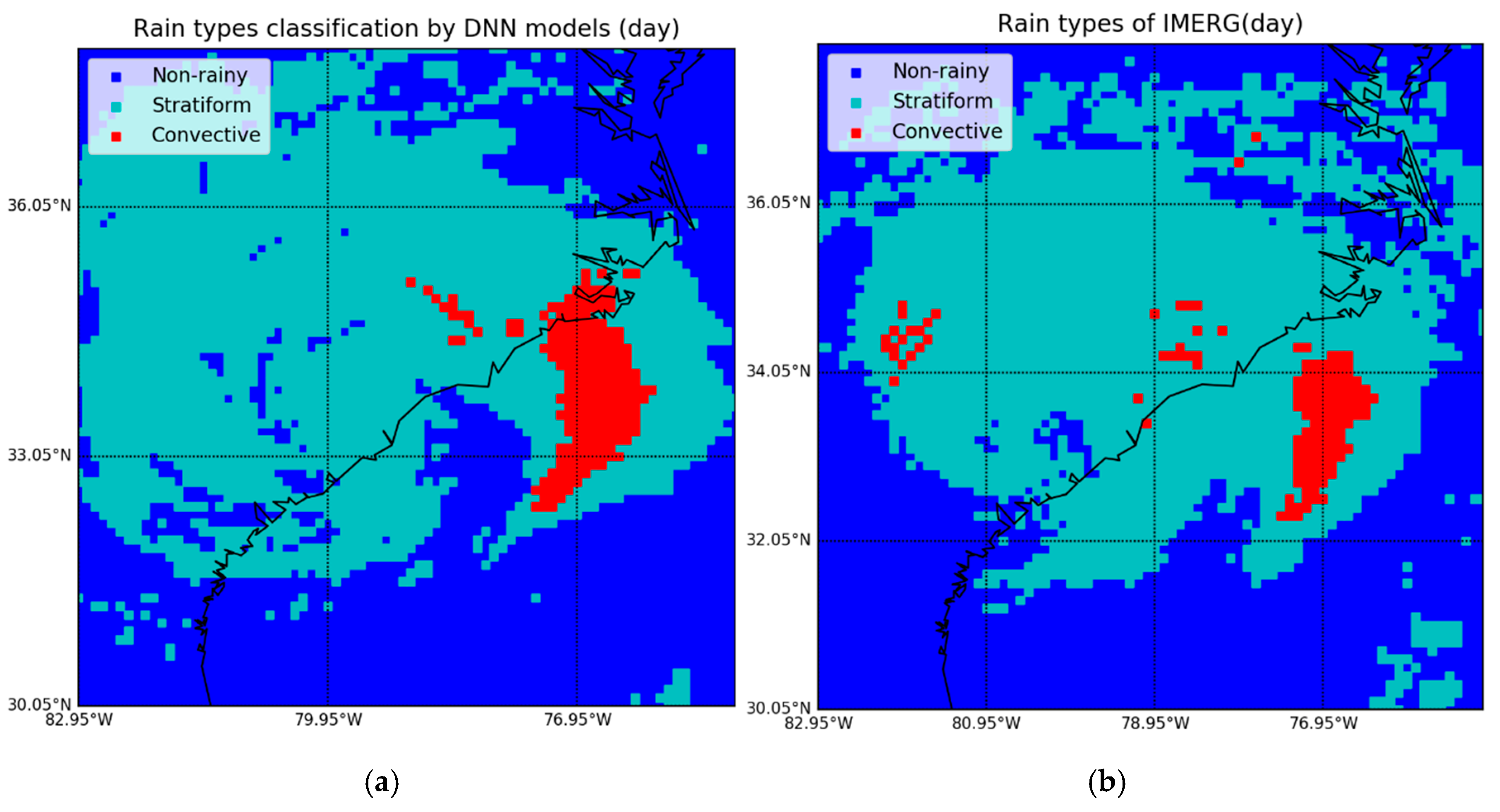

5.2. Hurricane Florence

6. Discussion

7. Conclusions

- In the detection of rainy areas, the system provides reliable results for both normal precipitation events and precipitation extremes such as hurricanes, with a tendency toward overestimation;

- The DNN achieves better performance than the two other ML methods (SVM and RF), with higher accuracy scores (CSI and MA) on the testing data;

- The system performs better over the ocean than over land;

- This study contributes to combining the advantages of AI methodology with the modeling of atmospheric phenomena, a relatively new domain that needs further exploration. More specifically, the system combines a DNN classifier with the spectral features of rainy clouds to investigate precipitation properties. This research is an essential step toward estimating precipitation rates.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ABI | Advanced Baseline Imager |
| AVHRR | Advanced Very High Resolution Radiometer |
| AVNNET | Averaged neural networks |
| BP | Back-propagation |
| CCD | Cold cloud duration |
| CCPD | Cold cloud phase duration |
| CMORPH | Climate Prediction Center Morphing Technique |
| CONUS | Contiguous U.S. |
| CSI | Critical success index |
| CTT | Cloud-top temperature |
| dBZ | Decibel relative to reflectivity factor of the radar |
| DL | Deep learning |
| DNN | Deep neural network |
| FAR | False alarm ratio |
| GBRT | Gradient-boosted regression trees |
| IMERG | Integrated Multi-Satellite Retrievals for Global Precipitation Measurement |
| LWP | Liquid water path |
| MA | Model accuracy |
| ML | Machine learning |
| MLP | Multilayer perceptron network |
| NIR | Near-infrared |
| NNET | Neural networks |
| PMW | Passive microwave |
| POD | Probability of detection |
| POFD | Probability of false detection |
| QPE | Quantitative precipitation estimation |
| TRMM | Tropical Rainfall Measuring Mission |

References

1. Mason, D.; Speck, R.; Devereux, B.; Schumann, G.-P.; Neal, J.; Bates, P. Flood Detection in Urban Areas Using TerraSAR-X. IEEE Trans. Geosci. Remote Sens. 2009, 48, 882–894.

2. Keefer, D.K.; Wilson, R.C.; Mark, R.K.; Brabb, E.E.; Brown, W.M.; Ellen, S.D.; Harp, E.L.; Wieczorek, G.F.; Alger, C.S.; Zatkin, R.S. Real-Time Landslide Warning During Heavy Rainfall. Science 1987, 238, 921–925.

3. Alfieri, L.; Thielen, J. A European precipitation index for extreme rain-storm and flash flood early warning. Meteorol. Appl. 2015, 22, 3–13.

4. Valipour, M. Optimization of neural networks for precipitation analysis in a humid region to detect drought and wet year alarms. Meteorol. Appl. 2016, 23, 1–100.

5. O’Gorman, P.A. Precipitation Extremes under Climate Change. Curr. Clim. Chang. Rep. 2015, 1, 49–59.

6. Wang, Z. The Impact of Extreme Precipitation on the Vulnerability of a Country. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2018; Volume 170, p. 032089.

7. Skofronick-Jackson, G.; Petersen, W.A.; Berg, W.; Kidd, C.; Stocker, E.F.; Kirschbaum, D.B.; Kakar, R.; Braun, S.A.; Huffman, G.J.; Iguchi, T.; et al. The Global Precipitation Measurement (GPM) Mission for Science and Society. Bull. Am. Meteorol. Soc. 2017, 98, 1679–1695.

8. Karbalaee, N.; Hsu, K.; Sorooshian, S.; Braithwaite, D. Bias adjustment of infrared-based rainfall estimation using Passive Microwave satellite rainfall data. J. Geophys. Res. Atmos. 2017, 122, 3859–3876.

9. Hong, Y.; Hsu, K.-L.; Sorooshian, S.; Gao, X. Precipitation Estimation from Remotely Sensed Imagery Using an Artificial Neural Network Cloud Classification System. J. Appl. Meteorol. 2004, 43, 1834–1853.

10. Thies, B.; Nauss, T.; Bendix, J. Discriminating raining from non-raining clouds at mid-latitudes using Meteosat Second Generation daytime data. Atmos. Chem. Phys. Discuss. 2008, 8, 2341–2349.

11. Huffman, G.J.; Bolvin, D.T.; Nelkin, E.J.; Wolff, D.B.; Adler, R.F.; Gu, G.; Hong, Y.; Bowman, K.P.; Stocker, E.F. The TRMM Multisatellite Precipitation Analysis (TMPA): Quasi-Global, Multiyear, Combined-Sensor Precipitation Estimates at Fine Scales. J. Hydrometeorol. 2007, 8, 38–55.

12. Huffman, G.J.; Bolvin, D.T.; Nelkin, E.J. Integrated Multi-satellitE Retrievals for GPM (IMERG) technical documentation. NASA/GSFC Code 2015, 612, 47.

13. Joyce, R.J.; Janowiak, J.E.; Arkin, P.A.; Xie, P. CMORPH: A Method that Produces Global Precipitation Estimates from Passive Microwave and Infrared Data at High Spatial and Temporal Resolution. J. Hydrometeorol. 2004, 5, 487–503.

14. Jensenius, J. Cloud Classification. 2017. Available online: https://www.weather.gov/lmk/cloud_classification (accessed on 17 April 2019).

15. World Meteorological Organization. International Cloud Atlas. Available online: https://cloudatlas.wmo.int/clouds-supplementary-features-praecipitatio.html (accessed on 10 December 2019).

16. Donald, D. What Type of Clouds Are Rain Clouds? 2018. Available online: https://sciencing.com/type-clouds-rain-clouds-8261472.html (accessed on 17 April 2019).

17. Milford, J.; Dugdale, G. Estimation of Rainfall Using Geostationary Satellite Data. In Applications of Remote Sensing in Agriculture; Elsevier BV: Amsterdam, The Netherlands, 1990; pp. 97–110.

18. Lazri, M.; Ameur, S.; Brucker, J.M.; Ouallouche, F. Convective rainfall estimation from MSG/SEVIRI data based on different development phase duration of convective systems (growth phase and decay phase). Atmos. Res. 2014, 147, 38–50.

19. Arai, K. Thresholding Based Method for Rainy Cloud Detection with NOAA/AVHRR Data by Means of Jacobi Itteration Method. Int. J. Adv. Res. Artif. Intell. 2016, 5, 21–27.

20. Tebbi, M.A.; Haddad, B. Artificial intelligence systems for rainy areas detection and convective cells’ delineation for the south shore of Mediterranean Sea during day and nighttime using MSG satellite images. Atmos. Res. 2016, 178, 380–392.

21. Mohia, Y.; Ameur, S.; Lazri, M.; Brucker, J.M. Combination of Spectral and Textural Features in the MSG Satellite Remote Sensing Images for Classifying Rainy Area into Different Classes. J. Indian Soc. Remote Sens. 2017, 45, 759–771.

22. Yang, C.; Yu, M.; Li, Y.; Hu, F.; Jiang, Y.; Liu, Q.; Sha, D.; Xu, M.; Gu, J. Big Earth data analytics: A survey. Big Earth Data 2019, 3, 83–107.

23. McGovern, A.; Elmore, K.L.; Gagne, D.J.; Haupt, S.E.; Karstens, C.D.; Lagerquist, R.; Smith, T.; Williams, J.K. Using Artificial Intelligence to Improve Real-Time Decision-Making for High-Impact Weather. Bull. Am. Meteorol. Soc. 2017, 98, 2073–2090.

24. Engström, A.; Bender, F.A.-M.; Charlson, R.J.; Wood, R. The nonlinear relationship between albedo and cloud fraction on near-global, monthly mean scale in observations and in the CMIP5 model ensemble. Geophys. Res. Lett. 2015, 42, 9571–9578.

25. Meyer, H.; Kühnlein, M.; Appelhans, T.; Nauss, T. Comparison of four machine learning algorithms for their applicability in satellite-based optical rainfall retrievals. Atmos. Res. 2016, 169, 424–433.

26. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

27. Liu, Q.; Chiu, L.S.; Hao, X.; Yang, C. Characteristic of TMPA Rainfall; American Association of Geographers Annual Meeting, Washington, DC, USA, 2019.

28. Huffman, G.J.; Bolvin, D.T.; Braithwaite, D.; Hsu, K.; Joyce, R.; Xie, P.; Yoo, S.H. NASA Global Precipitation Measurement (GPM) Integrated Multi-satellitE Retrievals for GPM (IMERG). 2018. Available online: https://pmm.nasa.gov/sites/default/files/document_files/IMERG_ATBD_V5.2_0.pdf (accessed on 4 October 2019).

29. Gaona, M.F.R.; Overeem, A.; Brasjen, A.M.; Meirink, J.F.; Leijnse, H.; Uijlenhoet, R. Evaluation of Rainfall Products Derived from Satellites and Microwave Links for The Netherlands. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6849–6859.

30. Wei, G.; Lü, H.; Crow, W.T.; Zhu, Y.; Wang, J.; Su, J. Comprehensive Evaluation of GPM-IMERG, CMORPH, and TMPA Precipitation Products with Gauged Rainfall over Mainland China. Adv. Meteorol. 2018, 2018, 1–18.

31. Benz, A.; Chapel, J.; Dimercurio, A., Jr.; Birckenstaedt, B.; Tillier, C.; Nilsson, W., III; Colon, J.; Dence, R.; Hansen, D., Jr.; Campbell, P. GOES-R Series Documents. 2018. Available online: https://www.goes-r.gov/downloads/resources/documents/GOES-RSeriesDataBook.pdf (accessed on 3 August 2019).

32. GOES-R Series Program Office. Goddard Space Flight Center. 2019. GOES-R Series Data Book. Available online: https://www.goes-r.gov/downloads/resources/documents/GOES-RSeriesDataBook.pdf (accessed on 10 December 2019).

33. Cotton, W.; Bryan, G.; Heever, S.V.D. Storm and Cloud Dynamics; Academic Press: Cambridge, MA, USA, 2010; Volume 99.

34. Reid, J.S.; Hobbs, P.V.; Rangno, A.L.; Hegg, D.A. Relationships between cloud droplet effective radius, liquid water content, and droplet concentration for warm clouds in Brazil embedded in biomass smoke. J. Geophys. Res. Space Phys. 1999, 104, 6145–6153.

35. Chen, F.; Sheng, S.; Bao, Z.; Wen, H.; Hua, L.; Paul, N.J.; Fu, Y. Precipitation clouds delineation scheme in tropical cyclones and its validation using precipitation and cloud parameter datasets from TRMM. J. Appl. Meteorol. Clim. 2018, 57, 821–836.

36. Han, Q.; Rossow, W.B.; Lacis, A.A. Near-Global Survey of Effective Droplet Radii in Liquid Water Clouds Using ISCCP Data. J. Clim. 1994, 7, 465–497.

37. Nakajima, T.Y.; Nakajima, T. Wide-Area Determination of Cloud Microphysical Properties from NOAA AVHRR Measurements for FIRE and ASTEX Regions. J. Atmos. Sci. 1995, 52, 4043–4059.

38. GOES-R ABI Fact Sheet Band 6 ("Cloud Particle Size" near-infrared). Available online: http://cimss.ssec.wisc.edu/goes/OCLOFactSheetPDFs/ABIQuickGuide_Band06.pdf (accessed on 4 October 2019).

39. Vijaykumar, P.; Abhilash, S.; Santhosh, K.R.; Mapes, B.E.; Kumar, C.S.; Hu, I.K. Distribution of cloudiness and categorization of rainfall types based on INSAT IR brightness temperatures over Indian subcontinent and adjoining oceanic region during south west monsoon season. J. Atmos. Solar Terr. Phys. 2017, 161, 76–82.

40. Scofield, R.A. The NESDIS Operational Convective Precipitation-Estimation Technique. Mon. Weather Rev. 1987, 115, 1773–1793.

41. Giannakos, A.; Feidas, H. Classification of convective and stratiform rain based on the spectral and textural features of Meteosat Second Generation infrared data. In Theoretical and Applied Climatology; Springer: New York, NY, USA, 2013; Volume 113, pp. 495–510.

42. Feidas, H.; Giannakos, A. Classifying convective and stratiform rain using multispectral infrared Meteosat Second Generation satellite data. In Theoretical and Applied Climatology; Springer: New York, NY, USA, 2012; Volume 108, pp. 613–630.

43. Schmit, T.J.; Griffith, P.; Gunshor, M.M.; Daniels, J.M.; Goodman, S.J.; Lebair, W.J. A Closer Look at the ABI on the GOES-R Series. Bull. Am. Meteorol. Soc. 2017, 98, 681–698.

44. Thies, B.; Nauss, T.; Bendix, J. Precipitation process and rainfall intensity differentiation using Meteosat Second Generation Spinning Enhanced Visible and Infrared Imager data. J. Geophys. Res. Space Phys. 2008, 113.

45. Baum, B.A.; Soulen, P.F.; Strabala, K.I.; Ackerman, S.A.; Menzel, W.P.; King, M.D.; Yang, P. Remote sensing of cloud properties using MODIS airborne simulator imagery during SUCCESS: 2. Cloud thermodynamic phase. J. Geophys. Res. Space Phys. 2000, 105, 11781–11792.

46. Baum, B.A.; Platnick, S. Introduction to MODIS cloud products. In Earth Science Satellite Remote Sensing: Science and Instruments; Qu, J.J., Gao, W., Kafatos, M., Murphy, R.E., Salomonson, V.V., Eds.; Springer: New York, NY, USA, 2006.

47. Kuligowski, R.J. GOES-R Advanced Baseline Imager (ABI) Algorithm Theoretical Basis Document for Rainfall Rate (QPE). 2010. Available online: https://www.star.nesdis.noaa.gov/goesr/documents/ATBDs/Baseline/ATBD_GOES-R_Rainrate_v2.6_Oct2013.pdf (accessed on 4 October 2019).

48. Hartmann, D.L. Global Physical Climatology, 2nd ed.; Academic Press: Cambridge, MA, USA, 1994.

49. Hunter, S.M. WSR-88D radar rainfall estimation: Capabilities, limitations and potential improvements. Natl. Wea. Dig. 1996, 20, 26–38.

50. Islam, T.; Rico-Ramirez, M.A.; Han, D.; Srivastava, P.K. Artificial intelligence techniques for clutter identification with polarimetric radar signatures. Atmos. Res. 2012, 109, 95–113.

51. Baquero, M.; Cruz-Pol, S.; Bringi, V.; Chandrasekar, V. Rain-rate estimate algorithm evaluation and rainfall characterization in tropical environments using 2DVD, rain gauges and TRMM data. In Proceedings of the 25th Anniversary IGARSS 2005 IEEE International Geoscience and Remote Sensing Symposium, Seoul, Korea, 25–29 July 2005; Volume 2, pp. 1146–1149.

52. Maeso, J.; Bringi, V.; Cruz-Pol, S.; Chandrasekar, V. DSD characterization and computations of expected reflectivity using data from a two-dimensional video disdrometer deployed in a tropical environment. In Proceedings of the 25th Anniversary IGARSS 2005 IEEE International Geoscience and Remote Sensing Symposium, Seoul, Korea, 25–29 July 2005; pp. 5073–5076.

53. Qian, Y.; Fan, Y.; Hu, W.; Soong, F.K. On the training aspects of deep neural network (DNN) for parametric TTS synthesis. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 3829–3833.

54. Dahl, G.E.; Sainath, T.N.; Hinton, G.E. Improving deep neural networks for LVCSR using rectified linear units and dropout. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8609–8613.

55. Xu, Y.; Huang, Q.; Wang, W.; Plumbley, M.D. Hierarchical learning for DNN-based acoustic scene classification. arXiv 2016, arXiv:1607.03682.

56. Hinton, G.; Deng, L.; Yu, D.; Dahl, G.; Mohamed, A.R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Kingsbury, B.; et al. Deep neural networks for acoustic modeling in speech recognition. IEEE Signal Process. Mag. 2012, 29, 82–97.

57. Caruana, R.; Niculescu-Mizil, A. An empirical comparison of supervised learning algorithms. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 161–168.

58. Rodgers, E.; Siddalingaiah, H.; Chang, A.T.C.; Wilheit, T. A Statistical Technique for Determining Rainfall over Land Employing Nimbus 6 ESMR Measurements. J. Appl. Meteorol. 1979, 18, 978–991.

59. Berg, W.; Kummerow, C.; Morales, C.A. Differences between East and West Pacific Rainfall Systems. J. Clim. 2002, 15, 3659–3672.

| Band Number | Central Wavelength (μm) | Spatial Resolution at Nadir (km) | κ-Factor (W⁻¹·m²·sr·μm) | Used in Study | Primary Application |
|---|---|---|---|---|---|
| 1 | 0.47 | 1.0 | 1.5177 × 10⁻³ | | Aerosols |
| 2 | 0.64 | 0.5 | 1.8767 × 10⁻³ | √ | Clouds |
| 3 | 0.865 | 1.0 | 3.1988 × 10⁻³ | √ | Vegetation |
| 4 | 1.378 | 2.0 | 8.4828 × 10⁻³ | | Cirrus |
| 5 | 1.61 | 1.0 | 1.26225 × 10⁻² | | Snow/ice discrimination, cloud phase |
| 6 | 2.24 | 2.0 | 3.98109 × 10⁻² | √ | Cloud particle size, snow, cloud phase |
| 7 | 3.9 | 2.0 | - | | Fog, stratus, fire, volcanism |
| 8 | 6.19 | 2.0 | - | √ | Various atmospheric features |
| 9 | 6.95 | 2.0 | - | | Middle-level water vapor features |
| 10 | 7.34 | 2.0 | - | √ | Lower-level water vapor features |
| 11 | 8.5 | 2.0 | - | √ | Cloud-top phase |
| 12 | 9.61 | 2.0 | - | √ | Total column of ozone |
| 13 | 10.35 | 2.0 | - | √ | Clouds |
| 14 | 11.2 | 2.0 | - | √ | Clouds |
| 15 | 12.3 | 2.0 | - | √ | Clouds |
| 16 | 13.3 | 2.0 | - | √ | Air temperature, clouds |
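Equations (2) and (3) convert ABI radiance to reflectance and brightness temperature. The Python sketch below assumes the standard GOES-R forms Ref = κL and BT = (fk2 / ln(fk1/L + 1) − bc1)/bc2, with the κ-factors copied from the table above. The Planck constants in the example are hypothetical placeholders, not real band values; in practice they are read per band from the ABI data file. The `radiance_from_bt` helper is an addition used only to check the conversion by round trip and is not part of the described method.

```python
import math

# κ-factors from the table above for the three reflective bands used in the study
KAPPA = {2: 1.8767e-3, 3: 3.1988e-3, 6: 3.98109e-2}


def reflectance(radiance: float, kappa: float) -> float:
    """Reflectance factor Ref = κ · L (dimensionless)."""
    return kappa * radiance


def brightness_temperature(radiance: float, fk1: float, fk2: float,
                           bc1: float, bc2: float) -> float:
    """Inverse Planck function with band-correction terms bc1, bc2; result in K."""
    return (fk2 / math.log(fk1 / radiance + 1.0) - bc1) / bc2


def radiance_from_bt(bt: float, fk1: float, fk2: float,
                     bc1: float, bc2: float) -> float:
    """Algebraic inverse of brightness_temperature, used for a round-trip check."""
    effective_t = bc1 + bc2 * bt
    return fk1 / (math.exp(fk2 / effective_t) - 1.0)


# Hypothetical Planck constants for illustration only (not a real ABI band).
FK1, FK2, BC1, BC2 = 1.0e4, 1.3e3, 0.5, 0.999

L = radiance_from_bt(250.0, FK1, FK2, BC1, BC2)
print(round(brightness_temperature(L, FK1, FK2, BC1, BC2), 6))  # 250.0
print(reflectance(100.0, KAPPA[2]))
```

The round trip recovers the input temperature, which is a quick way to confirm that the constants are plugged into the right places before processing real scans.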

| Spectral Parameters | Cloud Features Reflected by the Parameter |
|---|---|
| Ref0.64 | Cloud brightness |
| Ref0.865 | Cloud brightness |
| Ref2.24 | Cloud particle size |
| BT10.35 | Cloud particle size, cloud-top temperature |
| ΔBT6.19−10.35 | Cloud-top temperature, convective level |
| ΔBT7.34−12.3 | Cloud-top temperature, convective level |
| ΔBT6.19−7.34 | Cloud height and thickness |
| ΔBT13.3−10.35 | Cloud-top height |
| ΔBT9.61−13.3 | Cloud-top height |
| ΔBT8.5−10.35 | Cloud phase (positive for thick ice clouds, negative for thin low-level water clouds) |
| ΔBT8.5−12.3 | Optical thickness (negative values for thin optical thickness) |
| BT6.19 | Upper-level tropospheric water vapor |
| ΔBT7.34−8.5 | Cloud optical thickness |
| ΔBT7.34−11.2 | Cloud-top temperature and height |
| ΔBT11.2−12.3 | Cloud thickness, particle size |
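The table above defines 15 per-pixel inputs: three reflectances, two brightness temperatures, and ten brightness-temperature differences. The Python sketch below assembles them from values keyed by central wavelength and then rescales them. The text only states that the parameters are normalized, so min-max scaling is an assumption here, as are the function names and the ASCII keys (for example, `dBT6.19-10.35` for ΔBT6.19−10.35).

```python
# (λ1, λ2) pairs for ΔBT = BT(λ1) − BT(λ2), central wavelengths in μm
BT_DIFFERENCES = [
    (6.19, 10.35), (7.34, 12.3), (6.19, 7.34), (13.3, 10.35), (9.61, 13.3),
    (8.5, 10.35), (8.5, 12.3), (7.34, 8.5), (7.34, 11.2), (11.2, 12.3),
]


def spectral_parameters(ref: dict[float, float], bt: dict[float, float]) -> dict[str, float]:
    """Build the 15 parameters of the table for one pixel.

    ref maps central wavelength (μm) to reflectance; bt maps central wavelength to BT (K).
    """
    params = {f"Ref{w}": ref[w] for w in (0.64, 0.865, 2.24)}
    params["BT10.35"] = bt[10.35]
    params["BT6.19"] = bt[6.19]
    for w1, w2 in BT_DIFFERENCES:
        params[f"dBT{w1}-{w2}"] = bt[w1] - bt[w2]
    return params


def min_max_normalize(rows: list[dict[str, float]]) -> list[dict[str, float]]:
    """Scale each parameter to [0, 1] using its minimum and maximum over rows."""
    keys = list(rows[0])
    lo = {k: min(row[k] for row in rows) for k in keys}
    hi = {k: max(row[k] for row in rows) for k in keys}
    return [
        {k: ((row[k] - lo[k]) / (hi[k] - lo[k]) if hi[k] > lo[k] else 0.0) for k in keys}
        for row in rows
    ]


pixel = spectral_parameters(
    ref={0.64: 0.52, 0.865: 0.48, 2.24: 0.21},
    bt={6.19: 221.0, 7.34: 229.0, 8.5: 238.0, 9.61: 236.0,
        10.35: 241.0, 11.2: 240.5, 12.3: 239.0, 13.3: 233.0},
)
print(len(pixel), pixel["dBT6.19-10.35"])  # 15 -20.0
```

For the train/test split by date, the minimum and maximum would normally be taken from the training samples only and then reused for the testing samples, so that the scaling carries no information from the test dates.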

| Training and Validation Samples | Testing Samples |
|---|---|
| 3–5, 17, 22 June | 1, 10, 24 June |
| 4, 7, 30, 31 July | 11, 26 July |
| 2–4, 8–12, 18–20, 22 August | 5–7 August |
| 7–8, 14, 17, 25 September | 4–6 September |

| | By IMERG: Yes (Rainy/Convective) | By IMERG: No (Non-Rainy/Stratiform) |
|---|---|---|
| By models: Yes (Rainy/Convective) | a | b |
| By models: No (Non-Rainy/Stratiform) | c | d |

| Model | POD (Ideal 1) | POFD (Ideal 0) | FAR (Ideal 0) | Bias (Ideal 1) | CSI (Ideal 1) | MA (Ideal 1) |
|---|---|---|---|---|---|---|
| DNN | 0.86 | 0.13 | 0.20 | 1.07 | 0.71 | 0.87 |
| SVM | 0.85 | 0.13 | 0.21 | 1.07 | 0.69 | 0.86 |
| RF | 0.85 | 0.14 | 0.21 | 1.09 | 0.70 | 0.86 |

| Model | POD (Ideal 1) | POFD (Ideal 0) | FAR (Ideal 0) | Bias (Ideal 1) | CSI (Ideal 1) | MA (Ideal 1) |
|---|---|---|---|---|---|---|
| DNN | 0.72 | 0.23 | 0.24 | 0.94 | 0.58 | 0.74 |
| SVM | 0.86 | 0.40 | 0.44 | 1.55 | 0.51 | 0.69 |
| RF | 0.78 | 0.35 | 0.43 | 1.37 | 0.49 | 0.70 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, Q.; Li, Y.; Yu, M.; Chiu, L.S.; Hao, X.; Duffy, D.Q.; Yang, C. Daytime Rainy Cloud Detection and Convective Precipitation Delineation Based on a Deep Neural Network Method Using GOES-16 ABI Images. Remote Sens. 2019, 11, 2555. https://doi.org/10.3390/rs11212555
