Local Region Proposing for Frame-Based Vehicle Detection in Satellite Videos

Abstract

1. Introduction

2. Local Region Proposing

2.1. Semantic Region Extraction

2.2. Searching Possible Locations in Semantic Regions

2.3. Histogram Mixture Model

2.3.1. Histogram Mixture Model for Removing Obvious False Alarms

2.3.2. Estimating Histogram Mixture Model

Algorithm 1. Removing Obvious False Alarms by Histogram Mixture Model (HistMM)
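The following Python sketch illustrates the kind of Bayesian decision that Section 3.2 attributes to the HistMM threshold. It rests on several hypothetical assumptions. Each region proposal is summarized by a pixel-count histogram. The vehicle class and the obvious-false-alarm class are each modeled as a mixture of normalized histograms. A proposal is kept when its posterior probability of being a vehicle reaches the decision threshold. The function names, the `(box, counts)` proposal format and the equal class prior are illustrative choices, not the authors' implementation.

```python
import math


def log_mixture_likelihood(counts, weights, components, eps=1e-12):
    """log p(counts | class) for a class modelled as a mixture of normalised histograms.

    The multinomial coefficient is omitted: it depends only on counts, so it is
    identical for both classes and cancels in the posterior.
    """
    terms = [math.log(wk) + sum(c * math.log(p + eps) for c, p in zip(counts, comp))
             for wk, comp in zip(weights, components)]
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms))  # log-sum-exp


def vehicle_posterior(counts, vehicle_model, clutter_model, prior_vehicle=0.5):
    """Bayes' rule: P(vehicle | counts); each model is a (weights, components) pair."""
    lv = math.log(prior_vehicle) + log_mixture_likelihood(counts, *vehicle_model)
    lc = math.log(1.0 - prior_vehicle) + log_mixture_likelihood(counts, *clutter_model)
    m = max(lv, lc)  # shift before exponentiating to avoid overflow
    return math.exp(lv - m) / (math.exp(lv - m) + math.exp(lc - m))


def remove_obvious_false_alarms(proposals, vehicle_model, clutter_model, threshold=0.5):
    """Keep (box, counts) proposals whose vehicle posterior reaches the threshold."""
    return [(box, counts) for box, counts in proposals
            if vehicle_posterior(counts, vehicle_model, clutter_model) >= threshold]
```

Raising `threshold` discards more proposals, which mirrors the trade-off between the number of region proposals and recall discussed in Section 3.2.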

Algorithm 2. Training Procedure of Histogram Mixture Model (HistMM)
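One possible way to train such a model is sketched below. The sketch assumes that every HistMM component is a normalized histogram. It fits the mixture with standard batch expectation-maximization (EM) for a mixture of multinomials, using Laplace smoothing and a deterministic farthest-point initialization. These are assumptions made for illustration, and the procedure in Algorithm 2 may differ. `fit_histogram_mixture` is a hypothetical name.

```python
import math


def _smoothed(counts, alpha):
    """Normalise counts into bin probabilities with Laplace smoothing alpha (> 0)."""
    total = sum(counts) + alpha * len(counts)
    return [(c + alpha) / total for c in counts]


def fit_histogram_mixture(hists, n_components, n_iter=50, alpha=1.0):
    """Batch EM for a mixture of histograms (a multinomial mixture).

    hists: per-bin count vectors with a common number of bins.
    Returns (weights, components); each component is a bin-probability vector.
    """
    n, n_bins = len(hists), len(hists[0])
    probs = [_smoothed(h, alpha) for h in hists]
    # Deterministic farthest-point initialisation using L1 distance.
    centres = [0]
    while len(centres) < n_components:
        dist = [min(sum(abs(a - b) for a, b in zip(probs[i], probs[c])) for c in centres)
                for i in range(n)]
        centres.append(max(range(n), key=dist.__getitem__))
    components = [probs[c] for c in centres]
    weights = [1.0 / n_components] * n_components

    for _ in range(n_iter):
        # E-step: responsibilities computed in log space for numerical stability.
        resp = []
        for h in hists:
            logs = [math.log(max(wk, 1e-300)) + sum(c * math.log(p) for c, p in zip(h, comp))
                    for wk, comp in zip(weights, components)]
            m = max(logs)
            exps = [math.exp(v - m) for v in logs]
            total = sum(exps)
            resp.append([e / total for e in exps])
        # M-step: mixing weights and smoothed, responsibility-weighted bin counts.
        weights = [sum(r[j] for r in resp) / n for j in range(n_components)]
        components = [
            _smoothed([sum(r[j] * h[b] for r, h in zip(resp, hists)) for b in range(n_bins)], alpha)
            for j in range(n_components)
        ]
    return weights, components
```

Smoothing keeps every bin probability positive, so the logarithms in the E-step stay finite. The farthest-point start makes the result reproducible without a random seed.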

3. Experimental Results

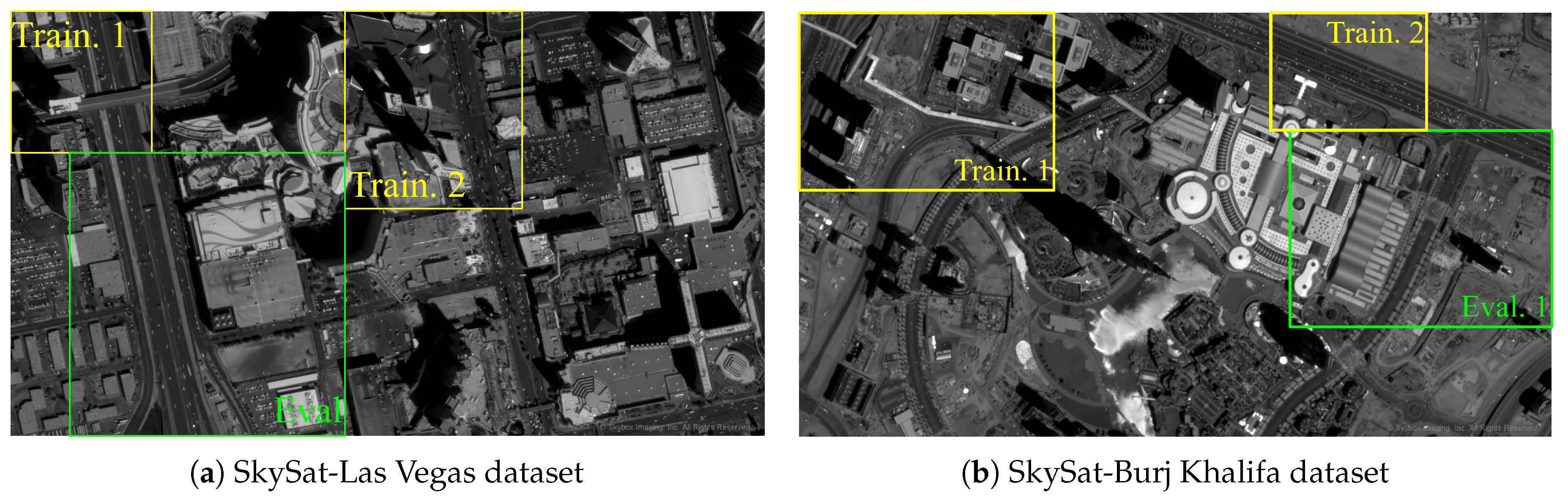

3.1. Datasets

3.2. Parameter Discussion

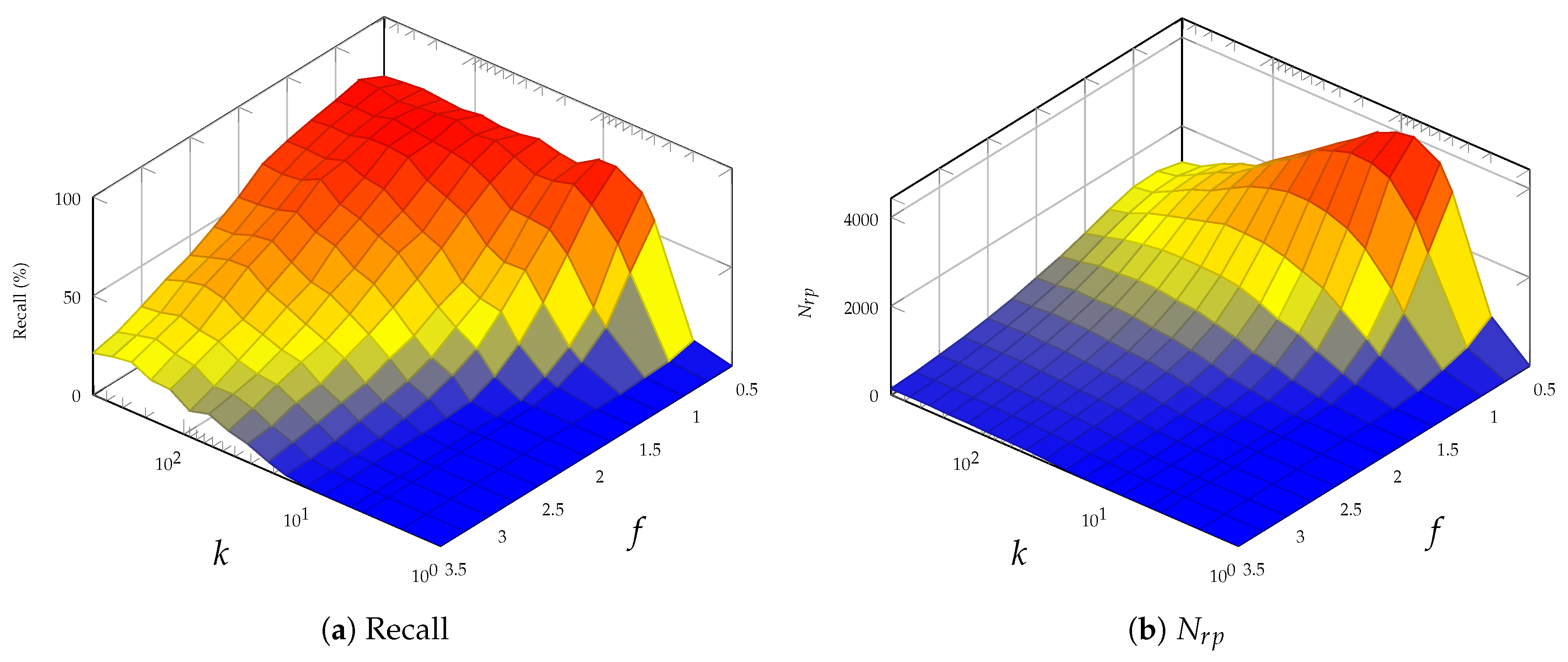

- Semantic region scale k controls the size of the generated semantic regions. A larger k is preferred because it produces the required coarse segmentation; the semantic regions are intentionally allowed to be larger than the target size. As presented in Figure 5, reducing k gives a finer segmentation and leads to more region proposals with a lower recall rate, whereas increasing k makes LRP generate fewer region proposals with a higher recall rate.

- Threshold factor f controls the segmentation threshold within each semantic region. A large f results in fragmented region proposals and lower recall. As illustrated in Figure 5, increasing f from 1.0 to 3.5 lowers recall by more than 40%.

- HistMM threshold is the Bayesian decision threshold used by the HistMM to remove obvious false alarms, as presented in Section 2.3. A HistMM with a smaller threshold tends to keep more obvious false alarms, which leads to an unnecessarily large number of region proposals. Conversely, increasing the threshold filters out more obvious false alarms from the searched region proposals. As shown in Figure 6, when the threshold increases to 0.5, the number of region proposals drops significantly while recall remains nearly stable at about 80%, which is the most efficient setting.
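The roles of k and f can be illustrated with a toy local search in Python. The sketch assumes two things, both hypotheses. First, semantic regions come from Felzenszwalb and Huttenlocher's graph-based segmentation, in which k is the scale parameter that favors larger components. Second, f sets a per-region threshold at f standard deviations from the region's mean intensity, and the resulting candidate pixels are grouped into 4-connected proposals. `segment` and `local_proposals` are hypothetical names, not the authors' code.

```python
from statistics import mean, pstdev


class DisjointSet:
    """Union-find that also tracks component size and internal difference."""

    def __init__(self, n):
        self.parent = list(range(n))
        self.size = [1] * n
        self.internal = [0.0] * n  # Int(C): largest MST edge inside the component

    def find(self, x):
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b, weight):
        """Merge two roots; edges arrive in ascending order, so weight is the new Int(C)."""
        if self.size[a] < self.size[b]:
            a, b = b, a
        self.parent[b] = a
        self.size[a] += self.size[b]
        self.internal[a] = weight


def segment(image, k):
    """Felzenszwalb-Huttenlocher segmentation of a 2-D grayscale grid (4-connected).

    A larger k raises the merge tolerance k/|C|, giving coarser regions.
    Returns a grid of region labels (the root index of each pixel's component).
    """
    h, w = len(image), len(image[0])
    edges = []
    for y in range(h):
        for x in range(w):
            if x + 1 < w:
                edges.append((abs(image[y][x] - image[y][x + 1]), y * w + x, y * w + x + 1))
            if y + 1 < h:
                edges.append((abs(image[y][x] - image[y + 1][x]), y * w + x, (y + 1) * w + x))
    edges.sort()
    ds = DisjointSet(h * w)
    for weight, p, q in edges:
        a, b = ds.find(p), ds.find(q)
        if a != b and weight <= min(ds.internal[a] + k / ds.size[a],
                                    ds.internal[b] + k / ds.size[b]):
            ds.union(a, b, weight)
    return [[ds.find(y * w + x) for x in range(w)] for y in range(h)]


def local_proposals(image, k, f):
    """Hypothetical local search: within each region, mark pixels deviating from the
    region mean by more than f standard deviations, then box 4-connected groups."""
    labels = segment(image, k)
    h, w = len(image), len(image[0])
    values = {}
    for y in range(h):
        for x in range(w):
            values.setdefault(labels[y][x], []).append(image[y][x])
    stats = {r: (mean(v), pstdev(v)) for r, v in values.items()}
    mask = [[abs(image[y][x] - stats[labels[y][x]][0]) > f * stats[labels[y][x]][1]
             for x in range(w)] for y in range(h)]

    boxes, seen = [], set()
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or (y, x) in seen:
                continue
            seen.add((y, x))
            stack, pixels = [(y, x)], [(y, x)]
            while stack:
                cy, cx = stack.pop()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < h and 0 <= nx < w and mask[ny][nx] and (ny, nx) not in seen
                            and labels[ny][nx] == labels[y][x]):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
                        pixels.append((ny, nx))
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            boxes.append((min(xs), min(ys), max(xs), max(ys)))  # (x0, y0, x1, y1)
    return boxes
```

Consider a 6 × 6 toy frame at intensity 10 with a bright 2 × 2 "vehicle" at intensity 200. Here `local_proposals(img, k=10000, f=1.0)` returns one box and `f=3.5` returns none. A small `k` such as 100 isolates the vehicle in its own uniform region, so no pixel deviates from its region mean. In this toy setting, this mirrors why regions larger than the target are preferred and why a large f lowers recall.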

3.3. Comparison of Region Proposal Approaches

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Dataset | Region | Size | Average Vehicle Size |
|---|---|---|---|
| SkySat-Las Vegas | Train. 1 | | |
| | Train. 2 | | |
| | Eval | | |
| SkySat-Burj Khalifa | Train. 1 | | |
| | Train. 2 | | |
| | Eval | | |

| Dataset | Recall (Before) | Recall (After) | Recall (Diff) | Region Proposals (Before) | Region Proposals (After) | Region Proposals (Diff) |
|---|---|---|---|---|---|---|
| SkySat-Las Vegas | 75.92% | 75.10% | −0.82% | 30,614 | 10,100 | −67.01% |
| SkySat-Burj Khalifa | 77.31% | 76.83% | −0.48% | 17,017 | 6525 | −61.66% |
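The two Diff columns follow different conventions. The recall difference is an absolute change in percentage points, whereas the proposal difference is a relative change with respect to the Before count. The short Python check below reproduces the tabulated values. The helper names are illustrative.

```python
def point_diff(before_pct, after_pct):
    """Absolute change of a percentage, in percentage points."""
    return round(after_pct - before_pct, 2)


def relative_diff(before, after):
    """Relative change of a count, as a percentage of the before value."""
    return round(100.0 * (after - before) / before, 2)


print(point_diff(75.92, 75.10), relative_diff(30614, 10100))  # -0.82 -67.01 (SkySat-Las Vegas)
print(point_diff(77.31, 76.83), relative_diff(17017, 6525))   # -0.48 -61.66 (SkySat-Burj Khalifa)
```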

| Method | SkySat-Las Vegas Proposals | SkySat-Las Vegas Recall | SkySat-Las Vegas Time (s) | SkySat-Burj Khalifa Proposals | SkySat-Burj Khalifa Recall | SkySat-Burj Khalifa Time (s) |
|---|---|---|---|---|---|---|
| SP | 4092 | 37.95% | 1.98 | 7922 | 51.38% | 1.28 |
| SS | 18,222 | 20.00% | 588.97 | 11,728 | 19.34% | 264.00 |
| MSER | 15,347 | 37.73% | 0.48 | 10,569 | 55.80% | 0.34 |
| Top-hat-Otsu | 1329 | 2.01% | 0.02 | 1280 | 29.28% | 0.01 |
| RPN (fine-tuned from Fast R-CNN-LRP) | 13,288 | 90.00% | 0.72 | 7908 | 90.05% | 0.48 |
| LRP | 9874 | 80.00% | 4.23 | 7424 | 79.56% | 3.60 |

| Method | SkySat-Las Vegas Rcll | SkySat-Las Vegas Prcn | SkySat-Las Vegas F1 | SkySat-Las Vegas AP | SkySat-Burj Khalifa Rcll | SkySat-Burj Khalifa Prcn | SkySat-Burj Khalifa F1 | SkySat-Burj Khalifa AP |
|---|---|---|---|---|---|---|---|---|
| Fast R-CNN-SP | 34.32% | 35.53% | 34.91% | 29.20% | 46.41% | 31.82% | 37.75% | 35.30% |
| Fast R-CNN-SS | 14.32% | 19.57% | 16.54% | 7.43% | 16.02% | 12.78% | 14.22% | 5.90% |
| Fast R-CNN-MSER | 30.45% | 31.16% | 30.80% | 20.21% | 41.44% | 47.17% | 44.12% | 33.96% |
| Fast R-CNN-Top-hat-Otsu | 1.82% | 8.08% | 2.97% | 1.15% | 26.52% | 26.23% | 26.37% | 13.37% |
| Fast R-CNN-LRP | 58.18% | 43.91% | 50.05% | 49.48% | 64.09% | 42.49% | 51.10% | 50.57% |
| Faster R-CNN (fine-tuned from Fast R-CNN-LRP) | 59.32% | 55.53% | 56.31% | 46.46% | 62.43% | 46.12% | 53.05% | 45.15% |

| Dataset | Method | Rcll | Prcn | F1 |
|---|---|---|---|---|
| SkySat-Las Vegas | GMM | 45.8% | 49.6% | 47.6% |
| | GMMv2 | 64.7% | 26.7% | 37.8% |
| | ViBe | 58.0% | 16.7% | 25.9% |
| | Fast R-CNN-LRP | 58.18% | 43.91% | 50.05% |
| SkySat-Burj Khalifa | GMM | 33.5% | 56.7% | 42.1% |
| | GMMv2 | 70.1% | 37.7% | 49.0% |
| | ViBe | 74.6% | 22.0% | 34.0% |
| | Fast R-CNN-LRP | 64.09% | 42.49% | 51.10% |
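The third metric column of the detection tables, next to precision, is consistent with the F1 score, the harmonic mean of recall R and precision P: F1 = 2RP/(R + P). For example, the Fast R-CNN-LRP entry on SkySat-Las Vegas gives 2 × 58.18 × 43.91 / (58.18 + 43.91) ≈ 50.05%. A one-line Python helper (a hypothetical name) makes the check explicit.

```python
def f1_score(recall_pct, precision_pct, digits=2):
    """Harmonic mean of recall and precision, both given in percent."""
    return round(2 * recall_pct * precision_pct / (recall_pct + precision_pct), digits)


print(f1_score(58.18, 43.91))          # 50.05 (Fast R-CNN-LRP, SkySat-Las Vegas)
print(f1_score(45.8, 49.6, digits=1))  # 47.6 (GMM, SkySat-Las Vegas)
```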

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, J.; Jia, X.; Hu, J. Local Region Proposing for Frame-Based Vehicle Detection in Satellite Videos. Remote Sens. 2019, 11, 2372. https://doi.org/10.3390/rs11202372
