Parameterized Nonlinear Least Squares for Unsupervised Nonlinear Spectral Unmixing

Abstract

1. Introduction

2. Bilinear Mixing Models

2.1. GBM
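As a point of reference, the generalized bilinear model in its usual form adds pairwise endmember interactions, each scaled by a coefficient in [0, 1], to the linear mixture. The sketch below synthesizes one noise-free pixel under that standard formulation. The function name `gbm_pixel`, the list-based data layout, and the dictionary of coefficients are illustrative assumptions rather than notation taken from this article.

```python
from itertools import combinations


def gbm_pixel(endmembers, abundances, gammas):
    """Noise-free GBM reflectance for one pixel (illustrative sketch).

    endmembers: list of P spectra, each a list of L band values.
    abundances: list of P nonnegative fractions that sum to one.
    gammas: dict mapping (i, j) with i < j to a coefficient in [0, 1].
    """
    num_bands = len(endmembers[0])
    # Linear part: abundance-weighted sum of the endmember spectra.
    pixel = [
        sum(a * m[band] for a, m in zip(abundances, endmembers))
        for band in range(num_bands)
    ]
    # Bilinear part: band-wise products of every endmember pair.
    for i, j in combinations(range(len(endmembers)), 2):
        weight = gammas[(i, j)] * abundances[i] * abundances[j]
        for band in range(num_bands):
            pixel[band] += weight * endmembers[i][band] * endmembers[j][band]
    return pixel


# Two hypothetical endmembers observed in two bands, mixed half and half.
spectra = [[0.2, 0.4], [0.6, 0.8]]
mixed = gbm_pixel(spectra, [0.5, 0.5], {(0, 1): 1.0})
```

Setting every coefficient to zero reduces the sketch to the linear mixing model, and setting every coefficient to one gives the Fan model in its usual form.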

2.2. Fan Model

3. Proposed PNLS

3.1. Definition of the Alternate LS/NLS Problems

3.1.1. Constrained NLS for Endmembers Estimation

3.1.2. Constrained LS for Abundances Estimation

3.1.3. Constrained LS for Nonlinearity Coefficients Estimation

3.2. Sigmoid Parameterization
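A common way to remove a box constraint such as 0 ≤ x ≤ 1 is to write x as the sigmoid of an unconstrained variable u and optimize over u instead. The sketch below shows the generic mapping, its derivative (needed for the chain rule in a Gauss–Newton Jacobian), and its inverse (useful for initialization). It assumes the plain logistic function. The function names are hypothetical, and any steepness parameter used in this article is not modeled here.

```python
import math


def sigmoid(u):
    """Map an unconstrained real u into [0, 1] (open interval in exact math)."""
    # Branch on the sign so exp() is never called on a large positive number.
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    z = math.exp(u)
    return z / (1.0 + z)


def sigmoid_grad(u):
    """Derivative dx/du of x = sigmoid(u), equal to x * (1 - x)."""
    s = sigmoid(u)
    return s * (1.0 - s)


def logit(x):
    """Inverse of sigmoid for 0 < x < 1."""
    return math.log(x / (1.0 - x))


# Round trip: a bounded value, its unconstrained counterpart, and back.
u = logit(0.3)
x = sigmoid(u)
```

Because the derivative vanishes as x approaches 0 or 1, updates in u slow down near the bounds, which is one reason damping is often paired with this kind of parameterization.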

3.3. Gauss–Newton Based Optimization

3.3.1. Endmembers Updating Rule

3.3.2. Abundances Updating Rule

3.3.3. Nonlinearity Coefficients Updating Rule

3.4. Generalization to Fan Model

Algorithm 1 GBM-PNLS for unsupervised nonlinear unmixing

Input: hyperspectral data matrix, parameter, and iteration number T.

Output: endmember matrix, abundance matrix, and nonlinearity coefficients matrix.

Algorithm 2 Fan-PNLS for unsupervised nonlinear unmixing

Input: hyperspectral data matrix, parameter, and iteration number T.

Output: endmember matrix and abundance matrix.

3.5. Implementation Details

3.5.1. Initialization

3.5.2. Damping
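Damping in a Gauss–Newton iteration usually means adding a positive term λ to the normal equations, in the style of Levenberg, so that each step solves (JᵀJ + λI)Δ = −Jᵀr. The toy sketch below applies this idea to a single scalar unknown and adapts λ according to whether the cost decreased. It is a generic illustration under those assumptions, not the update rule of this article. The function name, the halving and tenfold factors, and the exponential-decay test problem are all hypothetical.

```python
import math


def damped_gauss_newton(residual_and_jac, x0, lam=1e-2, iters=50):
    """Minimize a sum of squared residuals over one scalar unknown.

    residual_and_jac(x) returns (residuals, derivatives) as two lists.
    """
    x = x0
    r, jac = residual_and_jac(x)
    cost = sum(ri * ri for ri in r)
    for _ in range(iters):
        grad = sum(ji * ri for ji, ri in zip(jac, r))  # J^T r
        hess = sum(ji * ji for ji in jac)              # J^T J
        step = -grad / (hess + lam)                    # damped normal equation
        r_new, jac_new = residual_and_jac(x + step)
        cost_new = sum(ri * ri for ri in r_new)
        if cost_new < cost:
            # Accept the step and trust the Gauss-Newton model more.
            x, r, jac, cost = x + step, r_new, jac_new, cost_new
            lam *= 0.5
        else:
            # Reject the step and damp more strongly.
            lam *= 10.0
    return x


# Toy problem: recover the decay rate k in y = exp(-k t) from clean samples.
times = [0.0, 1.0, 2.0, 3.0]
observed = [math.exp(-0.7 * t) for t in times]


def decay_model(k):
    residuals = [math.exp(-k * t) - y for t, y in zip(times, observed)]
    derivatives = [-t * math.exp(-k * t) for t in times]
    return residuals, derivatives


k_hat = damped_gauss_newton(decay_model, x0=0.1)
```

For the matrix-valued unknowns of an unmixing problem the same accept-or-reject logic applies, with the scalar division replaced by a linear solve.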

3.5.3. ASC Factor
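In fully constrained least squares, the abundance sum-to-one constraint (ASC) is commonly enforced softly by appending one extra row, weighted by a factor δ, to both the endmember matrix and the pixel vector. The sketch below shows that augmentation for a single pixel. It assumes this FCLS-style construction and may not match how the ASC factor is applied to the bilinear terms in this article. The function name and the band-major data layout are hypothetical.

```python
def augment_for_asc(endmembers_by_band, pixel, delta):
    """Append a delta-weighted row that penalizes abundances not summing to one.

    endmembers_by_band: L rows, each a list of P endmember values for one band.
    pixel: list of L band values.
    delta: positive weight; larger values enforce the constraint more strictly.
    """
    num_endmembers = len(endmembers_by_band[0])
    matrix_aug = [row[:] for row in endmembers_by_band]
    matrix_aug.append([delta] * num_endmembers)
    pixel_aug = list(pixel) + [delta]
    return matrix_aug, pixel_aug


# Two bands, two hypothetical endmembers, and a pixel mixed half and half.
M_aug, y_aug = augment_for_asc([[0.2, 0.6], [0.4, 0.8]], [0.4, 0.6], delta=10.0)
```

The residual of the appended row is δ(Σa − 1), so it vanishes exactly when the abundances sum to one and grows with δ otherwise.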

3.5.4. Stopping Criteria

4. Experimental Results and Analysis

4.1. Synthetic Experiments

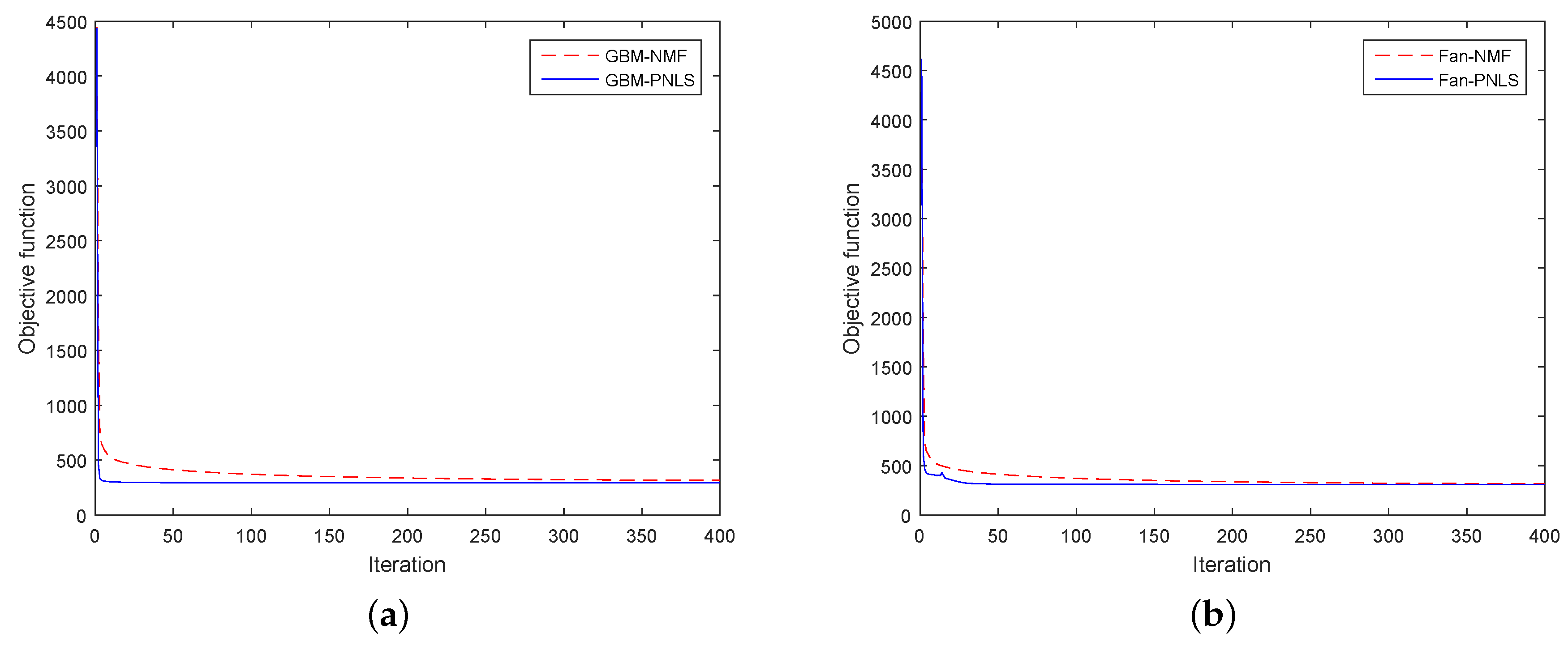

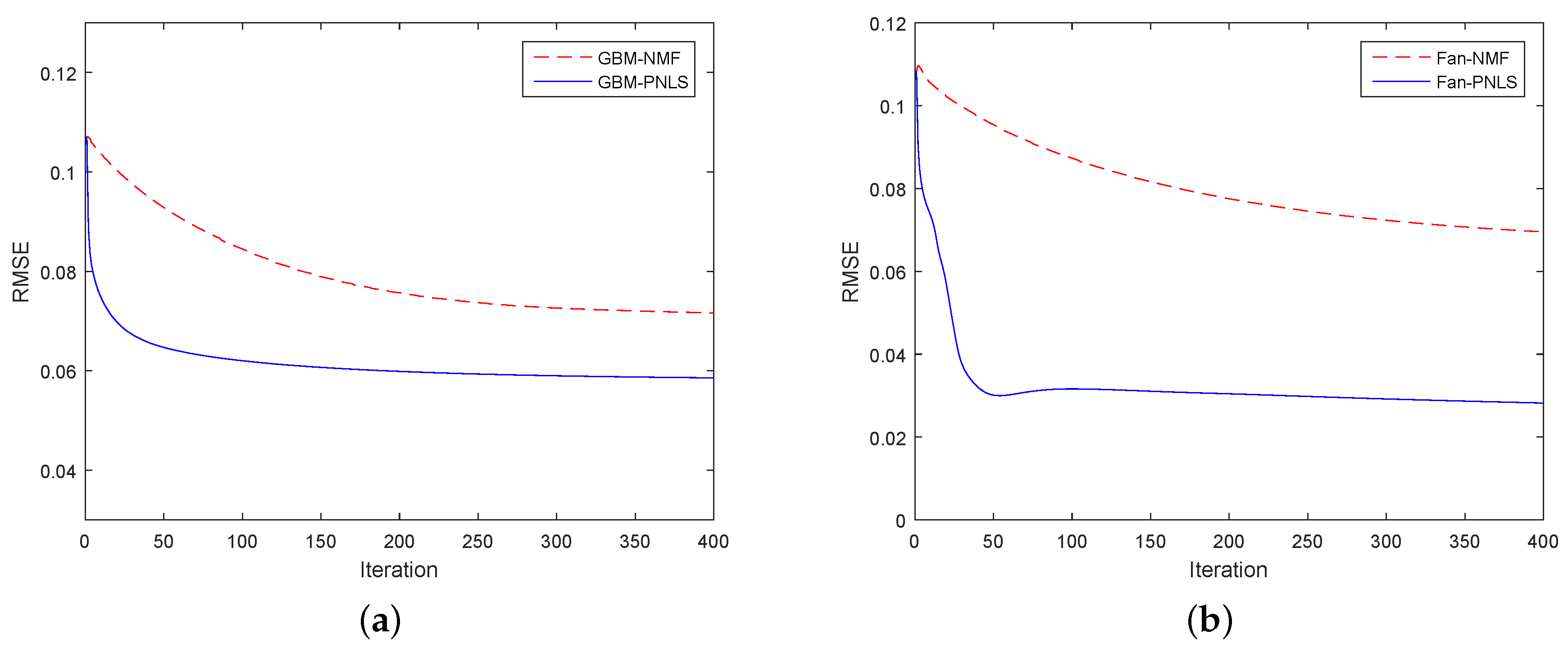

4.1.1. Convergence Test

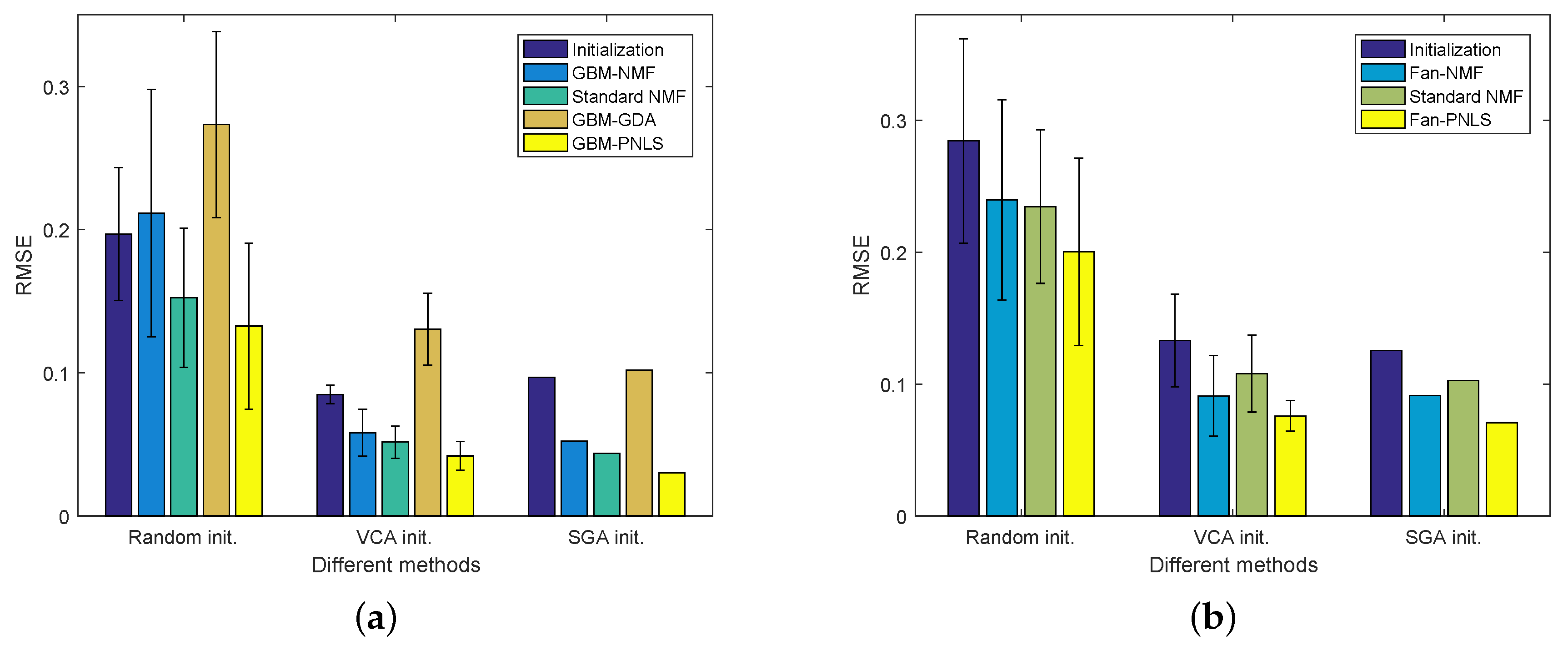

4.1.2. Comparison of Different Initialization Methods

4.1.3. Robustness to Various Noise Levels

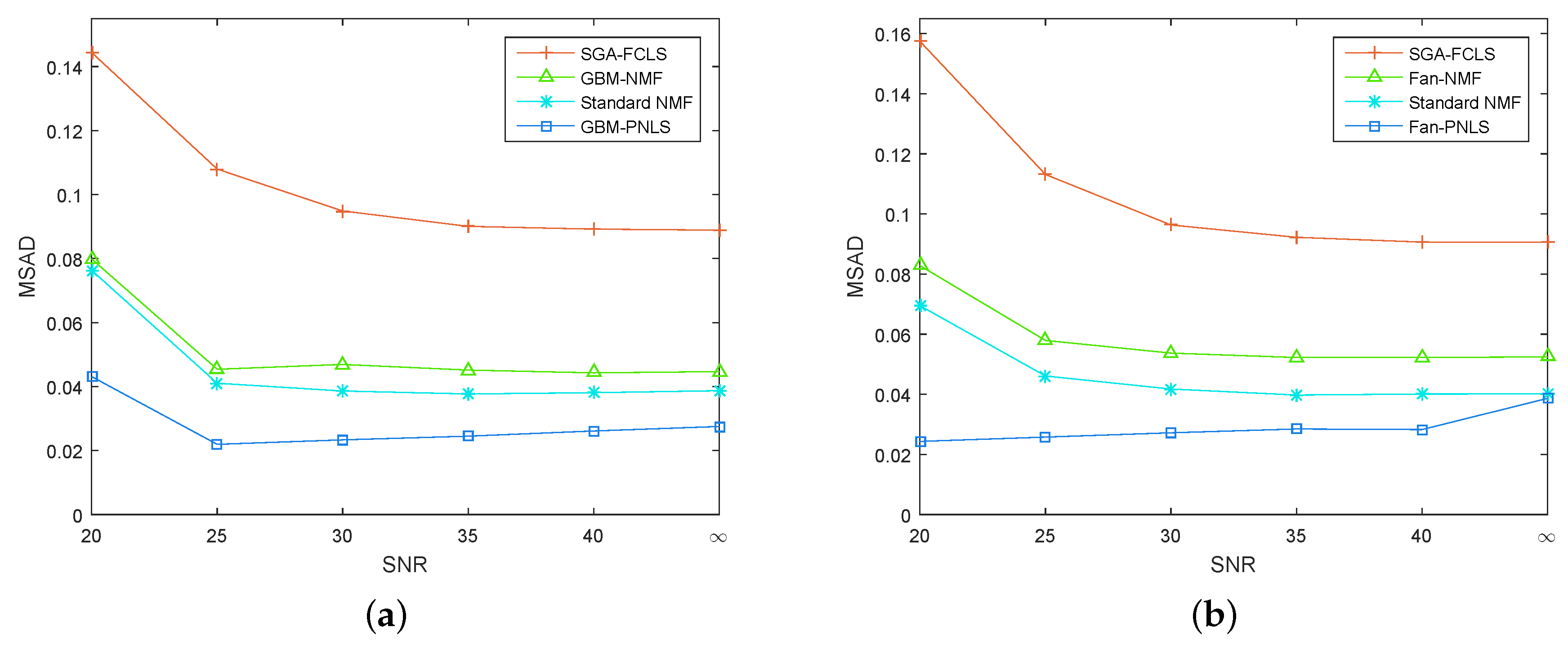

4.1.4. Results for Different Endmember Numbers

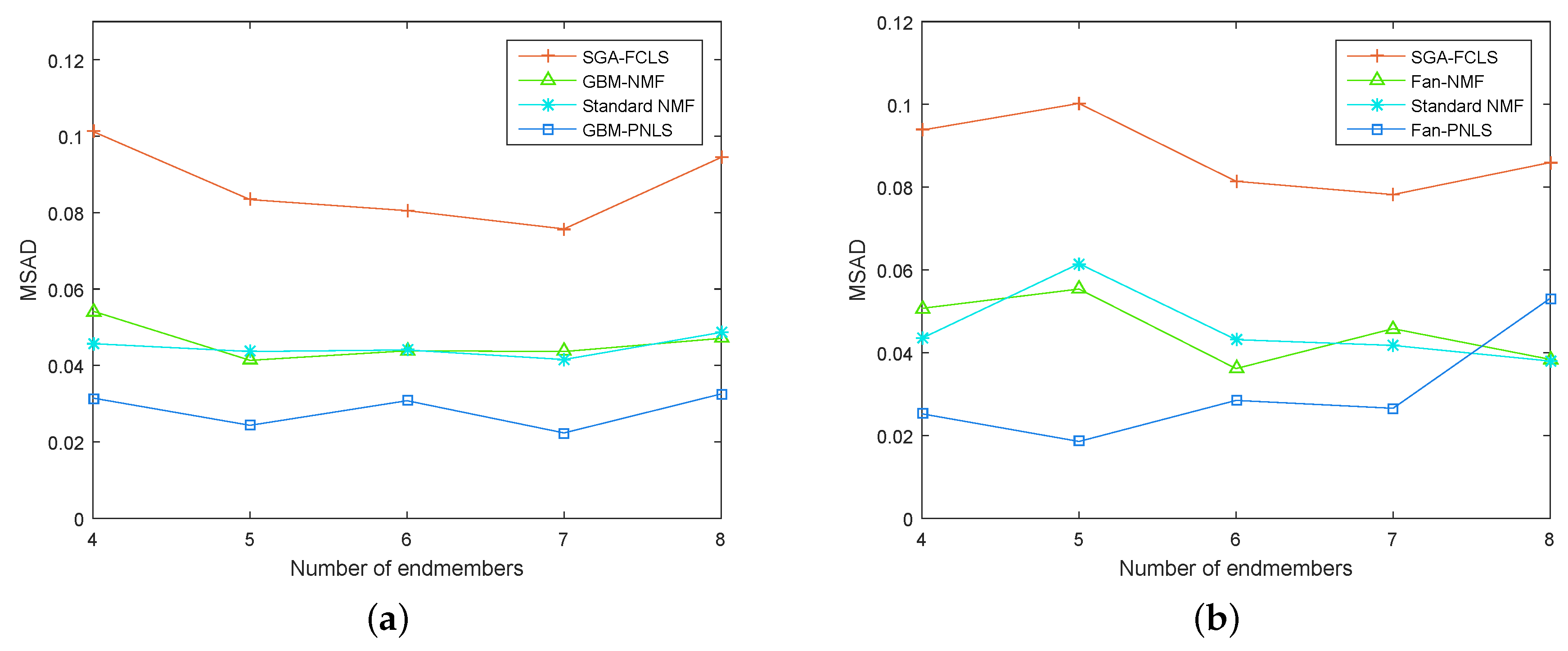

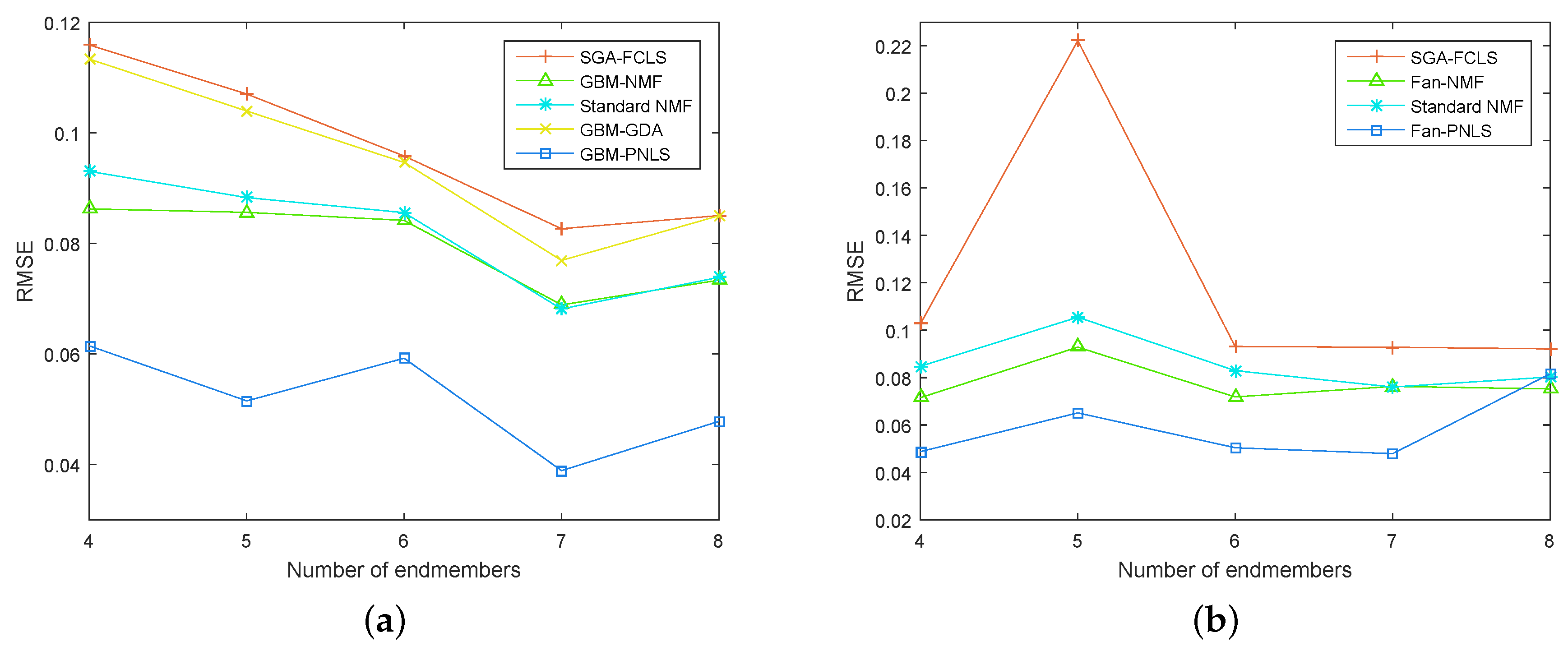

4.1.5. Robustness to Different Mixing Degrees

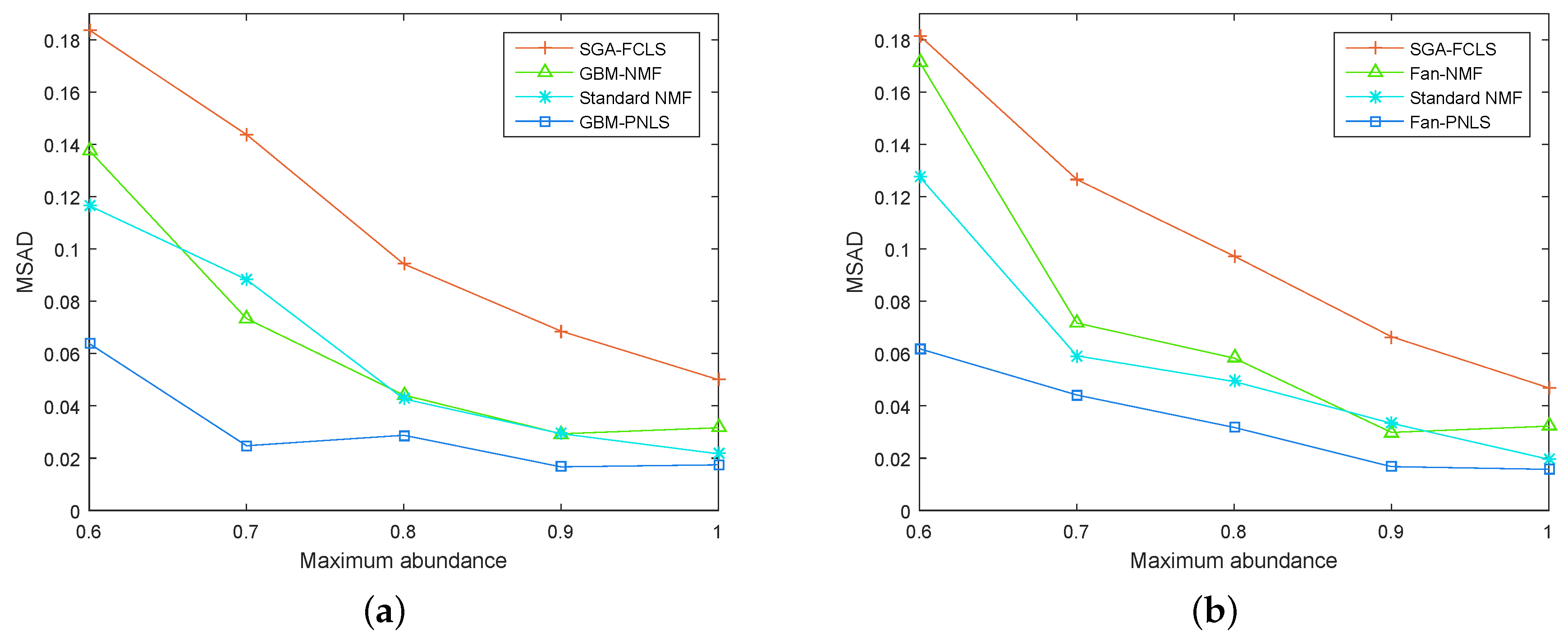

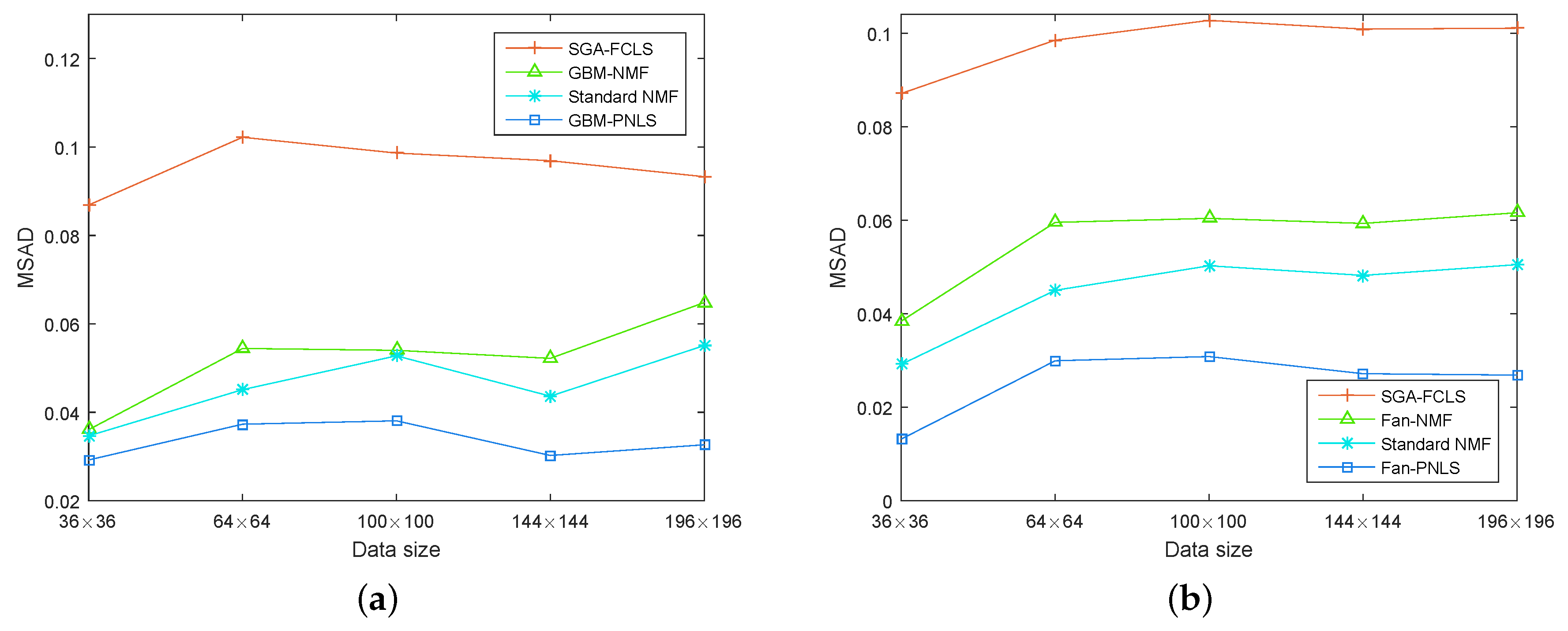

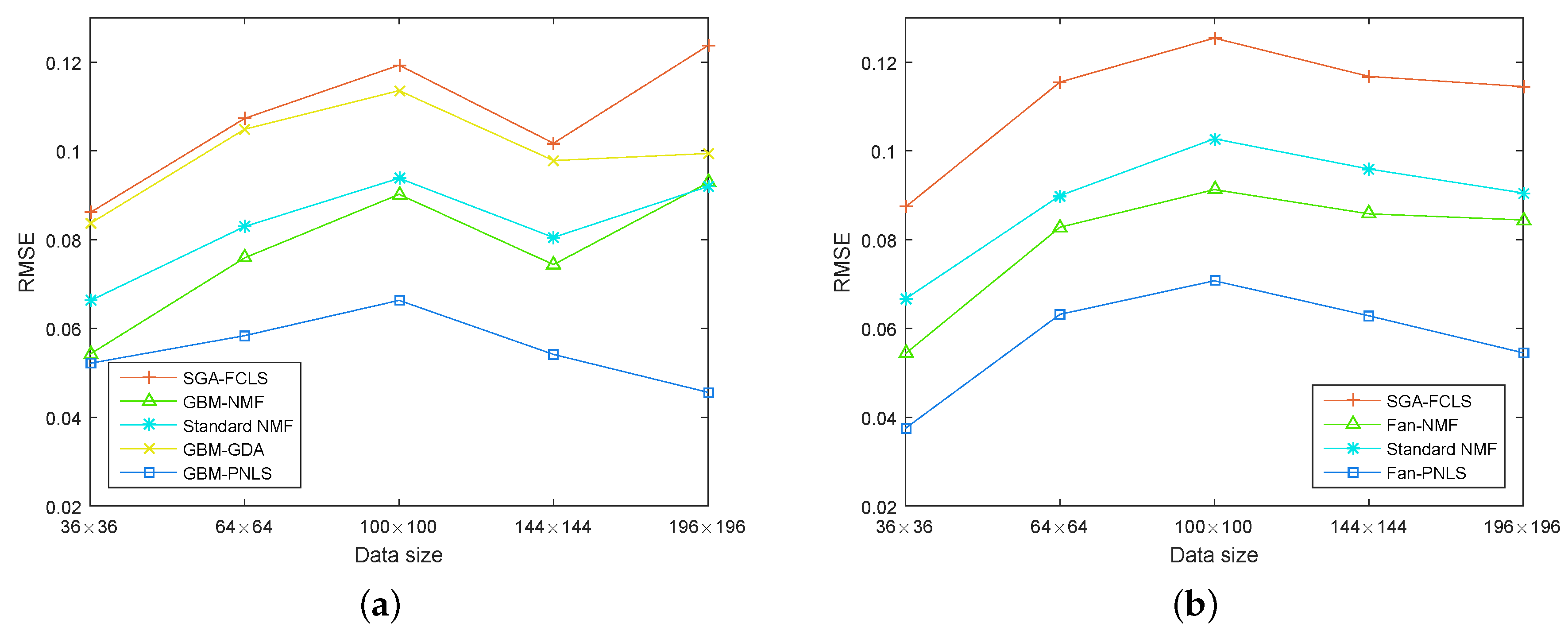

4.1.6. Robustness to Different Data Sizes

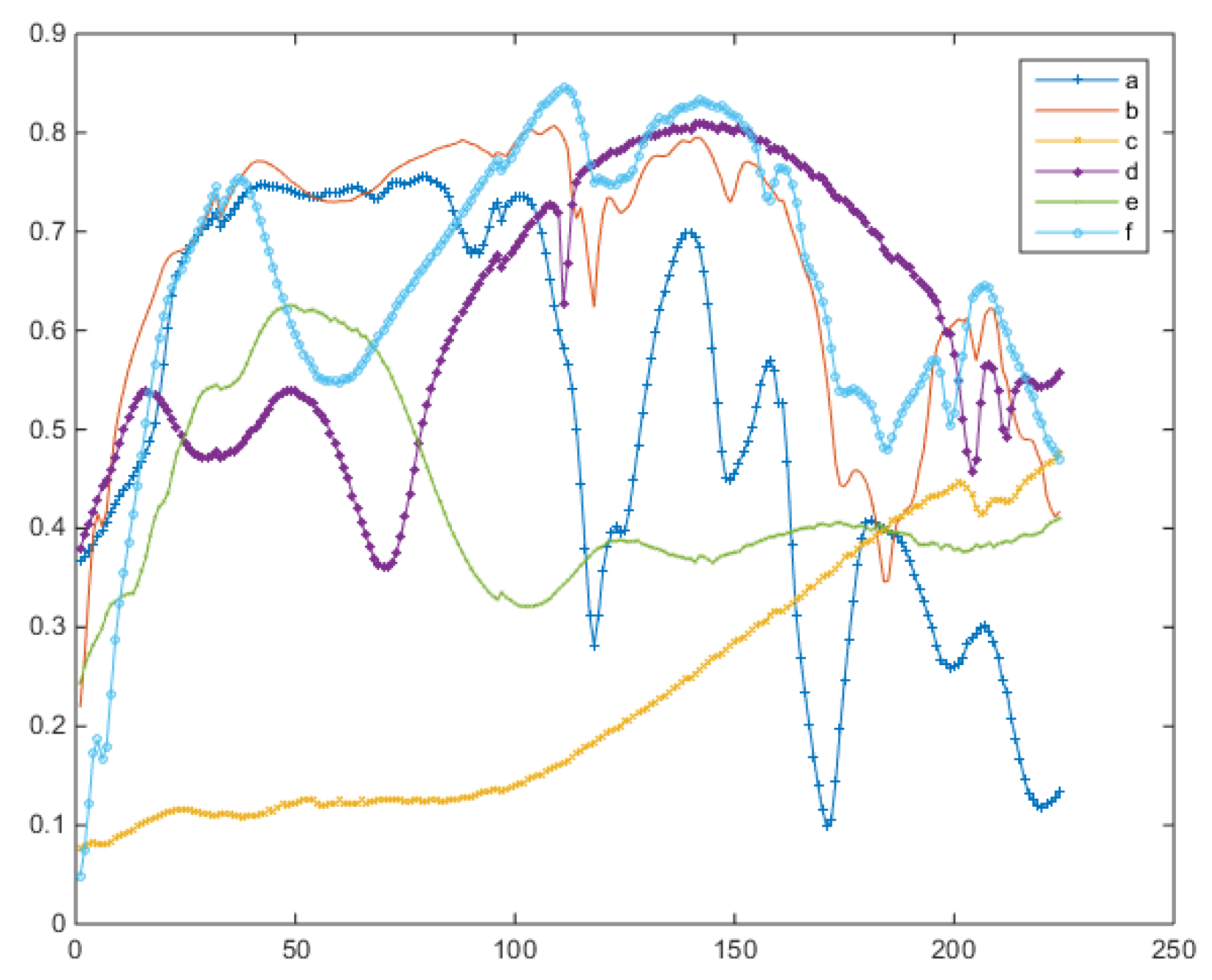

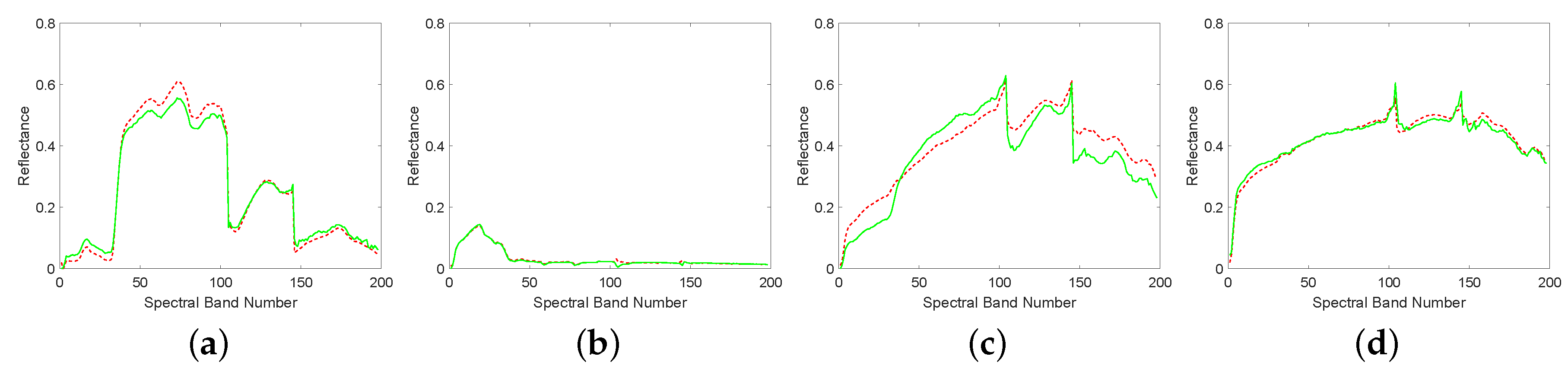

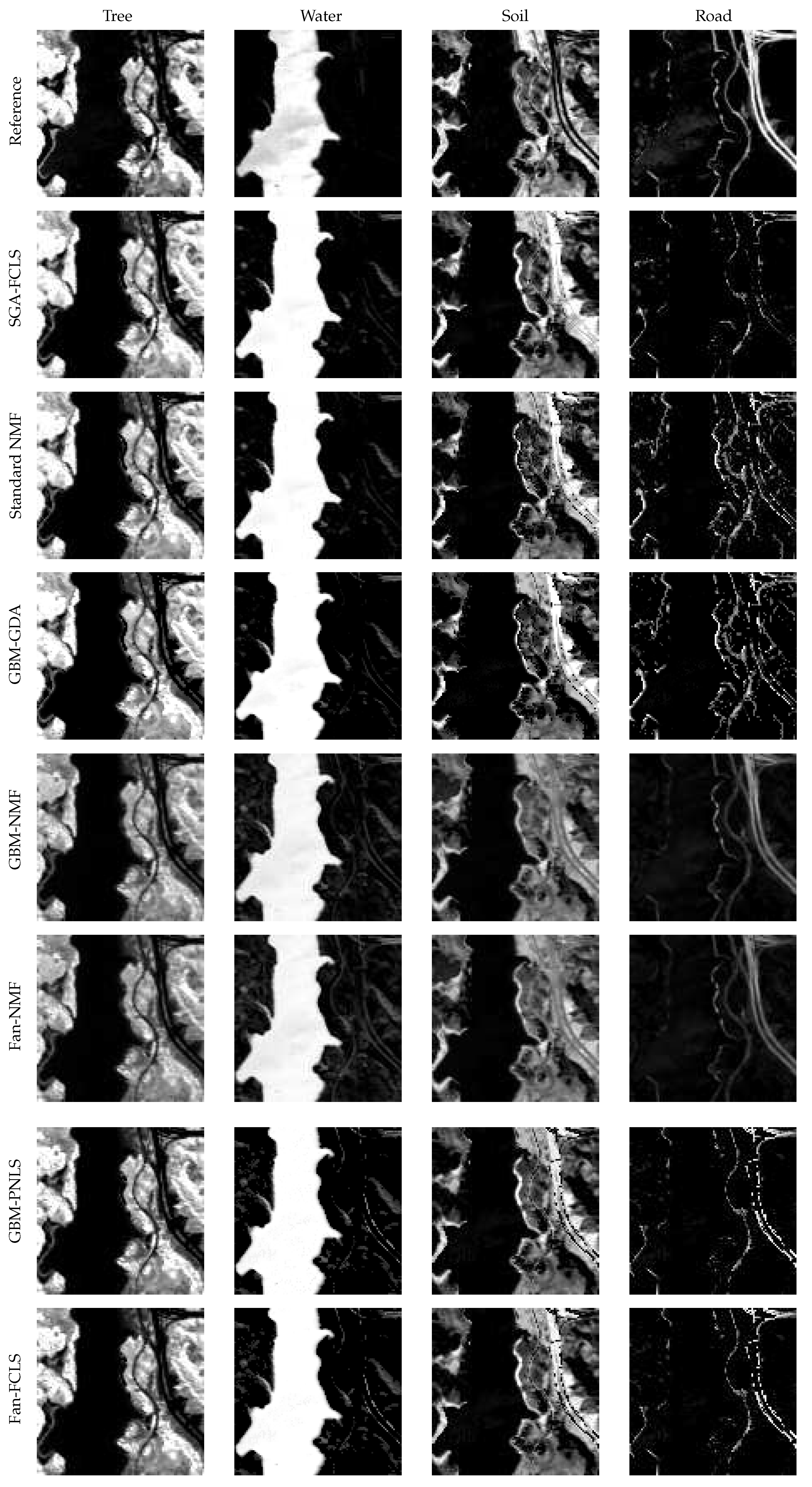

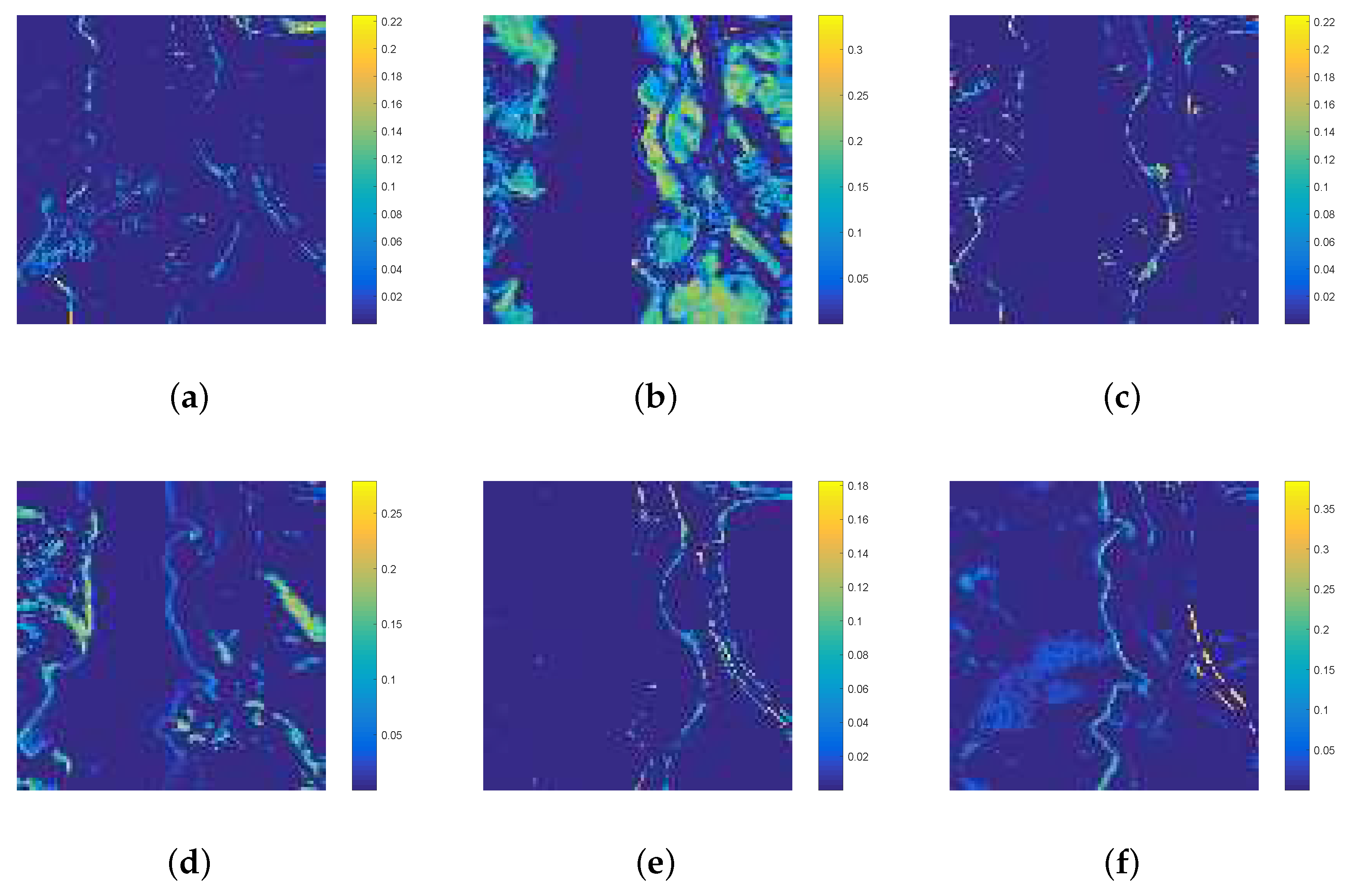

4.2. Real Data Experiments

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| | GBM | Fan Model |
|---|---|---|
| | | |
| | | |
| | | |

| Substances | SGA-FCLS | Standard NMF | GBM-NMF | Fan-NMF | GBM-PNLS | Fan-PNLS |
|---|---|---|---|---|---|---|
| Tree | 15.59 | 6.08 | 16.02 | 15.88 | 6.17 | 5.64 |
| Water | 25.40 | 12.54 | 26.66 | 22.73 | 6.74 | 7.13 |
| Dirt | 13.36 | 11.51 | 16.50 | 49.31 | 11.84 | 12.67 |
| Road | 10.69 | 8.71 | 26.64 | 23.58 | 3.31 | 3.38 |
| Average | 16.26 | 9.71 | 21.45 | 27.87 | 7.02 | 7.21 |

| | SGA-FCLS | Standard NMF | GBM-GDA | GBM-NMF | Fan-NMF | GBM-PNLS | Fan-PNLS |
|---|---|---|---|---|---|---|---|
| RMSE | 38.38 | 15.51 | 37.54 | 21.80 | 24.70 | 14.78 | 14.65 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, R.; Li, X.; Lu, H.; Li, J.; Zhao, L. Parameterized Nonlinear Least Squares for Unsupervised Nonlinear Spectral Unmixing. Remote Sens. 2019, 11, 148. https://doi.org/10.3390/rs11020148
