Drawback in the Change Detection Approach: False Detection during the 2018 Western Japan Floods

Abstract

1. Introduction

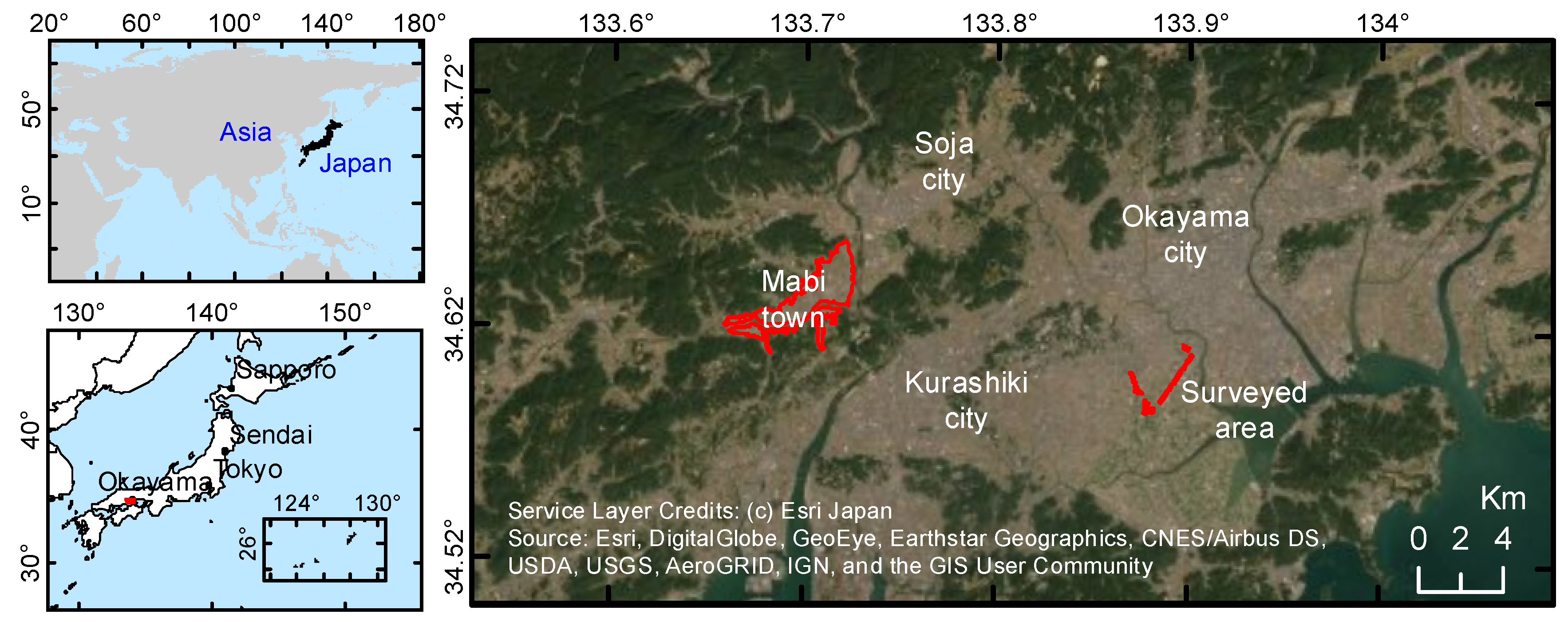

2. Dataset and Case Study

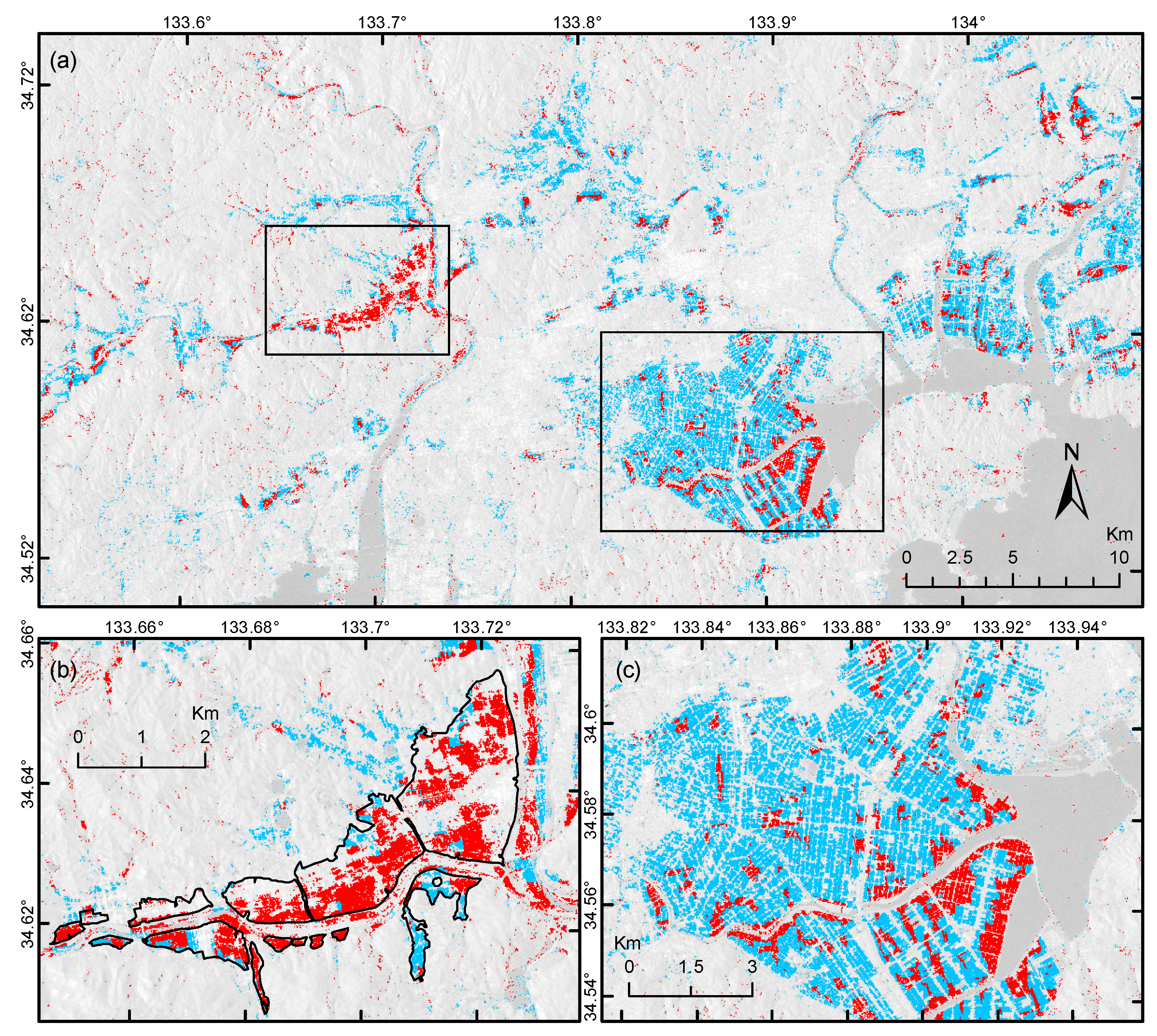

2.1. The 2018 Western Japan Floods

2.2. The Advanced Land Observing Satellite-2 (ALOS-2)

2.3. The Sentinel-1 Satellite

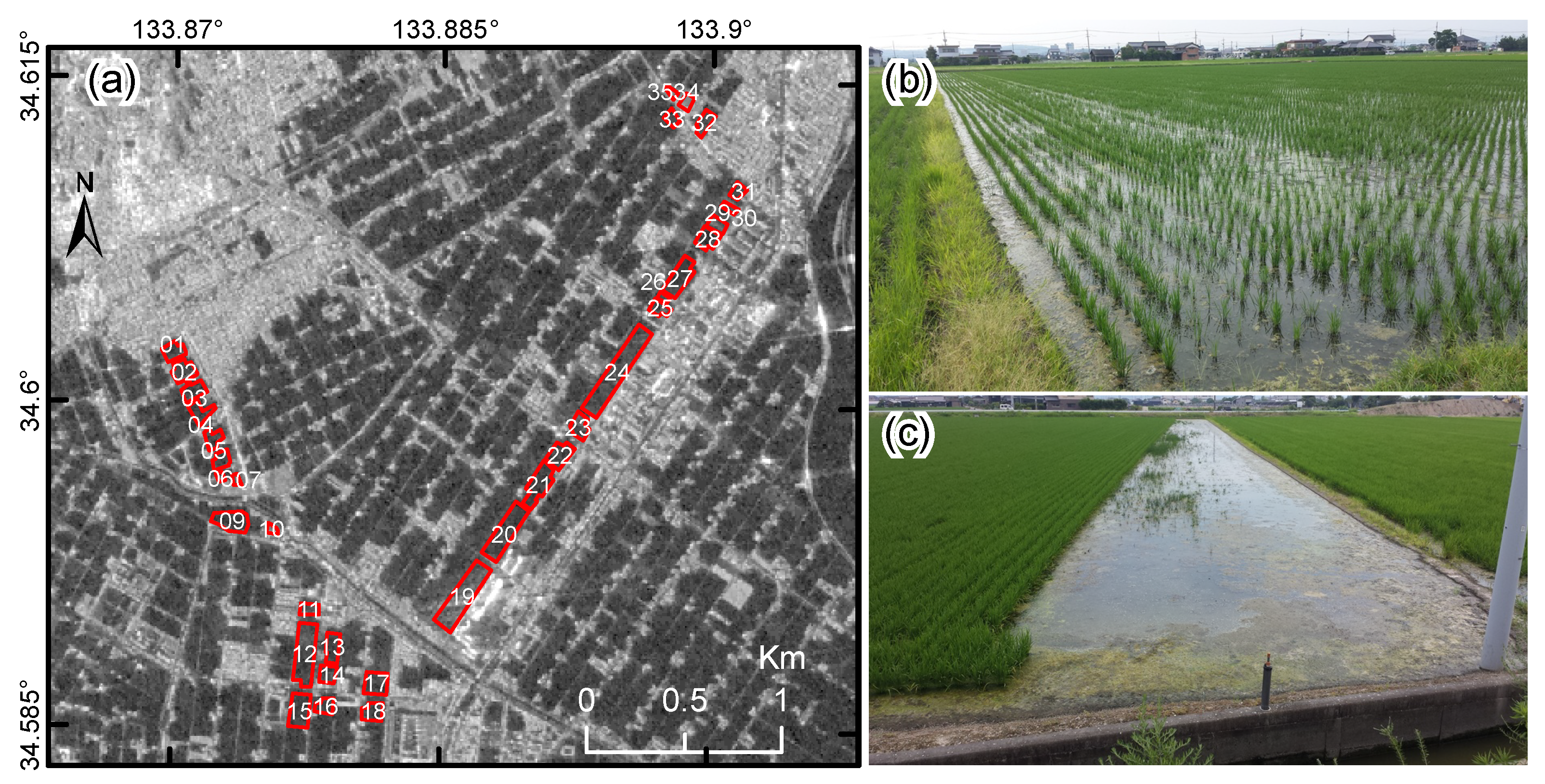

2.4. Truth Data

3. Methods

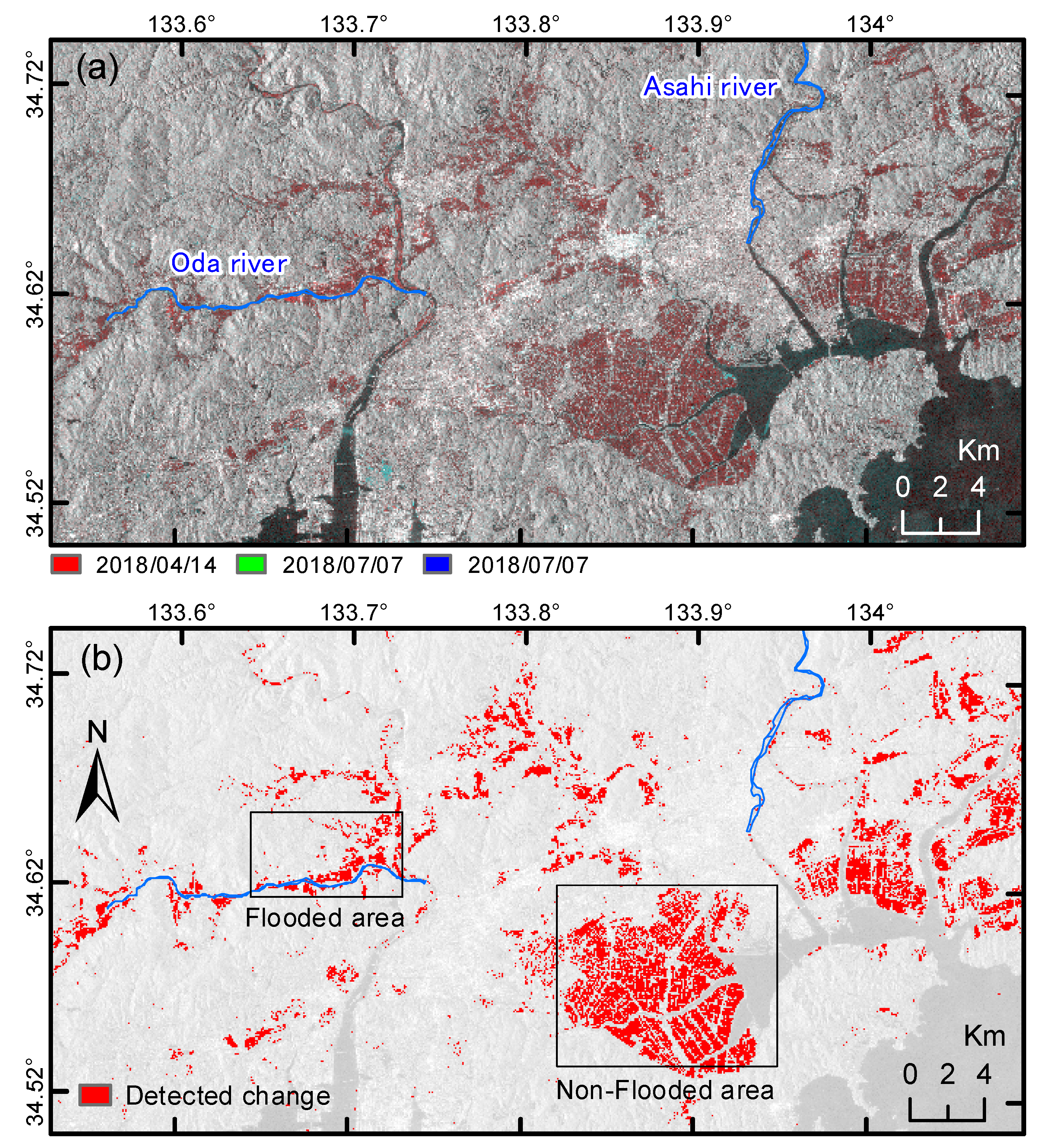

3.1. Current Practice for Flood Mapping

3.1.1. Intensity Thresholding

3.1.2. Coherence Approach

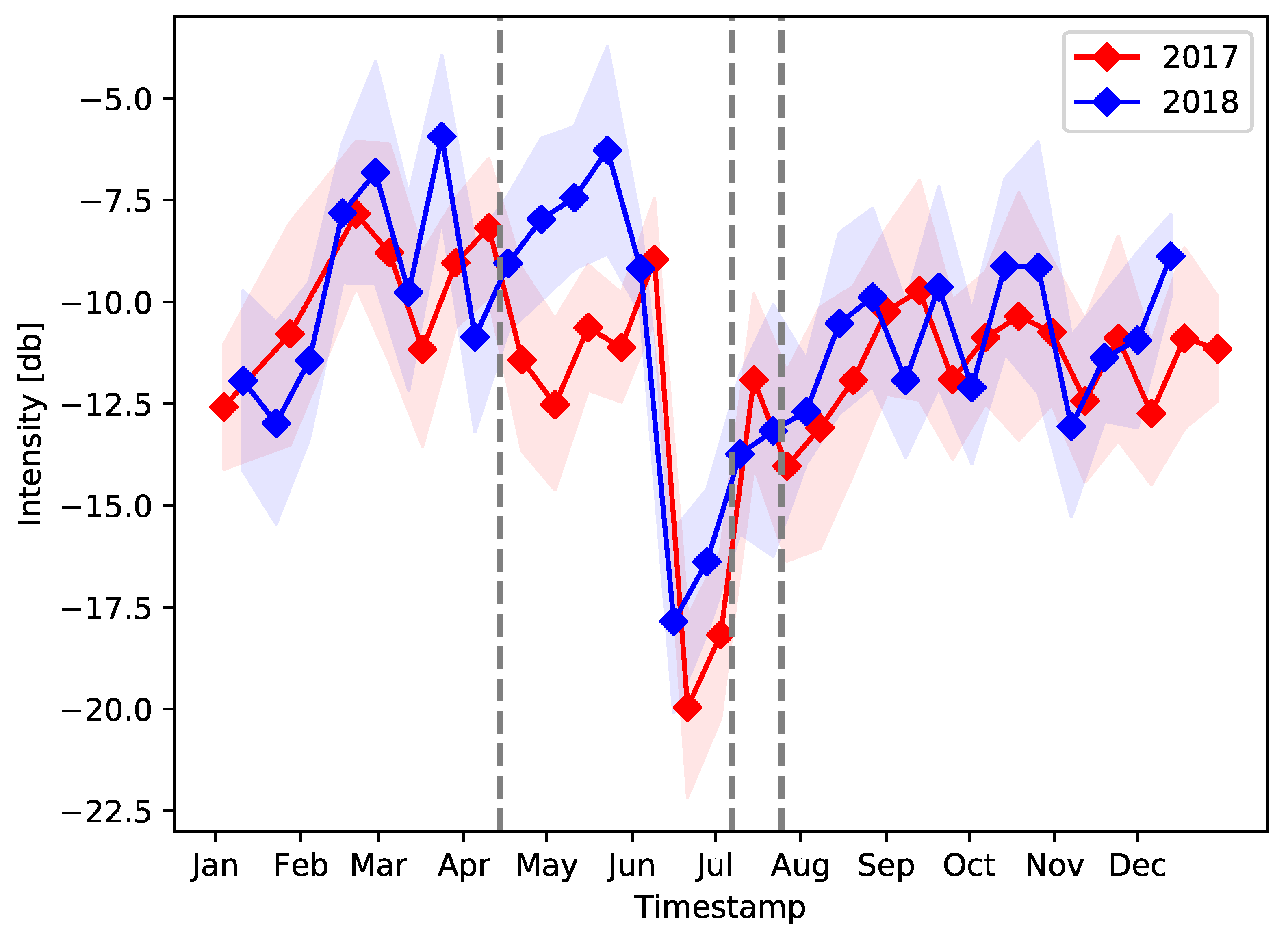

3.2. Backscattering Dynamics of Agriculture Targets

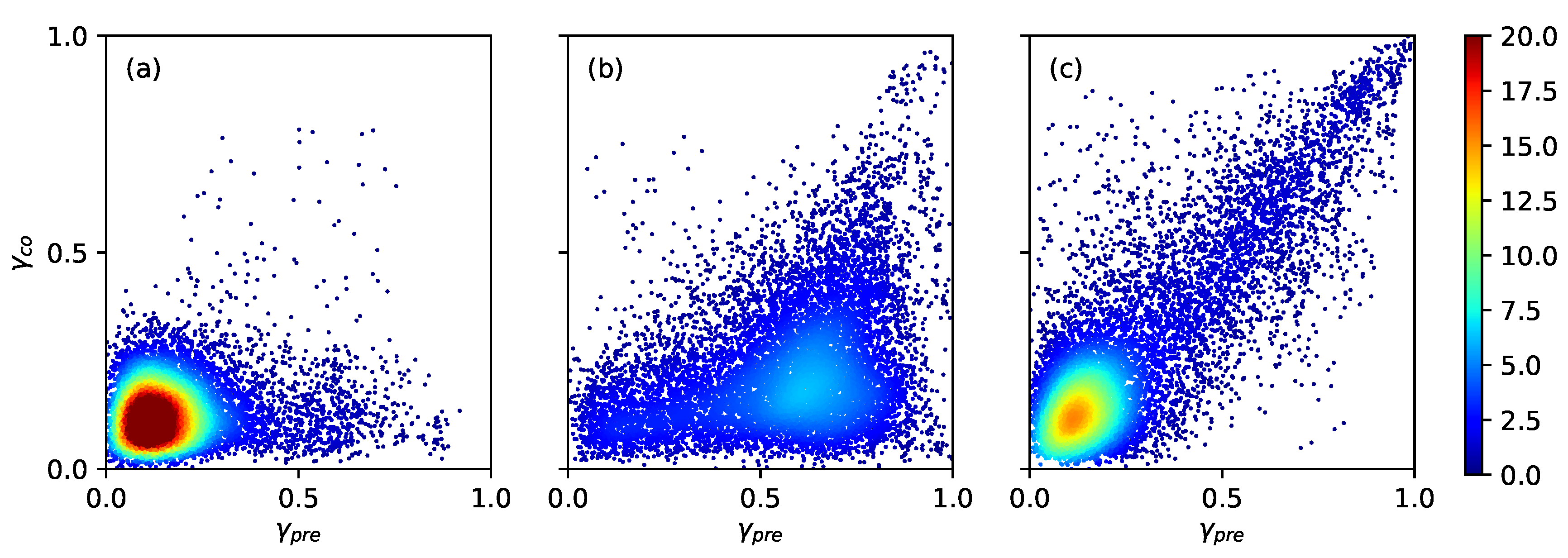

3.3. A New Metric: Conditional Coherence

3.4. Machine Learning Classification

3.4.1. Unsupervised Classification

3.4.2. Supervised Thresholding

4. Results

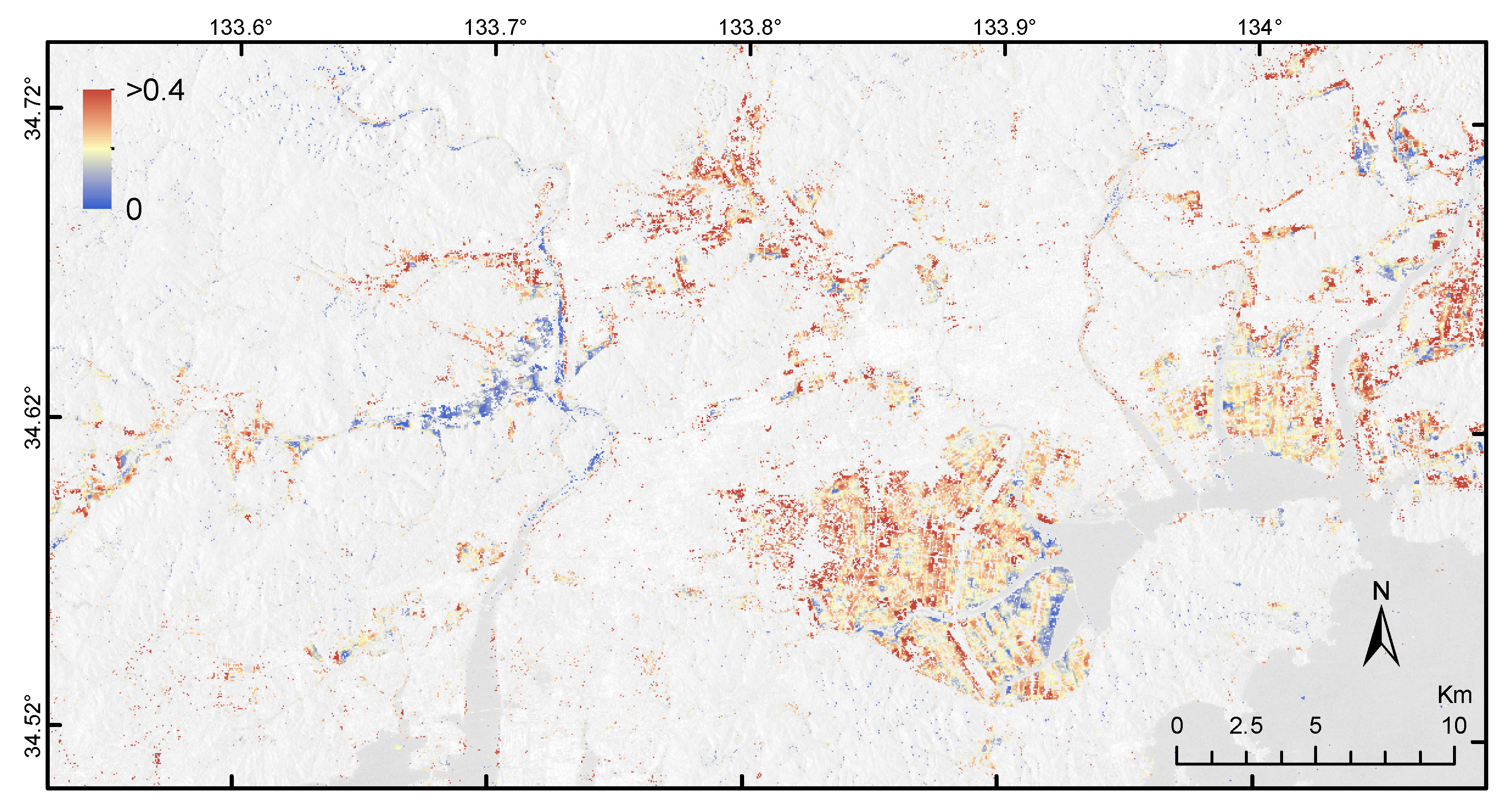

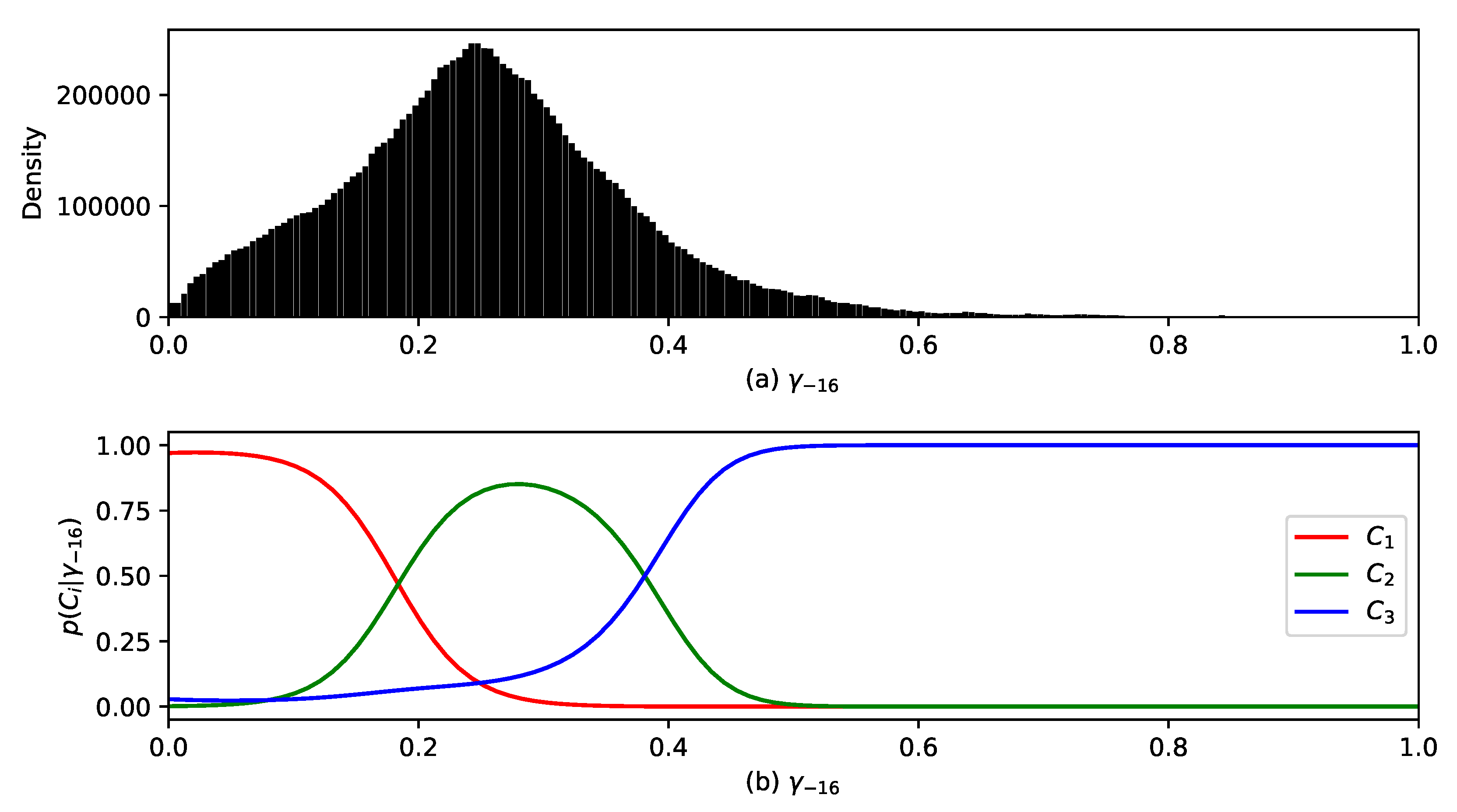

4.1. Conditional Coherence

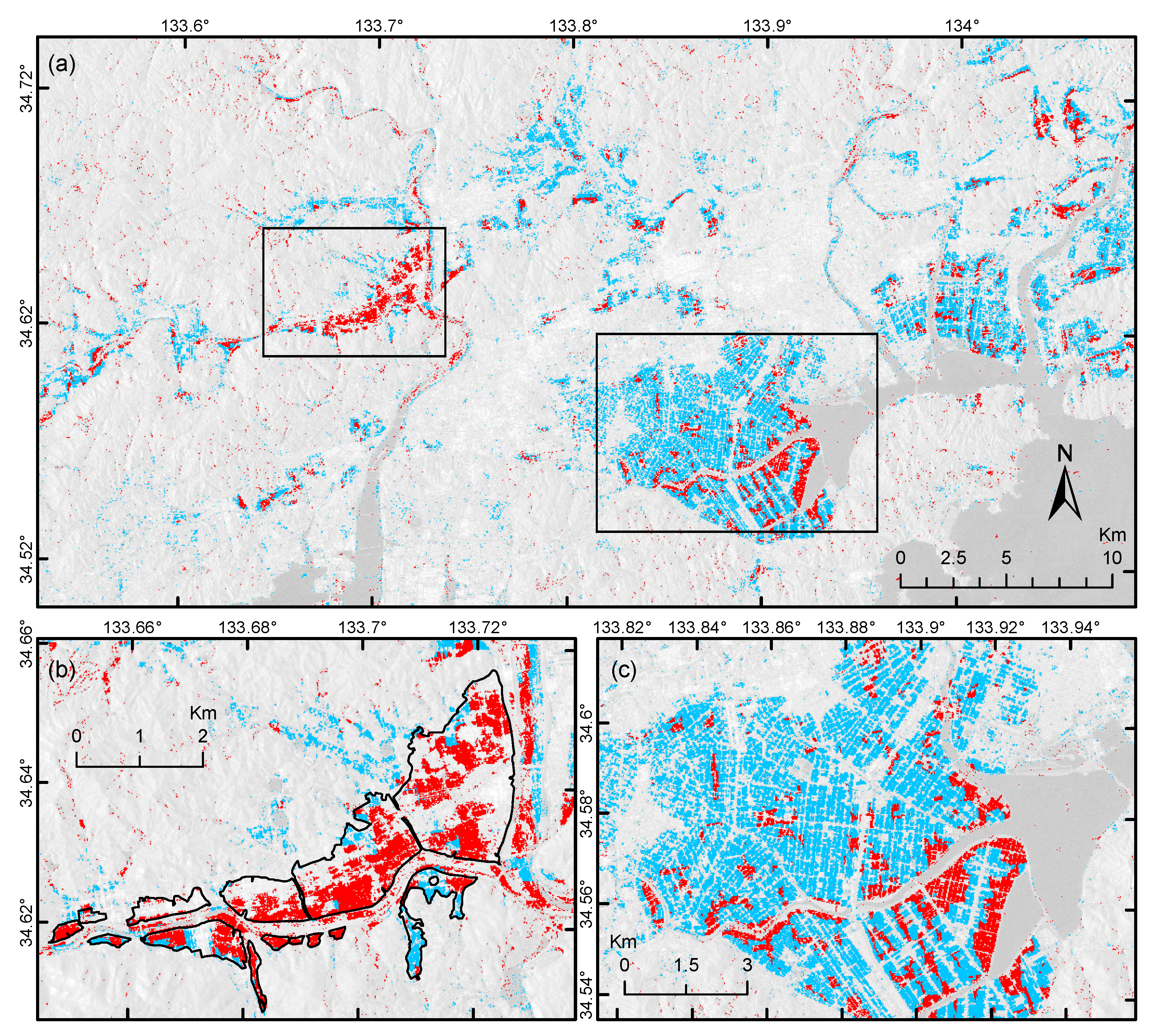

4.2. Classification of Flooded and Non-Flooded Agriculture Targets

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Truth data | EM: Flooded | EM: Non-Flooded | One-Class SVM: Flooded | One-Class SVM: Non-Flooded | Total |
|---|---|---|---|---|---|
| GSI | 327,464 | 60,012 | 327,306 | 65,170 | 392,476 |
| Survey | 319 | 28,476 | 308 | 28,487 | 28,795 |
| NF-area | 813,561 | 3,069,984 | 810,299 | 3,073,246 | 3,883,545 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Moya, L.; Endo, Y.; Okada, G.; Koshimura, S.; Mas, E. Drawback in the Change Detection Approach: False Detection during the 2018 Western Japan Floods. Remote Sens. 2019, 11, 2320. https://doi.org/10.3390/rs11192320
