UAS for Wetland Mapping and Hydrological Modeling

Abstract

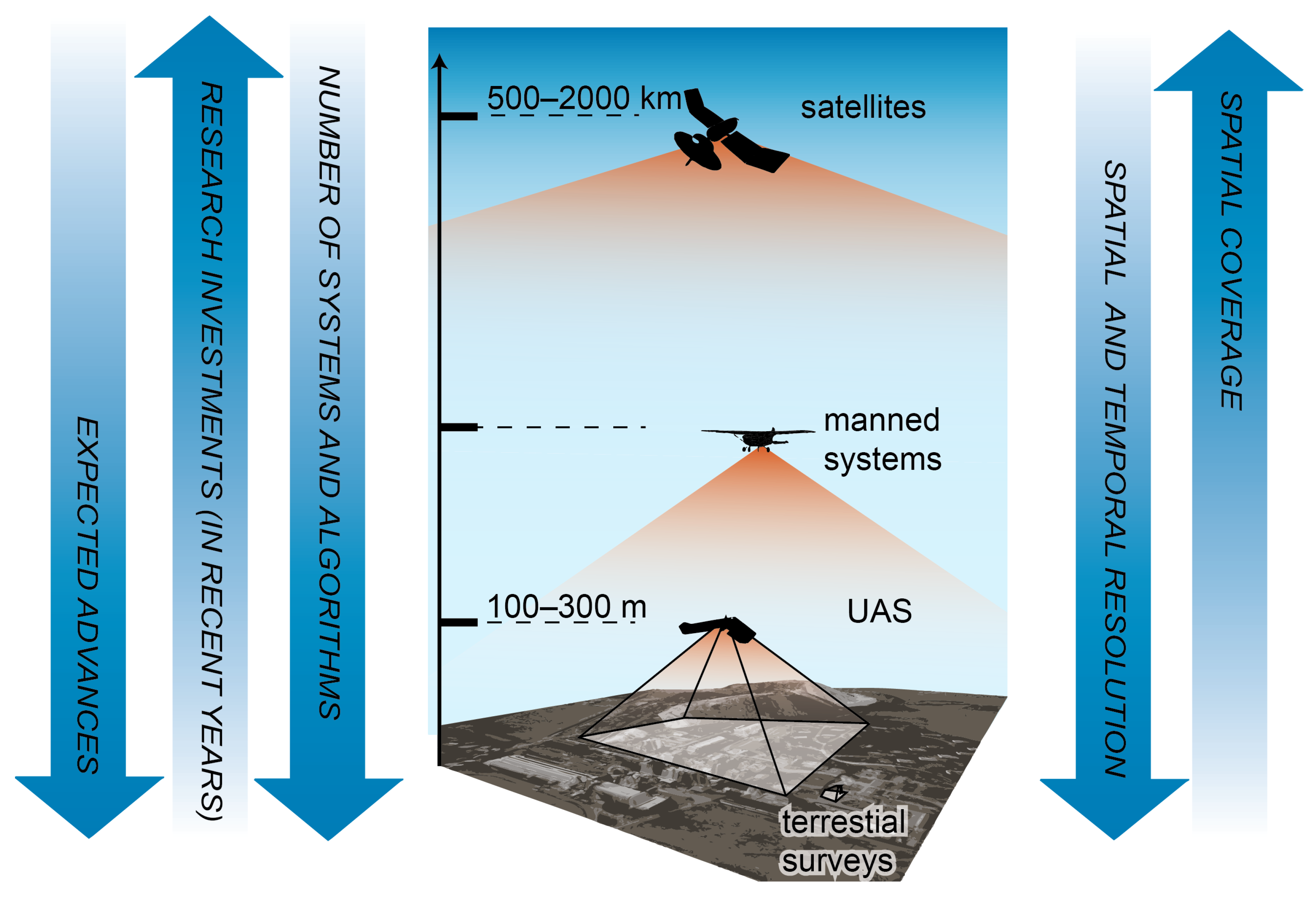

1. Introduction

2. Background

3. UAS Platforms

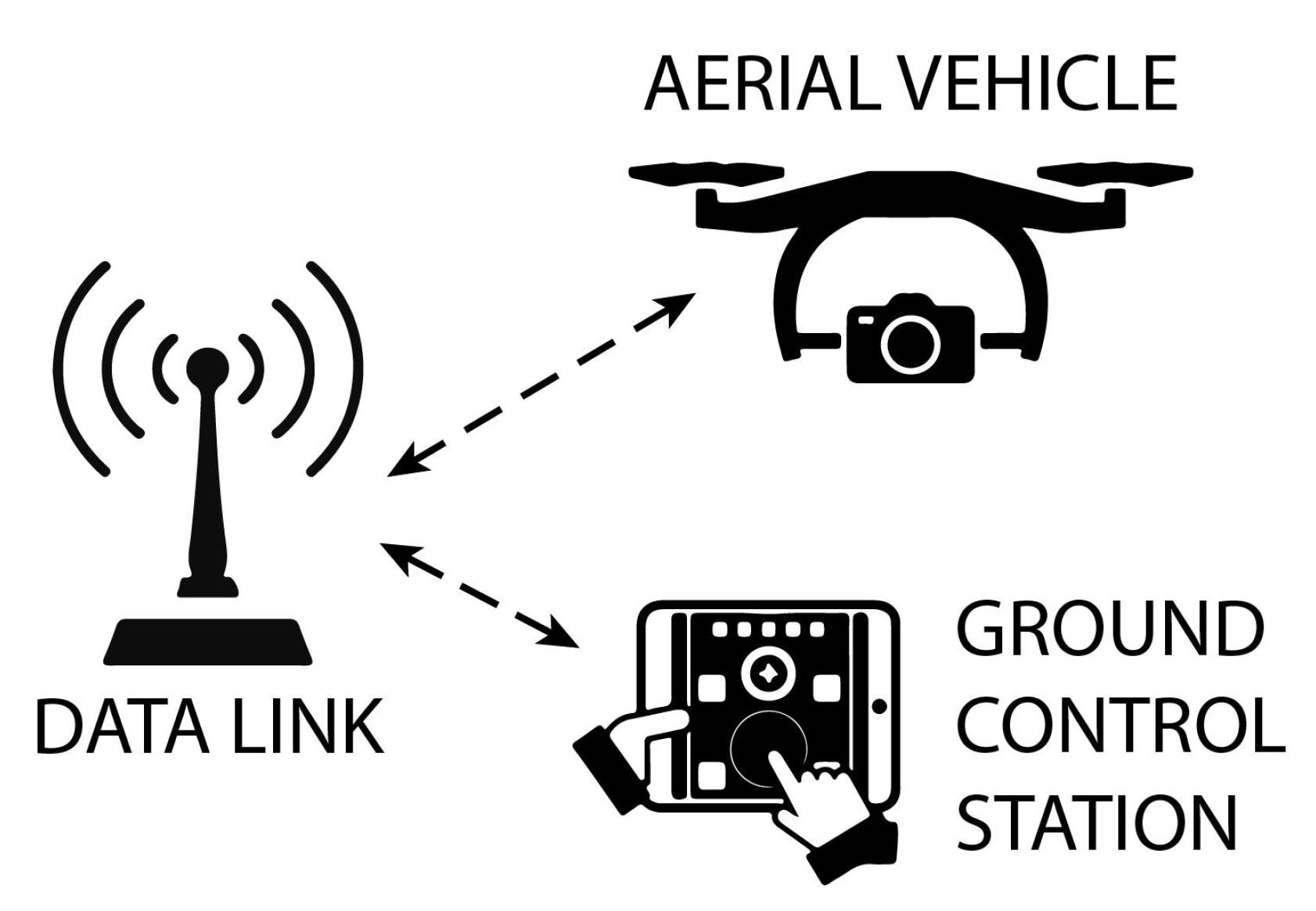

3.1. The System

3.2. Sensing Payloads

3.2.1. RGB (Visible-Band) Cameras

3.2.2. NIR and Multispectral Cameras

3.2.3. Hyperspectral Cameras

3.2.4. Thermal Sensors

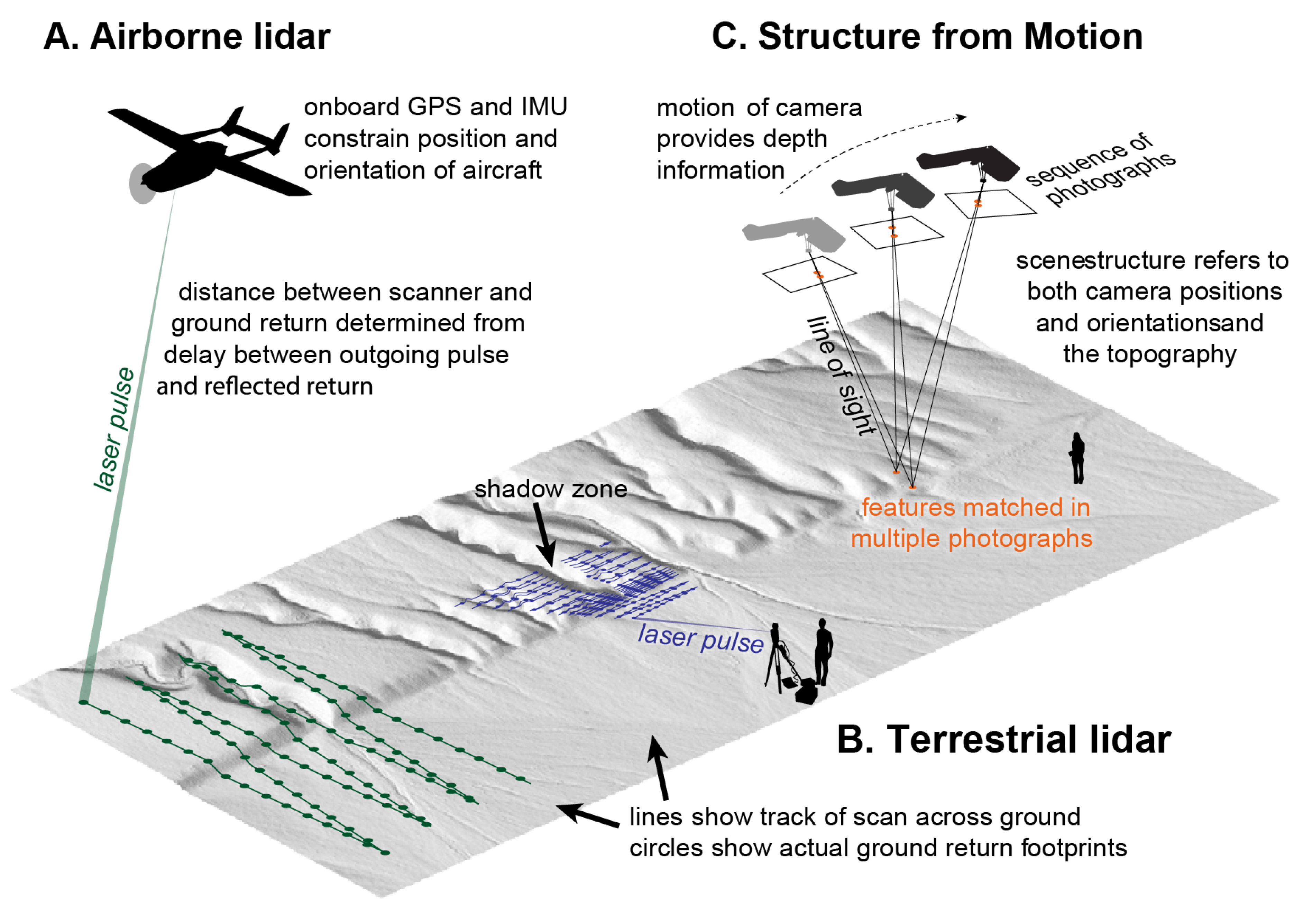

3.2.5. Laser Scanners

4. UAS-Based Spatial Data

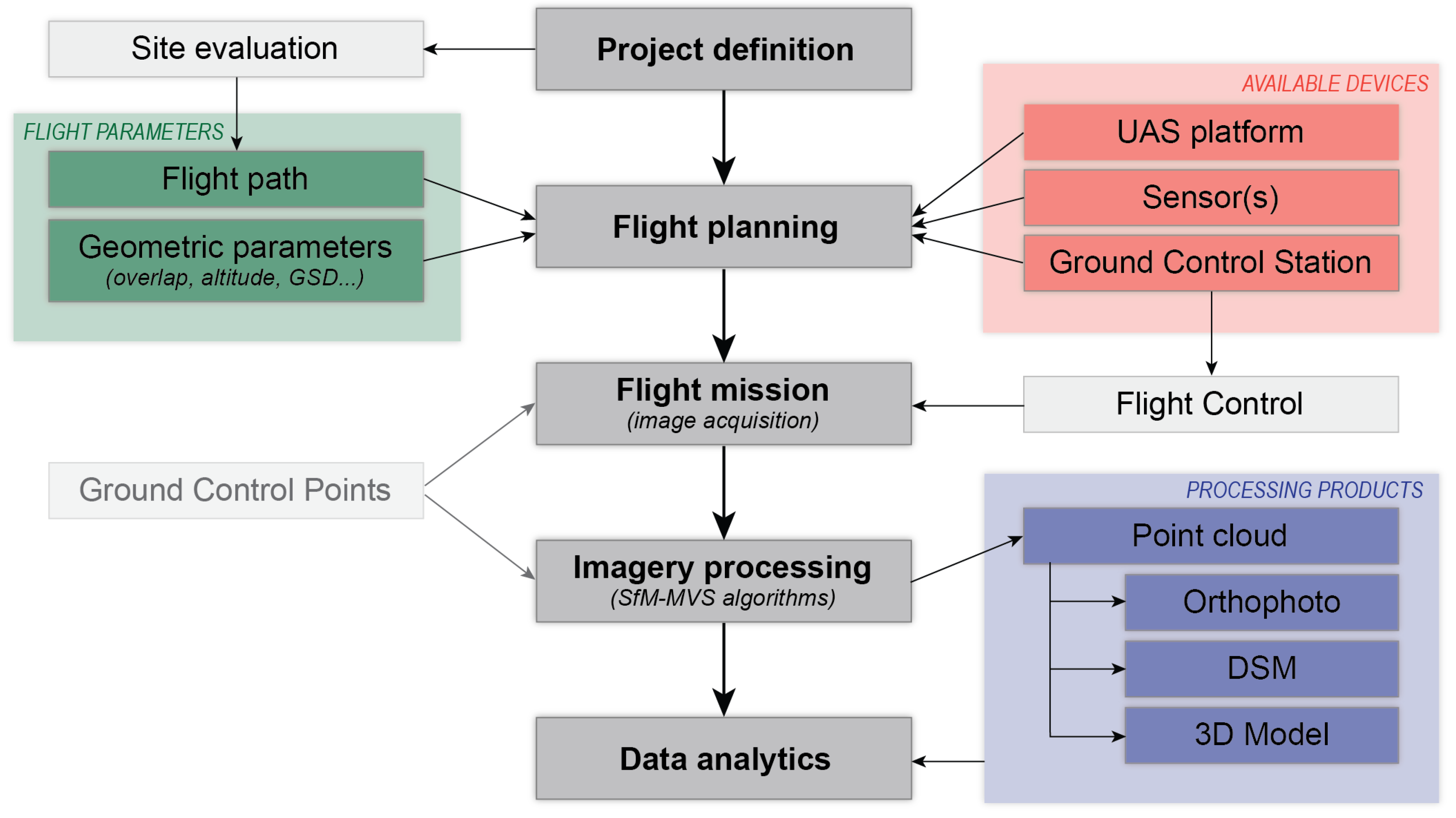

4.1. Data Acquisition Process

4.1.1. UAS Operation and Control

- Ground control: in this mode, the aircraft (also known as a Remotely Piloted Vehicle, “RPV”) requires constant input from an operator. A ground control station (GCS) is a control center located on land or at sea that provides aircraft status information (location, orientation, systems information, etc.) and accepts and transmits control inputs from the operator.

- Semi-autonomous: this is perhaps the most common control method. An operator manually controls the aircraft during pre-flight, take-off, landing, and a limited set of other maneuvers, while an autopilot flies the aircraft autonomously for the majority of the mission. For example, the vehicle may be programmed to fly between specified waypoints once in flight.

- Fully autonomous: the unmanned vehicle is controlled solely by the on-board computer, without human participation. No human input is necessary to perform an objective following the decision to take off. In this mode, the aircraft must be able to assess its own condition and status and to make decisions affecting its flight and mission.

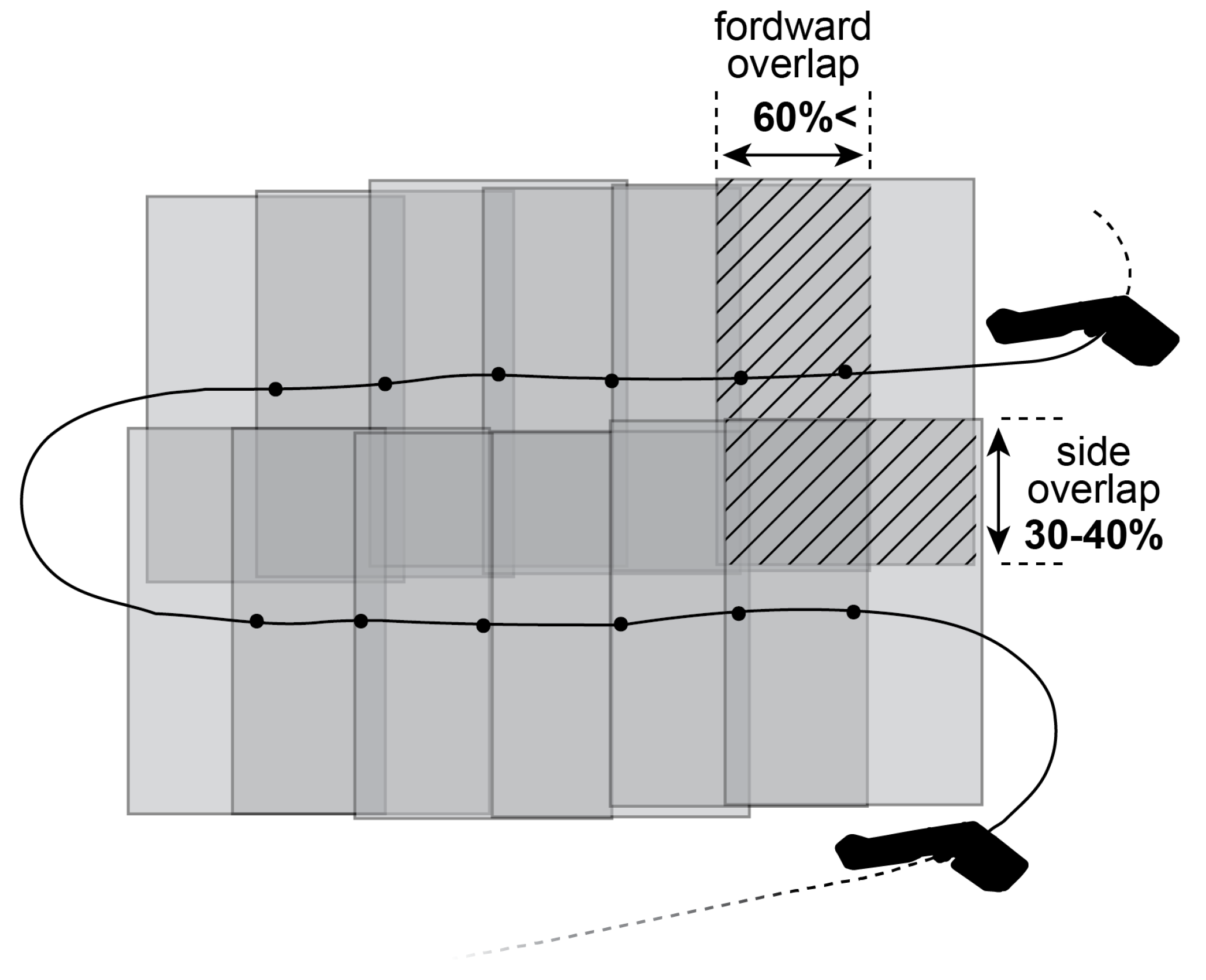

4.1.2. Photogrammetric Flight Planning

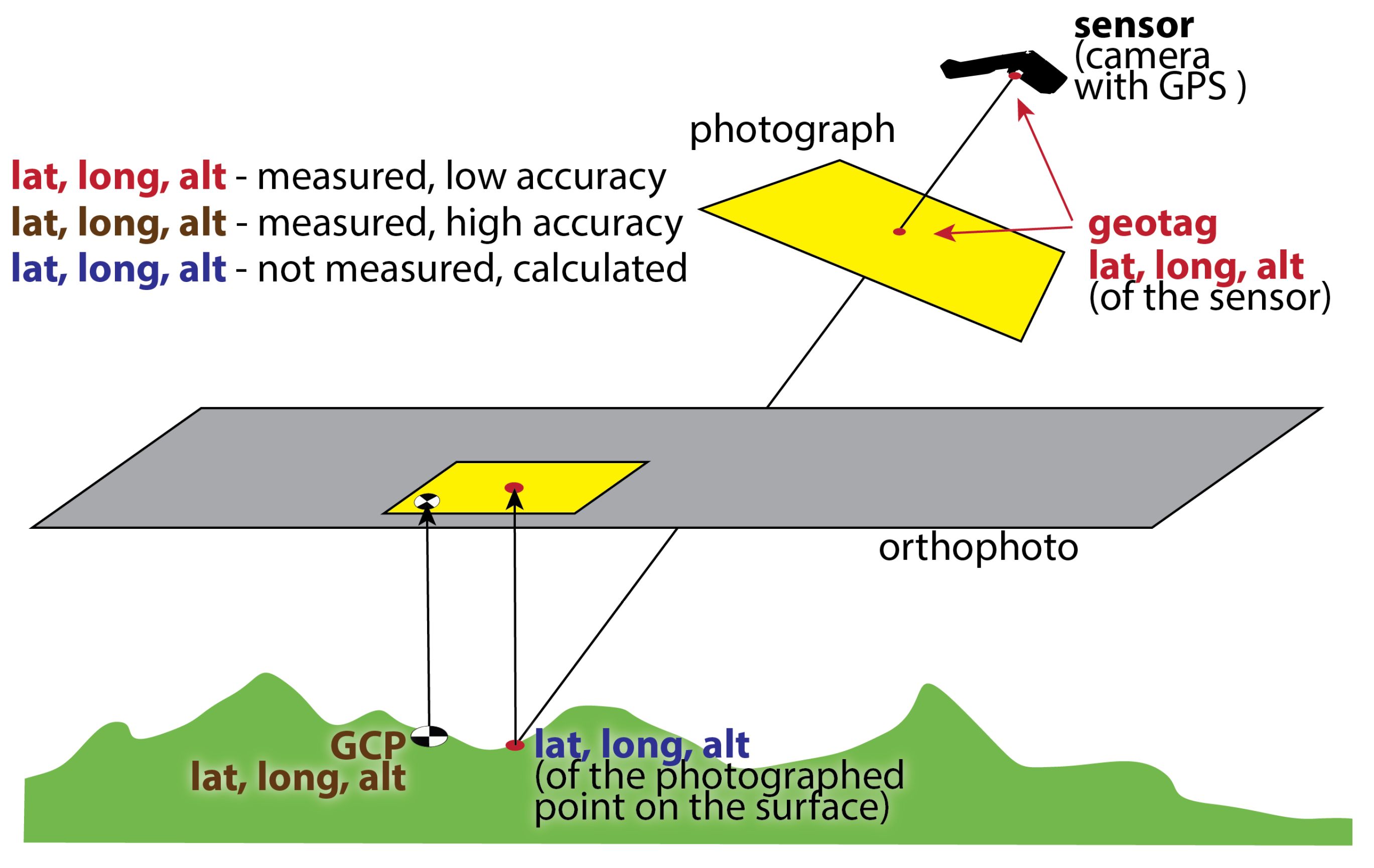

- Ground Control Points (GCPs): GCPs are points on the surface of the Earth with precisely known locations. During data processing, they are used to georeference the images from a project by tying the points identified in the imagery to their real-world coordinates. They need to be distributed evenly throughout the mapping area before the flight and measured with high-precision techniques such as differential GPS. The precision and accuracy of data processed with GCPs are very high, on the order of a couple of centimeters [49,50]. In the context of wetland mapping and monitoring, however, the use of GCPs has several shortcomings. First, deploying and surveying the targets that serve as GCPs may not be possible in wetland environments due to access issues. Moreover, dense vegetation can make it impossible to identify the targets within the acquired imagery.

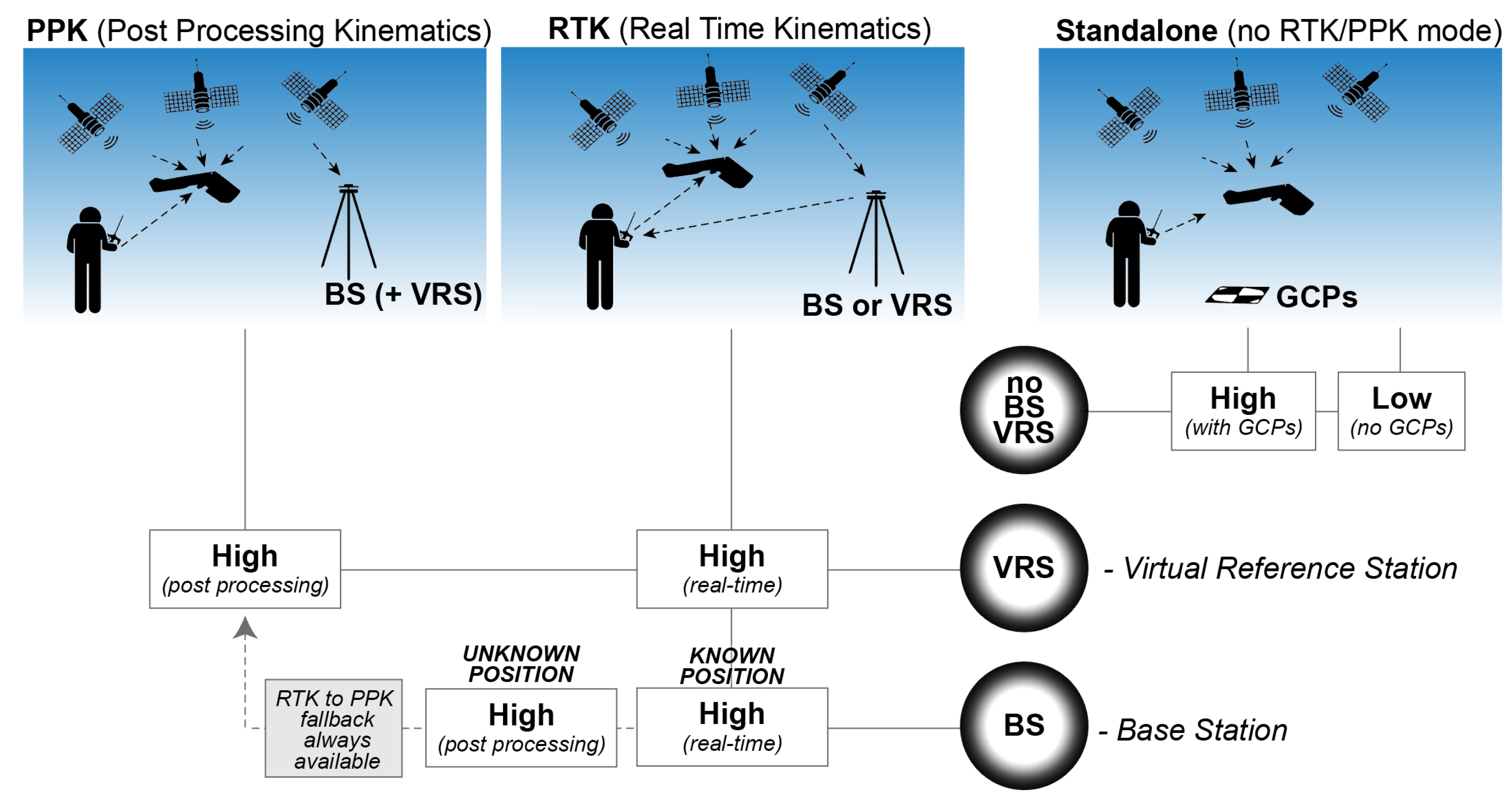

- Real Time Kinematics (RTK) and Post Processed Kinematics (PPK): RTK-enabled drones use differential GPS measurements to improve accuracy. The base station (or a Virtual Reference Station, VRS) constantly provides corrections for the UAS position data (see Figure 13). Successive GPS measurements at the base station are paired with the GPS measurements made on board the UAS. This pairing substantially reduces the errors common to the two measurements, usually resulting in errors on the order of a centimeter or less for the aircraft position relative to the base station. If the UAS operates in RTK mode, these corrections are applied in real time, which requires an uninterrupted connection between the drone and the base station throughout the survey. This is hard to achieve in survey areas where buildings, trees, or hills obstruct the signal exchange. The limitation is bypassed by a Post-Processed Kinematic (PPK) solution, in which the base station and the UAS collect location data independently and the pairing is performed during the data processing stage. The less accurate data from the GPS on board the UAS is corrected using the more accurate base station data, resulting in more precise geotags for the aerial imagery or other survey data.
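The GCP-based georeferencing described above can be illustrated with a small least-squares fit. This is a minimal sketch, not the procedure used by any particular photogrammetric package: it assumes a planar (2D) affine relationship between arbitrary model coordinates and surveyed world coordinates, whereas production software typically uses GCPs as 3D constraints during bundle adjustment. The function names and all coordinate values are hypothetical.

```python
import numpy as np


def fit_affine_2d(model_xy, world_xy):
    """Least-squares 2D affine transform mapping model_xy onto world_xy."""
    model_xy = np.asarray(model_xy, dtype=float)
    world_xy = np.asarray(world_xy, dtype=float)
    # Design matrix with one row [x, y, 1] per ground control point.
    design = np.hstack([model_xy, np.ones((len(model_xy), 1))])
    # Solve design @ params = world_xy for the 3x2 parameter matrix.
    params, *_ = np.linalg.lstsq(design, world_xy, rcond=None)
    return params


def apply_affine_2d(params, xy):
    """Transform model coordinates to world coordinates."""
    xy = np.asarray(xy, dtype=float)
    design = np.hstack([xy, np.ones((len(xy), 1))])
    return design @ params


# Hypothetical GCPs: positions in the unreferenced model and surveyed
# world coordinates (e.g., from differential GPS), here 0.1 m per model unit.
model = [(0, 0), (100, 0), (0, 100), (100, 100)]
world = [
    (500000.0, 4000000.0),
    (500010.0, 4000000.0),
    (500000.0, 4000010.0),
    (500010.0, 4000010.0),
]

params = fit_affine_2d(model, world)

# Georeference a new model point and report the fit residual at the GCPs.
check = apply_affine_2d(params, [(50, 50)])
residuals = apply_affine_2d(params, model) - np.asarray(world)
rmse = float(np.sqrt(np.mean(np.sum(residuals**2, axis=1))))
print(check, rmse)
```

The residual RMSE at the GCPs themselves tends to be optimistic; independent check points that were not used in the fit give a better indication of accuracy.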

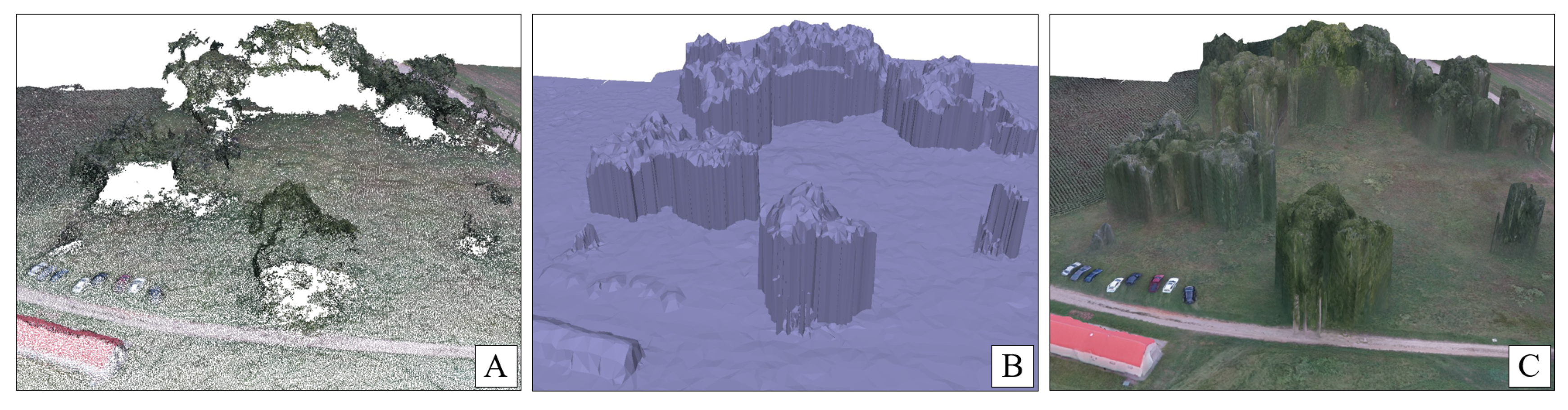
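The pairing of base-station and on-board measurements in a PPK workflow can be sketched as follows. This is a deliberately simplified, position-domain illustration: it assumes that the base station and the UAS share the same positioning error at any given time and that both logs carry timestamps on a common time scale. Real RTK/PPK engines work on raw carrier-phase observations rather than on computed positions. The function name `ppk_correct` and all numbers are hypothetical.

```python
import numpy as np


def ppk_correct(rover_t, rover_xyz, base_t, base_xyz, base_known_xyz):
    """Apply base-station error estimates to rover positions after the flight.

    base_t must be sorted in increasing order (required by np.interp).
    """
    rover_t = np.asarray(rover_t, dtype=float)
    rover_xyz = np.asarray(rover_xyz, dtype=float)
    base_t = np.asarray(base_t, dtype=float)
    base_xyz = np.asarray(base_xyz, dtype=float)
    # Error seen at the base: logged position minus surveyed position.
    base_err = base_xyz - np.asarray(base_known_xyz, dtype=float)
    # Pair the logs by time: interpolate each error component
    # to the rover timestamps.
    err_at_rover = np.column_stack(
        [np.interp(rover_t, base_t, base_err[:, k]) for k in range(3)]
    )
    # Assumption: the rover shares the base's error, so subtract it.
    return rover_xyz - err_at_rover


# Hypothetical logs: the base sits on a surveyed point and its logged
# position drifts over time; the rover logs one camera event at t = 5 s.
base_known = [10.0, 20.0, 5.0]
base_t = [0.0, 10.0]
base_xyz = [[11.0, 19.5, 7.0], [12.0, 19.0, 8.0]]
rover_t = [5.0]
rover_xyz = [[101.5, 199.25, 52.5]]

corrected = ppk_correct(rover_t, rover_xyz, base_t, base_xyz, base_known)
print(corrected)
```

Because the two logs are combined only after the flight, no radio link between the drone and the base station is needed during the survey, which is the practical advantage of PPK noted above.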

4.2. Surface Reconstruction and Structure from Motion (SfM)

4.2.1. Photogrammetric Processing Software

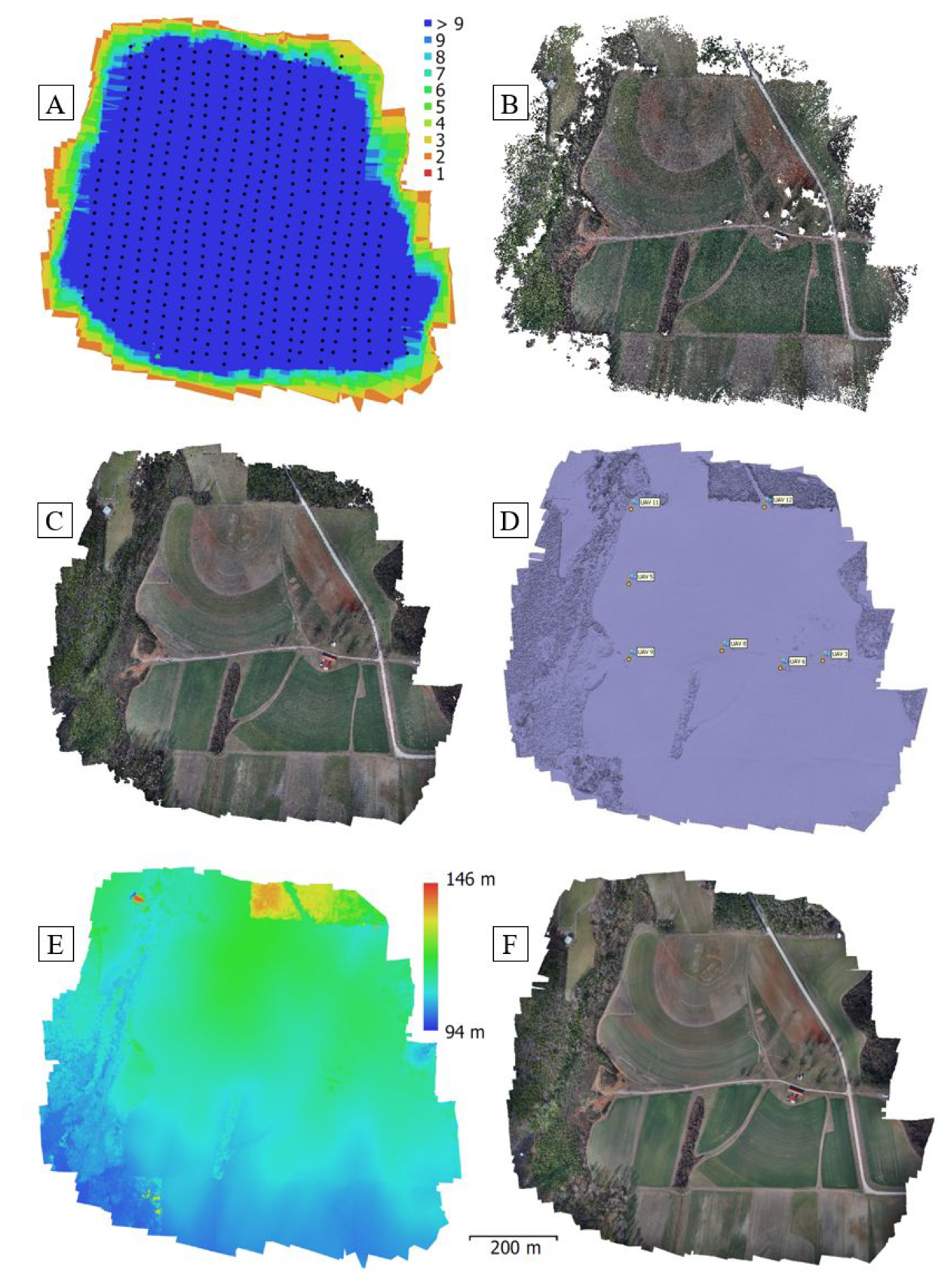

4.2.2. Processing Outputs

- Orthomosaic: a georeferenced orthophotomap can be created using one of several blending modes (for example, assigning each raster cell a color that represents the weighted average value of the corresponding pixels from the individual photos). The result looks like a single aerial image stitched together from all the individual pictures, but it is geometrically correct and can be used as cartographic material.

- Digital Surface Model (DSM): created by interpolating the elevation value of each raster cell from the points located within that cell. It is crucial to understand that processing RGB imagery produces a Digital Surface Model, not a bare-earth DEM (see Figure 7). That is to say, whereas lidar point clouds are often processed to remove canopy returns, this is not possible with an image-derived DSM.

- 3D Mesh: a triangulated irregular network created by connecting the vertices of the dense point cloud (see Figure 15D); it can also be exported with texture and viewed as a colored 3D model.
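The DSM gridding step described in the list above can be sketched as a simple binning operation. This is a minimal sketch under stated assumptions: each raster cell takes the mean elevation of the points that fall inside it, cells without points stay empty (NaN), and row 0 corresponds to the minimum y coordinate. Photogrammetric packages offer other interpolation schemes; the function name `rasterize_dsm` and the sample points are hypothetical.

```python
import numpy as np


def rasterize_dsm(points, cell_size):
    """Grid an (N, 3) point cloud into a raster of mean elevations."""
    pts = np.asarray(points, dtype=float)
    x_min, y_min = pts[:, 0].min(), pts[:, 1].min()
    # Column/row index of the cell that contains each point.
    cols = np.floor((pts[:, 0] - x_min) / cell_size).astype(int)
    rows = np.floor((pts[:, 1] - y_min) / cell_size).astype(int)
    n_rows, n_cols = rows.max() + 1, cols.max() + 1

    z_sum = np.zeros((n_rows, n_cols))
    count = np.zeros((n_rows, n_cols))
    # Unbuffered accumulation so repeated cell indices are all counted.
    np.add.at(z_sum, (rows, cols), pts[:, 2])
    np.add.at(count, (rows, cols), 1)

    # Cells with no points remain NaN (no elevation information).
    dsm = np.full((n_rows, n_cols), np.nan)
    filled = count > 0
    dsm[filled] = z_sum[filled] / count[filled]
    return dsm


# Hypothetical dense-cloud points (x, y, z) in meters.
cloud = [
    (0.2, 0.2, 10.0),
    (0.8, 0.4, 12.0),
    (1.5, 0.5, 20.0),
    (0.5, 1.5, 30.0),
]
dsm = rasterize_dsm(cloud, cell_size=1.0)
print(dsm)
```

Because a point cloud derived from RGB imagery contains canopy and other above-ground points, the resulting grid is a surface model rather than a bare-earth model.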

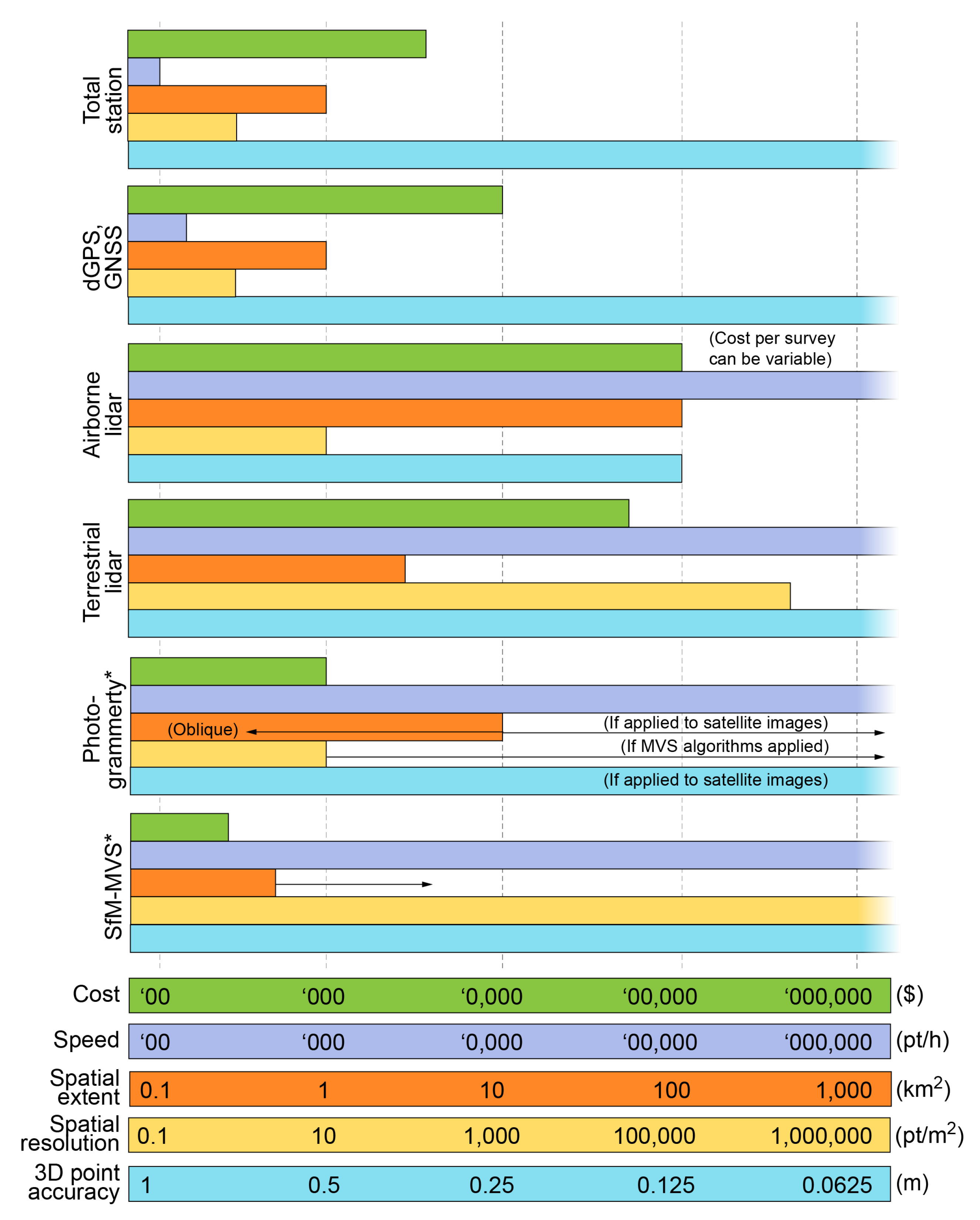

4.3. Cost and Time Effectiveness

- low initial investment cost;

- low mobilization cost;

- decreased time required for data acquisition.

- challenges for acquiring and processing data over large spatial scales (legal and technological);

- repeatability depends on factors outside of the control of the surveyor;

- more affordable solutions (SfM from RGB sensors, multispectral data) limit the application range.

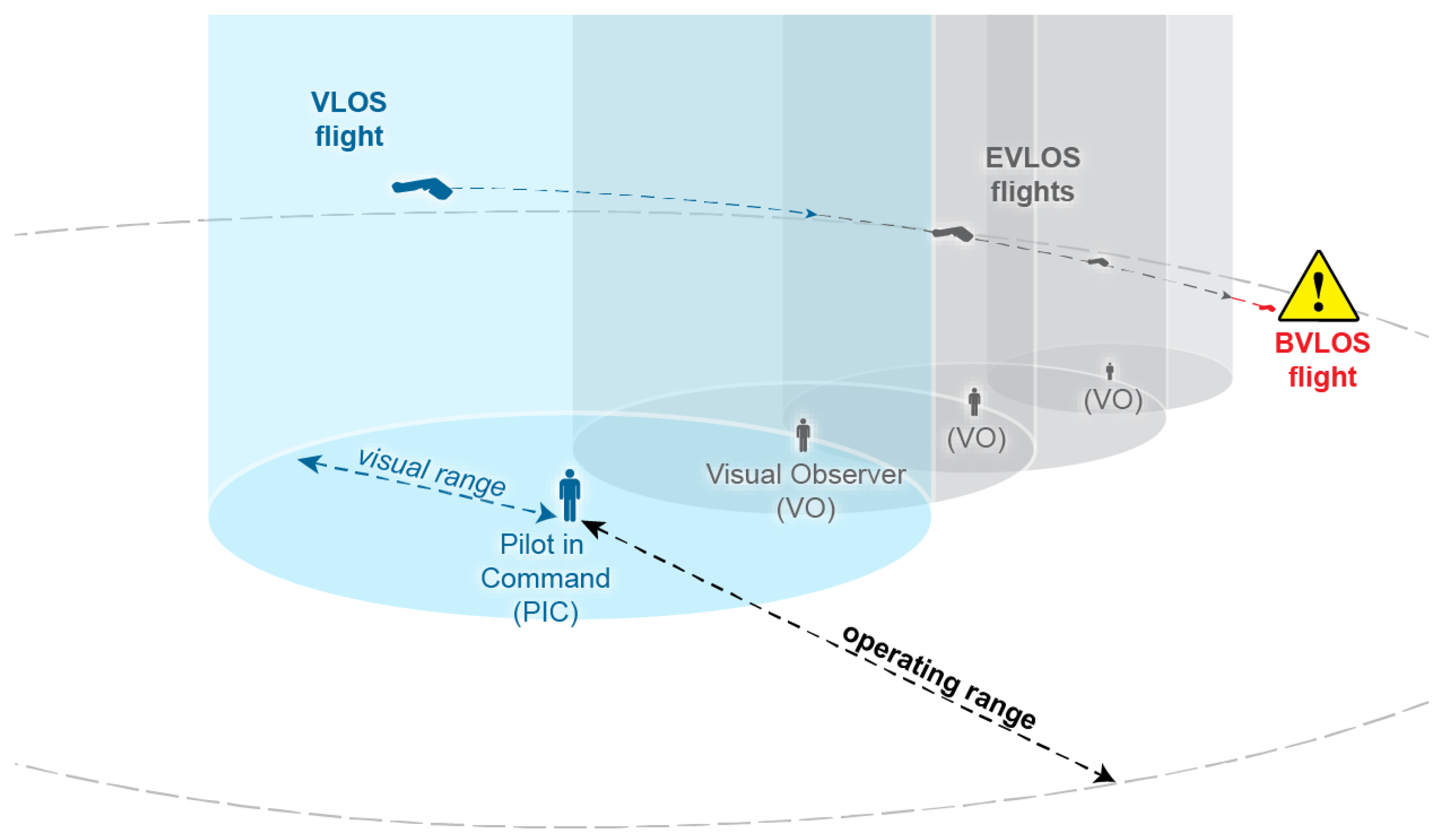

4.4. Legal Constraints

5. Applications for Wetland Mapping and Hydrologic Modeling

6. Discussion and Conclusions

- The key advantage of UAS is the ability to capture spatial data at high spatial and temporal resolution with time and cost efficiency. By filling the gap between time-consuming terrain measurements and expensive, sophisticated satellite and airborne data collection, UAS benefit a vast array of research, including environmental monitoring and modeling at unprecedented resolution and ease (see Section 2 and Section 4.3).

- UAS-derived data, bolstered by environmental sensor networks, satellite-based remote sensing, and high-performance numerical modeling [104], bring new opportunities in hydrology and related fields: modeling, prediction, and the overall understanding of hydrological processes are entering a new era thanks to the availability and affordability of reliable high-resolution terrain data (see Section 5).

- The most common type of UAS used for wetland mapping and hydrological modeling is the rotary-wing platform, and the prevailing sensor is an RGB camera (see Section 5). This is due to the affordability and ease of use of both technologies. There is room to develop and advance other sensors and to take advantage of fixed-wing UAS capabilities (see Section 3.1).

- An ample choice of processing software and analytics algorithms makes it possible to investigate various hydrological phenomena. However, Structure from Motion algorithms struggle to reconstruct homogeneous and moving scene elements, which makes it challenging to capture water surfaces (either still and homogeneous, or moving) and snow surfaces (homogeneous), both crucial for some aspects of hydrological studies.

- Another shortcoming of optical sensors for hydrological research is their inability to penetrate vegetation. Representing the ground surface is crucial for hydrological modeling and wetland studies, especially in very densely vegetated wetland areas. This can be addressed with lidar, but lidar sensors, like hyperspectral sensors, face the challenge of maintaining good data quality as miniaturization progresses. An additional barrier is the relatively high cost of these sensors (see Section 3.2).

- The issue of scale can also be a disadvantage of UAS. Many wetlands cover significant areas, and capturing the whole basin is very important in hydrological modeling. While the technology allows for long flights, legislation prohibits operations beyond visual line of sight (BVLOS) without a waiver, so multiple flight missions are needed in wetland areas that are commonly hard to reach. The introduction of extended visual line of sight (EVLOS) operations gives hope for a future change in the law or an easier waiver process (see Section 4.4).

- Along with all the advantages of UAS use come safety and privacy concerns. It is crucial for each UAS user to be informed, to follow state and federal legislation, and to ensure proper equipment maintenance, not only to avoid collisions but also to maximize the benefits of UAS use while respecting privacy.

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AGL | Above Ground Level |
| ASL | Above Sea Level |
| AOI | Area of Interest |
| BVLOS | Beyond Visual Line of Sight |
| CIR | Color-Infrared |
| dGPS | differential Global Positioning System |
| DEM | Digital Elevation Model |
| DoD | Department of Defense |
| DSM | Digital Surface Model |
| DTM | Digital Terrain Model |
| EVLOS | Extended Visual Line of Sight |
| FAA | Federal Aviation Administration |
| GCP | Ground Control Point |
| GCS | Ground Control Station |
| GNSS | Global Navigation Satellite System |
| GPS | Global Positioning System |
| GSD | Ground Sampling Distance |
| IMU | Inertial Measurement Unit |
| INS | Inertial Navigation System |
| lidar | light detection and ranging |
| MVS | Multiple View Stereo |
| NDVI | Normalized Difference Vegetation Index |
| NIR | Near-infrared |
| OBIA | Object-based Image Analysis |
| PPK | Post Processed Kinematics |
| RGB | Red Green Blue |
| RTK | Real Time Kinematics |
| SAR | Synthetic Aperture Radar |
| SfM | Structure from Motion |
| STOL | Short Take-Off and Landing |
| UAS | Unmanned Aerial System |
| UAV | Unmanned Aerial Vehicle |
| VLOS | Visual Line of Sight |
| VTOL | Vertical Take-Off and Landing |

References

1. Madden, M.; Jordan, T.; Bernardes, S.; Cotten, D.L.; O’Hare, N.; Pasqua, A. Unmanned aerial systems and structure from motion revolutionize wetlands mapping. In Remote Sensing of Wetlands: Applications and Advances; CRC Press: Boca Raton, FL, USA, 2015; pp. 195–220.

2. Belward, A.S.; Skøien, J.O. Who launched what, when and why; trends in global land-cover observation capacity from civilian earth observation satellites. ISPRS J. Photogramm. Remote Sens. 2015, 103, 115–128.

3. Wekerle, T.; Filho, J.B.P.; da Costa, L.E.V.L.; Trabasso, L.G. Status and trends of smallsats and their launch vehicles—An up-to-date review. J. Aerosp. Technol. Manag. 2017, 9, 269–286.

4. McCabe, M.F.; Aragon, B.; Houborg, R.; Mascaro, J. CubeSats in hydrology: Ultrahigh-resolution insights into vegetation dynamics and terrestrial evaporation. Water Resour. Res. 2017, 53, 10017–10024.

5. Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Pajuelo Madrigal, V.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the use of unmanned aerial systems for environmental monitoring. Remote Sens. 2018, 10, 641.

6. Pajares, G. Overview and current status of remote sensing applications based on unmanned aerial vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–330.

7. Matese, A.; Toscano, P.; di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, Aircraft and Satellite Remote Sensing Platforms for Precision Viticulture. Remote Sens. 2015, 7, 2971–2990.

8. Dustin, M.C. Monitoring Parks with Inexpensive UAVs: Cost Benefits Analysis for Monitoring and Maintaining Parks Facilities. Ph.D. Thesis, University of Southern California, Los Angeles, CA, USA, August 2015.

9. Watts, A.C.; Ambrosia, V.G.; Hinkley, E.A. Unmanned aircraft systems in remote sensing and scientific research: Classification and considerations of use. Remote Sens. 2012, 4, 1671–1692.

10. van der Wal, T.; Abma, B.; Viguria, A.; Prévinaire, E.; Zarco-Tejada, P.J.; Serruys, P.; van Valkengoed, E.; van der Voet, P. Fieldcopter: Unmanned aerial systems for crop monitoring services. In Precision Agriculture ’13; Wageningen Academic Publishers: Wageningen, The Netherlands, 2013; pp. 169–175.

11. Przybilla, H.; Wester-Ebbinghaus, W. Bildflug mit ferngelenktem Kleinflugzeug. Bildmessung und Luftbildwesen; Zeitschrift für Photogrammetrie und Fernerkundung; Herbert Wichmann Verlag: Karlsruhe, Germany, 1979; pp. 137–142.

12. Eisenbeiß, H. UAV Photogrammetry. Ph.D. Thesis, ETH Zurich, Zurich, Switzerland, 2009.

13. Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

14. Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15.

15. Fahlstrom, P.G.; Gleason, T.J. Introduction to UAV Systems, Fourth Edition; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2012.

16. Austin, R. Unmanned Aircraft Systems: UAVs Design, Development and Deployment; John Wiley & Sons, Ltd.: Chichester, UK, 2010.

17. Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

18. Qi, S.; Wang, F.; Jing, L. Unmanned aircraft system pilot/operator qualification requirements and training study. MATEC Web Conf. 2018, 179, 03006.

19. Senthilnath, J.; Kandukuri, M.; Dokania, A.; Ramesh, K. Application of UAV imaging platform for vegetation analysis based on spectral-spatial methods. Comput. Electron. Agric. 2017, 140, 8–24.

20. Husson, E.; Ecke, F.; Reese, H. Comparison of manual mapping and automated object-based image analysis of non-submerged aquatic vegetation from very-high-resolution UAS images. Remote Sens. 2016, 8, 724.

21. Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral remote sensing from unmanned aircraft: Image processing workflows and applications for rangeland environments. Remote Sens. 2011, 3, 2529–2551.

22. Remondino, F. Heritage Recording and 3D Modeling with Photogrammetry and 3D Scanning. Remote Sens. 2011, 3, 1104–1138.

23. Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

24. Turner, I.L.; Harley, M.D.; Drummond, C.D. UAVs for coastal surveying. Coastal Eng. 2016, 114, 19–24.

25. Nebiker, S.; Lack, N.; Abächerli, M.; Läderach, S. Light-weight multispectral UAV sensors and their capabilities for predicting grain yield and detecting plant diseases. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B1, 963–970.

26. Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136.

27. Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J. Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sens. 2017, 9, 1110.

28. Kostrzewa, J.; Meyer, W.H.; Laband, S.; Terre, W.A.; Petrovich, P.; Swanson, K.; Sundra, C.; Sener, W.; Wilmott, J. Infrared microsensor payload for miniature unmanned aerial vehicles. In Unattended Ground Sensor Technologies and Applications V; Carapezza, E.M., Ed.; SPIE: Bellingham, WA, USA, 2003.

29. Khanal, S.; Fulton, J.; Shearer, S. An overview of current and potential applications of thermal remote sensing in precision agriculture. Comput. Electron. Agric. 2017, 139, 22–32.

30. Shafian, S.; Maas, S. Index of soil moisture using raw Landsat image digital count data in Texas High Plains. Remote Sens. 2015, 7, 2352–2372.

31. Hassan-Esfahani, L.; Torres-Rua, A.; Jensen, A.; McKee, M. Assessment of surface soil moisture using high-resolution multi-spectral imagery and artificial neural networks. Remote Sens. 2015, 7, 2627–2646.

32. Soliman, A.; Heck, R.; Brenning, A.; Brown, R.; Miller, S. Remote sensing of soil moisture in vineyards using airborne and ground-based thermal inertia data. Remote Sens. 2013, 5, 3729–3748.

33. Osroosh, Y.; Peters, R.T.; Campbell, C.S.; Zhang, Q. Automatic irrigation scheduling of apple trees using theoretical crop water stress index with an innovative dynamic threshold. Comput. Electron. Agric. 2015, 118, 193–203.

34. Gonzalez-Dugo, V.; Zarco-Tejada, P.; Nicolás, E.; Nortes, P.A.; Alarcón, J.J.; Intrigliolo, D.S.; Fereres, E. Using high resolution UAV thermal imagery to assess the variability in the water status of five fruit tree species within a commercial orchard. Precis. Agric. 2013, 14, 660–678.

35. O’Shaughnessy, S.A.; Evett, S.R.; Colaizzi, P.D.; Howell, T.A. A crop water stress index and time threshold for automatic irrigation scheduling of grain sorghum. Agric. Water Manag. 2012, 107, 122–132.

36. Wang, B.; Shi, W.; Liu, E. Robust methods for assessing the accuracy of linear interpolated DEM. Int. J. Appl. Earth Observ. Geoinform. 2015, 34, 198–206.

37. Mahlein, A.K. Plant disease detection by imaging sensors—Parallels and specific demands for precision agriculture and plant phenotyping. Plant Dis. 2016, 100, 241–251.

38. Calderón, R.; Montes-Borrego, M.; Landa, B.B.; Navas-Cortés, J.A.; Zarco-Tejada, P.J. Detection of downy mildew of opium poppy using high-resolution multi-spectral and thermal imagery acquired with an unmanned aerial vehicle. Precis. Agric. 2014, 15, 639–661.

39. Oerke, E.C.; Fröhling, P.; Steiner, U. Thermographic assessment of scab disease on apple leaves. Precis. Agric. 2010, 12, 699–715.

40. Heritage, G.L.; Milan, D.J.; Large, A.R.G.; Fuller, I.C. Influence of survey strategy and interpolation model on DEM quality. Geomorphology 2009, 112, 334–344.

41. Alho, P.; Kukko, A.; Hyyppä, H.; Kaartinen, H.; Hyyppä, J.; Jaakkola, A. Application of boat-based laser scanning for river survey. Earth Surf. Process. Landf. 2009, 34, 1831–1838.

42. Hodge, R.; Brasington, J.; Richards, K. Analysing laser-scanned digital terrain models of gravel bed surfaces: Linking morphology to sediment transport processes and hydraulics. Sedimentology 2009, 56, 2024–2043.

43. Lang, M.; Bourgeau-Chavez, L.; Tiner, R.; Klemas, V. Advances in remotely sensed data and techniques for wetland mapping and monitoring. In Remote Sensing of Wetlands; CRC Press: Boca Raton, FL, USA, 2015; pp. 79–116.

44. Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 2012, 4, 1519–1543.

45. Mitsch, W.J.; Gosselink, J.G. Wetlands; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2007.

46. Hogg, A.R.; Holland, J. An evaluation of DEMs derived from LiDAR and photogrammetry for wetland mapping. For. Chron. 2008, 84, 840–849.

47. Snyder, G.I.; Sugarbaker, L.J.; Jason, A.L.; Maune, D.F. National Requirements for Improved Elevation Data; USGS: Reston, VA, USA, 2014.

48. Miziński, B.; Niedzielski, T. Fully-automated estimation of snow depth in near real time with the use of unmanned aerial vehicles without utilizing ground control points. Cold Reg. Sci. Technol. 2017, 138, 63–72.

49. Sanz-Ablanedo, E.; Chandler, J.; Rodríguez-Pérez, J.; Ordóñez, C. Accuracy of unmanned aerial vehicle (UAV) and SfM photogrammetry survey as a function of the number and location of ground control points used. Remote Sens. 2018, 10, 1606.

50. Hugenholtz, C.; Brown, O.; Walker, J.; Barchyn, T.; Nesbit, P.; Kucharczyk, M.; Myshak, S. Spatial accuracy of UAV-derived orthoimagery and topography: Comparing photogrammetric models processed with direct geo-referencing and ground control points. GEOMATICA 2016, 70, 21–30.

51. Lane, S.N.; Richards, K.S.; Chandler, J.H. Developments in monitoring and modelling small-scale river bed topography. Earth Surf. Process. Landf. 1994, 19, 349–368.

52. Chandler, J. Effective application of automated digital photogrammetry for geomorphological research. Earth Surf. Process. Landf. 1999, 24, 51–63.

53. Westaway, R.M.; Lane, S.N.; Hicks, D.M. The development of an automated correction procedure for digital photogrammetry for the study of wide, shallow, gravel-bed rivers. Earth Surf. Process. Landf. 2000, 25, 209–226.

54. Bennett, G.; Molnar, P.; Eisenbeiss, H.; McArdell, B. Erosional power in the Swiss Alps: Characterization of slope failure in the Illgraben. Earth Surf. Process. Landf. 2012, 37, 1627–1640.

55. Brasington, J.; Rumsby, B.T.; McVey, R.A. Monitoring and modelling morphological change in a braided gravel-bed river using high resolution GPS-based survey. Earth Surf. Process. Landf. 2000, 25, 973–990.

56. Micheletti, N.; Chandler, J.H.; Lane, S.N. Structure from Motion (SfM) Photogrammetry. In Geomorphological Techniques; Cook, S.J., Clarke, L.E., Nield, J.M., Eds.; British Society for Geomorphology: London, UK, 2015.

57. Ullman, S.; Brenner, S. The interpretation of structure from motion. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1979, 203, 405–426.

58. Seitz, S.; Curless, B.; Diebel, J.; Scharstein, D.; Szeliski, R. A comparison and evaluation of multi-view stereo reconstruction algorithms. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Volume 1 (CVPR’06), New York, NY, USA, 17–22 June 2006; IEEE: Piscataway, NJ, USA, 2006.

59. Fonstad, M.A.; Dietrich, J.T.; Courville, B.C.; Jensen, J.L.; Carbonneau, P.E. Topographic structure from motion: A new development in photogrammetric measurement. Earth Surf. Process. Landf. 2013, 38, 421–430.

60. Gomez, C.; Hayakawa, Y.; Obanawa, H. A study of Japanese landscapes using structure from motion derived DSMs and DEMs based on historical aerial photographs: New opportunities for vegetation monitoring and diachronic geomorphology. Geomorphology 2015, 242, 11–20.

61. Carrivick, J.; Smith, M.; Quincey, D. Structure from Motion in the Geosciences; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2016.

62. Smith, M.; Carrivick, J.; Quincey, D. Structure from motion photogrammetry in physical geography. Prog. Phys. Geogr. Earth Environ. 2015, 40, 247–275.

63. James, M.R.; Robson, S. Straightforward reconstruction of 3D surfaces and topography with a camera: Accuracy and geoscience application. J. Geophys. Res. Earth Surf. 2012, 117, 1–17.

64. Verhoeven, G.; Doneus, M.; Briese, C.; Vermeulen, F. Mapping by matching: A computer vision-based approach to fast and accurate georeferencing of archaeological aerial photographs. J. Archaeol. Sci. 2012, 39, 2060–2070.

65. Westoby, M.; Brasington, J.; Glasser, N.F.; Hambrey, M.; Reynolds, J. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

66. Snavely, N.; Seitz, S.M.; Szeliski, R. Modeling the world from internet photo collections. Int. J. Comput. Vis. 2007, 80, 189–210.

67. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

68. Tareen, S.A.K.; Saleem, Z. A comparative analysis of SIFT, SURF, KAZE, AKAZE, ORB, and BRISK. In Proceedings of the 2018 IEEE International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018; IEEE: Piscataway, NJ, USA, 2018.

69. Wu, M. Research on optimization of image fast feature point matching algorithm. EURASIP J. Image Video Process. 2018, 2018.

70. Işık, Ş. A Comparative evaluation of well-known feature detectors and descriptors. Int. J. Appl. Math. Electron. Comput. 2014, 3, 1–6.

71. Strecha, C.; von Hansen, W.; Gool, L.V.; Fua, P.; Thoennessen, U. On benchmarking camera calibration and multi-view stereo for high resolution imagery. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 24–26 June 2008; IEEE: Piscataway, NJ, USA, 2008.

72. Johnson, K.; Nissen, E.; Saripalli, S.; Arrowsmith, J.R.; McGarey, P.; Scharer, K.; Williams, P.; Blisniuk, K. Rapid mapping of ultrafine fault zone topography with structure from motion. Geosphere 2014, 10, 969–986.

73. Oliensis, J. A critique of structure-from-motion algorithms. Comput. Vis. Image Underst. 2000, 80, 172–214.

74. Wenger, S.M.B. Evaluation of SfM against Traditional Stereophotogrammetry and Lidar Techniques for DSM Creation in Various Land Cover Areas. Ph.D. Thesis, Stellenbosch University, Stellenbosch, South Africa, December 2016.

75. Jenson, S.K. Applications of hydrologic information automatically extracted from digital elevation models. Hydrolog. Process. 1991, 5, 31–44.

76. Kenward, T. Effects of digital elevation model accuracy on hydrologic predictions. Remote Sens. Environ. 2000, 74, 432–444.

77. Bakuła, K.; Salach, A.; Wziątek, D.Z.; Ostrowski, W.; Górski, K.; Kurczyński, Z. Evaluation of the accuracy of lidar data acquired using a UAS for levee monitoring: Preliminary results. Int. J. Remote Sens. 2017, 38, 2921–2937.

78. Thiel, C.; Schmullius, C. Comparison of UAV photograph-based and airborne lidar-based point clouds over forest from a forestry application perspective. Int. J. Remote Sens. 2017, 38, 2411–2426.

79. Wallace, L.; Lucieer, A.; Malenovskỳ, Z.; Turner, D.; Vopěnka, P. Assessment of forest structure using two UAV techniques: A comparison of airborne laser scanning and structure from motion (SfM) point clouds. Forests 2016, 7, 62.

80. Fitzpatrick, B.P. Unmanned Aerial Systems for Surveying and Mapping: Cost Comparison of UAS versus Traditional Methods of Data Acquisition. Ph.D. Thesis, University of Southern California, Los Angeles, CA, USA, August 2016.

81. Whitehead, K.; Hugenholtz, C.H. Remote sensing of the environment with small unmanned aircraft systems (UASs), part 1: A review of progress and challenges. J. Unmanned Veh. Syst. 2014, 2, 69–85.

82. Federal Aviation Administration. Summary of Small Unmanned Aircraft Rule (Part 107); Federal Aviation Administration: Washington, DC, USA, 2016.

83. Federal Aviation Administration. No Drone Zone; Federal Aviation Administration: Washington, DC, USA, 2016.

84. Federal Aviation Administration. Part 107 Waivers; Federal Aviation Administration: Washington, DC, USA, 2016.

85. Jiang, T.; Geller, J.; Ni, D.; Collura, J. Unmanned aircraft system traffic management: Concept of operation and system architecture. Int. J. Transp. Sci. Technol. 2016, 5, 123–135.

86. Askelson, M.; Cathey, H. Small UAS Detect and Avoid Requirements Necessary for Limited Beyond Visual Line of Sight (BVLOS) Operations; Technical Report; ASSURE: Starkville, MS, USA, 2017.

87. Federal Aviation Administration. Integration of Civil Unmanned Aircraft Systems (UAS) in the National Airspace System (NAS) Roadmap, Second Edition; Technical Report; Federal Aviation Administration: Washington, DC, USA, 2018.

88. Jones, T. International Commercial Drone Regulation and Drone Delivery Services; RAND: Santa Monica, CA, USA, 2017.

89. Biggs, H.J.; Nikora, V.I.; Gibbins, C.N.; Fraser, S.; Green, D.R.; Papadopoulos, K.; Hicks, D.M. Coupling Unmanned Aerial Vehicle (UAV) and hydraulic surveys to study the geometry and spatial distribution of aquatic macrophytes. J. Ecohydraul. 2018, 3, 45–58.

90. Gray, P.; Ridge, J.; Poulin, S.; Seymour, A.; Schwantes, A.; Swenson, J.; Johnston, D. Integrating drone imagery into high resolution satellite remote sensing assessments of estuarine environments. Remote Sens. 2018, 10, 1257.

91. Liu, T.; Abd-Elrahman, A. Multi-view object-based classification of wetland land covers using unmanned aircraft system images. Remote Sens. Environ. 2018, 216, 122–138.

92. Pande-Chhetri, R.; Abd-Elrahman, A.; Liu, T.; Morton, J.; Wilhelm, V.L. Object-based classification of wetland vegetation using very high-resolution unmanned air system imagery. Eur. J. Remote Sens. 2017, 50, 564–576.

93. Wan, H.; Wang, Q.; Jiang, D.; Fu, J.; Yang, Y.; Liu, X. Monitoring the invasion of Spartina alterniflora using very high resolution unmanned aerial vehicle imagery in Beihai, Guangxi (China). Sci. World J. 2014, 2014, 1–7.

94. Li, Q.S.; Wong, F.K.; Fung, T. Assessing the utility of UAV-borne hyperspectral image and photogrammetry derived 3D data for wetland species distribution quick mapping. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2017, 42, 209–215.

95. Sankey, T.T.; McVay, J.; Swetnam, T.L.; McClaran, M.P.; Heilman, P.; Nichols, M. UAV hyperspectral and lidar data and their fusion for arid and semi-arid land vegetation monitoring. Remote Sens. Ecol. Conserv. 2017, 4, 20–33.

96. Wigmore, O.; Mark, B.; McKenzie, J.; Baraer, M.; Lautz, L. Sub-metre mapping of surface soil moisture in proglacial valleys of the tropical Andes using a multispectral unmanned aerial vehicle. Remote Sens. Environ. 2019, 222, 104–118.

97. Berni, J.A.J.; Zarco-Tejada, P.J.; Suarez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

98. Chaplot, V. Impact of spatial input data resolution on hydrological and erosion modeling: Recommendations from a global assessment. Phys. Chem. Earth Parts A/B/C 2014, 67–69, 23–35.

99. Zhang, P.; Liu, R.; Bao, Y.; Wang, J.; Yu, W.; Shen, Z. Uncertainty of SWAT model at different DEM resolutions in a large mountainous watershed. Water Res. 2014, 53, 132–144.

100. Shrestha, R.; Tachikawa, Y.; Takara, K. Input data resolution analysis for distributed hydrological modeling. J. Hydrol. 2006, 319, 36–50.

101. Horritt, M.; Bates, P. Effects of spatial resolution on a raster based model of flood flow. J. Hydrol. 2001, 253, 239–249.

102. Molnár, D.K.; Julien, P.Y. Grid-size effects on surface runoff modeling. J. Hydrol. Eng. 2000, 5, 8–16.

103. Bruneau, P.; Gascuel-Odoux, C.; Robin, P.; Merot, P.; Beven, K. Sensitivity to space and time resolution of a hydrological model using digital elevation data. Hydrol. Process. 1995, 9, 69–81.

104. Vivoni, E.R.; Rango, A.; Anderson, C.A.; Pierini, N.A.; Schreiner-McGraw, A.P.; Saripalli, S.; Laliberte, A.S. Ecohydrology with unmanned aerial vehicles. Ecosphere 2014, 5, art130.

105. Petrasova, A.; Mitasova, H.; Petras, V.; Jeziorska, J. Fusion of high-resolution DEMs for water flow modeling. Open Geospat. Data Softw. Stand. 2017, 2.

106. Tang, Q.; Schilling, O.S.; Kurtz, W.; Brunner, P.; Vereecken, H.; Franssen, H.J.H. Simulating flood-induced riverbed transience using unmanned aerial vehicles, physically based hydrological modeling, and the ensemble Kalman filter. Water Resour. Res. 2018, 54, 9342–9363.

107. Boon, M.A.; Greenfield, R.; Tesfamichael, S. Wetland assessment using unmanned aerial vehicle (UAV) photogrammetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2016, 41, 781–788.

108. Jeziorska, J.; Mitasova, H.; Petrasova, A.; Petras, V.; Divakaran, D.; Zajkowski, T. Overland flow analysis using time series of sUAS derived data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, III-8, 159–166.

109. Capolupo, A.; Pindozzi, S.; Okello, C.; Fiorentino, N.; Boccia, L. Photogrammetry for environmental monitoring: The use of drones and hydrological models for detection of soil contaminated by copper. Sci. Total Environ. 2015, 514, 298–306.

- Tamminga, A.; Hugenholtz, C.; Eaton, B.; Lapointe, M. Hyperspatial remote sensing of channel reach morphology and hydraulic fish habitat using an unmanned aerial vehicle (UAV): A first assessment in the context of river research and management. River Res. Appl. 2014, 31, 379–391. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.; González-Dugo, V.; Berni, J. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a micro-hyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337. [Google Scholar] [CrossRef]

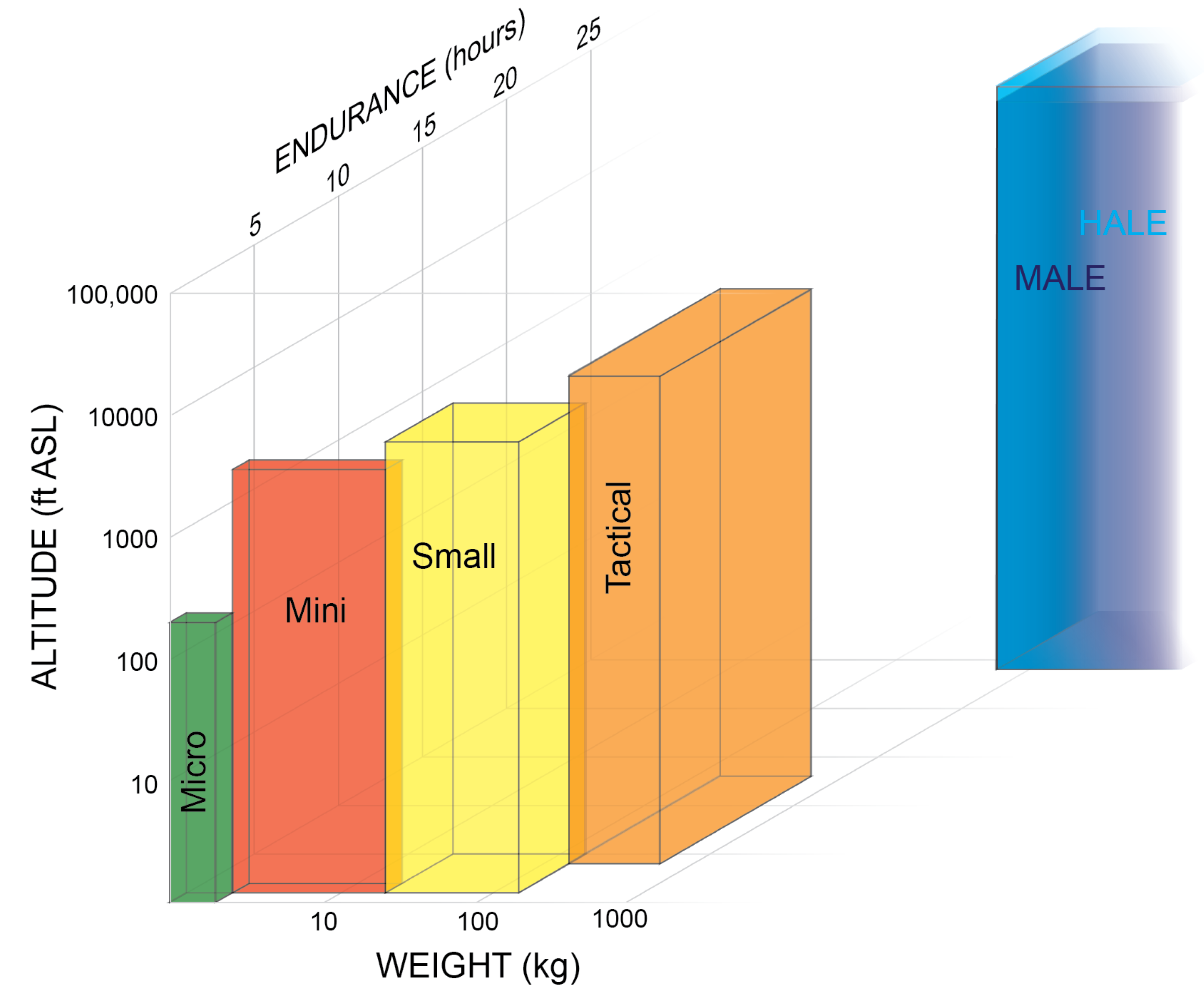

| Category | Weight [kg] | Altitude [feet ASL] | Radius [km] | Endurance [h] |
|---|---|---|---|---|
| Micro | <2 | up to 200 | <5 | <1 |
| Mini | 2–20 | up to 3000 | <25 | 1–2 |
| Small | 20–150 | up to 5000 | <50 | 1–5 |
| Tactical | 150–600 | up to 10,000 | 100–300 | 4–15 |
| MALE | >600 | up to 45,000 | >500 | >24 |
| HALE | >600 | up to 60,000 | global | >24 |

| | Fixed Wing | Multi Rotor |
|---|---|---|
| Advantages | longer flight autonomy, larger areas covered in less time, better control of flight parameters, higher control of image quality, greater stability (better aerodynamic performance, minor influence of environmental conditions), higher flight safety (safer recovery from power loss) | greater maneuverability, lower price, more compact and portable, easy to use, higher payload capacity, ability to hover, small landing/takeoff zone |
| Disadvantages | less compact, less portable, higher price, challenging to fly, larger takeoff/landing site needed | shorter range, less stable in the wind |

| Manufacturer and Model | Resolution [px] | Weight [g] | Speed [1/s] |
|---|---|---|---|
| DJI Zenmuse X7 | 24 MP (multiple photo sizes) | 449 | up to 6000 |
| MAPIR Survey3 | 4000 × 3000 | 50 * | up to 200 |
| PhaseOne iXU-RS 1000 | 11,608 × 8708 | 930 | up to 2500 |
| Sony ILCE-QX1 | 5456 × 3632 | 158 * | up to 4000 |
| senseFly S.O.D.A. | 5472 × 3648 | 111 | up to 2000 |

| Manufacturer and Model | Resolution [px] | Pixel Size [µm] | Weight [g] | Spectral Range: Central Wavelength [nm] (Bandwidth [nm]) |
|---|---|---|---|---|
| Buzzard Camera six | 1280 × 1024 | 5.3 | 250 | Blue: 500 (50); Green: 550 (25); Red: 675 (25); NIR1: 700 (10); NIR2: 750 (10); NIR3: 780 (10) |
| MicaSense RedEdge | 1280 × 960 | 3.75 | 180 | Blue: 475 (20); Green: 560 (20); Red: 668 (10); Red edge: 717 (10); NIR: 840 (40) |
| Parrot Sequoia+ | 1280 × 960 | 3.75 | 71 | Green: 550 (40); Red: 660 (40); Red edge: 735 (10); NIR: 790 (40) |
| Sentera Quad | 1248 × 950 | 3.75 | 170 | RGB; Red: 655 (40); Red edge: 725 (25); NIR: 800 (25) |
| Tetracam ADC Micro | 2048 × 1536 | 3.2 | 90 | Green: 520–600; Red: 630–690; NIR: 760–900 |
| Tetracam MiniMCA6 | 1280 × 1024 | 5.2 | 700 | Blue: 490 (10); Green: 550 (10); Red: 680 (10); Red edge: 720 (10); NIR1: 800 (10); NIR2: 900 (20) |

| Manufacturer and Model | Spectral Range [nm] | Number of Bands | Spatial Resolution [px] | Weight [g] |
|---|---|---|---|---|
| BaySpec OCI-UAV-1000 | 600–1000 | 100 | 2048 * | 272 |
| Brandywine Photonics CHAI V-640 | 350–1080 | 256 | 640 × 512 | 480 |
| HySpex VNIR-1024 | 400–1000 | 108 | 1024 * | 4000 |
| NovaSol Alpha-SWIR microHSI | 900–1700 | 160 | 640 * | 1200 |
| Quest Hyperea 660 C1 | 400–1000 | 660 | 1024 * | 1440 |
| Resonon Pika L | 400–1000 | 281 | 900 * | 600 |
| Resonon Pika NIR | 900–1700 | 164 | 320 * | 2700 |
| SENOP VIS-VNIR Snapshot | 400–900 | 380 | 1010 × 1010 | 720 |
| SPECIM FX17 | 900–1700 | 224 | 640 * | 1700 |
| Surface Optics Corp. SOC710-GX | 400–1000 | 120 | 640 * | 1250 |
| XIMEA MQ022HG-IM-LS150-VISNIR | 470–900 | 150+ | 2048 × 5 | 300 |

| Manufacturer and Model | Resolution [px] | Weight [g] | Spectral Band [µm] |
|---|---|---|---|
| FLIR T450sc | 320 × 240 | 880 * | 7.5–13.0 |
| FLIR Tau 640 | 640 × 512 | 110 | 7.5–13.5 |
| FLIR Thermovision A40M | 320 × 240 | 1400 | 7.5–13.5 |
| ICI 320x | 320 × 240 | 150 * | 7.0–14.0 |
| ICI 7640 P-Series | 640 × 480 | 127.6 | 7.0–14.0 |
| InfraTec mobileIR M4 | 160 × 120 | 265 * | 8.0–14.0 |
| Optris PI400 | 382 × 288 | 320 * | 7.5–13.0 |
| Pearleye LWIR | 640 × 480 | 790 * | 8.0–14.0 |
| Photon 320 | 324 × 256 | 97 | 7.5–13.5 |
| Tamarisk 640 | 640 × 480 | 121 | 8.0–14.0 |
| Thermoteknix MIRICLE 370 K | 640 × 480 | 166 | 8.0–12.0 |
| Xenics Gobi-384 (Scientific) | 384 × 288 | 500 * | 8.0–14.0 |

| Manufacturer and Model | Range [m] | Weight [g] | Field of View [°] | Laser Class | Accuracy [mm] |
|---|---|---|---|---|---|
| Riegl VUX-1UAV | 3–350 | 3500 | 330 | 1 | 10 |
| Riegl VUX-240 | 5–1400 | 3800 | ±37.5 | 3R | 20 |
| Routescene UAV LidarPod | 0–100 | 1300 | (H)41, (V)360 | 1 | (XY) 15 *, (Z) 8 * |
| Velodyne HDL-32E | 80–100 | 1300 | (H)360, (V)+10 to −30 | 1 | 20 |
| Velodyne Puck VLP-16 | 0–100 | 830 | (H)360, (V)±15 | 1 | 30 |
| YellowScan Mapper II | 10–75 | 2100 | 100 | 1 | (XY) 150, (Z) 50 |
| YellowScan Surveyor | 10–60 | 1600 | 360 | 1 | 50 |

| Reference | Objective | Main Conclusions | Type (F, R) | Sensor Used (V, T, M, H, L) |
|---|---|---|---|---|
| Wigmore et al. [96] | Map surface soil moisture content in Andean wetlands using UAS-based multispectral imagery to better understand controls and impacts on its observed spatial variability within these systems. | UAS can provide reliable sub-metre estimates of surface soil moisture and provide unique insights into spatially heterogeneous environments. | R | T, M |
| Biggs et al. [89] | Coupling UAS and hydraulic surveys to study the geometry and spatial distribution of aquatic macrophytes. | The aerial surveying techniques can be used to efficiently estimate vegetation abundance and surface area blockage factor, and also to visualise flow through patch mosaics, enabling targeted management of aquatic vegetation. | R | V |
| Gray et al. [90] | Classification of estuarine wetlands based on WorldView-3 and RapidEye satellite imagery, using UAS imagery to assist in training a Support Vector Machine. | UAS can be highly effective in training and validating satellite imagery. Within a fixed budget, it allows much larger training and testing sample sizes. The UAS accuracy is similar to field-based assessments. | F | V |
| Liu and Abd-Elrahman [91] | Testing an approach that seamlessly integrates multi-view data and object-based classification of wetland land covers. | Multi-view OBIA (MV-OBIA) substantially improves the overall accuracy compared with traditional OBIA, regardless of the features used for classification and types of wetland land covers. Two window-based implementations of MV-OBIA both show potential in generating an equal if not higher overall accuracy compared with MV-OBIA at substantially reduced computational costs. | F | V |
| Sankey et al. [95] | A fusion method for individual plant species identification and 3D characterization at submeter scales based on UAV lidar and hyperspectral imagery. | UAS lidar characterized the individual vegetation canopy structure and bare ground elevation, whereas the hyperspectral sensor provided species-specific spectral signatures for the dominant and target species in the study area. The fusion of the two data sources performed better than either data type alone in the arid and semi-arid ecosystems with sparse vegetation. | R | H, L |
| Tang et al. [106] | Investigation of the effect of the spatial and temporal variability of riverbed topography and riverbed hydraulic conductivity on predictions of hydraulic states and fluxes, and a test of whether data assimilation based on the ensemble Kalman filter can better reproduce flood-induced changes to hydraulic states and parameters with the help of riverbed topography changes recorded with an unmanned aerial vehicle (UAV) and through-water photogrammetry. | Updating riverbed hydraulic conductivity and aquifer hydraulic conductivity based on the ensemble Kalman filter and UAV-based observations of riverbed topography transience after a major flood event strongly improves predictions of postflood hydraulic states; the RMSE was reduced by 55%. | F | V |
| Li et al. [94] | Assessment of the utility of UAV-borne hyperspectral imagery and photogrammetry-derived 3D data for quick mapping of wetland species distribution. | UAV-borne hyperspectral and photogrammetry-derived 3D data help to characterize and monitor the wetland environment. UAS offers a solution for detailed species surveys of wetland areas at a relatively low cost in time and labor. | R | V, H |
| Pande-Chhetri et al. [92] | Comparison of classification methods for wetland vegetation based on UAS imagery. | The use of OBIA on high spatial resolution (sub-decimeter) UAS imagery is viable for wetland vegetation mapping. Object-based classification produced higher accuracy than pixel-based classification. A disadvantage of OBIA is the considerable time and effort, guided by expert knowledge, spent on scale parameter selection or post-classification refinement. | F | V, H |
| Petrasova et al. [105] | Updating a lidar-based DEM with UAS-based DSMs for overland flow modeling, using a fast and effective technique that merges raster DEMs with different spatial extents by blending them along their overlap with a distance-based weighted average. | The novel approach based on spatially variable overlap width improves preservation of subtle topographic features of the high-resolution DEMs while ensuring a smooth transition. The two case studies demonstrated the importance of a smooth transition for modeling water flow patterns while capturing the impacts of microtopography. | F | V |
| Senthilnath et al. [19] | Evaluation of the performance of proposed spectral-spatial classification methods for crop region mapping and tree crown mapping of images acquired using UAV. | Images obtained using the two UAV platforms were used to demonstrate the performance of the proposed algorithm. The results indicated that the proposed spectral-spatial classification performs better and is more robust than the other algorithms in the literature. | F, R | V |
| Boon et al. [107] | Assessing whether UAV photogrammetry can enhance wetland delineation, classification, and WET-Health assessment. | UAV photogrammetry can significantly enhance wetland delineation and classification and can also be a valuable contribution to WET-Health assessment. | R | V |
| Husson et al. [20] | Comparison of manual mapping and automated object-based image analysis of non-submerged aquatic vegetation. | Automated classification of non-submerged aquatic vegetation from true-colour UAS images was feasible, indicating good potential for operative mapping of aquatic vegetation. | F | V |
| Jeziorska et al. [108] | (1) Assessment of the suitability of digital surface models (DSMs) produced by sUAS photogrammetry for overland flow simulation in the context of precision agriculture applications, (2) development of a workflow for overland flow pattern simulation using high spatial and temporal resolution DSMs derived from sUAS data, and (3) investigation of the differences between flow patterns based on sUAS-derived DSMs and lidar-based DEMs. | (1) sUAS-derived data can improve the quality of flow pattern modeling due to the increased spatial and temporal resolution. Because they capture the changing microtopography, they can represent preferential flow along tillage. (2) Due to the high resolution of the obtained data, vegetation significantly disrupts the flow pattern. Therefore, densely vegetated areas are not suitable for water flow modeling. (3) Overland water flow modeling based on data from airborne lidar surveys is suitable for identifying potentially vulnerable areas. sUAS-based data, however, are needed to actually identify and monitor gully formation. | F | V |
| Wallace et al. [79] | Investigation of the potential of UAS-based airborne laser scanning and structure from motion (SfM) to measure and monitor structural properties of forests. | Although airborne laser scanning is capable of providing more accurate estimates of the vertical structure of forests across the larger range of canopy densities found in this study, SfM was still found to be an adequate low-cost alternative for surveying forest stands. | R | V, L |
| Capolupo et al. [109] | Introduce and test an innovative approach able to predict copper accumulation points at plot scales, using a combination of aerial photos taken by drones, micro-rill network modelling, and wetland prediction indices usually used at catchment scales. | The DEM obtained with a resolution of 30 mm showed a high potential for the study of micro-rill processes, and the Topographic (TI) and Clima-Topographic (CTI) indices were able to predict zones of copper accumulation at a plot scale. | R | V |
| Tamminga et al. [110] | Assessing the capabilities of a UAS to characterize channel morphology and hydraulic habitat, with the goal of identifying its advantages and challenges for river research and management. | By enabling dynamic linkages between geomorphic processes and aquatic habitat to be established, the advantages of UAVs make them ideally suited to river research and management. | R | V |
| Wan et al. [93] | Monitoring the invasion of Spartina alterniflora using very high resolution UAS imagery. | Imagery can provide details on distribution, progress, and early detection of Spartina alterniflora invasion. Object-based image analysis (OBIA), used as a remote sensing detection method, can enable control measures to be more effective, accurate, and less expensive than a field survey. | R | V, H |
| Zarco-Tejada et al. [111] | Detect water stress based on fluorescence, temperature, and narrow-band indices acquired from a UAV platform. | The research demonstrates the feasibility of thermal, narrow-band index, and fluorescence retrievals obtained from a micro-hyperspectral imager and a lightweight thermal camera on board small UAV platforms for stress detection in a heterogeneous tree canopy where very high resolution is required. | F | T, H |
| Laliberte et al. [21] | Develop a relatively automated and efficient image processing workflow for deriving geometrically and radiometrically corrected multispectral imagery from a UAS for the purpose of species-level rangeland vegetation mapping. | Comparison of vegetation and soil spectral responses for the airborne and WorldView-2 satellite data demonstrates potential for conducting multi-scale studies and evaluating upscaling of the UAS data to larger areas. | F | M |
| Berni et al. [97] | Generate quantitative remote sensing products by means of a UAS equipped with inexpensive thermal and narrowband multispectral imaging sensors and compare them to the products of traditional manned airborne sensors. | The low cost and operational flexibility, along with the high spatial, spectral, and temporal resolutions provided at high turnaround times, make this platform suitable for a number of applications where time-critical management is required. The results yielded estimations comparable to, if not better than, those obtained by traditional manned airborne sensors. | R | T, M |
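The distance-based weighted average mentioned in the Petrasova et al. [105] entry can be illustrated with a minimal sketch. It is not the authors' implementation: it works on a one-dimensional elevation profile rather than a raster, and it assumes a fixed overlap width, whereas the cited approach uses a spatially variable one. The function name `blend_profiles` and its parameters are hypothetical.

```python
import math


def blend_profiles(base, patch, overlap_width):
    """Blend a high-resolution patch into a base elevation profile.

    base          -- list of elevations covering the whole profile
                     (e.g. a lidar DEM transect)
    patch         -- list of the same length, None where the patch
                     (e.g. a UAS DSM) has no data
    overlap_width -- number of cells over which the patch weight
                     ramps linearly from 0 to 1 (assumed constant here)
    """
    if len(base) != len(patch):
        raise ValueError("profiles must have the same length")
    if overlap_width <= 0:
        raise ValueError("overlap_width must be positive")

    n = len(base)
    # Distance in cells from each cell to the nearest cell without patch data.
    dist = [0.0 if p is None else math.inf for p in patch]
    for i in range(1, n):                 # forward sweep
        dist[i] = min(dist[i], dist[i - 1] + 1)
    for i in range(n - 2, -1, -1):        # backward sweep
        dist[i] = min(dist[i], dist[i + 1] + 1)

    blended = []
    for b, p, d in zip(base, patch, dist):
        if p is None:
            blended.append(b)             # outside the patch: keep base
        else:
            w = min(d / overlap_width, 1.0)   # patch weight grows with distance from its edge
            blended.append(w * p + (1.0 - w) * b)
    return blended


# Hypothetical example: a flat base at 10 m and a patch at 12 m
# that starts at the third cell.
base = [10.0] * 7
patch = [None, None, 12.0, 12.0, 12.0, 12.0, 12.0]
result = blend_profiles(base, patch, overlap_width=4)
```

In this example the patch weight rises from 0.25 at the first patch cell to 1.0 four cells in, so the blended profile steps smoothly from the base to the patch elevation instead of jumping at the patch edge.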

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jeziorska, J. UAS for Wetland Mapping and Hydrological Modeling. Remote Sens. 2019, 11, 1997. https://doi.org/10.3390/rs11171997