Integration of Machine Learning and Open Access Geospatial Data for Land Cover Mapping

Abstract

1. Introduction

- To investigate the potential and limitations of Google Earth Engine (GEE) for feature extraction.

- To study the benefit of adding spatial features to land cover classification and the feasibility of using a high-dimensional feature space in similar applications.

- To evaluate how well machine learning models classify the land surface when using a high-dimensional feature space.

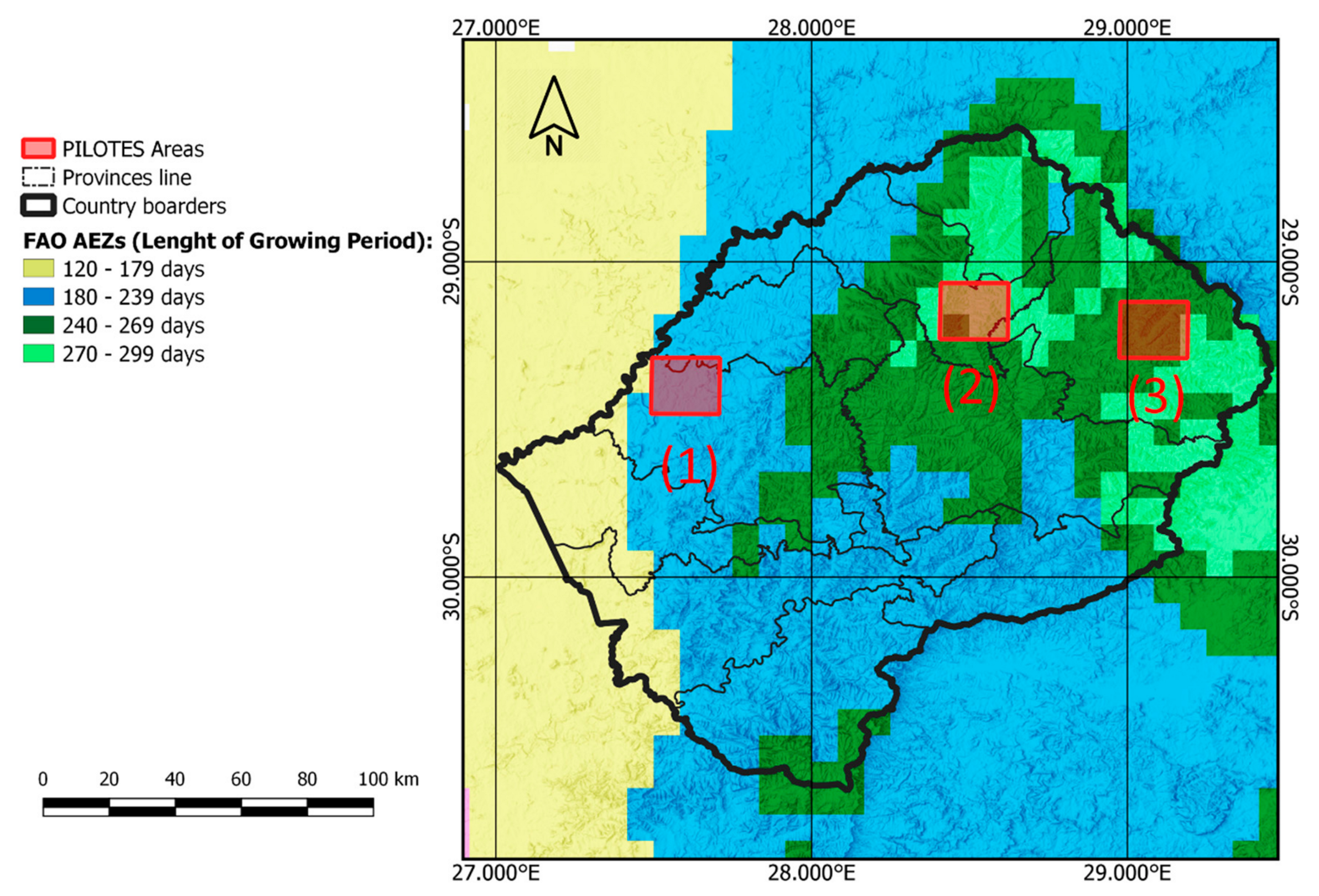

- To evaluate the methodology in three different areas of Lesotho, to ensure that it is independent of climatic variables and agro-ecological zones.

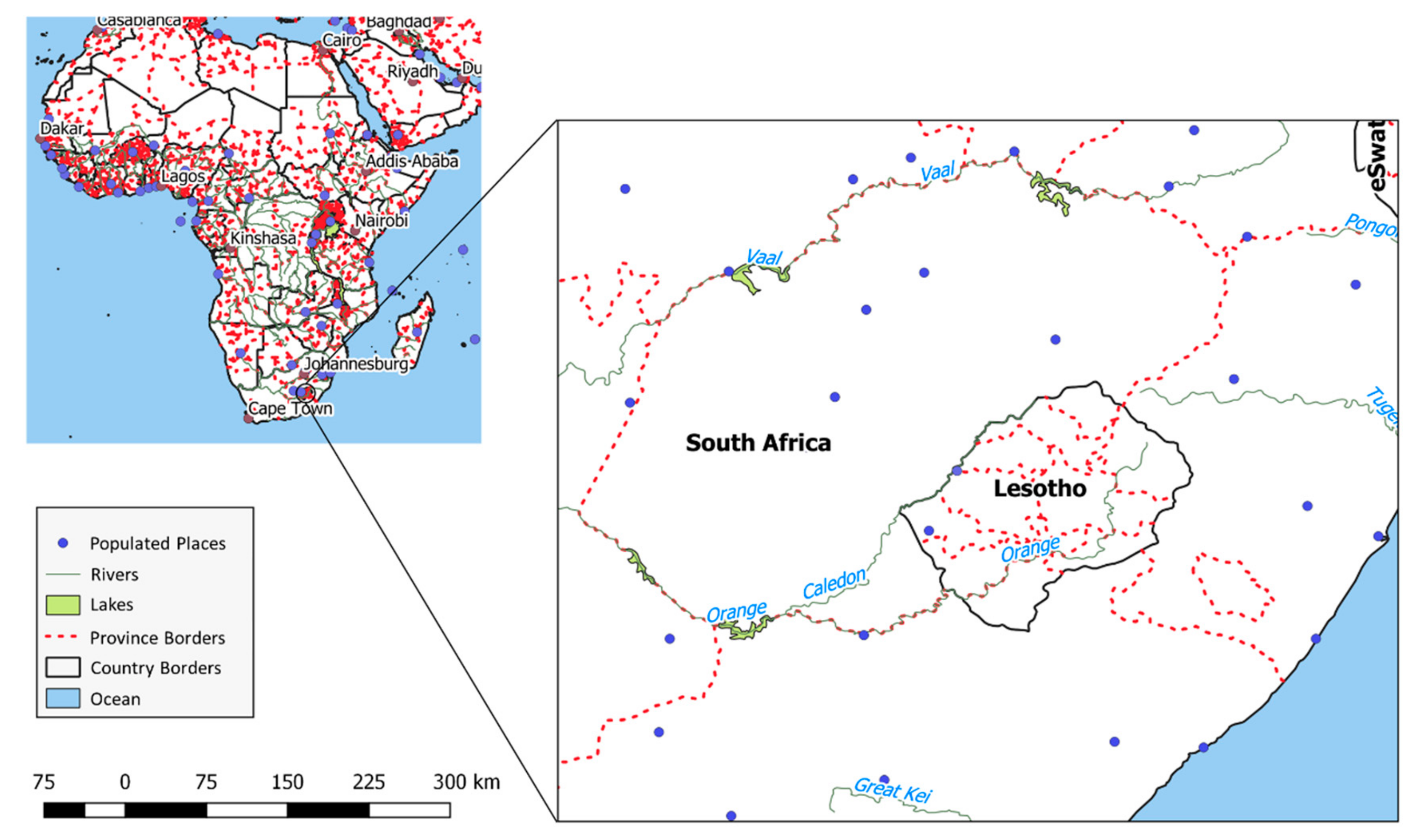

2. Study Area and Data

2.1. Study Area

2.2. FAO Land Cover Lesotho Classes

2.3. Test and Training Data Set Generation

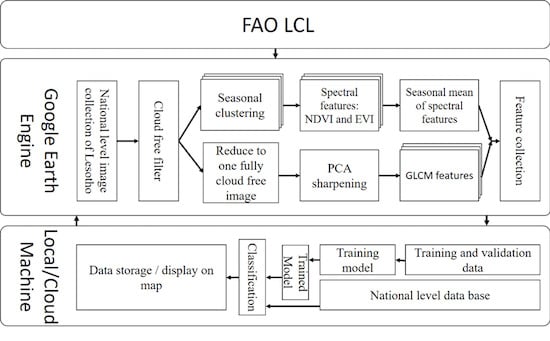

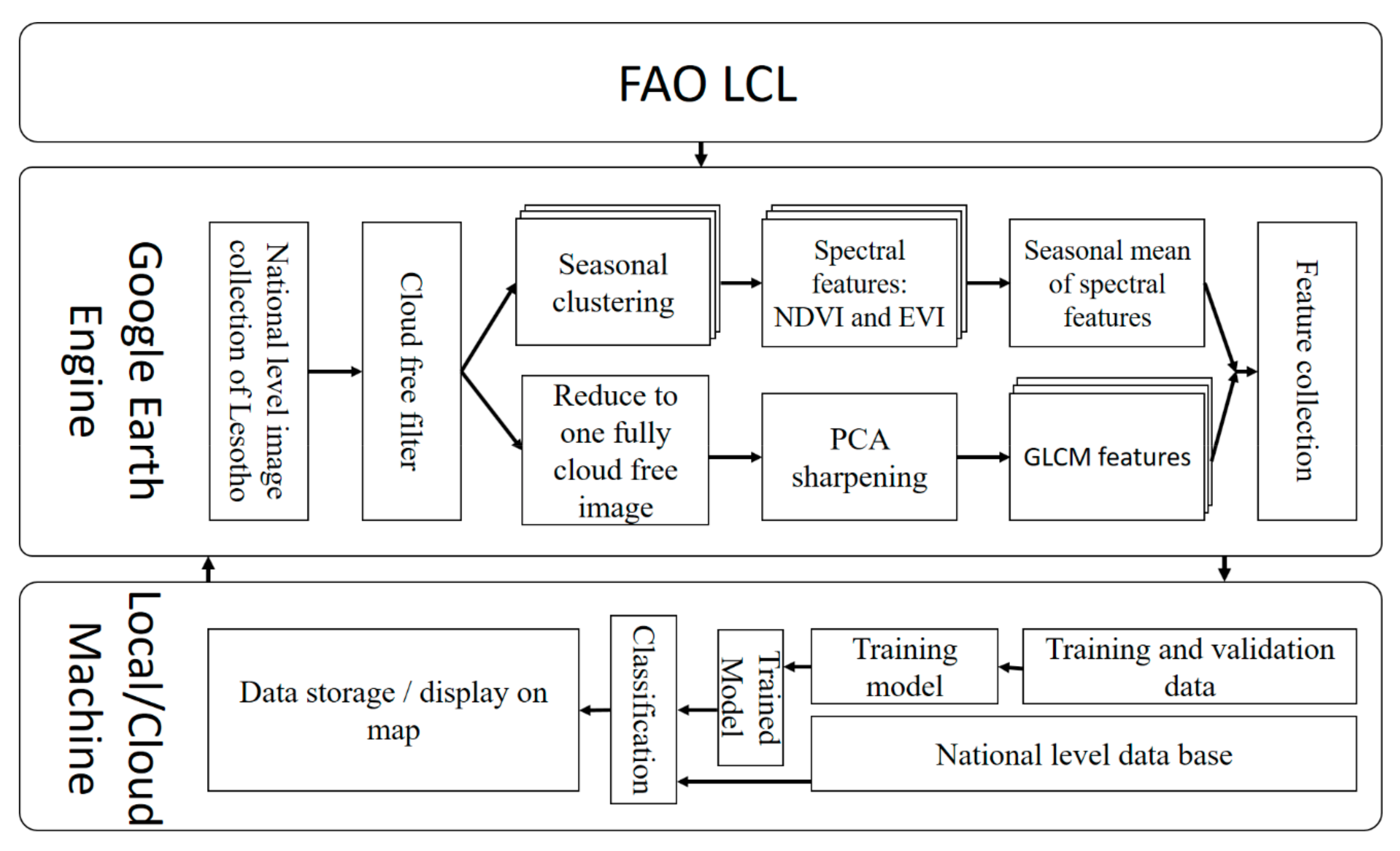
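This extract names the generation of the training and test sets but does not describe the procedure. As an illustrative sketch only, a per-class (stratified) random split keeps every land cover class represented in both sets. The `stratified_split` helper, the 30 % test fraction, and the seed are hypothetical choices, not the authors' settings.

```python
import random
from collections import defaultdict


def stratified_split(samples, labels, test_fraction=0.3, seed=42):
    """Split samples class by class so each class appears in train and test.

    Hypothetical helper: test_fraction and seed are illustrative assumptions.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_class[label].append(sample)

    train, test = [], []
    for label, items in sorted(by_class.items()):
        items = items[:]  # copy so the caller's list is not shuffled in place
        rng.shuffle(items)
        # At least one test sample per class; keep one for training when possible.
        n_test = max(1, round(len(items) * test_fraction))
        if n_test >= len(items) and len(items) > 1:
            n_test = len(items) - 1
        test.extend((s, label) for s in items[:n_test])
        train.extend((s, label) for s in items[n_test:])
    return train, test


# Toy example: 10 labelled "pixels" for each of two classes.
pixels = list(range(20))
classes = ["Grassland"] * 10 + ["Agriculture"] * 10
train, test = stratified_split(pixels, classes)
print(len(train), len(test))  # 14 6
```

Splitting inside each class, rather than over the pooled samples, avoids a rare class ending up entirely in one set.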

3. Methods

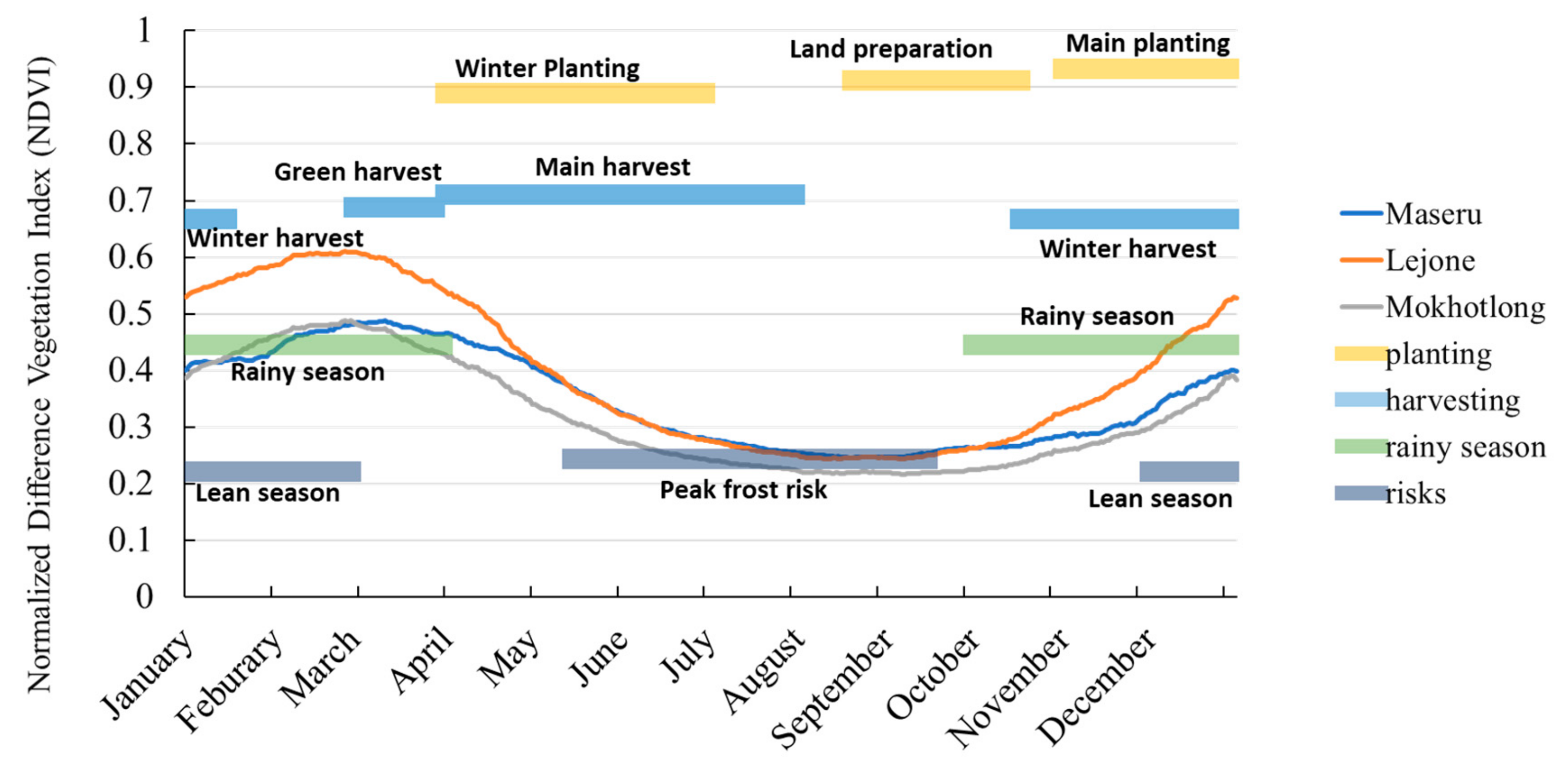

3.1. Google Earth Engine Data

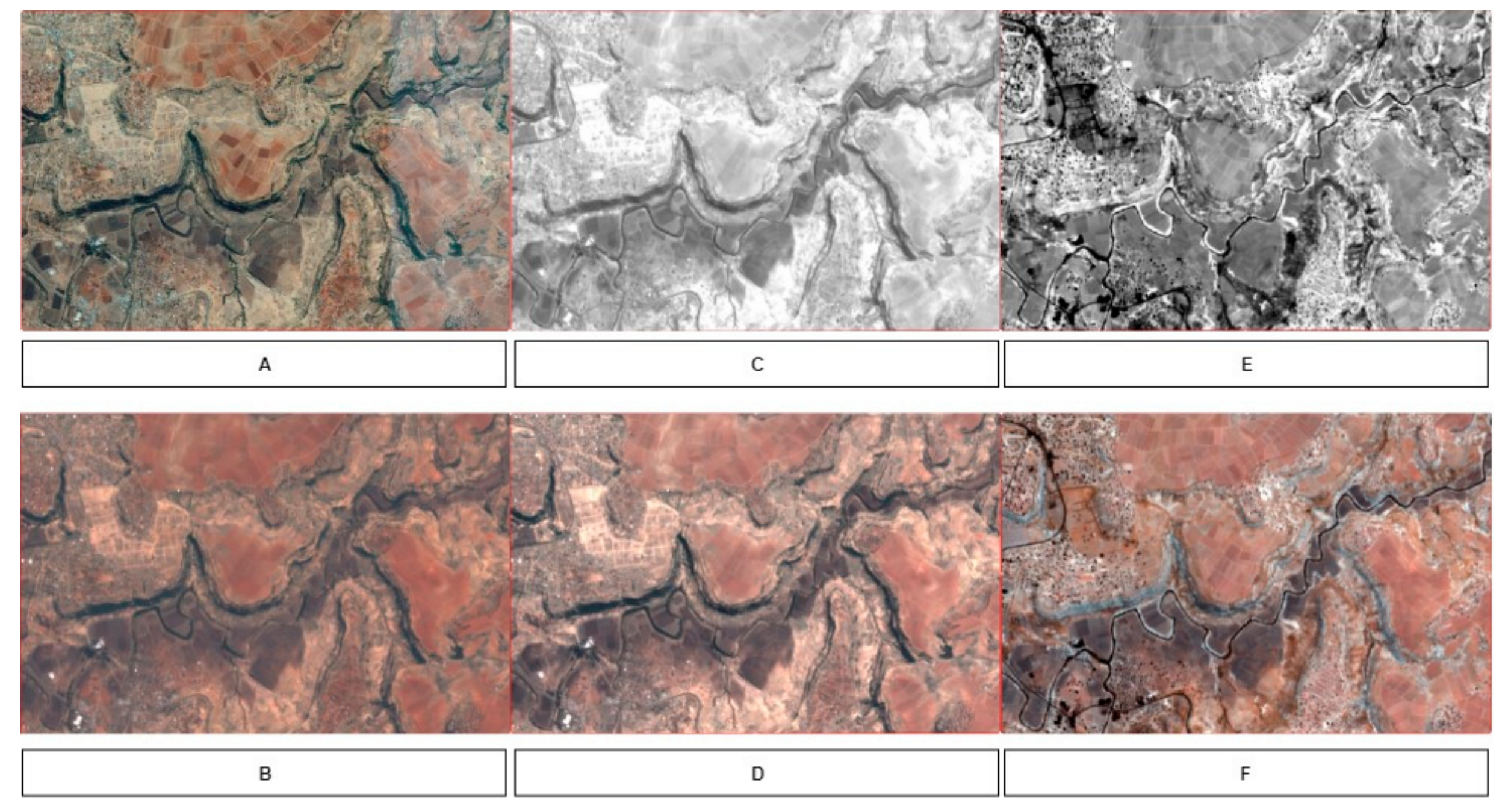

3.2. Data Preparation

3.2.1. Spectral Features
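The individual spectral features are not listed in this extract. As a minimal sketch, assuming per-pixel red and near-infrared (NIR) surface reflectances in the range 0 to 1, here are two widely used vegetation indices that a spectral feature set of this kind commonly includes: NDVI = (NIR − Red) / (NIR + Red) and the two-band enhanced vegetation index EVI2 = 2.5 (NIR − Red) / (NIR + 2.4 Red + 1). Whether the study used exactly these indices is an assumption here.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    denom = nir + red
    if denom == 0:
        return 0.0  # assumption: treat an all-zero pixel as non-vegetated
    return (nir - red) / denom


def evi2(nir: float, red: float) -> float:
    """Two-band Enhanced Vegetation Index (no blue band).

    EVI2 = 2.5 * (NIR - Red) / (NIR + 2.4 * Red + 1), reflectances in [0, 1].
    """
    return 2.5 * (nir - red) / (nir + 2.4 * red + 1.0)


# Illustrative reflectance values for a vegetated pixel (not from the study).
print(round(ndvi(0.45, 0.05), 3))  # 0.8
print(round(evi2(0.45, 0.05), 3))  # 0.637
```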

3.2.2. Spatial Features

Image Pre-Processing with PCA
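A minimal NumPy sketch of principal component analysis (PCA) on a multiband image, assuming the bands are held in a `(rows, cols, bands)` array: it centers each band, eigen-decomposes the band covariance matrix, and projects every pixel onto the components ordered by explained variance. One common choice, assumed here rather than taken from this extract, is to compute texture measures on the first component so that a single band summarizes most of the spectral variance. The function name `pca_image` is hypothetical.

```python
import numpy as np


def pca_image(image: np.ndarray, n_components: int = 1):
    """Project a (rows, cols, bands) image onto its principal components.

    Returns the component image (rows, cols, n_components) and the fraction
    of total variance explained by each returned component.
    """
    rows, cols, bands = image.shape
    pixels = image.reshape(-1, bands).astype(np.float64)
    centered = pixels - pixels.mean(axis=0)  # zero-mean each band

    cov = np.cov(centered, rowvar=False)  # (bands, bands) covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]  # reorder: largest variance first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    scores = centered @ eigvecs[:, :n_components]
    explained = eigvals[:n_components] / eigvals.sum()
    return scores.reshape(rows, cols, n_components), explained


# Synthetic 4-band image whose bands are strongly correlated.
rng = np.random.default_rng(0)
base = rng.normal(size=(50, 50, 1))
stack = np.concatenate([base * w for w in (1.0, 0.9, 1.1, 0.8)], axis=2)
stack += 0.01 * rng.normal(size=stack.shape)
pc, explained = pca_image(stack, n_components=2)
print(pc.shape, explained.round(4))  # first component explains nearly all variance
```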

Texture Features: Grey Level Co-occurrence Matrix (GLCM)
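A small pure-Python sketch of a grey level co-occurrence matrix (GLCM) for one pixel offset, together with two Haralick-style statistics derived from it (contrast and homogeneity). The offset `(0, 1)` (right-hand neighbour), symmetric counting, the number of grey levels, and the helper names are illustrative assumptions; the window size, offsets, and statistics used in the study are not given in this extract.

```python
def glcm(image, levels, offset=(0, 1), symmetric=True):
    """Normalized grey level co-occurrence matrix for one (dy, dx) offset.

    image: 2-D list of integer grey levels in range(levels).
    """
    dy, dx = offset
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols:
                i, j = image[y][x], image[ny][nx]
                counts[i][j] += 1
                if symmetric:
                    counts[j][i] += 1  # count the pair in both directions
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("offset leaves no valid pixel pairs")
    return [[c / total for c in row] for row in counts]


def contrast(p):
    """Sum of P(i, j) * (i - j)^2: high where grey levels change abruptly."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def homogeneity(p):
    """Sum of P(i, j) / (1 + (i - j)^2): high for smooth, uniform texture."""
    n = len(p)
    return sum(p[i][j] / (1 + (i - j) ** 2) for i in range(n) for j in range(n))


# The 4 x 4, four-grey-level example image often used to illustrate GLCMs.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
p = glcm(patch, levels=4)
print(round(contrast(p), 3), round(homogeneity(p), 3))  # 0.583 0.808
```

In practice the matrix is computed in a moving window around each pixel, so every statistic becomes an additional feature band.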

3.3. Trained Machine Learning Models
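The classifiers compared in the results are bagged trees and a support vector machine. To illustrate what bagging (bootstrap aggregating) does, the minimal pure-Python sketch below swaps in one-level decision stumps for full decision trees: each learner is fit on a bootstrap resample of the training set, and predictions are combined by majority vote. The stump learner, the ensemble size of 25, the toy features, and all helper names are illustrative assumptions, not the models trained in the study.

```python
import random
from collections import Counter


def fit_stump(X, y):
    """Return a one-split classifier (feature, threshold) with the fewest training errors."""
    overall = Counter(y).most_common(1)[0][0]  # fallback label for an empty side
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            left_cls = Counter(left).most_common(1)[0][0] if left else overall
            right_cls = Counter(right).most_common(1)[0][0] if right else overall
            errors = sum(lab != left_cls for lab in left) + sum(lab != right_cls for lab in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, left_cls, right_cls)
    _, feat, thr, lo_cls, hi_cls = best
    return lambda row: lo_cls if row[feat] <= thr else hi_cls


def fit_bagged(X, y, n_estimators=25, seed=0):
    """Bootstrap aggregating: one stump per resample drawn with replacement."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    # Predict by majority vote over the ensemble.
    return lambda row: Counter(m(row) for m in models).most_common(1)[0][0]


# Hypothetical two-feature samples, e.g. (vegetation index, texture) per pixel.
X = [[0.1, 0.2], [0.15, 0.1], [0.2, 0.3], [0.7, 0.2], [0.8, 0.4], [0.75, 0.1]]
y = ["Barren", "Barren", "Barren", "Trees", "Trees", "Trees"]
model = fit_bagged(X, y)
print(model([0.05, 0.25]), model([0.9, 0.3]))  # Barren Trees
```

Averaging many learners fit on different resamples mainly reduces variance, which is why bagging is usually paired with unstable, high-variance base learners such as unpruned decision trees.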

4. Results

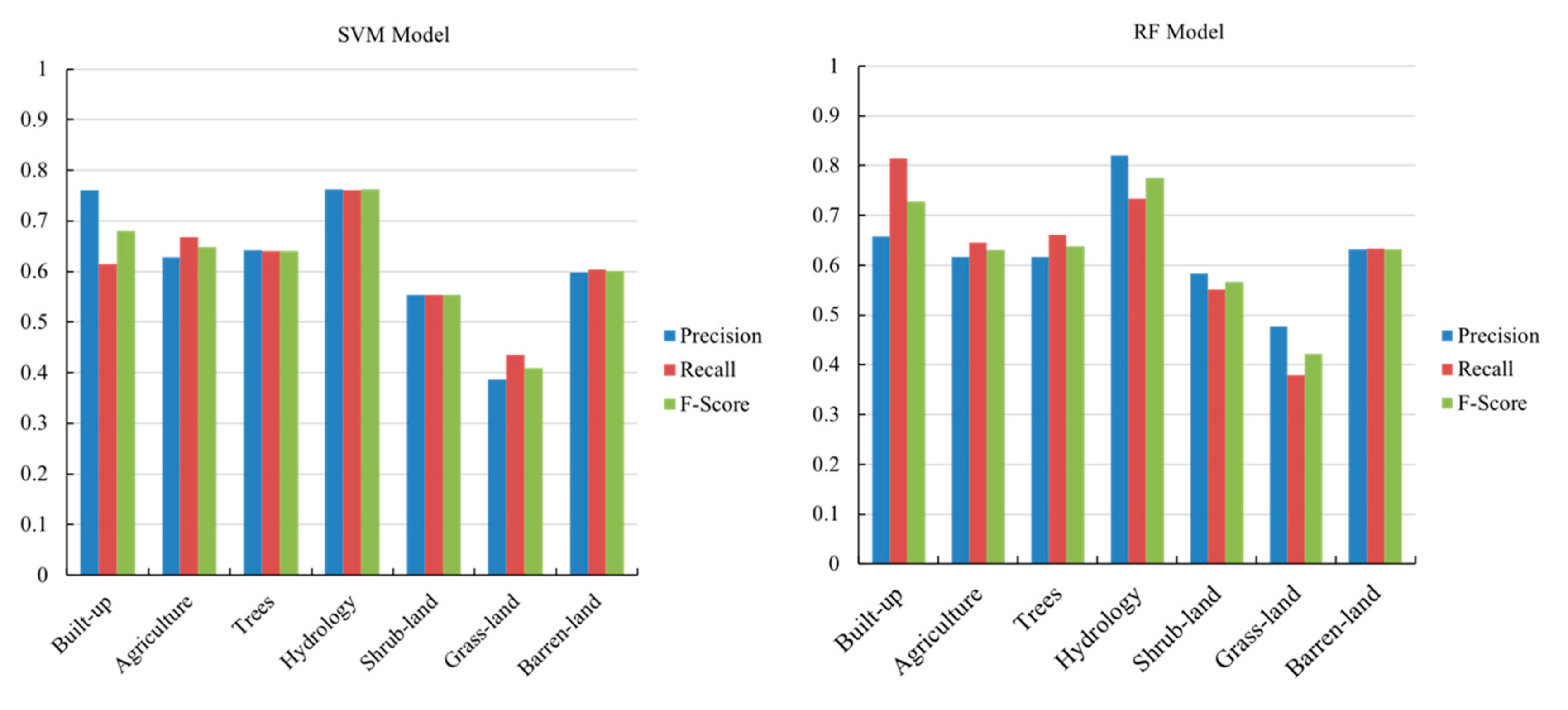

4.1. Trained Models’ Performance

4.2. Classes Accuracy and Inter-Class Similarities

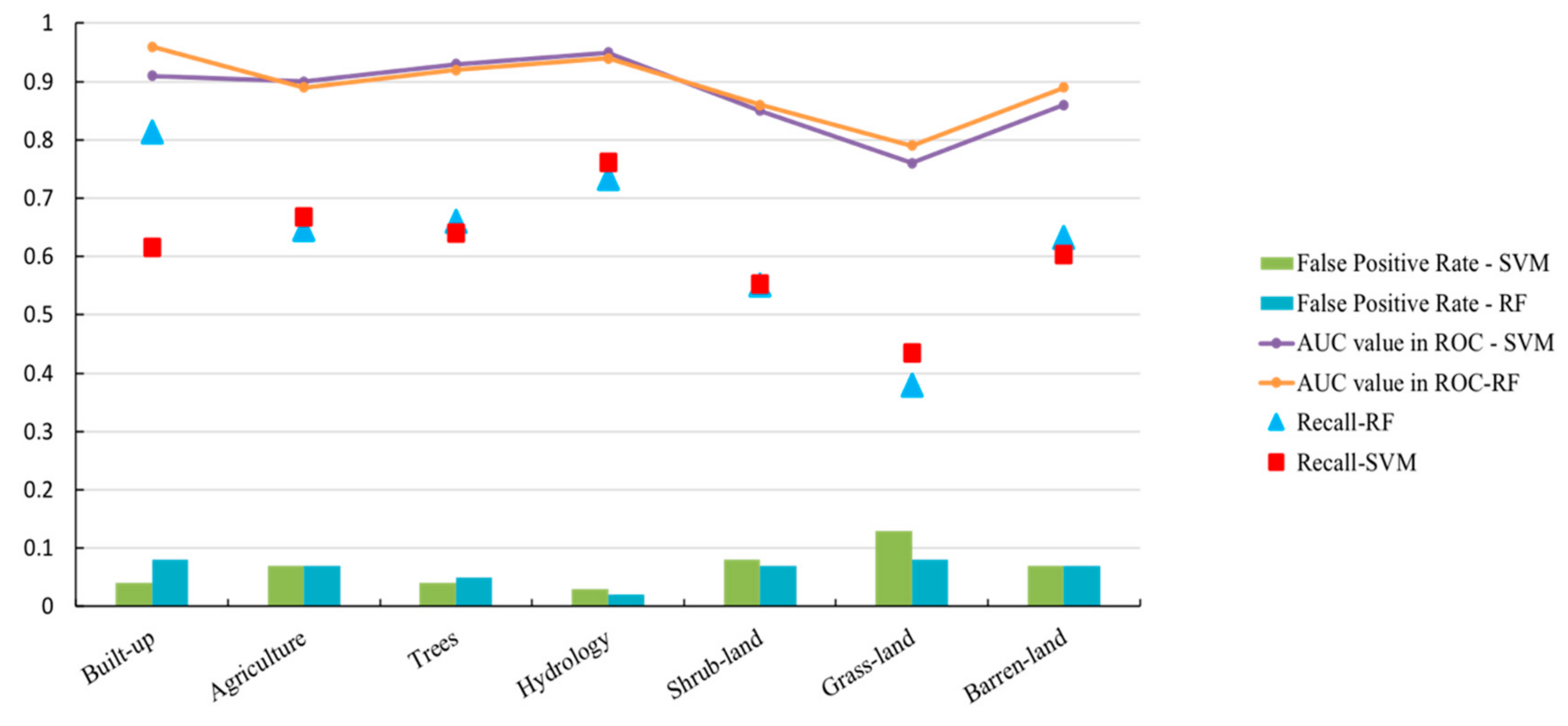

4.3. Discriminating Ability of the Trained Models: Precision, Recall, and Receiver Operating Characteristic Curve

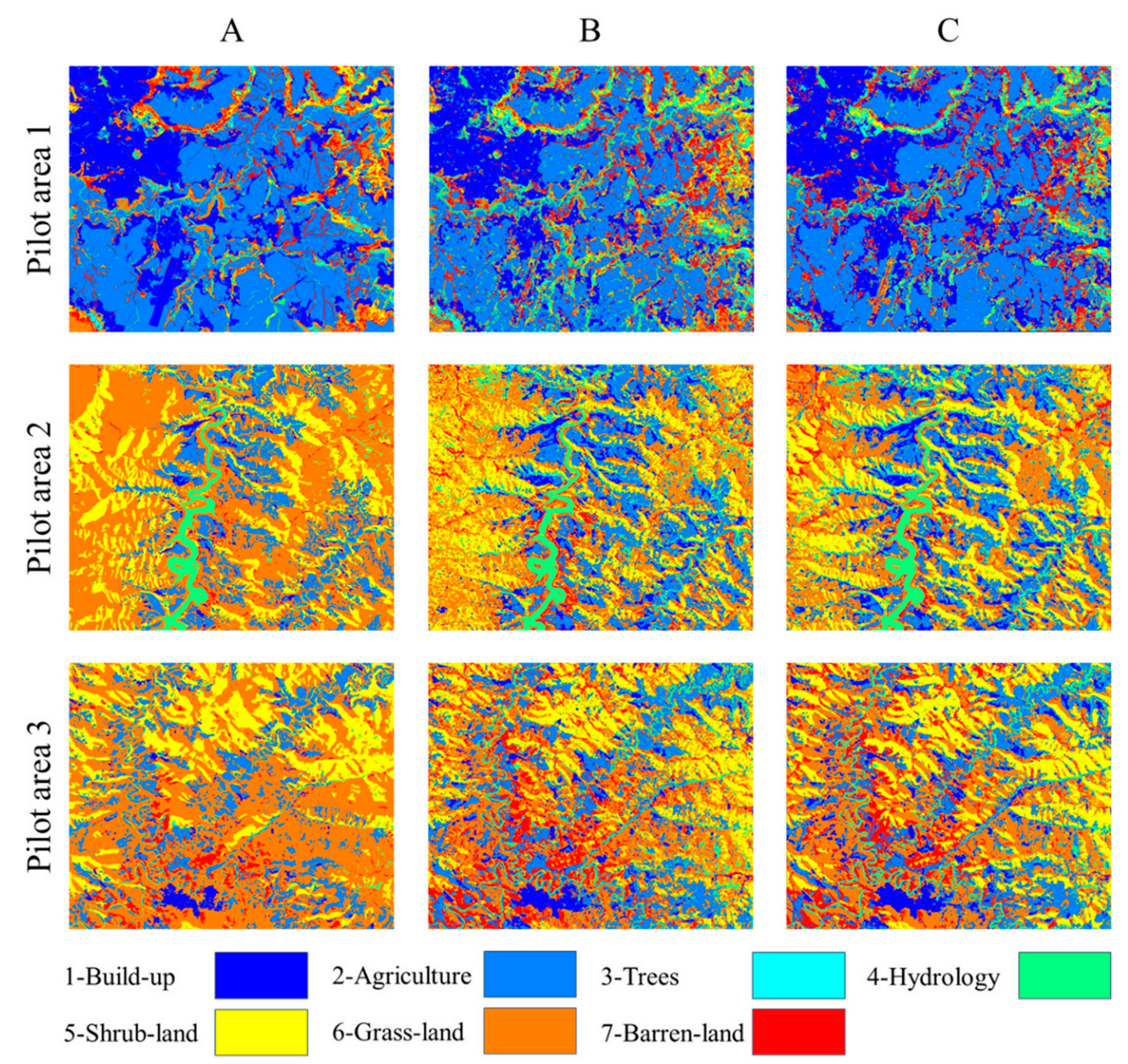
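For reference, the per-class measures named in this section can be computed from true and predicted labels as sketched below. The area under the ROC curve (AUC) is obtained here through its rank interpretation: the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one, with ties counted as one half. This is a one-vs-rest sketch with hypothetical helper names and toy data, not the evaluation code used in the study.

```python
def precision_recall(y_true, y_pred, positive):
    """One-vs-rest precision and recall for a single class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def roc_auc(scores, is_positive):
    """Area under the ROC curve via pairwise ranking (ties count 0.5)."""
    pos = [s for s, flag in zip(scores, is_positive) if flag]
    neg = [s for s, flag in zip(scores, is_positive) if not flag]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = ["Grass", "Grass", "Shrub", "Grass", "Shrub", "Shrub"]
y_pred = ["Grass", "Shrub", "Shrub", "Grass", "Grass", "Shrub"]
print(precision_recall(y_true, y_pred, "Grass"))  # both 2/3

# Hypothetical classifier scores for the "Grass" class.
scores = [0.9, 0.4, 0.3, 0.8, 0.6, 0.2]
print(roc_auc(scores, [t == "Grass" for t in y_true]))  # 8/9, about 0.889
```

The pairwise form is quadratic in the number of samples; for large test sets the same value is usually computed by sorting the scores once.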

4.4. Classification Results and Final Land Cover Product

5. Discussion

5.1. Google Earth Engine as a Cloud-Based Remote Sensing Platform

5.2. The Effect of Spectral and Spatial Features on Accuracy Performance

5.3. The Inter-Class Confusion Rates

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class Code | LC Type | LC Name | LC Description |
|---|---|---|---|
| 1 | BUILT-UP (4 Classes) | Urban Areas | Relatively larger urban built-up areas, commonly with presence of trees |
| | | Urban Commercial and/or Industrial Areas | Commercial and/or industrial built-up areas |
| | | Rural Settlements, Plain Areas | Rural houses in flat-lying plain areas + small cultivated herbaceous crops + closed herbaceous natural vegetation, often together with trees and/or shrubs employed for demarcation |
| | | Rural Settlements, Sloping and Mountain Areas | Rural houses in sloping and mountainous areas + herbaceous natural vegetation, occasionally with shrubs employed for demarcation, usually treeless |
| 2 | AGRICULTURE (5 Classes) | Rainfed Agriculture, Plain Areas | Rainfed herbaceous crops cultivated in flat-lying plains, relatively larger sized fields |
| | | Rainfed Agriculture, Sloping & Mountainous Regions | Rainfed herbaceous crops in sloping land and mountains with terracing and/or contour ploughing, small and medium sized fields, sometimes with lines of shrubs demarcating fields |
| | | Rainfed Agriculture, Sheet Erosion | Rainfed herbaceous crops with visible water sheet erosion, commonly with associated gully erosion |
| | | Irrigated Agriculture | Small irrigated herbaceous crops near water courses |
| | | Rainfed Agriculture + Rainfed Orchards | Small rainfed herbaceous crops + regular rainfed orchard plantations (usually as rows of fruit trees separating elongated fields) |
| 3 | TREES (7 Classes) | Trees, Needle-leaved (Closed) | Closed evergreen needle-leaved trees, sometimes occurring as plantations |
| | | Trees, Needle-leaved (Open) | Open evergreen needle-leaved trees + herbaceous natural vegetation |
| | | Trees, Broadleaved (Closed) | Closed deciduous broadleaved trees, commonly along riverbeds |
| | | Trees, Broadleaved (Open) | Open deciduous broadleaved trees + herbaceous natural vegetation |
| | | Trees, Undifferentiated (Closed) | Closed undifferentiated trees |
| | | Trees, Undifferentiated (Open) | Open undifferentiated trees + herbaceous natural vegetation |
| | | Trees (Sparse) | Sparse trees + herbaceous natural vegetation (closed-open) |
| 4 | HYDROLOGY (4 Classes) | Large Waterbody | Large perennial freshwater lake or dam reservoir |
| | | Small Waterbody | Small freshwater seasonal and/or perennial reservoir, pool, waterhole, etc. |
| | | Wetland (Perennial and/or Seasonal) | Natural perennial and/or seasonal freshwater body + perennial closed-open natural vegetation |
| | | River Bank | River bank (soil/sand deposits) + perennial or periodic flowing fresh water (river) |
| 5 | SHRUBLAND (2 Classes) | Shrubland (Closed) | Natural shrubs (H = 0.5 to 1.5 m), closed |
| | | Shrubland (Open) | Natural shrubs (H = 0.5 to 1.5 m), open + natural herbaceous vegetation (open-closed) |
| 6 | GRASSLAND (1 Class) | Grassland | Grassland: natural vegetation |
| 7 | BARREN LAND (5 Classes) | Bare Rock | Rock outcrops |
| | | Bare Area | Bare areas: undifferentiated areas not used for cultivation and usually devoid of grass or shrub cover |
| | | Boulders & Loose Rocks | Areas with large scattered boulders and/or unconsolidated loose rocks, commonly sloping, usually together with patchy natural vegetation and/or shrubs and/or natural trees |
| | | Gullies | Gully erosion, occasionally with trees and/or tall shrubs |
| | | Mines & Quarries | Major mines and quarries as well as temporary building material extraction sites |

| Image Source | Spatial Resolution (m) | Spectral Resolution |
|---|---|---|
| RapidEye | 5 | 5 bands (440 to 850 nm) |
| SPOT 5 | 2.5 | 5 bands (480 to 1750 nm) |
| Aerial orthophotos | 0.5 | 3 bands (visible light) |

| Classifier | Training Time (s) | Overall Accuracy (%) |
|---|---|---|
| Bagged Trees | 76 | 62.6 |
| Support Vector Machine | 1197 | 60.4 |

| Class No. | Class Name | Built-Up | Agriculture | Trees | Hydrology | Shrub-Land | Grass-Land | Barren-Land |
|---|---|---|---|---|---|---|---|---|
| 1 | Built-up | 81 | 6 | 3 | 1 | 1 | 5 | 3 |
| 2 | Agriculture | 9 | 65 | 2 | 2 | 6 | 11 | 5 |
| 3 | Trees | 10 | 3 | 66 | 3 | 11 | 4 | 3 |
| 4 | Hydrology | 6 | 7 | 5 | 73 | 2 | 4 | 3 |
| 5 | Shrub-land | 4 | 6 | 13 | 1 | 55 | 11 | 10 |
| 6 | Grass-land | 11 | 15 | 5 | 3 | 14 | 38 | 14 |
| 7 | Barren-land | 7 | 6 | 3 | 3 | 8 | 9 | 63 |

| Class No. | Class Name | Built-Up | Agriculture | Trees | Hydrology | Shrub-Land | Grass-Land | Barren-Land |
|---|---|---|---|---|---|---|---|---|
| 1 | Built-up | 62 | 8 | 5 | 3 | 2 | 15 | 5 |
| 2 | Agriculture | 5 | 67 | 2 | 3 | 5 | 13 | 6 |
| 3 | Trees | 4 | 2 | 64 | 4 | 13 | 8 | 4 |
| 4 | Hydrology | 2 | 6 | 4 | 76 | 2 | 5 | 5 |
| 5 | Shrub-land | 2 | 5 | 9 | 2 | 55 | 17 | 9 |
| 6 | Grass-land | 5 | 13 | 4 | 4 | 17 | 43 | 14 |
| 7 | Barren-land | 3 | 7 | 3 | 4 | 9 | 14 | 60 |
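Each row of the two confusion matrices sums to about 100 (the small differences come from rounding), so the entries read as row percentages and the diagonal holds the per-class rate of correct assignment. The short script below summarizes the values exactly as tabulated: the unweighted mean of the diagonal and every off-diagonal cell holding the largest confusion. Because this extract does not label which classifier each matrix belongs to, they are called simply the first and second matrix; the helper names are hypothetical.

```python
CLASSES = ["Built-up", "Agriculture", "Trees", "Hydrology",
           "Shrub-land", "Grass-land", "Barren-land"]

# Values transcribed from the two confusion matrix tables (percent per row).
FIRST = [
    [81, 6, 3, 1, 1, 5, 3],
    [9, 65, 2, 2, 6, 11, 5],
    [10, 3, 66, 3, 11, 4, 3],
    [6, 7, 5, 73, 2, 4, 3],
    [4, 6, 13, 1, 55, 11, 10],
    [11, 15, 5, 3, 14, 38, 14],
    [7, 6, 3, 3, 8, 9, 63],
]
SECOND = [
    [62, 8, 5, 3, 2, 15, 5],
    [5, 67, 2, 3, 5, 13, 6],
    [4, 2, 64, 4, 13, 8, 4],
    [2, 6, 4, 76, 2, 5, 5],
    [2, 5, 9, 2, 55, 17, 9],
    [5, 13, 4, 4, 17, 43, 14],
    [3, 7, 3, 4, 9, 14, 60],
]


def summarize(matrix):
    """Mean diagonal rate and every off-diagonal cell holding the maximum value."""
    n = len(matrix)
    mean_diag = sum(matrix[i][i] for i in range(n)) / n
    top = max(matrix[i][j] for i in range(n) for j in range(n) if i != j)
    pairs = [(CLASSES[i], CLASSES[j]) for i in range(n) for j in range(n)
             if i != j and matrix[i][j] == top]
    return mean_diag, top, pairs


for name, m in (("first", FIRST), ("second", SECOND)):
    mean_diag, top, pairs = summarize(m)
    print(f"{name}: mean diagonal {mean_diag:.1f} %, max off-diagonal {top} % at {pairs}")
```

Both matrices put their largest confusions among Grass-land, Agriculture, and Shrub-land. The unweighted mean of the diagonal is not the same quantity as the overall accuracy in the classifier table, which weights each class by its number of test samples.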

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mardani, M.; Mardani, H.; De Simone, L.; Varas, S.; Kita, N.; Saito, T. Integration of Machine Learning and Open Access Geospatial Data for Land Cover Mapping. Remote Sens. 2019, 11, 1907. https://doi.org/10.3390/rs11161907
