A Building Extraction Approach Based on the Fusion of LiDAR Point Cloud and Elevation Map Texture Features

Abstract

1. Introduction

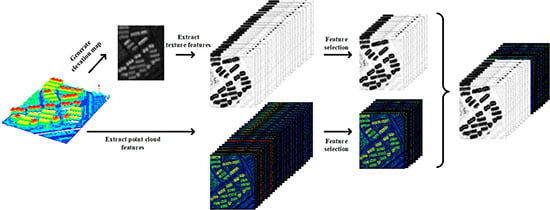

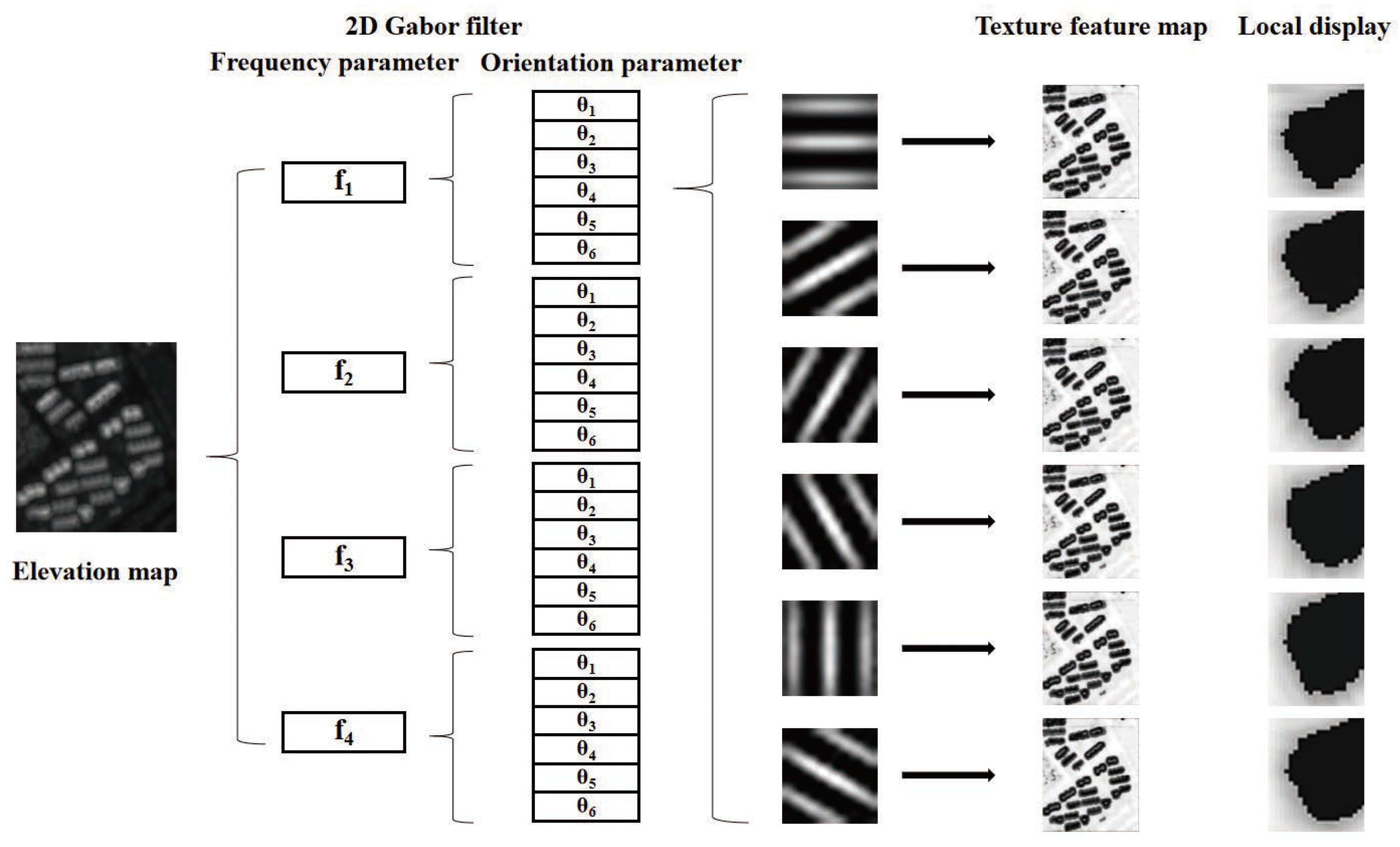

2. Basic Theory of Gabor Filters

3. Building Extraction Based on the Fusion of Point Cloud and Texture Features

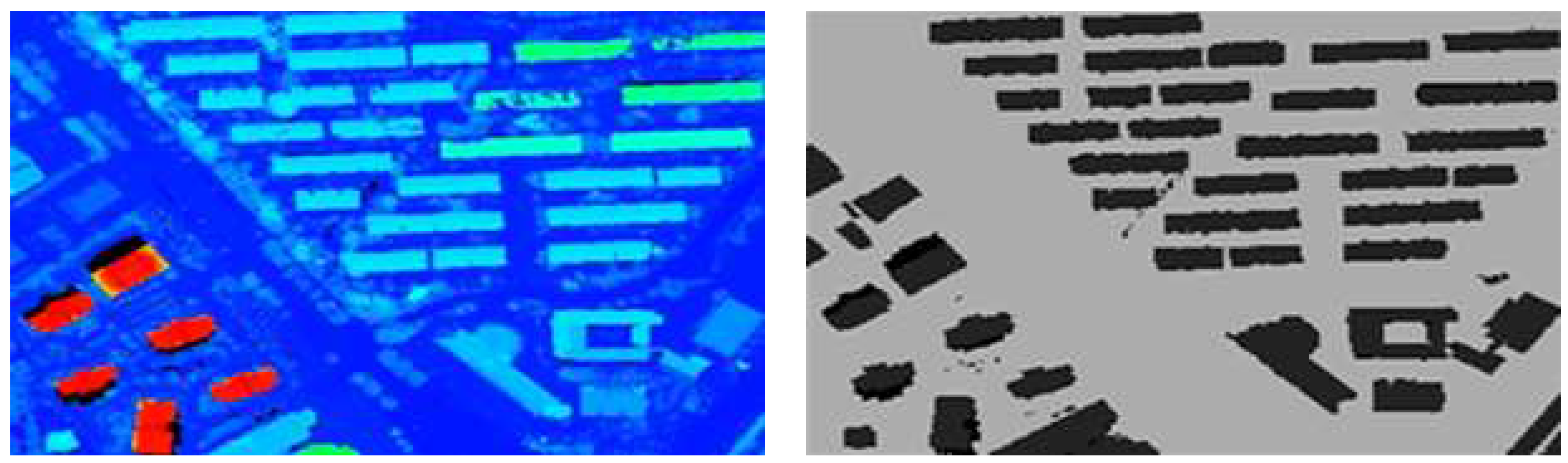

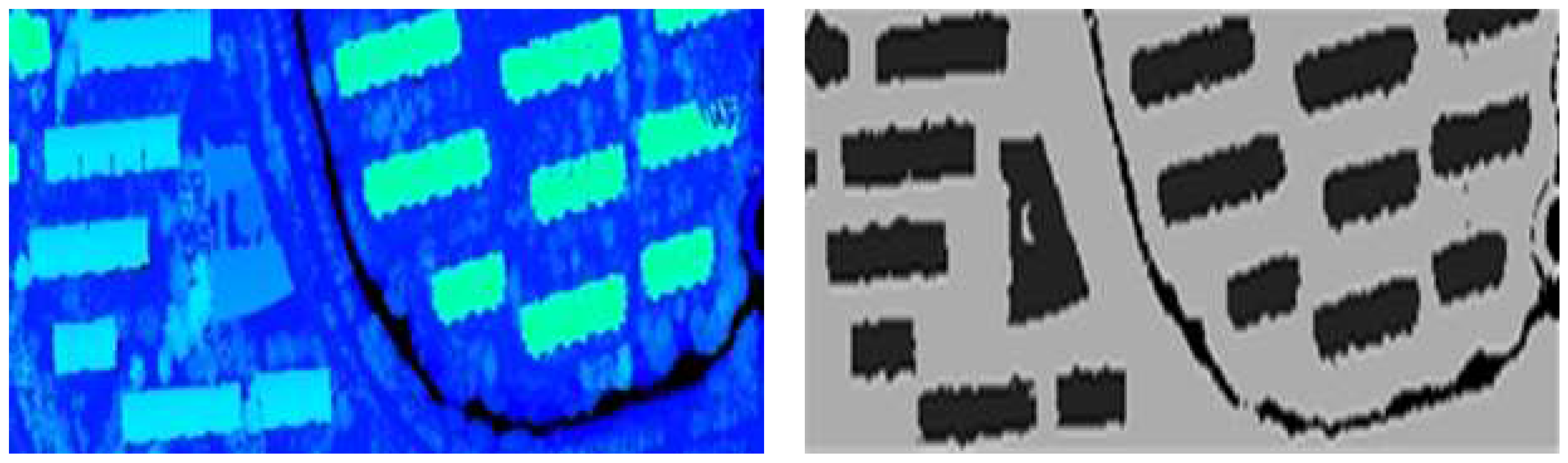

3.1. Point Cloud Features

3.2. Texture Feature Extraction Based on the Elevation Map
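As a rough illustration of the elevation-map texture features named in Step 1 of Section 3.5, the sketch below rasterizes points into a maximum-height grid and filters it with the real part of a Gabor kernel. The cell size, the kernel parameters, the handling of empty cells, and all function names are assumptions chosen for illustration; they are not the settings used in the paper.

```python
import math

def elevation_map(points, cell=1.0):
    """Rasterize (x, y, z) points into a grid holding the max height per cell."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x0, y0 = min(xs), min(ys)
    cols = int((max(xs) - x0) / cell) + 1
    rows = int((max(ys) - y0) / cell) + 1
    zmin = min(p[2] for p in points)
    # Assumption: cells without points default to the lowest observed height.
    grid = [[zmin] * cols for _ in range(rows)]
    for x, y, z in points:
        r, c = int((y - y0) / cell), int((x - x0) / cell)
        grid[r][c] = max(grid[r][c], z)
    return grid

def gabor_kernel(size=5, sigma=2.0, theta=0.0, wavelength=4.0, gamma=0.5):
    """Real part of a 2D Gabor kernel: Gaussian envelope times a cosine carrier."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates by theta so the carrier runs along xr.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            row.append(envelope * math.cos(2 * math.pi * xr / wavelength))
        kernel.append(row)
    return kernel

def filter_valid(grid, kernel):
    """Correlate the grid with the kernel wherever the kernel fits entirely."""
    k = len(kernel)
    rows, cols = len(grid), len(grid[0])
    return [
        [
            sum(kernel[i][j] * grid[r + i][c + j] for i in range(k) for j in range(k))
            for c in range(cols - k + 1)
        ]
        for r in range(rows - k + 1)
    ]

# Toy scene: flat ground (z = 0) with a 10-unit-high block covering x >= 4.
points = [(i + 0.5, j + 0.5, 10.0 if i >= 4 else 0.0)
          for i in range(8) for j in range(8)]
grid = elevation_map(points)
kernel = gabor_kernel(theta=0.0)
response = filter_valid(grid, kernel)
```

In a fuller pipeline, a bank of such kernels at several orientations and wavelengths would typically be applied, and statistics of the responses would serve as per-cell texture features.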

3.3. Feature Selection for Reducing the Number of Features

3.4. Definition of the Objective Function

3.5. Implementation of the Proposed Method

- Step 1: Input the testing images, compute the feature vectors of the point cloud, generate elevation maps, and extract texture features from the elevation maps via the Gabor filter;

- Step 2: Build the training and testing samples based on the fusion of point cloud and texture features;

- Step 3: Randomly generate the initial PSO population in the range [−10, 10] using decimal coding, and transform it into binary coding;

- Step 4: Conduct building extraction, and compute the fitness value of each particle by Equation (9);

- Step 5: Operation of PSO:

- Step 6: Conduct building extraction, and compute the fitness value of each particle by Equation (9);

- Step 7: If the new solution is better, replace the current particle; otherwise, leave the particle unchanged. Then find the current global best solution;

- Step 8: Check whether the maximum number of iterations has been reached; if so, go to Step 9; otherwise, return to Step 5;

- Step 9: Output the optimal feature combination, and compare it with other building extraction methods via the extraction accuracy.
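The loop in Steps 3 to 9 can be sketched as a small binary PSO for feature selection. This is a minimal sketch under stated assumptions, not the authors' implementation: the sigmoid threshold used for the decimal-to-binary transform, the inertia and acceleration constants, the standard personal-best update used for Step 7, and `toy_fitness` (a hypothetical stand-in for the extraction accuracy of Equation (9)) are all illustrative choices.

```python
import math
import random

def to_binary(position):
    """Map real-valued positions to a 0/1 feature mask (assumed rule: sigmoid(x) > 0.5)."""
    return [1 if 1.0 / (1.0 + math.exp(-x)) > 0.5 else 0 for x in position]

def toy_fitness(mask, useful=(0, 2, 5)):
    """Hypothetical stand-in for Equation (9): reward useful features, penalize mask size."""
    hits = sum(mask[i] for i in useful)
    return hits / len(useful) - 0.01 * sum(mask)

def pso_select(n_features, fitness, n_particles=20, n_iter=50,
               w=0.7, c1=1.5, c2=1.5, lo=-10.0, hi=10.0, seed=0):
    rng = random.Random(seed)
    # Step 3: random initial population in [lo, hi] (decimal coding).
    pos = [[rng.uniform(lo, hi) for _ in range(n_features)] for _ in range(n_particles)]
    vel = [[0.0] * n_features for _ in range(n_particles)]
    # Step 4: fitness of each particle, evaluated on its binary mask.
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(to_binary(p)) for p in pos]
    g = max(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]
    for _ in range(n_iter):  # Step 8: stop after the maximum number of iterations.
        for i in range(n_particles):
            for d in range(n_features):  # Step 5: velocity and position update.
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            fit = fitness(to_binary(pos[i]))  # Step 6: re-evaluate fitness.
            if fit > pbest_fit[i]:  # Step 7: keep the better solution.
                pbest[i], pbest_fit[i] = pos[i][:], fit
                if fit > gbest_fit:
                    gbest, gbest_fit = pos[i][:], fit
    return to_binary(gbest), gbest_fit  # Step 9: best feature combination found.

best_mask, best_fit = pso_select(n_features=8, fitness=toy_fitness)
print(best_mask, round(best_fit, 4))
```

In practice, `fitness` would train and evaluate a building classifier on the features selected by the mask; the toy function only keeps the example self-contained.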

4. Experimental Results and Discussion

4.1. Experimental Platform and Data Information

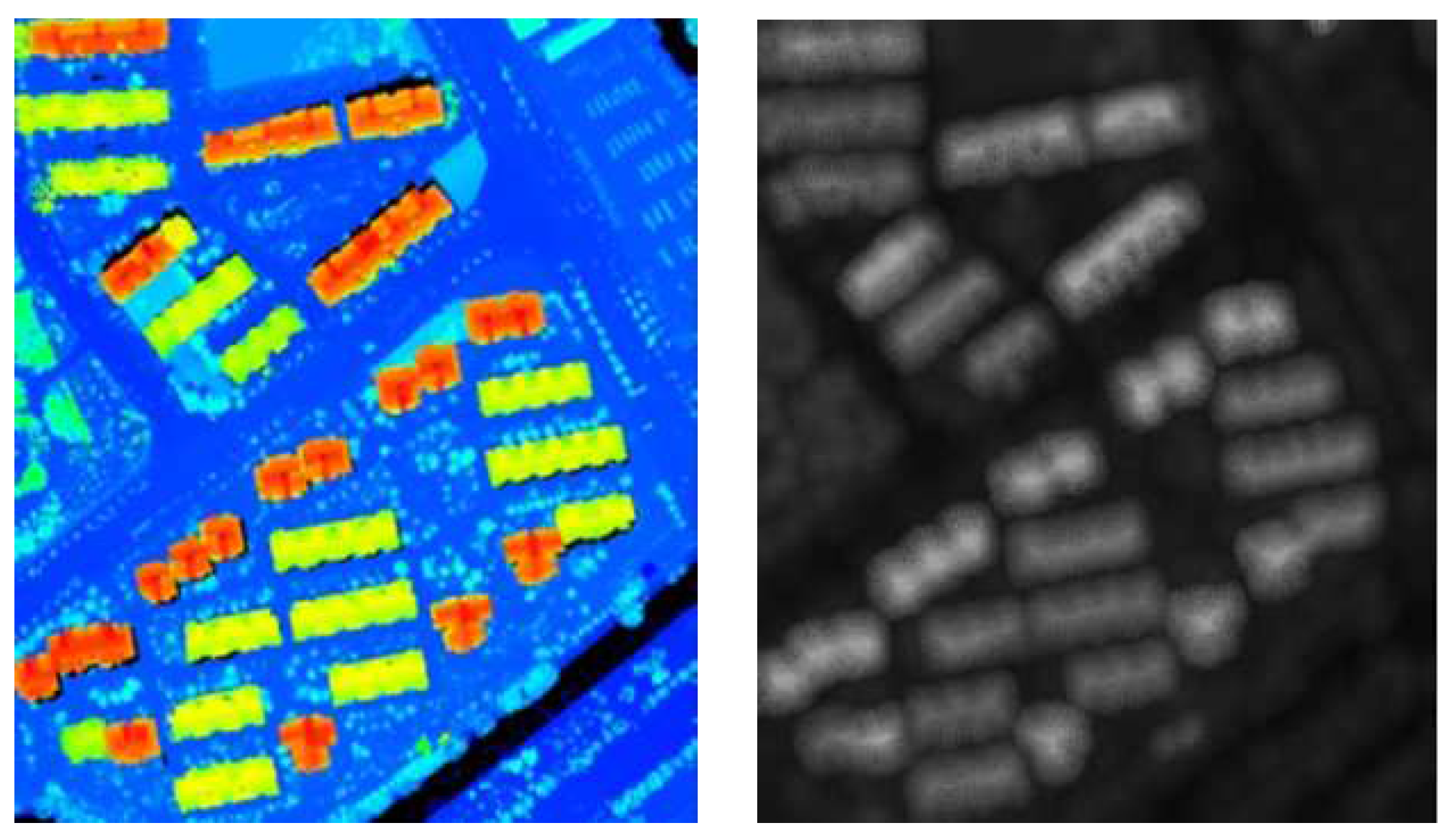

4.2. Extraction of Texture Features

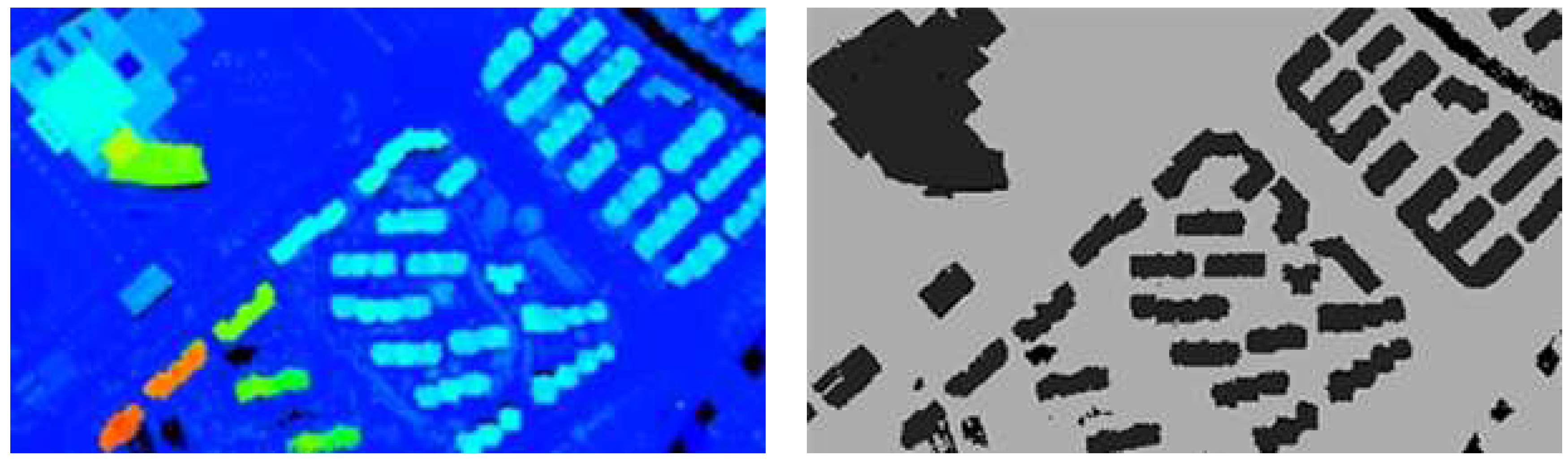

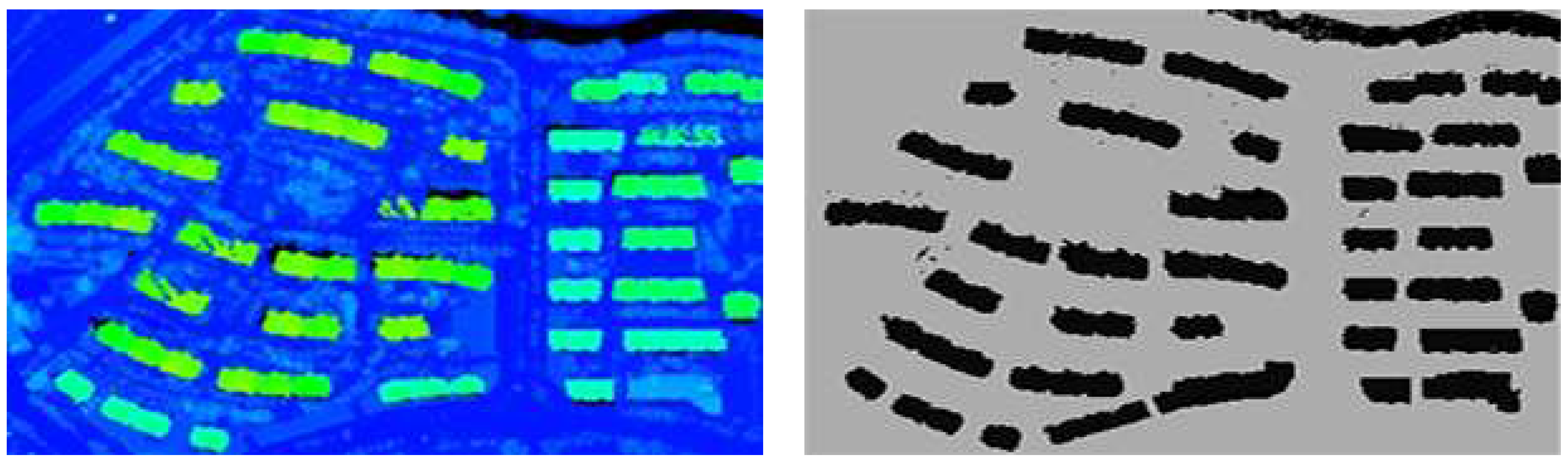

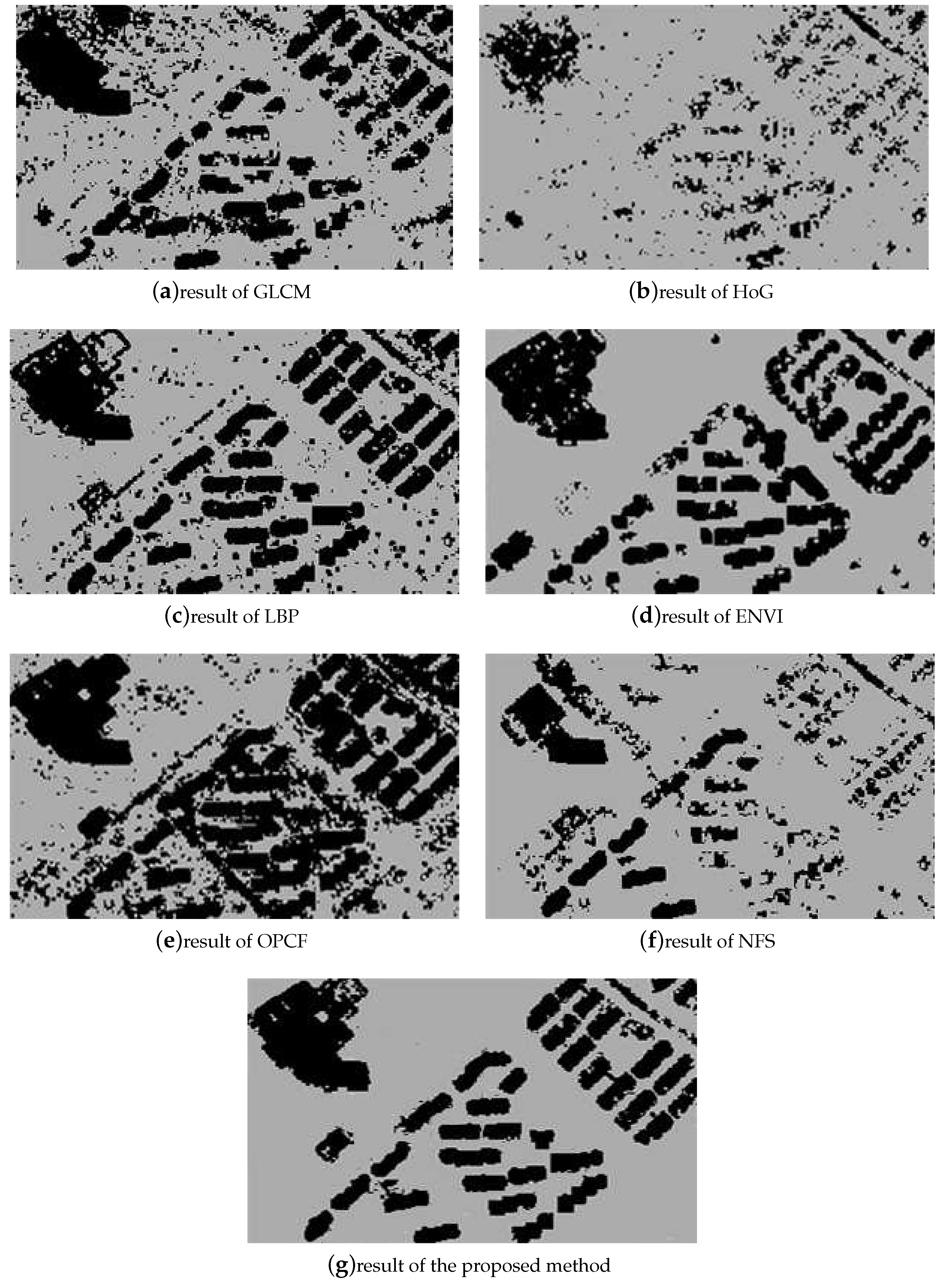

4.3. Comparative Analysis and Accuracy Evaluation of Building Extraction

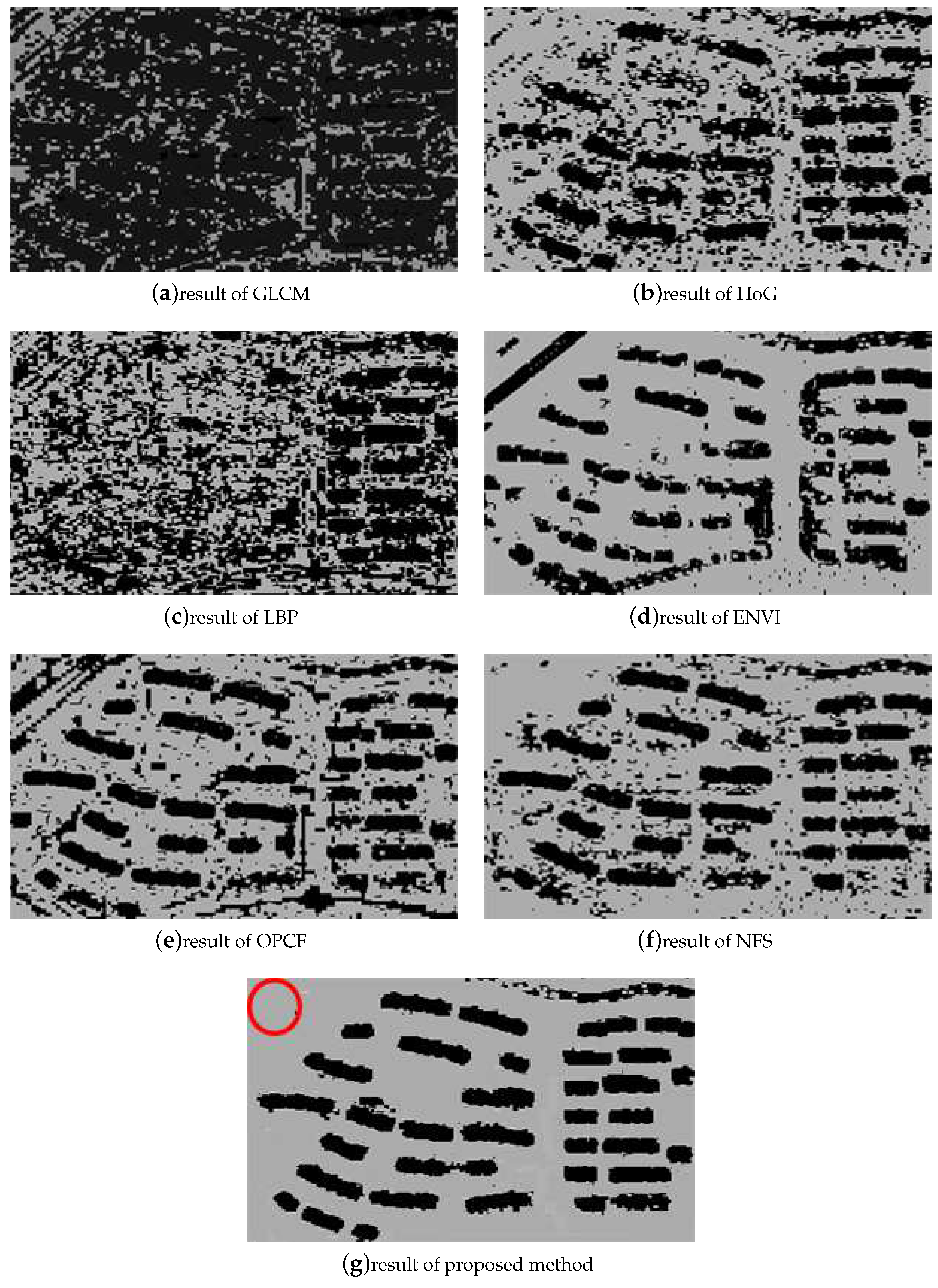

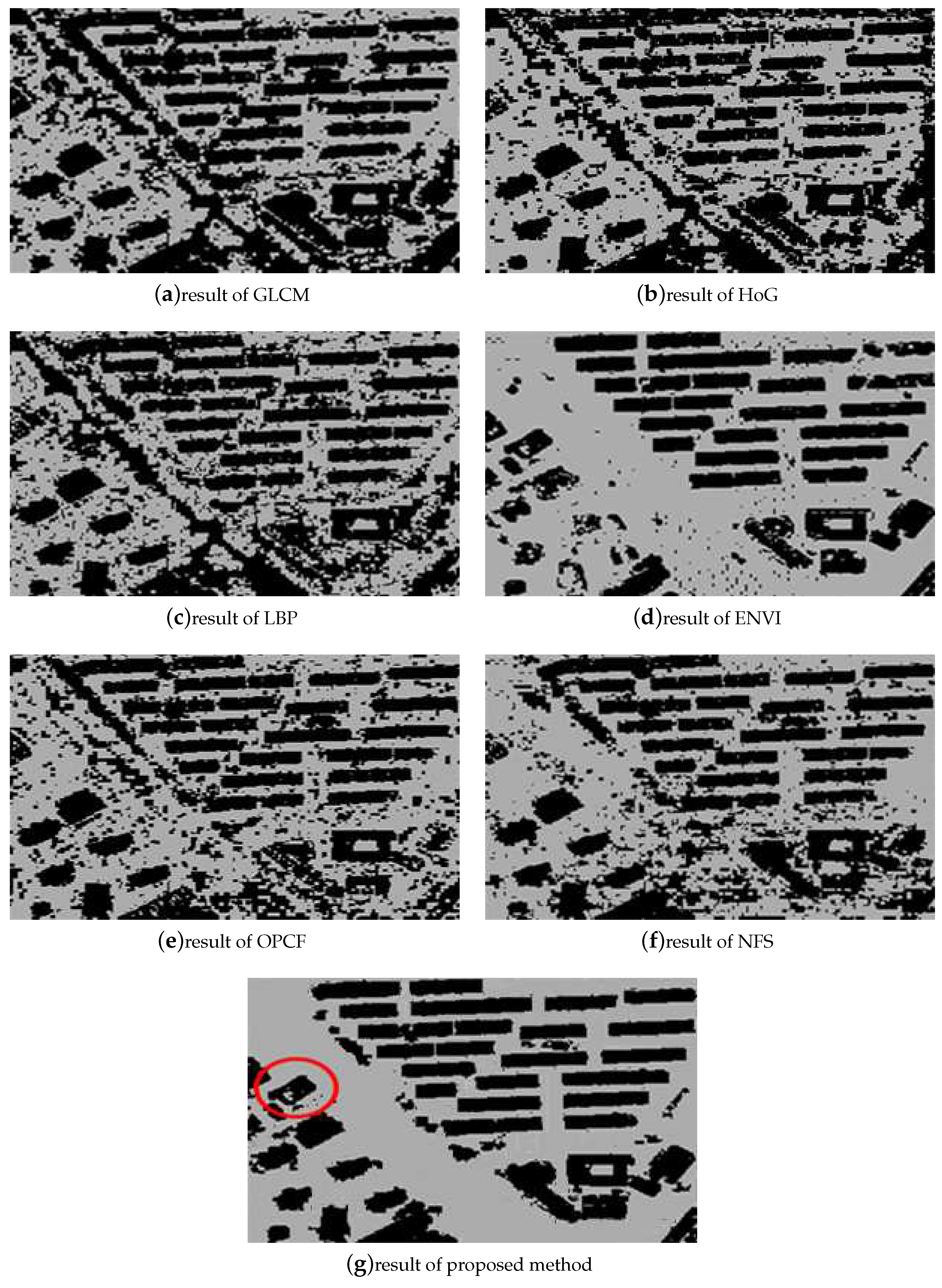

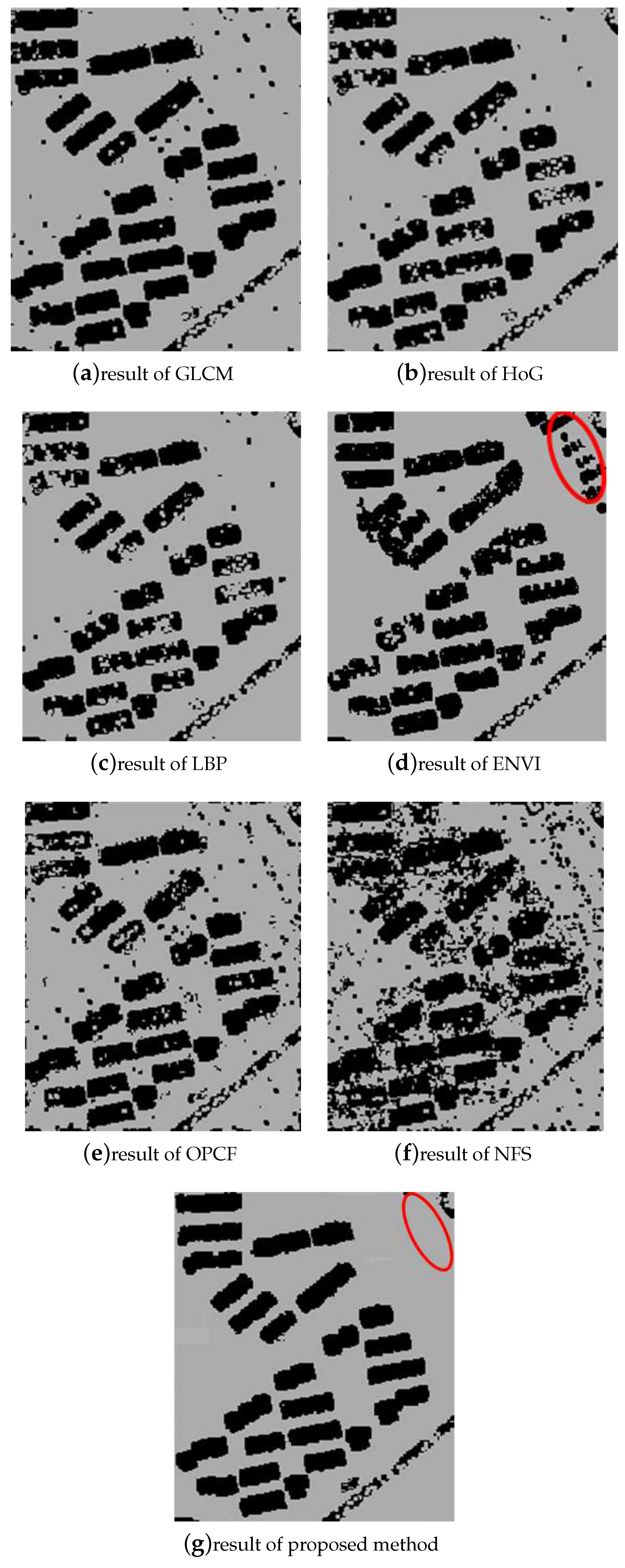

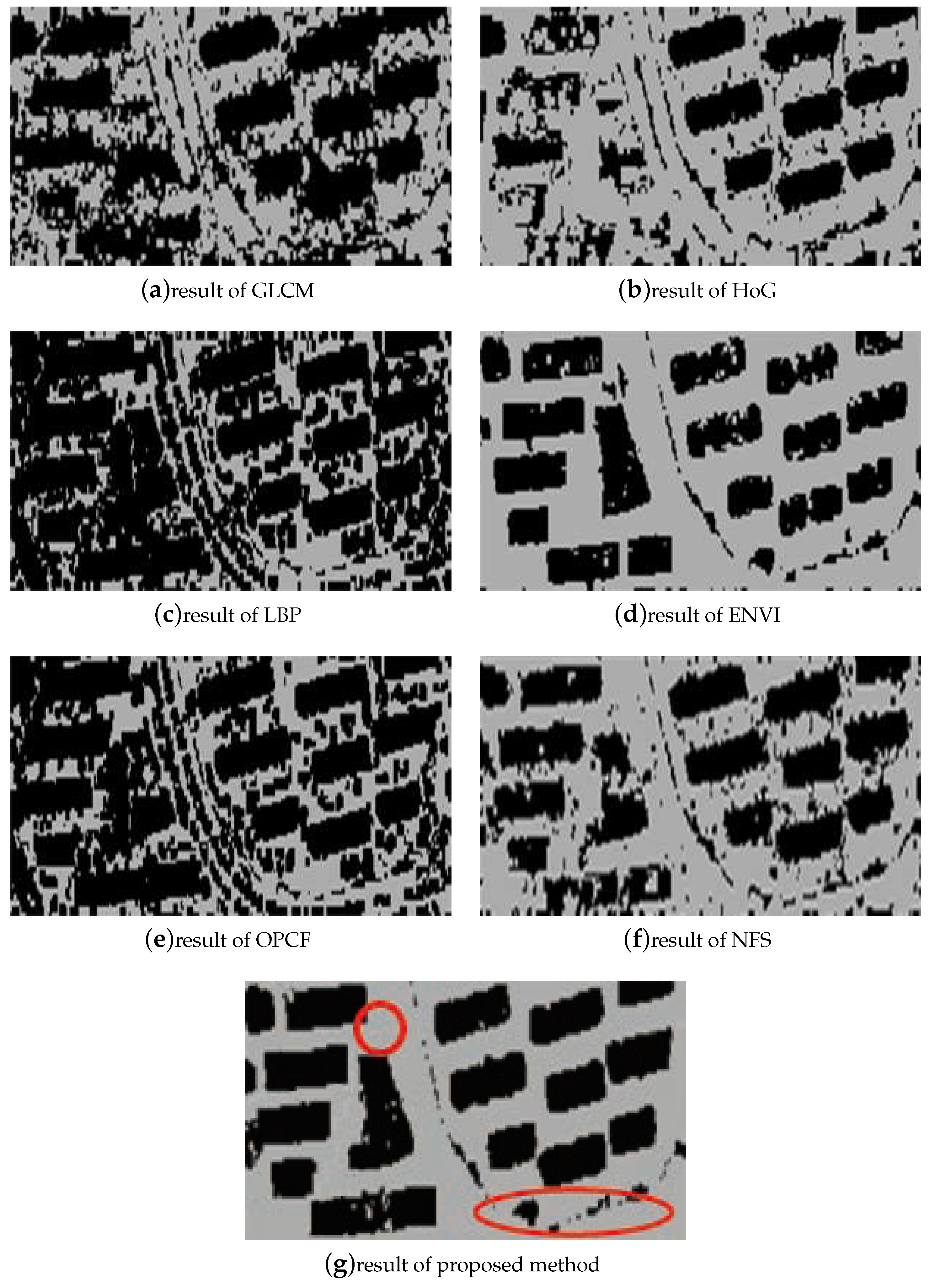

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Category | Name | Abbreviation | Meaning | Formula |
|---|---|---|---|---|
| Eigenvalue-based features | Sum | SU | Sum of eigenvalues | |
| | Total variance | TV | Total variance | |
| | Eigen entropy | EI | Characteristic entropy | |
| | Anisotropy | AN | Anisotropy | |
| | Planarity | PL | Planarity | |
| | Linearity | LI | Linearity | |
| | Surface roughness | SR | Surface roughness | |
| | Sphericity | SP | Sphericity | |
| Density-based feature | Point density | PD | Point density | |
| Elevation-based features | Height above | HA | Height difference between the current point and the lowest point | |
| | Height below | HB | Height difference between the highest point and the current point | |
| | Sphere variance | SPV | Standard deviation of the height differences in the spherical neighborhood | |
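The formula column of the feature table did not survive, so the sketch below uses definitions that are common in the point cloud literature rather than the paper's own equations. It assumes covariance eigenvalues ordered as l1 >= l2 >= l3 > 0 and a neighborhood that includes the current point. TV and SR are left out because their exact definitions cannot be recovered from the table, and the function names are hypothetical.

```python
import math

def eigen_features(l1, l2, l3):
    """Shape features from covariance eigenvalues l1 >= l2 >= l3 > 0 (common definitions)."""
    total = l1 + l2 + l3
    e = [l / total for l in (l1, l2, l3)]  # normalized eigenvalues, sum to 1
    return {
        "SU": total,                                # sum of eigenvalues
        "EI": -sum(x * math.log(x) for x in e),     # eigen entropy
        "AN": (l1 - l3) / l1,                       # anisotropy
        "PL": (l2 - l3) / l1,                       # planarity
        "LI": (l1 - l2) / l1,                       # linearity
        "SP": l3 / l1,                              # sphericity
    }

def elevation_features(z, neighbor_z):
    """HA, HB, and SPV for a point of height z, given the heights in its neighborhood."""
    mean = sum(neighbor_z) / len(neighbor_z)
    # The standard deviation of (h - z) equals that of h, because z is constant.
    spread = math.sqrt(sum((h - mean) ** 2 for h in neighbor_z) / len(neighbor_z))
    return {
        "HA": z - min(neighbor_z),   # height above the lowest neighbor
        "HB": max(neighbor_z) - z,   # height below the highest neighbor
        "SPV": spread,
    }

shape = eigen_features(4.0, 2.0, 1.0)
elev = elevation_features(5.0, [2.0, 5.0, 8.0])
print(shape)
print(elev)
```

With these definitions, linearity, planarity, and sphericity sum to 1, which makes them convenient for telling line-like, planar (for example, roofs), and scattered (for example, vegetation) neighborhoods apart.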

| Experimental Data | Data Area | Number of Points (Original Data) | Number of Points (After Dilution) | Point Cloud Density (Original Data) | Point Cloud Density (After Dilution) |
|---|---|---|---|---|---|
| LDR 1 | 174,080 | 4,486,763 | 19,320 | 25.799339 | 0.111040 |
| LDR 2 | 155,595 | 3,989,310 | 21,926 | 25.683631 | 0.140958 |
| MDR | 186,147 | 585,024 | 23,675 | 26.261592 | 0.183575 |
| HDR 1 | 99,470 | 2,283,275 | 29,127 | 23.062170 | 0.294197 |
| HDR 2 | 68,040 | 1,897,760 | 20,663 | 27.936171 | 0.303810 |

| Experimental Data | GLCM | HoG | LBP | OPCF | NFS | ENVI | Proposed |
|---|---|---|---|---|---|---|---|
| LDR 1 | 86.9984 | 75.9503 | 88.3870 | 80.4586 | 78.7330 | 87.4203 | 90.4238 |
| LDR 2 | 65.5523 | 85.1865 | 74.5297 | 85.5651 | 89.6949 | 91.3310 | 92.2558 |
| MDR | 75.8902 | 78.9356 | 73.3347 | 81.7022 | 82.3527 | 83.5180 | 87.1679 |
| HDR 1 | 87.5064 | 90.8264 | 90.0470 | 87.4961 | 81.6047 | 90.2660 | 92.1138 |
| HDR 2 | 62.3917 | 76.6975 | 75.2795 | 79.4367 | 84.2762 | 86.2752 | 89.1207 |
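The comparison table can be summarized with a few lines of arithmetic. The sketch below copies the table values as listed and treats them as accuracy scores where higher is better; that reading is an assumption, since the extracted table states no unit. The helper names are illustrative only.

```python
methods = ["GLCM", "HoG", "LBP", "OPCF", "NFS", "ENVI", "Proposed"]

# Values copied from the comparison table, one row per data set.
accuracy = {
    "LDR 1": [86.9984, 75.9503, 88.3870, 80.4586, 78.7330, 87.4203, 90.4238],
    "LDR 2": [65.5523, 85.1865, 74.5297, 85.5651, 89.6949, 91.3310, 92.2558],
    "MDR":   [75.8902, 78.9356, 73.3347, 81.7022, 82.3527, 83.5180, 87.1679],
    "HDR 1": [87.5064, 90.8264, 90.0470, 87.4961, 81.6047, 90.2660, 92.1138],
    "HDR 2": [62.3917, 76.6975, 75.2795, 79.4367, 84.2762, 86.2752, 89.1207],
}

def best_method(row):
    """Name of the method with the highest value in one table row."""
    return methods[max(range(len(row)), key=lambda i: row[i])]

def margin_over_best_baseline(row):
    """Difference between the proposed method (last column) and the best other method."""
    return row[-1] - max(row[:-1])

def mean_by_method():
    """Average value of each method over all data sets."""
    n = len(accuracy)
    return {m: sum(row[i] for row in accuracy.values()) / n
            for i, m in enumerate(methods)}

for name, row in accuracy.items():
    print(f"{name}: best = {best_method(row)}, "
          f"margin = {margin_over_best_baseline(row):.4f}")
print({m: round(v, 4) for m, v in mean_by_method().items()})
```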

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lai, X.; Yang, J.; Li, Y.; Wang, M. A Building Extraction Approach Based on the Fusion of LiDAR Point Cloud and Elevation Map Texture Features. Remote Sens. 2019, 11, 1636. https://doi.org/10.3390/rs11141636
