Hyperspectral Image Resolution Enhancement Approach Based on Local Adaptive Sparse Unmixing and Subpixel Calibration

Abstract

1. Introduction

2. Materials and Methods

2.1. Datasets

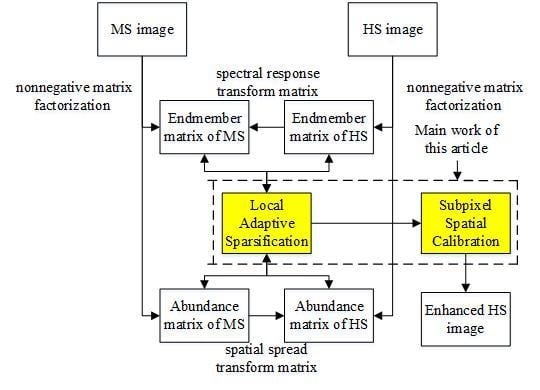

2.2. Local Adaptive Sparse Unmixing Based Fusion Approach

| Algorithm 1 Local Adaptive Sparse Unmixing Based Fusion |
|---|
| 1: Input: Hyperspectral data and multispectral data , degradation matrices L and G, permissible error |
| 2: Initialization stage |
| 3: Endmember extraction from to initialize by VCA. |
| 4: Initialize from and initial by using the FCLS method. |
| 5: Initialize from by . |
| 6: Initialize from and initial by using the FCLS method. |
| 7: Optimization stage |
| 8: Outer loop for |
| 9: Inner loop 1: sparse optimization of LHS (iterate until convergence) |
| 10: Generate the sparsification matrix from by (7) and (8) |
| 11: Generate the local adaptive sparse abundance matrix for by (11) |
| 12: Optimize using the sparse abundance matrix by (13). |
| 13: Optimize by using (14) |
| 14: end |
| 15: Update from by . |
| 16: Inner loop 2: sparse optimization of HMS (iterate until convergence) |
| 17: Generate the sparsification matrix from by (9) and (10) |
| 18: Generate the local adaptive sparse abundance matrix for by (12) |
| 19: Optimize using the sparse abundance matrix by (15). |
| 20: Optimize by using (16) |
| 21: end |
| 22: Update from by |
| 23: end for |
| 24: Fusion stage |
| 25: Fuse and by using to get HSS. |
| 26: Output: fused high spatial-spectral resolution hyperspectral image . |
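Steps 4 and 6 of Algorithm 1 initialize the abundances with FCLS, that is, least squares under nonnegativity and sum-to-one constraints. As a minimal sketch, the NumPy code below solves the same constrained problem by projected gradient onto the probability simplex. This is a swapped-in solver, not the original active-set FCLS procedure, and the function names are hypothetical.

```python
import numpy as np


def project_columns_to_simplex(V):
    """Euclidean projection of every column of V onto {a : a >= 0, sum(a) = 1}."""
    p, n = V.shape
    U = -np.sort(-V, axis=0)                      # columns sorted in descending order
    css = np.cumsum(U, axis=0) - 1.0
    j = np.arange(1, p + 1)[:, None]
    active = U - css / j > 0                      # True for the leading rho entries
    rho = p - np.argmax(active[::-1], axis=0)     # 1-based index of the last True entry
    theta = css[rho - 1, np.arange(n)] / rho      # per-column threshold
    return np.maximum(V - theta, 0.0)


def fcls(E, X, n_iter=1000):
    """Fully constrained least squares: min ||X - E A||_F^2  s.t.  A >= 0, columns sum to 1.
    E: (bands, p) endmember matrix, X: (bands, pixels) spectra. Solved by projected gradient."""
    p = E.shape[1]
    G = E.T @ E
    step = 1.0 / np.linalg.eigvalsh(G).max()      # 1 / Lipschitz constant of the gradient
    EtX = E.T @ X
    A = np.full((p, X.shape[1]), 1.0 / p)         # start from equal abundances
    for _ in range(n_iter):
        # gradient step on 0.5 * ||X - E A||^2, then project back onto the simplex
        A = project_columns_to_simplex(A - step * (G @ A - EtX))
    return A
```

For example, with `E` holding p endmember spectra (as produced by VCA in step 3) and `X` holding pixel spectra, `fcls(E, X)` returns a (p, pixels) abundance matrix whose columns are nonnegative and sum to one. The fixed step 1/λmax(EᵀE) is the standard safe choice for projected gradient on a quadratic objective.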

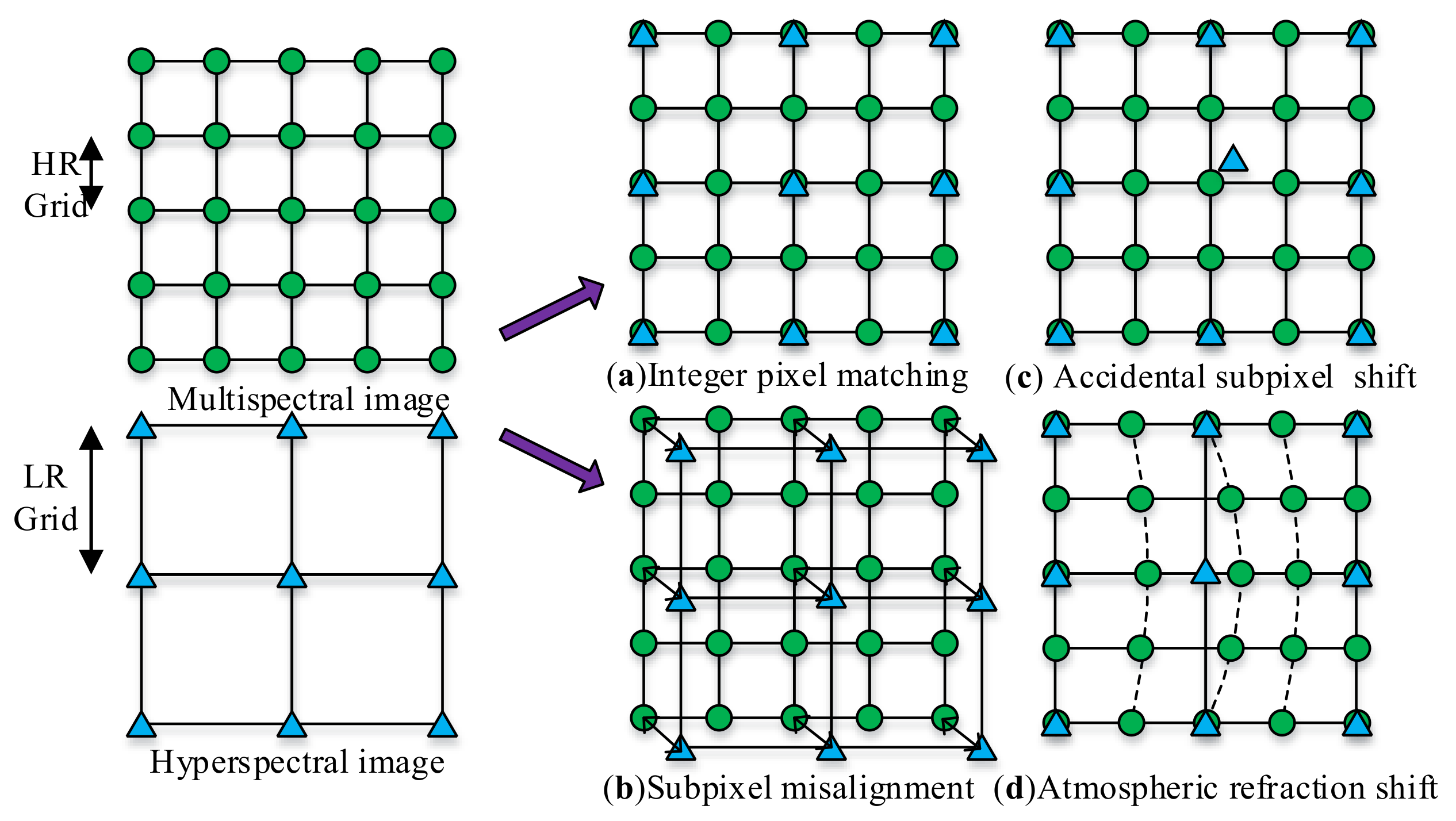

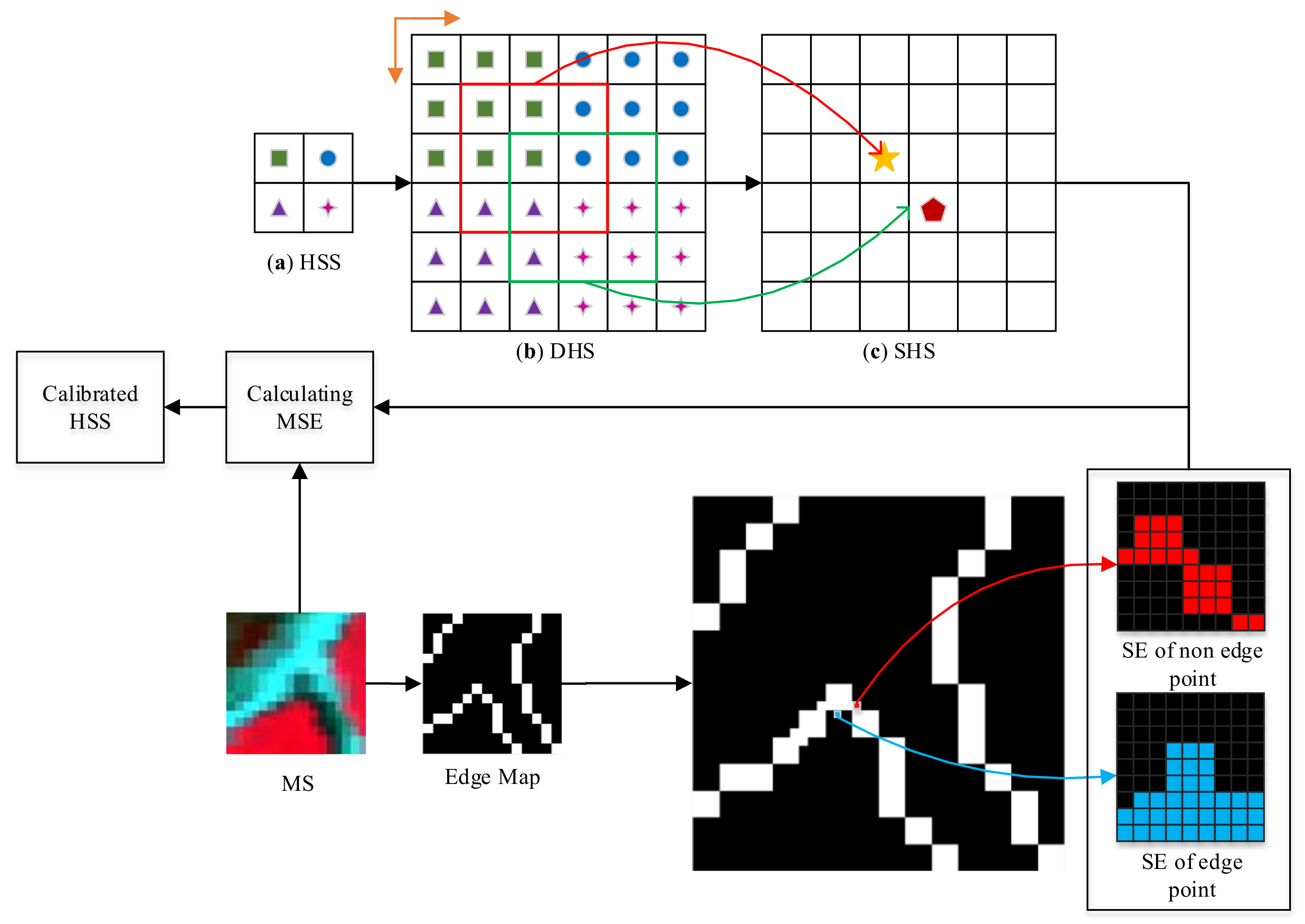
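The optimization stage of Algorithm 1 alternates two sparse unmixing loops. One runs on the hyperspectral data and one on the multispectral data, and the two are linked through the degradation matrices. The NumPy sketch below shows that coupling in a CNMF-style form with Lee-Seung multiplicative updates. Everything specific in it is an assumption:

- the names `srf` (spectral response) and `S` (spatial degradation) are used rather than the paper's L and G;
- the degradation is a simple block average;
- endmembers are initialized from random data columns instead of VCA and FCLS;
- most importantly, `topk_mask` is a hypothetical stand-in that keeps the k largest abundances per pixel. It does not implement the local adaptive sparsification of Equations (7) to (12).

```python
import numpy as np

EPS = 1e-12  # keeps the multiplicative-update denominators away from zero


def block_average_matrix(h, w, s):
    """Hypothetical spatial degradation: average non-overlapping s x s blocks.
    Pixels are ordered row-major; returns S of shape (h*w, (h//s)*(w//s))
    so that low-resolution abundances are A_low = A_high @ S."""
    assert h % s == 0 and w % s == 0, "image size must be a multiple of s"
    wl = w // s
    S = np.zeros((h * w, (h // s) * wl))
    for r in range(h):
        for c in range(w):
            S[r * w + c, (r // s) * wl + c // s] = 1.0 / (s * s)
    return S


def topk_mask(A, k):
    """Hypothetical sparsification: keep the k largest abundances of each pixel (column)."""
    idx = np.argsort(A, axis=0)[-k:, :]
    mask = np.zeros(A.shape, dtype=bool)
    np.put_along_axis(mask, idx, True, axis=0)
    return mask


def update_abundances(X, E, A, mask):
    """Multiplicative update of A for X ~ E @ A, then zero the masked-out entries."""
    return A * (E.T @ X) / (E.T @ E @ A + EPS) * mask


def update_endmembers(X, E, A):
    """Multiplicative update of E for X ~ E @ A."""
    return E * (X @ A.T) / (E @ A @ A.T + EPS)


def sparse_coupled_fusion(Xh, Ym, srf, S, p, k, outer=10, inner=30, seed=0):
    """Xh: (Bh, Nl) low-res HS image, Ym: (Bm, Nh) high-res MS image,
    srf: (Bm, Bh) spectral response, S: (Nh, Nl) spatial degradation,
    p: number of endmembers, k: abundances kept per pixel."""
    rng = np.random.default_rng(seed)
    E = Xh[:, rng.choice(Xh.shape[1], p, replace=False)] + EPS  # stand-in for VCA
    A_h = np.full((p, Ym.shape[1]), 1.0 / p)                   # high-res abundances
    for it in range(outer):
        k_it = p if it == 0 else k  # first pass unmasked (k = p keeps every entry)
        # Inner loop 1: unmix the HS image, Xh ~ E @ A_l, with A_l derived from A_h via S
        A_l = A_h @ S
        for _ in range(inner):
            A_l = update_abundances(Xh, E, A_l, topk_mask(A_l, k_it))
            E = update_endmembers(Xh, E, A_l)
        # Inner loop 2: unmix the MS image, Ym ~ E_m @ A_h, with E_m derived from E via srf
        E_m = srf @ E
        for _ in range(inner):
            A_h = update_abundances(Ym, E_m, A_h, topk_mask(A_h, k_it))
            E_m = update_endmembers(Ym, E_m, A_h)
    # Fusion stage: HS endmembers combined with high-res abundances, shape (Bh, Nh)
    return E @ A_h
```

Multiplicative updates never revive an entry that has been set to zero. For that reason the sketch switches the mask on only after the first outer iteration, once the abundances indicate which endmembers are present in each pixel.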

2.3. Subpixel Spatial Calibration Phase

3. Experimental Results

3.1. Experimental Setup

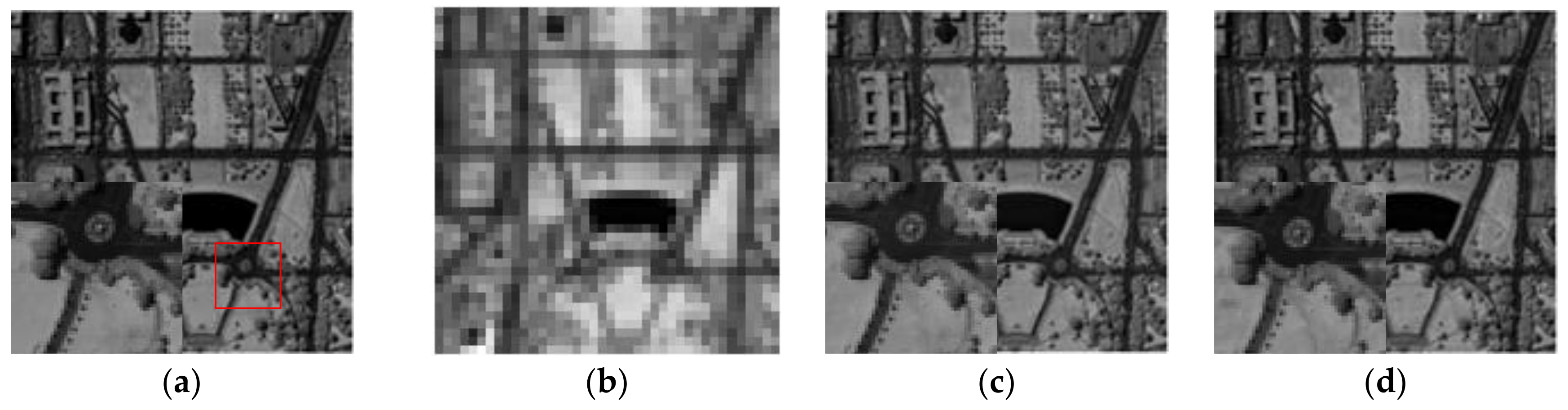

3.2. Integer Pixel Matched Fusion Experiment

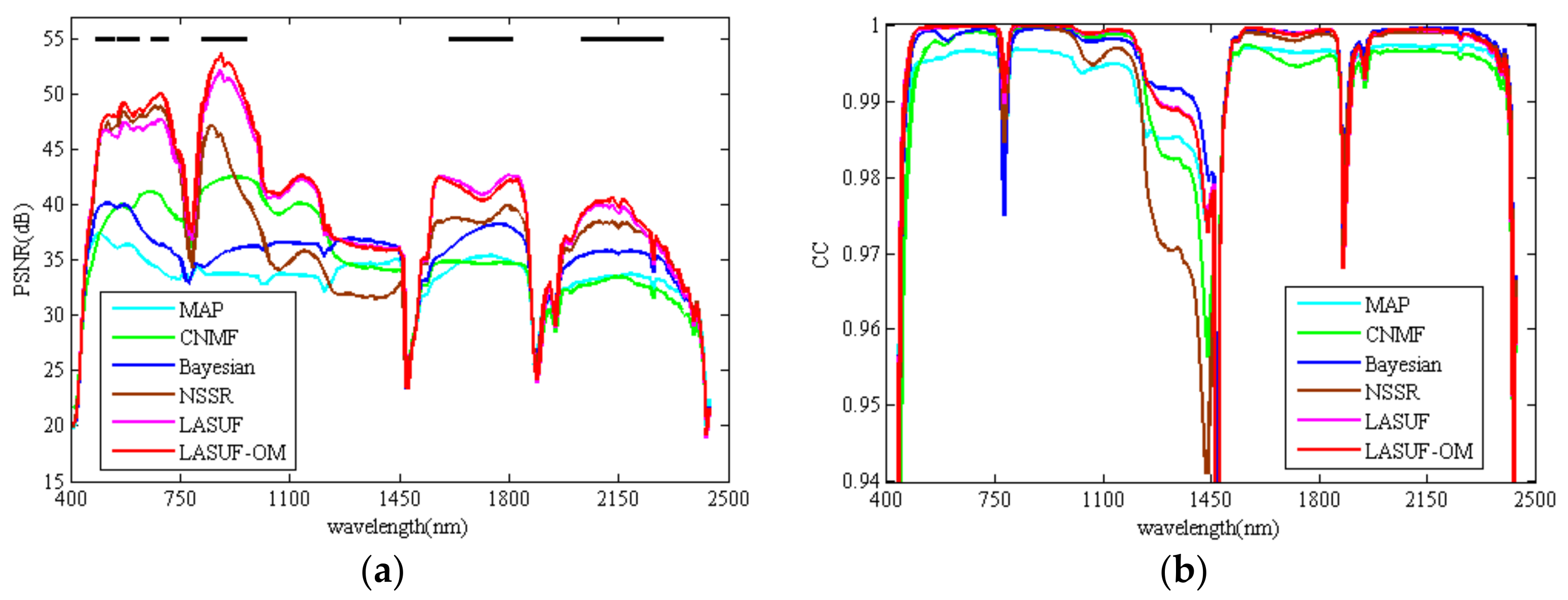

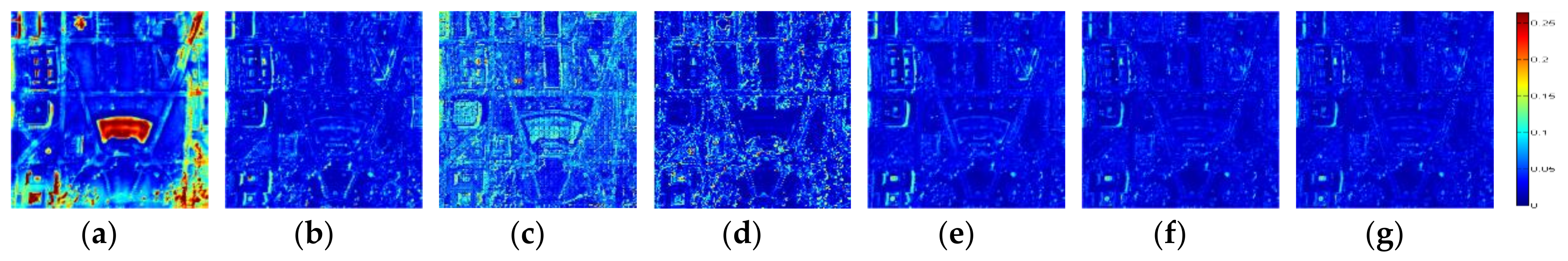

3.2.1. From the Spectral Viewpoint

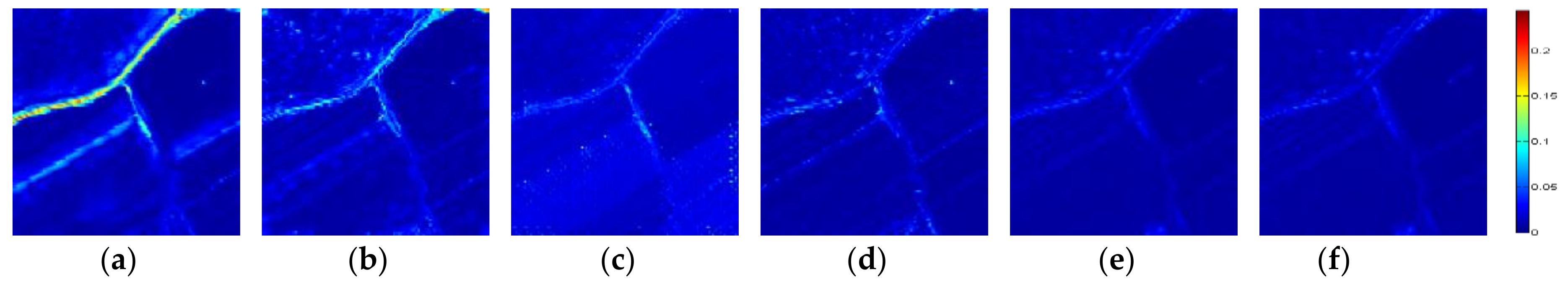

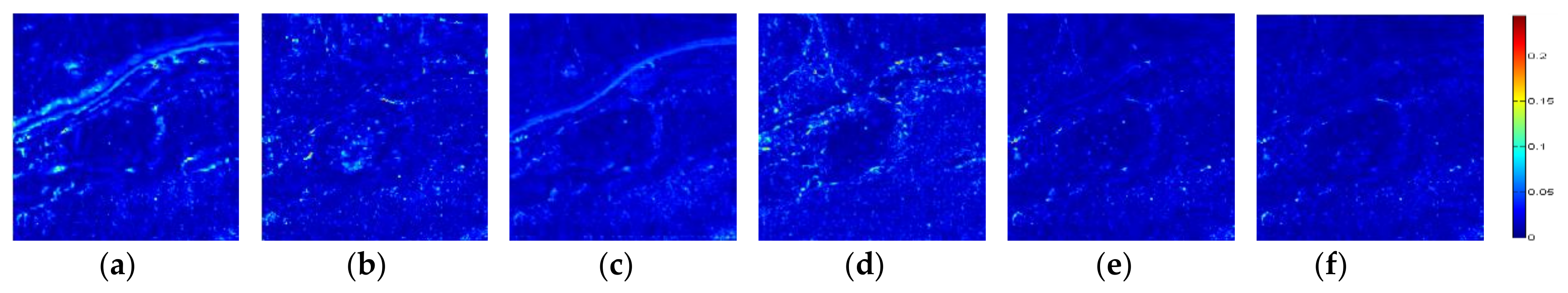

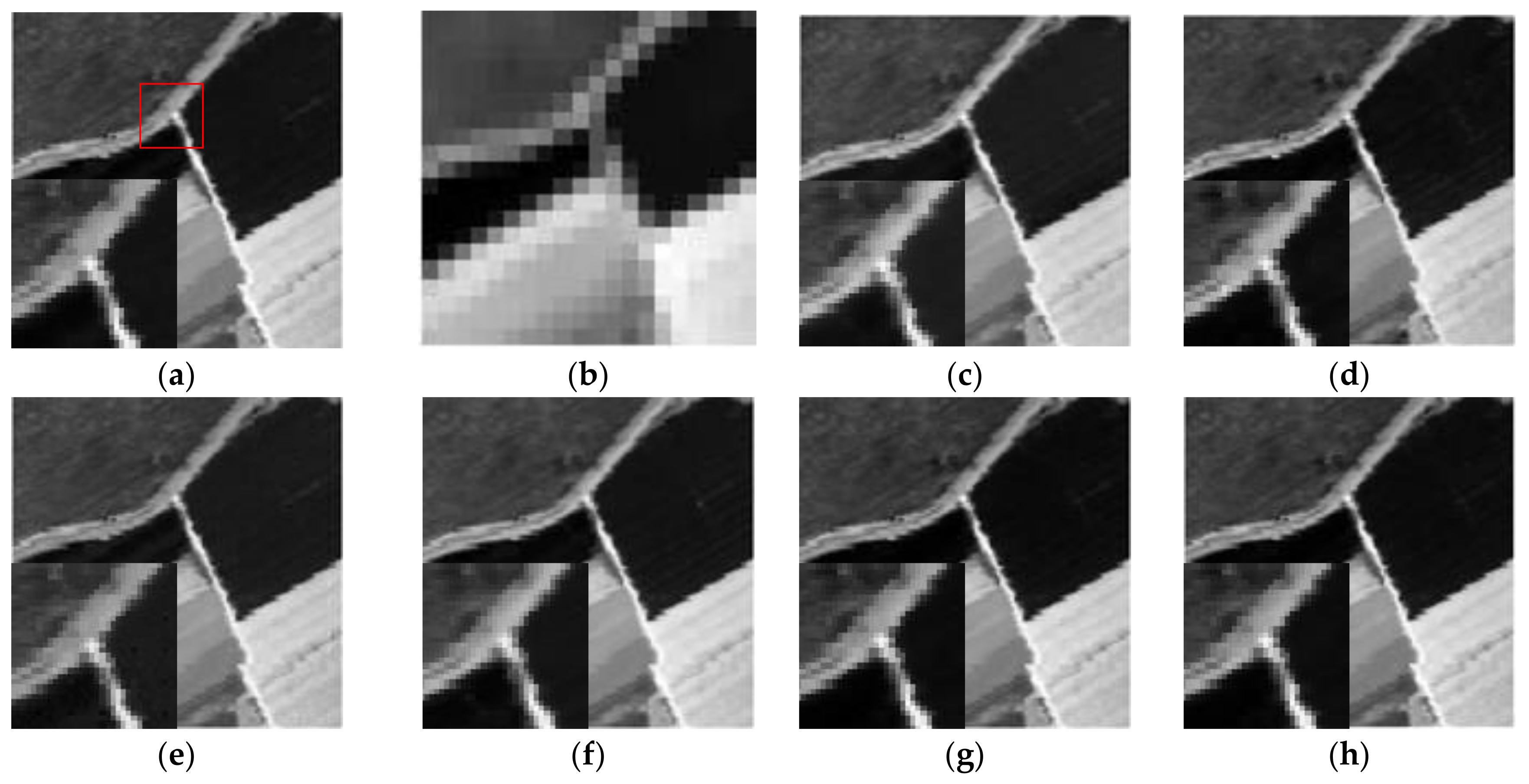

3.2.2. From the Spatial Viewpoint

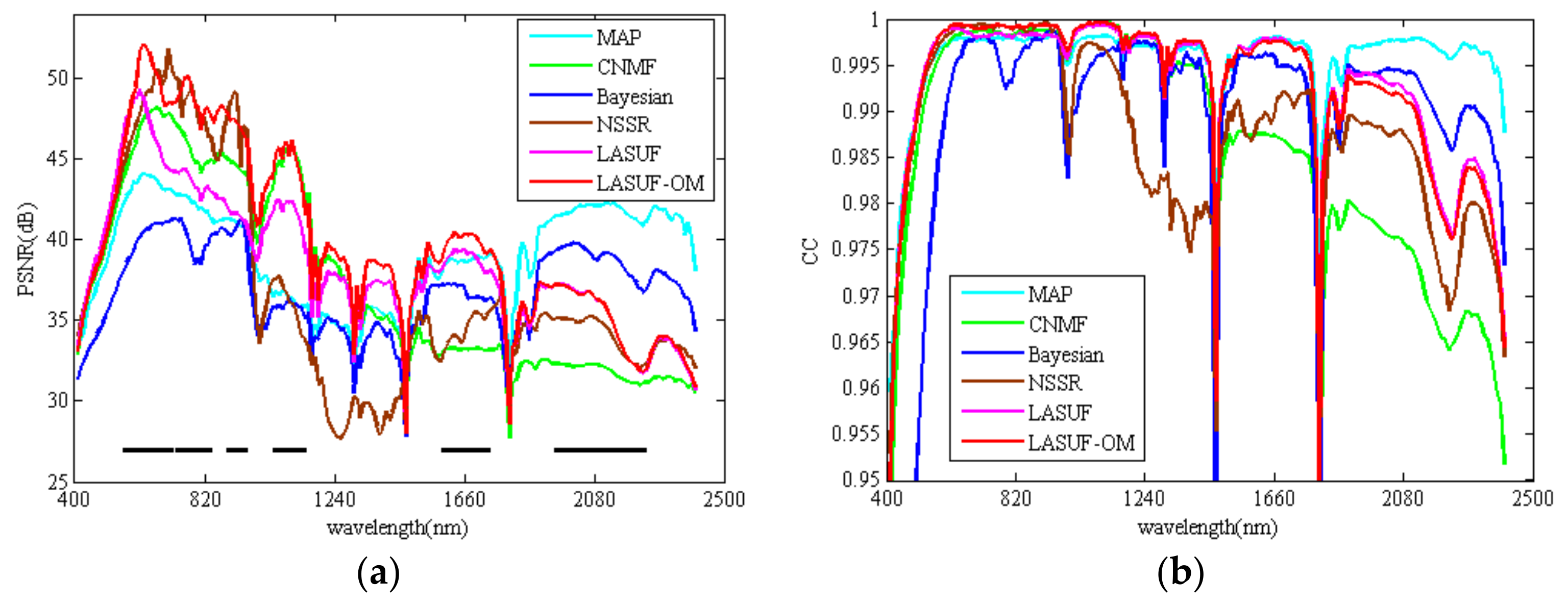

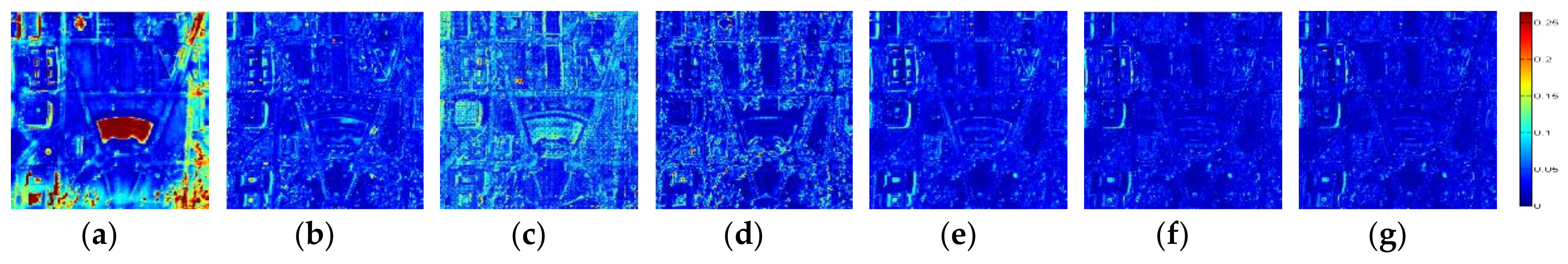

3.3. Subpixel Shift Experiment

4. Discussion

4.1. Endmember Collinearity

4.2. Computational Efficiency

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Dataset | Method | PSNR (dB) | SAM (rad) | CC | ERGAS | Time (s) |
|---|---|---|---|---|---|---|
| Salinas | MAP | 32.9592 | 0.0187 | 0.9877 | 0.9536 | 6.68 |
| | CNMF | 35.2277 | 0.0128 | 0.9869 | 0.9197 | 70.79 |
| | Bayesian | 35.7891 | 0.0141 | 0.9896 | 0.8319 | 6.79 |
| | NSSR | 37.1807 | 0.0113 | 0.9871 | 0.7883 | 50.81 |
| | LASUF | 39.4132 | 0.0095 | 0.9899 | 0.7737 | 18.08 |
| | LASUF-OM | 40.0492 | 0.0091 | 0.9901 | 0.7639 | 39.72 |

| Dataset | Method | PSNR (dB) | SAM (rad) | CC | ERGAS | Time (s) |
|---|---|---|---|---|---|---|
| Washington D.C. | MAP | 38.7546 | 0.0354 | 0.9889 | 1.7922 | 6.85 |
| | CNMF | 37.1528 | 0.0268 | 0.9858 | 1.8408 | 78.38 |
| | Bayesian | 37.8320 | 0.0316 | 0.9875 | 1.414 | 7.73 |
| | NSSR | 38.7432 | 0.0274 | 0.9894 | 1.7245 | 61.77 |
| | LASUF | 38.3789 | 0.0249 | 0.9923 | 1.3383 | 16.77 |
| | LASUF-OM | 40.8441 | 0.0226 | 0.9924 | 1.3041 | 38.06 |

| Dataset | Method | PSNR (dB) | SAM (rad) | CC | ERGAS | Time (s) |
|---|---|---|---|---|---|---|
| Montana | MAP | 41.8429 | 0.0204 | 0.9935 | 1.577 | 14.73 |
| | CNMF | 43.6353 | 0.0196 | 0.9922 | 1.7007 | 230.39 |
| | Bayesian | 43.8688 | 0.0193 | 0.9934 | 1.5844 | 9.97 |
| | NSSR | 44.1863 | 0.0202 | 0.9917 | 1.7156 | 121.25 |
| | LASUF | 49.0741 | 0.0145 | 0.9939 | 1.4636 | 72.86 |
| | LASUF-OM | 49.5409 | 0.0136 | 0.9942 | 1.4323 | 109.27 |

| Dataset | Method | PSNR (dB) | SAM (rad) | CC | ERGAS |
|---|---|---|---|---|---|
| Washington D.C. | MAP | 35.4668 | 0.0664 | 0.9887 | 1.9176 |
| | CNMF | 35.8417 | 0.0321 | 0.9838 | 1.8451 |
| | Bayesian | 34.8561 | 0.0598 | 0.9780 | 1.7198 |
| | NSSR | 35.7487 | 0.0356 | 0.9865 | 1.7901 |
| | LASUF | 37.2507 | 0.0284 | 0.9911 | 1.4886 |
| | LASUF-OM, k = 3 | 38.7873 | 0.0251 | 0.9913 | 1.4139 |
| | LASUF-OM, k = 6 | 39.1114 | 0.0244 | 0.9916 | 1.3879 |

| Dataset | Method | PSNR (dB) | SAM (rad) | CC | ERGAS |
|---|---|---|---|---|---|
| Washington D.C. | MAP | 35.1011 | 0.0694 | 0.9880 | 2.0194 |
| | CNMF | 35.3238 | 0.0371 | 0.9737 | 1.9078 |
| | Bayesian | 34.7970 | 0.0607 | 0.9775 | 1.7349 |
| | NSSR | 35.3824 | 0.0396 | 0.9845 | 1.9528 |
| | LASUF | 36.4405 | 0.0303 | 0.9904 | 1.5454 |
| | LASUF-OM, k = 3 | 38.2911 | 0.0255 | 0.9912 | 1.4283 |
| | LASUF-OM, k = 6 | 38.5723 | 0.0247 | 0.9914 | 1.4028 |
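The tables in this section score each method with PSNR, SAM, CC, and ERGAS. One common way to compute these four indices is sketched below, with the following assumptions; the paper's exact conventions may differ:

- images are stored as (bands, pixels) arrays;
- the PSNR peak is the maximum of each reference band;
- PSNR and CC are averaged over bands;
- `ratio` in ERGAS is the high-to-low pixel-size ratio, for example 1/4 for a fourfold resolution gap.

```python
import numpy as np


def fusion_quality(ref, fused, ratio):
    """PSNR (dB), SAM (rad), CC and ERGAS between a reference and a fused image.
    ref, fused: (bands, pixels) arrays; ratio: high/low resolution pixel-size ratio."""
    mse = np.mean((ref - fused) ** 2, axis=1)                      # per-band MSE
    # assumed convention: peak value = maximum of each reference band, PSNR averaged over bands
    psnr = np.mean(10.0 * np.log10(ref.max(axis=1) ** 2 / mse))
    cos = np.sum(ref * fused, axis=0) / (
        np.linalg.norm(ref, axis=0) * np.linalg.norm(fused, axis=0))
    sam = np.mean(np.arccos(np.clip(cos, -1.0, 1.0)))              # mean spectral angle per pixel
    cc = np.mean([np.corrcoef(r, f)[0, 1] for r, f in zip(ref, fused)])  # mean band-wise Pearson CC
    # ERGAS = 100 * (h/l) * sqrt(mean over bands of (RMSE_b / mean_b)^2)
    ergas = 100.0 * ratio * np.sqrt(np.mean(mse / np.mean(ref, axis=1) ** 2))
    return {"PSNR": psnr, "SAM": sam, "CC": cc, "ERGAS": ergas}
```

SAM depends only on spectral shape, so a fused image that is a uniformly scaled copy of the reference scores a SAM of zero while still being penalized by PSNR and ERGAS. This is why the indices are usually reported together.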

| Dataset | CNMF Mean (Er) | CNMF Min (Er) | LASUF Mean (Er) | LASUF Min (Er) |
|---|---|---|---|---|
| Salinas | 0.0340 | 0.0131 | 0.1257 | 0.0235 |
| Washington D.C. | 0.1607 | 0.0585 | 0.4397 | 0.1685 |
| Montana | 0.0534 | 0.0220 | 0.1800 | 0.0350 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Teng, Y.; Zhang, Y.; Ti, C.; Zhang, J. Hyperspectral Image Resolution Enhancement Approach Based on Local Adaptive Sparse Unmixing and Subpixel Calibration. Remote Sens. 2018, 10, 592. https://doi.org/10.3390/rs10040592
