Vegetation Phenology Driving Error Variation in Digital Aerial Photogrammetrically Derived Terrain Models

Abstract

1. Introduction

2. Materials and Methods

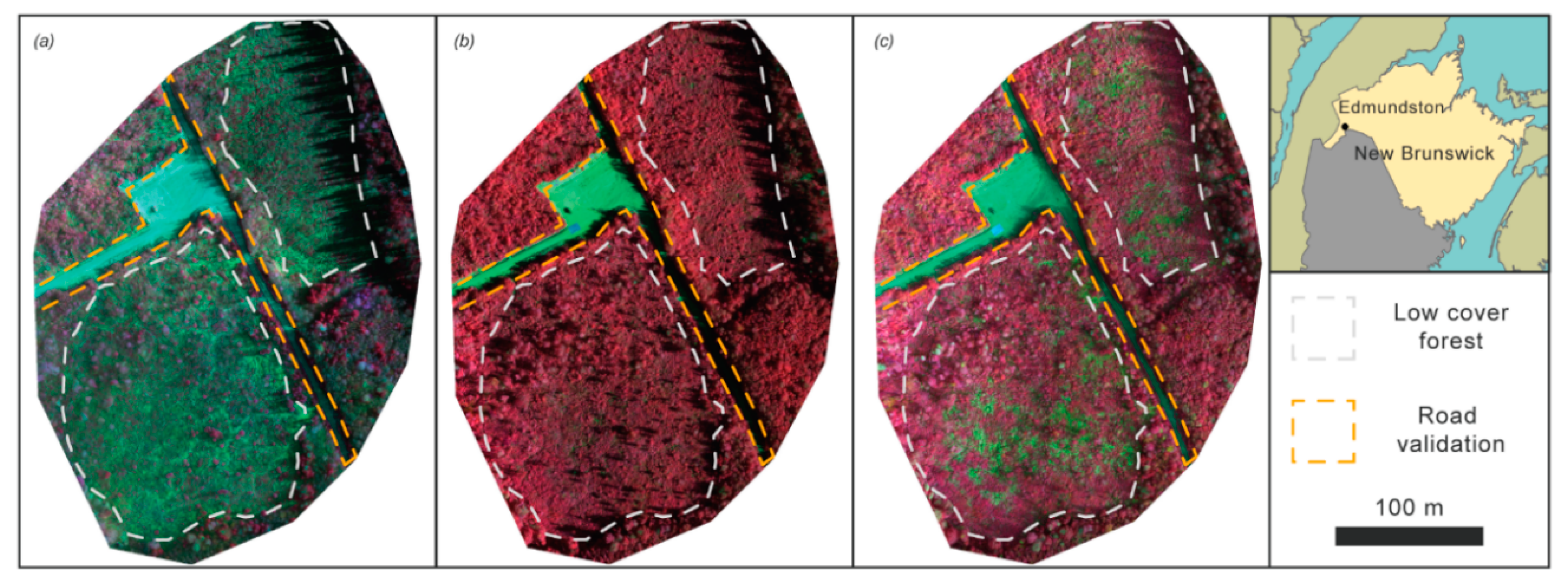

2.1. Study Area

2.2. Data

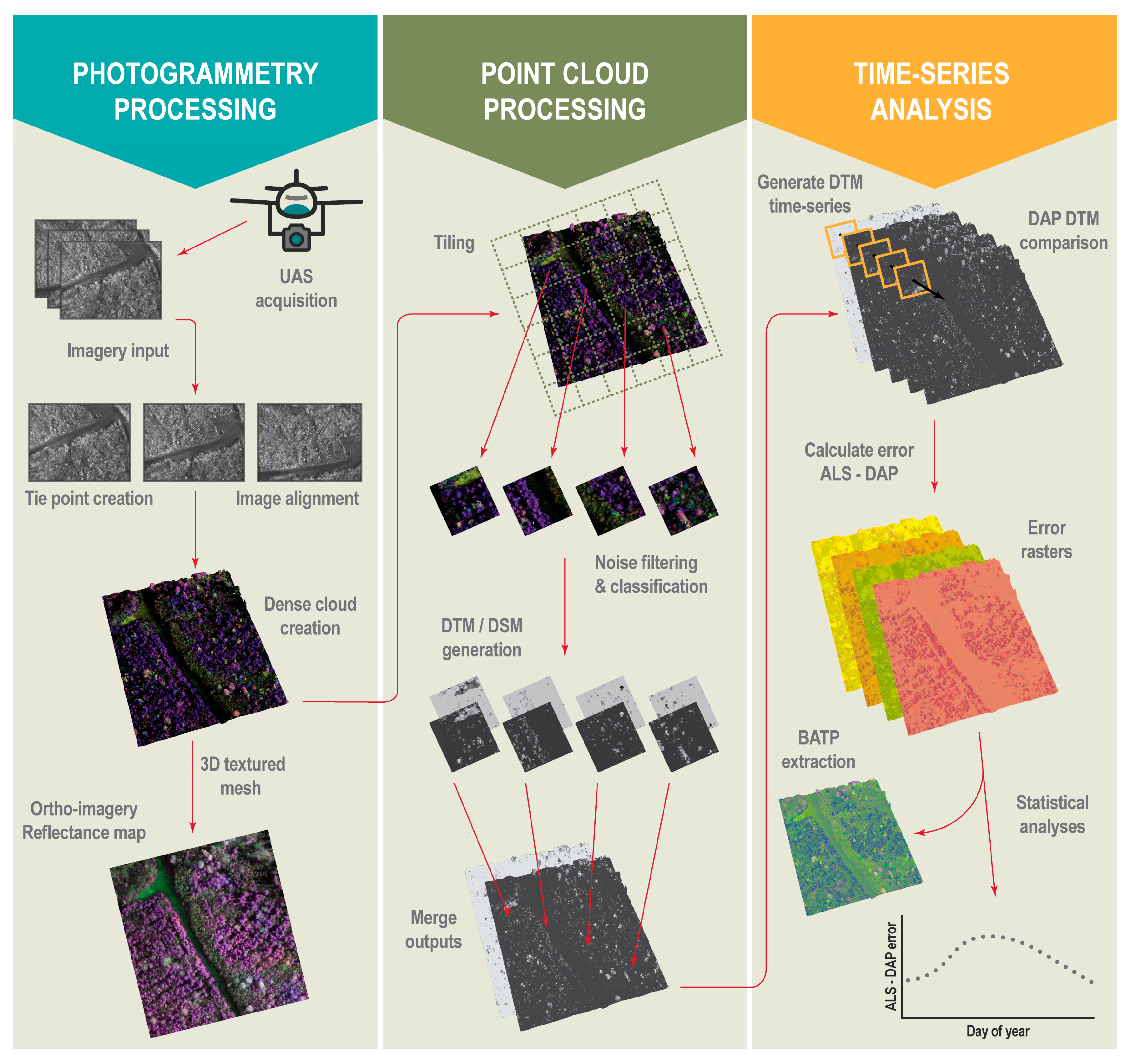

2.3. Photogrammetric Processing

2.4. Point Cloud Processing

2.5. Time Series Analysis

2.5.1. Generalized Additive Mixed Models

2.5.2. Best Available Terrain Pixel Compositing

3. Results

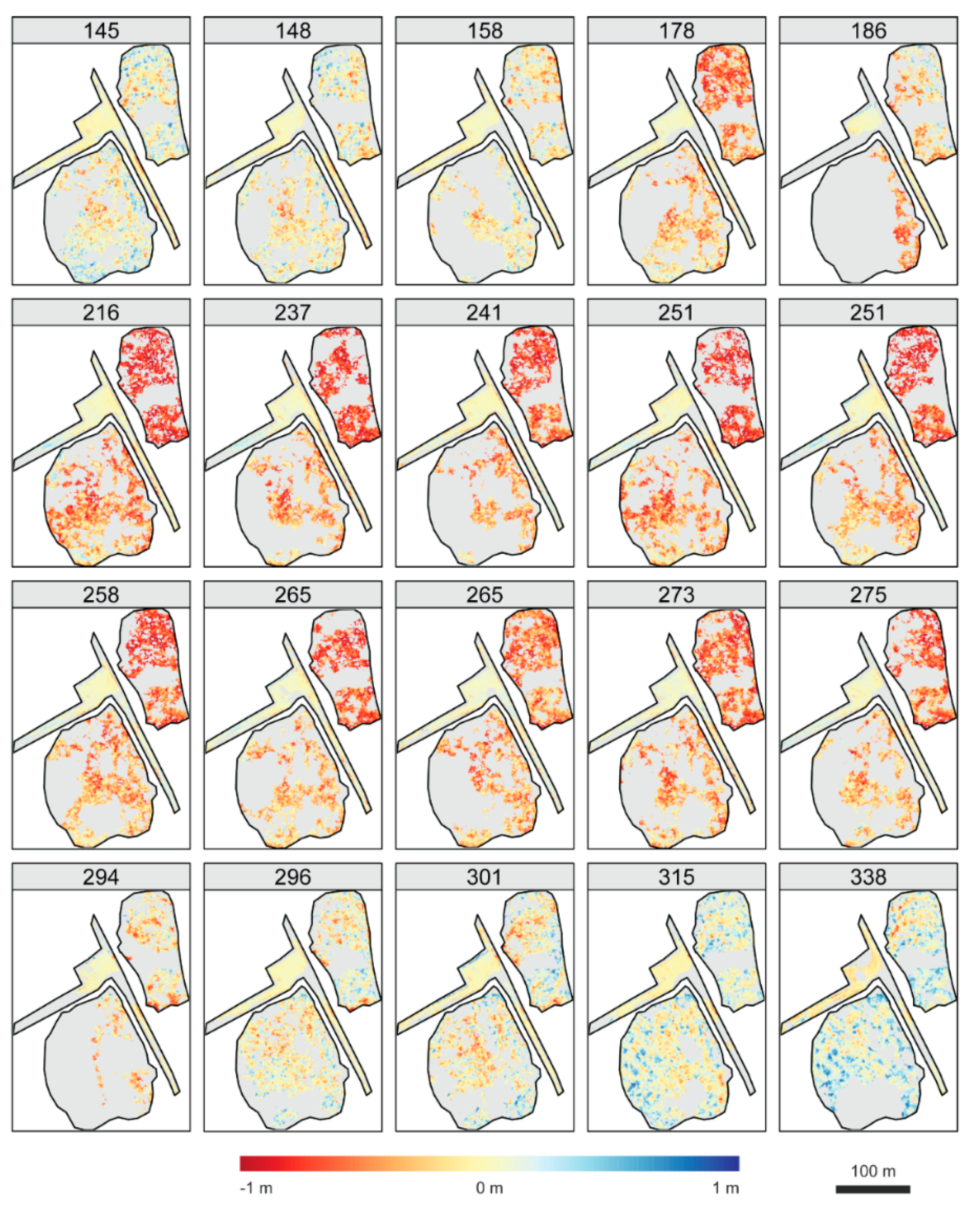

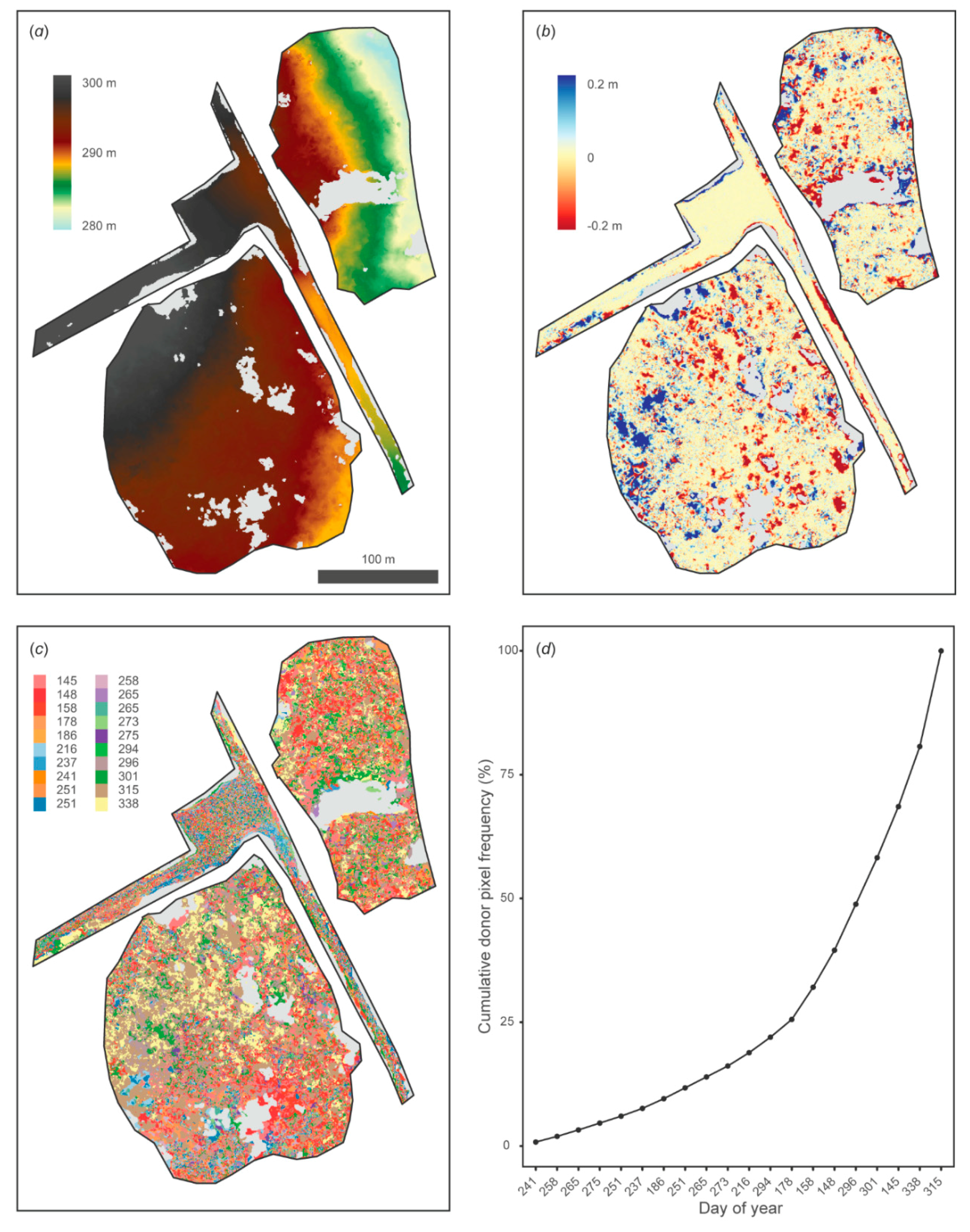

3.1. DTM Summary and Validation

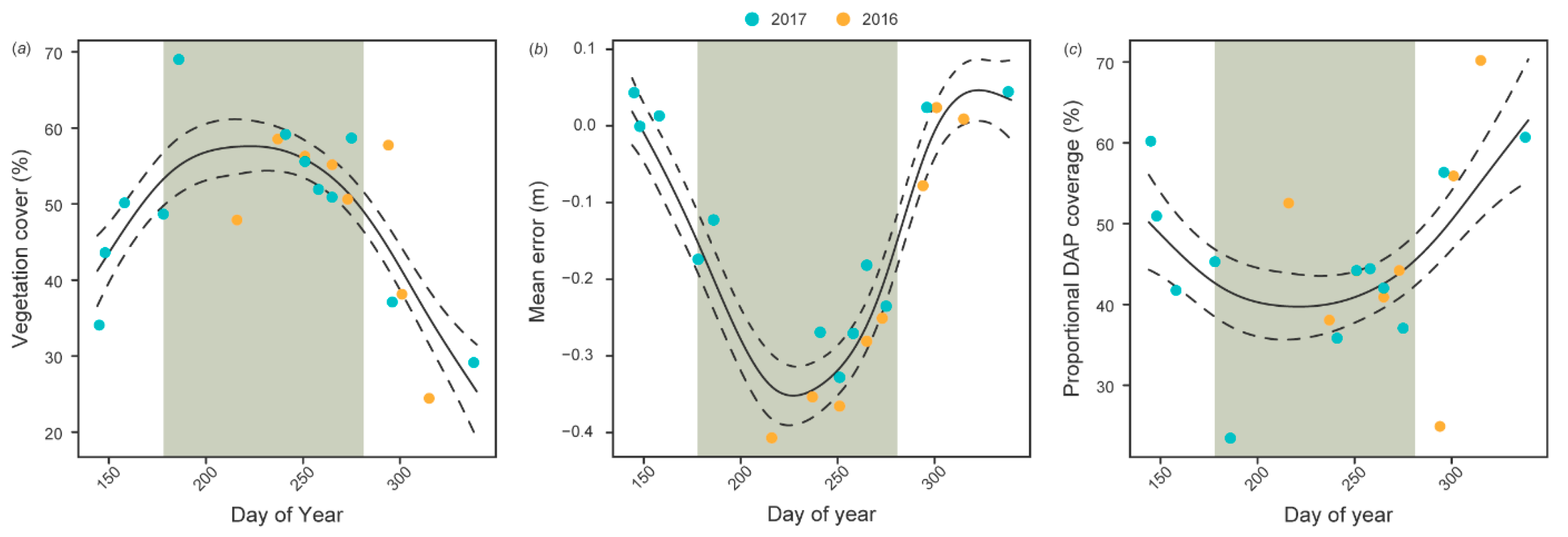

3.2. Generalized Additive Mixed Models

3.3. Best Available Terrain Pixel Compositing

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Acquisition Date | Day of Year | Mean Flight Altitude (m) | Mean GSD (cm) | Mean Sun Angle (°) | Mean DAP Density (pts/m²) |
|---|---|---|---|---|---|
| 2017-05-25 | 145 | 116.2 | 13.5 | 71.2 | 25.4 |
| 2017-05-28 | 148 | 102.2 | 12.1 | 68.3 | 31.0 |
| 2017-06-07 | 158 | 116.1 | 13.2 | 45.7 | 23.0 |
| 2017-06-27 | 178 | 114.7 | 13.4 | 54.0 | 26.6 |
| 2017-07-05 | 186 | 113.4 | 13.3 | 58.4 | 39.8 |
| 2016-08-03 | 216 | 102.5 | 12.2 | 68.7 | 23.8 |
| 2016-08-24 | 237 | 101.6 | 12.4 | 72.4 | 24.6 |
| 2017-08-29 | 241 | 99.9 | 12.1 | 67.0 | 33.4 |
| 2016-09-07 | 251 | 104.0 | 12.6 | 71.8 | 22.9 |
| 2017-09-08 | 251 | 102.1 | 12.2 | 64.9 | 33.3 |
| 2017-09-15 | 258 | 101.1 | 12.0 | 73.8 | 35.0 |
| 2016-09-21 | 265 | 110.0 | 12.9 | 68.9 | 25.8 |
| 2017-09-22 | 265 | 102.7 | 12.2 | 67.4 | 32.5 |
| 2016-09-29 | 273 | 116.5 | 13.9 | 75.8 | 20.8 |
| 2017-10-02 | 275 | 101.0 | 11.9 | 74.4 | 29.0 |
| 2016-10-20 | 294 | 102.6 | 12.6 | 82.7 | 22.7 |
| 2017-10-23 | 296 | 102.6 | 12.3 | 58.3 | 30.6 |
| 2016-10-27 | 301 | 105.8 | 13.1 | 72.9 | 26.0 |
| 2016-11-10 | 315 | 102.6 | 12.6 | 73.3 | 27.4 |
| 2017-12-04 | 338 | 100.3 | 12.4 | 74.5 | 27.4 |
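As a quick consistency check on the acquisition table above: for a fixed camera, ground sampling distance (GSD) is expected to scale roughly linearly with flying height, so the ratio of GSD to altitude should be nearly constant across flights. The sketch below is illustrative only. The values are copied from the table, the names `ACQUISITIONS` and `gsd_per_metre` are hypothetical, and no camera parameters are assumed because none are given here.

```python
# (day_of_year, mean_flight_altitude_m, mean_gsd_cm), copied from the acquisition table
ACQUISITIONS = [
    (145, 116.2, 13.5), (148, 102.2, 12.1), (158, 116.1, 13.2), (178, 114.7, 13.4),
    (186, 113.4, 13.3), (216, 102.5, 12.2), (237, 101.6, 12.4), (241, 99.9, 12.1),
    (251, 104.0, 12.6), (251, 102.1, 12.2), (258, 101.1, 12.0), (265, 110.0, 12.9),
    (265, 102.7, 12.2), (273, 116.5, 13.9), (275, 101.0, 11.9), (294, 102.6, 12.6),
    (296, 102.6, 12.3), (301, 105.8, 13.1), (315, 102.6, 12.6), (338, 100.3, 12.4),
]


def gsd_per_metre(rows):
    """Return GSD (cm) per metre of flying height for each acquisition."""
    return [gsd_cm / altitude_m for _, altitude_m, gsd_cm in rows]


ratios = gsd_per_metre(ACQUISITIONS)
mean_ratio = sum(ratios) / len(ratios)

# A narrow min-max spread indicates that altitude explains most of the GSD variation.
print(f"GSD/altitude: min={min(ratios):.4f}, mean={mean_ratio:.4f}, max={max(ratios):.4f} cm per m")
```

A large departure of any single flight from the mean ratio would suggest that something other than flying height (for example, terrain relief under the flight lines) affected the reported GSD.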

| Date | Day of Year | Study Area: Mean Error (m) | Study Area: Standard Deviation of Error (m) | Study Area: Range of Error (m) | Study Area: Mean Vegetation Cover (%) | Study Area: DAP DTM Coverage (%) | Road Validation: Mean Validation Error (m) | Road Validation: Standard Deviation of Validation Error (m) |
|---|---|---|---|---|---|---|---|---|
| 2017-05-25 | 145 | 0.04 | 0.15 | 1.41 | 33.50 | 60.20 | −0.02 | 0.06 |
| 2017-05-28 | 148 | 0.00 | 0.16 | 1.58 | 43.22 | 50.94 | 0.00 | 0.06 |
| 2017-06-07 | 158 | 0.01 | 0.15 | 2.28 | 48.26 | 41.75 | 0.01 | 0.06 |
| 2017-06-27 | 178 | −0.17 | 0.23 | 1.28 | 47.85 | 45.29 | 0.03 | 0.07 |
| 2017-07-05 | 186 | −0.12 | 0.22 | 2.55 | 66.67 | 23.47 | 0.02 | 0.11 |
| 2016-08-03 | 216 | −0.41 | 0.38 | 1.96 | 45.29 | 52.54 | −0.01 | 0.08 |
| 2016-08-24 | 237 | −0.35 | 0.36 | 1.55 | 54.73 | 38.07 | 0.00 | 0.08 |
| 2017-08-29 | 241 | −0.27 | 0.28 | 1.37 | 55.06 | 35.84 | 0.00 | 0.07 |
| 2016-09-07 | 251 | −0.37 | 0.36 | 1.55 | 53.61 | 44.16 | −0.01 | 0.08 |
| 2017-09-08 | 251 | −0.33 | 0.32 | 1.59 | 52.06 | 44.19 | 0.00 | 0.08 |
| 2017-09-15 | 258 | −0.27 | 0.29 | 1.39 | 49.29 | 44.43 | 0.01 | 0.07 |
| 2016-09-21 | 265 | −0.28 | 0.31 | 1.54 | 52.03 | 40.91 | 0.01 | 0.08 |
| 2017-09-22 | 265 | −0.18 | 0.23 | 1.37 | 48.59 | 42.03 | 0.04 | 0.07 |
| 2016-09-29 | 273 | −0.25 | 0.29 | 1.87 | 48.33 | 44.20 | 0.03 | 0.09 |
| 2017-10-02 | 275 | −0.24 | 0.28 | 1.63 | 55.85 | 37.07 | 0.00 | 0.09 |
| 2016-10-20 | 294 | −0.08 | 0.21 | 1.65 | 55.48 | 24.92 | 0.01 | 0.08 |
| 2017-10-23 | 296 | 0.02 | 0.16 | 1.47 | 37.62 | 56.35 | −0.01 | 0.05 |
| 2016-10-27 | 301 | 0.02 | 0.18 | 1.50 | 37.08 | 55.91 | −0.02 | 0.07 |
| 2016-11-10 | 315 | 0.01 | 0.13 | 1.28 | 28.56 | 70.19 | −0.06 | 0.05 |
| 2017-12-04 | 338 | 0.04 | 0.19 | 2.01 | 32.86 | 60.68 | −0.11 | 0.10 |
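One way to explore the error summary table above is to check how the study-area mean error co-varies with mean vegetation cover across acquisitions. The following is a minimal sketch, not the analysis used in the paper (which, per the section headings, relies on generalized additive mixed models). It computes a plain Pearson correlation from values copied out of the table, and the names `ROWS` and `pearson` are hypothetical.

```python
from math import sqrt

# (day_of_year, study_area_mean_error_m, mean_vegetation_cover_pct), copied from the table
ROWS = [
    (145, 0.04, 33.50), (148, 0.00, 43.22), (158, 0.01, 48.26), (178, -0.17, 47.85),
    (186, -0.12, 66.67), (216, -0.41, 45.29), (237, -0.35, 54.73), (241, -0.27, 55.06),
    (251, -0.37, 53.61), (251, -0.33, 52.06), (258, -0.27, 49.29), (265, -0.28, 52.03),
    (265, -0.18, 48.59), (273, -0.25, 48.33), (275, -0.24, 55.85), (294, -0.08, 55.48),
    (296, 0.02, 37.62), (301, 0.02, 37.08), (315, 0.01, 28.56), (338, 0.04, 32.86),
]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


cover = [row[2] for row in ROWS]
mean_error = [row[1] for row in ROWS]
r = pearson(cover, mean_error)

# A negative r means acquisitions with more vegetation cover tend to have more negative mean error.
print(f"Pearson r (vegetation cover vs. mean error): {r:.2f}")
```

A linear correlation over 20 acquisition-level means ignores the seasonal shape of the signal and the repeated-measures structure of the data, so it serves only as a first look before fitting a smoother over day of year.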

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Goodbody, T.R.H.; Coops, N.C.; Hermosilla, T.; Tompalski, P.; Pelletier, G. Vegetation Phenology Driving Error Variation in Digital Aerial Photogrammetrically Derived Terrain Models. Remote Sens. 2018, 10, 1554. https://doi.org/10.3390/rs10101554
