Enhancing Sustainability of E-Learning with Adoption of M-Learning in Business Studies

Abstract

1. Introduction

2. Theoretical Background

2.1. E-Learning Platforms and Sustainability

2.1.1. E-Learning Platforms

2.1.2. Sustainability Issues and E-Learning Platforms

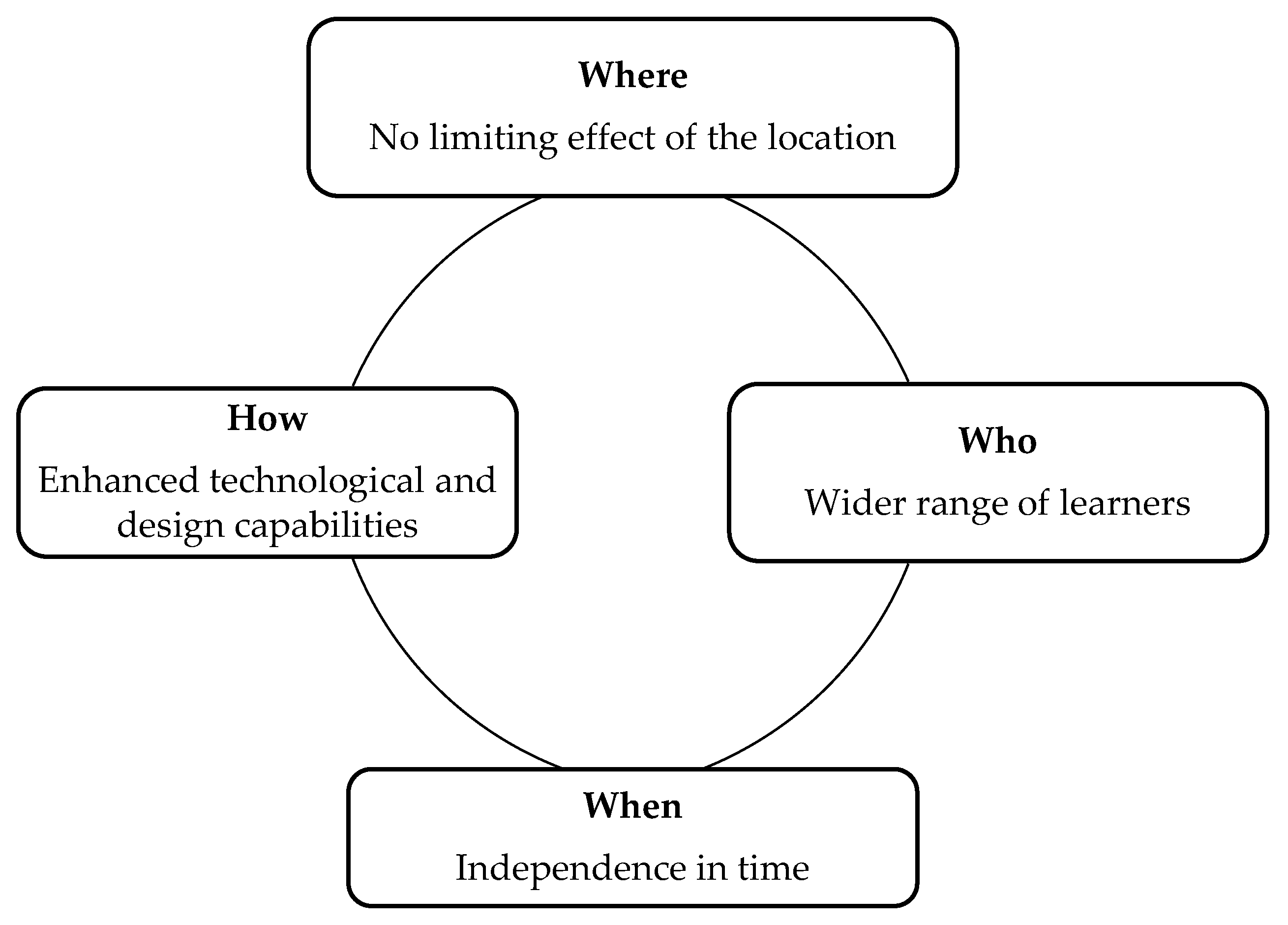

2.2. Sustainable Mobility in E-Learning Platforms

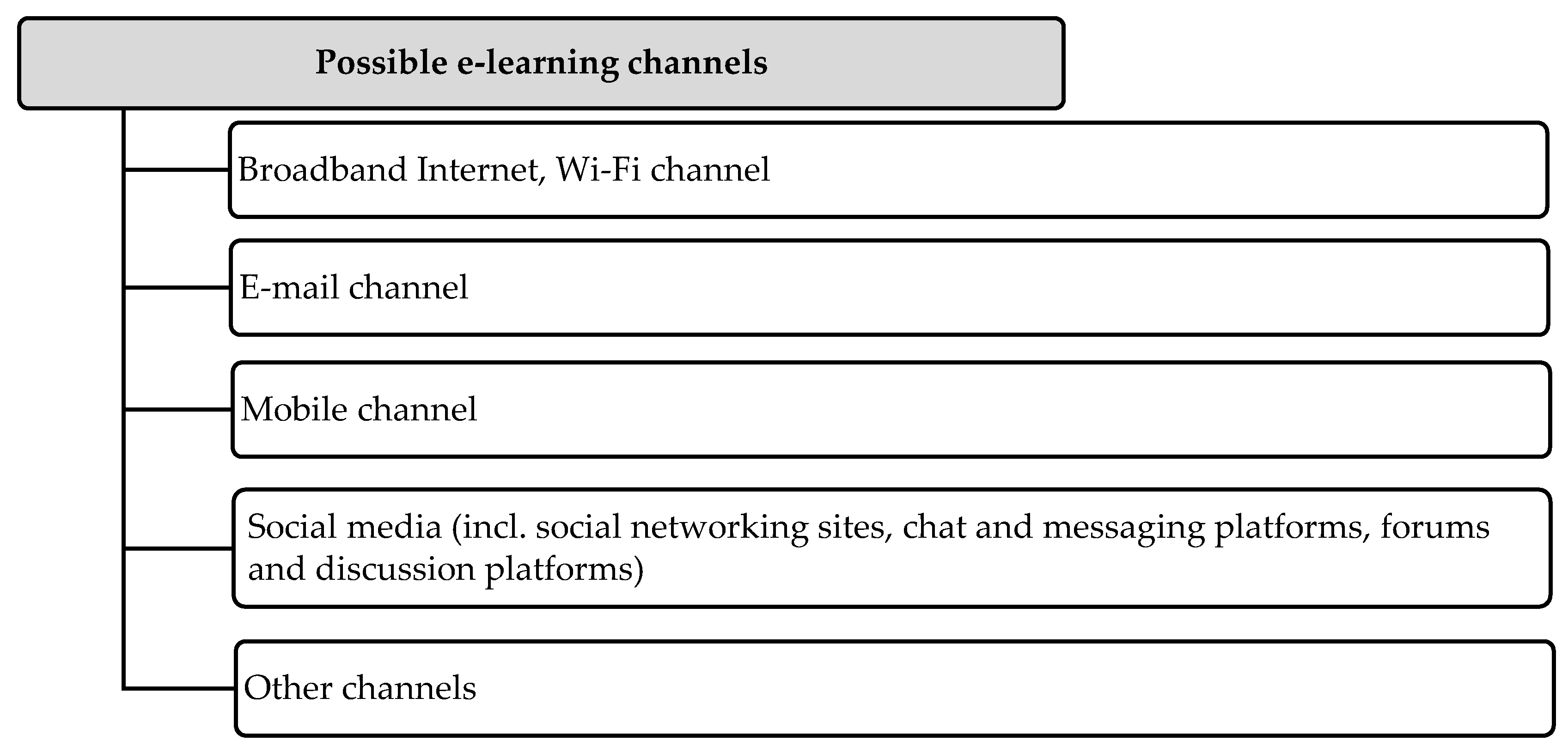

2.2.1. Mobile Technologies in Channels

2.2.2. Mobile Features of E-Learning Platforms

2.3. Microsoft Teams

2.3.1. Definition

2.3.2. Features

- Teams and channels. A team consists of channels, each of which serves as a space for communication among its members.

- Conversations within channels and teams. All team members can view and add posts in the General channel, where these posts form a conversation. Members can also use @mentions to draw other members into a discussion.

- Chat. Chat is used mainly for collaboration and conversation, which can take place between individuals, within groups, or across teams.

- SharePoint file storage. Each team in Teams has an associated SharePoint Online site with a default document library. Files shared in the team’s channel conversations are saved to this library automatically, and permissions can be customised to protect confidential data.

- Online video calls and screen sharing. Users can set up video calls with people inside or outside the organisation. Reliable video call performance is the basis for effective collaboration, and screen or desktop sharing lets multiple users work together in real time.

- Online meetings. Online meetings help improve communication, support larger meetings, and can even be used for training and teaching, with up to 10,000 participants from inside or outside the organisation. Additional functionality includes scheduling assistance, a note-taking application, file uploads, and chat with participants during the meeting.

- Audio conference. Audio conferencing lets participants join an online meeting by phone. With a dial-in number, even users on the move can take part without an Internet connection. This functionality is available only with a specific licence type.

- Microsoft 365 Business Voice. Microsoft 365 Business Voice can fully replace an organisation’s telephone system. This functionality also requires a specific licence type.

- Seamless communication. MS Teams supports audio and video calls, live chat, and real-time conversations, enabling effective and efficient communication among team members regardless of their location [77].

- Document collaboration. Users can share documents and work on them together in real time, and integration with Microsoft Office applications such as Word and PowerPoint further enhances collaboration [78].

- Meeting flexibility. MS Teams supports various meeting types, from spontaneous gatherings to scheduled appointments, and accommodates both formal and informal discussions, allowing collaboration styles to match a team’s needs [79].

- Enhanced productivity. The platform’s integrated features are designed to streamline workflows, helping teams work efficiently while maintaining the organisational structure that successful remote collaboration requires [77].

- Accessibility. MS Teams lets individuals connect and collaborate from any location, which is especially useful for teams spread across regions or countries, and helps overcome traditional barriers to communication and teamwork [79].

- Educational applications. In academic settings, MS Teams supports structured learning by organising materials and assignments, which contributes to the effectiveness and quality of online education now that digital learning is increasingly prevalent [78].

2.3.3. Usage

2.3.4. Copilot in Microsoft Teams

3. Research Model and Research Methodology

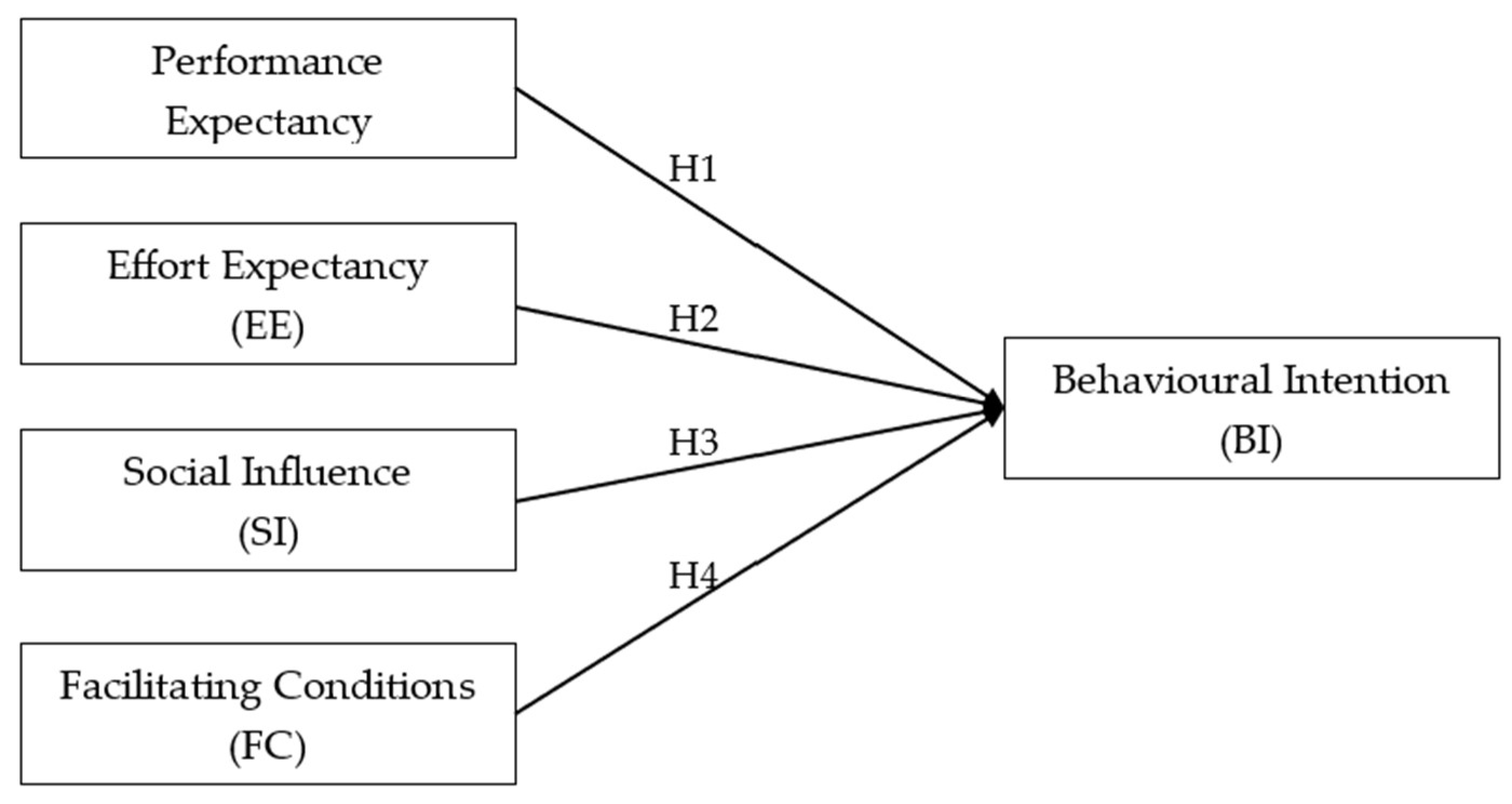

3.1. Research Model

- Performance Expectancy (PE):

- PE1: The Microsoft Teams mobile application enables me to complete tasks quickly.

- PE2: The Microsoft Teams mobile application restricts my task execution.

- PE3: The Microsoft Teams mobile application enhances my productivity.

- PE4: The Microsoft Teams mobile application reduces my efficiency in the classroom (group).

- PE5: Using the Microsoft Teams mobile application facilitates the completion of my academic obligations (assignments, duties, projects, seminar papers, etc.).

- PE6: Using the Microsoft Teams mobile application decreases the quality of my academic work (assignments, duties, projects, seminar papers, etc.).

- PE7: Using the Microsoft Teams mobile application contributes to my classmates and colleagues perceiving me as competent.

- PE8: Using the Teams mobile app increases teachers’/professors’ respect for me.

- PE9: Using the Teams mobile app reduces my chances of being promoted to a higher year.

- PE10: The Teams mobile app is helpful for teaching and learning.

- Effort Expectancy (EE):

- EE1: Learning to use Teams is easy for me.

- EE2: The Microsoft Teams application is straightforward for completing my academic obligations.

- EE3: My interaction with the Microsoft Teams application is clear and understandable.

- EE4: The Microsoft Teams mobile application is flexible for interaction.

- EE5: I can quickly learn to use the Microsoft Teams application.

- EE6: The Microsoft Teams mobile application is easy to use.

- EE7: Using the Microsoft Teams application takes too much time when completing my regular academic tasks.

- EE8: Working with the Microsoft Teams application is complex and challenging.

- Social Influence (SI):

- SI1: People who influence me believe I should use the Microsoft Teams application.

- SI2: My parents and friends think I should use the Microsoft Teams application.

- SI3: Teachers/professors at my university provide support in using the Microsoft Teams application.

- SI4: Teachers/professors at my university strongly support using the Microsoft Teams application in their courses.

- SI5: Overall, the university supports using the Microsoft Teams application.

- SI6: Installing the Microsoft Teams application is considered a status symbol at my university.

- Facilitating Conditions (FC):

- FC1: I have the necessary resources (e.g., phone, computer, internet) to use the Microsoft Teams application.

- FC2: I have the knowledge to use the Microsoft Teams application.

- FC3: The Microsoft Teams application is incompatible with other mobile applications and my operating system.

- FC4: A helpdesk is available at the university to assist with issues related to the Microsoft Teams application.

- FC5: Using the Microsoft Teams application aligns with my student lifestyle.

- Behavioural Intention (BI):

- BI1: I complete my academic obligations through the Microsoft Teams application whenever possible.

- BI2: I perceive using the Microsoft Teams application as involuntary.

- BI3: I intend to use the Microsoft Teams application.

- BI4: I would like to use the Microsoft Teams application as much as possible for various tasks.

- BI5: I would like to use the Microsoft Teams application as much as possible to meet my academic obligations.
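Several of the items above are worded negatively relative to their construct (PE2, PE4, PE6, PE9, EE7, EE8 and FC3). This section does not say how responses were scaled or scored, so the sketch below assumes a five-point Likert scale and assumes that negative items are reverse-scored before the PLS-SEM analysis. The `NEGATIVE_ITEMS` set and both helper functions are hypothetical illustrations, not the authors' procedure.

```python
from typing import Dict

# Assumed response scale (not stated in the source): 1 = lowest, 5 = highest.
SCALE_MIN = 1
SCALE_MAX = 5

# Hypothetical set of items whose wording is negative relative to their construct.
NEGATIVE_ITEMS = {"PE2", "PE4", "PE6", "PE9", "EE7", "EE8", "FC3"}


def reverse_score(value: int, lo: int = SCALE_MIN, hi: int = SCALE_MAX) -> int:
    """Mirror a response on a symmetric Likert scale (1->5, 2->4, 3->3, ...)."""
    if not lo <= value <= hi:
        raise ValueError(f"response {value} outside scale {lo}..{hi}")
    return lo + hi - value


def recode_response(response: Dict[str, int]) -> Dict[str, int]:
    """Return a copy of one respondent's answers with negative items reversed."""
    return {
        item: reverse_score(value) if item in NEGATIVE_ITEMS else value
        for item, value in response.items()
    }


if __name__ == "__main__":
    respondent = {"PE1": 4, "PE2": 2, "EE7": 5, "FC3": 1, "BI3": 5}
    print(recode_response(respondent))
    # {'PE1': 4, 'PE2': 4, 'EE7': 1, 'FC3': 5, 'BI3': 5}
```

After recoding, a higher value on every item points in the same direction as its construct, which keeps outer loadings from turning negative for the reversed items.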

3.2. Research Methodology

4. Results

- The first phase of the research evaluated the psychometric properties of all measurement scales to ensure their reliability and discriminant validity.

- The subsequent phase of the study focused on hypothesis testing and model analysis to examine structural relationships, explained variance (R²), effect sizes (f²), and predictive relevance (Q²).
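The first-phase checks can be illustrated with common PLS-SEM criteria computed from standardized outer loadings: composite reliability (ρc), average variance extracted (AVE), and the Fornell–Larcker criterion for discriminant validity. This section does not state which criteria and thresholds the study applied, so the following is a minimal sketch under that assumption. The function names and input layout are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def composite_reliability(loadings: Sequence[float]) -> float:
    """rho_c = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings, so each indicator's error variance is 1 - l^2.
    """
    total = sum(loadings)
    error_variance = sum(1.0 - l * l for l in loadings)
    return total * total / (total * total + error_variance)


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def fornell_larcker_holds(
    ave: Dict[str, float], correlations: Dict[Tuple[str, str], float]
) -> bool:
    """True if, for every construct pair, each construct's sqrt(AVE)
    exceeds the absolute correlation between the two constructs."""
    return all(
        math.sqrt(ave[a]) > abs(r) and math.sqrt(ave[b]) > abs(r)
        for (a, b), r in correlations.items()
    )


if __name__ == "__main__":
    # Made-up loadings for one construct, for illustration only.
    loadings = [0.82, 0.78, 0.85]
    print(round(composite_reliability(loadings), 3))
    print(round(average_variance_extracted(loadings), 3))
```

Commonly cited guidelines treat ρc ≥ 0.70 and AVE ≥ 0.50 as acceptable. The heterotrait–monotrait ratio (HTMT), which SmartPLS also reports, is an alternative discriminant-validity criterion not shown here.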

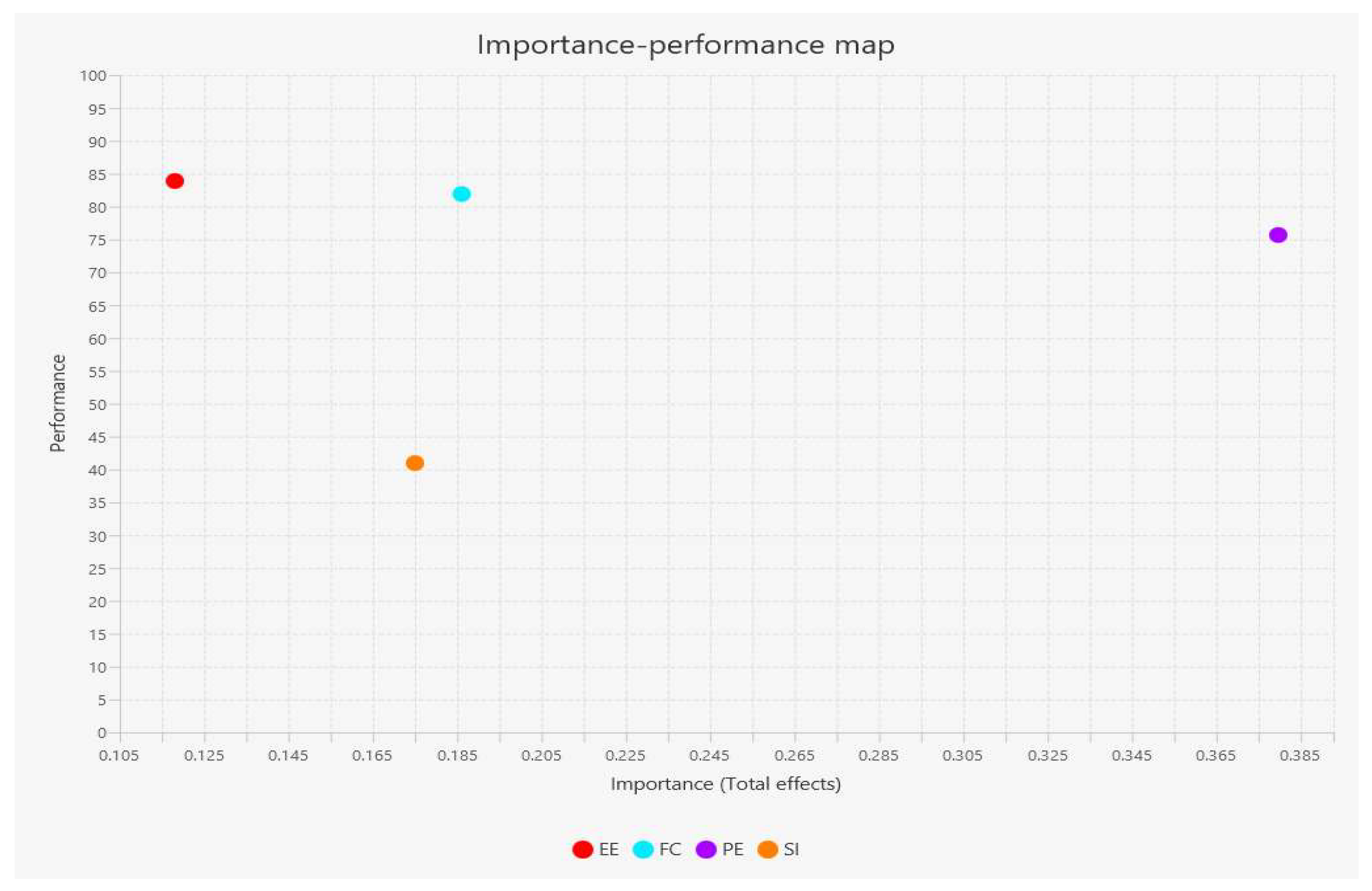

- IPMA (importance–performance map analysis) provides deeper insight by assessing the relative importance and performance of predictor constructs, which helps identify key areas for strategic improvement [111].

- MGA (multigroup analysis) was applied to assess whether significant differences exist between subgroups, enabling exploration of potential moderating effects and of how the relationships in the model vary across user groups [110].
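In an IPMA, importance is usually taken as a predictor's total effect on the target construct, and performance as its scores rescaled to 0–100. Predictors with high importance but low performance then stand out as improvement priorities. The sketch below is a simplified illustration under stated assumptions: it rescales scores from an assumed 1–5 scale, averages them without the unstandardized outer weights that a full IPMA uses, and takes the total effects as given inputs. All names and values are hypothetical.

```python
from typing import Dict, List, Sequence


def rescale_0_100(score: float, scale_min: float = 1.0, scale_max: float = 5.0) -> float:
    """Linearly map a score from [scale_min, scale_max] to [0, 100]."""
    return (score - scale_min) / (scale_max - scale_min) * 100.0


def performance(scores: Sequence[float], scale_min: float = 1.0, scale_max: float = 5.0) -> float:
    """Simplified performance: unweighted mean of rescaled scores."""
    rescaled = [rescale_0_100(s, scale_min, scale_max) for s in scores]
    return sum(rescaled) / len(rescaled)


def improvement_priorities(importance: Dict[str, float], perf: Dict[str, float]) -> List[str]:
    """Predictors with above-average importance but below-average performance."""
    mean_importance = sum(importance.values()) / len(importance)
    mean_performance = sum(perf.values()) / len(perf)
    return sorted(
        name
        for name in importance
        if importance[name] > mean_importance and perf[name] < mean_performance
    )


if __name__ == "__main__":
    # Hypothetical total effects and performance values, not study results.
    importance = {"X1": 0.40, "X2": 0.10, "X3": 0.25}
    perf = {"X1": 55.0, "X2": 80.0, "X3": 70.0}
    print(improvement_priorities(importance, perf))
```

Splitting at the mean importance and mean performance is one common way to draw the quadrants of the importance–performance map; other cut-offs are equally possible.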

4.1. Measurement Model Assessment

4.2. Structural Model Assessment

- Performance Expectancy (f² = 0.152) has a moderate effect size, supporting its substantial contribution to Behavioural Intention.

- Social Influence (f² = 0.045) and Facilitating Conditions (f² = 0.040) show small but significant effects, indicating that although peer influence and resource availability shape Behavioural Intention, their impact is more limited than that of Performance Expectancy.

- Effort Expectancy (f² = 0.016) has the smallest effect size, suggesting that although ease of use is significant, it exerts a relatively minor influence on Behavioural Intention compared with the other factors.
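The effect size f² compares the R² of Behavioural Intention with and without a given predictor: f² = (R²included − R²excluded) / (1 − R²included). The sketch below computes f² from two R² values and labels it with the conventional thresholds of 0.02, 0.15 and 0.35 (Cohen's guidelines). The function names are hypothetical, and because the underlying R² values are not reproduced in this section, only the reported f² values are classified.

```python
def f_squared(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f^2 for one predictor, from R^2 with and without it in the model."""
    if not 0.0 <= r2_excluded <= r2_included < 1.0:
        raise ValueError("expected 0 <= R2_excluded <= R2_included < 1")
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def effect_label(f2: float) -> str:
    """Conventional thresholds: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "below small"


if __name__ == "__main__":
    # f^2 values as reported in Section 4.2.
    reported = {"PE": 0.152, "SI": 0.045, "FC": 0.040, "EE": 0.016}
    for construct, f2 in reported.items():
        print(f"{construct}: f2 = {f2:.3f} -> {effect_label(f2)}")
```

Run as a script, this labels PE as medium, SI and FC as small, and EE as below the 0.02 cut-off for a small effect, which fits the description of Effort Expectancy as the weakest predictor.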

4.3. Importance–Performance Map Analysis (IPMA)

4.4. Multigroup Analysis

- Configurational Invariance checks that both groups share the same conceptual model structure.

- Compositional Invariance tests whether construct scores are calculated equivalently across groups.

- Equality of Means and Variances assesses whether significant differences exist in means and variances across groups.
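The third step above is typically assessed with a permutation test. A simplified sketch of such a test for composite scores follows. It assumes the scores for both groups are already computed, uses Python's `random` and `statistics` modules, and the function names and defaults (999 permutations, fixed seed) are hypothetical choices rather than the settings used in the study.

```python
import math
import random
import statistics
from typing import Callable, Sequence


def permutation_p_value(
    group_a: Sequence[float],
    group_b: Sequence[float],
    stat: Callable[[Sequence[float]], float] = statistics.mean,
    n_permutations: int = 999,
    seed: int = 0,
) -> float:
    """Two-sided permutation p-value for |stat(A) - stat(B)|.

    Group labels are shuffled repeatedly; the p-value is the share of
    shuffles whose absolute difference is at least the observed one.
    """
    rng = random.Random(seed)
    observed = abs(stat(group_a) - stat(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(stat(pooled[:n_a]) - stat(pooled[n_a:]))
        # Small tolerance so ties are not lost to floating-point rounding.
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
    # +1 in numerator and denominator counts the observed labelling itself.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


def log_variance(scores: Sequence[float]) -> float:
    """Statistic for the variance comparison: log of the sample variance."""
    return math.log(statistics.variance(scores))
```

Calling `permutation_p_value(a, b)` compares means; passing `stat=log_variance` compares variances through the difference of log variances (the log of their ratio). A p-value above the chosen significance level is consistent with equal means or variances across the two groups.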

5. Discussion

5.1. Key Determinants of Behavioural Intention

5.2. Explanatory Power and Effect Sizes

5.3. Predictive Relevance and Model Fit

5.4. IPMA and Multigroup Analysis Insights

5.5. Contribution to Sustainability of E-Learning

6. Conclusions

- Enhancing system usability to align with changing user expectations and improving user experience [35].

- Reducing reliance on external technical support by implementing intuitive system designs, self-service resources, and user training programs [41].

- Leveraging social and peer influence by fostering collaborative learning environments, peer mentoring, and instructor engagement strategies [135].

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Baron, N.S. Words Onscreen: The Fate of Reading in a Digital World; Oxford University Press: New York, NY, USA, 2015.

- Ross, B.; Pechenkina, E.; Aeschliman, C.; Chase, A.-M. Print Versus Digital Texts: Understanding the Experimental Research and Challenging the Dichotomies. Res. Learn. Technol. 2017, 25, 1976.

- Delgado, P.; Vargas, C.; Ackerman, R.; Salmerón, L. Don’t Throw Away Your Printed Books: A Meta-Analysis on the Effects of Reading Media on Reading Comprehension. Educ. Res. Rev. 2018, 25, 23–38.

- Singer, L.M.; Alexander, P.A. Reading on Paper and Digitally: What the Past Decades of Empirical Research Reveal. Rev. Educ. Res. 2017, 87, 1007–1041.

- Zabasta, A.; Kazymyr, V.; Drozd, O.; Verslype, S.; Espeel, L.; Bruzgiene, R. Development of Shared Modeling and Simulation Environment for Sustainable E-Learning in the STEM Field. Sustainability 2024, 16, 2197.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.

- Chao, C.M. Factors Determining the Behavioral Intention to Use Mobile Learning: An Application and Extension of the UTAUT Model. Front. Psychol. 2019, 10, 1652.

- Almaiah, M.A.; Alamri, M.M.; Al-Rahmi, W. Applying the UTAUT Model to Explain the Students’ Acceptance of Mobile Learning System in Higher Education. IEEE Access 2019, 7, 174673–174686.

- Hew, K.F.; Brush, T. Integrating Technology into K-12 Teaching and Learning: Current Knowledge Gaps and Recommendations for Future Research. Educ. Technol. Res. Dev. 2007, 55, 223–252.

- Zawacki-Richter, O. The Current State and Impact of COVID-19 on Digital Higher Education in Germany. Hum. Behav. Emerg. Technol. 2021, 3, 218–226.

- Laskova, K. 21st Century Teaching and Learning with Technology: A Critical Commentary. Acad. Lett. 2021, 2, 2090.

- Benta, D.; Bologa, A.; Dzitac, I. E-Learning Platforms in Higher Education. Case Study. Procedia Comput. Sci. 2014, 31, 1170–1176.

- Ouadoud, M.; Rida, N.; Chafiq, T. Overview of E-Learning Platforms for Teaching and Learning. Int. J. Recent Contrib. Eng. Sci. IT 2021, 9, 50–70.

- Al-Adwan, A.S.; Nofal, M.; Akram, H.; Albelbisi, N.A.; Al-Okaily, M. Towards a Sustainable Adoption of E-Learning Systems: The Role of Self-Directed Learning. J. Inf. Technol. Educ. Res. 2022, 21, 245–267.

- Sizova, D.A.; Sizova, T.V.; Adulova, E.S. Advances in Social Science, Education and Humanities Research. In Proceedings of the International Scientific Conference “Digitalization of Education: History, Trends and Prospects” (DETP 2020), Yekaterinburg, Russia, 23–24 April 2020; Volume 437, pp. 328–334.

- Wasserman, M.E.; Fisher, S.L. E-Learning. In Encyclopedia of Electronic HRM; Bondarouk, T., Fisher, S., Eds.; De Gruyter Oldenbourg: Boston, MA, USA, 2020; pp. 188–194.

- Javorcik, T.; Polasek, R. The Basis for Choosing MicroLearning within the Terms of E-Learning in the Context of Student Preferences. In Proceedings of the 16th International Conference on Emerging eLearning Technologies and Applications (ICETA), Stary Smokovec, Slovakia, 15–16 November 2018; pp. 237–244.

- Mohana, M.; Valliammal, N.; Suvetha, V.; Krishnaveni, M. A Study on Technology-Enhanced Multimedia Learning for Enhancing Learner’s Experience in E-Learning. In Proceedings of the 2023 International Conference on Network, Multimedia and Information Technology (NMITCON), Bengaluru, India, 1–2 September 2023.

- Kimura, R.; Matsunaga, M.; Barroga, E.; Hayashi, N. Asynchronous E-Learning with Technology-enabled and Enhanced Training for Continuing Education of Nurses: A Scoping Review. BMC Med. Educ. 2023, 23, 505.

- Timbi-Sisalima, C.; Sánchez-Gordón, M.; Hilera-Gonzalez, J.R.; Otón-Tortosa, S. Quality Assurance in E-Learning: A Proposal from Accessibility to Sustainability. Sustainability 2022, 14, 3052.

- Cassidy, A.; Fu, G.; Valley, W.; Lomas, C.; Jovel, E.; Riseman, A. Flexible Learning Strategies in the First through Fourth-Year Courses. Coll. Essays Learn. Teach. 2016, 9, 83–94.

- Santiago, C.S., Jr.; Ulanday, M.L.P.; Centeno, Z.J.R.; Bayla, M.C.D.; Callanta, J.S. Flexible Learning Adaptabilities in the New Normal: E-Learning Resources, Digital Meeting Platforms, Online Learning Systems and Learning Engagement. Asian J. Distance Educ. 2021, 6, 38–56.

- Furqon, M.; Sinaga, P.; Liliasari, L.; Riza, L.S. The Impact of Learning Management System (LMS) Usage on Students. TEM J. 2023, 12, 1082–1089.

- Kirange, S.; Sawai, D. A Comparative Study of E-Learning Platforms and Associated Online Activities. Online J. Distance Educ. E Learn. 2021, 9, 192–199.

- Nedungadi, P.; Raman, R. A New Approach to Personalization: Integrating E-Learning and M-Learning. Educ. Technol. Res. Dev. 2012, 60, 659–678.

- Kasabova, G.; Parusheva, S.; Bankov, B. Learning Management Systems as a Tool for Learning in Higher Education. Izvestia J. Union Sci. Varna Econ. Sci. Ser. 2023, 12, 224–233.

- Al-Abri, A.; Jamoussi, Y.; Kraiem, N.; Al-Khanjari, Z. Comprehensive Classification of Collaboration Approaches in E-Learning. Telemat. Inform. 2017, 34, 878–893.

- Chou, Y. Actionable Gamification: Beyond Points, Badges, and Leaderboards; Createspace Independent Publishing Platform: Scotts Valley, CA, USA, 2015.

- Rajšp, A.; Beranič, T.; Heričko, M.; Horng-Jyh, P.W. Students’ Perception of Gamification in Higher Education Courses. In Proceedings of the Central European Conference on Information and Intelligent Systems, Varazdin, Croatia, 27–29 September 2017; pp. 69–75.

- El-Sabagh, H.A. Adaptive E-Learning Environment Based on Learning Styles and Its Impact on Development Students’ Engagement. Int. J. Educ. Technol. High. Educ. 2021, 18, 53.

- Nuankaew, P.; Nuankaew, W.; Phanniphong, K.; Imwut, S.; Bussaman, S. Students Model in Different Learning Styles of Academic Achievement at the University of Phayao, Thailand. Int. J. Emerg. Technol. Learn. 2019, 14, 133.

- Nouri, J. The Flipped Classroom: For Active, Effective and Increased Learning – Especially for Low Achievers. Int. J. Educ. Technol. High. Educ. 2016, 13, 33.

- Al-Maroof, R.S.; Alhumaid, K.; Akour, I.; Salloum, S. Factors That Affect E-Learning Platforms after the Spread of COVID-19: Post Acceptance Study. Data 2021, 6, 49.

- Encarnacion, R.F.E.; Galang, A.A.D.; Hallar, B.J.A. The Impact and Effectiveness of E-Learning on Teaching and Learning. Int. J. Comput. Sci. Res. 2020, 5, 383–397.

- Naveed, Q.N.; Choudhary, H.; Ahmad, N.; Alqahtani, J.; Qahmash, A.I. Mobile Learning in Higher Education: A Systematic Literature Review. Sustainability 2023, 15, 13566.

- Martono Kurniawan, T.; Nurhayati Oky, D. Implementation of Android-Based Mobile Learning Application as a Flexible Learning Media. Int. J. Comput. Sci. Issues 2014, 11, 168–174.

- Snezhko, Z.; Babaskin, D.; Vanina, E.; Rogulin, R.; Egorova, Z. Motivation for Mobile Learning: Teacher Engagement and Built-In Mechanisms. Int. J. Interact. Mob. Technol. 2022, 16, 78–93.

- Ayuningtyas, P. Whatsapp: Learning on the Go. Metathesis J. Engl. Lang. Lit. Teach. 2018, 2, 159–170.

- Salhab, R.; Daher, W. University Students’ Engagement in Mobile Learning. Eur. J. Investig. Health Psychol. Educ. 2023, 13, 202–216.

- Anuyahong, B.; Pucharoen, N. Exploring the Effectiveness of Mobile Learning Technologies in Enhancing Student Engagement and Learning Outcomes. Int. J. Emerg. Technol. Learn. 2023, 18, 50–63.

- Al-Razgan, M.; Alotaibi, H. Personalized Mobile Learning System to Enhance Language Learning Outcomes. Indian J. Sci. Technol. 2019, 12, 1–9.

- Gikas, J.; Grant, M.M. Mobile Computing Devices in Higher Education: Student Perspectives on Learning with Cellphones, Smartphones & Social Media. Internet High. Educ. 2013, 19, 18–26.

- Alrasheedi, M.; Capretz, L.F. Determination of Critical Success Factors Affecting Mobile Learning: A Meta-Analysis Approach. Turk. Online J. Educ. Technol. 2015, 14, 41–51.

- Alasmari, T. The Effect of Screen Size on Students’ Cognitive Load in Mobile Learning. J. Educ. Teach. Learn. 2020, 5, 280–295.

- Lakshminarayanan, R.; Ramalingam, R.; Shaik, S.K. Challenges in Transforming, Engaging and Improving M-Learning in Higher Educational Institutions: Oman Perspective. In Proceedings of the Third International Conference of Educational Technology, Seeb, Oman, 24–26 March 2015.

- Ghamdi, E.; Yunus, F.; Da’ar, O.; El-Metwally, A.; Khalifa, M.; Aldossari, B.; Househ, M. The Effect of Screen Size on Mobile Phone User Comprehension of Health Information and Application Structure: An Experimental Approach. J. Med. Syst. 2016, 40, 11.

- Shonola, S.A.; Joy, M. Mobile Learning Security Issues from Lecturers’ Perspectives (Nigerian Universities Case Study). In Proceedings of the EDULEARN14 Conference, Barcelona, Spain, 7–9 July 2014; pp. 7081–7088.

- Parusheva, S.; Aleksandrova, Y.; Hadzhikolev, H. Use of Social Media in Higher Education Institutions – An Empirical Study Based on Bulgarian Learning Experience. TEM J. 2018, 7, 171–181.

- Merelo, J.J.; Castillo, P.A.; Mora, A.M. Chatbots and Messaging Platforms in the Classroom: An Analysis from the Teacher’s Perspective. Educ. Inf. Technol. 2024, 29, 1903–1938.

- Ghafar, Z.N. Media Platforms in Any Fields, Academic, Non-Academic, All Over the World in Digital Era: A Critical Review. J. Digit. Learn. Distance Educ. 2024, 2, 707–721.

- Mahdiuon, R.; Salimi, G.; Raeisy, L. Effect of Social Media on Academic Engagement and Performance: Perspective of Graduate Students. Educ. Inf. Technol. 2020, 25, 2427–2446.

- Sevnarayan, K. Telegram as a Teaching Tool in Distance Education. In Proceedings of the Writing Research Across Borders, Trondheim, Norway, 18–22 February 2023.

- Dollah, M.H.M.; Nair, S.M.; Wider, W. The Effects of Utilizing Telegram App to Enhance Students’ ESL Writing Skills. Int. J. Educ. Stud. 2021, 4, 10–16.

- Deng, L.; Shen, Y.W.; Chan, J.W.W. Supporting Cross-Cultural Pedagogy with Online Tools: Pedagogical Design and Student Perceptions. TechTrends 2021, 65, 760–770.

- Sternad Zabukovšek, S.; Deželak, Z.; Parusheva, S.; Bobek, S. Attractiveness of Collaborative Platforms for Sustainable E-Learning in Business Studies. Sustainability 2022, 14, 8257.

- Ironsi, C.S. Google Meet as a Synchronous Language Learning Tool for Emergency Online Distant Learning During the COVID-19 Pandemic: Perceptions of Language Instructors and Preservice Teachers. J. Appl. Res. High. Educ. 2022, 14, 640–659.

- de Lima, D.P.R.; Gerosa, M.A.; Conte, T.U. What to Expect, and How to Improve Online Discussion Forums: The Instructors’ Perspective. J. Internet Serv. Appl. 2019, 10, 22.

- Martin, F.; Wang, C.; Sadaf, A. Student Perception of Helpfulness of Facilitation Strategies That Enhance Instructor Presence, Connectedness, Engagement and Learning in Online Courses. Internet High. Educ. 2018, 37, 52–65.

- Guo, C.; Shea, P.; Chen, X.D. Investigation on Graduate Students’ Social Presence and Social Knowledge Construction in Two Online Discussion Settings. Educ. Inf. Technol. 2022, 27, 2751–2769.

- Munoto, M.; Sumbawati, M.S.; Sari, S.F. The Use of Mobile Technology in Learning with Online and Offline Systems. Int. J. Inf. Commun. Technol. Educ. 2021, 17, 54–67.

- Gure, S.G. “M-Learning”: Implications and Challenges. Int. J. Sci. Res. 2016, 5, 2087–2093.

- Razzaque, A. M-Learning Improves Knowledge Sharing over e-Learning Platforms to Build Higher Education Students’ Social Capital. SAGE Open 2020, 10, 1–9.

- Filippo, D.; Barreto, C.G.; Fuks, H.; Lucena, C.J.P. Collaboration in Learning with Mobile Devices: Tools for Forum Coordination. In Proceedings of the 22nd ICDE – World Conference on Distance Education, Promoting Quality in Online, Flexible and Distance Education, Ed. ABED, Rio de Janeiro, Brazil, 3–6 September 2006; pp. 1–10.

- Kljun, M.; Krulec, R.; Pucihar, K.C.; Solina, F. Persuasive Technologies in m-Learning for Training Professionals: How to Keep Learners Engaged with Adaptive Triggering. IEEE Trans. Learn. Technol. 2019, 12, 370–383.

- Kartika, D.A.I.; Nurkhamidah, N.; Santosa, I. Construction of EFL Learning Object Materials for Senior High School. J. Ilmu Sos. Dan Pendidik. 2022, 6, 2332–2343. Available online: http://ejournal.mandalanursa.org/index.php/JISIP/index (accessed on 20 January 2025).

- Balasundaram, S.; Mathew, J.; Nair, S. Microlearning and Learning Performance in Higher Education: A Post-Test Control. J. Learn. Dev. 2024, 11, 1–14.

- Farmer, L.S. Collective Intelligence in Online Education. Handb. Res. Pedagog. Models Next Gen. Teach. Learn. 2018, 285–305.

- Saranya, A.K. A Critical Study on the Efficiency of Microsoft Teams in Online Education. In Efficacy of Microsoft Teams During COVID-19 – A Survey; Bonfring Publication: Tamilnadu, India, 2020; pp. 310–323.

- Yusuf, M.O. Information and Communication Technology and Education: Analyzing the Nigerian National Policy for Information Technology. Int. Educ. J. 2005, 6, 316–321.

- Yaroslav, Y. Using Microsoft Teams in Online Learning of Students: Methodical Aspect. Inf. Technol. Learn. Tools 2024, 100, 72–91.

- Ilag, B.N. Microsoft Teams Overview. In Understanding Microsoft Teams Administration; Apress: Berkeley, CA, USA, 2020.

- Pal, D.; Vanijja, V. Perceived Usability Evaluation of Microsoft Teams as an Online Learning Platform During COVID-19 Using System Usability Scale and Technology Acceptance Model in India. Child. Youth Serv. Rev. 2020, 119, 105535.

- Amaxra. Microsoft Teams Mobile App Overview; Amaxra: Redmond, WA, USA, 2020; Available online: https://www.amaxra.com/microsoft-teams-mobile-app-overview#:~:text=Overview%3A%20Microsoft%20Teams%20Mobile%20App,-Originally%20only%20released&text=(The%20app%20was%20previously%20also,mobile%20device%2C%20anywhere%20and%20anytime (accessed on 17 February 2025).

- Microsoft. Welcome to Microsoft Teams; Microsoft: Redmond, WA, USA, 2018; Available online: https://docs.microsoft.com/en-us/microsoftteams/teams-overview (accessed on 17 February 2025).

- Techterms. App Definition; Techterms: 2019. Available online: https://techterms.com/definition/app (accessed on 13 February 2025).

- Compete 366. What Is Microsoft Teams, and Who Should Be Using It? Compete 366: London, UK, 2019; Available online: https://www.compete366.com/blog-posts/microsoft-teams-what-is-it-and-should-we-be-using-it/ (accessed on 15 February 2025).

- Ilag, B.N.; Tripathy, D.; Ireddy, V. Organization Readiness for Microsoft Teams. In Understanding Microsoft Teams Administration: Configure, Customize and Manage the Teams Experience; Apress: Berkeley, CA, USA, 2023; pp. 285–334.

- Narayn, H. Teams and Power Virtual Agents. In Building the Modern Workplace with SharePoint Online: Solutions with SPFx, JSON Formatting, Power Automate, Power Apps, Teams, and PVA; Apress: Berkeley, CA, USA, 2023; pp. 351–386.

- Ilag, B.N.; Sabale, A.M. Microsoft Teams Overview. In Troubleshooting Microsoft Teams: Enlisting the Right Approach and Tools in Teams for Mapping and Troubleshooting Issues; Apress: Berkeley, CA, USA, 2022; pp. 17–74.

- Waizenegger, L.; McKenna, B.; Cai, W.; Bendz, T. An Affordance Perspective of Team Collaboration and Enforced Working from Home during COVID-19. Eur. J. Inf. Syst. 2020, 29, 429–442.

- Bailenson, J.N. Nonverbal Overload: A Theoretical Argument for the Causes of Zoom Fatigue. Technol. Mind Behav. 2021, 2, 1–6.

- Statista. Downloads of Microsoft Teams Mobile App Worldwide from 3rd Quarter 2019 to 4th Quarter 2023, by Region; Statista: Hamburg, Germany, 2024; Available online: https://www.statista.com/statistics/1240026/microsoft-teams-global-downloads-app-by-region/ (accessed on 17 January 2025).

- Statista. Most Popular Microsoft Apps Worldwide in 4th Quarter 2023, by Downloads (In Millions); Statista: Hamburg, Germany, 2024; Available online: https://www.statista.com/statistics/1268166/most-downloaded-microsoft-apps-worldwide/ (accessed on 17 January 2025).

- Microsoft. Get Started with Copilot in Microsoft Teams Meetings; Microsoft Support: Redmond, WA, USA, 2024; Available online: https://support.microsoft.com/en-us/office/get-started-with-copilot-in-microsoft-teams-meetings-0bf9dd3c-96f7-44e2-8bb8-790bedf066b1 (accessed on 11 February 2025).

- Microsoft. Use Copilot in Microsoft Teams Chat and Channels; Microsoft Support: Redmond, WA, USA, 2024; Available online: https://support.microsoft.com/en-us/office/use-copilot-in-microsoft-teams-chat-and-channels-cccccca2-9dc8-49a9-ab76-b1a8ee21486c (accessed on 11 February 2025).

- Microsoft. Manage Copilot for Microsoft Teams Meetings and Events; Microsoft Learn: Redmond, WA, USA, 2024; Available online: https://learn.microsoft.com/en-us/microsoftteams/copilot-teams-transcription (accessed on 11 February 2025).

- Fishbein, M.; Ajzen, I. Belief, Attitude, Intention, and Behavior: An Introduction to Theory and Research. Philos. Rhetor. 1977, 10, 130–132.

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

- Ajzen, I. The Theory of Planned Behavior. Organ. Behav. Hum. Decis. Process. 1991, 50, 179–211.

- Taylor, S.; Todd, P.A. Understanding Information Technology Usage: A Test of Competing Models. Inf. Syst. Res. 1995, 6, 144–176.

- Thompson, R.L.; Higgins, C.A.; Howell, J.M. Personal Computing: Toward a Conceptual Model of Utilization. MIS Q. 1991, 15, 125–143.

- Rogers, E.M. Diffusion of Innovations, 4th ed.; Free Press: New York, NY, USA, 1995.

- Bandura, A. Social Foundations of Thought and Action: A Social Cognitive Theory; Prentice Hall: Englewood Cliffs, NJ, USA, 1986.

- Attuquayefio, S.N.; Addo, H. Using the UTAUT Model to Analyze Students’ ICT Adoption. Int. J. Educ. Dev. Using Inf. Commun. Technol. 2014, 10, 75–86.

- Abbad, M.M. Using the UTAUT Model to Understand Students’ Usage of E-Learning Systems in Developing Countries. Educ. Inf. Technol. 2021, 26, 7205–7224.

- Garavand, A.; Samadbeik, M.; Nadri, H.; Rahimi, B.; Asadi, H. Effective Factors in Adoption of Mobile Health Applications Between Medical Sciences Students Using the UTAUT Model. Methods Inf. Med. 2019, 58, 131–139.

- Yu, C.S. Factors Affecting Individuals to Adopt Mobile Banking: Empirical Evidence from the UTAUT Model. J. Electron. Commer. Res. 2012, 13, 104–121.

- Bhatiasevi, V. An Extended UTAUT Model to Explain the Adoption of Mobile Banking. Inf. Dev. 2016, 32, 799–814.

- Oliveira, T.; Faria, M.; Thomas, M.A.; Popovič, A. Extending the Understanding of Mobile Banking Adoption: When UTAUT Meets TTF and ITM. Int. J. Inf. Manag. 2014, 34, 689–703.

- Mosunmola, A.; Mayowa, A.; Okuboyejo, S.; Adeniji, C. Adoption and Use of Mobile Learning in Higher Education: The UTAUT Model. In Proceedings of the 9th International Conference on E-Education, E-Business, E-Management and E-Learning, San Diego, CA, USA, 11–13 January 2018; ACM: New York, NY, USA, 2018; pp. 20–25.

- Uzoka, F.M.E. Organisational Influences on E-Commerce Adoption in a Developing Country Context Using UTAUT. Int. J. Bus. Inf. Syst. 2008, 3, 300–316.

- Cody-Allen, E.; Kishore, R. An Extension of the UTAUT Model with E-Quality, Trust, and Satisfaction Constructs. In Proceedings of the 2006 ACM SIGMIS CPR Conference on Computer Personnel Research: Forty-Four Years of Computer Personnel Research: Achievements, Challenges & the Future, Claremont, CA, USA, 13–15 April 2006; ACM: New York, NY, USA, 2006; pp. 82–89.

- Garson, G.D. Partial Least Squares: Regression and Structural Equation Models; Statistical Associates Publishers: Asheboro, NC, USA, 2016.

- Sarstedt, M.; Ringle, C.M.; Hair, J.F. Partial Least Squares Structural Equation Modeling. In Handbook of Market Research; Homburg, C., Klarmann, M., Vomberg, A.E., Eds.; Springer: Cham, Switzerland, 2021; pp. 587–632.

- Sternad Zabukovšek, S.; Kalinic, Z.; Bobek, S.; Tominc, P. SEM–ANN Based Research of Factors’ Impact on Extended Use of ERP Systems. Cent. Eur. J. Oper. Res. 2019, 27, 703–735.

- Sternad Zabukovšek, S.; Bobek, S.; Zabukovšek, U.; Kalinić, Z.; Tominc, P. Enhancing PLS-SEM-Enabled Research with ANN and IPMA: Research Study of Enterprise Resource Planning (ERP) Systems’ Acceptance Based on the Technology Acceptance Model (TAM). Mathematics 2022, 10, 1379.

- Zabukovšek, U.; Tominc, P.; Bobek, S. Business IT Alignment Impact on Corporate Sustainability. Sustainability 2023, 15, 12519.

- Henseler, J.; Hubona, G.; Ray, P.A. Using PLS Path Modeling in New Technology Research: Updated Guidelines. Ind. Manag. Data Syst. 2016, 116, 2–20.

- Ringle, C.M.; Wende, S.; Becker, J.-M. SmartPLS 4; SmartPLS GmbH: Boenningstedt, Germany. Available online: http://www.smartpls.com (accessed on 12 September 2024).

- Sarstedt, M.; Henseler, J.; Ringle, C.M. Multigroup Analysis in Partial Least Squares (PLS) Path Modeling: Alternative Methods and Empirical Results. In Measurement and Research Methods in International Marketing; Sarstedt, M., Schwaiger, M., Taylor, C.R., Eds.; Emerald Group Publishing Limited: Bingley, UK, 2011; pp. 195–218.

- Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to Use and How to Report the Results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.

- Dijkstra, T.K.; Henseler, J. Consistent Partial Least Squares Path Modeling. MIS Q. 2015, 39, 297–316.

- George, D.; Mallery, P. SPSS for Windows Step by Step: A Simple Guide and Reference, 11.0 Update, 4th ed.; Allyn & Bacon: Boston, MA, USA, 2003; ISBN 978-0205375523.

- Hair, J.F., Jr.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 3rd ed.; SAGE Publications, Inc.: Thousand Oaks, CA, USA, 2022.

- Fornell, C.; Larcker, D.F. Evaluating Structural Equation Models with Unobservable Variables and Measurement Error. J. Mark. Res. 1981, 18, 39–50.

- Chin, W.W. The Partial Least Squares Approach to Structural Equation Modeling. In Modern Methods for Business Research; Marcoulides, G.A., Ed.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1998; pp. 295–336.

- Sternad Zabukovšek, S.; Bobek, S. Using the Technology Acceptance Model for Factors Influencing Acceptance of Enterprise Resource Planning Solutions. In Encyclopedia of Information Science and Technology, 6th ed.; IGI Global: Hershey, PA, USA, 2025; pp. 1–28.

- Hu, L.T.; Bentler, P.M. Cutoff Criteria for Fit Indexes in Covariance Structure Analysis: Conventional Criteria Versus New Alternatives. Struct. Equ. Model. 1999, 6, 1–55.

- Ringle, C.M.; Sarstedt, M. Gain More Insight from Your PLS-SEM Results: The Importance-Performance Map Analysis. Ind. Manag. Data Syst. 2016, 116, 1865–1886.

- Matthews, L. Applying Multigroup Analysis in PLS-SEM: A Step-by-Step Process. In Partial Least Squares Path Modeling: Basic Concepts, Methodological Issues and Applications; Latan, H., Noonan, R., Eds.; Springer: Cham, Switzerland, 2017; pp. 219–243.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. Testing Measurement Invariance of Composites Using Partial Least Squares. Int. Mark. Rev. 2016, 33, 405–431.

- Vyas, N.; Nirban, V. Students’ Perception on the Effectiveness of Mobile Learning in an Institutional Context. ELT Res. J. 2014, 3, 26–36.

- Eom, S. The Effects of the Use of Mobile Devices on the E-Learning Process and Perceived Learning Outcomes in University Online Education. E Learn. Digit. Media 2023, 20, 80–101.

- Yusri, I.K.; Goodwin, R.; Mooney, C. Teachers and Mobile Learning Perception: Towards a Conceptual Model of Mobile Learning for Training. Procedia Soc. Behav. Sci. 2015, 176, 425–430.

- Popovici, A.; Mironov, C. Students’ Perception on Using eLearning Technologies. Procedia Soc. Behav. Sci. 2015, 180, 1514–1519.

- Almaiah, M.A.; Al-Khasawneh, A.; Althunibat, A. Exploring the Critical Challenges and Factors Influencing the E-Learning System Usage during COVID-19 Pandemic. Educ. Inf. Technol. 2020, 25, 5261–5280.

- Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178.

- Lu, X.; Viehland, D. Factors Influencing the Adoption of Mobile Learning. In ACIS 2008 Proceedings, Proceedings of the Australasian Conference on Information Systems, Christchurch, New Zealand, 3–5 December 2008; p. 56. Available online: https://aisel.aisnet.org/acis2008/56/ (accessed on 5 February 2025).

- Fabito, B.S. Exploring Critical Success Factors of Mobile Learning as Perceived by Students of the College of Computer Studies–National University. In Proceedings of the 2017 International Conference on Soft Computing, Intelligent System and Information Technology (ICSIIT), Bali, Indonesia, 26–29 September 2017; IEEE: Yogyakarta, Indonesia, 2017; pp. 220–226.

- Diemer, T.T.; Fernandez, E.; Streepey, J.W. Student Perceptions of Classroom Engagement and Learning Using iPads. J. Teach. Learn. Technol. 2013, 1, 13–25.

- Herrador-Alcaide, T.C.; Hernández-Solís, M.; Hontoria, J.F. Online Learning Tools in the Era of m-Learning: Utility and Attitudes in Accounting College Students. Sustainability 2020, 12, 5171.

- Dirin, A.; Nieminen, M. User Experience Evolution of M-Learning Applications. In Proceedings of the 9th International Conference on Computer Supported Education (CSEDU 2017), Porto, Portugal, 25–27 April 2017; SCITEPRESS: Porto, Portugal, 2017; pp. 154–161.

- Fombona, J.; Pascual, M.A.; Pérez Ferra, M. Analysis of the Educational Impact of M-Learning and Related Scientific Research. J. New Approaches Educ. Res. 2020, 9, 167–180.

- Bervell, B.; Arkorful, V. LMS-Enabled Blended Learning Use Intention Among Distance Education Students in Ghana: Initial Application of UTAUT Model. Educ. Inf. Technol. 2020, 25, 1117–1135.

- Al-Azawei, A.; Parslow, P.; Lundqvist, K. Investigating the Effect of Learning Styles in a Blended E-Learning System: An Extension of the Technology Acceptance Model (TAM). Australas. J. Educ. Technol. 2017, 33, 1–23.

| Variables | 2021/2022 Frequency | 2021/2022 % | 2023/2024 Frequency | 2023/2024 % | Total Frequency | Total % |
|---|---|---|---|---|---|---|
| Gender | | | | | | |
| Male | 59 | 39.60% | 98 | 33.11% | 157 | 35.28% |
| Female | 90 | 60.40% | 198 | 66.89% | 288 | 64.72% |
| Age | | | | | | |
| <19 years | 0 | 0.00% | 2 | 0.68% | 2 | 0.45% |
| 19–25 years | 139 | 93.29% | 293 | 98.99% | 432 | 97.08% |
| >25 years | 10 | 6.71% | 1 | 0.34% | 11 | 2.47% |
| Study Program | | | | | | |
| Higher Education | 96 | 64.43% | 83 | 28.04% | 179 | 40.22% |
| University Program | 32 | 21.48% | 187 | 63.18% | 219 | 49.21% |
| Master’s Program | 21 | 14.09% | 26 | 8.78% | 47 | 10.56% |
| Year of Study | | | | | | |
| First Year | 105 | 70.47% | 163 | 55.07% | 268 | 60.22% |
| Second Year | 22 | 14.77% | 38 | 12.84% | 60 | 13.48% |
| Third Year | 20 | 13.42% | 88 | 29.73% | 108 | 24.27% |
| Graduate Year | 2 | 1.34% | 7 | 2.36% | 9 | 2.02% |
| Frequency of Using MS Teams | | | | | | |
| Three or more times per day | 27 | 18.12% | 56 | 18.92% | 83 | 18.65% |
| Once or twice per day | 63 | 42.28% | 163 | 55.07% | 226 | 50.79% |
| Less than once per day | 25 | 16.78% | 43 | 14.53% | 68 | 15.28% |
| Once a week or less | 34 | 22.81% | 34 | 11.49% | 68 | 15.28% |
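
As an arithmetic check on the demographic table, each percentage is a category frequency divided by its cohort size (149 respondents in 2021/2022, 296 in 2023/2024 and 445 in total, obtained by summing any complete category block). The minimal Python sketch below recomputes the gender rows; the dictionary layout and the `share` helper are illustrative assumptions, not part of the study's tooling.

```python
# Frequencies copied from the demographic table: (2021/2022, 2023/2024).
gender = {
    "Male": (59, 98),
    "Female": (90, 198),
}


def share(count: int, total: int) -> float:
    """Percentage of respondents, rounded to two decimals as in the table."""
    return round(100 * count / total, 2)


# Cohort sizes follow from summing any complete category block.
n_2022 = sum(c22 for c22, _ in gender.values())  # 149
n_2024 = sum(c24 for _, c24 in gender.values())  # 296
n_total = n_2022 + n_2024  # 445

for label, (c22, c24) in gender.items():
    print(label, share(c22, n_2022), share(c24, n_2024), share(c22 + c24, n_total))
# Male 39.6 33.11 35.28
# Female 60.4 66.89 64.72
```

The same helper applies unchanged to the age, study-program, year-of-study and usage-frequency blocks.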

| Factors | Indicators | Mean | Median | Observed Min | Observed Max | Standard Deviation | Factor Loadings |
|---|---|---|---|---|---|---|---|
| Behavioural Intention | BI1 | 3.948 | 4.000 | 1.000 | 5.000 | 1.008 | 0.727 |
| | BI3 | 4.002 | 4.000 | 1.000 | 5.000 | 0.844 | 0.844 |
| | BI4 | 3.665 | 4.000 | 1.000 | 5.000 | 0.952 | 0.899 |
| | BI5 | 3.863 | 4.000 | 1.000 | 5.000 | 0.944 | 0.908 |
| Effort Expectancy | EE1 | 4.447 | 5.000 | 1.000 | 5.000 | 0.643 | 0.768 |
| | EE2 | 4.321 | 4.000 | 1.000 | 5.000 | 0.685 | 0.816 |
| | EE3 | 4.364 | 4.000 | 1.000 | 5.000 | 0.659 | 0.768 |
| | EE4 | 4.162 | 4.000 | 1.000 | 5.000 | 0.707 | 0.765 |
| | EE5 | 4.438 | 4.000 | 1.000 | 5.000 | 0.603 | 0.815 |
| | EE6 | 4.413 | 4.000 | 1.000 | 5.000 | 0.639 | 0.828 |
| Facilitating Conditions | FC1 | 4.620 | 5.000 | 1.000 | 5.000 | 0.648 | 0.685 |
| | FC2 | 4.533 | 5.000 | 2.000 | 5.000 | 0.554 | 0.701 |
| | FC5 | 3.953 | 4.000 | 1.000 | 5.000 | 0.869 | 0.816 |
| Performance Expectancy | PE1 | 3.921 | 4.000 | 1.000 | 5.000 | 0.882 | 0.732 |
| | PE10 | 4.245 | 4.000 | 1.000 | 5.000 | 0.741 | 0.731 |
| | PE3 | 3.622 | 4.000 | 1.000 | 5.000 | 0.895 | 0.764 |
| | PE5 | 4.178 | 4.000 | 1.000 | 5.000 | 0.757 | 0.795 |
| Social Influence | SI1 | 2.782 | 3.000 | 1.000 | 5.000 | 1.087 | 0.923 |
| | SI2 | 2.501 | 3.000 | 1.000 | 5.000 | 1.029 | 0.922 |

| Factors | Cronbach’s Alpha | Composite Reliability (CR) | Dijkstra–Henseler’s Rho (ρ_A) | Average Variance Extracted (AVE) | f² |
|---|---|---|---|---|---|
| Behavioural Intention | 0.866 | 0.872 | 0.845 | 0.718 | |
| Effort Expectancy | 0.883 | 0.888 | 0.793 | 0.630 | 0.016 |
| Facilitating Conditions | 0.621 | 0.669 | 0.734 | 0.542 | 0.040 |
| Performance Expectancy | 0.750 | 0.753 | 0.756 | 0.571 | 0.152 |
| Social Influence | 0.825 | 0.825 | 0.923 | 0.851 | 0.045 |
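
The average variance extracted (AVE) is the mean of the squared standardized outer loadings of a construct's indicators, and a value of at least 0.5 is the usual convergent-validity threshold following Fornell and Larcker. The sketch below assumes that the factor loadings in the descriptive-statistics table are the final standardized loadings; under that assumption it reproduces the reported AVE values to three decimals and also prints √AVE, which corresponds, up to rounding, to the diagonal of the Fornell–Larcker table. The `ave` helper is an illustrative name.

```python
import math

# Standardized outer loadings from the descriptive-statistics table.
loadings = {
    "BI": [0.727, 0.844, 0.899, 0.908],
    "EE": [0.768, 0.816, 0.768, 0.765, 0.815, 0.828],
    "FC": [0.685, 0.701, 0.816],
    "PE": [0.732, 0.731, 0.764, 0.795],
    "SI": [0.923, 0.922],
}


def ave(lams: list[float]) -> float:
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(lam * lam for lam in lams) / len(lams)


for construct, lams in loadings.items():
    value = ave(lams)
    print(f"{construct}: AVE = {value:.3f}, sqrt(AVE) = {math.sqrt(value):.3f}, "
          f"meets 0.5 threshold: {value >= 0.5}")
```

Because the loadings are themselves rounded, √AVE for BI comes out as 0.848 rather than the tabulated 0.847.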

| Factors | BI | EE | FC | PE | SI |
|---|---|---|---|---|---|
| Behavioural Intention | 0.847 | | | | |
| Effort Expectancy | 0.457 | 0.794 | | | |
| Facilitating Conditions | 0.495 | 0.544 | 0.736 | | |
| Performance Expectancy | 0.611 | 0.532 | 0.504 | 0.756 | |
| Social Influence | 0.415 | 0.199 | 0.298 | 0.422 | 0.923 |
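
Under the Fornell–Larcker criterion, discriminant validity is supported when each construct's √AVE (the diagonal entry) exceeds its correlations with every other construct (the entries in its row and its column). A small Python check of the lower-triangular table might look like this; the nested-list encoding and the `fornell_larcker_ok` function are assumptions made for the sketch.

```python
# Lower triangle of the Fornell-Larcker table in the order BI, EE, FC, PE, SI:
# diagonal entries are sqrt(AVE), off-diagonal entries are construct correlations.
lower = [
    [0.847],
    [0.457, 0.794],
    [0.495, 0.544, 0.736],
    [0.611, 0.532, 0.504, 0.756],
    [0.415, 0.199, 0.298, 0.422, 0.923],
]


def fornell_larcker_ok(tri: list[list[float]]) -> bool:
    """True if every diagonal entry exceeds all correlations in its row and column."""
    k = len(tri)
    for i in range(k):
        diag = tri[i][i]
        row = tri[i][:i]  # correlations left of the diagonal
        col = [tri[j][i] for j in range(i + 1, k)]  # correlations below the diagonal
        if any(abs(r) >= diag for r in row + col):
            return False
    return True


print(fornell_larcker_ok(lower))  # True
```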

| Factors | BI | EE | FC | PE | SI |
|---|---|---|---|---|---|
| Behavioural Intention | | | | | |
| Effort Expectancy | 0.518 | | | | |
| Facilitating Conditions | 0.611 | 0.746 | | | |
| Performance Expectancy | 0.752 | 0.644 | 0.657 | | |
| Social Influence | 0.491 | 0.227 | 0.354 | 0.537 | |
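
Heterotrait–monotrait (HTMT) ratios are commonly compared against a cut-off of 0.85, or a more lenient 0.90. Assuming the conservative 0.85 threshold, the following sketch scans the lower triangle above for violations and reports the largest ratio; the dictionary keyed by construct pairs is an illustrative encoding.

```python
# Lower triangle of the HTMT table, keyed by (row construct, column construct).
htmt = {
    ("EE", "BI"): 0.518,
    ("FC", "BI"): 0.611, ("FC", "EE"): 0.746,
    ("PE", "BI"): 0.752, ("PE", "EE"): 0.644, ("PE", "FC"): 0.657,
    ("SI", "BI"): 0.491, ("SI", "EE"): 0.227, ("SI", "FC"): 0.354, ("SI", "PE"): 0.537,
}

THRESHOLD = 0.85  # assumed conservative cut-off; 0.90 is a common lenient alternative

violations = [pair for pair, ratio in htmt.items() if ratio >= THRESHOLD]
largest = max(htmt, key=htmt.get)
print(violations, largest, htmt[largest])  # [] ('PE', 'BI') 0.752
```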

| Relationships | Original Sample (β) | Sample Mean (M) | Standard Deviation (STDEV) | t-Statistics | p-Values | Hypothesis |
|---|---|---|---|---|---|---|
| PE → BI | 0.380 | 0.380 | 0.047 | 8.117 | 0.000 | H1 confirmed |
| EE → BI | 0.118 | 0.118 | 0.048 | 2.448 | 0.014 | H2 confirmed |
| SI → BI | 0.175 | 0.174 | 0.041 | 4.241 | 0.000 | H3 confirmed |
| FC → BI | 0.186 | 0.189 | 0.054 | 3.462 | 0.001 | H4 confirmed |
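
For bootstrapped path coefficients, the t-statistic is the original estimate divided by its bootstrap standard deviation, and the p-value is the two-tailed tail probability of that statistic. The sketch below recomputes t from the rounded β and STDEV (so it deviates slightly from the reported t) and derives p from the reported t with a standard normal approximation. That approximation is an assumption: the exact reference distribution used by the software is not stated here, although with 445 respondents the difference should be negligible.

```python
import math

# (original beta, bootstrap standard deviation, reported t-statistic)
paths = {
    "PE -> BI": (0.380, 0.047, 8.117),
    "EE -> BI": (0.118, 0.048, 2.448),
    "SI -> BI": (0.175, 0.041, 4.241),
    "FC -> BI": (0.186, 0.054, 3.462),
}


def two_tailed_p(t: float) -> float:
    """P(|Z| >= |t|) for a standard normal Z (normal approximation)."""
    return math.erfc(abs(t) / math.sqrt(2))


for name, (beta, sd, t_reported) in paths.items():
    t_recomputed = beta / sd  # rounded inputs, so this only approximates t_reported
    print(f"{name}: t = {t_recomputed:.2f} (reported {t_reported}), "
          f"p = {two_tailed_p(t_reported):.3f}")
```

With this approximation the p-values round to 0.000, 0.014, 0.000 and 0.001, matching the table.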

| Indicator | Q²predict | PLS-SEM RMSE | LM RMSE | Difference |
|---|---|---|---|---|
| BI1 | 0.259 | 0.870 | 0.877 | −0.007 |
| BI3 | 0.317 | 0.699 | 0.700 | −0.001 |
| BI4 | 0.312 | 0.791 | 0.796 | −0.005 |
| BI5 | 0.349 | 0.763 | 0.763 | 0.000 |
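
In the PLSpredict results, Q²predict > 0 indicates that the model predicts better than the naive benchmark of indicator means, and the Difference column is the PLS-SEM RMSE minus the RMSE of a linear regression model (LM) benchmark, so negative values favour PLS-SEM. A minimal sketch that recomputes the differences and counts the indicators on which PLS-SEM has the lower prediction error (the data layout is an illustrative assumption):

```python
# (Q2_predict, PLS-SEM RMSE, LM RMSE) for each BI indicator
plspredict = {
    "BI1": (0.259, 0.870, 0.877),
    "BI3": (0.317, 0.699, 0.700),
    "BI4": (0.312, 0.791, 0.796),
    "BI5": (0.349, 0.763, 0.763),
}

for indicator, (q2, rmse_pls, rmse_lm) in plspredict.items():
    diff = round(rmse_pls - rmse_lm, 3)  # negative -> PLS-SEM has the smaller error
    print(f"{indicator}: Q2_predict > 0: {q2 > 0}, difference = {diff:+.3f}")

n_lower = sum(pls < lm for _, pls, lm in plspredict.values())
print(f"PLS-SEM RMSE below LM RMSE for {n_lower} of {len(plspredict)} indicators")
```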

| Construct | Importance | Performance |
|---|---|---|
| EE | 0.118 | 83.890 |
| FC | 0.186 | 81.907 |
| PE | 0.380 | 75.671 |
| SI | 0.175 | 40.962 |
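
In an importance-performance map analysis (IPMA), importance is a construct's total effect on the target construct (here identical to the path coefficients, because all four predictors affect BI directly), and performance is a score rescaled to 0–100 via (x − min)/(max − min) × 100. The full procedure rescales each indicator before weighting it, so the `rescale_performance` function below only illustrates the formula on a hypothetical score of 4.0, assuming a 1–5 response scale. The quadrant split at the mean importance and mean performance is likewise a hypothetical choice made for the sketch.

```python
def rescale_performance(score: float, lo: float = 1.0, hi: float = 5.0) -> float:
    """Rescale a score on a lo..hi response scale to 0..100."""
    return (score - lo) / (hi - lo) * 100


# (importance = total effect on BI, performance on 0..100) from the IPMA table
ipma = {
    "EE": (0.118, 83.890),
    "FC": (0.186, 81.907),
    "PE": (0.380, 75.671),
    "SI": (0.175, 40.962),
}

# Hypothetical quadrant boundaries: the mean importance and mean performance.
mean_importance = sum(imp for imp, _ in ipma.values()) / len(ipma)
mean_performance = sum(perf for _, perf in ipma.values()) / len(ipma)

quadrants = {}
for name, (imp, perf) in sorted(ipma.items(), key=lambda item: -item[1][0]):
    quadrants[name] = (
        "high" if imp > mean_importance else "low",
        "high" if perf > mean_performance else "low",
    )
    print(f"{name}: {quadrants[name][0]} importance, {quadrants[name][1]} performance")

print(rescale_performance(4.0))  # 75.0
```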

| Construct | Original Correlation | Correlation Permutation Mean | 5.0% | Permutation p-Value |
|---|---|---|---|---|
| BI | 0.999 | 0.999 | 0.998 | 0.120 |
| EE | 0.998 | 0.998 | 0.995 | 0.487 |
| FC | 0.987 | 0.986 | 0.953 | 0.357 |
| PE | 0.999 | 0.997 | 0.993 | 0.686 |
| SI | 1.000 | 0.999 | 0.997 | 0.971 |
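
In step 2 of the MICOM procedure, compositional invariance holds for a construct when the correlation between its composite scores, computed with each group's weights, is not significantly below one; that is, the original correlation is at least the 5% quantile of the permutation distribution. The sketch applies this rule, together with the permutation p-value at an assumed α of 0.05, to the values above.

```python
ALPHA = 0.05  # assumed significance level

# (original correlation, 5% quantile of permuted correlations, permutation p-value)
step2 = {
    "BI": (0.999, 0.998, 0.120),
    "EE": (0.998, 0.995, 0.487),
    "FC": (0.987, 0.953, 0.357),
    "PE": (0.999, 0.993, 0.686),
    "SI": (1.000, 0.997, 0.971),
}

compositional = {}
for construct, (corr, q05, p_value) in step2.items():
    # Invariance is supported if the correlation is not below the 5% quantile
    # and the permutation p-value exceeds alpha.
    compositional[construct] = corr >= q05 and p_value > ALPHA
    print(construct, "supported" if compositional[construct] else "not supported")
```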

| Construct | Original Difference | Permutation Mean Difference | 5.0% | 95.0% | Permutation p-Value |
|---|---|---|---|---|---|
| Mean Differences | | | | | |
| BI | −0.160 | −0.002 | −0.170 | 0.164 | 0.060 |
| EE | −0.282 | −0.001 | −0.168 | 0.162 | 0.003 |
| FC | −0.306 | −0.001 | −0.169 | 0.163 | 0.002 |
| PE | −0.348 | −0.001 | −0.168 | 0.163 | 0.000 |
| SI | 0.067 | −0.003 | −0.171 | 0.164 | 0.243 |
| Variance Differences | | | | | |
| BI | −0.001 | −0.006 | −0.276 | 0.254 | 0.510 |
| EE | −0.405 | −0.009 | −0.303 | 0.323 | 0.007 |
| FC | −0.119 | −0.007 | −0.290 | 0.277 | 0.261 |
| PE | 0.090 | −0.006 | −0.255 | 0.243 | 0.268 |
| SI | −0.120 | −0.005 | −0.212 | 0.200 | 0.180 |
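
Step 3 of MICOM asks whether the composites' means and variances are equal across the two cohorts: the original difference should fall within the permutation interval (here bounded by the 5.0% and 95.0% quantiles). Assuming steps 1 and 2 are met, constructs with equal means and equal variances show full measurement invariance and the remaining constructs partial invariance. A minimal sketch of that decision rule, with `inside` as an illustrative helper:

```python
# (original difference, 5.0% quantile, 95.0% quantile) from the permutation test
mean_diff = {
    "BI": (-0.160, -0.170, 0.164), "EE": (-0.282, -0.168, 0.162),
    "FC": (-0.306, -0.169, 0.163), "PE": (-0.348, -0.168, 0.163),
    "SI": (0.067, -0.171, 0.164),
}
var_diff = {
    "BI": (-0.001, -0.276, 0.254), "EE": (-0.405, -0.303, 0.323),
    "FC": (-0.119, -0.290, 0.277), "PE": (0.090, -0.255, 0.243),
    "SI": (-0.120, -0.212, 0.200),
}


def inside(diff: float, lo: float, hi: float) -> bool:
    """True if the observed difference lies within the permutation interval."""
    return lo <= diff <= hi


result = {}
for construct in mean_diff:
    equal_mean = inside(*mean_diff[construct])
    equal_var = inside(*var_diff[construct])
    # Assumes configural (step 1) and compositional (step 2) invariance hold.
    result[construct] = "full" if equal_mean and equal_var else "partial"
    print(construct, equal_mean, equal_var, result[construct])
```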

| Path | Original (2022) | p-Value (2022) | Original (2024) | p-Value (2024) | Invariant |
|---|---|---|---|---|---|
| EE → BI | 0.071 | 0.424 | 0.145 | 0.013 | No |
| FC → BI | 0.251 | 0.005 | 0.157 | 0.014 | Yes |
| PE → BI | 0.367 | 0.000 | 0.385 | 0.000 | Yes |
| SI → BI | 0.155 | 0.033 | 0.183 | 0.000 | Yes |

| Path | Original (2022) | Original (2024) | Mean (2022) | Mean (2024) | STDEV (2022) | STDEV (2024) | t Value (2022) | t Value (2024) | p-Value (2022) | p-Value (2024) |
|---|---|---|---|---|---|---|---|---|---|---|
| EE → BI | 0.071 | 0.145 | 0.075 | 0.144 | 0.089 | 0.058 | 0.799 | 2.487 | 0.424 | 0.013 |
| FC → BI | 0.251 | 0.157 | 0.250 | 0.160 | 0.090 | 0.064 | 2.807 | 2.471 | 0.005 | 0.014 |
| PE → BI | 0.367 | 0.385 | 0.371 | 0.386 | 0.096 | 0.055 | 3.805 | 7.051 | 0.000 | 0.000 |
| SI → BI | 0.155 | 0.183 | 0.153 | 0.182 | 0.073 | 0.051 | 2.128 | 3.601 | 0.033 | 0.000 |
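
The Invariant column in the path comparison above appears to record whether a path is significant at α = 0.05 in both cohorts or in neither; this reading is inferred from the values and is not stated in this section. The sketch below applies that assumed rule to the per-cohort estimates and also recomputes t as β/STDEV. It is not a formal test of the difference between the two coefficients, which would require a permutation or parametric multigroup test.

```python
ALPHA = 0.05  # assumed significance level

# (original beta, bootstrap STDEV, reported p-value) per cohort
mga = {
    "EE -> BI": {"2022": (0.071, 0.089, 0.424), "2024": (0.145, 0.058, 0.013)},
    "FC -> BI": {"2022": (0.251, 0.090, 0.005), "2024": (0.157, 0.064, 0.014)},
    "PE -> BI": {"2022": (0.367, 0.096, 0.000), "2024": (0.385, 0.055, 0.000)},
    "SI -> BI": {"2022": (0.155, 0.073, 0.033), "2024": (0.183, 0.051, 0.000)},
}

same_pattern = {}
for path, cohorts in mga.items():
    t_values = {year: round(beta / sd, 2) for year, (beta, sd, _) in cohorts.items()}
    significant = {year: p < ALPHA for year, (_, _, p) in cohorts.items()}
    same_pattern[path] = significant["2022"] == significant["2024"]
    print(path, t_values, "same" if same_pattern[path] else "differs")
```

Under this assumed rule, only EE → BI changes status between cohorts, which matches the single "No" in the Invariant column.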

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Parusheva, S.; Klancnik, I.S.; Bobek, S.; Sternad Zabukovsek, S. Enhancing Sustainability of E-Learning with Adoption of M-Learning in Business Studies. Sustainability 2025, 17, 3487. https://doi.org/10.3390/su17083487


Chicago/Turabian style: Parusheva, Silvia, Irena Sisovska Klancnik, Samo Bobek, and Simona Sternad Zabukovsek. 2025. "Enhancing Sustainability of E-Learning with Adoption of M-Learning in Business Studies" Sustainability 17, no. 8: 3487. https://doi.org/10.3390/su17083487

APA style: Parusheva, S., Klancnik, I. S., Bobek, S., & Sternad Zabukovsek, S. (2025). Enhancing Sustainability of E-Learning with Adoption of M-Learning in Business Studies. Sustainability, 17(8), 3487. https://doi.org/10.3390/su17083487