1. Introduction

In globalized capital markets, the sustainability of corporate governance has become the cornerstone of market resilience and stakeholder trust [1]. However, this cornerstone is being systematically eroded by increasingly sophisticated and covert financial fraud [2]. From Enron’s elaborately constructed off-balance-sheet entities to Luckin Coffee’s revenue inflation via complex transactional webs, modern fraud is no longer the manipulation of isolated indicators [3]. It has evolved into a toxic configuration woven from multiple pressures, governance deficiencies, and strategic narratives. This configurational risk pattern, deeply coupled with the extreme sparsity of fraud samples in the data space, poses a fundamental challenge to existing corporate governance diagnostic and risk-warning paradigms.

The first limitation of the current paradigm stems from its inherent atomistic, variable-based analytical approach. Whether exemplified by classical econometric tools such as M-Score [4] or by machine learning methods applied after feature concatenation such as eXtreme Gradient Boosting (XGBoost) [5], the essential operation remains the estimation of each risk variable’s marginal contribution. Such linear additivity cannot capture synergistic effects and non-linear emergence across modalities. For instance, these methods struggle to decode a typical governance-failure configuration in which sustained deterioration of objective financial conditions, structural defects in internal governance, and affective dissonance in managerial narratives co-resonate to amplify risk. This lack of holistic, systemic risk perception constitutes the core theoretical obstacle behind the performance bottleneck of conventional detectors.

The second limitation lies in the fragility of the data foundation. Fraud samples form a highly sparse and non-linear data manifold in the feature space [6]. This not only causes models to be systematically biased toward the majority class, creating a catastrophic risk of false negatives, but also imposes stringent domain-adaptability requirements on data augmentation techniques. Traditional Synthetic Minority Over-sampling Technique (SMOTE), due to its linear interpolation mechanism, cannot generate high-fidelity non-linear samples. Meanwhile, general-purpose, domain-agnostic Generative Adversarial Networks (GANs), lacking mathematical constraints on intrinsic economic logic, cannot guarantee joint distribution consistency across multimodal features [7]. They often produce samples that are statistically plausible but logically absurd, such as simultaneously generating high profitability and persistent negative operating cash flow, thereby introducing disguised noise rather than effective signals of governance failure into the model.

Accordingly, current intelligent diagnostics for financial fraud face two linked limitations: the restricted perspective of the analytic lens and the weak reliability of the data foundation. Against this background, this study aims to construct an intelligent multimodal diagnostic framework, SFG-2DCNN, that improves financial fraud detection and supports sustainable corporate governance by addressing these two limitations in an integrated way. Building on this objective, the study concentrates on two interrelated scientific challenges. The first is the Signal Logicalization challenge in generation, which concerns how to synthesize high-quality governance failure samples on a sparse manifold that are realistic, diverse, and economically coherent. The second is the Configuration Spatialization challenge in detection, which concerns how to move from shallow vector-based analysis to deep and precise recognition of multimodal risk configurations.

To systematically address the above challenges, the SFG-2DCNN framework is grounded in strain theory [8], information asymmetry theory [9], game theory [10], and configurational theory [11]. Building on these foundations, it operationalizes a multi-stage intelligent diagnostic workflow that progresses from joint distribution alignment to spatial configuration learning and is driven by two core mechanisms.

First, SFG-2DCNN addresses the Signal Logicalization challenge in the generation stage through joint distribution alignment. This mechanism is implemented by SMOTE-FraudGAN, a hybrid generative network. SMOTE-FraudGAN employs a multi-generator, divide-and-conquer strategy and uses the Joint Maximum Mean Discrepancy (JMMD) loss as a cross-modal regularizer. This joint distribution alignment enforces the statistical dependencies between heterogeneous features (finance, governance, and text) in their joint domain, ensuring the generated synthetic samples are simultaneously authentic, diverse, and logically self-consistent. For example, when the framework generates an objective financial signal such as high financial leverage, it simultaneously produces a corresponding subjective textual signal such as overly optimistic sentiment used for concealment. In this way, typical fraud patterns are reproduced and the quality of augmented samples is substantially improved.

Second, SFG-2DCNN addresses the Configuration Spatialization challenge in the detection stage through spatial configuration learning. This mechanism is implemented by a feature-topology mapping strategy together with a two-dimensional Convolutional Neural Network (2D-CNN). Guided by principles of economic theory and feature interaction efficiency, the feature-topology mapping strategy transforms high-information-density multimodal features, refined through a dual-screening mechanism, into an ordered two-dimensional feature matrix. This spatial configuration learning encodes abstract toxic configurations into local spatial patterns that can be learned by the 2D-CNN, thereby enabling precise capture of higher-order interactions through deep spatial fusion. For example, by placing the proportion of independent directors next to local text sentiment tones in the matrix, the convolutional kernels of the 2D-CNN can efficiently learn the spatial texture of governance deficiencies that resonate with emotional concealment.

The study’s contributions and implications are threefold. Theoretically, it explicitly defines and jointly addresses the coupled Signal Logicalization and Configuration Spatialization challenges in financial fraud detection and advances the paradigm from variable-based analysis to multimodal configurational analysis within a concrete multi-stage framework. Managerially, the SFG-2DCNN framework provides boards, auditors, and regulators with an intelligent diagnostic tool that prioritizes high-risk firms and reveals covert risk configurations to support more targeted oversight. Socially, the findings support sustainable corporate governance by reducing undetected fraud, strengthening investor protection, and reinforcing trust in capital markets.

The remainder of this paper is organized as follows. Section 2 reviews the related literature on financial fraud detection and multimodal learning. Section 3 presents the theoretical foundations and the proposed SFG-2DCNN diagnostic framework. Section 4 describes the experimental design and reports the main empirical results. Finally, Section 5 concludes with a discussion of implications and directions for future research.

3. Sustainable-Governance Diagnostic Framework: From Theoretical Mapping to Algorithmic Implementation

To systematically respond to the deeply coupled challenges of Signal Logicalization and Configuration Spatialization mentioned earlier, this study constructs an end-to-end intelligent diagnostic framework. The framework follows the core principle of theory-guided algorithm design, aiming to progressively map abstract theoretical insights about governance failures into executable and verifiable algorithmic modules, thereby achieving a rigorous transition from theory to practice.

3.1. Theoretical Background

As shown in Figure 1, this diagnostic framework is grounded in an integrated perspective that combines strain theory, information asymmetry theory, game theory, and configurational theory. This integrated perspective provides a solid theoretical basis for all subsequent modeling choices and ensures the internal logical consistency of the entire framework.

Strain theory reveals the motivational roots of why firms deviate from a sustainable development path. The structural pressures organizations face in achieving legitimate goals are a key driver for them to adopt short-sighted or even fraudulent behaviors to maintain an appearance of prosperity. This perspective guides the framework in operationalizing the “pressure” construct into a multi-dimensional feature set covering both internal financial distress and external macroeconomic fluctuations.

Information asymmetry theory clarifies the window of opportunity for governance failures to occur. Management can exploit its informational advantage for impression management, undermining the transparency and accountability that are the cornerstones of sustainable governance. This perspective directly guides the framework to analyze textual narratives such as MD&A disclosures in depth, to capture contradictory signals including “affective dissonance,” and to consider corporate governance structures as a key internal mechanism for regulating information asymmetry.

Game theory frames fraud as a strategic game that erodes market trust, providing crucial inspiration for algorithm design. It not only provides theoretical support for using GANs, an algorithmic simulation of a game process, but also guides feature engineering to systematically mine non-traditional, highly covert financial indicators. This design counters fraudsters’ strategic evasion of traditional monitoring targets, thereby enhancing the adversarial robustness of the diagnostic framework.

Configurational theory, as the overarching paradigm, encourages this study to transition from variable-based thinking to configurational thinking. It posits that fraud is not determined by the net effect of a single indicator but by a causal recipe composed of multiple conditions such as pressure, opportunity, and specific signals. This perspective operationalizes fraud as a toxic configuration, thus providing a direct methodological justification for using a 2D-CNN, an architecture naturally suited for identifying local spatial patterns.

3.2. Multi-Stage Diagnostic Process

Based on the theoretical framework described above, this study designs a 3-stage systematic diagnostic process: Decoding Governance Failure, Joint Distribution Alignment, and Spatial Configuration Learning. The aim is to operationalize abstract theories into algorithms to solve the core challenges of financial fraud detection.

3.2.1. Stage 1: Decoding Governance Failure—Constructing a Multimodal Signal Blueprint

The objective of this stage is to refine a multimodal signal configuration that can comprehensively describe the risk of governance failure. To counter fraudsters’ strategic evasion of traditional indicators, this study follows the theory of combined feature selection [43] and designs a dual-screening process that balances predictive performance with economic interpretability.

(1) Structured Features

The structured dataset consists of financial and non-financial ratios. The financial part is based on the three major financial statements. After numerical processing, a large-scale generation of combinations was performed by dividing any two line items, creating a pool covering profitability, solvency, and operational efficiency. From this, initial candidate ratios with clear business meanings and adequate sample coverage were selected. The non-financial part was constructed based on corporate governance, macroeconomic conditions, and market environment, and was standardized into initial candidate ratios covering factors such as shareholding structure, market ecosystem, internal controls, and regional risks.

To ensure the quality of the final feature set, this study employs a statistics–machine learning collaborative screening mechanism for structured features:

Statistical pre-screening (point-biserial correlation): The point-biserial correlation coefficient ($r_{pb}$) is used to quickly eliminate features with a weak statistical correlation to the fraud label. We adopted a standard empirical threshold for this coefficient, representing a moderate-to-strong linear association, to filter out low-relevance noise features, thereby ensuring that the retained variables possess a fundamental connection to fraud mechanics before non-linear refinement.

Machine learning refinement (XGBoost algorithm): All structured features that pass the pre-screening are fed into an XGBoost model for secondary refinement. By ranking features based on their information gain in XGBoost, a subset of features with the strongest non-linear discriminatory power and lowest redundancy is selected.

Through this process, structured financial indicators and non-financial indicators were ultimately selected. Together, they form an objective portrait and structured context for the occurrence of fraud. These features include not only classic financial indicators such as ROE and current ratio but also non-traditional, highly covert warning signals such as Total Liabilities/Operating Costs, as well as features related to the company’s internal and external environment, such as macroeconomic pressures and corporate governance deficiencies.
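
The statistical pre-screening step can be illustrated with a minimal sketch. It assumes plain Python lists as input and uses a placeholder threshold of 0.3, which is not the value used in this study. The feature names and toy values are hypothetical, and the XGBoost refinement step is not shown.

```python
import math

def point_biserial(values, labels):
    """Point-biserial correlation between a continuous feature and a 0/1 label.

    It equals the Pearson correlation with the label treated as numeric.
    """
    n = len(values)
    mean_x = sum(values) / n
    mean_y = sum(labels) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(values, labels))
    var_x = sum((x - mean_x) ** 2 for x in values)
    var_y = sum((y - mean_y) ** 2 for y in labels)
    if var_x == 0 or var_y == 0:
        return 0.0  # correlation is undefined for a constant column
    return cov / math.sqrt(var_x * var_y)

def prescreen(features, labels, threshold):
    """Return the names of features whose |r_pb| reaches the threshold."""
    return [name for name, column in features.items()
            if abs(point_biserial(column, labels)) >= threshold]

# Toy data: 1 = fraud, 0 = non-fraud (illustrative values only).
labels = [0, 0, 0, 0, 1, 1, 1, 1]
features = {
    "leverage": [0.2, 0.3, 0.25, 0.35, 0.8, 0.9, 0.85, 0.7],  # separates the classes
    "noise":    [0.5, 0.1, 0.9, 0.3, 0.5, 0.1, 0.9, 0.3],      # unrelated to the label
}
selected = prescreen(features, labels, threshold=0.3)  # 0.3 is a placeholder
```

In this toy example only `leverage` is retained, because the `noise` column has the same mean in both classes and therefore a near-zero correlation with the label.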

(2) Unstructured Features

The original MD&A texts were subjected to standardized processing, including denoising, normalization, and sentence-level segmentation.

Higher-order semantic feature extraction: To decode the latent risks within the text, a FinBERT model pre-trained on financial domain data was used to encode the MD&A text into 768-dimensional contextual embedding vectors. This method can capture risk signals in complex financial contexts (e.g., the true meaning of debt default), providing a higher-order, dense representation of the text’s core content.

Quantifying affective dissonance: To operationalize the impression management strategy, this study concurrently employs the Loughran–McDonald financial dictionary to quantify the sentiment and tone of the text, generating a 7-dimensional feature (negative, positive, uncertainty, litigious, net sentiment score, and strong and weak modality). This feature aims to capture affective dissonance, that is, the contradiction between objective financial performance and management’s subjective narrative, and thus provides crucial leading information for identifying deceptive intent.

The 768-dimensional semantic embedding provides high-dimensional, context-sensitive semantic signals, while the 7-dimensional tone feature offers a low-dimensional, highly interpretable supervisory signal that captures key features for judging deceptive behavior.
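
The dictionary-based tone feature can be sketched as follows. The word lists are tiny hypothetical stand-ins for the Loughran–McDonald dictionary, the net sentiment formula (positive minus negative, divided by their sum) is an assumption, and the example sentence is in English purely for illustration.

```python
import re

# Tiny illustrative word lists; the real dictionary is far larger.
TOY_LEXICON = {
    "negative":     {"loss", "decline", "default", "impairment"},
    "positive":     {"growth", "strong", "improve", "record"},
    "uncertainty":  {"may", "uncertain", "approximately", "risk"},
    "litigious":    {"lawsuit", "litigation", "claim"},
    "strong_modal": {"will", "must", "always"},
    "weak_modal":   {"could", "might", "possibly"},
}

def tone_vector(text):
    """Return a 7-dimensional tone feature as word-share frequencies."""
    tokens = re.findall(r"[a-z]+", text.lower())
    total = max(len(tokens), 1)  # avoid division by zero on empty text
    freq = {category: sum(token in words for token in tokens) / total
            for category, words in TOY_LEXICON.items()}
    pos, neg = freq["positive"], freq["negative"]
    net = (pos - neg) / (pos + neg) if (pos + neg) > 0 else 0.0
    return [freq["negative"], freq["positive"], freq["uncertainty"],
            freq["litigious"], net, freq["strong_modal"], freq["weak_modal"]]

vec = tone_vector("Record growth will continue; litigation risk may decline.")
```

Comparing such a vector with the firm's financial ratios is one way to surface a narrative that is more optimistic than the underlying figures.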

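(3) Final Multimodal Feature Set

In summary, the multimodal feature vector in this study is formed by concatenating four feature blocks from three modalities: structured financial indicators, structured non-financial indicators, FinBERT semantic embeddings, and Loughran–McDonald tone features.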

3.2.2. Stage 2: Joint Distribution Alignment—Reconstructing Logically Consistent Governance Failure Scenarios

This stage aims to address the Signal Logicalization challenge caused by data scarcity through the SMOTE-FraudGAN hybrid generative model. The core idea is to ensure that the generated governance failure scenarios (synthetic samples) are not only statistically realistic but also logically self-consistent from an economic perspective.

SMOTE-FraudGAN combines the distribution-smoothing capability of SMOTE with the non-linear generative power of Joint-FraudGAN:

Step 1: SMOTE Pre-smoothing and Manifold Regularization

Before directly applying the GAN, this stage first uses the SMOTE technique to perform an initial oversampling of the original scarce fraud samples $x_i$. By randomly selecting one of its $k$-nearest neighbors $x_{nn}$ for linear interpolation, a small number of synthetic samples $x_{new}$ are generated as:

$$x_{new} = x_i + \lambda \, (x_{nn} - x_i), \quad \lambda \sim U(0, 1)$$

This step provides an optimized initial distribution for the subsequent Joint-FraudGAN, accelerating convergence and reducing the risk of mode collapse during the initial training phase.
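
The SMOTE interpolation described above can be sketched in a few lines. This is a simplified illustration rather than the implementation used in this study; the function name, the toy samples, and the Euclidean distance metric are assumptions.

```python
import math
import random

def smote(minority, k, n_new, seed=0):
    """Generate n_new synthetic points by interpolating toward a random k-NN."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        # k nearest neighbours of x among the other minority samples
        others = [p for j, p in enumerate(minority) if j != i]
        others.sort(key=lambda p: math.dist(x, p))
        neighbour = rng.choice(others[:k])
        lam = rng.random()  # interpolation weight in [0, 1)
        synthetic.append([a + lam * (b - a) for a, b in zip(x, neighbour)])
    return synthetic

# Four toy fraud samples with two features each (illustrative values only).
fraud = [[1.0, 2.0], [1.2, 1.9], [0.9, 2.2], [1.1, 2.1]]
new_samples = smote(fraud, k=2, n_new=5)
```

Every synthetic point lies on a line segment between two real fraud samples, which is why SMOTE alone cannot leave the linear hull of the observed data.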

Step 2: Joint-FraudGAN Deep Refinement and Joint Distribution Alignment

This is the core stage for achieving joint distribution consistency across feature domains, where the samples initially augmented by SMOTE are deeply optimized and refined. The overall process is shown in Figure 2.

(1) Multi-Generator Module and Multi-Discriminator Module

As shown in Table 1, since a single generator struggles to learn the complex, heterogeneous feature distribution of financial fraud samples, this study introduces a divide-and-conquer strategy. It decomposes the multimodal feature generation task, based on economic priors, among seven parallel, domain-specialized generators. This structure significantly reduces the learning difficulty for each generator, allowing them to capture the highly cohesive distributions within their respective domains more stably and finely. All modules also receive random noise and the fraud label (as a conditional input, i.e., a CGAN architecture) to enhance the diversity and class adherence of the generated samples.

As shown in Table 2, the Joint-FraudGAN comprises five discriminator modules to enforce comprehensive constraints. Beyond the standard Base Discriminator for overall authenticity, this study introduces three Feature Domain Discriminators to ensure intra-domain consistency across subsets. Crucially, a Multi-task Discriminator goes beyond standard outputs by simultaneously verifying class correctness and cycle consistency. This dual-check mechanism prevents the generation of statistically plausible but logically absurd samples (i.e., disguised noise), ensuring the capture of specific toxic configurations of governance failure by the framework.

(2) Joint Constraint Loss Functions and Diversity Enhancement Loss Functions

To fundamentally resolve the logical conflict between cross-modal features, this study introduces the JMMD loss $\mathcal{L}_{JMMD}$ to constrain the generator $G$. JMMD measures the difference between the joint probability distributions of generated and real samples in a Reproducing Kernel Hilbert Space, forcing the generator to learn and reproduce the true fraud patterns that arise from the combined effect of all feature modalities. For instance, it ensures that a generated sample with high debt (a financial feature) is accompanied by a low frequency of uncertainty words (a tone feature, used to conceal risk), reflecting a logical association. Its core formula is as follows:

$$\mathcal{L}_{JMMD} = \left\| \frac{1}{n} \sum_{i=1}^{n} \phi(x_i) - \frac{1}{m} \sum_{j=1}^{m} \phi(\hat{x}_j) \right\|_{\mathcal{H}}^{2}$$

where $\phi(\cdot)$ represents the feature mapping, $x_i$ are real samples, and $\hat{x}_j$ are generated samples. Minimizing $\mathcal{L}_{JMMD}$ forces the generated distribution $P_G$ to converge to the true distribution $P_{data}$ across the entire joint domain, thereby ensuring that the generated fraud samples are logically self-consistent within the domain.
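
To make the joint-distribution idea concrete, the following sketch computes a biased estimate of a squared joint MMD, where the joint kernel is taken as the product of Gaussian kernels over modalities. The kernel choice, the bandwidth, the two-modality layout, and all toy values are assumptions for illustration only.

```python
import math

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian (RBF) kernel between two vectors of one modality."""
    return math.exp(-math.dist(a, b) ** 2 / (2.0 * sigma ** 2))

def joint_kernel(s, t):
    """Product of per-modality kernels; s and t are tuples of modality vectors."""
    k = 1.0
    for a, b in zip(s, t):
        k *= gaussian_kernel(a, b)
    return k

def jmmd2(real, fake):
    """Biased estimate of the squared joint MMD between two sample sets."""
    def mean_k(xs, ys):
        return sum(joint_kernel(x, y) for x in xs for y in ys) / (len(xs) * len(ys))
    return mean_k(real, real) + mean_k(fake, fake) - 2.0 * mean_k(real, fake)

# Each sample = (financial block, tone block): high leverage with a low
# share of uncertainty words. Values are illustrative only.
real        = [([0.90], [0.10]), ([0.80], [0.20]), ([0.85], [0.15])]
consistent  = [([0.88], [0.12]), ([0.82], [0.18]), ([0.86], [0.14])]
conflicting = [([0.88], [0.90]), ([0.82], [0.95]), ([0.86], [0.85])]

loss_consistent = jmmd2(real, consistent)
loss_conflicting = jmmd2(real, conflicting)
```

The `conflicting` set matches the real financial block but pairs it with an implausible tone block, so it receives a clearly larger penalty than the `consistent` set.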

To address the common issue of mode collapse in GANs, this study also introduces a diversity loss $\mathcal{L}_{div}$ as an additional constraint. $\mathcal{L}_{div}$ forces the generator $G$ to map changes in the input noise $z$ to significant changes in the generated sample $G(z)$ by maximizing the mutual information $I(z; G(z))$. Its core formula is as follows:

$$\mathcal{L}_{div} = -I\big(z; G(z)\big)$$

$\mathcal{L}_{div}$ ensures that small variations in the input noise lead to significant differences in the output samples, thereby broadening the distribution of generated samples in the feature space and enhancing the diversity and representativeness of the synthetic fraud samples.

(3) Multi-task Discriminator Loss Function

The task of the discriminator is to maximize its ability to distinguish between real and generated samples while ensuring that the generated samples have the correct fraud label. This study introduces a multi-task discriminator loss ($\mathcal{L}_{MT}$) to drive the Multi-task Discriminator to perform two key tasks: first, class discrimination (classification), ensuring that the generated sample’s label $\hat{y}$ is correct (i.e., $\hat{y} = y$, the conditioning fraud label); and second, cycle-consistency verification, assessing whether the reconstructed representation $\tilde{x}$ remains consistent with the original input $x$. The core formula is as follows:

$$\mathcal{L}_{MT} = \mathrm{BCE}(\hat{y}, y) + \lambda_{cyc} \, \mathcal{L}_{cyc}(\tilde{x}, x)$$

where $\mathrm{BCE}$ is the binary cross-entropy loss, $\mathcal{L}_{cyc}$ measures the discrepancy between $\tilde{x}$ and $x$, and $\lambda_{cyc}$ controls the weight of the cycle-consistency constraint.
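
A minimal numerical sketch of such a two-part loss is given here. The mean absolute error used for the cycle-consistency term is an assumption (the distance actually used may differ), and the function names and toy values are hypothetical.

```python
import math

def bce(p, y, eps=1e-12):
    """Binary cross-entropy for one predicted probability p and label y."""
    p = min(max(p, eps), 1.0 - eps)  # clip to keep the logarithm finite
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def multitask_loss(class_probs, labels, originals, reconstructions, lam_cyc):
    """Classification loss plus a weighted cycle-consistency penalty."""
    cls = sum(bce(p, y) for p, y in zip(class_probs, labels)) / len(labels)
    # Assumed cycle term: mean absolute error between input and reconstruction.
    cyc = sum(
        sum(abs(a - b) for a, b in zip(x, r)) / len(x)
        for x, r in zip(originals, reconstructions)
    ) / len(originals)
    return cls + lam_cyc * cyc

probs = [0.9, 0.8]                       # predicted probability of "fraud"
labels = [1, 1]                          # conditioning labels of the samples
originals = [[1.0, 2.0], [0.5, 0.5]]
reconstructions = [[1.1, 1.9], [0.5, 0.7]]
loss = multitask_loss(probs, labels, originals, reconstructions, lam_cyc=0.5)
```

Setting `lam_cyc` to zero reduces the loss to pure classification, which makes the contribution of the cycle-consistency check easy to isolate.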

(4) Basic Generative Adversarial Loss Function and Total Loss Function

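For each generator $G$ and discriminator $D$, the generator tries to create samples similar to the real data, while the discriminator tries to distinguish between generated and real samples. The basic GAN loss is formulated as:

$$\min_{G} \max_{D} \; \mathcal{L}_{GAN}(G, D) = \mathbb{E}_{x \sim p_{data}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]$$

where $x$ is a sample from the real data distribution, and $z$ is the noise input. The generator $G$ aims to maximize the probability of the discriminator making a mistake, which is equivalent to minimizing $\log(1 - D(G(z)))$.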
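
The adversarial objective described above can be evaluated on a batch of discriminator outputs as follows. This is a sketch of the standard minimax formulation; the function names and the example probabilities are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Negative of the value function, averaged over a batch.

    d_real: discriminator outputs D(x) on real samples.
    d_fake: discriminator outputs D(G(z)) on generated samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Minimax generator objective: the batch mean of log(1 - D(G(z)))."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)

# A generator that fools the discriminator (outputs near 1) has a lower loss.
fooling = generator_loss([0.9, 0.9])
failing = generator_loss([0.1, 0.1])
```

When the discriminator outputs 0.5 everywhere, `discriminator_loss` equals 2 ln 2, the value associated with the theoretical equilibrium of this game.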

During the training process, the total loss is formulated as:

$$\mathcal{L}_{total} = \mathcal{L}_{GAN} + \lambda_1 \mathcal{L}_{JMMD} + \lambda_2 \mathcal{L}_{div}$$

where $\lambda_1$ and $\lambda_2$ control the weights of the JMMD and diversity losses during training. By dynamically adjusting these weights, a balance between salient features and sample diversity can be achieved during the generation process, ensuring that the generated fraud samples are both representative and diverse.

3.2.3. Stage 3: Spatial Configuration Learning—Achieving Deep Diagnosis of Toxic Configurations

This stage aims to capture higher-order spatial configuration patterns among multimodal features through a feature-topology mapping strategy and a 2D-CNN detector, completing the transition from vector-based relationship analysis to spatial relationship diagnosis.

(1) Feature Ordering Principles and Ordered Matrix Transformation (Feature Image Mapping)

This step is not a simple reshaping of dimensions but a theory-driven process that explicitly encodes abstract signal configurations into spatial neighborhood patterns. Before reshaping the trimodal feature vector (total dimension 925) into a two-dimensional feature matrix, we follow the principle of feature interaction efficiency for logical group ordering:

Intra-modal cohesion: Within high-dimensional, homogeneous feature blocks (e.g., the financial features), we perform proximity sorting based on the correlation between features. This ensures that strongly related financial indicators are physically adjacent in the matrix, maximizing the feature extraction efficiency of the 2D-CNN’s local receptive field.

Inter-modal juxtaposition: Features from different modalities that have a strong theoretical interaction logic (e.g., financial ratios representing objective outcomes and sentiment tones reflecting subjective intent) are placed closely together at the block boundaries of the matrix.

Spatial aggregation: For low-dimensional but high-value features, we place them centrally in the matrix to artificially enhance their signal density within the local receptive field, preventing them from being diluted by high-dimensional features.

The final ordering strategy is [financial (134)] → [tone (7)] → [non-financial (16)] → [semantic (768)]. All feature values are normalized and then converted to a pixel intensity scale to serve as a single-channel input for the 2D-CNN. The brightness for missing values or pixels with a zero denominator is set to .

This ordered topological mapping successfully transforms an abstract, higher-order fraud signal configuration (e.g., the synergy between high financial leverage and overly optimistic management rhetoric) into a concrete spatial neighborhood texture that a 2D-CNN can directly recognize.
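
The mapping from an ordered feature vector to a single-channel image can be sketched as follows. The block sizes 134, 7, 16, and 768 come from the ordering strategy above; the assignment of each size to a block, the 25 × 37 matrix shape, the 0 to 255 intensity scale, and the value 0 for missing entries are assumptions made only for this illustration. Inputs are assumed to be already normalized to [0, 1].

```python
def to_feature_image(fin, tone, nonfin, semantic, width, scale=255.0, missing=0.0):
    """Concatenate ordered blocks and reshape them row by row into a matrix.

    None marks a missing value and is mapped to the `missing` intensity.
    """
    ordered = list(fin) + list(tone) + list(nonfin) + list(semantic)
    if len(ordered) % width != 0:
        raise ValueError("vector length must be a multiple of the matrix width")
    pixels = [missing if v is None else min(max(v, 0.0), 1.0) * scale
              for v in ordered]
    return [pixels[r:r + width] for r in range(0, len(pixels), width)]

# Toy blocks with constant values so that block boundaries are easy to see.
fin = [0.5] * 134
tone = [1.0] * 7
nonfin = [0.0] * 16
semantic = [None] + [0.25] * 767   # first semantic entry is missing

image = to_feature_image(fin, tone, nonfin, semantic, width=37)
```

With these assumed sizes the seven tone pixels occupy columns 23 to 29 of row 3, directly after the financial block, so a small convolutional kernel can cover financial and tone values at once.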

(2) 2D-CNN Detector and Deep Spatial Fusion

This stage is the core detection phase for deeply deciphering higher-order interaction patterns. The feature matrix $\mathbf{M}$ generated in the previous step is used as a single-channel input for the 2D-CNN. The two-dimensional receptive field of the 2D-CNN’s convolutional kernels slides over $\mathbf{M}$ and simultaneously aggregates cross-modal feature information from adjacent horizontal and vertical positions. This convolution operation can be formally expressed as:

$$Y^{(l)}_{i,j} = \sigma\!\left( \sum_{p=0}^{P-1} \sum_{q=0}^{Q-1} W^{(l)}_{p,q} \, X^{(l-1)}_{i+p,\, j+q} + b^{(l)} \right)$$

where $Y^{(l)}_{i,j}$ is the output feature map element at position $(i, j)$ in layer $l$, $W^{(l)}$ is the convolutional kernel, $X^{(l-1)}$ is the input feature map from the previous layer (which is $\mathbf{M}$ when $l = 1$), $P$ and $Q$ are the dimensions of the kernel, $\sigma$ is the activation function, and $b^{(l)}$ is the bias term.
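
The convolution described above can be traced by hand with a small pure-Python sketch. It assumes a single channel, stride 1, no padding, and ReLU as the activation function; these settings and the toy values are illustrative and are not taken from the model configuration.

```python
def conv2d(image, kernel, bias=0.0):
    """Valid (no padding), stride-1 2D cross-correlation followed by ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            s = bias
            for p in range(kh):
                for q in range(kw):
                    s += kernel[p][q] * image[i + p][j + q]
            row.append(max(0.0, s))  # ReLU activation
        output.append(row)
    return output

image = [[1.0, 2.0, 3.0],
         [4.0, 5.0, 6.0],
         [7.0, 8.0, 9.0]]
kernel = [[1.0, 0.0],
          [0.0, 1.0]]          # responds to the sum along the diagonal
feature_map = conv2d(image, kernel, bias=-10.0)
# feature_map == [[0.0, 0.0], [2.0, 4.0]]
```

Each output element depends only on a 2 × 2 neighborhood of the input, which is why the placement of features in the matrix determines which interactions a kernel can observe.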

Since the features have been ordered according to interaction efficiency, the 2D-CNN can efficiently capture the toxic configurations encoded as spatial neighborhood patterns (such as the spatial adjacency of optimistic distortion in MD&A text, low governance levels, and high financial leverage). This achieves a deep spatial fusion of heterogeneous multimodal features, ultimately leading to a high-confidence fraud classification.

In summary, SMOTE-FraudGAN (SFG) and 2D-CNN together form an end-to-end system framework (SFG-2DCNN) that addresses the two core pain points of financial fraud identification. It aims to capture the synergistic non-linear relationship between deteriorating financial conditions (Structural-Fin), internal and external environmental pressures (Structural-NonFin), and overly optimistic management/risk concealment (Semantic/Tone).

4. Experimental Design and Results Analysis

This section aims to conduct a comprehensive evaluation of the proposed multimodal intelligent diagnostic framework (SFG-2DCNN) through a rigorous and reproducible empirical design. The core objective of the experimental design is not only to demonstrate the framework’s performance advantage but also to achieve a holistic validation of the contribution of each innovative component and, ultimately, to verify its diagnostic robustness in the complex and dynamic capital market environment.

4.1. Experimental Setup

4.1.1. Data Sources, Sample Definition, and Preprocessing

This study draws on data for Chinese A-share listed companies from 2001 to 2021. The China Securities Regulatory Commission (CSRC) operates a stringent administrative enforcement regime that generates explicit, regulator-verified fraud labels for misconduct ranging from fictitious reporting to material omissions. Enforcement records produced under this regime form a large-scale, well-annotated fraud dataset that is well suited for training data-intensive deep learning models.

Structured data (financial, governance, economic environment, etc.) were sourced from the China Stock Market & Accounting Research (CSMAR) database, while unstructured text data (MD&A) were obtained from the China Research Data Service Platform (CNRDS). Following domain conventions, this study excluded companies in the financial industry and samples with missing key features.

Governance failure (fraud) sample definition: Strictly defined as company-year observations marked in the CSMAR violations table for core financial fraud behaviors (including “fictitious profits,” “inflated assets,” “false records,” “delayed disclosure,” “material omissions,” “misleading disclosures,” and “fraudulent IPOs”).

Control (non-fraud) sample definition: Samples with non-financial violations, abnormal audit opinions, or risk warnings (ST/*ST) were excluded to ensure a clean negative class.

Innovative handling of gray samples: To address the potential contamination of false negatives in the training data, this study introduces a dual cross-validation mechanism using Benford’s Law and Isolation Forest to screen for high-risk observations in the negative class, defining them as Gray Samples. Unlike traditional deletion strategies, this study adopts an innovative approach of marking and retaining the identified gray samples. This allows the subsequent generative model to perceive these ambiguous areas on the boundary between safety and violation, thereby enhancing the model’s ability to recognize the full spectrum of governance risks and improving its overall robustness.
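
The Benford's Law part of this screening can be illustrated with a first-digit test. The sketch below uses the mean absolute deviation from the Benford distribution as the score, which is one common choice and an assumption here; the Isolation Forest step and any flagging threshold are not shown.

```python
import math

# Expected first-digit probabilities under Benford's Law for digits 1..9.
BENFORD = [math.log10(1 + 1 / d) for d in range(1, 10)]

def first_digit(value):
    """Leading non-zero digit of a number, read from scientific notation."""
    return int(f"{abs(value):.15e}"[0])

def benford_mad(values):
    """Mean absolute deviation between observed digit shares and Benford's Law."""
    digits = [first_digit(v) for v in values if v != 0]
    counts = [0] * 9
    for d in digits:
        counts[d - 1] += 1
    n = len(digits)
    return sum(abs(c / n - p) for c, p in zip(counts, BENFORD)) / 9

# Powers of two follow Benford's Law closely; evenly spaced values do not.
natural_like = [2 ** k for k in range(100)]
uniform_like = list(range(100, 1000))

score_natural = benford_mad(natural_like)
score_uniform = benford_mad(uniform_like)
```

A higher score indicates first digits that deviate more strongly from the expected pattern, which is the kind of signal used to mark an observation for closer inspection.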

After screening, a total of 5732 fraud samples and 45,780 non-fraud samples were obtained. A 5-fold cross-validation method was used to divide the dataset into training and testing sets. All sample balancing techniques were applied only to the training set, while the test set maintained its original class distribution for a fair and unbiased final evaluation of the downstream detection models.
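
The rule that balancing touches only the training folds can be sketched as follows. Stratified fold assignment is an assumption (the text specifies only 5-fold cross-validation), and random duplication stands in for whichever balancing method is under evaluation.

```python
import random

def stratified_folds(labels, k=5, seed=0):
    """Assign sample indices to k folds while preserving class proportions."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        indices = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(indices)
        for position, i in enumerate(indices):
            folds[position % k].append(i)
    return folds

def oversample_minority(train_idx, labels, seed=0):
    """Duplicate fraud indices at random until both classes are the same size."""
    rng = random.Random(seed)
    pos = [i for i in train_idx if labels[i] == 1]
    neg = [i for i in train_idx if labels[i] == 0]
    extra = [rng.choice(pos) for _ in range(len(neg) - len(pos))]
    return train_idx + extra

labels = [1] * 10 + [0] * 90          # toy data: 10 fraud, 90 non-fraud
folds = stratified_folds(labels)

splits = []
for f in range(5):
    test_idx = folds[f]                                   # left untouched
    train_idx = [i for g in range(5) if g != f for i in folds[g]]
    balanced_train = oversample_minority(train_idx, labels)
    splits.append((balanced_train, test_idx))
```

Because duplicates are drawn only from training indices, no synthetic or repeated fraud sample can leak into the test fold and inflate the reported metrics.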

4.1.2. Experimental Environment and Model Configuration

All experiments were conducted in an Ubuntu 22.04 LTS environment, using Python 3.10.13 and PyTorch 2.2.1 (CUDA 12.1, cuDNN 9) as the core deep learning framework, accelerated on a single NVIDIA GeForce RTX 4060 Ti 16 GB GPU. Auxiliary tools such as scikit-learn 1.4.2, imbalanced-learn 0.12.2, Transformers 4.41.2, and XGBoost 2.0.3 were used for the baseline model construction, feature engineering, and evaluation.

SMOTE-FraudGAN aims to provide a stable training environment for Joint-FraudGAN (JFGAN) through SMOTE pre-smoothing, ensuring that the generated samples are domain-logically self-consistent and diverse. Key parameter settings are shown in Table 3.

The 2D-CNN detector is designed to decipher higher-order fraud configurations by learning from the spatial topological structure. Key parameter settings are shown in Table 4.

4.1.3. Evaluation Metrics

Considering that in governance failure diagnostics, the cost of false negatives is extremely high and poses a serious threat to market sustainability, we adopt a multi-dimensional evaluation system. The evaluation focuses on two primary metrics, F1-score and the Area Under the ROC Curve (AUC). The F1-score is the harmonic mean of precision and recall and provides a comprehensive assessment of performance on the minority fraud class. AUC is a robust measure of a classifier’s overall discrimination ability across all possible thresholds and is insensitive to class imbalance.

In addition, three secondary metrics are reported to provide a more complete picture of classification performance. Precision measures the proportion of true positives among all observations predicted as fraud, and higher precision indicates a lower false-alarm rate. Recall measures the proportion of actual fraud cases that are correctly identified. Accuracy measures the overall correctness of classification across both fraud and non-fraud samples.
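
The five metrics can be computed from first principles as shown in this sketch. The labels, scores, and the 0.5 decision threshold are toy values chosen for illustration, and AUC is computed through its rank interpretation (the probability that a random fraud case scores higher than a random non-fraud case).

```python
def classification_metrics(y_true, y_pred):
    """Precision, recall, F1, and accuracy with fraud (1) as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / len(y_true)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}

def roc_auc(y_true, scores):
    """Share of positive-negative pairs ranked correctly; ties count as 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.7, 0.2, 0.1, 0.1, 0.05, 0.4, 0.35]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

metrics = classification_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
```

In this toy case accuracy is 0.8 while F1 is only about 0.67, which shows why accuracy alone can look favorable on imbalanced data.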

4.1.4. Baseline Models and Experimental Groups

To systematically validate the performance of our framework and precisely quantify the independent contributions of each innovative component, this study designs an experimental matrix consisting of four major groups.

Proposed full model: The complete SFG-2DCNN framework, which uses SMOTE-FraudGAN for sample balancing and a 2D-CNN based on ordered feature-topology mapping for deep spatial fusion detection.

Group 1. Traditional and single-modality baselines: This group aims to reproduce the performance limits of traditional audit and single-modality models, demonstrating the necessity of multimodal and complex models. A series of baseline models were trained using only structured features, including Z-Score (Altman), an authoritative domain baseline; Logistic Regression (LR-Structural), a linear statistical model baseline; Support Vector Machine (SVM-Structural), a strong non-linear baseline in traditional machine learning; XGBoost (XGB-Structural), a strong baseline for ensemble learning on structured data; and Multilayer Perceptron (MLP-Structural), a basic deep learning baseline.

Group 2. Imbalanced handling baselines: This group aims to validate the superiority of SMOTE-FraudGAN. While uniformly using a 2D-CNN detector, the effects of different sample balancing strategies were compared, including no balancing (Unbalanced-2DCNN), traditional SMOTE (SMOTE-2DCNN), and a basic GAN (BasicGAN-2DCNN).

Group 3. Detecting architecture baselines: This group aims to validate the effectiveness of the 2D-CNN’s deep spatial fusion. Using the high-quality data generated by SMOTE-FraudGAN, the performance of different detectors was compared, including SVM (SFG-SVM), XGBoost (SFG-XGB), MLP (SFG-MLP), and 1D-CNN (SFG-1DCNN).

Group 4. Ablation study variants: This group aims to precisely quantify the independent contributions of the key innovative components in the full model by systematically removing them. The specific settings are shown in Table 5.

4.2. Experimental Results and Analysis

4.2.1. Qualitative Analysis of Generated Sample Quality

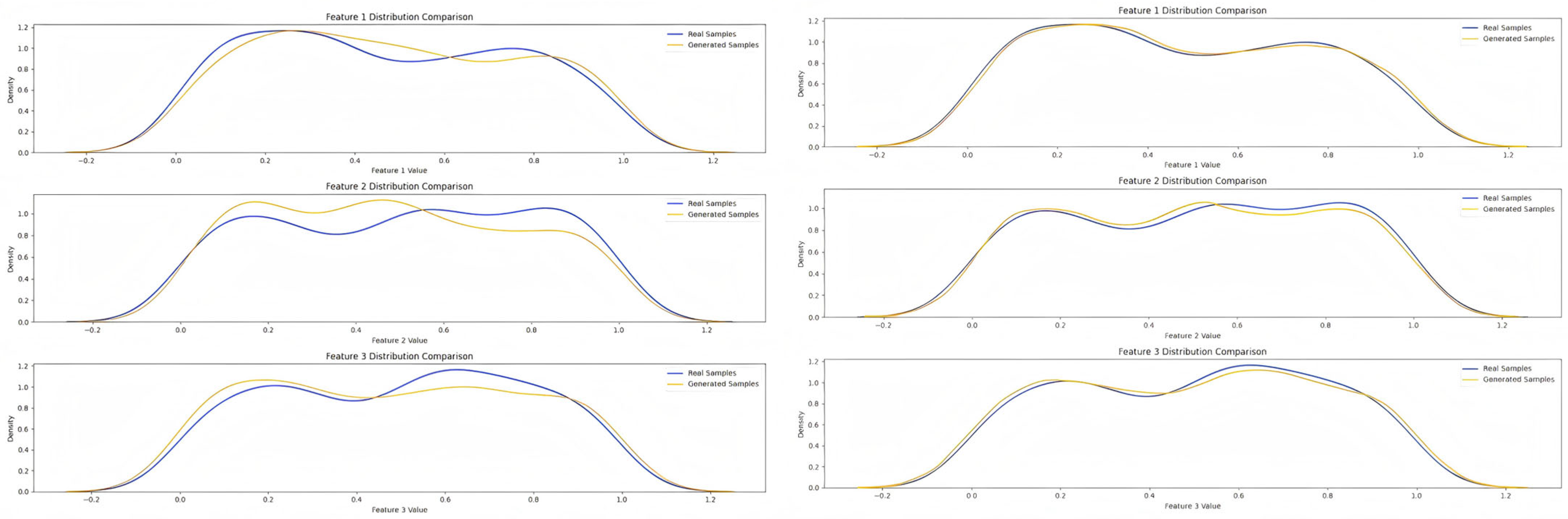

This section aims to visually validate the effectiveness of SMOTE-FraudGAN in addressing the Signal Logicalization challenge, specifically in ensuring the cross-modal joint distribution consistency of the generated samples.

First, the model’s training process was stable. The loss curves (Figure 3) converge smoothly, indicating that the generator and discriminator approached a Nash equilibrium in their dynamic game and avoided common pitfalls in GAN training such as mode collapse. This provides a necessary foundation for generating high-quality samples that are both authentic and diverse.

More importantly, the superiority of the proposed framework is highlighted in the comparison of distribution fidelity (Figure 4). Unlike the significant distributional mismatch produced by a domain-agnostic general-purpose GAN, SMOTE-FraudGAN successfully achieves a precise fit to the true fraud data manifold by using the JMMD loss as a key cross-modal regularization constraint. This mechanism forces the generator to learn and reproduce the true statistical dependencies of heterogeneous features (such as high financial leverage and abnormally optimistic text) in the high-dimensional space. This precise joint distribution alignment ensures that the synthetic samples are highly self-consistent in terms of economic logic, fundamentally overcoming the Signal Logicalization challenge and providing a solid and reliable data foundation for the downstream detector.
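To make the joint-alignment idea concrete, the following is a minimal sketch of a joint MMD statistic. It assumes a Gaussian kernel per modality and a product of the per-modality kernels, which is one common way to build a JMMD; the exact loss used in SMOTE-FraudGAN may differ. The function names, bandwidths, and toy data are illustrative assumptions.

```python
import math


def gaussian_kernel(x, y, bandwidth):
    """RBF kernel between two feature vectors of one modality."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def joint_kernel(sample_a, sample_b, bandwidths):
    """Product of per-modality kernels; a sample is a tuple of modality vectors."""
    value = 1.0
    for part_a, part_b, bw in zip(sample_a, sample_b, bandwidths):
        value *= gaussian_kernel(part_a, part_b, bw)
    return value


def jmmd_squared(real, fake, bandwidths):
    """Biased (V-statistic) estimate of the squared joint MMD between two sample sets."""
    def mean_kernel(xs, ys):
        total = sum(joint_kernel(x, y, bandwidths) for x in xs for y in ys)
        return total / (len(xs) * len(ys))

    return mean_kernel(real, real) + mean_kernel(fake, fake) - 2.0 * mean_kernel(real, fake)


# Toy data (hypothetical): each sample is (financial vector, textual vector).
grid = [i / 10 for i in range(11)]
real = [([x], [x]) for x in grid]                    # leverage and tone rise together
aligned = [([x + 0.01], [x + 0.01]) for x in grid]   # nearly the same joint pattern
mismatched = [([x], [1.0 - x]) for x in grid]        # same marginals, reversed pairing

bandwidths = (0.3, 0.3)
gap_aligned = jmmd_squared(real, aligned, bandwidths)
gap_mismatched = jmmd_squared(real, mismatched, bandwidths)
print(gap_aligned < gap_mismatched)
```

The mismatched set has the same marginal distributions as the real set, so a loss that only compares each modality separately would not penalize it. The joint statistic does, which is the property the cross-modal constraint relies on.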

4.2.2. Overall Performance Comparison: Validating the Model’s Superiority

This section comprehensively validates the performance superiority of the proposed framework by systematically comparing the Full-Model (SFG-2DCNN) with three major groups of baseline models (Groups 1, 2, and 3).

As shown in Table 6, the proposed SFG-2DCNN achieves the best performance across all key metrics, with an AUC of 0.942 and an F1-score of 0.917. This result not only significantly surpasses all baseline models, breaking through the performance bottleneck of existing detection paradigms, but also provides initial confirmation of the framework’s success in systematically addressing the two deeply coupled challenges of Signal Logicalization and Configuration Spatialization.
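For reference, the two headline metrics can be computed as follows. This is a minimal sketch on hypothetical toy labels and scores, not the paper’s evaluation code; the 0.5 decision threshold is an assumption.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def f1_score(labels, predictions):
    """Harmonic mean of precision and recall for the positive (fraud) class."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical toy example: 3 fraud firms, 5 non-fraud firms.
labels = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1, 0.05]
predictions = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

auc = roc_auc(labels, scores)            # 14 of 15 positive-negative pairs ranked correctly
f1 = f1_score(labels, predictions)       # precision = recall = 2/3
print(round(auc, 3), round(f1, 3))
```

AUC is threshold-free and measures ranking quality, whereas F1 depends on the chosen threshold, which is why both are reported for an imbalanced task.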

Analysis 1: The Necessity of Multimodal Configurational Perspective (Comparison with Group 1)

This experiment aims to validate the limitations of traditional detection paradigms that rely solely on structured data. The results show that even the best-performing baseline in Group 1, XGB-Structural, has a large performance gap compared to the full model. This significant difference strongly supports the multimodal configurational thinking advocated in this study, indicating that relying only on objective, easily manipulated structured data cannot capture the full picture of fraudulent behavior. The integration of the company’s internal and external environment (non-financial ratios) and subjective textual information (MD&A sentiment, higher-order semantics) is crucial for identifying covert toxic configurations.

Analysis 2: The Value of Joint Distribution Alignment (Comparison with Group 2)

This experiment aims to validate the superiority of the SMOTE-FraudGAN augmentation strategy while keeping the detector consistent. The traditional linear interpolation method, SMOTE-2DCNN, is limited in performance because it cannot capture the highly non-linear characteristics of fraudulent behavior. The general-purpose, domain-agnostic BasicGAN-2DCNN, although better than SMOTE, fails to achieve optimal performance due to a lack of cross-modal consistency constraints. The superiority of SFG-2DCNN is attributed to SMOTE-FraudGAN’s success in resolving the dilemma between diversity and domain-logical consistency in generated samples. Its core JMMD joint constraint forces the generated samples to reproduce the true cross-domain feature joint distribution, thereby providing high-quality, logically self-consistent training data for the downstream detector, which is key to overcoming the Signal Logicalization challenge.
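The limitation of linear interpolation can be illustrated with a small sketch. It implements the basic SMOTE step (interpolating between a minority sample and one of its nearest minority neighbours) on a hypothetical curved manifold, y = x², chosen only for illustration; the helper names and parameters are assumptions.

```python
import random


def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def smote_sample(minority, k, rng):
    """One synthetic point on the segment between a minority sample and a near neighbour."""
    base = rng.choice(minority)
    neighbours = sorted(
        (p for p in minority if p is not base),
        key=lambda p: squared_distance(base, p),
    )[:k]
    neighbour = rng.choice(neighbours)
    gap = rng.random()  # interpolation coefficient in [0, 1)
    return [b + gap * (n - b) for b, n in zip(base, neighbour)]


rng = random.Random(0)
# Hypothetical minority samples lying exactly on the curve y = x^2.
minority = [[x / 2, (x / 2) ** 2] for x in range(7)]
synthetic = [smote_sample(minority, k=2, rng=rng) for _ in range(50)]

# Vertical distance of each synthetic point from the curve it should lie on.
off_manifold = [y - x ** 2 for x, y in synthetic]
print(max(off_manifold) > 0)
```

Every synthetic point lies on a chord between two real samples, so on a curved manifold the generated points drift off the true surface. This is the geometric reason a purely interpolative method struggles with non-linear fraud patterns.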

Analysis 3: The Effectiveness of 2D-CNN Spatial Fusion (Comparison with Group 3)

This experiment aims to validate the superiority of the 2D-CNN architecture in capturing spatial configurations, while keeping the input data quality consistent. The performance of SFG-2DCNN is significantly better than that of the state-of-the-art model for tabular data, SFG-XGB, and the sequential model, SFG-1DCNN. This comparison directly validates the effectiveness of the proposed feature-topology mapping strategy. By recasting vector-wise feature relations into an ordered spatial layout, the 2D-CNN leverages its two-dimensional receptive field to efficiently capture toxic configurations expressed as local neighborhood patterns. This establishes the 2D-CNN as the preferred detector for deep spatial fusion of heterogeneous multimodal features and constitutes the key algorithmic realization of the Configuration Spatialization breakthrough.
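The mapping from an ordered feature vector to a 2D input can be sketched as follows. The row-major layout, the grid width, the three feature domains, and the toy values are assumptions made for illustration and are not the paper’s actual ordering.

```python
def to_matrix(ordered_features, width, pad_value=0.0):
    """Lay an ordered feature vector row-major into a 2D grid, padding the last row."""
    padded = list(ordered_features)
    while len(padded) % width:
        padded.append(pad_value)
    return [padded[i:i + width] for i in range(0, len(padded), width)]


def conv2d_valid(matrix, kernel):
    """Plain 'valid' 2D cross-correlation with a square kernel (no padding, stride 1)."""
    k = len(kernel)
    rows, cols = len(matrix), len(matrix[0])
    return [
        [
            sum(matrix[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
            for c in range(cols - k + 1)
        ]
        for r in range(rows - k + 1)
    ]


# Hypothetical ordered vector: 4 financial, 4 governance, 4 textual features.
financial = [1.0, 1.0, 1.0, 1.0]
governance = [0.0, 0.0, 1.0, 1.0]
textual = [0.0, 0.0, 1.0, 1.0]
grid = to_matrix(financial + governance + textual, width=4)

# A 2x2 kernel of ones sums each local neighbourhood, including cells
# that straddle the boundary between two feature domains.
response = conv2d_valid(grid, [[1.0, 1.0], [1.0, 1.0]])
print(response)  # [[2.0, 3.0, 4.0], [0.0, 2.0, 4.0]]
```

The largest responses occur where high values in adjacent domains sit next to each other in the grid. A flat vector has no such vertical neighbours, so a 1D or tabular model would have to learn that co-occurrence some other way.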

4.2.3. Ablation Study: Validating the Model’s Innovations

This section aims to precisely quantify the independent contribution of each core innovative component of the SFG-2DCNN to its final performance by systematically removing them, thereby achieving a comprehensive, component-level validation of the proposed methodology.

Group A Ablation: Validation of the Domain-Adaptive Generative Network (SMOTE-FraudGAN)

This group of experiments validates the effectiveness of the key constraints and strategies in the SMOTE-FraudGAN architecture (such as the joint consistency loss, the diversity loss, and multi-generator divide-and-conquer) in addressing the Signal Logicalization challenge.

The results of the Group A ablation study (Table 7) provide causal validation for the internal mechanisms of SMOTE-FraudGAN. Removing the JMMD loss, the core constraint, led to the most severe performance degradation in AUC among the variants, confirming that cross-modal feature joint distribution alignment is the fundamental driver for generating high-quality samples. Furthermore, using a single generator (V-Single-G) and removing the SMOTE pre-smoothing stage (V-w/o SMOTE) both resulted in significant performance drops. These results validate the contribution of the divide-and-conquer strategy, which stabilizes the learning of heterogeneous feature distributions, and of the hybrid mechanism, which provides an optimized initial distribution for stable GAN training on a sparse manifold.

Group B Ablation: Validation of Spatial Configuration Learning and Feature Ordering Principles

This group of experiments aims to validate the contribution of the feature ordering principle, based on economic theory, in the feature matrix transformation strategy for tackling the Configuration Spatialization challenge.

As shown in Table 8, randomly shuffling the feature order (V-Shuffled) led to a significant drop in AUC, providing direct causal evidence for the criticality of feature spatial layout. This result indicates that the superiority of the 2D-CNN is not coincidental but depends on the feature-topology mapping strategy. When this strategy is disrupted, adjacent pixels in the matrix lose their semantic correlation and the spatial neighborhood texture is destroyed, which supports the view that this mapping is the core mechanism for encoding abstract toxic configurations into patterns recognizable by the 2D-CNN.
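The effect of shuffling on neighborhood structure can be quantified with a simple sketch. It measures the share of adjacent grid cells that belong to the same feature domain, before and after a random permutation. The 6×6 grid, the three equally sized domains, and the metric itself are illustrative assumptions rather than quantities from the paper.

```python
import random


def same_domain_adjacency(labels, width):
    """Share of horizontally or vertically adjacent cells whose features share a domain."""
    rows = [labels[i:i + width] for i in range(0, len(labels), width)]
    same = total = 0
    for r, row in enumerate(rows):
        for c, label in enumerate(row):
            if c + 1 < len(row):          # right-hand neighbour
                total += 1
                same += label == row[c + 1]
            if r + 1 < len(rows):         # neighbour below
                total += 1
                same += label == rows[r + 1][c]
    return same / total


# Hypothetical layout: 12 features per domain, ordered domain by domain.
ordered = ["financial"] * 12 + ["governance"] * 12 + ["textual"] * 12
shuffled = ordered[:]
random.Random(0).shuffle(shuffled)

ordered_share = same_domain_adjacency(ordered, width=6)
shuffled_share = same_domain_adjacency(shuffled, width=6)
print(ordered_share, shuffled_share)
```

In the ordered layout, 48 of the 60 adjacent pairs share a domain (0.8); after shuffling, most neighbours are unrelated. A convolution kernel sliding over the shuffled grid therefore sees arbitrary feature combinations rather than coherent local patterns.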

Group C Ablation: Analysis of Multimodal Feature Gains

This group of experiments quantifies the performance gain of multimodal fusion relative to single modalities and analyzes the independent contributions of different feature domains.

The results of the Group C ablation study (Table 9) confirm the indispensability of multimodal fusion. Relying on a single modality (V-Structural Only or V-Textual Only) led to substantial AUC degradation in both cases, indicating that the synergistic predictive power of fusing objective results with subjective intent is crucial. Within the textual domain, higher-order semantic features (V-w/o Semantic) and sentiment tone features (V-w/o Tone) also demonstrated unique complementary value: the former provided higher-information-density risk signals, while the latter served as an interpretable probe for affective dissonance, offering indispensable supplementary information.

4.2.4. Sensitivity and Robustness Analysis: Exploring the Credibility and Boundaries of Core Conclusions

(1) Sensitivity Analysis: Exploring the Optimal Boundaries of Core Hyperparameters

This section aims to validate the reasonableness and optimality of the model’s key hyperparameter choices.

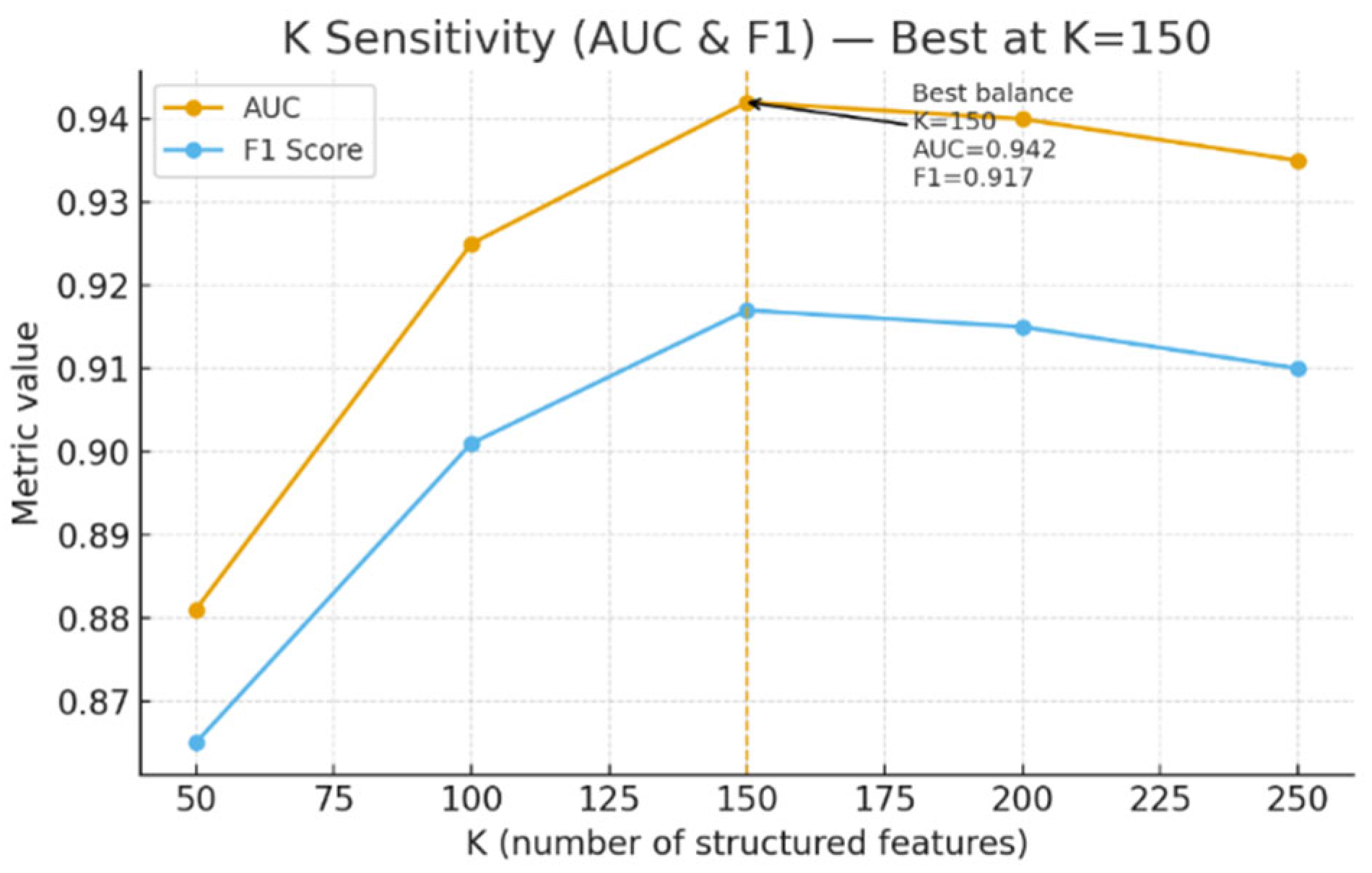

Analysis of the number of structured features (K value): This experiment systematically tested the performance changes in the downstream 2D-CNN when different numbers of features K were retained after XGBoost gain ranking in the structured feature selection process. As shown in Figure 5, the model’s AUC rises rapidly and then plateaus as K increases, reaching its best balance at the selected K. When K increases further, performance decreases slightly, indicating that redundant or noisy features have been introduced. This result suggests that the dual screening through point-biserial correlation and XGBoost gain is effective.

Figure 5. Sensitivity Analysis of the Number of Structured Features.
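The dual-screening step can be sketched as a correlation filter followed by a top-K cut on importance scores. The point-biserial formula below is standard; the correlation threshold, the feature names, and the gain values are hypothetical placeholders (in practice the gains would come from a fitted XGBoost model).

```python
import statistics


def point_biserial(values, labels):
    """Point-biserial correlation between a continuous feature and a binary label."""
    group1 = [v for v, y in zip(values, labels) if y == 1]
    group0 = [v for v, y in zip(values, labels) if y == 0]
    n1, n0, n = len(group1), len(group0), len(values)
    spread = statistics.pstdev(values)  # population standard deviation of all values
    mean_gap = statistics.mean(group1) - statistics.mean(group0)
    return mean_gap / spread * ((n1 * n0) ** 0.5 / n)


def dual_screen(features, labels, gains, min_abs_corr, k):
    """Keep features passing the correlation filter, then the k with the highest gain."""
    kept = [name for name, vals in features.items()
            if abs(point_biserial(vals, labels)) >= min_abs_corr]
    return sorted(kept, key=gains.get, reverse=True)[:k]


# Hypothetical toy data: 3 non-fraud firms followed by 3 fraud firms.
labels = [0, 0, 0, 1, 1, 1]
features = {
    "leverage": [0.2, 0.3, 0.25, 0.7, 0.8, 0.75],
    "cash_ratio": [0.9, 0.8, 0.85, 0.3, 0.2, 0.4],
    "noise": [0.5, 0.1, 0.9, 0.5, 0.1, 0.9],
}
gains = {"leverage": 0.6, "cash_ratio": 0.3, "noise": 0.1}  # placeholder importances

selected = dual_screen(features, labels, gains, min_abs_corr=0.3, k=2)
print(selected)  # ['leverage', 'cash_ratio']
```

Sweeping `k` over a range and re-training the detector for each value would produce a sensitivity curve of the kind shown in Figure 5.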

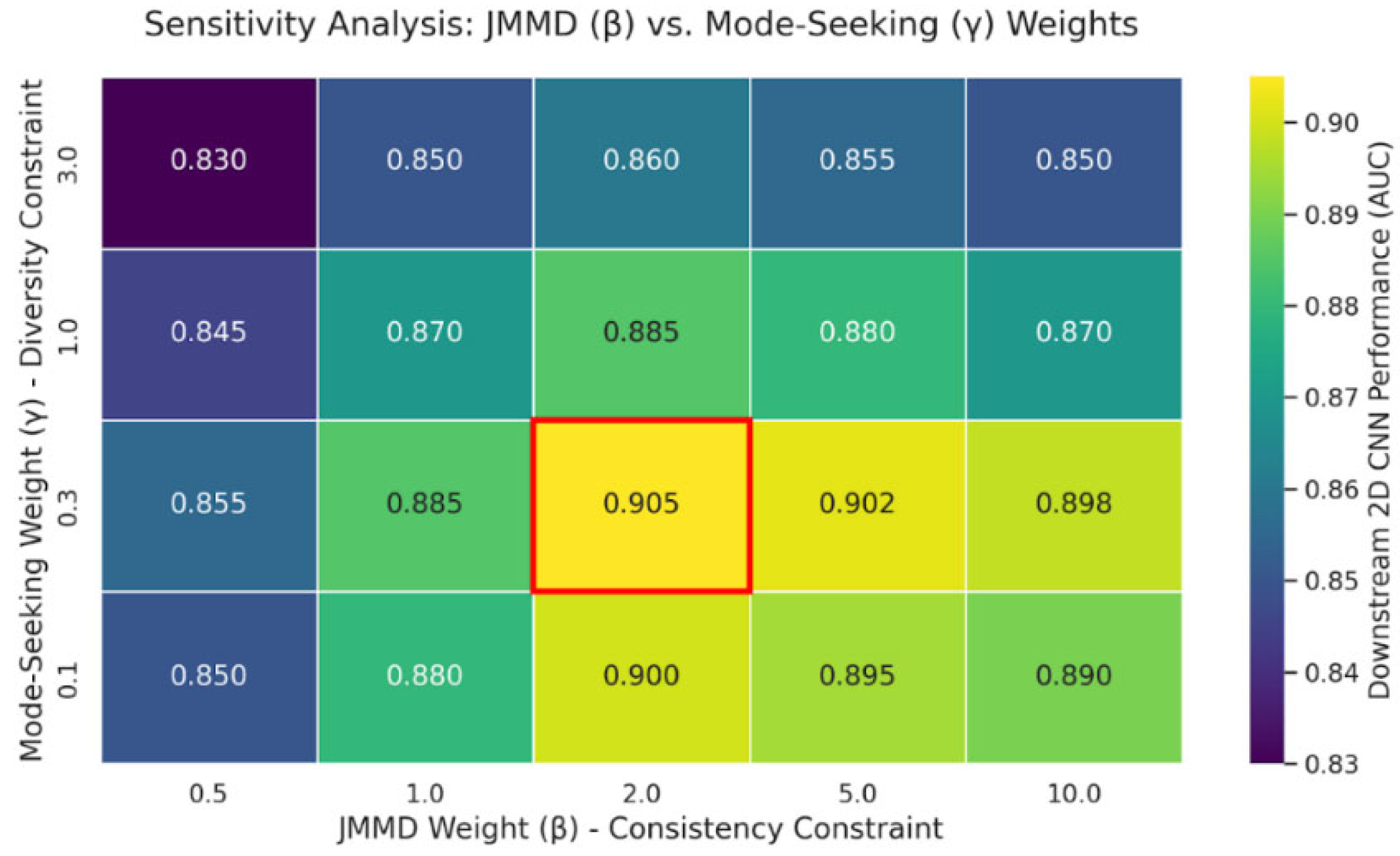

Balance analysis of SMOTE-FraudGAN loss weights: This experiment used a grid search strategy to systematically adjust the combination of the weight on the joint consistency loss and the weight on the diversity loss, and evaluated the final performance of the downstream 2D-CNN. As shown in Figure 6, too low a weight leads to domain-logically inconsistent generated samples, while too high a weight causes the generated samples to deviate from the true data manifold, reducing their realism. The selected weight combination was found to balance the core trade-off of “realism vs. diversity,” supporting the quality of the generated samples.

Figure 6. Sensitivity Analysis of SMOTE-FraudGAN Loss Weights.
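A grid search over two loss weights can be sketched as below. The candidate grids and the scoring function are stand-ins: `toy_score` is a hypothetical surrogate with a single peak, used only so the example runs, whereas the real procedure would train SMOTE-FraudGAN and the downstream 2D-CNN for each pair and return a validation metric.

```python
from itertools import product


def grid_search(consistency_weights, diversity_weights, evaluate):
    """Return the (consistency, diversity) weight pair with the highest score."""
    return max(
        product(consistency_weights, diversity_weights),
        key=lambda pair: evaluate(*pair),
    )


def toy_score(consistency_weight, diversity_weight):
    """Hypothetical surrogate peaked at (1.0, 0.5); NOT a result from the paper."""
    return -(consistency_weight - 1.0) ** 2 - (diversity_weight - 0.5) ** 2


best = grid_search([0.1, 0.5, 1.0, 2.0], [0.1, 0.5, 1.0], toy_score)
print(best)  # (1.0, 0.5)
```

Because each evaluation involves a full generator and detector training run, the grid is usually kept coarse and the weights are tuned on validation data only.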

Analysis of Convolutional Kernel Size: As shown in Table 10, this experiment examined the impact of different convolutional kernel sizes on the ability to capture spatial configurations, finding that the model performed best at an intermediate kernel size (AUC 0.942, F1 0.917). Kernels that are too small cannot cover sufficient cross-modal boundary information, leading to inadequate fusion, while oversized kernels are prone to capturing irrelevant long-distance features, introducing noise.

Table 10. Sensitivity Analysis of Convolutional Kernel Size.

| Kernel Size | AUC | F1 |
|---|---|---|
| | 0.880 | 0.875 |
| | 0.942 | 0.917 |
| | 0.930 | 0.905 |
| | 0.915 | 0.895 |

(2) Robustness Analysis: Evaluating Key Design Choices and Generalization Ability

This section evaluates the robustness of the core findings by varying key assumptions and data-partitioning schemes.

Gray sample handling strategy: This experiment added two alternative sample handling methods, Robust-Naive (not identifying gray samples) and Robust-DeleteGray (completely removing gray samples), to validate the effectiveness of the proposed strategy of marking gray samples. The experimental results in Table 11 indicate that Robust-Naive performed the worst, suggesting that false negative samples severely contaminate the negative class data. Although Robust-DeleteGray performed better than Robust-Naive, it was significantly inferior to the Full Model. This result supports the proposed mark-and-retain strategy for gray samples, which allows SMOTE-FraudGAN to perceive high-risk samples at the edge of abnormality and thereby improves the model’s ability to recognize complex decision boundaries.

Table 11. Robustness Analysis of Gray Sample Handling Strategies.

| Handling Strategy | AUC | F1 |
|---|---|---|
| Robust-Naive | 0.865 | 0.850 |
| Robust-DeleteGray | 0.895 | 0.875 |
| Full Model | 0.942 | 0.917 |

Robustness Test of Temporal Stability: This experiment used a rolling time window approach to verify the temporal stability of our model. As shown in Table 12, the AUC of SFG-2DCNN remained consistently higher than that of XGB-Structural across all windows, with a lower standard deviation (0.010 versus 0.026). This suggests that SFG-2DCNN has learned configurational patterns of fraudulent behavior that persist across periods, rather than superficial correlations specific to a historical window, and that it possesses high temporal stability and generalization ability.

Table 12. Robustness Analysis of Temporal Stability.

| Training Window | Test Window | SFG-2DCNN (AUC) | XGB-Structural (AUC) |
|---|---|---|---|
| 2001–2011 | 2012–2013 | 0.885 | 0.745 |
| 2001–2013 | 2014–2015 | 0.879 | 0.690 |
| 2001–2015 | 2016–2017 | 0.881 | 0.730 |
| 2001–2017 | 2018–2019 | 0.868 | 0.720 |
| 2001–2019 | 2020–2021 | 0.862 | 0.685 |
| Mean | | 0.875 | 0.714 |
| Std. Dev. | | ±0.010 | ±0.026 |
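The window scheme and summary rows of Table 12 can be reproduced with a short sketch. The AUC values are copied from the table; the helper name and its parameters are assumptions.

```python
import statistics


def expanding_windows(first_year, first_train_end, last_test_end, test_span=2):
    """Expanding training window with a fixed-length test window that follows it."""
    windows = []
    train_end = first_train_end
    while train_end + test_span <= last_test_end:
        windows.append(((first_year, train_end), (train_end + 1, train_end + test_span)))
        train_end += test_span
    return windows


windows = expanding_windows(2001, 2011, 2021)

# Per-window AUC values as reported in Table 12.
sfg_auc = [0.885, 0.879, 0.881, 0.868, 0.862]
xgb_auc = [0.745, 0.690, 0.730, 0.720, 0.685]

for name, aucs in (("SFG-2DCNN", sfg_auc), ("XGB-Structural", xgb_auc)):
    mean = statistics.mean(aucs)
    std = statistics.stdev(aucs)  # sample standard deviation (n - 1 denominator)
    print(f"{name}: mean={mean:.3f}, std={std:.3f}")
```

The reported standard deviations match the sample estimator (n − 1 denominator). Each training window ends strictly before its test window begins, so no future information leaks into training.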

Robustness test of data partitioning method (K-fold cross-validation): This experiment assessed the stability of our model under different data partitioning strategies, including 5-fold, 10-fold, and 20-fold cross-validation and a traditional single random split. As shown in Table 13, the proposed framework’s average performance was highly consistent across all cross-validation settings, with an extremely low standard deviation (≤0.005). This indicates that the model is robust to data partitioning and supports the stability and credibility of our experimental conclusions.
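A partitioning routine of this kind can be sketched as follows. Stratification by class is an assumption made here because fraud samples are rare; the text does not state whether the folds were stratified. The class sizes are hypothetical.

```python
import random


def stratified_kfold(labels, k, seed=0):
    """Split sample indices into k folds, preserving the class ratio in each fold."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        indices = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(indices)
        for position, index in enumerate(indices):
            folds[position % k].append(index)  # deal indices round-robin
    return folds


# Hypothetical imbalanced data set: 10 fraud and 90 non-fraud samples.
labels = [1] * 10 + [0] * 90
folds = stratified_kfold(labels, k=5)

for fold in folds:
    fraud_count = sum(labels[i] for i in fold)
    print(len(fold), fraud_count)  # each fold: 20 samples, 2 of them fraud
```

Each fold serves once as the test set while the remaining folds are used for training; repeating this for several values of k gives the per-setting mean and standard deviation.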

4.2.5. Visual Interpretation of Toxic Configurations

To visualize the toxic configurations the model attends to, we applied Gradient-weighted Class Activation Mapping (Grad-CAM) to a randomly selected fraud sample. As shown in Figure 7, the heatmap reveals a hierarchical and distributed attention structure: the primary hotspot (Red Zone) concentrates at the interface of financial pressure and textual sentiment, which is consistent with the detection of spatial synergies between financial distress and affective dissonance. Simultaneously, a secondary cluster (Orange) and scattered points capture MD&A anomalies and isolated red flags. This indicates that SFG-2DCNN integrates holistic configurations with granular signals for robust diagnosis.
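For readers unfamiliar with Grad-CAM, the core computation can be sketched as below. Each feature map of the last convolutional layer is weighted by the spatial average of the class-score gradient, the weighted maps are summed, and a ReLU keeps only positive evidence. The activations and gradients here are hypothetical toy values; in practice they would be obtained from the trained network via automatic differentiation.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from K feature maps and the gradients of the class score.

    Both arguments are lists of K maps, each an H x W nested list.
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights: global average pooling of the gradients.
    weights = [sum(sum(row) for row in grad) / (height * width) for grad in gradients]
    # Weighted sum over channels, followed by ReLU.
    cam = [
        [max(0.0, sum(w * act[r][c] for w, act in zip(weights, activations)))
         for c in range(width)]
        for r in range(height)
    ]
    peak = max(max(row) for row in cam)
    # Normalise to [0, 1] for display when the map is not all zeros.
    return [[value / peak for value in row] for row in cam] if peak > 0 else cam


# Hypothetical example with two 2x2 feature maps.
activations = [
    [[1.0, 0.0], [0.0, 0.0]],   # channel 0 fires in the top-left cell
    [[0.0, 0.0], [0.0, 2.0]],   # channel 1 fires in the bottom-right cell
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],       # channel 0 supports the fraud class
    [[-1.0, -1.0], [-1.0, -1.0]],   # channel 1 counts against it
]
heatmap = grad_cam(activations, gradients)
print(heatmap)  # [[1.0, 0.0], [0.0, 0.0]]
```

Only the region whose activation raises the fraud score survives the ReLU, which is why the hotspots in such a heatmap can be read as the grid cells that pushed the prediction toward the fraud class.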

4.3. Experimental Conclusion and Discussion

Overall, the empirical results in Section 4 provide convergent evidence that the proposed SFG-2DCNN framework effectively addresses the coupled challenges of Signal Logicalization and Configuration Spatialization.

First, the comparative experiments establish the external performance advantage of SFG-2DCNN. Its best results (AUC of 0.942, F1-score of 0.917) clearly exceed all baseline models, indicating that relative to traditional sample-balancing methods (e.g., SMOTE, GAN) and vector-based detectors (e.g., XGBoost, 1D-CNN), the integrated design of sample generation and multimodal spatial fusion is essential for achieving a substantial improvement in detection accuracy. This finding is consistent with a configurational view of fraud and suggests that capturing higher-order toxic configurations through spatial fusion is more effective than assessing marginal variable contributions.

Second, the ablation studies provide strong support for the internal mechanisms of the framework. Removing the JMMD-based joint constraint produces the largest decline in AUC, highlighting the central role of domain-adaptive sample generation in addressing the Signal Logicalization challenge. Disrupting the spatial feature layout also leads to a marked drop in AUC, which confirms the importance of spatial configuration learning for tackling the Configuration Spatialization challenge. In contrast to general-purpose generative models, these results underline that enforcing intrinsic economic logic through joint distribution alignment is critical for synthesizing valid financial data.

Finally, the sensitivity and robustness analyses show that the framework is relatively insensitive to key hyperparameters and remains stable under temporal and cross-validation tests. This stability indicates that spatial configuration learning captures robust structural patterns rather than transient correlations and offers an advantage over shallow fusion strategies that are prone to overfitting.

In summary, this framework, through the two pillars of joint distribution alignment and spatial configuration learning, successfully overcomes the core challenges of data scarcity and signal covertness, and its conclusions are highly credible.