Research on Wetland Fine Classification Based on Remote Sensing Images with Multi-Temporal and Feature Optimization

Abstract

1. Introduction

- (1) A multi-satellite and multi-temporal remote sensing image fusion module is proposed. The fused information fills gaps in remote sensing image data that are susceptible to interference from clouds, fog, and shadows, which preserves the integrity of the information. It also yields data that combine high spatial resolution with rich spectral and texture information, and it incorporates the seasonal color changes of wetlands into the features, which increases the separability of wetland areas and improves the accuracy of regional classification.

- (2) A Feature Optimization Module is proposed that removes information that causes confusion between features as well as duplicated features, which reduces the data dimensionality and keeps the "curse of dimensionality" from interfering with the model. Because only the key features are retained, the amount of data the model must process is significantly reduced and hardware resource consumption is lowered, so the model can be trained and can classify faster.
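
The idea of discarding duplicated features in contribution (2) can be illustrated with a minimal sketch. The selection criterion is not specified at this point in the text, so the code below substitutes a simple greedy correlation filter as a stand-in; the function names, the 0.95 threshold, and the sample values are all hypothetical and are not taken from the paper.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def drop_redundant(features, threshold=0.95):
    """Greedily keep a feature only if |r| with every already-kept feature is below threshold."""
    kept = []
    for name, values in features.items():
        if all(abs(pearson(values, features[k])) < threshold for k in kept):
            kept.append(name)
    return kept


# Hypothetical per-pixel samples; the labels only imitate a "feature_month" naming style.
samples = {
    "NDVI_6": [0.10, 0.40, 0.65, 0.80, 0.30],
    "SAVI_6": [0.15, 0.60, 0.975, 1.20, 0.45],  # 1.5 x NDVI_6, so fully redundant
    "B12_6": [0.30, 0.05, 0.20, 0.10, 0.25],
}
selected = drop_redundant(samples)
print(selected)
```

In this toy case the second feature is a scaled copy of the first and is dropped, while the weakly correlated third feature is kept. A real pipeline would likely combine such a redundancy check with a relevance measure against the class labels.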

2. Materials and Methods

2.1. Methods

2.1.1. Multi-Satellite and Multi-Temporal Remote Sensing Image Fusion Module

2.1.2. Feature Optimization Module

2.1.3. Feature Classification Network Module

2.2. Case Study

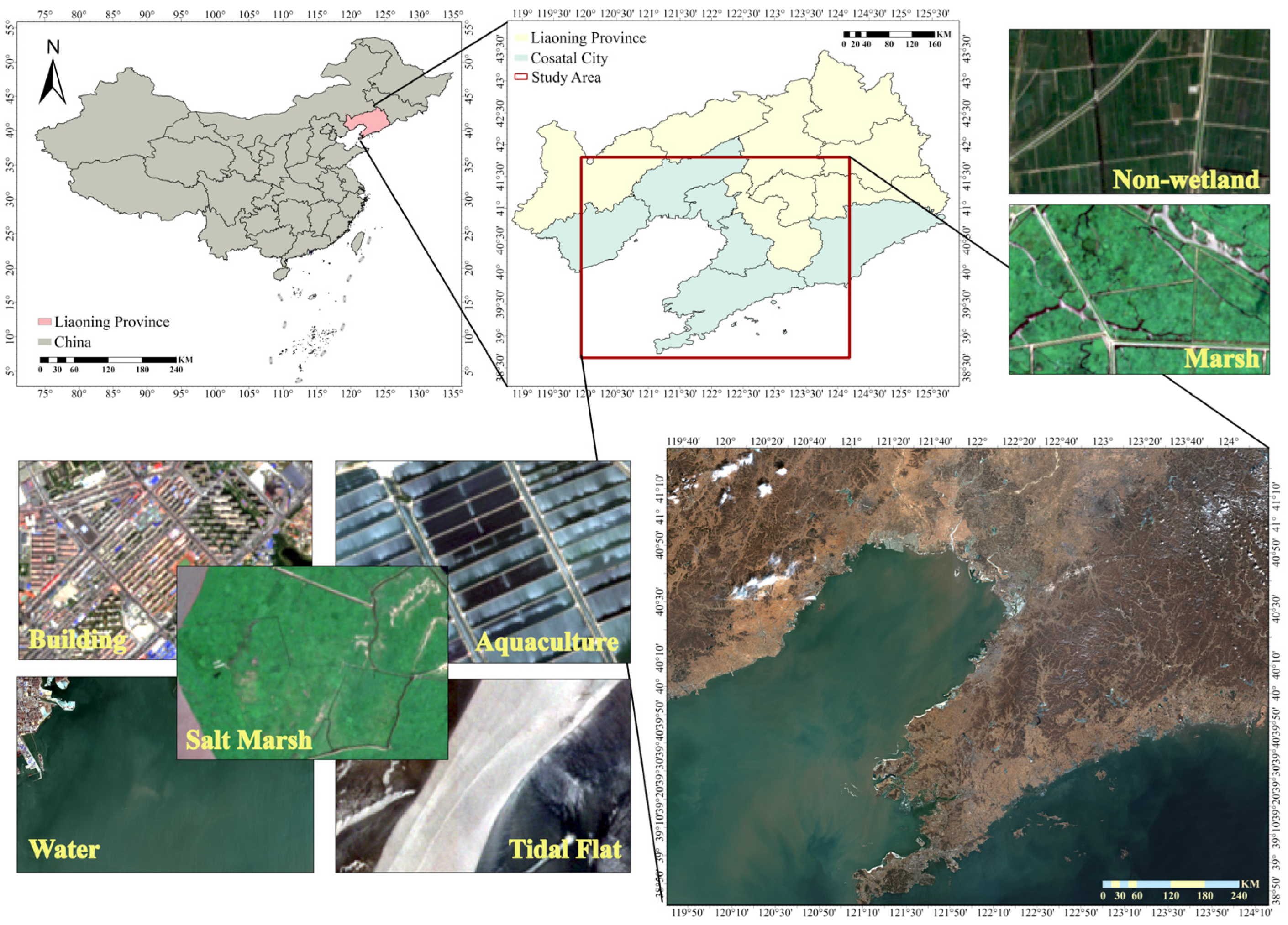

2.2.1. Study Area

2.2.2. Wetland Category

2.2.3. Experimental Environment

2.2.4. Accuracy Assessment

2.2.5. Experimental Scheme Settings

3. Results

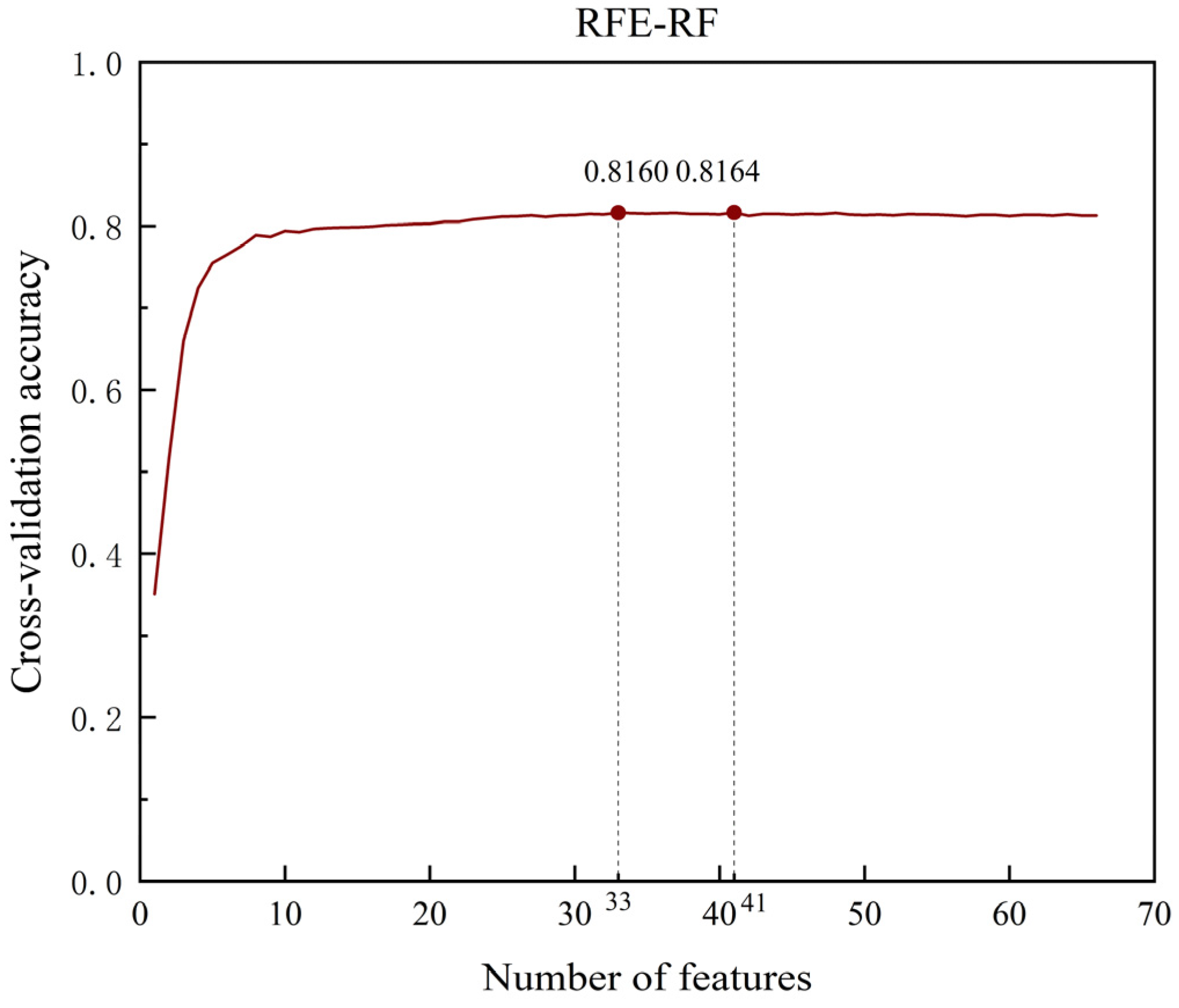

3.1. Feature Optimization Results

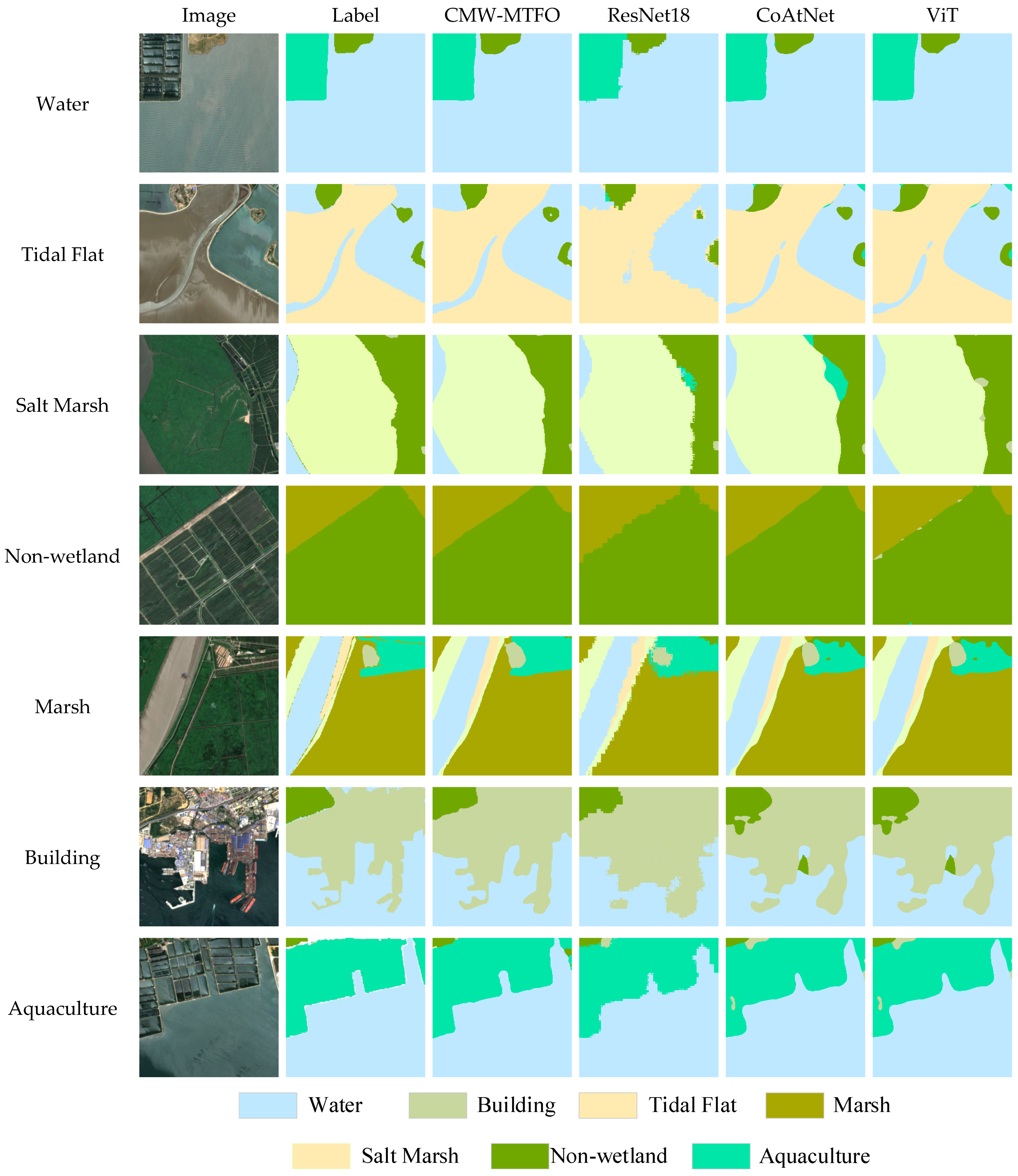

3.2. Evaluation of the Effectiveness and Accuracy of Wetland Classification Based on CMW-MTFO

3.3. Impact of the Feature Optimization Module in CMW-MTFO on Wetland Classification Accuracy

3.4. Comparison of Wetland Classification Results Based on Multi-Temporal and Single-Temporal Images

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Satellite/Sensor | Data Level | Acquisition Date |
|---|---|---|
| Sentinel-2A/MSI | L2A | 6 March 2024; 24 June 2024; 22 September 2024; 21 November 2024 |
| Sentinel-2B/MSI | L2A | 8 March 2024; 26 June 2024; 24 September 2024; 23 November 2024 |

| Band | Spectral Range (μm) | Spatial Resolution (m) |
|---|---|---|
| B2 (Blue) | 0.458~0.523 | 10 |
| B3 (Green) | 0.543~0.578 | 10 |
| B4 (Red) | 0.650~0.680 | 10 |
| B5 (RedEdge1) | 0.698~0.713 | 20 |
| B6 (RedEdge2) | 0.733~0.748 | 20 |
| B7 (RedEdge3) | 0.773~0.793 | 20 |
| B8 (NIR) | 0.785~0.900 | 10 |
| B8A (NIRNarrow) | 0.855~0.875 | 20 |
| B9 (Water) | 0.935~0.955 | 60 |
| B11 (SWIR1) | 1.565~1.655 | 20 |
| B12 (SWIR2) | 2.100~2.280 | 20 |

| Class | Description |
|---|---|
| Salt Marsh | Located in the coastal intertidal zone and influenced by tidal action; the vegetation is dominated by Phragmites australis and Suaeda salsa. |
| Marsh | Herbaceous plants growing in freshwater. |
| Tidal Flat | Coastal tidal inundation zones, including intertidal mudflats, rocky areas, and sandy sections with less than 10% vegetation cover. |
| Water | Rivers, lakes, estuarine waters, reservoirs, and oceans. |
| Aquaculture | Coastal areas with regular shapes, such as fish ponds and shrimp ponds. |
| Building | Industrial land, towns, ports, etc. |
| Non-wetland | Arable land, farmland, irrigated arable land, and other non-wetland areas. |

| Scheme | Composition Characteristics | Number of Features | Class |
|---|---|---|---|
| ① | Multi-temporal optimal feature subset | 33 | Multi-temporal |
| ② | Spectral Bands + Vegetation Indices + Texture Features + Terrain Features | 154 | Multi-temporal |
| ③ | RGB color images | 3 | Single-temporal |
| ④ | Optimal feature subset for March | 10 | Single-temporal |
| ⑤ | Optimal feature subset for June | 19 | Single-temporal |
| ⑥ | Optimal feature subset for September | 17 | Single-temporal |
| ⑦ | Optimal feature subset for November | 11 | Single-temporal |

| Feature Class | Full Name | Feature Abbreviation | Formula |
|---|---|---|---|
| Spectral Bands | Band | B | B9, B8A, B8, B7, B6, B5, B4, B3, B2, B12, B11 |
| Vegetation Indices | Normalized Difference Water Index | NDWI | (B3 − B8)/(B3 + B8) |
| Vegetation Indices | Normalized Difference Vegetation Index | NDVI | (B8 − B4)/(B8 + B4) |
| Vegetation Indices | Enhanced Vegetation Index | EVI | 2.5 × (B8 − B4)/(B8 + 6.0 × B4 − 7.5 × B2 + 1) |
| Vegetation Indices | Enhanced Vegetation Index 2 | EVI2 | 2.5 × (B8 − B4)/(B8 + 2.4 × B4 + 1) |
| Vegetation Indices | Soil Adjusted Vegetation Index | SAVI | 1.5 × (B8 − B4)/(B8 + B4 + 0.5) |
| Vegetation Indices | Optimized Soil Adjusted Vegetation Index | OSAVI | (B8 − B4)/(B8 + B4 + 0.16) |
| Vegetation Indices | Modified Soil Adjusted Vegetation Index | MSAVI | (2 × B8 + 1 − sqrt((2 × B8 + 1)^2 − 8 × (B8 − B4)))/2 |
| Vegetation Indices | Normalized Difference Vegetation Index red-edge 1 | NDVIre1 | (B8 − B5)/(B8 + B5) |
| Vegetation Indices | Normalized Difference Vegetation Index red-edge 2 | NDVIre2 | (B8 − B6)/(B8 + B6) |
| Vegetation Indices | Normalized Difference Vegetation Index red-edge 3 | NDVIre3 | (B8 − B7)/(B8 + B7) |
| Vegetation Indices | Modified Normalized Difference Water Index | MNDWI | (B3 − B11)/(B3 + B11) |
| Vegetation Indices | Modified Normalized Difference Water Index 2 | MNDWI2 | (B3 − B12)/(B3 + B12) |
| Vegetation Indices | Land Surface Water Index | LSWI | (B8 − B11)/(B8 + B11) |
| Vegetation Indices | Nonphotosynthetic Vegetation Index-2 | NPV2 | (B11 − B12)/(B11 + B12) |
| Vegetation Indices | Normalized Difference Senescent Vegetation Index | NDSVI | (B11 − B4)/(B11 + B4) |
| Texture Features | Mean | - | |
| Texture Features | Variance | Var | |
| Texture Features | Homogeneity | Homo | |
| Terrain Features | Elevation | - | Altitude |
| Terrain Features | Slope | - | Slope |
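
To make the formulas in the table concrete, the following sketch evaluates a handful of them for a single pixel. It assumes surface reflectance scaled to the range 0 to 1; the helper names `nd` and `indices` and the sample reflectance values are hypothetical, and zero denominators are not handled.

```python
def nd(a, b):
    """Normalized difference (a - b) / (a + b)."""
    return (a - b) / (a + b)


def indices(b):
    """Evaluate several table formulas from a dict of Sentinel-2 band reflectances."""
    blue, green, red, nir, swir1 = b["B2"], b["B3"], b["B4"], b["B8"], b["B11"]
    return {
        "NDVI": nd(nir, red),
        "NDWI": nd(green, nir),
        "EVI": 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1),
        "SAVI": 1.5 * (nir - red) / (nir + red + 0.5),
        # The term under the root equals (2*nir - 1)**2 + 8*red, so it is
        # non-negative whenever the red reflectance is non-negative.
        "MSAVI": (2 * nir + 1 - ((2 * nir + 1) ** 2 - 8 * (nir - red)) ** 0.5) / 2,
        "LSWI": nd(nir, swir1),
        "NDSVI": nd(swir1, red),
    }


# Hypothetical reflectances for a densely vegetated pixel (assumed 0-1 scale).
pixel = {"B2": 0.03, "B3": 0.06, "B4": 0.05, "B8": 0.45, "B11": 0.20}
values = indices(pixel)
for name, value in values.items():
    print(f"{name}: {value:.4f}")
```

Because the functions use only arithmetic operators, the same code also works element-wise when the dict values are NumPy arrays of equal shape, which is how whole-image index layers would typically be computed.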

| Feature Class | Optimal Feature |
|---|---|
| Spectral Bands | B9_3, B9_6, B12_6, B9_9, B4_6, B5_6, B12_3, B5_9, B12_9, B3_11, B9_11, B12_11 |
| Vegetation Indices | EVI_6, NDSVI_9, NPV2_9, NPV2_11, NDSVI_6, NPV2_6, SAVI_9, SAVI_3, NPV2_3, EVI_9, NDSVI_11, NDVIre2_6, EVI_3, NDVIre3_6, SAVI_11 |
| Texture Features | Var_B8_6, Homo_B8_6, Var_B4_9, Homo_B4_9 |
| Terrain Features | DEM, Slope |
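
The feature names in this table appear to end in a month tag. The sketch below tallies the optimal subset by that tag, under the assumption (not stated in the table itself) that the suffixes 3, 6, 9, and 11 denote the March, June, September, and November acquisitions and that DEM and Slope carry no date. The `MONTHS` mapping and `month_of` helper are hypothetical.

```python
from collections import Counter

# Assumed mapping from name suffix to acquisition month.
MONTHS = {"3": "March", "6": "June", "9": "September", "11": "November"}


def month_of(feature):
    """Return the month for a date-tagged feature name, or None if it has no month suffix."""
    suffix = feature.rsplit("_", 1)[-1]
    return MONTHS.get(suffix)


# Feature names copied from the optimal-feature table.
optimal = [
    "B9_3", "B9_6", "B12_6", "B9_9", "B4_6", "B5_6",
    "B12_3", "B5_9", "B12_9", "B3_11", "B9_11", "B12_11",
    "EVI_6", "NDSVI_9", "NPV2_9", "NPV2_11", "NDSVI_6", "NPV2_6",
    "SAVI_9", "SAVI_3", "NPV2_3", "EVI_9", "NDSVI_11", "NDVIre2_6",
    "EVI_3", "NDVIre3_6", "SAVI_11",
    "Var_B8_6", "Homo_B8_6", "Var_B4_9", "Homo_B4_9",
    "DEM", "Slope",
]

counts = Counter(month_of(f) for f in optimal)
for month in ["March", "June", "September", "November", None]:
    print(month or "undated (terrain)", counts[month])
```

Under that reading, the 33 features split into 5 from March, 11 from June, 9 from September, 6 from November, and 2 undated terrain features.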

| Model | OA (%) | Kappa |
|---|---|---|
| CMW-MTFO | 98.31 | 0.9795 |
| CoAtNet | 97.20 | 0.9660 |
| ResNet18 | 97.42 | 0.9687 |
| ViT | 97.58 | 0.9706 |
| RF | 87.22 | 0.8509 |
| SVM | 67.96 | 0.6262 |
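
For readers unfamiliar with the two metrics in this table, the sketch below computes overall accuracy (OA) and the Kappa coefficient from a confusion matrix. It assumes the standard definitions, with Kappa taken to be Cohen's kappa; the two-class matrix is a made-up example and is unrelated to the results above.

```python
def overall_accuracy(cm):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def cohen_kappa(cm):
    """Cohen's kappa: agreement beyond what the row and column marginals give by chance."""
    total = sum(sum(row) for row in cm)
    po = overall_accuracy(cm)                   # observed agreement
    row_sums = [sum(row) for row in cm]         # reference totals per class
    col_sums = [sum(col) for col in zip(*cm)]   # predicted totals per class
    pe = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2  # chance agreement
    return (po - pe) / (1 - pe)


# Hypothetical matrix: rows are reference classes, columns are predicted classes.
cm = [
    [45, 5],
    [10, 40],
]
oa = overall_accuracy(cm)
kappa = cohen_kappa(cm)
print(f"OA = {oa * 100:.2f}%, Kappa = {kappa:.4f}")
```

For this toy matrix the observed agreement is 0.85 and the chance agreement is 0.50, so Kappa is 0.70. Kappa falls below OA because it discounts the agreement expected from the class proportions alone.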

| Class | CMW-MTFO | ResNet18 | CoAtNet | ViT | RF | SVM |
|---|---|---|---|---|---|---|
| Water | 98.20 | 97.01 | 97.11 | 97.68 | 89.03 | 75.51 |
| Tidal Flat | 98.81 | 98.54 | 98.32 | 99.04 | 88.00 | 72.53 |
| Salt Marsh | 97.96 | 97.10 | 96.74 | 98.20 | 82.46 | 51.83 |
| Non-wetland | 97.43 | 96.50 | 94.92 | 96.27 | 79.19 | 62.94 |
| Marsh | 99.36 | 99.14 | 97.80 | 99.24 | 95.79 | 83.21 |
| Building | 97.48 | 96.09 | 94.18 | 96.49 | 85.14 | 65.99 |
| Aquaculture | 98.91 | 97.75 | 96.31 | 97.65 | 91.14 | 63.04 |

| Model | CMW-MTFO OA (%) | CMW-MTFO Kappa | RGB Color Images OA (%) | RGB Color Images Kappa |
|---|---|---|---|---|
| CMW-MTFO/DeepLabV3+ | 98.31 | 0.9795 | 96.53 | 0.9578 |
| CoAtNet | 97.20 | 0.9660 | 91.77 | 0.9003 |
| ResNet18 | 97.42 | 0.9687 | 93.97 | 0.9268 |
| ViT | 97.58 | 0.9706 | 88.16 | 0.8567 |

| Metric | CMW-MTFO | Non-Preferred Features |
|---|---|---|
| OA (%) | 98.31 | 97.25 |
| Kappa | 0.9795 | 0.9677 |

| Metric | CMW-MTFO | March Single-Temporal | June Single-Temporal | September Single-Temporal | November Single-Temporal |
|---|---|---|---|---|---|
| OA (%) | 98.31 | 94.67 | 96.50 | 96.36 | 96.08 |
| Kappa | 0.9795 | 0.9354 | 0.9576 | 0.9558 | 0.9525 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, D.; Wu, W.; Ma, Y.; Feng, D. Research on Wetland Fine Classification Based on Remote Sensing Images with Multi-Temporal and Feature Optimization. Sustainability 2025, 17, 10900. https://doi.org/10.3390/su172410900
